p Miner A Semantic Overlay Network Ronaldo Ferreira

![Search with Probabilistic Guarantees Simulation Results • Cohen and Shenker [SIGCOMM 2002] showed that Search with Probabilistic Guarantees Simulation Results • Cohen and Shenker [SIGCOMM 2002] showed that](https://slidetodoc.com/presentation_image/9084c0ca51c55dc7b6141eb3161dd5bf/image-47.jpg)

![Duplicate Elimination • Each contender sends sqrt(n ln 2/(E[Xi]-1)) in round i of the Duplicate Elimination • Each contender sends sqrt(n ln 2/(E[Xi]-1)) in round i of the](https://slidetodoc.com/presentation_image/9084c0ca51c55dc7b6141eb3161dd5bf/image-54.jpg)

![Uniform Sampling • Random Weight Distribution (RWD) [Awan et al 04] Ø Initialization: Assign Uniform Sampling • Random Weight Distribution (RWD) [Awan et al 04] Ø Initialization: Assign](https://slidetodoc.com/presentation_image/9084c0ca51c55dc7b6141eb3161dd5bf/image-78.jpg)

- Slides: 81

p. Miner: A Semantic Overlay Network Ronaldo Ferreira, Mehmet Koyuturk, Ananth Grama Department of Computer Science Purdue University

Background • Peer-to-Peer (P 2 P) networks are self-organizing distributed systems where participating nodes both provide and receive services from each other in a cooperative manner without distinguished roles as pure clients or pure servers. • P 2 P Internet applications have recently been popularized by file sharing applications, such as Napster and Gnutella. • P 2 P systems have many interesting technical aspects, such as decentralized control, self-organization, adaptation and scalability. • One of the key problems in large-scale P 2 P applications is to provide efficient algorithms for object location and routing within the network.

Background • Central server (Napster) Ø Search is centralized, data transfer is peer-to-peer. • Structured solution – “DHT” (Chord, Pastry, Tapestry, CAN) Ø Regular topologies (emulations of hypercube or mesh). Ø Guaranteed file lookup using hashes. Ø Lacks keyword search. • Unstructured solution (Gnutella) Ø Peers have constant degrees and organize themselves in an unstructured topology. Ø Controlled flooding used by real-world softwares: high message overhead. Ø Recent proposals: random walks, replication. Ø Trend towards distributed randomized algorithms.

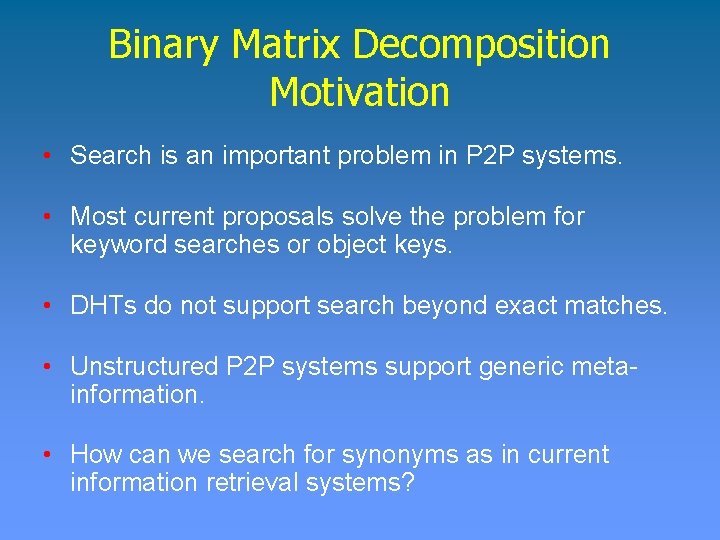

Background - DHT • All known proposals take as input a key and, in response, route a message to the node responsible for that key. • The keys are random binary strings of fixed length (generally 128 bits). Nodes have identifiers taken from the same space as the keys. • Each node maintains a routing table consisting of a small subset of nodes in the system. • Nodes route queries to neighboring nodes that make the most “progress” towards resolving the query.

Background - DHT 0 XXX 1 XXX 2 XXX 3 XXX 0112 2321 START 0112 routes a message to key 2000. 2032 First hop fixes first digit (2) 2001 END 2001 closest live node to 2000. Second hop fixes second digit (20)

Background - DHT • Scalable, efficient, and decentralized systems: ØO(log(N)) routing table entries per node ØRoute in O(log(N)) number of hops

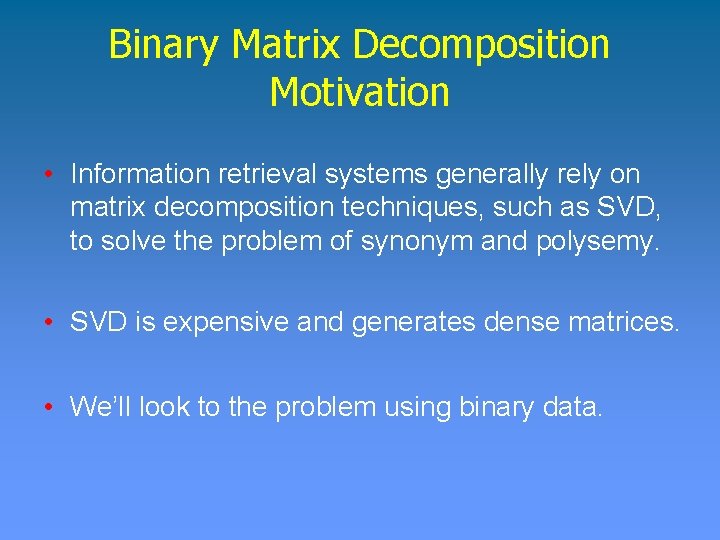

Binary Matrix Decomposition Motivation • Search is an important problem in P 2 P systems. • Most current proposals solve the problem for keyword searches or object keys. • DHTs do not support search beyond exact matches. • Unstructured P 2 P systems support generic metainformation. • How can we search for synonyms as in current information retrieval systems?

Binary Matrix Decomposition Motivation • Information retrieval systems generally rely on matrix decomposition techniques, such as SVD, to solve the problem of synonym and polysemy. • SVD is expensive and generates dense matrices. • We’ll look to the problem using binary data.

Binary Matrix Decomposition Motivation • Several high-dimensional datasets of modern applications are binary or can be transformed into binary format ØRetailer’s transactions • A centralized solution is not viable: ØVolume of data ØReal-time response ØPrivacy

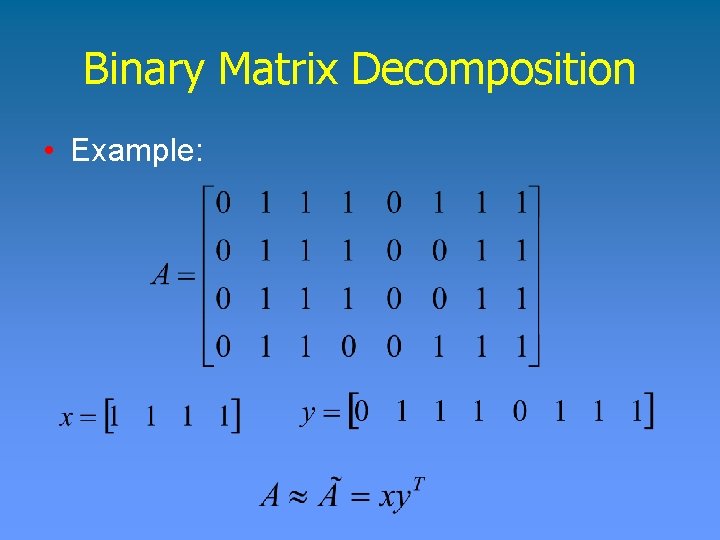

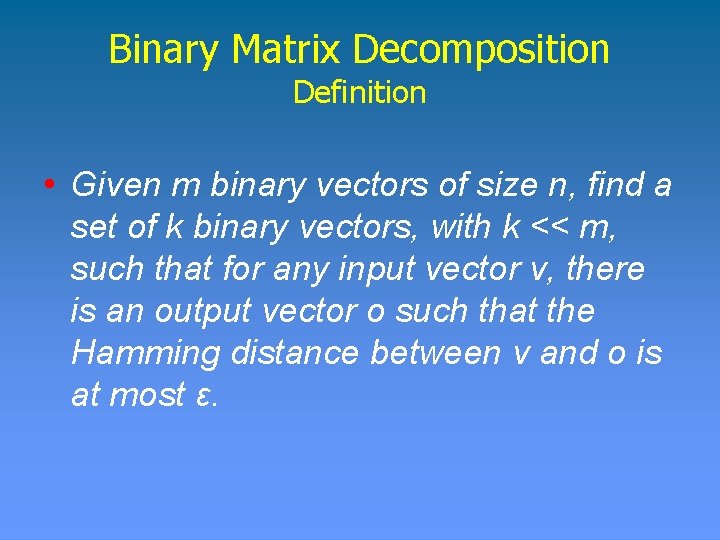

Binary Matrix Decomposition Definition • Given m binary vectors of size n, find a set of k binary vectors, with k << m, such that for any input vector v, there is an output vector o such that the Hamming distance between v and o is at most ε.

Binary Matrix Decomposition • Example:

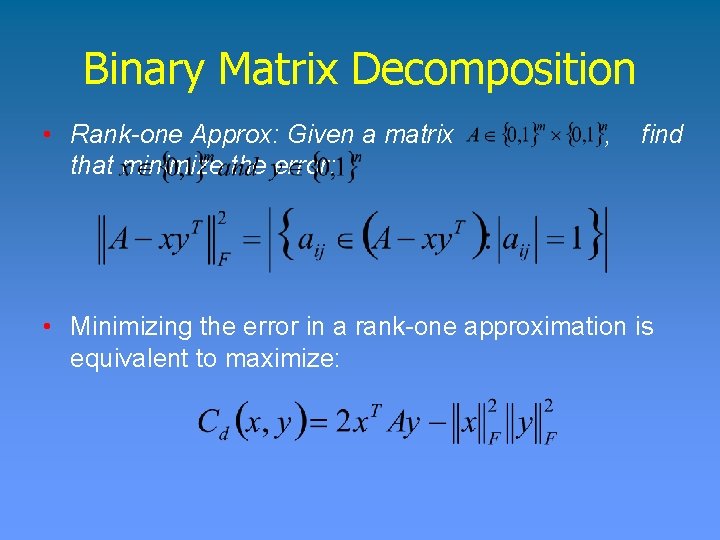

Binary Matrix Decomposition • Proximus provides a serial solution to the problem. • The matrix is recursively partitioned based on successive computations of rank-one approximations.

Binary Matrix Decomposition • Rank-one Approx: Given a matrix that minimize the error: , find • Minimizing the error in a rank-one approximation is equivalent to maximize:

Binary Matrix Decomposition • The maximization problem can be solved in linear time in the number of non-zeros of the matrix A using an alternating iterative heuristic. ØFind the optimal solution to x for a fixed y, and then use the computed value of x to find a new y. The new value of y is again used to find a new value of x. This process is repeated until there is no change to the values of x and y.

Binary Matrix Decomposition

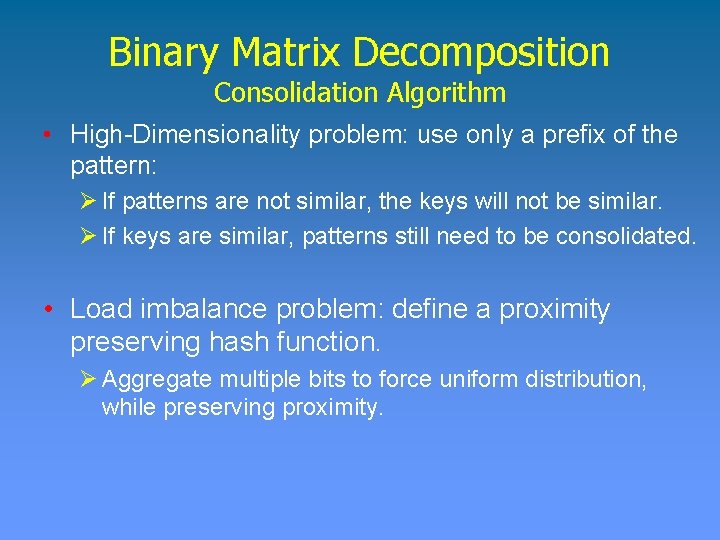

Binary Matrix Decomposition Distributed Approach • The matrix is distributed across multiple peers. • One needs an approximation to the serial solution. • To minimize communication overhead, we rely on subsampling. • Peers exchange rank-one approximations and consolidate the patterns to achieve common approximations. • Similar approach has been successfully used in Conquest [Jie, Koyuturk, Grama, Algorithmica, 2006].

Binary Matrix Decomposition Consolidation Algorithm • Straightforward solution using DHT: ØUse presence vector as a key. ØNodes at Hamming distance one communicate their patterns during consolidation. • Problems with this approach: ØPatterns are high-dimensional ØPatterns are not uniformly distributed (load imbalance).

Binary Matrix Decomposition Consolidation Algorithm • High-Dimensionality problem: use only a prefix of the pattern: Ø If patterns are not similar, the keys will not be similar. Ø If keys are similar, patterns still need to be consolidated. • Load imbalance problem: define a proximity preserving hash function. Ø Aggregate multiple bits to force uniform distribution, while preserving proximity.

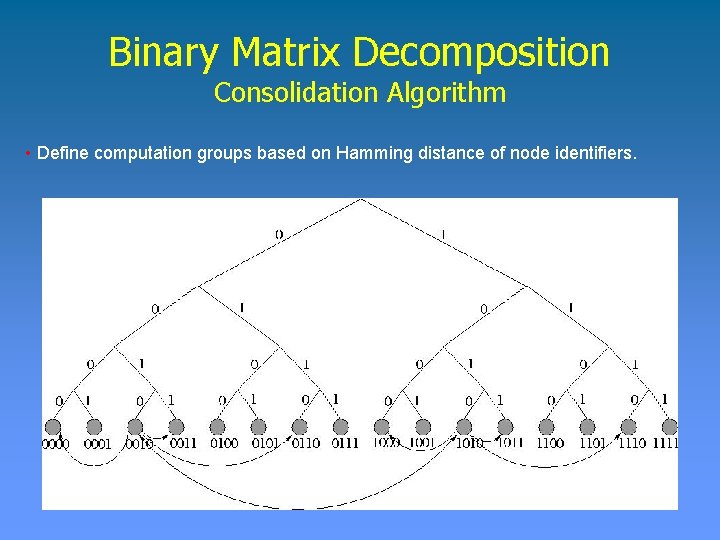

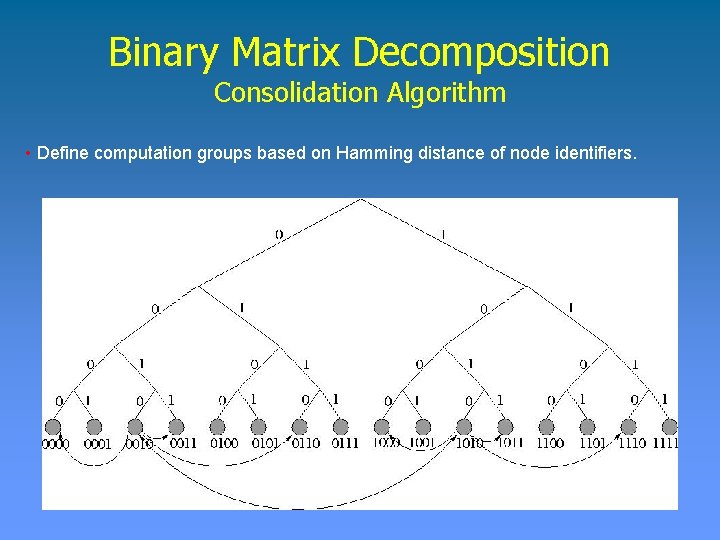

Binary Matrix Decomposition Consolidation Algorithm • Define computation groups based on Hamming distance of node identifiers.

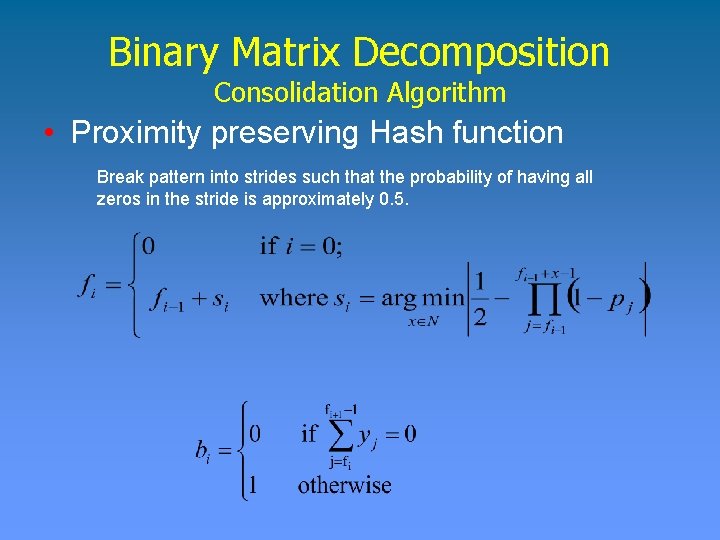

Binary Matrix Decomposition Consolidation Algorithm • Algorithm works in rounds: Ø Peers exchange their rank-one approximations with their computation neighbors. Ø Patterns are locally consolidated and forwarded to computation neighbors. Ø This process is repeated in as many rounds as the bound on the error of the approximation (Hamming radius).

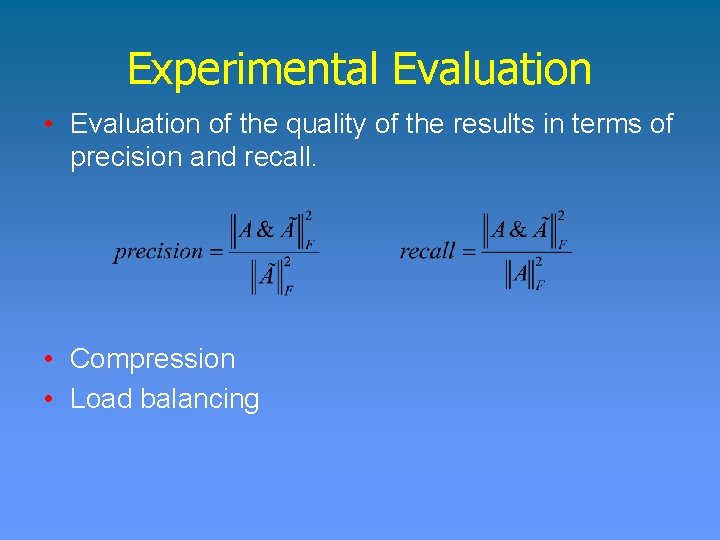

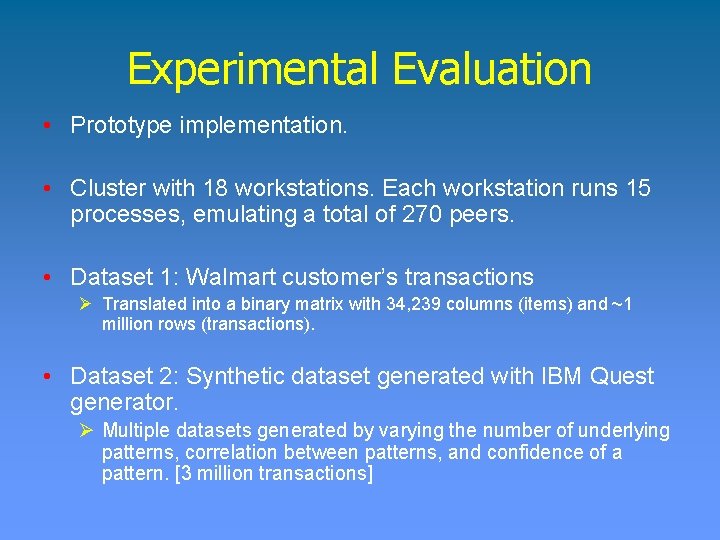

Binary Matrix Decomposition Consolidation Algorithm • Proximity preserving Hash function Break pattern into strides such that the probability of having all zeros in the stride is approximately 0. 5.

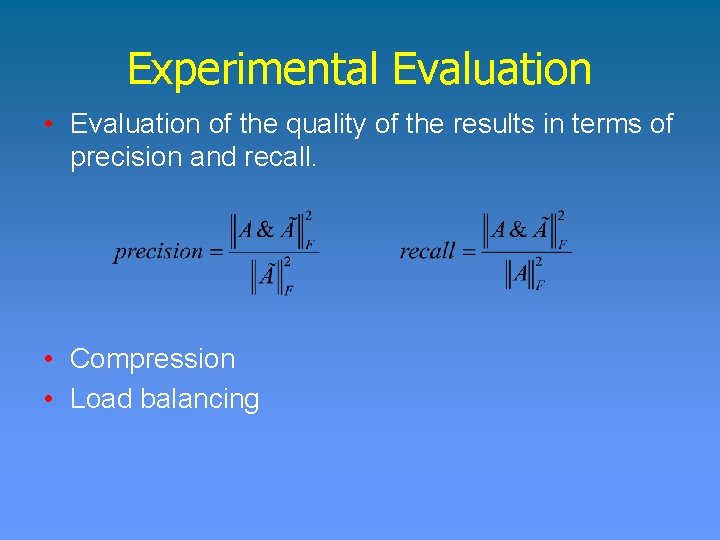

Experimental Evaluation • Prototype implementation. • Cluster with 18 workstations. Each workstation runs 15 processes, emulating a total of 270 peers. • Dataset 1: Walmart customer’s transactions Ø Translated into a binary matrix with 34, 239 columns (items) and ~1 million rows (transactions). • Dataset 2: Synthetic dataset generated with IBM Quest generator. Ø Multiple datasets generated by varying the number of underlying patterns, correlation between patterns, and confidence of a pattern. [3 million transactions]

Experimental Evaluation • Evaluation of the quality of the results in terms of precision and recall. • Compression • Load balancing

Experimental Results • Precision and recall for the Walmart dataset.

Experimental Results • Precision for the synthetic datasets.

Experimental Results • Recall for the synthetic datasets.

Experimental Results • Compression for the Walmart dataset.

Experimental Results • Compression for the synthetic datasets (60% and 90%).

Experimental Results • Load balancing (Walmart dataset).

Experimental Results • Load balancing (Synthetic datasets).

Thank You! • Questions?

Structured P 2 P Systems Locality in DHT

Enhancing Locality in DHT • Computers (nodes) have unique ID Ø Typically 128 bits long Ø Assignment should lead to uniform distribution in the node ID space, for example SHA-1 of node’s IP • Scalable, efficient Ø O(log(N)) routing table entries per node Ø Route in O(log(N)) number of hops • Virtualization destroys locality. • Messages may have to travel around the world to reach a node in the same LAN. • Query responses do not contain locality information.

Enhancing Locality in DHT • Two-level overlay Ø One global overlay Ø Several local overlays • Global overlay is the main repository of data. Any prefix-based DHT protocol can be used. • Global overlay helps nodes organize themselves into local overlays. • Local overlays explore the organization of the Internet in ASs. • Local overlays use a modified version of Pastry. • Size of the local overlay is controlled by a local overlay leader. Ø Uses efficient distributed algorithms for merging and splitting local overlays. The algorithms are based on equivalent operations in hypercubes.

Simulation Setup • Underlying topology is simulated using data collected from the Internet by the Skitter project at CAIDA. • Data contains link delays and IP addresses of the routers. IP addresses are mapped to their ASs using BGP data collected by the Route Views project at University of Oregon. • The resulting topology contains 218, 416 routers, 692, 271 links, and 7, 704 ASs. • We randomly select 10, 000 routers and connect a LAN with 10 hosts in each one of them. • 10, 000 overlay nodes selected randomly from the hosts.

Simulation Setup • NLANR web proxy trace with 500, 254 objects. • Zipf distribution parameters: {0. 70, 0. 75, 0. 80, 0. 85, 0. 90} • Maximum overlay sizes: {200; 300; 400; 500; 1, 000; 2, 000} • Local cache size: 5 MB (LRU replacement policy).

Simulation Results • Performance gains in delay response

Simulation Results • Performance gains in number of messages

Unstructured P 2 P Systems Search with Probabilistic Guarantees

Unstructured P 2 P Networks Randomized Algorithms • Randomization and Distributed Systems Ø Break symmetry (e. g. Ethernet backoff) Ø Load balancing. Ø Fault handling. Ø Solutions to problems that are unsolvable deterministically. (e. g. , Consensus under failure) • Uniform sampling is a key component in the development of randomized algorithms.

Uniform Sampling • Substrate for the development of randomized algorithms Ø Search with probabilistic guarantees. Ø Duplicate elimination. Ø Job distribution with load balancing. • Definition: An algorithm samples uniformly at random from a set of nodes in a connected network if and only if it selects a node i belonging to the network with probability 1/n, where n is the number of nodes in the network.

Uniform Sampling • In a complete network, the problem is trivial. • Random walks of a prescribed minimum length gives random sampling – independent of origin of walk. • A long walk reaches stationary distribution π, πi = di / 2|E|. Ø Not a uniform sample if network nodes have different degrees. • In [Awan et al 04], we study different algorithms that change the transition probabilities among neighbors in order to achieve uniform sampling using random walks. We show, using simulation, that the length of the random walk is O(log n).

Search with Probabilistic Guarantees Algorithm • To share its content with other peers in the network, a peer p installs references to each of its objects at a set Qp of peers. Any metainformation can be published. • To provide guarantees that content published by a peer p can be found by any other peer in the network, three fundamental questions need to be answered: 1. Where should the nodes in Qp be located in the network? 2. What is the size of the set Qp? 3. When a peer q attempts to locate an object, how many peers must q contact?

Search with Probabilistic Guarantees Algorithm • We select nodes in Qp uniformly at random from the network. Ø It provides fault tolerance. In the event of a node failure, the node can be easily replaced. Ø It facilitates search, since there is no consistent global routing infrastructure. • Questions 2 and 3 can be answered using the “birthday paradox” (or a “balls and bins” abstraction). Ø “How many people must there be in a room before there is a 50% chance that two of them were born on the same day of the year? ” (at least 23) Ø If we set Qp to and we search in an independent set of the same size, we have high probability of intersection.

Search with Probabilistic Guarantees Controlled Installation of References • • • If every replica inserts reference pointers, popular objects may have their reference pointers on all peers. We use a probabilistic algorithm to decide if a node should install pointers to its objects. When a peer p joins the network, it sends a query for an object using a random walk of length γ√n: Ø If the query is unsuccessful, then p installs the pointers with probability one. Ø If the query is successful and the responding peer q is at a distance l from p, then p installs pointers with probability l/γ√n.

Search with Probabilistic Guarantees Simulation Setup • Overlay topology composed of 30, 607 nodes Ø Partial view of the Gnutella network. Ø Power-law graph. Ø Random graph. • Node dynamics: Ø Static Ø Dynamic: simulates changes of connections Ø Failures without updates Ø Failures with updates • Measurements: Ø Distribution of replication ratios. Ø Average number of hops (unbounded TTL). Ø Percentage of query failures (bounded TTL). Ø Percentage of object owners that install pointers. Ø Number of messages per peer.

![Search with Probabilistic Guarantees Simulation Results Cohen and Shenker SIGCOMM 2002 showed that Search with Probabilistic Guarantees Simulation Results • Cohen and Shenker [SIGCOMM 2002] showed that](https://slidetodoc.com/presentation_image/9084c0ca51c55dc7b6141eb3161dd5bf/image-47.jpg)

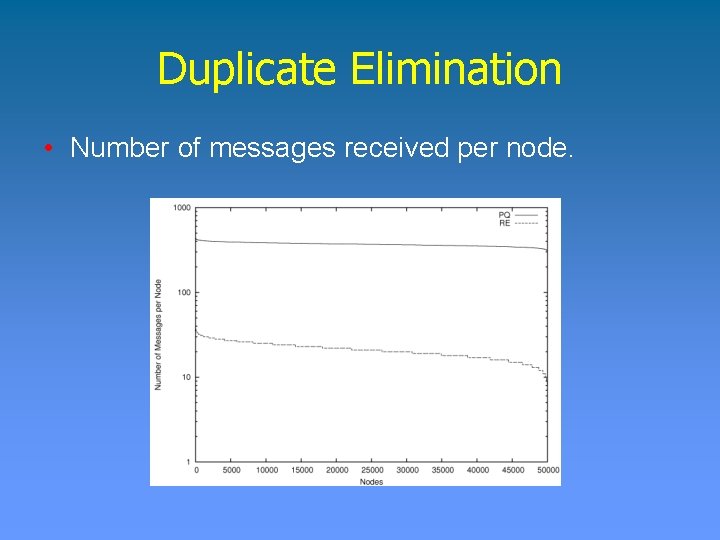

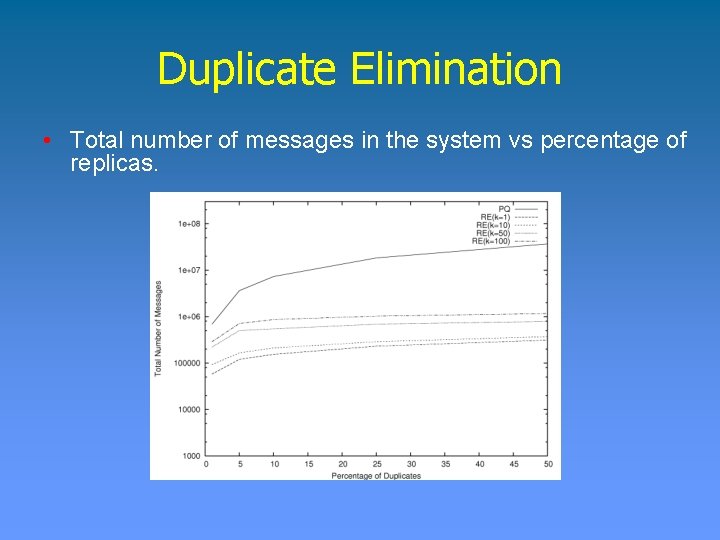

Search with Probabilistic Guarantees Simulation Results • Cohen and Shenker [SIGCOMM 2002] showed that a replication proportional to the square root of the access frequency is optimal. • Distribution of replication ratios.

Search with Probabilistic Guarantees Simulation Results • Percentage of failures of a query as a function of object popularity (left γ=1, right γ=2).

Search with Probabilistic Guarantees Simulation Results • Fraction of replicas installing pointers.

Unstructured P 2 P Systems Duplicate Elimination

Duplicate Elimination • Consider a peer-to-peer storage system, designed without any assumptions on the structure of the overlay network, and which contains multiple peers, each peer holding numerous files. • How can a peer determine which files need to be kept using minimum communication overhead? • How can the storage system as a whole make sure that each file is present in at least k peers, where k is a system parameter chosen to satisfy a client or application’s availability requirements?

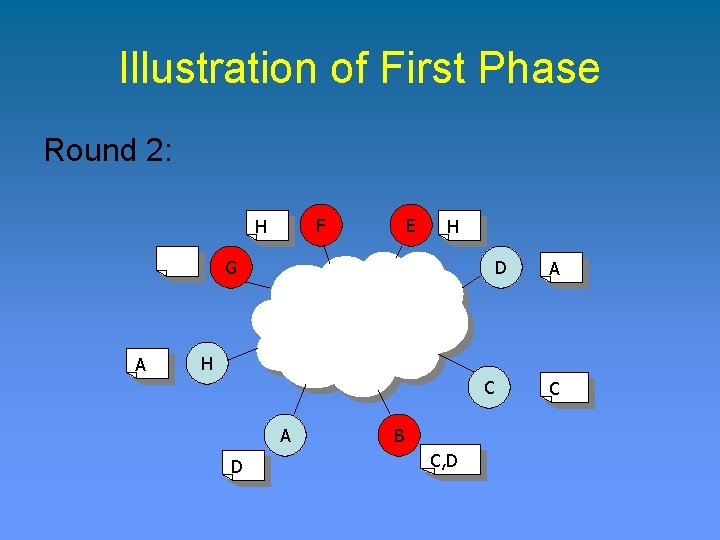

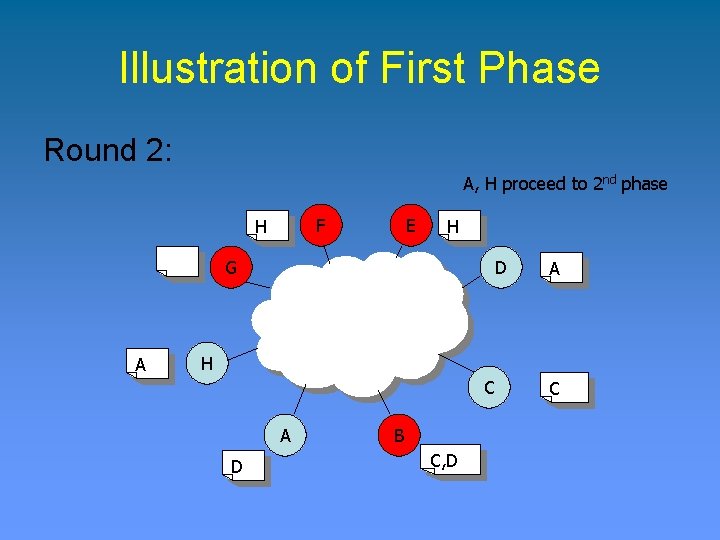

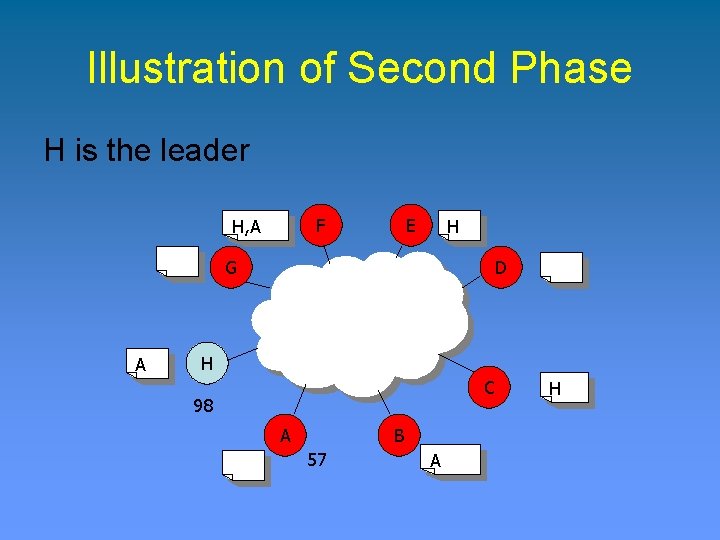

Duplicate Elimination • Problem can be abstracted to a relaxed and probabilistic version of the leader election problem. • Divide the process of electing a leader into two phases • First Phase: Ø Reduce the number of potential leaders by half in every round. Ø Number of messages exchanged in the system is O(n) Ø At the end of the first phase, have at least C contenders. (0 < C < sqrt(n/lnn)) • Second Phase: Ø Use birthday paradox (Probabilistic Quorum) solution.

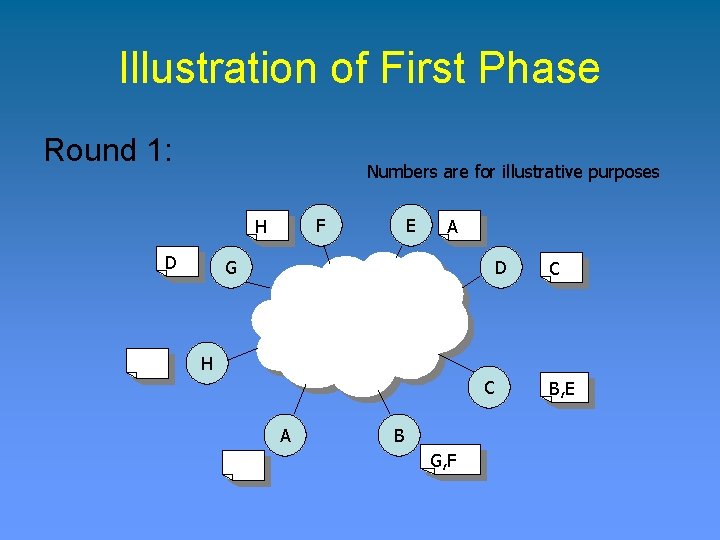

Duplicate Elimination • Contender – Node that wants to be a leader • Mediator – Node that arbitrates between any two contenders for a given round • Winner – Node that proceeds as contender to the next round

![Duplicate Elimination Each contender sends sqrtn ln 2EXi1 in round i of the Duplicate Elimination • Each contender sends sqrt(n ln 2/(E[Xi]-1)) in round i of the](https://slidetodoc.com/presentation_image/9084c0ca51c55dc7b6141eb3161dd5bf/image-54.jpg)

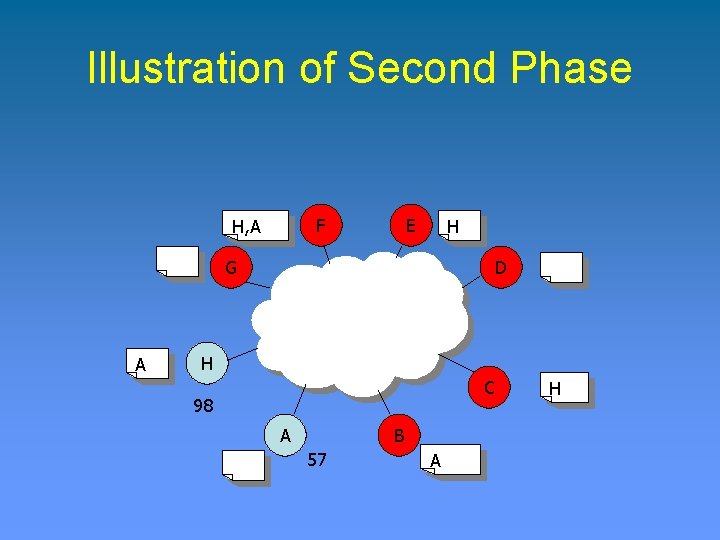

Duplicate Elimination • Each contender sends sqrt(n ln 2/(E[Xi]-1)) in round i of the first phase. Where E[Xi] is the expected number of contenders in round i. • The total number of messages in the first phase is O(n). • Each contender sends sqrt(nln n) messages in the second phase. • The total number of messages in second phase is O(n).

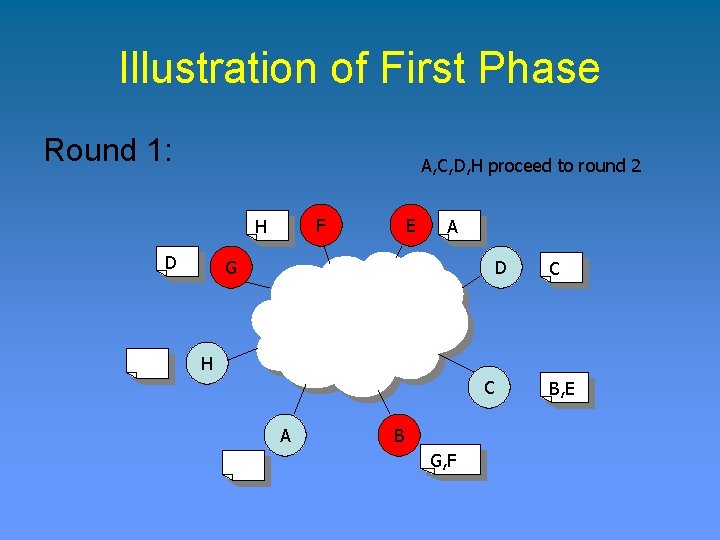

Illustration of First Phase Round 1: Numbers are for illustrative purposes F H D E A G D C H C A B G, F B, E

Illustration of First Phase Round 1: A, C, D, H proceed to round 2 F H D E A G D C H C A B G, F B, E

Illustration of First Phase Round 2: F H E H G A D A H C A D B C, D C

Illustration of First Phase Round 2: A, H proceed to 2 nd phase F H E H G A D A H C A D B C, D C

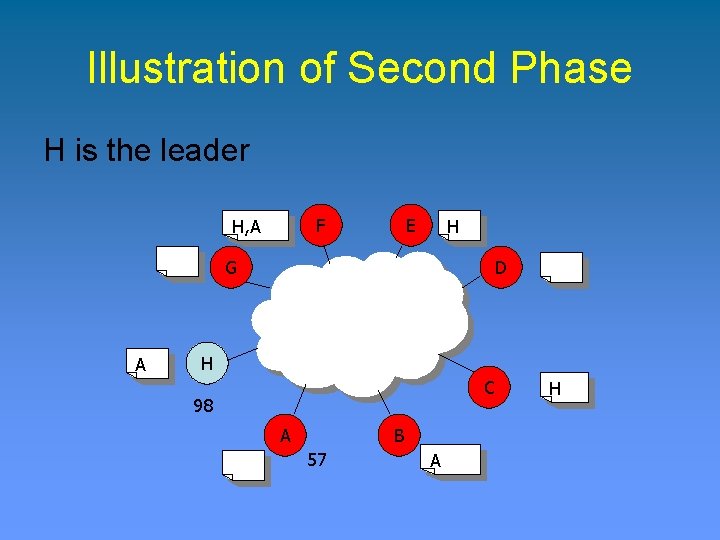

Illustration of Second Phase F H, A E H G A D H C 98 A B 57 A H

Illustration of Second Phase H is the leader F H, A E H G A D H C 98 A B 57 A H

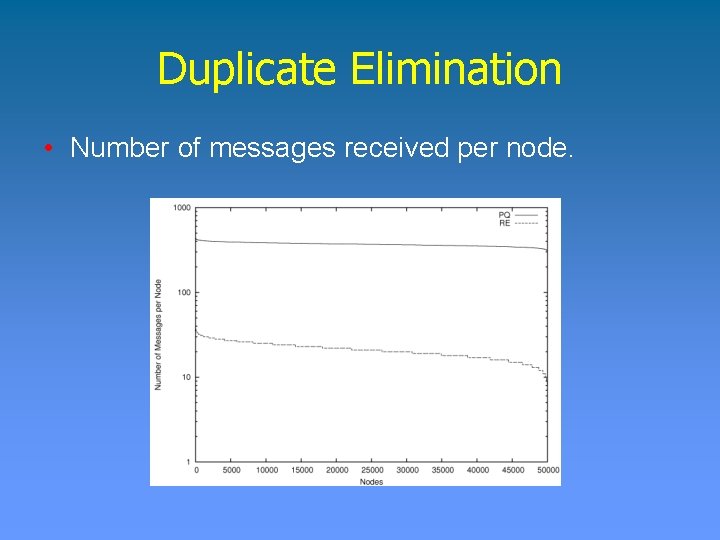

Duplicate Elimination Simulation Setup • Power-law random graph with 50, 000 nodes. • One object is replicated at the nodes (1% to 50%) • Measurements Ø Message overhead Ø Load distribution Ø Accuracy of the protocols

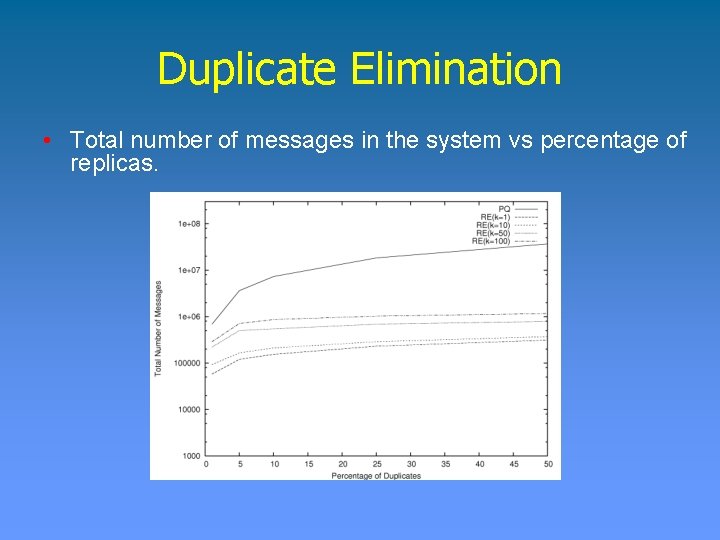

Duplicate Elimination • Total number of messages in the system vs percentage of replicas.

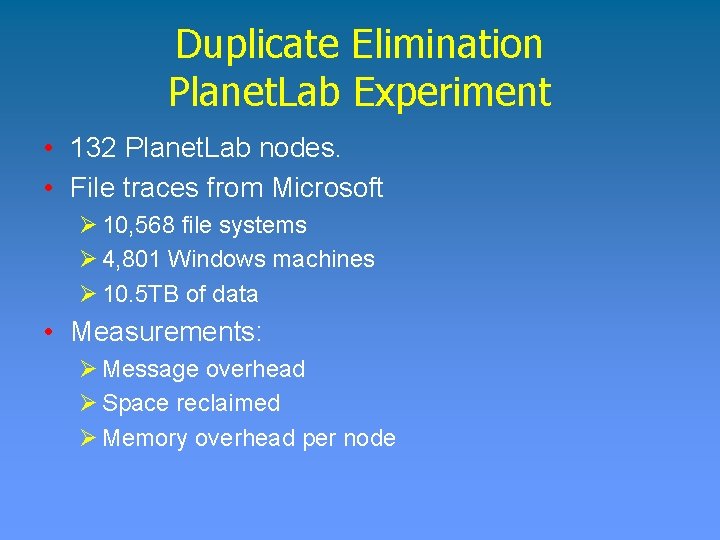

Duplicate Elimination • Number of messages received per node.

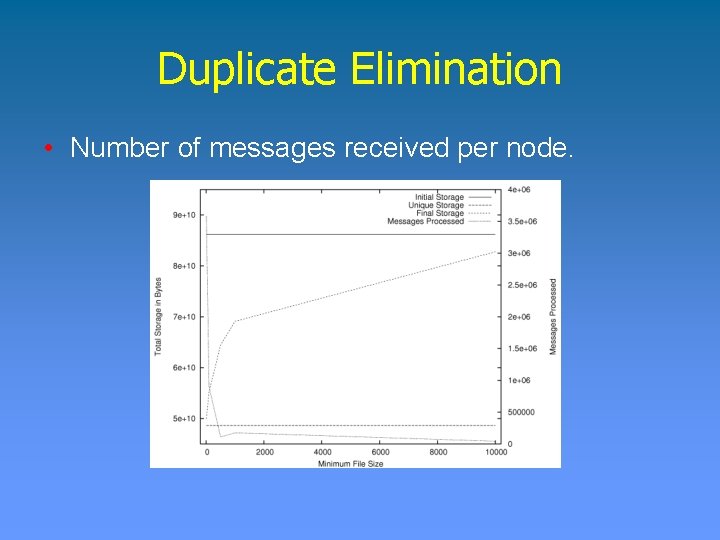

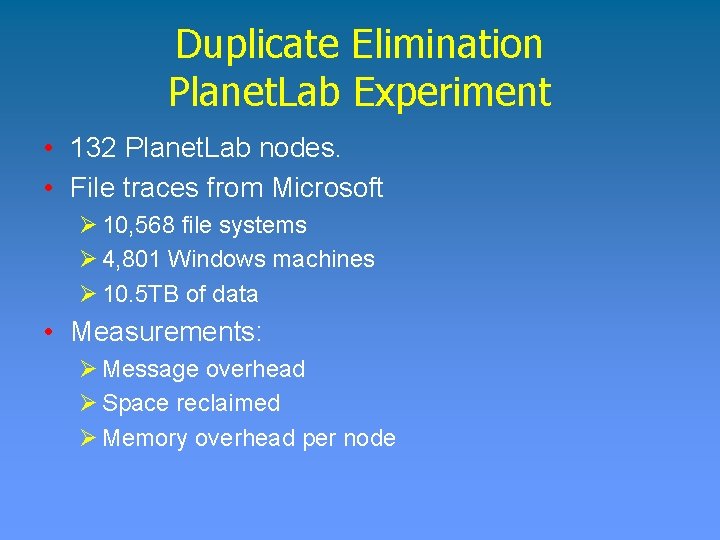

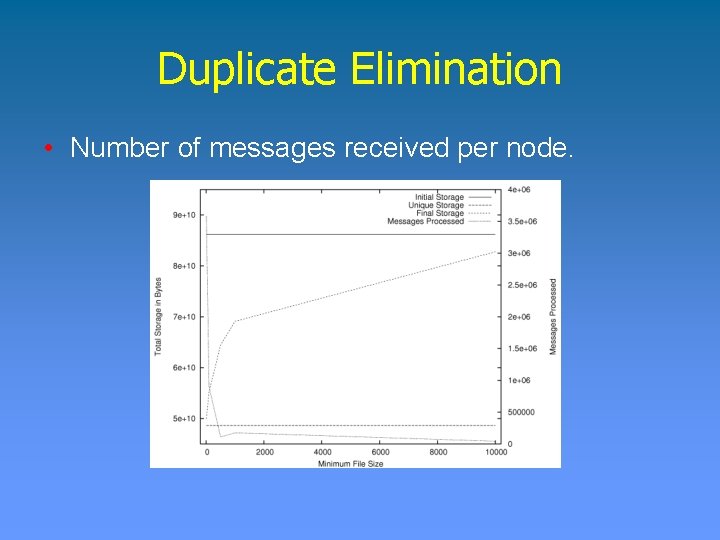

Duplicate Elimination Planet. Lab Experiment • 132 Planet. Lab nodes. • File traces from Microsoft Ø 10, 568 file systems Ø 4, 801 Windows machines Ø 10. 5 TB of data • Measurements: Ø Message overhead Ø Space reclaimed Ø Memory overhead per node

Duplicate Elimination • Number of messages received per node.

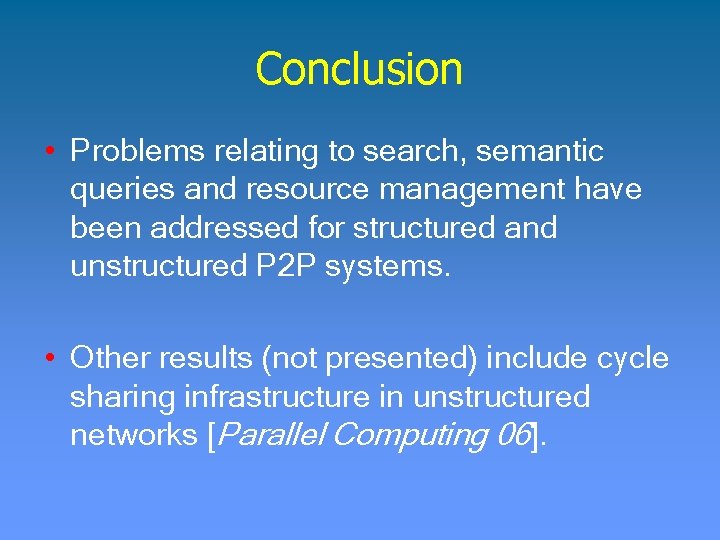

Conclusion • Problems relating to search, semantic queries and resource management have been addressed for structured and unstructured P 2 P systems. • Other results (not presented) include cycle sharing infrastructure in unstructured networks [Parallel Computing 06].

Conclusion • A number of challenges still remain:

Acknowledgments • Professors Ananth Grama and Suresh Jagannathan (Advisors). • Professors Sonia Fahmy and Zhiyuan Li (Committee members). • My labmates: Asad, Deepak, Jayesh, Mehmet, Metin, Murali.

Extra Slides

Motivation - P 2 P Systems • Proliferation of cheap computing resources: cpu, storage, bandwidth. • Global self-adaptive system ØUtilize resources wherever possible. ØLocalize effects of single failures. ØNo single point of vulnerability.

Motivation - P 2 P Systems • Reduce reliance on servers ØEliminate bottlenecks, improve scalability. ØLower deployment costs and complexity. ØBetter resilience – no single point of failure. • Direct client connection ØFaster data dissemination.

Related Work • Duplicate Elimination ØSALAD • P 2 P Algorithms ØCohen and Shenker ØChord/Pastry/Tapestry/CAN

Uniform Sampling • In a complete network, the problem is trivial. • Random walks of a prescribed minimum length gives random sampling – independent of origin of walk. • Random walks are conveniently analyzed using Markov model: Ø Represent the network as a graph G(V, E), and define probability matrix P, where pij = 1/di, (i, j) ϵ E. Ø A long walk reaches stationary distribution π, πi = di / 2|E|. Ø Not a uniform sample if network nodes have nonuniform degree distribution.

Uniform Sampling • Random sampling using random walk on a power-law graph

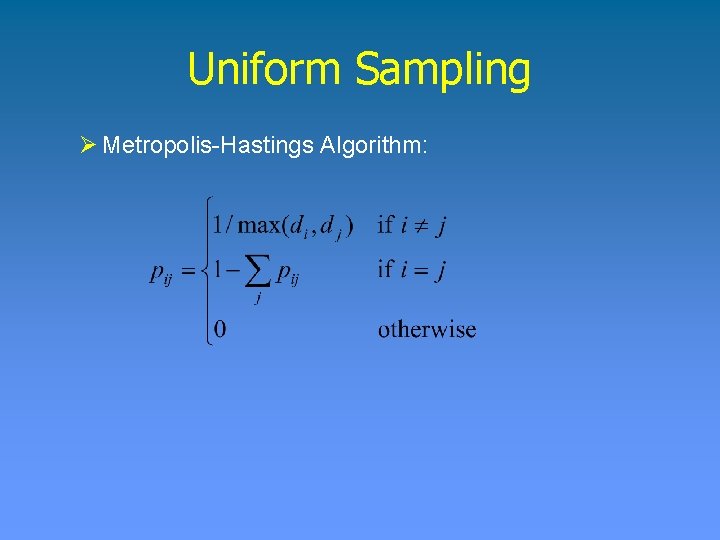

Uniform Sampling • To get uniform sampling we need to change the transition probabilities. • Symmetric transition probability, or doubly stochastic, matrix yields random walks with πi = 1/ n. • Length of walk = O(log n).

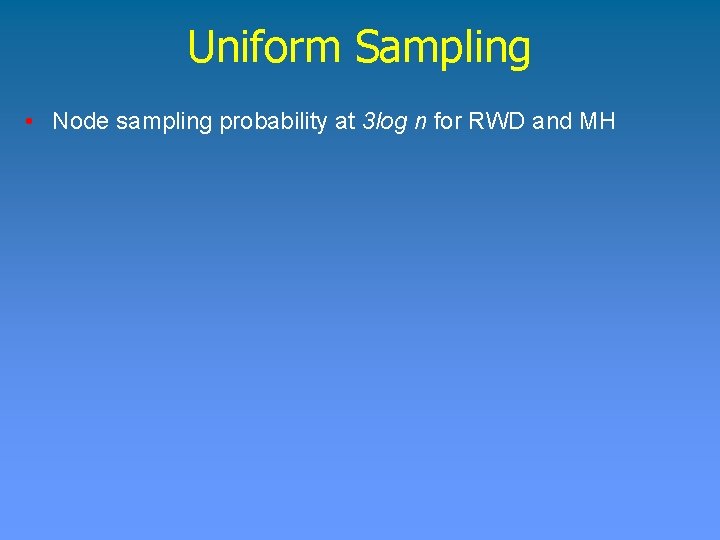

Uniform Sampling • Aim: Design distributed algorithms to locally compute transitions between neighboring nodes, resulting in a global transition matrix with stationary uniform distribution. • Known algorithms: Ø Maximum-Degree Algorithm:

Uniform Sampling Ø Metropolis-Hastings Algorithm:

![Uniform Sampling Random Weight Distribution RWD Awan et al 04 Ø Initialization Assign Uniform Sampling • Random Weight Distribution (RWD) [Awan et al 04] Ø Initialization: Assign](https://slidetodoc.com/presentation_image/9084c0ca51c55dc7b6141eb3161dd5bf/image-78.jpg)

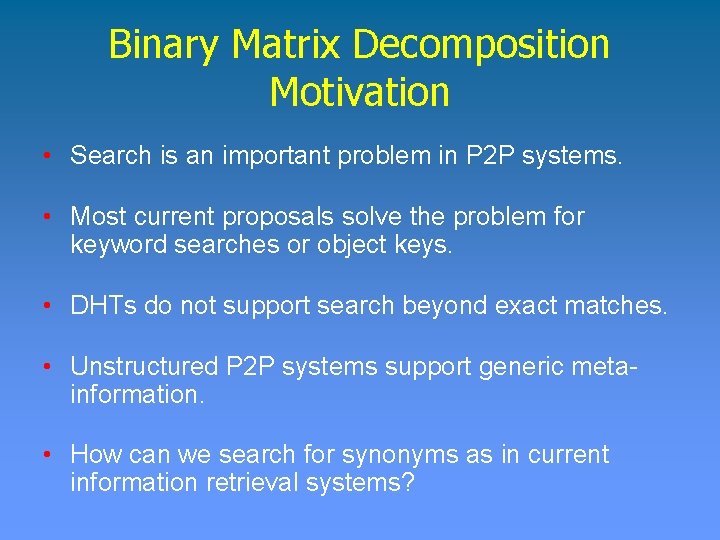

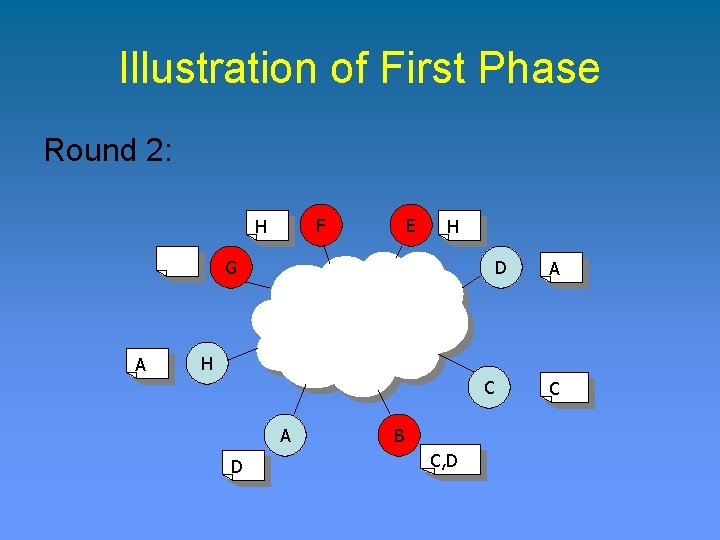

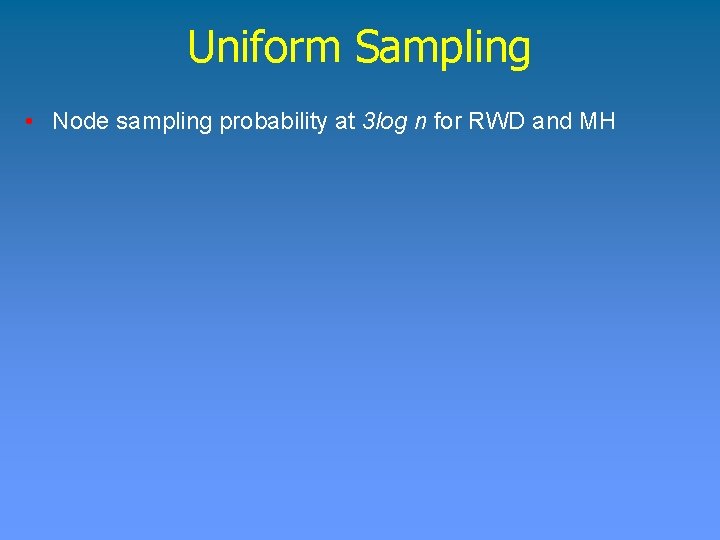

Uniform Sampling • Random Weight Distribution (RWD) [Awan et al 04] Ø Initialization: Assign a small constant transition probability, 1/ρ (system parameter ρ ≥ dmax), to each edge. This leaves back a high self-transition probability (called weight) Ø Iteration: Each node i, randomly distributes its weight to neighbors by incrementing transition probability with them symmetrically – using ACKs and NACKs. Ø Termination: For node i, either pii = 0 or pij = 0 is a neighbor of i. Ø Each increment is done by a quantum value (system parameter).

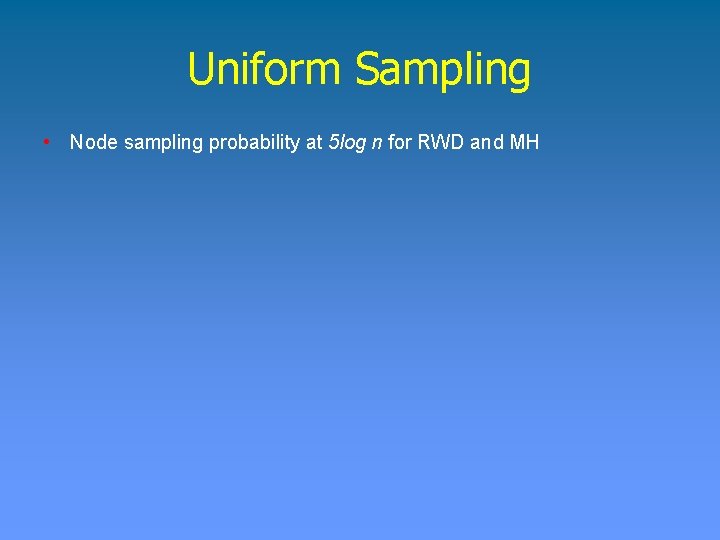

Uniform Sampling • Node sampling probability at 3 log n for RWD and MH

Uniform Sampling • Node sampling probability at 5 log n for RWD and MH

Potential Scenarios • Communication Ø Instant messaging Ø Voice, video • Collaboration Ø Project workspaces Ø File sharing Ø Gaming • Content distribution Ø Sports scores, weather, news, stock tickers, RSS Ø File bulk transfer, streamed media, live content