P4P Provider Portal for P2

- Slides: 59

P4P: Provider Portal for (P2P) Applications

Y. Richard Yang, Laboratory of Networked Systems, Yale University

Sept. 25, 2008, STIET Research Seminar

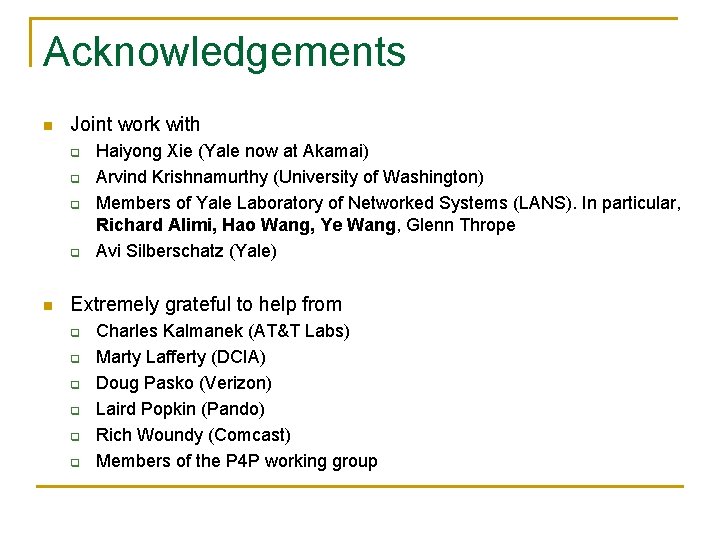

Acknowledgements

- Joint work with
  - Haiyong Xie (Yale, now at Akamai)
  - Arvind Krishnamurthy (University of Washington)
  - Members of Yale Laboratory of Networked Systems (LANS). In particular, Richard Alimi, Hao Wang, Ye Wang, Glenn Thrope
  - Avi Silberschatz (Yale)
- Extremely grateful for help from
  - Charles Kalmanek (AT&T Labs)
  - Marty Lafferty (DCIA)
  - Doug Pasko (Verizon)
  - Laird Popkin (Pando)
  - Rich Woundy (Comcast)
  - Members of the P4P working group

Outline

- The problem space
- The P4P framework
- The P4P interface
- Evaluations
- Discussions and ongoing work

Content Distribution using the Internet

- A projection: “Within five years, all media will be delivered across the Internet.” (Steve Ballmer, CEO Microsoft, D5 Conference, June 2007)
- The Internet is increasingly being used for digital content and media delivery.

Challenges: Content Owner’s Perspective

- Content protection/security/monetization
- Distribution costs

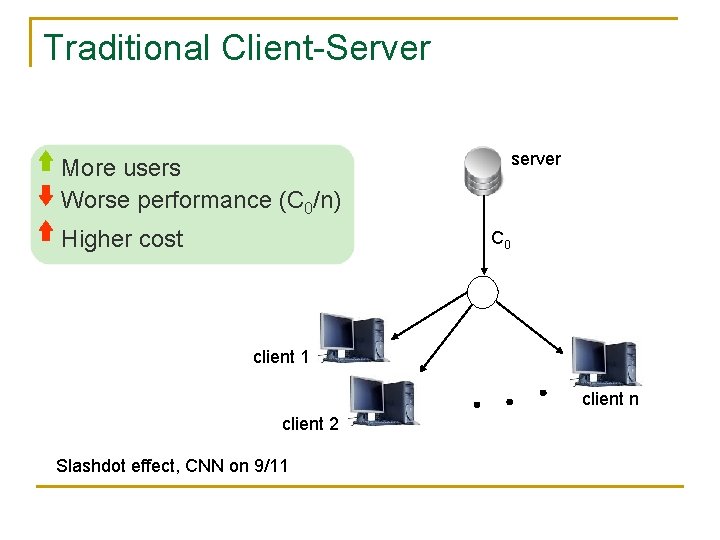

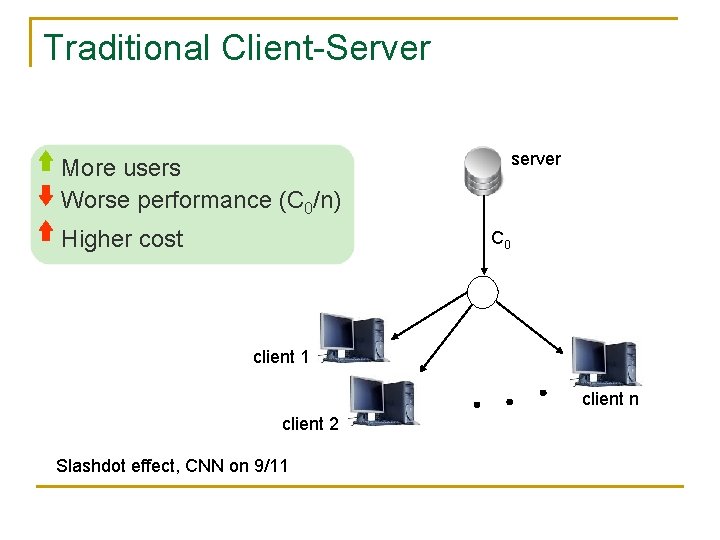

Traditional Client-Server

- Diagram: a server with capacity C0 serving client 1, client 2, ..., client n
- More users: worse performance (C0/n) and higher cost
- Examples: Slashdot effect, CNN on 9/11

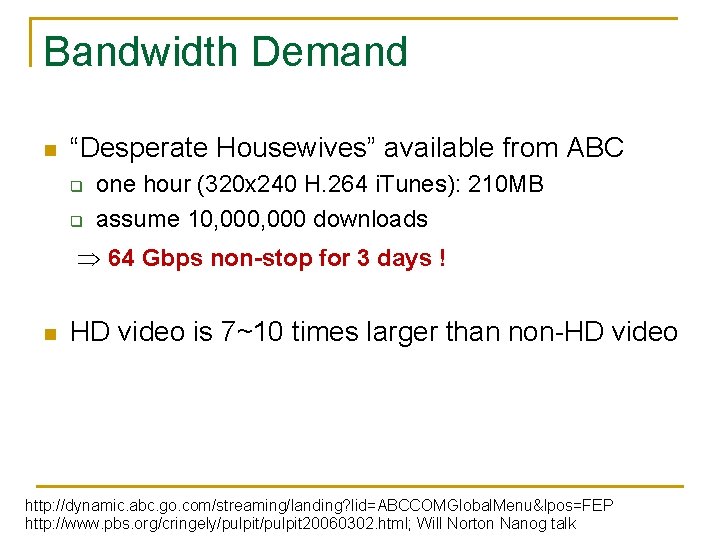

Bandwidth Demand

- “Desperate Housewives” available from ABC
  - one hour (320x240 H.264 iTunes): 210 MB
  - assume 10,000,000 downloads: 64 Gbps non-stop for 3 days!
- HD video is 7~10 times larger than non-HD video

Sources: http://dynamic.abc.go.com/streaming/landing?lid=ABCCOMGlobalMenu&lpos=FEP; http://www.pbs.org/cringely/pulpit20060302.html; Will Norton NANOG talk
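The bandwidth figure can be rechecked with a few lines of arithmetic. The sketch below assumes decimal megabytes and a perfectly even download rate over the three days; the function name is illustrative. Under those assumptions, a sustained rate of about 64 Gbps corresponds to roughly ten million downloads of a 210 MB file, while ten thousand downloads would need only about 65 Mbps.

```python
def sustained_rate_gbps(file_mb, downloads, days):
    """Average rate in Gbps needed to deliver `downloads` copies of a
    `file_mb`-megabyte file spread evenly over `days` days.

    Assumes 1 MB = 10**6 bytes and 1 Gbps = 10**9 bits per second.
    """
    total_bits = file_mb * 1e6 * 8 * downloads
    seconds = days * 24 * 3600
    return total_bits / seconds / 1e9


ten_million = sustained_rate_gbps(210, 10_000_000, 3)
ten_thousand = sustained_rate_gbps(210, 10_000, 3)
print(f"10,000,000 downloads: {ten_million:.1f} Gbps")
print(f"10,000 downloads: {ten_thousand * 1000:.1f} Mbps")
```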

Classical Solutions

- IP multicast: replication by routers
  - overhead
  - less effective for asynchronous content
  - lacks a billing model, requires multi-ISP cooperation
- Cache, content distribution network (CDN), e.g., Akamai
  - expensive
  - limited capacity: “The combined streaming capacity of the top 3 CDNs supports one Nielsen point.”

Scalable Content Distribution: P2P

- Peer-to-peer (P2P) as an extreme case of multiple servers:
  - each client is also a server

Example: BitTorrent

- The user sends HTTP GET MYFILE.torrent to the webserver
- The user “registers” with the appTracker, which returns a list of random peers:
  - ID1 169.237.234.1:6881
  - ID2 190.50.34.6:5692
  - ID3 34.275.89.143:4545
  - …
  - ID50 231.456.31.95:6882
- Peers shown in the diagram: Peer 1, Peer 2, …, Peer 40

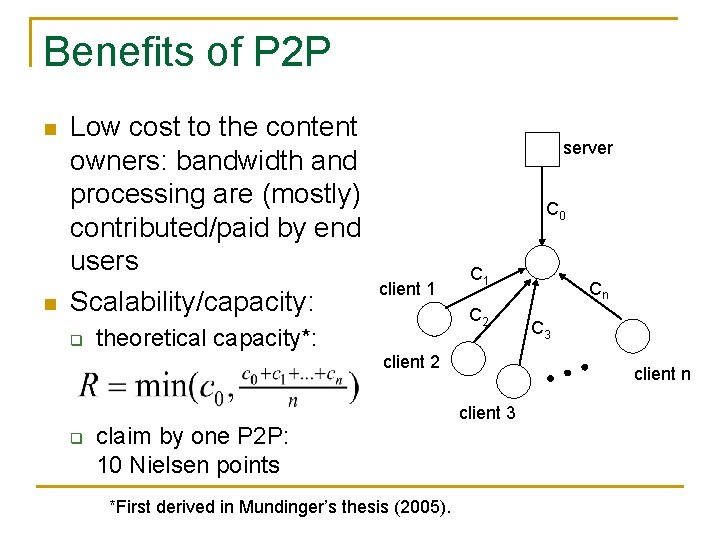

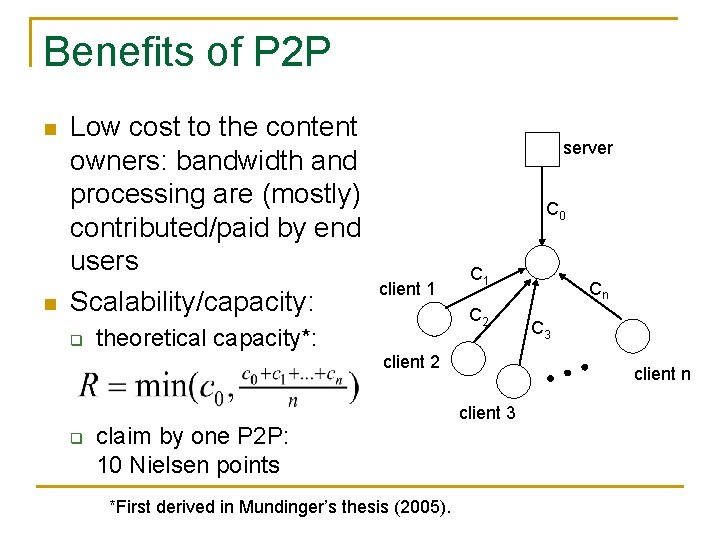

Benefits of P2P

- Low cost to the content owners: bandwidth and processing are (mostly) contributed/paid by end users
- Scalability/capacity:
  - theoretical capacity*
  - claim by one P2P: 10 Nielsen points
- Diagram: a server (capacity C0) and client 1, client 2, client 3, ..., client n (capacities up to Cn)

*First derived in Mundinger’s thesis (2005).
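The capacity expression itself did not survive extraction. As a rough illustration only, the sketch below compares the client-server per-client rate C0/n with a commonly cited fluid-model bound for upload-constrained P2P distribution, min(C0, (C0 + C1 + ... + Cn)/n). Whether this is the exact expression on the slide is an assumption, and the function names are made up for the example.

```python
def client_server_rate(c0, n):
    """Per-client rate when a server of upload capacity c0 feeds n clients."""
    return c0 / n


def p2p_rate(c0, peer_uploads):
    """Per-client rate under an assumed fluid-model bound:
    min(c0, (c0 + sum of peer upload capacities) / n).

    The server cannot inject fresh data faster than c0, and the total
    upload capacity of the system is shared by n downloading clients.
    """
    n = len(peer_uploads)
    return min(c0, (c0 + sum(peer_uploads)) / n)


# 100 clients, server capacity 10, each client uploads at 1 (arbitrary units).
print(client_server_rate(10, 100))
print(p2p_rate(10, [1] * 100))
```

In this toy setting the client-server rate falls as 1/n, while the P2P rate stays near the per-peer upload capacity as clients are added.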

Integrating P2P into Content Distribution

- P2P is becoming a key component of content delivery infrastructure for legal content
  - Some projects
    - iPlayer (BBC), Joost, Pando (NBC Direct), PPLive, Zattoo, BT (Linux)
    - Verizon P2P, Thomson/Telefonica nano Data Center
  - Some statistics
    - 15 mil. average simultaneous users
    - 80 mil. licensed transactions/month

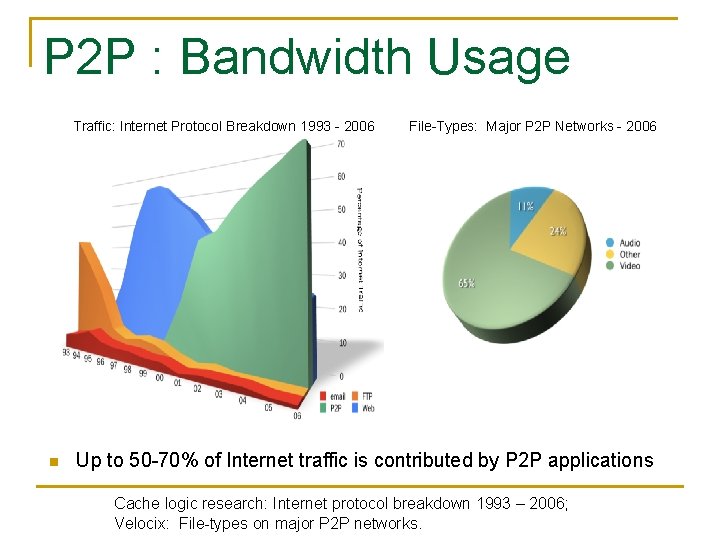

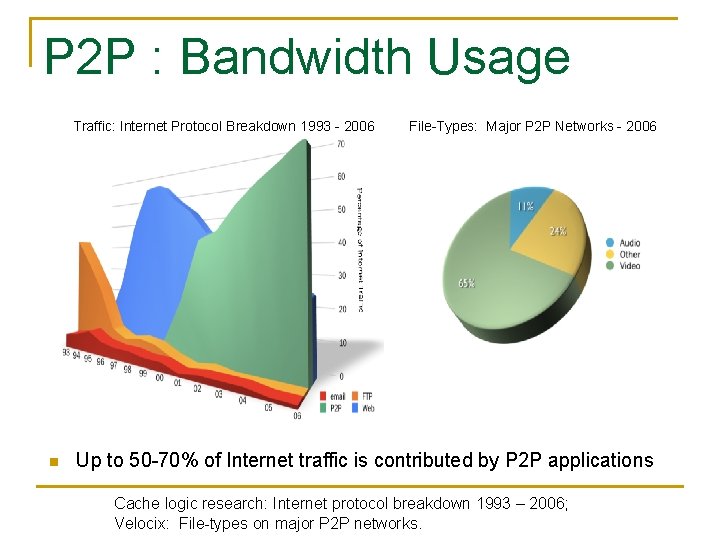

P2P: Bandwidth Usage

- Figure panels: "Traffic: Internet Protocol Breakdown 1993 - 2006" and "File-Types: Major P2P Networks - 2006"
- Up to 50-70% of Internet traffic is contributed by P2P applications

Sources: CacheLogic research: Internet protocol breakdown 1993 – 2006; Velocix: File-types on major P2P networks.

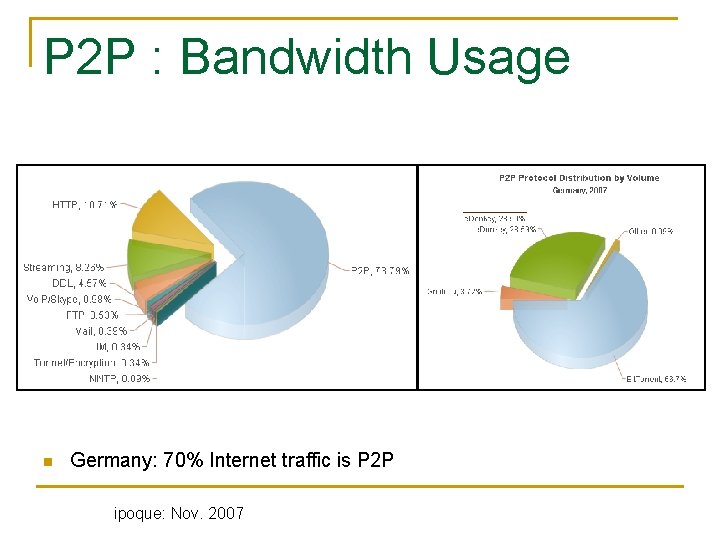

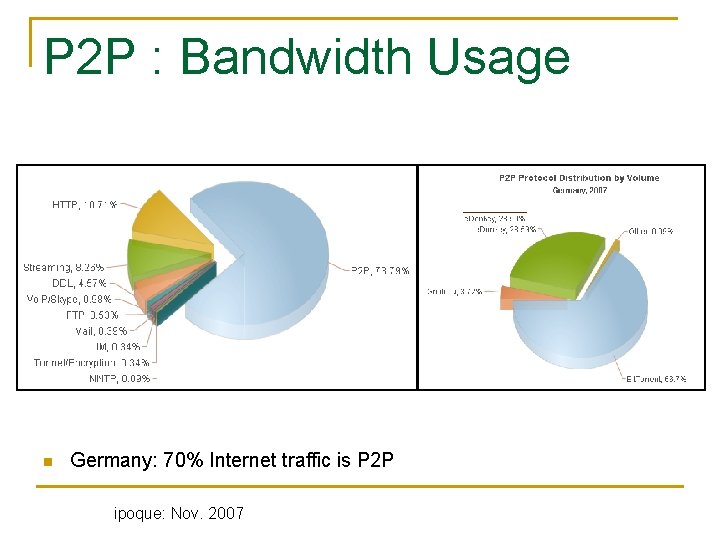

P2P: Bandwidth Usage

- Germany: 70% of Internet traffic is P2P

Source: ipoque, Nov. 2007

P2P Problem: Network Inefficiency

- P2P applications are largely network-oblivious and may not be network efficient
  - Verizon (2008)
    - average P2P bit traverses 1,000 miles on network
    - average P2P bit traverses 5.5 metro-hops
  - Karagiannis et al. on BitTorrent, a university network (2005)
    - 50%-90% of existing local pieces in active users are downloaded externally

ISP’s Attempts to Address P2P Issues

- Upgrade infrastructure
- Usage-based charging model
- Rate limiting, or termination of services
- P2P caching

ISPs cannot effectively address network efficiency alone.

P2P’s Attempt to Improve Network Efficiency

- P2P has flexibility in shaping communication patterns
- Adaptive P2P tries to use this flexibility to adapt to network topologies and conditions
  - e.g., selfish routing, Karagiannis et al. 2005, Bindal et al. 2006, Choffnes et al. 2008 (Ono)

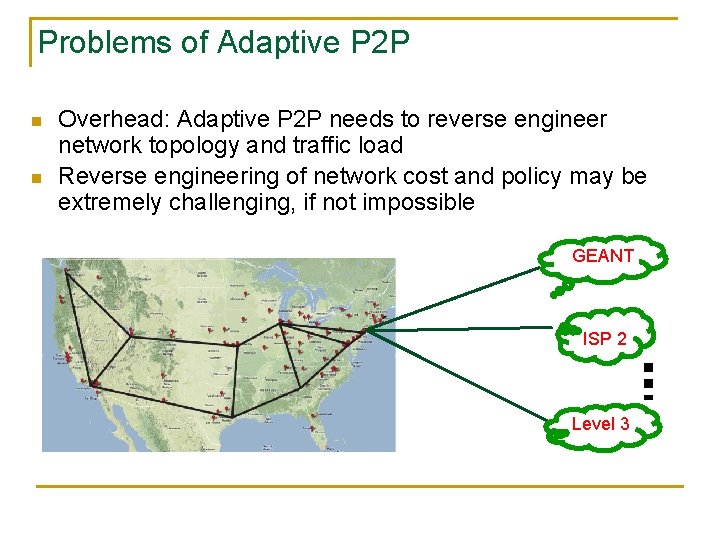

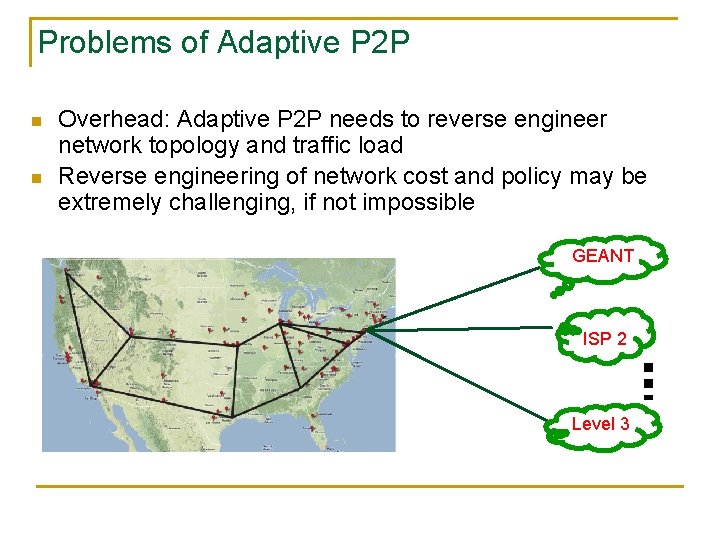

Problems of Adaptive P2P

- Overhead: Adaptive P2P needs to reverse engineer network topology and traffic load
- Reverse engineering of network cost and policy may be extremely challenging, if not impossible
- Diagram labels: GEANT, ISP 2, Level 3

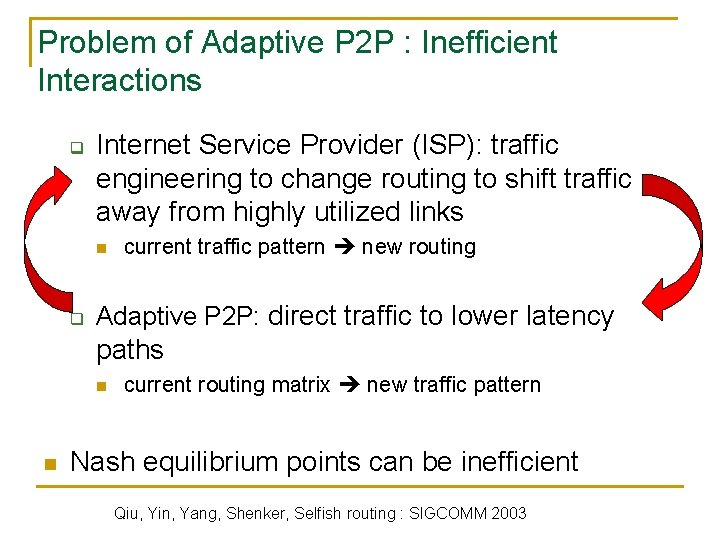

Problem of Adaptive P2P: Inefficient Interactions

- Internet Service Provider (ISP): traffic engineering to change routing to shift traffic away from highly utilized links
  - current traffic pattern → new routing
- Adaptive P2P: direct traffic to lower latency paths
  - current routing matrix → new traffic pattern
- Nash equilibrium points can be inefficient

Qiu, Yin, Yang, Shenker, Selfish routing: SIGCOMM 2003

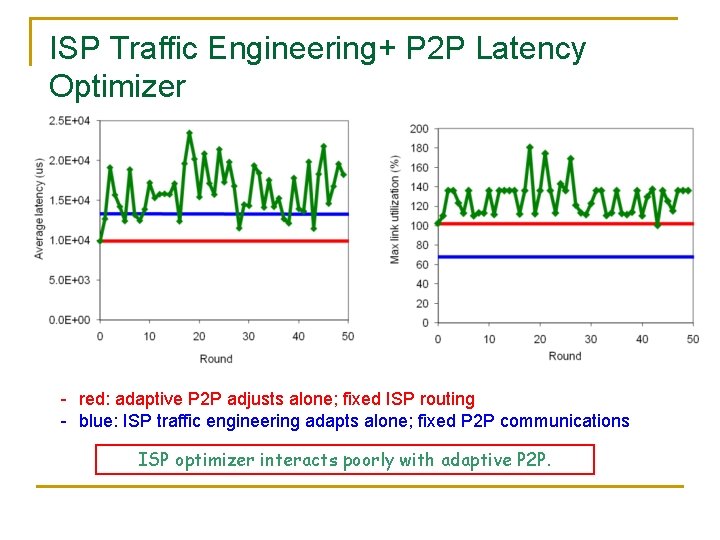

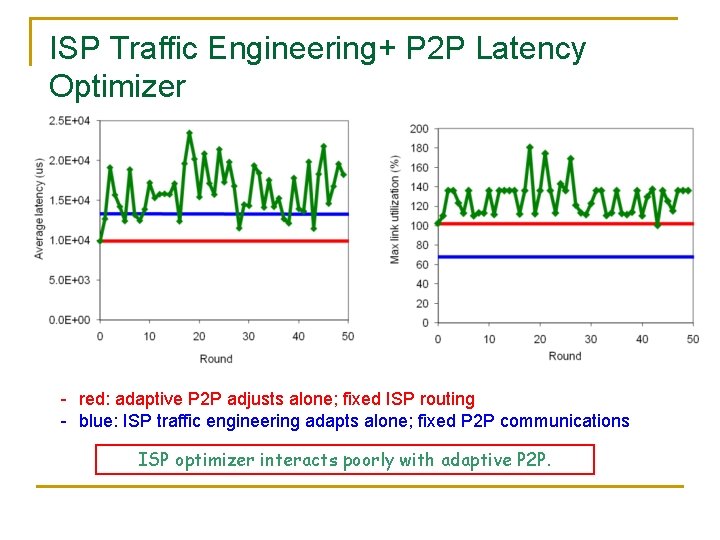

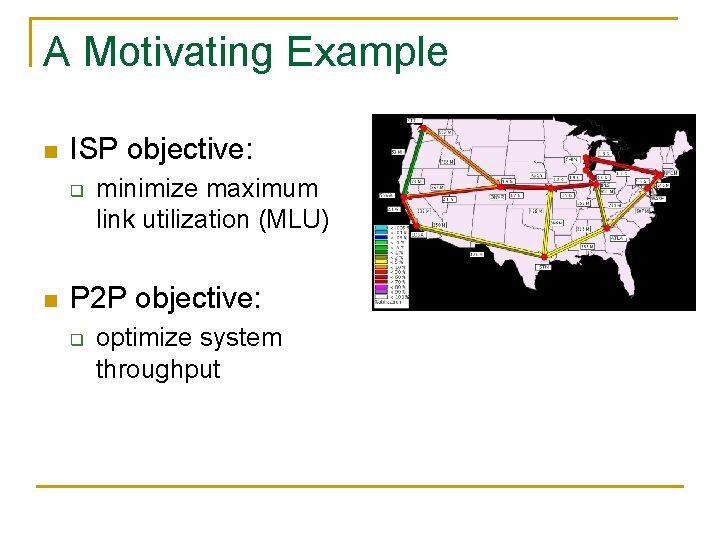

ISP Traffic Engineering + P2P Latency Optimizer

- red: adaptive P2P adjusts alone; fixed ISP routing
- blue: ISP traffic engineering adapts alone; fixed P2P communications

ISP optimizer interacts poorly with adaptive P2P.

A Fundamental Problem in Internet Architecture

- Feedback from Internet networks to network applications is extremely limited
  - e.g., end-to-end flow measurements and limited network feedback

P4P Objective

- Design an open framework to enable better cooperation between network providers and network applications

P4P: Provider Portal for (P2P) Applications

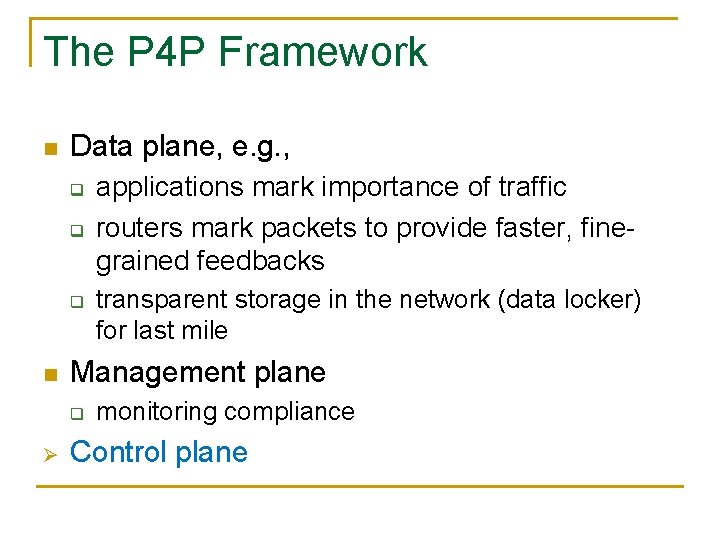

The P4P Framework

- Data plane, e.g.,
  - applications mark importance of traffic
  - routers mark packets to provide faster, fine-grained feedback
  - transparent storage in the network (data locker) for last mile
- Management plane
  - monitoring compliance
- Control plane

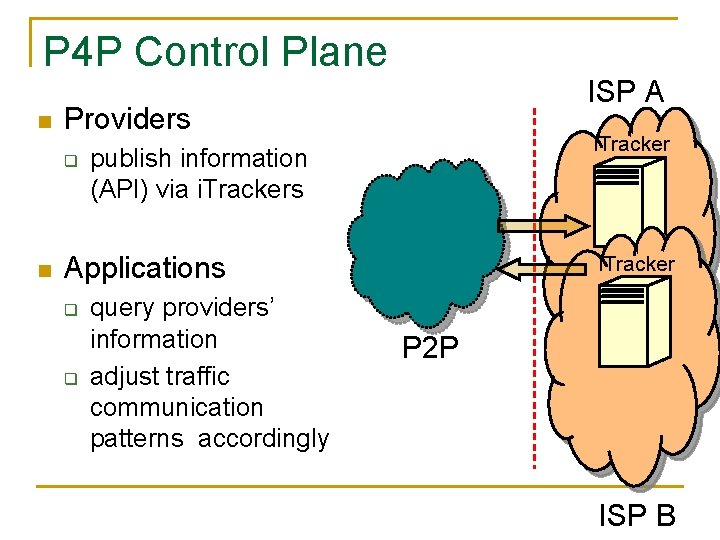

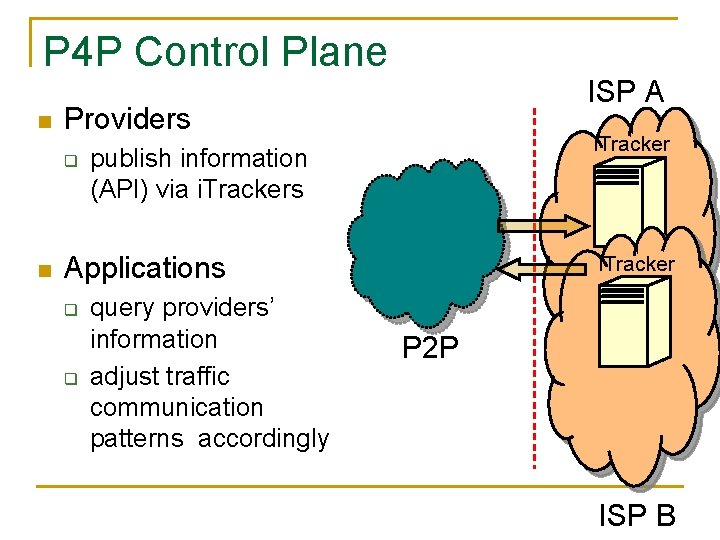

P4P Control Plane

- Providers
  - publish information (API) via iTrackers
- Applications
  - query providers’ information
  - adjust traffic communication patterns accordingly
- Diagram labels: ISP A, iTracker, P2P, iTracker, ISP B

Example: Tracker-based P2P

- Information flow
  - 1. peer queries appTracker
  - 2/3. appTracker queries iTracker
  - 4. appTracker selects a set of active peers
- Diagram labels: peer, appTracker, iTracker, ISP A, with steps 1 to 4 marked

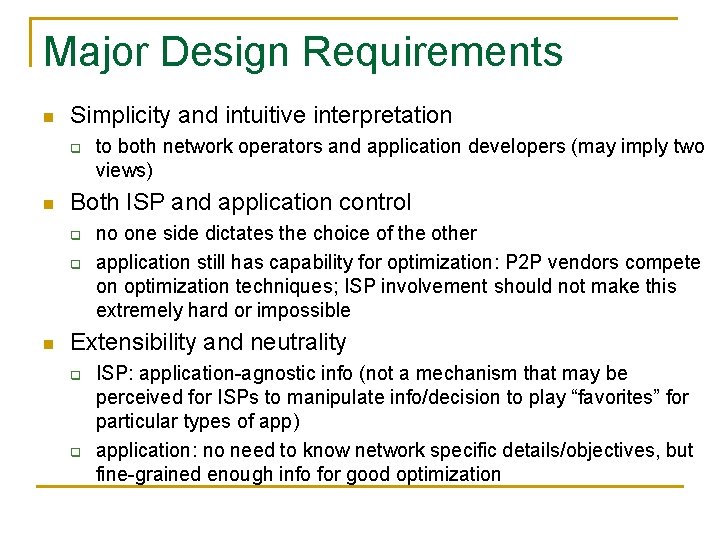

Two Major Design Requirements

- Both ISP and application control
  - no one side dictates the choice of the other
- Extensibility and neutrality
  - ISP: application-agnostic (no need to know application specific details)
  - application: network-agnostic (no need to know network specific details/objectives)

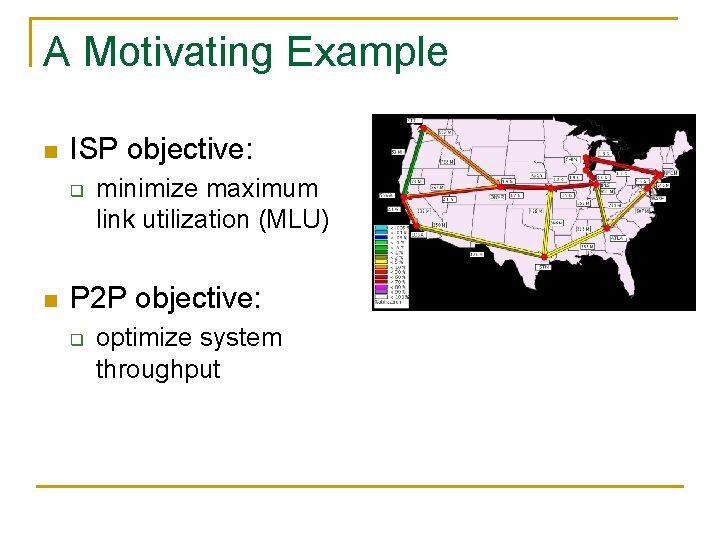

A Motivating Example

- ISP objective:
  - minimize maximum link utilization (MLU)
- P2P objective:
  - optimize system throughput

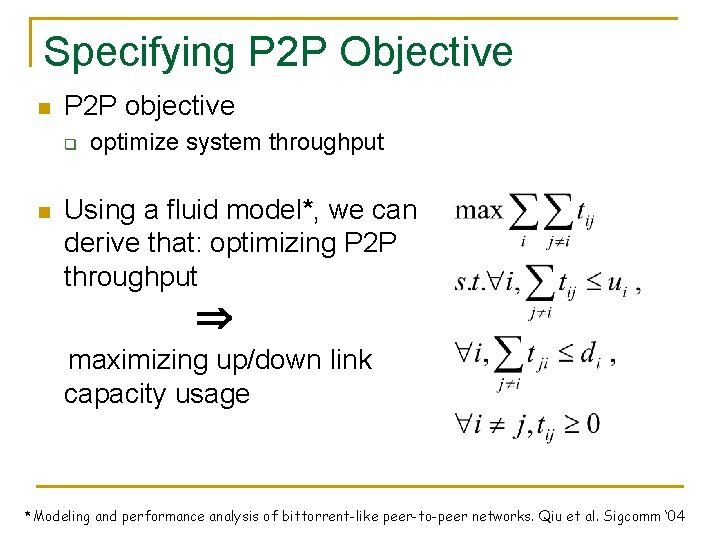

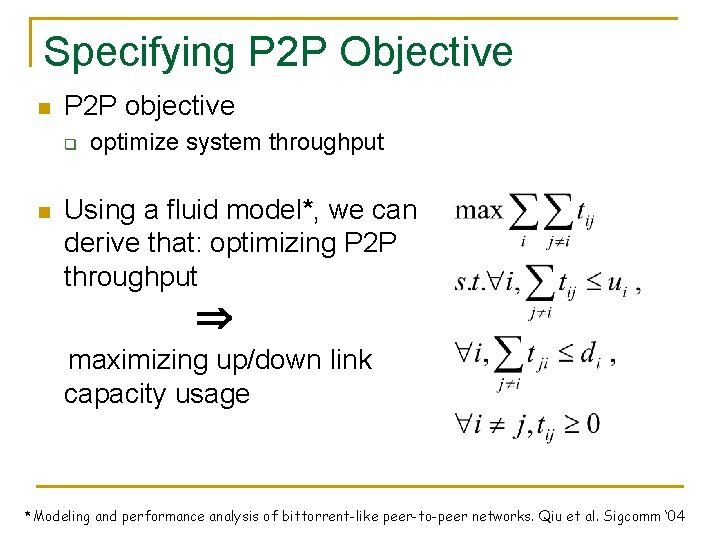

Specifying P2P Objective

- P2P objective
  - optimize system throughput
- Using a fluid model*, we can derive that optimizing P2P throughput amounts to maximizing up/down link capacity usage

*Modeling and performance analysis of BitTorrent-like peer-to-peer networks. Qiu et al. SIGCOMM ’04

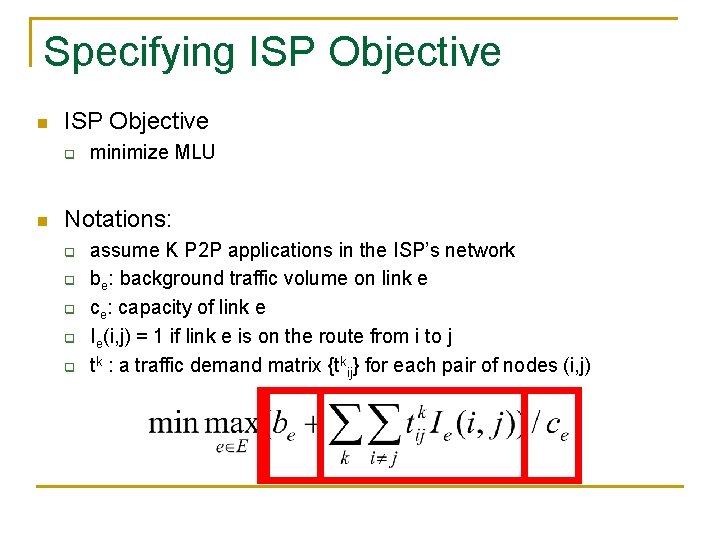

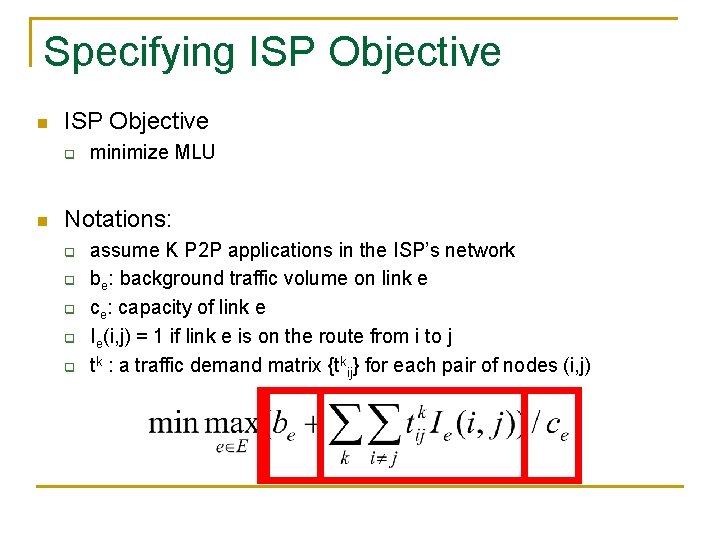

Specifying ISP Objective

- ISP objective
  - minimize MLU
- Notations:
  - assume $K$ P2P applications in the ISP’s network
  - $b_e$: background traffic volume on link $e$
  - $c_e$: capacity of link $e$
  - $I_e(i, j) = 1$ if link $e$ is on the route from $i$ to $j$
  - $t^k$: a traffic demand matrix $\{t^k_{ij}\}$ for each pair of nodes $(i, j)$

System Formulation

- Combine the objectives of ISP and applications
- s.t., for any $k$, $t^k \in T^k$ (diagram label: $T^1$)
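The combined formulation appears on the slide only as an image. A reconstruction from the notation defined for the ISP objective, offered as a sketch rather than the slide's exact expression, minimizes the MLU over the applications' traffic patterns. Here $T^k$ is assumed to denote the set of traffic patterns acceptable to application $k$ (for example, those achieving its best throughput), and $\alpha$ is an auxiliary variable introduced for the equivalent linear form:

$$
\min_{\{t^k\}} \; \max_{e} \; \frac{b_e + \sum_{k=1}^{K} \sum_{i \neq j} t^k_{ij}\, I_e(i,j)}{c_e}
\quad \text{s.t.} \quad t^k \in T^k \ \text{for any } k
$$

$$
\Longleftrightarrow \quad
\min_{\alpha,\,\{t^k \in T^k\}} \; \alpha
\quad \text{s.t.} \quad
b_e + \sum_{k=1}^{K} \sum_{i \neq j} t^k_{ij}\, I_e(i,j) \;\le\; \alpha\, c_e \ \text{for every link } e .
$$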

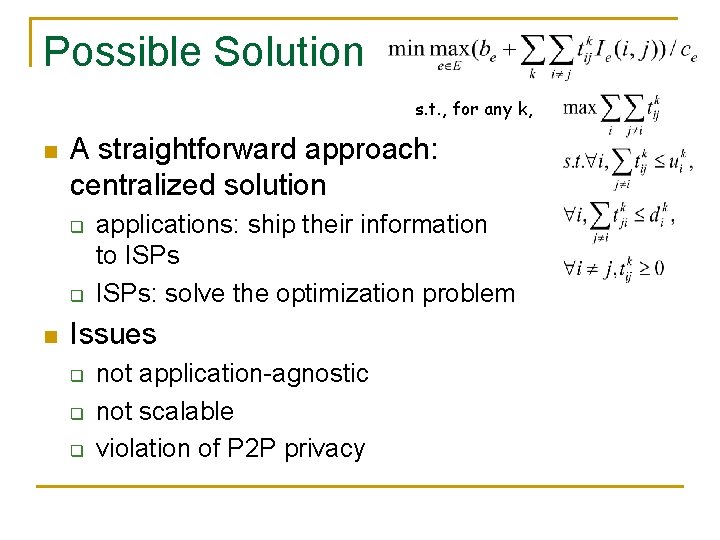

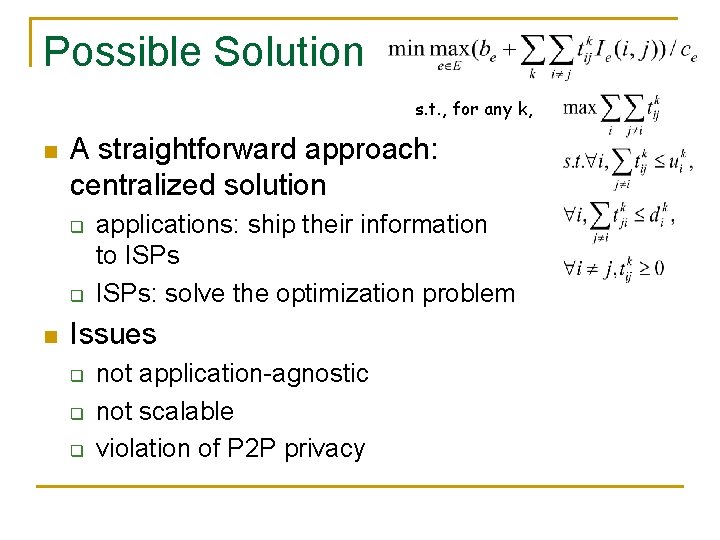

Possible Solution

- A straightforward approach: centralized solution
  - applications: ship their information to ISPs
  - ISPs: solve the optimization problem
- Issues
  - not application-agnostic
  - not scalable
  - violation of P2P privacy

Constraints Couple Entities

Constraints couple ISP/P2Ps together!

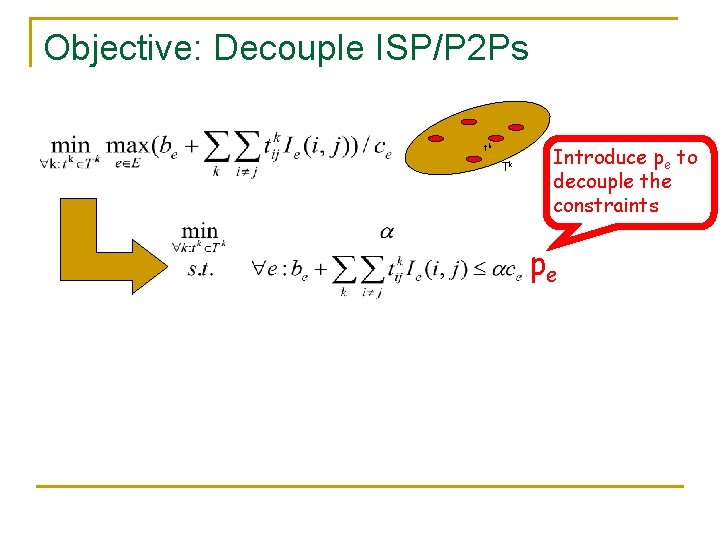

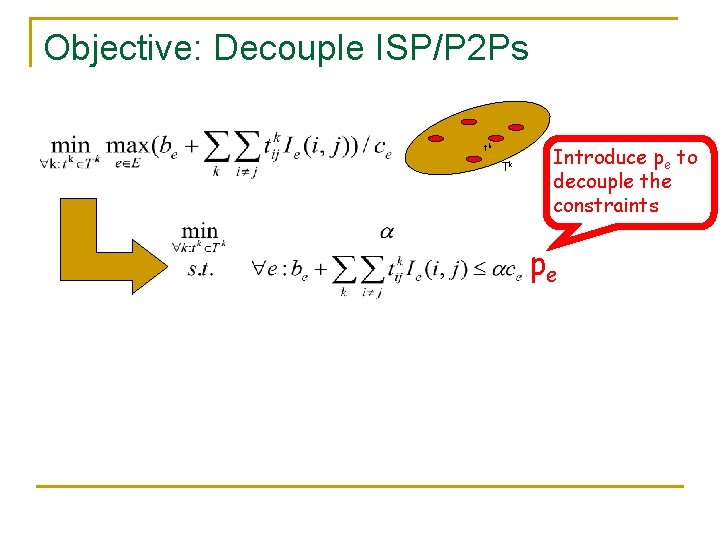

Objective: Decouple ISP/P2Ps

- Introduce $p_e$ to decouple the constraints
- Diagram labels: $t^k \in T^k$, $p_e$

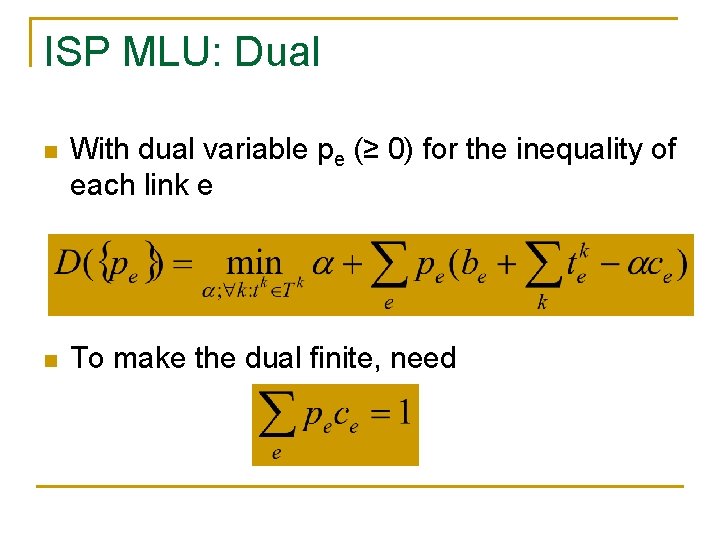

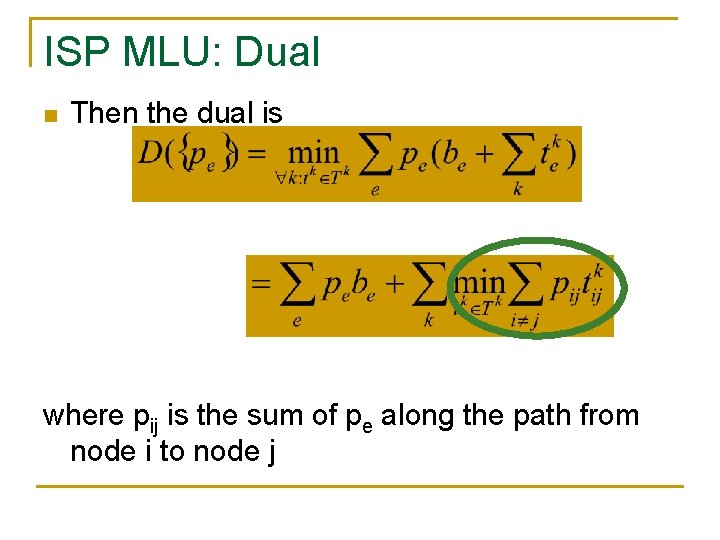

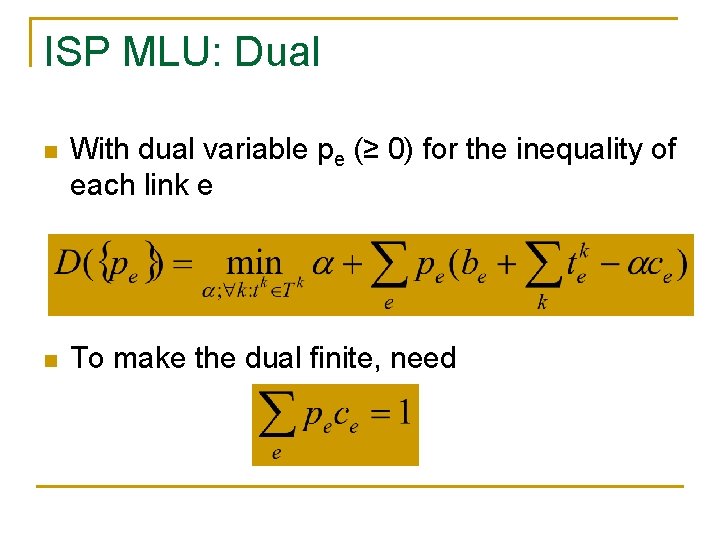

ISP MLU: Dual

- With dual variable $p_e$ (≥ 0) for the inequality of each link $e$
- To make the dual finite, need:

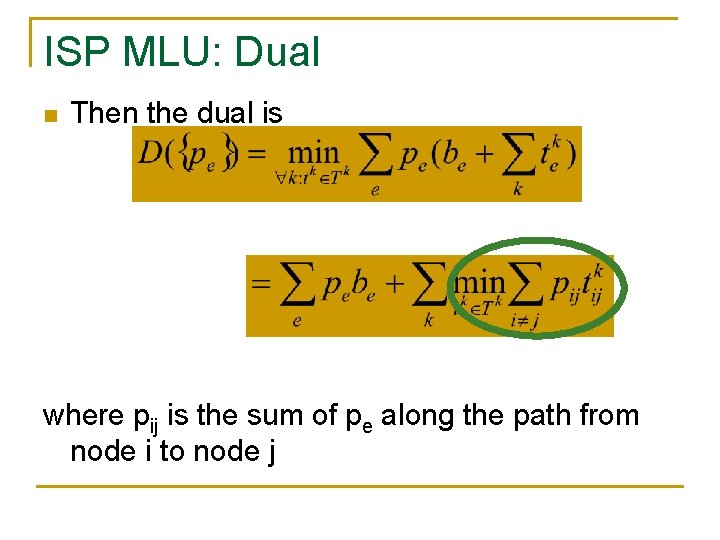

ISP MLU: Dual

- Then the dual is as shown in the figure, where $p_{ij}$ is the sum of $p_e$ along the path from node $i$ to node $j$
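The dual formulas are images on the slides. The derivation below is a reconstruction under one assumption: that the MLU problem is written as minimizing an auxiliary variable $\alpha$ subject to $b_e + \sum_k \sum_{i \neq j} t^k_{ij} I_e(i,j) \le \alpha c_e$ for every link $e$, with $t^k \in T^k$. Attaching a price $p_e \ge 0$ to each link constraint gives the Lagrangian

$$
L(\alpha, t, p) \;=\; \alpha + \sum_e p_e \Big( b_e + \sum_{k} \sum_{i \neq j} t^k_{ij}\, I_e(i,j) - \alpha\, c_e \Big)
\;=\; \alpha \Big( 1 - \sum_e p_e c_e \Big) + \sum_e p_e b_e + \sum_k \sum_{i \neq j} p_{ij}\, t^k_{ij},
$$

$$
p_{ij} \;=\; \sum_e p_e\, I_e(i,j).
$$

Since $\alpha$ is unconstrained, the minimum over $\alpha$ is finite only if

$$
\sum_e p_e c_e = 1,
$$

and under that condition the dual function separates across applications:

$$
D(\{p_e\}) \;=\; \sum_e p_e b_e \;+\; \sum_{k} \; \min_{t^k \in T^k} \; \sum_{i \neq j} p_{ij}\, t^k_{ij} .
$$

Each application $k$ then only needs the pairwise values $p_{ij}$ to solve its own subproblem, which is consistent with the statement that the interface is the dual variables.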

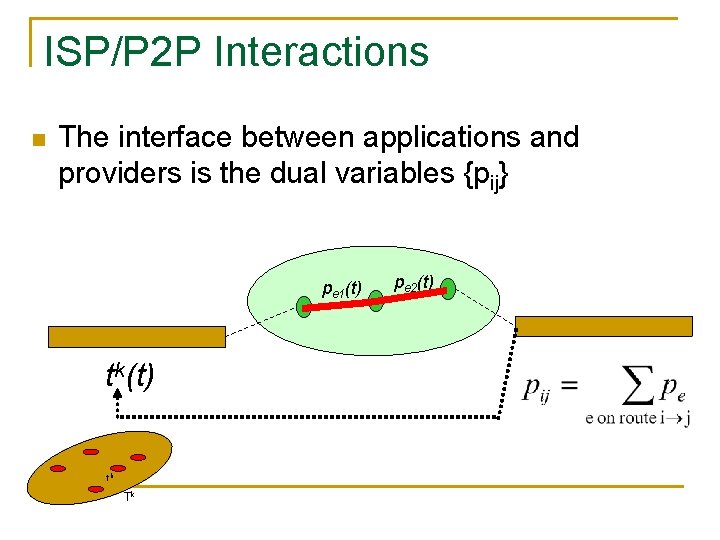

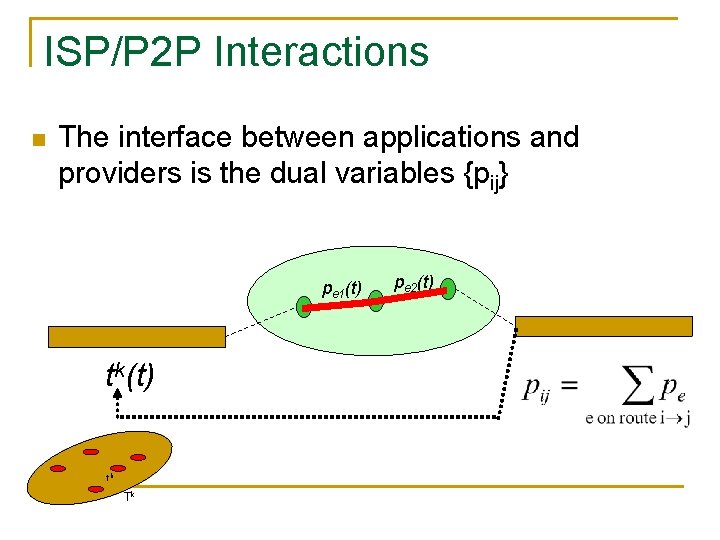

ISP/P2P Interactions

- The interface between applications and providers is the dual variables $\{p_{ij}\}$
- Diagram labels: $p_{e_1}(t)$, $p_{e_2}(t)$, $t^k \in T^k$

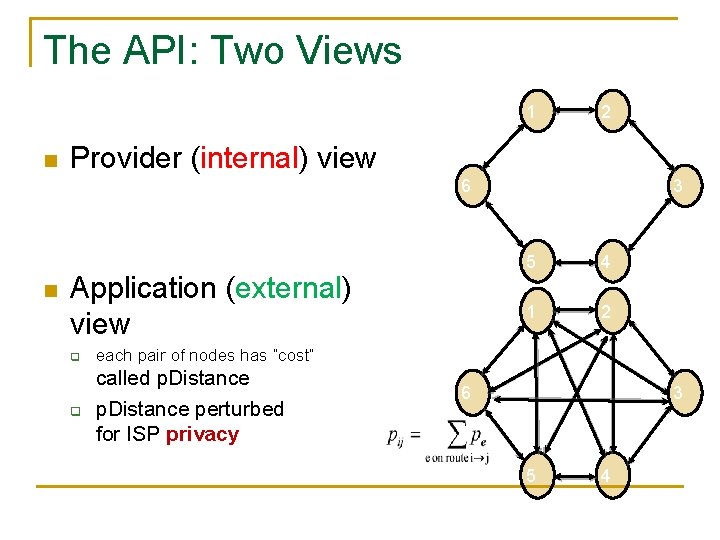

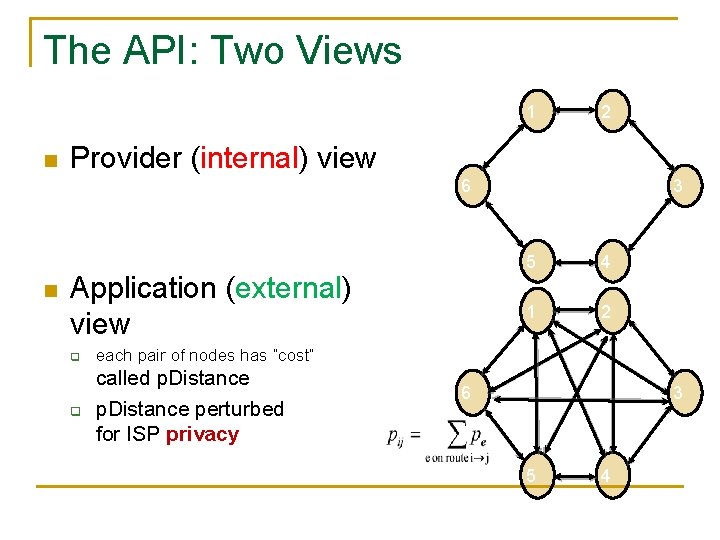

The API: Two Views

- Provider (internal) view
- Application (external) view
  - each pair of nodes has a “cost”, called pDistance
  - perturbed for ISP privacy
- Diagram: a six-node topology (nodes 1 to 6) drawn once for each view

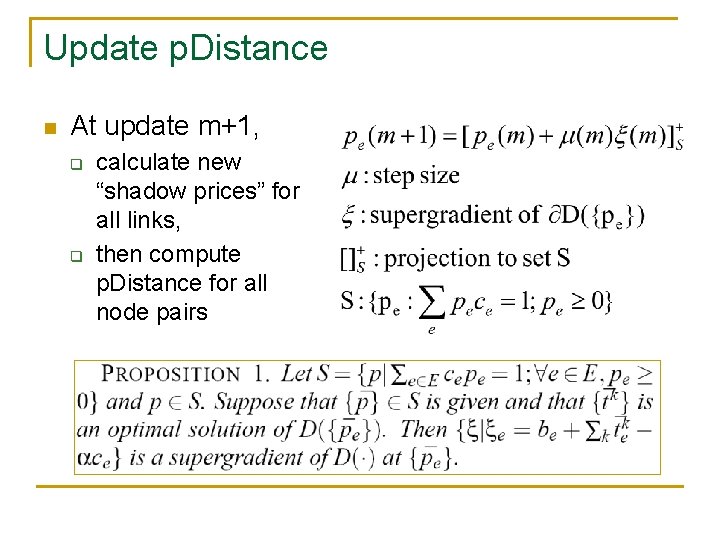

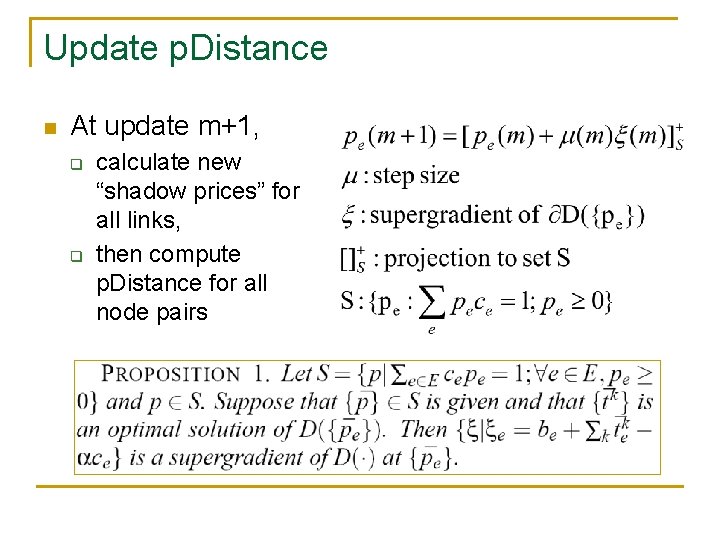

Update pDistance

- At update m+1,
  - calculate new “shadow prices” for all links,
  - then compute pDistance for all node pairs
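The two steps can be sketched as follows. The slides do not spell out the price update rule, so this example assumes a gradient-style step that raises each link price in proportion to its load, clips at zero, and rescales so that the capacity-weighted prices sum to 1. The rescaling is a simplification standing in for a proper projection, and the normalization itself is an assumption. All names, including `update_link_prices` and `p_distances`, are hypothetical.

```python
def update_link_prices(prices, capacity, load, step):
    """One simplified shadow-price update for all links.

    prices, capacity, load: dicts keyed by link id.
    Raises the price of each link in proportion to its load, clips at 0,
    then rescales so that sum(p_e * c_e) == 1 (assumed normalization).
    """
    raised = {e: max(0.0, prices[e] + step * load[e]) for e in prices}
    norm = sum(raised[e] * capacity[e] for e in raised)
    if norm == 0:
        return dict(prices)  # nothing to rescale; keep the old prices
    return {e: raised[e] / norm for e in raised}


def p_distances(prices, routes):
    """pDistance for each node pair: sum of link prices along its route.

    routes: dict mapping (i, j) to the list of link ids on the route.
    """
    return {pair: sum(prices[e] for e in links) for pair, links in routes.items()}


prices = {"a": 0.05, "b": 0.05}
capacity = {"a": 10.0, "b": 10.0}
load = {"a": 8.0, "b": 2.0}  # link "a" is the busier link
routes = {(1, 2): ["a"], (1, 3): ["a", "b"]}

new_prices = update_link_prices(prices, capacity, load, step=0.01)
print(new_prices)
print(p_distances(new_prices, routes))
```

After the update the busier link carries the higher price, so node pairs routed over it appear farther apart to applications.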

Generalization

- The API handles other ISP objectives and P2P objectives

| ISPs | Applications |
| --- | --- |
| Minimize MLU | Maximize throughput |
| Minimize bit-distance product | Robustness |
| Minimize interdomain cost | Rank peers using pDistance |
| Customized objectives | … |
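One of the application-side uses listed above, ranking peers using pDistance, could look like the sketch below. It assumes the application already holds a pDistance table keyed by node pairs and knows which network node each candidate peer attaches to. The function name, the data layout, and the choice to put unknown pairs last are all illustrative assumptions.

```python
def rank_peers(client_node, candidates, pdistance, k):
    """Return up to k peer ids, ordered by increasing pDistance from the client.

    candidates: list of (peer_id, node) tuples.
    pdistance: dict mapping (from_node, to_node) to a cost.
    Pairs missing from the table are treated as infinitely far (assumption).
    """
    def cost(peer):
        _, node = peer
        return pdistance.get((client_node, node), float("inf"))

    ranked = sorted(candidates, key=cost)  # stable sort keeps ties in input order
    return [peer_id for peer_id, _ in ranked[:k]]


pdistance = {(1, 1): 0.0, (1, 2): 0.065, (1, 3): 0.1}
candidates = [("peerA", 3), ("peerB", 1), ("peerC", 2), ("peerD", 9)]
print(rank_peers(1, candidates, pdistance, k=3))
```

A real application would likely mix in some random or distant peers for robustness, which this sketch leaves out.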

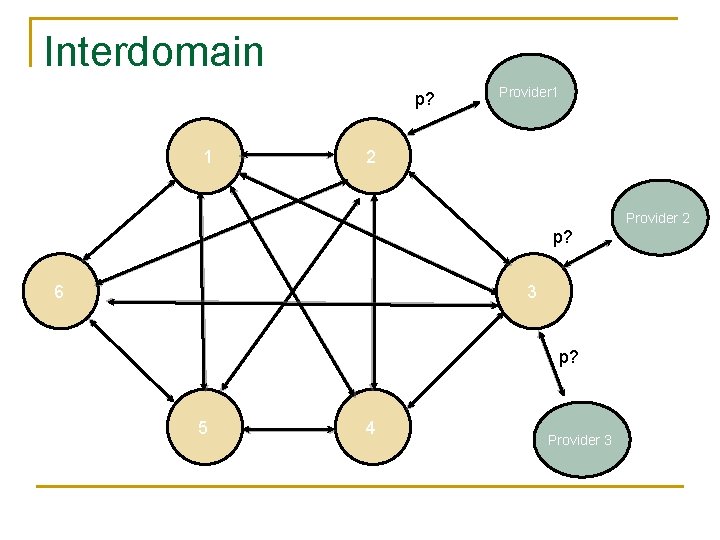

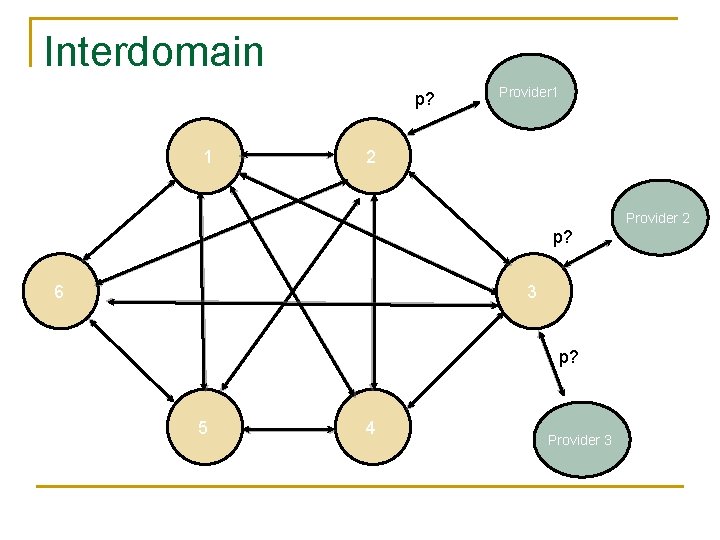

Interdomain

- Diagram: nodes 1 to 6 spread across Provider 1, Provider 2, and Provider 3, with three quantities marked “p?”

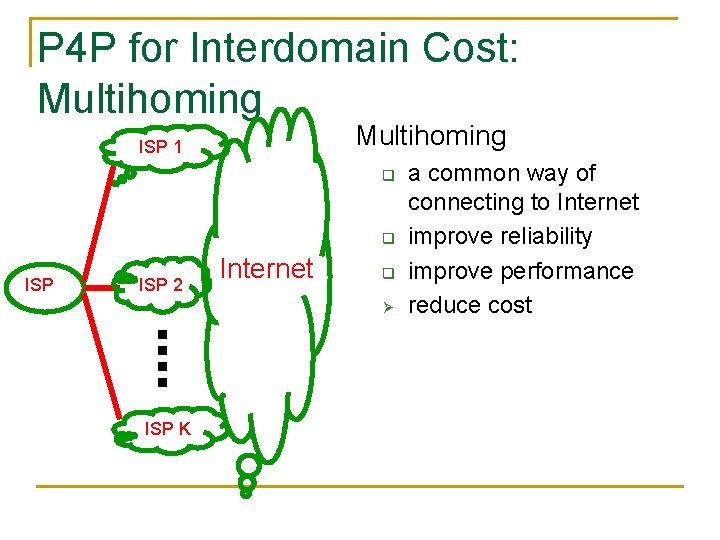

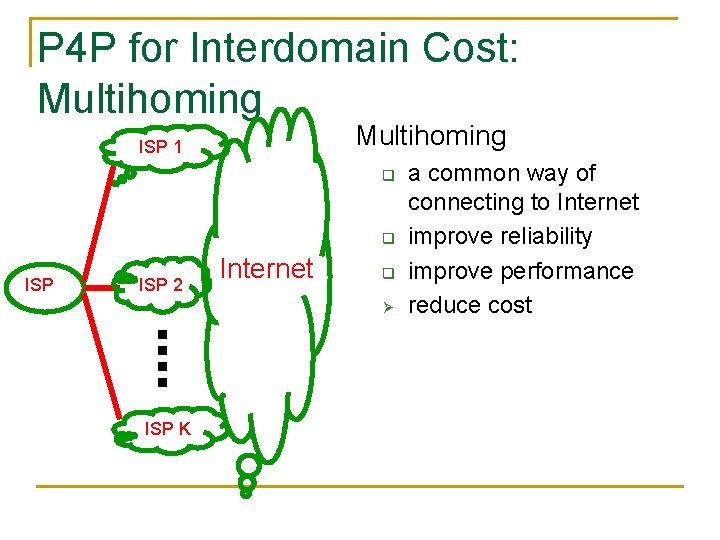

P4P for Interdomain Cost: Multihoming

- Multihoming
  - a common way of connecting to Internet
  - improve reliability
  - improve performance
  - reduce cost
- Diagram: connections to the Internet through ISP 1, ISP 2, ..., ISP K

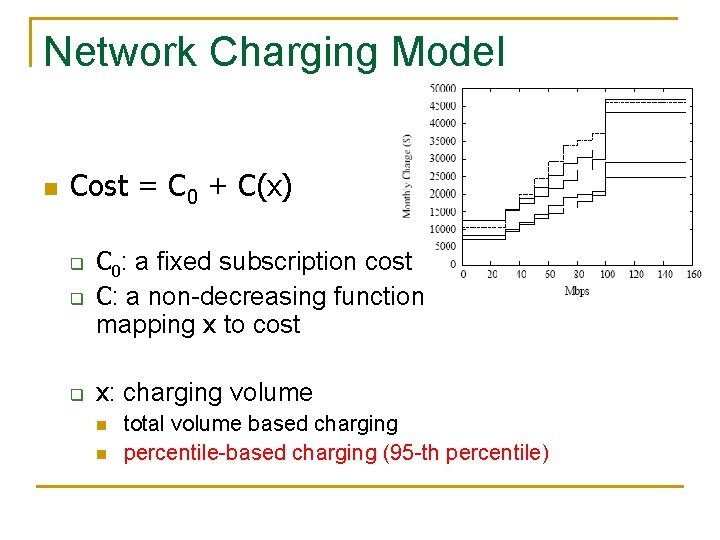

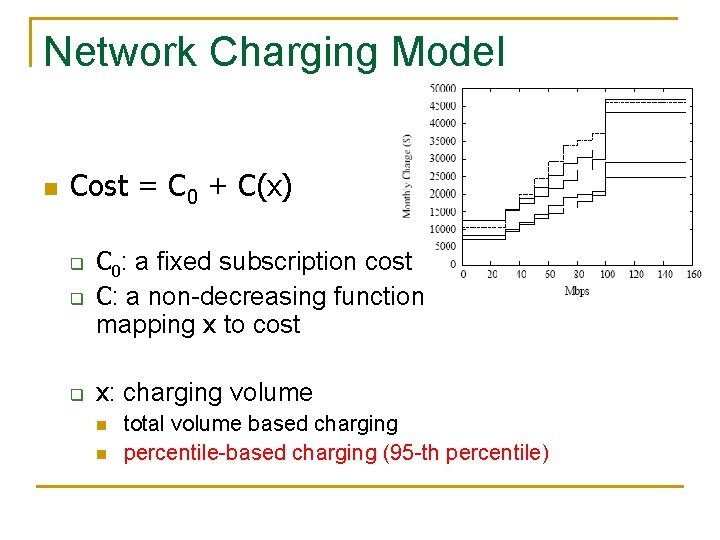

Network Charging Model

- Cost = C0 + C(x)
  - C0: a fixed subscription cost
  - C: a non-decreasing function mapping x to cost
  - x: charging volume
    - total volume based charging
    - percentile-based charging (95-th percentile)

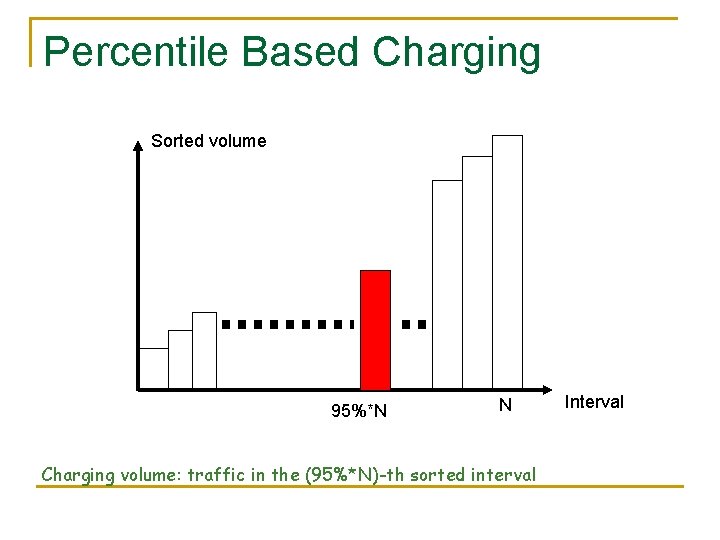

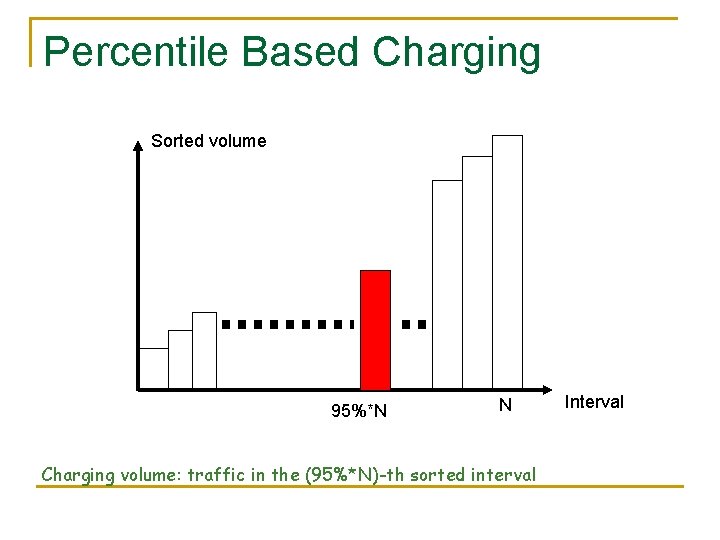

Percentile Based Charging

- Figure: sorted volume per interval, over N intervals, with position 95%*N marked
- Charging volume: traffic in the (95%*N)-th sorted interval
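A minimal sketch of the charging-volume rule: sort the per-interval volumes in increasing order and read off the entry at position 95%*N. Rounding that position up when it is not an integer is an assumption, since conventions differ between providers, and the function name is illustrative.

```python
def charging_volume(volumes, percentile=95):
    """Traffic volume in the (percentile% * N)-th sorted interval.

    volumes: per-interval traffic volumes (for example, 5-minute samples).
    The position is rounded up to the next integer (assumed convention).
    """
    ordered = sorted(volumes)
    n = len(ordered)
    position = max(1, (percentile * n + 99) // 100)  # integer ceiling of percentile*n/100
    return ordered[position - 1]


# 20 intervals: 19 quiet ones and a single burst. The burst falls in the
# top 5% of intervals, so it does not set the charging volume.
samples = [10] * 19 + [1000]
print(charging_volume(samples))
```

This is why short bursts are free under percentile-based charging, while sustained peaks are not.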

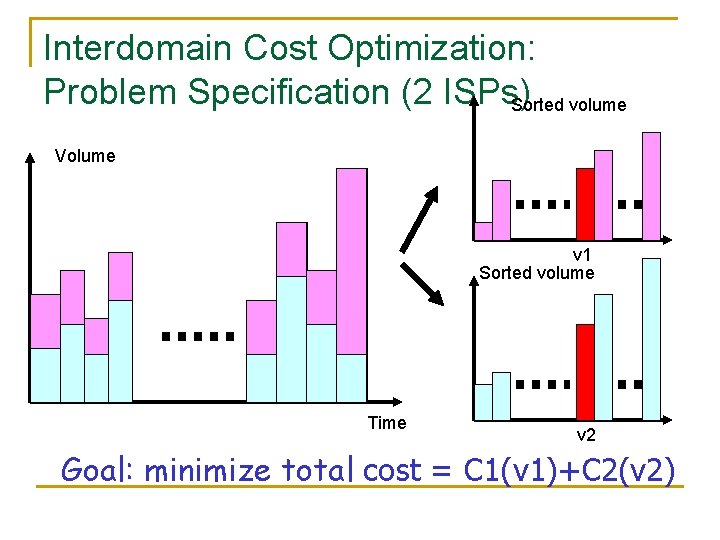

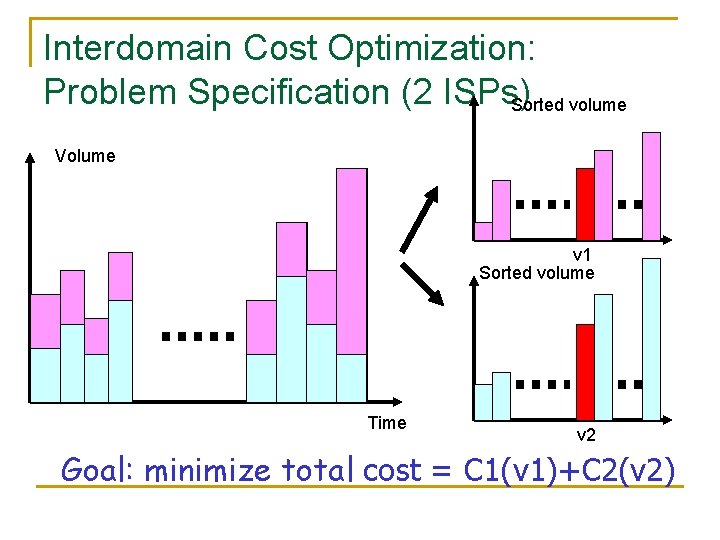

Interdomain Cost Optimization: Problem Specification (2 ISPs)

- Figure: traffic volume over time, and the sorted volume for each ISP with charging volumes v1 and v2
- Goal: minimize total cost = C1(v1) + C2(v2)

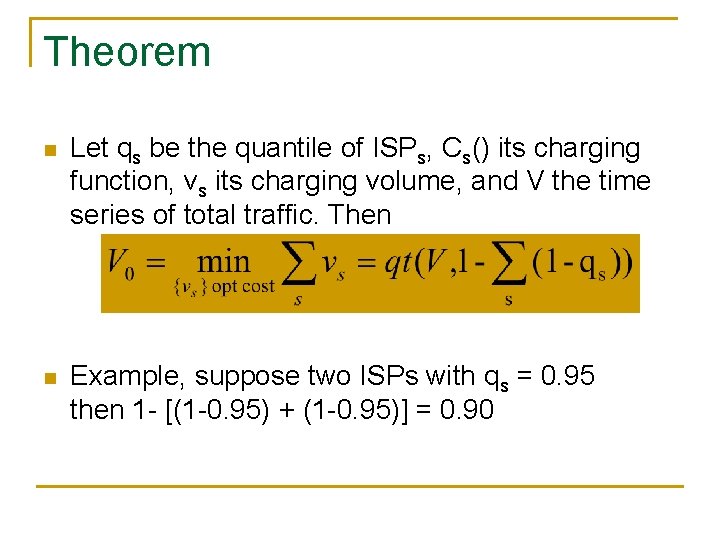

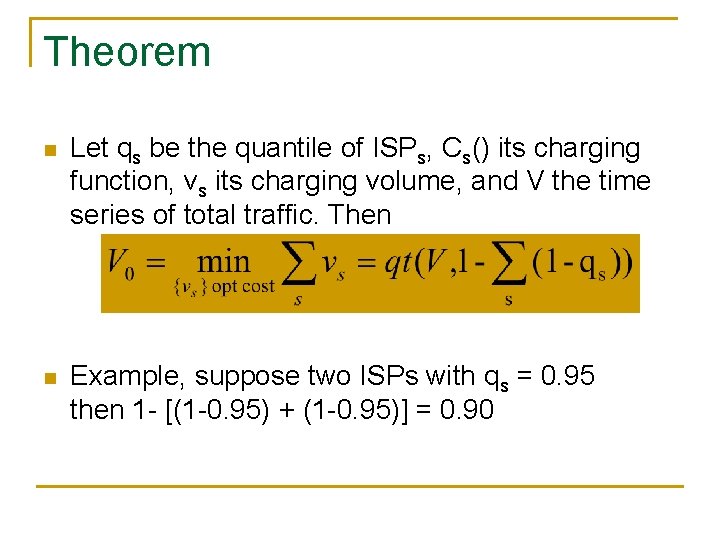

Theorem

- Let $q_s$ be the quantile of ISP $s$, $C_s()$ its charging function, $v_s$ its charging volume, and $V$ the time series of total traffic. Then a bound on the charging volumes $v_s$ holds in terms of a quantile of $V$.
- Example: suppose two ISPs with $q_s = 0.95$; then 1 − [(1 − 0.95) + (1 − 0.95)] = 0.90
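The inequality itself is missing from the extracted text. The example's arithmetic suggests a bound of the following form, familiar from work on multihoming cost optimization; treat it as a reconstruction, with $\mathrm{qt}(V, q)$ used here as a hypothetical notation for the $q$-th quantile of the series $V$:

$$
\sum_s v_s \;\ge\; \mathrm{qt}\Big(V,\; 1 - \sum_s (1 - q_s)\Big).
$$

With two ISPs charging at $q_s = 0.95$, the right-hand side is the 0.90 quantile of the total traffic: each ISP can absorb bursts for free in at most 5% of the intervals, so together they can hide at most 10% of the intervals.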

Sketch of ISP Algorithm

1. Determine charging volume for each ISP
   - compute $V_0$
   - use dynamic programming to find $\{v_s\}$ that minimize $\sum_s c_s(v_s)$ subject to $\sum_s v_s = V_0$
2. Assign traffic threshold $v$ for each ISP at each interval
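Step 1 can be illustrated with a small dynamic program. This is a sketch under stated assumptions, not the algorithm from the talk: volumes are discretized into integer units, $V_0$ is taken as given, and the two cost functions in the example are invented for illustration.

```python
def split_charging_volume(total, cost_fns):
    """Split `total` integer units across ISPs to minimize the summed cost.

    cost_fns: one non-decreasing cost function per ISP, taking a volume.
    Returns (min_cost, volumes) with sum(volumes) == total.
    """
    inf = float("inf")
    # best[u] = (cost, volumes) for carrying exactly u units on the ISPs seen so far.
    best = [(0.0, [])] + [(inf, [])] * total
    for cost in cost_fns:
        nxt = []
        for u in range(total + 1):
            # Give v units to the current ISP and u - v units to the earlier ones.
            options = [
                (best[u - v][0] + cost(v), best[u - v][1] + [v])
                for v in range(u + 1)
                if best[u - v][0] < inf
            ]
            nxt.append(min(options, key=lambda o: o[0]) if options else (inf, []))
        best = nxt
    return best[total]


# Hypothetical cost functions: ISP 1 charges linearly, ISP 2 quadratically.
cost, volumes = split_charging_volume(5, [lambda v: 2 * v, lambda v: v * v])
print(cost, volumes)
```

The table has one entry per volume level per ISP, so the running time grows with the number of ISPs times the square of the number of volume units.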

Integrating Cost Min with P4P

Evaluation Methodology

- BitTorrent simulations
  - Build a simulation package for BitTorrent
  - Use topologies of Abilene and Tier-1 ISPs in simulations
- Abilene experiment using BitTorrent
  - Run BitTorrent clients on PlanetLab nodes in Abilene
  - Interdomain emulation
- Field tests using Pando clients
  - Applications: Pando pushed videos to 1.25 million clients
  - Providers: Telefonica/Verizon iTrackers

BitTorrent Simulation: Bottleneck Link Utilization

- Curves: native, localized, P4P
- P4P results in less than half the utilization on bottleneck links

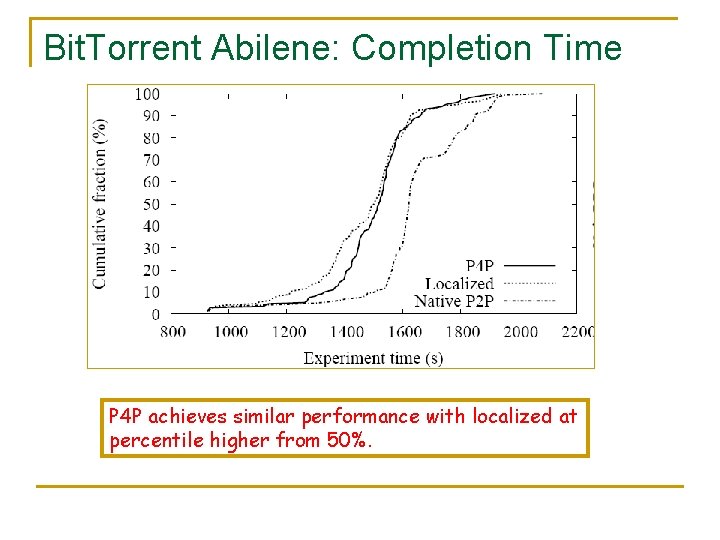

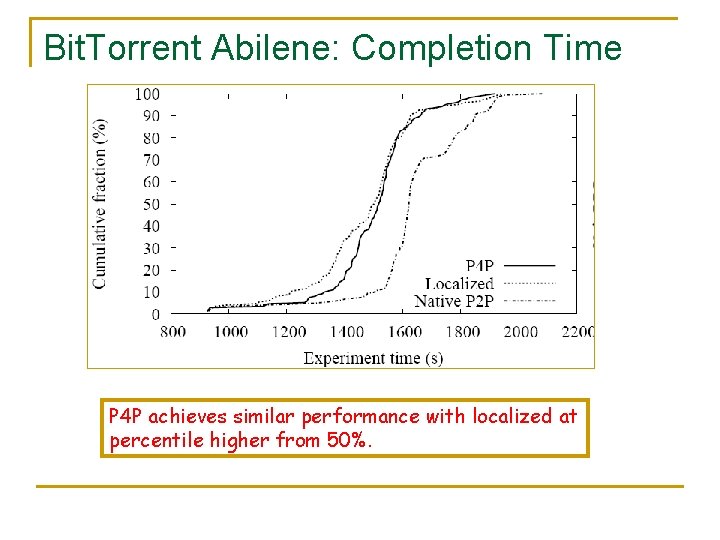

BitTorrent Abilene: Completion Time

- P4P achieves performance similar to localized at percentiles above 50%.

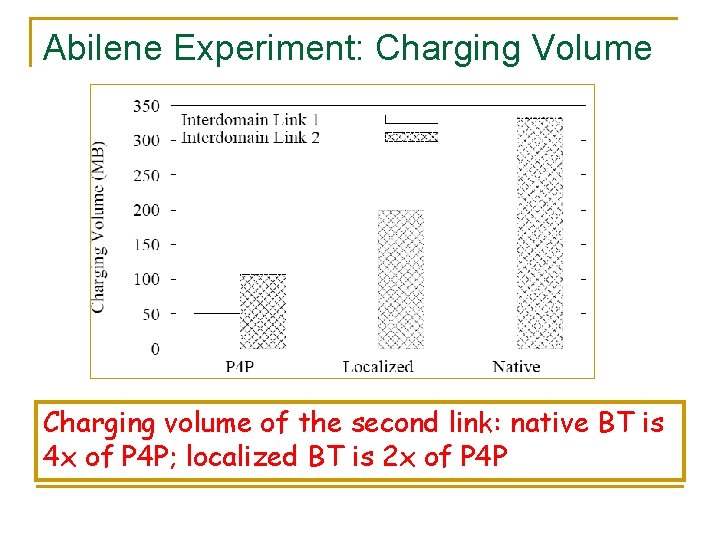

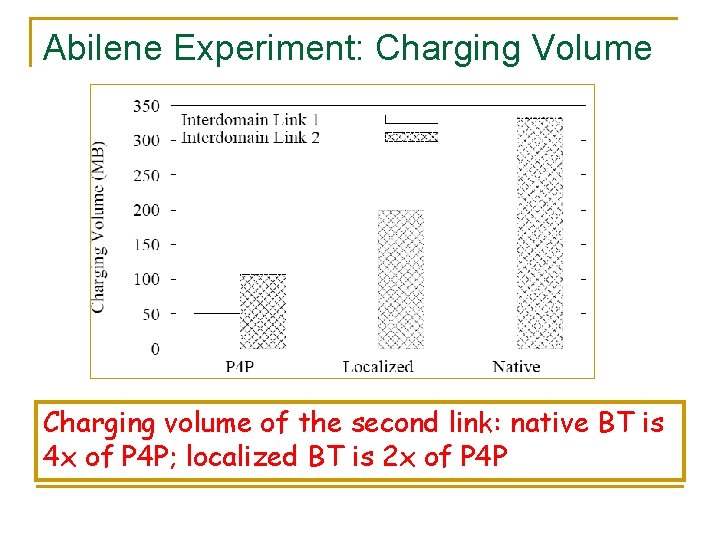

Abilene Experiment: Charging Volume

- Charging volume of the second link: native BT is 4x that of P4P; localized BT is 2x that of P4P

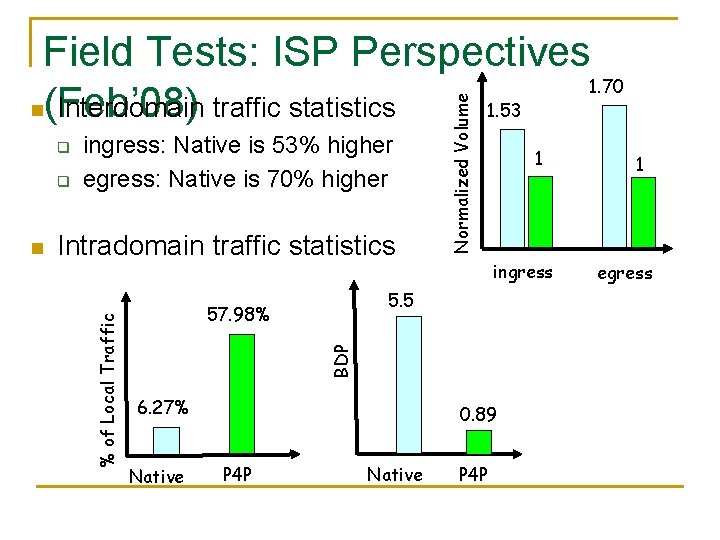

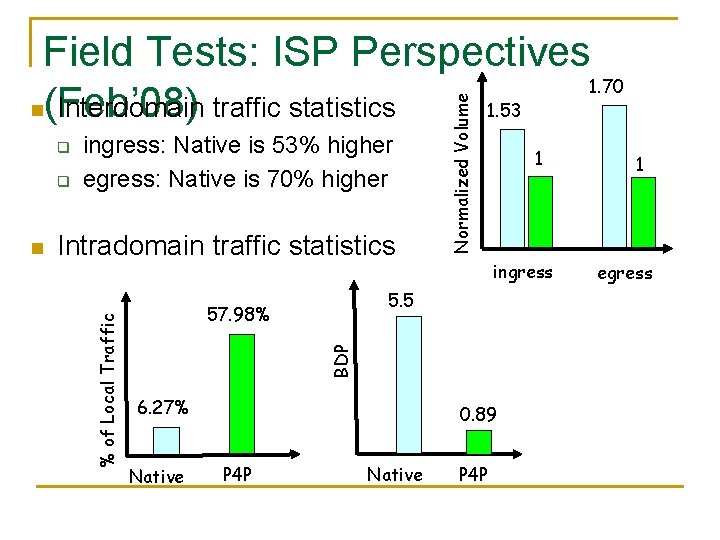

Field Tests: ISP Perspectives (Feb ’08)

- Interdomain traffic statistics (normalized volume, P4P = 1)
  - ingress: Native is 53% higher (1.53)
  - egress: Native is 70% higher (1.70)
- Intradomain traffic statistics
  - BDP: Native 5.5, P4P 0.89
  - % of local traffic: Native 6.27%, P4P 57.98%

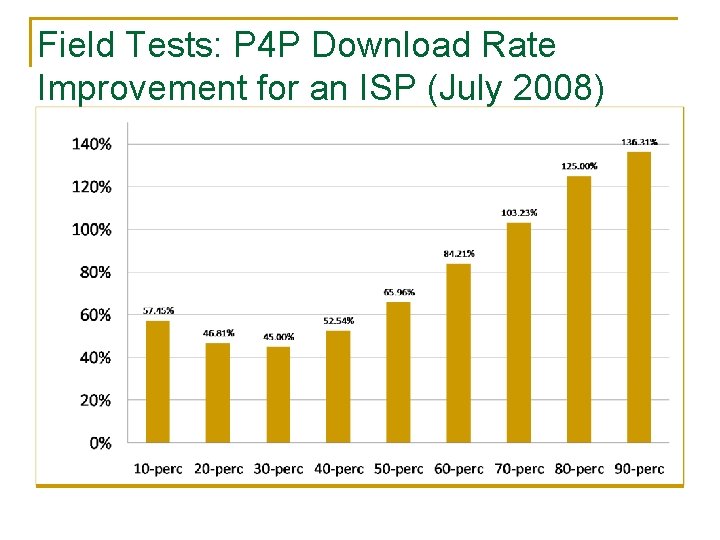

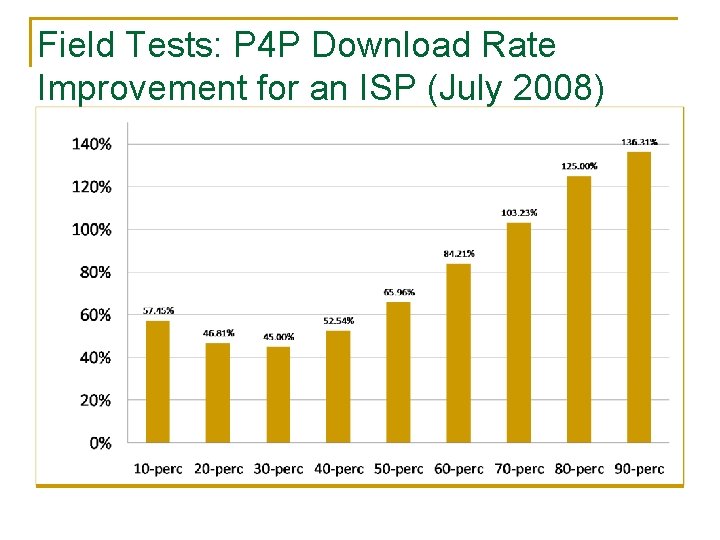

Field Tests: P4P Download Rate Improvement for an ISP (July 2008)

Summary & Future Work

- Summary
  - P4P for cooperative Internet traffic control
  - Optimization decomposition to design an extensible and scalable framework
  - Concurrent efforts: e.g., Feldmann et al., Telefonica/Thomson
- Future work
  - P4P capability interface (caching, CoS, data locker)
  - Other P2P application integration (streaming)
  - Incentives, privacy, and security analysis of P4P

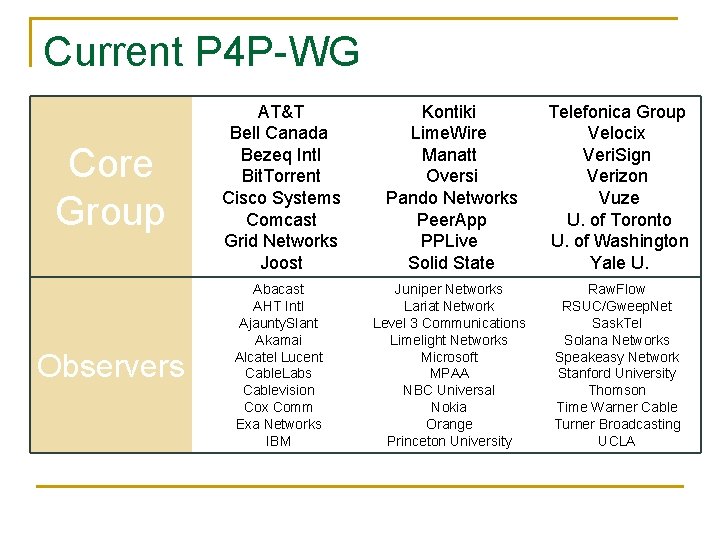

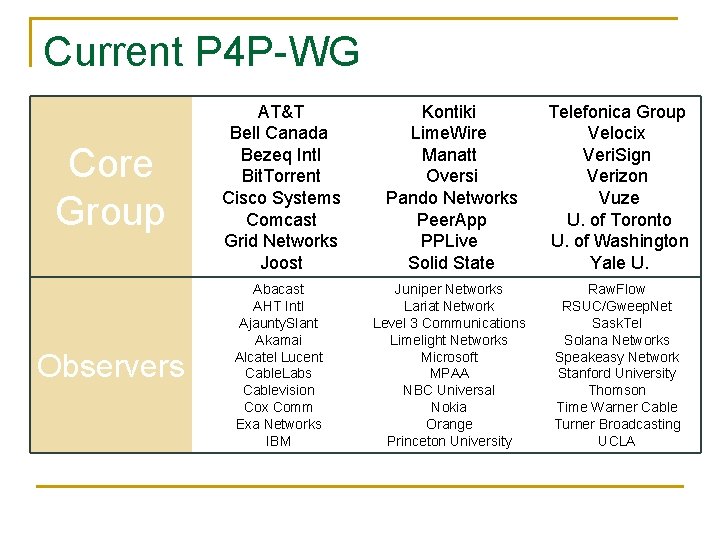

Current P4P-WG

- Core Group: AT&T, Bell Canada, Bezeq Intl, BitTorrent, Cisco Systems, Comcast, Grid Networks, Joost, Kontiki, LimeWire, Manatt, Oversi, Pando Networks, PeerApp, PPLive, Solid State, Telefonica Group, Velocix, VeriSign, Verizon, Vuze, U. of Toronto, U. of Washington, Yale U.
- Observers: Abacast, AHT Intl, AjauntySlant, Akamai, Alcatel Lucent, CableLabs, Cablevision, Cox Comm, Exa Networks, IBM, Juniper Networks, Lariat Network, Level 3 Communications, Limelight Networks, Microsoft, MPAA, NBC Universal, Nokia, Orange, Princeton University, RawFlow, RSUC/GweepNet, SaskTel, Solana Networks, Speakeasy Network, Stanford University, Thomson, Time Warner Cable, Turner Broadcasting, UCLA

Thank you and Questions

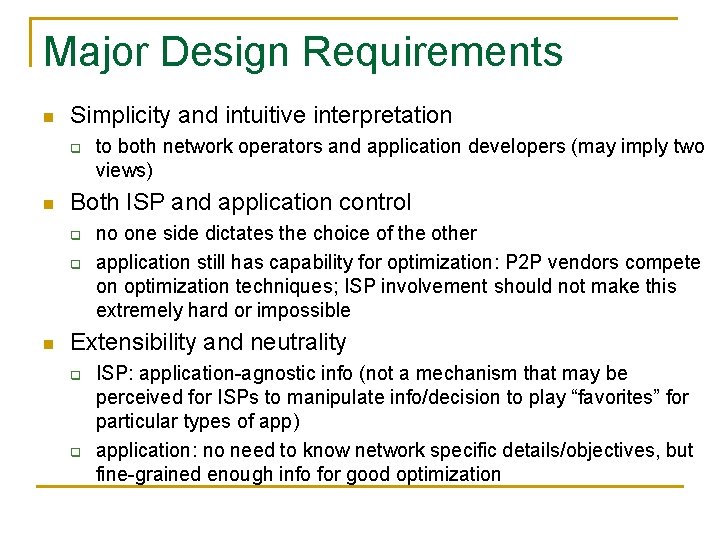

Major Design Requirements

- Simplicity and intuitive interpretation
  - to both network operators and application developers (may imply two views)
- Both ISP and application control
  - no one side dictates the choice of the other
  - application still has capability for optimization: P2P vendors compete on optimization techniques; ISP involvement should not make this extremely hard or impossible
- Extensibility and neutrality
  - ISP: application-agnostic info (not a mechanism that may be perceived for ISPs to manipulate info/decision to play “favorites” for particular types of app)
  - application: no need to know network specific details/objectives, but fine-grained enough info for good optimization

Major Design Requirements (Cont’)

- Scalability
  - ISP: avoid handling per peer-join request
  - application: local/cached info from ISP useful during both initial peer joining and re-optimization
- Privacy preservation
  - ISP: information (spatial, time, status, and policy) aggregation and transformation (e.g., interdomain link cost to congestion level due to virtual capacity)
    - e.g., some ISPs already publicly report aggregated performance, such as AT&T and Qwest inter-city performance: http://stat.qwest.net/index_flash.html
  - application client privacy from ISP: no individual session info sent to ISP
  - P2P vendor privacy: no revealing of client base information
- Fault tolerance

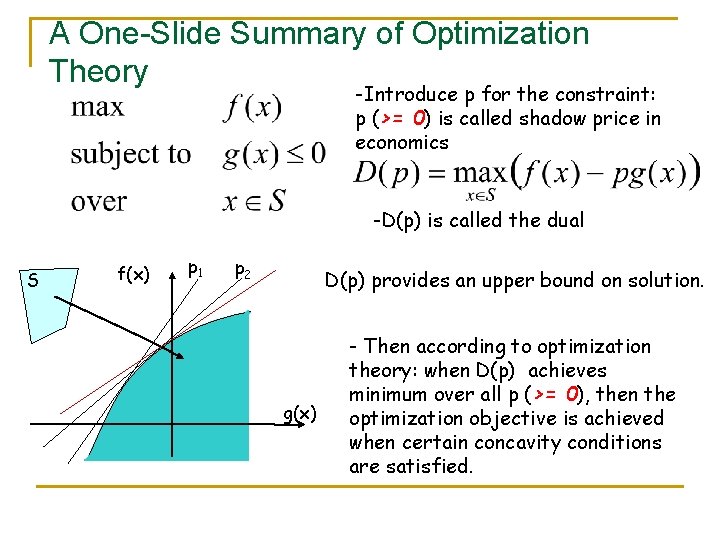

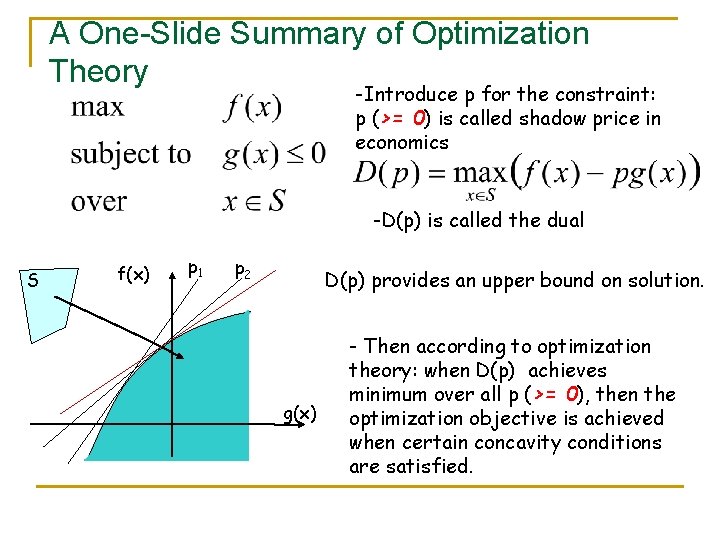

A One-Slide Summary of Optimization Theory

- Figure labels: S, f(x), g(x), p1, p2
- Introduce p for the constraint: p (≥ 0) is called the shadow price in economics
- D(p) is called the dual; D(p) provides an upper bound on the solution
- According to optimization theory: when D(p) achieves its minimum over all p (≥ 0), the optimization objective is achieved, provided certain concavity conditions are satisfied