Oxford University Particle Physics Computing Overview KASHIF MOHAMMAD


- Slides: 39

Oxford University Particle Physics Computing Overview KASHIF MOHAMMAD SENIOR SYSTEMS MANAGER

Strategy

- When you arrive
- Interactive Machines
- Home and Data Area
- Environment Modules
- Batch System
- Grid Computing

When you arrive

- University Single Sign On (SSO)
- An account on the Windows system and a Physics email address
- You are offered a choice of:
  ◦ Windows Desktop (fully managed, no admin account for the user)
  ◦ Ubuntu Desktop (fully managed, no admin account for the user)
  ◦ Laptop (installed by IT staff but managed by the user)
  ◦ Apple Mac
- Account on the Particle Physics Linux system
  ◦ We create an account for you if we know about your group. Otherwise we wait for you to contact us.

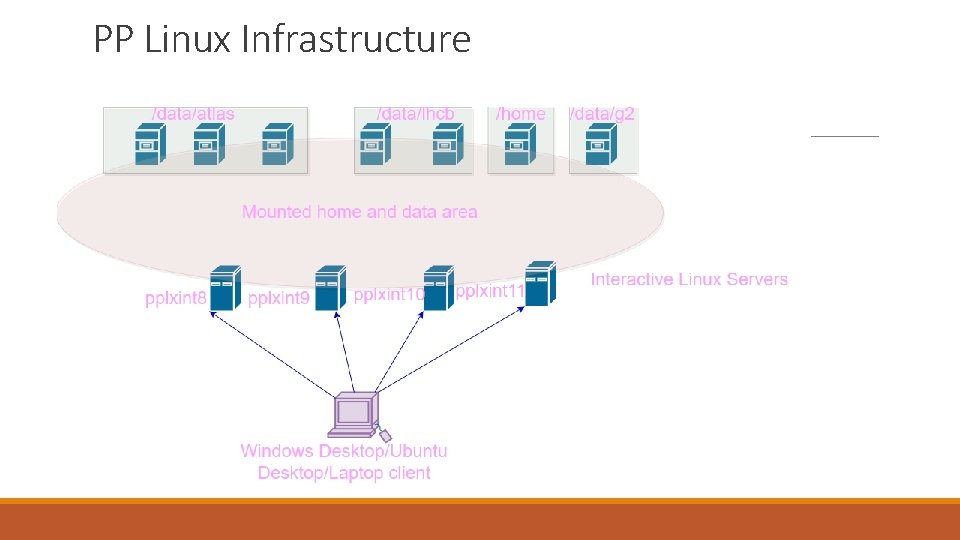

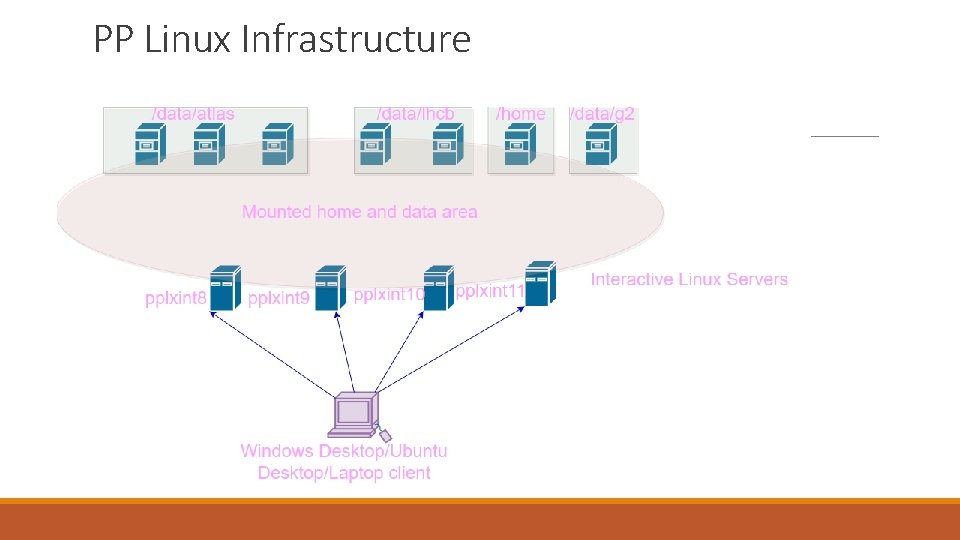

PP Linux Infrastructure

Interactive Machines

- Interactive machines are the interface to the PP Linux environment.
- These are general-purpose shared machines which mount users' home areas and data directories.
- Various software can be accessed through Environment Modules.
- They are ideal for developing and testing your code.
- Most users ssh into the machines, either through PuTTY or other ssh clients.
- Full Remote Desktop is also available.
- They can be accessed from outside Physics.
- These are shared machines, so please make sure that you are not running excessive processes on them.

Current Interactive Machines

- pplxint8 and pplxint9
  ◦ Scientific Linux 6 (SL6)
  ◦ Client for the SL6 PBS batch system
  ◦ Current main production system
- pplxint10 and pplxint11
  ◦ CentOS 7
  ◦ Client for the CentOS 7 HTCondor batch system
  ◦ Recommended for new users if their code can run on CentOS 7
  ◦ pplxint11 is still in development (Sep 2018)

A Bit About Home Storage

- Every user gets 30 GB of home storage, mounted on the PP cluster systems.
- Windows Desktop users have a separate home area managed by the Windows team.
- Ubuntu Desktop users also have a separate home area.
- All these home areas are on separate servers and do not depend on each other.

PP Home Area

- The /home areas are backed up nightly by two different systems.
- The latest nightly backup of any lost or deleted files from your home directory is available at the read-only location /data/homebackup/{username}.
- If you need older files, ask us.
- If you need more space on /home, ask politely.
- Store your thesis on /home, NOT /data.
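Recovering a file from the nightly backup can be sketched as a small shell helper. This is only an illustration: the function name `restore_from_backup`, the `BACKUP_ROOT` override and the `.restored` suffix are hypothetical, and it assumes the backup tree mirrors the layout of your home directory.

```shell
# Hypothetical helper: copy one file or directory back from last night's backup.
# ASSUMPTION: /data/homebackup/{username} mirrors the layout of your home directory.
restore_from_backup() {
    rel="$1"                                              # path relative to your home directory
    backup_root="${BACKUP_ROOT:-/data/homebackup/$USER}"  # read-only backup location
    src="$backup_root/$rel"
    dest="$HOME/$rel.restored"                            # never overwrite the current copy

    if [ -z "$rel" ] || [ ! -e "$src" ]; then
        echo "not found in the latest backup: $src" >&2
        return 1
    fi
    # Recreate the parent directory if it was deleted too, then copy with attributes preserved.
    mkdir -p "$(dirname "$dest")" && cp -a -- "$src" "$dest" && echo "restored to $dest"
}
```

For example, `restore_from_backup thesis/chapter1.tex` would place the copy at `~/thesis/chapter1.tex.restored`, leaving any existing file untouched so the two versions can be compared.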

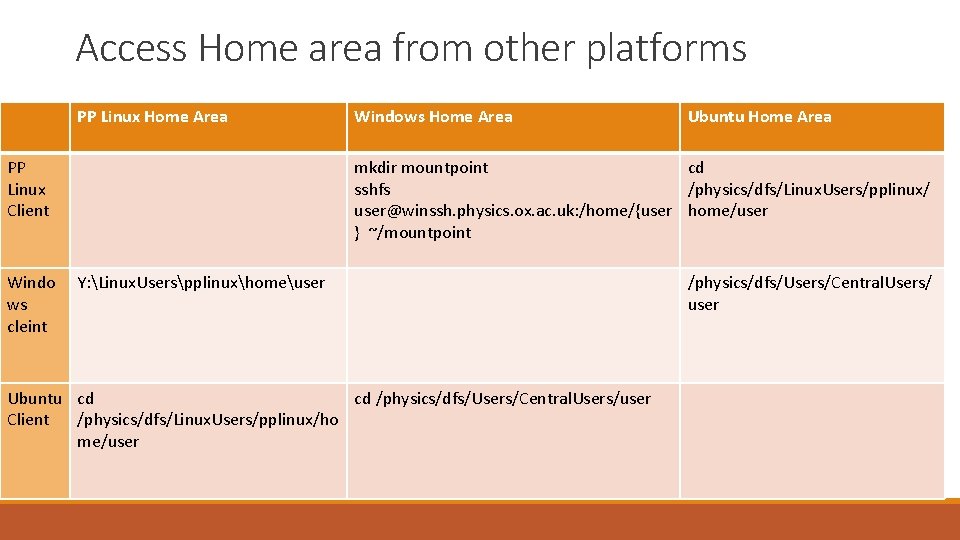

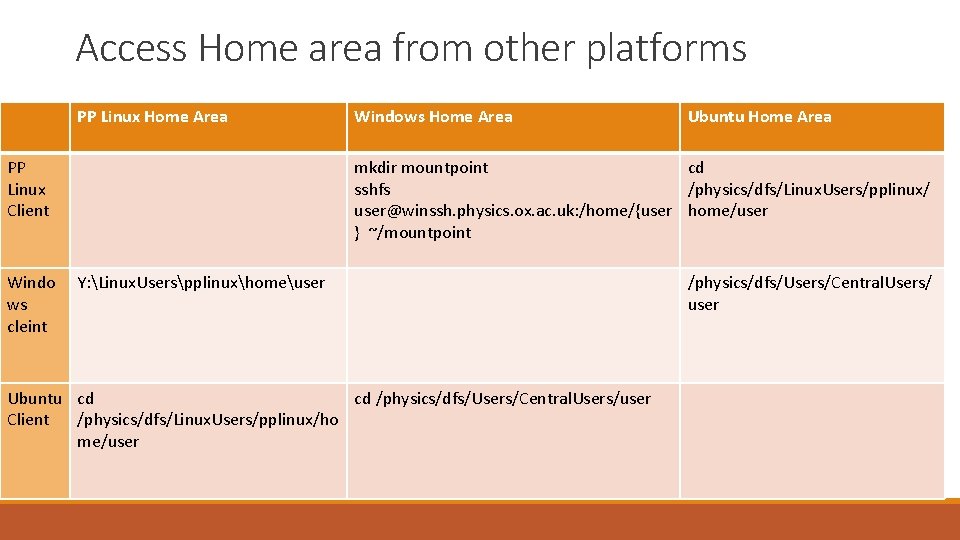

Access Home area from other platforms

(Diagram: how PP Linux, Windows and Ubuntu clients reach the PP Linux, Windows and Ubuntu home areas. Commands and paths shown in the diagram:)

- PP Linux client
  ◦ mkdir mountpoint
  ◦ sshfs user@winssh.physics.ox.ac.uk:/home/{user} ~/mountpoint
- Windows client
  ◦ Y:\Linux.Users\pplinux\home\user
- Ubuntu client
  ◦ cd /physics/dfs/Linux.Users/pplinux/home/user
  ◦ cd /physics/dfs/Users/Central.Users/user

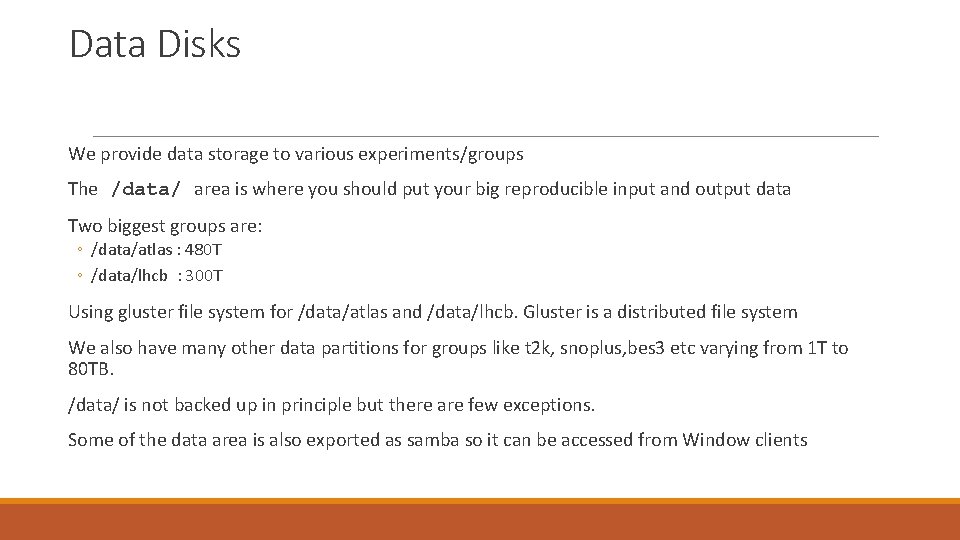

Data Disks

- We provide data storage to various experiments/groups.
- The /data/ area is where you should put your big reproducible input and output data.
- The two biggest groups are:
  ◦ /data/atlas: 480 TB
  ◦ /data/lhcb: 300 TB
- /data/atlas and /data/lhcb use the Gluster file system. Gluster is a distributed file system.
- We also have many other data partitions for groups like t2k, snoplus, bes3 etc., varying from 1 TB to 80 TB.
- /data/ is not backed up in principle, but there are a few exceptions.
- Some of the data areas are also exported via Samba so they can be accessed from Windows clients.

Distributed File System

(Diagram)

- Gluster servers: pplxgluster01 (80 TB), pplxgluster02 (80 TB), pplxgluster03 (40 TB), ..., pplxglusterN (N TB)
- Presented as a single mount point: /data/atlas
- Clients shown: SL6 node, CentOS 7 node, pplxint*, batch server

Accessing PP Linux

- pplxint* servers are accessible from outside Physics.
- ssh directly from Linux clients.
- PuTTY is the preferred option from Windows.
  ◦ PuTTY is available from "Physics Self Service" on the Department Managed Desktop.
  ◦ It is better to use password-protected ssh keys rather than a plain password.
- Xming is now the preferred method for X Windows.
  ◦ The older method was Exceed, and you will still find it mentioned in lots of Physics documentation.
  ◦ Xming is also available from "Physics Self Service".
- For more details: https://www2.physics.ox.ac.uk/it-services/ppunix-cluster
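The advice about password-protected ssh keys can be sketched for a Linux or Mac client as follows. The fully qualified host name, the key file name and the helper name `setup_pplx_key` are assumptions made for illustration; check the documentation page above for the actual host names. RSA is used here because the SL6 machines run an older OpenSSH release.

```shell
# ASSUMPTIONS: the host name and key file name are illustrative, not taken from the slides.
PPLX_HOST="${PPLX_HOST:-pplxint10.physics.ox.ac.uk}"
PPLX_KEY="${PPLX_KEY:-$HOME/.ssh/id_rsa_pplx}"

setup_pplx_key() {
    # Generate an RSA key pair; ssh-keygen prompts for a passphrase, so choose a non-empty one.
    ssh-keygen -t rsa -b 4096 -f "$PPLX_KEY" || return 1
    # Install the public key on the server; this asks for your password one last time.
    ssh-copy-id -i "$PPLX_KEY.pub" "$USER@$PPLX_HOST"
}

# After running setup_pplx_key once, log in with:
#   ssh -i "$PPLX_KEY" "$USER@$PPLX_HOST"
```

If your Physics user name differs from your local one, replace `$USER` with the Physics user name.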

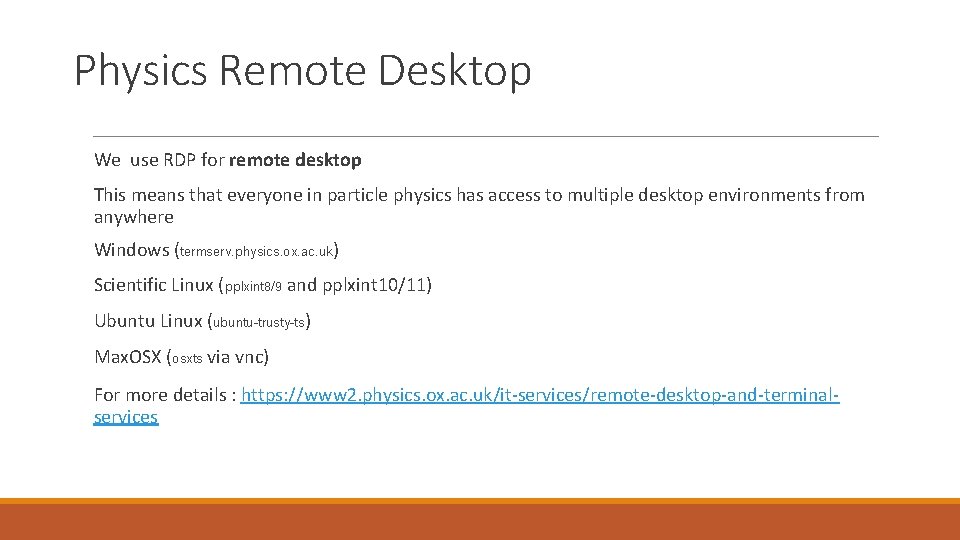

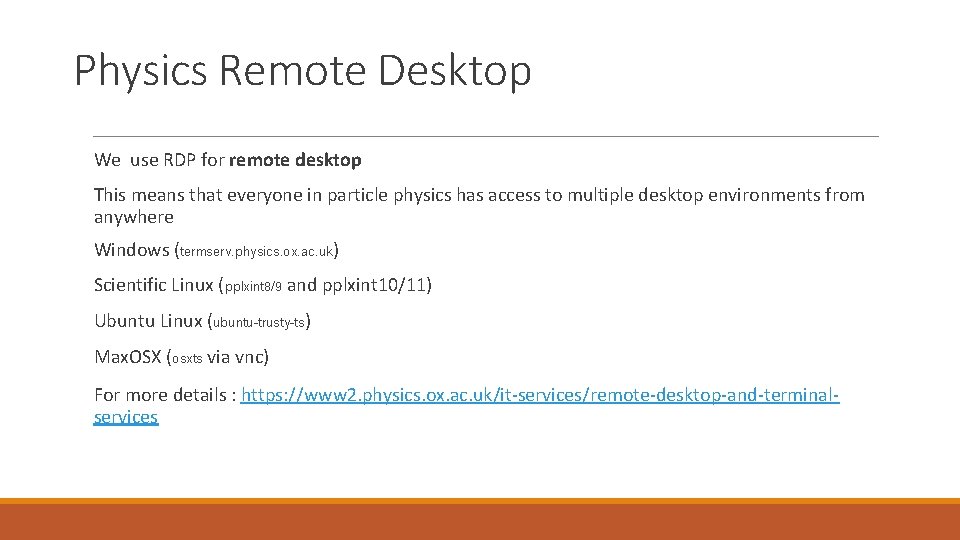

Physics Remote Desktop

- We use RDP for remote desktop.
- This means that everyone in particle physics has access to multiple desktop environments from anywhere:
  ◦ Windows (termserv.physics.ox.ac.uk)
  ◦ Linux (pplxint8/9 on SL6, pplxint10/11 on CentOS 7)
  ◦ Ubuntu Linux (ubuntu-trusty-ts)
  ◦ Mac OS X (osxts via VNC)
- For more details: https://www2.physics.ox.ac.uk/it-services/remote-desktop-and-terminalservices

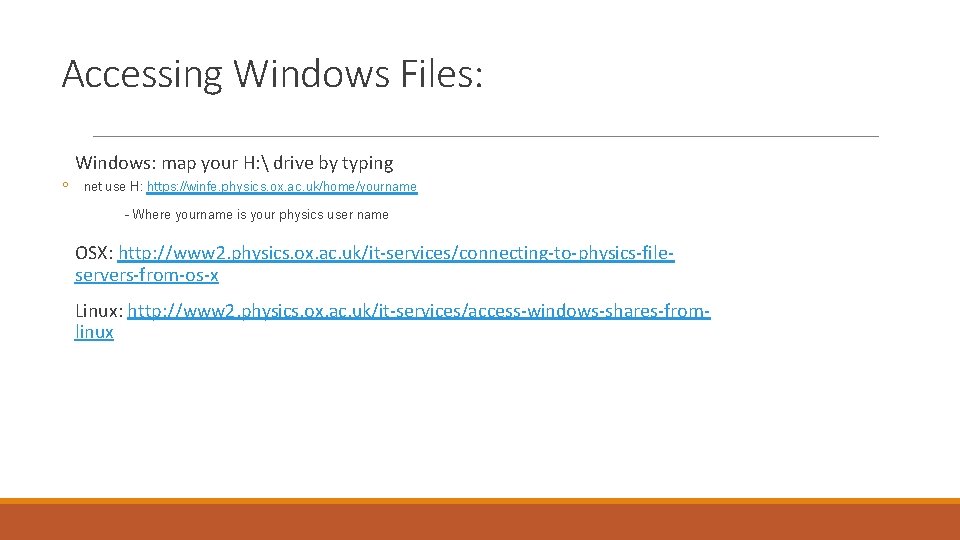

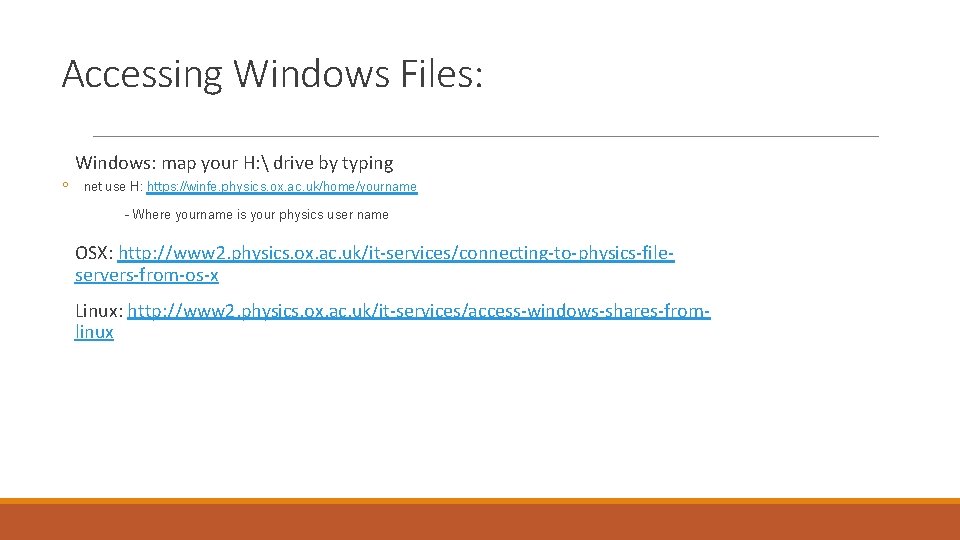

Accessing Windows Files

- Windows: map your H: drive by typing
  ◦ net use H: https://winfe.physics.ox.ac.uk/home/yourname
  ◦ where yourname is your Physics user name
- OS X: http://www2.physics.ox.ac.uk/it-services/connecting-to-physics-fileservers-from-os-x
- Linux: http://www2.physics.ox.ac.uk/it-services/access-windows-shares-fromlinux

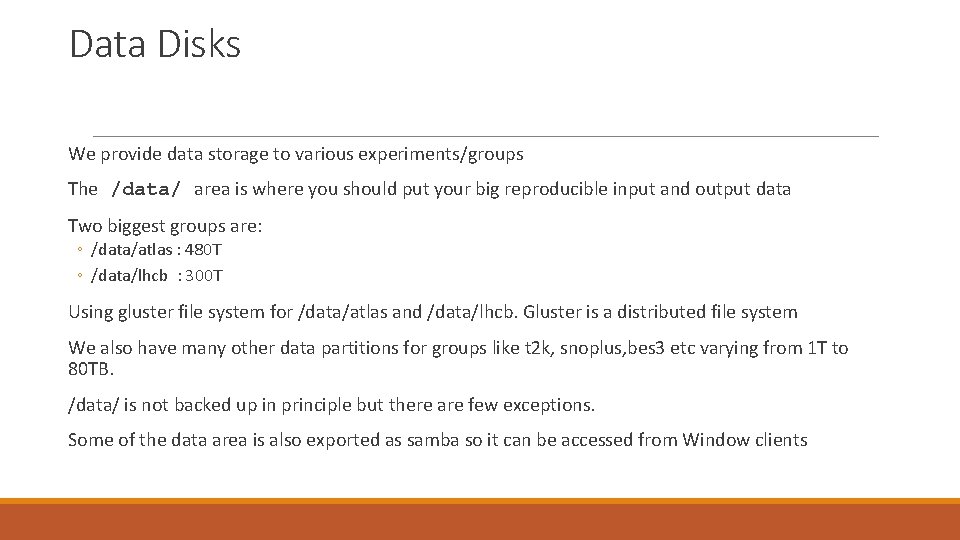

Environment Modules: Software management

- The OS comes with a set of core packages like gcc, python, glibc etc.
- The versions of these core packages are tightly tied to the OS version.
- So if you need a more recent version of gcc, what options do you have?
  ◦ Compile it in your own area and update all paths manually
  ◦ Use the Environment Modules provided by us
- We have many versions of gcc, python, root etc. which can be loaded as modules.
- Let us know if you need any software which is not available as a module.

![Environment module: Working example](https://slidetodoc.com/presentation_image/44d5b863ad63a1479fe3eca8c71e3490/image-16.jpg)

Environment module: Working example

    [mohammad@pplxint9 ~]$ python --version
    Python 2.6.6
    [mohammad@pplxint9 ~]$ module avail python
    ---------------- /network/software/el6/modules ----------------
    python/02.7.3_gcc47_cvmfs  python/2.7.13__gcc49  python/2.7.3(default)  python/2.7.8__gcc48
    python/3.4.2__gcc48  python/3.4.4  python/3.4.5
    ---------------- /network/software/linux-x86_64/arc-modules/modules-tested ----------------
    python/3.3
    ---------------- /network/software/linux-x86_64/arc-modules/modules ----------------
    python/2.7__gcc-4.8  python/2.7__qiime  python/3.3__gcc-4.8  python/3.4__gcc4.8
    [mohammad@pplxint9 ~]$ module load python/3.3
    [mohammad@pplxint9 ~]$ python --version
    Python 3.3.2
    [mohammad@pplxint9 ~]$ module unload python/3.3
    [mohammad@pplxint9 ~]$ python --version
    Python 2.6.6

Version Control

- Git is the most popular version control system now.
- GitHub is a free cloud-based software hosting platform.
- Users can have unlimited public repos on GitHub, but private repos are paid.
  ◦ But you can have free private repos as a student: https://education.github.com/
- Git is installed on all PP Linux systems.

GitLab at Oxford

- The Physics department has its own GitLab repository: https://gitlab.physics.ox.ac.uk/
- All members of Oxford Physics already have accounts on gitlab.physics.ox.ac.uk.
- More details are here:
  ◦ https://www2.physics.ox.ac.uk/it-services/git-for-beginners

Recommended Working Strategy

- Store your critical data in your home directory, as it is backed up.
- It is better to do your code development on the interactive machines (pplxint*).
- Avoid running multiple jobs on interactive machines; use the batch system instead.
- /data/ is a shared area, readable and writable by the group.
- Be careful when deleting things; in particular, never use 'rm -rf *'!
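One way to avoid an accidental 'rm -rf *' in a shared area is to look at what a pattern matches before deleting anything. The helper below is a hypothetical sketch (the name `confirm_rm` is not part of any PP Linux tooling): it lists the matched paths and deletes them only after an explicit "y".

```shell
# Hypothetical helper: show what would be removed, then delete only after confirmation.
confirm_rm() {
    if [ "$#" -eq 0 ]; then
        echo "usage: confirm_rm PATH..." >&2
        return 2
    fi
    # List exactly what the shell expanded the arguments to.
    ls -ld -- "$@" || return 1
    printf 'Delete the %d item(s) listed above? [y/N] ' "$#"
    read -r answer
    case "$answer" in
        y|Y) rm -r -- "$@" ;;
        *)   echo "Nothing deleted." ;;
    esac
}
```

Usage: `confirm_rm /data/yourgroup/yourname/old_run_*` (the path is illustrative). Because the glob is expanded before the function runs, the listing shows the same files that `rm` would receive.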

Batch System

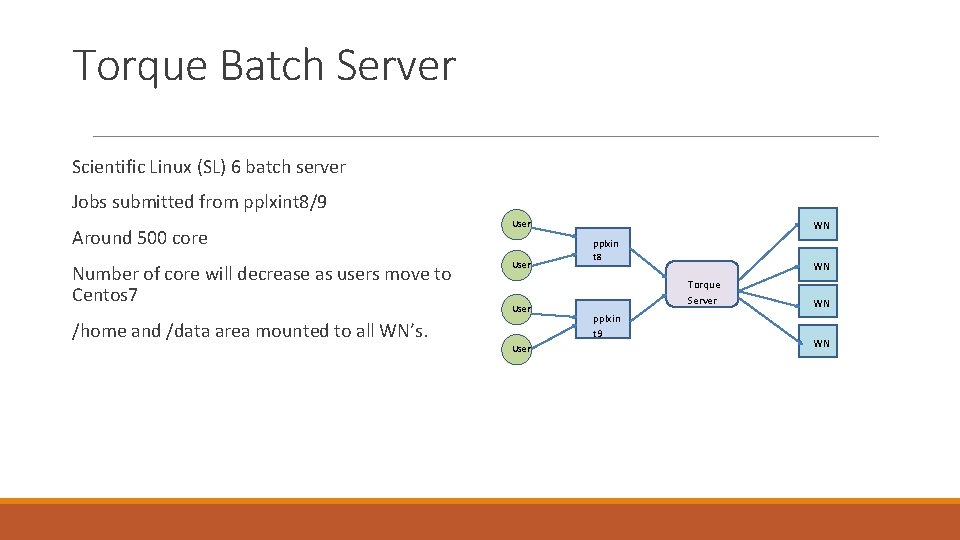

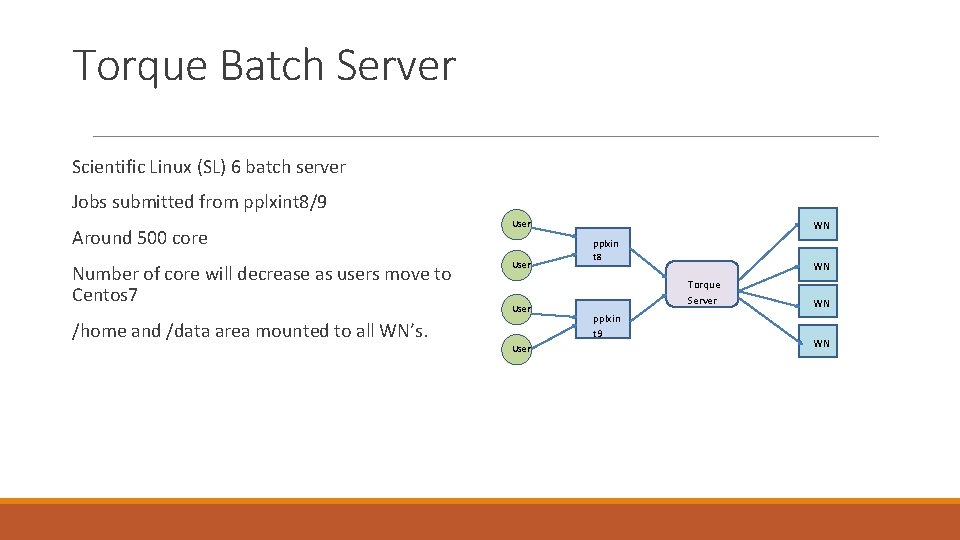

Torque Batch Server

- Scientific Linux (SL) 6 batch server
- Jobs are submitted from pplxint8/9
- Around 500 cores
- The number of cores will decrease as users move to CentOS 7
- User /home and /data areas are mounted on all worker nodes (WNs)
- (Diagram: users log in to pplxint8 and pplxint9, which submit jobs to the Torque server, which runs them on the WNs)
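A minimal Torque job might look like the sketch below, which writes a job script to a file and submits it only if the Torque client is installed. The job name, resource requests and `run_analysis.py` are hypothetical, and it assumes the `module` command is available inside batch jobs; the local documentation may recommend different settings.

```shell
# Sketch of a Torque/PBS job script, written to a file so it can be inspected before submission.
# ASSUMPTIONS: job name, resource request and run_analysis.py are illustrative.
cat > myjob.pbs <<'EOF'
#!/bin/bash
#PBS -N example_job
#PBS -l nodes=1:ppn=1
#PBS -l walltime=02:00:00
#PBS -j oe
# Start in the directory the job was submitted from (on the shared /home or /data).
cd "$PBS_O_WORKDIR"
module load python/2.7.3
python run_analysis.py
EOF

# Submit from pplxint8 or pplxint9 and list your jobs (skipped if qsub is not installed).
if command -v qsub >/dev/null 2>&1; then
    qsub myjob.pbs
    qstat -u "$USER"
fi
```

Because /home and /data are mounted on every worker node, the job can read its inputs and write its outputs with the same paths used on the interactive machines.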

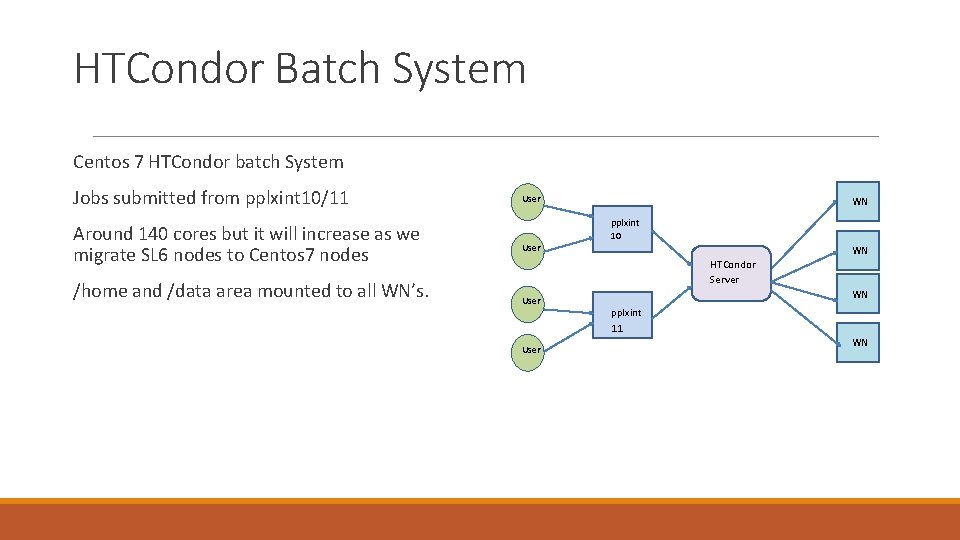

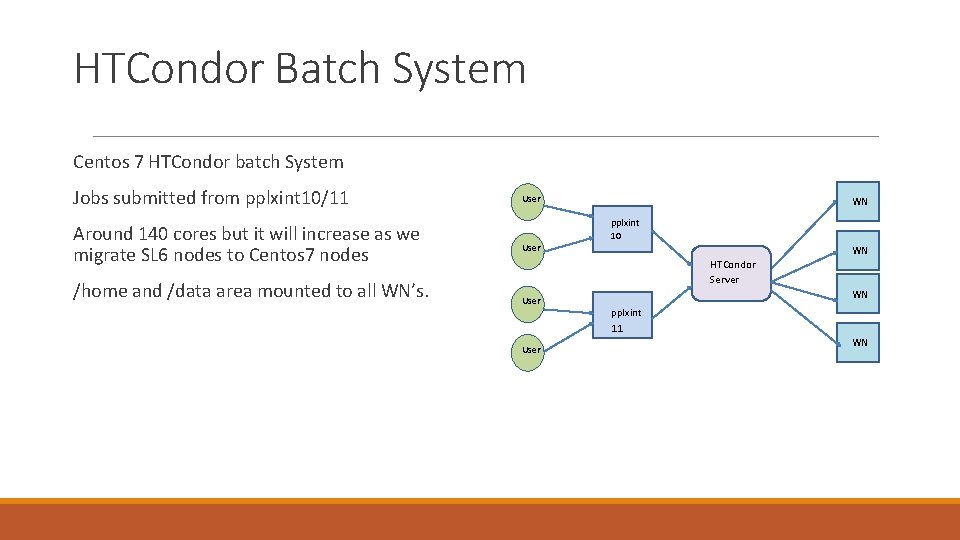

HTCondor Batch System

- CentOS 7 HTCondor batch system
- Jobs are submitted from pplxint10/11
- Around 140 cores, but this will increase as we migrate SL6 nodes to CentOS 7 nodes
- /home and /data areas are mounted on all worker nodes (WNs)
- (Diagram: users log in to pplxint10 and pplxint11, which submit jobs to the HTCondor server, which runs them on the WNs)
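For HTCondor, jobs are described in a submit file rather than in script directives. The sketch below writes a small submit description and submits it only if the HTCondor client is present. The executable `run_analysis.sh`, the file names and the resource requests are hypothetical; local policy may require other settings.

```shell
# Sketch of an HTCondor submit description, written to a file for inspection.
# ASSUMPTIONS: run_analysis.sh and the resource requests are illustrative.
cat > myjob.sub <<'EOF'
executable     = run_analysis.sh
arguments      = $(Process)
output         = job.$(Cluster).$(Process).out
error          = job.$(Cluster).$(Process).err
log            = job.$(Cluster).log
request_cpus   = 1
request_memory = 2048
queue 5
EOF

# Submit from pplxint10 or pplxint11 and list your jobs (skipped if condor_submit is not installed).
if command -v condor_submit >/dev/null 2>&1; then
    condor_submit myjob.sub
    condor_q
fi
```

`queue 5` starts five jobs that differ only in `$(Process)` (0 to 4), which is a convenient way to split work into several short jobs instead of one very long one. `request_memory` is given in MiB.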

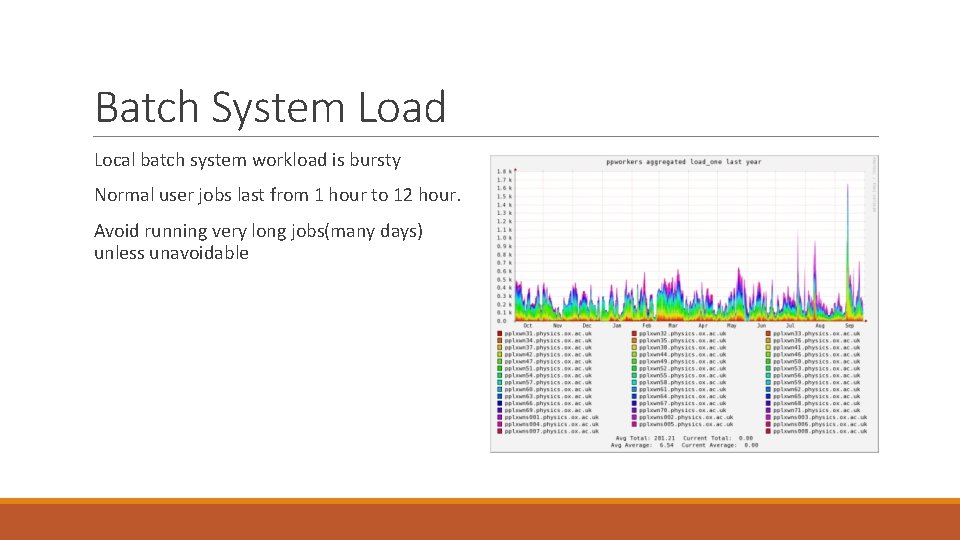

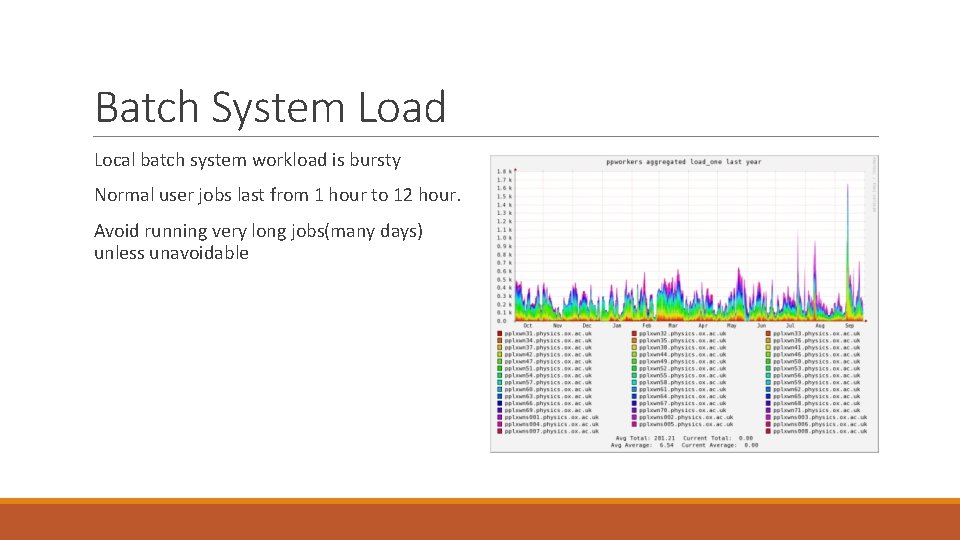

Batch System Load

- Local batch system workload is bursty.
- Normal user jobs last from 1 hour to 12 hours.
- Avoid running very long jobs (many days) unless unavoidable.

Grid Computing: ATLAS, SNO, LHCb and T2K in particular

Grid

- Oxford is a Tier-2 centre for the Worldwide LHC Computing Grid (WLCG).
- WLCG is a global collaboration spread across 170 computing centres.
  ◦ Two million tasks run every day
  ◦ 800,000 computing cores
  ◦ 900 petabytes of data
- LHC experiments are the major users, but other physics and non-physics groups have also used it extensively.
- Oxford Tier-2 is a very small part of the WLCG.
  ◦ 3000 cores
  ◦ 1 PB of data
- You need a Grid Certificate (X.509) to be able to use the Grid.

Grid Cluster at Begbroke

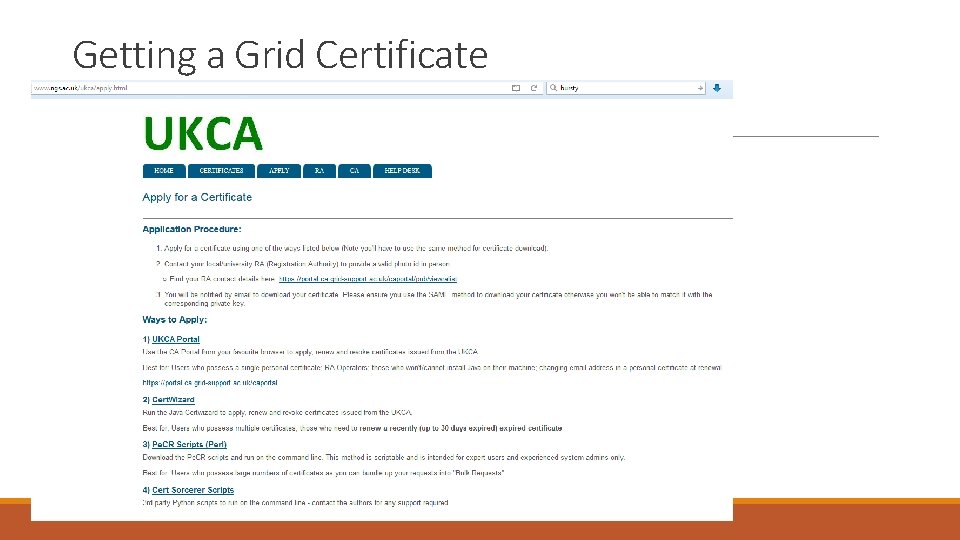

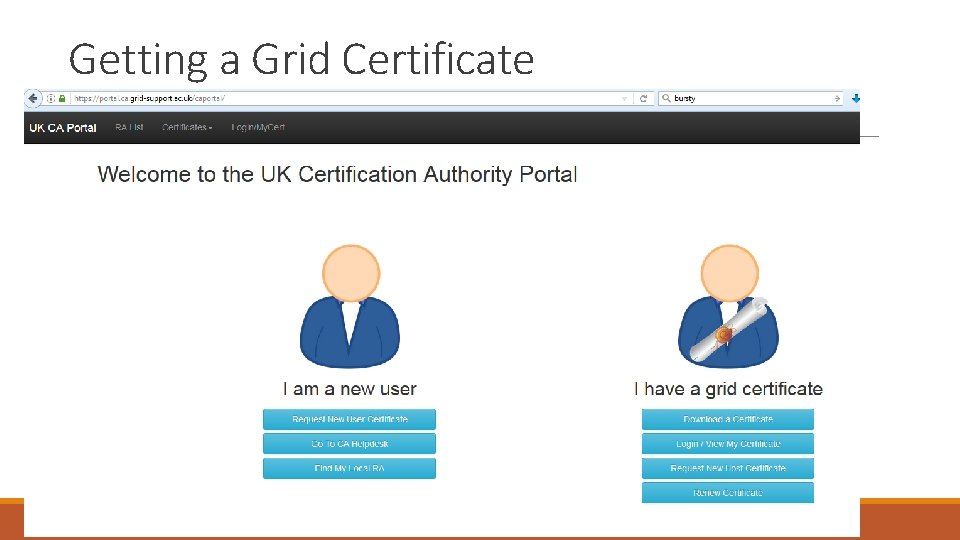

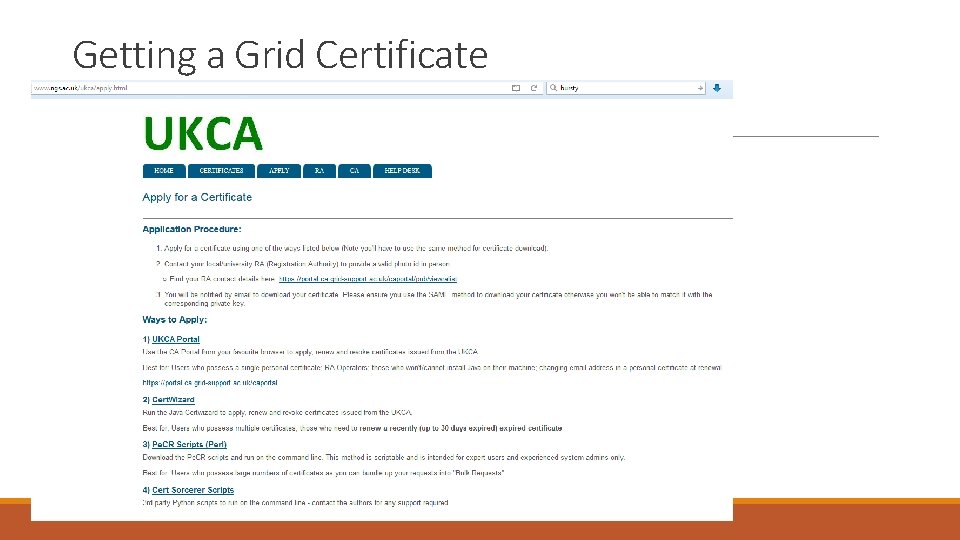

Getting a Grid Certificate

- You must use the same PC to request and retrieve the Grid Certificate.
- The new UKCA page: http://www.ngs.ac.uk/ukca

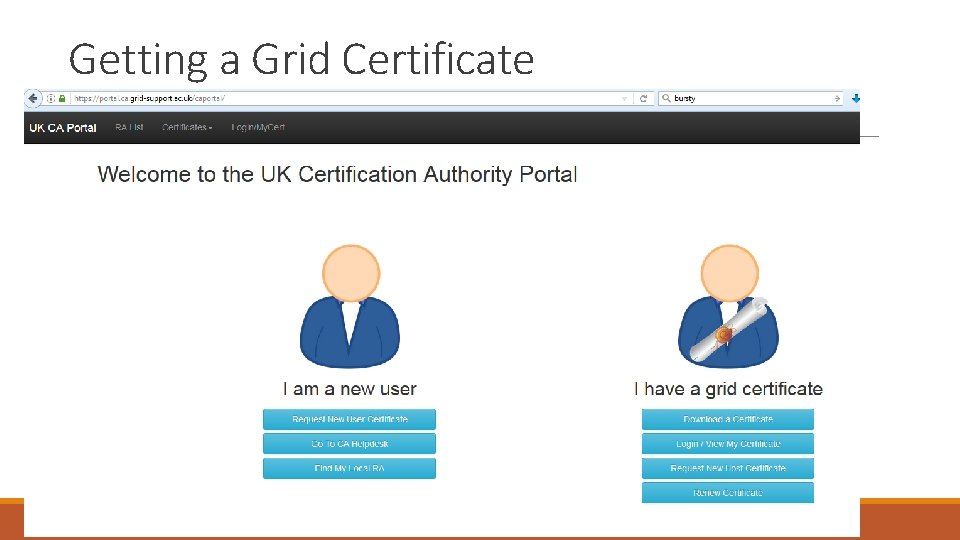

Getting a Grid Certificate

- You will then need to contact central Oxford IT. They will need to see you, with your university card, to approve your request:

  To: help@it.ox.ac.uk

  Dear Stuart Robeson and Jackie Hewitt,

  Please let me know a good time to come over to the Banbury Road IT office for you to approve my grid certificate request.

  Thanks.

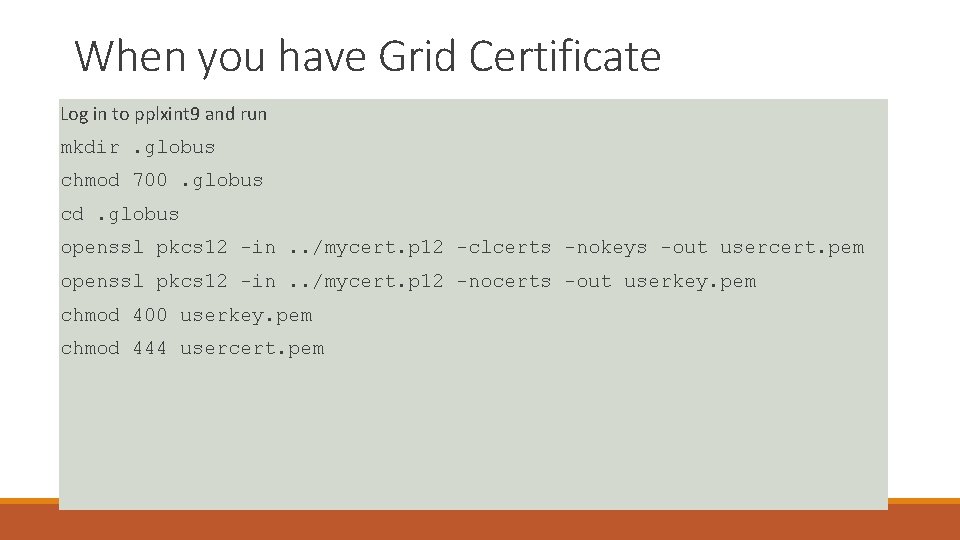

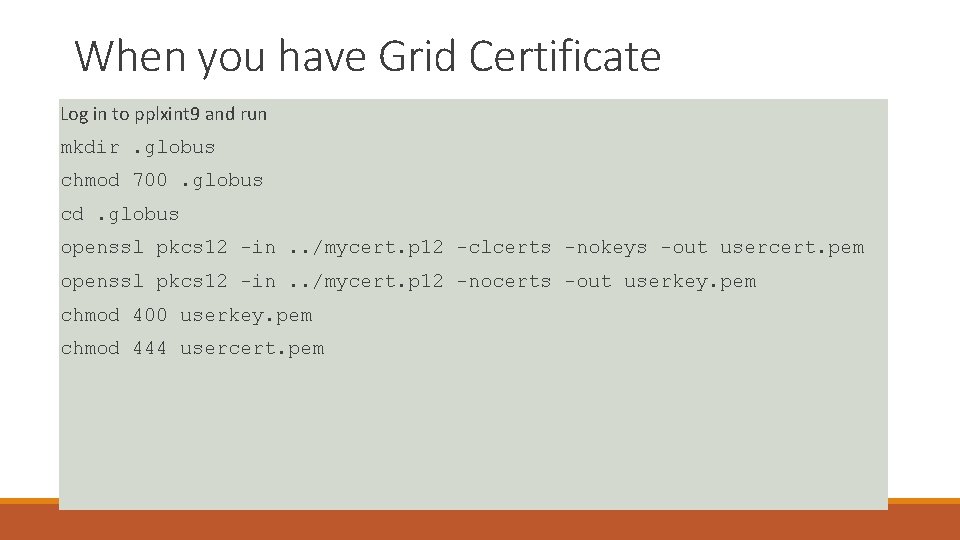

When you have a Grid Certificate

Log in to pplxint9 and run:

    mkdir .globus
    chmod 700 .globus
    cd .globus
    openssl pkcs12 -in ../mycert.p12 -clcerts -nokeys -out usercert.pem
    openssl pkcs12 -in ../mycert.p12 -nocerts -out userkey.pem
    chmod 400 userkey.pem
    chmod 444 usercert.pem
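After the extraction it can be useful to confirm that the certificate is readable and that the permissions are as intended. The helper below is a hypothetical sketch (the name `check_grid_cert` is not a grid tool); it uses only `openssl x509` and `ls`, and assumes the files live in `~/.globus` unless another directory is given.

```shell
# Hypothetical helper: sanity-check the files produced by the openssl pkcs12 steps.
check_grid_cert() {
    dir="${1:-$HOME/.globus}"
    # Print the certificate subject and expiry date; fails if the file is missing or unreadable.
    openssl x509 -in "$dir/usercert.pem" -noout -subject -enddate || return 1
    # Show permissions: expect -r--r--r-- for usercert.pem and -r-------- for userkey.pem.
    ls -l "$dir/usercert.pem" "$dir/userkey.pem"
}
```

Run `check_grid_cert` with no arguments once the steps above have completed. The expiry date is worth noting, since an expired certificate cannot be used to create a proxy.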

Now Join a VO

- This is the Virtual Organisation, such as "Atlas". Membership means that:
  ◦ You are allowed to submit jobs using the infrastructure of the experiment
  ◦ You can access data for the experiment
- Speak to your colleagues on the experiment about this. It is a different process for every experiment!

Joining a VO

Your grid certificate identifies you to the grid as an individual user, but it's not enough on its own to allow you to run jobs; you also need to join a Virtual Organisation (VO). These are essentially just user groups, typically one per experiment, and individual grid sites can choose to support (or not) work by users of a particular VO. Most sites support the four LHC VOs; fewer support the smaller experiments. The sign-up procedures vary from VO to VO: UK ones typically require a manual approval step, while LHC ones require an active CERN account. For anyone who is interested in using the grid but is not working on an experiment with an existing VO, we have a local VO we can use to get you started.

Test your certificate

Test your grid certificate:

    > voms-proxy-init --voms lhcb.cern.ch
    Enter GRID pass phrase:
    Your identity: /C=UK/O=eScience/OU=Oxford/L=OeSC/CN=j bloggs
    Creating temporary proxy ..... Done

Consult the documentation provided by your experiment for 'their' way to submit and manage grid jobs.
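Before submitting grid jobs it can help to check that a proxy exists and has enough lifetime left. The function below is a hypothetical sketch (the name `proxy_ok` and the one-hour default are assumptions); it relies on `voms-proxy-info --timeleft`, which prints the remaining proxy lifetime in seconds.

```shell
# Hypothetical helper: succeed only if a VOMS proxy exists with at least N seconds left.
proxy_ok() {
    min_seconds="${1:-3600}"   # assumed default threshold: one hour
    if ! command -v voms-proxy-info >/dev/null 2>&1; then
        echo "voms-proxy-info not found on this machine" >&2
        return 2
    fi
    # voms-proxy-info exits non-zero when no proxy is found.
    left=$(voms-proxy-info --timeleft 2>/dev/null) || return 1
    [ -n "$left" ] || return 1
    [ "$left" -ge "$min_seconds" ]
}
```

A typical use is `proxy_ok 7200 || voms-proxy-init --voms lhcb.cern.ch`, which requests a new proxy only when the current one is missing or has less than two hours left.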

Other Resources: Advanced Research Computing (ARC)

- Oxford Advanced Research Computing is a university-wide central facility, available to all university users for free.
  ◦ http://www.arc.ox.ac.uk/
- It is useful if your research requires lots of computing resources or has special requirements like MPI or GPUs.
- ARC also provides regular free training courses on Linux, MPI, Python etc.
- It is worth registering for regular updates about training.
  ◦ https://help.it.ox.ac.uk/courses/index

Other Resources: Lynda.com

- Lynda.com is a large online library of instructional videos covering the latest software and technologies.
- It is available to university staff and students for free.
- Go to https://www.lynda.com/ and sign in with the organizational portal.
- Enter ox.ac.uk as the organization and it will take you to the university SSO.

Getting Help

- https://www2.physics.ox.ac.uk/it-services/unix-systems
- Send an email to "itsupport" at "physics.ox.ac.uk"
  ◦ Please provide detailed information about your problem
- Feel free to drop in at Room 661 in the DWB
- PP Linux staff
  ◦ Kashif Mohammad
  ◦ Vipul Davda

Questions?