Overview Logic Programming Bayesian Networks Hidden Markov Models

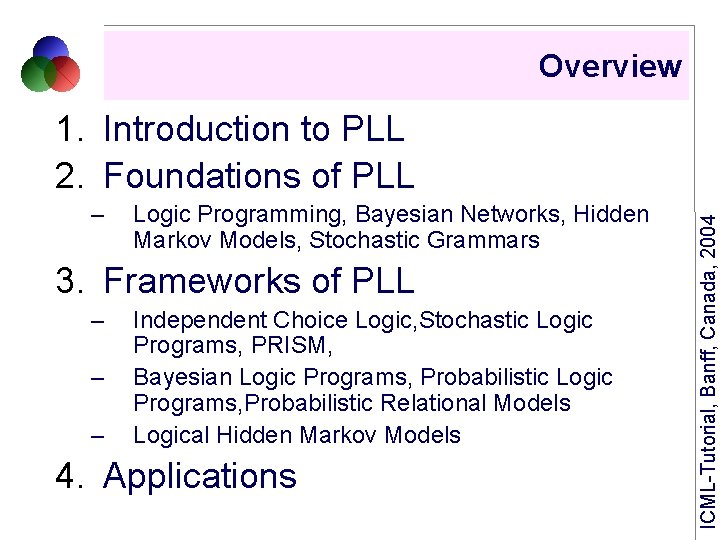

Overview

1. Introduction to PLL
2. Foundations of PLL
   – Logic Programming, Bayesian Networks, Hidden Markov Models, Stochastic Grammars
3. Frameworks of PLL
   – Independent Choice Logic, Stochastic Logic Programs, PRISM
   – Bayesian Logic Programs, Probabilistic Relational Models
   – Logical Hidden Markov Models
4. Applications

ICML-Tutorial, Banff, Canada, 2004

![[Haddawy, Ngo] Probabilistic Logic Programs (PLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-2.jpg)

[Haddawy, Ngo] Probabilistic Logic Programs (PLPs)

• Atoms = set of similar RVs
  – First arguments = RV
  – Last argument = state
• Clause = CPD entry; the label is the probability of being true
• Probability distribution over Herbrand interpretations

    0.1  : burglary(true).
    0.9  : burglary(false).
    0.01 : earthquake(true).
    0.99 : earthquake(false).
    0.9  : alarm(true) :- burglary(true), earthquake(true).
    ...

• burglary(true) and burglary(false) true in the same interpretation? + Integrity constraints:

    false :- burglary(true), burglary(false).
    burglary(true); burglary(false) :- true.
    false :- earthquake(true), earthquake(false).
    ...

Figure: Bayesian network over Earthquake, Burglary, Alarm, MaryCalls, JohnCalls. The CPD P(A | B, E) has the rows (0.9, 0.1), (0.2, 0.8), (0.9, 0.1), (0.01, 0.99), one per configuration of E and B.

![[Haddawy, Ngo] Probabilistic Logic Programs (PLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-3.jpg)

[Haddawy, Ngo] Probabilistic Logic Programs (PLPs)

    father(rex, fred).   father(brian, doro).   father(fred, henry).
    mother(ann, fred).   mother(utta, doro).    mother(doro, henry).

    1.0 : mc(P, a) :- mother(M, P), pc(M, a), mc(M, a).
    0.0 : mc(P, b) :- mother(M, P), pc(M, a), mc(M, a).
    ...
    0.5 : pc(P, a) :- father(F, P), pc(F, 0), mc(F, a).
    0.5 : pc(P, 0) :- father(F, P), pc(F, 0), mc(F, a).
    ...
    1.0 : bt(P, a) :- mc(P, a), pc(P, a).

    false :- pc(P, a), pc(P, b), pc(P, 0).
    pc(P, a); pc(P, b); pc(P, 0) :- person(P).
    ...

Slide callouts: Qualitative Part (the clauses), Quantitative Part (the probabilities), Dependency, Variable Binding, RV, State.

![[Haddawy, Ngo] Probabilistic Logic Programs (PLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-4.jpg)

[Haddawy, Ngo] Probabilistic Logic Programs (PLPs)

    father(rex, fred).   father(brian, doro).   father(fred, henry).
    mother(ann, fred).   mother(utta, doro).    mother(doro, henry).

    1.0 : mc(P, a) :- mother(M, P), pc(M, a), mc(M, a).
    0.0 : mc(P, b) :- mother(M, P), pc(M, a), mc(M, a).
    ...
    0.5 : pc(P, a) :- father(F, P), pc(F, 0), mc(F, a).
    0.5 : pc(P, 0) :- father(F, P), pc(F, 0), mc(F, a).
    ...
    1.0 : bt(P, a) :- mc(P, a), pc(P, a).

    false :- pc(P, a), pc(P, b), pc(P, 0).
    pc(P, a); pc(P, b); pc(P, 0) :- person(P).
    ...

Figure: network over the nodes mc(X), pc(X), bt(X) for X in {ann, rex, utta, brian, fred, doro, henry}.

![[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-5.jpg)

[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)

• Database theory
• Entity-Relationship Models
  – Attributes = RV

Figure: the alarm Bayesian network (Earthquake, Burglary, Alarm, MaryCalls, JohnCalls) with the CPD P(A | B, E), rows (0.9, 0.1), (0.2, 0.8), (0.9, 0.1), (0.01, 0.99), shown next to a database with a single table "alarm system" whose attributes are Earthquake, Burglary, Alarm, MaryCalls, JohnCalls.

![[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-6.jpg)

[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)

Figure: Person tables with the attributes M-chromosome, P-chromosome and Bloodtype. The binary relations (Mother) and (Father) link a person's table to the tables of the parents. Slide callouts: Table, Binary Relation.

![[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-7.jpg)

[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)

Relations and attributes of the Person tables in logical notation:

    father(Father, Person).    mother(Mother, Person).
    bt(Person, BT).    pc(Person, PC).    mc(Person, MC).

Dependencies (CPDs associated with):

    bt(Person, BT) :- pc(Person, PC), mc(Person, MC).
    pc(Person, PC) :- pc_father(Person, PCf), mc_father(Person, MCf).
    ...

View:

    pc_father(Person, PCf) | father(Father, Person), pc(Father, PC).
    ...

![[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-8.jpg)

[Getoor, Koller, Pfeffer] Probabilistic Relational Models (PRMs)

    father(rex, fred).   father(brian, doro).   father(fred, henry).
    mother(ann, fred).   mother(utta, doro).    mother(doro, henry).

    pc_father(Person, PCf) | father(Father, Person), pc(Father, PC).
    ...
    mc(Person, MC) | pc_mother(Person, PCm), mc_mother(Person, MCm).
    pc(Person, PC) | pc_father(Person, PCf), mc_father(Person, MCf).
    bt(Person, BT) | pc(Person, PC), mc(Person, MC).

Figure: network over the nodes mc(X), pc(X), bt(X) for X in {ann, rex, utta, brian, fred, doro, henry}. Slide callouts: RV, State.

![[Kersting, De Raedt] Bayesian Logic Programs (BLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-9.jpg)

[Kersting, De Raedt] Bayesian Logic Programs (BLPs)

    alarm :- earthquake, burglary.

• The clause is a local BN fragment: earthquake and burglary are the parents of alarm, with the CPD P(A | B, E) attached.
• Rule graph over the predicates earthquake/0, burglary/0, alarm/0, maryCalls/0, johnCalls/0.

Figure: the alarm Bayesian network (Earthquake, Burglary, Alarm, MaryCalls, JohnCalls) with the CPD P(A | B, E), rows (0.9, 0.1), (0.2, 0.8), (0.9, 0.1), (0.01, 0.99).

![[Kersting, De Raedt] Bayesian Logic Programs (BLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-10.jpg)

[Kersting, De Raedt] Bayesian Logic Programs (BLPs)

Rule graph over the predicates mc/1, pc/1, bt/1 (with the relation mother).

    bt(Person) :- pc(Person), mc(Person).
    ...

Slide callouts: bt, pc and mc are predicates, Person is a variable argument, bt(Person) is an atom.

CPD entries shown for mc(Person):

    mc(Person)   pc(Mother)  mc(Mother)
    (1.0, 0.0)   a           a
    (0.5, 0.0)   a           b
    ...

CPD entries shown for bt(Person):

    bt(Person)        pc(Person)  mc(Person)
    (1.0, 0.0)        a           a
    (0.0, 1.0, 0.0)   a           b
    ...

![[Kersting, De Raedt] Bayesian Logic Programs (BLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-11.jpg)

[Kersting, De Raedt] Bayesian Logic Programs (BLPs)

    mc(Person) | mother(Mother, Person), pc(Mother), mc(Mother).
    pc(Person) | father(Father, Person), pc(Father), mc(Father).
    bt(Person) | pc(Person), mc(Person).

CPD entries shown for the first clause:

    mc(Person)   pc(Mother)  mc(Mother)
    (1.0, 0.0)   a           a
    (0.5, 0.0)   a           b
    ...

Figure: rule graph over mc/1, pc/1, bt/1 (with the relation mother).

![[Kersting, De Raedt] Bayesian Logic Programs (BLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-12.jpg)

[Kersting, De Raedt] Bayesian Logic Programs (BLPs)

    father(rex, fred).   father(brian, doro).   father(fred, henry).
    mother(ann, fred).   mother(utta, doro).    mother(doro, henry).

    mc(Person) | mother(Mother, Person), pc(Mother), mc(Mother).
    pc(Person) | father(Father, Person), pc(Father), mc(Father).
    bt(Person) | pc(Person), mc(Person).

Bayesian network induced over the least Herbrand model.

Figure: network over the nodes mc(X), pc(X), bt(X) for X in {ann, rex, utta, brian, fred, doro, henry}.
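The grounding step can be sketched in a few lines of Python. This is only an illustration of how the three clauses and the family facts determine the parents of each ground random variable; the function name `induced_parents` and the string encoding of atoms are assumptions made here, and founders are assumed to have parentless mc and pc nodes.

```python
# Sketch: ground the three BLP clauses over the family facts and collect,
# for every ground random variable, its parents in the induced network.
# Assumption (not on the slide): founders get mc/pc nodes without parents.
father = {("rex", "fred"), ("brian", "doro"), ("fred", "henry")}
mother = {("ann", "fred"), ("utta", "doro"), ("doro", "henry")}
persons = sorted({p for pair in father | mother for p in pair})


def induced_parents():
    parents = {}
    for p in persons:
        parents[f"mc({p})"] = []
        parents[f"pc({p})"] = []
        # bt(Person) | pc(Person), mc(Person).
        parents[f"bt({p})"] = [f"pc({p})", f"mc({p})"]
    for m, p in mother:
        # mc(Person) | mother(Mother, Person), pc(Mother), mc(Mother).
        parents[f"mc({p})"] = [f"pc({m})", f"mc({m})"]
    for f, p in father:
        # pc(Person) | father(Father, Person), pc(Father), mc(Father).
        parents[f"pc({p})"] = [f"pc({f})", f"mc({f})"]
    return parents


network = induced_parents()
```

Looking up `network["mc(fred)"]` returns the two nodes of fred's mother ann, which mirrors the edges drawn in the induced network.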

![[Kersting, De Raedt] Bayesian Logic Programs (BLPs)](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-13.jpg)

[Kersting, De Raedt] Bayesian Logic Programs (BLPs)

• Unique probability distribution over Herbrand interpretations
  – Finite branching factor, finite proofs, no self-dependency
• Highlight
  – Separation of qualitative and quantitative parts
  – Functors
• Graphical representation
• Discrete and continuous RVs
• BNs, DBNs, HMMs, SCFGs, Prolog, ...
• Turing-complete programming language
• Learning

Declarative Semantics

• Dependency graph = (possibly infinite) Bayesian network
• Consequence operator: if the body of a clause C holds, then the head holds, too. For example, mc(fred) is true because mother(ann, fred), mc(ann) and pc(ann) are true.

Figure: network over the nodes mc(X), pc(X), bt(X) for X in {ann, rex, utta, brian, fred, doro, henry}.
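A minimal Python sketch of the consequence operator, iterated from the empty interpretation until nothing new is derived. The ground clauses below are a hand-picked fragment around fred (an assumption for illustration): facts are encoded as clauses with an empty body, and nothing about rex is given, so only mc(fred) becomes derivable.

```python
# Sketch of the immediate consequence operator on ground clauses.
# Each clause is (head, body); a fact is a clause with an empty body.
ground_clauses = [
    ("mother(ann,fred)", []),
    ("mc(ann)", []),
    ("pc(ann)", []),
    ("mc(fred)", ["mother(ann,fred)", "pc(ann)", "mc(ann)"]),
    ("pc(fred)", ["father(rex,fred)", "pc(rex)", "mc(rex)"]),
    ("bt(fred)", ["pc(fred)", "mc(fred)"]),
]


def consequence(interpretation):
    """Return every head whose body holds in the given interpretation."""
    return {
        head
        for head, body in ground_clauses
        if all(atom in interpretation for atom in body)
    }


def least_model():
    """Iterate the operator from the empty set up to its fixpoint."""
    current = set()
    while True:
        following = consequence(current)
        if following == current:
            return current
        current = following


model = least_model()
```

Adding facts for father(rex, fred), pc(rex) and mc(rex) to `ground_clauses` would make pc(fred), and then bt(fred), derivable as well.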

Procedural Semantics

Query: P(bt(ann)) ?

Figure: network over the nodes mc(X), pc(X), bt(X) for X in {ann, rex, utta, brian, fred, doro, henry}.

Procedural Semantics

Query: P(bt(ann) | bt(fred)) ?

Bayes' rule:

$$P(bt(ann) \mid bt(fred)) = \frac{P(bt(ann),\, bt(fred))}{P(bt(fred))}$$

Figure: network over the nodes mc(X), pc(X), bt(X) for X in {ann, rex, utta, brian, fred, doro, henry}.

Queries using And/Or trees

Query: P(bt(fred)) ?

• Or node is proven if at least one of its successors is provable.
• And node is proven if all of its successors are provable.

And/Or tree for the query (indentation = successor):

    bt(fred)
      pc(fred), mc(fred)
        pc(fred)
          father(rex, fred), mc(rex), pc(rex)
        mc(fred)
          mother(ann, fred), mc(ann), pc(ann)
    ...

Figure: the resulting network fragment over mc(ann), pc(ann), mc(rex), pc(rex), mc(fred), pc(fred), bt(ann), bt(fred), ...

Combining Partial Knowledge

    prepared(Student, Topic) | read(Student, Book), discusses(Book, Topic).
    passes(Student) | prepared(Student, bn), prepared(Student, logic).

Figure: rule graph over read/2 (Student, Book), discusses/2 (Book, Topic), prepared/2 (Student, Topic; instantiated with the topics bn and logic) and passes/1 (Student).

Combining Partial Knowledge

    prepared(Student, Topic) | read(Student, Book), discusses(Book, Topic).

• Variable # of parents for prepared/2 due to read/2
  – whether a student prepared a topic depends on the books she read
• CPD only for one book-topic pair

Figure: ground atoms discusses(b1, bn), discusses(b2, bn), prepared(s1, bn), prepared(s2, bn).

Combining Rules

    prepared(Student, Topic) | read(Student, Book), discusses(Book, Topic).

• A combining rule (CR) maps P(A|B) and P(A|C) to P(A|B, C).
• Any algorithm which
  – has an empty output if and only if the input is empty
  – combines a set of CPDs into a single (combined) CPD
• E.g. noisy-or, regression, ...
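As one concrete combining rule, noisy-or can be sketched as follows. The function name and the example probabilities (0.8 and 0.5) are hypothetical; the sketch assumes Boolean variables and that each input is the probability of the head being true given one active cause.

```python
def noisy_or(cause_probs):
    """Combine P(A=true | cause_i) for all active causes into one value.

    Returns None for an empty input, matching the requirement that the
    output is empty if and only if the input is empty.
    """
    if not cause_probs:
        return None
    p_all_fail = 1.0
    for p in cause_probs:
        # Each cause independently fails to make A true with prob 1 - p.
        p_all_fail *= 1.0 - p
    return 1.0 - p_all_fail


# Hypothetical numbers: two books that each discuss the topic.
combined = noisy_or([0.8, 0.5])
```

With these inputs the combined probability is 1 - 0.2 * 0.5 = 0.9, so reading a second book raises the chance of being prepared.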

Aggregates

Map multisets of values to summary values (e.g., sum, average, max, cardinality)

Predicates: registration_grade/2, registered/2, student_ranking/1, ...

Aggregates

Map multisets of values to summary values (e.g., sum, average, max, cardinality)

• registration_grade/2 to grade_avg/1: functional dependency (average), deterministic
• grade_avg/1 to student_ranking/1: probabilistic dependency (CPD)

Figure: rule graph over registered/2 (Course, Student), registration_grade/2, grade_avg/1 and student_ranking/1 (Student), ...
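The two kinds of dependency can be illustrated with a short Python sketch. The grades, the threshold of 3.0 and the CPD values are all made up for illustration; only the split into a deterministic average followed by a probabilistic dependency comes from the slide.

```python
from statistics import mean

# Hypothetical registration grades, keyed by (student, course).
registration_grade = {
    ("s1", "c1"): 4.0,
    ("s1", "c2"): 3.0,
    ("s2", "c1"): 2.0,
}


def grade_avg(student):
    """Deterministic aggregate: average over the student's registrations."""
    return mean(g for (s, _), g in registration_grade.items() if s == student)


def student_ranking_cpd(avg):
    """Probabilistic dependency: a made-up CPD given the aggregated value."""
    if avg >= 3.0:
        return {"high": 0.8, "low": 0.2}
    return {"high": 0.3, "low": 0.7}


ranking_s1 = student_ranking_cpd(grade_avg("s1"))
```

The aggregate keeps the number of parents of student_ranking fixed at one, no matter how many courses a student is registered in.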

Summary: Model-Theoretic

Underlying logic program

    mc(Person) | mother(Mother, Person), pc(Mother), mc(Mother).
    pc(Person) | father(Father, Person), pc(Father), mc(Father).
    bt(Person) | pc(Person), mc(Person).

+ Consequence operator: if the body holds, then the head holds, too.
= Conditional independencies encoded in the induced BN structure

+ Local probability models: (macro) CPDs

    mc(Person)   pc(Mother)  mc(Mother)
    (1.0, 0.0)   a           a
    (0.5, 0.0)   a           b
    ...

+ CRs: noisy-or, ...
= Joint probability distribution over the least Herbrand interpretation

Learning Tasks

Database + Learning Algorithm = Model

• Parameter Estimation
  – Numerical optimization problem
• Model Selection
  – Combinatorial search

Differences between SL and PLL ?

• Representation (cf. above)
• Structure on the search space becomes more complex
  – operators for traversing the space
  – …
• Algorithms remain essentially the same

What is the data about? – Model Theoretic

Figure: the alarm Bayesian network (Earthquake, Burglary, Alarm, MaryCalls, JohnCalls).

    Model(1): earthquake=yes, burglary=no, alarm=?, marycalls=yes, johncalls=no
    Model(2): earthquake=no, burglary=no, alarm=no, marycalls=no, johncalls=no
    Model(3): earthquake=?, burglary=?, alarm=yes, marycalls=yes, johncalls=yes

What is the data about? – Model Theoretic

Data case:
• Random Variable + States = (partial) Herbrand interpretation
• Akin to "learning from interpretations" in ILP

Bloodtype example

    Background: m(ann, dorothy), f(brian, dorothy), m(cecily, fred), f(henry, fred), f(fred, bob), m(kim, bob), ...
    Model(1): pc(brian)=b, bt(ann)=a, bt(brian)=?, bt(dorothy)=a
    Model(2): bt(cecily)=ab, pc(henry)=a, mc(fred)=?, bt(kim)=a, pc(bob)=b
    Model(3): pc(rex)=b, bt(doro)=a, bt(brian)=?

Parameter Estimation – Model Theoretic

Database D + Underlying logic program L, fed to the Learning Algorithm, yield the Parameters θ.

Parameter Estimation – Model Theoretic

• Estimate the CPD entries θ that best fit the data
• "Best fit": ML parameters θ*

$$\theta^* = \arg\max_{\theta} P(\text{data} \mid \text{logic program}, \theta) = \arg\max_{\theta} \log P(\text{data} \mid \text{logic program}, \theta)$$

• Reduces to the problem of estimating the parameters of a Bayesian network with given structure


Excursus: Decomposable CRs

• Parameters of the clauses and not of the support network.
• Multiple ground instances of the same clause
• Deterministic CPD for the combining rule


Parameter Estimation – Model Theoretic

+ Parameter tying
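Parameter tying means that all ground instances of a clause share one CPD, so their counts are pooled. A minimal Python sketch for fully observed data cases follows; the data cases, the chosen ground instances and the function name are hypothetical, and the missing-value case is not handled here.

```python
from collections import Counter

# Hypothetical fully observed data cases: ground RV -> state.
data_cases = [
    {"pc(ann)": "a", "mc(ann)": "a", "mc(fred)": "a",
     "pc(utta)": "a", "mc(utta)": "b", "mc(doro)": "a"},
    {"pc(ann)": "a", "mc(ann)": "a", "mc(fred)": "a",
     "pc(utta)": "a", "mc(utta)": "b", "mc(doro)": "b"},
]

# Two ground instances of
#   mc(Person) | mother(Mother, Person), pc(Mother), mc(Mother).
ground_instances = [
    ("mc(fred)", ("pc(ann)", "mc(ann)")),
    ("mc(doro)", ("pc(utta)", "mc(utta)")),
]


def tied_ml_estimate(cases, instances):
    """Pool counts over all ground instances, then normalise per body state."""
    joint, marginal = Counter(), Counter()
    for case in cases:
        for head, body in instances:
            body_state = tuple(case[b] for b in body)
            joint[(case[head], body_state)] += 1
            marginal[body_state] += 1
    return {(h, b): n / marginal[b] for (h, b), n in joint.items()}


cpd = tied_ml_estimate(data_cases, ground_instances)
```

The result is a single table for the clause, indexed by head state and body state, rather than one table per node of the support network.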

EM – Model Theoretic

EM-algorithm: iterate until convergence

• Input: logic program L, initial parameters θ0, data cases DC
• Expectation: with the current model (M, θk), run inference for every ground instance GI of a clause and every data case DC to obtain the expected counts of the clause:
  – P( head(GI), body(GI) | DC )
  – P( body(GI) | DC )
• Maximization: update parameters (ML, MAP)
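For the plain ML case, the maximization step for one clause can be written as a ratio of the two expected counts. This is a sketch under the assumption of ML (not MAP) updates; the indices h, b and k are introduced here for illustration, while GI and DC follow the slide.

$$
\theta^{k+1}_{h \mid b} \;=\;
\frac{\sum_{DC} \sum_{GI} P\big(\mathrm{head}(GI) = h,\ \mathrm{body}(GI) = b \mid DC, \theta^{k}\big)}
     {\sum_{DC} \sum_{GI} P\big(\mathrm{body}(GI) = b \mid DC, \theta^{k}\big)}
$$

The sums run over all data cases and over all ground instances of the clause, which is where parameter tying enters.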

Model Selection – Model Theoretic

Database + Learning Algorithm

• Language: Bayesian predicates bt/1, pc/1, mc/1
• Background knowledge: logical predicates mother/2, father/2

Model Selection – Model Theoretic

• Combination of ILP and BN learning
• Combinatorial search for a hypothesis M* s.t.
  – M* logically covers the data D
  – M* is optimal w.r.t. some scoring function score, i.e., M* = argmax_M score(M, D).
• Highlights
  – Refinement operators
  – Background knowledge
  – Language bias
  – Search bias

Refinement Operators

• Add a fact, delete a fact or refine an existing clause:
  – Specialization:
    – add atom
    – apply a substitution { X / Y } where X, Y already appear in atom
    – apply a substitution { X / f(Y1, …, Yn) } where Yi are new variables
    – apply a substitution { X / c } where c is a constant
  – Generalization:
    – delete atom
    – turn 'term' into variable
      • p(a, f(b)) becomes p(X, f(b)) or p(a, f(X))
      • p(a, a) becomes p(X, X) or p(a, X) or p(X, a)
    – replace two occurrences of variable X by X1 and X2
      • p(X, X) becomes p(X1, X2)
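Two of the operators, adding and deleting a body atom, can be sketched in Python. Clauses are encoded as a head string plus a list of body atoms, and the function names are assumptions made for this illustration; the substitution-based operators are left out.

```python
def add_atom(clause, candidate_atoms):
    """Specialization: every clause obtained by adding one new body atom."""
    head, body = clause
    return [(head, body + [atom]) for atom in candidate_atoms if atom not in body]


def delete_atom(clause):
    """Generalization: every clause obtained by deleting one body atom."""
    head, body = clause
    return [(head, body[:i] + body[i + 1:]) for i in range(len(body))]


# bt(X) | mc(X).  refined with the candidate atoms mc(X) and pc(X)
specialised = add_atom(("bt(X)", ["mc(X)"]), ["mc(X)", "pc(X)"])
generalised = delete_atom(("bt(X)", ["mc(X)", "pc(X)"]))
```

A search procedure would score each refined clause set against the data and keep the best candidate, subject to the bias discussed on the later slides.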

Example

Original program

    mc(X) | m(M, X), mc(M), pc(M).
    pc(X) | f(F, X), mc(F), pc(F).
    bt(X) | mc(X), pc(X).

Data cases

    {m(ann, john)=true, pc(ann)=a, mc(ann)=?, f(eric, john)=true, pc(eric)=b, mc(eric)=a, mc(john)=ab, pc(john)=a, bt(john)=?}
    ...

Figure: network over mc(ann), pc(ann), mc(eric), pc(eric), m(ann, john), f(eric, john), mc(john), pc(john), bt(john).

Example

Original program

    mc(X) | m(M, X), mc(M), pc(M).
    pc(X) | f(F, X), mc(F), pc(F).
    bt(X) | mc(X), pc(X).

Initial hypothesis

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X).

Figure: network over mc(ann), pc(ann), mc(eric), pc(eric), m(ann, john), f(eric, john), mc(john), pc(john), bt(john).


Example

Original program

    mc(X) | m(M, X), mc(M), pc(M).
    pc(X) | f(F, X), mc(F), pc(F).
    bt(X) | mc(X), pc(X).

Initial hypothesis

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X).

Refinement

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X), pc(X).

Figure: network over mc(ann), pc(ann), mc(eric), pc(eric), m(ann, john), f(eric, john), mc(john), pc(john), bt(john).

Example

Original program

    mc(X) | m(M, X), mc(M), pc(M).
    pc(X) | f(F, X), mc(F), pc(F).
    bt(X) | mc(X), pc(X).

Initial hypothesis

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X).

Refinement

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X), pc(X).

Refinement

    mc(X) | m(M, X), mc(X).
    pc(X) | f(F, X).
    bt(X) | mc(X), pc(X).

Figure: network over mc(ann), pc(ann), mc(eric), pc(eric), m(ann, john), f(eric, john), mc(john), pc(john), bt(john).

Example

Original program

    mc(X) | m(M, X), mc(M), pc(M).
    pc(X) | f(F, X), mc(F), pc(F).
    bt(X) | mc(X), pc(X).

Initial hypothesis

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X).

Refinement

    mc(X) | m(M, X).
    pc(X) | f(F, X).
    bt(X) | mc(X), pc(X).

Refinement

    mc(X) | m(M, X), pc(X).
    pc(X) | f(F, X).
    bt(X) | mc(X), pc(X).

...

Figure: network over mc(ann), pc(ann), mc(eric), pc(eric), m(ann, john), f(eric, john), mc(john), pc(john), bt(john).


Bias

• Many clauses can be eliminated a priori
• Due to type structure of clauses
  – e.g. atom(compound, atom, charge), bond(compound, atom, atom, bondtype), active(compound)
  – eliminate e.g.
    • active(C) :- atom(X, C, 5)
    • does not conform to the type structure

Bias - continued

• or to modes of predicates: determines calling pattern in queries
  – + : input; - : output
  – mode(atom(+, -, -))
  – mode(bond(+, +, -, -))
  – all variables in head are + (input)
  – active(C) :- bond(C, A1, A2, T) is not mode conform
    • because A1 does not exist in left part of clause and argument declared +
  – active(C) :- atom(C, A, P), bond(C, A, A2, double) is mode conform.
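The mode check can be sketched as a left-to-right scan over the body. This is a minimal illustration, not a full ILP mode system: the encoding of clauses, the function name and the convention that variables start with an upper-case letter are assumptions made here.

```python
# Mode declarations from the slide: '+' is an input, '-' an output argument.
modes = {"atom": "+--", "bond": "++--"}


def is_variable(term):
    return term[0].isupper()


def is_mode_conform(head_vars, body):
    """Check that every '+' argument is bound before it is used."""
    bound = set(head_vars)  # all variables in the head count as inputs
    for predicate, args in body:
        for mode, arg in zip(modes[predicate], args):
            if mode == "+" and is_variable(arg) and arg not in bound:
                return False
        # After the call, the output variables are bound as well.
        bound.update(a for a in args if is_variable(a))
    return True


# active(C) :- bond(C, A1, A2, T)
bad = is_mode_conform(["C"], [("bond", ["C", "A1", "A2", "T"])])
# active(C) :- atom(C, A, P), bond(C, A, A2, double)
good = is_mode_conform(
    ["C"], [("atom", ["C", "A", "P"]), ("bond", ["C", "A", "A2", "double"])]
)
```

The first clause is rejected because A1 sits in a + position without having been bound earlier; in the second clause the atom literal binds A before bond uses it.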

Conclusions on Learning

• Algorithms remain essentially the same
• Structure on the search space becomes more complex
  – Not single edges but bunches of edges are modified

Statistical Learning: Scores, Independency, Priors
Inductive Logic Programming / Multi-relational Data Mining: Refinement Operators, Bias, Background Knowledge

Overview

1. Introduction to PLL
2. Foundations of PLL
   – Logic Programming, Bayesian Networks, Hidden Markov Models, Stochastic Grammars
3. Frameworks of PLL
   – Independent Choice Logic, Stochastic Logic Programs, PRISM
   – Bayesian Logic Programs, Probabilistic Relational Models
   – Logical Hidden Markov Models
4. Applications

Logical (Hidden) Markov Models

Figure: Markov model with the states "CS department", "CS course on data mining", "CS course on statistics", "CS course on Robotics", "Lecturer Luc", "Lecturer Wolfram".

• Each state is trained independently
• No sharing of experience, large state space

Logical (Hidden) Markov Models

Abstract states: dept(D), course(D, C), lecturer(D, L)

Abstract transitions:

    (0.7) dept(D) -> course(D, C).
    (0.2) dept(D) -> lecturer(D, L).
    ...
    (0.3) course(D, C) -> lecturer(D, L).
    (0.3) course(D, C) -> dept(D).
          course(D, C) -> course(D´, C´).
    ...
    (0.1) lecturer(D, L) -> course(D, C).
    ...

Figure: ground states dept(cs), course(cs, stats), course(cs, rob), course(cs, dm), lecturer(cs, luc), lecturer(cs, wolfram).

Logical (Hidden) Markov Models

• So far, only transitions between abstract states
• Needed: possible transitions and their probabilities for any ground state
  – Possible instantiations for each argument of lecturer(D, L): {cs, math, bio, ...} x {luc, wolfram, ...}
  – Chance of instantiations: P(lecturer(cs, luc))

![Logical (Hidden) Markov Models](http://slidetodoc.com/presentation_image/171fdafd0d38a7f63a6790fad0bfae82/image-52.jpg)

Logical (Hidden) Markov Models

Estimating the instantiation probabilities of lecturer(D, L):

• RMMs [Anderson et al. 03]: Probability Estimation Trees
• LOHMMs [Kersting et al. 03]: Naive Bayes, P(D) * P(L)

What is the data about? – Intermediate

Data case:
• (partial) traces (or derivations)
• Akin to Shapiro's algorithmic program debugging

    Trace(1): dept(cs), course(cs, dm), lecturer(pedro, cs), ...
    Trace(2): dept(bio), course(bio, genetics), lecturer(mendel, bio), ...
    Trace(3): dept(cs), course(cs, stats), dept(cs), course(cs, ml), ...

What is the data about? – Proof Theoretic

Definite clause grammar:

    1.0 : S -> NP, VP
    1/3 : NP -> i
    1/3 : NP -> Det, N
    1/3 : NP -> NP, PP
    1.0 : Det -> the
    0.5 : N -> man
    0.5 : N -> telescope
    0.5 : VP -> V, NP
    0.5 : VP -> VP, PP
    1.0 : PP -> P, NP
    1.0 : V -> saw
    1.0 : P -> with

Examples:

    Example(1): s([i, saw, the, man], []).
    Example(2): s([the, man, saw, the, man], []).
    Example(3): s([i, saw, the, man, with, the, telescope], []).
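The probability that the grammar assigns to an example sentence can be computed with the inside algorithm, sketched below in Python. The rule tables transcribe the slide's grammar, with the arrows assumed to have been lost in extraction; the function name is hypothetical, and exact fractions are used to avoid rounding.

```python
from collections import defaultdict
from fractions import Fraction

# Binary rules (A -> B C) and lexical rules (A -> word) with probabilities.
binary = {
    ("S", "NP", "VP"): Fraction(1),
    ("NP", "Det", "N"): Fraction(1, 3),
    ("NP", "NP", "PP"): Fraction(1, 3),
    ("VP", "V", "NP"): Fraction(1, 2),
    ("VP", "VP", "PP"): Fraction(1, 2),
    ("PP", "P", "NP"): Fraction(1),
}
lexical = {
    ("NP", "i"): Fraction(1, 3),
    ("Det", "the"): Fraction(1),
    ("N", "man"): Fraction(1, 2),
    ("N", "telescope"): Fraction(1, 2),
    ("V", "saw"): Fraction(1),
    ("P", "with"): Fraction(1),
}


def sentence_probability(words):
    """Sum the probabilities of all derivations of the sentence from S."""
    n = len(words)
    inside = defaultdict(Fraction)  # (i, j, A): prob. that A yields words[i:j]
    for i, word in enumerate(words):
        for (symbol, terminal), p in lexical.items():
            if terminal == word:
                inside[(i, i + 1, symbol)] += p
    for span in range(2, n + 1):
        for i in range(n - span + 1):
            j = i + span
            for k in range(i + 1, j):
                for (a, b, c), p in binary.items():
                    inside[(i, j, a)] += p * inside[(i, k, b)] * inside[(k, j, c)]
    return inside[(0, n, "S")]


p1 = sentence_probability("i saw the man".split())
p3 = sentence_probability("i saw the man with the telescope".split())
```

Example(3) has two derivations, one attaching the prepositional phrase to the verb phrase and one to the noun phrase, and the function sums both.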

What is the data about? – Proof Theoretic

Data case:
• ground fact (or even clauses)
• Akin to "learning from entailment" (ILP)

Bloodtype example

    Background: m(ann, dorothy), f(brian, dorothy), m(cecily, fred), f(henry, fred), f(fred, bob), m(kim, bob), ...
    Example(1): bt(ann)=a.
    Example(2): bt(fred)=ab
    Example(3): pc(brian)=a
    Example(4): bt(brian)=ab
    Example(5): mc(dorothy)=b
