
Overview
• Basic concepts
• The Models
  – Bell-LaPadula (BLP)
  – Biba
  – Clark-Wilson
  – Chinese Wall
• Systems Evaluation

Basic Concepts

Terminology
• Trusted Computing Base (TCB) – the combination of all protection mechanisms within a computer system
• Subjects / Objects
  – Subjects are active (e.g., users, programs)
  – Objects are passive (e.g., files)
• Reference Monitor – an abstract machine that mediates all subject access to objects
• Security Kernel – the core element of the TCB that enforces the reference monitor’s security policy
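The reference monitor can be shown as a minimal Python sketch in which every access request passes through one checking function. The class `ReferenceMonitor`, its `policy` dictionary and its `audit` list are hypothetical names chosen for illustration. A real security kernel enforces this below the application layer, not inside a user-level object.

```python
from dataclasses import dataclass, field


@dataclass
class ReferenceMonitor:
    """Hypothetical reference monitor: every access request must go through check()."""

    # (subject, object) -> set of permitted access modes (illustrative policy store)
    policy: dict[tuple[str, str], set[str]] = field(default_factory=dict)
    # Every decision is recorded, whether it was granted or denied
    audit: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def check(self, subject: str, obj: str, mode: str) -> bool:
        allowed = mode in self.policy.get((subject, obj), set())
        self.audit.append((subject, obj, mode, allowed))
        return allowed


monitor = ReferenceMonitor({("alice", "payroll.db"): {"read"}})
can_read = monitor.check("alice", "payroll.db", "read")    # permitted by policy
can_write = monitor.check("alice", "payroll.db", "write")  # not in policy, so denied
```

The sketch keeps mediation and auditing in a single method. This reflects the idea that the monitor is the only path from subjects to objects.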

Types of Access Control
• Discretionary Access Control (DAC) – data owners can create and modify the matrix of subject/object relationships (e.g., ACLs)
• Mandatory Access Control (MAC) – “insecure” transactions are prohibited regardless of DAC settings
• MAC rules cannot be enforced with a DAC security kernel
  – Someone with read access to a file can copy it and build a new “insecure” DAC matrix, because he becomes the owner of the new file.
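The copy problem can be reproduced with a toy Python model. Under pure DAC, the copy leaks. A mandatory label check that owners cannot override blocks the same sequence of steps. `DACSystem`, `MACSystem`, `copy_attack`, the users and the file names are all hypothetical. Labelling each new object at its creator's clearance is also an assumption of this sketch; it roughly mirrors the BLP *-property.

```python
class DACSystem:
    """Toy DAC system: each object's owner controls who may read it."""

    def __init__(self):
        self.owner: dict[str, str] = {}     # object -> owning subject
        self.acl: dict[str, set[str]] = {}  # object -> subjects allowed to read
        self.data: dict[str, str] = {}      # object -> contents

    def create(self, subject: str, obj: str, contents: str) -> None:
        # The creator becomes the owner and the only initial reader
        self.owner[obj] = subject
        self.acl[obj] = {subject}
        self.data[obj] = contents

    def grant(self, granter: str, obj: str, grantee: str) -> None:
        # Discretionary: only the owner may change the object's ACL
        if self.owner[obj] != granter:
            raise PermissionError(f"{granter} does not own {obj}")
        self.acl[obj].add(grantee)

    def read(self, subject: str, obj: str) -> str:
        if subject not in self.acl[obj]:
            raise PermissionError(f"{subject} may not read {obj}")
        return self.data[obj]


class MACSystem(DACSystem):
    """Adds a mandatory label check that object owners cannot override."""

    def __init__(self, clearance: dict[str, int]):
        super().__init__()
        self.clearance = clearance       # subject -> clearance level
        self.label: dict[str, int] = {}  # object -> classification level

    def create(self, subject: str, obj: str, contents: str) -> None:
        super().create(subject, obj, contents)
        # Assumption: a new object is labelled at its creator's clearance
        self.label[obj] = self.clearance[subject]

    def read(self, subject: str, obj: str) -> str:
        if self.clearance[subject] < self.label[obj]:
            raise PermissionError(f"{subject} is not cleared for {obj}")
        return super().read(subject, obj)


def copy_attack(system: DACSystem) -> None:
    """Bob copies Alice's file into one he owns, then shares the copy with Mallory."""
    system.create("alice", "plan.txt", "merger details")
    system.grant("alice", "plan.txt", "bob")
    system.create("bob", "copy.txt", system.read("bob", "plan.txt"))
    system.grant("bob", "copy.txt", "mallory")


dac = DACSystem()
copy_attack(dac)
leaked = dac.read("mallory", "copy.txt")  # DAC alone cannot stop this

mac = MACSystem({"alice": 2, "bob": 2, "mallory": 1})
copy_attack(mac)  # every discretionary step still succeeds
```

In the MAC version, `mac.read("mallory", "copy.txt")` raises `PermissionError`. The label travels with the copied data, whatever Bob writes into the ACL.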

Information Flow Models
• Pour cement over a PC and you have a secure system
• In reality, systems move through state transitions
• The key is to ensure that every transition is secure
• Models provide rules for how information flows from state to state
• Information flow models do not address covert channels, e.g.:
  – Trojan horses
  – Requesting system resources to learn about other users
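The idea of ensuring that every transition is secure can be sketched as a tiny state machine. It starts in a secure state and accepts a transition only if the resulting state is still secure, so every reachable state stays secure. The state representation (a set of current accesses), `run_transitions` and the `no_guest_writes` predicate are hypothetical. Models such as BLP define the state and the security predicate far more precisely. Like the models themselves, this sketch does nothing about covert channels.

```python
from typing import Callable, Iterable

# A state is the set of current accesses: (subject, object, mode)
Access = tuple[str, str, str]
State = frozenset[Access]


def run_transitions(
    initial: State,
    requests: Iterable[Access],
    is_secure: Callable[[State], bool],
) -> State:
    """Accept a transition only if the resulting state is still secure."""
    if not is_secure(initial):
        raise ValueError("the initial state must be secure")
    state = initial
    for request in requests:
        candidate = state | {request}
        if is_secure(candidate):
            state = candidate  # secure transition: accept it
        # otherwise the request is denied and the state is left unchanged
    return state


def no_guest_writes(state: State) -> bool:
    """Toy security predicate (an assumption for this example)."""
    return all(not (s == "guest" and m == "write") for s, _, m in state)


final = run_transitions(
    frozenset(),
    [
        ("guest", "notes.txt", "read"),
        ("guest", "notes.txt", "write"),
        ("admin", "notes.txt", "write"),
    ],
    no_guest_writes,
)
```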

Access Control Models

Models
• Bell-LaPadula
• Biba
• Clark-Wilson
• Chinese Wall
A good brief summary is in Harris, p. 247.

Bell-LaPadula (BLP) Model
• BLP is a formal (mathematical) description of mandatory access control
• Three properties:
  – ds-property (discretionary security: each access must also be permitted by the access matrix)
  – ss-property (simple security – no “read up”)
  – *-property (star property – no “write down”)
• A secure system satisfies all of these properties
• BLP includes a mathematical proof (the Basic Security Theorem) that if a system starts in a secure state and every transition satisfies all of the properties, then the system will remain secure.
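The three BLP properties can be written as one access check over security labels. A label here is a hierarchical level plus a set of categories, and one label dominates another if its level is at least as high and its categories are a superset. The names `Label` and `blp_allows` and the integer level encoding are assumptions of this sketch. "write" is treated as append-only access. A subject needing read-write access would have to pass both checks, which in practice means equal labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Label:
    """Security label: a hierarchical level plus a set of categories (compartments)."""

    level: int  # assumed encoding: 0 = Unclassified ... 3 = Top Secret
    categories: frozenset = frozenset()

    def dominates(self, other: "Label") -> bool:
        # Level at least as high AND a superset of the other label's categories
        return self.level >= other.level and self.categories >= other.categories


def blp_allows(subject: Label, obj: Label, mode: str, in_matrix: bool = True) -> bool:
    if not in_matrix:  # ds-property: the access matrix must also permit the access
        return False
    if mode == "read":  # ss-property: no read up
        return subject.dominates(obj)
    if mode == "write":  # *-property: no write down
        return obj.dominates(subject)
    raise ValueError(f"unknown mode: {mode}")
```

For example, a Secret subject (`Label(2)`) may read an Unclassified object (`Label(0)`), but may not write to it. This stops it from leaking Secret data downward.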

Bell-LaPadula Model (Continued)
• The Honeywell Multics kernel was the only true implementation of BLP, but it never took hold
• DOD information security requirements are currently met through discretionary access control and segregation of systems rather than BLP-compliant computers

Biba Model
• Similar to BLP, but the focus is on integrity, not confidentiality
• The result is to turn the BLP model upside down
  – High-integrity subjects cannot read lower-integrity objects (no “read down”)
  – Subjects cannot move low-integrity data to a high-integrity environment (no “write up”)
• McLean notes that the ability to flip models this way essentially renders their assurance properties useless
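The "upside down" relationship can be checked mechanically. Using simple linear levels and no categories, Biba's strict integrity rules give the same answers as the BLP rules applied to negated levels. Both functions are illustrative sketches, not a complete model.

```python
def blp_allows(subject_level: int, object_level: int, mode: str) -> bool:
    """BLP confidentiality rules on a simple linear scale (no categories)."""
    if mode == "read":  # no read up
        return subject_level >= object_level
    if mode == "write":  # no write down
        return object_level >= subject_level
    raise ValueError(f"unknown mode: {mode}")


def biba_allows(subject_integrity: int, object_integrity: int, mode: str) -> bool:
    """Biba strict integrity rules."""
    if mode == "read":  # simple integrity: no read down
        return object_integrity >= subject_integrity
    if mode == "write":  # *-integrity: no write up
        return subject_integrity >= object_integrity
    raise ValueError(f"unknown mode: {mode}")


# Biba is BLP with the ordering reversed: negating the levels maps one onto the other.
levels = range(4)
flipped = all(
    biba_allows(s, o, m) == blp_allows(-s, -o, m)
    for s in levels
    for o in levels
    for m in ("read", "write")
)
```

`flipped` evaluates to `True` for every combination of levels and modes checked here.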

Clark-Wilson Model
• Reviews the distinction between military and commercial policy
  – Military policy focuses on confidentiality
  – Commercial policy focuses on integrity
• Mandatory commercial controls typically govern who may perform which type of transaction rather than who sees what (example: cutting a check above a certain dollar amount)

Clark-Wilson Model (Continued)
• Two types of data items:
  – Constrained Data Items (CDIs)
  – Unconstrained Data Items (UDIs)
• Two types of procedures on CDIs in the model:
  – Integrity Verification Procedures (IVPs)
  – Transformation Procedures (TPs)
• IVPs confirm that all CDIs are in a valid state at the time the IVP runs
• All TPs must be certified to transform CDIs from one valid state to another

Clark-Wilson Model (Continued)
• The system maintains a list of valid relations of the form {UserID, TP, CDIs}, naming the CDIs a given user may manipulate through a given TP
• The only permitted manipulation of a CDI is via an authorized TP
• A TP that takes a UDI as input must either transform it into a valid CDI or reject the UDI
• Additional requirements
  – Auditing: TPs must write to an append-only CDI (log)
  – Separation of duties
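The enforcement rules above can be sketched in Python under several simplifying assumptions:

- Each relation names a single CDI rather than a set.
- TP certification is assumed to have happened offline.
- The "append-only" log is append-only only by convention inside the class.
- Separation of duties is not modelled.

The `ClarkWilson` class, the `deposit` TP, the account name and the no-negative-balance invariant used by the IVP are all hypothetical.

```python
from typing import Callable

# A TP takes the current CDIs, the target CDI name and an untrusted UDI, and returns updates
TP = Callable[[dict[str, int], str, str], dict[str, int]]


def deposit(cdis: dict[str, int], account: str, raw_amount: str) -> dict[str, int]:
    """Hypothetical TP: turn a UDI (raw text) into a valid CDI update, or reject it."""
    if not raw_amount.isdigit() or int(raw_amount) == 0:
        raise ValueError(f"UDI rejected: {raw_amount!r}")
    return {account: cdis[account] + int(raw_amount)}


class ClarkWilson:
    def __init__(self, cdis: dict[str, int], triples: set, tps: dict[str, TP]):
        self.cdis = dict(cdis)
        self.triples = set(triples)  # {(UserID, TP name, CDI name)}
        self.tps = dict(tps)         # TPs assumed to be certified offline
        self._log: list = []         # audit log: this class only ever appends to it

    @property
    def log(self) -> tuple:
        return tuple(self._log)      # read-only view for callers

    def ivp(self) -> bool:
        """IVP for an assumed invariant: no account balance is negative."""
        return all(balance >= 0 for balance in self.cdis.values())

    def execute(self, user: str, tp_name: str, cdi: str, udi: str) -> None:
        # CDIs change only through a TP listed in an authorized relation
        if (user, tp_name, cdi) not in self.triples:
            self._log.append(f"DENY {user} {tp_name} {cdi}")
            raise PermissionError(f"{user} may not run {tp_name} on {cdi}")
        try:
            updates = self.tps[tp_name](self.cdis, cdi, udi)
        except ValueError:
            self._log.append(f"REJECT-UDI {user} {tp_name} {cdi}")
            raise
        self.cdis.update(updates)
        self._log.append(f"OK {user} {tp_name} {cdi}")


bank = ClarkWilson({"acct-1": 100}, {("clerk", "deposit", "acct-1")}, {"deposit": deposit})
bank.execute("clerk", "deposit", "acct-1", "50")  # balance becomes 150
```

An unlisted user (for example `"intern"`) gets `PermissionError`. A malformed UDI such as `"-5"` is rejected and leaves the CDI untouched. Both outcomes are logged.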

Clark-Wilson versus Biba
• In Biba’s model, UDI-to-CDI conversion is performed only by a trusted subject (e.g., a security officer), which is problematic for data entry functions.
• In Clark-Wilson, TPs are specified for particular users and functions; Biba’s model does not offer this level of granularity.

Chinese Wall
• Focus is on conflicts of interest
• Principle: users should not access the confidential information of both a client organization and one or more of its competitors
• How it works
  – Users have no “wall” initially
  – Once a user accesses any given file, files containing competitors’ information become inaccessible to that user
  – Unlike other models, access control rules change with user behavior
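The read rule of the Chinese Wall (Brewer-Nash) model can be sketched in Python. A user's access history determines which files are still readable. A request is blocked only if the user has already touched a different company in the same conflict-of-interest class. The class `ChineseWall`, the companies and the file names are hypothetical. Sanitized information and the model's write rule are not modelled.

```python
class ChineseWall:
    """Sketch of Brewer-Nash read checks; companies and classes are illustrative."""

    def __init__(self, company_of: dict, conflict_class_of: dict):
        self.company_of = company_of                # object -> company dataset
        self.conflict_class_of = conflict_class_of  # company -> conflict-of-interest class
        self.history: dict = {}                     # user -> companies already accessed

    def can_read(self, user: str, obj: str) -> bool:
        company = self.company_of[obj]
        conflict_class = self.conflict_class_of[company]
        for seen in self.history.get(user, set()):
            # Blocked if the user already accessed a different company in the same class
            if seen != company and self.conflict_class_of[seen] == conflict_class:
                return False
        return True

    def read(self, user: str, obj: str) -> None:
        if not self.can_read(user, obj):
            raise PermissionError(f"{user} is behind the wall for {obj}")
        # Recording the access changes the rules for this user's later requests
        self.history.setdefault(user, set()).add(self.company_of[obj])


wall = ChineseWall(
    company_of={"a-plan.doc": "BankA", "a-fees.doc": "BankA", "b-plan.doc": "BankB", "oil.doc": "OilCo"},
    conflict_class_of={"BankA": "banks", "BankB": "banks", "OilCo": "oil"},
)
before = wall.can_read("carol", "b-plan.doc")  # no wall yet
wall.read("carol", "a-plan.doc")               # now BankB is off limits for carol
```

After Carol reads a BankA file, she can still read other BankA files and OilCo files, but not BankB files. Other users are unaffected.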

Systems Evaluation

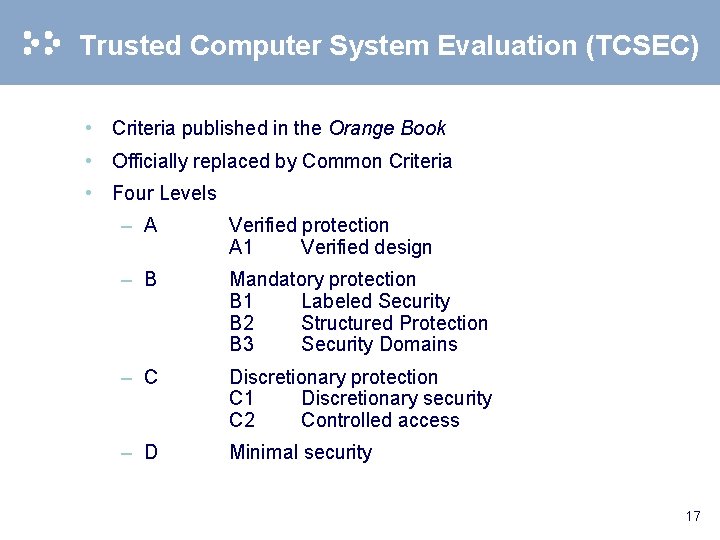

Trusted Computer System Evaluation Criteria (TCSEC)
• Criteria published in the Orange Book
• Officially replaced by the Common Criteria
• Four divisions (levels), from highest to lowest:
  – A Verified protection: A1 Verified design
  – B Mandatory protection: B1 Labeled security, B2 Structured protection, B3 Security domains
  – C Discretionary protection: C1 Discretionary security, C2 Controlled access
  – D Minimal security

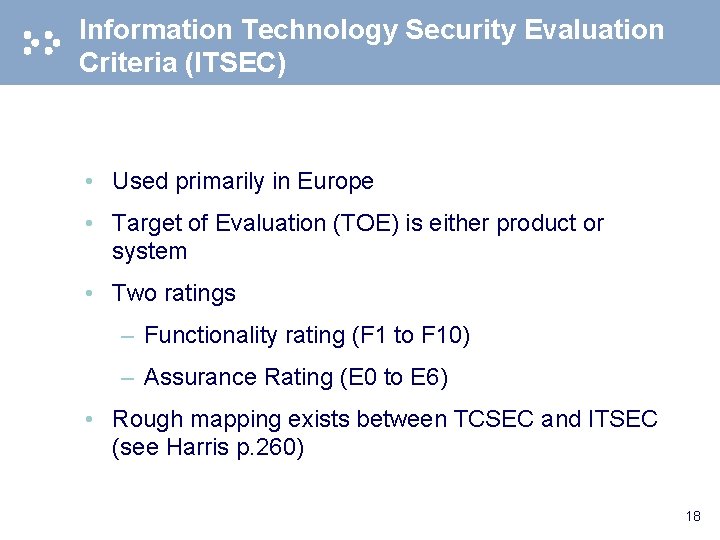

Information Technology Security Evaluation Criteria (ITSEC)
• Used primarily in Europe
• The Target of Evaluation (TOE) is either a product or a system
• Two ratings
  – Functionality rating (F1 to F10)
  – Assurance rating (E0 to E6)
• A rough mapping exists between TCSEC and ITSEC (see Harris p. 260)

Common Criteria
• ISO standard (ISO/IEC 15408) evaluation criteria that combine several earlier criteria, including TCSEC and ITSEC
• Participating governments recognize Common Criteria certifications awarded in other nations
• Seven Evaluation Assurance Levels (EAL1 to EAL7)
• Uses protection profiles (see Harris p. 262)

Common Criteria – Evaluation Assurance Levels – Overview
• Define a scale for measuring the criteria for the evaluation of PPs (Protection Profiles) and STs (Security Targets)
• Constructed using components from the assurance families
• Organization
  – Seven hierarchically ordered EALs on a uniformly increasing scale of assurance

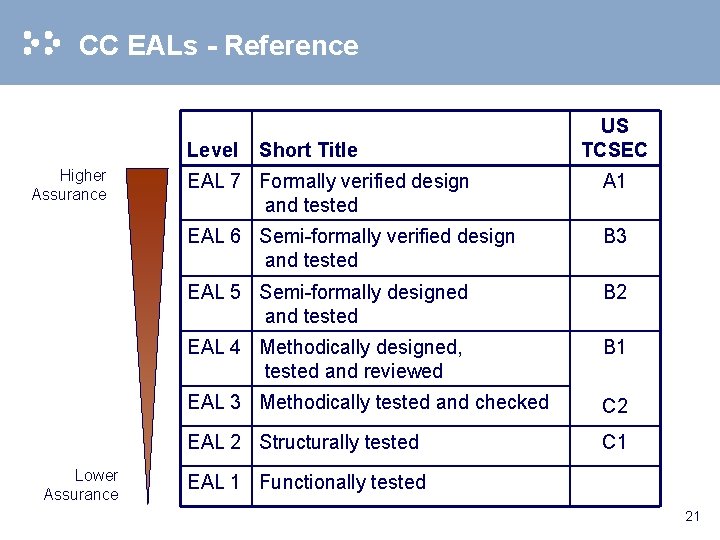

CC EALs – Reference

| Level (higher to lower assurance) | Short Title | US TCSEC |
|---|---|---|
| EAL7 | Formally verified design and tested | A1 |
| EAL6 | Semi-formally verified design and tested | B3 |
| EAL5 | Semi-formally designed and tested | B2 |
| EAL4 | Methodically designed, tested and reviewed | B1 |
| EAL3 | Methodically tested and checked | C2 |
| EAL2 | Structurally tested | C1 |
| EAL1 | Functionally tested | (none) |

CC EALs – Summary 1–3
• EAL1 – Functionally tested
  – “Applicable where some confidence in correct operation is required, but the threats to security are not viewed as serious”
• EAL2 – Structurally tested
  – “Applicable where developers or users require a low to moderate level of independently assured security”
• EAL3 – Methodically tested and checked
  – “Applicable where the requirement is for a moderate level of independently assured security”

CC EALs – Summary 4–5
• EAL4 – Methodically designed, tested and reviewed
  – “Applicable where developers or users require a moderate to high level of independently assured security”
• EAL5 – Semi-formally designed and tested
  – “Applicable where the requirement is for a high level of independently assured security”

CC EALs – Summary 6–7
• EAL6 – Semi-formally verified design and tested
  – “Applicable to the development of specialised TOEs (Targets of Evaluation), for high risk situations”
• EAL7 – Formally verified design and tested
  – “Applicable to the development of security TOEs for application in extremely high risk situations”
