
OVERCOMING APPLES TO ORANGES: ACADEMIC RESEARCH IMPACT IN THE REAL WORLD
Thane Chambers, Faculty of Nursing & University of Alberta Libraries; Leah Vanderjagt, University of Alberta Libraries

WELCOME ● Who we are ● What we'll cover o What is the broad context for the need for research performance metrics in post-secondary education? o What are some issues with research performance metrics? o How can we improve research performance assessment?

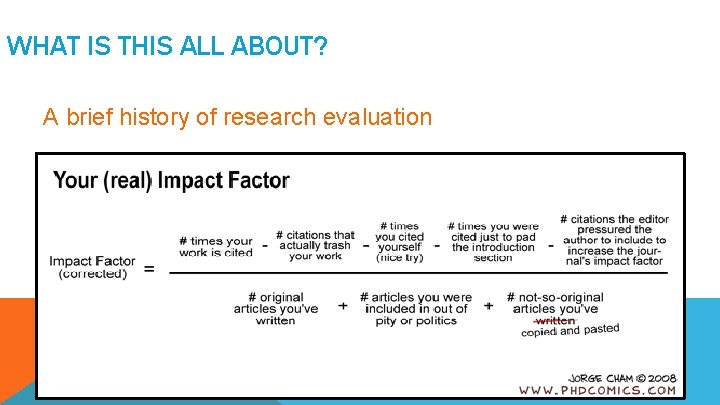

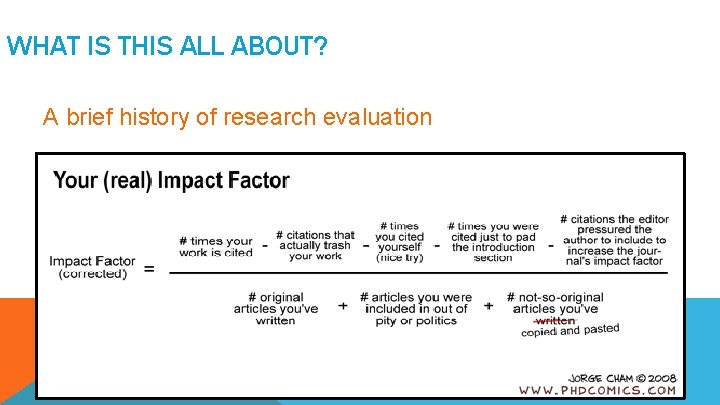

WHAT IS THIS ALL ABOUT? A brief history of research evaluation

FUNDAMENTAL PRINCIPLES OF RESEARCH METRICS: ● Single indicators are misleading on their own ● Integration of both qualitative & quantitative data is necessary ● Various frameworks for research performance already exist

FUNDAMENTAL PRINCIPLES OF RESEARCH METRICS: ● Times cited ≠ Quality ● Discipline to discipline comparison is inappropriate ● Citation databases cover certain disciplines better than others

BROAD/GLOBAL CONTEXT ● Recent upswing in interest in research evaluation ● Nationwide assessments in the UK, Australia, and New Zealand ● An audit culture is growing

METRICS WANTED • For what? • Performance (strength and weakness) • Comparison (with other institutions) • Collaboration (potential and existing) • Traditional metrics • Altmetrics

WHO'S IN THE GAME: CONSUMERS ● Senior university administrators ● Funding agencies ● Institutional funders ● Researchers ● Librarians

WHO'S IN THE GAME: PRODUCERS (VENDORS) ● Elsevier: Scopus and SciVal ● Thomson Reuters: Web of Science and InCites ● Digital Science: Symplectic Elements ● Article-level metrics (altmetrics) solutions

VENDOR CLAIMS ● Quick, easy, and meaningful benchmarking ● Ability to devise optimal plans ● Flexibility ● Insightful analysis to identify unknown areas of research excellence … all at the push of a button!

WHAT DO WE FIND WHEN WE TEST THESE CLAIMS?

WHAT'S NEEDED: PERSISTENT IDENTIFIERS ● Without DOIs, how can impact be tracked? ● ISBNs, repository handles ● Disciplinary and geographic differences in DOI coverage: DOI assignment costs money ● What about grey literature? ● Altmetrics may still depend on DOIs

WHAT’S NEEDED: NAME DISAMBIGUATION (THE BIGGEST PROBLEM)

WHAT'S NEEDED: SOURCE COVERAGE ● The most prominent products still draw their source coverage from Scopus and Web of Science (STEM-heavy) ● Integration of broader sources is packaged with more expensive implementations ● Some products specifically market broad source coverage (Symplectic Elements)

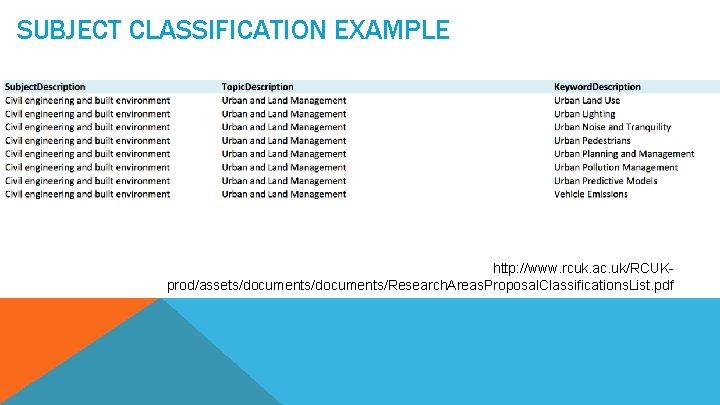

WHAT'S NEEDED: NATIONAL SUBJECT AREA CLASSIFICATION (TO A FINE LEVEL) ● Subject classification in products is EXTREMELY broad: so broad that comparisons are inappropriate ● Integration of a national standard of granular subject classification would help everyone

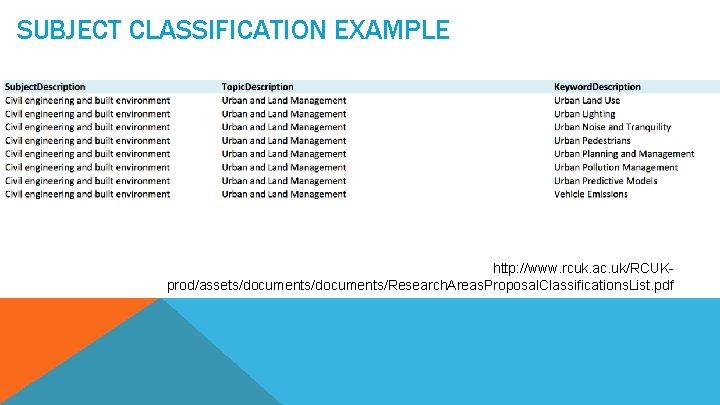

SUBJECT CLASSIFICATION EXAMPLE http://www.rcuk.ac.uk/RCUKprod/assets/documents/ResearchAreasProposalClassificationsList.pdf

WHAT'S NEEDED: TRAINING & KNOWLEDGE ● Do all CONSUMERS want or need training? ● Have we analyzed our own services for citation impact and metrics analysis? ● Top-to-bottom organizational training, grounded in the institution's identified strategic needs for metrics

WHAT'S NEEDED: PROCESSES & WORKFLOWS ● Data cleaning ● Verification of new data ● Running analyses ● Verifying analyses

WHAT'S NEEDED: CULTURAL UNDERSTANDING ● How will the data be used, and who will be rewarded? ● An audit culture ● The social sciences, humanities, and arts would have justified concerns about adopting citation-based tools

HOW CAN ACADEMIC LIBRARIES HELP? ● Share our knowledge of best practices and other effective implementations ● Challenge vendors to address problems ● Provide training on author ID systems and ID assignment, and integrate author IDs with digital initiatives

HOW CAN ACADEMIC LIBRARIES HELP? ● Advocate for national comparison standards (CASRAI) ● Employ our subject-focused outreach model ● As a central unit, make broad organizational connections to help with implementation ● Promote our expertise: bibliographic analysis is an LIS domain

RECOMMENDATIONS ● Strategic leaders need to initiate university-wide conversations about what research evaluation means for the institution ● Tools need to be flexible enough to incorporate non-journal-based scholarly work and data ● New workflows need to be minimized and incorporated into existing workflows as much as possible ● Broad adoption of the ORCID iD system

REFERENCES ● Marjanovic, S., Hanney, S., & Wooding, S. (2009). A Historical Reflection on Research Evaluation Studies, Their Recurrent Themes and Challenges. Technical Report. RAND Corporation. ● Moed, H. F. (2005). Citation Analysis in Research Evaluation. Dordrecht: Springer.

Apples juicy apples round poem

Apples juicy apples round poem Apples juicy apples round poem

Apples juicy apples round poem Apples here

Apples here Apples here apples there

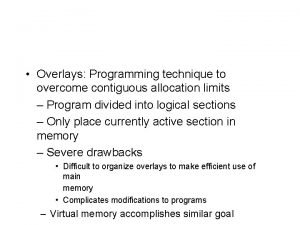

Apples here apples there A technique for overcoming internal fragmentation

A technique for overcoming internal fragmentation Overcoming the dark side of leadership

Overcoming the dark side of leadership Overcoming barriers to employment

Overcoming barriers to employment Scripture about overcoming adversity

Scripture about overcoming adversity Overcoming communication barriers in pharmacy

Overcoming communication barriers in pharmacy Do we our life done

Do we our life done Overcoming hindrances to spiritual development

Overcoming hindrances to spiritual development How did helen keller overcome adversity

How did helen keller overcome adversity Roz shafran perfectionism

Roz shafran perfectionism Overcoming life issues

Overcoming life issues Writing a narrative about overcoming a challenge

Writing a narrative about overcoming a challenge Overcoming challenges essay

Overcoming challenges essay Knowledge2practice

Knowledge2practice 7 basic plots

7 basic plots There isnt any cheese

There isnt any cheese On saturday he ate through one piece of chocolate cake

On saturday he ate through one piece of chocolate cake Did pumpkins come from the new world or old world

Did pumpkins come from the new world or old world Is a rusting bicycle a chemical or physical change

Is a rusting bicycle a chemical or physical change Mother and daughter by gary soto summary

Mother and daughter by gary soto summary How do authors use imagery

How do authors use imagery