Outline Information Retrieval Models Vector space model Language

- Slides: 48

Outline Information Retrieval Models Vector space model Language modeling (Laplace smoothing、Jelinek-Mercer smoothing) Machine Learning Models Support Neural Networks XGBoost Vector Machine Conclusion

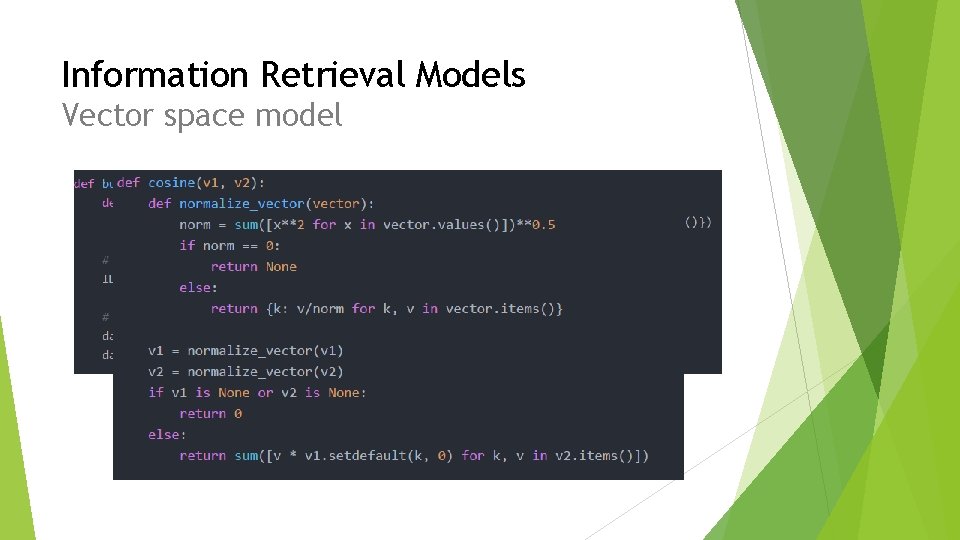

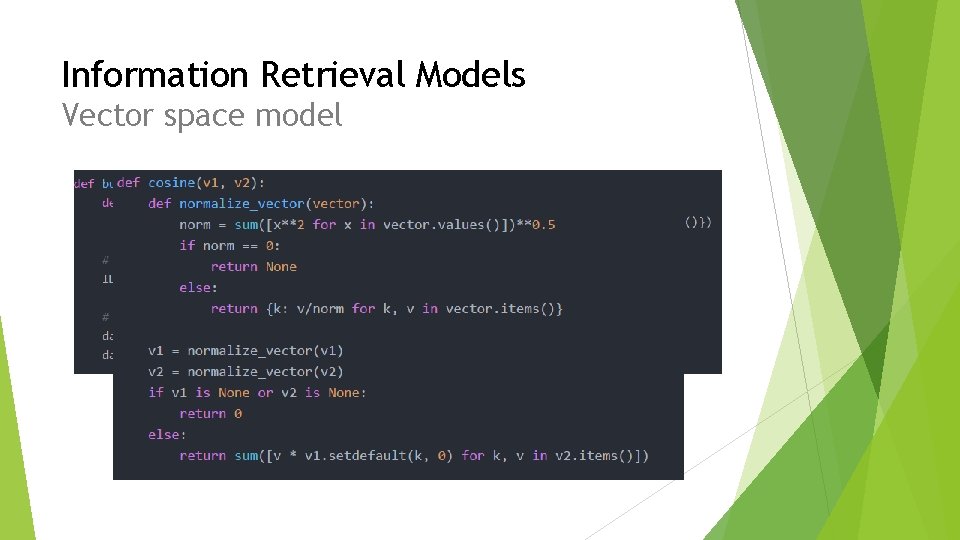

Information Retrieval Models Vector space model

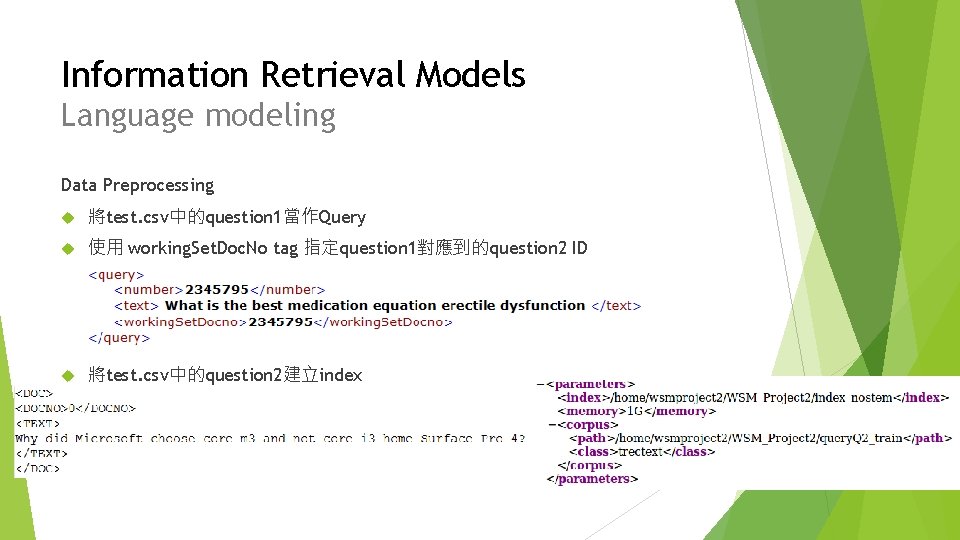

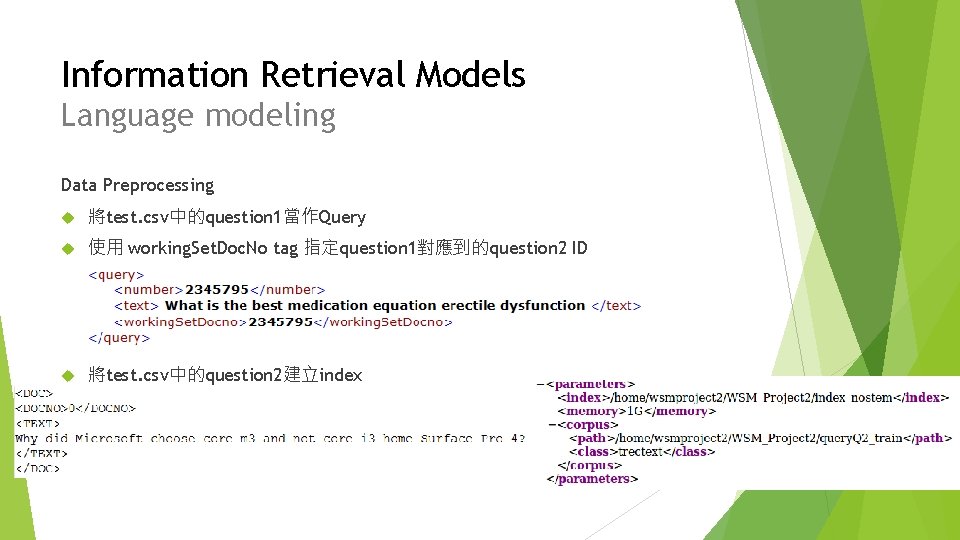

Information Retrieval Models Language modeling Data Preprocessing 將test. csv中的question 1當作Query 使用 working. Set. Doc. No tag 指定question 1對應到的question 2 ID 將test. csv中的question 2建立index

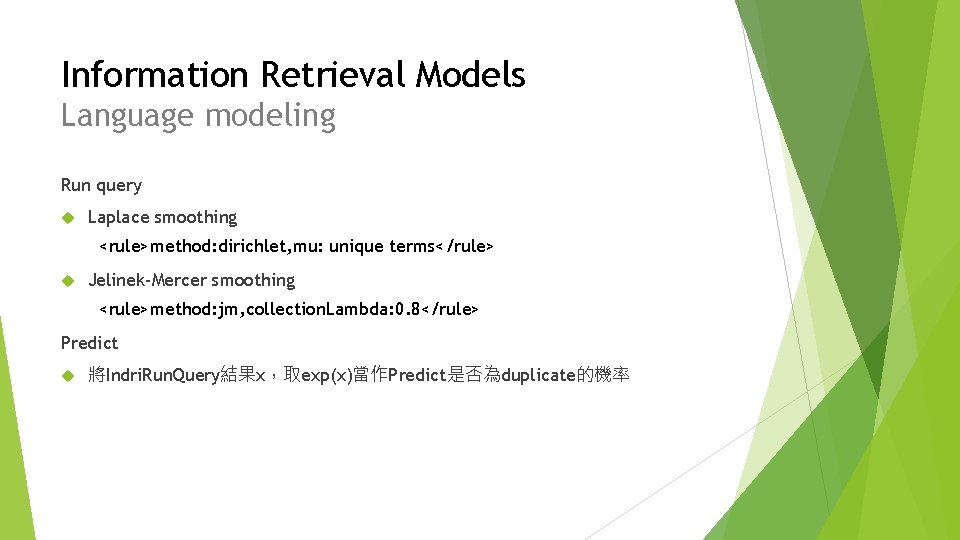

Information Retrieval Models Language modeling Run query Laplace smoothing <rule>method: dirichlet, mu: unique terms</rule> Jelinek-Mercer smoothing <rule>method: jm, collection. Lambda: 0. 8</rule> Predict 將Indri. Run. Query結果x,取exp(x)當作Predict是否為duplicate的機率

Support Vector Machine Learning Models

Machine Learning Models Support Vector Machine Data Preprocessing Feature Selecting Cross-Validation Grid Model Training Predicting

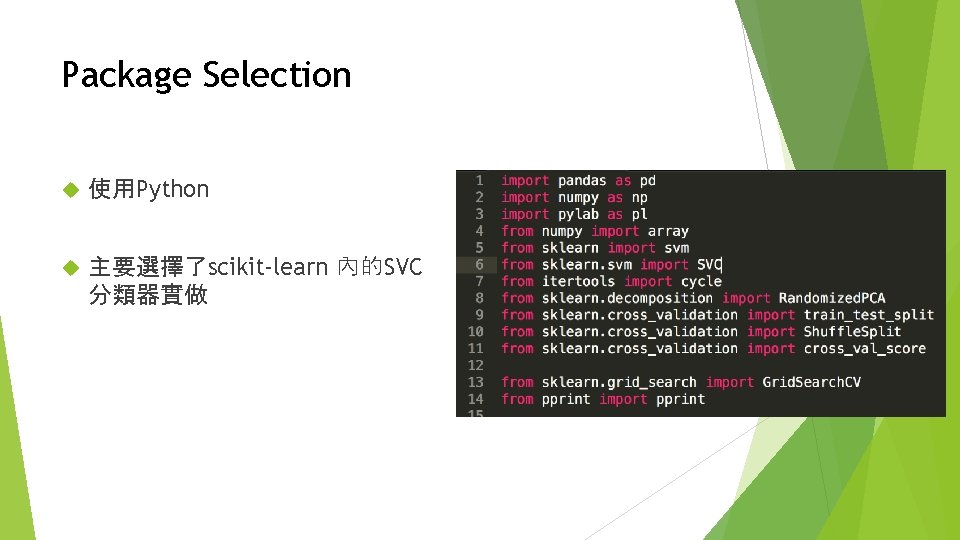

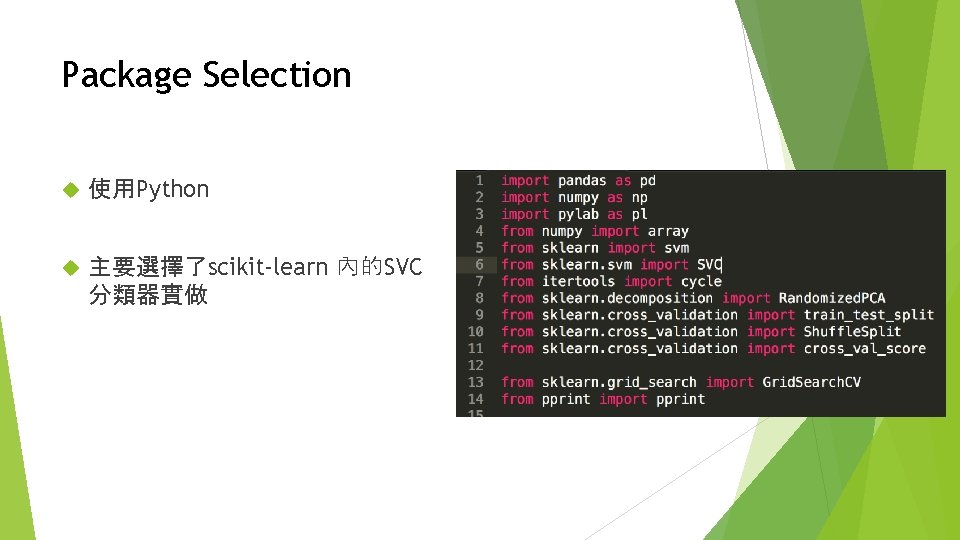

Package Selection 使用Python 主要選擇了scikit-learn 內的SVC 分類器實做

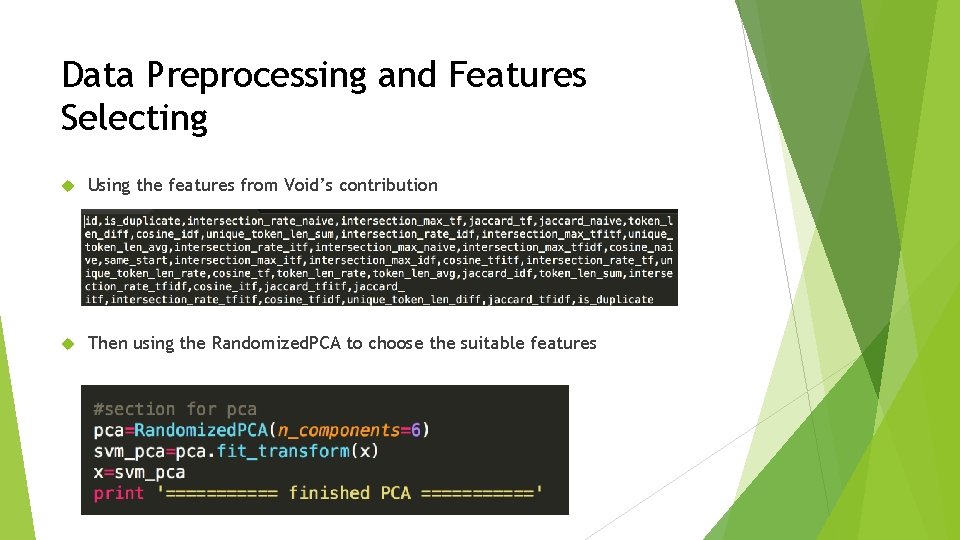

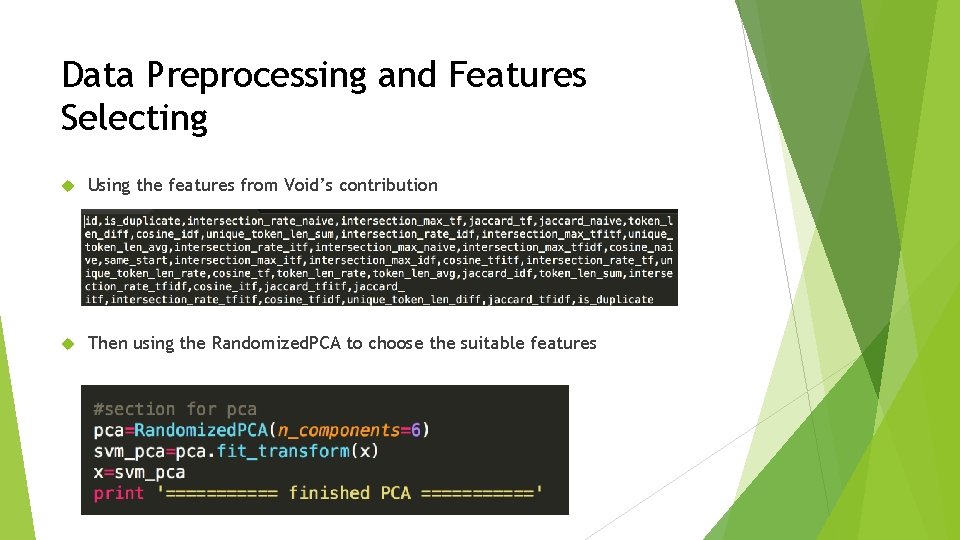

Data Preprocessing and Features Selecting Using the features from Void’s contribution Then using the Randomized. PCA to choose the suitable features

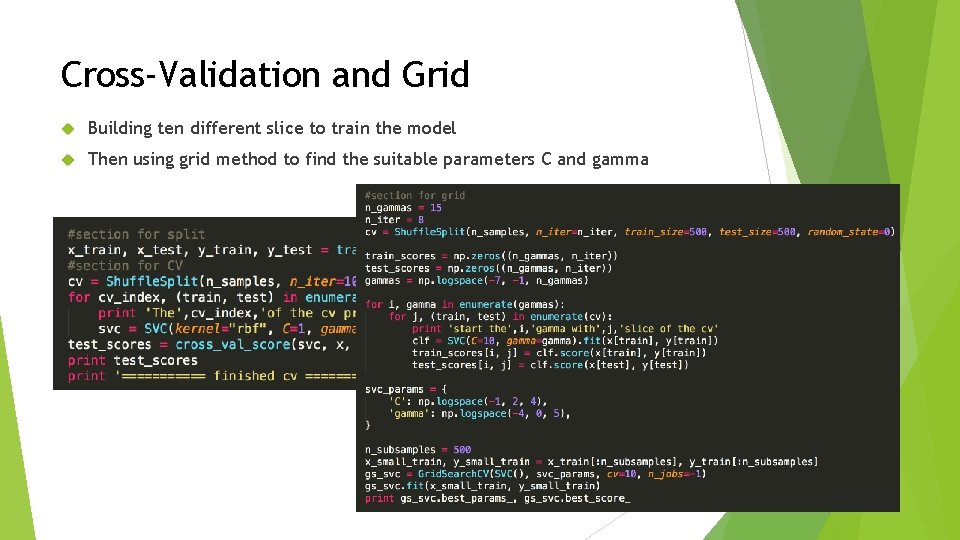

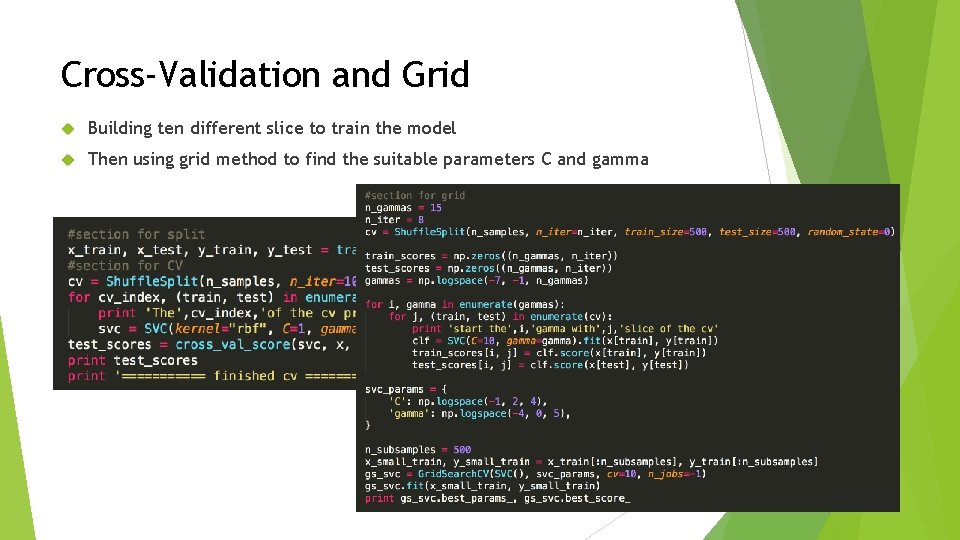

Cross-Validation and Grid Building ten different slice to train the model Then using grid method to find the suitable parameters C and gamma

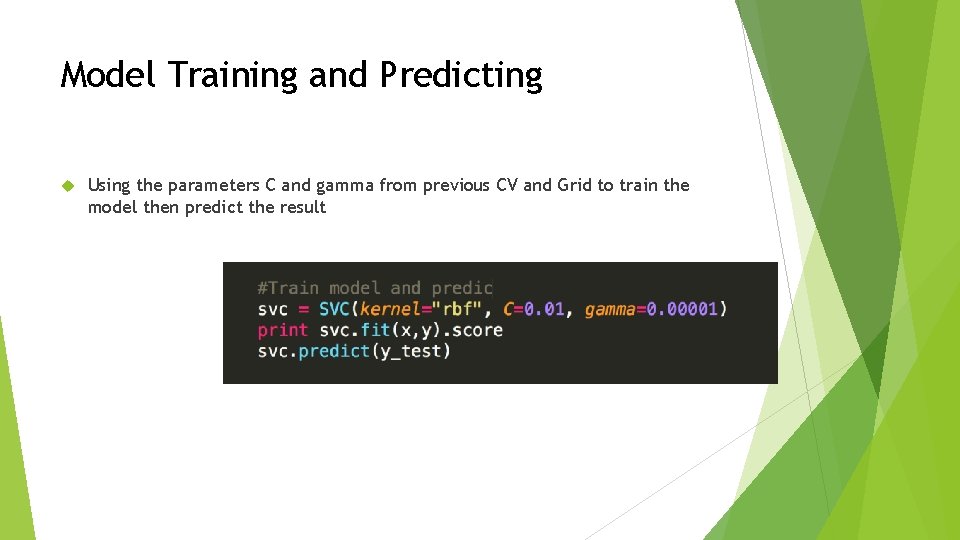

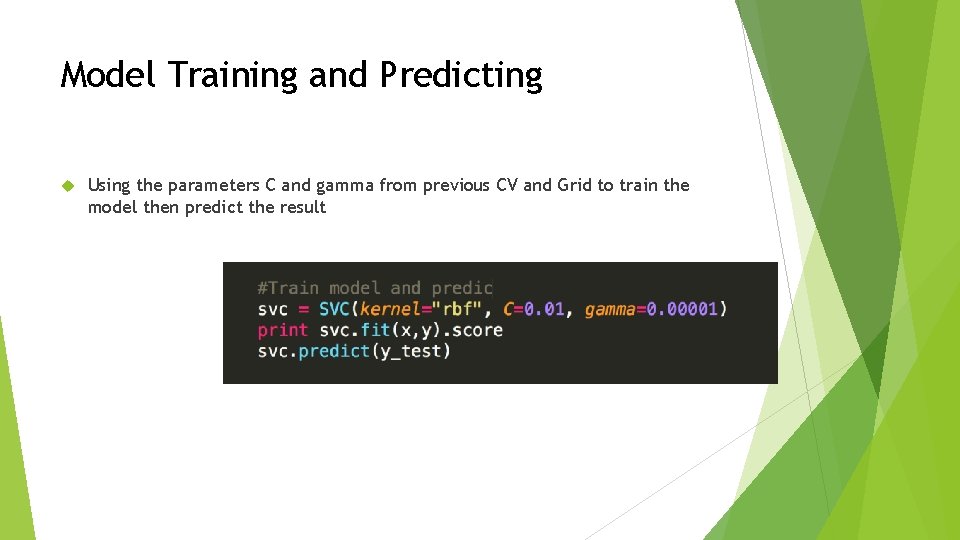

Model Training and Predicting Using the parameters C and gamma from previous CV and Grid to train the model then predict the result

Neural Networks Machine Learning Models

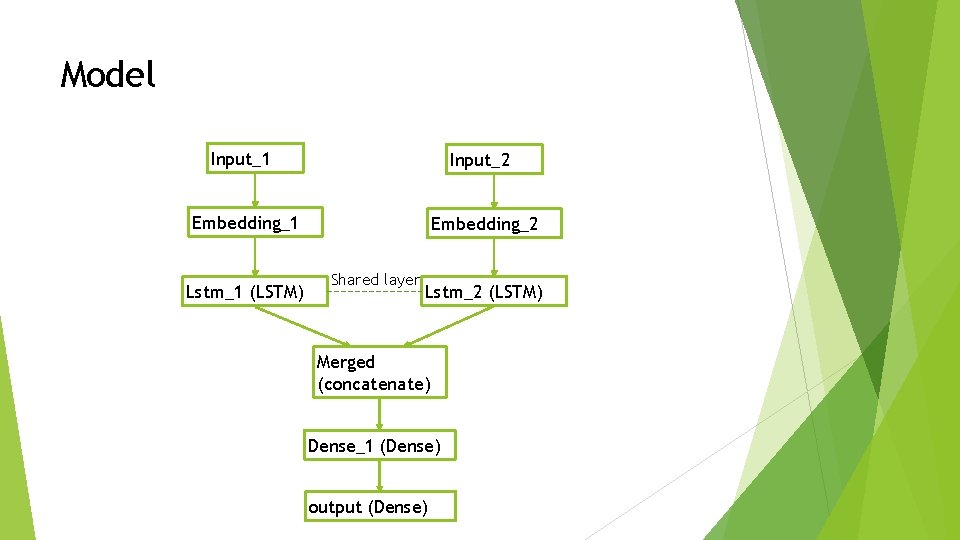

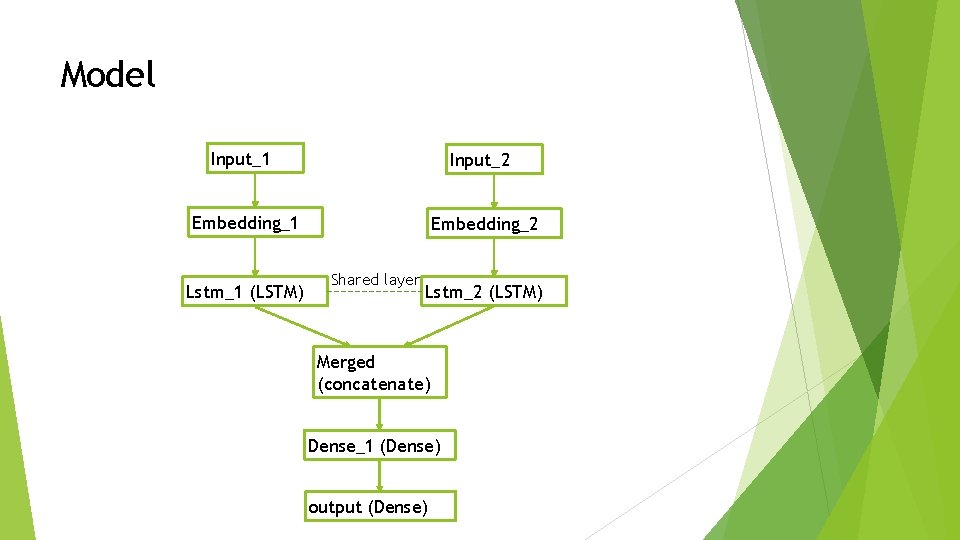

Machine Learning Models Neural Networks Model Word Embedding Callbacks Data clean

Model Input_1 Input_2 Embedding_1 Embedding_2 Lstm_1 (LSTM) Shared layer Lstm_2 (LSTM) Merged (concatenate) Dense_1 (Dense) output (Dense)

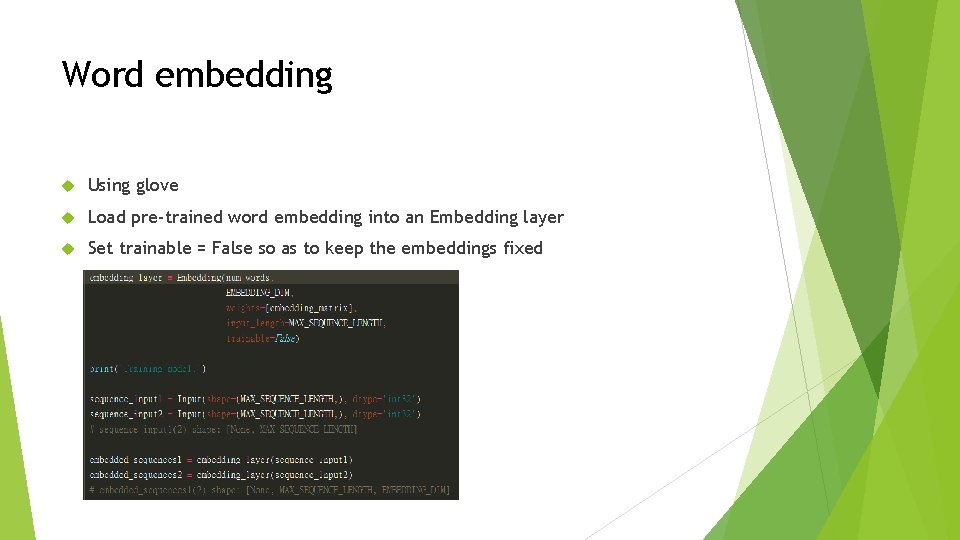

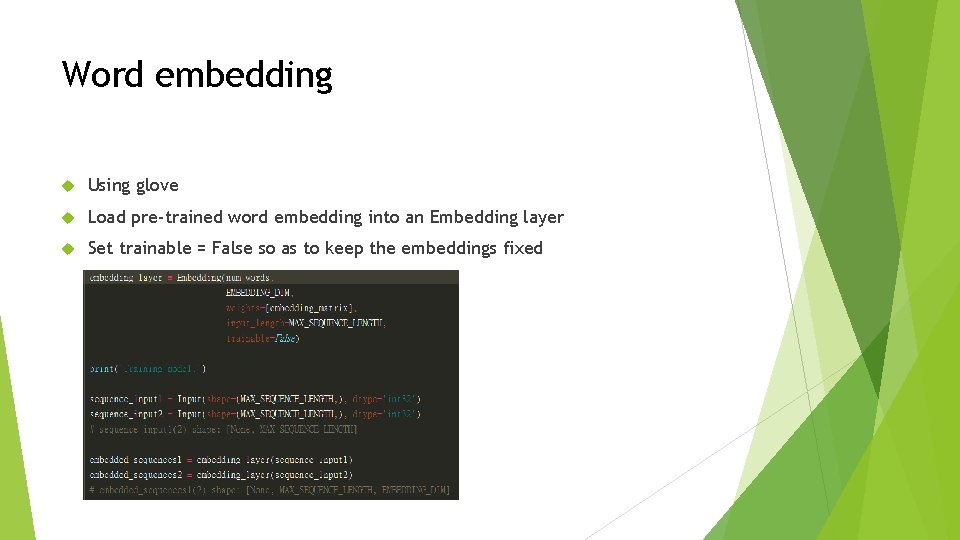

Word embedding Using glove Load pre-trained word embedding into an Embedding layer Set trainable = False so as to keep the embeddings fixed

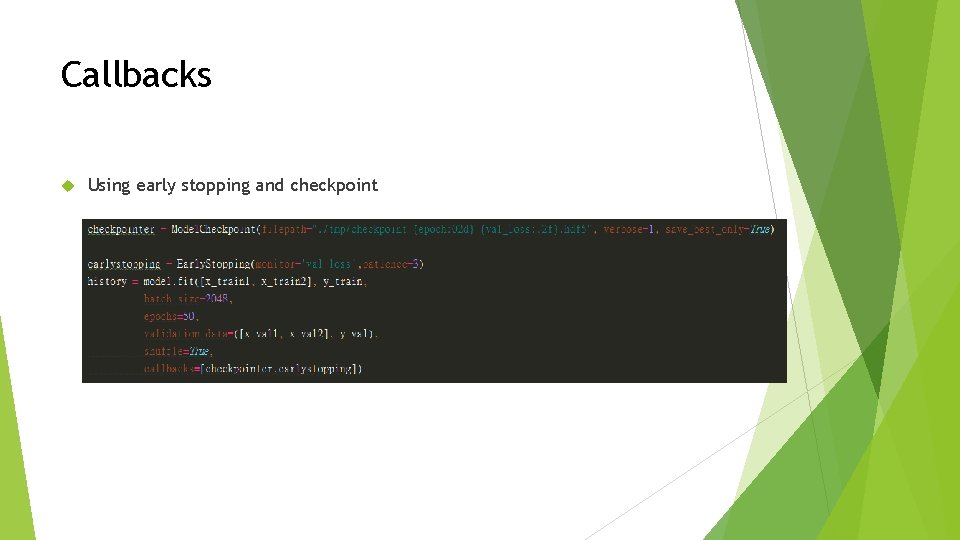

Callbacks Using early stopping and checkpoint

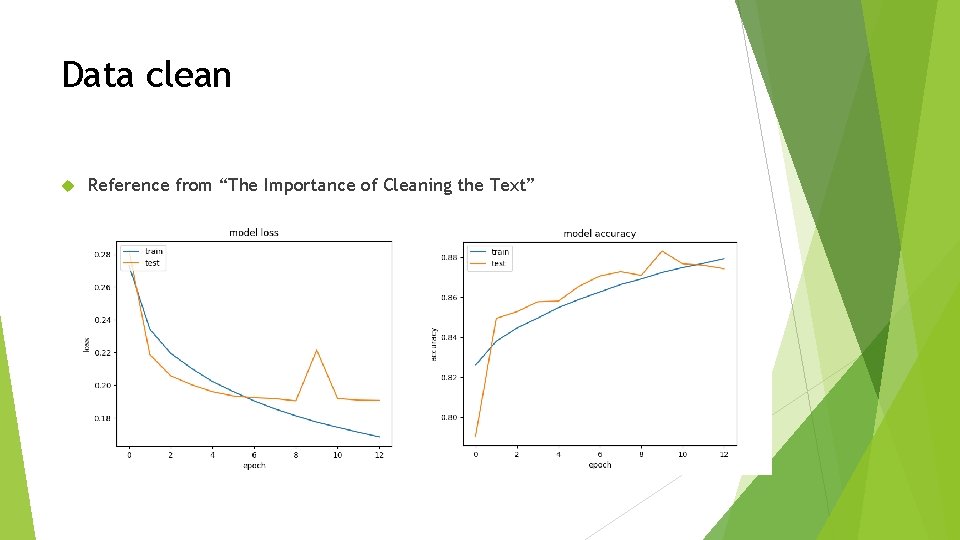

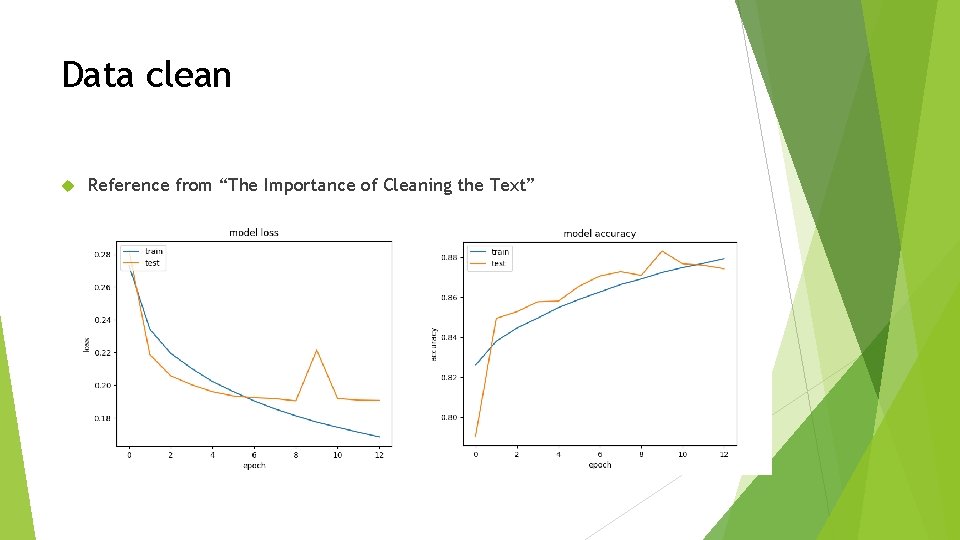

Data clean Reference from “The Importance of Cleaning the Text”

XGBOOST Machine Learning Models

Machine Learning Feature Engineering Data Preprocessing Predict

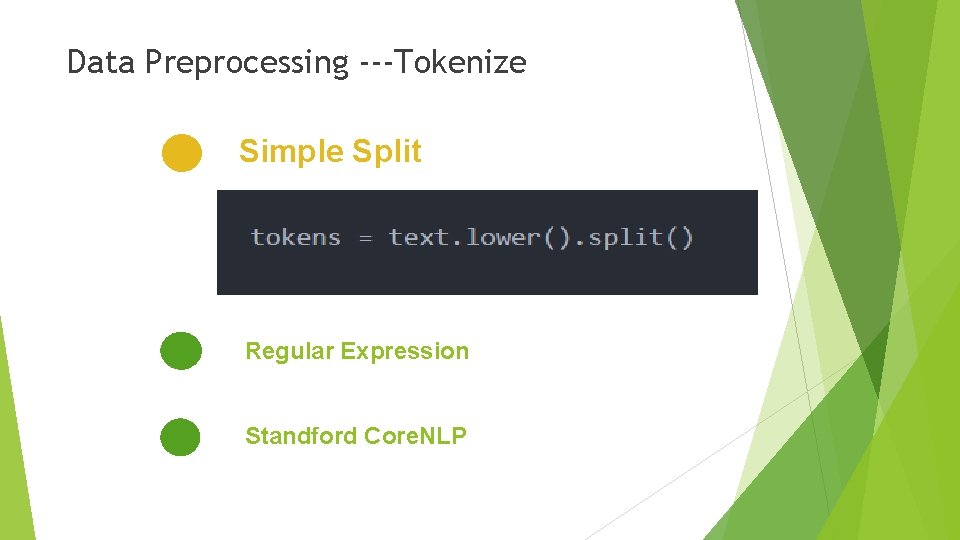

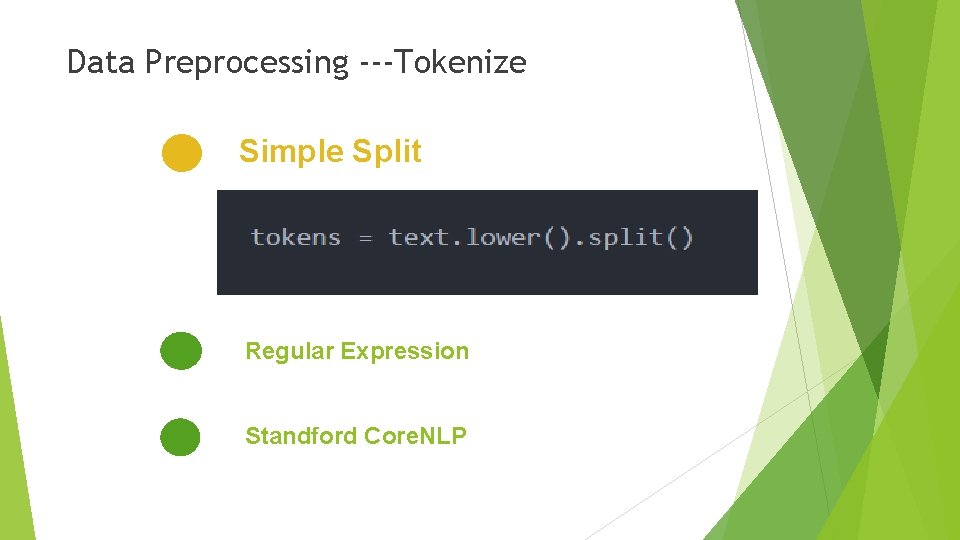

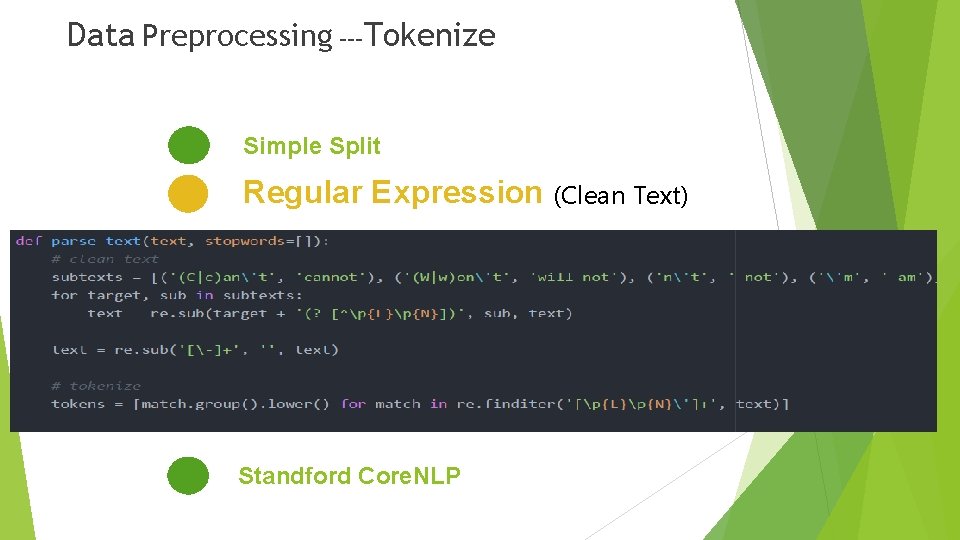

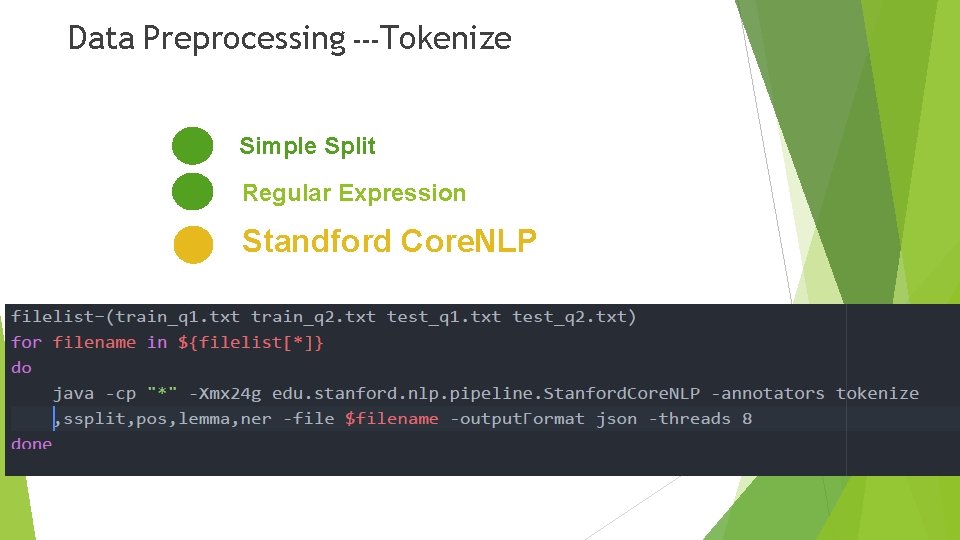

Data Preprocessing ---Tokenize Simple Split Regular Expression Standford Core. NLP

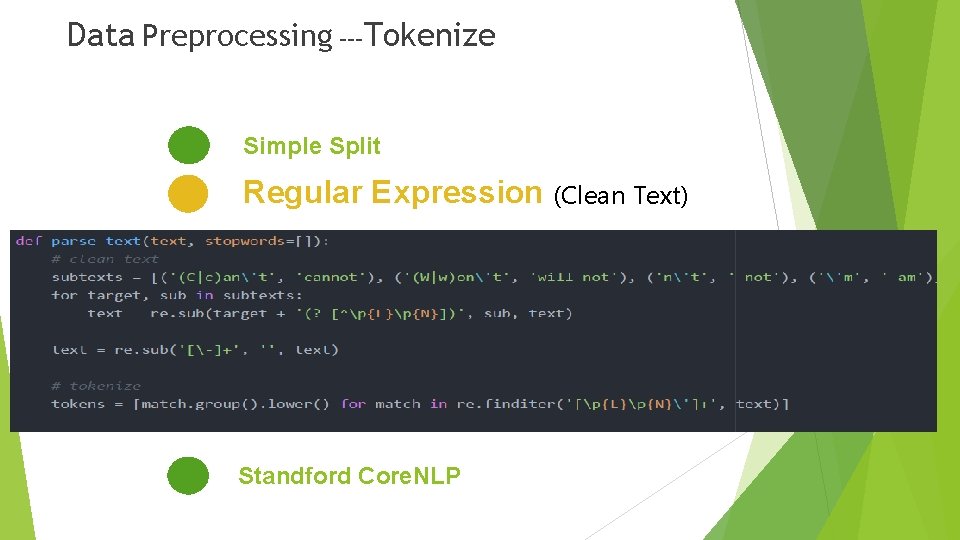

Data Preprocessing ---Tokenize Simple Split Regular Expression (Clean Text) Standford Core. NLP

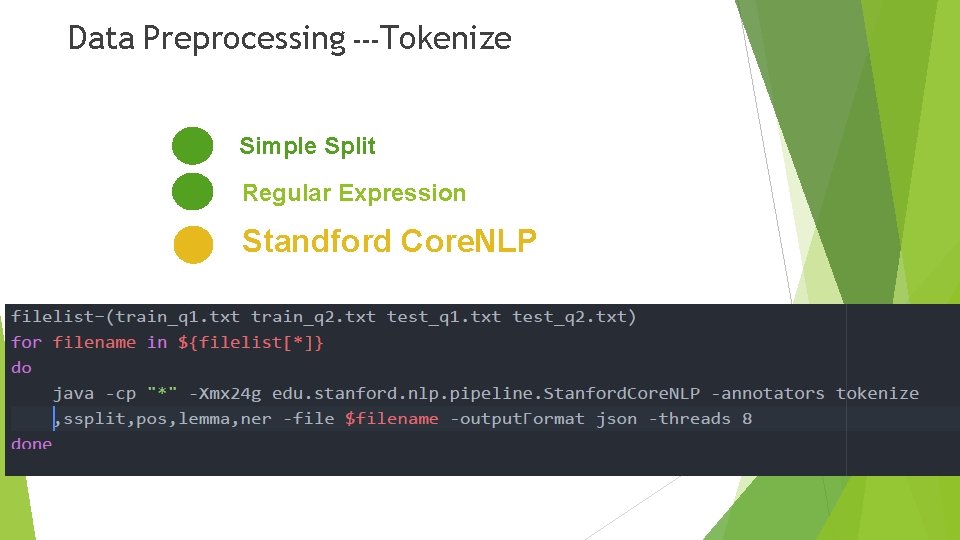

Data Preprocessing ---Tokenize Simple Split Regular Expression Standford Core. NLP

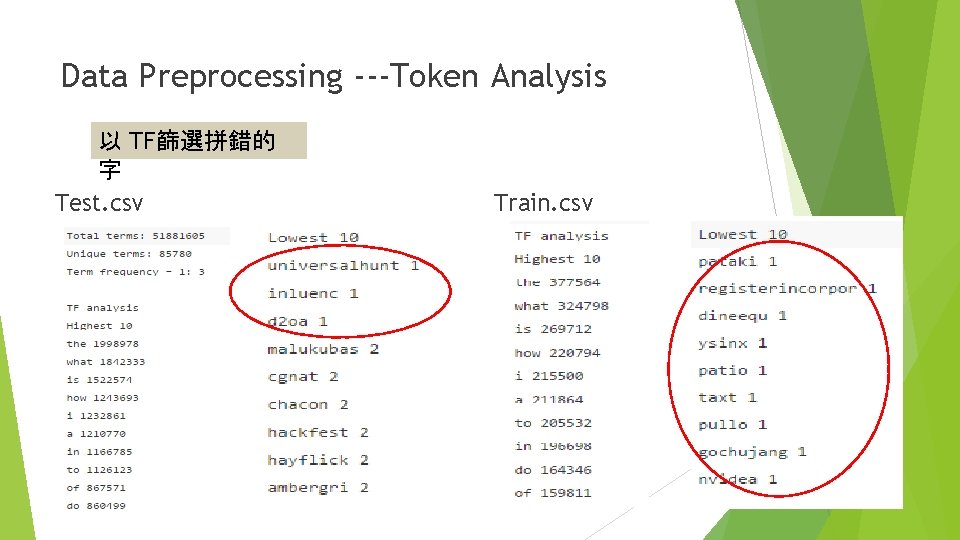

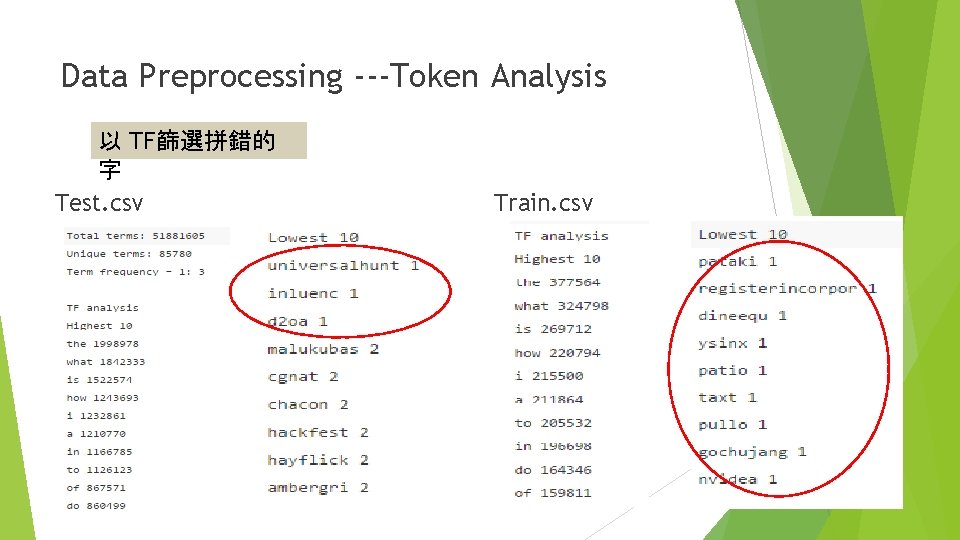

Data Preprocessing ---Token Analysis 以 TF篩選拼錯的 字 Test. csv Train. csv

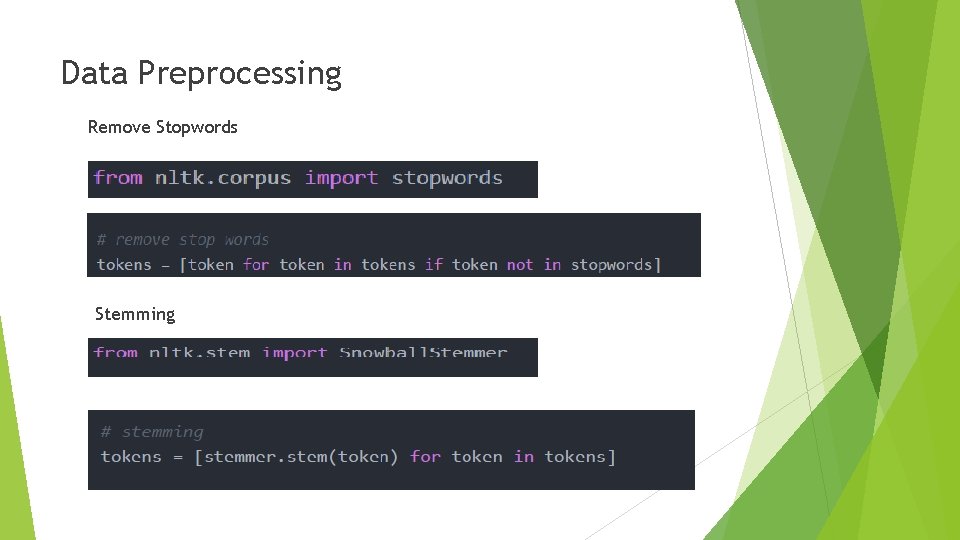

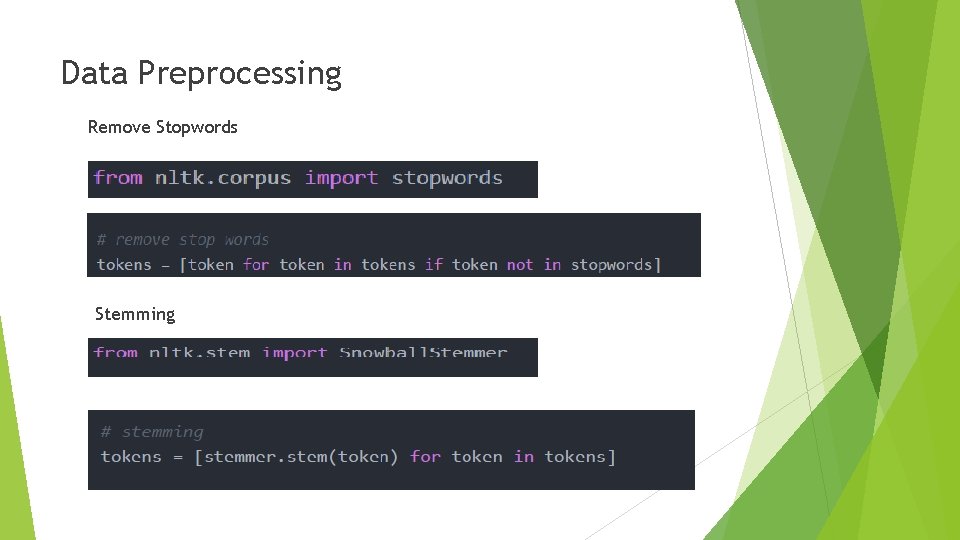

Data Preprocessing Remove Stopwords Stemming

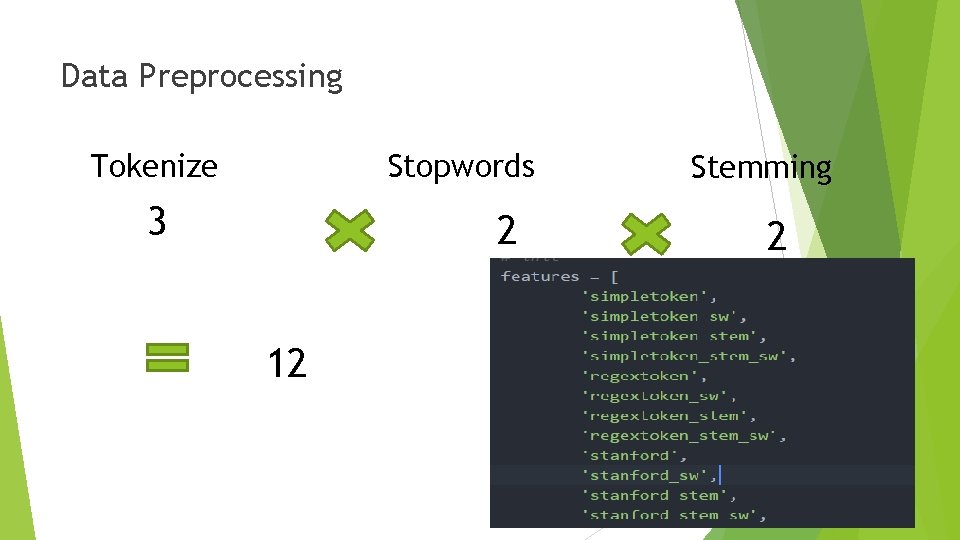

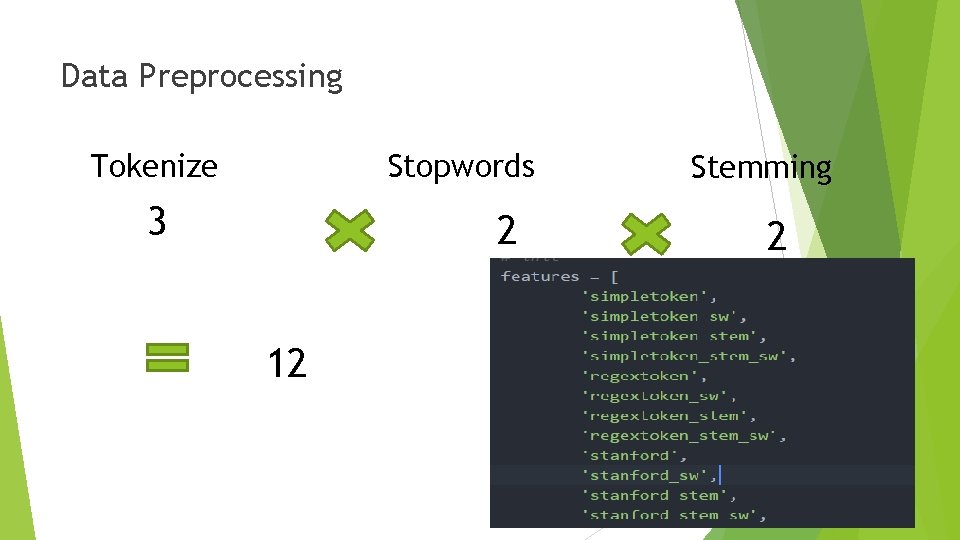

Data Preprocessing Tokenize Stopwords 3 2 12 Stemming 2

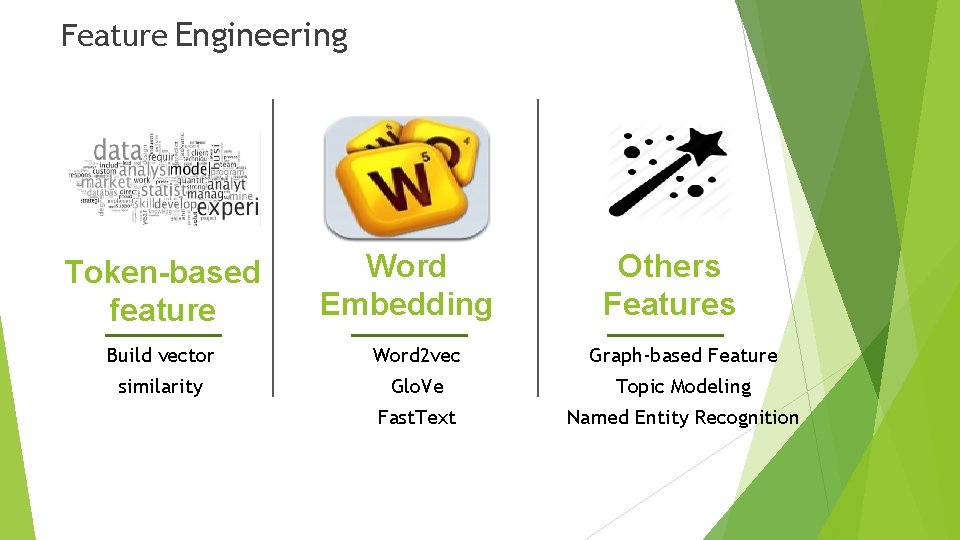

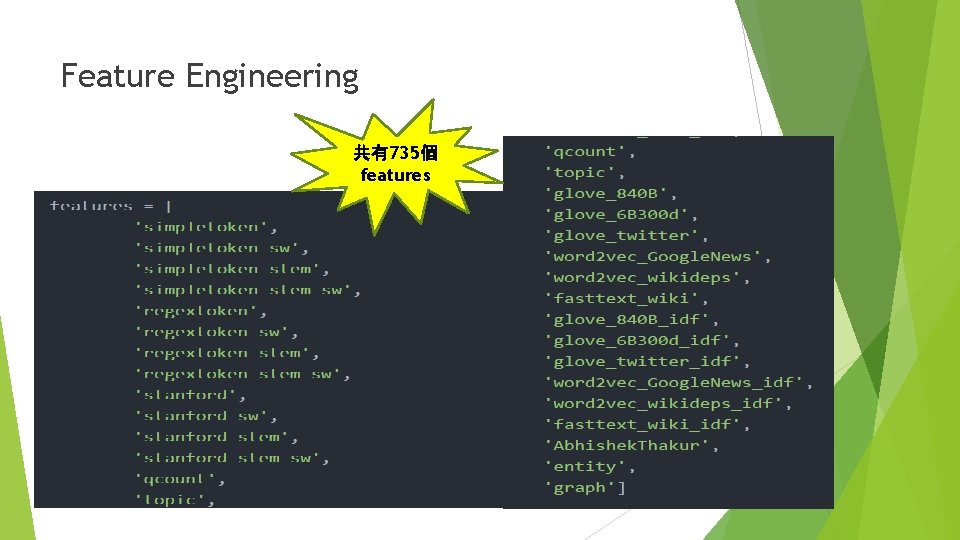

Feature Engineering Token-based feature Word Embedding Others Features Build vector Word 2 vec Graph-based Feature similarity Glo. Ve Topic Modeling Fast. Text Named Entity Recognition

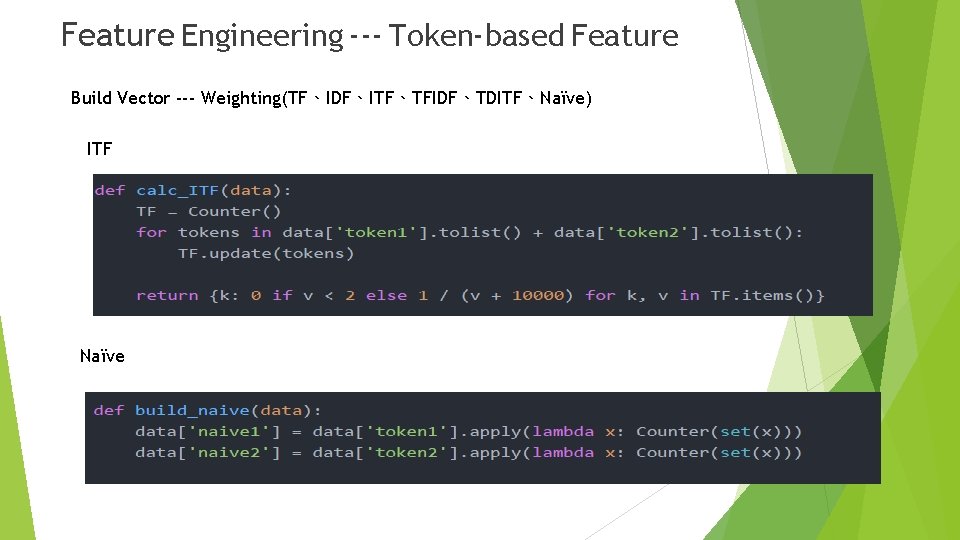

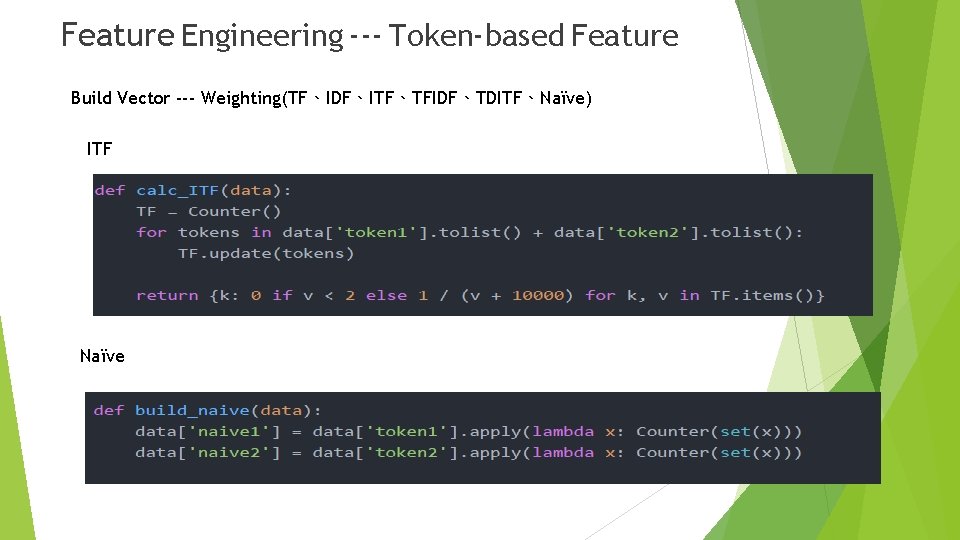

Feature Engineering --- Token-based Feature Build Vector --- Weighting(TF、IDF、ITF、TFIDF、TDITF、Naïve) ITF Naïve

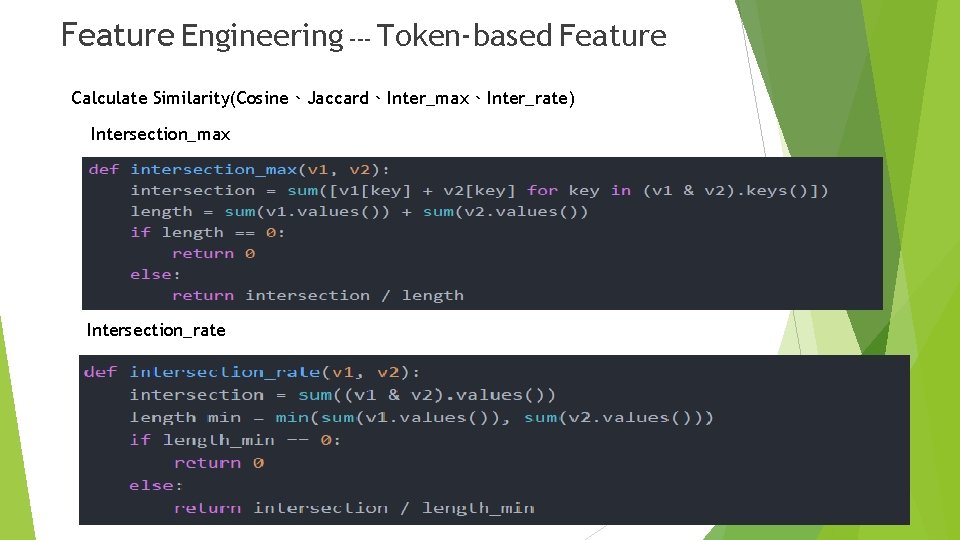

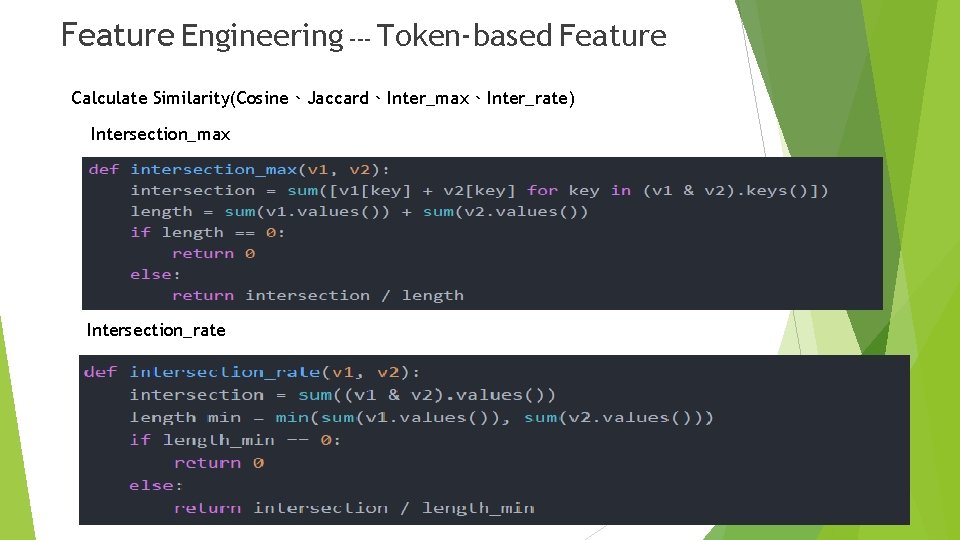

Feature Engineering --- Token-based Feature Calculate Similarity(Cosine、Jaccard、Inter_max、Inter_rate) Intersection_max Intersection_rate

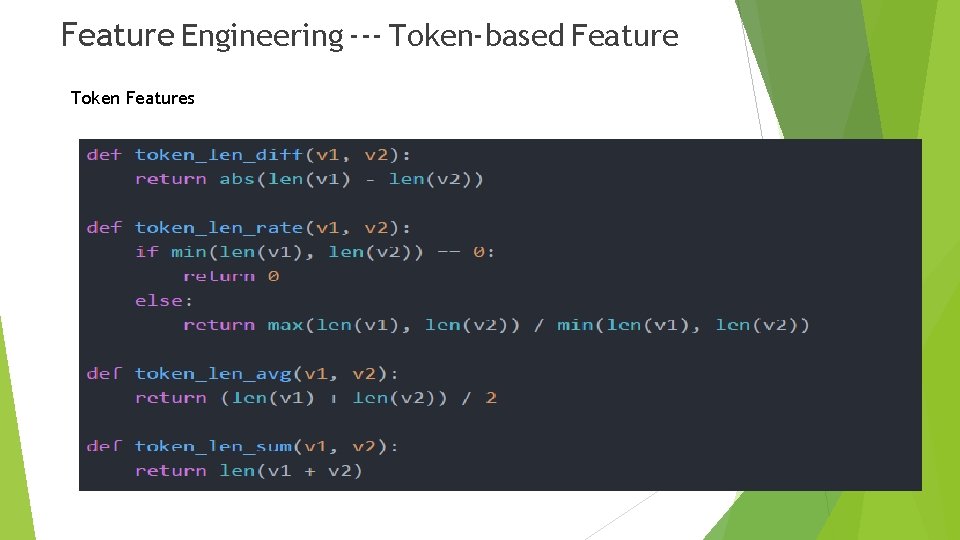

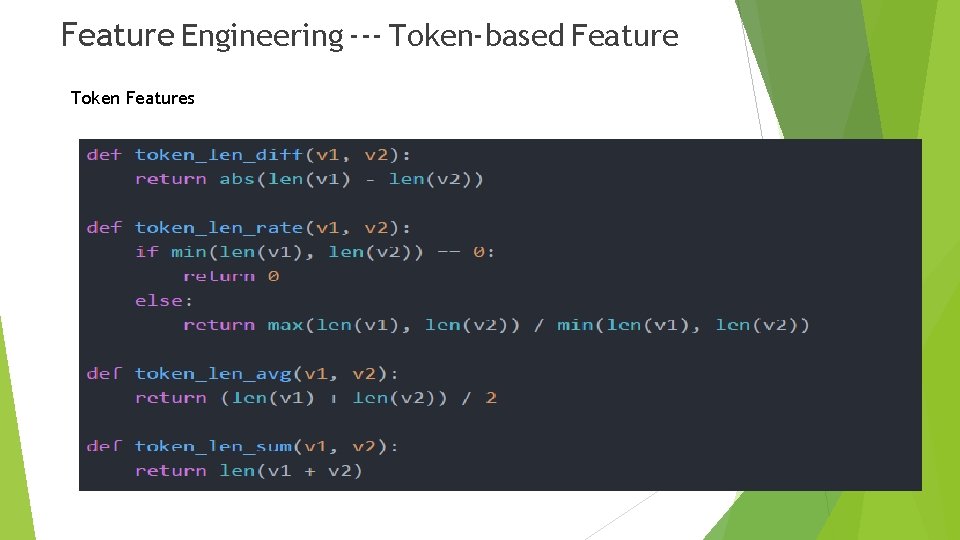

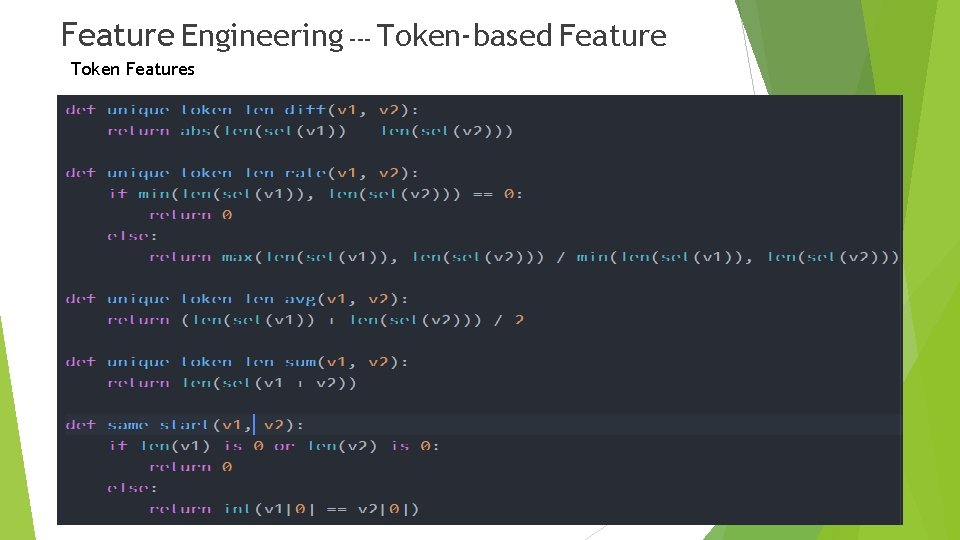

Feature Engineering --- Token-based Feature Token Features

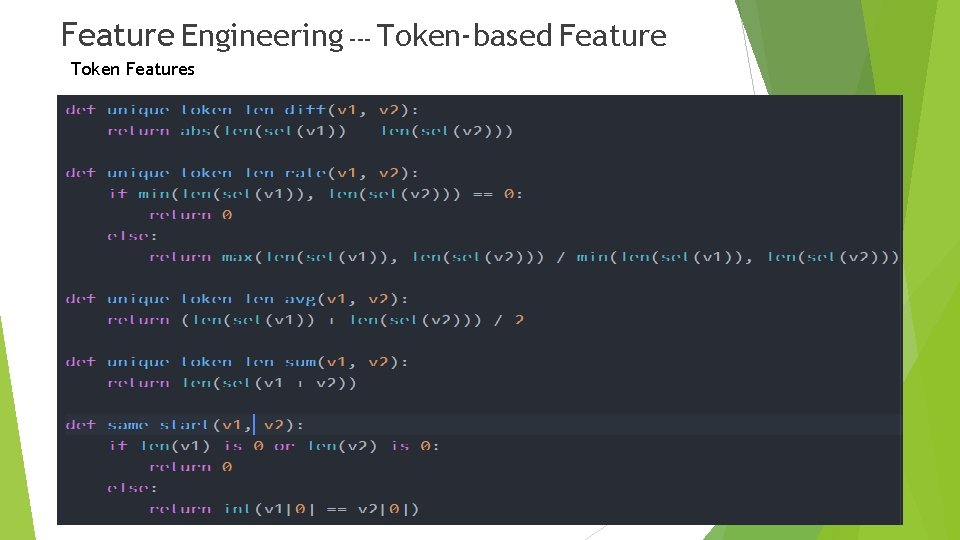

Feature Engineering --- Token-based Feature Token Features

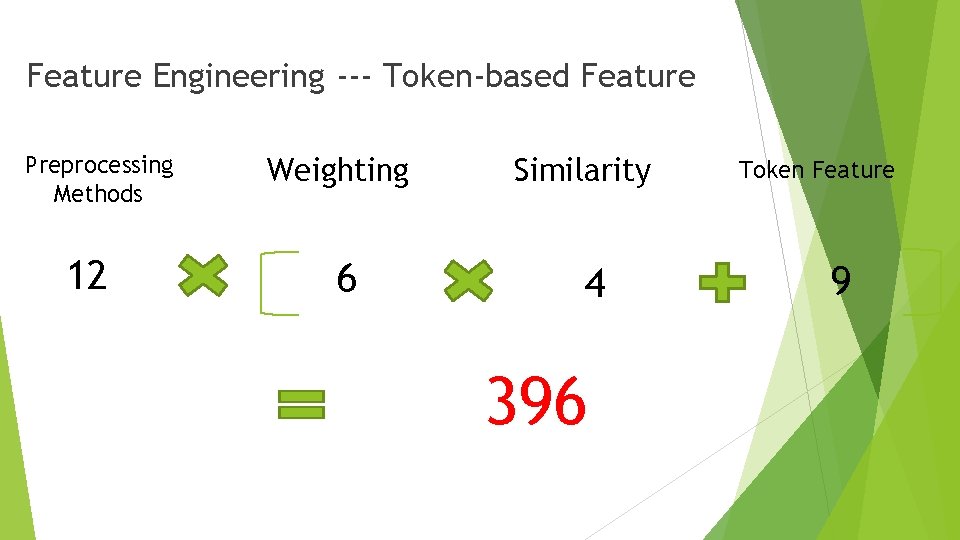

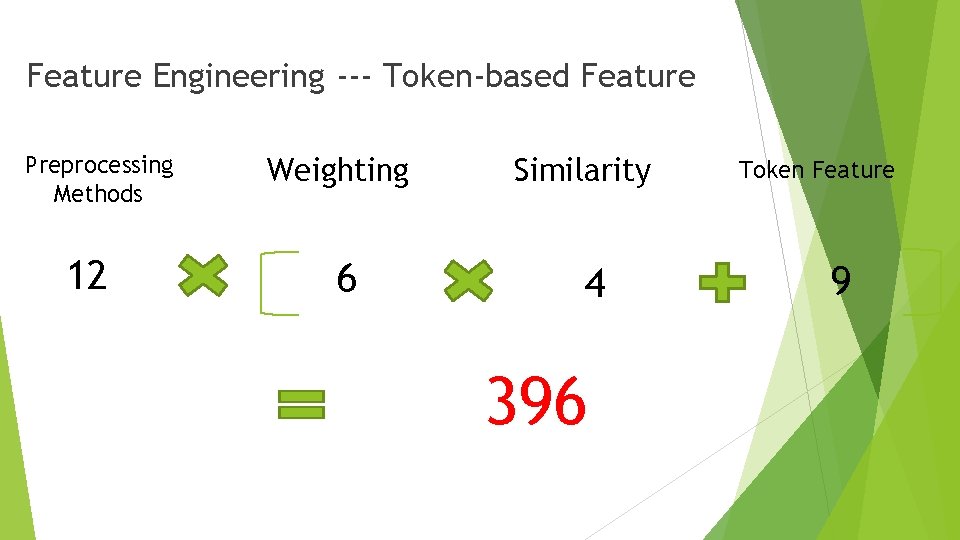

Feature Engineering --- Token-based Feature Preprocessing Methods 12 Weighting 6 Similarity 4 396 Token Feature 9

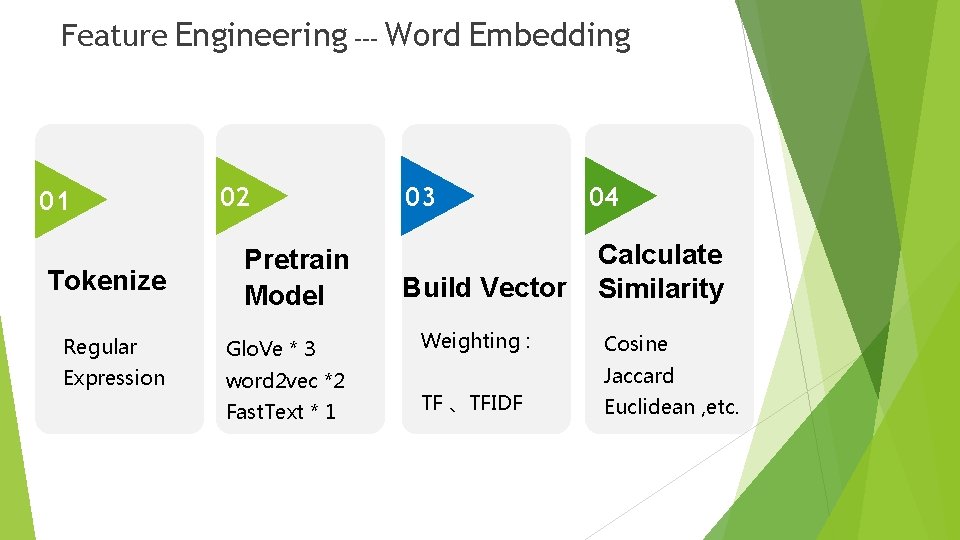

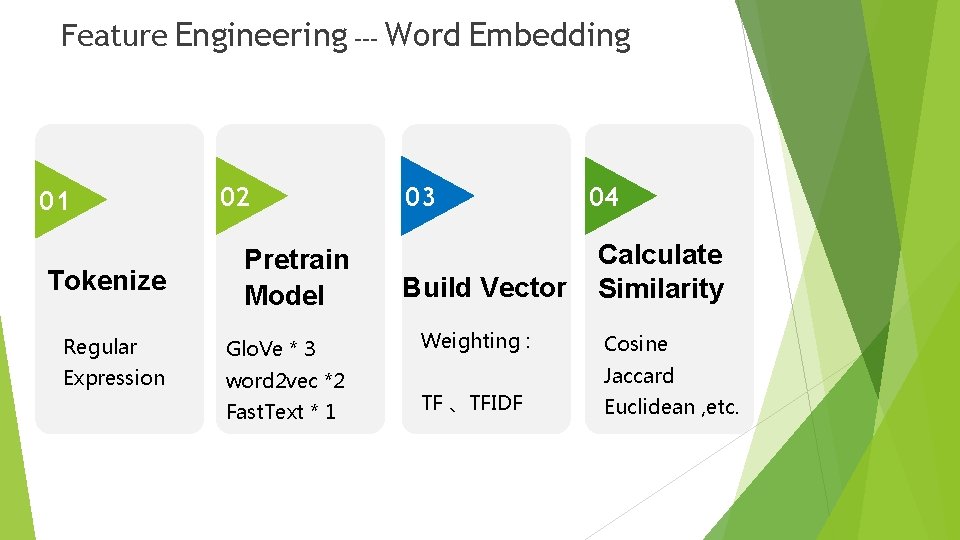

Feature Engineering --- Word Embedding 01 01 Tokenize 02 Pretrain Model Regular Glo. Ve * 3 Expression word 2 vec *2 Fast. Text * 1 03 Build Vector Weighting : 04 Calculate Similarity Cosine Jaccard TF 、TFIDF Euclidean , etc.

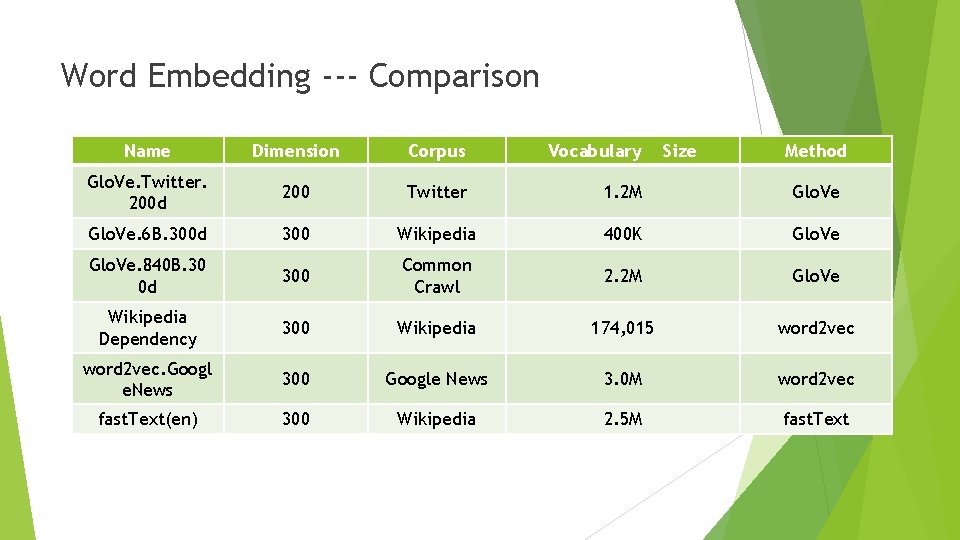

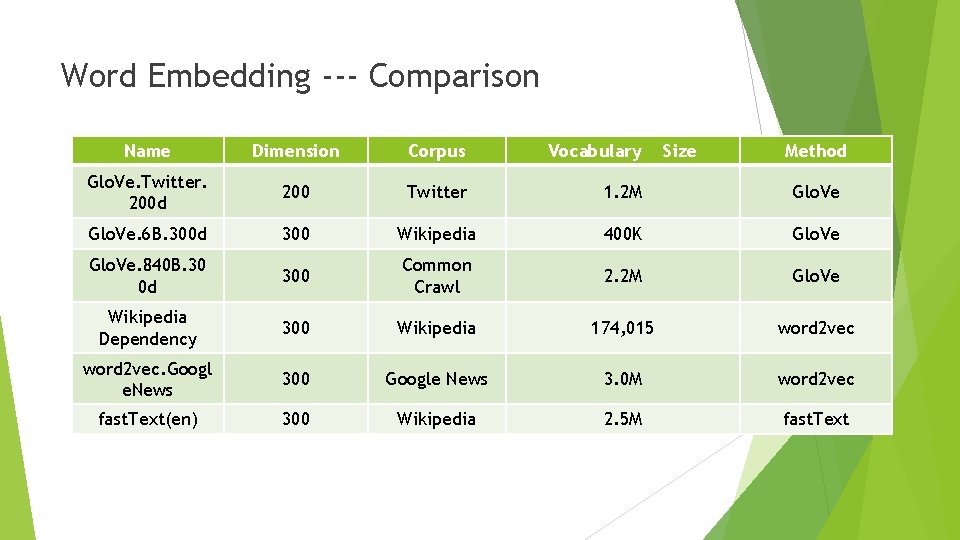

Word Embedding --- Comparison Name Dimension Corpus Vocabulary Size Method Glo. Ve. Twitter. 200 d 200 Twitter 1. 2 M Glo. Ve. 6 B. 300 d 300 Wikipedia 400 K Glo. Ve. 840 B. 30 0 d 300 Common Crawl 2. 2 M Glo. Ve Wikipedia Dependency 300 Wikipedia 174, 015 word 2 vec. Googl e. News 300 Google News 3. 0 M word 2 vec fast. Text(en) 300 Wikipedia 2. 5 M fast. Text

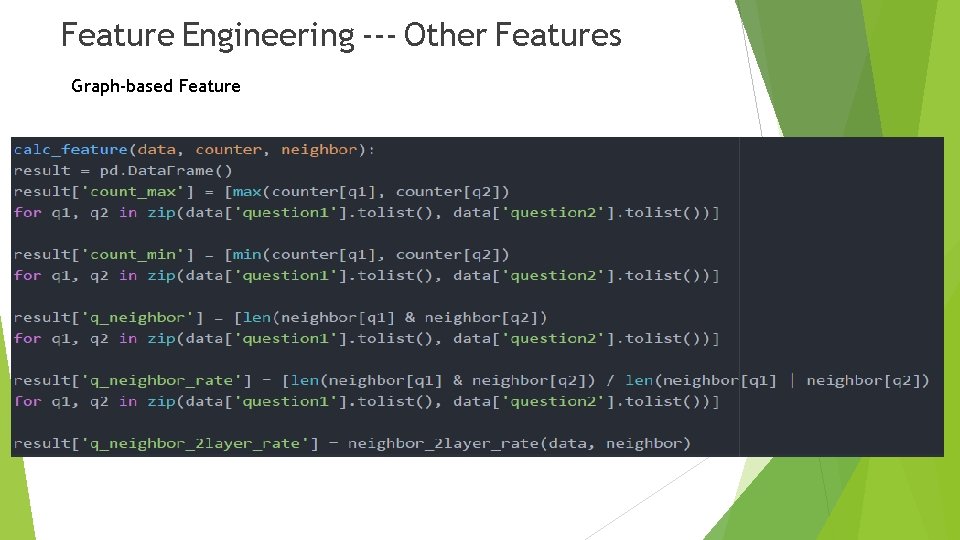

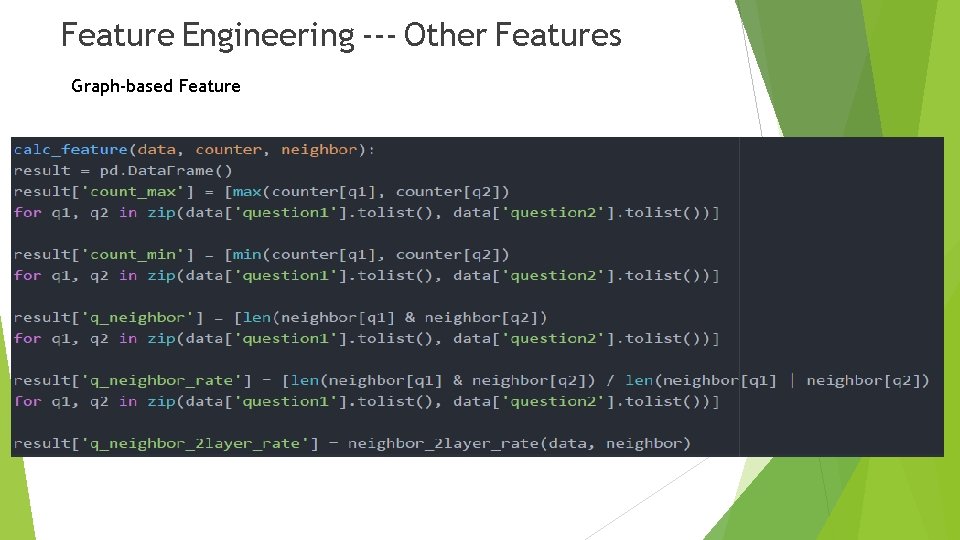

Feature Engineering --- Other Features Graph-based Feature

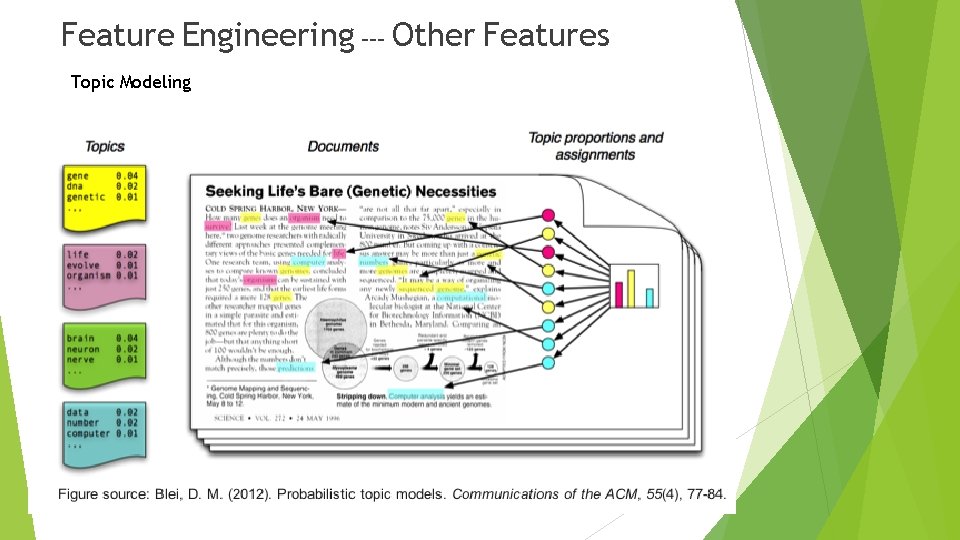

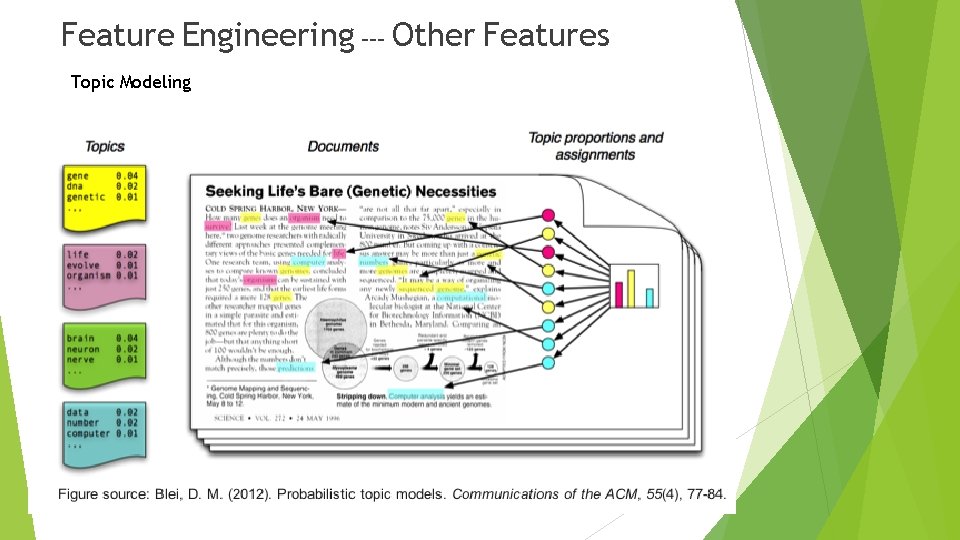

Feature Engineering --- Other Features Topic Modeling

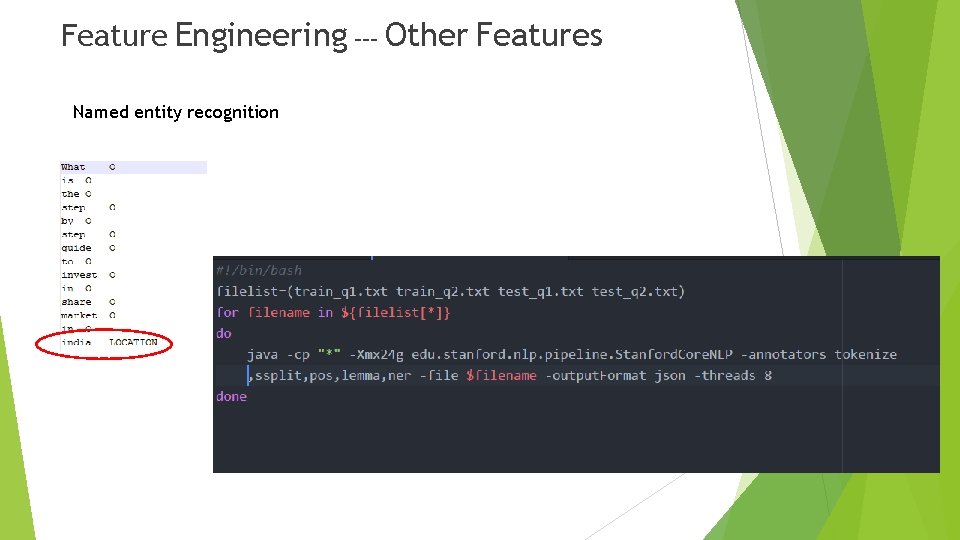

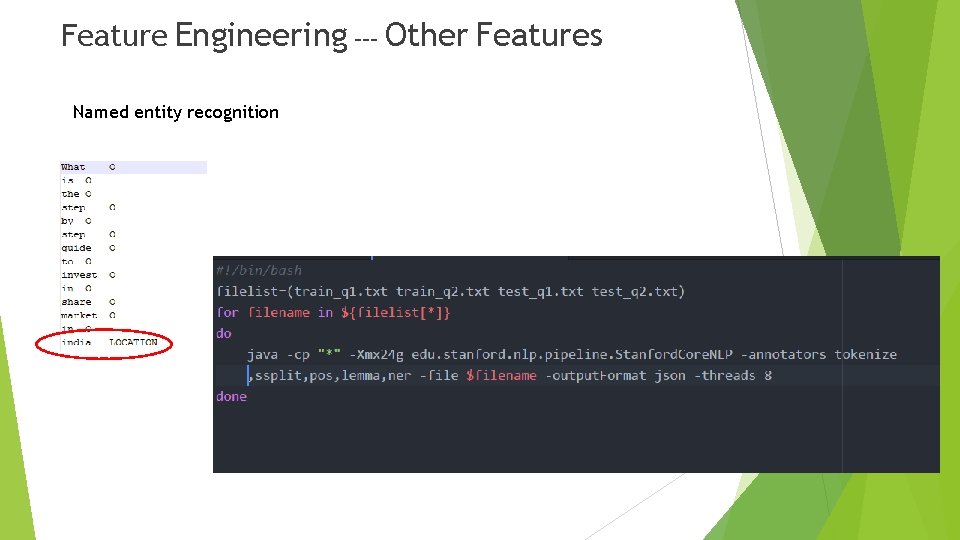

Feature Engineering --- Other Features Named entity recognition

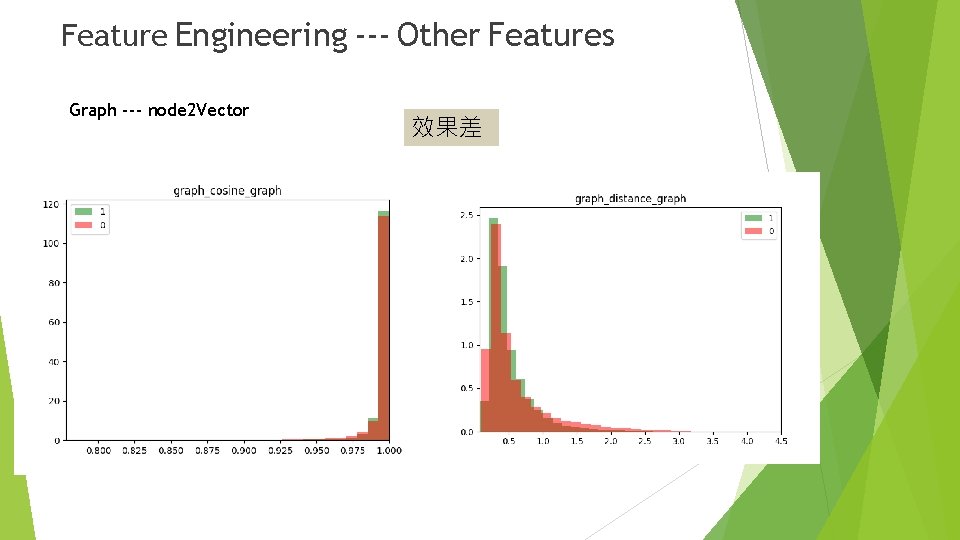

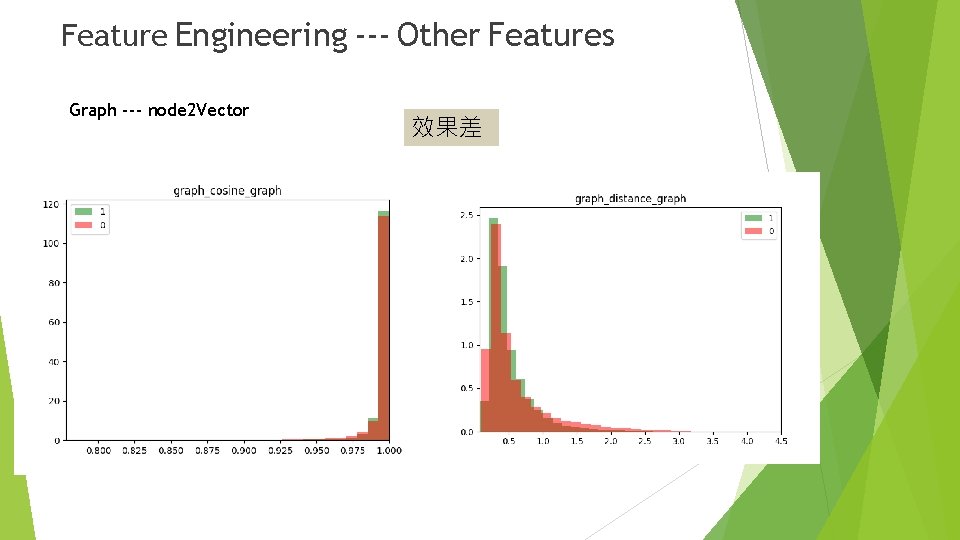

Feature Engineering --- Other Features Graph --- node 2 Vector 效果差

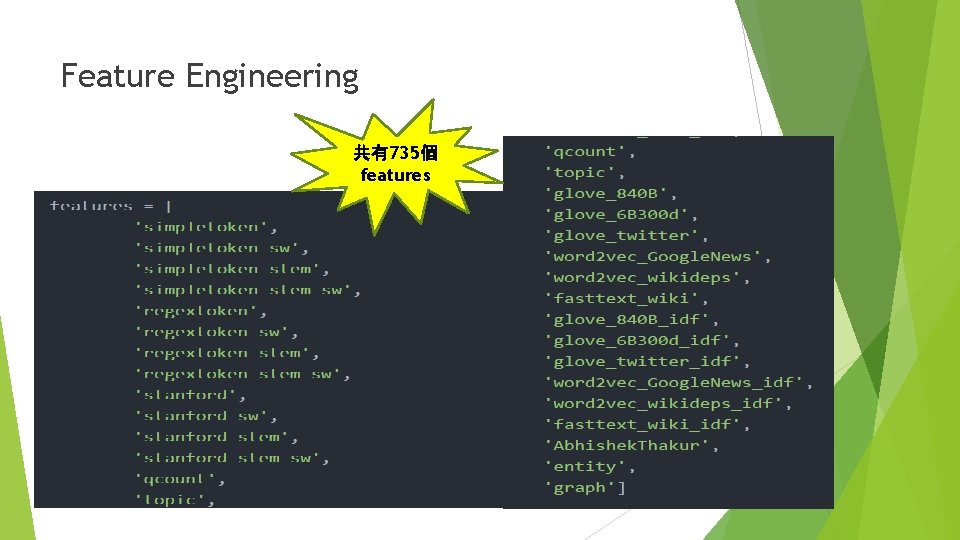

Feature Engineering 共有735個 features

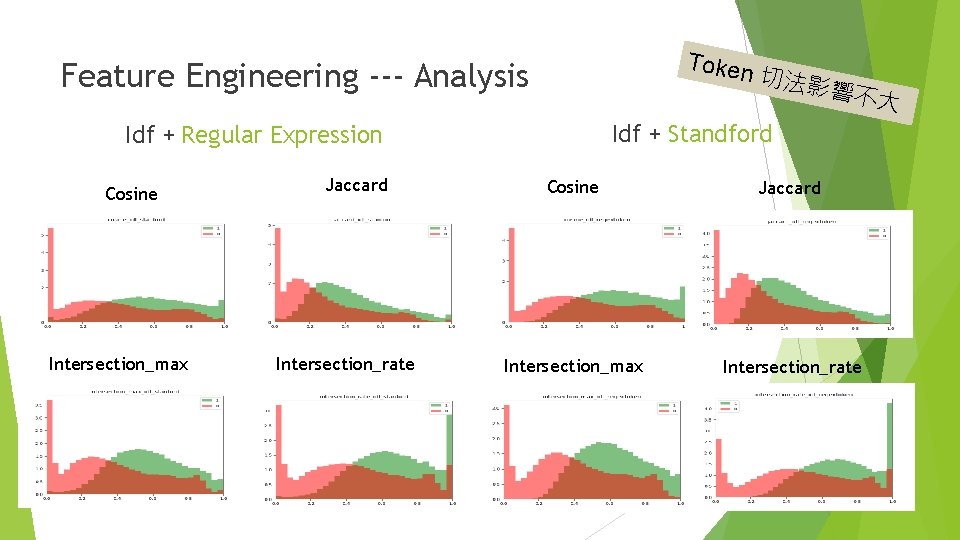

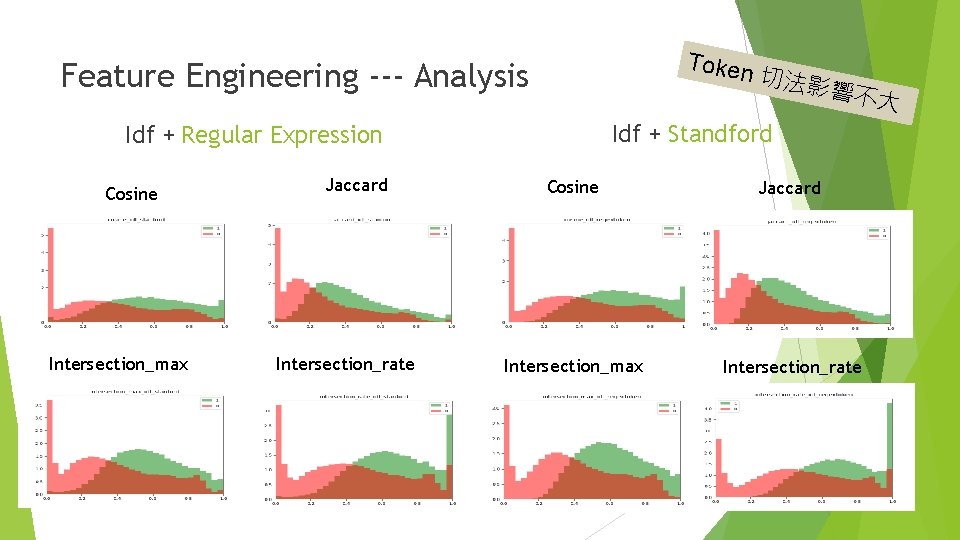

Token Feature Engineering --- Analysis Intersection_max Jaccard Intersection_rate 響不大 Idf + Standford Idf + Regular Expression Cosine 切法影 Cosine Jaccard Intersection_max Intersection_rate

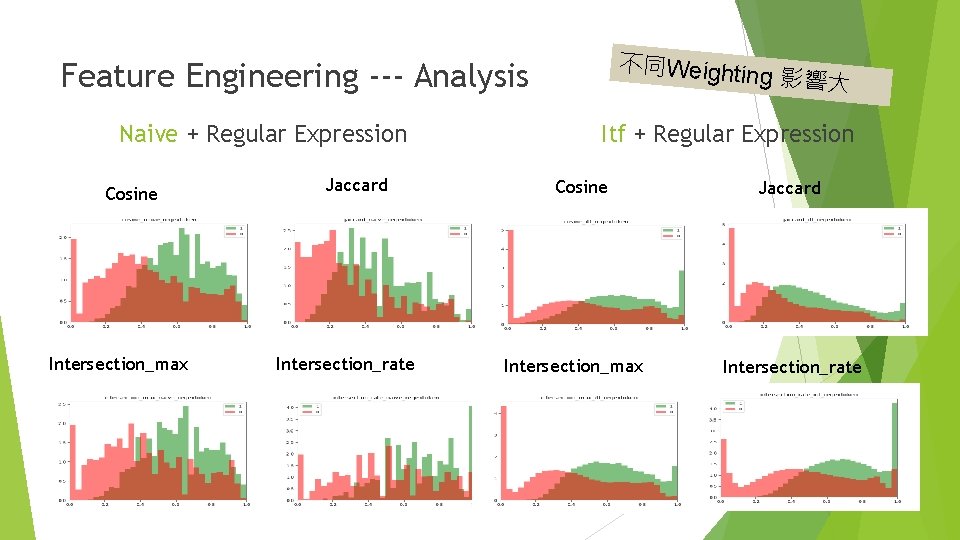

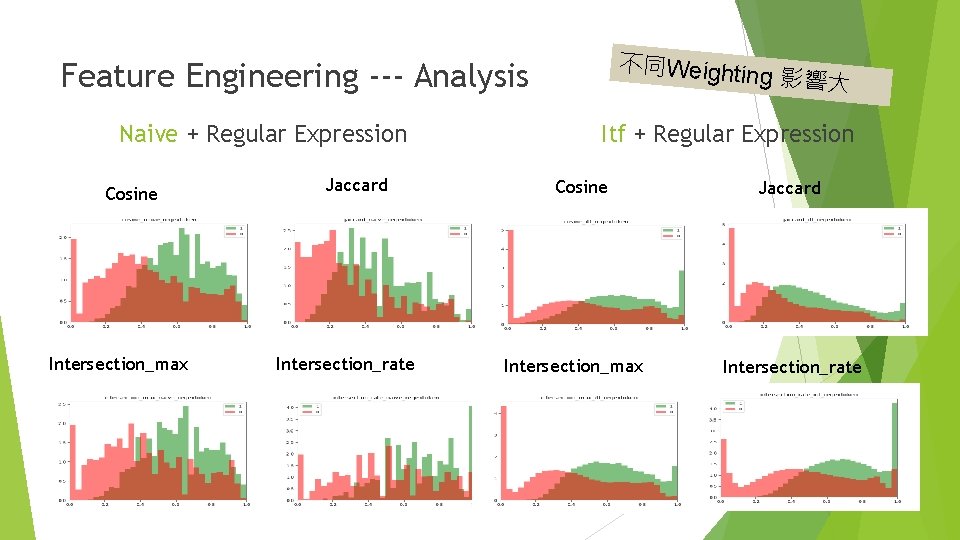

不同Weightin Feature Engineering --- Analysis Naive + Regular Expression Cosine Intersection_max Jaccard Intersection_rate g 影響大 Itf + Regular Expression Cosine Intersection_max Jaccard Intersection_rate

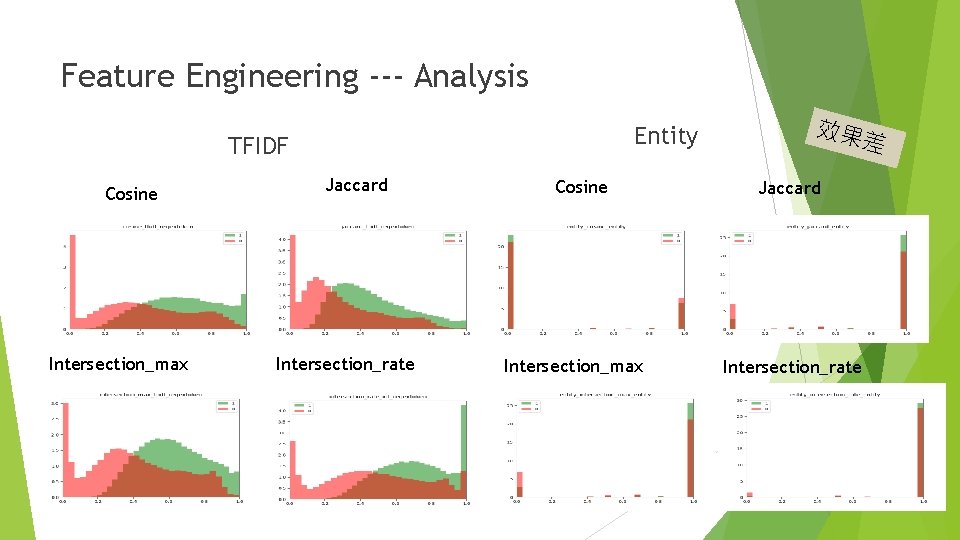

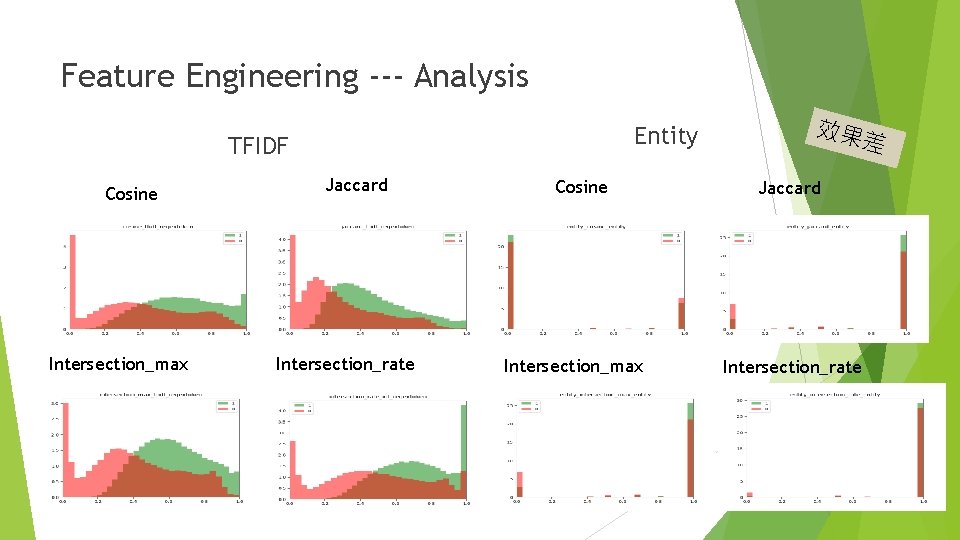

Feature Engineering --- Analysis Entity TFIDF Cosine Intersection_max Jaccard Intersection_rate Cosine Intersection_max 效果 差 Jaccard Intersection_rate

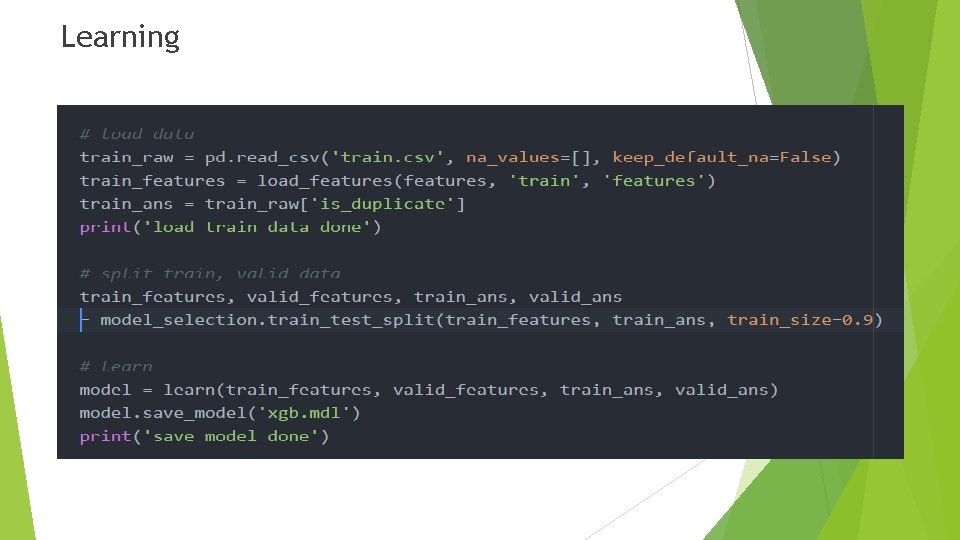

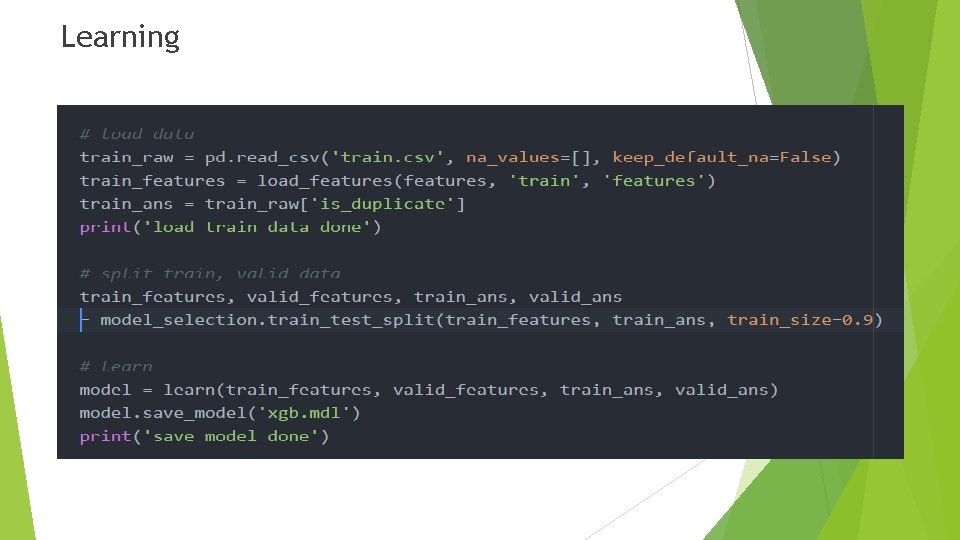

Learning

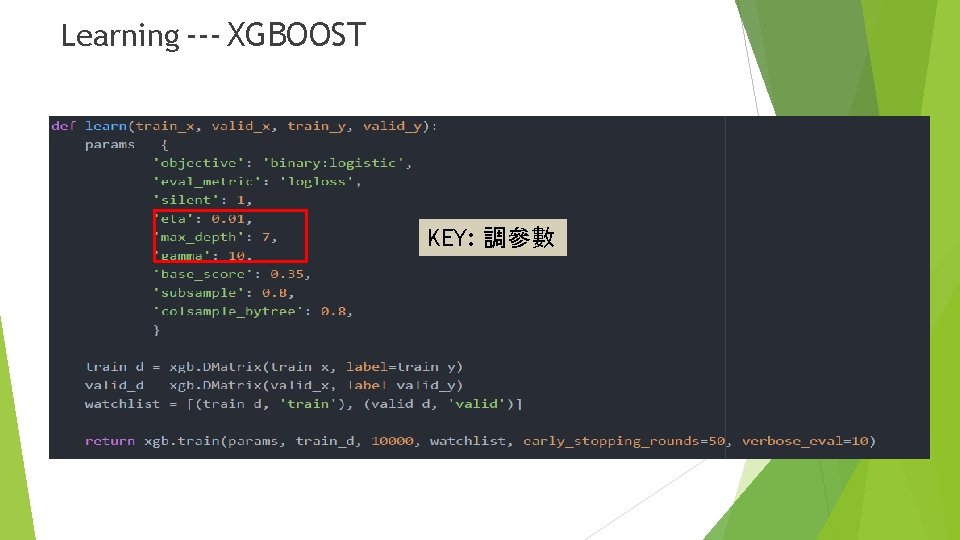

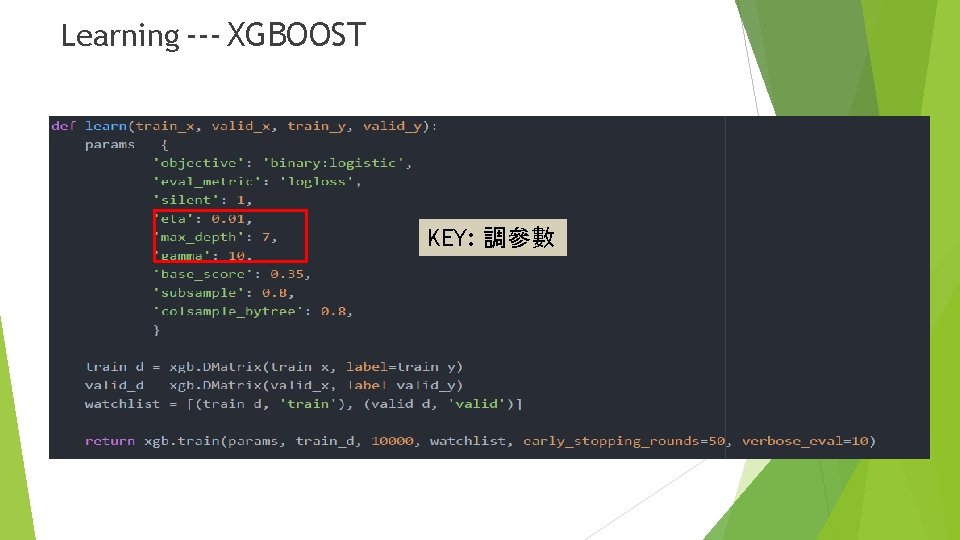

Learning --- XGBOOST KEY: 調參數

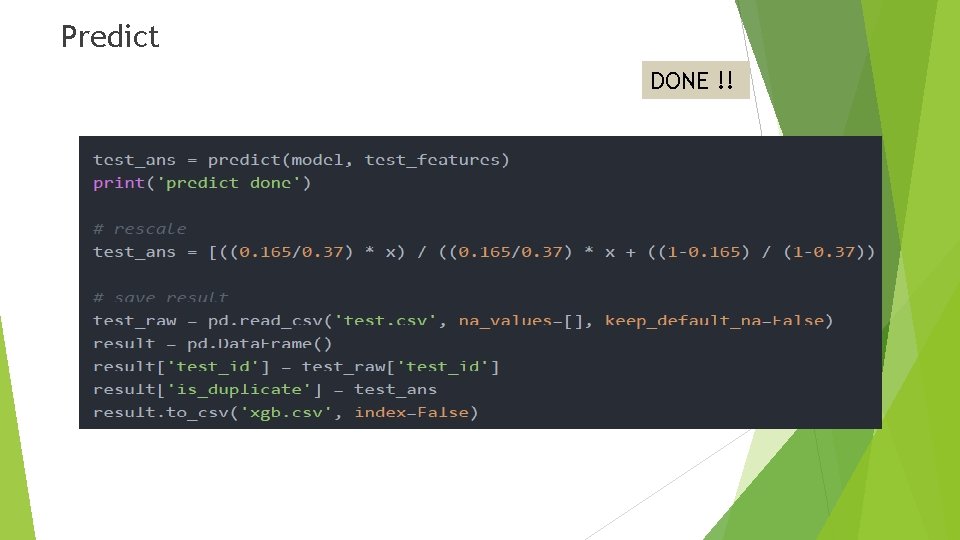

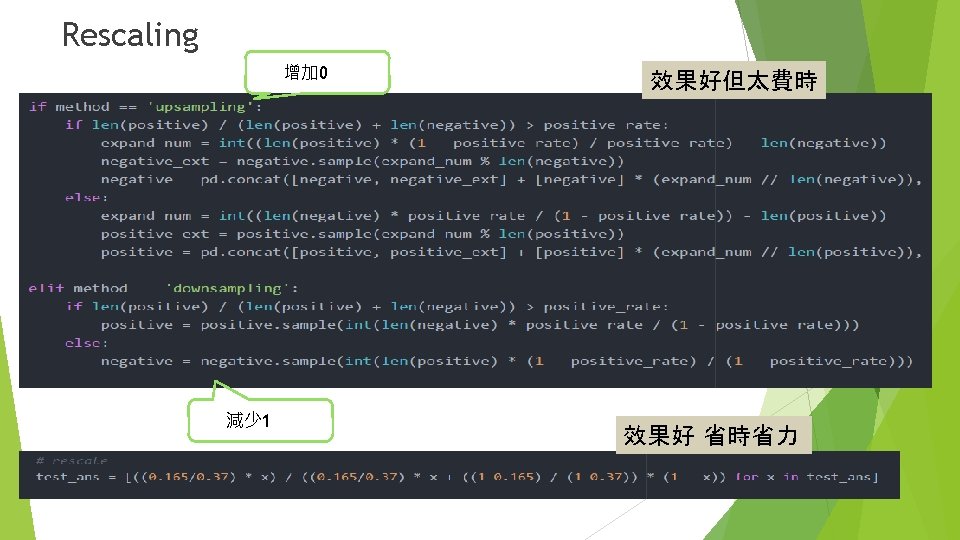

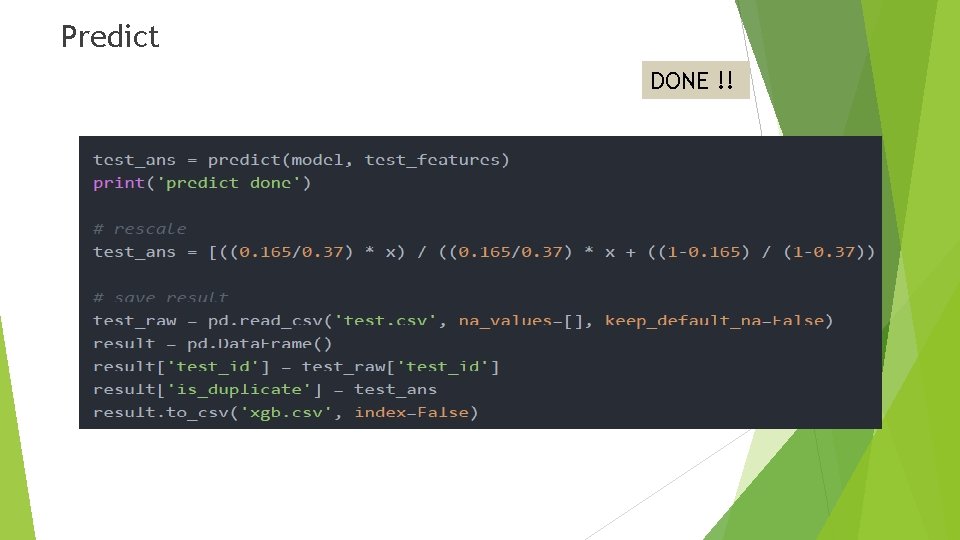

Predict DONE !!

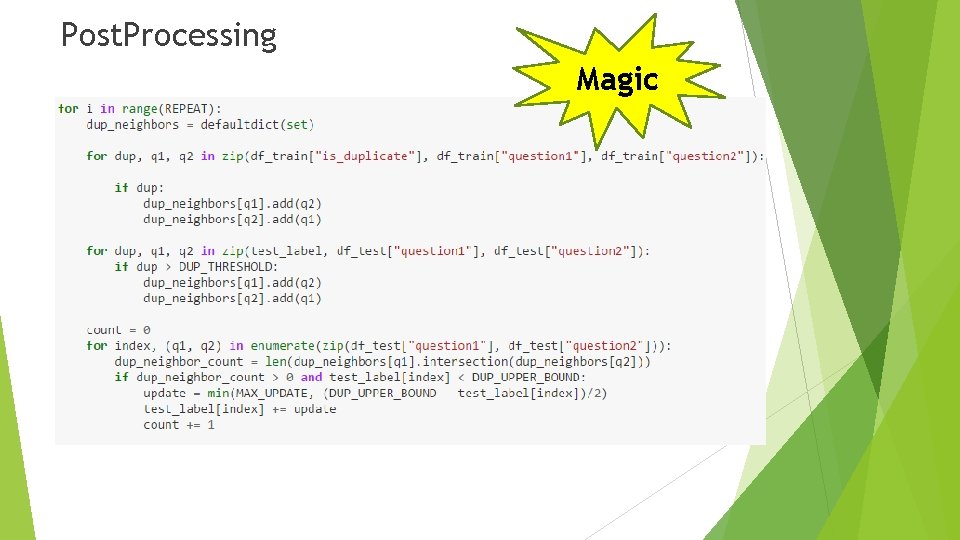

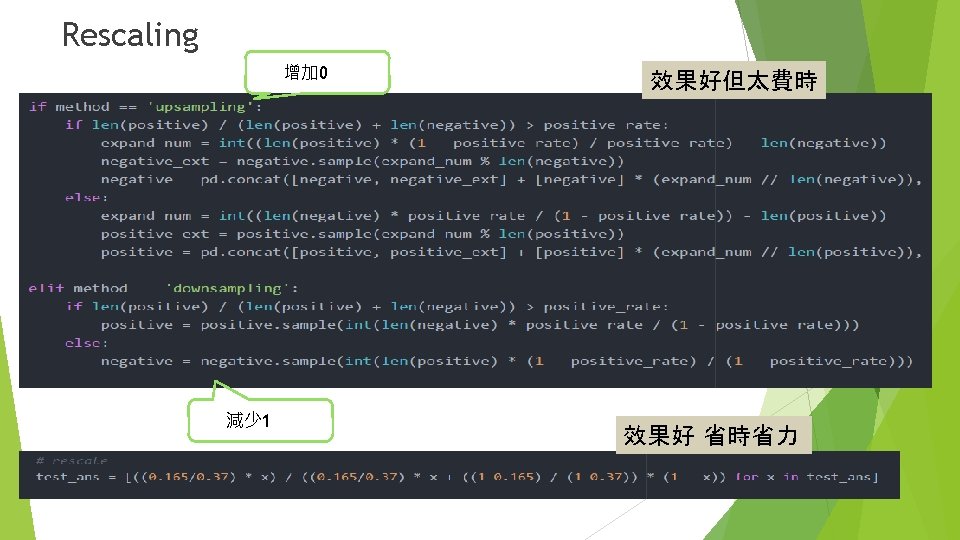

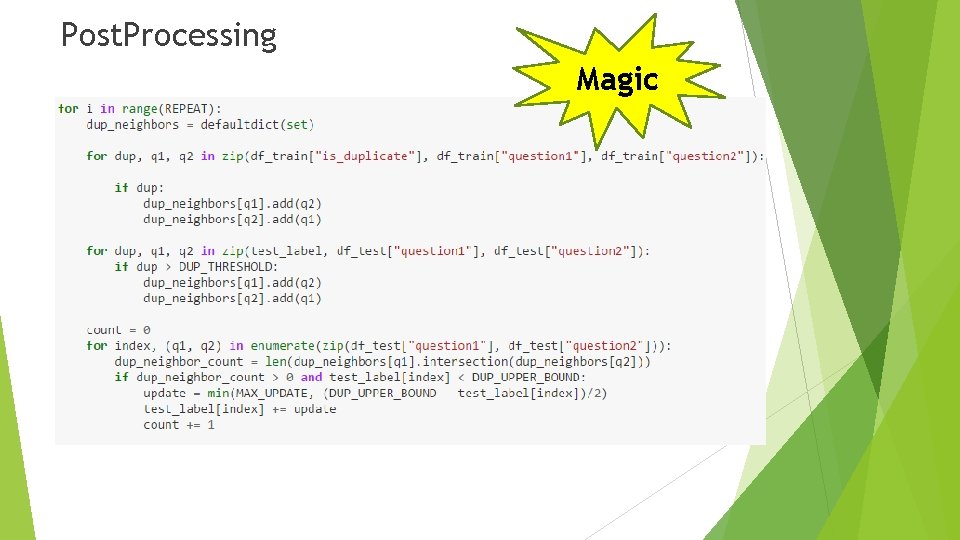

Post. Processing Magic

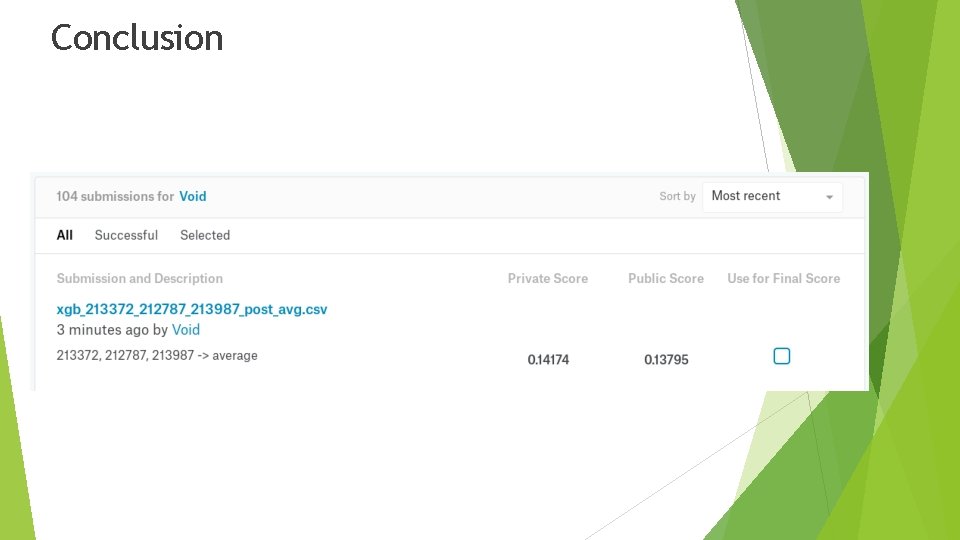

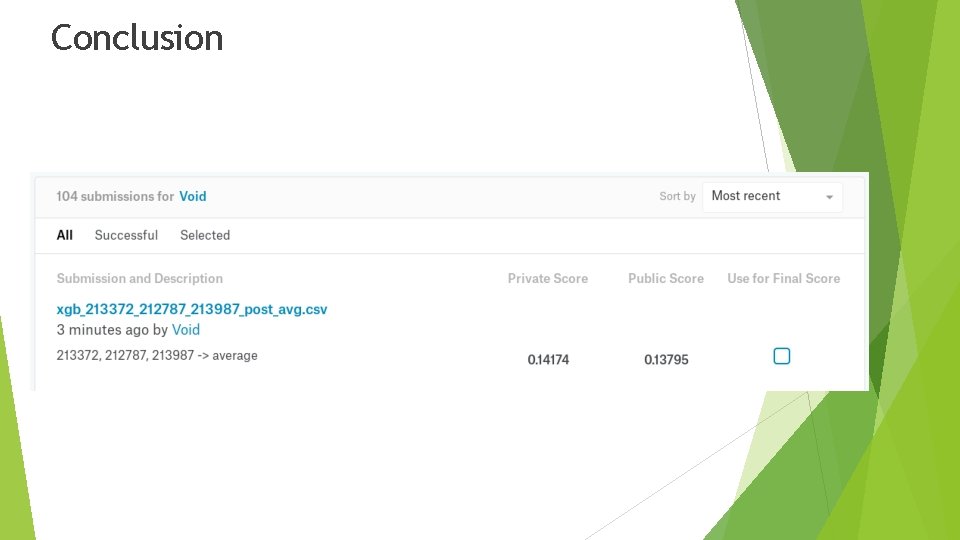

Conclusion

THANKS FOR LISTENING