Outline for Today
• Objectives:
  – To introduce mechanisms for synchronization
• Administrative details:

Hardware Assistance
• Most modern architectures provide some support for building synchronization: atomic read-modify-write instructions.
• Example: test-and-set (loc, reg) [ sets bit to 1 in the new value of loc; returns old value of loc in reg ]
• Other examples: compare-and-swap, fetch-and-op
• [ ] notation means atomic

Linux Atomic Operations (Bitwise)
• No special data type – take pointer and bit number as arguments. Bit 0 is the least significant bit.
• Selected operations
  o set_bit
  o clear_bit
  o change_bit
  o test_bit
  o test_and_set_bit
  o test_and_clear_bit
  o test_and_change_bit

Busywaiting with Test-and-Set
• Declare a shared memory location to represent a busyflag on the critical section we are trying to protect.
• enter_region (or acquiring the “lock”):

    waitloop: tsl busyflag, R0    // R0 ← busyflag; busyflag ← 1
              bnz R0, waitloop    // was it already set?

• exit region (or releasing the “lock”):

    busyflag ← 0

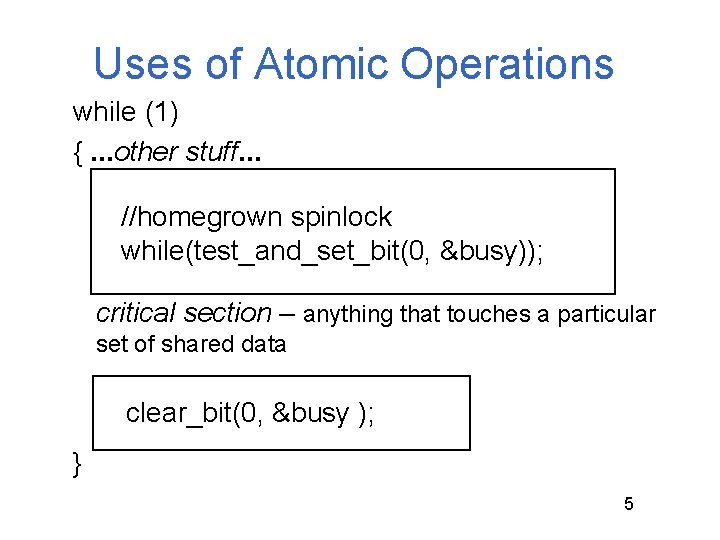

Uses of Atomic Operations

    while (1) {
        ... other stuff ...
        // homegrown spinlock
        while (test_and_set_bit(0, &busy))
            ;
        /* critical section: anything that touches a particular set of shared data */
        clear_bit(0, &busy);
    }
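The homegrown spinlock above uses kernel bit operations, which are not available in user space. As a rough user-space analogue, the sketch below builds the same busy-wait loop on the C11 `atomic_flag` type. The names `spin_acquire`, `spin_release`, `shared_counter`, and `run_spin_demo` are hypothetical, and the code assumes a C11 compiler with POSIX threads (compile with `-pthread`).

```c
#include <pthread.h>
#include <stdatomic.h>

/* Hypothetical user-space analogue of the busy flag; not kernel code. */
static atomic_flag busy = ATOMIC_FLAG_INIT;
static long shared_counter = 0;

static void spin_acquire(void) {
    /* test-and-set returns the OLD value: keep looping while it was already set */
    while (atomic_flag_test_and_set_explicit(&busy, memory_order_acquire))
        ;  /* busy-wait */
}

static void spin_release(void) {
    atomic_flag_clear_explicit(&busy, memory_order_release);
}

static void *worker(void *arg) {
    long iterations = *(long *)arg;
    for (long i = 0; i < iterations; i++) {
        spin_acquire();
        shared_counter++;          /* critical section */
        spin_release();
    }
    return NULL;
}

/* Runs up to 16 threads and returns the final counter value. */
long run_spin_demo(int nthreads, long iterations) {
    pthread_t tid[16];
    if (nthreads > 16)
        nthreads = 16;
    shared_counter = 0;
    for (int i = 0; i < nthreads; i++)
        pthread_create(&tid[i], NULL, worker, &iterations);
    for (int i = 0; i < nthreads; i++)
        pthread_join(tid[i], NULL);
    return shared_counter;
}
```

Calling `run_spin_demo(4, 10000)` should return 40000, because every increment happens while the flag is held. Without the acquire/release pair the increments could interleave and some updates would be lost.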

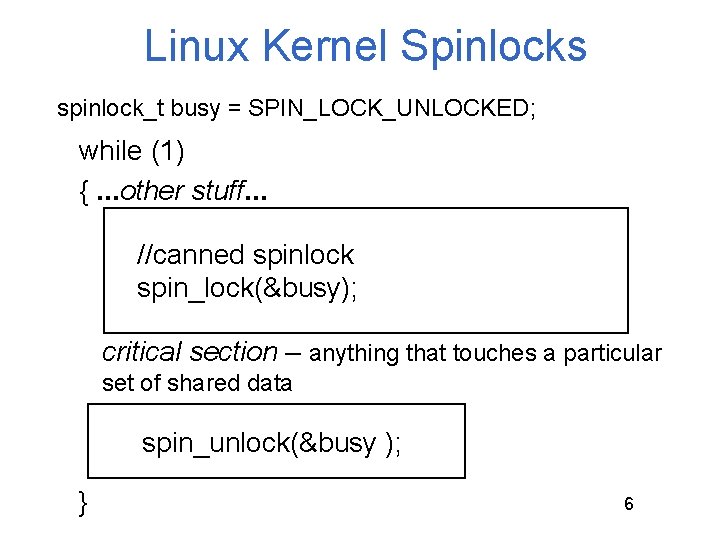

Linux Kernel Spinlocks

    spinlock_t busy = SPIN_LOCK_UNLOCKED;

    while (1) {
        ... other stuff ...
        // canned spinlock
        spin_lock(&busy);
        /* critical section: anything that touches a particular set of shared data */
        spin_unlock(&busy);
    }

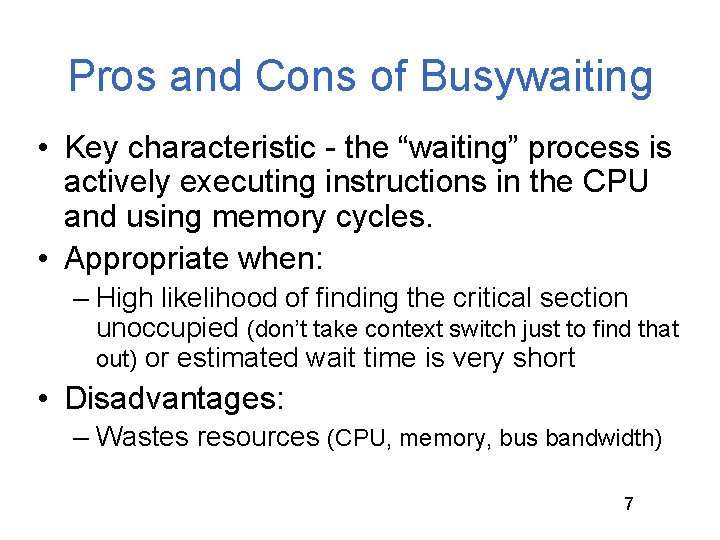

Pros and Cons of Busywaiting
• Key characteristic - the “waiting” process is actively executing instructions in the CPU and using memory cycles.
• Appropriate when:
  – High likelihood of finding the critical section unoccupied (don’t take a context switch just to find that out), or estimated wait time is very short
• Disadvantages:
  – Wastes resources (CPU, memory, bus bandwidth)

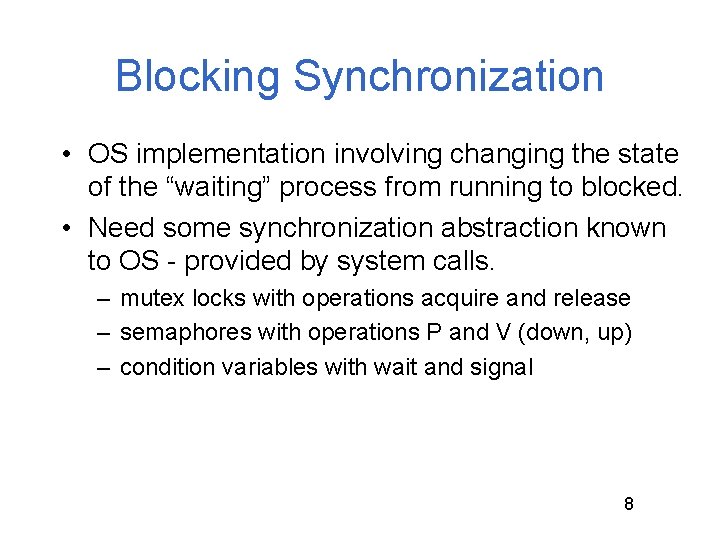

Blocking Synchronization
• OS implementation involving changing the state of the “waiting” process from running to blocked.
• Need some synchronization abstraction known to OS - provided by system calls.
  – mutex locks with operations acquire and release
  – semaphores with operations P and V (down, up)
  – condition variables with wait and signal

Template for Implementing Blocking Synchronization
• Associated with the lock is a memory location (busy) and a queue for waiting threads/processes.
• Acquire syscall:

    while (busy) { enqueue caller on lock’s queue }
    /* upon waking to nonbusy lock */
    busy = true;

• Release syscall:

    busy = false;
    /* wakeup */ move any waiting threads to Ready queue

Pros and Cons of Blocking
• Waiting processes/threads don’t consume CPU cycles
• Appropriate: when the cost of a system call is justified by expected waiting time
  – High likelihood of contention for lock
  – Long critical sections
• Disadvantage: OS involvement -> overhead

Synchronization Primitives (Abstractions*)
*implementable by busywaiting or blocking

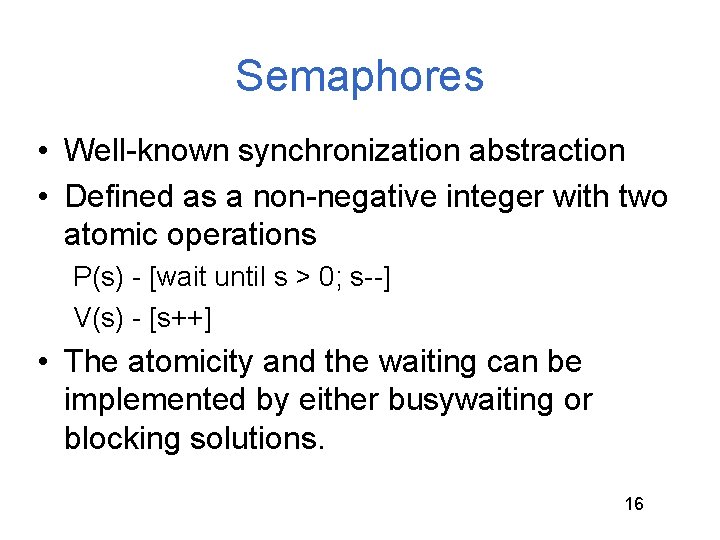

Semaphores
• Well-known synchronization abstraction
• Defined as a non-negative integer with two atomic operations
  – P(s): [wait until s > 0; s--]
  – V(s): [s++]
• The atomicity and the waiting can be implemented by either busywaiting or blocking solutions.

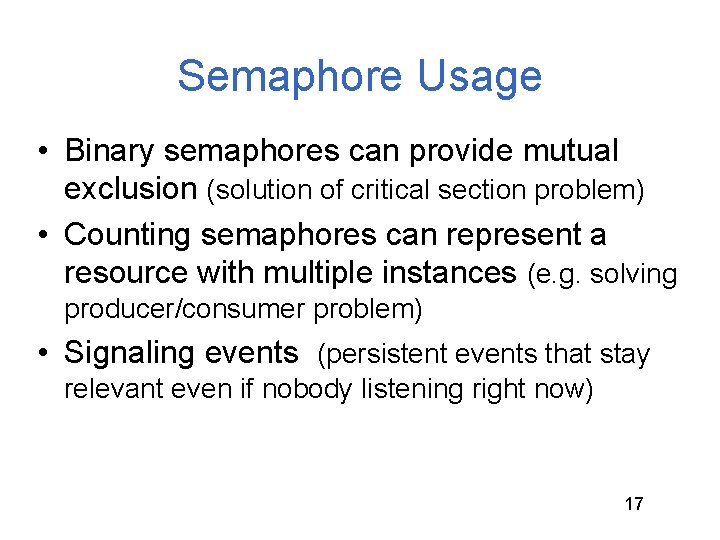

Semaphore Usage
• Binary semaphores can provide mutual exclusion (solution of critical section problem)
• Counting semaphores can represent a resource with multiple instances (e.g. solving producer/consumer problem)
• Signaling events (persistent events that stay relevant even if nobody is listening right now)

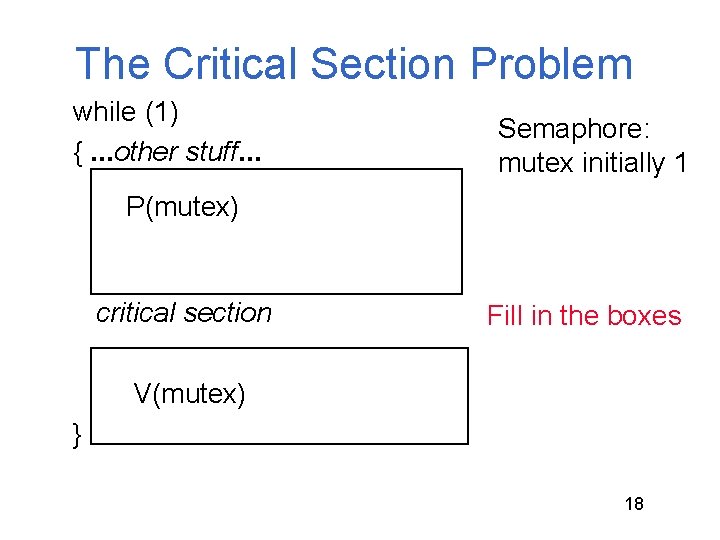

The Critical Section Problem
Semaphore: mutex initially 1
Fill in the boxes (the entry and exit steps):

    while (1) {
        ... other stuff ...
        P(mutex);
        critical section
        V(mutex);
    }
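As one possible user-space rendering of this pattern, the sketch below uses a POSIX unnamed semaphore initialized to 1, with `sem_wait` standing in for P and `sem_post` for V. The names `balance`, `depositor`, and `run_mutex_demo` are illustrative assumptions, and the code assumes a platform that supports `sem_init` (for example Linux, compiled with `-pthread`).

```c
#include <pthread.h>
#include <semaphore.h>

static sem_t mutex;          /* binary semaphore, initialized to 1 below */
static long balance = 0;     /* hypothetical shared data */

static void *depositor(void *arg) {
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        sem_wait(&mutex);    /* P(mutex) */
        balance++;           /* critical section */
        sem_post(&mutex);    /* V(mutex) */
    }
    return NULL;
}

/* Two threads each add per_thread to the shared balance; returns the total. */
long run_mutex_demo(long per_thread) {
    pthread_t a, b;
    balance = 0;
    sem_init(&mutex, 0, 1);  /* 0: shared between threads, initial value 1 */
    pthread_create(&a, NULL, depositor, &per_thread);
    pthread_create(&b, NULL, depositor, &per_thread);
    pthread_join(a, NULL);
    pthread_join(b, NULL);
    sem_destroy(&mutex);
    return balance;
}
```

With the semaphore in place, `run_mutex_demo(5000)` should return 10000. An initial value of 1 is what makes the semaphore behave as a lock: the first P succeeds and every other P waits until the matching V.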

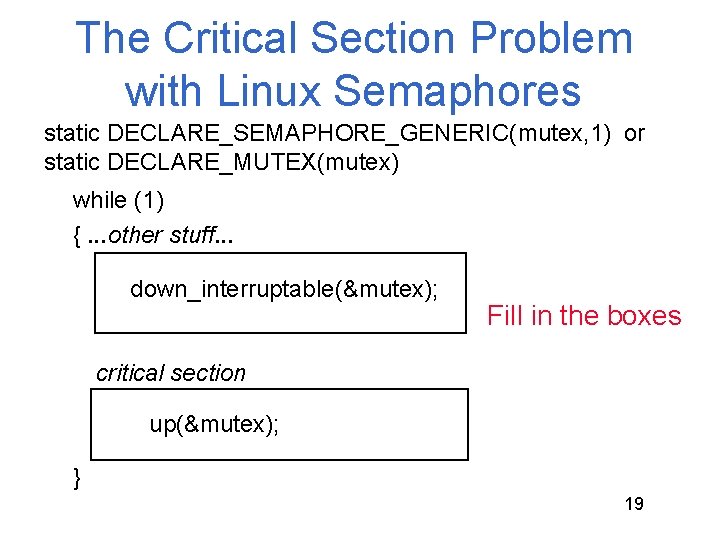

The Critical Section Problem with Linux Semaphores
Fill in the boxes (the entry and exit steps):

    static DECLARE_SEMAPHORE_GENERIC(mutex, 1);
    /* or */
    static DECLARE_MUTEX(mutex);

    while (1) {
        ... other stuff ...
        down_interruptible(&mutex);
        critical section
        up(&mutex);
    }

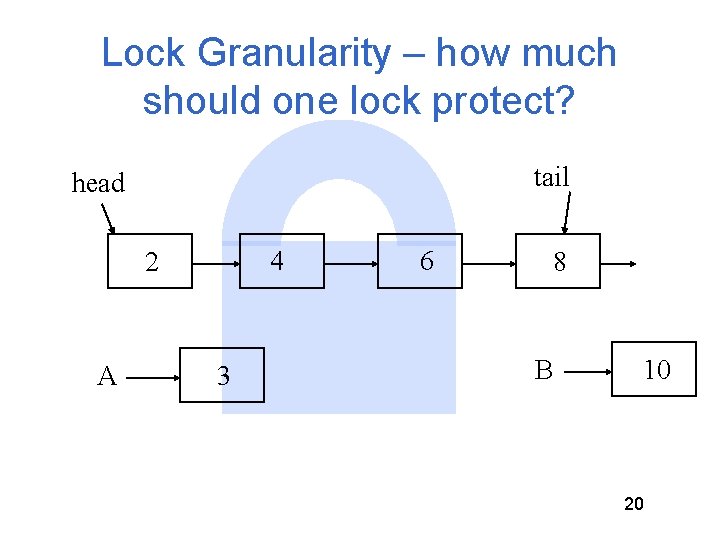

Lock Granularity – how much should one lock protect?
[Figure: linked structure with head and tail pointers, numbered elements, and regions labeled A and B]

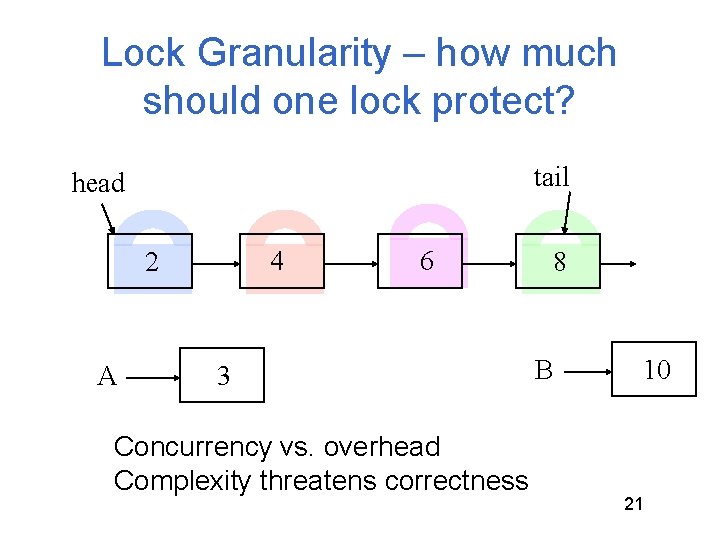

Lock Granularity – how much should one lock protect?
[Figure: linked structure with head and tail pointers, numbered elements, and regions labeled A and B]
• Concurrency vs. overhead
• Complexity threatens correctness

Classic Synchronization Problems
There are a number of “classic” problems that represent a class of synchronization situations:
• Critical Section problem
• Producer/Consumer problem
• Reader/Writer problem
• 5 Dining Philosophers
Why? Once you know the “generic” solutions, you can recognize other special cases in which to apply them (e.g., this is just a version of the reader/writer problem).

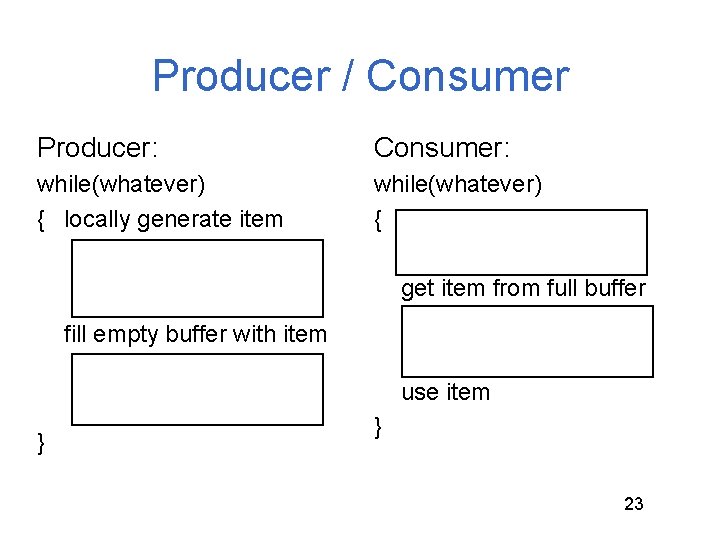

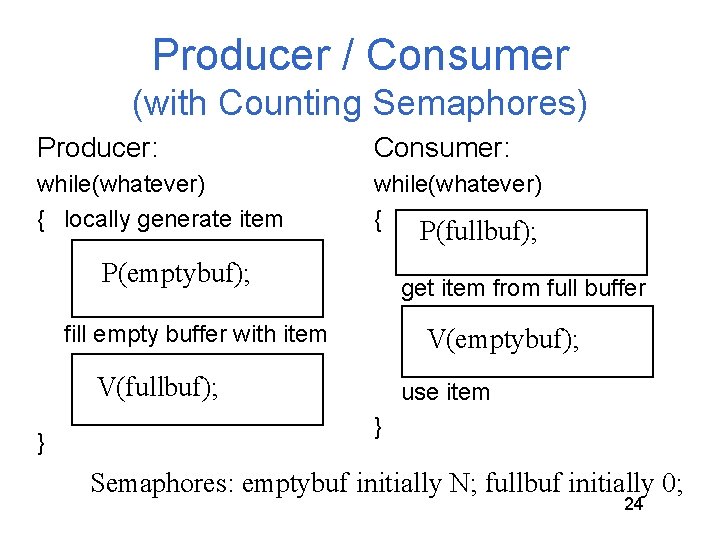

Producer / Consumer

Producer:

    while (whatever) {
        locally generate item
        fill empty buffer with item
    }

Consumer:

    while (whatever) {
        get item from full buffer
        use item
    }

Producer / Consumer (with Counting Semaphores)
Semaphores: emptybuf initially N; fullbuf initially 0;

Producer:

    while (whatever) {
        locally generate item
        P(emptybuf);
        fill empty buffer with item
        V(fullbuf);
    }

Consumer:

    while (whatever) {
        P(fullbuf);
        get item from full buffer
        V(emptybuf);
        use item
    }
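The counting-semaphore scheme can be tried out with POSIX semaphores. The following is a minimal sketch, assuming one producer, one consumer, and a circular buffer of N slots. The buffer layout and the names `in_pos`, `out_pos`, `consumed_sum`, and `run_pc_demo` are not from the slides. With several producers or consumers, the buffer indices would also need a mutex.

```c
#include <pthread.h>
#include <semaphore.h>

#define N 4                       /* number of buffer slots (assumed) */

static int buffer[N];
static int in_pos, out_pos;       /* next slot to fill / to empty */
static sem_t emptybuf, fullbuf;   /* count empty and full slots */
static long consumed_sum;

static void *producer(void *arg) {
    int count = *(int *)arg;
    for (int item = 1; item <= count; item++) {   /* locally generate item */
        sem_wait(&emptybuf);                      /* P(emptybuf) */
        buffer[in_pos] = item;                    /* fill empty buffer */
        in_pos = (in_pos + 1) % N;
        sem_post(&fullbuf);                       /* V(fullbuf) */
    }
    return NULL;
}

static void *consumer(void *arg) {
    int count = *(int *)arg;
    for (int i = 0; i < count; i++) {
        sem_wait(&fullbuf);                       /* P(fullbuf) */
        int item = buffer[out_pos];               /* get item from full buffer */
        out_pos = (out_pos + 1) % N;
        sem_post(&emptybuf);                      /* V(emptybuf) */
        consumed_sum += item;                     /* use item */
    }
    return NULL;
}

/* Produces 1..count and returns the sum of everything consumed. */
long run_pc_demo(int count) {
    pthread_t p, c;
    in_pos = out_pos = 0;
    consumed_sum = 0;
    sem_init(&emptybuf, 0, N);    /* all N slots start empty */
    sem_init(&fullbuf, 0, 0);     /* no full slots yet */
    pthread_create(&p, NULL, producer, &count);
    pthread_create(&c, NULL, consumer, &count);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&emptybuf);
    sem_destroy(&fullbuf);
    return consumed_sum;
}
```

`run_pc_demo(100)` should return 5050, the sum of 1 through 100. The producer can run at most N items ahead of the consumer, because each fill consumes one unit of `emptybuf`.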

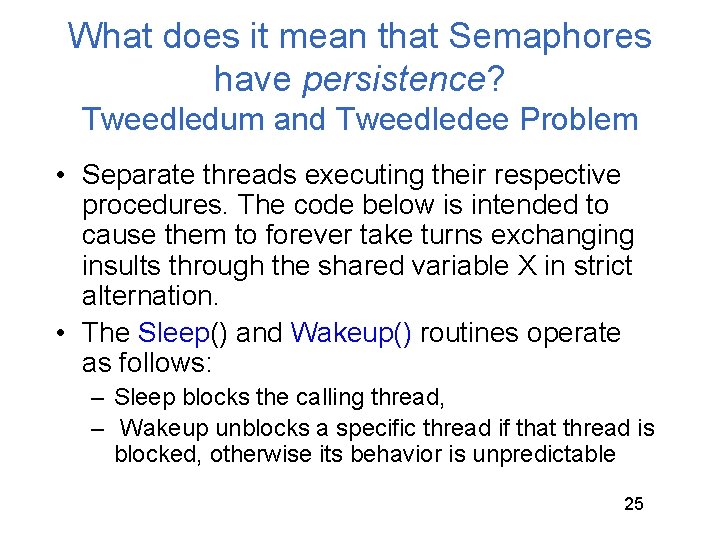

What does it mean that Semaphores have persistence?
Tweedledum and Tweedledee Problem
• Separate threads executing their respective procedures. The code below is intended to cause them to forever take turns exchanging insults through the shared variable X in strict alternation.
• The Sleep() and Wakeup() routines operate as follows:
  – Sleep blocks the calling thread,
  – Wakeup unblocks a specific thread if that thread is blocked; otherwise its behavior is unpredictable

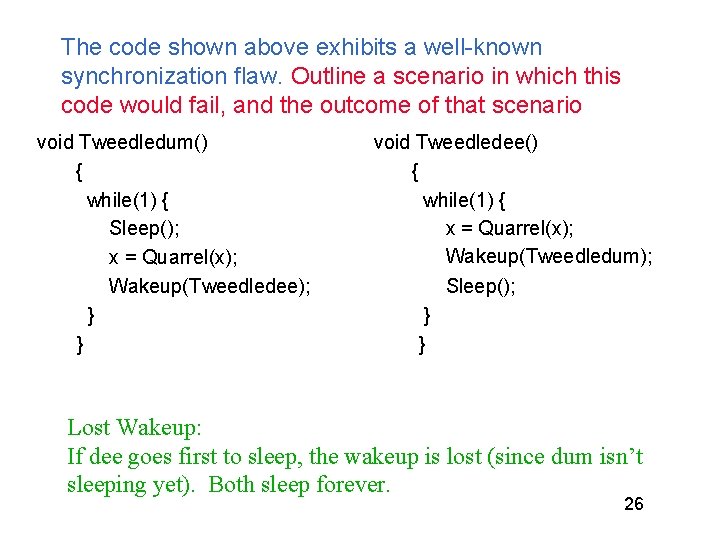

The code shown below exhibits a well-known synchronization flaw. Outline a scenario in which this code would fail, and the outcome of that scenario.

    void Tweedledum() {
        while (1) {
            Sleep();
            x = Quarrel(x);
            Wakeup(Tweedledee);
        }
    }

    void Tweedledee() {
        while (1) {
            x = Quarrel(x);
            Wakeup(Tweedledum);
            Sleep();
        }
    }

Lost Wakeup: if Tweedledee runs first, its Wakeup(Tweedledum) is lost because Tweedledum isn’t sleeping yet. Tweedledee then goes to sleep, Tweedledum sleeps in turn, and both sleep forever.

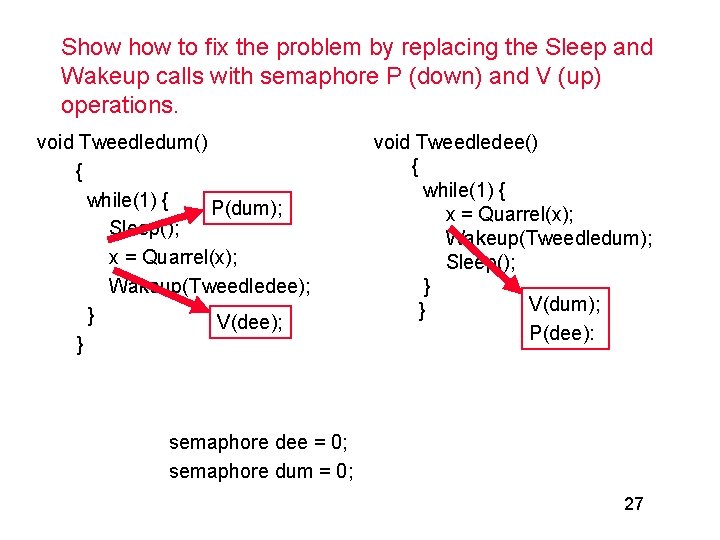

Show how to fix the problem by replacing the Sleep and Wakeup calls with semaphore P (down) and V (up) operations.

    semaphore dee = 0;
    semaphore dum = 0;

    void Tweedledum() {
        while (1) {
            P(dum);            /* replaces Sleep() */
            x = Quarrel(x);
            V(dee);            /* replaces Wakeup(Tweedledee) */
        }
    }

    void Tweedledee() {
        while (1) {
            x = Quarrel(x);
            V(dum);            /* replaces Wakeup(Tweedledum) */
            P(dee);            /* replaces Sleep() */
        }
    }
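To see the persistence property in action, here is a bounded sketch of the semaphore version using POSIX semaphores. The round limit `ROUNDS`, the `turns` log, the stand-in body of `Quarrel`, and `run_tweedle_demo` are assumptions added so the program terminates and the alternation can be checked. The slide's loops run forever.

```c
#include <pthread.h>
#include <semaphore.h>

#define ROUNDS 5                  /* assumed bound so the demo terminates */

static sem_t dum, dee;            /* both initially 0, as on the slide */
static int x;
static int turns[2 * ROUNDS];     /* log of who quarreled: 0 = dee, 1 = dum */
static int nturns;

static int Quarrel(int v) { return v + 1; }   /* stand-in for the insult */

static void *Tweedledum(void *unused) {
    (void)unused;
    for (int i = 0; i < ROUNDS; i++) {
        sem_wait(&dum);           /* P(dum): a V issued earlier is remembered */
        x = Quarrel(x);
        turns[nturns++] = 1;
        sem_post(&dee);           /* V(dee) */
    }
    return NULL;
}

static void *Tweedledee(void *unused) {
    (void)unused;
    for (int i = 0; i < ROUNDS; i++) {
        x = Quarrel(x);
        turns[nturns++] = 0;
        sem_post(&dum);           /* V(dum) */
        sem_wait(&dee);           /* P(dee) */
    }
    return NULL;
}

/* Returns the final x if the threads strictly alternated, otherwise -1. */
int run_tweedle_demo(void) {
    pthread_t t_dum, t_dee;
    x = 0;
    nturns = 0;
    sem_init(&dum, 0, 0);
    sem_init(&dee, 0, 0);
    pthread_create(&t_dum, NULL, Tweedledum, NULL);
    pthread_create(&t_dee, NULL, Tweedledee, NULL);
    pthread_join(t_dum, NULL);
    pthread_join(t_dee, NULL);
    for (int i = 0; i < nturns; i++)
        if (turns[i] != i % 2)    /* expect dee, dum, dee, dum, ... */
            return -1;
    return x;
}
```

Whichever thread the scheduler starts first, Tweedledee's V(dum) is recorded in the semaphore count, so Tweedledum's later P(dum) still succeeds. That is the difference from the lost wakeup in the Sleep/Wakeup version.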

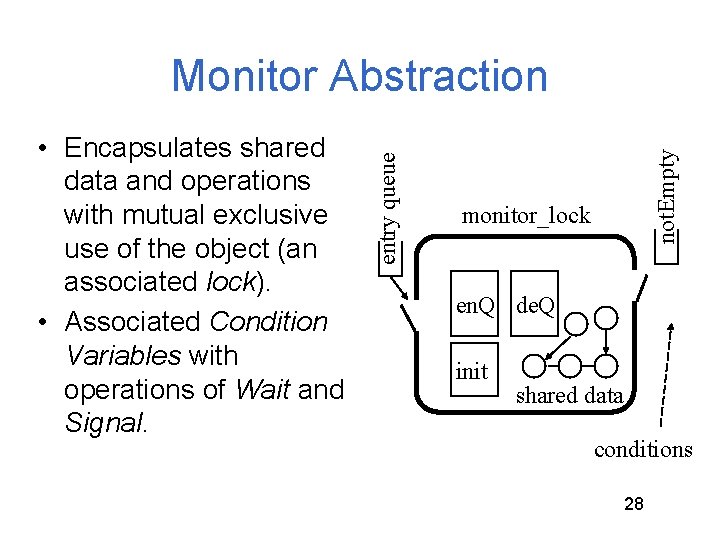

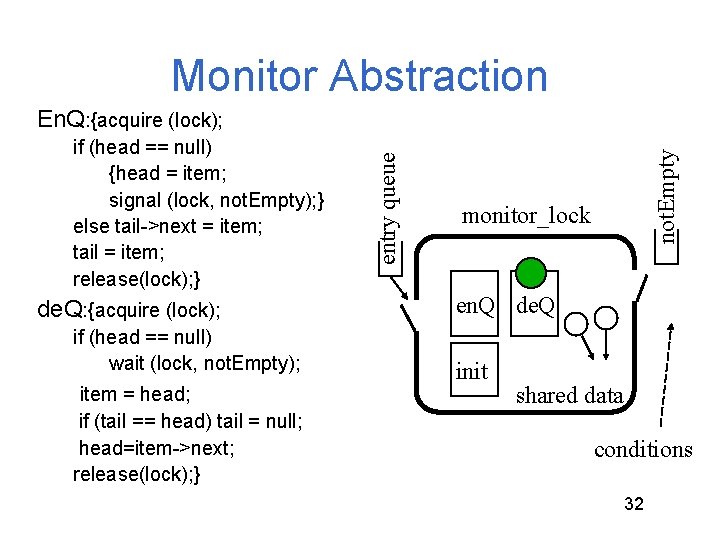

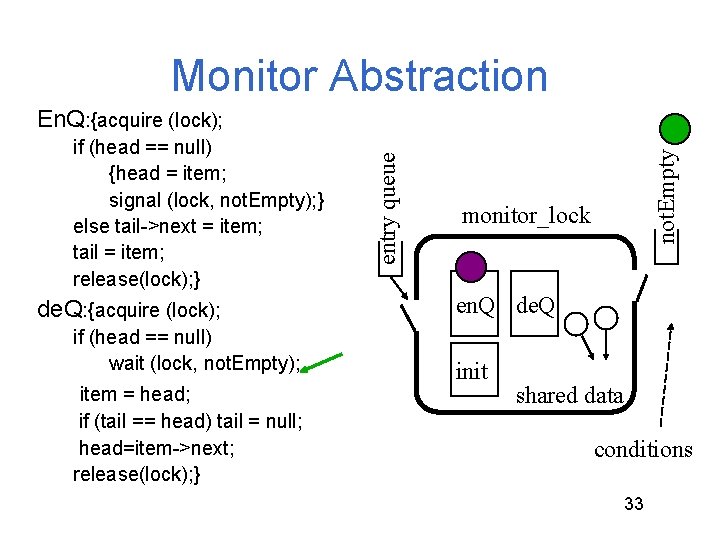

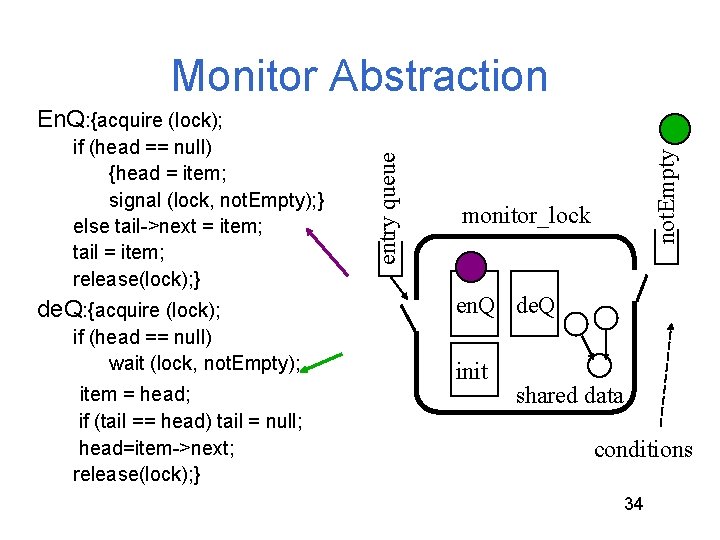

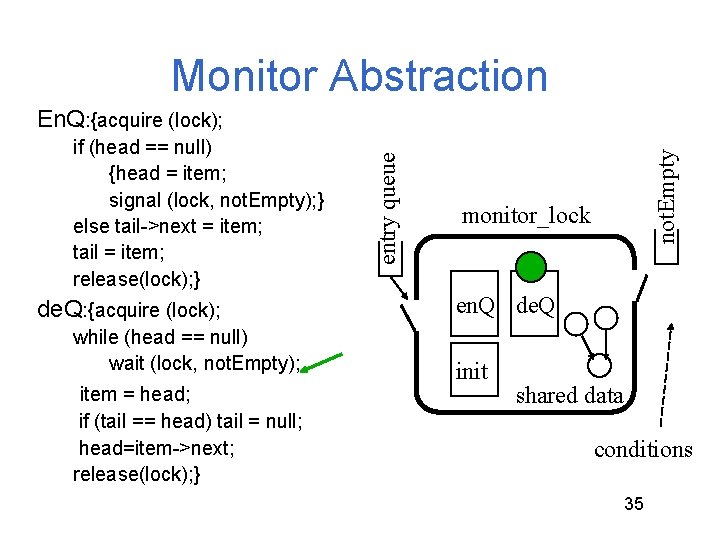

Monitor Abstraction
• Encapsulates shared data and operations with mutually exclusive use of the object (an associated lock).
• Associated Condition Variables with operations of Wait and Signal.
[Figure: monitor with entry queue, monitor_lock, operations enQ, deQ, and init, shared data, and condition notEmpty]

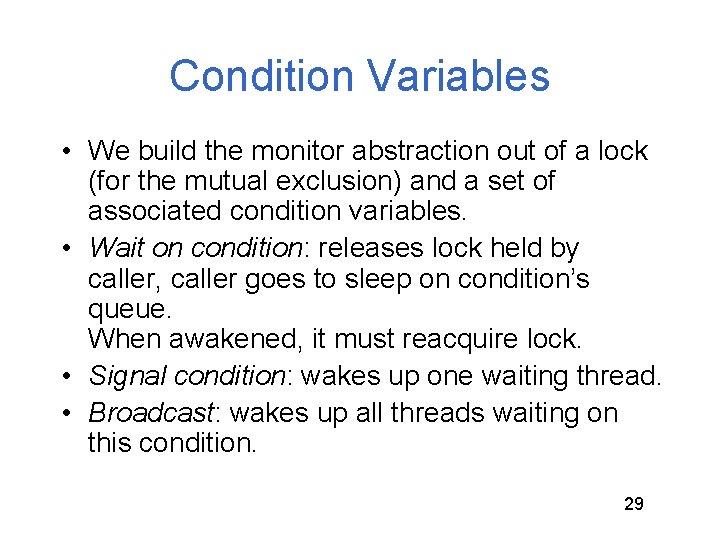

Condition Variables
• We build the monitor abstraction out of a lock (for the mutual exclusion) and a set of associated condition variables.
• Wait on condition: releases lock held by caller, caller goes to sleep on condition’s queue. When awakened, it must reacquire lock.
• Signal condition: wakes up one waiting thread.
• Broadcast: wakes up all threads waiting on this condition.

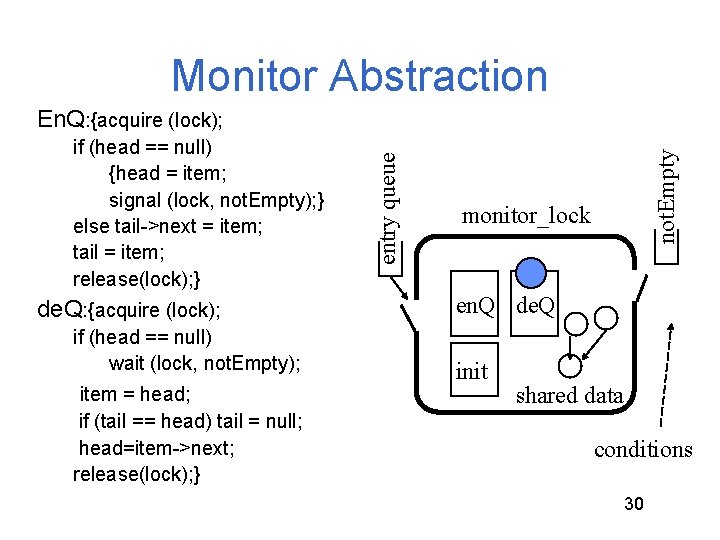

Monitor Abstraction
[Figure: monitor with entry queue, monitor_lock, operations enQ, deQ, and init, shared data, and condition notEmpty]

    EnQ: { acquire(lock);
           if (head == null) { head = item; signal(lock, notEmpty); }
           else tail->next = item;
           tail = item;
           release(lock); }

    deQ: { acquire(lock);
           if (head == null) wait(lock, notEmpty);
           item = head;
           if (tail == head) tail = null;
           head = item->next;
           release(lock); }

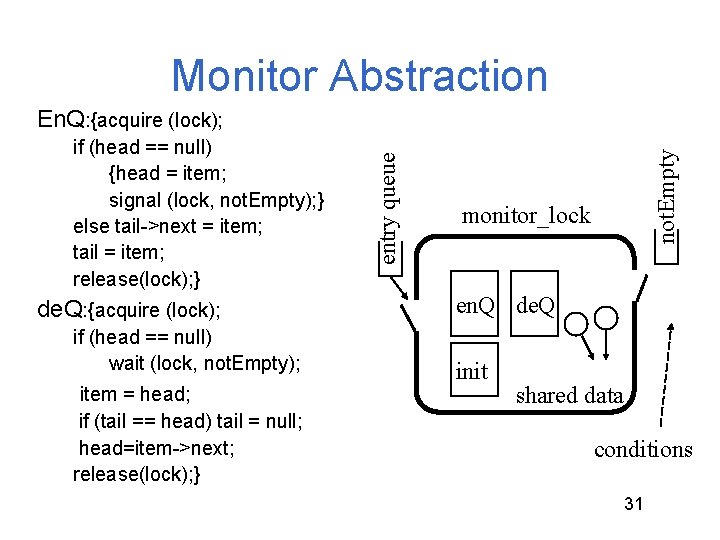

Monitor Abstraction
[Figure: monitor with entry queue, monitor_lock, operations enQ, deQ, and init, shared data, and condition notEmpty]

    EnQ: { acquire(lock);
           if (head == null) { head = item; signal(lock, notEmpty); }
           else tail->next = item;
           tail = item;
           release(lock); }

    deQ: { acquire(lock);
           while (head == null) wait(lock, notEmpty);   /* while, not if */
           item = head;
           if (tail == head) tail = null;
           head = item->next;
           release(lock); }
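The monitor queue maps fairly directly onto a pthread mutex plus condition variable. The sketch below is one such mapping, not the slide's exact code: it signals on every `enQ` rather than only when the queue was empty, a deliberate deviation so that several waiting consumers are each woken. The `struct node` layout, `consumer_thread`, and `run_queue_demo` are hypothetical.

```c
#include <pthread.h>
#include <stdlib.h>

struct node { int value; struct node *next; };

static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t notEmpty = PTHREAD_COND_INITIALIZER;
static struct node *head = NULL, *tail = NULL;

void enQ(int value) {
    struct node *item = malloc(sizeof *item);
    if (item == NULL)
        abort();
    item->value = value;
    item->next = NULL;
    pthread_mutex_lock(&lock);              /* acquire(lock) */
    if (head == NULL)
        head = item;
    else
        tail->next = item;
    tail = item;
    pthread_cond_signal(&notEmpty);         /* signal on every insert (deviation) */
    pthread_mutex_unlock(&lock);            /* release(lock) */
}

int deQ(void) {
    pthread_mutex_lock(&lock);
    while (head == NULL)                    /* recheck the condition after every wakeup */
        pthread_cond_wait(&notEmpty, &lock);
    struct node *item = head;
    if (tail == head)
        tail = NULL;
    head = item->next;
    pthread_mutex_unlock(&lock);
    int value = item->value;
    free(item);
    return value;
}

static void *consumer_thread(void *arg) {
    long *sum = arg;
    for (int i = 0; i < 3; i++)
        *sum += deQ();                      /* blocks while the queue is empty */
    return NULL;
}

/* Starts a consumer, enqueues three values, returns what the consumer summed. */
long run_queue_demo(void) {
    pthread_t t;
    long sum = 0;
    pthread_create(&t, NULL, consumer_thread, &sum);
    enQ(10);
    enQ(20);
    enQ(30);
    pthread_join(t, NULL);
    return sum;
}
```

`pthread_cond_wait` releases the mutex while sleeping and reacquires it before returning, which matches the Wait semantics described for condition variables. The `while` loop guards against another consumer emptying the queue between the signal and the wakeup.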

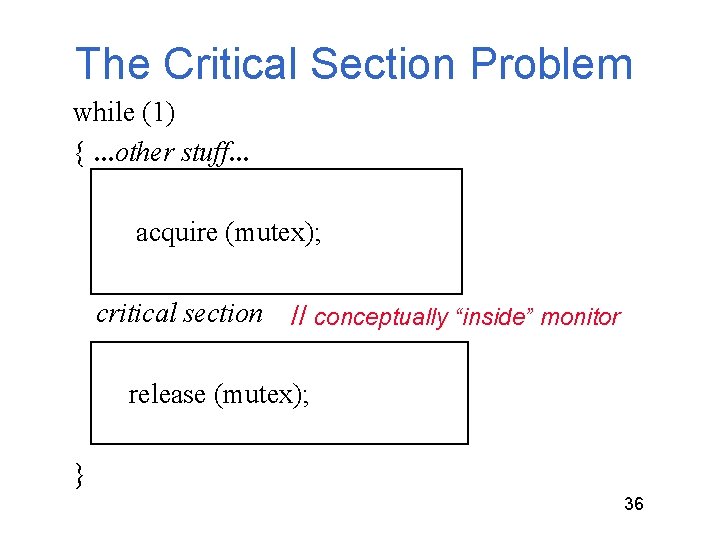

The Critical Section Problem

    while (1) {
        ... other stuff ...
        acquire(mutex);
        critical section    // conceptually “inside” monitor
        release(mutex);
    }

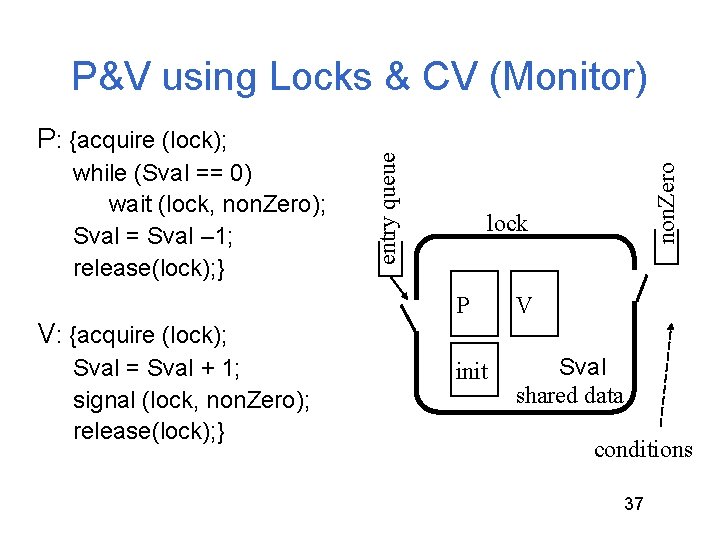

P&V using Locks & CV (Monitor)
[Figure: monitor with entry queue, lock, operations P, V, and init, shared data Sval, and condition nonZero]

    P: { acquire(lock);
         while (Sval == 0) wait(lock, nonZero);
         Sval = Sval – 1;
         release(lock); }

    V: { acquire(lock);
         Sval = Sval + 1;
         signal(lock, nonZero);
         release(lock); }
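The same construction can be written with pthreads. This is a sketch under the assumption that a pthread mutex and condition variable stand in for the monitor lock and the nonZero condition. The type name `struct csem` and the helpers `csem_init`, `waiter`, and `run_sem_demo` are invented for illustration.

```c
#include <pthread.h>

/* Hypothetical counting semaphore built from a lock and a condition variable. */
struct csem {
    pthread_mutex_t lock;
    pthread_cond_t nonZero;
    int Sval;
};

void csem_init(struct csem *s, int initial) {
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->nonZero, NULL);
    s->Sval = initial;
}

void P(struct csem *s) {
    pthread_mutex_lock(&s->lock);
    while (s->Sval == 0)                          /* wait until s > 0 */
        pthread_cond_wait(&s->nonZero, &s->lock);
    s->Sval = s->Sval - 1;
    pthread_mutex_unlock(&s->lock);
}

void V(struct csem *s) {
    pthread_mutex_lock(&s->lock);
    s->Sval = s->Sval + 1;
    pthread_cond_signal(&s->nonZero);             /* wake one waiter, if any */
    pthread_mutex_unlock(&s->lock);
}

static struct csem gate;
static int passed;

static void *waiter(void *arg) {
    (void)arg;
    P(&gate);                                     /* blocks until the V below */
    passed = 1;
    return NULL;
}

/* Returns 1 if the waiter got through and the count is back to 0. */
int run_sem_demo(void) {
    pthread_t t;
    csem_init(&gate, 0);
    passed = 0;
    pthread_create(&t, NULL, waiter, NULL);
    V(&gate);             /* works whether or not the waiter is already blocked */
    pthread_join(t, NULL);
    return passed && gate.Sval == 0;
}
```

Because the count lives in `Sval`, a V that arrives before the matching P is not lost. The signal on the condition variable only matters when a thread is already waiting.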

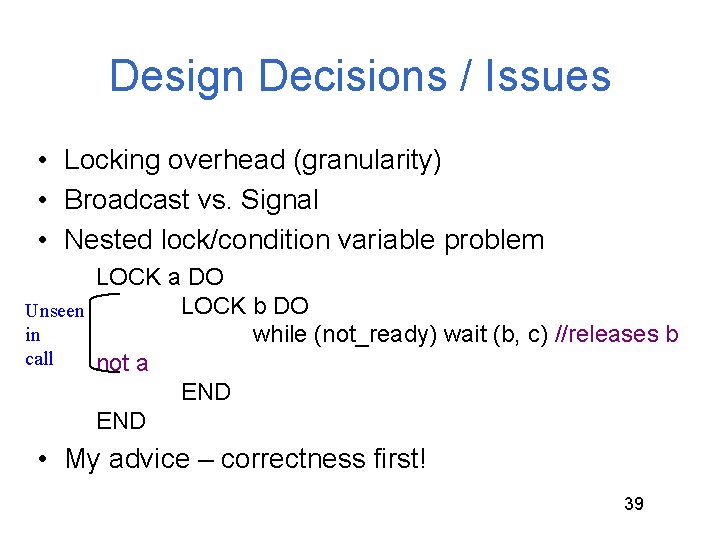

Design Decisions / Issues
• Locking overhead (granularity)
• Broadcast vs. Signal
• Nested lock/condition variable problem

    LOCK a DO
        LOCK b DO                           // unseen in call
            while (not_ready) wait(b, c)    // releases b, not a
        END
    END

• My advice – correctness first!

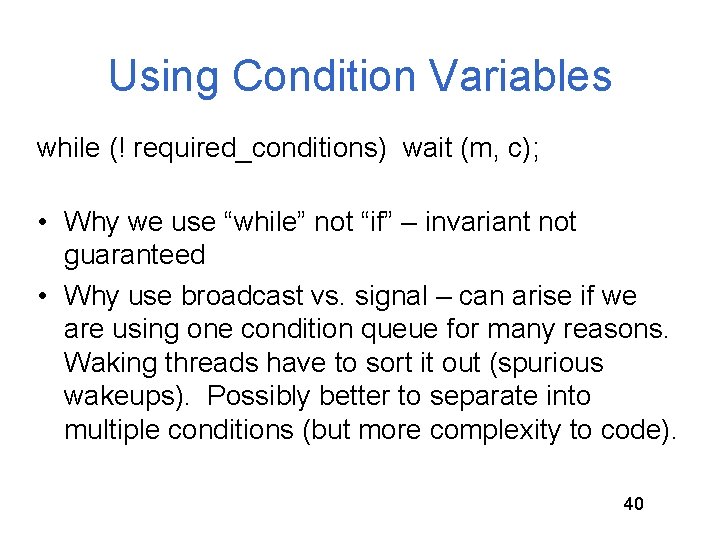

Using Condition Variables

    while (!required_conditions) wait(m, c);

• Why we use “while” not “if” – the invariant is not guaranteed to hold when the waiter wakes up
• Why use broadcast vs. signal – the need can arise if we are using one condition queue for many reasons. Waking threads have to sort it out (spurious wakeups). Possibly better to separate into multiple conditions (but more complexity to code).

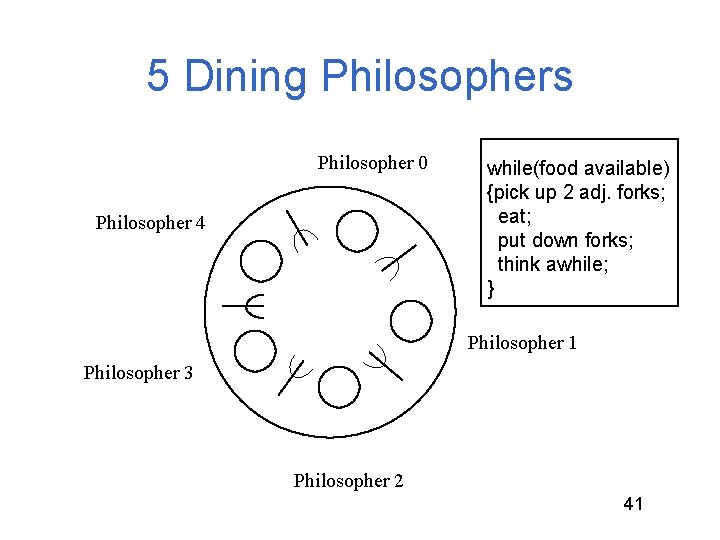

5 Dining Philosophers
[Figure: Philosopher 0, Philosopher 1, Philosopher 2, Philosopher 3, Philosopher 4]

    while (food available) {
        pick up 2 adj. forks;
        eat;
        put down forks;
        think awhile;
    }

Template for Philosopher

    while (food available) {
        /* pick up forks */
        eat;
        /* put down forks */
        think awhile;
    }

![Naive Solution](http://slidetodoc.com/presentation_image_h2/176667fed574022bcf1afea3f1e89260/image-40.jpg)

Naive Solution

    while (food available) {
        P(fork[left(me)]);      /* pick up forks */
        P(fork[right(me)]);
        eat;
        V(fork[left(me)]);      /* put down forks */
        V(fork[right(me)]);
        think awhile;
    }

Does this work?

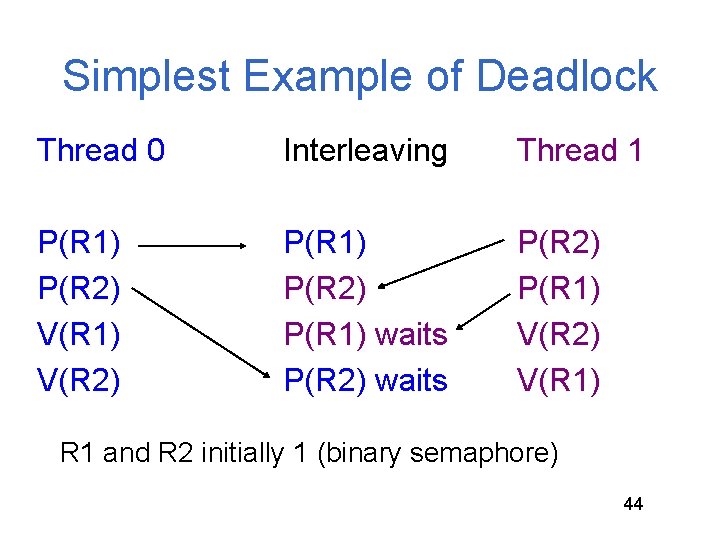

Simplest Example of Deadlock
R1 and R2 initially 1 (binary semaphore)

    Thread 0:  P(R1); P(R2); V(R1); V(R2)
    Thread 1:  P(R2); P(R1); V(R2); V(R1)

Interleaving:

    Thread 0:  P(R1)
    Thread 1:  P(R2)
    Thread 1:  P(R1)   waits
    Thread 0:  P(R2)   waits

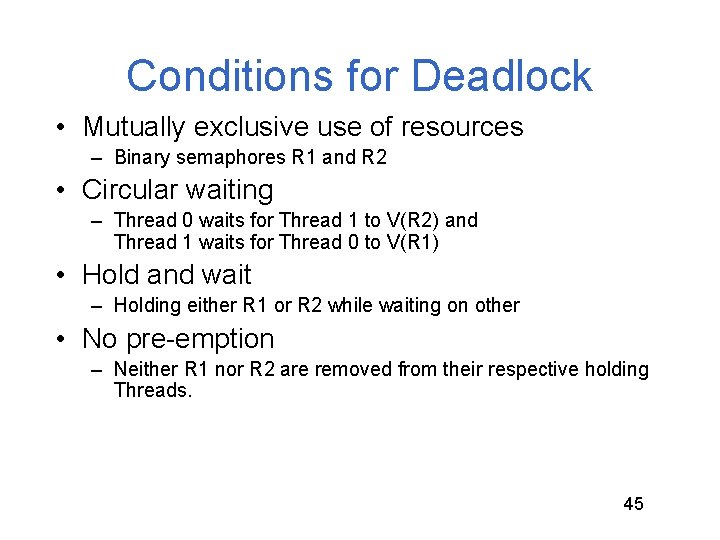

Conditions for Deadlock
• Mutually exclusive use of resources
  – Binary semaphores R1 and R2
• Circular waiting
  – Thread 0 waits for Thread 1 to V(R2) and Thread 1 waits for Thread 0 to V(R1)
• Hold and wait
  – Holding either R1 or R2 while waiting on the other
• No pre-emption
  – Neither R1 nor R2 is removed from its holding thread.

Philosophy 101 (or why 5 DP is interesting)
• How to eat with your Fellows without causing Deadlock.
  – Circular arguments (the circular wait condition)
  – Not giving up on firmly held things (no preemption)
  – Infinite patience with Half-baked schemes (hold some & wait for more)
• Why Starvation exists and what we can do about it.

Dealing with Deadlock
It can be prevented by breaking one of the prerequisite conditions:
• Mutually exclusive use of resources
  – Example: Allowing shared access to read-only files (readers/writers problem)
• Circular waiting
  – Example: Define an ordering on resources and acquire them in order
• Hold and wait
• No pre-emption

![Circular Wait Condition](http://slidetodoc.com/presentation_image_h2/176667fed574022bcf1afea3f1e89260/image-45.jpg)

Circular Wait Condition

    while (food available) {
        if (me != 0) { P(fork[left(me)]); P(fork[right(me)]); }
        else         { P(fork[right(me)]); P(fork[left(me)]); }
        eat;
        V(fork[left(me)]);
        V(fork[right(me)]);
        think awhile;
    }
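Breaking the circular wait can also be expressed as a global ordering on the forks: every philosopher picks up the lower-numbered fork first. The sketch below takes that approach with pthread mutexes as forks. It is a variant of the idea rather than the slide's exact asymmetry: with left = me and right = (me + 1) mod 5, it is philosopher 4 who ends up reaching in the opposite order. `NPHIL`, `MEALS`, `meals_eaten`, and `run_philosophers` are assumed names.

```c
#include <pthread.h>

#define NPHIL 5
#define MEALS 100                 /* assumed bound so the demo terminates */

static pthread_mutex_t fork_lock[NPHIL];
static int meals_eaten[NPHIL];    /* each slot is written by one philosopher only */

static void *philosopher(void *arg) {
    int me = *(int *)arg;
    int left = me;
    int right = (me + 1) % NPHIL;
    /* Always acquire the lower-numbered fork first: no cycle of waiters can form. */
    int first = left < right ? left : right;
    int second = left < right ? right : left;
    for (int i = 0; i < MEALS; i++) {
        pthread_mutex_lock(&fork_lock[first]);
        pthread_mutex_lock(&fork_lock[second]);
        meals_eaten[me]++;                          /* eat */
        pthread_mutex_unlock(&fork_lock[second]);
        pthread_mutex_unlock(&fork_lock[first]);
        /* think awhile (omitted) */
    }
    return NULL;
}

/* Runs all five philosophers to completion and returns the total meals eaten. */
int run_philosophers(void) {
    pthread_t tid[NPHIL];
    int ids[NPHIL];
    int total = 0;
    for (int i = 0; i < NPHIL; i++) {
        pthread_mutex_init(&fork_lock[i], NULL);
        meals_eaten[i] = 0;
    }
    for (int i = 0; i < NPHIL; i++) {
        ids[i] = i;
        pthread_create(&tid[i], NULL, philosopher, &ids[i]);
    }
    for (int i = 0; i < NPHIL; i++)
        pthread_join(tid[i], NULL);
    for (int i = 0; i < NPHIL; i++)
        total += meals_eaten[i];
    return total;
}
```

If every philosopher instead locked `left` before `right`, all five could hold one fork each and wait forever. With the ordering in place the program always finishes, and `run_philosophers()` should return 500.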

![Hold and Wait Condition](http://slidetodoc.com/presentation_image_h2/176667fed574022bcf1afea3f1e89260/image-46.jpg)

Hold and Wait Condition

    while (food available) {
        P(mutex);
        while (forks[me] != 2) {
            blocking[me] = true;
            V(mutex);
            P(sleepy[me]);
            P(mutex);
        }
        forks[leftneighbor(me)]--;
        forks[rightneighbor(me)]--;
        V(mutex);
        eat;
        P(mutex);
        forks[leftneighbor(me)]++;
        forks[rightneighbor(me)]++;
        if (blocking[leftneighbor(me)]) {
            blocking[leftneighbor(me)] = false;
            V(sleepy[leftneighbor(me)]);
        }
        if (blocking[rightneighbor(me)]) {
            blocking[rightneighbor(me)] = false;
            V(sleepy[rightneighbor(me)]);
        }
        V(mutex);
        think awhile;
    }

Starvation
The difference between deadlock and starvation is subtle:
  – Once a set of processes is deadlocked, there is no future execution sequence that can get them out of it.
  – In starvation, there does exist some execution sequence that is favorable to the starving process, although there is no guarantee it will ever occur.
  – Rollback and Retry solutions are prone to starvation.
  – Continuous arrival of higher priority processes is another common starvation situation.

![5 DP - Monitor Style](http://slidetodoc.com/presentation_image_h2/176667fed574022bcf1afea3f1e89260/image-48.jpg)

5 DP - Monitor Style

    Boolean eating[5];
    Lock forkMutex;
    Condition forksAvail;

    void PickupForks(int i) {
        forkMutex.Acquire();
        /* (i+4)%5 is the left neighbor; (i-1)%5 would be negative for i == 0 */
        while (eating[(i+4)%5] || eating[(i+1)%5])
            forksAvail.Wait(&forkMutex);
        eating[i] = true;
        forkMutex.Release();
    }

    void PutdownForks(int i) {
        forkMutex.Acquire();
        eating[i] = false;
        forksAvail.Broadcast(&forkMutex);
        forkMutex.Release();
    }

![What about this?](http://slidetodoc.com/presentation_image_h2/176667fed574022bcf1afea3f1e89260/image-49.jpg)

What about this?

    while (food available) {
        forkMutex.Acquire();
        while (forks[me] != 2) {
            blocking[me] = true;
            forkMutex.Release();
            sleep();
            forkMutex.Acquire();
        }
        forks[leftneighbor(me)]--;
        forks[rightneighbor(me)]--;
        forkMutex.Release();
        eat;
        forkMutex.Acquire();
        forks[leftneighbor(me)]++;
        forks[rightneighbor(me)]++;
        if (blocking[leftneighbor(me)] || blocking[rightneighbor(me)])
            wakeup();
        forkMutex.Release();
        think awhile;
    }

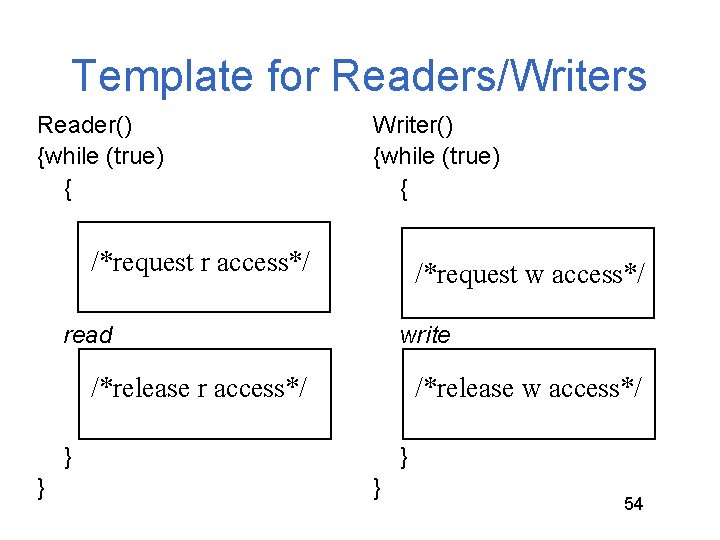

Readers/Writers Problem
Synchronizing access to a file or data record in a database such that any number of threads requesting read-only access are allowed, but only one thread requesting write access is allowed, excluding all readers.

Template for Readers/Writers

    Reader() {
        while (true) {
            /* request r access */
            read
            /* release r access */
        }
    }

    Writer() {
        while (true) {
            /* request w access */
            write
            /* release w access */
        }
    }

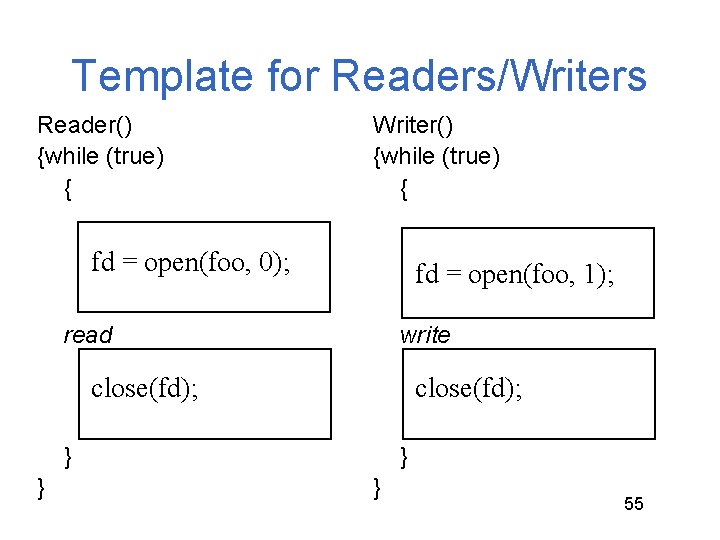

Template for Readers/Writers

    Reader() {
        while (true) {
            fd = open(foo, 0);
            read
            close(fd);
        }
    }

    Writer() {
        while (true) {
            fd = open(foo, 1);
            write
            close(fd);
        }
    }

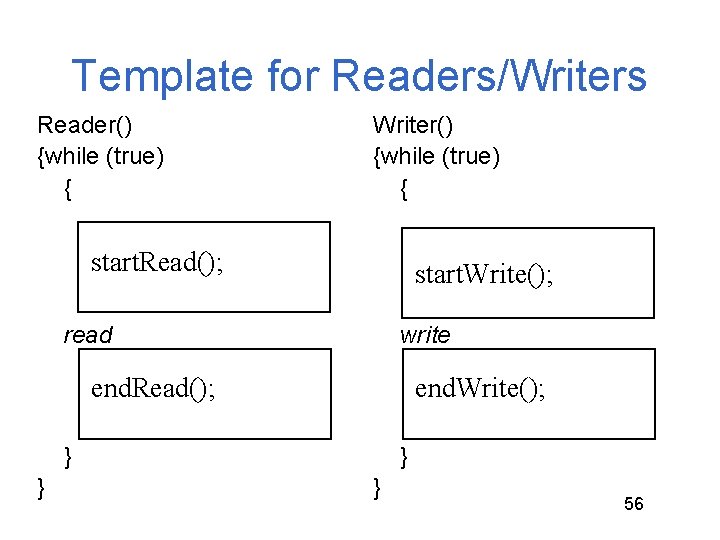

Template for Readers/Writers

    Reader() {
        while (true) {
            startRead();
            read
            endRead();
        }
    }

    Writer() {
        while (true) {
            startWrite();
            write
            endWrite();
        }
    }

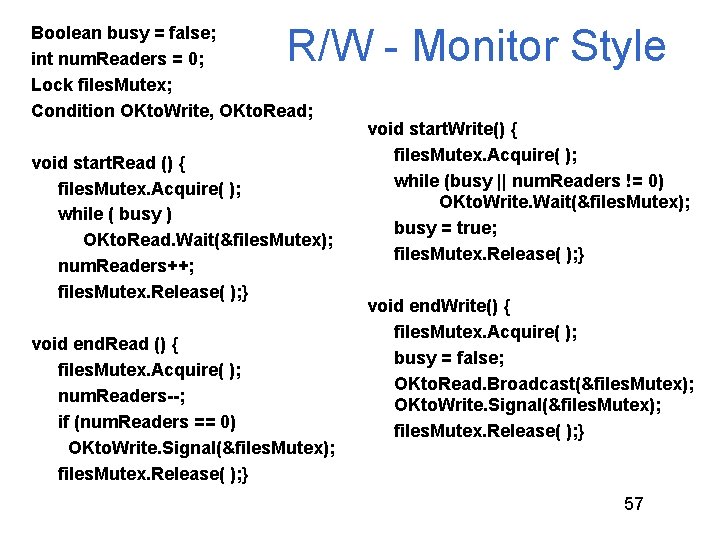

R/W - Monitor Style

    Boolean busy = false;
    int numReaders = 0;
    Lock filesMutex;
    Condition OKtoWrite, OKtoRead;

    void startRead() {
        filesMutex.Acquire();
        while (busy)
            OKtoRead.Wait(&filesMutex);
        numReaders++;
        filesMutex.Release();
    }

    void endRead() {
        filesMutex.Acquire();
        numReaders--;
        if (numReaders == 0)
            OKtoWrite.Signal(&filesMutex);
        filesMutex.Release();
    }

    void startWrite() {
        filesMutex.Acquire();
        while (busy || numReaders != 0)
            OKtoWrite.Wait(&filesMutex);
        busy = true;
        filesMutex.Release();
    }

    void endWrite() {
        filesMutex.Acquire();
        busy = false;
        OKtoRead.Broadcast(&filesMutex);
        OKtoWrite.Signal(&filesMutex);
        filesMutex.Release();
    }
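For experimentation, the monitor-style solution translates almost line for line into pthreads. The following sketch assumes a pthread mutex and two condition variables in place of the slide's Lock and Condition objects. The test harness (`record_a`, `record_b`, `reader`, `writer`, `run_rw_demo`) is hypothetical and exists only to check that readers never observe a half-finished write.

```c
#include <pthread.h>

static pthread_mutex_t filesMutex = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t OKtoRead = PTHREAD_COND_INITIALIZER;
static pthread_cond_t OKtoWrite = PTHREAD_COND_INITIALIZER;
static int busy = 0;              /* a writer is active */
static int numReaders = 0;

void startRead(void) {
    pthread_mutex_lock(&filesMutex);
    while (busy)
        pthread_cond_wait(&OKtoRead, &filesMutex);
    numReaders++;
    pthread_mutex_unlock(&filesMutex);
}

void endRead(void) {
    pthread_mutex_lock(&filesMutex);
    numReaders--;
    if (numReaders == 0)
        pthread_cond_signal(&OKtoWrite);
    pthread_mutex_unlock(&filesMutex);
}

void startWrite(void) {
    pthread_mutex_lock(&filesMutex);
    while (busy || numReaders != 0)
        pthread_cond_wait(&OKtoWrite, &filesMutex);
    busy = 1;
    pthread_mutex_unlock(&filesMutex);
}

void endWrite(void) {
    pthread_mutex_lock(&filesMutex);
    busy = 0;
    pthread_cond_broadcast(&OKtoRead);
    pthread_cond_signal(&OKtoWrite);
    pthread_mutex_unlock(&filesMutex);
}

/* Hypothetical shared record: writers keep the two fields equal. */
static int record_a, record_b;

static void *writer(void *arg) {
    (void)arg;
    for (int i = 0; i < 1000; i++) {
        startWrite();
        record_a++;
        record_b++;
        endWrite();
    }
    return NULL;
}

static void *reader(void *arg) {
    int *mismatches = arg;        /* private counter per reader */
    for (int i = 0; i < 1000; i++) {
        startRead();
        if (record_a != record_b)
            (*mismatches)++;
        endRead();
    }
    return NULL;
}

/* Returns 0 if no reader saw a torn record and no write was lost. */
int run_rw_demo(void) {
    pthread_t w[2], r[2];
    int mismatches[2] = {0, 0};
    record_a = record_b = 0;
    for (int i = 0; i < 2; i++) {
        pthread_create(&w[i], NULL, writer, NULL);
        pthread_create(&r[i], NULL, reader, &mismatches[i]);
    }
    for (int i = 0; i < 2; i++) {
        pthread_join(w[i], NULL);
        pthread_join(r[i], NULL);
    }
    return mismatches[0] + mismatches[1] + (record_a != 2000);
}
```

Readers only wait while a writer is active, so a steady stream of readers can keep `numReaders` above zero and delay writers indefinitely. The semaphore solution with writer priority addresses that case.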

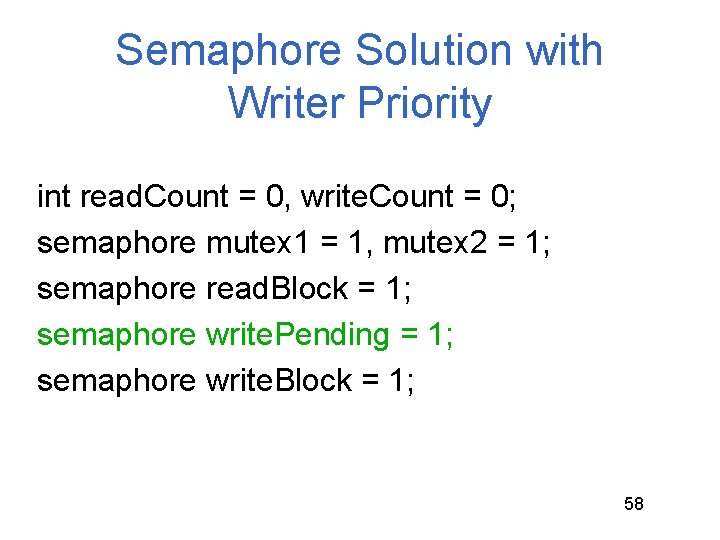

Semaphore Solution with Writer Priority

    int readCount = 0, writeCount = 0;
    semaphore mutex1 = 1, mutex2 = 1;
    semaphore readBlock = 1;
    semaphore writePending = 1;
    semaphore writeBlock = 1;

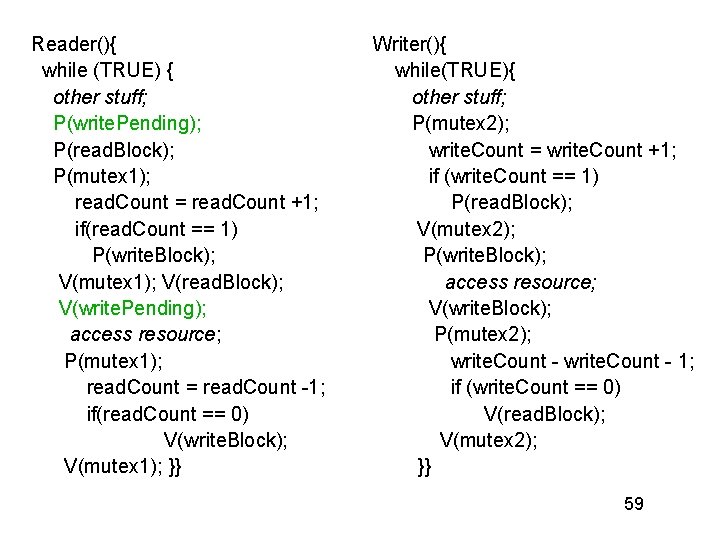

    Reader() {
        while (TRUE) {
            other stuff;
            P(writePending);
            P(readBlock);
            P(mutex1);
            readCount = readCount + 1;
            if (readCount == 1) P(writeBlock);
            V(mutex1);
            V(readBlock);
            V(writePending);
            access resource;
            P(mutex1);
            readCount = readCount - 1;
            if (readCount == 0) V(writeBlock);
            V(mutex1);
        }
    }

    Writer() {
        while (TRUE) {
            other stuff;
            P(mutex2);
            writeCount = writeCount + 1;
            if (writeCount == 1) P(readBlock);
            V(mutex2);
            P(writeBlock);
            access resource;
            V(writeBlock);
            P(mutex2);
            writeCount = writeCount - 1;
            if (writeCount == 0) V(readBlock);
            V(mutex2);
        }
    }

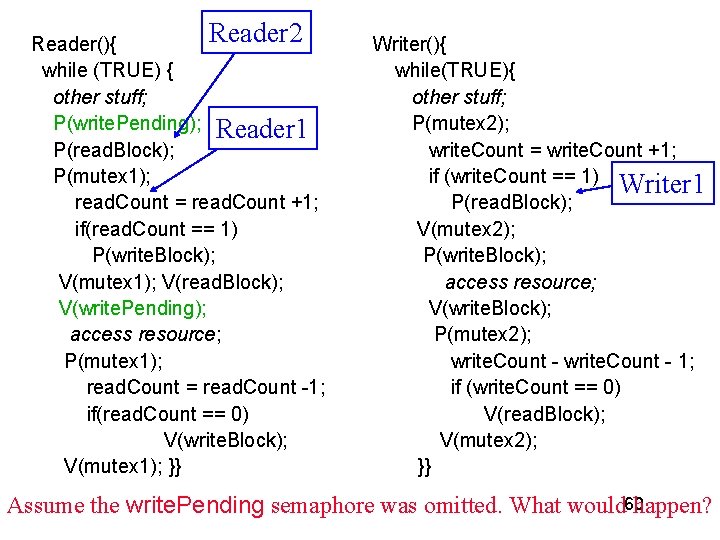

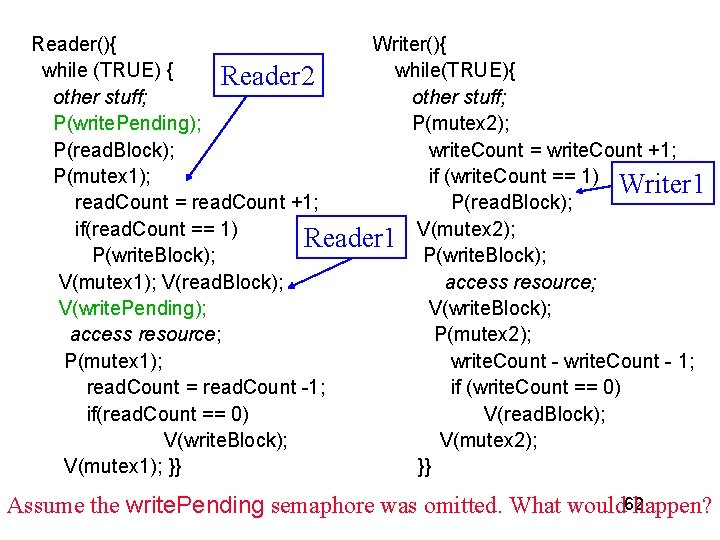

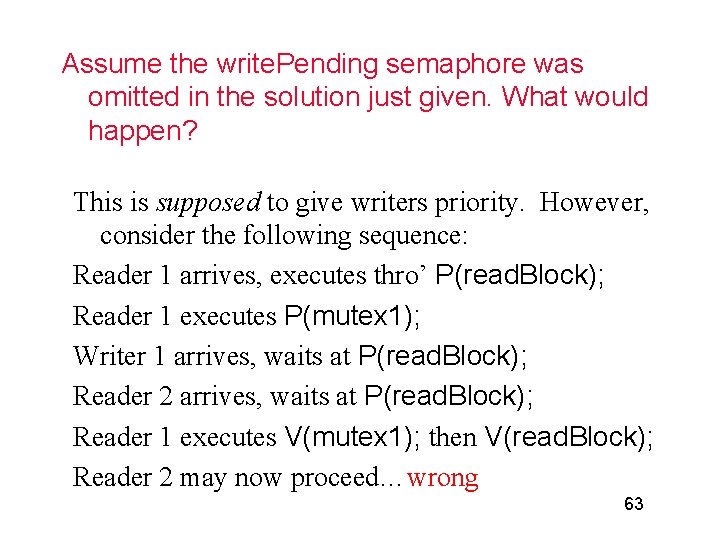

Assume the writePending semaphore was omitted from the Reader and Writer code above. What would happen?
(Markers on the slide: Reader 1 is at P(readBlock) in Reader, Writer 1 is at P(readBlock) in Writer, and Reader 2 is about to enter Reader.)

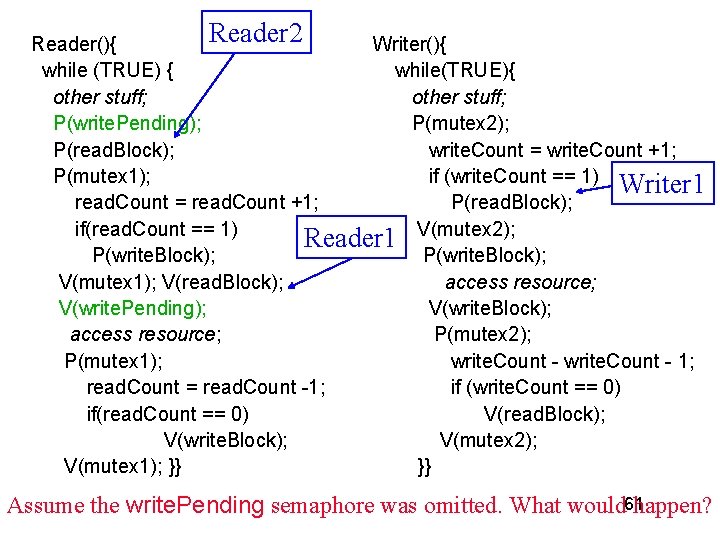


Assume the writePending semaphore was omitted in the solution just given. What would happen?
This is supposed to give writers priority. However, consider the following sequence:
• Reader 1 arrives, executes through P(readBlock);
• Reader 1 executes P(mutex1);
• Writer 1 arrives, waits at P(readBlock);
• Reader 2 arrives, waits at P(readBlock);
• Reader 1 executes V(mutex1); then V(readBlock);
• Reader 2 may now proceed ahead of Writer 1, which is wrong: the writer was supposed to have priority.

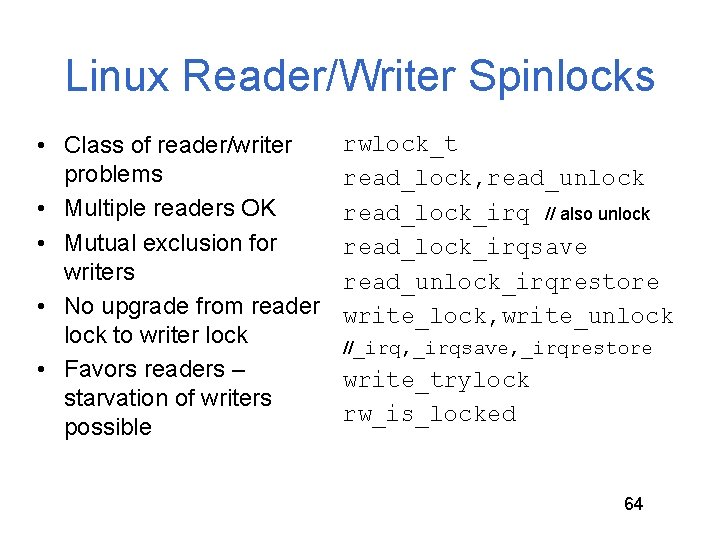

Linux Reader/Writer Spinlocks
• Class of reader/writer problems
• Multiple readers OK
• Mutual exclusion for writers
• No upgrade from reader lock to writer lock
• Favors readers – starvation of writers possible

    rwlock_t
    read_lock, read_unlock
    read_lock_irq                // also unlock
    read_lock_irqsave
    read_unlock_irqrestore
    write_lock, write_unlock     // _irq, _irqsave, _irqrestore
    write_trylock
    rw_is_locked

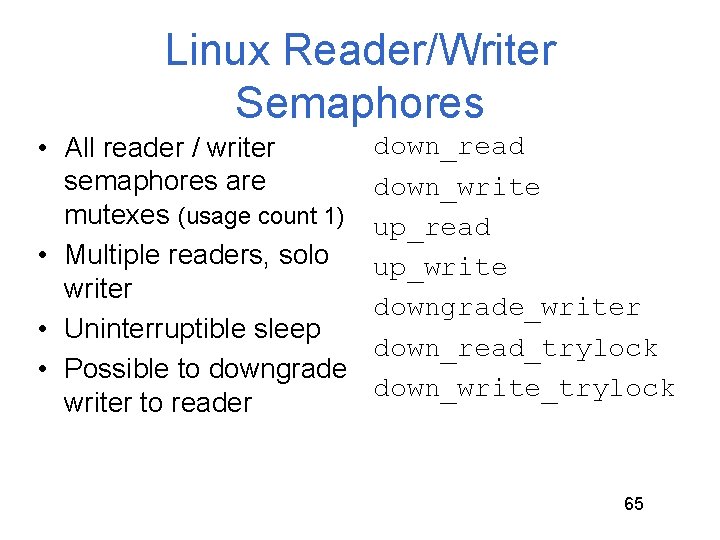

Linux Reader/Writer Semaphores
• All reader/writer semaphores are mutexes (usage count 1)
• Multiple readers, solo writer
• Uninterruptible sleep
• Possible to downgrade writer to reader

    down_read
    down_write
    up_read
    up_write
    downgrade_write
    down_read_trylock
    down_write_trylock
