Outline for Today • Objectives: – To define the process and thread abstractions. – To briefly introduce mechanisms for implementing processes (threads). – To introduce the critical section problem. – To learn how to reason about the correctness of concurrent programs. • Administrative details: 1

Separation of Policy and Mechanism • “Why and What” vs. “How” • Objectives and strategies vs. data structures, hardware and software implementation issues. • Process abstraction vs. Process machinery 2

Process Abstraction • Unit of scheduling • One (or more*) sequential threads of control – program counter, register values, call stack • Unit of resource allocation – address space (code and data), open files – sometimes called tasks or jobs • Operations on processes: fork (clone-style creation), wait (parent on child), exit (self-termination), signal, kill. Process-related System Calls in Unix. 3
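The fork, wait and exit operations listed above can be sketched with the POSIX calls fork, waitpid and _exit. This is a minimal illustration, not the slides' own code; the helper name run_child_and_wait is hypothetical.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Fork a child that terminates itself with exit_code. The parent waits for
 * the child and returns the child's exit status, or -1 on any error. */
int run_child_and_wait(int exit_code)
{
    pid_t pid = fork();                  /* clone-style creation */
    if (pid < 0)
        return -1;                       /* fork failed */
    if (pid == 0)
        _exit(exit_code);                /* child: self-termination */

    int status = 0;
    if (waitpid(pid, &status, 0) < 0)    /* parent waits on child */
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

Calling run_child_and_wait(7) from a main function should hand back 7, the status the child passed to _exit.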

Why Use Processes? To capture naturally concurrent activities – Waiting for slow devices – Providing human users faster response. – Shared network servers multiplexing among client requests (each client served by its own server thread) To gain speedup by exploiting parallelism in hardware – Maintenance tasks performed “in the background” – Multiprocessors – Overlap the asynchronous and independent functioning of devices and users • Within a single user thread – signal handlers cause asynchronous control flow. 4

Threads and Processes • Decouple the resource allocation aspect from the control aspect • Thread abstraction - defines a single sequential instruction stream (PC, stack, register values) • Process - the resource context serving as a “container” for one or more threads (shared address space) • Kernel threads - unit of scheduling (kernel-supported thread operations still slow) 5

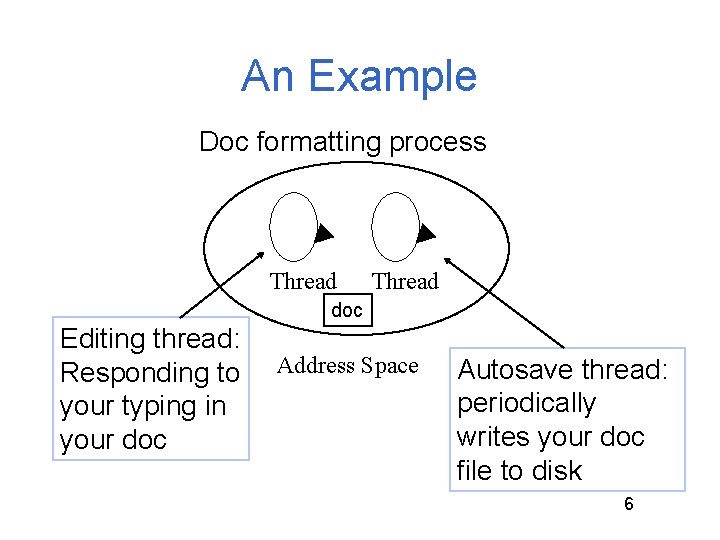

An Example Doc formatting process Thread doc Editing thread: Responding to your typing in your doc Address Space Autosave thread: periodically writes your doc file to disk 6

User-Level Threads • To avoid the performance penalty of kernel-supported threads, implement threads at user level, managed by a run-time system – Contained “within” a single kernel entity (process) – Invisible to the OS (the OS schedules their container without being aware of the threads themselves or their states), so poor scheduling decisions are possible. • User-level thread operations can be 100x faster than kernel thread operations, but need better integration / cooperation with the OS.

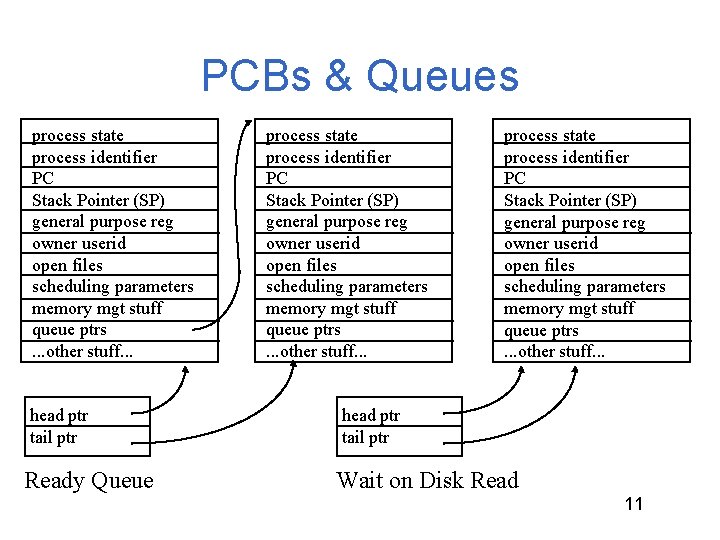

Process Mechanisms • PCB data structure in kernel memory represents a process (allocated on process creation, deallocated on termination). • PCBs reside on various state queues (including a different queue for each “cause” of waiting) reflecting the process’s state. • As a process executes, the OS moves its PCB from queue to queue (e. g. from the “waiting on I/O” queue to the “ready to run” queue). 8

Context Switching • When a process is running, its program counter, register values, stack pointer, etc. are contained in the hardware registers of the CPU. The process has direct control of the CPU hardware for now. • When a process is not the one currently running, its current register values are saved in a process descriptor data structure (PCB - process control block) (Linux: task_struct) • Context switching involves moving state between CPU and various processes’ descriptors by the OS. 10

PCBs & Queues • Each PCB holds: process state, process identifier, PC, stack pointer (SP), general-purpose registers, owner userid, open files, scheduling parameters, memory management info, queue pointers, and other fields. • Each state queue (e.g. the Ready Queue or the Wait on Disk Read queue) has a head pointer and a tail pointer to the PCBs linked on it.
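As a rough sketch of how PCBs move between state queues, the following uses a heavily trimmed descriptor. The struct layout and the names pcb_queue, enqueue, dequeue and wakeup_first are assumptions for illustration; a real kernel descriptor holds far more.

```c
#include <stddef.h>

enum proc_state { READY, RUNNING, BLOCKED };

/* Hypothetical, trimmed-down PCB. */
struct pcb {
    int pid;
    enum proc_state state;
    unsigned long pc, sp;     /* saved program counter and stack pointer */
    struct pcb *next;         /* queue pointer */
};

/* One state queue, e.g. "ready" or "wait on disk read". */
struct pcb_queue { struct pcb *head, *tail; };

/* Append p at the tail of q. */
void enqueue(struct pcb_queue *q, struct pcb *p)
{
    p->next = NULL;
    if (q->tail != NULL)
        q->tail->next = p;
    else
        q->head = p;
    q->tail = p;
}

/* Remove and return the PCB at the head of q, or NULL if q is empty. */
struct pcb *dequeue(struct pcb_queue *q)
{
    struct pcb *p = q->head;
    if (p == NULL)
        return NULL;
    q->head = p->next;
    if (q->head == NULL)
        q->tail = NULL;
    p->next = NULL;
    return p;
}

/* The awaited event occurred: move the first waiter to the ready queue. */
struct pcb *wakeup_first(struct pcb_queue *waitq, struct pcb_queue *readyq)
{
    struct pcb *p = dequeue(waitq);
    if (p != NULL) {
        p->state = READY;
        enqueue(readyq, p);
    }
    return p;
}
```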

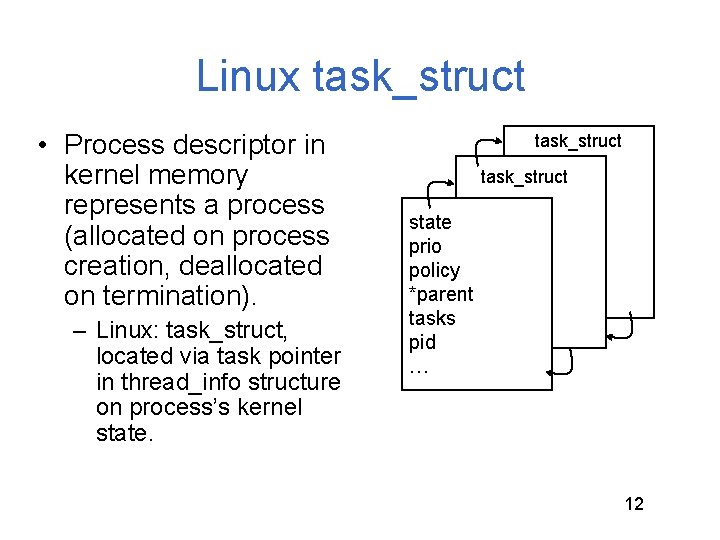

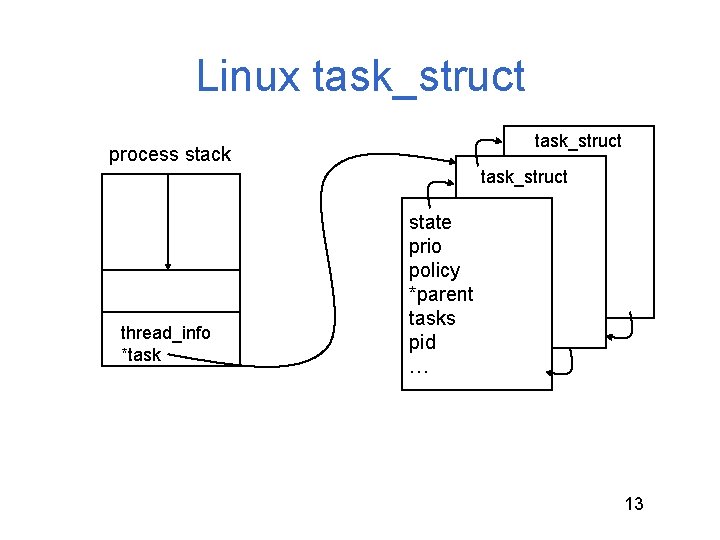

Linux task_struct • Process descriptor in kernel memory represents a process (allocated on process creation, deallocated on termination). – Linux: task_struct, located via the task pointer in the thread_info structure on the process’s kernel stack. – task_struct fields include: state, prio, policy, *parent, tasks, pid, …

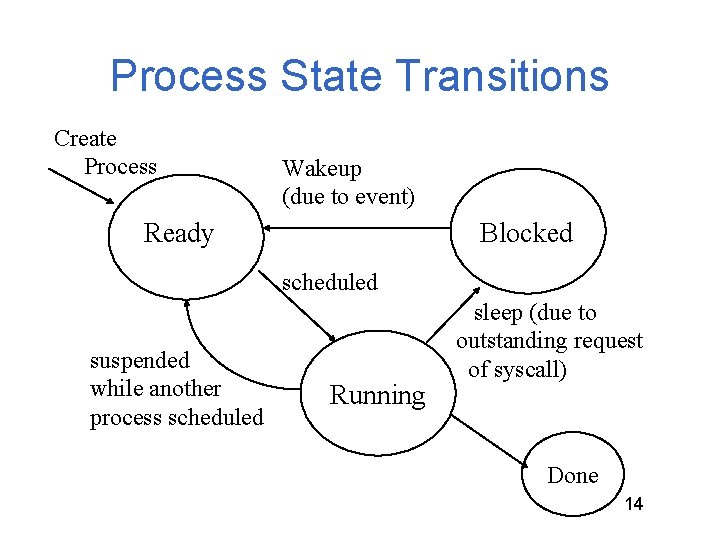

Process State Transitions • Create Process → Ready • Ready → Running: scheduled • Running → Ready: suspended while another process is scheduled • Running → Blocked: sleep (due to outstanding request of syscall) • Blocked → Ready: wakeup (due to event) • Running → Done

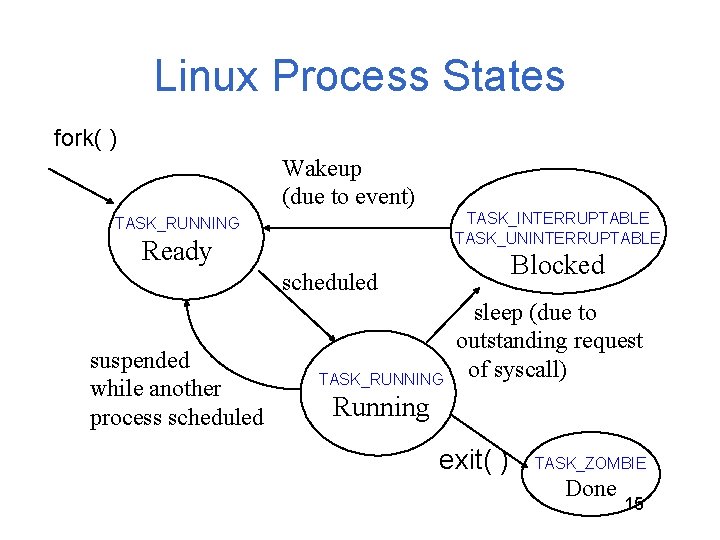

Linux Process States • fork( ) → Ready (TASK_RUNNING) • Ready → Running (also TASK_RUNNING): scheduled • Running → Ready: suspended while another process is scheduled • Running → Blocked (TASK_INTERRUPTIBLE or TASK_UNINTERRUPTIBLE): sleep (due to outstanding request of syscall) • Blocked → Ready: wakeup (due to event) • Running → Done (TASK_ZOMBIE): exit( )

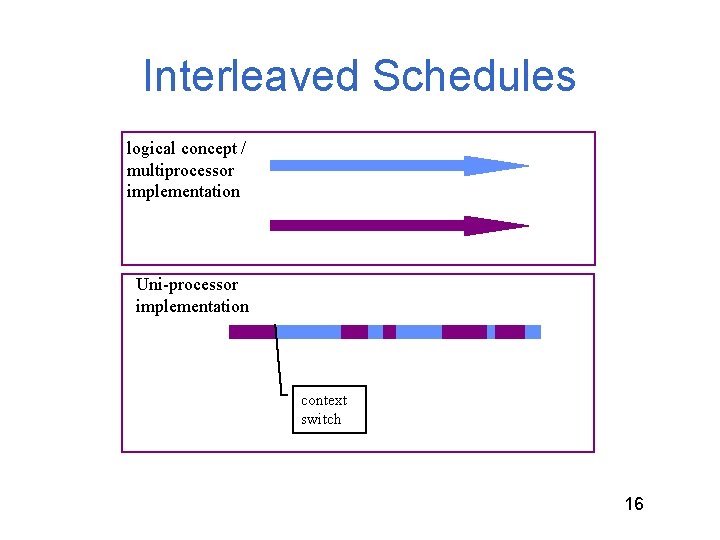

Interleaved Schedules logical concept / multiprocessor implementation Uni-processor implementation context switch 16

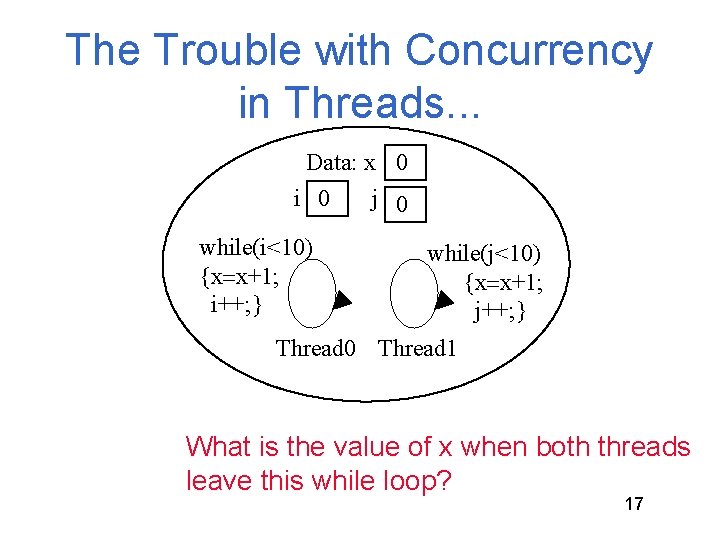

The Trouble with Concurrency in Threads... Data: x = 0, i = 0, j = 0. Thread 0: while (i<10) {x = x+1; i++; } Thread 1: while (j<10) {x = x+1; j++; } What is the value of x when both threads leave this while loop?

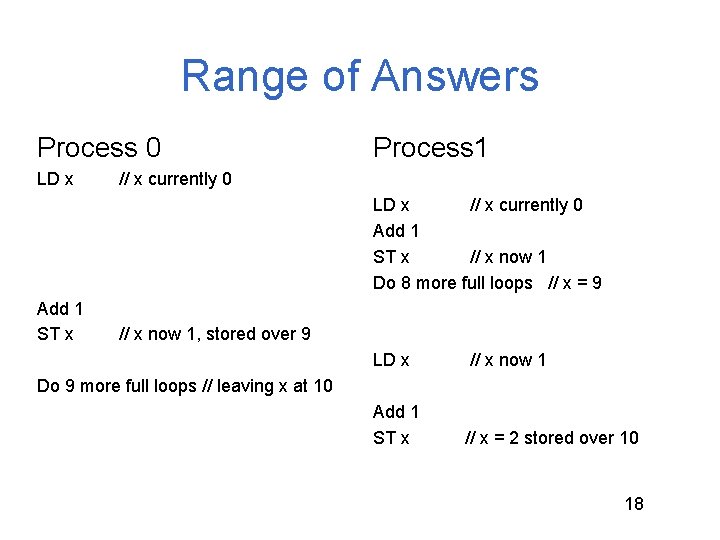

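Range of Answers
One interleaving, in execution order, where each x = x+1 is performed as LD x; Add 1; ST x:
• Process 0: LD x // x currently 0
• Process 1: LD x // x currently 0
• Process 1: Add 1; ST x // x now 1
• Process 1: do 8 more full loops // x = 9
• Process 0: Add 1; ST x // x now 1, stored over 9
• Process 1: LD x // x now 1
• Process 0: do 9 more full loops // leaving x at 10
• Process 1: Add 1; ST x // x = 2 stored over 10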
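The schedule above can be replayed deterministically in a single thread by giving each process a private register and performing LD, Add and ST as separate steps. This is only a sketch of that one interleaving; the function names are made up for illustration.

```c
/* Shared variable from the slide. */
static int x;

/* n complete, uninterrupted iterations of x = x + 1. */
static void full_loops(int n)
{
    for (int k = 0; k < n; k++) {
        int reg = x;   /* LD x  */
        reg += 1;      /* Add 1 */
        x = reg;       /* ST x  */
    }
}

/* Replays the interleaving step by step and returns the final x. */
int replay_worst_case(void)
{
    int r0, r1;        /* private registers of Process 0 and Process 1 */
    x = 0;
    r0 = x;            /* P0: LD x (sees 0) */
    r1 = x;            /* P1: LD x (sees 0) */
    r1 += 1; x = r1;   /* P1: Add 1; ST x, x is now 1 */
    full_loops(8);     /* P1: 8 more full loops, x is now 9 */
    r0 += 1; x = r0;   /* P0: Add 1; ST x, 1 stored over 9 */
    r1 = x;            /* P1: LD x (sees 1), start of its 10th iteration */
    full_loops(9);     /* P0: 9 more full loops, x is now 10 */
    r1 += 1; x = r1;   /* P1: Add 1; ST x, 2 stored over 10 */
    return x;
}

/* For comparison: both loops run one after the other, with no interleaving. */
int replay_serial(void)
{
    x = 0;
    full_loops(10);
    full_loops(10);
    return x;
}
```

Each process still completes exactly 10 iterations in the replay, yet the final value is 2 rather than the 20 produced by the serial order.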

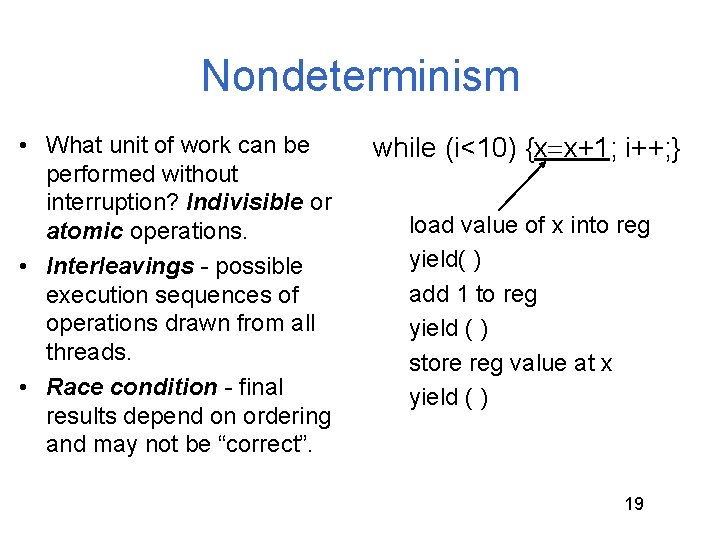

Nondeterminism • What unit of work can be performed without interruption? Indivisible or atomic operations. • Interleavings - possible execution sequences of operations drawn from all threads. • Race condition - final results depend on ordering and may not be “correct”. • Example: in while (i<10) {x = x+1; i++; } the statement x = x+1 expands to: load value of x into reg; yield( ); add 1 to reg; yield( ); store reg value at x; yield( )

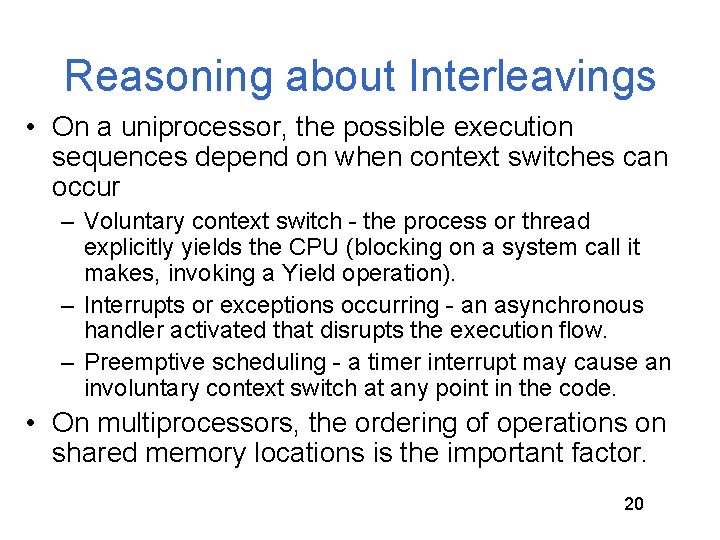

Reasoning about Interleavings • On a uniprocessor, the possible execution sequences depend on when context switches can occur – Voluntary context switch - the process or thread explicitly yields the CPU (blocking on a system call it makes, invoking a Yield operation). – Interrupts or exceptions occurring - an asynchronous handler activated that disrupts the execution flow. – Preemptive scheduling - a timer interrupt may cause an involuntary context switch at any point in the code. • On multiprocessors, the ordering of operations on shared memory locations is the important factor. 20

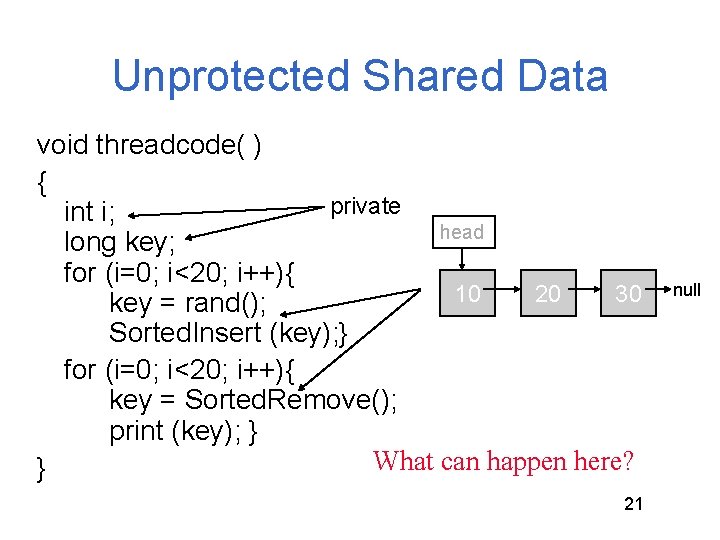

Unprotected Shared Data void threadcode( ) { private int i; long key; for (i=0; i<20; i++) { key = rand(); SortedInsert(key); } for (i=0; i<20; i++) { key = SortedRemove(); print(key); } } Shared sorted list: head → 10 → 20 → 30 → null. What can happen here?

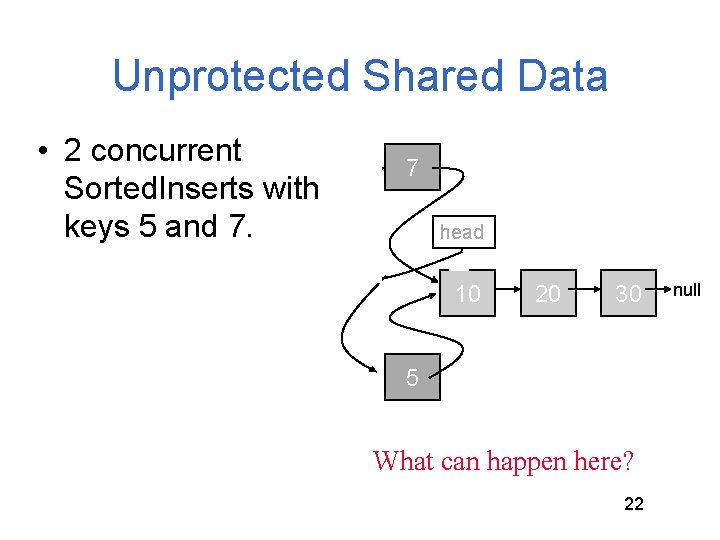

Unprotected Shared Data • 2 concurrent SortedInserts with keys 5 and 7 into the list head → 10 → 20 → 30 → null. What can happen here?

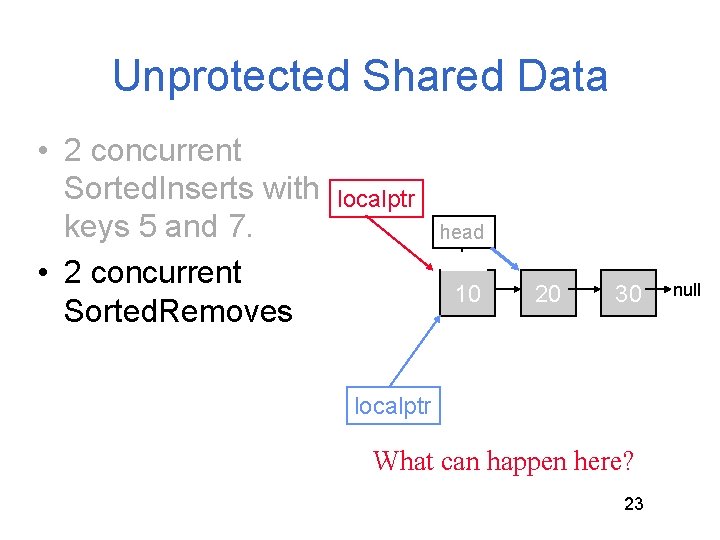

Unprotected Shared Data • 2 concurrent SortedInserts with keys 5 and 7. • 2 concurrent SortedRemoves, each with its own localptr into the list head → 10 → 20 → 30 → null. What can happen here?

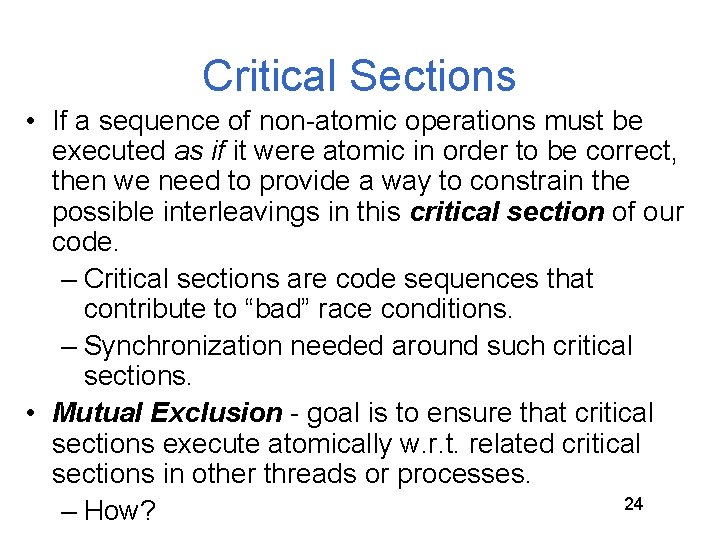

Critical Sections • If a sequence of non-atomic operations must be executed as if it were atomic in order to be correct, then we need to provide a way to constrain the possible interleavings in this critical section of our code. – Critical sections are code sequences that contribute to “bad” race conditions. – Synchronization is needed around such critical sections. • Mutual Exclusion - the goal is to ensure that critical sections execute atomically w.r.t. related critical sections in other threads or processes. – How?

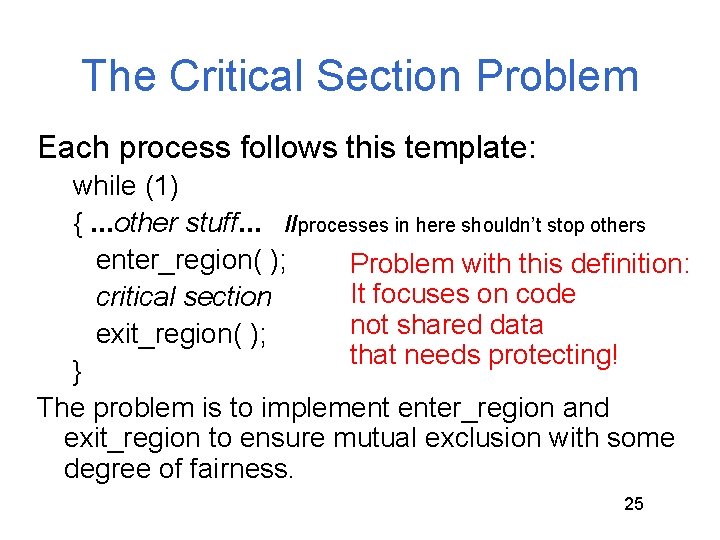

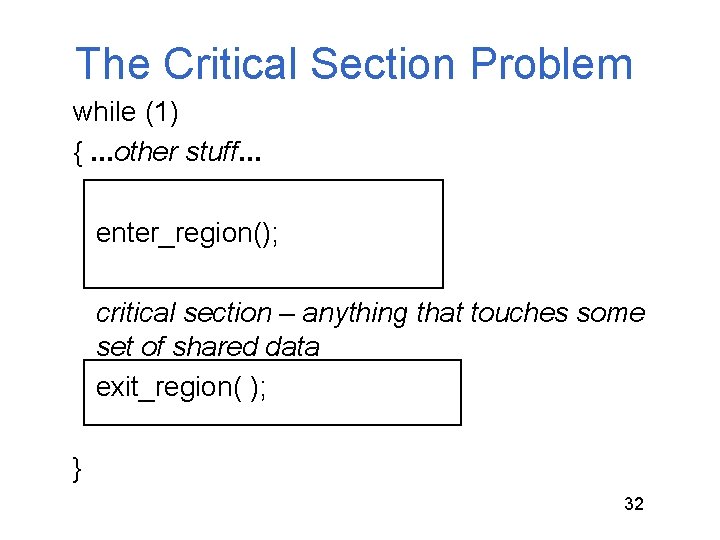

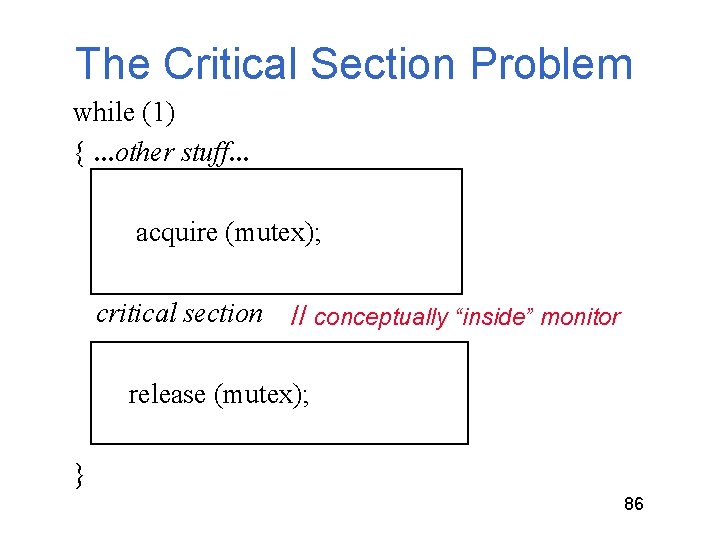

The Critical Section Problem Each process follows this template: while (1) { ...other stuff... // processes in here shouldn’t stop others enter_region( ); critical section exit_region( ); } The problem is to implement enter_region and exit_region to ensure mutual exclusion with some degree of fairness. Problem with this definition: it focuses on the code, not the shared data that needs protecting!

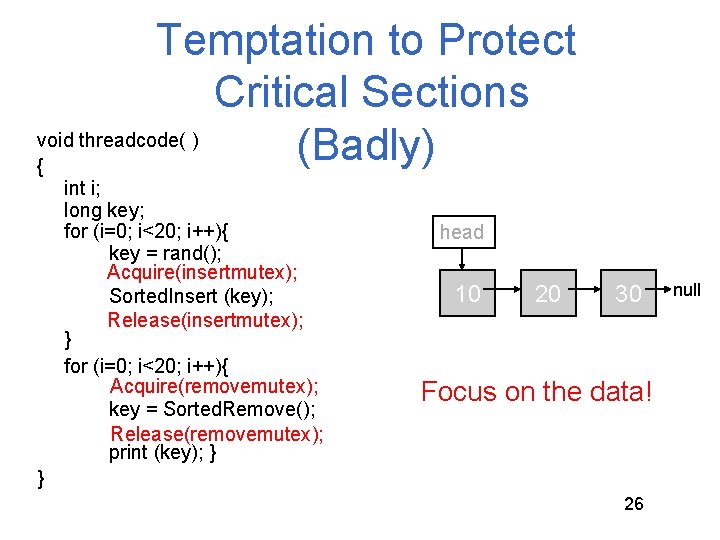

Temptation to Protect Critical Sections (Badly) void threadcode( ) { int i; long key; for (i=0; i<20; i++) { key = rand(); Acquire(insertmutex); SortedInsert(key); Release(insertmutex); } for (i=0; i<20; i++) { Acquire(removemutex); key = SortedRemove(); Release(removemutex); print(key); } } Shared sorted list: head → 10 → 20 → 30 → null. Focus on the data!

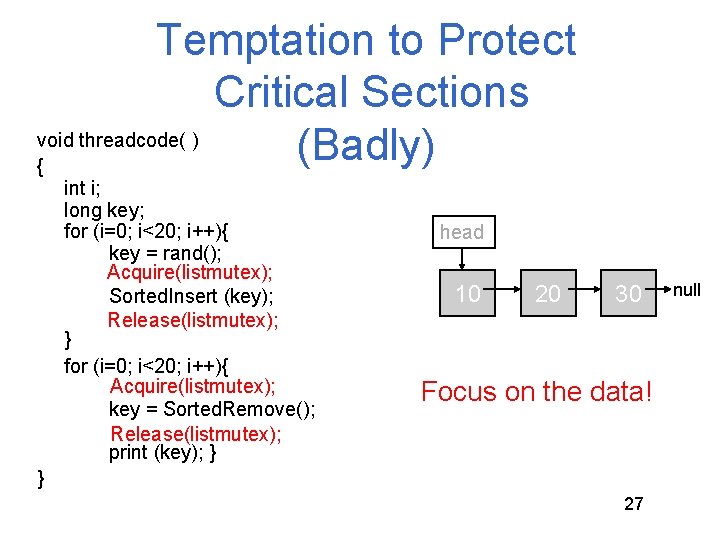

Temptation to Protect Critical Sections (Badly) void threadcode( ) { int i; long key; for (i=0; i<20; i++) { key = rand(); Acquire(listmutex); SortedInsert(key); Release(listmutex); } for (i=0; i<20; i++) { Acquire(listmutex); key = SortedRemove(); Release(listmutex); print(key); } } Shared sorted list: head → 10 → 20 → 30 → null. Focus on the data: a single listmutex guards the whole list.
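A minimal pthreads sketch of the "one lock per data structure" idea might look as follows. The list layout and the names sorted_insert, sorted_remove and demo_two_inserters are assumptions for illustration, not the course's SortedInsert implementation.

```c
#include <pthread.h>
#include <stdint.h>
#include <stdlib.h>

struct node { long key; struct node *next; };

static struct node *head = NULL;   /* shared sorted list */
/* One mutex for the data: every access to head or any node takes it. */
static pthread_mutex_t listmutex = PTHREAD_MUTEX_INITIALIZER;

void sorted_insert(long key)
{
    struct node *n = malloc(sizeof *n);
    if (n == NULL)
        abort();
    n->key = key;
    pthread_mutex_lock(&listmutex);
    struct node **pp = &head;
    while (*pp != NULL && (*pp)->key < key)   /* find insertion point */
        pp = &(*pp)->next;
    n->next = *pp;
    *pp = n;
    pthread_mutex_unlock(&listmutex);
}

/* Removes the smallest key into *key_out; returns 0 if the list is empty. */
int sorted_remove(long *key_out)
{
    pthread_mutex_lock(&listmutex);
    struct node *n = head;
    if (n != NULL)
        head = n->next;
    pthread_mutex_unlock(&listmutex);
    if (n == NULL)
        return 0;
    *key_out = n->key;
    free(n);
    return 1;
}

static void *inserter(void *arg)
{
    long base = (long)(intptr_t)arg;
    for (int i = 0; i < 20; i++)
        sorted_insert(base + 2 * i);   /* thread 0: evens, thread 1: odds */
    return NULL;
}

/* Two threads insert 20 keys each. Returns the number of keys removed in
 * nondecreasing order, or -1 if the list came out unsorted. */
int demo_two_inserters(void)
{
    pthread_t t0, t1;
    pthread_create(&t0, NULL, inserter, (void *)(intptr_t)0);
    pthread_create(&t1, NULL, inserter, (void *)(intptr_t)1);
    pthread_join(t0, NULL);
    pthread_join(t1, NULL);
    long prev = -1, key;
    int count = 0;
    while (sorted_remove(&key)) {
        if (key < prev)
            return -1;
        prev = key;
        count++;
    }
    return count;
}
```

Because inserts and removes share listmutex, an insert can never observe the list while a remove is halfway through relinking it, which separate insert and remove mutexes would not guarantee.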

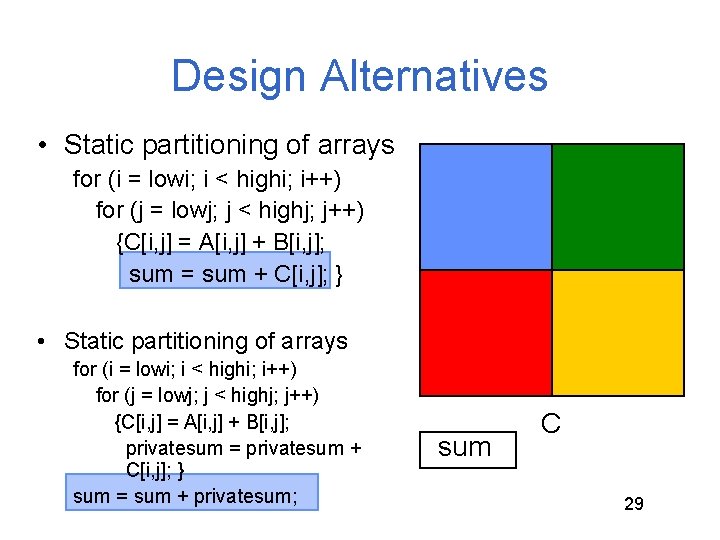

Another Example Problem: Given arrays C[0: x, 0: y], A [0: x, 0: y], and B [0: x, 0: y]. Use n threads to update each element of C to the sum of A and B and then the last thread returns the average value of all C elements. 28

Design Alternatives • Static partitioning of arrays, updating the shared sum on every element: for (i = lowi; i < highi; i++) for (j = lowj; j < highj; j++) {C[i, j] = A[i, j] + B[i, j]; sum = sum + C[i, j]; } • Static partitioning of arrays, accumulating a private sum and updating the shared sum once per thread: for (i = lowi; i < highi; i++) for (j = lowj; j < highj; j++) {C[i, j] = A[i, j] + B[i, j]; privatesum = privatesum + C[i, j]; } sum = sum + privatesum; Shared data: sum, C.
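The private-sum alternative could be sketched with pthreads as below. The array sizes, the row-wise partitioning and the names worker and parallel_add_and_average are assumptions. For simplicity the thread that joins the workers computes the average, rather than the last worker to finish.

```c
#include <pthread.h>

#define ROWS 8
#define COLS 8
#define NTHREADS 4

static double A[ROWS][COLS], B[ROWS][COLS], C[ROWS][COLS];
static double sum;                                   /* shared */
static pthread_mutex_t summutex = PTHREAD_MUTEX_INITIALIZER;

struct slice { int lowi, highi; };                   /* rows [lowi, highi) */

static void *worker(void *arg)
{
    const struct slice *s = arg;
    double privatesum = 0.0;                         /* no lock needed */
    for (int i = s->lowi; i < s->highi; i++)
        for (int j = 0; j < COLS; j++) {
            C[i][j] = A[i][j] + B[i][j];             /* disjoint rows of C */
            privatesum += C[i][j];
        }
    pthread_mutex_lock(&summutex);                   /* one short critical */
    sum += privatesum;                               /* section per thread */
    pthread_mutex_unlock(&summutex);
    return NULL;
}

/* Fills A[i][j] = i and B[i][j] = j, computes C = A + B with NTHREADS
 * threads, and returns the average of all C elements. */
double parallel_add_and_average(void)
{
    pthread_t tid[NTHREADS];
    struct slice s[NTHREADS];
    for (int i = 0; i < ROWS; i++)
        for (int j = 0; j < COLS; j++) {
            A[i][j] = i;
            B[i][j] = j;
        }
    sum = 0.0;
    for (int t = 0; t < NTHREADS; t++) {
        s[t].lowi = t * ROWS / NTHREADS;
        s[t].highi = (t + 1) * ROWS / NTHREADS;
        pthread_create(&tid[t], NULL, worker, &s[t]);
    }
    for (int t = 0; t < NTHREADS; t++)
        pthread_join(tid[t], NULL);
    return sum / (ROWS * COLS);
}
```

With the first alternative every element update would need the lock; here each thread takes it once, which trades a little extra local state for far less contention.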

Design Alternatives • Dynamic partitioning of arrays: while (elements_remain(i, j)) {C[i, j] = A[i, j] + B[i, j]; sum = sum + C[i, j]; } Shared data: sum, C.

Implementation Options for Mutual Exclusion • Disable Interrupts • Busywaiting solutions - spinlocks – execute a tight loop if critical section is busy – benefits from specialized atomic (read-mod-write) instructions • Blocking synchronization – sleep (enqueued on wait queue) while C. S. is busy Synchronization primitives (abstractions, such as locks) which are provided by a system may be implemented with some combination of these techniques. 31

The Critical Section Problem while (1) {. . . other stuff. . . enter_region(); critical section – anything that touches some set of shared data exit_region( ); } 32

Critical Data • Goal in solving the critical section problem is to build synchronization so that the sequence of instructions that can cause a race condition are executed AS IF they were indivisible – “Other stuff” code that does not touch the critical data associated with a critical section can be interleaved with the critical section code. – Code from a critical section involving data x can be interleaved with code from a critical section associated with data y. 33

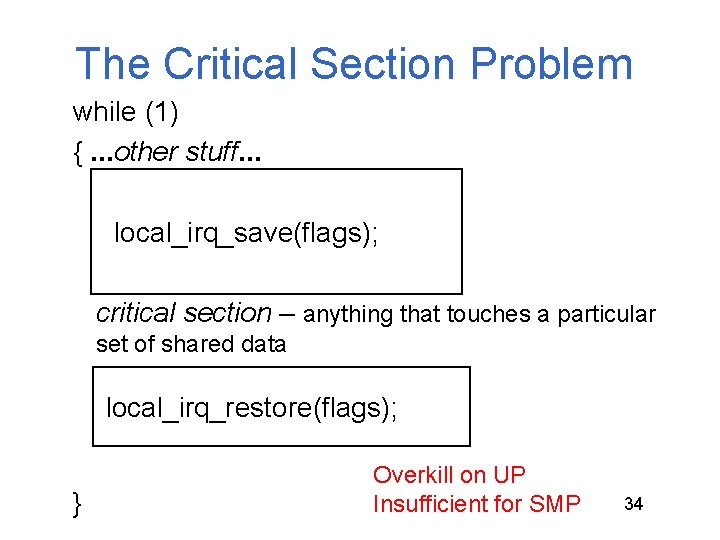

The Critical Section Problem while (1) { ...other stuff... local_irq_save(flags); critical section – anything that touches a particular set of shared data local_irq_restore(flags); } Disabling interrupts is overkill on a uniprocessor (UP) and insufficient on a multiprocessor (SMP).

Proposed Algorithm for 2 Process Mutual Exclusion Boolean flag[2]; proc (int i) { while (TRUE){ compute; flag[i] = TRUE ; while(flag[(i+1) mod 2]) ; critical section; flag[i] = FALSE; } } flag[0] = flag[1]= FALSE; fork (proc, 1, 0); fork (proc, 1, 1); Is it correct? Assume they go lockstep. Both set their own flag to TRUE. Both busywait forever on the other’s flag -> deadlock. 35

Proposed Algorithm for 2 Process Mutual Exclusion • enter_region: needin [me] = true; turn = you; while (needin [you] && turn == you) {no_op}; • exit_region: needin [me] = false; Is it correct? 36

Interleaving of Execution of 2 Threads (blue and green) enter_region: needin [me] = true; turn = you; while (needin [you] && turn == you) {no_op}; Critical Section exit_region: needin [me] = false; 37

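Interleaving of blue and green, in execution order:
• blue: needin [blue] = true;
• green: needin [green] = true;
• blue: turn = green;
• green: turn = blue;
• blue: while (needin [green] && turn == green) falls through (turn is blue); blue enters the Critical Section
• green: while (needin [blue] && turn == blue) {no_op}; green spins
• blue: needin [blue] = false;
• green: while (needin [blue] && turn == blue) falls through; green enters the Critical Section
• green: needin [green] = false;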

Peterson’s Algorithm for 2 Process Mutual Exclusion • enter_region: needin [me] = true; turn = you; while (needin [you] && turn == you) {no_op}; • exit_region: needin [me] = false; What about more than 2 processes? 39
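On real hardware the loads and stores in enter_region must not be reordered, so a C sketch needs sequentially consistent atomics. The following assumes C11 `<stdatomic.h>` and pthreads. The counter workload and the names worker and run_peterson_demo are hypothetical, and sched_yield stands in for the slide's no_op.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

static atomic_bool needin[2];   /* needin[i]: thread i wants to enter */
static atomic_int turn;         /* the thread that must wait on a tie */
static long counter;            /* shared data guarded by the algorithm */
static long iterations;         /* set before the threads start */

static void enter_region(int me)
{
    int you = 1 - me;
    atomic_store(&needin[me], true);
    atomic_store(&turn, you);
    while (atomic_load(&needin[you]) && atomic_load(&turn) == you)
        sched_yield();          /* busy-wait; no_op on the slide */
}

static void exit_region(int me)
{
    atomic_store(&needin[me], false);
}

static void *worker(void *arg)
{
    int me = (int)(intptr_t)arg;
    for (long k = 0; k < iterations; k++) {
        enter_region(me);
        counter = counter + 1;  /* critical section */
        exit_region(me);
    }
    return NULL;
}

/* Runs two threads that each add 1 to counter n times; returns counter. */
long run_peterson_demo(long n)
{
    pthread_t t[2];
    counter = 0;
    iterations = n;
    atomic_store(&needin[0], false);
    atomic_store(&needin[1], false);
    for (int i = 0; i < 2; i++)
        pthread_create(&t[i], NULL, worker, (void *)(intptr_t)i);
    for (int i = 0; i < 2; i++)
        pthread_join(t[i], NULL);
    return counter;
}
```

The default atomic_store and atomic_load are sequentially consistent, which is what the textbook correctness argument assumes. With plain variables or relaxed atomics the compiler or CPU may reorder the accesses and break mutual exclusion.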

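Greedy Version (turn = me), in execution order:
• blue: needin [blue] = true;
• green: needin [green] = true;
• blue: turn = blue;
• blue: while (needin [green] && turn == green) falls through; blue enters the Critical Section
• green: turn = green;
• green: while (needin [blue] && turn == blue) falls through; green enters the Critical Section
• Oooops! Both are in the Critical Section.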

Can we extend the 2-process algorithm to work with n processes? • Idea: Tournament. Pairs of processes run the 2-process protocol (needin [me] = true; turn = you; ...), and each winner advances to the next round until one reaches the CS. • Details: Bookkeeping (left to the reader)

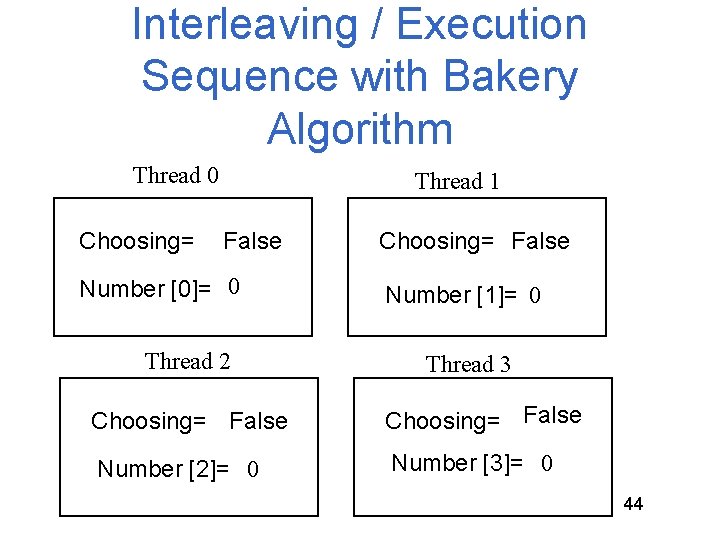

Lamport’s Bakery Algorithm • enter_region: choosing[me] = true; number[me] = max(number[0: n-1]) + 1; choosing[me] = false; for (j=0; j<=n-1; j++) { while (choosing[j] != 0) {skip} while ((number[j] != 0) and ((number[j] < number[me]) or ((number[j] == number[me]) and (j < me)))) {skip} } • exit_region: number[me] = 0;
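A hedged C11 rendering of the bakery algorithm, again relying on sequentially consistent atomics, might look like this. The thread count NTHREADS, the counter workload and the name run_bakery_demo are assumptions, and ticket numbers are left unbounded as on the slide.

```c
#include <pthread.h>
#include <sched.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

#define NTHREADS 4

static atomic_bool choosing[NTHREADS];  /* thread is picking a number */
static atomic_int number[NTHREADS];     /* 0 means "not interested" */
static long counter;                    /* shared data being protected */
static long iterations;

static void enter_region(int me)
{
    atomic_store(&choosing[me], true);
    int max = 0;
    for (int j = 0; j < NTHREADS; j++) {
        int nj = atomic_load(&number[j]);
        if (nj > max)
            max = nj;
    }
    int mine = max + 1;                 /* take the next ticket */
    atomic_store(&number[me], mine);
    atomic_store(&choosing[me], false);

    for (int j = 0; j < NTHREADS; j++) {
        while (atomic_load(&choosing[j]))
            sched_yield();              /* wait until j has its ticket */
        for (;;) {
            int nj = atomic_load(&number[j]);
            /* j goes first if it holds a smaller ticket, or the same
             * ticket and a smaller thread index. */
            bool j_first = nj != 0 && (nj < mine || (nj == mine && j < me));
            if (!j_first)
                break;
            sched_yield();
        }
    }
}

static void exit_region(int me)
{
    atomic_store(&number[me], 0);
}

static void *worker(void *arg)
{
    int me = (int)(intptr_t)arg;
    for (long k = 0; k < iterations; k++) {
        enter_region(me);
        counter = counter + 1;          /* critical section */
        exit_region(me);
    }
    return NULL;
}

/* NTHREADS threads each add 1 to counter n times; returns counter. */
long run_bakery_demo(long n)
{
    pthread_t t[NTHREADS];
    counter = 0;
    iterations = n;
    for (int i = 0; i < NTHREADS; i++)
        pthread_create(&t[i], NULL, worker, (void *)(intptr_t)i);
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(t[i], NULL);
    return counter;
}
```

Two threads can draw the same ticket because max is computed without a lock; the tie-break on thread index is what keeps the ordering total.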

Interleaving / Execution Sequence with Bakery Algorithm

Successive snapshots of the shared state while the four threads run the enter_region wait loop:

| Snapshot | choosing[0] | number[0] | choosing[1] | number[1] | choosing[2] | number[2] | choosing[3] | number[3] | Note |
|---|---|---|---|---|---|---|---|---|---|
| 1 | False | 0 | False | 0 | False | 0 | False | 0 | Initial state |
| 2 | True | 0 | True | 1 | False | 0 | True | 1 | Threads 1 and 3 both pick 1 |
| 3 | True | 0 | False | 1 | False | 0 | False | 1 | |
| 4 | False | 2 | False | 1 | False | 0 | False | 1 | |
| 5 | False | 2 | False | 1 | True | 3 | False | 1 | |
| 6 | False | 2 | False | 1 | True | 3 | False | 1 | Thread 3 stuck |
| 7 | False | 2 | False | 0 | True | 3 | False | 1 | |
| 8 | False | 2 | False | 0 | False | 3 | False | 1 | |

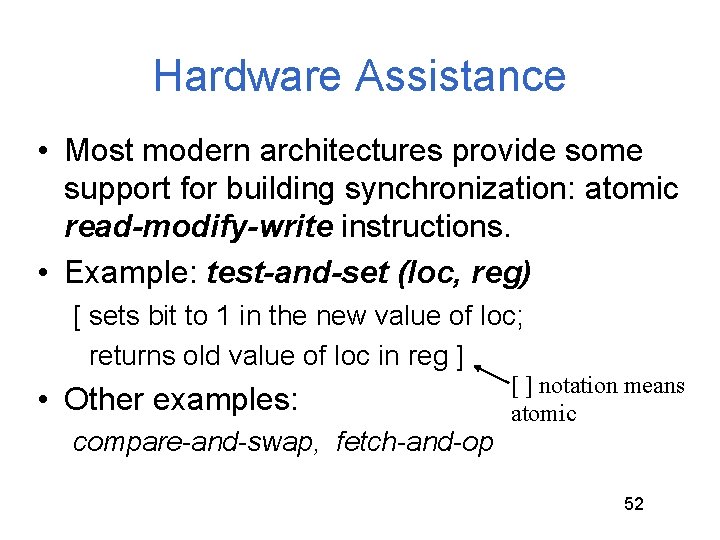

Hardware Assistance • Most modern architectures provide some support for building synchronization: atomic read-modify-write instructions. • Example: test-and-set (loc, reg) [ sets bit to 1 in the new value of loc; returns old value of loc in reg ] • Other examples: compare-and-swap, fetch-and-op [ ] notation means atomic 52

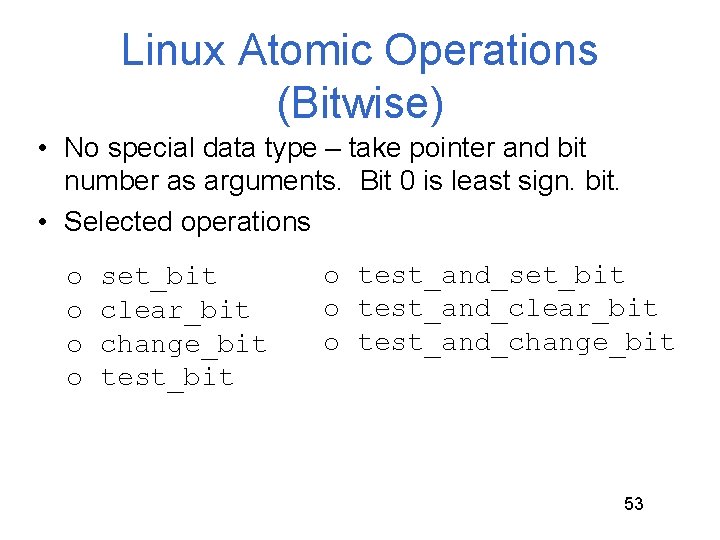

Linux Atomic Operations (Bitwise) • No special data type – take pointer and bit number as arguments. Bit 0 is the least significant bit. • Selected operations – set_bit – clear_bit – change_bit – test_bit – test_and_set_bit – test_and_clear_bit – test_and_change_bit

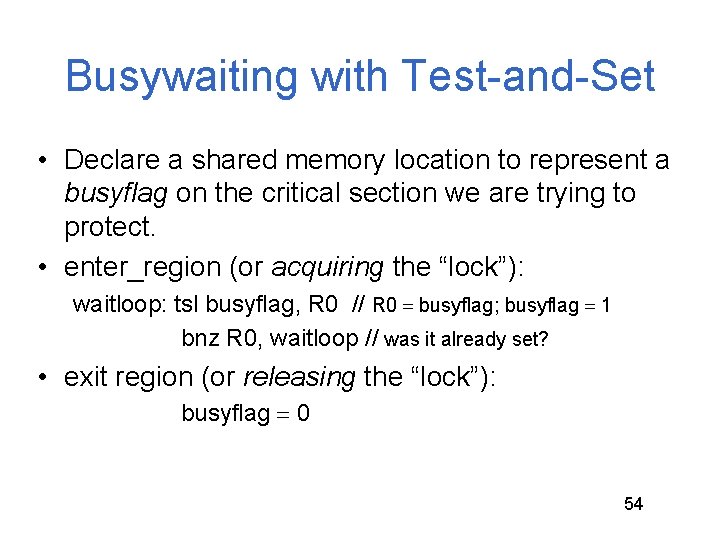

Busywaiting with Test-and-Set • Declare a shared memory location to represent a busyflag on the critical section we are trying to protect. • enter_region (or acquiring the “lock”): waitloop: tsl busyflag, R0 // R0 ← busyflag; busyflag ← 1 bnz R0, waitloop // was it already set? • exit_region (or releasing the “lock”): busyflag ← 0
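In portable C the same busy-wait lock can be sketched with C11's atomic_flag, whose atomic_flag_test_and_set plays the role of the tsl instruction. The counter workload and the name run_spinlock_demo are assumptions for illustration.

```c
#include <pthread.h>
#include <stdatomic.h>

static atomic_flag busyflag = ATOMIC_FLAG_INIT;   /* clear means "free" */
static long counter;                              /* shared data */
static long iterations;

static void enter_region(void)
{
    /* Atomically set the flag and get its old value; keep spinning while
     * the old value shows the flag was already set by someone else. */
    while (atomic_flag_test_and_set(&busyflag))
        ;                                         /* busy-wait */
}

static void exit_region(void)
{
    atomic_flag_clear(&busyflag);                 /* busyflag <- 0 */
}

static void *worker(void *arg)
{
    (void)arg;
    for (long k = 0; k < iterations; k++) {
        enter_region();
        counter = counter + 1;                    /* critical section */
        exit_region();
    }
    return NULL;
}

/* Two threads each add 1 to counter n times; returns the final counter. */
long run_spinlock_demo(long n)
{
    pthread_t t0, t1;
    counter = 0;
    iterations = n;
    pthread_create(&t0, NULL, worker, NULL);
    pthread_create(&t1, NULL, worker, NULL);
    pthread_join(t0, NULL);
    pthread_join(t1, NULL);
    return counter;
}
```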

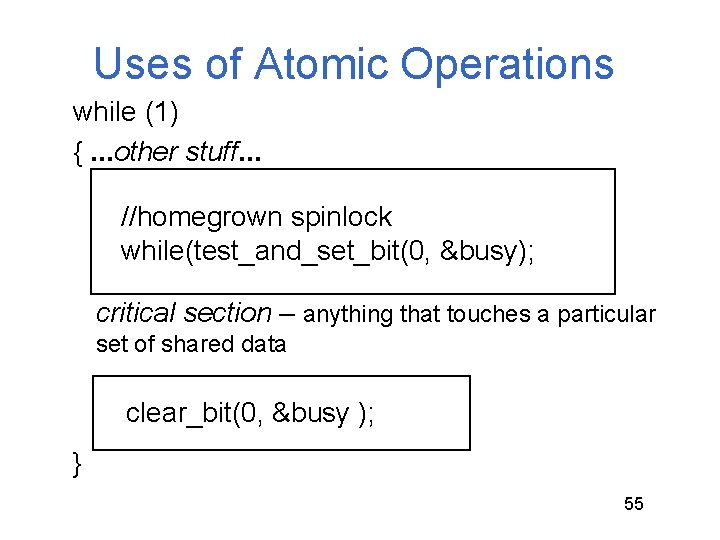

Uses of Atomic Operations while (1) { ...other stuff... // homegrown spinlock while (test_and_set_bit(0, &busy)) ; critical section – anything that touches a particular set of shared data clear_bit(0, &busy); }

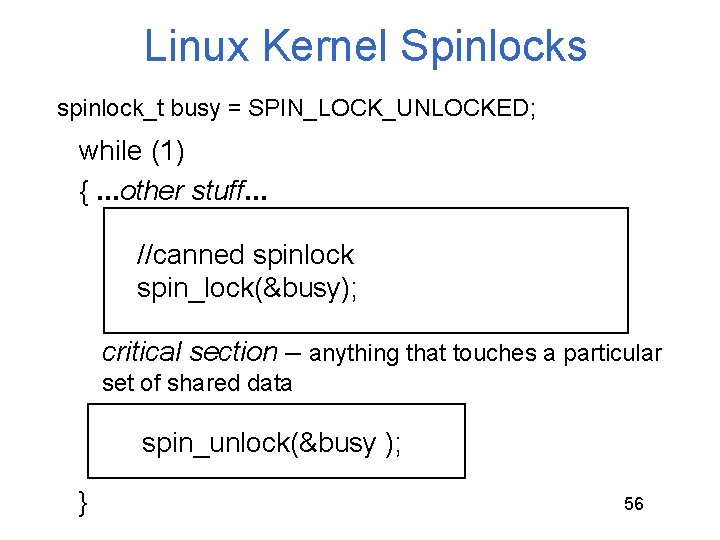

Linux Kernel Spinlocks spinlock_t busy = SPIN_LOCK_UNLOCKED; while (1) {. . . other stuff. . . //canned spinlock spin_lock(&busy); critical section – anything that touches a particular set of shared data spin_unlock(&busy ); } 56

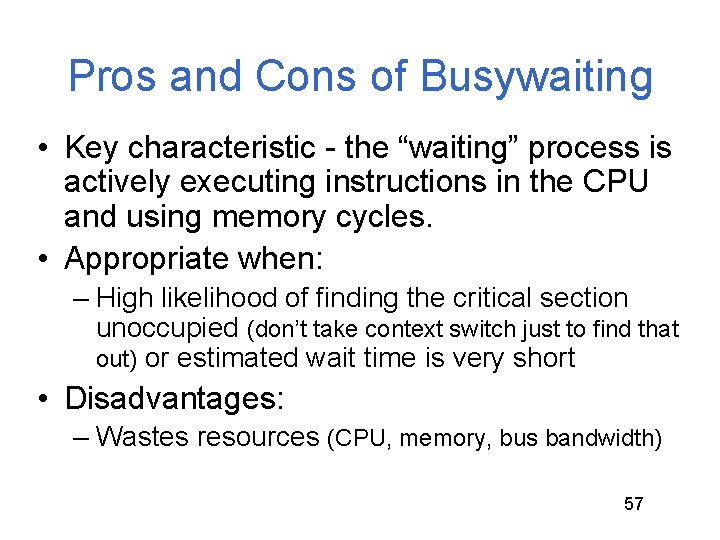

Pros and Cons of Busywaiting • Key characteristic - the “waiting” process is actively executing instructions in the CPU and using memory cycles. • Appropriate when: – High likelihood of finding the critical section unoccupied (don’t take context switch just to find that out) or estimated wait time is very short • Disadvantages: – Wastes resources (CPU, memory, bus bandwidth) 57

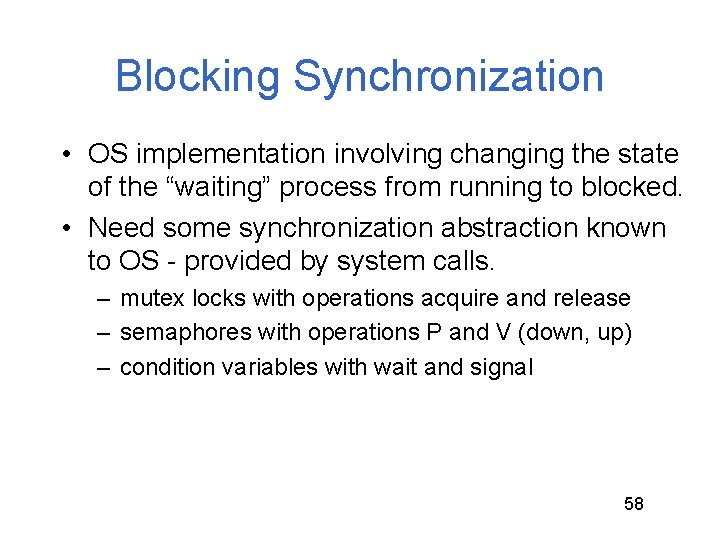

Blocking Synchronization • OS implementation involving changing the state of the “waiting” process from running to blocked. • Need some synchronization abstraction known to OS - provided by system calls. – mutex locks with operations acquire and release – semaphores with operations P and V (down, up) – condition variables with wait and signal 58

Template for Implementing Blocking Synchronization • Associated with the lock is a memory location (busy) and a queue for waiting threads/processes. • Acquire syscall: while (busy) {enqueue caller on lock’s queue} /*upon waking to nonbusy lock*/ busy = true; • Release syscall: busy = false; /* wakeup */ move any waiting threads to Ready queue 59

Pros and Cons of Blocking • Waiting processes/threads don’t consume CPU cycles • Appropriate: when the cost of a system call is justified by expected waiting time – High likelihood of contention for lock – Long critical sections • Disadvantage: OS involvement means overhead

Synchronization Primitives (Abstractions*) *implementable by busywaiting or blocking 63

Semaphores • Well-known synchronization abstraction • Defined as a non-negative integer with two atomic operations P(s) - [wait until s > 0; s--] V(s) - [s++] • The atomicity and the waiting can be implemented by either busywaiting or blocking solutions. 66

Semaphore Usage • Binary semaphores can provide mutual exclusion (solution of critical section problem) • Counting semaphores can represent a resource with multiple instances (e. g. solving producer/consumer problem) • Signaling events (persistent events that stay relevant even if nobody listening right now) 67

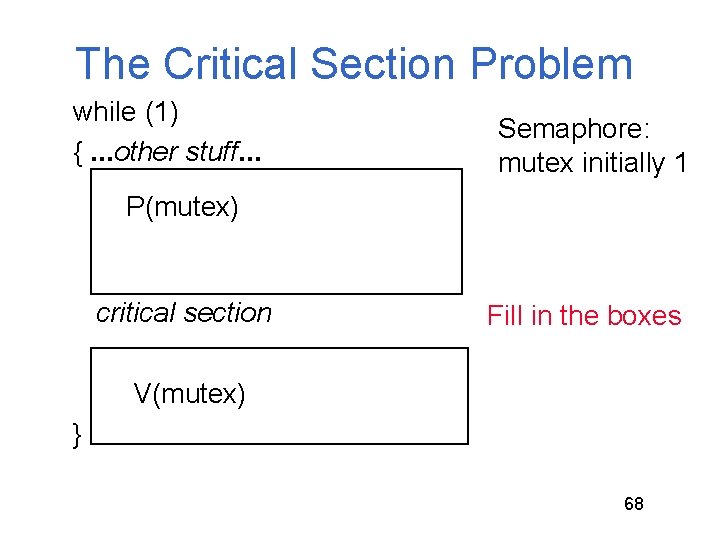

The Critical Section Problem Semaphore: mutex initially 1 while (1) { ...other stuff... P(mutex); critical section V(mutex); }

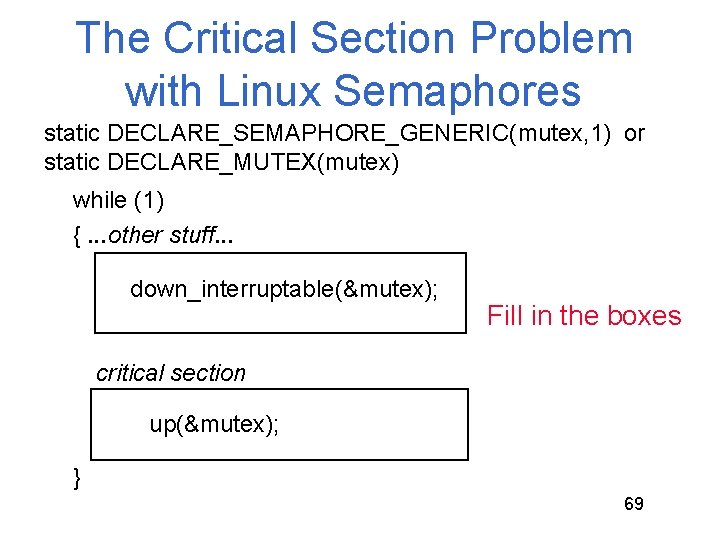

The Critical Section Problem with Linux Semaphores static DECLARE_SEMAPHORE_GENERIC(mutex, 1) or static DECLARE_MUTEX(mutex) while (1) { ...other stuff... down_interruptible(&mutex); critical section up(&mutex); }

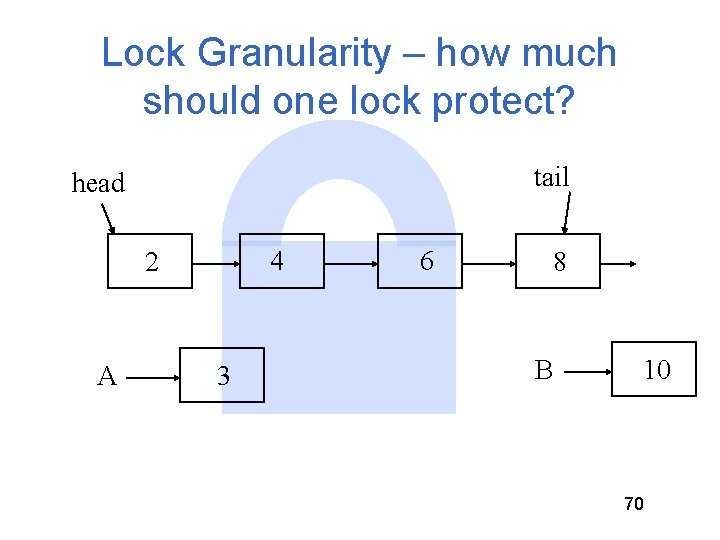

Lock Granularity – how much should one lock protect? [Figure: linked list with head and tail pointers; nodes 2, 3, 4, 6, 8, 10; regions A and B]

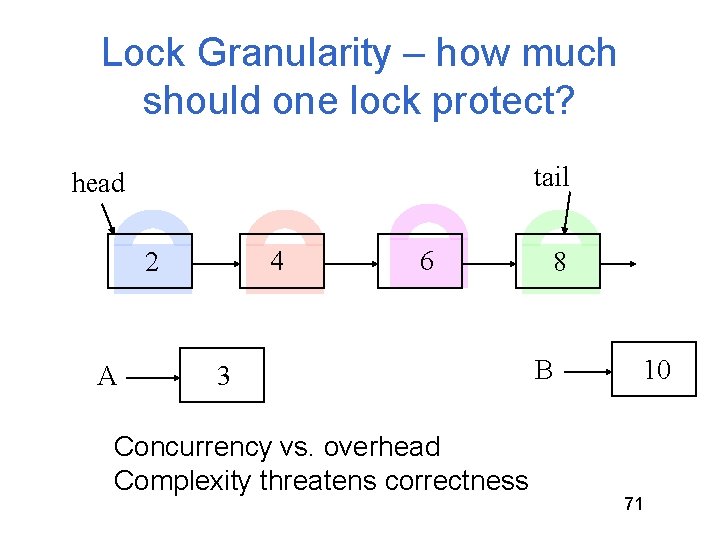

Lock Granularity – how much should one lock protect? • Concurrency vs. overhead • Complexity threatens correctness [Figure: linked list with head and tail pointers; nodes 2, 3, 4, 6, 8, 10; regions A and B]

Classic Synchronization Problems There are a number of “classic” problems that each represent a class of synchronization situations • Critical Section problem • Producer/Consumer problem • Reader/Writer problem • 5 Dining Philosophers Why? Once you know the “generic” solutions, you can recognize other special cases in which to apply them (e.g., this is just a version of the reader/writer problem)

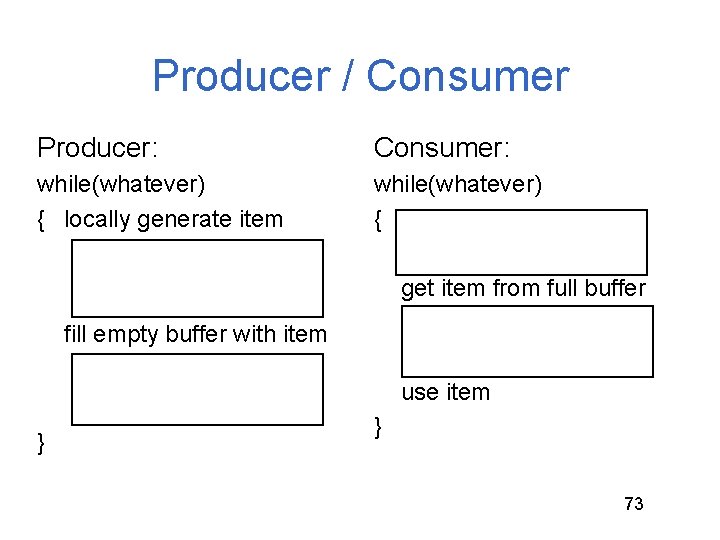

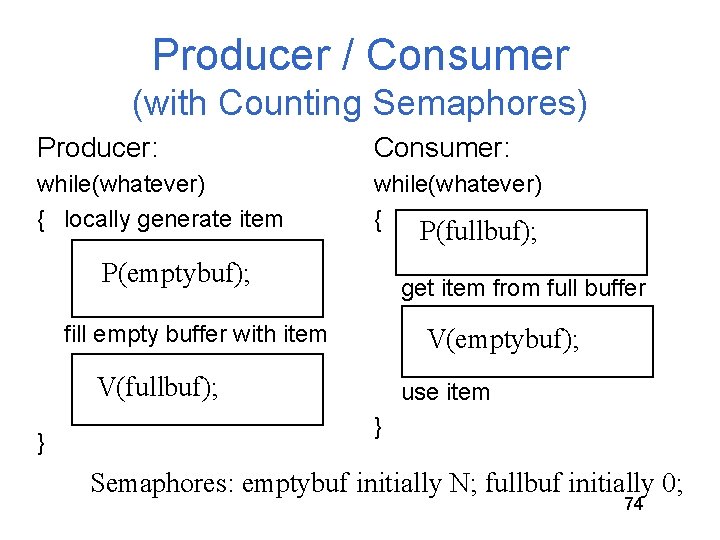

Producer / Consumer Producer: while (whatever) { locally generate item; fill empty buffer with item; } Consumer: while (whatever) { get item from full buffer; use item; }

Producer / Consumer (with Counting Semaphores) Producer: while (whatever) { locally generate item; P(emptybuf); fill empty buffer with item; V(fullbuf); } Consumer: while (whatever) { P(fullbuf); get item from full buffer; V(emptybuf); use item; } Semaphores: emptybuf initially N; fullbuf initially 0;
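With POSIX unnamed semaphores, the counting-semaphore solution could be sketched as below, using sem_wait for P and sem_post for V. The buffer size, the item count and the name run_producer_consumer are assumptions. The sketch has exactly one producer and one consumer, so the buffer slots themselves need no extra mutex.

```c
#include <pthread.h>
#include <semaphore.h>

#define NBUF 4        /* N buffers */
#define NITEMS 100    /* items to transfer */

static int buffer[NBUF];
static sem_t emptybuf;    /* counts empty buffers, initially NBUF */
static sem_t fullbuf;     /* counts full buffers, initially 0 */
static long consumed_sum;

static void *producer(void *arg)
{
    (void)arg;
    for (int item = 1; item <= NITEMS; item++) {   /* generate item */
        sem_wait(&emptybuf);                       /* P(emptybuf) */
        buffer[(item - 1) % NBUF] = item;          /* fill empty buffer */
        sem_post(&fullbuf);                        /* V(fullbuf) */
    }
    return NULL;
}

static void *consumer(void *arg)
{
    (void)arg;
    for (int k = 0; k < NITEMS; k++) {
        sem_wait(&fullbuf);                        /* P(fullbuf) */
        int item = buffer[k % NBUF];               /* get item */
        sem_post(&emptybuf);                       /* V(emptybuf) */
        consumed_sum += item;                      /* use item */
    }
    return NULL;
}

/* Transfers the items 1..NITEMS and returns the sum the consumer saw. */
long run_producer_consumer(void)
{
    pthread_t p, c;
    consumed_sum = 0;
    sem_init(&emptybuf, 0, NBUF);
    sem_init(&fullbuf, 0, 0);
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    sem_destroy(&emptybuf);
    sem_destroy(&fullbuf);
    return consumed_sum;
}
```

With several producers or consumers, the slot indices would become shared data and would need their own mutual exclusion.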

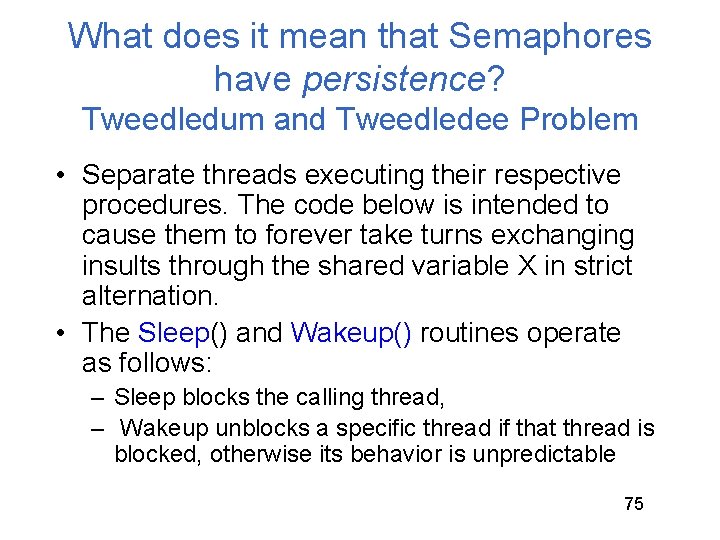

What does it mean that Semaphores have persistence? Tweedledum and Tweedledee Problem • Separate threads executing their respective procedures. The code below is intended to cause them to forever take turns exchanging insults through the shared variable X in strict alternation. • The Sleep() and Wakeup() routines operate as follows: – Sleep blocks the calling thread, – Wakeup unblocks a specific thread if that thread is blocked, otherwise its behavior is unpredictable 75

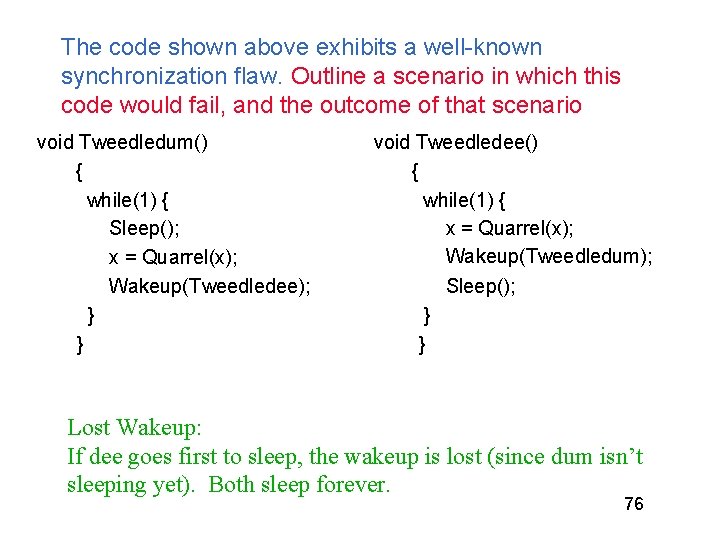

The code shown above exhibits a well-known synchronization flaw. Outline a scenario in which this code would fail, and the outcome of that scenario void Tweedledum() { while(1) { Sleep(); x = Quarrel(x); Wakeup(Tweedledee); } } void Tweedledee() { while(1) { x = Quarrel(x); Wakeup(Tweedledum); Sleep(); } } Lost Wakeup: If dee goes first to sleep, the wakeup is lost (since dum isn’t sleeping yet). Both sleep forever. 76

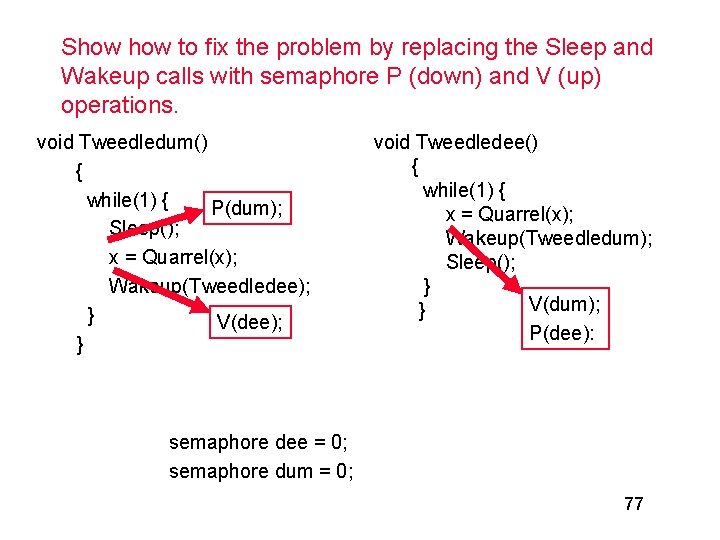

Show how to fix the problem by replacing the Sleep and Wakeup calls with semaphore P (down) and V (up) operations. semaphore dee = 0; semaphore dum = 0; void Tweedledum() { while(1) { P(dum); x = Quarrel(x); V(dee); } } void Tweedledee() { while(1) { x = Quarrel(x); V(dum); P(dee); } }

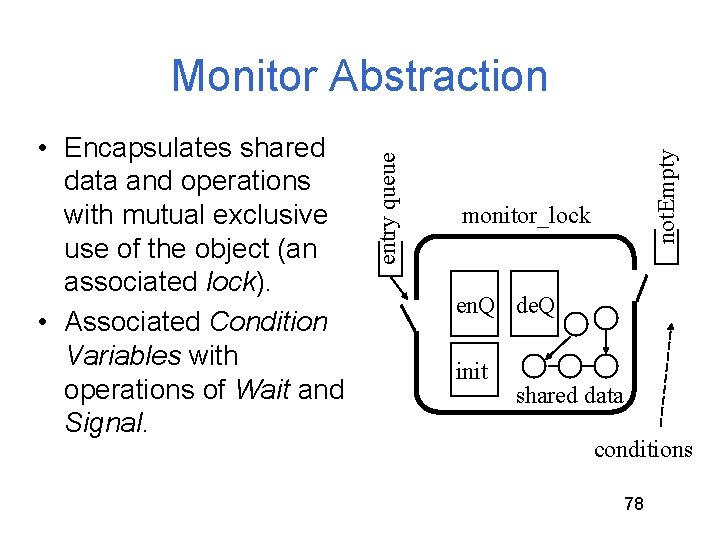

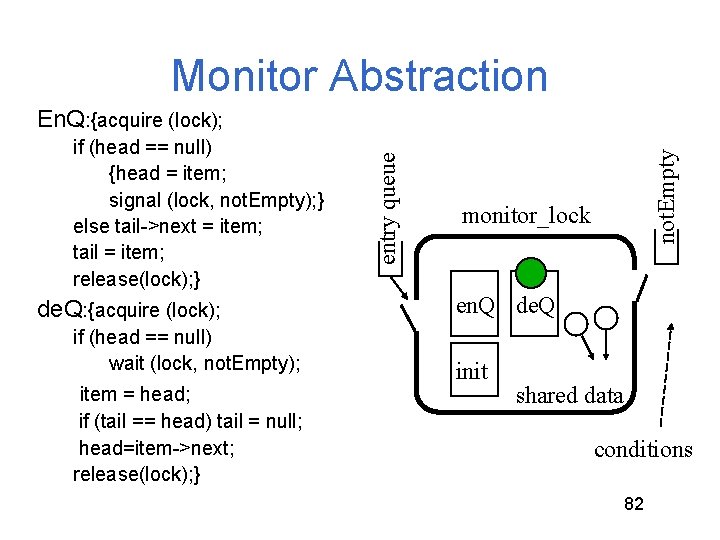

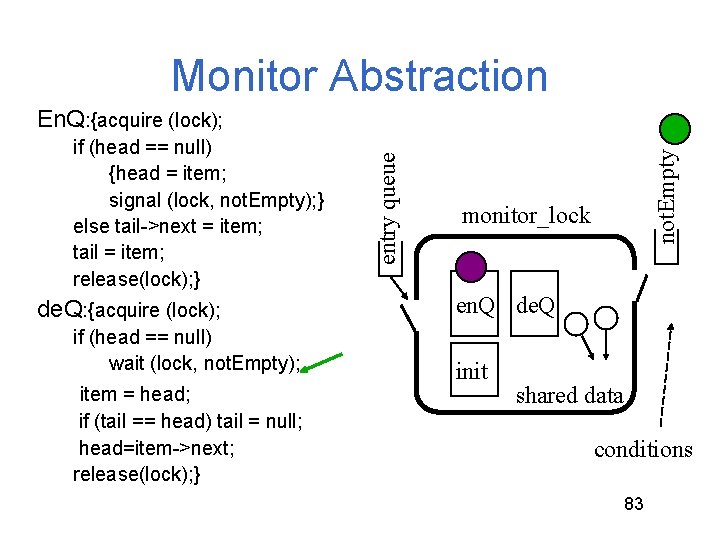

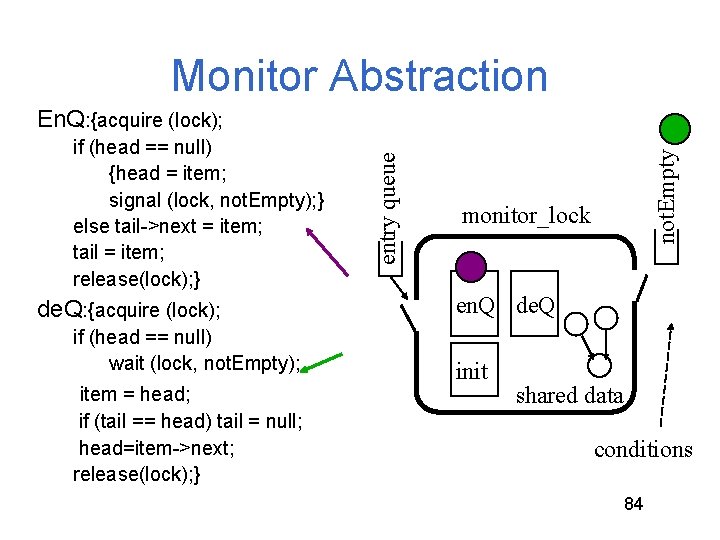

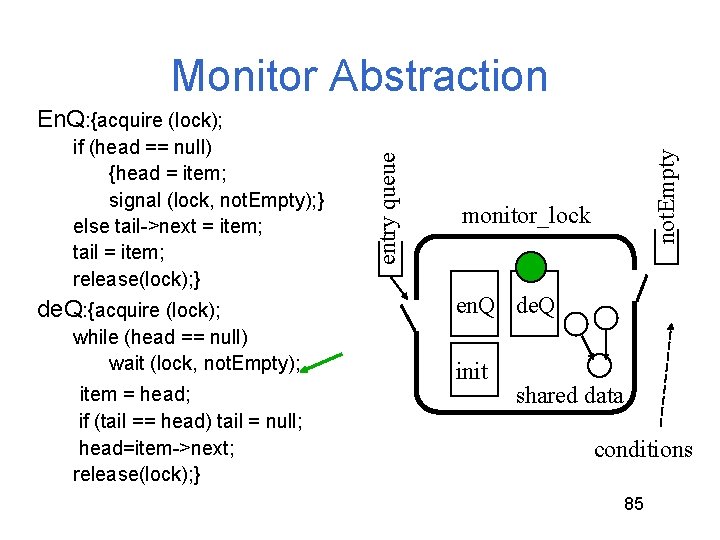

Monitor Abstraction • Encapsulates shared data and operations with mutually exclusive use of the object (an associated lock). • Associated condition variables with operations Wait and Signal. [Figure: monitor with entry queue, monitor_lock, operations enQ, deQ and init, shared data, and condition notEmpty]

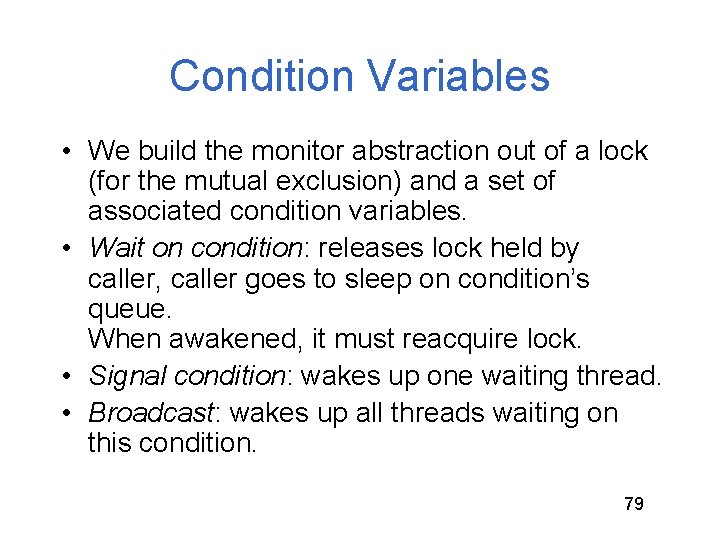

Condition Variables • We build the monitor abstraction out of a lock (for the mutual exclusion) and a set of associated condition variables. • Wait on condition: releases lock held by caller, caller goes to sleep on condition’s queue. When awakened, it must reacquire lock. • Signal condition: wakes up one waiting thread. • Broadcast: wakes up all threads waiting on this condition. 79

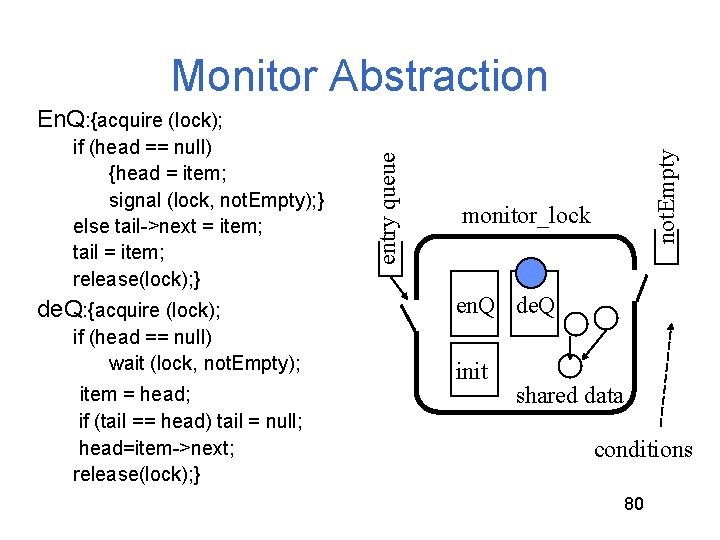

Monitor Abstraction EnQ: {acquire (lock); if (head == null) {head = item; signal (lock, notEmpty); } else tail->next = item; tail = item; release(lock); } deQ: {acquire (lock); if (head == null) wait (lock, notEmpty); item = head; if (tail == head) tail = null; head = item->next; release(lock); } [Figure: monitor with entry queue, monitor_lock, operations enQ, deQ and init, shared data, and condition notEmpty]

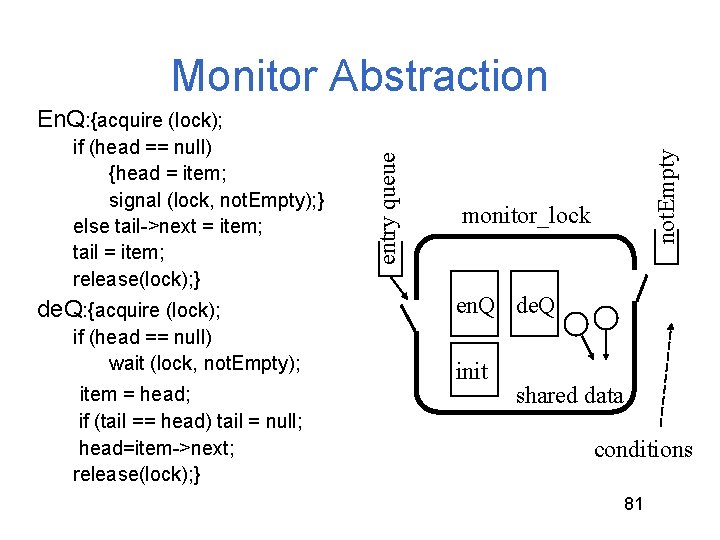

Monitor Abstraction EnQ: {acquire (lock); if (head == null) {head = item; signal (lock, notEmpty); } else tail->next = item; tail = item; release(lock); } deQ: {acquire (lock); while (head == null) wait (lock, notEmpty); item = head; if (tail == head) tail = null; head = item->next; release(lock); } [Figure: monitor with entry queue, monitor_lock, operations enQ, deQ and init, shared data, and condition notEmpty]
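The monitor-style queue maps fairly directly onto a pthread mutex plus condition variable. The sketch below is an illustration under assumptions: the item layout and the names en_q, de_q and demo_monitor_queue are made up, and unlike the slide it signals on every enqueue so that it stays safe with more than one waiting consumer.

```c
#include <pthread.h>
#include <stdlib.h>

struct item { int value; struct item *next; };

static struct item *head, *tail;                        /* shared data */
static pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER; /* monitor lock */
static pthread_cond_t not_empty = PTHREAD_COND_INITIALIZER;

void en_q(int value)
{
    struct item *it = malloc(sizeof *it);
    if (it == NULL)
        abort();
    it->value = value;
    it->next = NULL;
    pthread_mutex_lock(&lock);
    if (head == NULL)
        head = it;
    else
        tail->next = it;
    tail = it;
    pthread_cond_signal(&not_empty);   /* wake one waiter, if any */
    pthread_mutex_unlock(&lock);
}

int de_q(void)
{
    pthread_mutex_lock(&lock);
    while (head == NULL)               /* "while", not "if": recheck */
        pthread_cond_wait(&not_empty, &lock);  /* releases lock, sleeps */
    struct item *it = head;
    if (tail == head)
        tail = NULL;
    head = it->next;
    pthread_mutex_unlock(&lock);
    int value = it->value;
    free(it);
    return value;
}

static void *consumer(void *arg)
{
    long *sum = arg;
    for (int k = 0; k < 3; k++)
        *sum += de_q();                /* may block until an item arrives */
    return NULL;
}

/* A consumer thread dequeues three values while this thread enqueues
 * 10, 20 and 30; returns the sum the consumer computed. */
long demo_monitor_queue(void)
{
    pthread_t c;
    long sum = 0;
    pthread_create(&c, NULL, consumer, &sum);
    en_q(10);
    en_q(20);
    en_q(30);
    pthread_join(c, NULL);
    return sum;
}
```

pthread_cond_wait may also return without a matching signal, which is one more reason the waiting condition is rechecked in a loop.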

The Critical Section Problem while (1) {. . . other stuff. . . acquire (mutex); critical section // conceptually “inside” monitor release (mutex); } 86

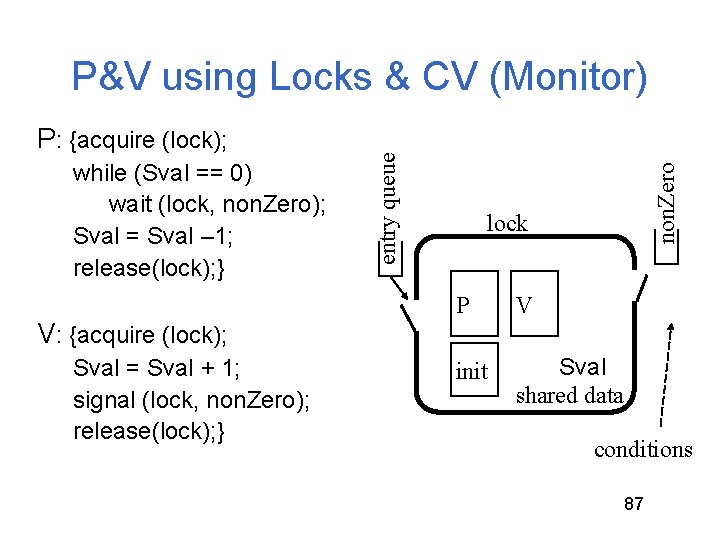

P&V using Locks & CV (Monitor) P: {acquire (lock); while (Sval == 0) wait (lock, nonZero); Sval = Sval – 1; release(lock); } V: {acquire (lock); Sval = Sval + 1; signal (lock, nonZero); release(lock); } [Figure: monitor with entry queue, lock, operations P, V and init, shared data Sval, and condition nonZero]
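The same P and V pair can be written against pthreads as a small sketch. The struct layout and the helpers semaphore_init and semaphore_value are assumptions added for illustration.

```c
#include <pthread.h>

struct semaphore {
    int sval;                    /* non-negative count */
    pthread_mutex_t lock;
    pthread_cond_t non_zero;     /* signaled when sval is incremented */
};

void semaphore_init(struct semaphore *s, int initial)
{
    s->sval = initial;
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->non_zero, NULL);
}

/* P: wait until sval > 0, then decrement it. */
void P(struct semaphore *s)
{
    pthread_mutex_lock(&s->lock);
    while (s->sval == 0)
        pthread_cond_wait(&s->non_zero, &s->lock);
    s->sval = s->sval - 1;
    pthread_mutex_unlock(&s->lock);
}

/* V: increment sval and wake one waiter, if any. */
void V(struct semaphore *s)
{
    pthread_mutex_lock(&s->lock);
    s->sval = s->sval + 1;
    pthread_cond_signal(&s->non_zero);
    pthread_mutex_unlock(&s->lock);
}

/* Helper for inspection only; the value may change right after return. */
int semaphore_value(struct semaphore *s)
{
    pthread_mutex_lock(&s->lock);
    int v = s->sval;
    pthread_mutex_unlock(&s->lock);
    return v;
}
```

Because the count is stored in sval, a V issued before any thread is waiting is remembered. A later P returns immediately, which is the persistence that plain Sleep and Wakeup lack.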

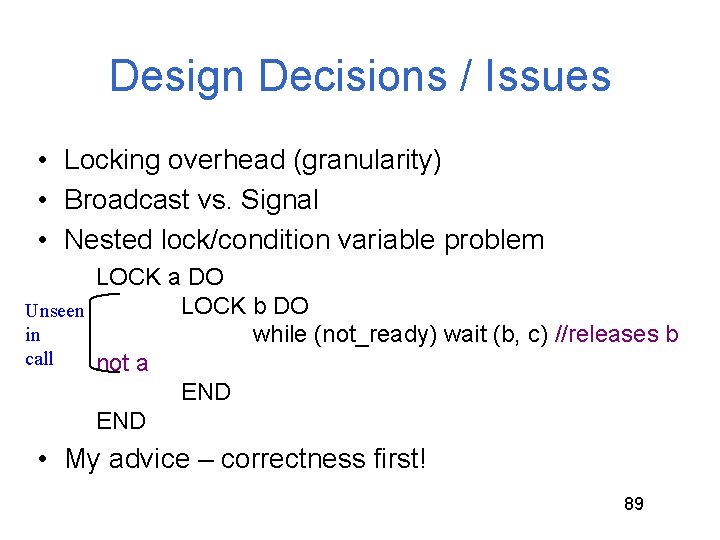

Design Decisions / Issues • Locking overhead (granularity) • Broadcast vs. Signal • Nested lock/condition variable problem: LOCK a DO (call, the rest is unseen inside the call) LOCK b DO while (not_ready) wait (b, c) // releases b, not a END END • My advice – correctness first!

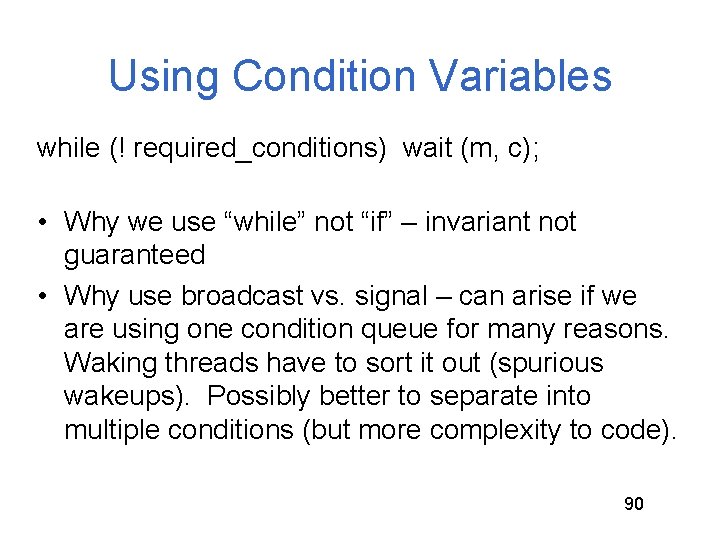

Using Condition Variables while (! required_conditions) wait (m, c); • Why we use “while” not “if” – invariant not guaranteed • Why use broadcast vs. signal – can arise if we are using one condition queue for many reasons. Waking threads have to sort it out (spurious wakeups). Possibly better to separate into multiple conditions (but more complexity to code). 90

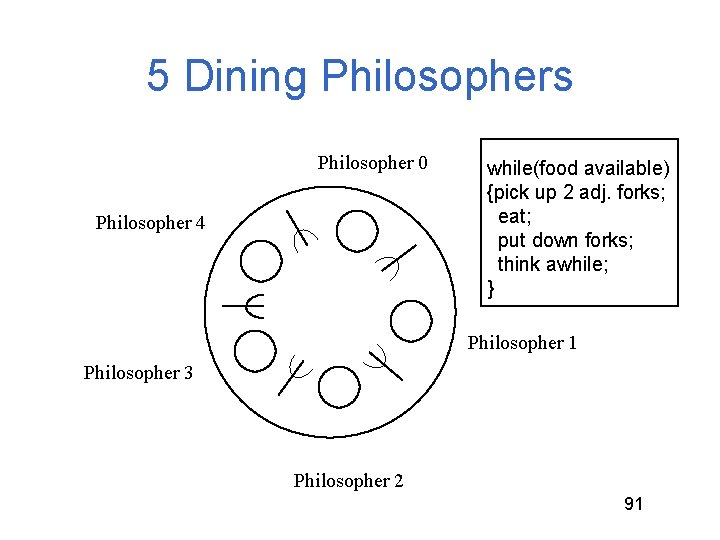

5 Dining Philosophers • Philosophers 0 through 4 sit around a table with one fork between each adjacent pair. • Each philosopher runs: while (food available) {pick up 2 adj. forks; eat; put down forks; think awhile; }

Template for Philosopher while (food available) { /*pick up forks*/ eat; /*put down forks*/ think awhile; } 92

Naive Solution while (food available) { P(fork[left(me)]); P(fork[right(me)]); /*pick up forks*/ eat; V(fork[left(me)]); V(fork[right(me)]); /*put down forks*/ think awhile; } Does this work? 93

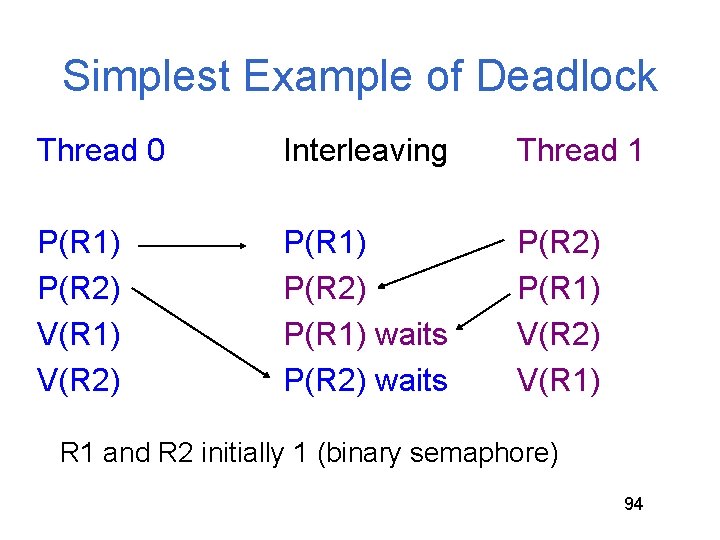

Simplest Example of Deadlock
• Thread 0: P(R1); P(R2); V(R1); V(R2)
• Thread 1: P(R2); P(R1); V(R2); V(R1)
• Interleaving: Thread 0 P(R1); Thread 1 P(R2); Thread 1 P(R1) waits; Thread 0 P(R2) waits
• R1 and R2 initially 1 (binary semaphores)

Conditions for Deadlock
• Mutually exclusive use of resources – Binary semaphores R1 and R2
• Circular waiting – Thread 0 waits for Thread 1 to V(R2) and Thread 1 waits for Thread 0 to V(R1)
• Hold and wait – Holding either R1 or R2 while waiting on the other
• No pre-emption – Neither R1 nor R2 is removed from its holding thread.

Philosophy 101 (or why 5 DP is interesting) • How to eat with your Fellows without causing Deadlock. – Circular arguments (the circular wait condition) – Not giving up on firmly held things (no preemption) – Infinite patience with Half-baked schemes (hold some & wait for more) • Why Starvation exists and what we can do about it. 96

Dealing with Deadlock

It can be prevented by breaking one of the prerequisite conditions:
• Mutually exclusive use of resources – Example: Allowing shared access to read-only files (readers/writers problem)
• Circular waiting – Example: Define an ordering on resources and acquire them in order
• Hold and wait
• No pre-emption

Circular Wait Condition

    while (food available) {
      if (me != 0) { P(fork[left(me)]); P(fork[right(me)]); }
      else         { P(fork[right(me)]); P(fork[left(me)]); }
      eat;
      V(fork[left(me)]); V(fork[right(me)]);
      think awhile;
    }
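Breaking the circular wait by having one philosopher pick up forks in the opposite order can be sketched in Python. This is an illustration under assumptions: `threading.Semaphore(1)` stands in for each binary fork semaphore, philosopher 0 is chosen as the one that reverses its order, and the definitions of `left` and `right` as well as the helper `dine` are hypothetical (the slides do not define them).

```python
import threading

N = 5


def left(me):
    # Assumed numbering: fork i sits to the left of philosopher i.
    return me


def right(me):
    return (me + 1) % N


def dine(meals_each=100):
    """Run N philosophers; return how many meals each one finished."""
    forks = [threading.Semaphore(1) for _ in range(N)]
    meals = [0] * N

    def philosopher(me):
        for _ in range(meals_each):
            # Every philosopher but one goes left-then-right; philosopher 0
            # goes right-then-left, so a full cycle of waiters cannot form.
            if me != 0:
                first, second = left(me), right(me)
            else:
                first, second = right(me), left(me)
            forks[first].acquire()     # P(fork[first])
            forks[second].acquire()    # P(fork[second])
            meals[me] += 1             # eat (each thread writes only its own slot)
            forks[first].release()     # V(fork[first])
            forks[second].release()    # V(fork[second])

    threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(N)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return meals
```

If every philosopher used the same order instead, all five could each hold one fork and wait forever for the next one, which is the deadlock of the naive solution.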

Hold and Wait Condition

    while (food available) {
      P(mutex);
      while (forks[me] != 2) {
        blocking[me] = true;
        V(mutex); P(sleepy[me]); P(mutex);
      }
      forks[leftneighbor(me)]--; forks[rightneighbor(me)]--;
      V(mutex);
      eat;
      P(mutex);
      forks[leftneighbor(me)]++; forks[rightneighbor(me)]++;
      if (blocking[leftneighbor(me)]) {
        blocking[leftneighbor(me)] = false; V(sleepy[leftneighbor(me)]);
      }
      if (blocking[rightneighbor(me)]) {
        blocking[rightneighbor(me)] = false; V(sleepy[rightneighbor(me)]);
      }
      V(mutex);
      think awhile;
    }

Starvation

The difference between deadlock and starvation is subtle:
– Once a set of processes is deadlocked, there is no future execution sequence that can get them out of it.
– In starvation, there does exist some execution sequence that is favorable to the starving process, although there is no guarantee it will ever occur.
– Rollback and Retry solutions are prone to starvation.
– Continuous arrival of higher priority processes is another common starvation situation.

5 DP - Monitor Style

    Boolean eating[5];
    Lock forkMutex;
    Condition forksAvail;

    void PickupForks (int i) {
      forkMutex.Acquire( );
      // (i+4)%5 is the left neighbor; (i-1)%5 would be negative for i = 0 in C
      while ( eating[(i+4)%5] || eating[(i+1)%5] )
        forksAvail.Wait(&forkMutex);
      eating[i] = true;
      forkMutex.Release( );
    }

    void PutdownForks (int i) {
      forkMutex.Acquire( );
      eating[i] = false;
      forksAvail.Broadcast(&forkMutex);
      forkMutex.Release( );
    }
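The monitor-style solution can be sketched in Python. This is a minimal illustration, assuming a single `threading.Condition` (which bundles its own lock) in place of the separate fork mutex and forks-available condition; the class `ForkMonitor` and the helper `run` are hypothetical names.

```python
import threading

N = 5


class ForkMonitor:
    """A philosopher may eat only while neither neighbor is eating."""

    def __init__(self):
        self.eating = [False] * N
        # Condition() carries its own lock: it plays both the mutex and the CV.
        self.forks_avail = threading.Condition()

    def pickup_forks(self, i):
        with self.forks_avail:
            # Python's % is never negative for a positive modulus,
            # so (i - 1) % N is safe for i == 0.
            while self.eating[(i - 1) % N] or self.eating[(i + 1) % N]:
                self.forks_avail.wait()
            self.eating[i] = True

    def putdown_forks(self, i):
        with self.forks_avail:
            self.eating[i] = False
            # Broadcast: both neighbors may be waiting on the same queue.
            self.forks_avail.notify_all()


def run(meals_each=50):
    """Return (meals per philosopher, observed neighbor conflicts per philosopher)."""
    table = ForkMonitor()
    meals = [0] * N
    conflicts = [0] * N

    def philosopher(i):
        for _ in range(meals_each):
            table.pickup_forks(i)
            # While eating, no neighbor should be marked as eating.
            if table.eating[(i - 1) % N] or table.eating[(i + 1) % N]:
                conflicts[i] += 1
            meals[i] += 1
            table.putdown_forks(i)

    threads = [threading.Thread(target=philosopher, args=(i,)) for i in range(N)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return meals, conflicts
```

A philosopher never holds one fork while waiting for the other here, so hold-and-wait is broken; starvation of an unlucky philosopher remains possible in principle.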

What about this?

    while (food available) {
      forkMutex.Acquire( );
      while (forks[me] != 2) {
        blocking[me] = true;
        forkMutex.Release( ); sleep( ); forkMutex.Acquire( );
      }
      forks[leftneighbor(me)]--; forks[rightneighbor(me)]--;
      forkMutex.Release( );
      eat;
      forkMutex.Acquire( );
      forks[leftneighbor(me)]++; forks[rightneighbor(me)]++;
      if (blocking[leftneighbor(me)] || blocking[rightneighbor(me)])
        wakeup( );
      forkMutex.Release( );
      think awhile;
    }

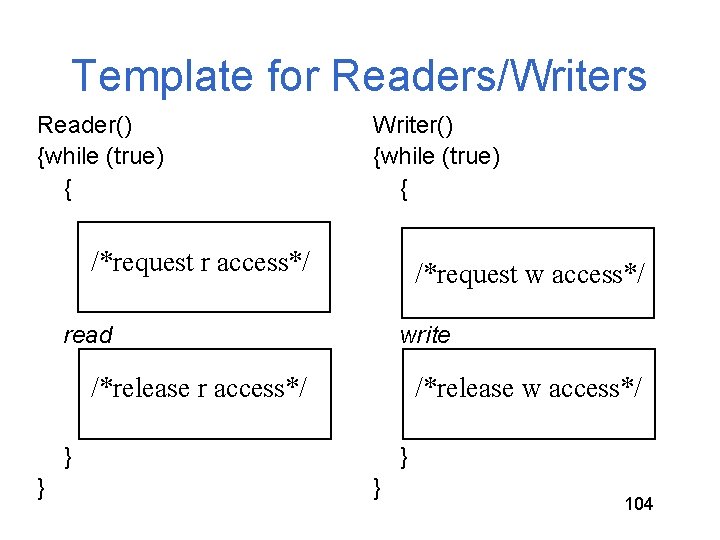

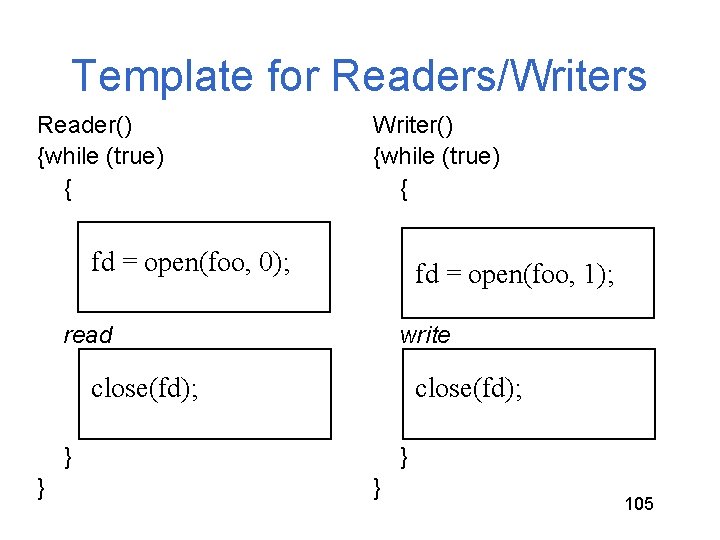

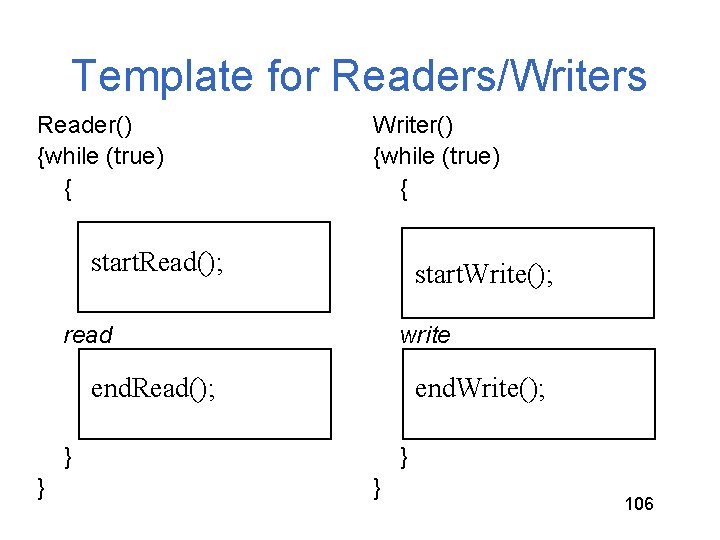

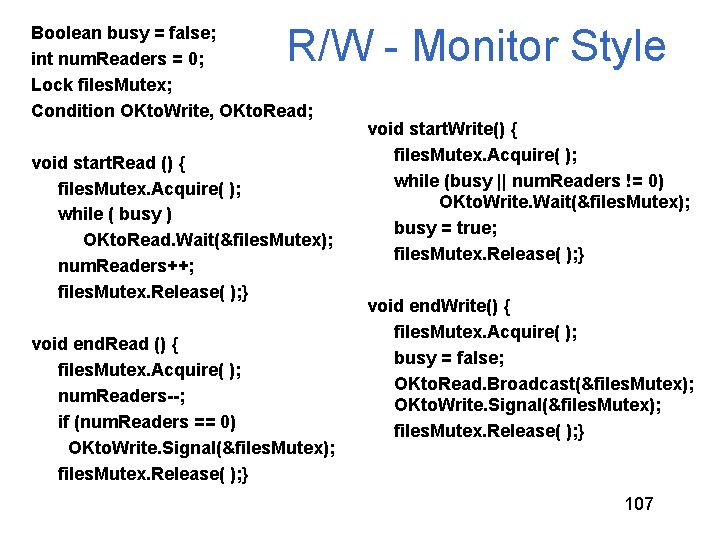

Readers/Writers Problem Synchronizing access to a file or data record in a database such that any number of threads requesting read-only access are allowed but only one thread requesting write access is allowed, excluding all readers. 103

Template for Readers/Writers

    Reader() { while (true) { /*request r access*/ read;  /*release r access*/ } }
    Writer() { while (true) { /*request w access*/ write; /*release w access*/ } }

Template for Readers/Writers

    Reader() { while (true) { fd = open(foo, 0); read;  close(fd); } }
    Writer() { while (true) { fd = open(foo, 1); write; close(fd); } }

Template for Readers/Writers

    Reader() { while (true) { startRead();  read;  endRead();  } }
    Writer() { while (true) { startWrite(); write; endWrite(); } }

R/W - Monitor Style

    Boolean busy = false;
    int numReaders = 0;
    Lock filesMutex;
    Condition OKtoWrite, OKtoRead;

    void startRead () {
      filesMutex.Acquire( );
      while ( busy )
        OKtoRead.Wait(&filesMutex);
      numReaders++;
      filesMutex.Release( );
    }

    void endRead () {
      filesMutex.Acquire( );
      numReaders--;
      if (numReaders == 0)
        OKtoWrite.Signal(&filesMutex);
      filesMutex.Release( );
    }

    void startWrite() {
      filesMutex.Acquire( );
      while (busy || numReaders != 0)
        OKtoWrite.Wait(&filesMutex);
      busy = true;
      filesMutex.Release( );
    }

    void endWrite() {
      filesMutex.Acquire( );
      busy = false;
      OKtoRead.Broadcast(&filesMutex);
      OKtoWrite.Signal(&filesMutex);
      filesMutex.Release( );
    }
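The readers/writers monitor can be sketched in Python. This is an illustration under assumptions: two `threading.Condition` objects sharing one `threading.Lock` stand in for the two conditions and the files mutex; the class `ReadWriteMonitor`, the `run_demo` harness, and its bookkeeping dictionary are hypothetical and exist only to check the exclusion rules.

```python
import threading


class ReadWriteMonitor:
    def __init__(self):
        self._mutex = threading.Lock()
        # Two condition queues that share the same lock.
        self._ok_to_read = threading.Condition(self._mutex)
        self._ok_to_write = threading.Condition(self._mutex)
        self._busy = False          # True while a writer is active
        self._num_readers = 0

    def start_read(self):
        with self._mutex:
            while self._busy:
                self._ok_to_read.wait()
            self._num_readers += 1

    def end_read(self):
        with self._mutex:
            self._num_readers -= 1
            if self._num_readers == 0:
                self._ok_to_write.notify()      # last reader lets one writer in

    def start_write(self):
        with self._mutex:
            while self._busy or self._num_readers != 0:
                self._ok_to_write.wait()
            self._busy = True

    def end_write(self):
        with self._mutex:
            self._busy = False
            self._ok_to_read.notify_all()       # all waiting readers may proceed
            self._ok_to_write.notify()          # or one waiting writer


def run_demo(n_readers=4, n_writers=2, rounds=50):
    """Return (final value, number of exclusion violations observed)."""
    rw = ReadWriteMonitor()
    state = {"value": 0, "readers_in": 0, "writers_in": 0, "violations": 0}
    stats = threading.Lock()        # protects only the bookkeeping counters

    def reader():
        for _ in range(rounds):
            rw.start_read()
            with stats:
                state["readers_in"] += 1
                if state["writers_in"]:
                    state["violations"] += 1
            with stats:
                state["readers_in"] -= 1
            rw.end_read()

    def writer():
        for _ in range(rounds):
            rw.start_write()
            with stats:
                state["writers_in"] += 1
                if state["writers_in"] > 1 or state["readers_in"]:
                    state["violations"] += 1
            state["value"] += 1     # protected by the monitor's write access
            with stats:
                state["writers_in"] -= 1
            rw.end_write()

    threads = [threading.Thread(target=reader) for _ in range(n_readers)]
    threads += [threading.Thread(target=writer) for _ in range(n_writers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["value"], state["violations"]
```

New readers are admitted whenever no writer is active, even if a writer is waiting, so a steady stream of readers could starve writers in this version.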

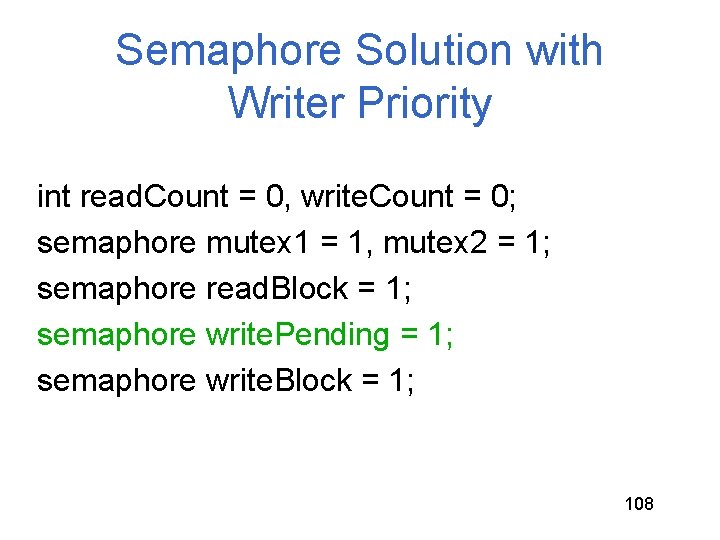

Semaphore Solution with Writer Priority

    int readCount = 0, writeCount = 0;
    semaphore mutex1 = 1, mutex2 = 1;
    semaphore readBlock = 1;
    semaphore writePending = 1;
    semaphore writeBlock = 1;

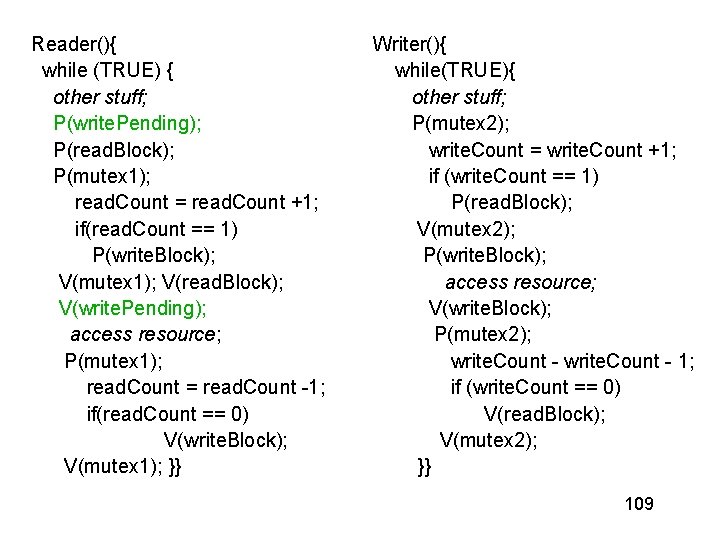

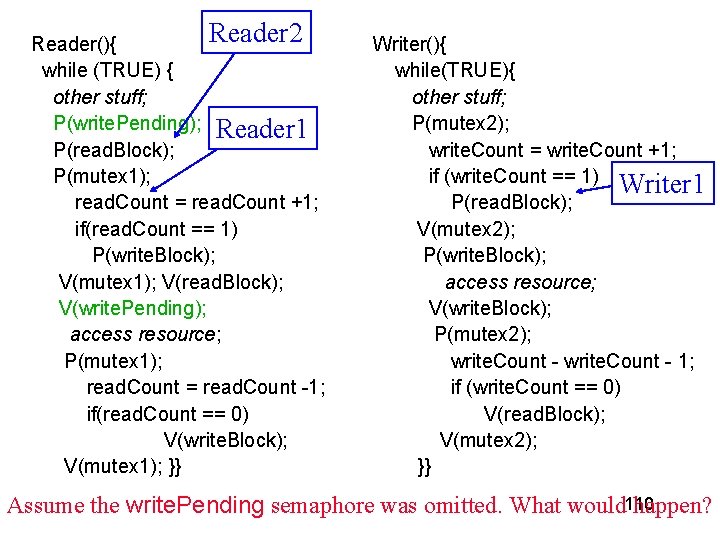

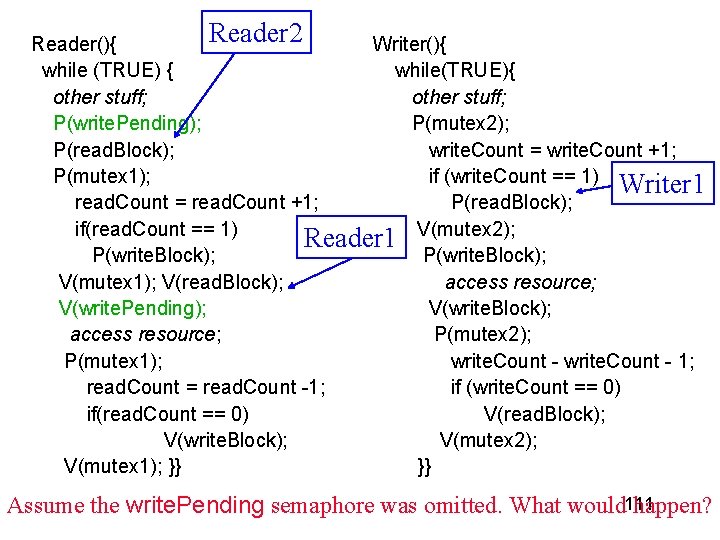

    Reader(){
      while (TRUE) {
        other stuff;
        P(writePending);
        P(readBlock);
        P(mutex1);
        readCount = readCount + 1;
        if (readCount == 1) P(writeBlock);
        V(mutex1);
        V(readBlock);
        V(writePending);
        access resource;
        P(mutex1);
        readCount = readCount - 1;
        if (readCount == 0) V(writeBlock);
        V(mutex1);
    }}

    Writer(){
      while (TRUE) {
        other stuff;
        P(mutex2);
        writeCount = writeCount + 1;
        if (writeCount == 1) P(readBlock);
        V(mutex2);
        P(writeBlock);
        access resource;
        V(writeBlock);
        P(mutex2);
        writeCount = writeCount - 1;
        if (writeCount == 0) V(readBlock);
        V(mutex2);
    }}
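The writer-priority protocol can be transcribed into Python to experiment with it. This is a sketch, assuming `threading.Semaphore` for each semaphore (`acquire` for P, `release` for V); the class `WriterPriorityRW`, its method names, and the `run_demo` harness are hypothetical.

```python
import threading


class WriterPriorityRW:
    def __init__(self):
        self.read_count = 0
        self.write_count = 0
        self.mutex1 = threading.Semaphore(1)         # protects read_count
        self.mutex2 = threading.Semaphore(1)         # protects write_count
        self.read_block = threading.Semaphore(1)     # held while any writer is present
        self.write_pending = threading.Semaphore(1)  # lets only one reader queue on read_block
        self.write_block = threading.Semaphore(1)    # exclusive access to the resource

    def start_read(self):
        self.write_pending.acquire()
        self.read_block.acquire()
        self.mutex1.acquire()
        self.read_count += 1
        if self.read_count == 1:
            self.write_block.acquire()   # first reader locks writers out
        self.mutex1.release()
        self.read_block.release()
        self.write_pending.release()

    def end_read(self):
        self.mutex1.acquire()
        self.read_count -= 1
        if self.read_count == 0:
            self.write_block.release()   # last reader lets writers in
        self.mutex1.release()

    def start_write(self):
        self.mutex2.acquire()
        self.write_count += 1
        if self.write_count == 1:
            self.read_block.acquire()    # first writer stops new readers
        self.mutex2.release()
        self.write_block.acquire()

    def end_write(self):
        self.write_block.release()
        self.mutex2.acquire()
        self.write_count -= 1
        if self.write_count == 0:
            self.read_block.release()    # last writer readmits readers
        self.mutex2.release()


def run_demo(n_readers=4, n_writers=2, rounds=50):
    """Return (final value, number of exclusion violations observed)."""
    rw = WriterPriorityRW()
    state = {"value": 0, "readers_in": 0, "writers_in": 0, "violations": 0}
    stats = threading.Lock()             # protects only the bookkeeping counters

    def reader():
        for _ in range(rounds):
            rw.start_read()
            with stats:
                state["readers_in"] += 1
                if state["writers_in"]:
                    state["violations"] += 1
            with stats:
                state["readers_in"] -= 1
            rw.end_read()

    def writer():
        for _ in range(rounds):
            rw.start_write()
            with stats:
                state["writers_in"] += 1
                if state["writers_in"] > 1 or state["readers_in"]:
                    state["violations"] += 1
            state["value"] += 1          # the protected write
            with stats:
                state["writers_in"] -= 1
            rw.end_write()

    threads = [threading.Thread(target=reader) for _ in range(n_readers)]
    threads += [threading.Thread(target=writer) for _ in range(n_writers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["value"], state["violations"]
```

The harness checks only mutual exclusion; it does not measure whether waiting writers actually overtake waiting readers.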

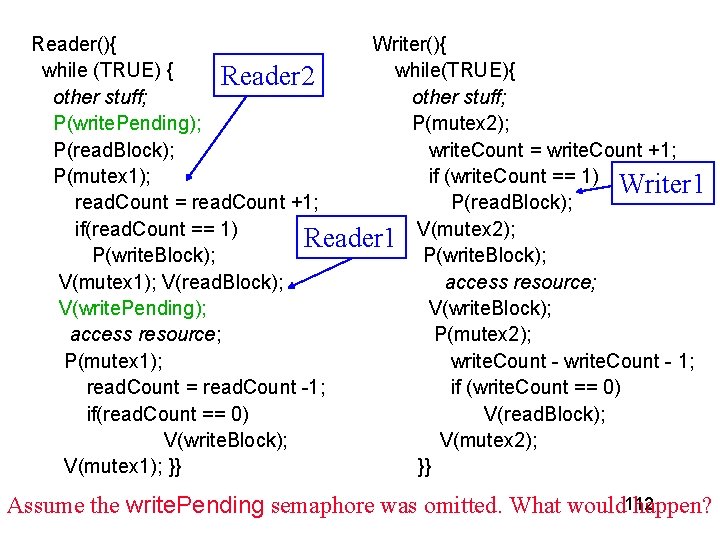

Assume the writePending semaphore was omitted. What would happen? (Animation over the Reader/Writer code above: Reader 1 is partway through the reader entry section, Writer 1 arrives at P(readBlock), and Reader 2 arrives behind them.)

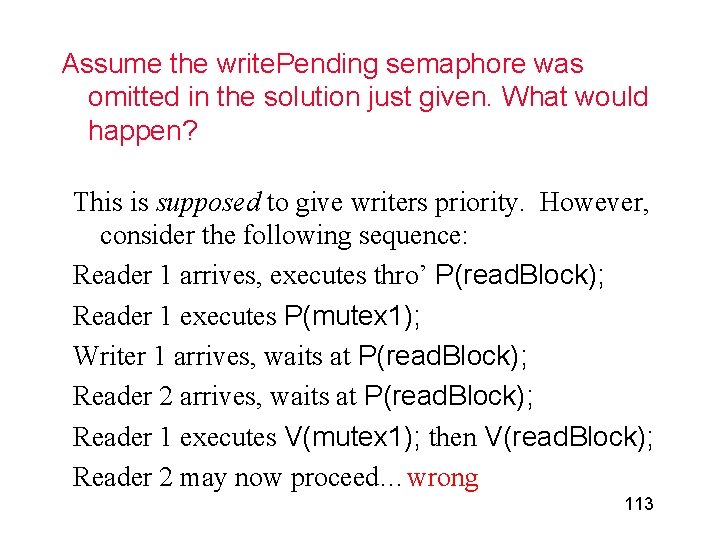

Assume the writePending semaphore was omitted in the solution just given. What would happen? This is supposed to give writers priority. However, consider the following sequence:
Reader 1 arrives, executes through P(readBlock);
Reader 1 executes P(mutex1);
Writer 1 arrives, waits at P(readBlock);
Reader 2 arrives, waits at P(readBlock);
Reader 1 executes V(mutex1); then V(readBlock);
Reader 2 may now proceed ahead of Writer 1, which is wrong: the waiting writer should go first. With writePending in place, Reader 2 would instead wait at P(writePending), so only one reader at a time can compete with writers for readBlock.

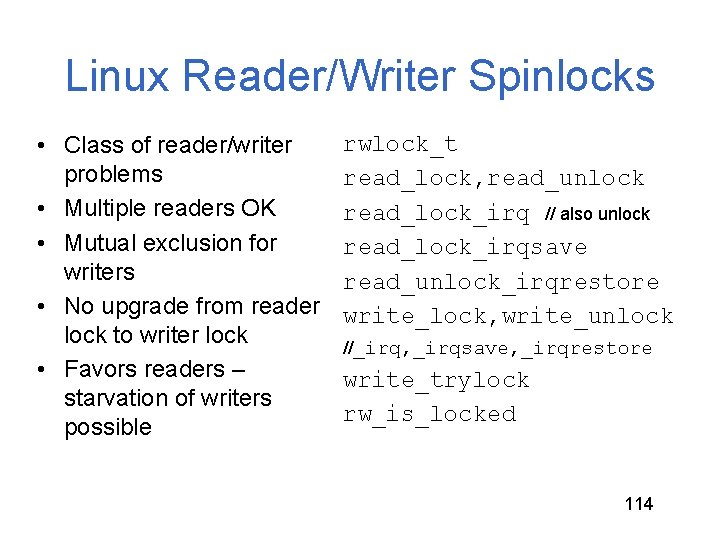

Linux Reader/Writer Spinlocks
• Class of reader/writer problems
• Multiple readers OK
• Mutual exclusion for writers
• No upgrade from reader lock to writer lock
• Favors readers – starvation of writers possible

    rwlock_t
    read_lock, read_unlock
    read_lock_irq              // also unlock
    read_lock_irqsave
    read_unlock_irqrestore
    write_lock, write_unlock   // _irq, _irqsave, _irqrestore
    write_trylock
    rw_is_locked

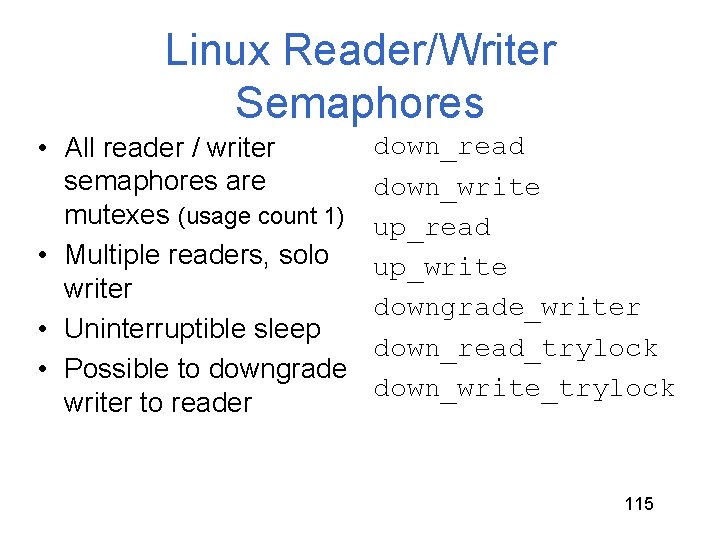

Linux Reader/Writer Semaphores
• All reader / writer semaphores are mutexes (usage count 1)
• Multiple readers, solo writer
• Uninterruptible sleep
• Possible to downgrade writer to reader

    down_read
    down_write
    up_read
    up_write
    downgrade_write
    down_read_trylock
    down_write_trylock

- Slides: 111