
Outline

- Classification
- ILP Architectures
- Data Parallel Architectures
- Process level Parallel Architectures
- Issues in parallel architectures
- Cache coherence problem
- Interconnection networks

![Outline slide](https://slidetodoc.com/presentation_image/382fc4c949d58aaaf6acef5aedd582e2/image-2.jpg)

Outline

- Classification
  - Flynn’s [66]
  - Feng’s [72]
  - Händler’s [77]
  - Modern (Sima, Fountain & Kacsuk)
- ILP Architectures
- Data Parallel Architectures
- Process level Parallel Architectures
- Issues in parallel architectures
- Cache coherence problem
- Interconnection networks

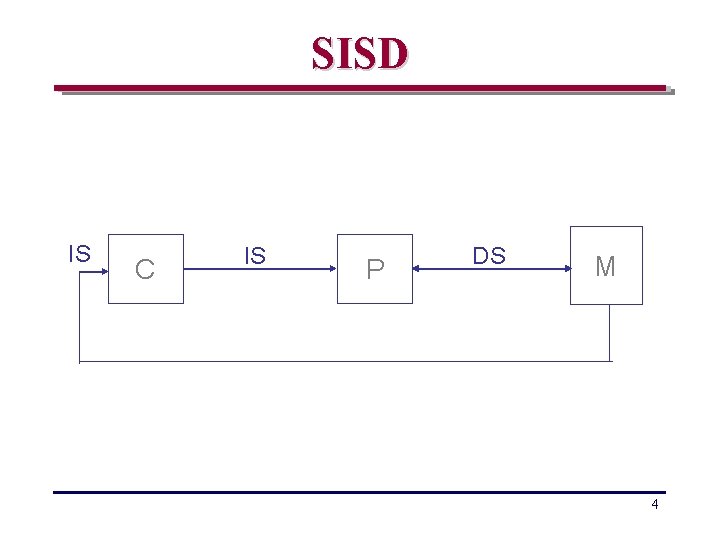

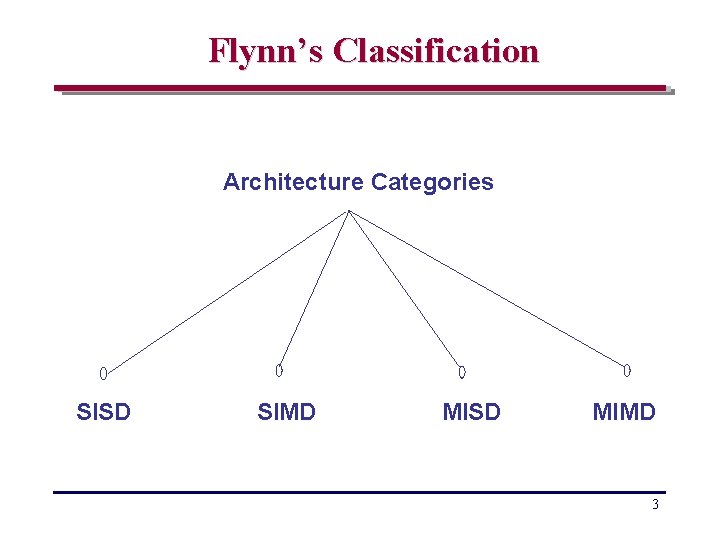

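Flynn’s Classification

Architecture categories, by the number of instruction streams and data streams:

- SISD: single instruction stream, single data stream
- SIMD: single instruction stream, multiple data streams
- MISD: multiple instruction streams, single data stream
- MIMD: multiple instruction streams, multiple data streams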

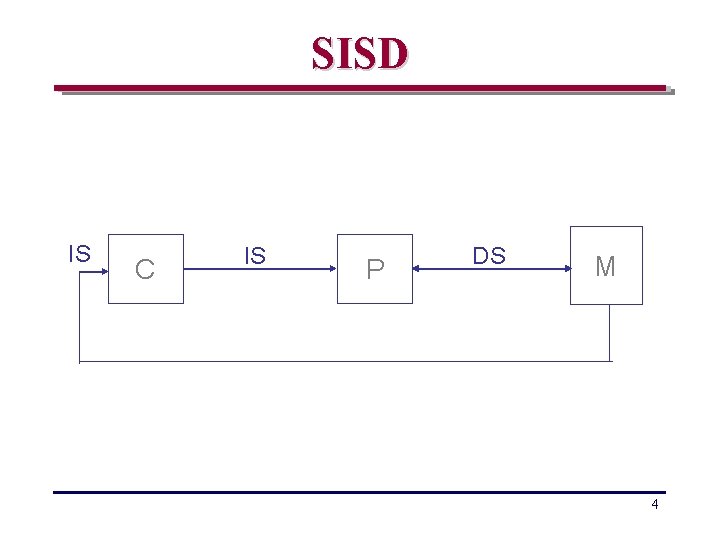

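SISD

Figure: a control unit (C) receives an instruction stream (IS) from memory (M) and passes it to a single processor (P), which exchanges a single data stream (DS) with memory.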

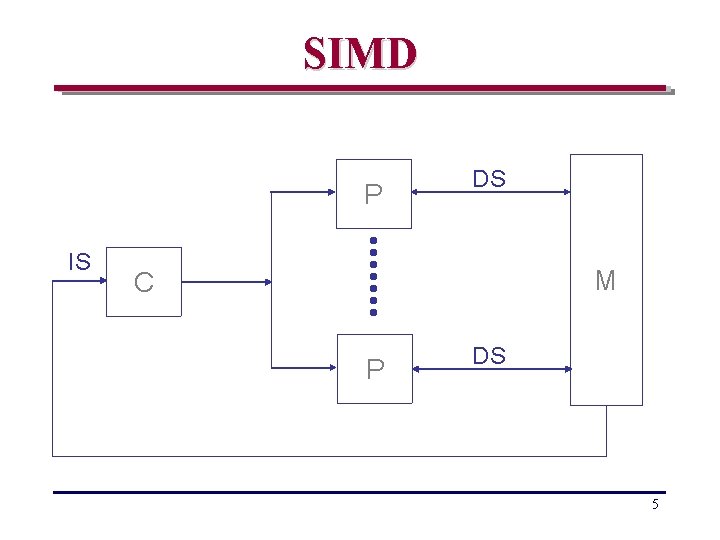

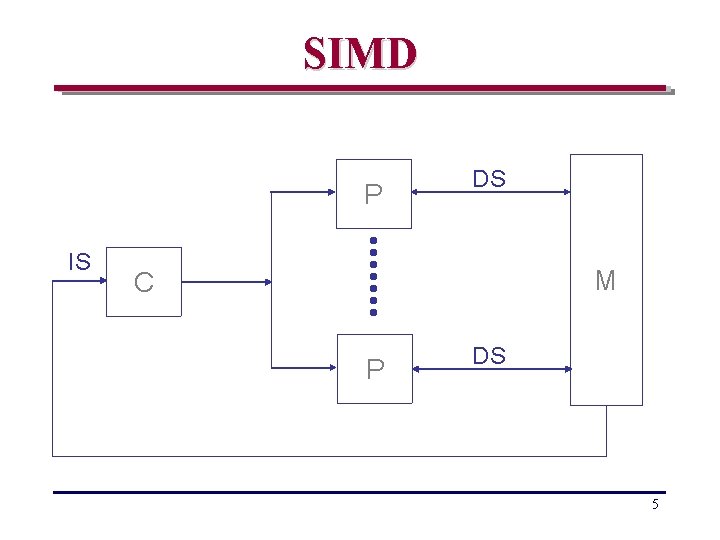

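SIMD

Figure: one control unit (C) broadcasts a single instruction stream (IS) to several processors (P); each processor exchanges its own data stream (DS) with memory (M).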

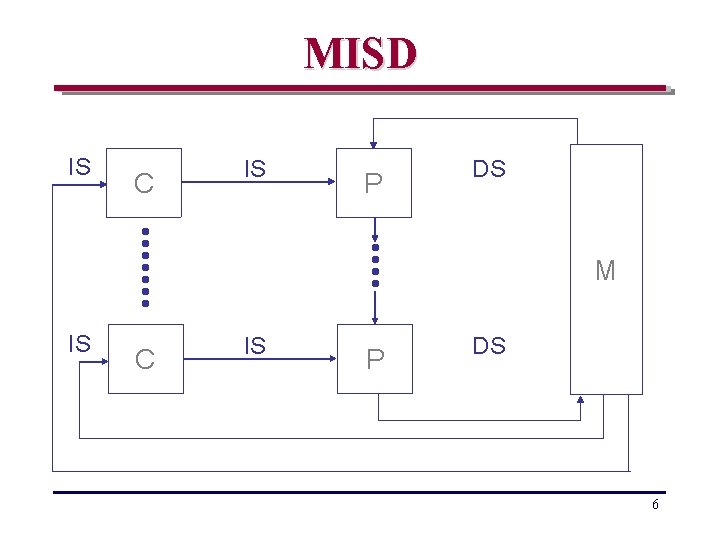

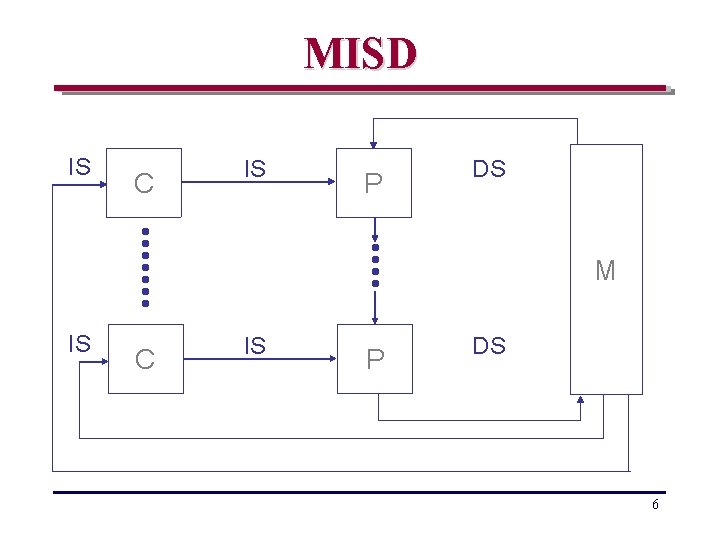

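MISD

Figure: several control units (C), each sending its own instruction stream (IS) to its own processor (P); the processors all operate on a single data stream (DS) from memory (M).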

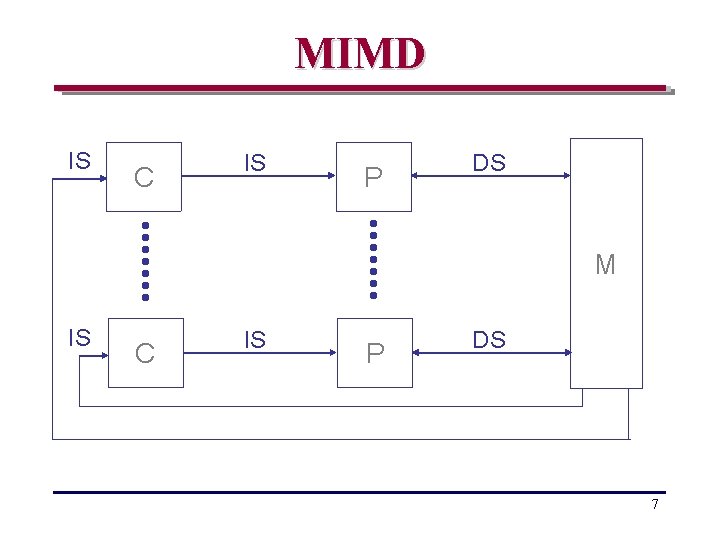

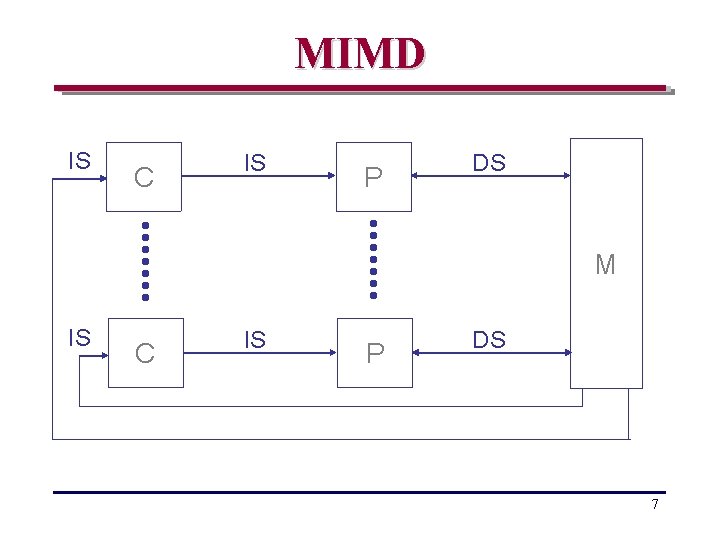

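MIMD

Figure: several control unit (C) and processor (P) pairs, each with its own instruction stream (IS) and its own data stream (DS), sharing memory (M).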
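Flynn’s scheme depends only on whether a machine has one instruction stream or several, and one data stream or several. The Python sketch below encodes that rule; the enum `Flynn` and the function `classify` are illustrative names, not part of any library.

```python
from enum import Enum


class Flynn(Enum):
    SISD = "single instruction stream, single data stream"
    SIMD = "single instruction stream, multiple data streams"
    MISD = "multiple instruction streams, single data stream"
    MIMD = "multiple instruction streams, multiple data streams"


def classify(instruction_streams: int, data_streams: int) -> Flynn:
    """Return the Flynn category for the given stream counts (each >= 1)."""
    if instruction_streams < 1 or data_streams < 1:
        raise ValueError("stream counts must be at least 1")
    single_i = instruction_streams == 1
    single_d = data_streams == 1
    if single_i and single_d:
        return Flynn.SISD
    if single_i:
        return Flynn.SIMD
    if single_d:
        return Flynn.MISD
    return Flynn.MIMD


if __name__ == "__main__":
    for streams in [(1, 1), (1, 64), (4, 1), (16, 16)]:
        category = classify(*streams)
        print(streams, category.name, "-", category.value)
```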

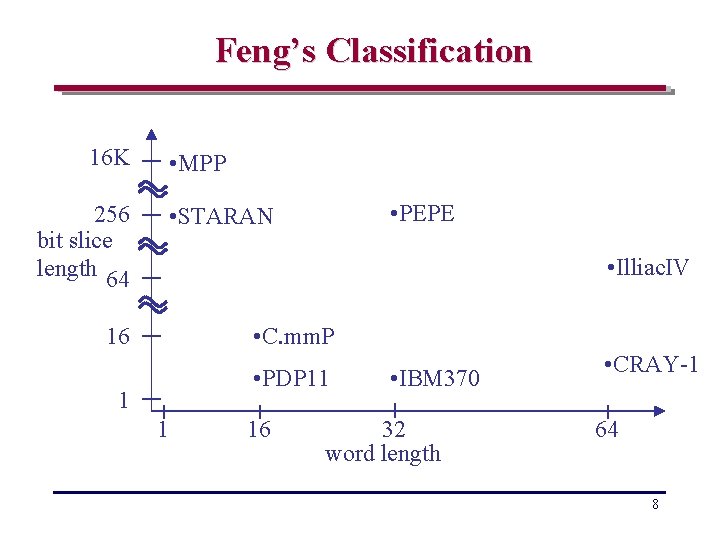

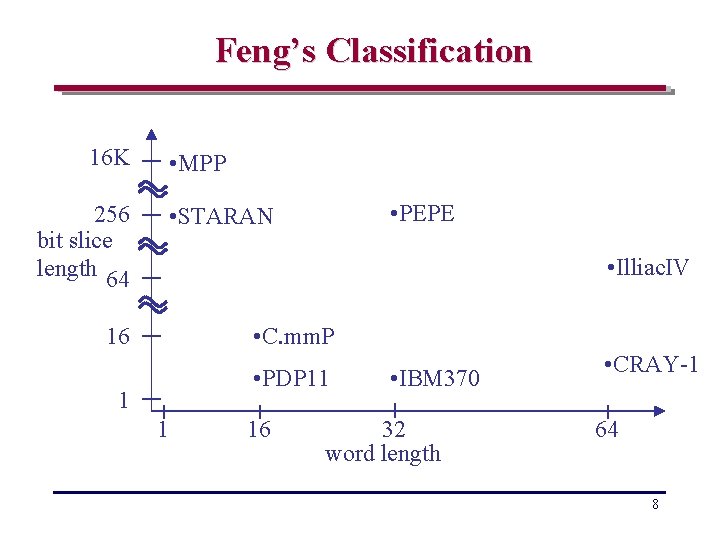

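Feng’s Classification

Feng’s scheme places each computer by its word length n (bits of one word processed in parallel) and its bit-slice length m (words processed in parallel, i.e. the number of bits in one bit slice); the maximum degree of parallelism is P = n × m.

Figure: bit-slice length (vertical axis: 1, 16, 64, 256, 16K) plotted against word length (horizontal axis: 1, 16, 32, 64), with the machines MPP, PEPE, STARAN, Illiac IV, C.mmP, PDP-11, IBM 370 and CRAY-1.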
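Feng’s two parameters lead to a simple calculation. This is a minimal sketch, assuming the usual four Feng categories (WSBS, WPBS, WSBP, WPBP: word-serial or word-parallel combined with bit-serial or bit-parallel) and (n, m) values for four machines that are assumed from the figure’s axis ticks rather than stated on the slide; the class name `FengPoint` is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FengPoint:
    name: str
    word_length: int       # n: bits of one word processed in parallel
    bit_slice_length: int  # m: words processed in parallel (bits in one bit slice)

    @property
    def max_parallelism(self) -> int:
        # Maximum degree of parallelism P = n * m.
        return self.word_length * self.bit_slice_length

    @property
    def category(self) -> str:
        # WS/WP: word-serial (m == 1) or word-parallel (m > 1);
        # BS/BP: bit-serial (n == 1) or bit-parallel (n > 1).
        words = "WP" if self.bit_slice_length > 1 else "WS"
        bits = "BP" if self.word_length > 1 else "BS"
        return words + bits


# (n, m) values assumed from the figure's axes.
MACHINES = [
    FengPoint("PDP-11", 16, 1),
    FengPoint("Illiac IV", 64, 64),
    FengPoint("STARAN", 1, 256),
    FengPoint("MPP", 1, 16 * 1024),
]

if __name__ == "__main__":
    for machine in MACHINES:
        print(f"{machine.name:10} {machine.category}  P = {machine.max_parallelism}")
```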

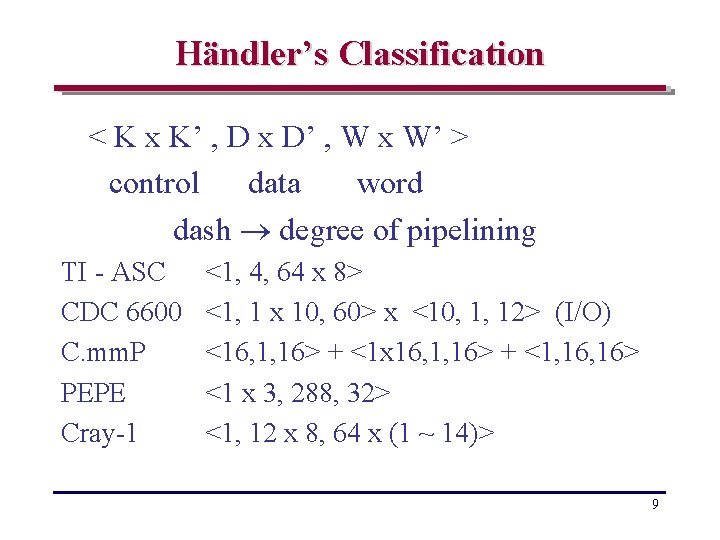

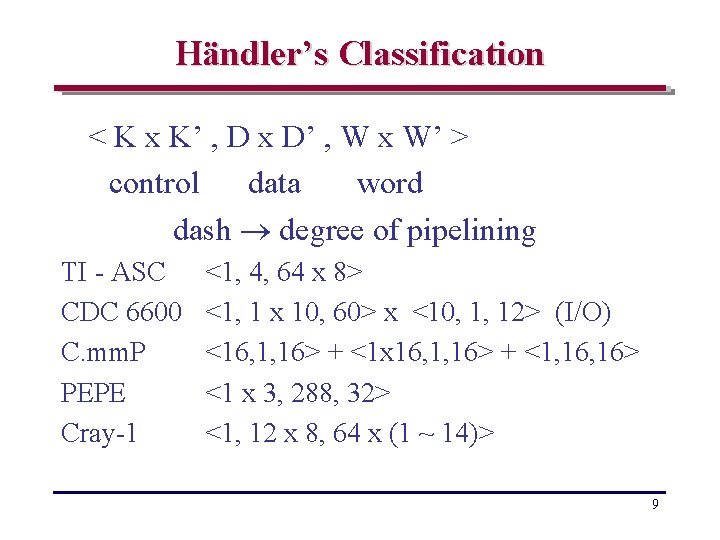

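Händler’s Classification

A computer is described by the triple < K × K′, D × D′, W × W′ >, where K is the number of control units, D the number of data (ALU) units per control unit and W the word length; the primed ("dash") terms give the degree of pipelining at each level.

| Machine | Händler triple |
|---|---|
| TI-ASC | <1, 4, 64 × 8> |
| CDC 6600 | <1, 1 × 10, 60> × <10, 1, 12> (I/O) |
| C.mmP | <16, 1, 16> + <1 × 16, 1, 16> + <1, 16> |
| PEPE | <1 × 3, 288, 32> |
| Cray-1 | <1, 12 × 8, 64 × (1 ~ 14)> |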

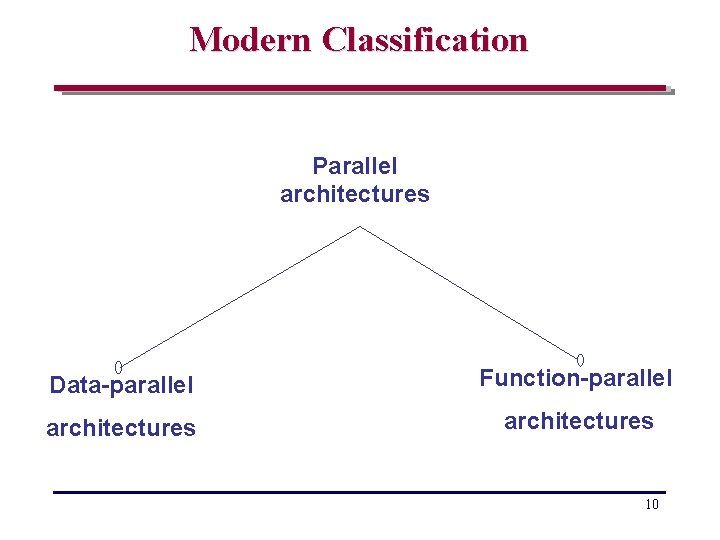

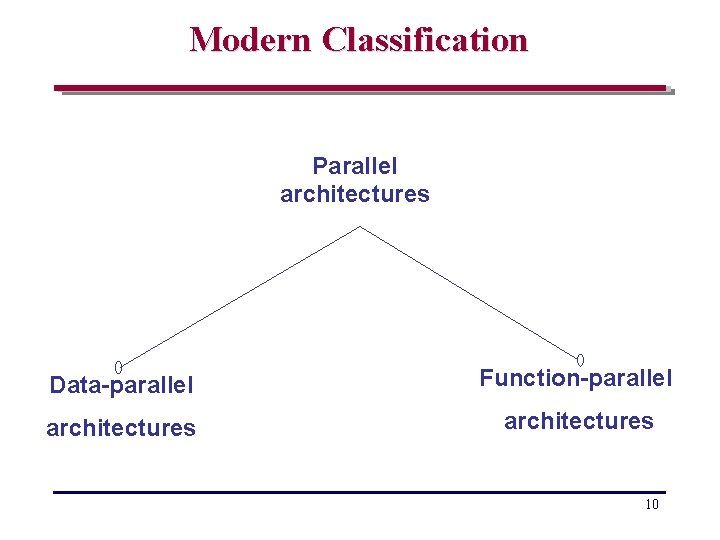

Modern Classification

- Parallel architectures
  - Data-parallel architectures
  - Function-parallel architectures

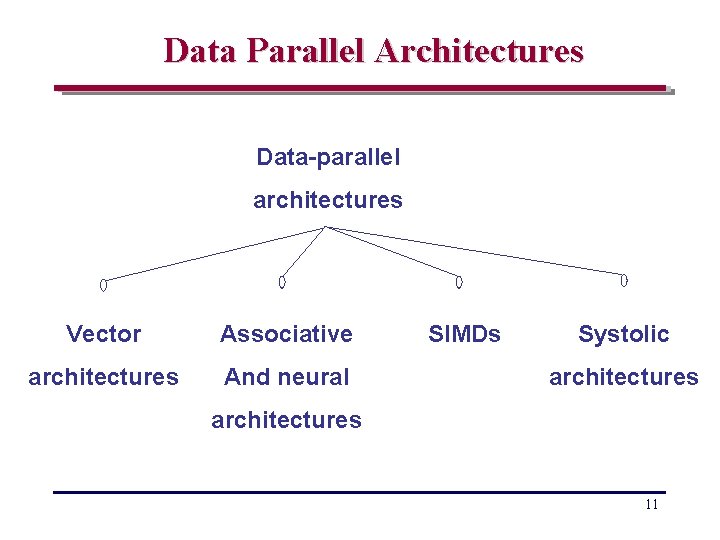

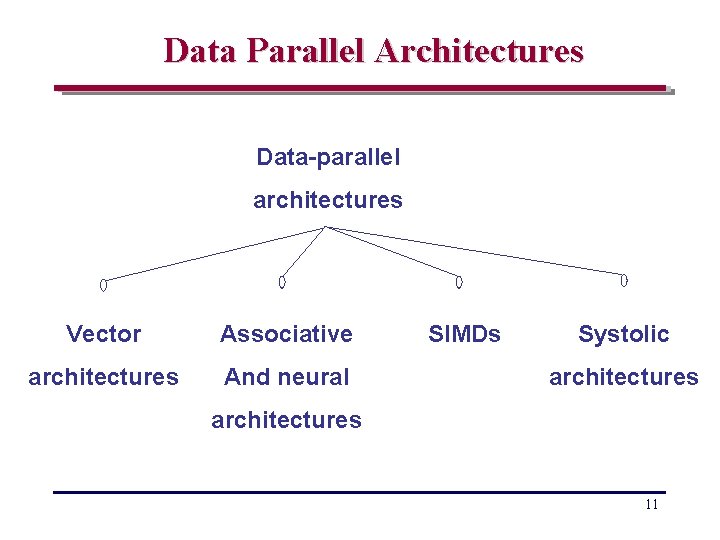

Data Parallel Architectures

- Data-parallel architectures
  - Vector architectures
  - Associative and neural architectures
  - SIMDs
  - Systolic architectures

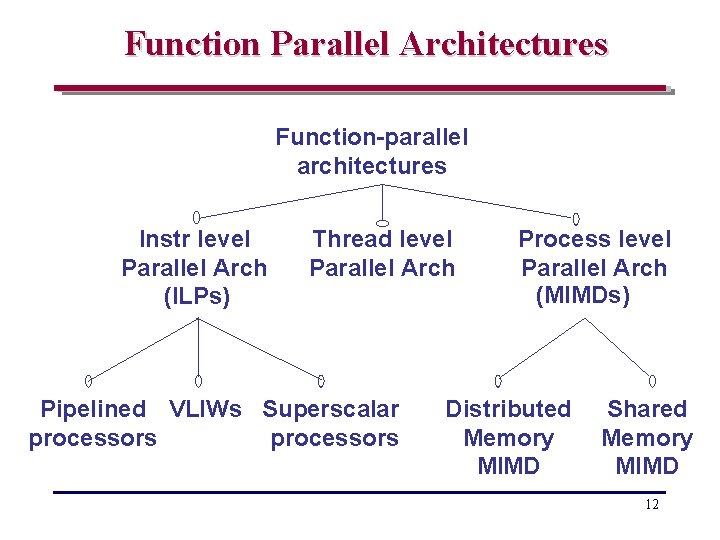

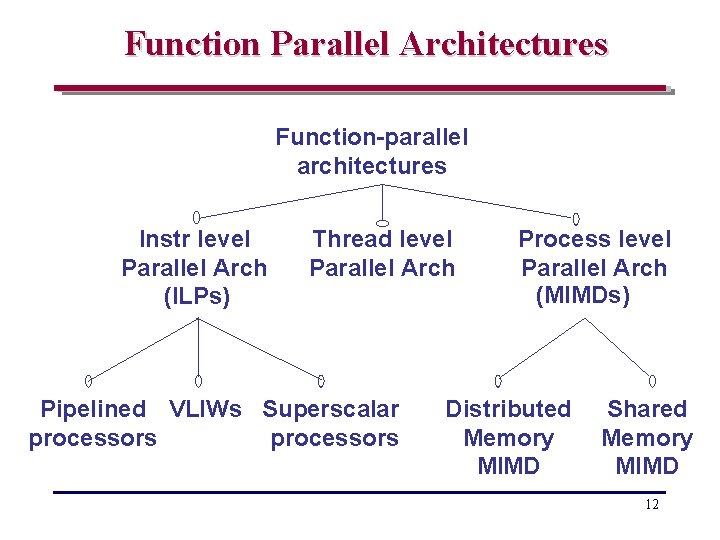

Function Parallel Architectures

- Function-parallel architectures
  - Instruction level Parallel Architectures (ILPs)
    - Pipelined processors
    - VLIWs
    - Superscalar processors
  - Thread level Parallel Architectures
  - Process level Parallel Architectures (MIMDs)
    - Distributed Memory MIMD
    - Shared Memory MIMD

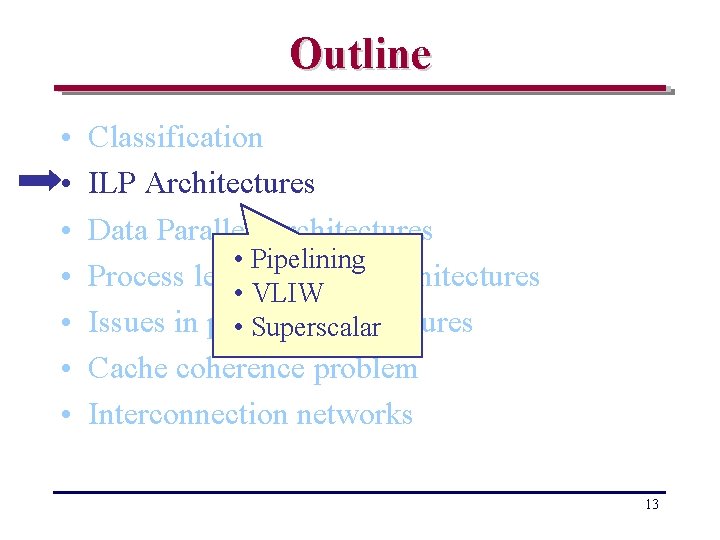

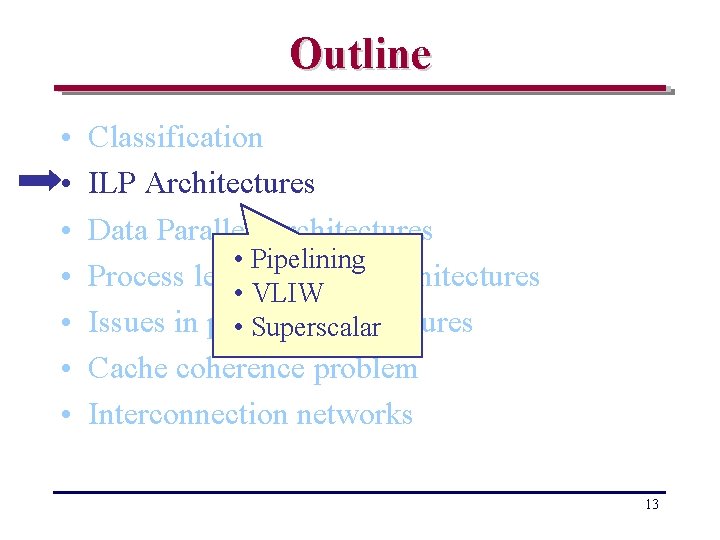

Outline

- Classification
- ILP Architectures
  - Pipelining
  - VLIW
  - Superscalar
- Data Parallel Architectures
- Process level Parallel Architectures
- Issues in parallel architectures
- Cache coherence problem
- Interconnection networks

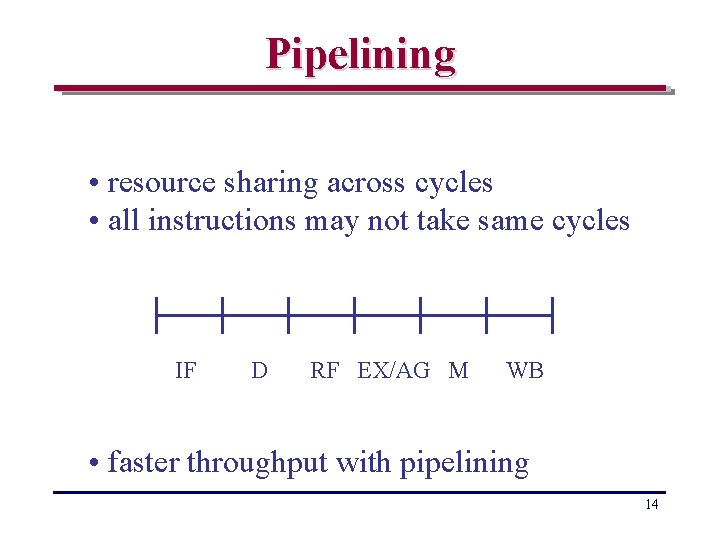

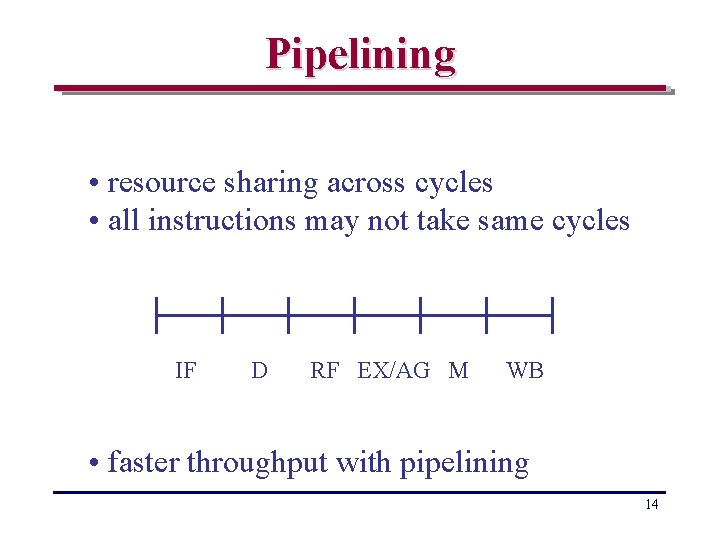

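Pipelining

- Resource sharing across cycles
- Not all instructions necessarily take the same number of cycles
- Stages: IF (instruction fetch), D (decode), RF (register fetch), EX/AG (execute / address generation), M (memory access), WB (write back)
- Pipelining gives higher throughput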
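The throughput gain can be sketched under the simplifying assumption that every instruction spends exactly one cycle in each of the six stages and that no hazards occur (real instructions, as noted above, may not all take the same number of cycles). The helper names are hypothetical.

```python
from typing import List

STAGES = ["IF", "D", "RF", "EX/AG", "M", "WB"]


def unpipelined_cycles(n_instructions: int, stages: int) -> int:
    # Each instruction occupies the whole datapath for all of its stages.
    return n_instructions * stages


def pipelined_cycles(n_instructions: int, stages: int) -> int:
    # Ideal pipeline: `stages` cycles to fill, then one completion per cycle.
    if n_instructions == 0:
        return 0
    return stages + n_instructions - 1


def timeline(n_instructions: int) -> List[str]:
    # Instruction i enters IF in cycle i, so its row is shifted right by i columns.
    width = 6
    return [
        " " * (width * i) + "".join(f"{stage:<{width}}" for stage in STAGES)
        for i in range(n_instructions)
    ]


if __name__ == "__main__":
    print("\n".join(timeline(3)))
    n, s = 100, len(STAGES)
    print(unpipelined_cycles(n, s), pipelined_cycles(n, s))
```

With 100 instructions the ideal six-stage pipeline needs 105 cycles instead of 600; the speedup approaches the stage count as the instruction count grows.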

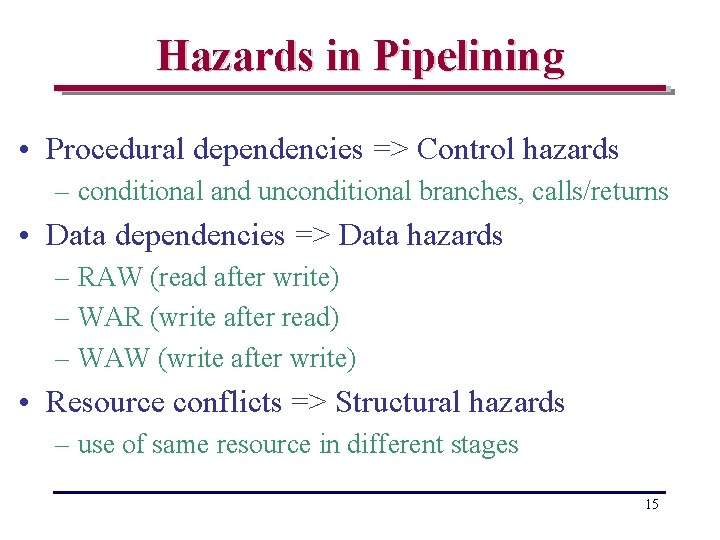

Hazards in Pipelining

- Procedural dependencies => control hazards
  - conditional and unconditional branches, calls/returns
- Data dependencies => data hazards
  - RAW (read after write)
  - WAR (write after read)
  - WAW (write after write)
- Resource conflicts => structural hazards
  - use of the same resource in different stages
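The three data-hazard types can be detected by comparing the registers that two instructions read and write. A minimal sketch, assuming each instruction writes at most one register; `Instr` and `data_hazards` are hypothetical names, and control and structural hazards are not modelled.

```python
from dataclasses import dataclass
from typing import Optional, Set, Tuple


@dataclass(frozen=True)
class Instr:
    text: str
    dest: Optional[str]          # register written, or None
    srcs: Tuple[str, ...] = ()   # registers read


def data_hazards(earlier: Instr, later: Instr) -> Set[str]:
    """Data hazards that `later` has on `earlier` (earlier comes first in program order)."""
    hazards: Set[str] = set()
    if earlier.dest is not None and earlier.dest in later.srcs:
        hazards.add("RAW")  # later reads a value earlier may not have written yet
    if later.dest is not None and later.dest in earlier.srcs:
        hazards.add("WAR")  # later could overwrite a source before earlier reads it
    if earlier.dest is not None and earlier.dest == later.dest:
        hazards.add("WAW")  # the two writes could complete in the wrong order
    return hazards


if __name__ == "__main__":
    add = Instr("ADD R1, R2, R3", "R1", ("R2", "R3"))
    print(data_hazards(add, Instr("SUB R4, R1, R5", "R4", ("R1", "R5"))))  # {'RAW'}
    print(data_hazards(add, Instr("MUL R2, R6, R7", "R2", ("R6", "R7"))))  # {'WAR'}
    print(data_hazards(add, Instr("OR R1, R8, R9", "R1", ("R8", "R9"))))   # {'WAW'}
```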

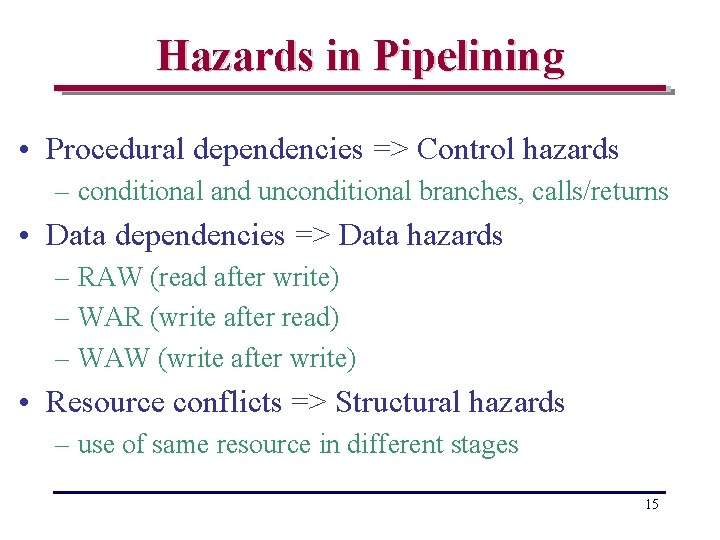

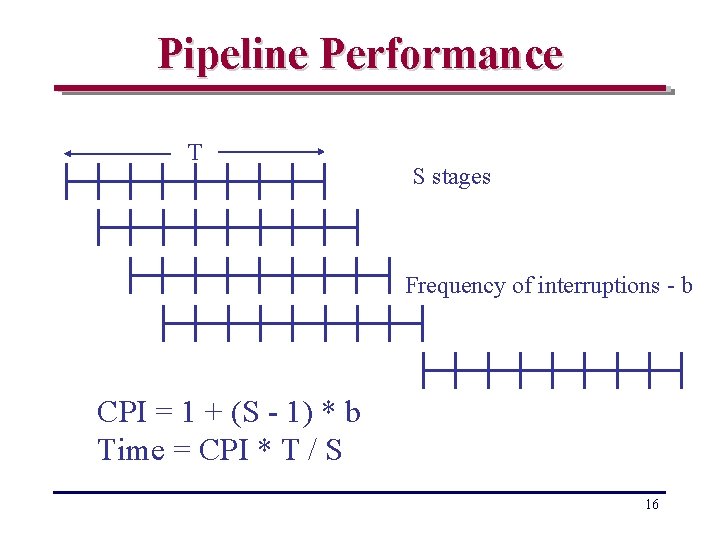

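Pipeline Performance

An instruction that takes time T without pipelining is split into S stages of T/S each. If b is the frequency of interruptions (the fraction of instructions that disrupt the pipeline, each costing S − 1 cycles), then

$$\text{CPI} = 1 + (S - 1)\,b$$

$$\text{Time per instruction} = \text{CPI} \cdot \frac{T}{S}$$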
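The model CPI = 1 + (S − 1)·b, time = CPI · T/S can be evaluated for a few pipeline depths. The values T = 10 ns and b = 0.2 below are illustrative assumptions, not figures from the slides.

```python
def pipeline_cpi(stages: int, b: float) -> float:
    # Each interruption is assumed to cost the S - 1 cycles needed to refill the pipeline.
    return 1 + (stages - 1) * b


def time_per_instruction(T: float, stages: int, b: float) -> float:
    # Each stage takes T / S, and on average CPI stage-times pass per instruction.
    return pipeline_cpi(stages, b) * T / stages


if __name__ == "__main__":
    T, b = 10.0, 0.2  # illustrative values: T in ns, 20% of instructions interrupt
    for S in (1, 5, 10, 20, 40):
        print(S, round(pipeline_cpi(S, b), 2), round(time_per_instruction(T, S, b), 3))
```

With these numbers the time per instruction falls from 10 ns at S = 1 to 2.2 ns at S = 40. Algebraically it approaches b·T (2 ns here) as S grows, so deeper pipelines give diminishing returns even before per-stage latch overhead, which this model ignores, is counted.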

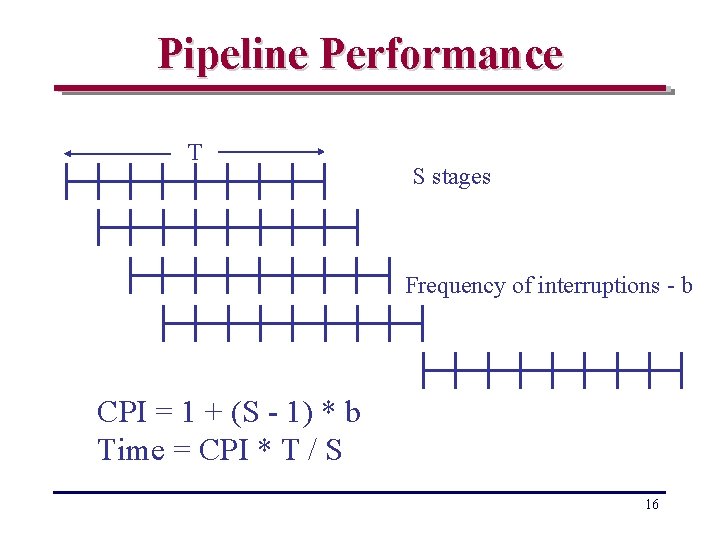

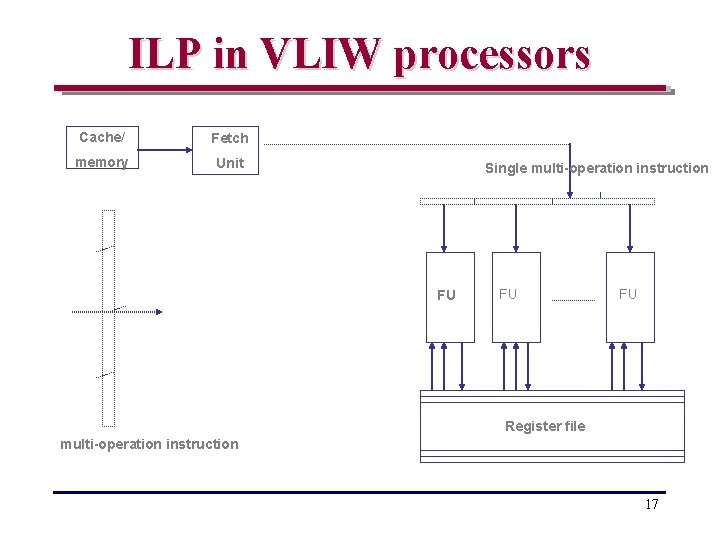

ILP in VLIW processors

Figure: the fetch unit reads a single multi-operation instruction from cache/memory; its operations go to several functional units (FU), which share one register file.
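The defining property of a multi-operation instruction is that all of its operations issue in the same cycle, so every functional unit reads the register file before any result is written back. The sketch below assumes three FUs, a toy three-opcode ALU and `None` for an empty (NOP) slot; all names are hypothetical. `nop_fraction` shows how unfilled slots still consume instruction bits, one reason VLIW code density is poor.

```python
from typing import Callable, Dict, List, Optional, Tuple

Op = Tuple[str, str, str, str]    # (opcode, dest, src1, src2)
Bundle = List[Optional[Op]]       # one slot per functional unit; None = NOP

NUM_FUS = 3
ALU: Dict[str, Callable[[int, int], int]] = {
    "add": lambda a, b: a + b,
    "sub": lambda a, b: a - b,
    "mul": lambda a, b: a * b,
}


def execute_bundle(regs: Dict[str, int], bundle: Bundle) -> None:
    """Issue every operation of one multi-operation instruction in the same cycle."""
    if len(bundle) != NUM_FUS:
        raise ValueError("a bundle must have exactly one slot per functional unit")
    ops = [op for op in bundle if op is not None]
    # Read phase: all FUs read the register file before any of them writes it.
    results = [(dest, ALU[opc](regs[s1], regs[s2])) for opc, dest, s1, s2 in ops]
    dests = [dest for dest, _ in results]
    if len(dests) != len(set(dests)):
        raise ValueError("the compiler must not schedule two writes to one register")
    # Write phase: commit all results at the end of the cycle.
    for dest, value in results:
        regs[dest] = value


def nop_fraction(program: List[Bundle]) -> float:
    # Empty slots still occupy instruction bits, which hurts code density.
    slots = sum(len(bundle) for bundle in program)
    nops = sum(op is None for bundle in program for op in bundle)
    return nops / slots


if __name__ == "__main__":
    regs = {"r1": 1, "r2": 2, "r3": 3, "r4": 0, "r5": 5}
    program: List[Bundle] = [
        [("add", "r1", "r2", "r3"), ("mul", "r4", "r1", "r5"), None],
        [("sub", "r5", "r1", "r4"), None, None],
    ]
    for bundle in program:
        execute_bundle(regs, bundle)
    print(regs, nop_fraction(program))
```

In the first bundle, `mul r4, r1, r5` sees the old value of r1 (1), not the 5 produced by the `add` in the same instruction; half of the six slots in this program are NOPs.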

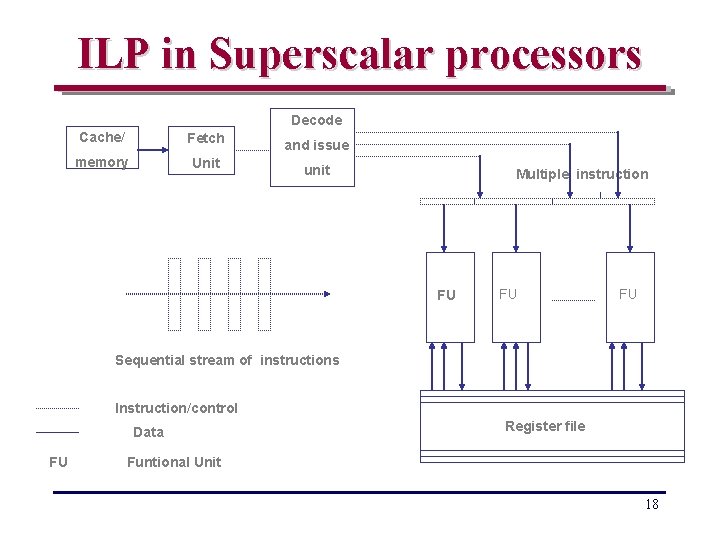

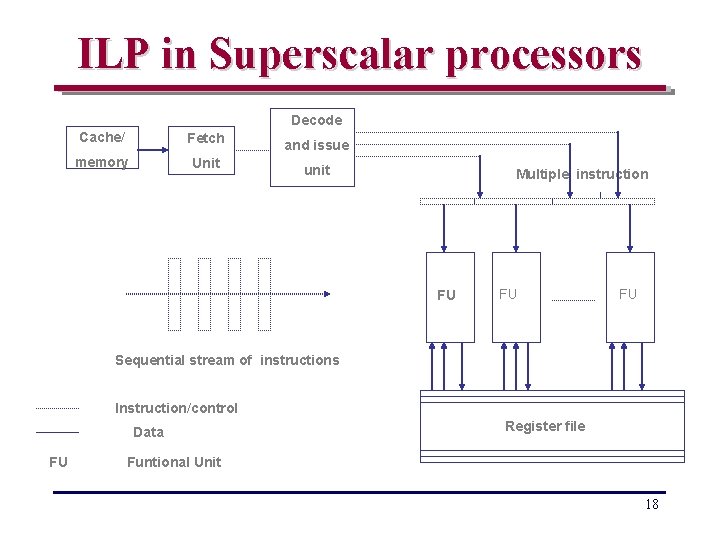

ILP in Superscalar processors

Figure: the fetch unit reads a sequential stream of instructions from cache/memory; a decode and issue unit sends multiple instructions per cycle to several functional units (FU), which share a register file. Arrows distinguish instruction/control flow from data flow.
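A superscalar processor must discover at run time which instructions from the sequential stream can issue together. This sketch assumes in-order issue of up to `width` instructions per cycle, single-cycle functional units of every needed type, and a rule that an instruction cannot issue in the same cycle as an earlier instruction whose result it reads (RAW) or whose destination it shares (WAW). The names are hypothetical.

```python
from typing import List, Optional, Sequence, Set, Tuple

Instr = Tuple[str, Optional[str], Tuple[str, ...]]  # (text, dest, srcs)


def issue_groups(program: Sequence[Instr], width: int) -> List[List[str]]:
    """Group a sequential instruction stream into per-cycle issue groups (in order)."""
    if width < 1:
        raise ValueError("issue width must be at least 1")
    groups: List[List[str]] = []
    current: List[str] = []
    written: Set[str] = set()  # destinations of instructions already in `current`
    for text, dest, srcs in program:
        full = len(current) == width
        # RAW or WAW on an instruction in the same group blocks issue this cycle.
        conflict = any(s in written for s in srcs) or (dest is not None and dest in written)
        if full or conflict:
            groups.append(current)
            current, written = [], set()
        current.append(text)
        if dest is not None:
            written.add(dest)
    if current:
        groups.append(current)
    return groups


if __name__ == "__main__":
    program = [
        ("ADD R1, R2, R3", "R1", ("R2", "R3")),
        ("ADD R4, R5, R6", "R4", ("R5", "R6")),
        ("SUB R7, R1, R4", "R7", ("R1", "R4")),
        ("MUL R8, R2, R5", "R8", ("R2", "R5")),
    ]
    for width in (1, 2, 4):
        print(width, issue_groups(program, width))
```

For this program a 4-wide machine takes the same two cycles as a 2-wide one, because the SUB must wait for both ADDs.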

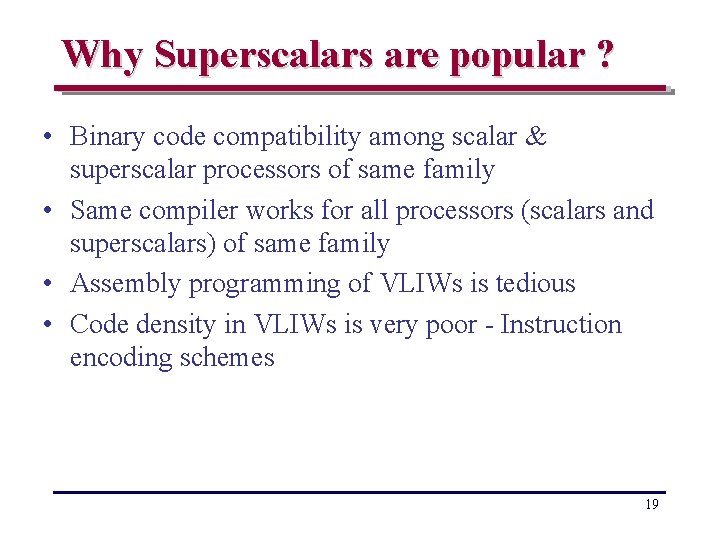

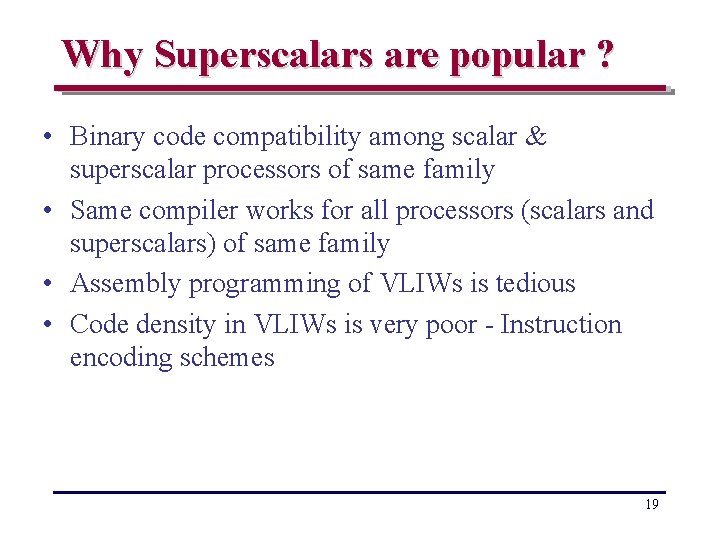

Why are superscalars popular?

- Binary code compatibility among scalar and superscalar processors of the same family
- The same compiler works for all processors (scalar and superscalar) of the same family
- Assembly programming of VLIWs is tedious
- Code density in VLIWs is very poor (instruction encoding schemes)

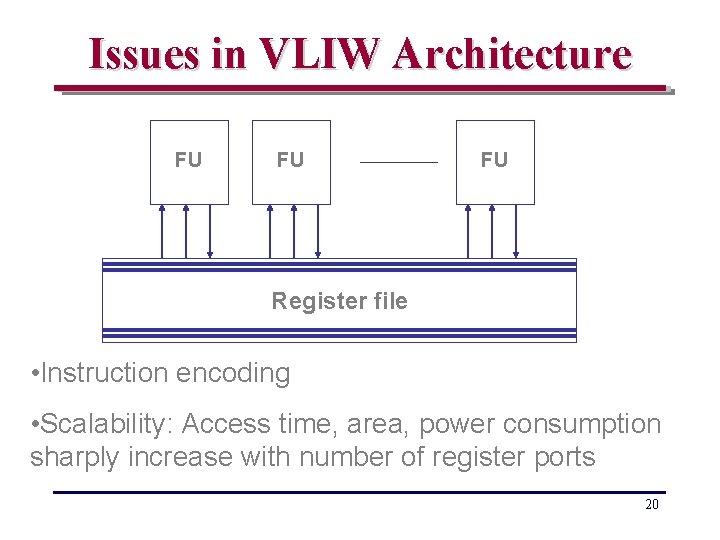

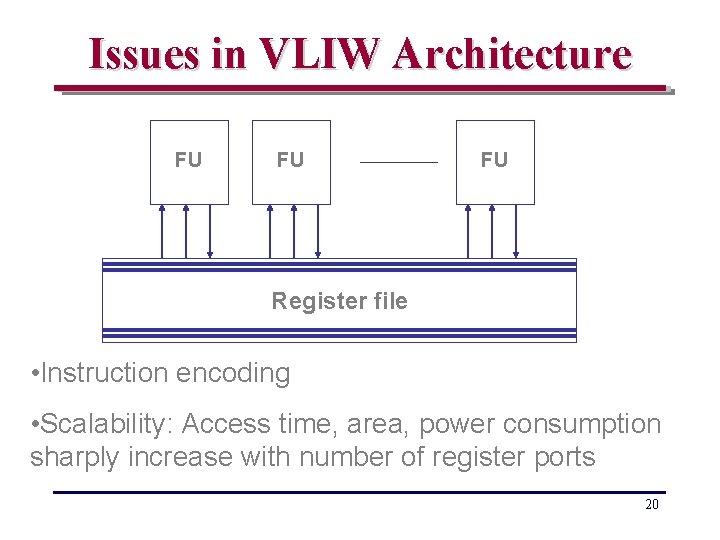

Issues in VLIW Architecture

Figure: several functional units (FU) sharing one register file.

- Instruction encoding
- Scalability: access time, area and power consumption increase sharply with the number of register ports

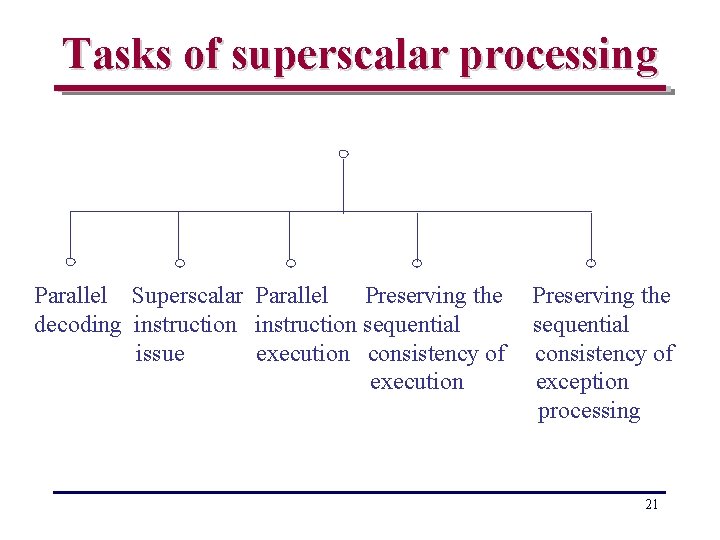

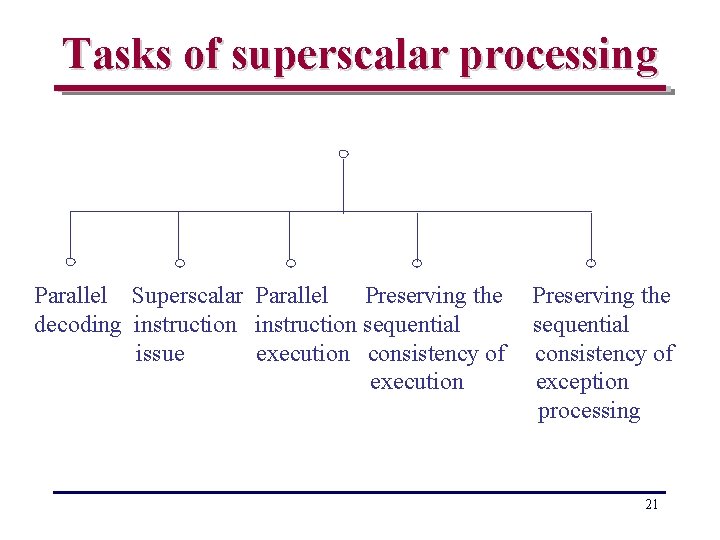

Tasks of superscalar processing

- Parallel decoding
- Superscalar instruction issue
- Parallel instruction execution
- Preserving the sequential consistency of execution
- Preserving the sequential consistency of exception processing
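One common way to preserve the sequential consistency of execution and of exception processing is a reorder buffer: instructions may finish out of order, but their results are committed strictly in program order. The sketch below models only that retirement logic; `ReorderBuffer` and its methods are hypothetical, and register renaming, operand values and actual execution are omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RobEntry:
    text: str
    done: bool = False
    exception: Optional[str] = None


class ReorderBuffer:
    """Instructions may finish out of order but retire (update state) in program order."""

    def __init__(self, program: List[str]) -> None:
        self.entries = [RobEntry(text) for text in program]  # program order
        self.head = 0                 # oldest instruction not yet retired
        self.retired: List[str] = []  # architectural state reflects these
        self.flushed: List[str] = []  # discarded after a precise exception

    def complete(self, index: int, exception: Optional[str] = None) -> None:
        # Execution units report completion in any order.
        self.entries[index].done = True
        self.entries[index].exception = exception

    def retire(self) -> Optional[str]:
        """Retire from the head while instructions are done; report an exception if reached."""
        while self.head < len(self.entries) and self.entries[self.head].done:
            entry = self.entries[self.head]
            if entry.exception is not None:
                # All older instructions have retired and no younger one has,
                # so the exception is precise; discard the faulting and younger ones.
                self.flushed = [e.text for e in self.entries[self.head:]]
                del self.entries[self.head:]
                return f"{entry.exception} at {entry.text}"
            self.retired.append(entry.text)
            self.head += 1
        return None


if __name__ == "__main__":
    rob = ReorderBuffer(["i1", "i2", "i3", "i4"])
    rob.complete(2)                       # i3 finishes first ...
    print(rob.retire(), rob.retired)      # ... but cannot retire before i1
    rob.complete(0)
    print(rob.retire(), rob.retired)
    rob.complete(3)
    rob.complete(1, exception="page fault")
    print(rob.retire(), rob.retired, rob.flushed)
```

Here i3 finishes first but is never committed ahead of i1; when i2 faults, only i1 has updated the state and i2 to i4 are discarded, so the exception is precise.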

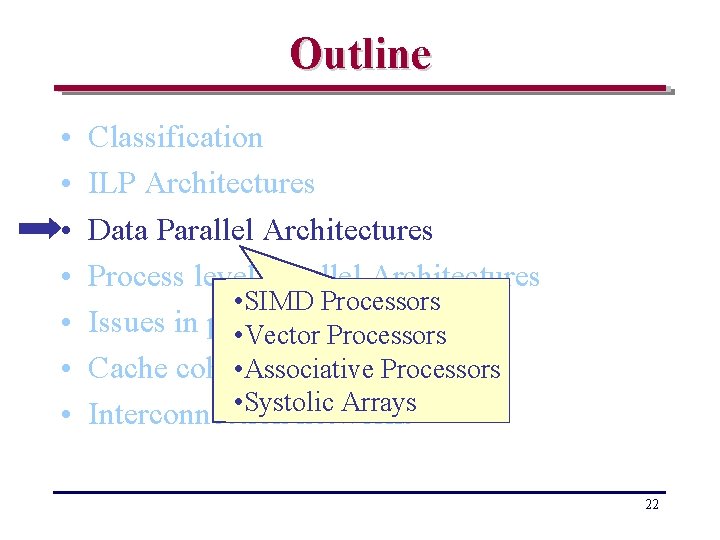

Outline

- Classification
- ILP Architectures
- Data Parallel Architectures
  - SIMD Processors
  - Vector Processors
  - Associative Processors
  - Systolic Arrays
- Process level Parallel Architectures
- Issues in parallel architectures
- Cache coherence problem
- Interconnection networks

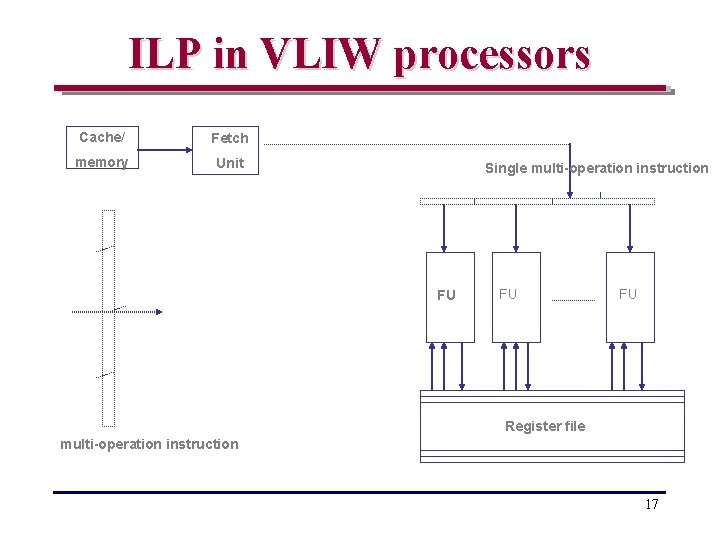

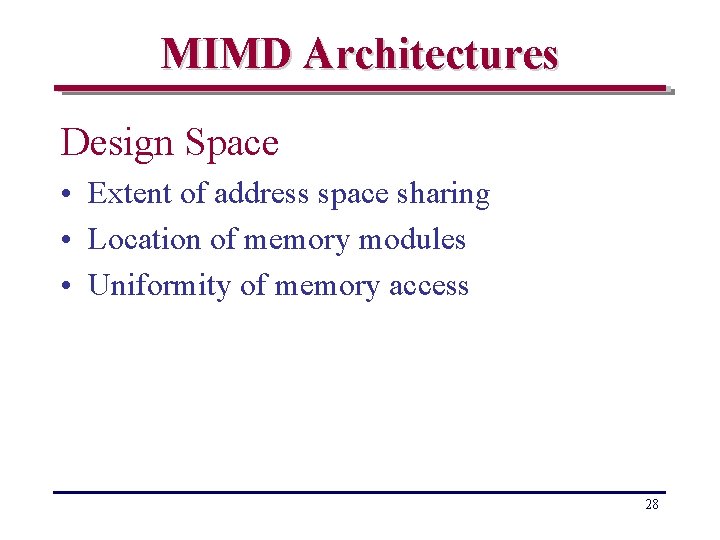

Data Parallel Architectures

- SIMD Processors
  - Multiple processing elements driven by a single instruction stream
- Vector Processors
  - Uni-processors with vector instructions
- Associative Processors
  - SIMD-like processors with associative memory
- Systolic Arrays
  - Application-specific VLSI structures
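Of the four classes, vector processors are the simplest to illustrate in a few lines. A vector instruction processes at most one vector register's worth of elements, so longer loops are split into strips (strip-mining). This sketch assumes a maximum vector length `MVL` of 64 elements; `vadd` stands in for a single vector instruction and is a hypothetical name.

```python
from typing import List, Tuple

MVL = 64  # assumed maximum vector length (elements per vector register)


def vadd(a: List[float], b: List[float]) -> List[float]:
    # Models a single vector instruction: element-wise add of up to MVL elements.
    if len(a) != len(b) or len(a) > MVL:
        raise ValueError("operands must have equal length <= MVL")
    return [x + y for x, y in zip(a, b)]


def strip_mined_add(a: List[float], b: List[float]) -> Tuple[List[float], int]:
    """Add arbitrary-length vectors in strips of at most MVL elements."""
    if len(a) != len(b):
        raise ValueError("vectors must have equal length")
    out: List[float] = []
    instructions = 0
    for start in range(0, len(a), MVL):
        vl = min(MVL, len(a) - start)  # vector length for this strip
        out.extend(vadd(a[start:start + vl], b[start:start + vl]))
        instructions += 1
    return out, instructions


if __name__ == "__main__":
    n = 200
    result, count = strip_mined_add([1.0] * n, [2.0] * n)
    print(len(result), count, result[:3])
```

A 200-element add needs four vector instructions (strips of 64, 64, 64 and 8 elements) rather than 200 scalar ones.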

![Systolic Arrays [H. T. Kung 1978]](https://slidetodoc.com/presentation_image/382fc4c949d58aaaf6acef5aedd582e2/image-24.jpg)

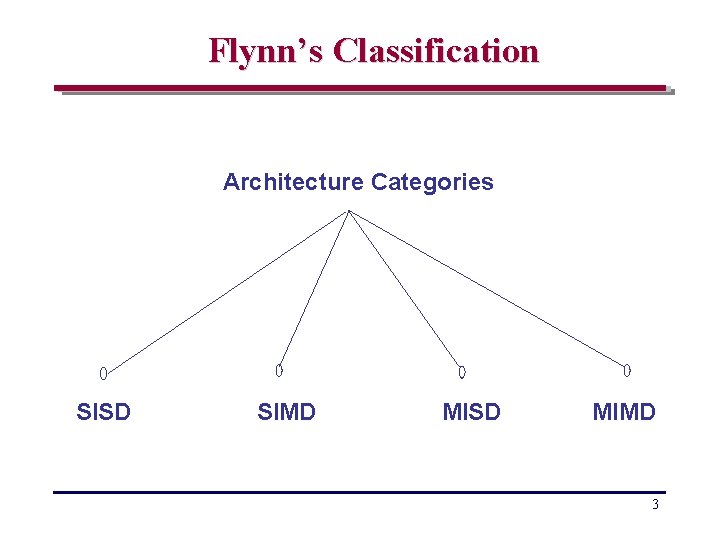

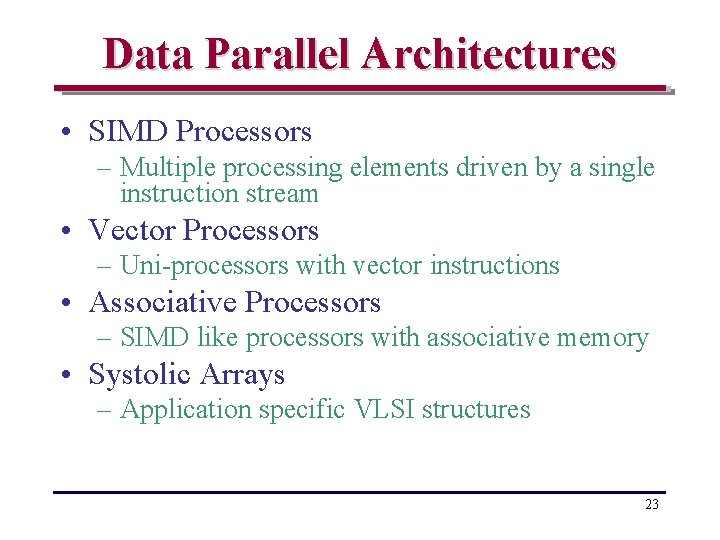

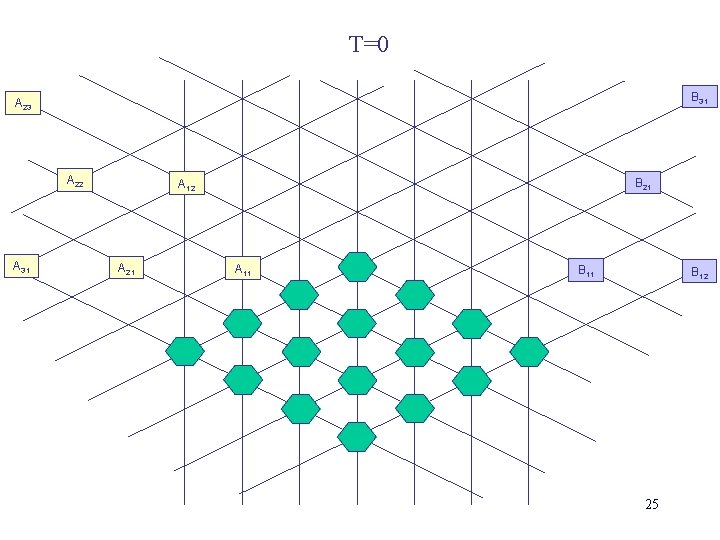

Systolic Arrays [H. T. Kung 1978]

- Simplicity
- Regularity
- Concurrency
- Communication

Example: band matrix multiplication

Figure: the systolic array at T = 0, with elements of A (a11, a12, a21, a22, a23, a31) and B (b12, b21, b31) entering the array.
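The systolic principles (regular processing elements, local communication, all PEs computing concurrently) can be seen in a cycle-by-cycle simulation. The slide's example targets band matrices; this sketch swaps in a simpler, commonly used rectangular mesh for dense matrices, in which A streams in from the left and B from the top, each skewed by one cycle per row or column, and every PE(i, j) accumulates one element of C. The function name is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def systolic_matmul(A: Matrix, B: Matrix) -> Matrix:
    """Cycle-by-cycle simulation of an n x m mesh of PEs computing C = A * B."""
    n, k = len(A), len(A[0])
    if len(B) != k:
        raise ValueError("inner dimensions must match")
    m = len(B[0])
    C = [[0.0] * m for _ in range(n)]
    a_reg = [[0.0] * m for _ in range(n)]  # A element currently inside PE(i, j)
    b_reg = [[0.0] * m for _ in range(n)]  # B element currently inside PE(i, j)
    for t in range(n + m + k - 2):
        # Communication: A moves one PE right, B moves one PE down. Updating from
        # the far corner means each PE copies its neighbour's value from cycle t - 1.
        for i in range(n - 1, -1, -1):
            for j in range(m - 1, -1, -1):
                if j > 0:
                    a_reg[i][j] = a_reg[i][j - 1]
                else:  # row i of A enters from the left, delayed by i cycles
                    s = t - i
                    a_reg[i][j] = A[i][s] if 0 <= s < k else 0.0
                if i > 0:
                    b_reg[i][j] = b_reg[i - 1][j]
                else:  # column j of B enters from the top, delayed by j cycles
                    s = t - j
                    b_reg[i][j] = B[s][j] if 0 <= s < k else 0.0
        # Computation: every PE does one multiply-accumulate in the same cycle.
        for i in range(n):
            for j in range(m):
                C[i][j] += a_reg[i][j] * b_reg[i][j]
    return C


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    print(systolic_matmul(A, B))  # [[19.0, 22.0], [43.0, 50.0]]
```

Element a(i, s) and element b(s, j) meet in PE(i, j) at cycle i + j + s, so an n × m array multiplying an n × k by a k × m matrix finishes after n + m + k − 2 cycles.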

Outline

- Classification
- ILP Architectures
- Data Parallel Architectures
- Process level Parallel Architectures
  - MIMD Processors
    - Shared Memory
    - Distributed Memory
- Issues in parallel architectures
- Cache coherence problem
- Interconnection networks

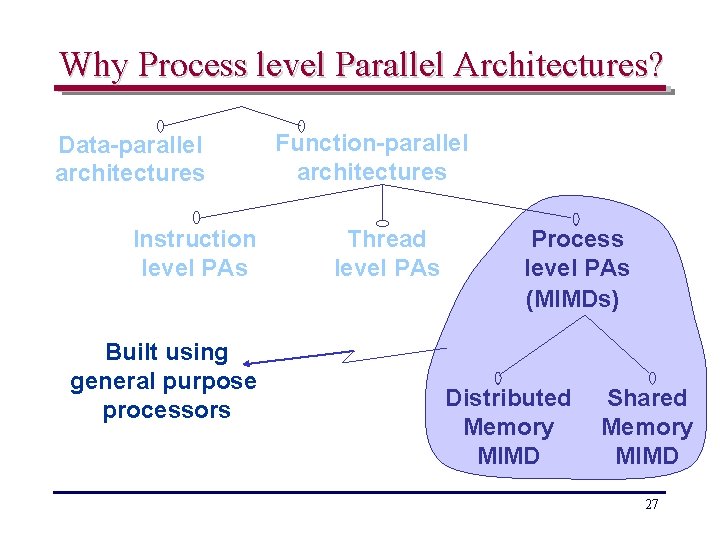

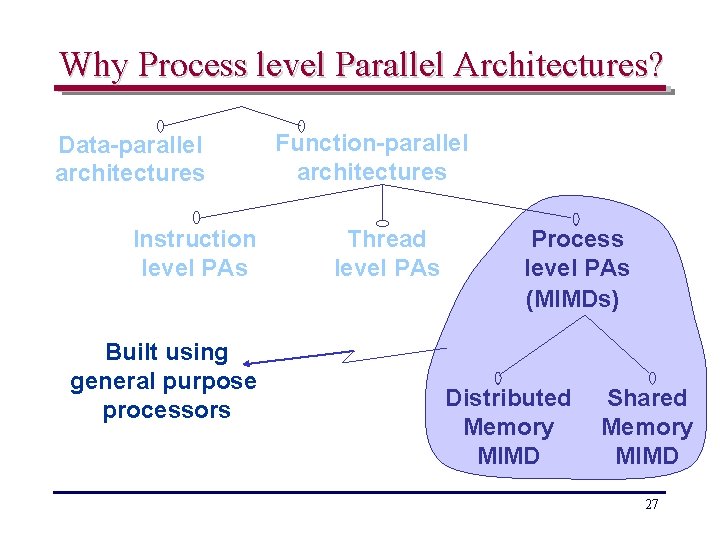

Why Process level Parallel Architectures?

- Data-parallel architectures
- Function-parallel architectures
  - Instruction level PAs
  - Thread level PAs
  - Process level PAs (MIMDs), built using general-purpose processors
    - Distributed Memory MIMD
    - Shared Memory MIMD

MIMD Architectures Design Space

- Extent of address space sharing
- Location of memory modules
- Uniformity of memory access

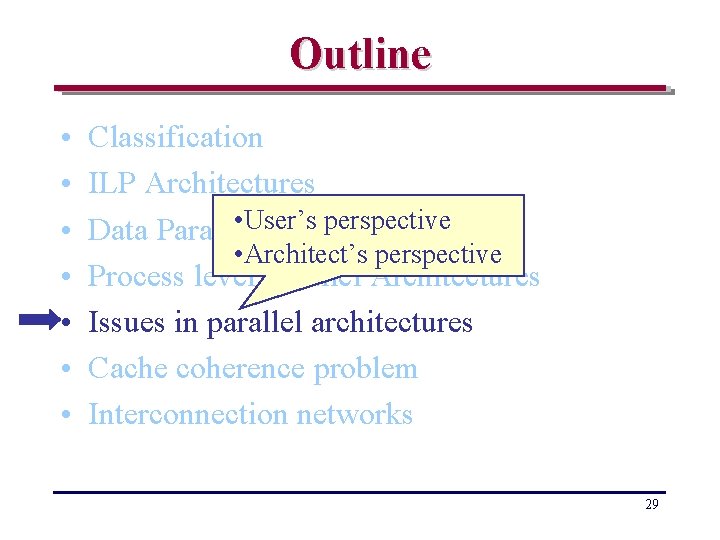

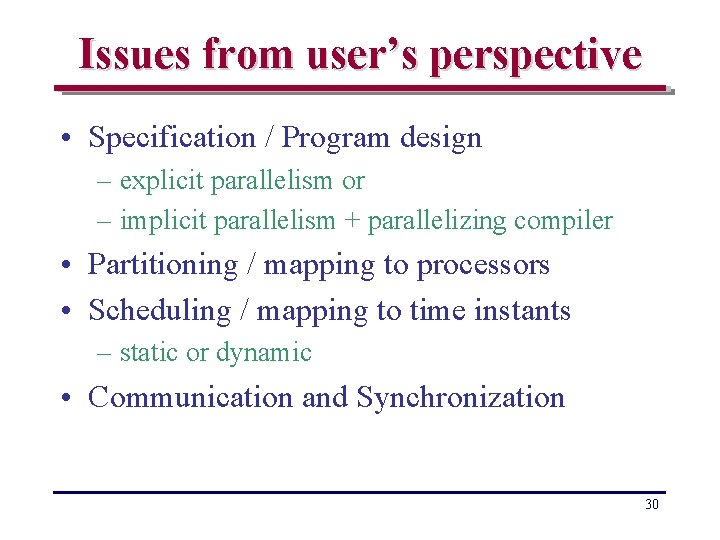

Outline

- Classification
- ILP Architectures
- Data Parallel Architectures
- Process level Parallel Architectures
- Issues in parallel architectures
  - User’s perspective
  - Architect’s perspective
- Cache coherence problem
- Interconnection networks

Issues from user’s perspective

- Specification / program design
  - explicit parallelism, or
  - implicit parallelism + parallelizing compiler
- Partitioning / mapping to processors
- Scheduling / mapping to time instants
  - static or dynamic
- Communication and synchronization

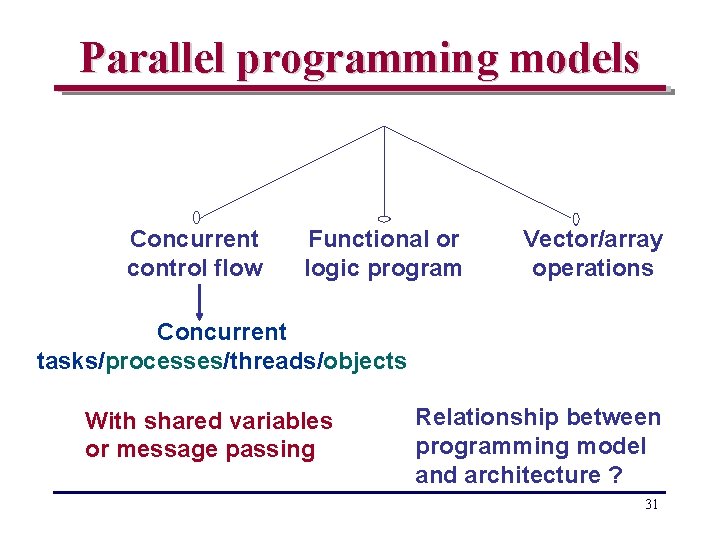

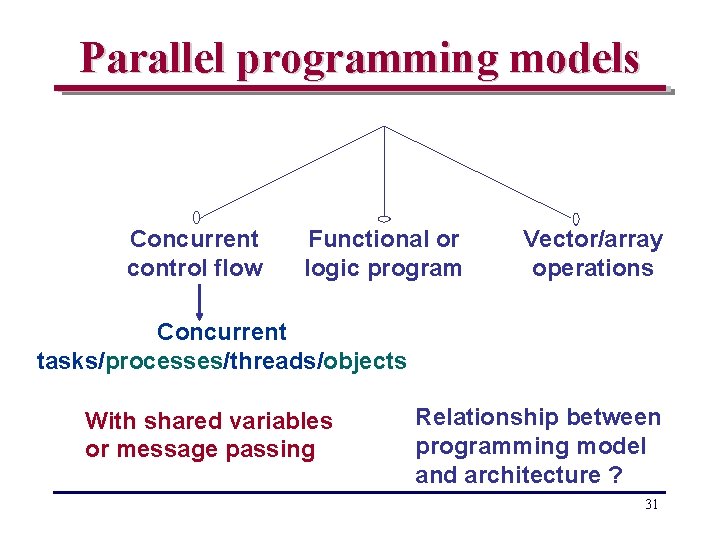

Parallel programming models

- Concurrent control flow
- Functional or logic programs
- Vector/array operations
- Concurrent tasks/processes/threads/objects
  - with shared variables or message passing

What is the relationship between the programming model and the architecture?
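The two flavours of concurrent tasks, shared variables and message passing, can be contrasted on the same job: summing a list in parallel chunks. This Python sketch uses the standard `threading` and `queue` modules; the function names are hypothetical, and under CPython's global interpreter lock it illustrates the programming model rather than a speedup.

```python
import queue
import threading
from typing import List


def shared_variable_sum(chunks: List[List[int]]) -> int:
    total = 0
    lock = threading.Lock()

    def worker(chunk: List[int]) -> None:
        nonlocal total
        partial = sum(chunk)
        with lock:  # synchronization protects the shared variable
            total += partial

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(chunks: List[List[int]]) -> int:
    mailbox: "queue.Queue[int]" = queue.Queue()

    def worker(chunk: List[int]) -> None:
        mailbox.put(sum(chunk))  # communicate the partial result as a message

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    # Receiving one message per worker is the only synchronization needed.
    total = sum(mailbox.get() for _ in chunks)
    for t in threads:
        t.join()
    return total


if __name__ == "__main__":
    data = list(range(1, 101))
    chunks = [data[i:i + 25] for i in range(0, len(data), 25)]
    print(shared_variable_sum(chunks), message_passing_sum(chunks))  # 5050 5050
```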

Issues from architect’s perspective

- Coherence problem in shared memory with caches
- Efficient interconnection networks

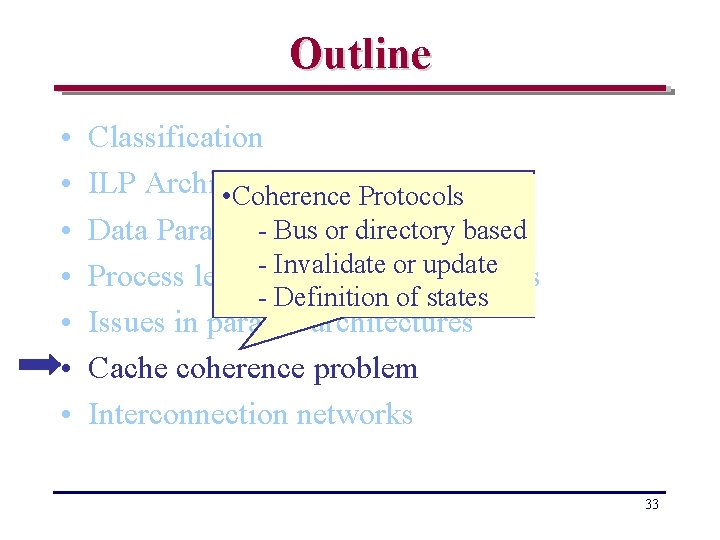

Outline

- Classification
- ILP Architectures
- Data Parallel Architectures
- Process level Parallel Architectures
- Issues in parallel architectures
- Cache coherence problem
  - Coherence protocols
    - Bus or directory based
    - Invalidate or update
    - Definition of states
- Interconnection networks

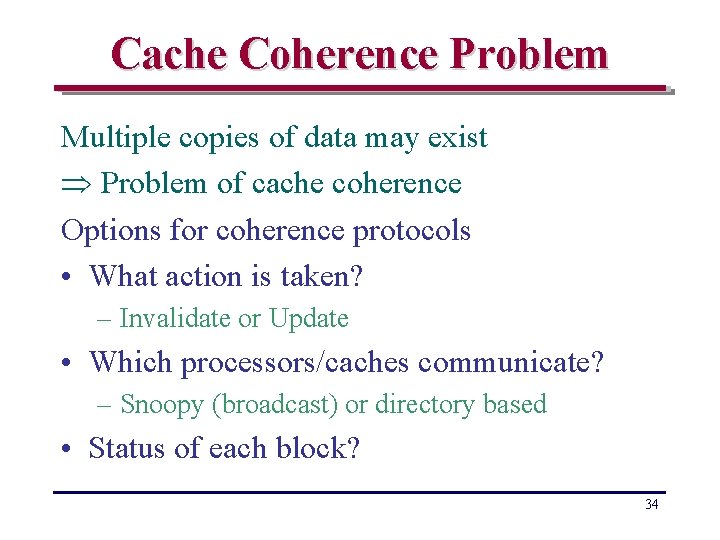

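Cache Coherence Problem

When several caches hold the same block, multiple copies of the data may exist, and a write to one copy can leave the others stale. This is the problem of cache coherence.

Options for coherence protocols:

- What action is taken on a write?
  - Invalidate or update
- Which processors/caches communicate?
  - Snoopy (broadcast) or directory based
- What is the status (state) of each block?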
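A snoopy, write-invalidate protocol with three block states (Modified, Shared, Invalid: MSI) is one combination of these options. This sketch models a single one-word block shared by several caches, with bus transactions collapsed into method calls; `SnoopyMSI` is a hypothetical name, and real protocols add transient states, bus arbitration and often further states.

```python
from enum import Enum
from typing import List, Optional


class State(Enum):
    MODIFIED = "M"
    SHARED = "S"
    INVALID = "I"


class SnoopyMSI:
    """Write-invalidate MSI protocol for one memory block shared by several caches."""

    def __init__(self, n_caches: int, initial: int = 0) -> None:
        self.memory = initial
        self.state: List[State] = [State.INVALID] * n_caches
        self.value: List[Optional[int]] = [None] * n_caches

    def _write_back_owner(self, requester: int) -> None:
        # A snooping cache that holds the block Modified supplies the latest value.
        for c, st in enumerate(self.state):
            if c != requester and st is State.MODIFIED:
                self.memory = self.value[c]
                self.state[c] = State.SHARED

    def read(self, c: int) -> Optional[int]:
        if self.state[c] is State.INVALID:       # read miss: bus read
            self._write_back_owner(c)
            self.value[c] = self.memory
            self.state[c] = State.SHARED
        return self.value[c]

    def write(self, c: int, v: int) -> None:
        if self.state[c] is not State.MODIFIED:  # bus invalidate / read-exclusive
            self._write_back_owner(c)
            for other in range(len(self.state)):
                if other != c:
                    self.state[other] = State.INVALID
                    self.value[other] = None
        self.state[c] = State.MODIFIED
        self.value[c] = v

    def states(self) -> str:
        return "".join(st.value for st in self.state)


if __name__ == "__main__":
    bus = SnoopyMSI(3)
    print(bus.read(0), bus.read(1), bus.states())  # 0 0 SSI
    bus.write(0, 42)
    print(bus.states())                            # MII
    print(bus.read(1), bus.states(), bus.memory)   # 42 SSI 42
```

After cache 0 writes 42, cache 1's copy has been invalidated, so its next read obtains 42 instead of the stale 0.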

Outline

- Classification
- ILP Architectures
- Data Parallel Architectures
- Process level Parallel Architectures
- Issues in parallel architectures
- Cache coherence problem
- Interconnection networks
  - Switching and control
  - Topology

Interconnection Networks

- Architectural variations:
  - Topology
  - Direct or indirect (through switches)
  - Static (fixed connections) or dynamic (connections established as required)
  - Routing type (store-and-forward / wormhole)
- Efficiency:
  - Delay
  - Bandwidth
  - Cost
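The routing type has a large effect on delay. Under a common first-order model that ignores routing decisions and contention, store-and-forward latency is (L/W)(D + 1) and wormhole latency is L/W + (F/W)·D, where L is the packet length, F the flit length, W the channel bandwidth and D the number of intermediate nodes. The parameter values below are illustrative assumptions.

```python
def store_and_forward_latency(packet_bits: float, bandwidth: float, intermediate_nodes: int) -> float:
    # Every node on the path buffers the whole packet before forwarding it.
    return (packet_bits / bandwidth) * (intermediate_nodes + 1)


def wormhole_latency(packet_bits: float, flit_bits: float, bandwidth: float, intermediate_nodes: int) -> float:
    # Only the header flit waits at each intermediate node; the rest of the
    # packet follows it through the path in pipelined fashion.
    return packet_bits / bandwidth + (flit_bits / bandwidth) * intermediate_nodes


if __name__ == "__main__":
    L, F, W = 1024, 32, 1.0  # bits, bits, bits per ns (illustrative values)
    for D in (1, 4, 16):
        print(D, store_and_forward_latency(L, W, D), wormhole_latency(L, F, W, D))
```

With these values, wormhole latency grows only from 1056 ns to 1536 ns as D goes from 1 to 16, while store-and-forward latency grows linearly from 2048 ns to 17408 ns.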

Books

- D. Sima, T. Fountain, P. Kacsuk, "Advanced Computer Architectures: A Design Space Approach", Addison-Wesley, 1997.
- M. J. Flynn, "Computer Architecture: Pipelined and Parallel Processor Design", Narosa Publishing House / Jones and Bartlett, 1996.
- J. L. Hennessy, D. A. Patterson, "Computer Architecture: A Quantitative Approach", Morgan Kaufmann Publishers, 2002.
- K. Hwang, "Advanced Computer Architecture: Parallelism, Scalability, Programmability", McGraw-Hill, 1993.
- H. G. Cragon, "Memory Systems and Pipelined Processors", Narosa Publishing House / Jones and Bartlett, 1998.
- D. E. Culler, J. P. Singh, A. Gupta, "Parallel Computer Architecture: A Hardware/Software Approach", Harcourt Asia / Morgan Kaufmann Publishers, 2000.