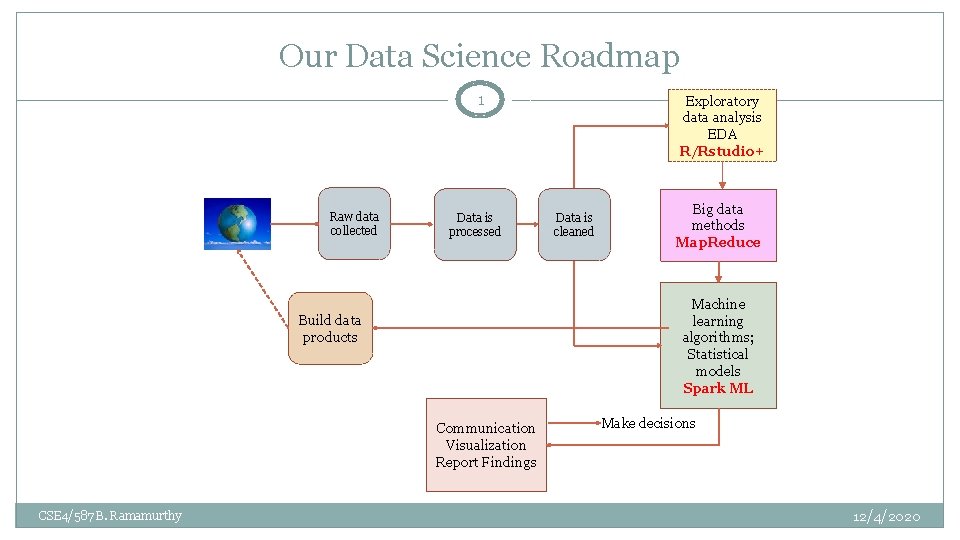

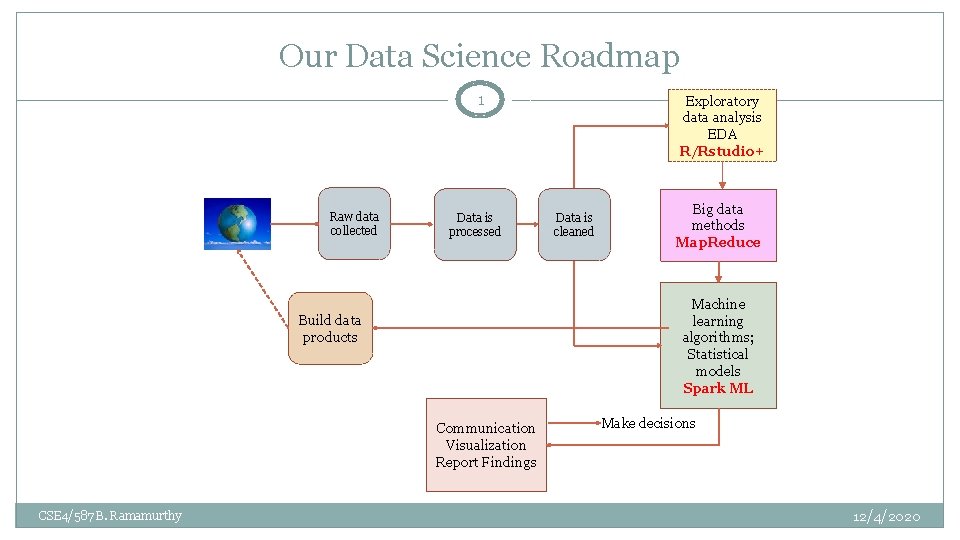


- Slides: 7

Our Data Science Roadmap

- Raw data collected
- Data is processed
- Data is cleaned
- Exploratory data analysis (EDA): R/RStudio+
- Big data methods: MapReduce
- Machine learning algorithms; statistical models: Spark ML
- Build data products
- Communication: visualization, report findings
- Make decisions

CSE 4/587, B. Ramamurthy, 12/4/2020

Topics for Final Exam

- Data-Intensive Text Processing with MapReduce by Jimmy Lin and Chris Dyer
  - Ch. 2 and 3, up to p. 57; Ch. 5
  - Text processing, MapReduce (MR), and graph processing, including shortest path and PageRank
  - Lab 2: MR usage details
- Naïve Bayes and Bayesian classification (class notes)
  - Study Field Cady's text, Chapters 6, 7, and 8: focus on Bayes, logistic regression, and evaluation
- Apache Spark RDD paper by Zaharia et al.
  - Motivation for the Spark APIs
  - Lab 3 details
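One common MapReduce formulation of single-source shortest path with unit edge weights is parallel breadth-first search: each node record carries its current distance and adjacency list, the mapper offers distance d + 1 to every neighbor, and the reducer keeps the minimum. The sketch below simulates the map, shuffle, and reduce phases in plain Python on one machine; it is not Hadoop code, and the function names (`bfs_map`, `bfs_reduce`, `run_iteration`, `shortest_paths`) and the example graph are hypothetical. The driver simply reruns the job until no distance changes.

```python
from collections import defaultdict

INF = float("inf")


def bfs_map(node_id, node):
    """Mapper: re-emit the node's structure and offer distance d + 1 to each neighbor."""
    dist, adjacency = node
    yield node_id, ("NODE", node)  # pass the graph structure to the next iteration
    if dist != INF:
        for neighbor in adjacency:
            yield neighbor, ("DIST", dist + 1)


def bfs_reduce(node_id, values):
    """Reducer: keep the smallest distance offered for this node."""
    best, adjacency = INF, []
    for kind, payload in values:
        if kind == "NODE":
            dist, adjacency = payload
            best = min(best, dist)
        else:
            best = min(best, payload)
    return node_id, (best, adjacency)


def run_iteration(state, mapper, reducer):
    """Simulate one MapReduce job: map every record, group by key (shuffle), reduce."""
    groups = defaultdict(list)
    for key, value in state.items():
        for out_key, out_value in mapper(key, value):
            groups[out_key].append(out_value)
    return dict(reducer(key, values) for key, values in groups.items())


def shortest_paths(graph, source):
    """Iterate the job until distances stop changing (unit edge weights assumed)."""
    state = {n: (0 if n == source else INF, adj) for n, adj in graph.items()}
    while True:
        new_state = run_iteration(state, bfs_map, bfs_reduce)
        if new_state == state:
            return {n: dist for n, (dist, _) in new_state.items()}
        state = new_state


if __name__ == "__main__":
    graph = {"A": ["B", "C"], "B": ["D"], "C": ["D", "E"], "D": ["E"], "E": []}
    print(shortest_paths(graph, "A"))
```

For the example graph the result is A: 0, B: 1, C: 1, D: 2, E: 2; a node that cannot be reached from the source keeps distance infinity. Each pass of the loop corresponds to one MapReduce job, so the number of jobs grows with the graph's diameter.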

Topics for Final Exam

- Data-Intensive Text Processing with MapReduce by Jimmy Lin and Chris Dyer
  - Ch. 2 and 3, up to p. 57; Ch. 5
  - Text processing, MapReduce (MR), and graph processing, including shortest path and PageRank
  - Lab 2: MR usage details
- Naïve Bayes and Bayesian classification (class notes)
- Apache Spark RDD paper by Zaharia et al.
  - Motivation for the Spark APIs
  - Lab 3 details
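One iteration of MapReduce PageRank can be traced by hand, and the Python sketch below performs the same trace so the steps are explicit. It follows the simplified formulation along the lines of Lin and Dyer, Ch. 5: the mapper splits a node's rank evenly over its out-links and re-emits the adjacency list, and the reducer sums the incoming mass. It assumes no random-jump (damping) term and no dangling nodes, so total rank is conserved; those simplifications, the function names, and the five-node example graph are assumptions for illustration.

```python
from collections import defaultdict


def pr_map(node_id, node):
    """Mapper: re-emit the adjacency list and split the node's rank over its out-links."""
    rank, adjacency = node
    yield node_id, ("NODE", adjacency)  # keep the graph structure
    share = rank / len(adjacency)  # assumes no dangling nodes (len > 0)
    for neighbor in adjacency:
        yield neighbor, ("MASS", share)


def pr_reduce(node_id, values):
    """Reducer: the new rank is the sum of the mass received from in-links."""
    total, adjacency = 0.0, []
    for kind, payload in values:
        if kind == "NODE":
            adjacency = payload
        else:
            total += payload
    return node_id, (total, adjacency)


def pagerank_iteration(graph):
    """Simulate one MapReduce job: map, shuffle (group by key), reduce."""
    groups = defaultdict(list)
    for node_id, node in graph.items():
        for key, value in pr_map(node_id, node):
            groups[key].append(value)
    return dict(pr_reduce(key, values) for key, values in groups.items())


if __name__ == "__main__":
    # Hypothetical five-node graph; every node starts with rank 1/5 = 0.2.
    graph = {
        "n1": (0.2, ["n2", "n4"]),
        "n2": (0.2, ["n3", "n5"]),
        "n3": (0.2, ["n4"]),
        "n4": (0.2, ["n5"]),
        "n5": (0.2, ["n1", "n2", "n3"]),
    }
    after_one = pagerank_iteration(graph)
    for node_id, (rank, _) in sorted(after_one.items()):
        print(node_id, round(rank, 3))
```

By hand: n1 receives 0.2/3 from n5 (about 0.067); n2 and n3 each receive 0.1 + 0.2/3 (about 0.167); n4 and n5 each receive 0.1 + 0.2 = 0.3; the ranks still sum to 1. A fuller version would add the random-jump term and redistribute the mass lost at dangling nodes.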

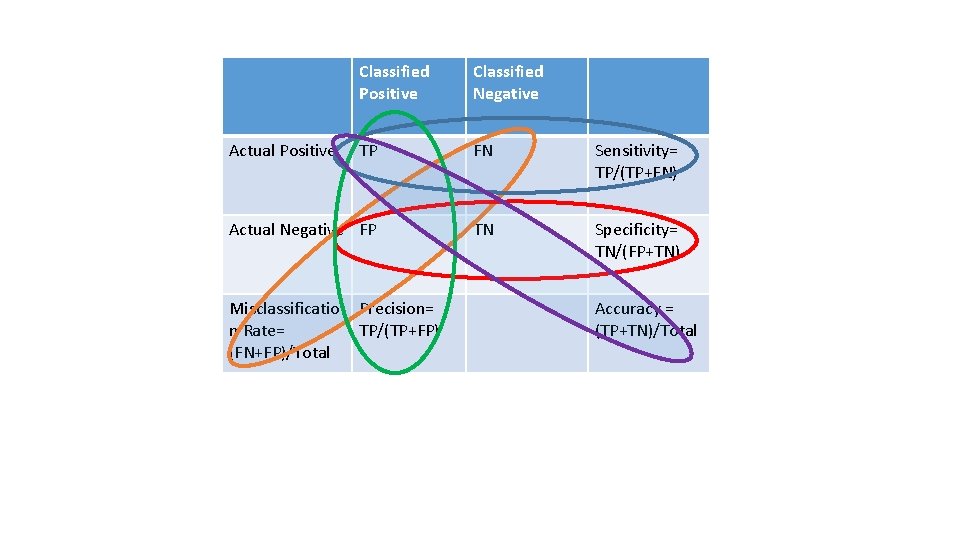

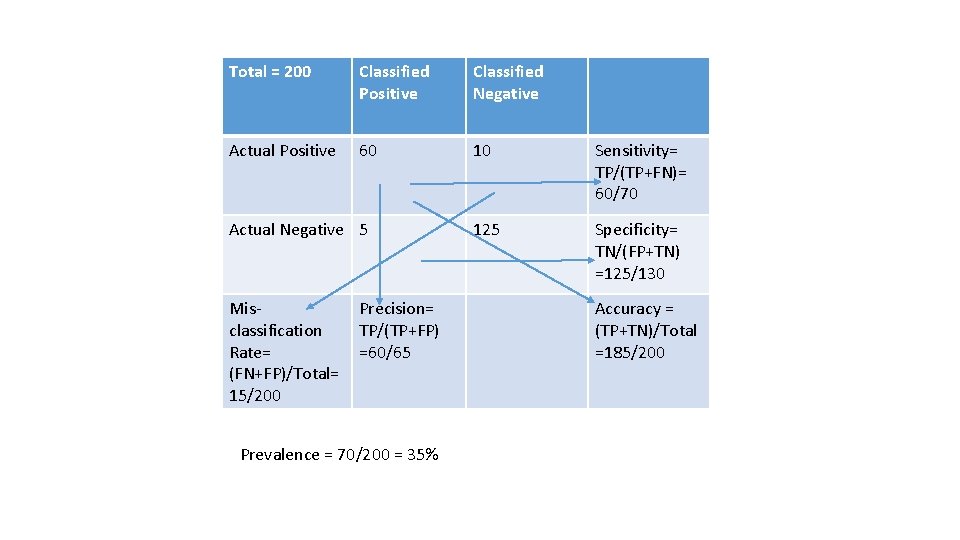

Confusion Matrix

- Evaluating and comparing the performance of prediction classifiers
- Confusion matrix: binary confusion matrix only
- The next slide shows an easy way to remember the various metrics.
- The slide after that shows a sample computation.
- Let's explore.

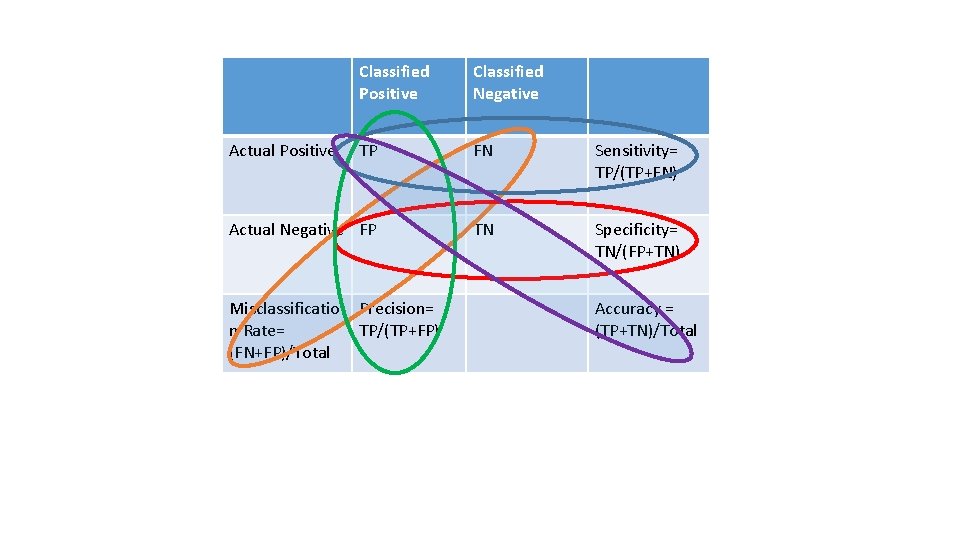

| | Classified Positive | Classified Negative | Metric |
|---|---|---|---|
| Actual Positive | TP | FN | Sensitivity = TP/(TP+FN) |
| Actual Negative | FP | TN | Specificity = TN/(FP+TN) |
| Metric | Precision = TP/(TP+FP) | | |

- Misclassification Rate = (FN+FP)/Total
- Accuracy = (TP+TN)/Total
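The four cells of the binary confusion matrix are counts over (actual, classified) label pairs. The small Python sketch below tallies them; the function name `confusion_counts` and the convention that the positive class is labeled `1` by default are assumptions for illustration.

```python
def confusion_counts(actual, predicted, positive=1):
    """Tally (TP, FN, FP, TN) for a binary classifier.

    The tuple order reads the table row by row: actual positives first, then actual negatives.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == positive and p == positive:
            tp += 1  # actual positive, classified positive
        elif a == positive:
            fn += 1  # actual positive, classified negative
        elif p == positive:
            fp += 1  # actual negative, classified positive
        else:
            tn += 1  # actual negative, classified negative
    return tp, fn, fp, tn


actual = [1, 1, 1, 0, 0, 0, 0]
predicted = [1, 0, 1, 1, 0, 0, 0]
print(confusion_counts(actual, predicted))  # (2, 1, 1, 3)
```

Any label type works as long as `positive` names the positive class, for example `confusion_counts(y, y_hat, positive="spam")`.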

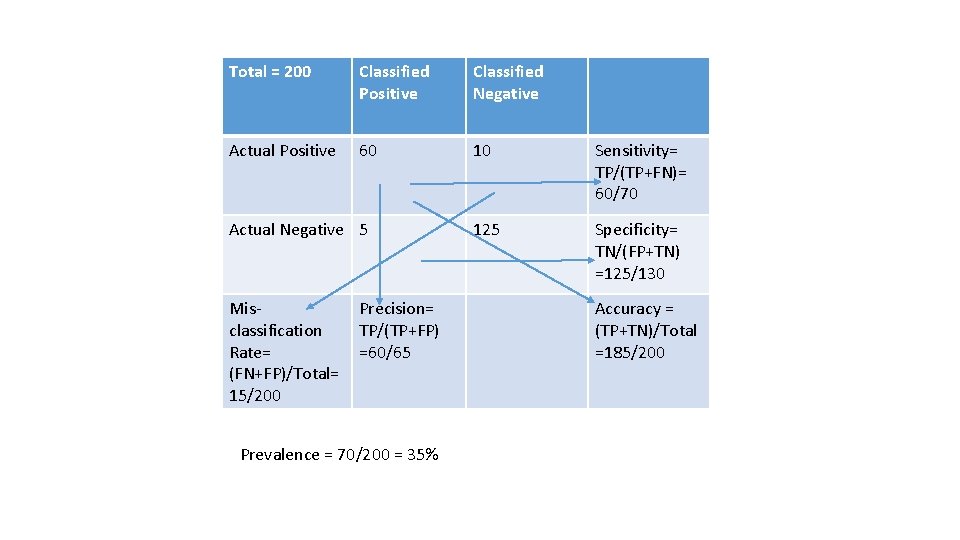

Total = 200

| | Classified Positive | Classified Negative | Metric |
|---|---|---|---|
| Actual Positive | 60 (TP) | 10 (FN) | Sensitivity = TP/(TP+FN) = 60/70 |
| Actual Negative | 5 (FP) | 125 (TN) | Specificity = TN/(FP+TN) = 125/130 |
| Metric | Precision = TP/(TP+FP) = 60/65 | | |

- Misclassification Rate = (FN+FP)/Total = 15/200
- Accuracy = (TP+TN)/Total = 185/200
- Prevalence = (TP+FN)/Total = 70/200 = 35%
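The arithmetic in this sample computation can be checked with Python's standard `fractions` module, which keeps each metric as an exact ratio. `binary_metrics` is a hypothetical helper that applies the formulas from the slides; note that `Fraction` reduces each ratio, so 60/70 prints as 6/7 and 185/200 as 37/40.

```python
from fractions import Fraction


def binary_metrics(tp, fn, fp, tn):
    """Binary confusion-matrix metrics, using the formulas from the slides."""
    total = tp + fn + fp + tn
    return {
        "sensitivity": Fraction(tp, tp + fn),
        "specificity": Fraction(tn, fp + tn),
        "precision": Fraction(tp, tp + fp),
        "accuracy": Fraction(tp + tn, total),
        "misclassification_rate": Fraction(fn + fp, total),
        "prevalence": Fraction(tp + fn, total),
    }


# Counts from the sample computation (Total = 200).
metrics = binary_metrics(tp=60, fn=10, fp=5, tn=125)
for name, value in metrics.items():
    print(f"{name}: {value} (about {float(value):.3f})")
```

Accuracy and misclassification rate always add up to 1 (here 37/40 + 3/40), which is a quick sanity check on a hand computation.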

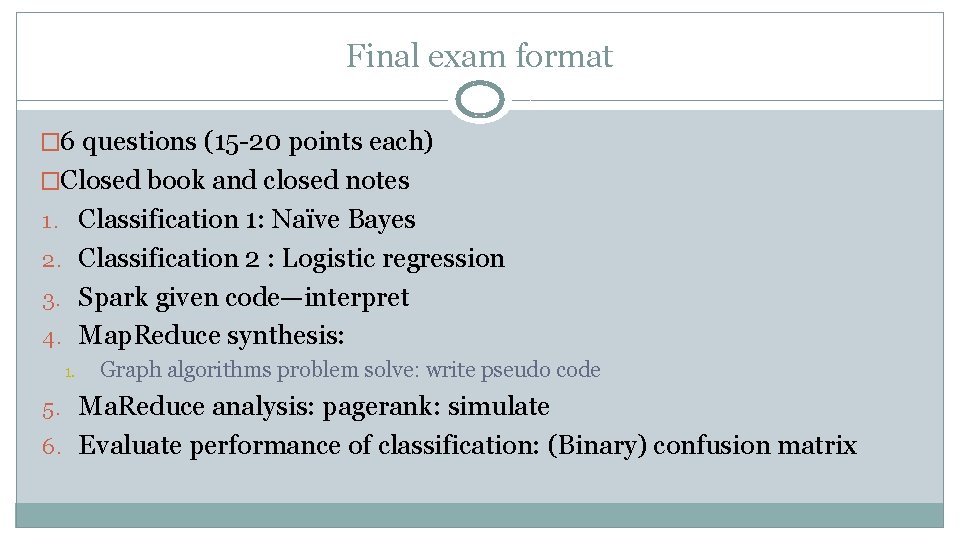

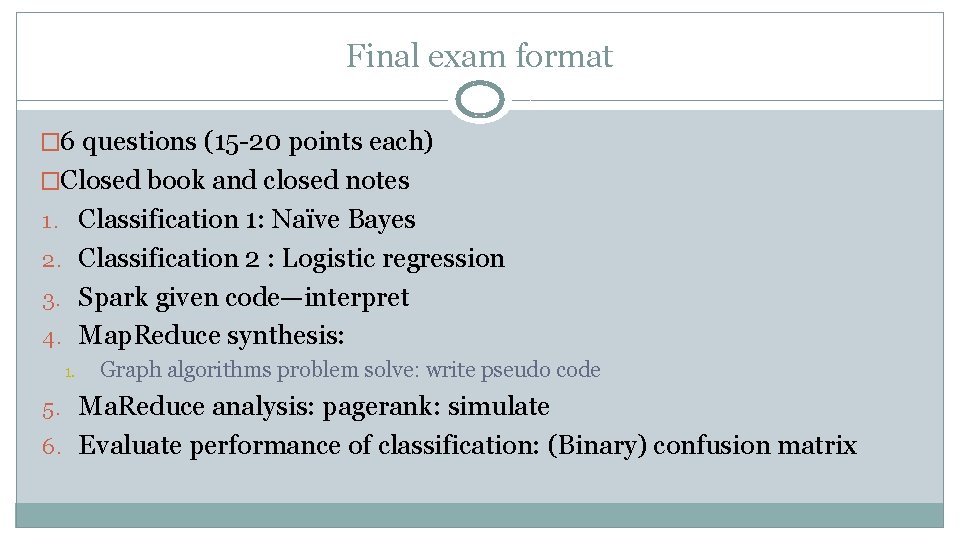

Final exam format

- 6 questions (15 to 20 points each)
- Closed book and closed notes

1. Classification 1: Naïve Bayes
2. Classification 2: logistic regression
3. Spark: interpret given code
4. MapReduce synthesis: solve a graph algorithm problem; write pseudocode
5. MapReduce analysis: PageRank; simulate
6. Evaluate classification performance: (binary) confusion matrix