OSMA Software Assurance Symposium 2002 Achieving High Software

- Slides: 24

OSMA Software Assurance Symposium 2002
Achieving High Software Reliability
The Software Measurement Analysis and Reliability Toolkit & Module-Order Modeling
Taghi M. Khoshgoftaar (taghi@cse.fau.edu)
Empirical Software Engineering Laboratory
Florida Atlantic University
Boca Raton, Florida USA

Overview
• SMART: The Software Measurement Analysis and Reliability Toolkit
• Module-Order Modeling
• Investigating the impact of underlying prediction models on module-order models
• Empirical case studies
• Summary

SMART
• Case-Based Reasoning
  – quantitative software quality prediction models: predicting faults, code churn, etc.
  – qualitative software classification (risk-based) models: two-group and three-group models
• Module-Order Models
  – priority-based ranking of modules with respect to their software quality

September 6, 2002, Empirical Software Engineering Laboratory, Florida Atlantic University

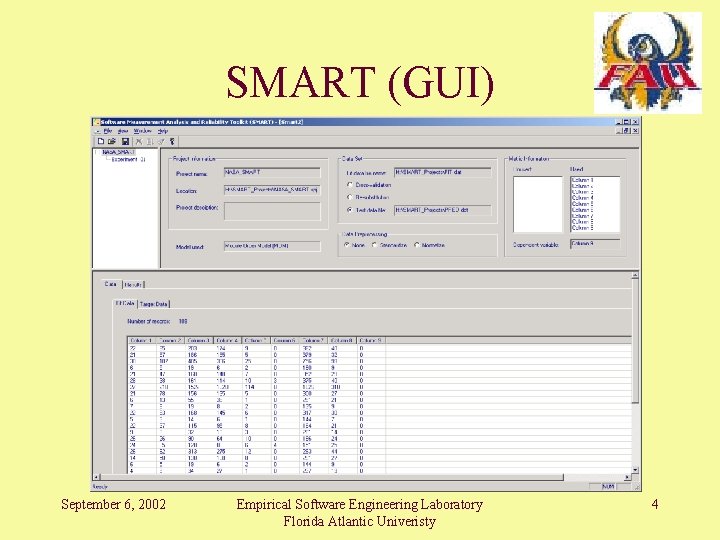

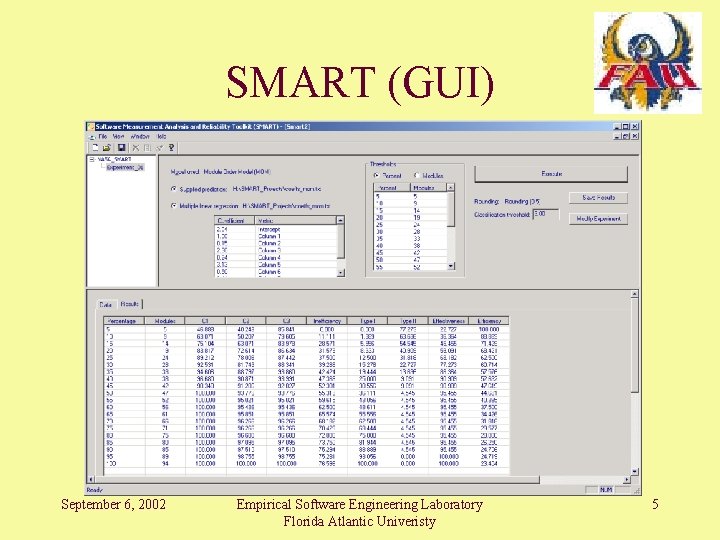

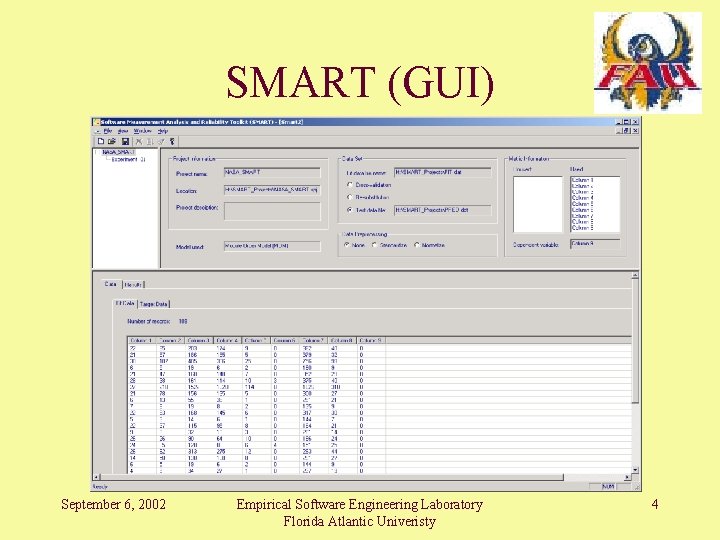

SMART (GUI)

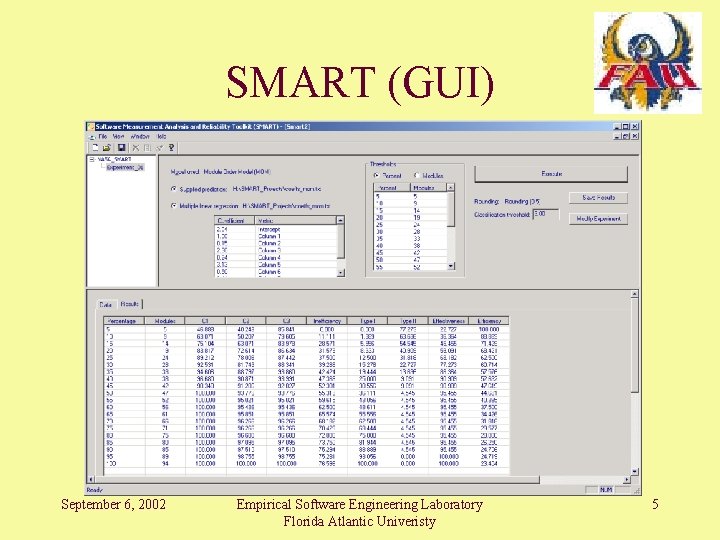

SMART (GUI)

Module-Order Models
• Why module-order models?
  – Classification models are not well suited from a business and cost-effectiveness viewpoint
    • the same quality improvement resources are applied to all modules predicted as high-risk or fault-prone
  – Priority-based software quality improvement is better suited to cost-effective use of available resources
    • inspecting the most fault-prone modules first

MOMs...
• Answer practical questions posed by project management, such as
  – which and how many modules to target for V&V?
  – what is the best use of available resources?
• Different underlying quantitative software quality prediction models are available
  – what is their impact on the performance of module-order models?

MOMs...
• Components of a module-order model
  – an underlying software quality prediction model
  – a ranking of modules according to the predicted quality factor, and
  – a procedure for evaluating the accuracy and effectiveness of the predicted ranking
    • Alberg diagrams: faults accounted for by rankings
    • Performance diagrams: measuring accuracy of the predicted ranking with respect to the actual (perfect) ranking
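The ranking step and the data behind an Alberg diagram can be sketched as follows. This is a minimal illustration, not SMART's implementation: the function names `rank_modules` and `alberg_curve` and the toy fault counts are assumptions made for the example.

```python
from typing import List, Sequence


def rank_modules(predicted: Sequence[float]) -> List[int]:
    """Return module indices ordered from most to least fault-prone."""
    return sorted(range(len(predicted)), key=lambda i: predicted[i], reverse=True)


def alberg_curve(actual: Sequence[int], order: Sequence[int]) -> List[float]:
    """Cumulative fraction of actual faults covered after each ranked module."""
    total = sum(actual)
    covered = 0
    curve = []
    for i in order:
        covered += actual[i]
        curve.append(covered / total if total else 0.0)
    return curve


# Hypothetical toy data: actual fault counts and one model's predicted faults.
actual_faults = [0, 5, 1, 0, 3, 1]
predicted_faults = [0.2, 3.9, 0.4, 1.1, 2.5, 0.3]

order = rank_modules(predicted_faults)      # predicted ranking of modules
curve = alberg_curve(actual_faults, order)  # y-values of the Alberg curve
print(order)
print(curve)
```

Plotting `curve` against the fraction of modules inspected gives one Alberg curve; passing `rank_modules(actual_faults)` as the order gives the perfect-ranking curve for comparison.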

MOMs...
• Based on the schedule and resources allocated for testing and V&V, determine a range of cutoff percentages that covers management's options for the last module (as per the ranking) to be inspected
• Choose a set of representative cutoff percentages, c, from that range
  – for each c, determine the number of faults accounted for by the actual and predicted rankings
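The cutoff procedure above can be sketched as follows. The slides only say that faults are counted under both rankings at each cutoff; expressing the comparison as a ratio of predicted-ranking faults to perfect-ranking faults is an assumption here, as are the function names and the toy data.

```python
import math
from typing import Dict, Sequence


def faults_in_top(actual: Sequence[int], scores: Sequence[float], cutoff: float) -> int:
    """Count actual faults in the top `cutoff` fraction of modules ranked by `scores`."""
    n_top = math.ceil(cutoff * len(actual))
    order = sorted(range(len(actual)), key=lambda i: scores[i], reverse=True)
    return sum(actual[i] for i in order[:n_top])


def performance_by_cutoff(
    actual: Sequence[int], predicted: Sequence[float], cutoffs: Sequence[float]
) -> Dict[float, float]:
    """For each cutoff, compare faults found by the predicted vs. the perfect ranking."""
    result = {}
    for c in cutoffs:
        perfect = faults_in_top(actual, actual, c)      # ranking by actual faults
        achieved = faults_in_top(actual, predicted, c)  # ranking by predicted faults
        result[c] = achieved / perfect if perfect else 0.0
    return result


# Hypothetical toy data for eight modules.
actual_faults = [0, 5, 1, 0, 3, 1, 0, 2]
predicted_faults = [0.2, 3.9, 0.4, 1.1, 2.5, 0.3, 0.1, 0.9]
cutoffs = [0.25, 0.5, 0.75]

performance = performance_by_cutoff(actual_faults, predicted_faults, cutoffs)
for c in cutoffs:
    print(f"c={c:.0%}: ratio={performance[c]:.3f}")
```

A ratio of 1.0 at a cutoff means the predicted ranking captured as many faults as a perfect ranking would have with the same inspection budget.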

Case Study Example
• A large legacy telecommunications system
  – mission-critical software
  – written in a procedural language
  – software metrics from four system releases, with a few thousand modules in each release
  – fault data comprising faults discovered during post-unit testing, including system operations
  – 24 product metrics and 4 execution metrics used
  – Release 1 used as fit data and the others as test data

Fault Prediction Models
• Rank order based on average absolute error and average relative error of models
  1. CART-LAD regression tree
  2. Case-Based Reasoning (SMART)
  3. Multiple Linear Regression
  4. Artificial Neural Networks
  5. CART-LS regression tree
  6. S-PLUS regression tree
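The slides do not define the two error measures. The sketch below assumes one common formulation: average absolute error (AAE) as the mean of |actual - predicted|, and average relative error (ARE) as the mean of |actual - predicted| / (actual + 1), where the added 1 is an assumption that keeps the ratio defined for modules with zero faults. The data are hypothetical.

```python
from typing import Sequence


def average_absolute_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean of |actual - predicted| over all modules."""
    return sum(abs(y - p) for y, p in zip(actual, predicted)) / len(actual)


def average_relative_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean of |actual - predicted| / (actual + 1); the +1 is an assumed convention."""
    return sum(abs(y - p) / (y + 1) for y, p in zip(actual, predicted)) / len(actual)


# Hypothetical toy data: actual and predicted fault counts for four modules.
actual_faults = [0, 2, 4, 1]
predicted_faults = [1.0, 2.0, 2.0, 3.0]

aae = average_absolute_error(actual_faults, predicted_faults)
are = average_relative_error(actual_faults, predicted_faults)
print(f"AAE={aae:.3f}, ARE={are:.3f}")
```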

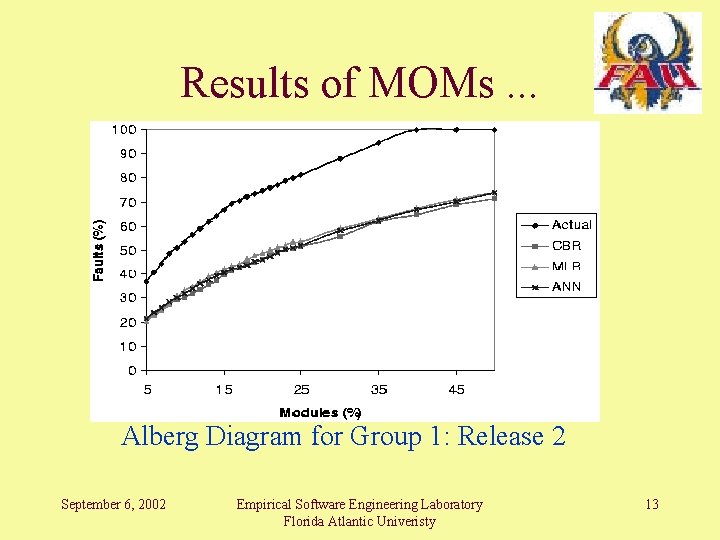

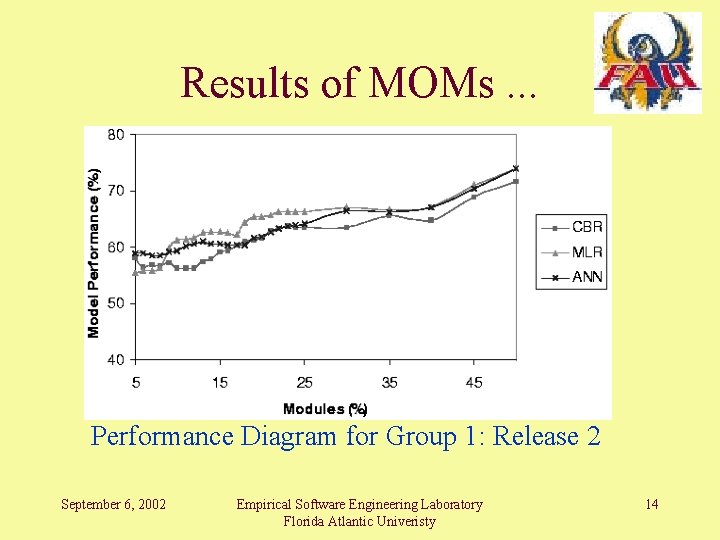

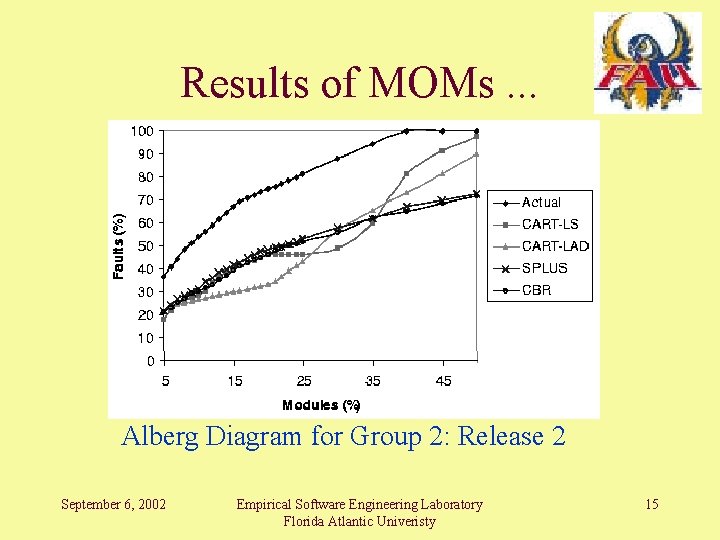

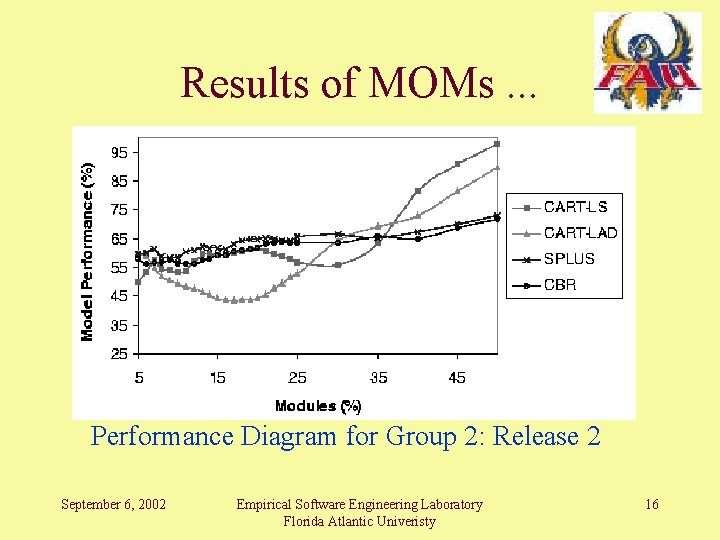

Results of MOMs
• Group 1
  – CBR
  – MLR
  – ANNs
• Group 2 (all available regression trees)
  – CART-LS
  – CART-LAD
  – S-PLUS

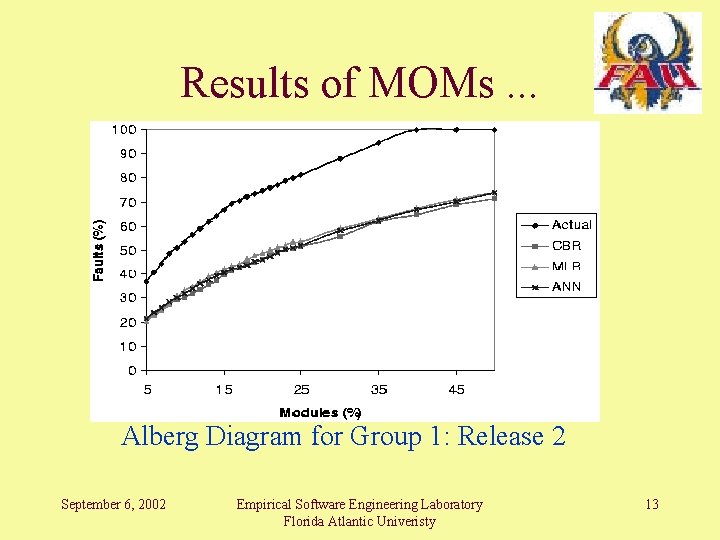

Results of MOMs...
Alberg Diagram for Group 1: Release 2

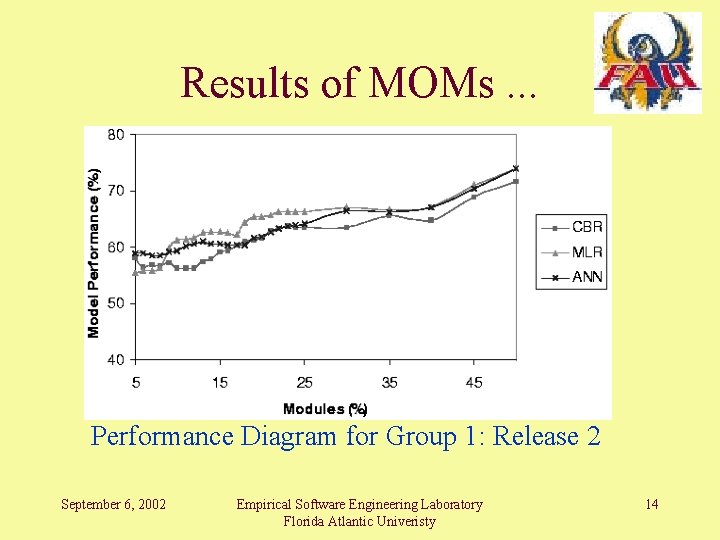

Results of MOMs...
Performance Diagram for Group 1: Release 2

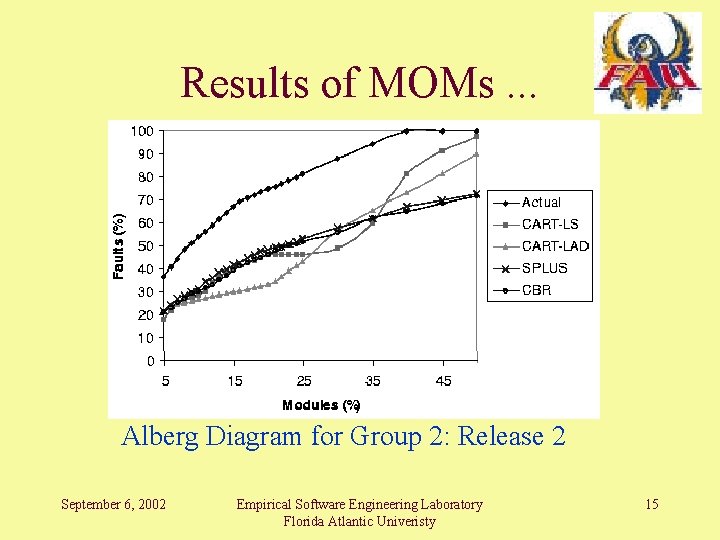

Results of MOMs...
Alberg Diagram for Group 2: Release 2

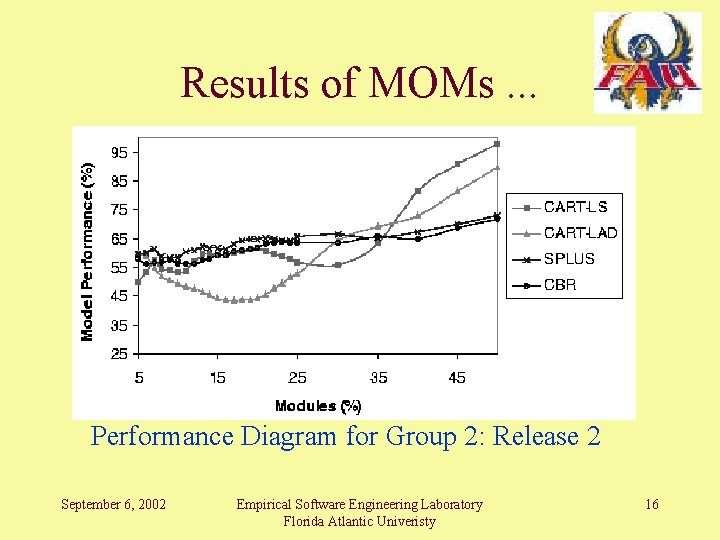

Results of MOMs...
Performance Diagram for Group 2: Release 2

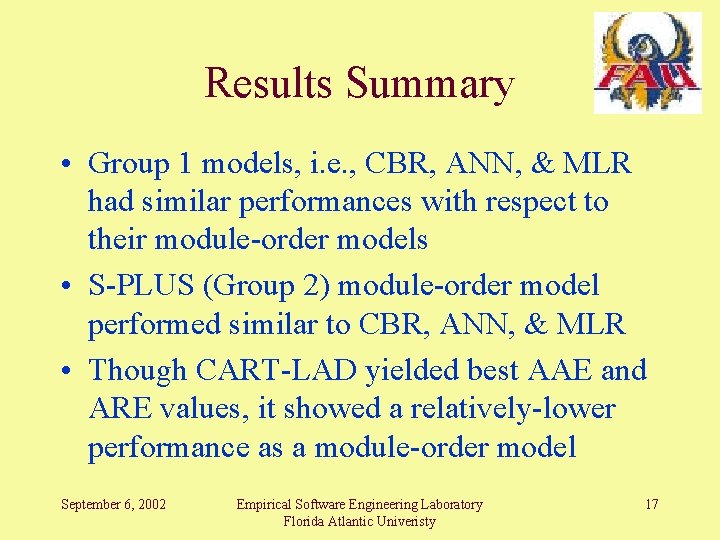

Results Summary
• Group 1 models, i.e., CBR, ANN, and MLR, had similar performance with respect to their module-order models
• The S-PLUS (Group 2) module-order model performed similarly to CBR, ANN, and MLR
• Though CART-LAD yielded the best AAE and ARE values, it showed relatively lower performance as a module-order model

Results Summary...
• When used as a module-order model, CART-LS is better than CART-LAD
  – In contrast, with respect to AAE and ARE values, CART-LAD is better than CART-LS
• Overall, for this case study the CART-LS module-order model generally performed better than the other five models, i.e., CBR, CART-LAD, ANN, MLR, and S-PLUS

Results Summary...
• Observing the effects of data characteristics
  – performance of MOMs depends on the system domain and the software application
• Are AAE and ARE good performance metrics for selecting underlying prediction models for module-order modeling?
  – Selecting the prediction models based on AAE and ARE did not provide any conclusive insight into the performance of a module-order model
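A small synthetic example may help show why a low AAE need not imply a good ranking. The data below are invented for illustration and are not from the case study; `model_a` and `model_b` are hypothetical predictors.

```python
from typing import Sequence


def average_absolute_error(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean of |actual - predicted| over all modules."""
    return sum(abs(y - p) for y, p in zip(actual, predicted)) / len(actual)


def faults_in_top_k(actual: Sequence[int], predicted: Sequence[float], k: int) -> int:
    """Actual faults found when inspecting the k modules ranked highest by `predicted`."""
    order = sorted(range(len(actual)), key=lambda i: predicted[i], reverse=True)
    return sum(actual[i] for i in order[:k])


# Synthetic data: one module holds most of the faults.
actual = [0, 1, 2, 9]
model_a = [1.2, 1.1, 1.0, 0.9]      # small errors, but the ordering is reversed
model_b = [10.0, 20.0, 30.0, 40.0]  # large errors, but the ordering is correct

aae_a = average_absolute_error(actual, model_a)
aae_b = average_absolute_error(actual, model_b)
top_a = faults_in_top_k(actual, model_a, 1)
top_b = faults_in_top_k(actual, model_b, 1)
print(f"model_a: AAE={aae_a:.2f}, faults in top module={top_a}")
print(f"model_b: AAE={aae_b:.2f}, faults in top module={top_b}")
```

Here `model_a` would be preferred on AAE, yet `model_b` is the better basis for a module-order model because only the relative order of the predictions matters for ranking.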

Conclusion
• Software fault prediction and quality classification models by themselves may not be sufficient from the business and practical viewpoints (return on investment)
• Module-order modeling presents a more goal-oriented approach by predicting a priority-based ranking of modules with respect to software quality

Conclusion...
• Case studies investigating the impact of different underlying prediction models on module-order models
• Completed the ready-to-use (stand-alone) version of SMART, including its
  – requirements and specifications document
  – design, implementation, and integration document

Future Work
• A resource-based approach for the selection and evaluation of software quality models
• Developing models that provide improved goal- and objective-oriented software quality assurance
  – lowering the expected cost of misclassification
  – improving the cost-benefit factor of models
  – a better focus on return on investment

Future Work...
• Applying the SMART technology to software metrics and fault data collected from a NASA software project
  – evaluating the performance and benefits of SMART in the context of NASA software data
• Incorporating SMART into a live NASA software project
  – demonstrating practical technology transfer

OSMA Software Assurance Symposium 2002
Achieving High Software Reliability
Thank You …
Taghi M. Khoshgoftaar
taghi@cse.fau.edu
(561) 297 3994
Empirical Software Engineering Laboratory
Florida Atlantic University
Boca Raton, Florida, USA