Orchestrating the Execution of Stream Programs on Multicore Platforms


Orchestrating the Execution of Stream Programs on Multicore Platforms

Manjunath Kudlur, Scott Mahlke
Advanced Computer Architecture Lab, University of Michigan

University of Michigan, Electrical Engineering and Computer Science

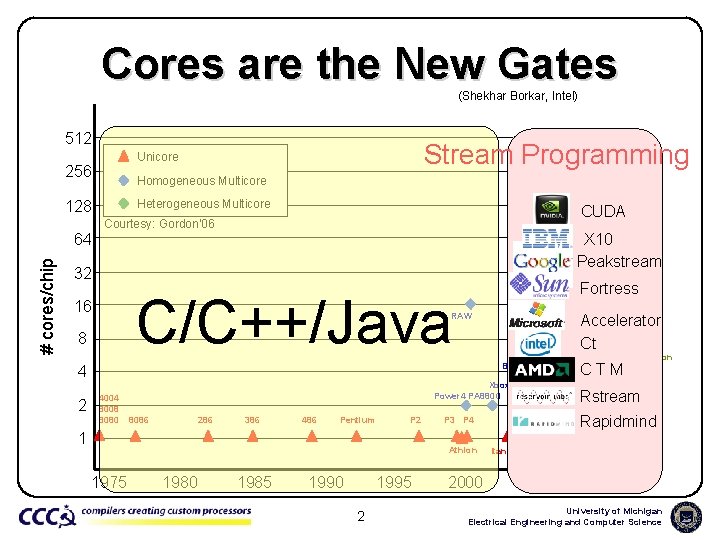

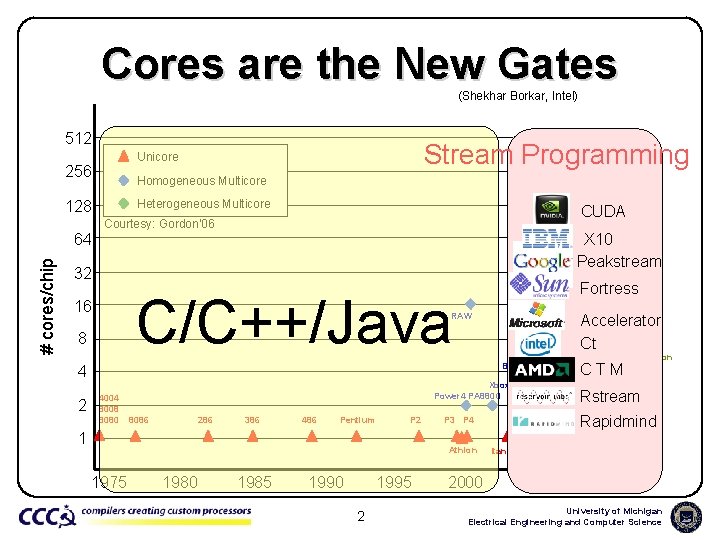

Cores are the New Gates (Shekhar Borkar, Intel)

[Figure: number of cores per chip (1 to 512) versus year (1975 to 2010), with chips grouped as unicore, homogeneous multicore, and heterogeneous multicore. Chips shown include 4004, 8008, 8080, 8086, 286, 386, 486, Pentium, P2, P3, P4, Athlon, Itanium, Itanium 2, Power4, Power6, PA-8800, Opteron, Opteron 4P, Xeon, Core, Core Duo, Core 2 Duo, Core 2 Quad, Xbox 360, Cell, Niagara, BCM 1480, Cavium, RAZA XLR, CISCO CSR1, RAW, NVIDIA G80, Larrabee, AMD Fusion, PicoChip, and AMBRIC. Programming-model labels include C/C++/Java, Stream Programming, CUDA, CTM, Ct, PeakStream, RapidMind, RStream, Accelerator, X10, and Fortress. Courtesy: Gordon ’06.]

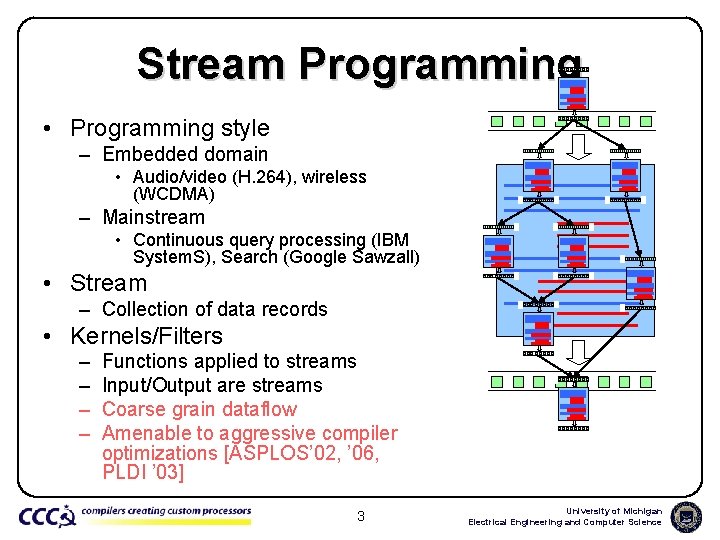

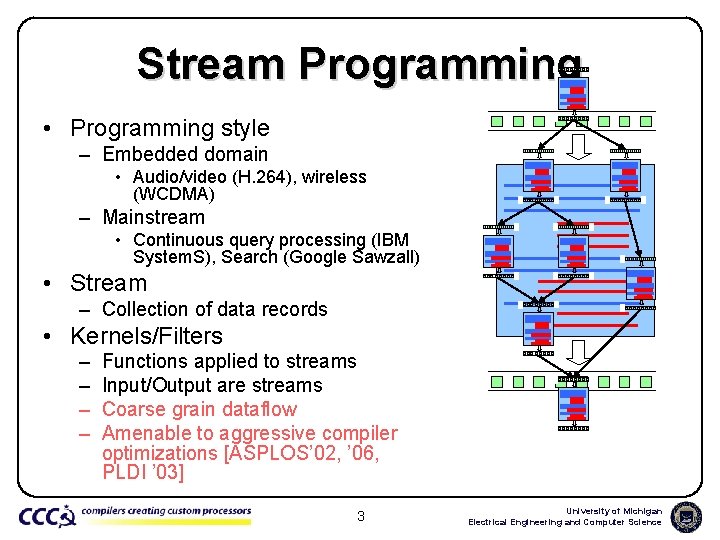

Stream Programming
- Programming style
  - Embedded domain: audio/video (H.264), wireless (WCDMA)
  - Mainstream: continuous query processing (IBM System S), search (Google Sawzall)
- Stream: collection of data records
- Kernels/filters
  - Functions applied to streams
  - Input/output are streams
- Coarse grain dataflow
- Amenable to aggressive compiler optimizations [ASPLOS ’02, ’06, PLDI ’03]

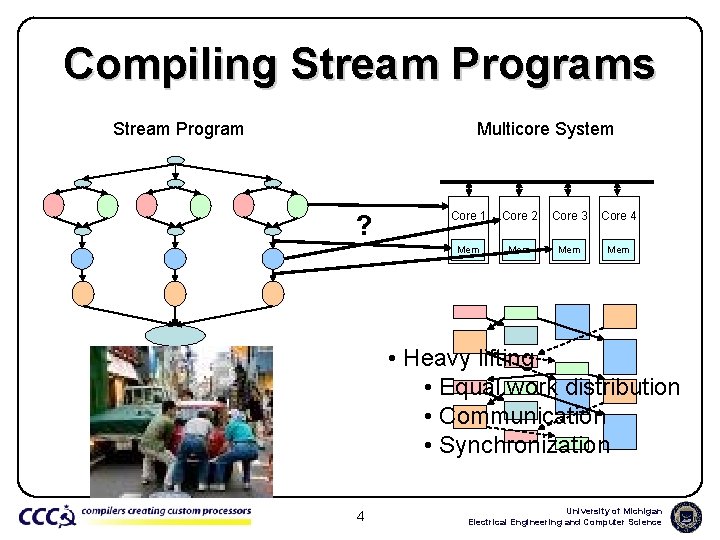

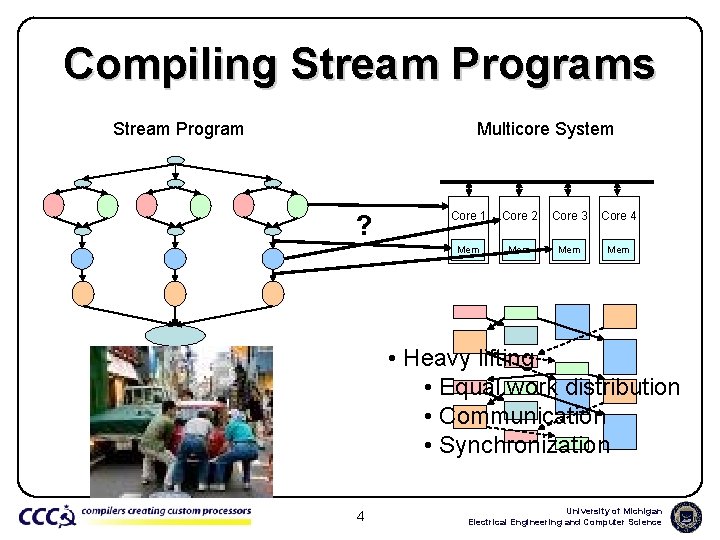

Compiling Stream Programs

[Figure: a stream program to be mapped onto a multicore system with four cores (Core 1 to Core 4) and memories.]

- Heavy lifting
- Equal work distribution
- Communication
- Synchronization

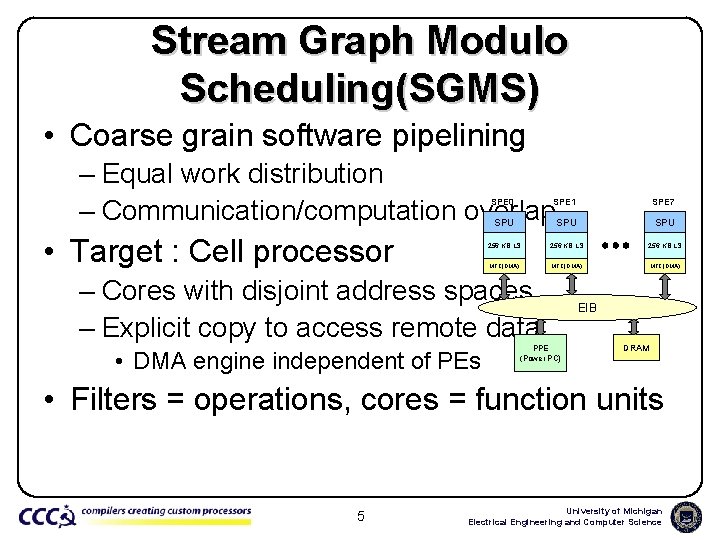

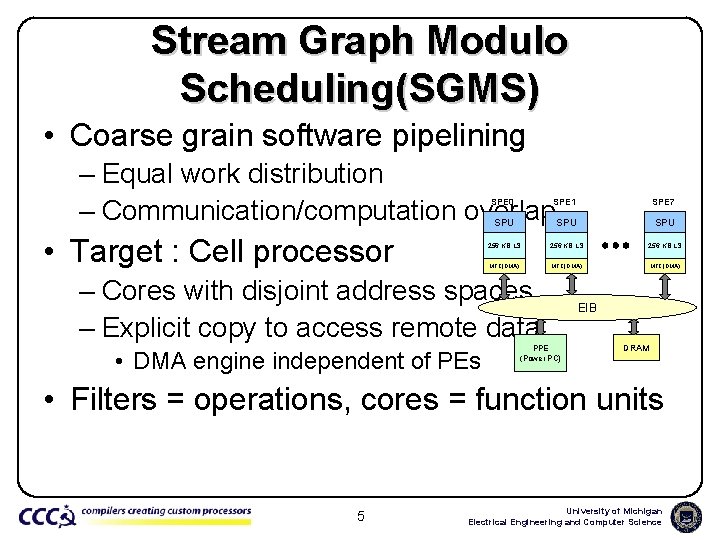

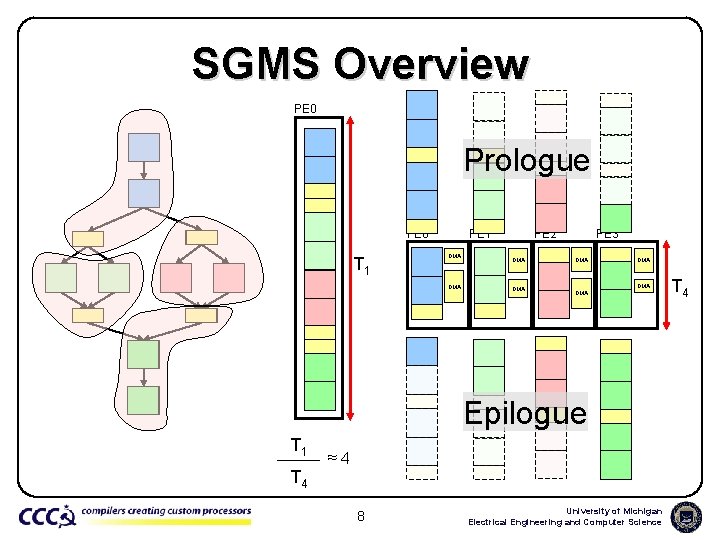

Stream Graph Modulo Scheduling (SGMS)
- Coarse grain software pipelining
  - Equal work distribution
  - Communication/computation overlap
- Target: Cell processor
  - Cores with disjoint address spaces
  - Explicit copy to access remote data
    - DMA engine independent of PEs
- Filters = operations, cores = function units

[Figure: Cell processor with SPE 0 to SPE 7, each an SPU with a 256 KB local store (LS) and an MFC (DMA), connected over the EIB to the PPE (PowerPC) and DRAM.]

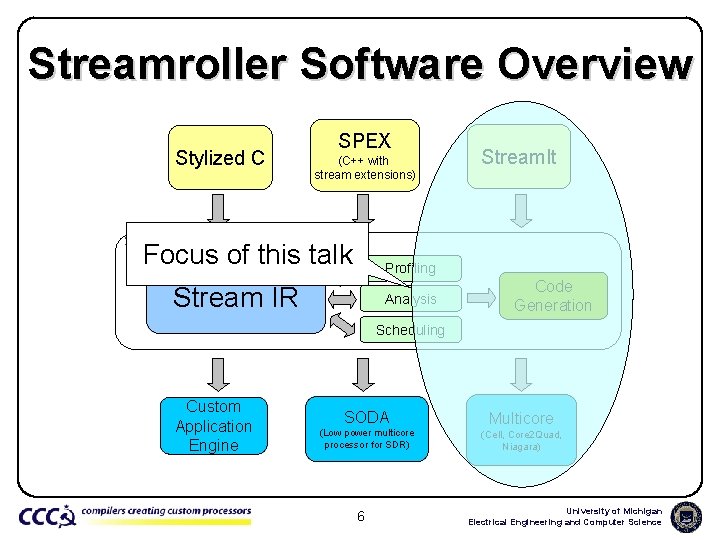

Streamroller Software Overview

[Figure: front ends (Stylized C, SPEX (C++ with stream extensions), StreamIt) feed Streamroller, which comprises Profiling, Stream IR, Analysis, Scheduling, and Code Generation. Targets: Custom Application Engine, SODA (low power multicore processor for SDR), and Multicore (Cell, Core 2 Quad, Niagara). A "Focus of this talk" callout marks part of the flow.]

![Preliminaries: Synchronous Data Flow (SDF), StreamIt](https://slidetodoc.com/presentation_image/ce10f5ad5b5f394bc7224f9293b1417f/image-7.jpg)

Preliminaries
- Synchronous Data Flow (SDF) [Lee ’87]
- StreamIt [Thies ’02]
- Push and pop items from input/output FIFOs

FIR filter in StreamIt (slide labels: Stateless, Stateful):

    int->int filter FIR(int N, int wgts[N]) {
      int wgts[N];
      work pop 1 push 1 {
        int i, sum = 0;
        wgts = adapt(wgts);
        for (i = 0; i < N; i++)
          sum += peek(i) * wgts[i];
        push(sum);
        pop();
      }
    }
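
The peek/pop/push behavior of the FIR work function can be sketched in plain C. This is a minimal illustration, not StreamIt's runtime: the `fifo_in` type and the names `fifo_peek`, `fifo_pop`, `fir_fire`, and `fir_run` are hypothetical, the output FIFO is modeled as a plain array, and the weights are treated as fixed (the stateless case).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical input FIFO: a read cursor over a caller-owned buffer. */
typedef struct {
    const int *data;
    size_t head;   /* index of the next item to pop */
    size_t len;    /* total number of items in data */
} fifo_in;

/* peek(i): read the i-th item from the head without consuming it. */
static int fifo_peek(const fifo_in *f, size_t i)
{
    assert(f->head + i < f->len);
    return f->data[f->head + i];
}

/* pop(): consume one item from the head. */
static void fifo_pop(fifo_in *f)
{
    assert(f->head < f->len);
    f->head++;
}

/* One firing of the work function: peeks n items, pops 1,
 * and returns the single value that would be pushed. */
int fir_fire(fifo_in *in, const int *wgts, size_t n)
{
    int sum = 0;
    for (size_t i = 0; i < n; i++)
        sum += fifo_peek(in, i) * wgts[i];
    fifo_pop(in);
    return sum;
}

/* Fire while at least n items remain; returns the number of outputs written. */
size_t fir_run(fifo_in *in, const int *wgts, size_t n, int *out)
{
    size_t produced = 0;
    assert(n > 0);
    while (in->len - in->head >= n)
        out[produced++] = fir_fire(in, wgts, n);
    return produced;
}
```

Because each firing pops one item but peeks `n`, an input of `len` items yields `len - n + 1` outputs in this sketch.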

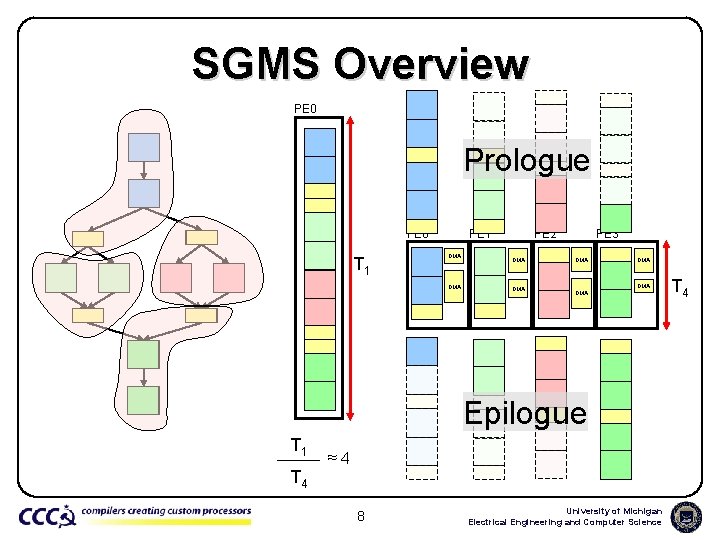

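SGMS Overview

[Figure: execution timelines. On a single PE (PE 0), one iteration takes time T1. On four PEs (PE 0 to PE 3), a prologue and an epilogue bracket a steady state in which DMA transfers run alongside computation and one iteration takes time T4, with T1/T4 ≈ 4.]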

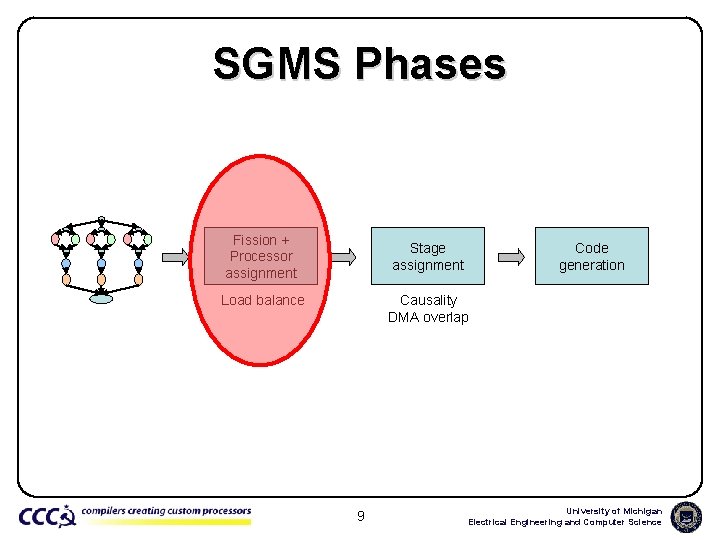

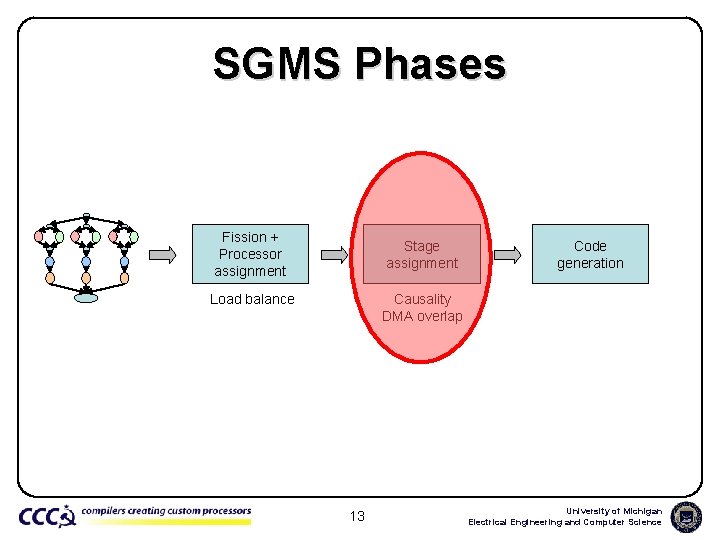

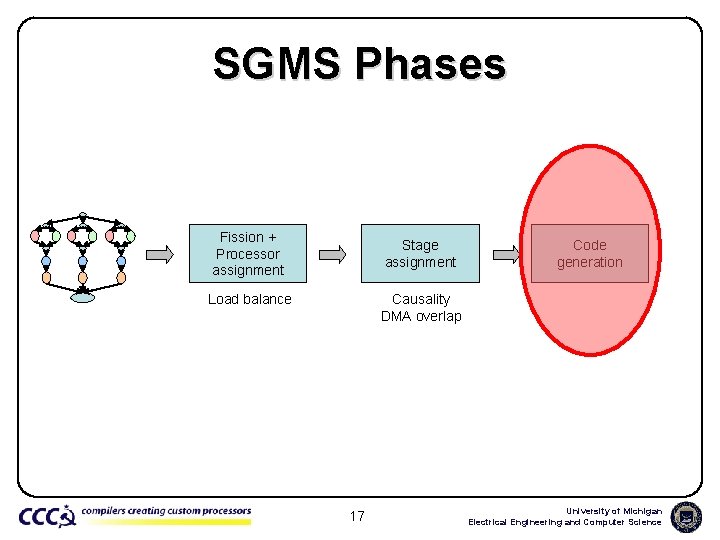

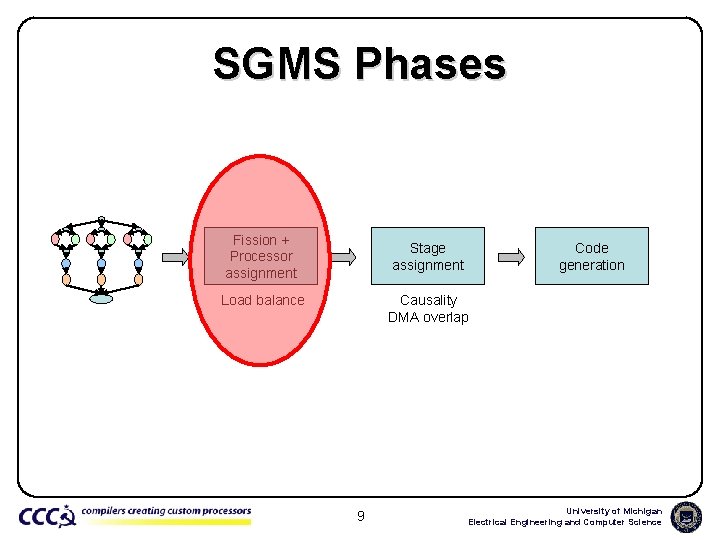

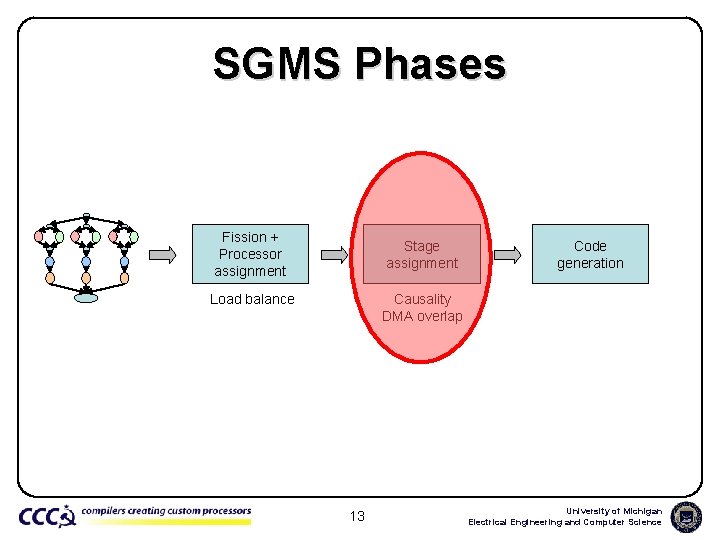

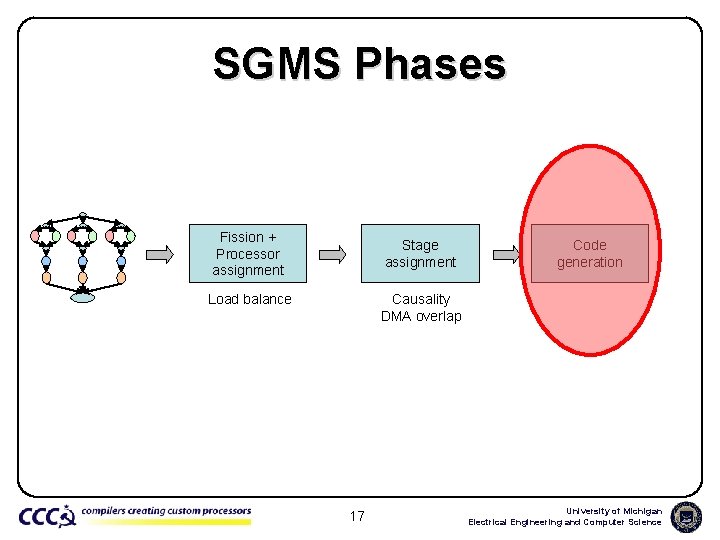

SGMS Phases
- Fission + processor assignment: load balance
- Stage assignment: causality, DMA overlap
- Code generation

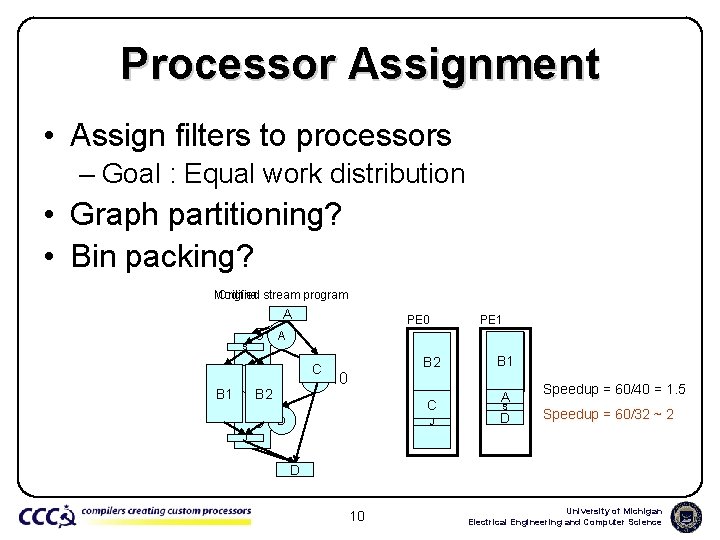

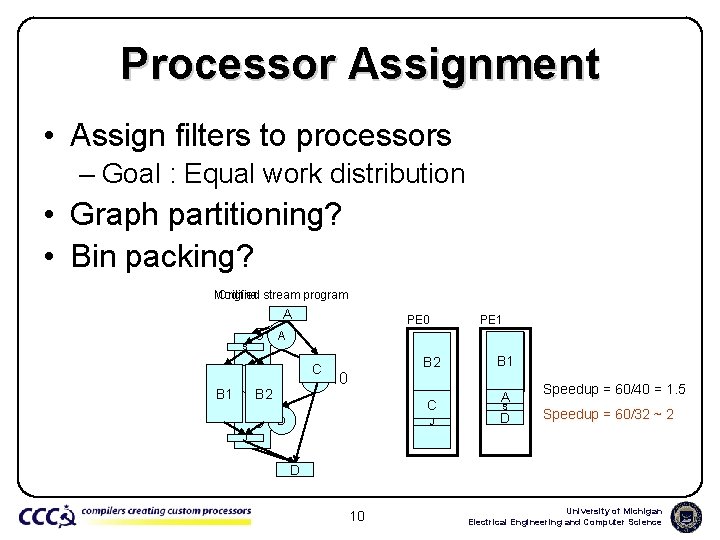

Processor Assignment
- Assign filters to processors
  - Goal: equal work distribution
- Graph partitioning?
- Bin packing?

[Figure: the original stream program (filters A, B, C, D) mapped to PE 0 and PE 1 gives speedup = 60/40 = 1.5. The modified stream program, in which B is split into B1 and B2 with a splitter S and a joiner J, gives speedup = 60/32 ≈ 1.9 (shown as ~2).]
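
The two speedup figures follow from dividing the single-PE work by the largest per-PE load. The sketch below assumes one reading of the slide's work estimates (A = 5, B = 40, C = 10, D = 5, and for the fissed version B1 = B2 = 20 with S = J = 2); these values and the helper names `max_pe_load` and `speedup` are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PES 16

/* Largest total work placed on any PE for a given filter-to-PE assignment.
 * work[f] is the work estimate of filter f; pe[f] is the PE it is assigned to. */
int max_pe_load(const int *work, const int *pe, size_t n_filters, int n_pes)
{
    int load[MAX_PES] = {0};
    int max = 0;

    assert(n_pes > 0 && n_pes <= MAX_PES);
    for (size_t f = 0; f < n_filters; f++) {
        assert(pe[f] >= 0 && pe[f] < n_pes);
        load[pe[f]] += work[f];
    }
    for (int p = 0; p < n_pes; p++)
        if (load[p] > max)
            max = load[p];
    return max;
}

/* Speedup relative to running all of the original work on one PE. */
double speedup(int single_pe_work, int max_load)
{
    assert(max_load > 0);
    return (double)single_pe_work / max_load;
}
```

Under these assumed weights, placing B alone on one PE gives a maximal load of 40, while placing A, S, B1, D on one PE and B2, C, J on the other gives 32 on each, even though the split and join add 4 units of total work.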

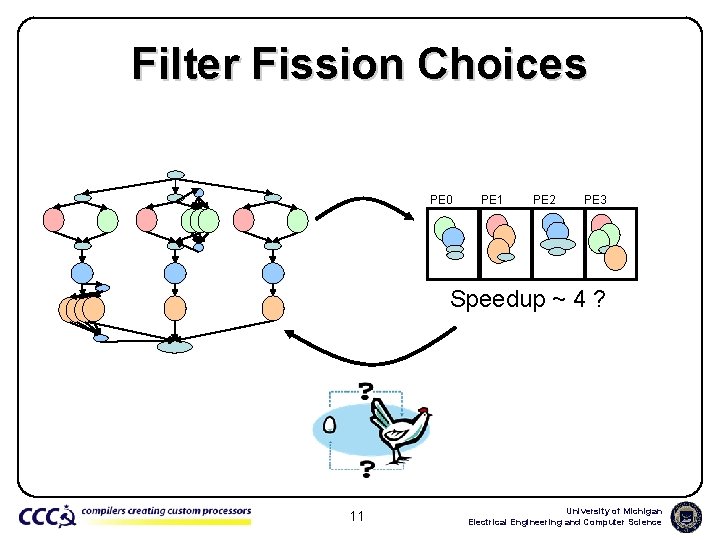

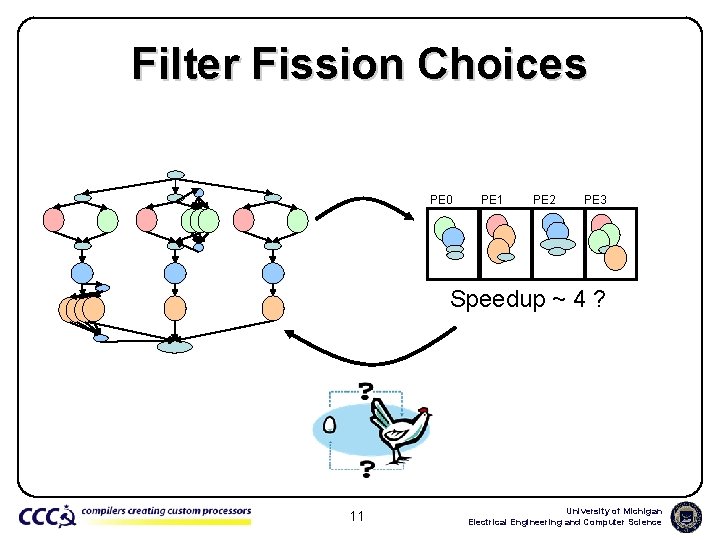

Filter Fission Choices

[Figure: candidate fissions of the stream graph mapped onto PE 0 to PE 3. Speedup ~ 4?]

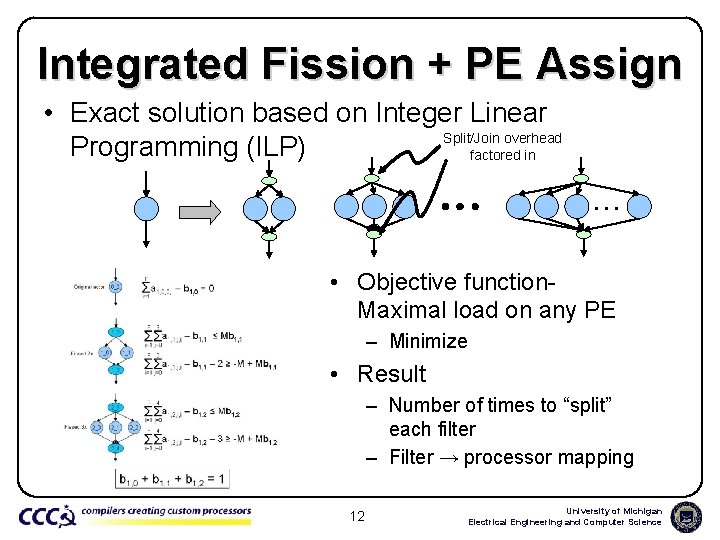

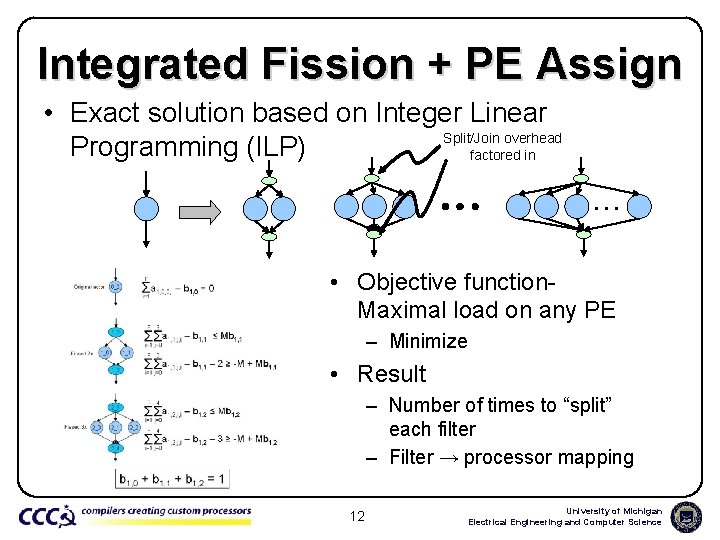

Integrated Fission + PE Assign
- Exact solution based on Integer Linear Programming (ILP)
  - Split/join overhead factored in
- Objective function: minimize the maximal load on any PE
- Result
  - Number of times to “split” each filter
  - Filter → processor mapping
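
As a rough sketch of the objective, and ignoring fission, the load-balancing part can be written as a min-max program. The symbols are assumptions for illustration: $w_v$ is the work estimate of filter $v$, $a_{v,i} \in \{0,1\}$ indicates whether filter $v$ is placed on PE $i$, $P$ is the number of PEs, and $T$ is the maximal load on any PE.

$$
\begin{aligned}
\text{minimize}\quad & T \\
\text{subject to}\quad & \sum_{i=1}^{P} a_{v,i} = 1 && \text{for every filter } v \\
& \sum_{v} w_v \, a_{v,i} \le T && \text{for every PE } i \\
& a_{v,i} \in \{0,1\}
\end{aligned}
$$

The formulation in the talk additionally chooses how many times each filter is split and factors in split/join overhead, which this simplified version omits.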

SGMS Phases
- Fission + processor assignment: load balance
- Stage assignment: causality, DMA overlap
- Code generation

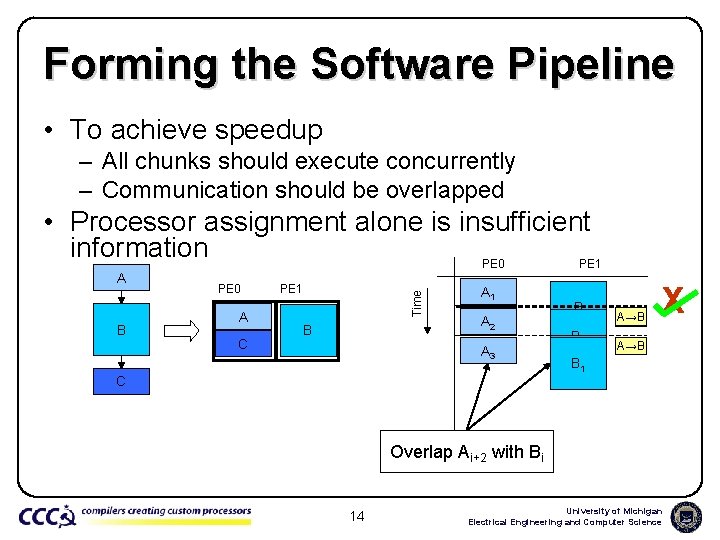

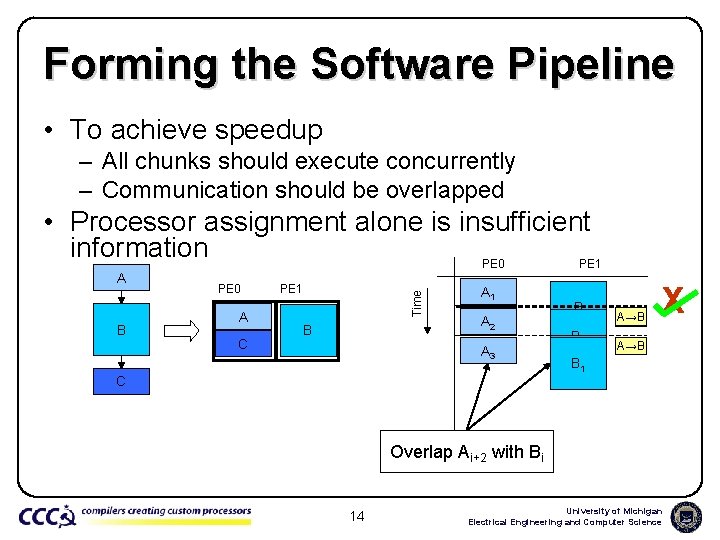

Forming the Software Pipeline
- To achieve speedup
  - All chunks should execute concurrently
  - Communication should be overlapped
- Processor assignment alone is insufficient information

[Figure: timelines for PE 0 and PE 1 running filters A, B, and C. A schedule with an exposed A→B transfer is crossed out; the pipelined schedule overlaps A_{i+2} with B_i.]
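
The index arithmetic behind overlapping A_{i+2} with B_i can be sketched as follows. The sketch assumes A sits in pipeline stage 0, the A→B transfer in stage 1, and B in stage 2, so that work in stage s handles stream iteration t - s during pipeline step t. The function name `iteration_at` is hypothetical.

```c
#include <assert.h>

/* Stream iteration handled at pipeline step `step` by work placed in
 * pipeline stage `stage`, or -1 if that stage is idle at this step
 * (still filling during the prologue, or already drained in the epilogue). */
int iteration_at(int step, int stage, int max_iter)
{
    int it;

    assert(stage >= 0 && max_iter >= 0);
    it = step - stage;
    return (it >= 0 && it < max_iter) ? it : -1;
}
```

For example, at step 5 a filter in stage 0 handles iteration 5 while a filter in stage 2 handles iteration 3, which is the A_{i+2} / B_i pairing with i = 3.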

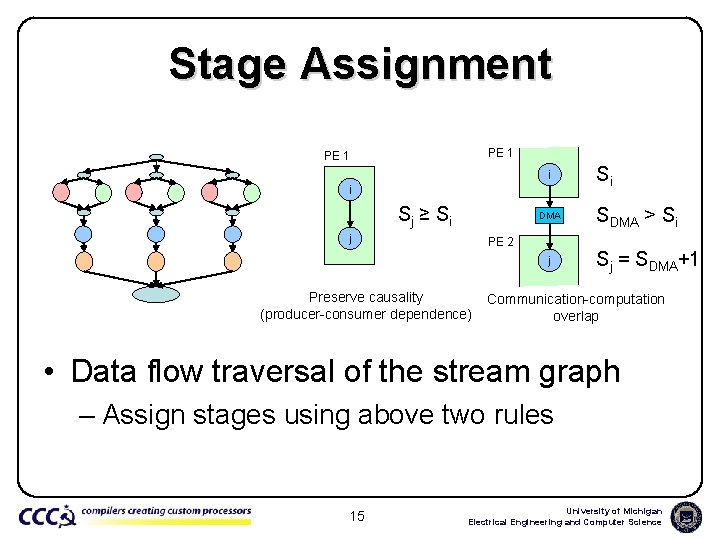

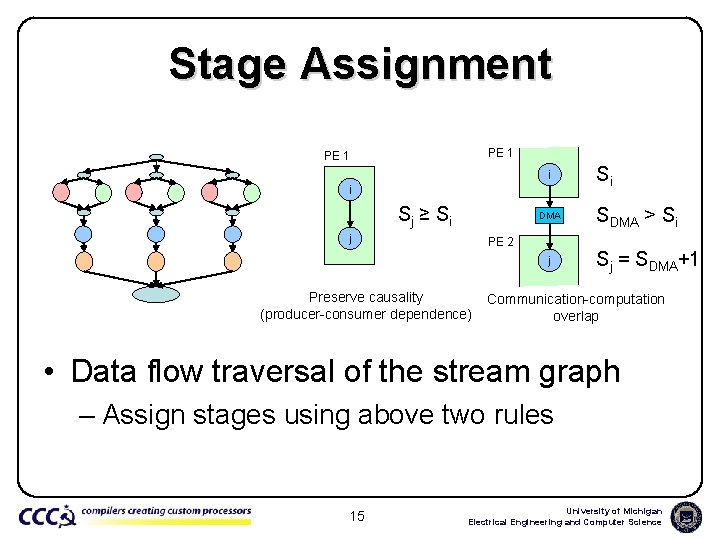

Stage Assignment
- Preserve causality (producer-consumer dependence): for a producer i and a consumer j on the same PE, $S_j \ge S_i$
- Communication-computation overlap: for a producer i and a consumer j on different PEs, connected by a DMA transfer, $S_{DMA} > S_i$ and $S_j = S_{DMA} + 1$
- Data flow traversal of the stream graph
  - Assign stages using above two rules
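
The two rules can be sketched as a single pass over the filters in data flow order. This is an illustration under stated assumptions, not the authors' implementation: filters are assumed to be numbered in topological order, each DMA is given the earliest legal stage ($S_{DMA} = S_i + 1$), and the `edge` type and `assign_stages` name are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Producer-consumer edge between two filters, by index. */
typedef struct { int src, dst; } edge;

/* Assign a pipeline stage to every filter.
 * pe[v] is the PE of filter v. Filters must be numbered in topological
 * (data flow) order, so every edge satisfies src < dst. */
void assign_stages(const int *pe, size_t n_filters,
                   const edge *edges, size_t n_edges, int *stage)
{
    for (size_t v = 0; v < n_filters; v++)
        stage[v] = 0;

    /* Visiting consumers in increasing order means every producer's
     * stage is final before any of its consumers is processed. */
    for (size_t v = 0; v < n_filters; v++) {
        for (size_t e = 0; e < n_edges; e++) {
            int u, need;

            if ((size_t)edges[e].dst != v)
                continue;
            u = edges[e].src;
            assert(u >= 0 && (size_t)u < v);

            /* Same PE: S_j >= S_i.
             * Different PEs: DMA in stage S_i + 1, consumer in S_DMA + 1. */
            need = (pe[u] == pe[v]) ? stage[u] : stage[u] + 2;
            if (need > stage[v])
                stage[v] = need;
        }
    }
}
```

Applied to a graph with A, S, B1, and D on one PE and B2, C, and J on the other, this sketch places A, S, B1 in stage 0, B2, C, J in stage 2, and D in stage 4, leaving stages 1 and 3 for the DMA transfers.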

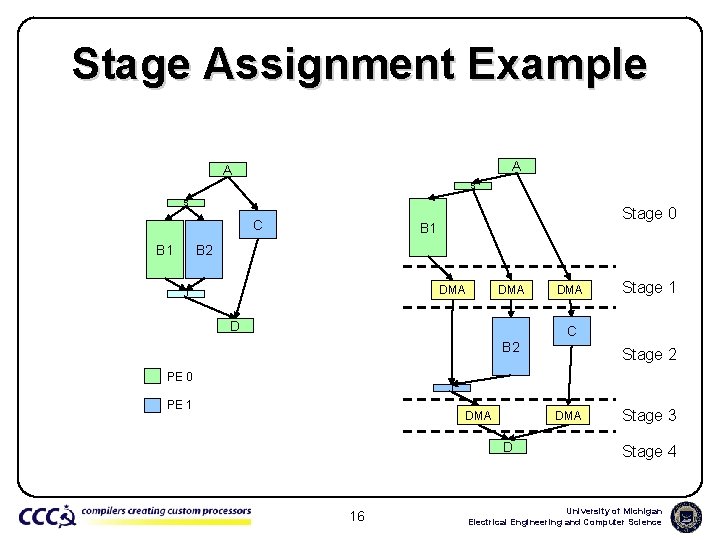

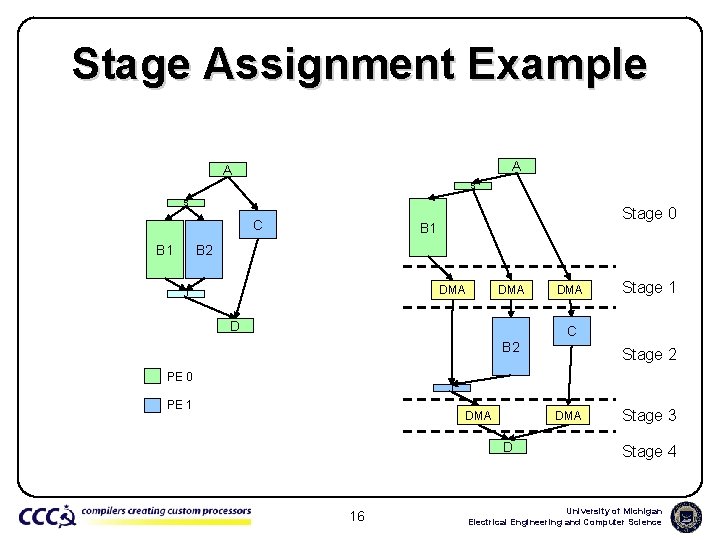

Stage Assignment Example

[Figure: the fissed stream graph annotated with stages. Stage 0: A, S, B1 (PE 0). Stage 1: DMA. Stage 2: C, B2, J (PE 1). Stage 3: DMA. Stage 4: D (PE 0).]

SGMS Phases
- Fission + processor assignment: load balance
- Stage assignment: causality, DMA overlap
- Code generation

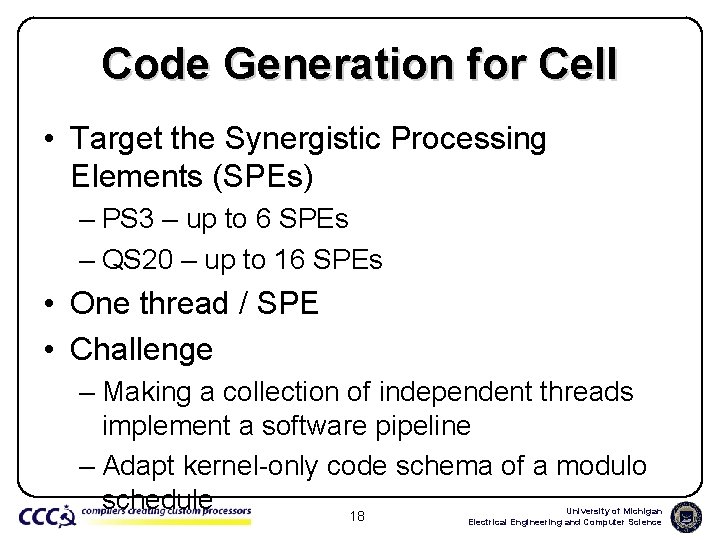

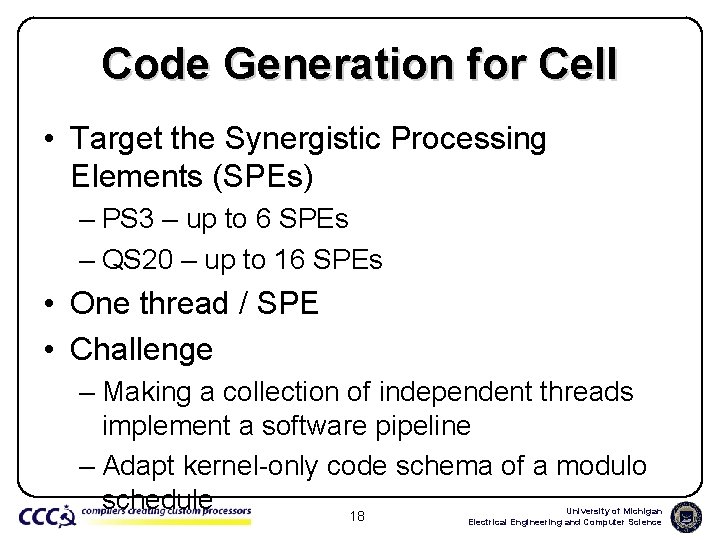

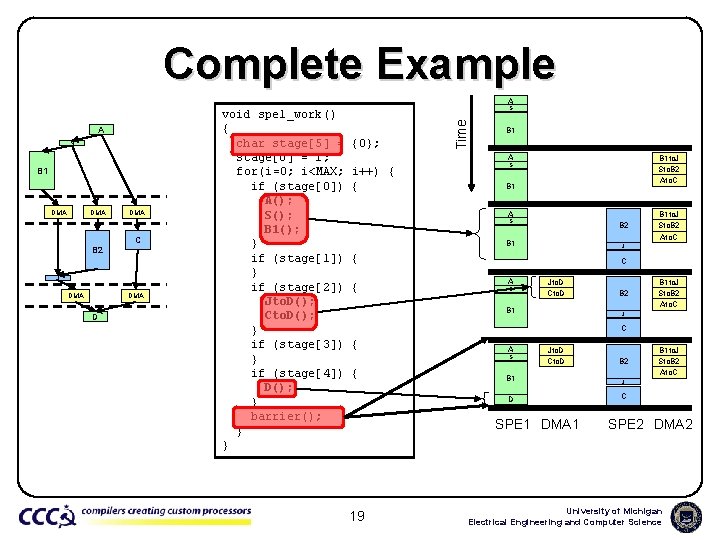

Code Generation for Cell
- Target the Synergistic Processing Elements (SPEs)
  - PS3: up to 6 SPEs
  - QS20: up to 16 SPEs
- One thread / SPE
- Challenge
  - Making a collection of independent threads implement a software pipeline
  - Adapt kernel-only code schema of a modulo schedule

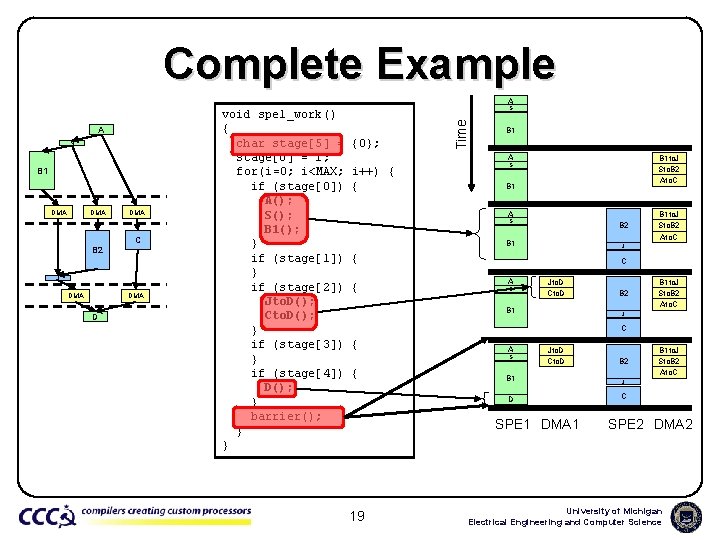

Complete Example

Code for SPE1:

    void spe1_work()
    {
      int i;
      char stage[5] = {0};
      stage[0] = 1;
      for (i = 0; i < MAX; i++) {
        if (stage[0]) {
          A();
          S();
          B1();
        }
        if (stage[1]) {
        }
        if (stage[2]) {
          JtoD();
          CtoD();
        }
        if (stage[3]) {
        }
        if (stage[4]) {
          D();
        }
        /* (stage mask update not shown on the slide) */
        barrier();
      }
    }

[Figure: the fissed stream graph (A, S, B1, B2, C, J, D, with DMA transfers between PEs) next to a timeline with columns SPE1, DMA1, SPE2, and DMA2. Over time the columns fill with A, S, B1 on SPE1; the transfers B1toJ, StoB2, AtoC; B2, J, C on SPE2; the transfers JtoD, CtoD; and D on SPE1, repeating as successive iterations enter the pipeline.]

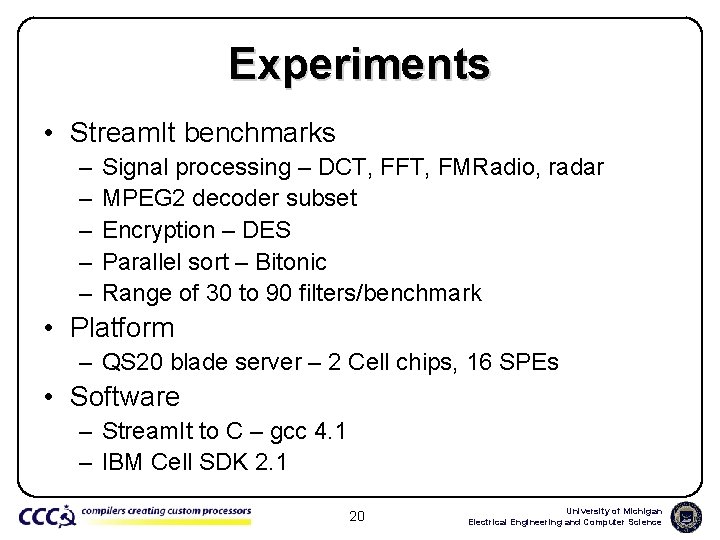

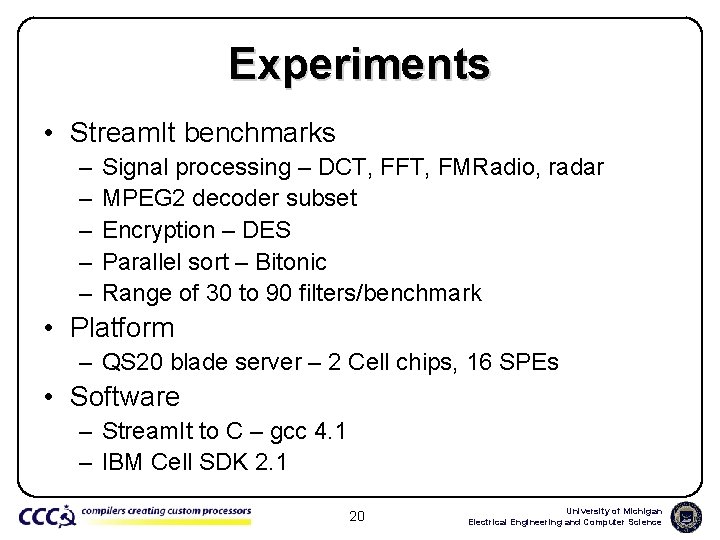

Experiments
- StreamIt benchmarks
  - Signal processing: DCT, FFT, FMRadio, radar
  - MPEG2 decoder subset
  - Encryption: DES
  - Parallel sort: Bitonic
  - Range of 30 to 90 filters/benchmark
- Platform: QS20 blade server, 2 Cell chips, 16 SPEs
- Software
  - StreamIt to C, gcc 4.1
  - IBM Cell SDK 2.1

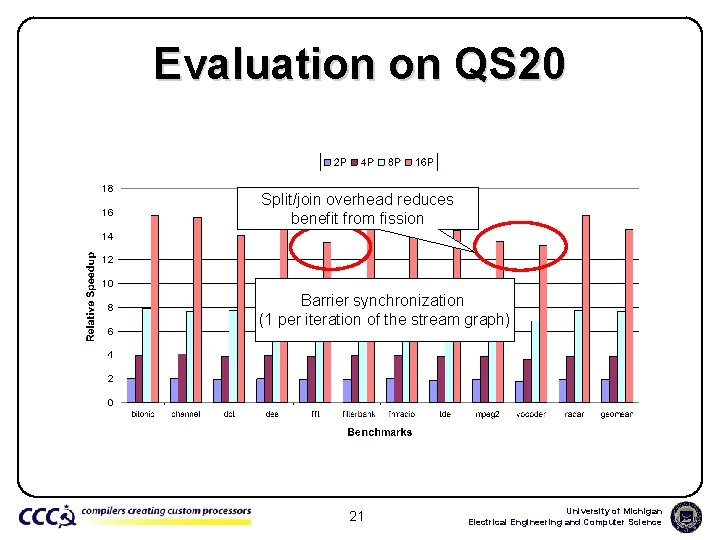

Evaluation on QS20

[Figure: speedup chart for the benchmarks on the QS20.]

- Split/join overhead reduces benefit from fission
- Barrier synchronization (1 per iteration of the stream graph)

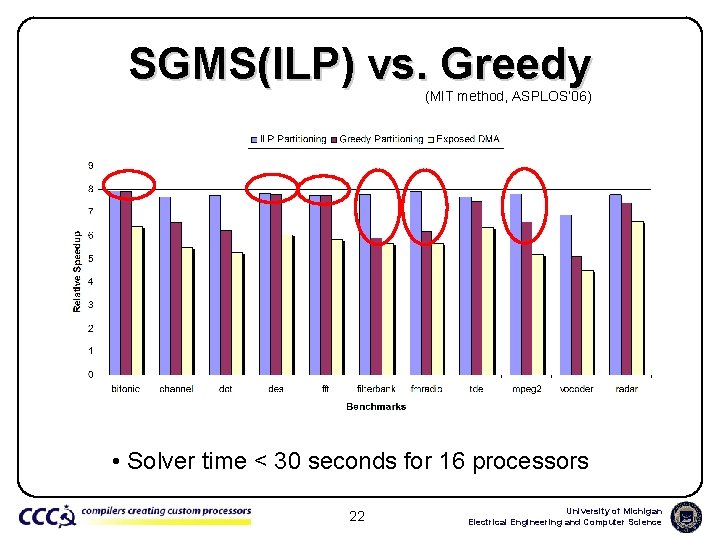

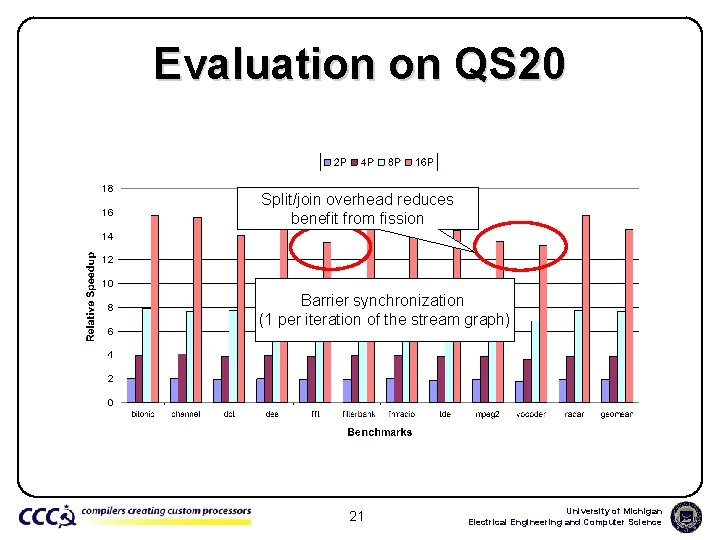

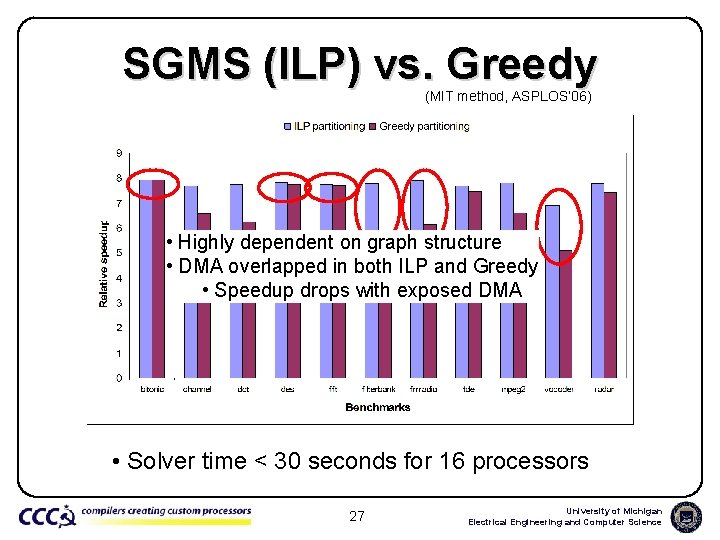

SGMS (ILP) vs. Greedy (MIT method, ASPLOS ’06)
- Solver time < 30 seconds for 16 processors

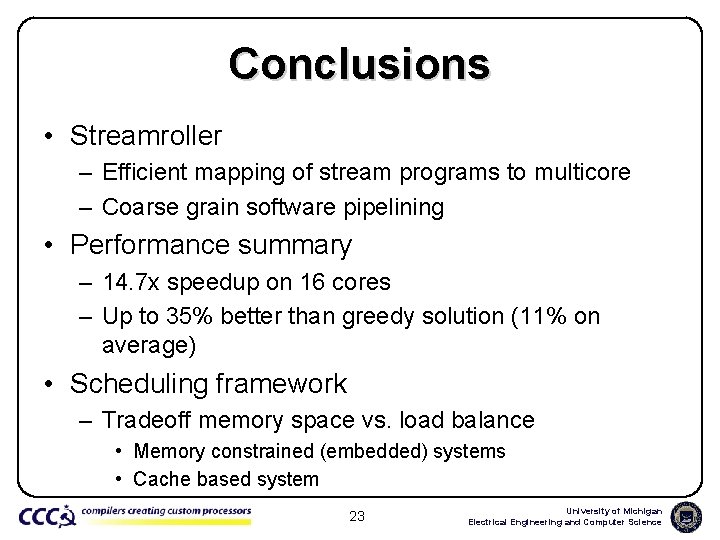

Conclusions
- Streamroller
  - Efficient mapping of stream programs to multicore
  - Coarse grain software pipelining
- Performance summary
  - 14.7x speedup on 16 cores
  - Up to 35% better than greedy solution (11% on average)
- Scheduling framework
  - Tradeoff memory space vs. load balance
    - Memory constrained (embedded) systems
    - Cache based system


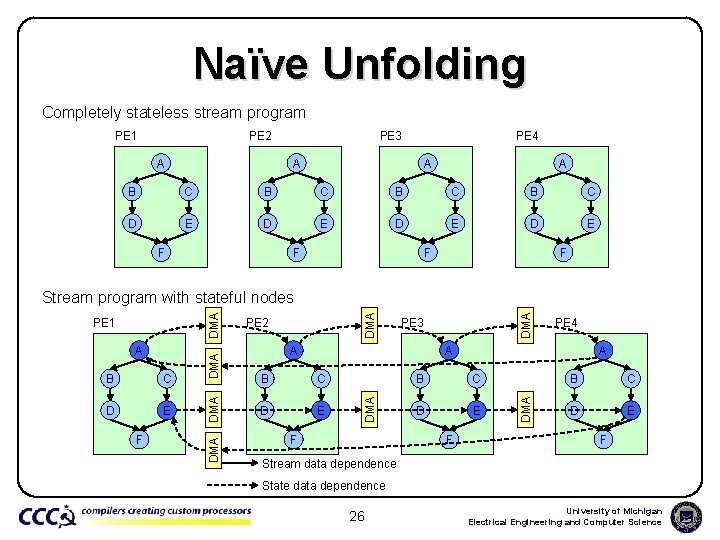

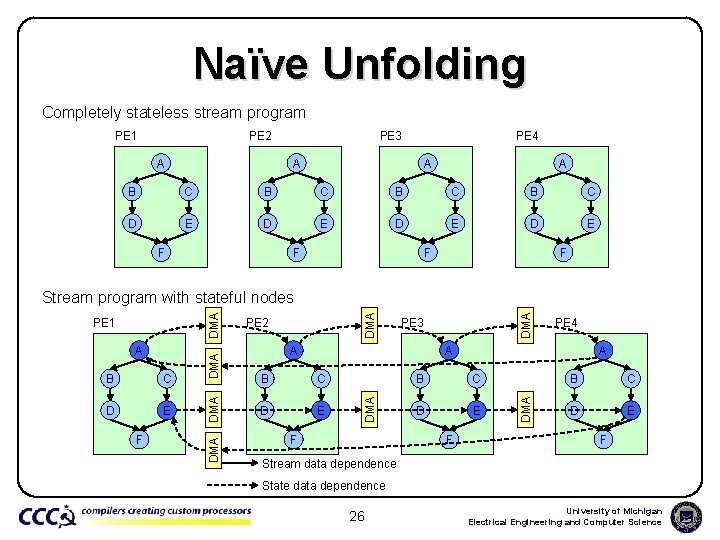

Naïve Unfolding

[Figure: two cases on PE 1 to PE 4. For a completely stateless stream program, each PE runs its own copy of the filters A to F. For a stream program with stateful nodes, the copies are linked by DMA transfers between PEs. Legend: stream data dependence, state data dependence.]

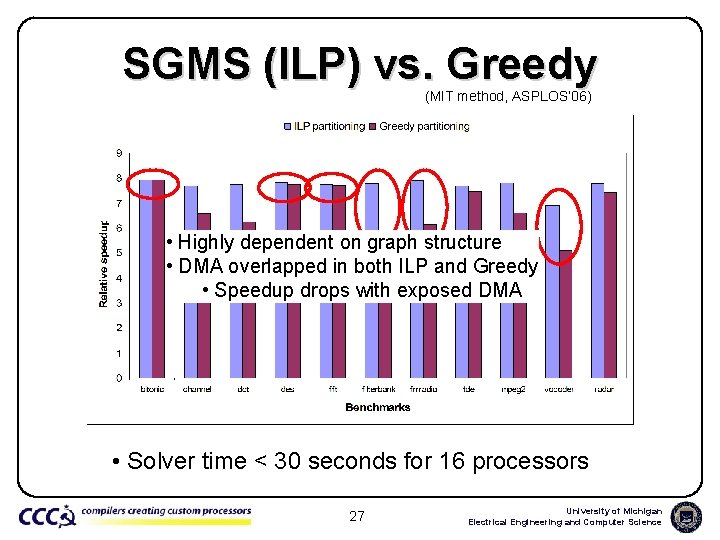

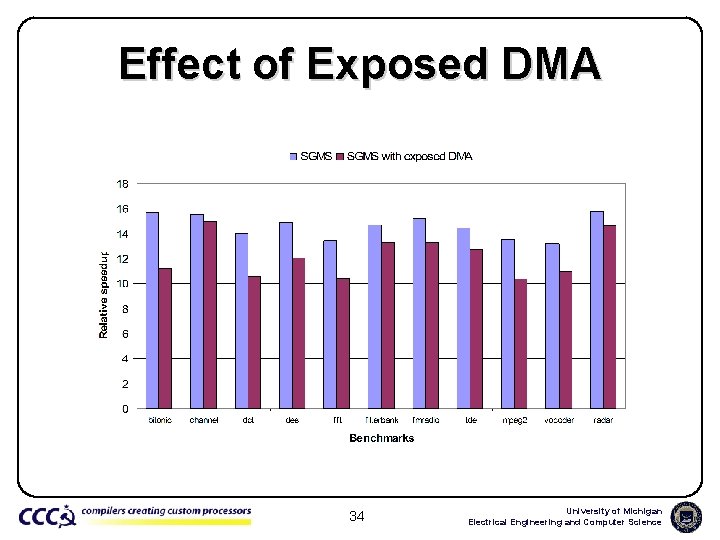

SGMS (ILP) vs. Greedy (MIT method, ASPLOS ’06)
- Highly dependent on graph structure
- DMA overlapped in both ILP and Greedy
- Speedup drops with exposed DMA
- Solver time < 30 seconds for 16 processors

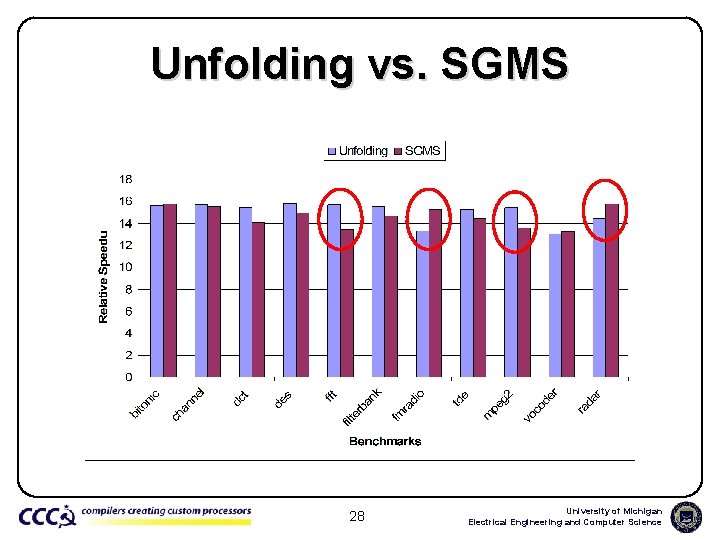

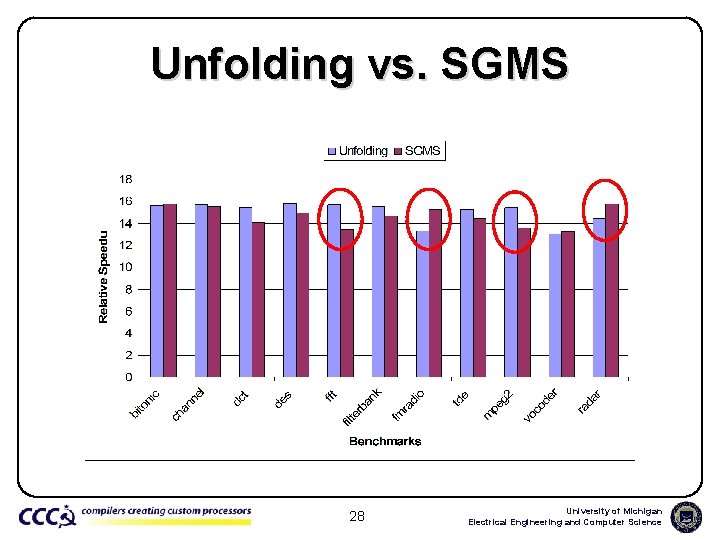

Unfolding vs. SGMS

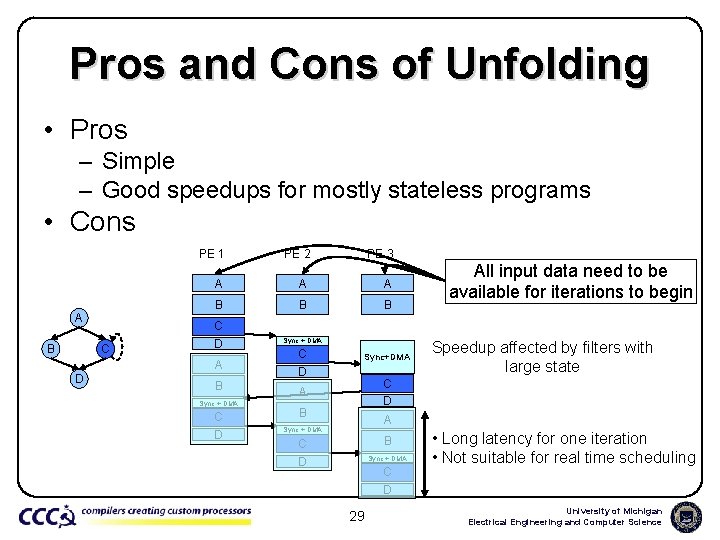

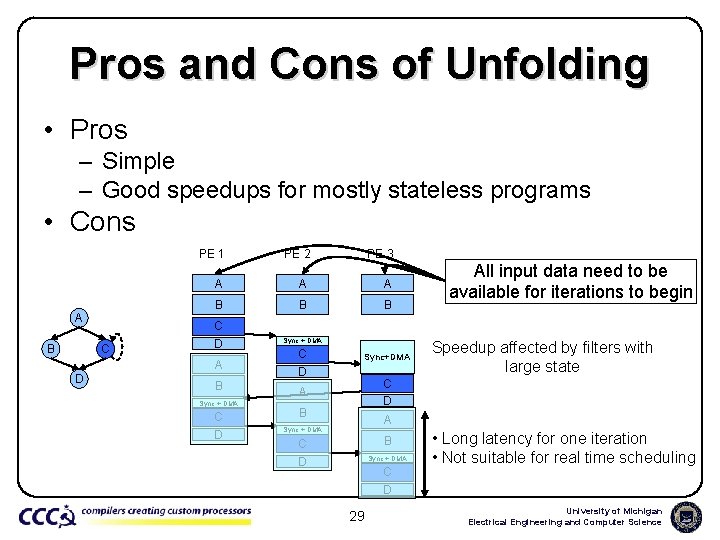

Pros and Cons of Unfolding
- Pros
  - Simple
  - Good speedups for mostly stateless programs
- Cons
  - All input data need to be available for iterations to begin
  - Speedup affected by filters with large state
  - Long latency for one iteration
  - Not suitable for real time scheduling

[Figure: filters A to D unfolded across PE 1 to PE 3, with Sync + DMA steps between successive iterations.]

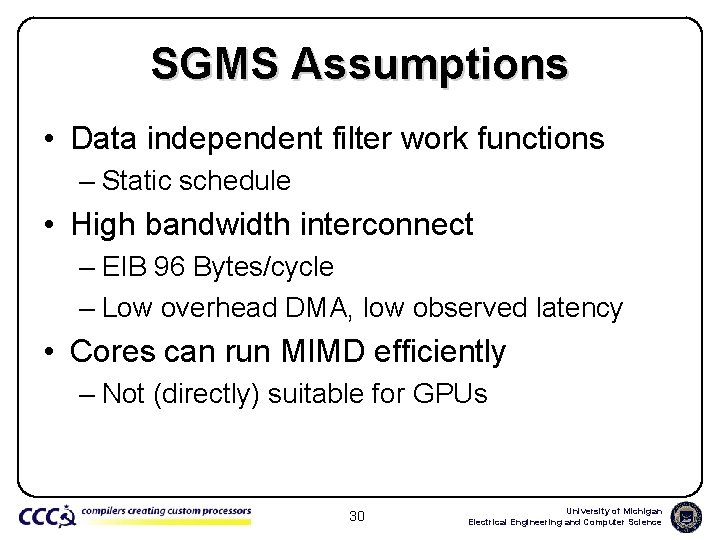

SGMS Assumptions
- Data independent filter work functions
  - Static schedule
- High bandwidth interconnect
  - EIB: 96 bytes/cycle
  - Low overhead DMA, low observed latency
- Cores can run MIMD efficiently
  - Not (directly) suitable for GPUs

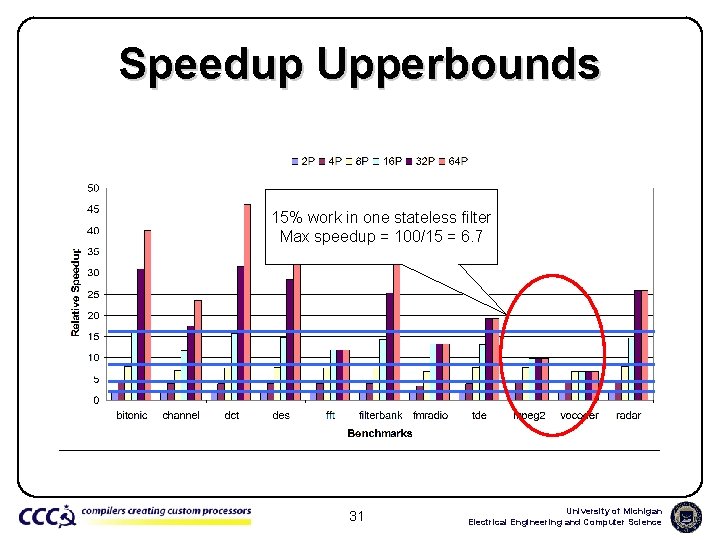

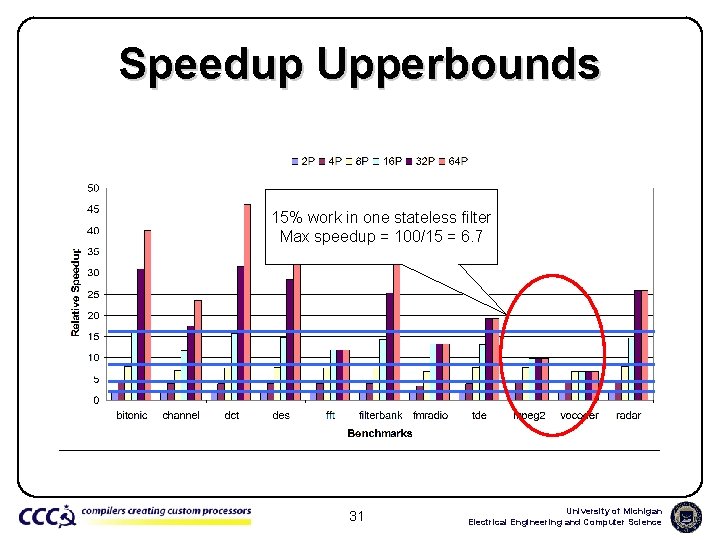

Speedup Upper Bounds

[Figure: speedup upper bounds chart.]

- 15% work in one stateless filter
- Max speedup = 100/15 ≈ 6.7
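
The bound is the ratio of total work to the work of the largest filter that is not divided further, since that filter alone fixes a lower limit on the time per iteration. A minimal sketch of the arithmetic, with a hypothetical function name:

```c
#include <assert.h>

/* Upper bound on speedup when one filter that is not divided further
 * accounts for largest_filter_work out of total_work. */
double speedup_upper_bound(double total_work, double largest_filter_work)
{
    assert(largest_filter_work > 0.0 && largest_filter_work <= total_work);
    return total_work / largest_filter_work;
}
```

With the slide's numbers, `speedup_upper_bound(100.0, 15.0)` is 100/15, which rounds to 6.7 regardless of how many PEs are available.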

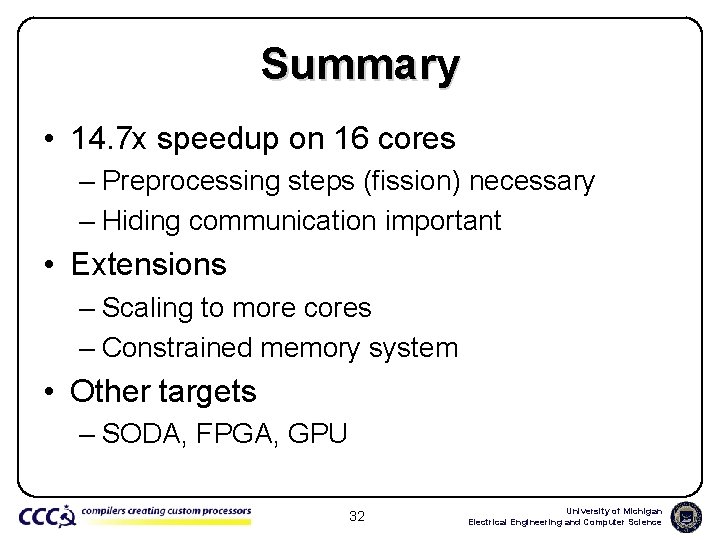

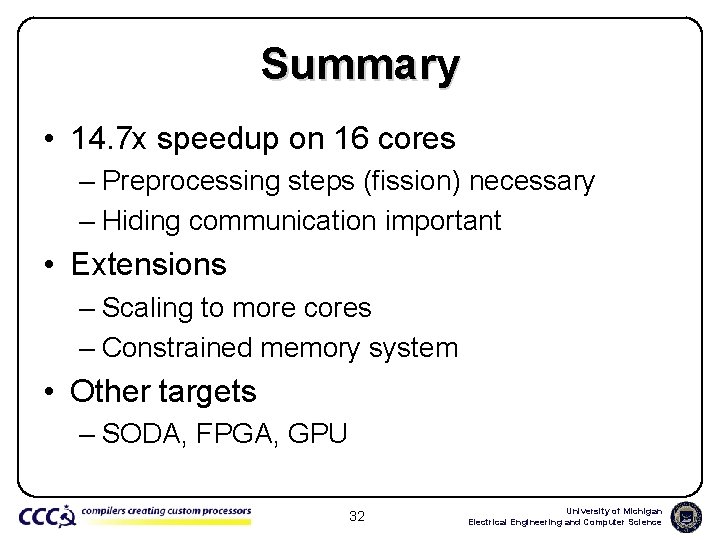

Summary
- 14.7x speedup on 16 cores
  - Preprocessing steps (fission) necessary
  - Hiding communication important
- Extensions
  - Scaling to more cores
  - Constrained memory system
- Other targets
  - SODA, FPGA, GPU

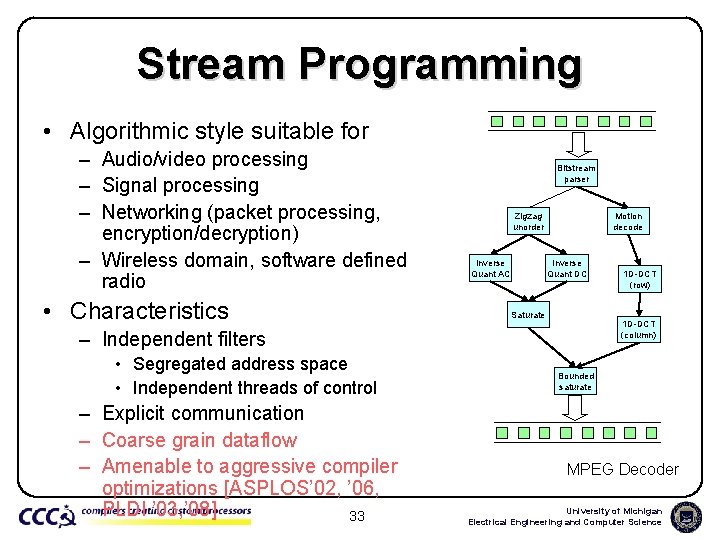

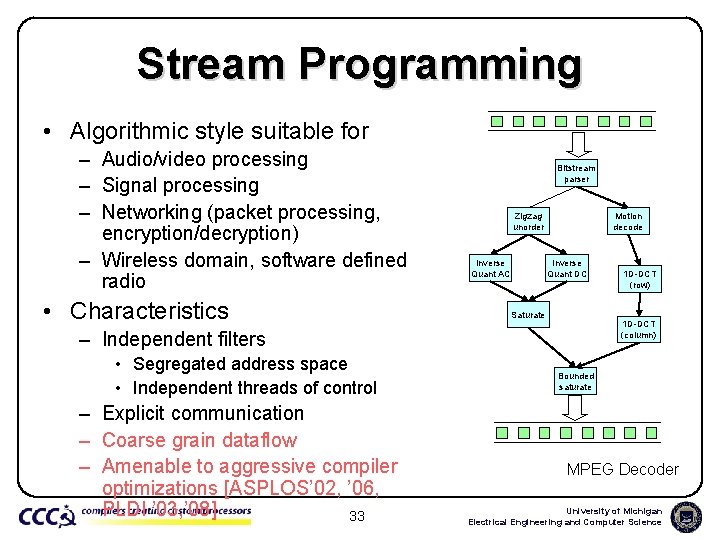

Stream Programming
- Algorithmic style suitable for
  - Audio/video processing
  - Signal processing
  - Networking (packet processing, encryption/decryption)
  - Wireless domain, software defined radio
- Characteristics
  - Independent filters
    - Segregated address space
    - Independent threads of control
  - Explicit communication
  - Coarse grain dataflow
  - Amenable to aggressive compiler optimizations [ASPLOS ’02, ’06, PLDI ’03, ’08]

[Figure: MPEG decoder stream graph with the filters bitstream parser, zigzag unorder, inverse quant AC, inverse quant DC, motion decode, saturate, 1D-DCT (row), 1D-DCT (column), and bounded saturate.]

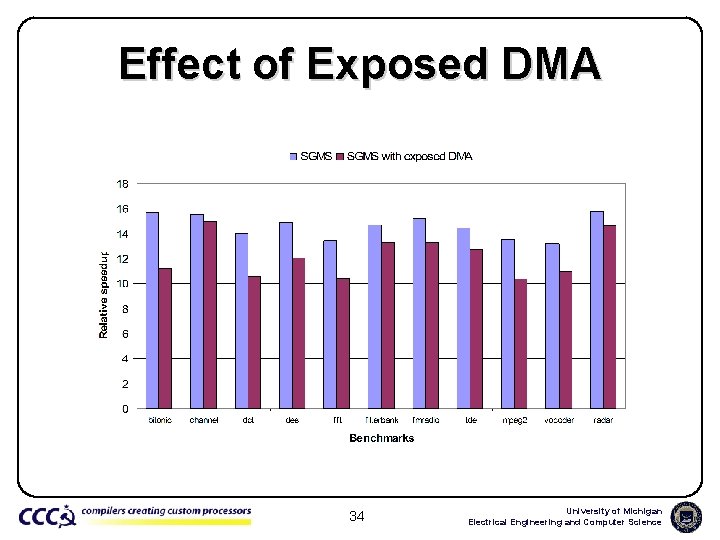

Effect of Exposed DMA

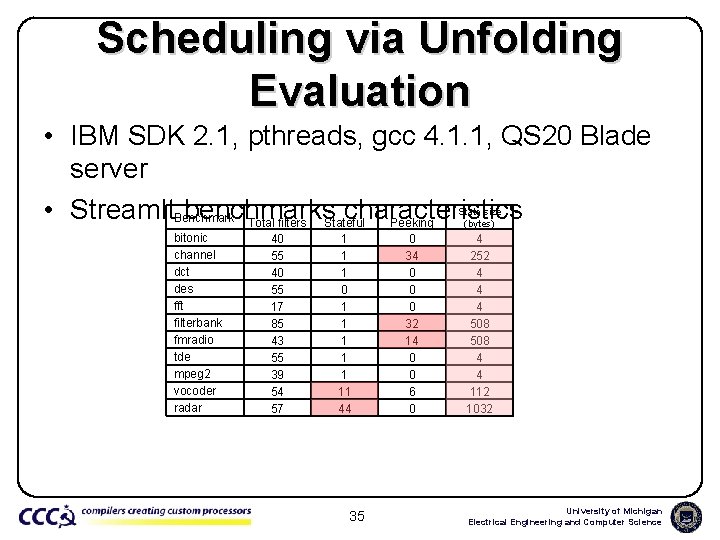

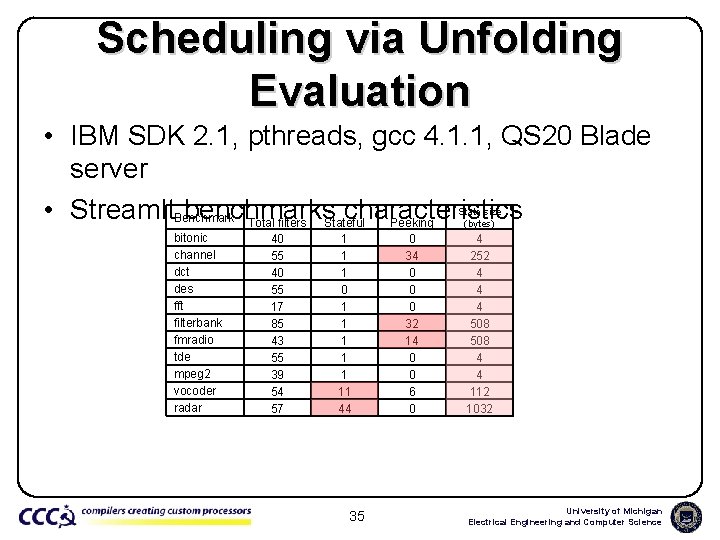

Scheduling via Unfolding Evaluation
- IBM SDK 2.1, pthreads, gcc 4.1.1, QS20 blade server
- StreamIt benchmark characteristics
  - Columns: benchmark, total filters, stateful, peeking, state size (bytes)
  - Benchmarks: bitonic, channel, dct, des, fft, filterbank, fmradio, tde, mpeg2, vocoder, radar
  - Values in slide order: 40 55 17 85 43 55 39 54 57 1 1 1 0 1 1 11 44 35 0 34 0 0 0 32 14 0 0 6 0 4 252 4 4 4 508 4 4 112 1032

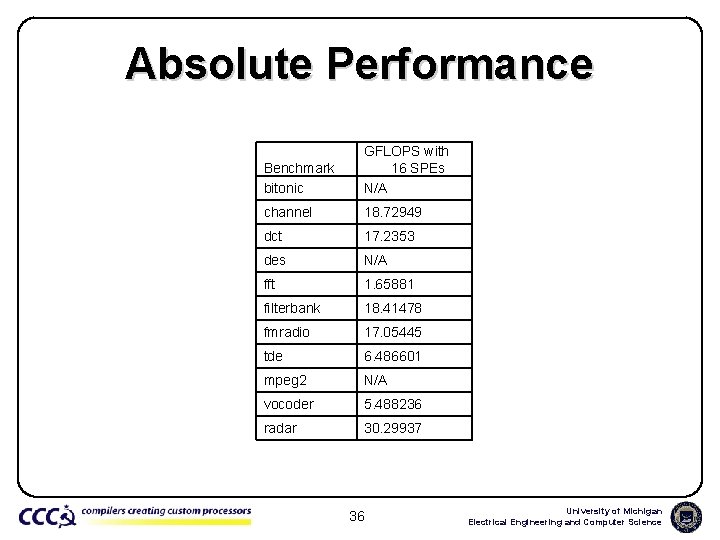

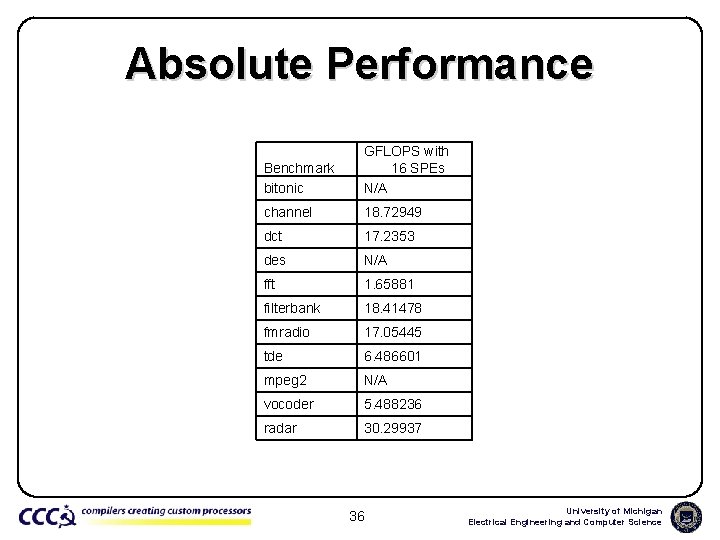

Absolute Performance

| Benchmark | GFLOPS with 16 SPEs |
|---|---|
| bitonic | N/A |
| channel | 18.72949 |
| dct | 17.2353 |
| des | N/A |
| fft | 1.65881 |
| filterbank | 18.41478 |
| fmradio | 17.05445 |
| tde | 6.486601 |
| mpeg2 | N/A |
| vocoder | 5.488236 |
| radar | 30.29937 |

Original actor (node 0_0):

$$\sum_{i=1}^{P} a_{1,0,0,i} - b_{1,0} = 0$$

Fissed 2x (nodes 1_0 to 1_3):

$$\sum_{i=1}^{P} \sum_{j=0}^{3} a_{1,1,j,i} - b_{1,1} \le M b_{1,1}$$

$$\sum_{i=1}^{P} \sum_{j=0}^{3} a_{1,1,j,i} - b_{1,1} - 2 \ge -M + M b_{1,1}$$

Fissed 3x (nodes 2_0 to 2_4):

$$\sum_{i=1}^{P} \sum_{j=0}^{4} a_{1,2,j,i} - b_{1,2} \le M b_{1,2}$$

$$\sum_{i=1}^{P} \sum_{j=0}^{4} a_{1,2,j,i} - b_{1,2} - 3 \ge -M + M b_{1,2}$$

Selection among the three versions:

$$b_{1,0} + b_{1,1} + b_{1,2} = 1$$

![SPE Code Template](https://slidetodoc.com/presentation_image/ce10f5ad5b5f394bc7224f9293b1417f/image-38.jpg)

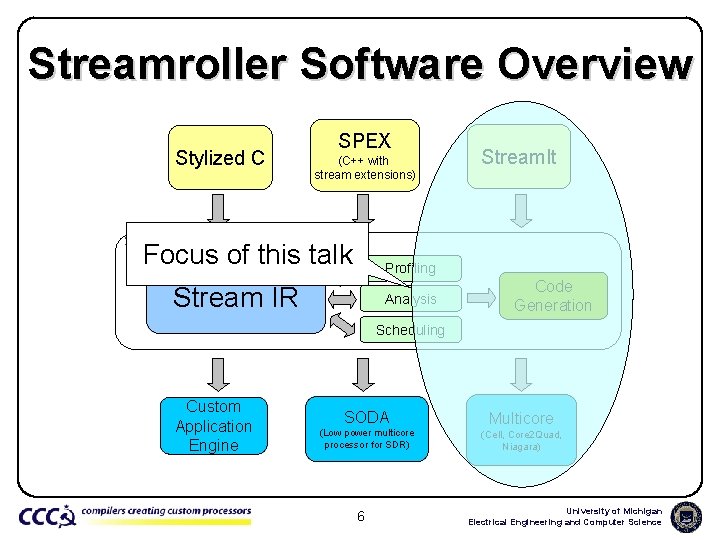

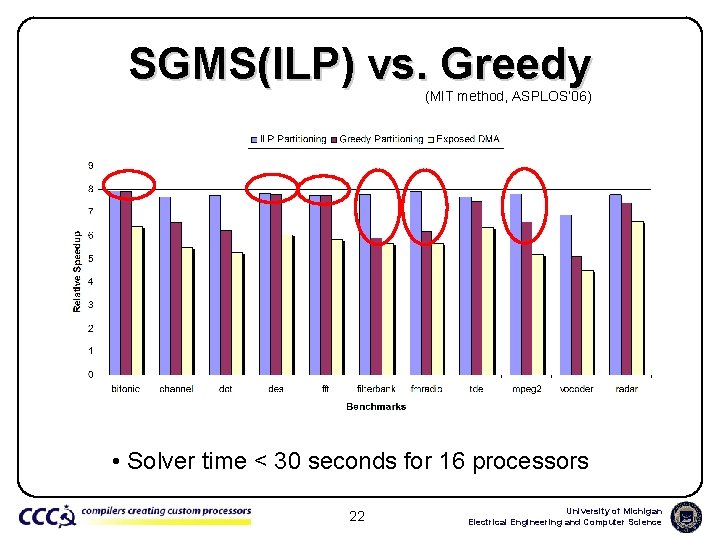

SPE Code Template

    void spe_work()
    {
      int i, j;
      char stage[N] = {0, 0, ..., 0};          /* bit mask to control active stages */
      stage[0] = 1;                             /* activate stage 0 */

      for (i = 0; i < max_iter + N - 1; i++) {  /* go through all input items */
        if (stage[N-1]) {                       /* bit mask controls what gets executed */
        }
        if (stage[N-2]) {
        }
        ...
        if (stage[0]) {
          Start_DMA();                          /* start DMA operation */
          FilterA_work();                       /* call filter work function */
        }

        /* left shift bit mask (activate more stages) */
        for (j = N - 1; j >= 1; j--)
          stage[j] = stage[j-1];
        /* stop issuing stage 0 after the last input item; done after the
           shift so that the last item still propagates to stage 1 */
        if (i == max_iter - 1) stage[0] = 0;

        wait_for_dma();                         /* poll for completion of all outstanding DMAs */
        barrier();                              /* barrier synchronization */
      }
    }
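
The prologue, steady state, and epilogue that the stage mask produces can be checked with a small host-side simulation. This is a sketch only: it replaces the stage bodies, DMA calls, and barrier with counters, and the name `simulate_stage_mask` and the `MAX_STAGES` limit are hypothetical. In this sketch the mask is shifted before stage 0 is deactivated, so that every stage runs exactly `max_iter` times.

```c
#include <assert.h>

#define MAX_STAGES 16

/* Run the stage-mask loop for n_stages stages and max_iter stream
 * iterations. runs[s] receives the number of times stage s executed.
 * Returns the number of loop trips (pipeline steps). */
int simulate_stage_mask(int n_stages, int max_iter, int *runs)
{
    char stage[MAX_STAGES] = {0};
    int trips = 0;

    assert(n_stages >= 1 && n_stages <= MAX_STAGES && max_iter >= 1);
    for (int s = 0; s < n_stages; s++)
        runs[s] = 0;
    stage[0] = 1;                               /* activate stage 0 */

    for (int i = 0; i < max_iter + n_stages - 1; i++) {
        /* Stand-in for the guarded stage bodies. */
        for (int s = n_stages - 1; s >= 0; s--)
            if (stage[s])
                runs[s]++;

        /* Shift the mask so that later stages become active. */
        for (int j = n_stages - 1; j >= 1; j--)
            stage[j] = stage[j - 1];

        /* After the last input item has entered, stop issuing stage 0. */
        if (i == max_iter - 1)
            stage[0] = 0;

        trips++;
    }
    return trips;
}
```

For 5 stages and 10 stream iterations the loop takes 14 trips: the first 4 fill the pipeline, the last 4 drain it, and each stage executes 10 times.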