Orca A language for parallel programming of distributed

Orca A language for parallel programming of distributed systems 1

Orca • Parallel language designed at VU • Design and first implementation (‘ 88 -’ 92): – Bal, Kaashoek, Tanenbaum • Portable Orca system (‘ 93 -’ 97): – Bal, Bhoedjang, Langendoen, Rühl, Jacobs, Hofman • Used by ~30 M. Sc. Students 2

Overview • Distributed shared memory • Orca – Shared data-object model – Processes – Condition synchronization – Language aspects • Examples: TSP and ASP • Implementation 3

Orca’s Programming Model • Explicit parallelism (processes) • Communication model: – Shared memory: hard to build – Distributed memory: hard to program • Idea: shared memory programming model on distributed memory machine • Distributed shared memory (DSM) 4

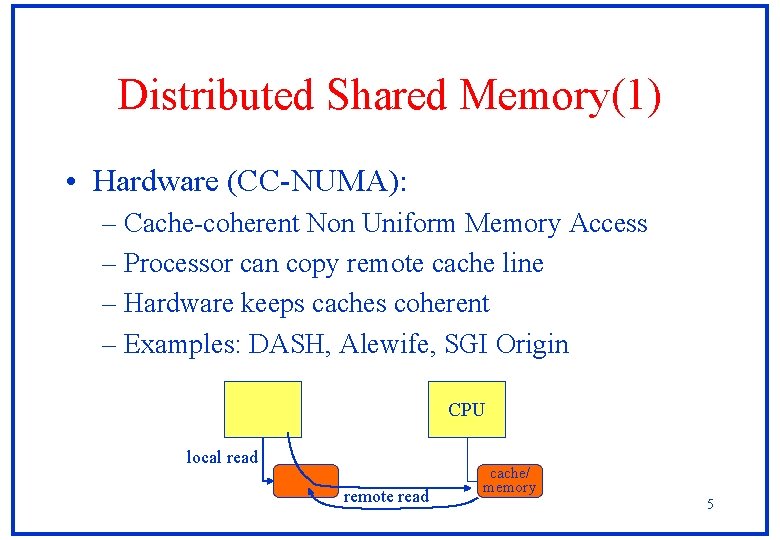

Distributed Shared Memory(1) • Hardware (CC-NUMA): – Cache-coherent Non Uniform Memory Access – Processor can copy remote cache line – Hardware keeps caches coherent – Examples: DASH, Alewife, SGI Origin CPU local read remote read cache/ memory 5

Distributed Shared Memory(2) • Operating system: – Shared virtual memory – Processor can fetch remote pages – OS keeps copies of pages coherent • User-level system – Treadmarks – Uses OS-like techniques – Implemented with mmap and signals 6

Distributed Shared Memory(3) • Languages and libraries – Do not provide flat address space – Examples: • Linda: tuple spaces • CRL: shared regions • Orca: shared data-objects 7

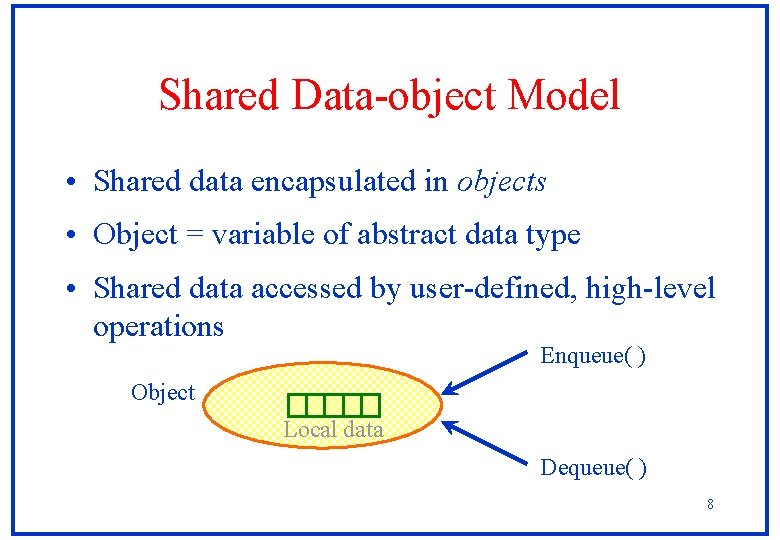

Shared Data-object Model • Shared data encapsulated in objects • Object = variable of abstract data type • Shared data accessed by user-defined, high-level operations Enqueue( ) Object Local data Dequeue( ) 8

Semantics • Each operation is executed atomically – As if operations were executed 1 at a time – Mutual exclusion synchronization – Similar to monitors • Each operation applies to single object – Allows efficient implementation – Atomic operations on multiple objects are seldom needed and hard to implement 9

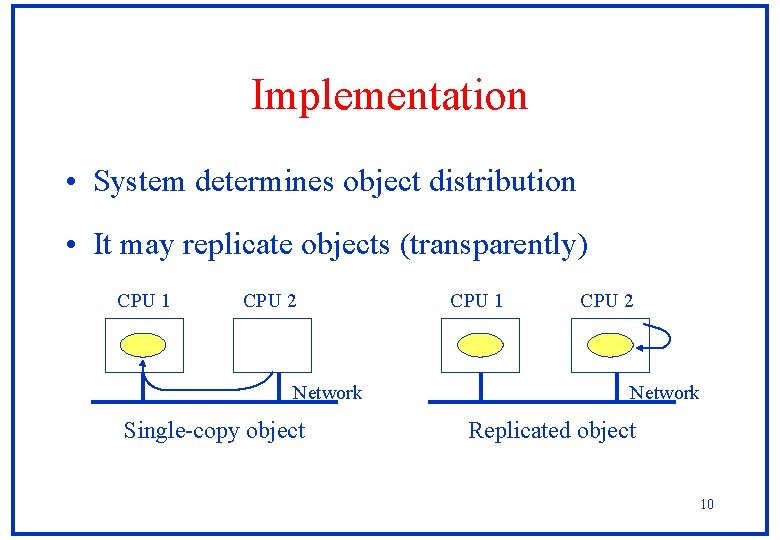

Implementation • System determines object distribution • It may replicate objects (transparently) CPU 1 CPU 2 Network Single-copy object CPU 1 CPU 2 Network Replicated object 10

Object Types • Abstract data type • Two parts: 1 Specification part • ADT operations 2 Implementation part • Local data • Code for operations • Optional initialization code 11

Example: Intobject • Specification part object specification Int. Object; operation Value(): integer; operation Assign(Val: integer); operation Min(Val: integer); end; 12

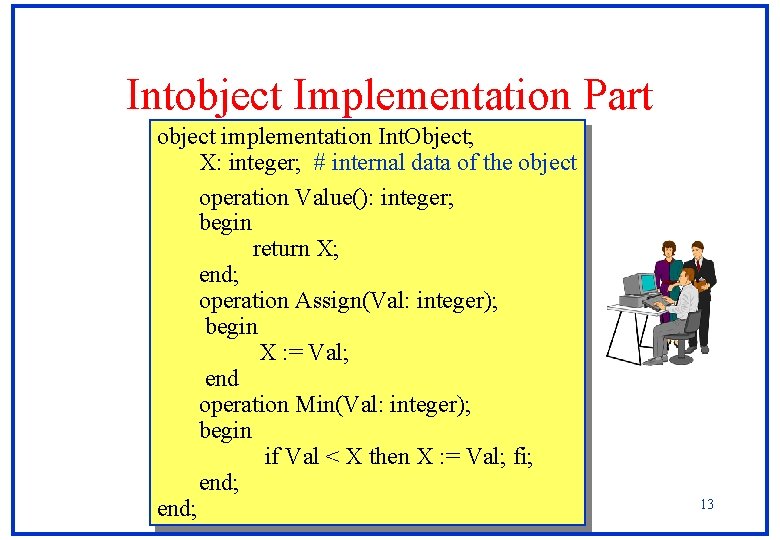

Intobject Implementation Part object implementation Int. Object; X: integer; # internal data of the object operation Value(): integer; begin return X; end; operation Assign(Val: integer); begin X : = Val; end operation Min(Val: integer); begin if Val < X then X : = Val; fi; end; 13

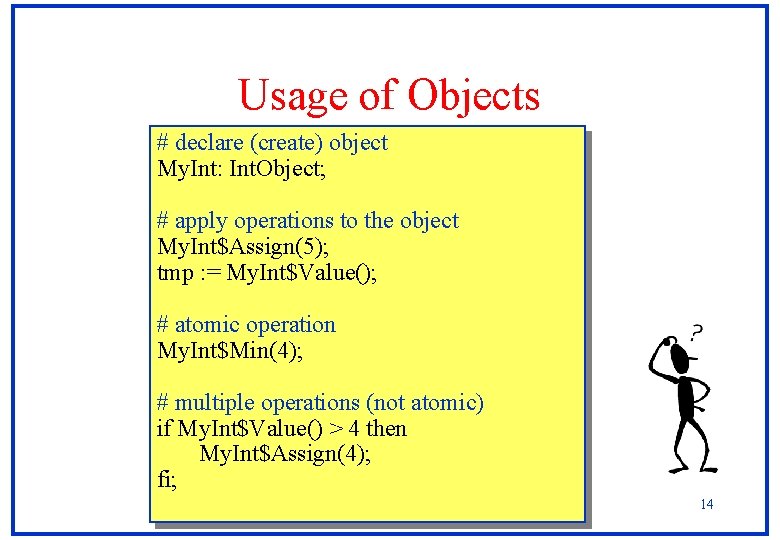

Usage of Objects # declare (create) object My. Int: Int. Object; # apply operations to the object My. Int$Assign(5); tmp : = My. Int$Value(); # atomic operation My. Int$Min(4); # multiple operations (not atomic) if My. Int$Value() > 4 then My. Int$Assign(4); fi; 14

Parallelism • Expressed through processes – Process declaration: defines behavior – Fork statement: creates new process • Object made accessible by passing it as shared parameter (call-by-reference) • Any other data structure can be passed by value (copied) 15

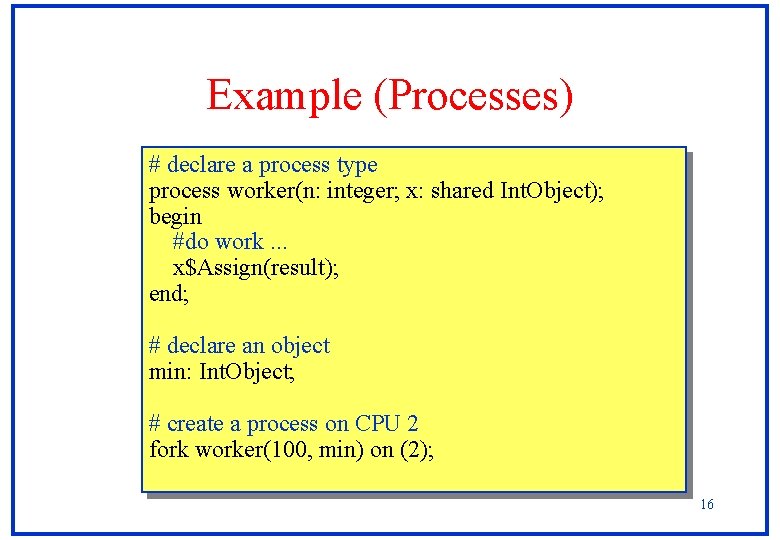

Example (Processes) # declare a process type process worker(n: integer; x: shared Int. Object); begin #do work. . . x$Assign(result); end; # declare an object min: Int. Object; # create a process on CPU 2 fork worker(100, min) on (2); 16

Structure of Orca Programs • Initially there is one process (Orca. Main) • A process can create child processes and share objects with them • Hierarchy of processes communicating through objects • No lightweight treads 17

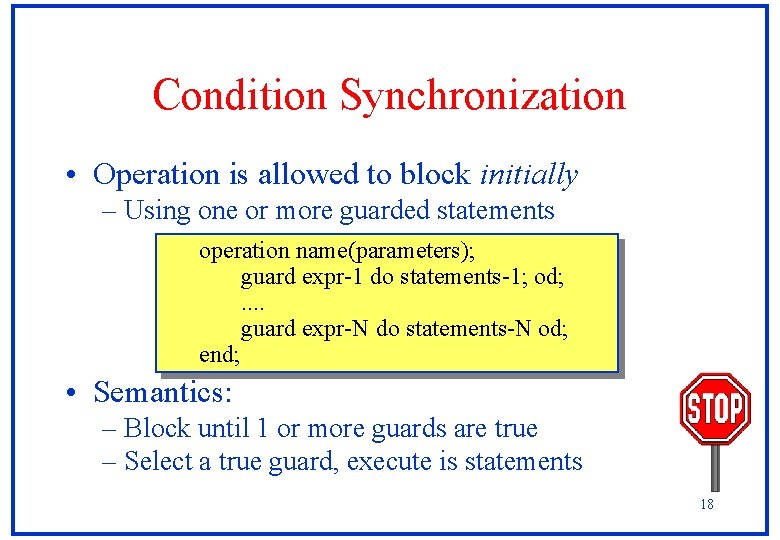

Condition Synchronization • Operation is allowed to block initially – Using one or more guarded statements operation name(parameters); guard expr-1 do statements-1; od; . . guard expr-N do statements-N od; end; • Semantics: – Block until 1 or more guards are true – Select a true guard, execute is statements 18

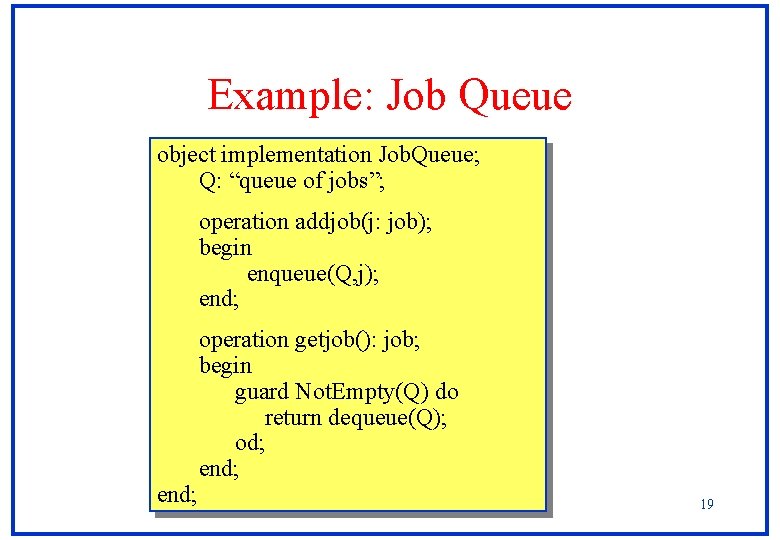

Example: Job Queue object implementation Job. Queue; Q: “queue of jobs”; operation addjob(j: job); begin enqueue(Q, j); end; operation getjob(): job; begin guard Not. Empty(Q) do return dequeue(Q); od; end; 19

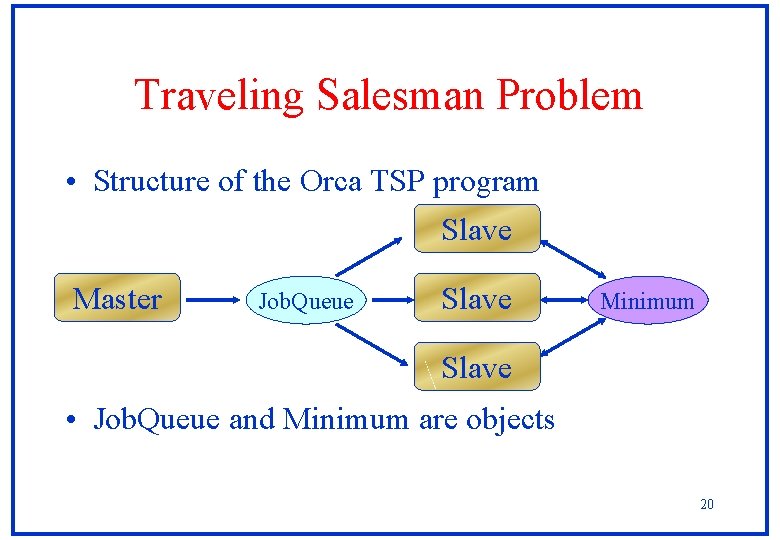

Traveling Salesman Problem • Structure of the Orca TSP program Slave Master Job. Queue Slave Minimum Slave • Job. Queue and Minimum are objects 20

Language Aspects (1) • Syntax somewhat similar to Modula-2 • Standard types: – Scalar (integer, real) – Dynamic arrays – Records, unions, sets, bags – Graphs • Generic types (as in Ada) • User-defined abstract data types 21

Language Aspects (2) • No global variables • No pointers • Type-secure – Every error against the language rules will be detected by the compiler or runtime system • Not object-oriented – No inheritance, dynamic binding, polymorphism 22

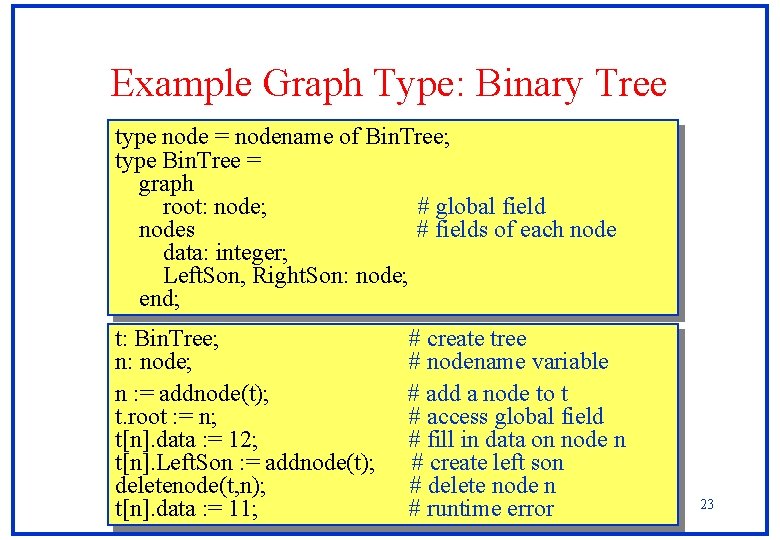

Example Graph Type: Binary Tree type node = nodename of Bin. Tree; type Bin. Tree = graph root: node; # global field nodes # fields of each node data: integer; Left. Son, Right. Son: node; end; t: Bin. Tree; n: node; n : = addnode(t); t. root : = n; t[n]. data : = 12; t[n]. Left. Son : = addnode(t); deletenode(t, n); t[n]. data : = 11; # create tree # nodename variable # add a node to t # access global field # fill in data on node n # create left son # delete node n # runtime error 23

Performance Issues • Orca provides high level of abstraction easy to program hard to understand performance behavior • Example: X$foo() can either result in: – function call (if X is not shared) – monitor call (if X is shared and stored locally) – remote procedure call (if X is stored remotely) – broadcast (if X is replicated) 24

Performance model • The Orca system will: – replicate objects with a high read/write-ratio – store nonreplicated object on ``best’’ location • Communication is generated for: – writing replicated object (broadcast) – accessing remote nonreplicated object (RPC) • Programmer must think about locality 25

Summary of Orca • Object-based distributed shared memory – Hides the underlying network from the user – Applications can use shared data • Language is especially designed for distributed systems • User-defined, high-level operations 26

- Slides: 26