Oracle Solutions for Data Archiving Luca Canali CERNITDM

- Slides: 14

Oracle Solutions for Data Archiving Luca Canali, CERN/IT-DM Distributed Database Operations Workshop November 11 th, 2008 CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Data life cycle management - WHAT • Use cases ultimately come from the experiments – Typically require removal of large chunks of data on a time-based selection – Related use case: put read only part of data CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Data life cycle management - WHY • Manageability – A database should not become a ‘data dump’ – Very large DBs are hard to manage • Performance – Some application-critical SQL statements may not scale well (full scans, hash joins, etc) – More and more attention to SQL tuning is needed with very large tables • Cost – When the DB grows extra HW resources are needed to maintain the service levels • ex: extra spindles to provide IOPS to read stale data CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Why it’s hard • There is no default Oracle mechanism to archive parts of applications – It must be custom implemented – Rules must be set in place by the ‘data owners’ on how to move and restore • What can be of help – Oracle features for bulk data movement – Typically require DBA privileges – Additional HW to hold ‘offline data’ CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Oracle and large data movements • Normal DML is too slow – delete operations in particular • Alternatives – – CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it DDL such as partition exchange/movement Transportablespace CTAS (create table a select) Datapump

Oracle and large data sets • Data (tables) need to have the possibility to allow extracting ‘chunks’ in a time-based manner – Oracle partitioning by time is a possibility – Beware of many limitations of partitioning (it’s not a magic wand) – Do-it-yourself partitioning by generating multiple tables/schema every year (for example) CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Additional challenge • Lookup tables/metadata is needed too – Besides the main table(s) to archive – Can be a large number of tables – Not easy to identify a ‘consistent set’ to archive CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Technology • Main requirements – Must be able to restore the data if and when need (over a time scale of 10 years, i. e. 3 -4 Oracle versions) – Must keep track of what is where – Should use one of the few Oracle technologies for moving large chunks of data • Possible solutions – Move data to a specialized Oracle database to collect ‘application chunks’ – Export to files (flat files or datapump) is not considered reliable given current experience CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Possible Scenarios • Scenario 1 – Application owners identify consistent sets of data to be moved – Identified data sets are moved by the DBAs – Data is then dropped from production. – requires considerable effort from the developers – gives the cleaner result CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Possible Scenarios • Scenario 2 – A clone (complete copy) of the selected applications is taken (for example at the end of each year) and archived according to the chosen archive technology – Application owners then take care of deleting from production trusting that data is archived and kept. – easy to implement – more data than strictly needed is copied CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

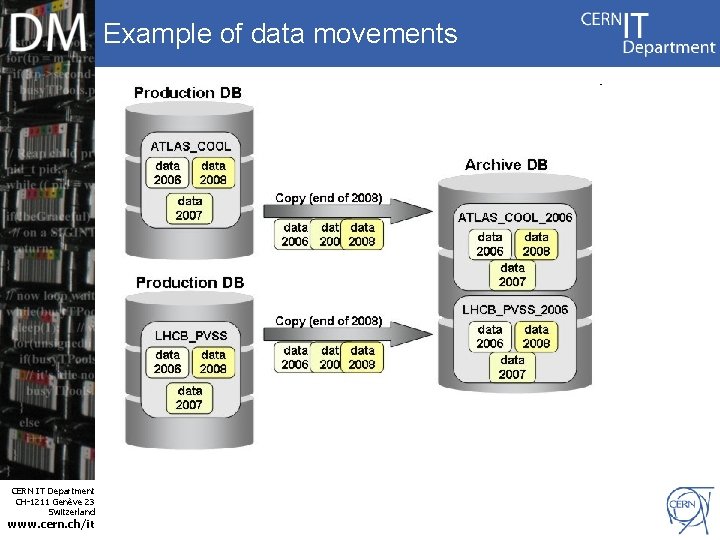

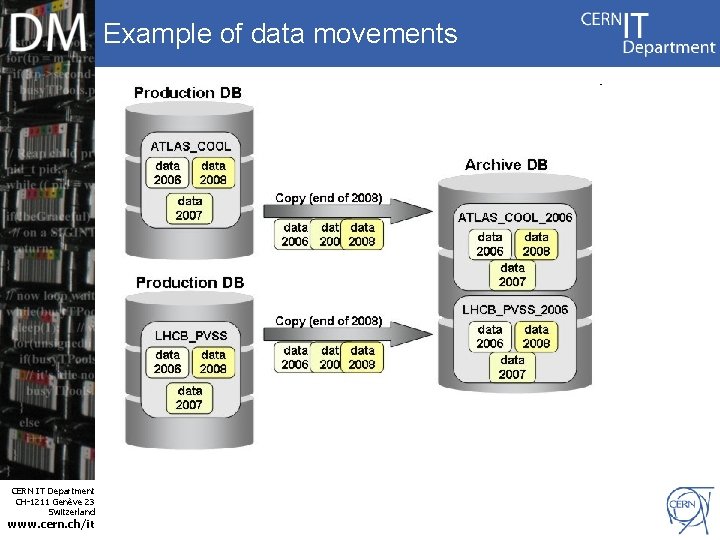

Example of data movements CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

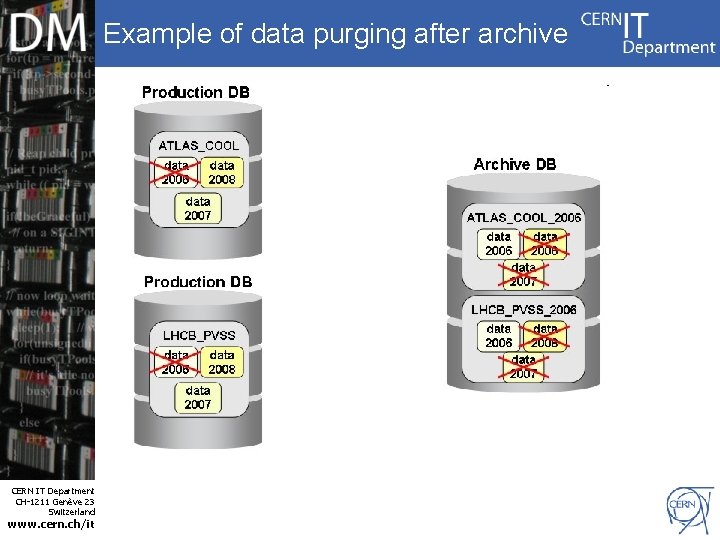

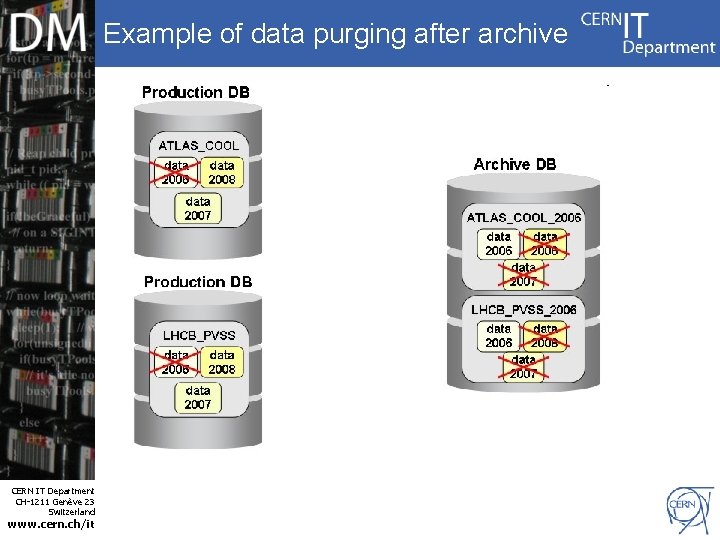

Example of data purging after archive CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Conclusions • LHC databases are growing fast – Offline data for significant performance and cost gains – Mechanisms for offlining are application dependent • DB services and ideas to provide tools to move and restore data – A large Oracle ‘Archive DB’ could be part of the solution CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it

Q&A Thank you CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it