
Oracle EXADATA and ZFS NAS Appliance for Faster Platform as a Service

Pranilesh Chand
Lead Engineer - Database
Oracle Certified Professional 9i, 10g, 11g
Speciality: Performance Tuning, Oracle RAC/HA, Oracle Exadata, Disaster Recovery

Agenda
• Technology Overview
• Why We Chose This Solution
• How We Did It
• Issues
• Q&A

Sun Oracle Database Machine
• 2 Sun Fire X4170 Oracle Database servers
• 3 Exadata Storage Servers (all SAS or all SATA)
• 2 Sun Datacenter InfiniBand Switches
• Preconfigured, out-of-the-box high-performance machine
• Balanced performance configuration
• Oracle Database 11.2 preinstalled
• No ASM Instance
• Smart Scan

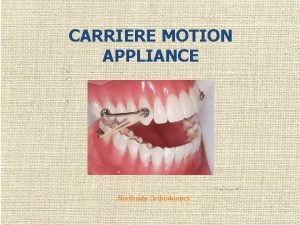

Sun ZFS Storage 7420
• Only NAS appliance that supports Hybrid Columnar Compression
• Cluster-ready system for HA
• Can be easily integrated with Oracle Database
• ZFS filesystem
• Inline deduplication and compression to save space
• Filesystem snapshots and cloning

Why We Chose This Solution

Problem Presented
• Performance slowness
  • Batch jobs taking 9 hrs to run
  • User experience affected, as some users had to work on weekends
  • Database backups
  • OLTP reports unable to run; they time out
  • Some important reports had to run on weekends, as they took 14 hrs
• No high availability
• Database refresh
  • Takes almost 16-20 hrs to refresh

Problem Analysis
Performance
• Database I/O bound
• Server low on RAM
Environment Refresh
Cost

Solution Accepted
• Platform as a Service for the customer
• Oracle Exadata for production DB hosting
  • Consolidation of all production databases
• Sun ZFS and Sun Fire servers for the test/dev environment
  • RAC environment to be created
  • Consolidation of all test/dev databases

Exadata Benefits
Data Warehouse Jobs
• From 9 hrs to 90 mins
• From 8 hrs to 40 mins
• ETL now runs in minutes instead of hours
OLTP Performance
• Reports run in minutes instead of hours
Business Improvement
• Staff engagement
• Cost
Faster RMAN Backup
Consolidation
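Converting the two data warehouse job timings above to minutes gives their speedup factors. This uses only the figures quoted on this slide and implies no other measurements:

```latex
\text{speedup} = \frac{t_{\text{before}}}{t_{\text{after}}}, \qquad
\frac{9 \times 60\ \text{min}}{90\ \text{min}} = \frac{540}{90} = 6, \qquad
\frac{8 \times 60\ \text{min}}{40\ \text{min}} = \frac{480}{40} = 12
```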

Oracle ZFS Appliance Benefits
• Hybrid Columnar Compression
• Quick refresh of test/dev from the standby database
• Consolidated platform for test databases
• Attached to Exadata via IB
• Cloned DBs require very little space

How We Did It

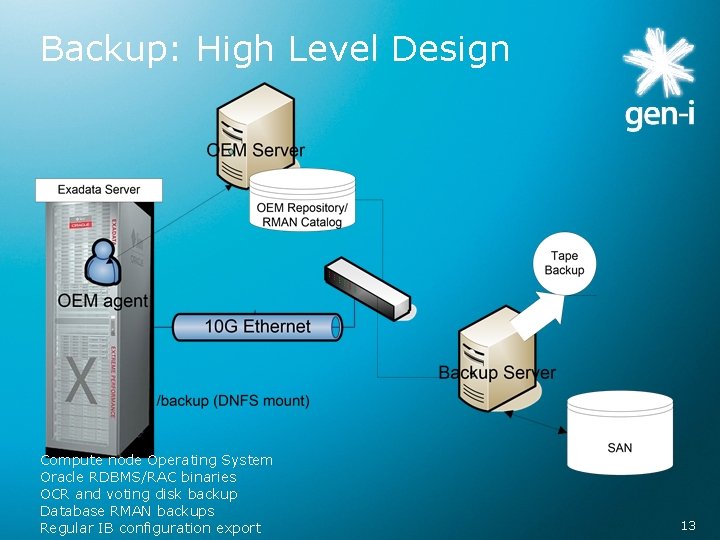

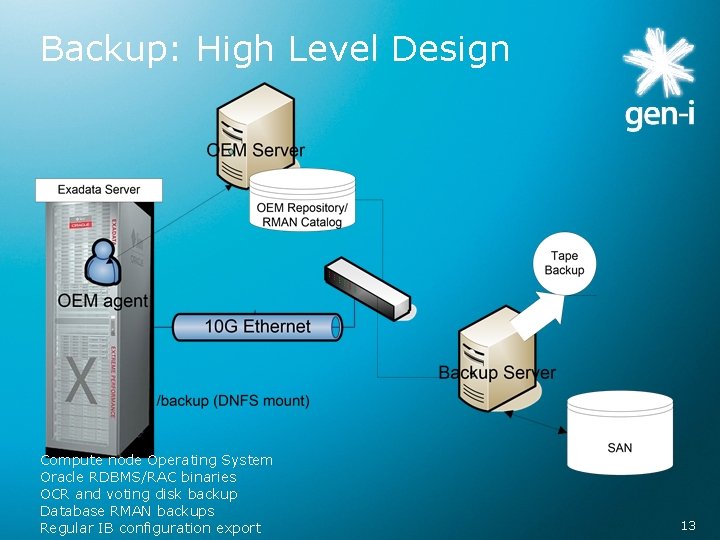

Backup: High Level Design
• Compute node operating system
• Oracle RDBMS/RAC binaries
• OCR and voting disk backup
• Database RMAN backups
• Regular IB configuration export

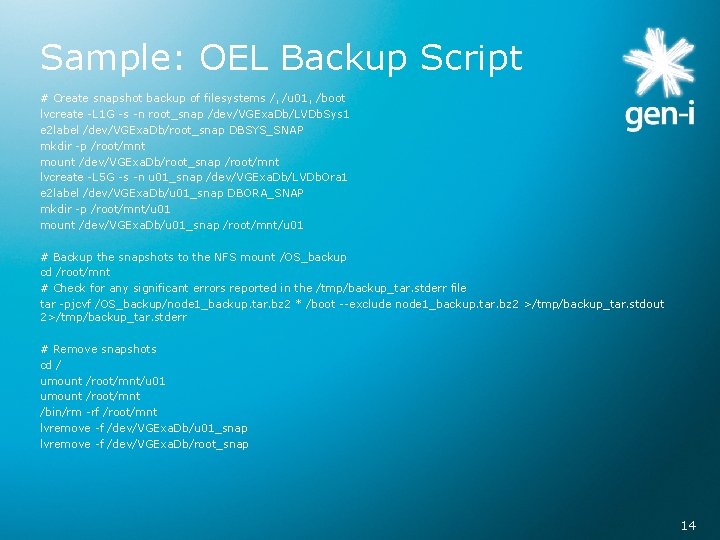

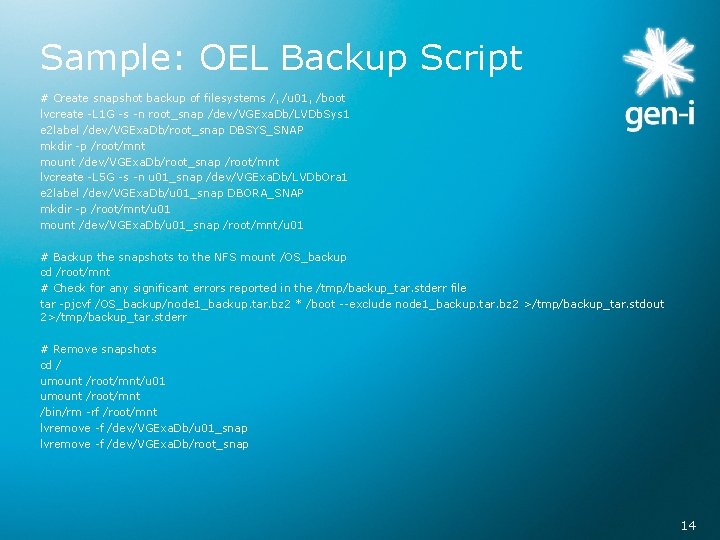

Sample: OEL Backup Script

    # Create LVM snapshots of / and /u01 (/boot is archived directly below)
    lvcreate -L 1G -s -n root_snap /dev/VGExaDb/LVDbSys1
    e2label /dev/VGExaDb/root_snap DBSYS_SNAP
    mkdir -p /root/mnt
    mount /dev/VGExaDb/root_snap /root/mnt
    lvcreate -L 5G -s -n u01_snap /dev/VGExaDb/LVDbOra1
    e2label /dev/VGExaDb/u01_snap DBORA_SNAP
    mkdir -p /root/mnt/u01
    mount /dev/VGExaDb/u01_snap /root/mnt/u01

    # Back up the snapshots and /boot to the NFS mount /OS_backup
    cd /root/mnt
    tar -pjcvf /OS_backup/node1_backup.tar.bz2 * /boot --exclude node1_backup.tar.bz2 \
        >/tmp/backup_tar.stdout 2>/tmp/backup_tar.stderr
    # Check for any significant errors reported in the /tmp/backup_tar.stderr file

    # Remove the snapshots
    cd /
    umount /root/mnt/u01
    umount /root/mnt
    /bin/rm -rf /root/mnt
    lvremove -f /dev/VGExaDb/u01_snap
    lvremove -f /dev/VGExaDb/root_snap
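The backup script leaves the "check for significant errors" step to the operator. A minimal sketch of that check follows, assuming GNU tar. The function name `check_tar_stderr` and its allow-list of benign messages are hypothetical. The "Removing leading" notice is expected because `/boot` is archived by absolute path. Adjust the list for your environment.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the original script): print tar stderr lines
# that are not on a small allow-list of benign GNU tar warnings.
# Returns 0 if nothing significant is found, 1 if something is, 2 if the file is unreadable.
check_tar_stderr() {
  local errfile="${1:-/tmp/backup_tar.stderr}"
  local significant
  if [ ! -r "$errfile" ]; then
    echo "cannot read $errfile" >&2
    return 2
  fi
  # Assumed benign: the leading-'/' notice (from archiving /boot by absolute
  # path) and skipped sockets. Everything else is reported.
  significant=$(grep -v -e 'Removing leading' -e 'socket ignored' "$errfile" || true)
  if [ -n "$significant" ]; then
    printf '%s\n' "$significant"
    return 1
  fi
  return 0
}
```

Run it right after the tar step, for example `check_tar_stderr /tmp/backup_tar.stderr || echo "review the OS backup"`. This lets a scheduled job flag a bad backup instead of relying on someone reading the file.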

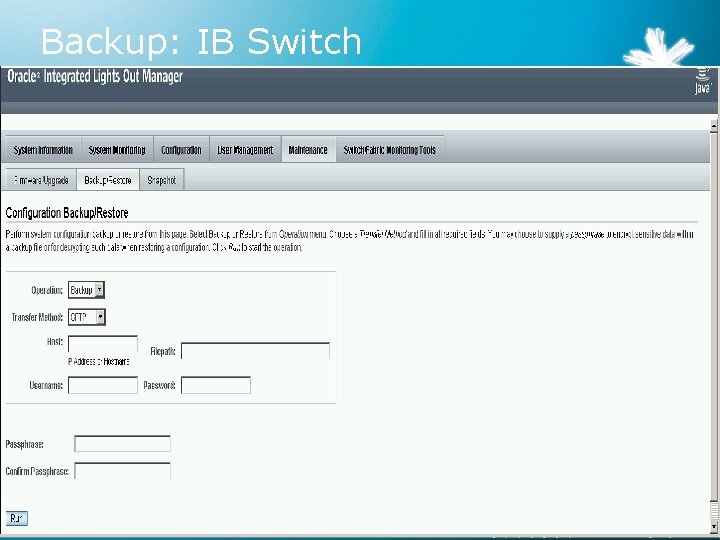

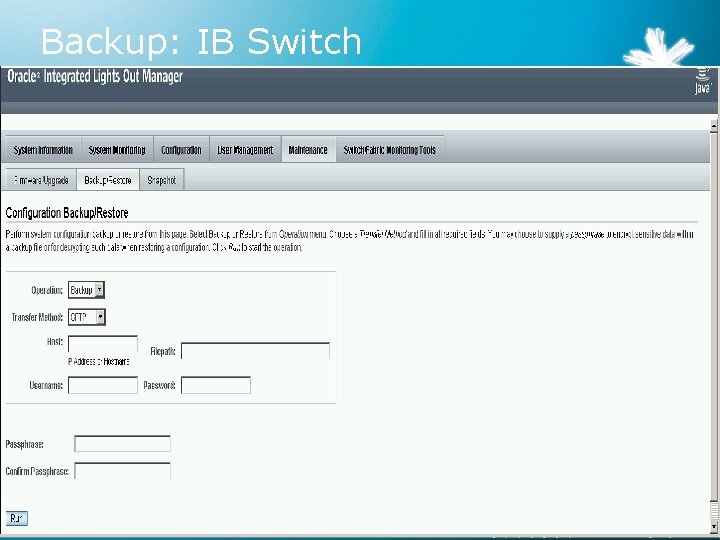

Backup: IB Switch

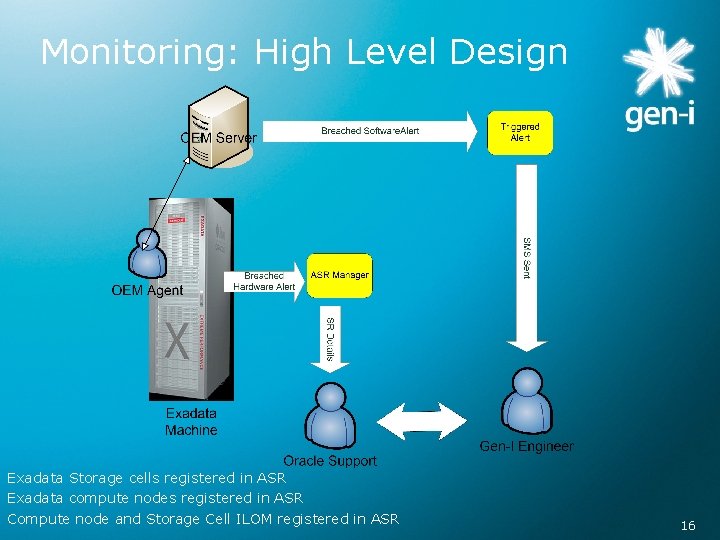

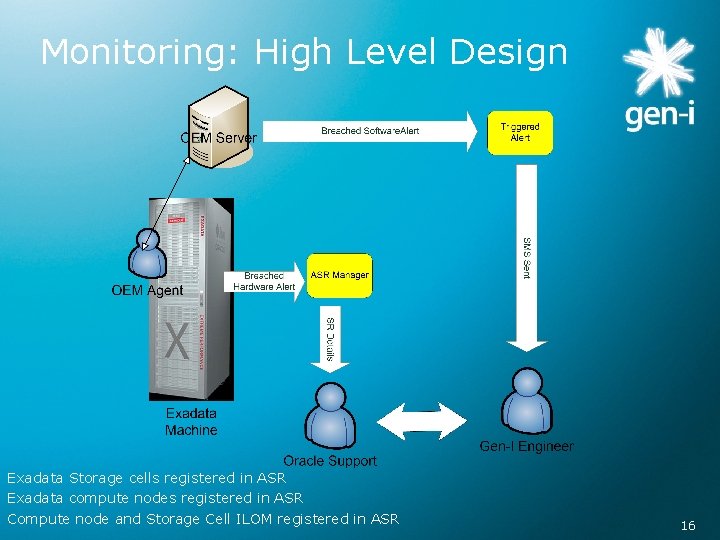

Monitoring: High Level Design
• Exadata storage cells registered in ASR (Auto Service Request)
• Exadata compute nodes registered in ASR
• Compute node and storage cell ILOMs registered in ASR

DR – High Level Design

NFS Shares Key Notes (MOS 762374.1)
• ZFS shares are mounted as NFS on the Exadata DB nodes
• Direct NFS (dNFS) is configured for each share used by the database
• To enable dNFS, run the following for each Oracle Home:

    cd $ORACLE_HOME/rdbms/lib
    make -f ins_rdbms.mk dnfs_on

• Restart each instance running from that Oracle Home
• Add the NFS shares to /etc/oranfstab for global access:

    server: heada
    path: 192.168.20.10
    export: /export/db_logs mount: /u01/app/oracle/db/reco
    server: headb
    path: 192.168.20.11
    export: /export/db_data mount: /u01/app/oracle/db/data

• Once dNFS is enabled, the following message is displayed in the alert log:
  Oracle instance running with ODM: Oracle Direct NFS ODM Library Version 2.0
• The following query can be used to check whether dNFS is in use:
  select * from v$dnfs_servers;
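With more than a couple of heads or shares, generating the oranfstab stanzas is less error-prone than typing them by hand. The following is a minimal sketch under stated assumptions. The function `oranfstab_entry` and the output file `/tmp/oranfstab.generated` are hypothetical. The stanza layout (`server:`, `path:`, then `export:` and `mount:` on one line) follows the entries shown above. Review the generated file before appending it to `/etc/oranfstab`, which requires root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print one oranfstab stanza (server name, NFS server IP
# as "path", and a single export/mount pair on one line).
oranfstab_entry() {
  local server="$1" ip="$2" export_path="$3" mount_point="$4"
  printf 'server: %s\n' "$server"
  printf 'path: %s\n' "$ip"
  printf 'export: %s mount: %s\n' "$export_path" "$mount_point"
}

# Stanzas for the two ZFS heads from the slide, written to a scratch file
# for review rather than directly to /etc/oranfstab.
{
  oranfstab_entry heada 192.168.20.10 /export/db_logs /u01/app/oracle/db/reco
  oranfstab_entry headb 192.168.20.11 /export/db_data /u01/app/oracle/db/data
} > /tmp/oranfstab.generated
```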

NFS Mount Options (RAC)

| Operating System | Mount options for Binaries | Mount options for Oracle Datafiles | Mount options for CRS Voting Disk and OCR |
|---|---|---|---|
| Sun Solaris | rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,noac,vers=3,suid | rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,noac,forcedirectio,vers=3,suid | rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3,noac,forcedirectio |
| AIX (5L) | rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,vers=3,timeo=600 | cio,rw,bg,hard,nointr,rsize=32768,wsize=32768,proto=tcp,noac,vers=3,timeo=600 | cio,rw,bg,hard,intr,rsize=32768,wsize=32768,tcp,noac,vers=3,timeo=600 |
| HPUX 11.23 | rw,bg,vers=3,proto=tcp,noac,hard,nointr,timeo=600,rsize=32768,wsize=32768,suid | rw,bg,vers=3,proto=tcp,noac,forcedirectio,hard,nointr,timeo=600,rsize=32768,wsize=32768,suid | rw,bg,vers=3,proto=tcp,noac,forcedirectio,hard,nointr,timeo=600,rsize=32768,wsize=32768,suid |
| Windows | Not Supported | Not Supported | Not Supported |
| Linux x86 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,vers=3,timeo=600,actimeo=0 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,actimeo=0,vers=3,timeo=600 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,noac,actimeo=0,vers=3,timeo=600 |
| Linux x86-64 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,vers=3,timeo=600,actimeo=0 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,actimeo=0,vers=3,timeo=600 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,noac,vers=3,timeo=600,actimeo=0 |
| Linux - Itanium | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,vers=3,timeo=600,actimeo=0 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,actimeo=0,vers=3,timeo=600 | rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp,noac,vers=3,timeo=600,actimeo=0 |

Source: MOS 359515.1
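Even with dNFS, the shares still have to be mounted by the operating system. The Exadata DB nodes here run OEL, so only the Linux x86-64 row of the mount options table matters. The following is a minimal sketch that turns that row into `/etc/fstab` lines. The function `nfs_fstab_line` and its `binaries|datafiles|crs` argument are hypothetical. The option strings are copied from the Linux x86-64 row.

```bash
#!/usr/bin/env bash
# Hypothetical helper: emit an /etc/fstab line for an NFS share using the
# Linux x86-64 RAC mount options for the given file type.
nfs_fstab_line() {
  local server="$1" export_path="$2" mount_point="$3" purpose="$4"
  local base="rw,bg,hard,nointr,rsize=32768,wsize=32768,tcp"
  local opts
  case "$purpose" in
    binaries)  opts="$base,vers=3,timeo=600,actimeo=0" ;;
    datafiles) opts="$base,actimeo=0,vers=3,timeo=600" ;;
    crs)       opts="$base,noac,vers=3,timeo=600,actimeo=0" ;;
    *) echo "unknown purpose: $purpose (use binaries|datafiles|crs)" >&2; return 1 ;;
  esac
  # fstab fields: device, mount point, type, options, dump, fsck pass
  printf '%s:%s %s nfs %s 0 0\n' "$server" "$export_path" "$mount_point" "$opts"
}
```

For example, `nfs_fstab_line 192.168.20.11 /export/db_data /u01/app/oracle/db/data datafiles` prints the datafile entry for the `headb` share from the oranfstab example. Keeping the options in one function avoids copy-paste drift between nodes.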

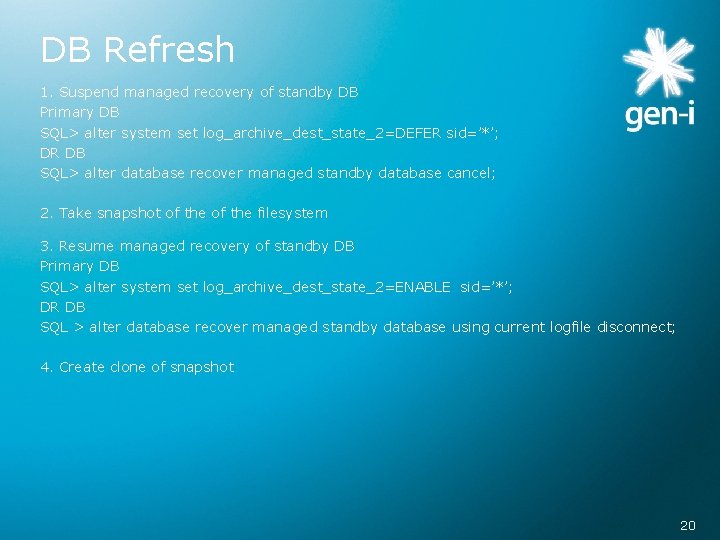

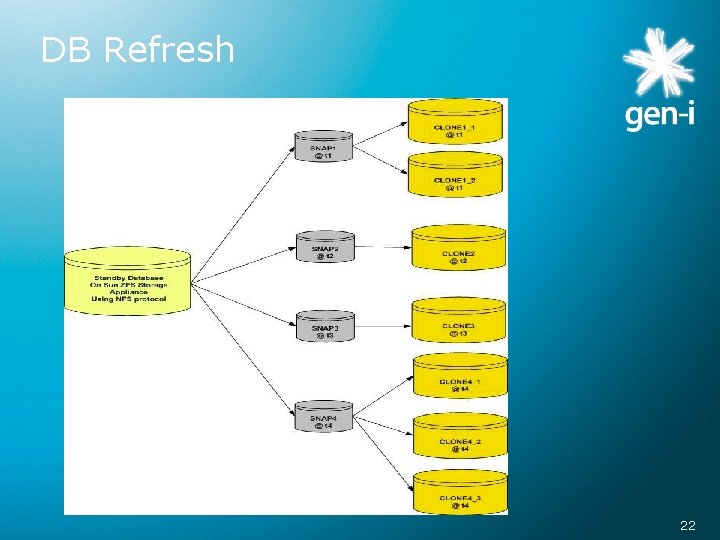

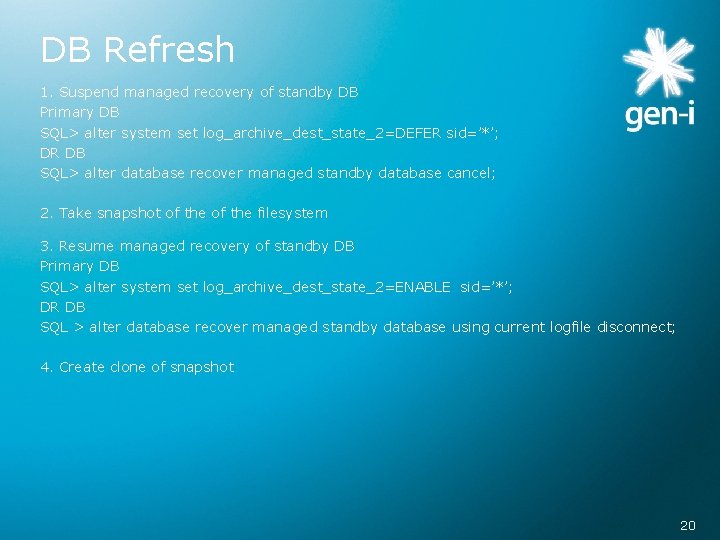

DB Refresh
1. Suspend managed recovery of the standby DB
   Primary DB: SQL> alter system set log_archive_dest_state_2=DEFER sid='*';
   DR DB: SQL> alter database recover managed standby database cancel;
2. Take a snapshot of the filesystem
3. Resume managed recovery of the standby DB
   Primary DB: SQL> alter system set log_archive_dest_state_2=ENABLE sid='*';
   DR DB: SQL> alter database recover managed standby database using current logfile disconnect;
4. Create a clone of the snapshot
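Steps 1 and 3 are easy to script so that the window around the snapshot stays short. The following is a sketch assuming, as on the slide, that `LOG_ARCHIVE_DEST_2` is the standby destination. The function `refresh_sql` is hypothetical. It only prints the SQL for each action and role. The ZFS snapshot itself (step 2) is not covered, because the slides do not show the appliance commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the SQL for step 1 (suspend) or step 3 (resume)
# for the given database role, ready to pipe into sqlplus.
refresh_sql() {
  local action="$1" role="$2"
  case "$action:$role" in
    suspend:primary) echo "alter system set log_archive_dest_state_2=DEFER sid='*';" ;;
    suspend:standby) echo "alter database recover managed standby database cancel;" ;;
    resume:primary)  echo "alter system set log_archive_dest_state_2=ENABLE sid='*';" ;;
    resume:standby)  echo "alter database recover managed standby database using current logfile disconnect;" ;;
    *) echo "usage: refresh_sql suspend|resume primary|standby" >&2; return 1 ;;
  esac
}
```

On each host, pipe the output into `sqlplus -s / as sysdba`. Run `suspend` on the primary and the DR database before the snapshot, then `resume` on both afterwards.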

DB Refresh
5. Mount the cloned filesystems on both test RAC servers
6. Create a softlink pointing to the old location of the standby redo logs, and update db_file_name_convert and log_file_name_convert
7. Start up the DB in mount state
8. Drop the standby redo logs
9. Activate the database
   SQL> alter database activate standby database;
   SQL> shutdown immediate;
   SQL> startup
10. Use NID to rename the database
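For step 6, `db_file_name_convert` and `log_file_name_convert` take a quoted, comma-separated list of old/new path pairs. The following sketch builds that list from arguments. The function `name_convert_list` is hypothetical. The clone path `/u01/app/oracle/testdb/data` is an assumed example, not taken from the slides.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn OLD NEW [OLD NEW ...] into the quoted list
# expected by db_file_name_convert / log_file_name_convert.
name_convert_list() {
  if [ $# -eq 0 ] || [ $(( $# % 2 )) -ne 0 ]; then
    echo "usage: name_convert_list OLD NEW [OLD NEW ...]" >&2
    return 1
  fi
  local out="" p
  for p in "$@"; do
    out="${out:+$out,}'$p'"   # append each path in single quotes, comma-separated
  done
  printf '%s\n' "$out"
}

# Assumed paths: standby datafiles under /u01/app/oracle/db/data, clone
# mounted under /u01/app/oracle/testdb/data on the test RAC servers.
conv=$(name_convert_list /u01/app/oracle/db/data /u01/app/oracle/testdb/data)
# The parameter is static, so it is set in the spfile for all instances.
echo "alter system set db_file_name_convert=$conv scope=spfile sid='*';"
```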

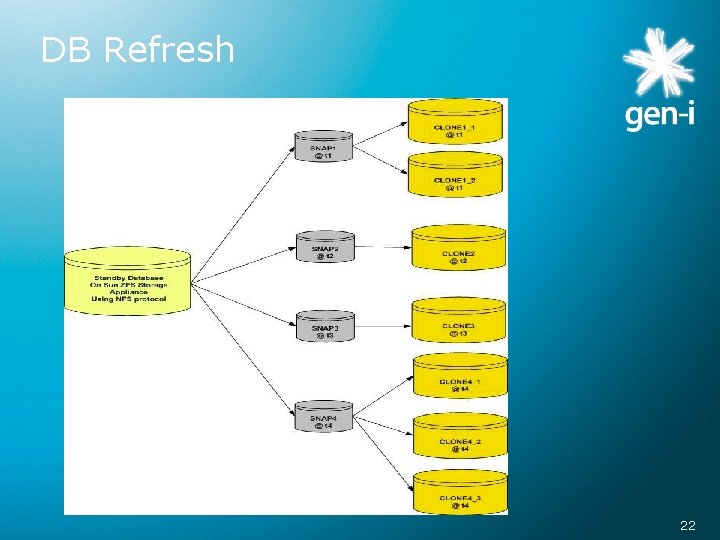

DB Refresh

Technical Issues

Issues: Exadata IPoIB protocol died
• The cause is still not fully known
• This happened when the ZFS appliance was connected to Exadata via IB cable
Workaround
• Oracle Support has confirmed that ZFS can be connected to Exadata via IB
• Increase the transmit queue length to 1000 for the IB interfaces:

    # ifconfig ib0 txqueuelen 1000
    # ifconfig ib1 txqueuelen 1000
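A value set with `ifconfig` does not survive a reboot. A periodic check can catch an IB interface that has reverted to a smaller queue. The following is a minimal sketch that reads the kernel's `/sys/class/net/<if>/tx_queue_len`. The function `check_txqueuelen` and the `SYSFS_NET` override (used only for testing) are hypothetical. The suggested fix uses `ip link set dev <if> txqueuelen <n>`, the iproute2 equivalent of the `ifconfig` command above.

```bash
#!/usr/bin/env bash
# Hypothetical check: report interfaces whose transmit queue length is below
# the value the workaround calls for. Defaults: 1000, interfaces ib0 and ib1.
# SYSFS_NET is an assumed override for testing; it defaults to /sys/class/net.
check_txqueuelen() {
  local want="${1:-1000}"
  [ $# -gt 0 ] && shift
  [ $# -gt 0 ] || set -- ib0 ib1
  local net="${SYSFS_NET:-/sys/class/net}" bad=0 ifc len
  for ifc in "$@"; do
    if [ ! -r "$net/$ifc/tx_queue_len" ]; then
      echo "$ifc: interface not found" >&2
      bad=1
      continue
    fi
    len=$(cat "$net/$ifc/tx_queue_len")
    if [ "$len" -lt "$want" ]; then
      echo "$ifc: txqueuelen $len < $want (fix: ip link set dev $ifc txqueuelen $want)"
      bad=1
    fi
  done
  return "$bad"
}
```

A cron job running `check_txqueuelen 1000 ib0 ib1` on each DB node prints nothing and returns 0 when both interfaces are set correctly.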

Issues: ZFS NAS Appliance
Memory leak on the server heads
• Cause is known to be the SNMP service
• Firmware to be patched once Oracle releases a fix
• Workaround is to disable the SNMP service
• However, HCC on ZFS needs the SNMP service to be enabled

Q&A
