Optimizing RAM-latency Dominated Applications Yandong Mao, Cody Cutler, Robert Morris MIT CSAIL

RAM-latency may dominate performance • RAM-latency dominated applications – follow long pointer chains – working set >> on-chip cache • A lot of cache misses -> stalling on RAM fetches • Example: Garbage Collector – Identifies live objects by following inter-object pointers – Spends much of its time stalled on RAM fetches while following pointers

Addressing RAM-latency bottleneck? • View RAM as we view disk • High latency • A similar set of optimization techniques – Batching – Sorting – Access I/O in parallel and asynchronously

Outline • Hardware Background • Three techniques to address RAM-latency – Linearization: Garbage Collector – Interleaving: Masstree – Parallelization: Masstree • Discussion • Conclusion

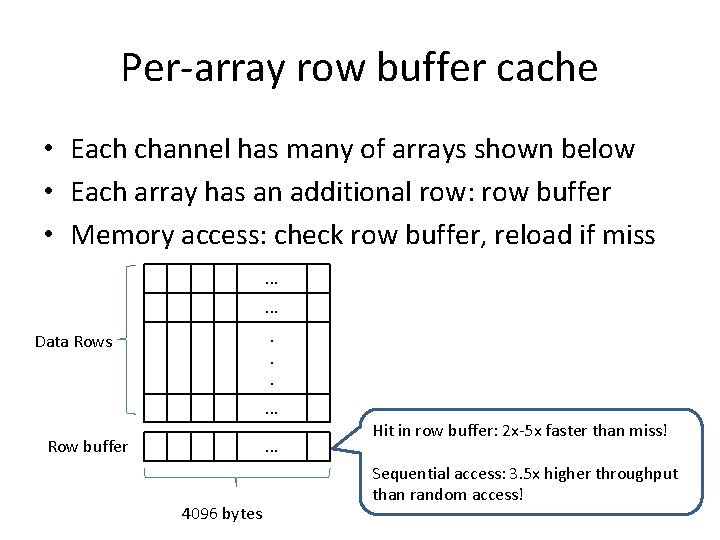

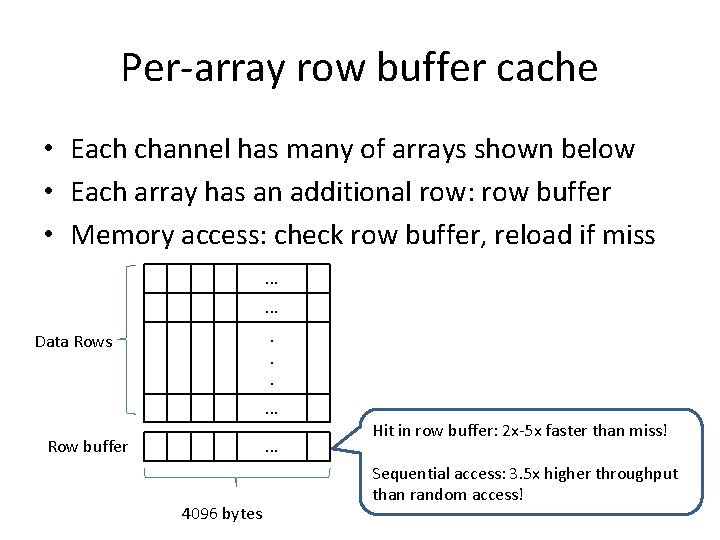

Three Relevant Hardware Features • Intel Xeon X5690 (diagram: cores 0-5, RAM controller, memory channels 0-2) 1. Fetch RAM before needed – Hardware prefetcher: sequential or strided access pattern – Software prefetch – Out-of-order execution 2. Parallel accesses to different channels 3. Row buffer cache inside memory channel

Per-array row buffer cache • Each channel has many arrays like the one shown below • Each array has an additional row: the row buffer (4096 bytes) • Memory access: check row buffer, reload if miss • Hit in row buffer: 2x-5x faster than miss! • Sequential access: 3.5x higher throughput than random access! (Diagram: an array of data rows with its row buffer)
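The check-then-reload behavior of the row buffer can be sketched with a toy model. The code below assumes a single array with one 4096-byte row buffer (the size from the slide) and counts how many accesses hit the currently open row. The function name `row_buffer_hits` and the single-array simplification are illustrative assumptions, not a model of the real X5690 memory system.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ROW_BYTES 4096u  /* row buffer size taken from the slide */

/* Count accesses that hit the open row in a toy single-array model.
 * The first access always misses because no row is open yet. */
size_t row_buffer_hits(const uint64_t *addrs, size_t n)
{
    assert(addrs != NULL || n == 0);
    size_t hits = 0;
    int have_open_row = 0;
    uint64_t open_row = 0;

    for (size_t i = 0; i < n; i++) {
        uint64_t row = addrs[i] / ROW_BYTES;
        if (have_open_row && row == open_row) {
            hits++;              /* served from the row buffer */
        } else {
            open_row = row;      /* miss: reload the row buffer */
            have_open_row = 1;
        }
    }
    return hits;
}
```

In this model a sequential sweep misses only once per row, while an access sequence that alternates between rows misses every time, which is the intuition behind the sequential versus random throughput gap on the slide.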

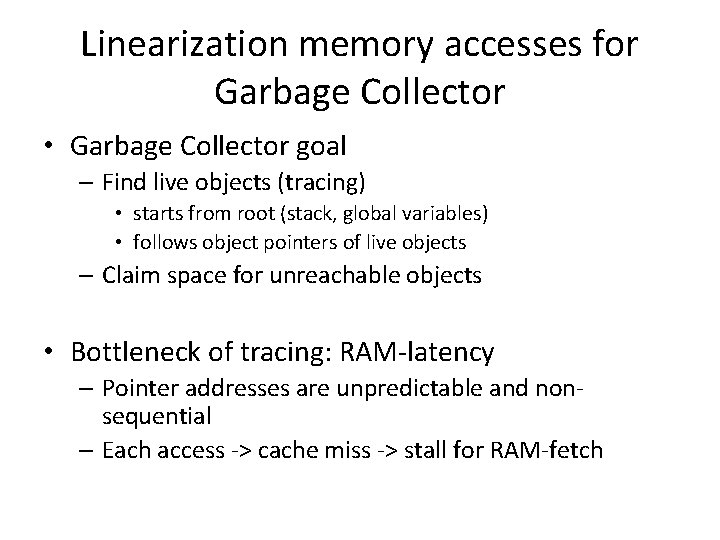

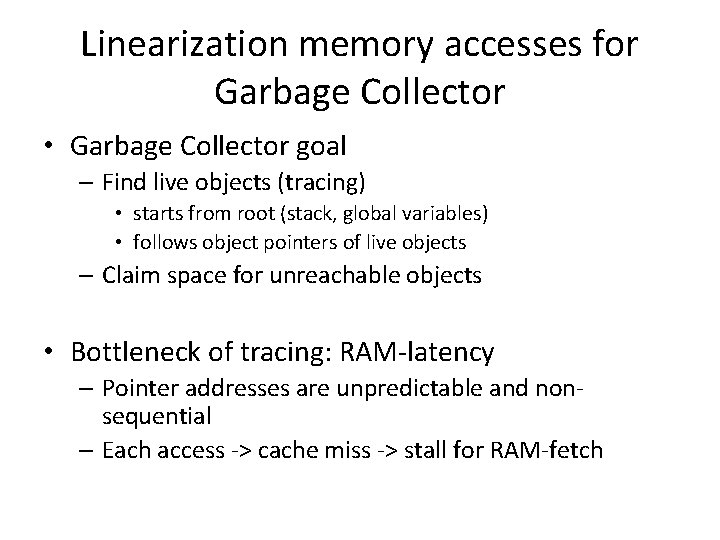

Linearizing memory accesses for Garbage Collector • Garbage Collector goal – Find live objects (tracing) • starts from roots (stack, global variables) • follows object pointers of live objects – Reclaim space of unreachable objects • Bottleneck of tracing: RAM-latency – Pointer addresses are unpredictable and non-sequential – Each access -> cache miss -> stall for RAM fetch

Observation • Arrange objects in tracing order during garbage collection – Subsequent tracing would access memory in sequential order • Take advantage of two hardware features – Hardware prefetchers: prefetch into cache – Higher row buffer hit rate

Benchmark and result • Time of tracing 1.8 GB of live data • HSQLDB 2.2.9: an RDBMS engine in Java • Compacting Collector of HotSpot JVM from OpenJDK 7u6 – Use copy collection to reorder objects in tracing order • Result: tracing in sequential order is 1.3x faster than in random order • Future work – better linearizing algorithm than the copy collection algorithm (which uses twice the memory!) – measure application-level performance improvement
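How a copy collection can leave objects in tracing order may be easier to see in code. The following is a minimal sketch, assuming a toy heap in which objects live in an array and point to each other by index, and assuming a breadth-first (Cheney-style) trace. The `Obj` layout, `NONE` marker, and `copy_in_tracing_order` are hypothetical names for illustration and are not taken from the HotSpot collector.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_CHILDREN 2
#define NONE ((size_t)-1)

typedef struct {
    int payload;
    size_t child[MAX_CHILDREN];  /* indices into the same space, or NONE */
} Obj;

/* Copy every object reachable from `root` out of `from` into `to`,
 * laying objects out in breadth-first tracing order (Cheney-style).
 * `to` and `forward` need room for n_from entries; returns the live count. */
size_t copy_in_tracing_order(const Obj *from, size_t n_from, size_t root,
                             Obj *to, size_t *forward)
{
    assert(root < n_from);
    for (size_t i = 0; i < n_from; i++)
        forward[i] = NONE;                /* nothing copied yet */

    size_t scan = 0, free_slot = 0;
    to[free_slot] = from[root];           /* copy the root first */
    forward[root] = free_slot++;

    while (scan < free_slot) {            /* to-space doubles as the queue */
        Obj *o = &to[scan++];
        for (int c = 0; c < MAX_CHILDREN; c++) {
            size_t old = o->child[c];
            if (old == NONE)
                continue;
            if (forward[old] == NONE) {   /* first visit: copy to next slot */
                to[free_slot] = from[old];
                forward[old] = free_slot++;
            }
            o->child[c] = forward[old];   /* rewrite pointer to new location */
        }
    }
    return free_slot;
}
```

After the copy, a later breadth-first trace from the root visits `to[0]`, `to[1]`, `to[2]`, ... in increasing address order. The second array is also where the "twice the memory" cost noted on the slide comes from.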

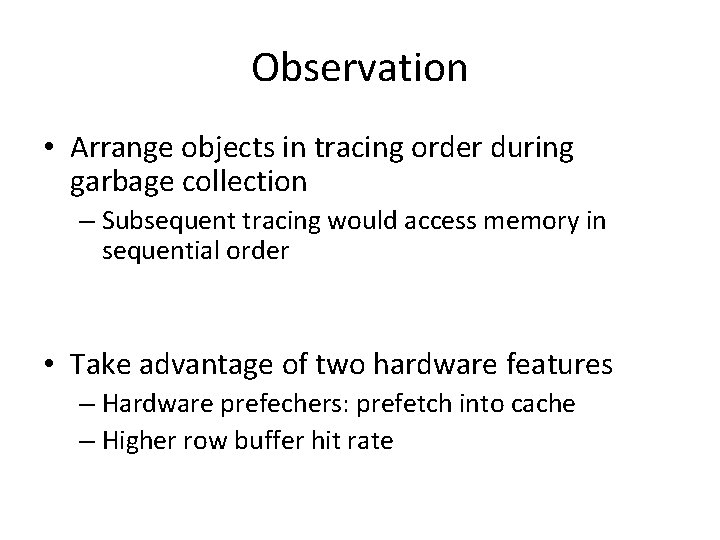

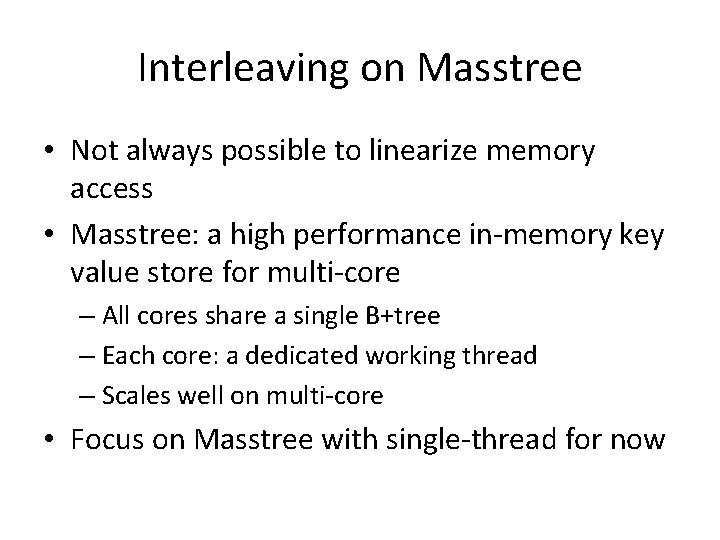

Interleaving on Masstree • Not always possible to linearize memory access • Masstree: a high-performance in-memory key-value store for multi-core – All cores share a single B+tree – Each core: a dedicated worker thread – Scales well on multi-core • Focus on single-threaded Masstree for now

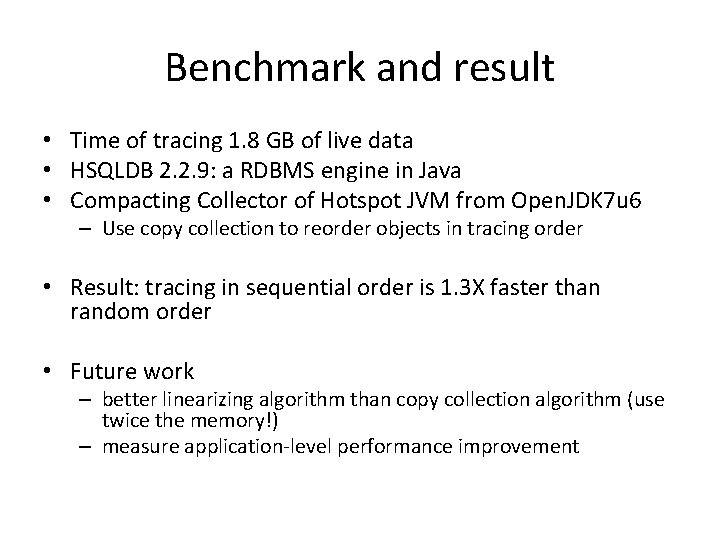

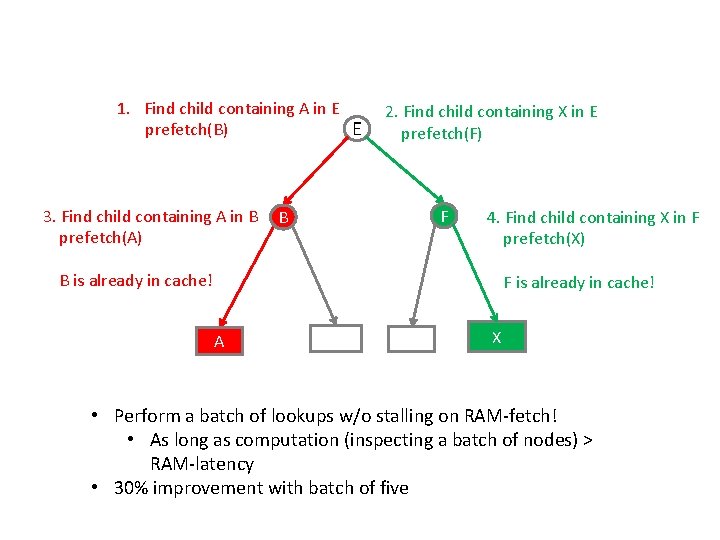

Single-threaded Masstree is RAM-latency dominated • Careful design to avoid RAM fetches – trie of B+trees, inline key fragments and children in tree nodes – Accessing one fat B+tree node in one RAM-latency • Still RAM-latency dominated! – Each key lookup follows a random path – O(N) RAM-latency (hundreds of cycles) per lookup – A million lookups per second

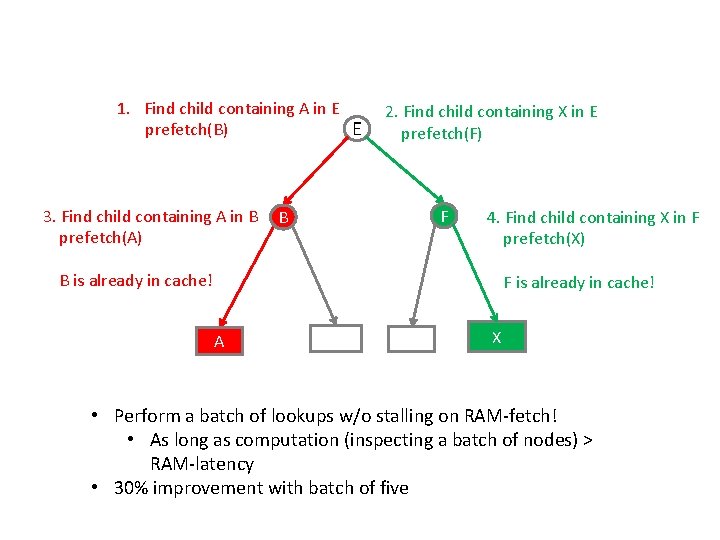

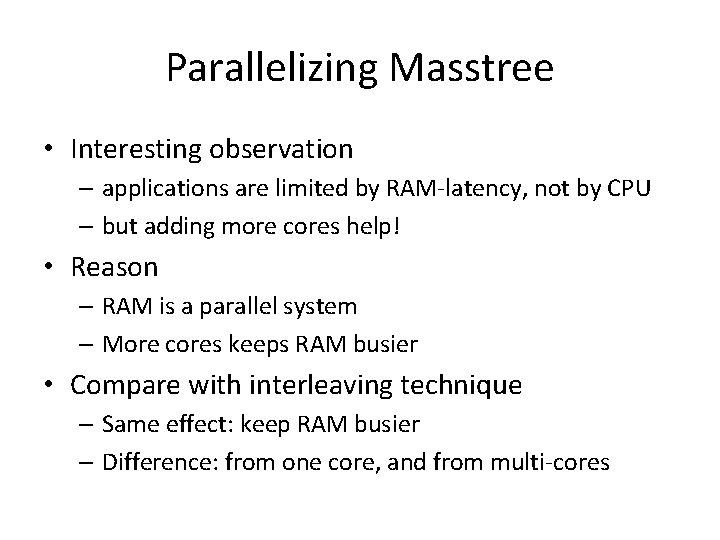

Batch and interleave tree lookups • Batch key lookups • Interleave computation and RAM fetch using software prefetch

(Diagram: tree with root E, internal nodes B and F, and leaves A, D, X) 1. Find child containing A in E, prefetch(B) 2. Find child containing X in E, prefetch(F) 3. Find child containing A in B, prefetch(A): B is already in cache! 4. Find child containing X in F, prefetch(X): F is already in cache! • Perform a batch of lookups w/o stalling on RAM fetch! • As long as computation (inspecting a batch of nodes) > RAM-latency • 30% improvement with batch of five
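The round-robin schedule in the steps above can be sketched as follows. This is an illustrative simplification, not Masstree's code: it assumes a plain binary search tree instead of a B+tree, and uses the GCC/Clang builtin `__builtin_prefetch` as the software prefetch. The names `Node` and `batch_lookup` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

typedef struct Node {
    int key;
    int value;
    struct Node *left, *right;
} Node;

/* Look up `n` keys together. Each round advances every unfinished lookup by
 * one node and prefetches the node it will visit next, so the fetch overlaps
 * with the comparisons done for the other lookups in the batch.
 * out[i] is the matching node, or NULL if keys[i] is absent.
 * cur[] is caller-provided scratch space of n entries. */
void batch_lookup(const Node *root, const int *keys, size_t n,
                  const Node **cur, const Node **out)
{
    assert(keys != NULL && cur != NULL && out != NULL);
    size_t active = n;
    for (size_t i = 0; i < n; i++) {
        cur[i] = root;
        out[i] = NULL;
    }
    while (active > 0) {
        active = 0;
        for (size_t i = 0; i < n; i++) {
            const Node *node = cur[i];
            if (node == NULL)
                continue;                   /* this lookup has finished */
            if (keys[i] == node->key) {
                out[i] = node;
                cur[i] = NULL;
                continue;
            }
            const Node *next = keys[i] < node->key ? node->left : node->right;
            if (next != NULL) {
                __builtin_prefetch(next);   /* start the fetch, do not wait */
                active++;
            }
            cur[i] = next;
        }
    }
}
```

Whether this hides the latency depends on the condition stated on the slide: the work done for the rest of the batch between prefetching a node and touching it has to take at least as long as the RAM fetch.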

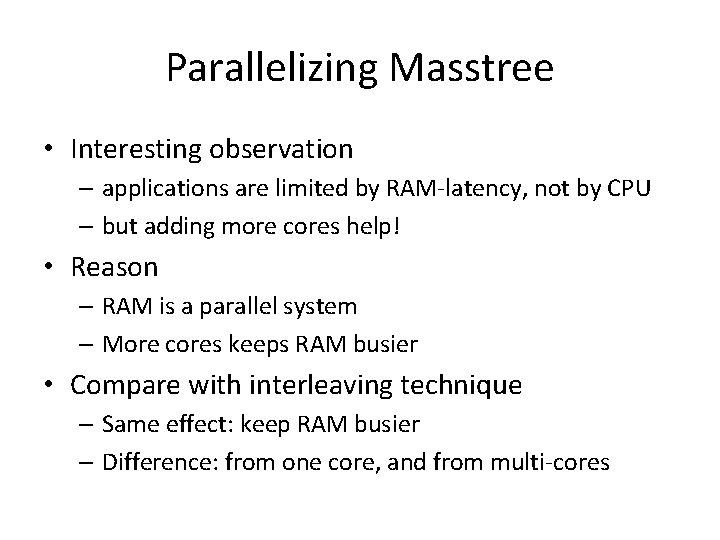

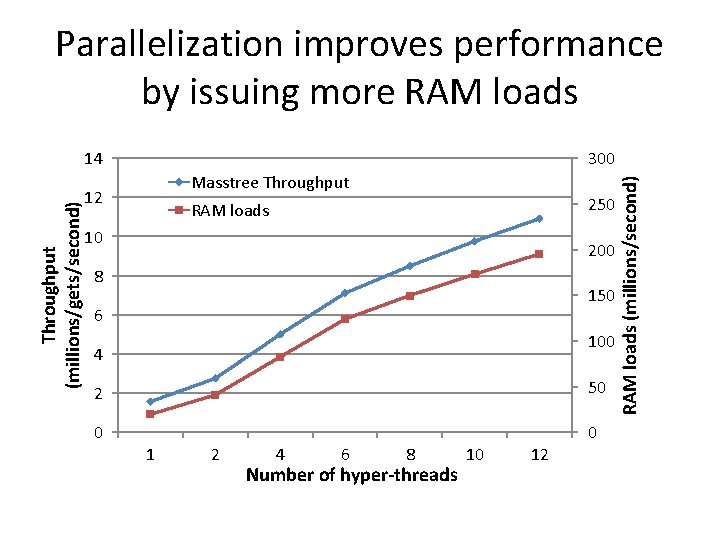

Parallelizing Masstree • Interesting observation – applications are limited by RAM-latency, not by CPU – but adding more cores helps! • Reason – RAM is a parallel system – More cores keep RAM busier • Compare with interleaving technique – Same effect: keep RAM busier – Difference: interleaving issues loads from one core, parallelization from multiple cores

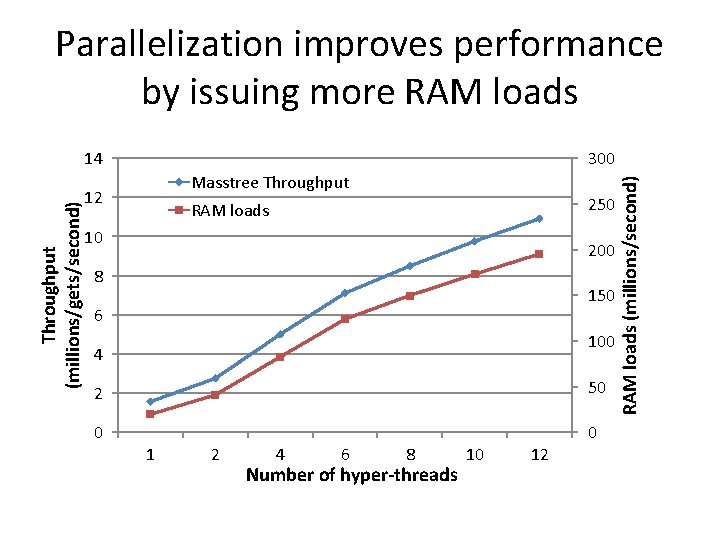

Parallelization improves performance by issuing more RAM loads (Chart: Masstree throughput in millions of gets/second and RAM loads in millions/second, versus number of hyper-threads from 1 to 12)

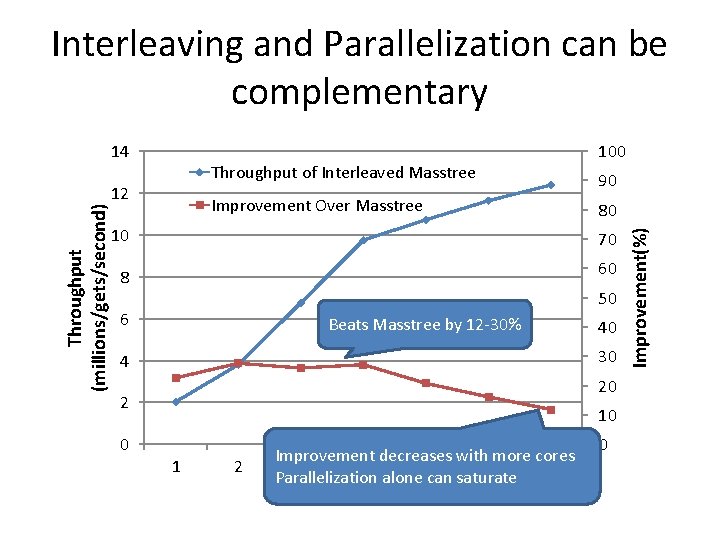

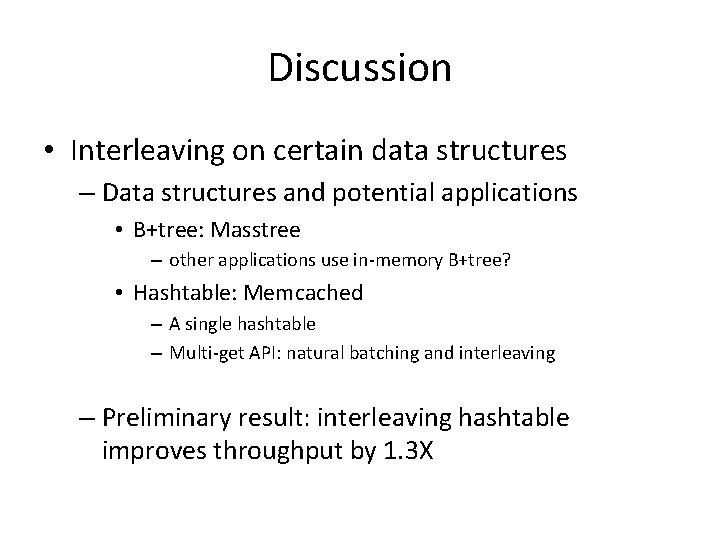

Interleaving and Parallelization can be complementary • Beats Masstree by 12-30% • Improvement decreases with more cores • Parallelization alone can saturate (Chart: throughput of interleaved Masstree in millions of gets/second, and improvement over Masstree in %, versus number of hyper-threads from 1 to 12)

Discussion • Applicability – Lessons • Interleaving seems more general than linearization – applied to Garbage Collector? • Interleaving is more difficult than parallelization – requires batching and concurrency control – Challenges in automatic interleaving • Need to identify and resolve conflicting access • Difficult or impossible without programmers’ help

Discussion • Interleaving on certain data structures – Data structures and potential applications • B+tree: Masstree – other applications use in-memory B+tree? • Hashtable: Memcached – A single hashtable – Multi-get API: natural batching and interleaving – Preliminary result: interleaving hashtable improves throughput by 1.3x
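A multi-get lends itself to the same batching idea, which can be sketched as a two-pass lookup. The code below is an assumption-laden illustration, not Memcached's implementation: it uses a small open-addressing table with linear probing, treats key 0 as "empty", and relies on the GCC/Clang builtin `__builtin_prefetch`. `Slot`, `slot_of`, `table_put`, and `table_multi_get` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NSLOTS 1024u              /* power of two; illustrative size */

typedef struct {
    uint64_t key;                 /* 0 marks an empty slot in this sketch */
    uint64_t value;
} Slot;

static size_t slot_of(uint64_t key)
{
    return (size_t)((key * 0x9E3779B97F4A7C15ull) >> 32) & (NSLOTS - 1);
}

/* Insert with linear probing; assumes key != 0 and the table is not full. */
void table_put(Slot *table, uint64_t key, uint64_t value)
{
    assert(key != 0);
    size_t i = slot_of(key);
    while (table[i].key != 0 && table[i].key != key)
        i = (i + 1) & (NSLOTS - 1);
    table[i].key = key;
    table[i].value = value;
}

/* Multi-get in two passes: first hash every key and prefetch its home slot,
 * then probe. By the time pass two touches a slot, its fetch has had the
 * rest of pass one to complete. found[i] is 1 if keys[i] was present.
 * home[] is caller-provided scratch space of n entries. */
void table_multi_get(const Slot *table, const uint64_t *keys, size_t n,
                     size_t *home, uint64_t *values, int *found)
{
    for (size_t i = 0; i < n; i++) {
        home[i] = slot_of(keys[i]);
        __builtin_prefetch(&table[home[i]]);
    }
    for (size_t i = 0; i < n; i++) {
        size_t s = home[i];
        found[i] = 0;
        while (table[s].key != 0) {
            if (table[s].key == keys[i]) {
                values[i] = table[s].value;
                found[i] = 1;
                break;
            }
            s = (s + 1) & (NSLOTS - 1);
        }
    }
}
```

Unlike the tree case, each lookup here usually needs only one dependent fetch, so a single prefetch pass over the whole batch is enough to overlap the misses.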

Discussion • Profiling tools – Linux perf • Look at most expensive function • Manually inspect – May be misleading • computation limited or RAM-latency limited? – A tool based on RAM stalls?

Related Work • PALM [Jason 11]: B+tree with same interleaving technique • RAM parallelization at different levels: Regularities considered harmful [Park 13]

Conclusion • Identifies a class of applications: dominated by RAM-latency • Three techniques to address RAM-latency bottleneck of two applications • Improve your program similarly?

Questions?

Single-threaded Masstree is RAM-latency dominated • Trie: a tree where each level is indexed by a fixed-length key fragment (Diagram: a B+tree indexed by k[0:7], whose entries lead to B+trees indexed by k[8:15], and so on)
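One way to obtain the fixed-length fragment for a given trie level is sketched below. It assumes 8-byte fragments (matching k[0:7] and k[8:15] on the slide), packs them big-endian so that integer comparison agrees with byte-wise comparison, and pads short fragments with zero bytes. The helper name `key_fragment` and the padding choice are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Return the 8-byte fragment of `key` used at trie layer `layer`
 * (layer 0 -> k[0:7], layer 1 -> k[8:15], ...), packed big-endian so that
 * comparing the integers orders fragments the same way as comparing bytes.
 * Fragments shorter than 8 bytes are padded with zero bytes. */
uint64_t key_fragment(const unsigned char *key, size_t len, size_t layer)
{
    assert(key != NULL || len == 0);
    uint64_t frag = 0;
    size_t start = layer * 8;
    for (size_t i = 0; i < 8; i++) {
        unsigned char byte = (start + i < len) ? key[start + i] : 0;
        frag = (frag << 8) | byte;
    }
    return frag;
}
```

Because zero padding makes a short key indistinguishable from the same key followed by zero bytes, an index built this way would also need to record the fragment length alongside the integer.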