Optimizing File Systems for Fast Storage Devices 2015

Published in the 8th ACM International Systems and Storage Conference (2015).

Outline • Introduction • Background and Motivation • System design • Evaluation • Conclusion

Introduction (1/3) • The large performance gap between main memory and storage devices has become a major research topic. • The traditional Linux I/O stack cannot keep pace with new fast storage devices, because it was developed for hard disks over decades.

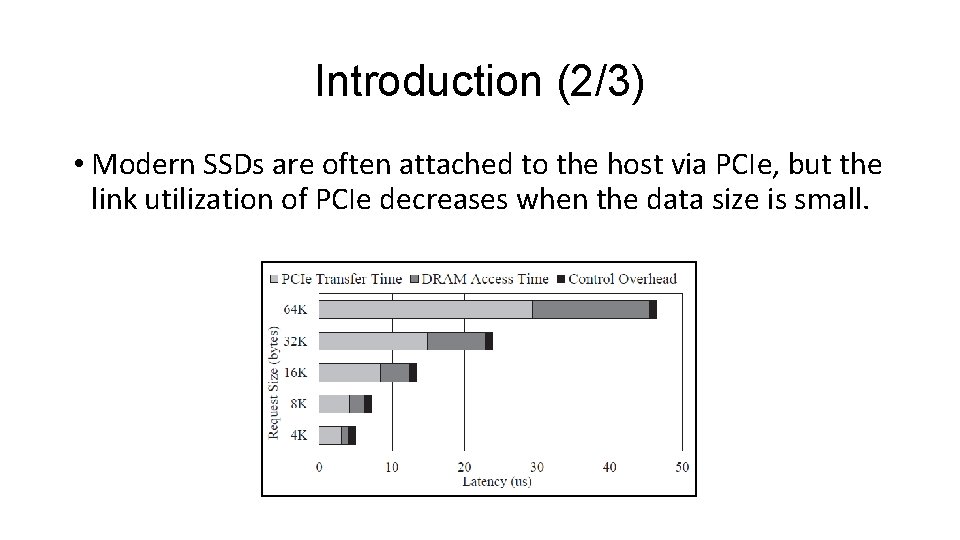

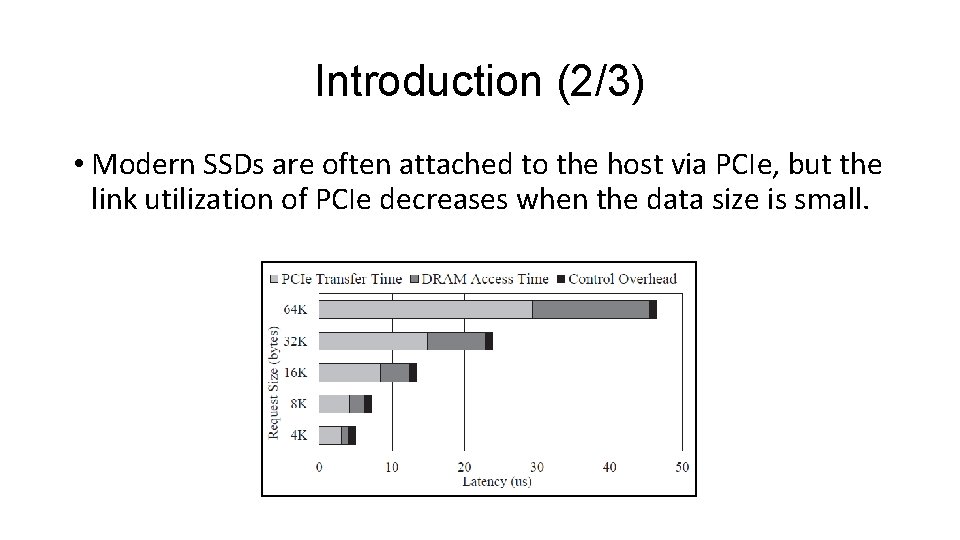

Introduction (2/3) • Modern SSDs are often attached to the host via PCIe, but PCIe link utilization drops when the transfer size is small.
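A common back-of-the-envelope model makes the small-transfer penalty concrete: if every request pays a fixed per-request overhead on top of its transfer time, effective bandwidth is `size / (overhead + size / peak)`. The sketch below assumes hypothetical values (10 µs of overhead per request, 2 GB/s peak bandwidth); they are illustrative only and are not measurements from this work.

```python
# Illustrative model only: both constants are hypothetical values,
# not numbers reported for the paper's hardware.
PER_REQUEST_OVERHEAD_S = 10e-6   # assumed fixed cost per request (10 us)
PEAK_BANDWIDTH_BPS = 2e9         # assumed peak link bandwidth (2 GB/s)


def effective_bandwidth(request_bytes: int,
                        overhead_s: float = PER_REQUEST_OVERHEAD_S,
                        peak_bps: float = PEAK_BANDWIDTH_BPS) -> float:
    """Bytes per second delivered when each request pays a fixed overhead."""
    transfer_s = request_bytes / peak_bps
    return request_bytes / (overhead_s + transfer_s)


def utilization(request_bytes: int) -> float:
    """Fraction of the assumed peak bandwidth actually delivered."""
    return effective_bandwidth(request_bytes) / PEAK_BANDWIDTH_BPS


if __name__ == "__main__":
    for size in (4 * 1024, 64 * 1024, 1024 * 1024):
        print(f"{size // 1024:5d} KB -> {utilization(size):6.1%} of peak")
```

Under this model, utilization rises toward 100% only as the request grows large enough to amortize the fixed overhead, which is why issuing fewer, larger requests matters.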

Introduction (3/3) • In this study, we focus on buffered I/O in file systems and analyze internal operations such as prefetching (read-ahead) and write-back. • Our key idea is to transfer data from discontiguous host memory buffers to discontiguous storage segments in a single I/O request.

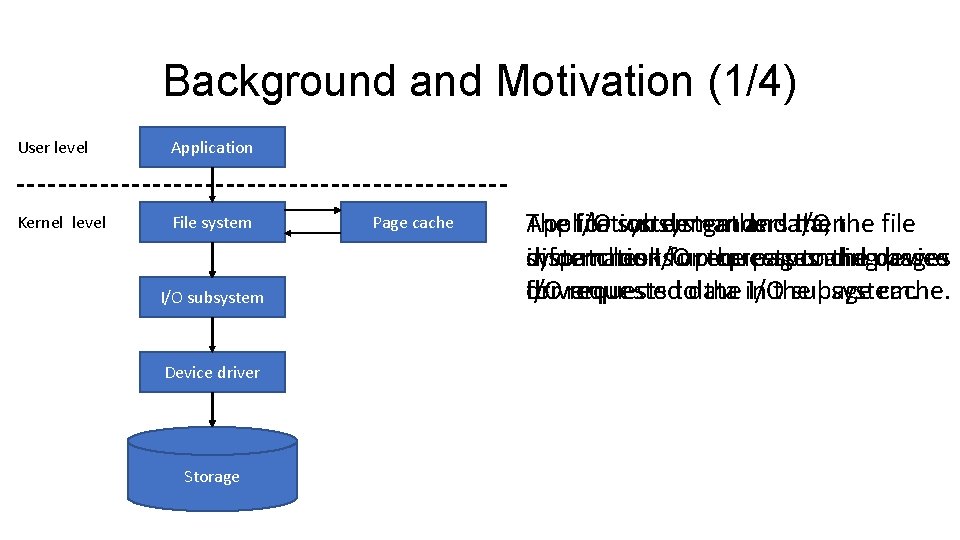

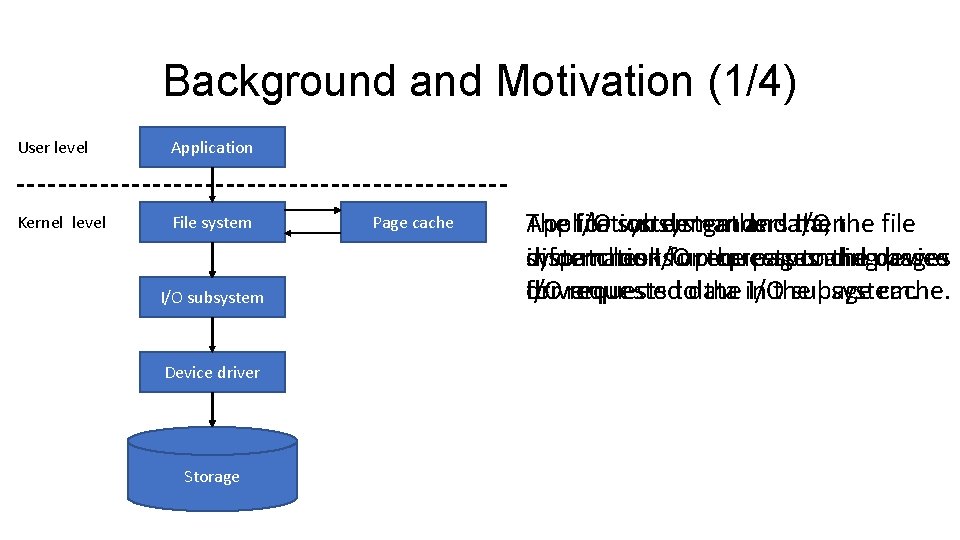

Background and Motivation (1/4) [Figure: The Linux I/O stack. User level: application. Kernel level: file system, page cache, I/O subsystem, device driver. Below: storage.] • The application requests data, and the file system looks up the requested data in the page cache. • The file system gathers I/O information for the corresponding pages and passes the pages to the I/O subsystem as I/O requests. • The I/O subsystem dispatches the I/O requests to the device driver.
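The lookup-then-fetch flow on this slide can be sketched as a toy model. `PageCache`, `buffered_read`, and the `read_from_storage` callback are hypothetical names; the callback stands in for the I/O subsystem and device driver, and nothing here mirrors actual kernel code.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical stand-in for the I/O subsystem + device driver:
# it receives the missing page indices and returns their contents.
StorageReader = Callable[[List[int]], Dict[int, bytes]]


@dataclass
class PageCache:
    # file page index -> cached page contents
    pages: Dict[int, bytes] = field(default_factory=dict)


def buffered_read(cache: PageCache, wanted: List[int],
                  read_from_storage: StorageReader) -> List[bytes]:
    """Toy buffered read: consult the page cache, fetch only the misses."""
    # File system: look up each requested page in the page cache.
    misses = [i for i in wanted if i not in cache.pages]
    # File system -> lower layers: pass the missing pages down as I/O.
    if misses:
        cache.pages.update(read_from_storage(misses))
    # Every requested page is now cached; return the data to the application.
    return [cache.pages[i] for i in wanted]
```

A second read of the same pages is served entirely from the cache, so the storage callback is not invoked again.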

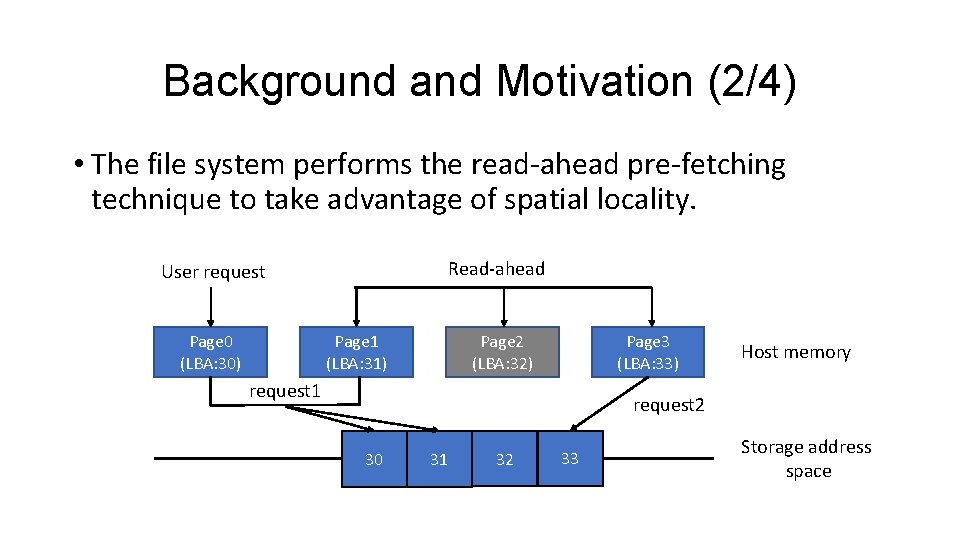

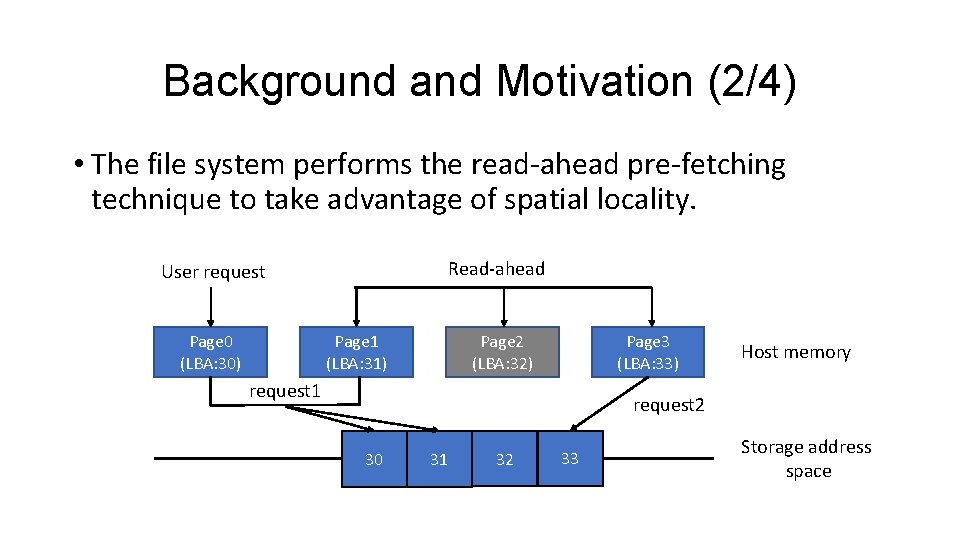

Background and Motivation (2/4) • The file system performs read-ahead (prefetching) to exploit spatial locality. [Figure: Read-ahead in the existing file system. For a user request, pages 0 to 3 (LBAs 30 to 33) are read from contiguous storage addresses 30 to 33 into host memory using two separate requests (request 1 and request 2).]

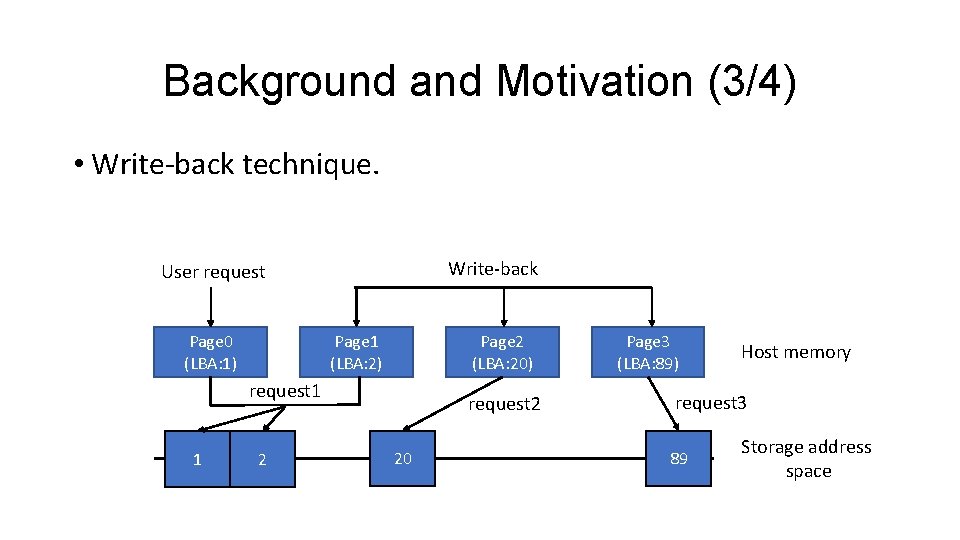

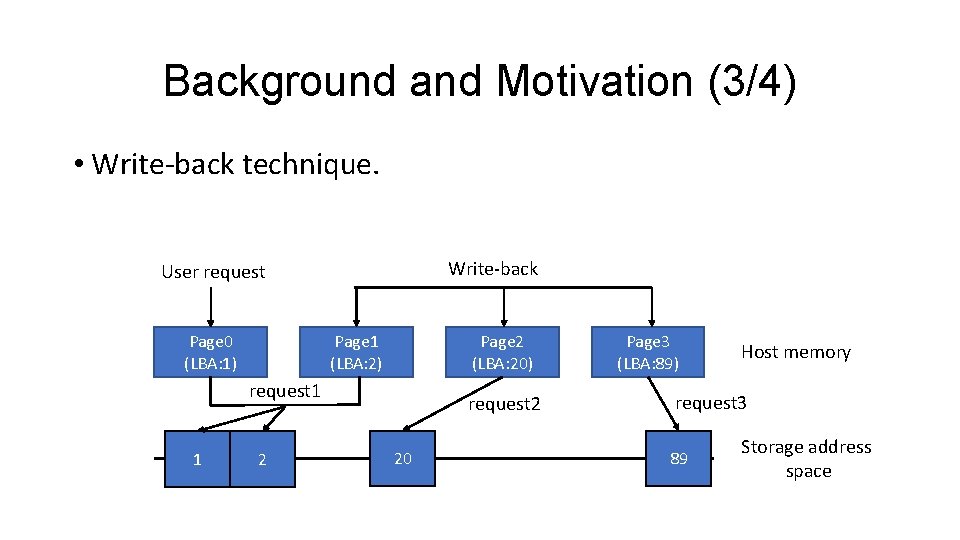

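Background and Motivation (3/4) • The file system uses write-back: dirty pages are kept in the page cache and flushed to storage later. [Figure: Write-back in the existing file system. Pages 0 to 3 in host memory map to LBAs 1, 2, 20, and 89 and are written with three requests: request 1 (LBAs 1 and 2), request 2 (LBA 20), and request 3 (LBA 89).]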
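The write-back split in the figure can be reproduced with a simplified model, assuming a request may cover only one contiguous LBA range (in Linux, a `struct bio` starts at a single sector, so its data is laid out contiguously on the device). The function name is hypothetical, and pages are visited in page order.

```python
from typing import List


def split_by_contiguity(lbas: List[int]) -> List[List[int]]:
    """Group pages, visited in page order, into requests that each cover
    one contiguous LBA range; any gap in the LBAs starts a new request."""
    requests: List[List[int]] = []
    for lba in lbas:
        if requests and lba == requests[-1][-1] + 1:
            requests[-1].append(lba)   # extends the current contiguous run
        else:
            requests.append([lba])     # first page or a gap: new request
    return requests


if __name__ == "__main__":
    # LBAs of pages 0-3 in the write-back figure
    print(split_by_contiguity([1, 2, 20, 89]))  # [[1, 2], [20], [89]]
```

Four pages become three requests purely because of the gaps between LBAs 2, 20, and 89.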

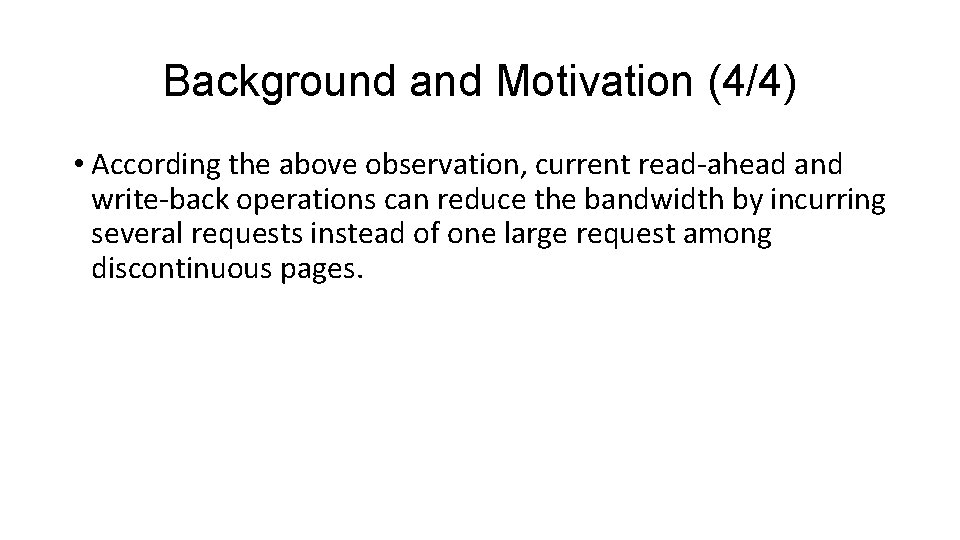

Background and Motivation (4/4) • As these examples show, the current read-ahead and write-back operations issue several small requests instead of one large request when pages are discontiguous, which reduces bandwidth.
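The bandwidth loss from splitting can be estimated for the four-page write-back example. This is a sketch under stated assumptions: 4 KB pages, a hypothetical fixed overhead of 10 µs per request, a hypothetical 2 GB/s peak bandwidth, and a merged request costing no more overhead than a single contiguous one. None of these values come from the paper.

```python
# Hypothetical parameters, chosen only for illustration.
PAGE_BYTES = 4096
PER_REQUEST_OVERHEAD_S = 10e-6   # assumed fixed cost per request
PEAK_BANDWIDTH_BPS = 2e9         # assumed peak device bandwidth


def batch_time(num_pages: int, num_requests: int) -> float:
    """Seconds to move num_pages split across num_requests requests,
    assuming every request pays the same fixed overhead."""
    transfer_s = num_pages * PAGE_BYTES / PEAK_BANDWIDTH_BPS
    return num_requests * PER_REQUEST_OVERHEAD_S + transfer_s


def batch_bandwidth(num_pages: int, num_requests: int) -> float:
    """Effective bytes per second for the whole batch."""
    return num_pages * PAGE_BYTES / batch_time(num_pages, num_requests)


if __name__ == "__main__":
    # Write-back example: 4 pages issued as 3 requests vs. 1 request.
    split = batch_bandwidth(4, 3)
    merged = batch_bandwidth(4, 1)
    print(f"3 requests: {split / 1e9:.2f} GB/s, 1 request: {merged / 1e9:.2f} GB/s")
```

The transfer time is identical in both cases; only the number of per-request overheads changes, so the gap widens as transfers get smaller relative to the overhead.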

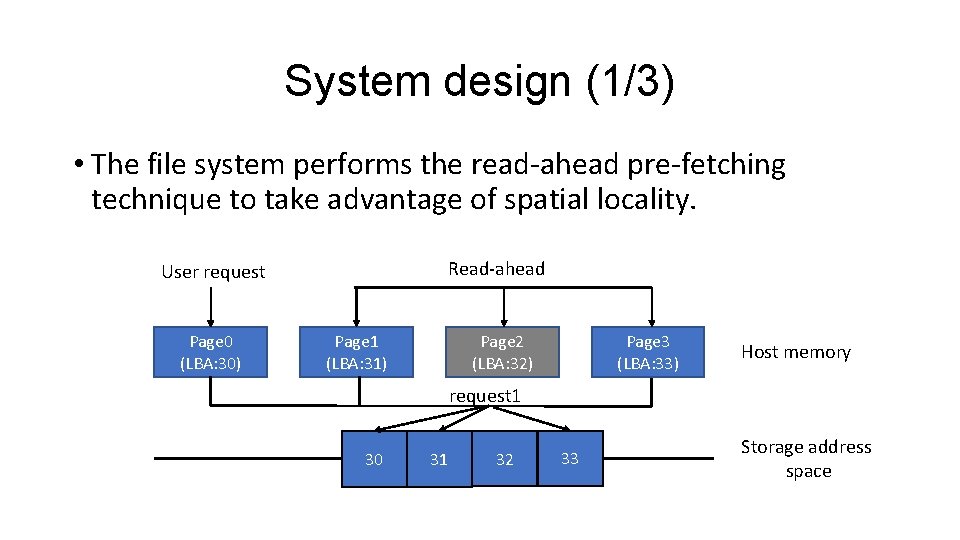

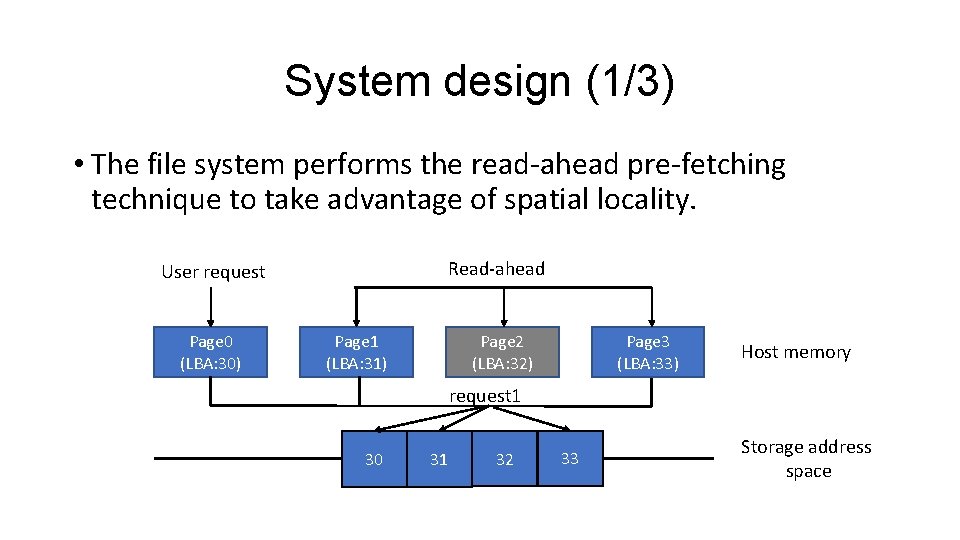

System design (1/3) • Proposed read-ahead: the file system still prefetches pages to exploit spatial locality, but fetches them all in one request. [Figure: Proposed read-ahead. Pages 0 to 3 (LBAs 30 to 33) in host memory are read from storage addresses 30 to 33 with a single request (request 1).]

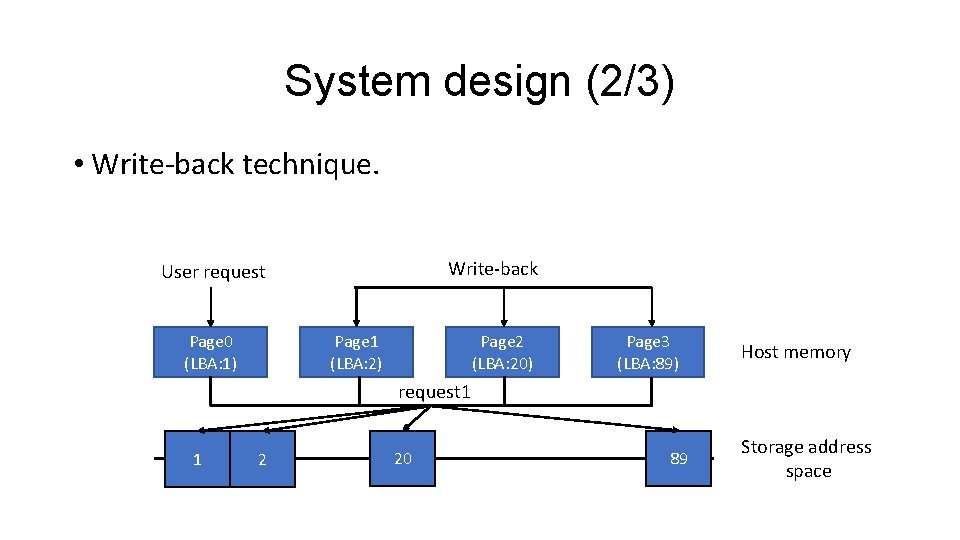

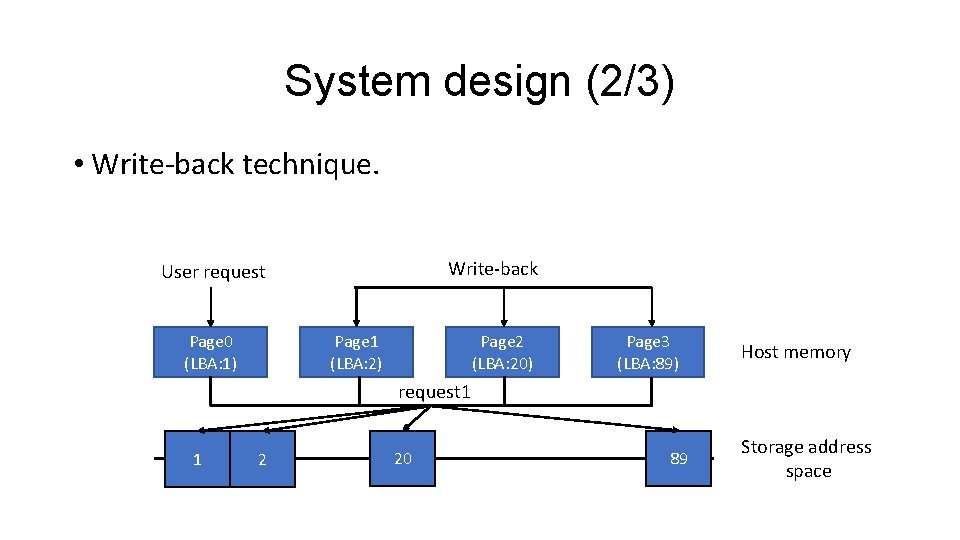

System design (2/3) • Proposed write-back: the pages are written in one request even though their LBAs are discontiguous. [Figure: Proposed write-back. Pages 0 to 3 in host memory map to LBAs 1, 2, 20, and 89, and all four are written with a single request (request 1).]

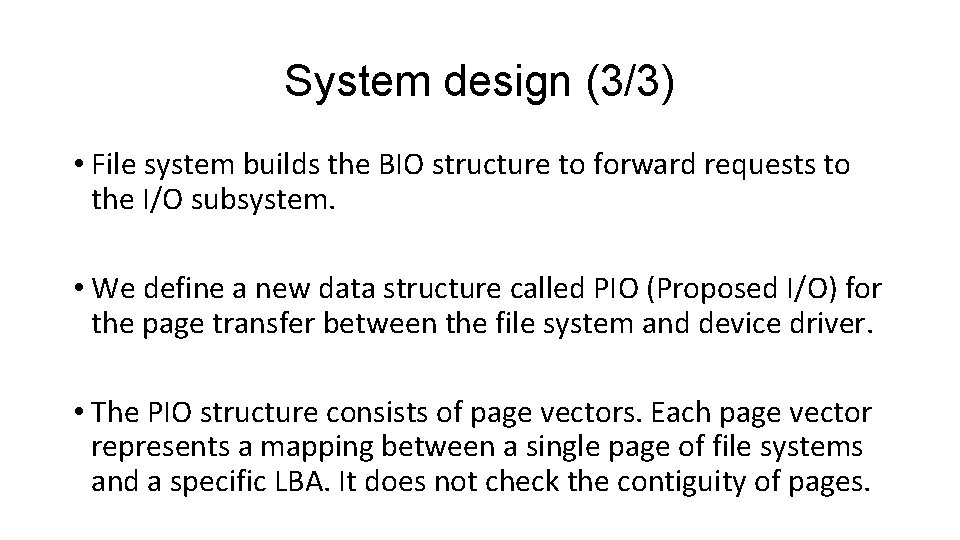

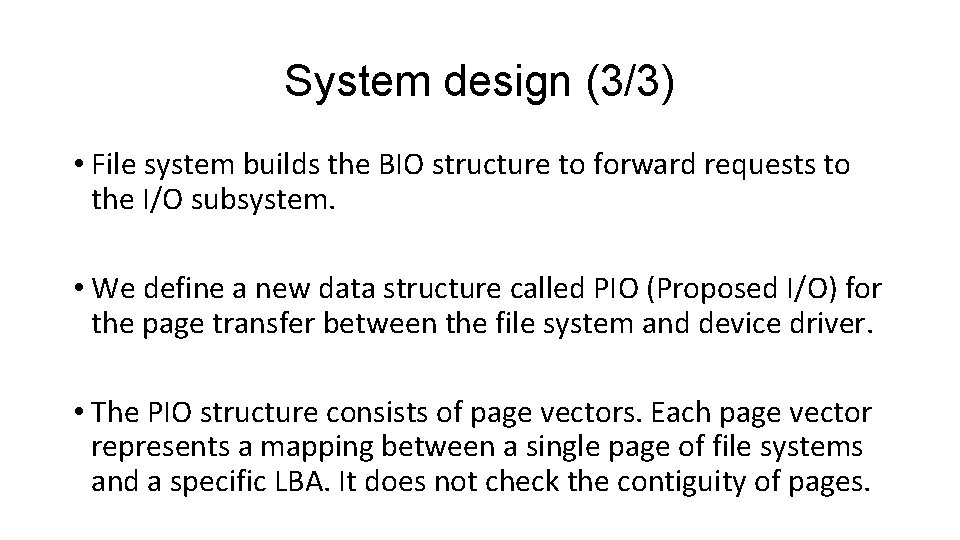

System design (3/3) • The existing file system builds a BIO structure to forward requests to the I/O subsystem. • We define a new data structure called PIO (Proposed I/O) for transferring pages between the file system and the device driver. • A PIO consists of page vectors, each of which maps a single file-system page to a specific LBA. The PIO does not check whether the pages are contiguous.
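A rough Python model of the PIO idea as described on this slide: a request is a list of page vectors, each pairing one page with its own LBA, and adding a page never checks contiguity. For contrast, `ContiguousRequest` models the BIO-style constraint (one starting LBA, pages laid out back to back). All class and function names are hypothetical, LBAs are counted in page-sized blocks as in the figures, and the paper's actual kernel structure and fields are not shown here.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass(frozen=True)
class PageVector:
    """Maps one file-system page to one target LBA (page-sized blocks)."""
    page: bytes
    lba: int


@dataclass
class PIO:
    """Hypothetical PIO: one request made of independent page vectors."""
    vecs: List[PageVector] = field(default_factory=list)

    def add_page(self, page: bytes, lba: int) -> None:
        # No contiguity check: each page carries its own LBA.
        self.vecs.append(PageVector(page, lba))


@dataclass
class ContiguousRequest:
    """BIO-like request for contrast: one start LBA, pages back to back."""
    start_lba: int
    pages: List[bytes] = field(default_factory=list)

    def try_add(self, page: bytes, lba: int) -> bool:
        # Accept the page only if it lands right after the current end.
        if lba != self.start_lba + len(self.pages):
            return False
        self.pages.append(page)
        return True


def build_contiguous(pages: List[Tuple[bytes, int]]) -> List[ContiguousRequest]:
    """Conventional path: start a new request whenever contiguity breaks."""
    reqs: List[ContiguousRequest] = []
    for page, lba in pages:
        if not reqs or not reqs[-1].try_add(page, lba):
            reqs.append(ContiguousRequest(lba, [page]))
    return reqs


def build_pio(pages: List[Tuple[bytes, int]]) -> PIO:
    """Proposed path: every page goes into one PIO regardless of its LBA."""
    pio = PIO()
    for page, lba in pages:
        pio.add_page(page, lba)
    return pio


if __name__ == "__main__":
    dirty = [(b"p0", 1), (b"p1", 2), (b"p2", 20), (b"p3", 89)]
    print(len(build_contiguous(dirty)), "conventional requests")
    print(len(build_pio(dirty).vecs), "page vectors in one PIO")
```

For the write-back figure's pages, the conventional path yields three requests while the PIO carries all four pages in one; the device driver would then be responsible for handling the per-page LBAs.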

Evaluation (1/5) • Intel Xeon E5630 2.53 GHz quad-core processor • 8 GB memory • Linux 3.14.3 • 512 GB battery-backed DRAM-SSD • FIO and TPC-C benchmarks

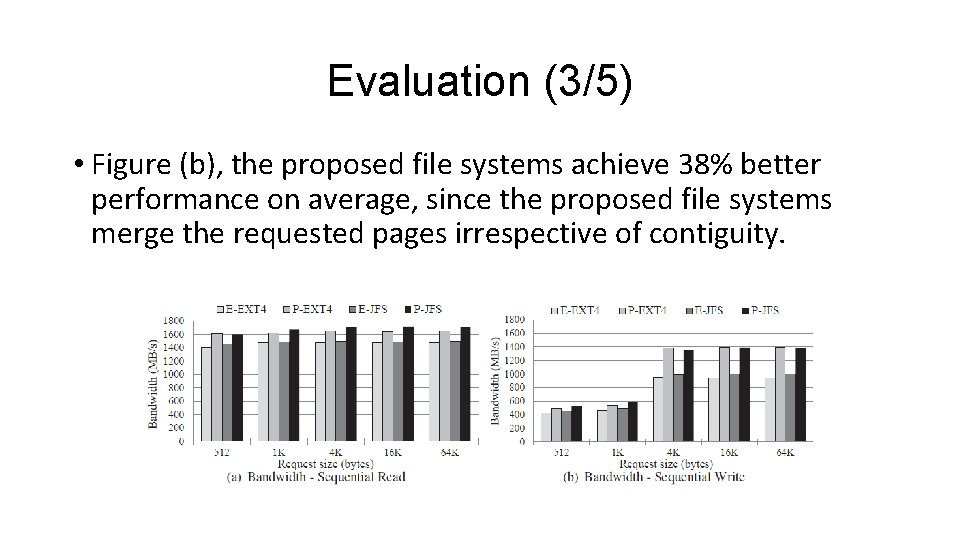

Evaluation (2/5) • We ran the FIO benchmark with buffered I/O at multiple request sizes using 8 threads (each thread creates a 4 GB file) and measured bandwidth. • We ran the TPC-C benchmark on InnoDB with a 4 KB page size, a read:write ratio of 1.9:1, and a random access pattern.

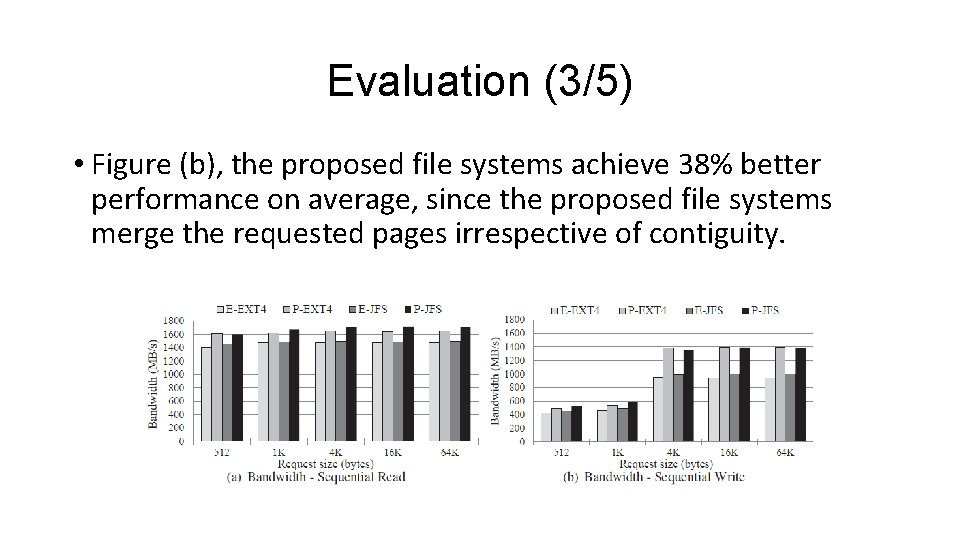

Evaluation (3/5) • In Figure (b), the proposed file systems achieve 38% better performance on average, because they merge the requested pages into one request regardless of contiguity.

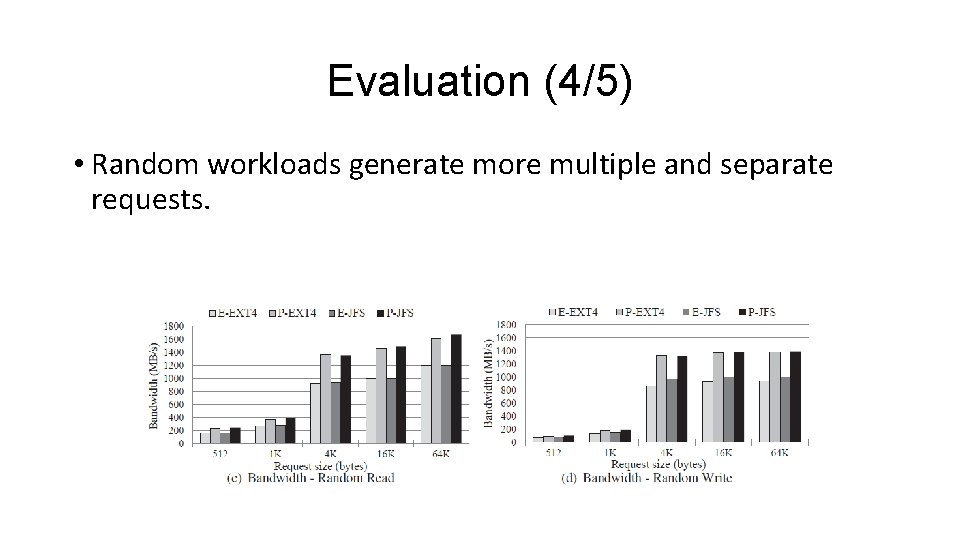

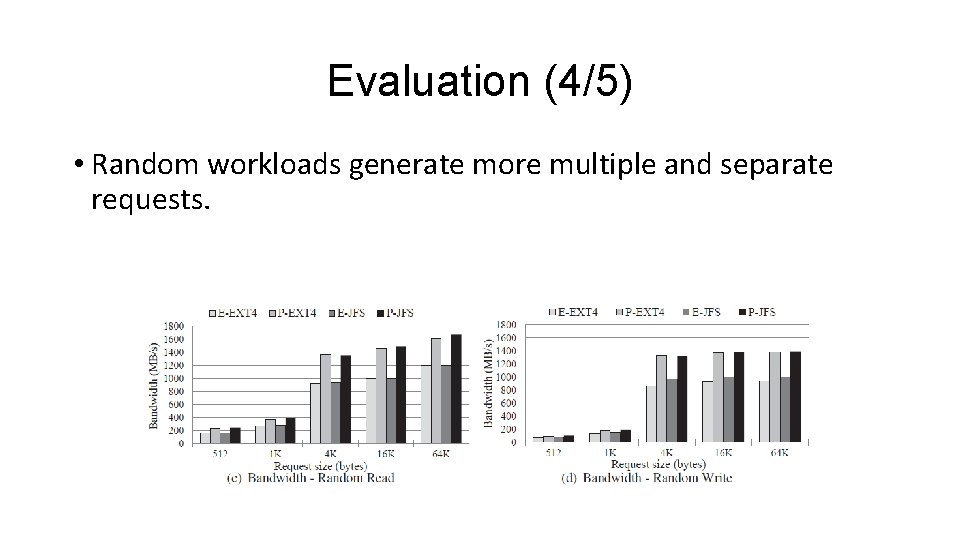

Evaluation (4/5) • Random workloads generate more separate requests than sequential workloads.
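A small simulation illustrates why random workloads produce more separate requests: it counts how many contiguous-LBA requests a conventional path would need for a sequential set of pages versus a random one. Even with the random LBAs sorted (a best case for merging by contiguity), almost none are adjacent. The patterns, sizes, and function names are hypothetical and do not reproduce the paper's workloads.

```python
import random
from typing import List, Optional


def count_requests(lbas: List[int]) -> int:
    """Requests needed if each request must cover one contiguous LBA run."""
    runs = 0
    prev: Optional[int] = None
    for lba in lbas:
        if prev is None or lba != prev + 1:
            runs += 1          # a gap (or the first page) opens a new request
        prev = lba
    return runs


def sequential_pattern(n: int, start: int = 0) -> List[int]:
    """n consecutive page LBAs."""
    return list(range(start, start + n))


def random_pattern(n: int, space: int, seed: int = 0) -> List[int]:
    """n distinct page LBAs drawn uniformly from [0, space), sorted."""
    rng = random.Random(seed)
    return sorted(rng.sample(range(space), n))


if __name__ == "__main__":
    n = 256
    print("sequential:", count_requests(sequential_pattern(n)))
    print("random:    ", count_requests(random_pattern(n, space=1_000_000)))
```

A merging scheme that ignores contiguity, as the PIO does, would issue one request in both cases, so its advantage grows with the randomness of the access pattern.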

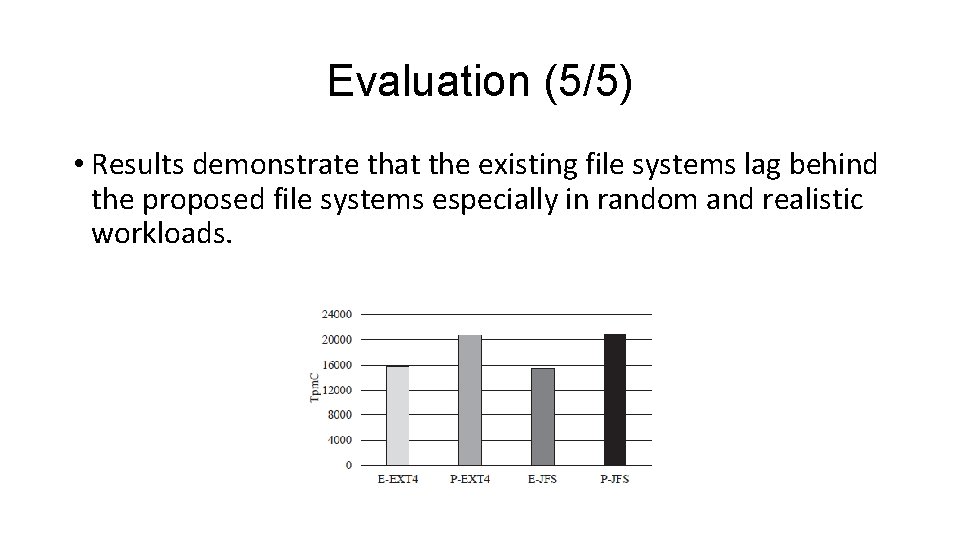

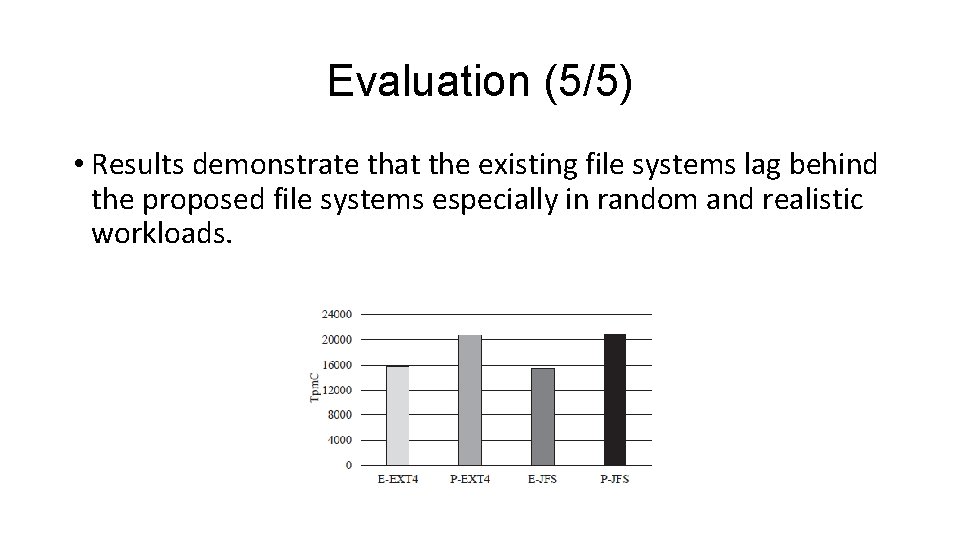

Evaluation (5/5) • The results show that the existing file systems lag behind the proposed file systems, especially in random and realistic workloads.

Conclusion • We proposed enhanced I/O strategies that merge multiple requests into one large request regardless of page contiguity. • The proposed technique is especially beneficial for random workloads.