Optimizing a 2D Discontinuous Galerkin dynamical core

- Slides: 24

Optimizing a 2D Discontinuous Galerkin dynamical core for both CPU and GPU execution

Pranay Reddy Kommera*, Dr. Ram Nair**, Dr. Richard Loft**, Raghu Raj Prasanna Kumar**

*Department of Electrical and Computer Engineering, University of Wyoming
**Computational and Information Systems Lab, National Center for Atmospheric Research

Outline

1. Introduction
2. Overview of the Code
3. Methodology
4. Results
5. Conclusion & Future Work

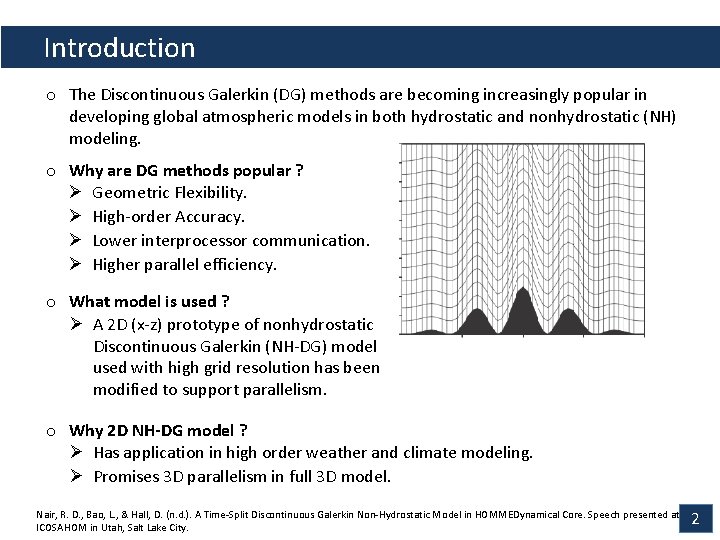

Introduction

- Discontinuous Galerkin (DG) methods are becoming increasingly popular for developing global atmospheric models, both hydrostatic and nonhydrostatic (NH).
- Why are DG methods popular?
  - Geometric flexibility.
  - High-order accuracy.
  - Lower interprocessor communication.
  - Higher parallel efficiency.
- What model is used?
  - A 2D (x-z) prototype of the nonhydrostatic Discontinuous Galerkin (NH-DG) model, run at high grid resolution, has been modified to support parallelism.
- Why the 2D NH-DG model?
  - It has applications in high-order weather and climate modeling.
  - It promises 3D parallelism in the full 3D model.

Nair, R. D., Bao, L., & Hall, D. (n.d.). A Time-Split Discontinuous Galerkin Non-Hydrostatic Model in HOMME Dynamical Core. Speech presented at ICOSAHOM, Salt Lake City, Utah.

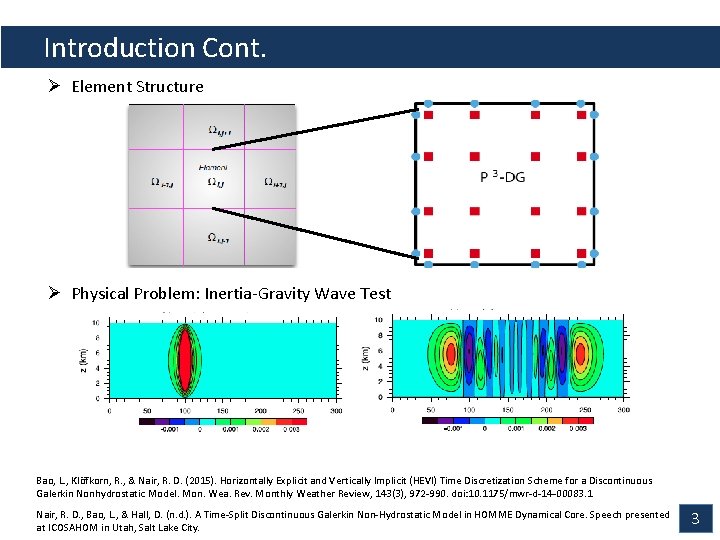

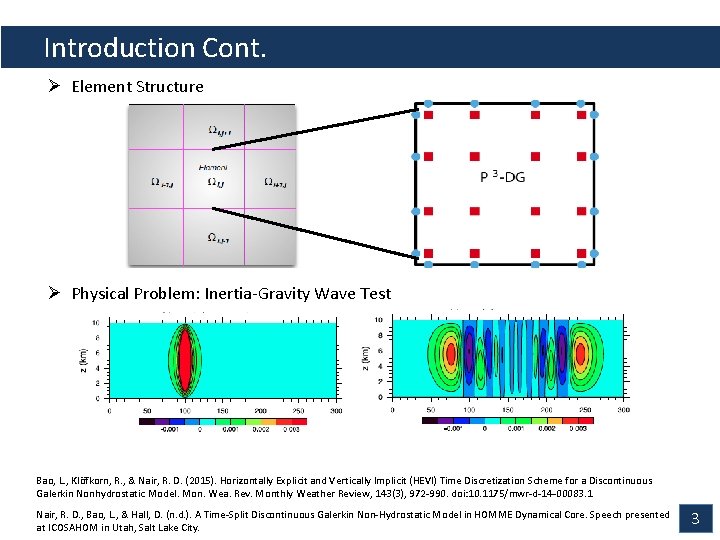

Introduction Cont.

- Element structure
- Physical problem: inertia-gravity wave test

Bao, L., Klöfkorn, R., & Nair, R. D. (2015). Horizontally Explicit and Vertically Implicit (HEVI) Time Discretization Scheme for a Discontinuous Galerkin Nonhydrostatic Model. Monthly Weather Review, 143(3), 972-990. doi:10.1175/mwr-d-14-00083.1

Nair, R. D., Bao, L., & Hall, D. (n.d.). A Time-Split Discontinuous Galerkin Non-Hydrostatic Model in HOMME Dynamical Core. Speech presented at ICOSAHOM, Salt Lake City, Utah.

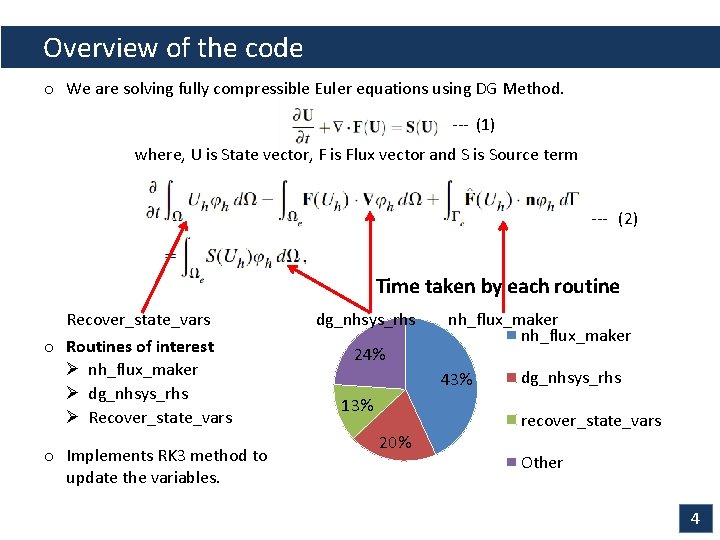

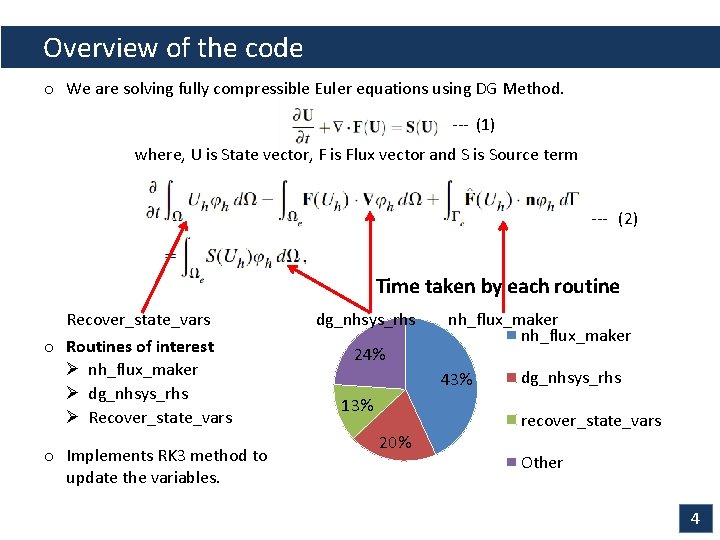

Overview of the code

- We solve the fully compressible Euler equations using the DG method, written on the slide as equations (1) and (2), where U is the state vector, F is the flux vector and S is the source term.
- The RK3 method is used to update the variables.
- Routines of interest
  - nh_flux_maker
  - dg_nhsys_rhs
  - recover_state_vars

Figure: time taken by each routine (pie chart). Legend: nh_flux_maker, dg_nhsys_rhs, recover_state_vars, Other. Slice labels as extracted: 24%, 43%, 13%, 20%.
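The equations themselves are not reproduced in this transcript. A common way to write such a system, consistent with the symbol definitions above, is the flux form $\partial U/\partial t + \nabla \cdot F(U) = S(U)$; this is an assumption about equation (1), not a copy of the slide. The sketch below shows what one three-stage Runge-Kutta update could look like in Fortran, assuming the strong-stability-preserving (Shu-Osher) variant, which the slides do not specify. The module `rk3_sketch`, the routine `ssp_rk3_step` and the toy right-hand side `rhs` (simple decay, du/dt = -u) are hypothetical stand-ins for the model's own routines such as `dg_nhsys_rhs`.

```fortran
module rk3_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  ! Toy right-hand side L(u) = -u. Hypothetical stand-in for the DG
  ! spatial operator evaluated by the model.
  pure function rhs(u) result(dudt)
    real(dp), intent(in) :: u(:)
    real(dp) :: dudt(size(u))
    dudt = -u
  end function rhs

  ! One SSP-RK3 (Shu-Osher) step: advances u from t to t + dt.
  subroutine ssp_rk3_step(u, dt)
    real(dp), intent(inout) :: u(:)
    real(dp), intent(in) :: dt
    real(dp) :: u1(size(u)), u2(size(u))
    u1 = u + dt*rhs(u)                                   ! stage 1
    u2 = 0.75_dp*u + 0.25_dp*(u1 + dt*rhs(u1))           ! stage 2
    u  = (1.0_dp/3.0_dp)*u + (2.0_dp/3.0_dp)*(u2 + dt*rhs(u2))  ! stage 3
  end subroutine ssp_rk3_step
end module rk3_sketch
```

Calling `ssp_rk3_step(u, dt)` once per time step on the toy problem reproduces the exponential decay to third-order accuracy; in a real model, `rhs` would be replaced by the flux and source evaluation.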

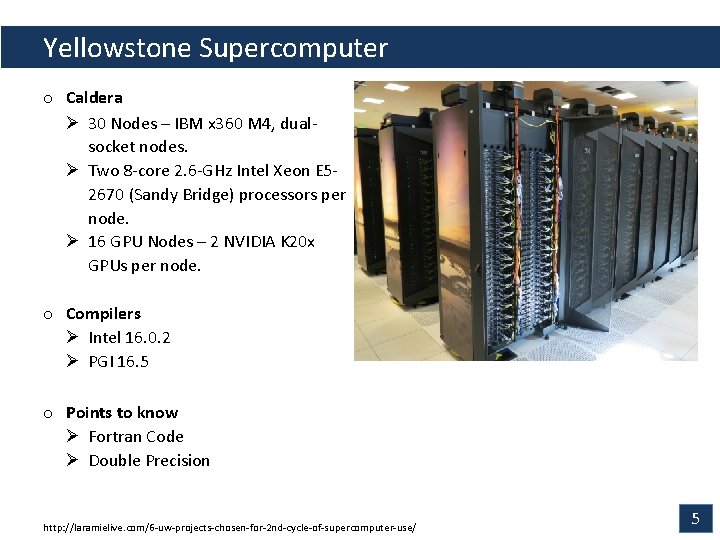

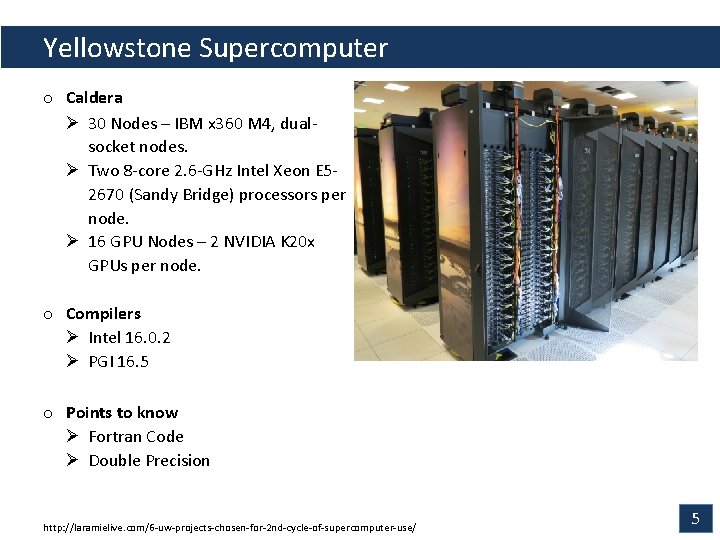

Yellowstone Supercomputer

- Caldera
  - 30 nodes: IBM x360 M4, dual-socket nodes.
  - Two 8-core 2.6-GHz Intel Xeon E5-2670 (Sandy Bridge) processors per node.
  - 16 GPU nodes: 2 NVIDIA K20x GPUs per node.
- Compilers
  - Intel 16.0.2
  - PGI 16.5
- Points to know
  - Fortran code
  - Double precision

http://laramielive.com/6-uw-projects-chosen-for-2nd-cycle-of-supercomputer-use/

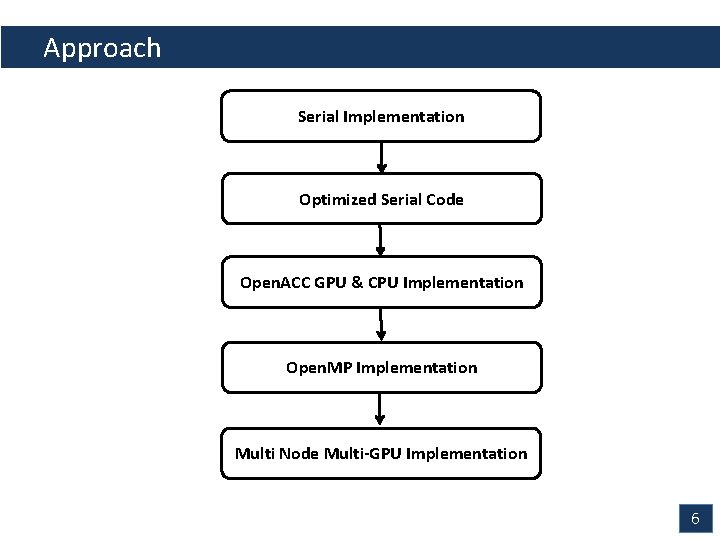

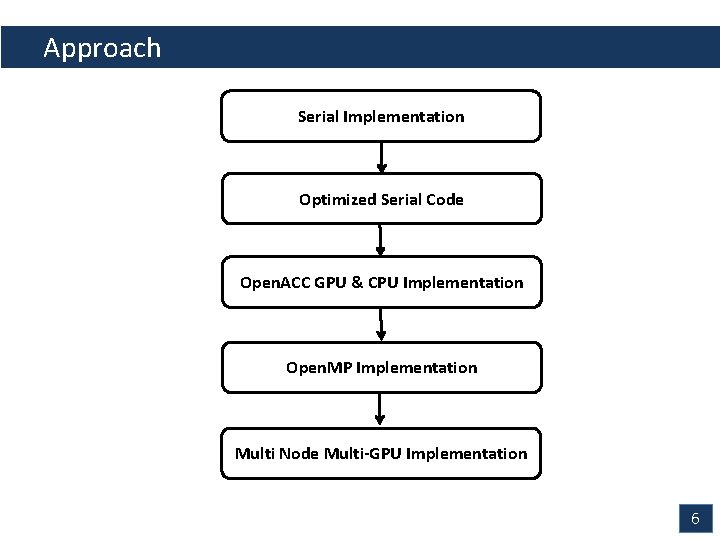

Approach

- Serial Implementation
- Optimized Serial Code
- OpenACC GPU & CPU Implementation
- OpenMP Implementation
- Multi-Node Multi-GPU Implementation

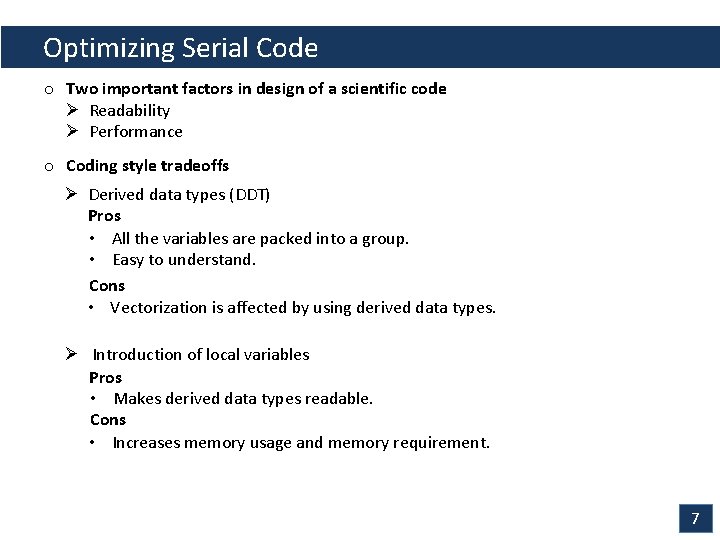

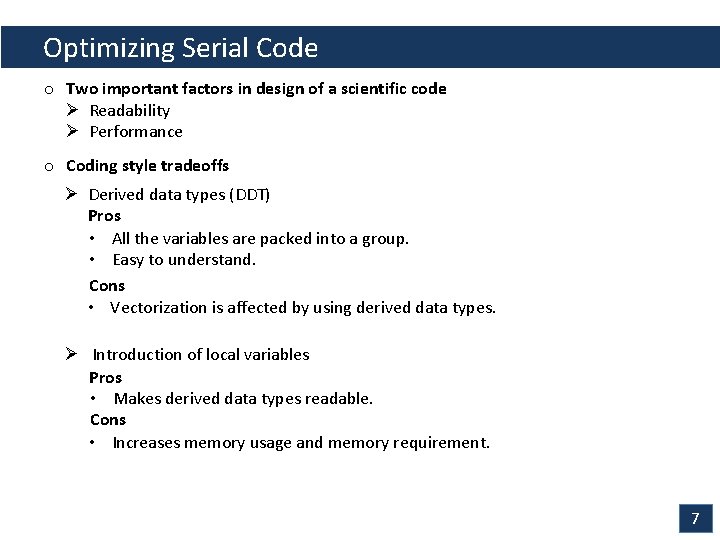

Optimizing Serial Code

- Two important factors in the design of a scientific code
  - Readability
  - Performance
- Coding style tradeoffs
  - Derived data types (DDT)
    - Pros: all the variables are packed into one group; easy to understand.
    - Cons: using derived data types hinders vectorization.
  - Introduction of local variables
    - Pros: makes code that uses derived data types more readable.
    - Cons: increases memory usage.

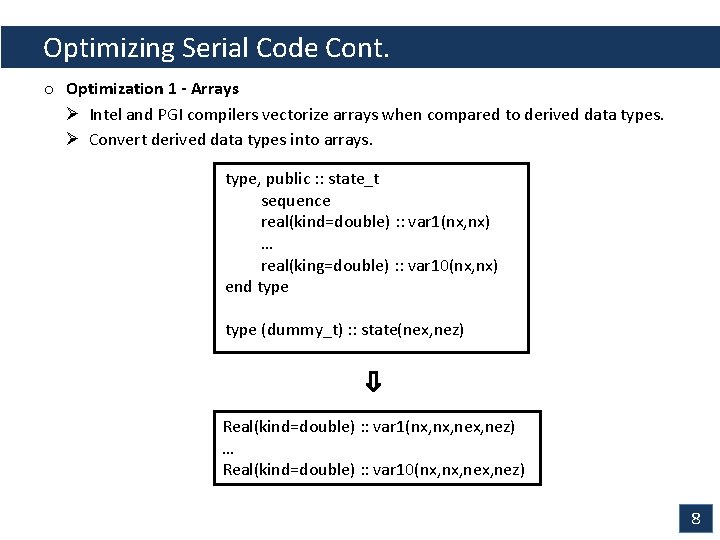

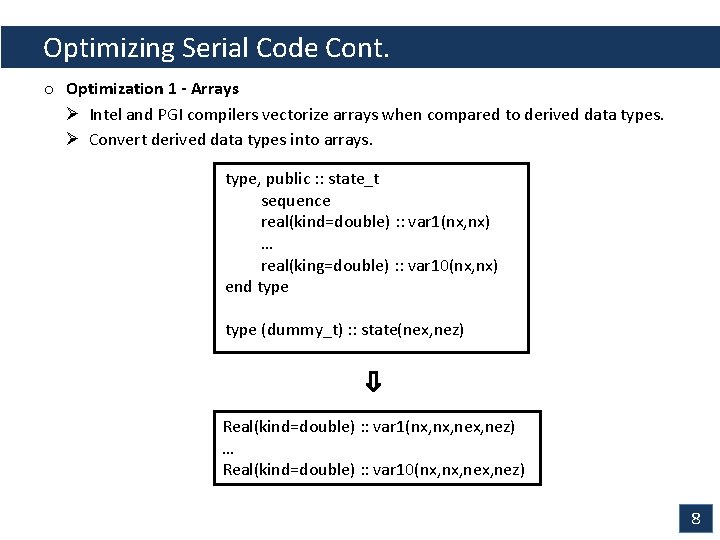

Optimizing Serial Code Cont.

- Optimization 1: Arrays
  - The Intel and PGI compilers vectorize plain arrays more readily than derived data types.
  - Convert derived data types into arrays.

Before (derived data type):

    type, public :: state_t
      sequence
      real(kind=double) :: var1(nx, nx)
      …
      real(kind=double) :: var10(nx, nx)
    end type
    type (state_t) :: state(nex, nez)

After (arrays):

    real(kind=double) :: var1(nx, nex, nez)
    …
    real(kind=double) :: var10(nx, nex, nez)
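To make the conversion concrete, here is a minimal sketch that computes the same quantity on both layouts. The module name `layout_sketch`, the field names `rho` and `volume`, the routine names and the tiny sizes are all hypothetical; the model's actual fields and dimensions are not shown in the slides.

```fortran
module layout_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
  integer, parameter :: nx = 4, nex = 3, nez = 2   ! hypothetical sizes

  ! Derived-type layout: one small record per element.
  type :: state_t
    real(dp) :: rho(nx)
    real(dp) :: volume(nx)
  end type state_t
contains
  ! Mass per point, reading fields through the derived type.
  subroutine mass_ddt(state, mass)
    type(state_t), intent(in) :: state(nex, nez)
    real(dp), intent(out) :: mass(nx, nex, nez)
    integer :: i, ie, ke
    do ke = 1, nez
      do ie = 1, nex
        do i = 1, nx
          mass(i, ie, ke) = state(ie, ke)%volume(i) * state(ie, ke)%rho(i)
        end do
      end do
    end do
  end subroutine mass_ddt

  ! Same computation on plain arrays: a single whole-array expression
  ! over contiguous memory.
  subroutine mass_arrays(rho, volume, mass)
    real(dp), intent(in) :: rho(nx, nex, nez), volume(nx, nex, nez)
    real(dp), intent(out) :: mass(nx, nex, nez)
    mass = volume * rho
  end subroutine mass_arrays

  ! Conversion step: copy derived-type fields into plain arrays.
  subroutine unpack_state(state, rho, volume)
    type(state_t), intent(in) :: state(nex, nez)
    real(dp), intent(out) :: rho(nx, nex, nez), volume(nx, nex, nez)
    integer :: ie, ke
    do ke = 1, nez
      do ie = 1, nex
        rho(:, ie, ke)    = state(ie, ke)%rho
        volume(:, ie, ke) = state(ie, ke)%volume
      end do
    end do
  end subroutine unpack_state
end module layout_sketch
```

Both routines return identical results; the array version simply gives the compiler one long contiguous loop to work with instead of many short per-element ones.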

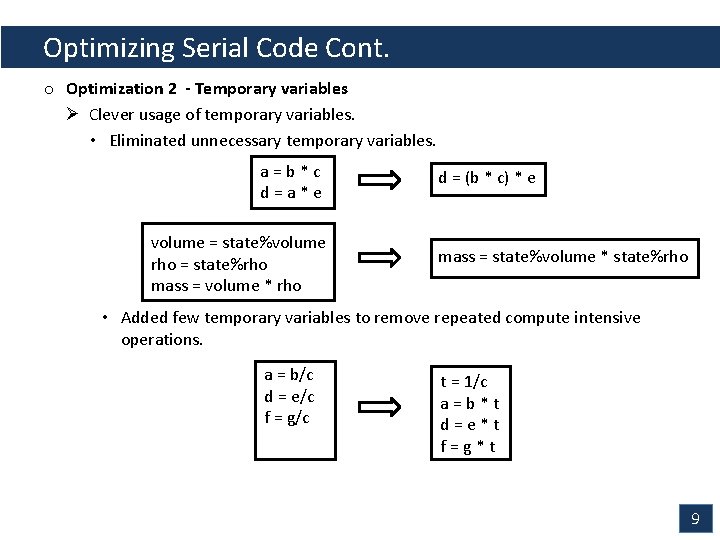

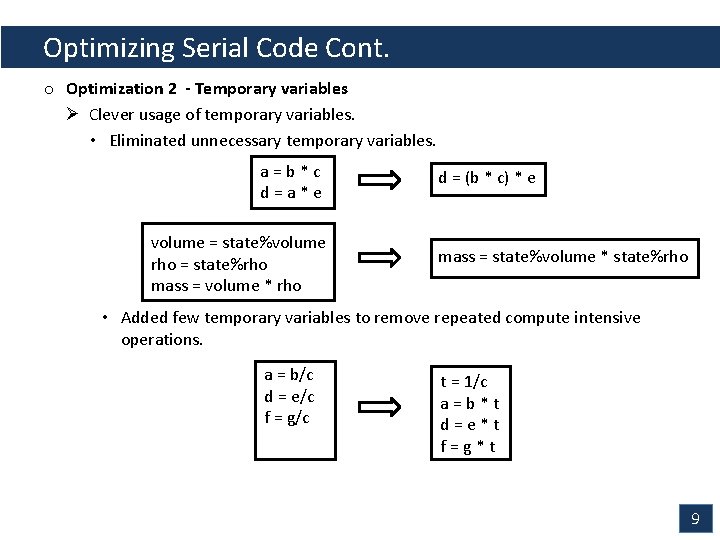

Optimizing Serial Code Cont.

- Optimization 2: Temporary variables
  - Careful use of temporary variables.
  - Eliminated unnecessary temporary variables.
    - Before: a = b * c; d = a * e
    - After: d = (b * c) * e
    - Before: volume = state%volume; rho = state%rho; mass = volume * rho
    - After: mass = state%volume * state%rho
  - Added a few temporary variables to remove repeated compute-intensive operations.
    - Before: a = b / c; d = e / c; f = g / c
    - After: t = 1 / c; a = b * t; d = e * t; f = g * t
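The reciprocal trick from the last bullet can be sketched as a pair of routines. The module and routine names are hypothetical, and the variable names follow the slide. Note that the two versions may differ in the last bits of the result, because `b * (1/c)` rounds twice where `b / c` rounds once.

```fortran
module recip_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  ! Before: three divisions by the same value c.
  pure subroutine divide_each(b, e, g, c, a, d, f)
    real(dp), intent(in) :: b, e, g, c
    real(dp), intent(out) :: a, d, f
    a = b / c
    d = e / c
    f = g / c
  end subroutine divide_each

  ! After: one division, then three multiplications by the reciprocal t.
  pure subroutine multiply_by_reciprocal(b, e, g, c, a, d, f)
    real(dp), intent(in) :: b, e, g, c
    real(dp), intent(out) :: a, d, f
    real(dp) :: t
    t = 1.0_dp / c
    a = b * t
    d = e * t
    f = g * t
  end subroutine multiply_by_reciprocal
end module recip_sketch
```

The payoff comes when the division sits inside a hot loop and `c` does not change between iterations, so `t` can be computed once outside it.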

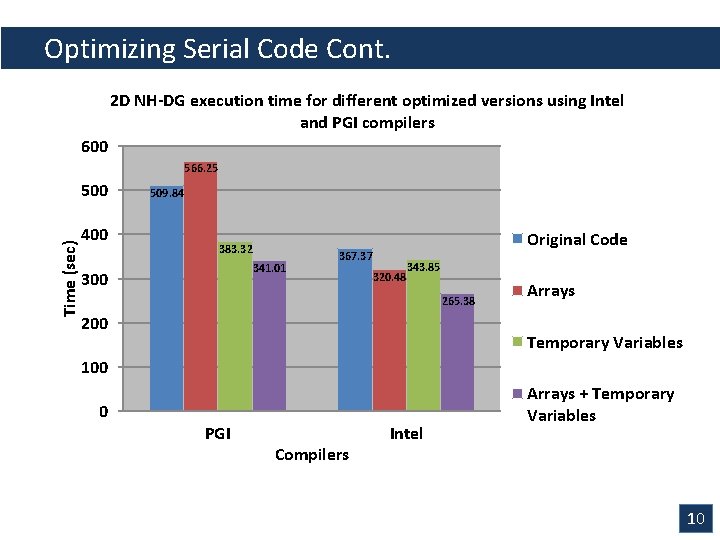

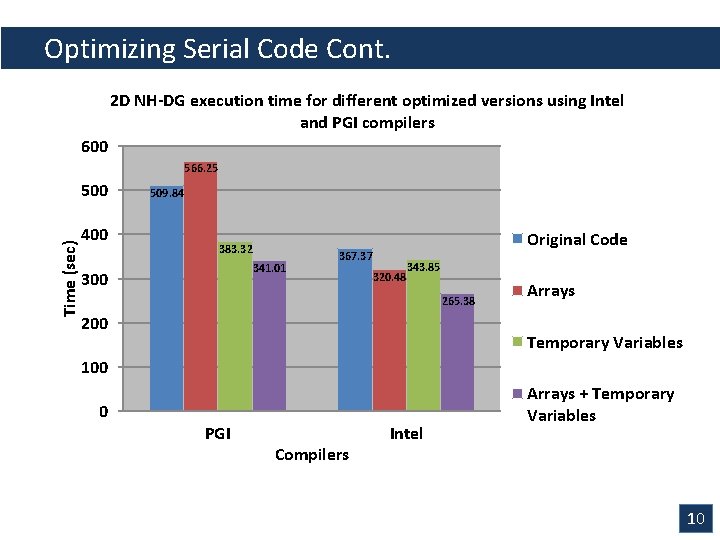

Optimizing Serial Code Cont.

Figure: 2D NH-DG execution time (sec) for different optimized versions using the PGI and Intel compilers (bar chart). Series: Original Code, Arrays, Temporary Variables, Arrays + Temporary Variables. Bar values as extracted: 566.25, 509.84, 383.32, 341.01, 367.37, 320.48, 343.85, 265.38.

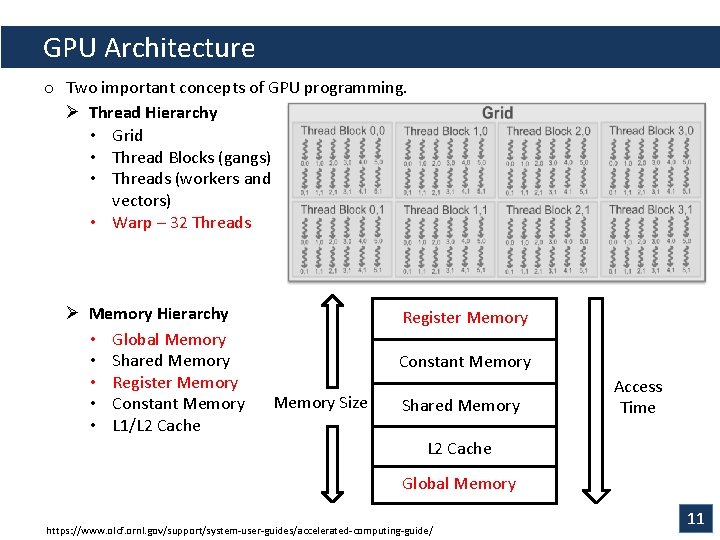

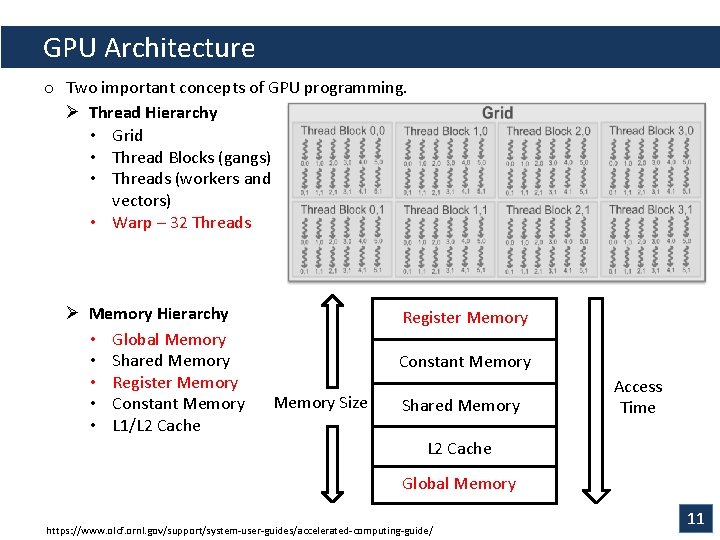

GPU Architecture

- Two important concepts of GPU programming:
  - Thread hierarchy
    - Grid
    - Thread blocks (gangs)
    - Threads (workers and vectors)
    - Warp: 32 threads
  - Memory hierarchy
    - Global memory
    - Shared memory
    - Register memory
    - Constant memory
    - L1/L2 cache

Figure: memory hierarchy ordered by size and access time (register memory, constant memory, shared memory, L2 cache, global memory).

https://www.olcf.ornl.gov/support/system-user-guides/accelerated-computing-guide/

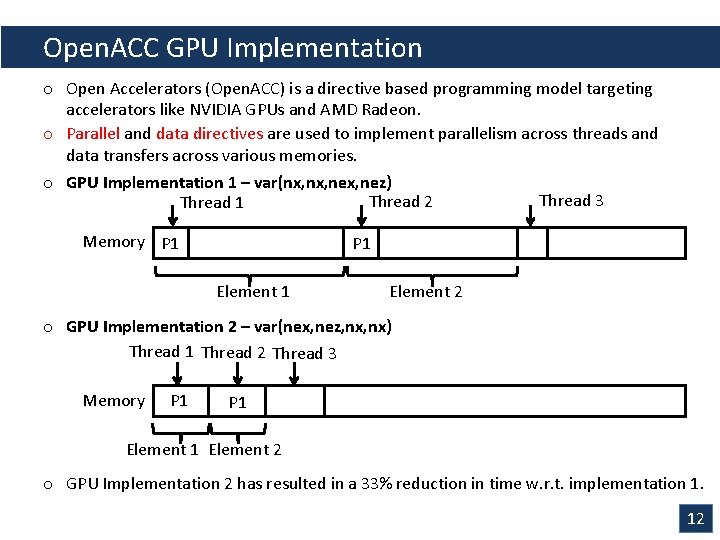

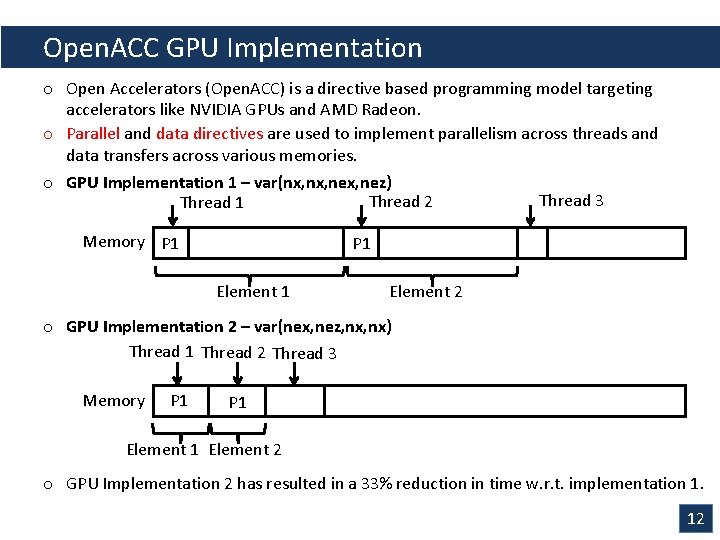

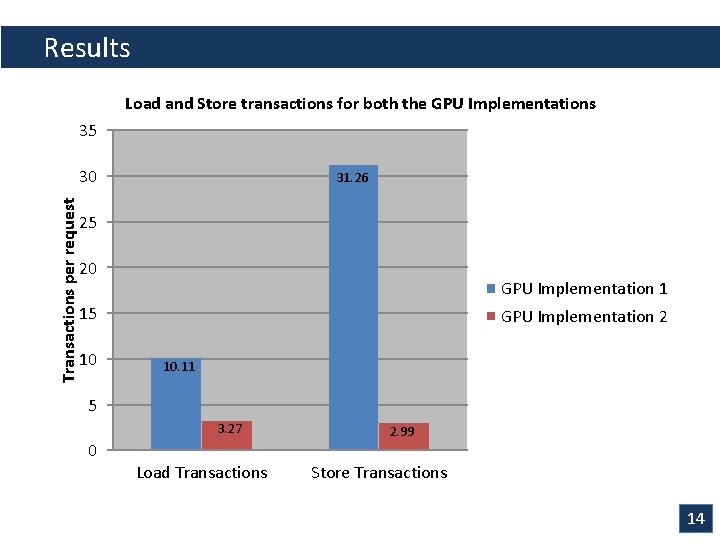

OpenACC GPU Implementation

- Open Accelerators (OpenACC) is a directive-based programming model targeting accelerators such as NVIDIA GPUs and AMD Radeon GPUs.
- Parallel directives express parallelism across threads; data directives manage transfers between the different memories.
- GPU Implementation 1: var(nx, nex, nez)
- GPU Implementation 2: var(nex, nez, nx)
- GPU Implementation 2 reduced run time by 33% relative to Implementation 1.

Figure: for each implementation, how threads 1 to 3 map onto memory locations (labels: P1, Element 2).
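One plausible reading of the two layouts: Fortran stores the first index contiguously, so with var(nex, nez, nx) neighboring threads that handle neighboring elements touch neighboring memory locations. The sketch below illustrates that idea under this assumption; the module `acc_layout_sketch`, the routines `to_layout2` and `scale_layout2`, and the gang/vector mapping are hypothetical and not taken from the model. Without an OpenACC compiler the directives are plain comments and the code runs serially.

```fortran
module acc_layout_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  ! Reorder var1(nx, nex, nez) into var2(nex, nez, nx) so that the
  ! element index becomes the first (contiguous) dimension.
  function to_layout2(var1) result(var2)
    real(dp), intent(in) :: var1(:, :, :)
    real(dp) :: var2(size(var1, 2), size(var1, 3), size(var1, 1))
    integer :: i
    do i = 1, size(var1, 1)
      var2(:, :, i) = var1(i, :, :)
    end do
  end function to_layout2

  ! Scale every entry of var(nex, nez, nx). The innermost loop runs
  ! over ie, the contiguous index, and is mapped to vector lanes.
  subroutine scale_layout2(var, factor)
    real(dp), intent(inout) :: var(:, :, :)
    real(dp), intent(in) :: factor
    integer :: ie, ke, i
    !$acc parallel loop gang collapse(2) copy(var)
    do i = 1, size(var, 3)
      do ke = 1, size(var, 2)
        !$acc loop vector
        do ie = 1, size(var, 1)
          var(ie, ke, i) = factor * var(ie, ke, i)
        end do
      end do
    end do
  end subroutine scale_layout2
end module acc_layout_sketch
```

With the original var(nx, nex, nez) ordering, the same thread mapping would make consecutive threads access memory nx entries apart, which typically costs more memory transactions per request.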

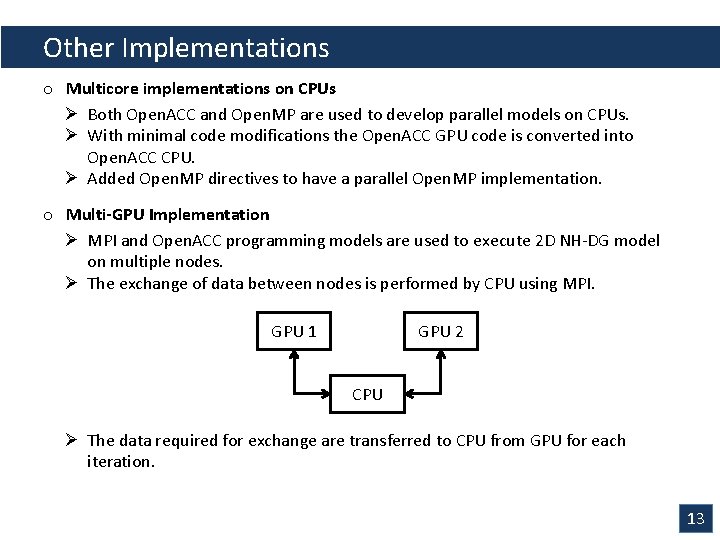

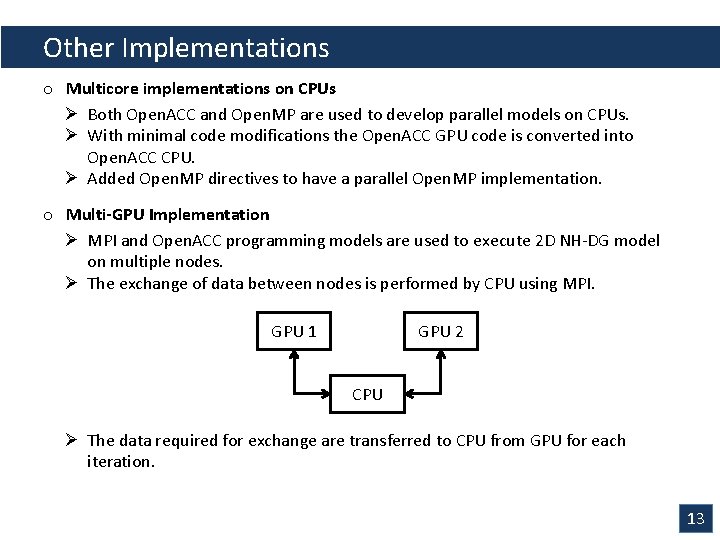

Other Implementations

- Multicore implementations on CPUs
  - Both OpenACC and OpenMP are used to develop parallel versions for CPUs.
  - The OpenACC GPU code is converted into an OpenACC CPU (multicore) version with minimal code modifications.
  - OpenMP directives are added to obtain a parallel OpenMP implementation.
- Multi-GPU implementation
  - The MPI and OpenACC programming models are combined to run the 2D NH-DG model on multiple nodes.
  - Data exchange between nodes is performed by the CPU using MPI.
  - In each iteration, the data required for the exchange are transferred from the GPU to the CPU.

Figure: data path between GPU 1 and GPU 2 through the CPU.
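The staging pattern described above (GPU to CPU, MPI exchange, CPU to GPU) might look roughly like the following sketch. It assumes a periodic 1D ring of ranks and one boundary buffer per side, neither of which is stated in the slides; the module `halo_sketch`, the routine `exchange_halo` and all buffer names are hypothetical. The `update` directives assume the caller has already placed the buffers on the device in an enclosing data region; without OpenACC they are plain comments.

```fortran
module halo_sketch
  use mpi
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  ! Exchange boundary data with the left and right neighbors in a
  ! periodic ring of ranks (assumed decomposition).
  subroutine exchange_halo(send_left, send_right, recv_left, recv_right, comm)
    real(dp), intent(inout) :: send_left(:), send_right(:)
    real(dp), intent(inout) :: recv_left(:), recv_right(:)
    integer, intent(in) :: comm
    integer :: rank, nprocs, left, right, n, ierr
    integer :: status(MPI_STATUS_SIZE)

    call MPI_Comm_rank(comm, rank, ierr)
    call MPI_Comm_size(comm, nprocs, ierr)
    left  = modulo(rank - 1, nprocs)
    right = modulo(rank + 1, nprocs)
    n = size(send_left)

    ! Stage the boundary data from the GPU to the CPU.
    !$acc update host(send_left, send_right)

    ! Send right edge to the right neighbor, receive from the left.
    call MPI_Sendrecv(send_right, n, MPI_DOUBLE_PRECISION, right, 0, &
                      recv_left,  n, MPI_DOUBLE_PRECISION, left,  0, &
                      comm, status, ierr)
    ! Send left edge to the left neighbor, receive from the right.
    call MPI_Sendrecv(send_left,  n, MPI_DOUBLE_PRECISION, left,  1, &
                      recv_right, n, MPI_DOUBLE_PRECISION, right, 1, &
                      comm, status, ierr)

    ! Push the received halo data back to the GPU.
    !$acc update device(recv_left, recv_right)
  end subroutine exchange_halo
end module halo_sketch
```

The two `update` directives are the per-iteration transfers the slide refers to; they are what a direct GPU-to-GPU path would aim to remove.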

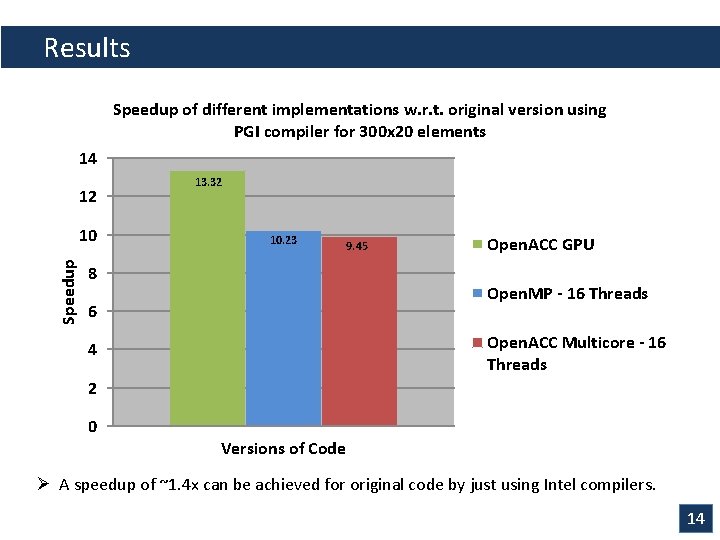

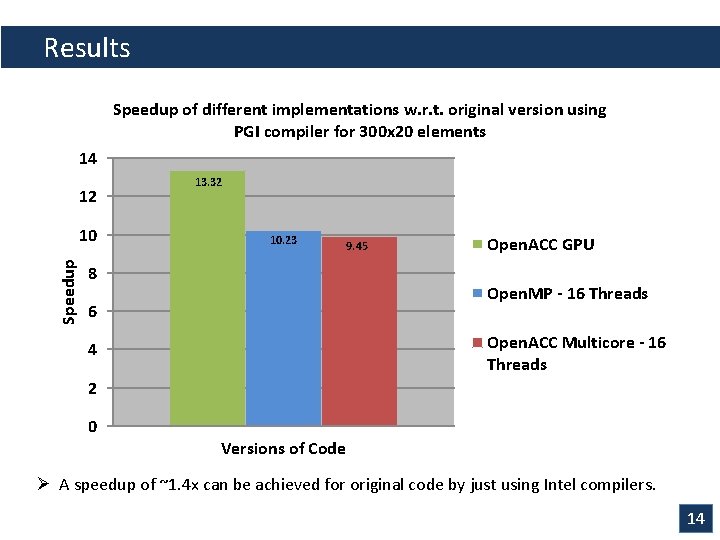

Results

Figure: speedup of different implementations w.r.t. the original version using the PGI compiler for 300 x 20 elements (bar chart). Legend order: OpenACC GPU, OpenMP - 16 Threads, OpenACC Multicore - 16 Threads. Bar values as extracted: 13.32, 10.23, 9.45.

- A speedup of ~1.4x can be achieved for the original code just by using the Intel compilers.

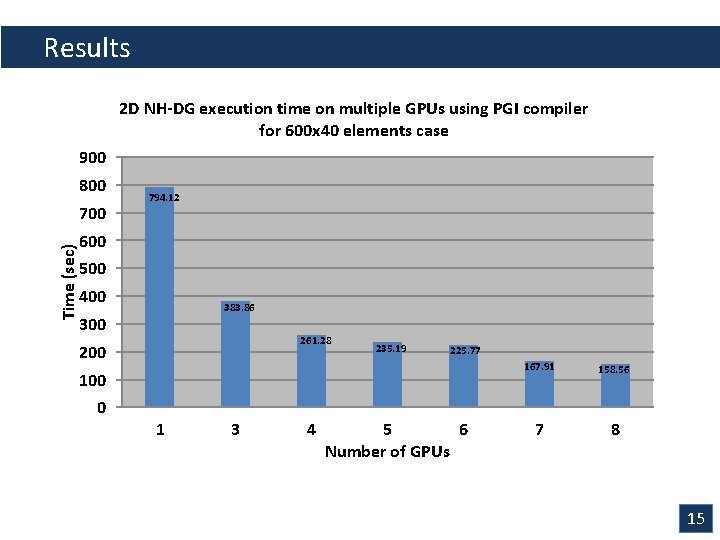

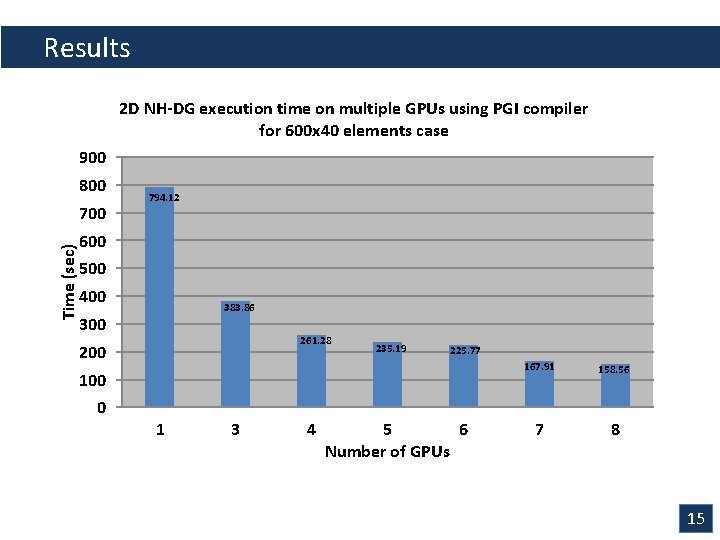

Results

Figure: 2D NH-DG execution time (sec) on multiple GPUs using the PGI compiler for the 600 x 40 elements case (bar chart, x-axis: number of GPUs). Bar values as extracted: 794.12, 383.86, 261.28, 235.19, 225.77, 167.91, 158.56.

Conclusion & Future Work

- Conclusion
  - A single Kepler K20x outperforms a dual-socket Sandy Bridge Xeon node.
  - Demonstrated performance portability with OpenACC.
  - OpenACC and OpenMP have comparable performance on the CPU for the 1-thread-per-core cases.
  - However, OpenACC does not support hyperthreading.
  - The serial performance of the PGI compiler is significantly slower than Intel's.
- Future Work
  - Use GPUDirect for GPU-to-GPU communication to improve scaling.
  - Further optimize the load and store transactions on GPUs.
  - Benchmark contemporary systems such as Knights Landing, Pascal and Broadwell.

References

- Bao, L., Klöfkorn, R., & Nair, R. D. (2015). Horizontally Explicit and Vertically Implicit (HEVI) Time Discretization Scheme for a Discontinuous Galerkin Nonhydrostatic Model. Monthly Weather Review, 143(3), 972-990. doi:10.1175/mwr-d-14-00083.1
- Nair, R. D., Bao, L., & Hall, D. (n.d.). A Time-Split Discontinuous Galerkin Non-Hydrostatic Model in HOMME Dynamical Core. Speech presented at ICOSAHOM, Salt Lake City, Utah.

THANK YOU

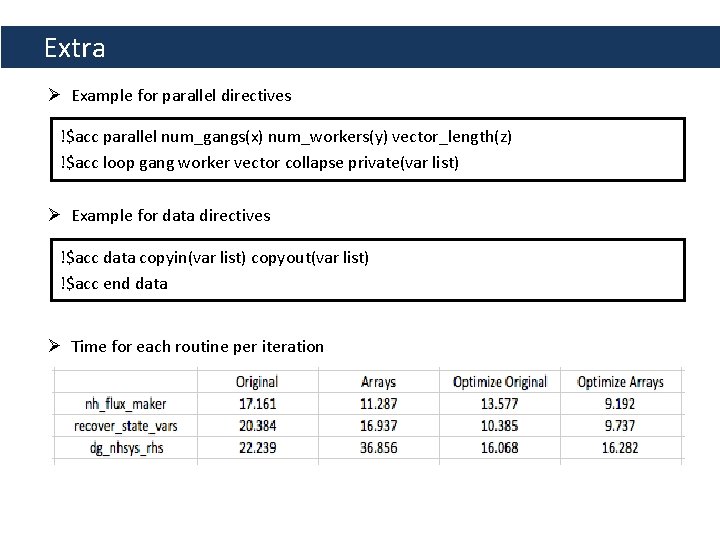

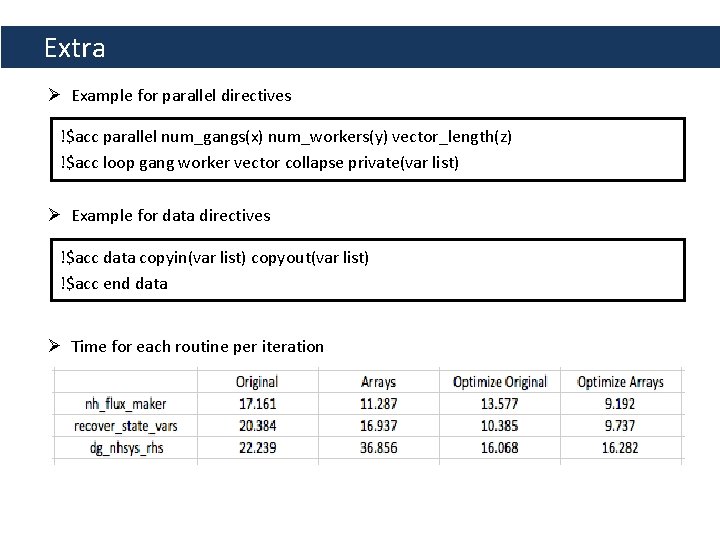

Extra

- Example of parallel directives

    !$acc parallel num_gangs(x) num_workers(y) vector_length(z)
    !$acc loop gang worker vector collapse(n) private(var list)

- Example of data directives

    !$acc data copyin(var list) copyout(var list)
    !$acc end data

- Time for each routine per iteration
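For readers who want to see these directives in a complete routine, here is a minimal sketch that wraps a simple loop in a data region and a parallel loop. The module `acc_directives_sketch`, the routine `axpy`, the temporary `t` and the vector length of 128 are all hypothetical choices for illustration. Compiled without OpenACC support, the directives are treated as comments and the loop runs serially.

```fortran
module acc_directives_sketch
  implicit none
  integer, parameter :: dp = kind(1.0d0)
contains
  ! y = a*x + y, offloaded with OpenACC when the compiler supports it.
  subroutine axpy(a, x, y)
    real(dp), intent(in) :: a, x(:)
    real(dp), intent(inout) :: y(:)
    real(dp) :: t
    integer :: i
    ! Data region: x is only read on the device, y is copied in and out.
    !$acc data copyin(x) copy(y)
    ! Parallel loop: iterations are spread over gangs and vector lanes;
    ! each iteration gets its own private copy of the temporary t.
    !$acc parallel loop gang vector vector_length(128) private(t)
    do i = 1, size(x)
      t = a * x(i)
      y(i) = t + y(i)
    end do
    !$acc end data
  end subroutine axpy
end module acc_directives_sketch
```

Keeping several kernels inside one data region is what avoids repeated host-device transfers; here there is only one kernel, so the region mainly shows the syntax.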

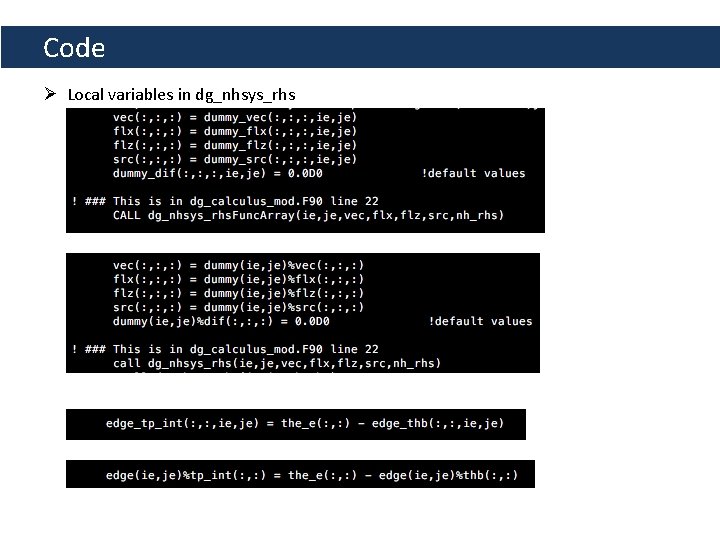

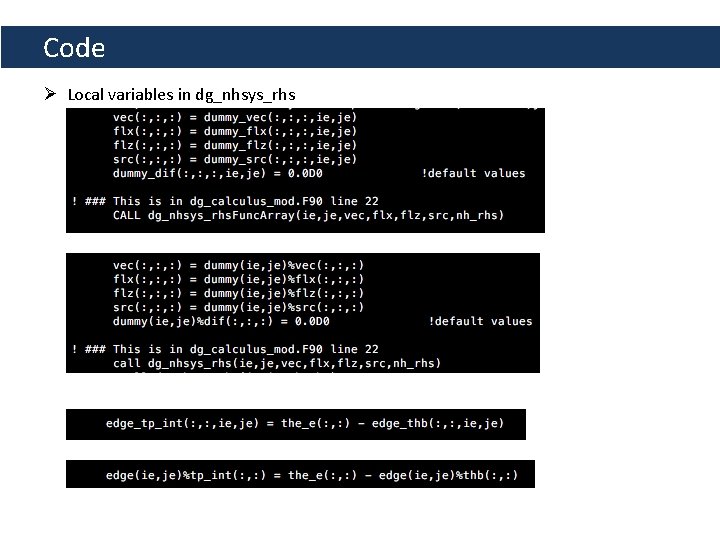

Code

- Local variables in dg_nhsys_rhs

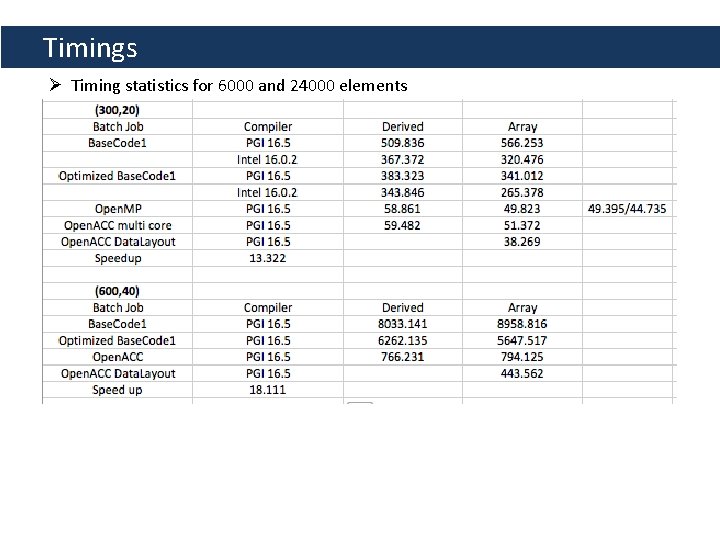

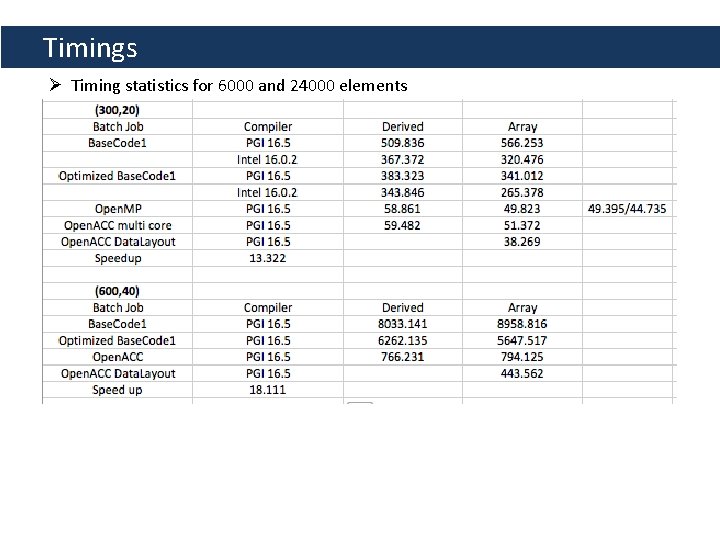

Timings

- Timing statistics for 6000 and 24000 elements

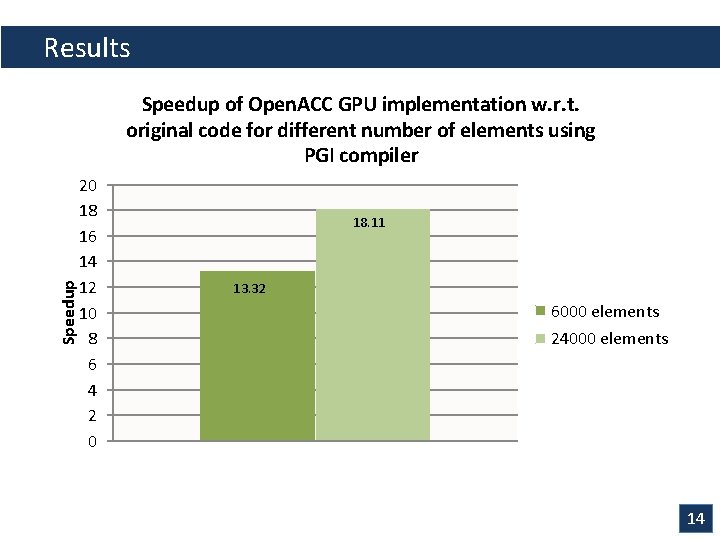

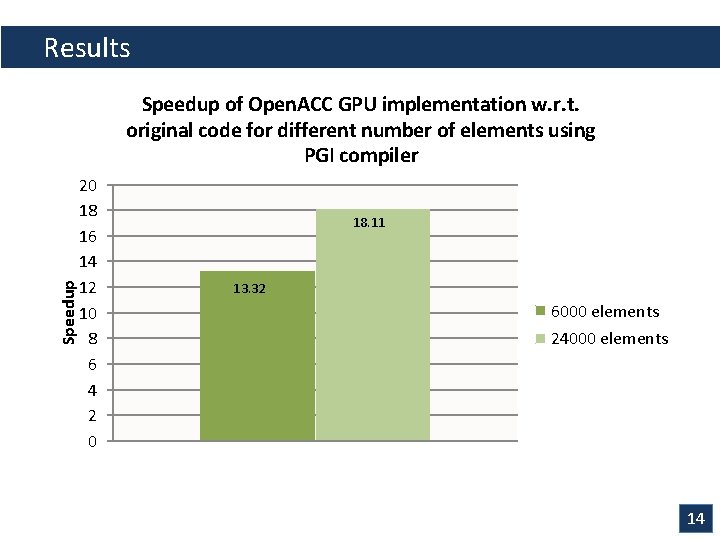

Results

Figure: speedup of the OpenACC GPU implementation w.r.t. the original code for different numbers of elements using the PGI compiler (bar chart). Bar values: 13.32 (6000 elements), 18.11 (24000 elements).

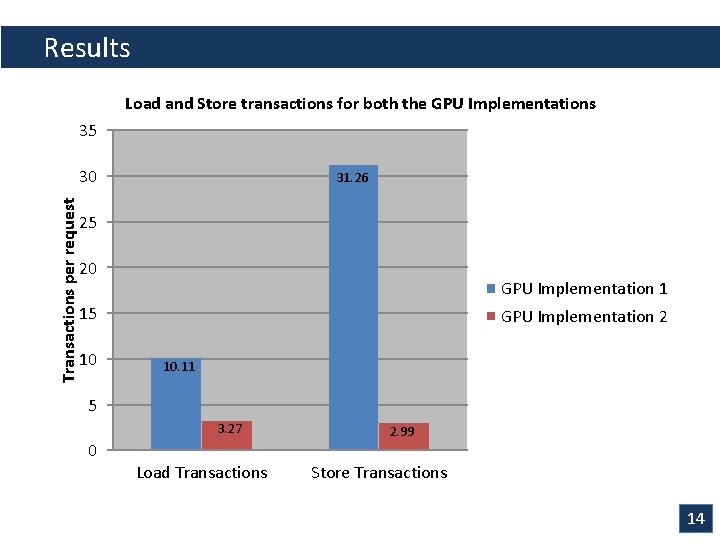

Results

Figure: load and store transactions per request for both GPU implementations (bar chart). Series: GPU Implementation 1, GPU Implementation 2. Groups: Load Transactions, Store Transactions. Bar values as extracted: 31.26, 10.11, 3.27, 2.99.