Optimization with Constraints

- Standard formulation
- Why are constraints a problem?
  - Canyons in design space
  - Equality constraints are particularly bad!
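The slide's formula is an image and is not reproduced in the text. As a hedged reminder, one common way to write the standard formulation is shown below. The symbols $g_j$, $h_k$, $x^L$, $x^U$ are assumed names, and the sign convention ($g_j \le 0$) is chosen to match the fmincon convention used later in the deck; textbooks often use $g_j \ge 0$ instead.

```latex
\begin{aligned}
\min_{x}\quad & f(x) \\
\text{subject to}\quad & g_j(x) \le 0, \qquad j = 1,\dots,n_g \\
& h_k(x) = 0, \qquad k = 1,\dots,n_e \\
& x^L \le x \le x^U
\end{aligned}
```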

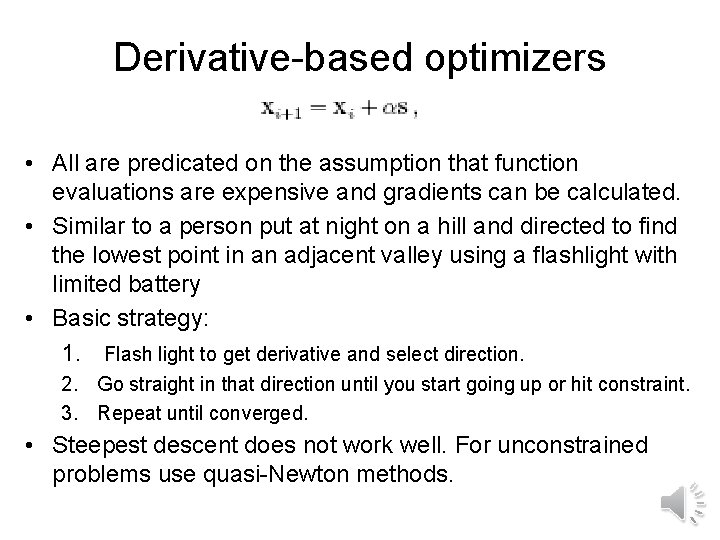

Derivative-based optimizers

- All are predicated on the assumption that function evaluations are expensive and that gradients can be calculated.
- The situation is similar to a person placed on a hill at night and told to find the lowest point in an adjacent valley using a flashlight with limited battery life.
- Basic strategy:
  1. Flash the light to get the derivative and select a direction.
  2. Go straight in that direction until you start going up or hit a constraint.
  3. Repeat until converged.
- Steepest descent does not work well. For unconstrained problems, use quasi-Newton methods.
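The basic strategy can be sketched in a few lines of MATLAB. This is a minimal illustration only, assuming an unconstrained quadratic test function (the same form as the quad2 function used later in the deck) and using the steepest-descent direction, which the slide itself warns against for real problems. The line search "go straight until you start going up" is approximated with fminbnd on an assumed step interval [0, 1].

```matlab
% Sketch: direction from the gradient, line search, repeat.
a = 10;                                   % assumed test value
f     = @(x) x(1)^2 + a*x(2)^2;           % objective
gradf = @(x) [2*x(1); 2*a*x(2)];          % analytic gradient

x = [1; 10];                              % starting point
for k = 1:500
    g = gradf(x);                         % 1. "flash the light"
    if norm(g) < 1e-8, break; end         % 3. converged?
    d = -g;                               % steepest-descent direction
    % 2. walk along d until the function stops decreasing
    alpha = fminbnd(@(t) f(x + t*d), 0, 1);
    x = x + alpha*d;
end
fprintf('stopped after %d iterations, x = [%g %g]\n', k, x(1), x(2));
```

The zigzag path this produces on elongated contours is one reason quasi-Newton directions are preferred in practice.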

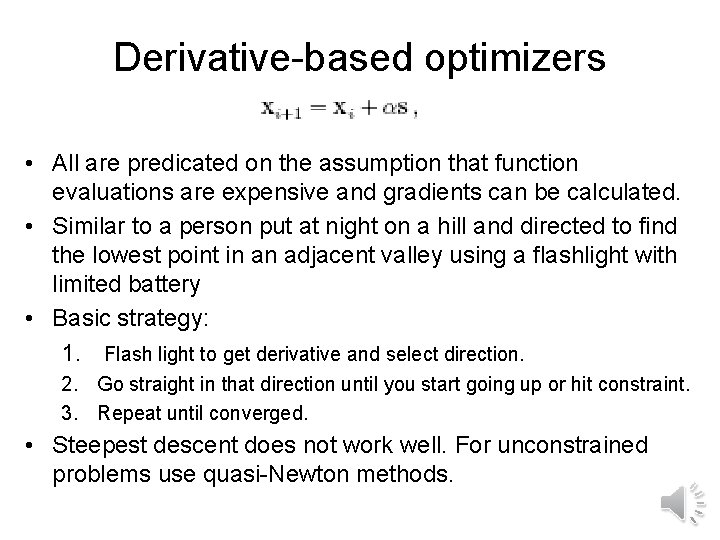

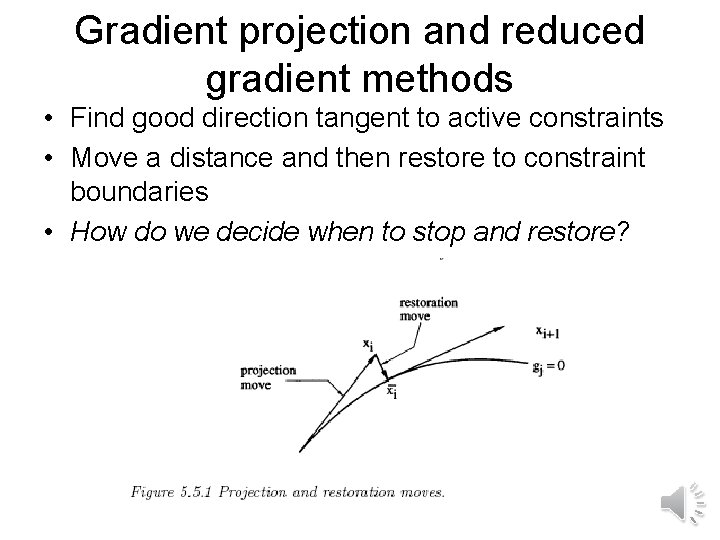

Gradient projection and reduced gradient methods

- Find a good direction tangent to the active constraints.
- Move a distance and then restore to the constraint boundaries.
- How do we decide when to stop and restore?
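A minimal sketch of the project-move-restore cycle, assuming a single active constraint (a circle of radius R) and a fixed step length alpha. Both are illustrative choices, not the slide's method. Here restoration is a simple radial rescaling, which only works because the constraint is a circle; general problems need a Newton-type restoration step.

```matlab
% Sketch: gradient projection on the circle x1^2 + x2^2 = R^2.
a = 10;  R = 10;                          % assumed test values
f     = @(x) x(1)^2 + a*x(2)^2;
gradf = @(x) [2*x(1); 2*a*x(2)];

x = [1; 10];
x = R * x / norm(x);                      % restore the start point to the boundary
alpha = 0.01;                             % fixed step length (assumption)
for k = 1:300
    g = gradf(x);
    n = x / norm(x);                      % unit normal of the active constraint
    d = -(g - (g'*n)*n);                  % negative gradient projected on the tangent
    if norm(d) < 1e-10, break; end        % projected gradient vanishes: stop
    x = x + alpha*d;                      % move along the tangent (leaves the circle)
    x = R * x / norm(x);                  % restore to the constraint boundary
end
fprintf('x = [%.4f %.4f], f = %.4f\n', x(1), x(2), f(x));
```

The fixed alpha sidesteps the slide's question of when to stop and restore; a practical method would adapt the step to how far the tangent move drifts from the boundary.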

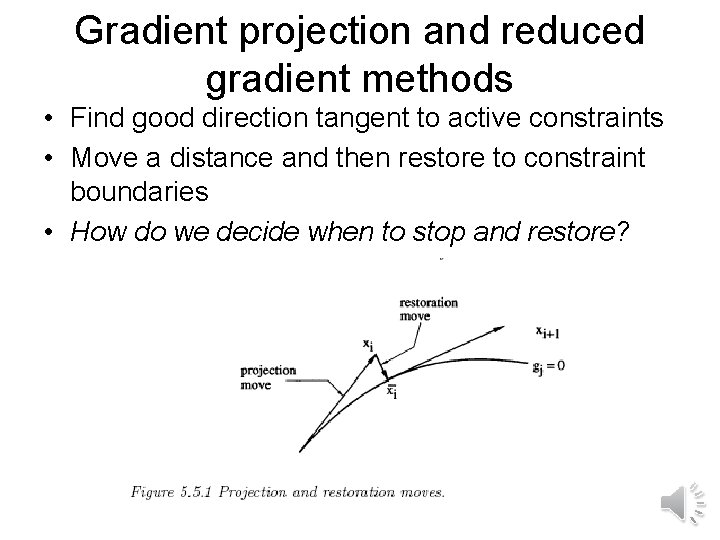

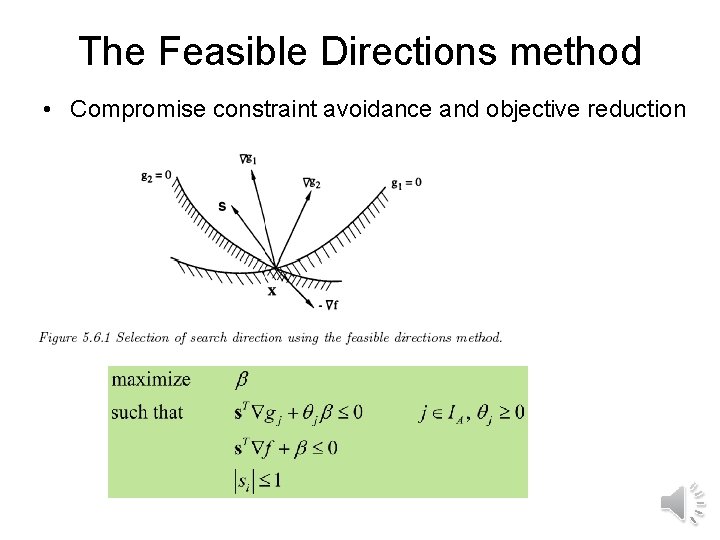

The Feasible Directions method

- Compromise between constraint avoidance and objective reduction.
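The slide's equations are images. As a hedged reminder, the compromise is often posed as a small linear program for the direction $d$ (Zoutendijk's form), written here under the assumed convention $g_j \le 0$, with $\theta_j \ge 0$ as assumed "push-off" factors for the active constraints:

```latex
\begin{aligned}
\max_{d,\;\beta}\quad & \beta \\
\text{subject to}\quad & \nabla g_j(x)^{T} d + \theta_j \beta \le 0, \qquad j \in \text{active set} \\
& \nabla f(x)^{T} d + \beta \le 0 \\
& -1 \le d_i \le 1
\end{aligned}
```

A positive $\beta$ means $d$ both reduces the objective and moves away from the active constraints; larger $\theta_j$ pushes harder away from constraint $j$.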

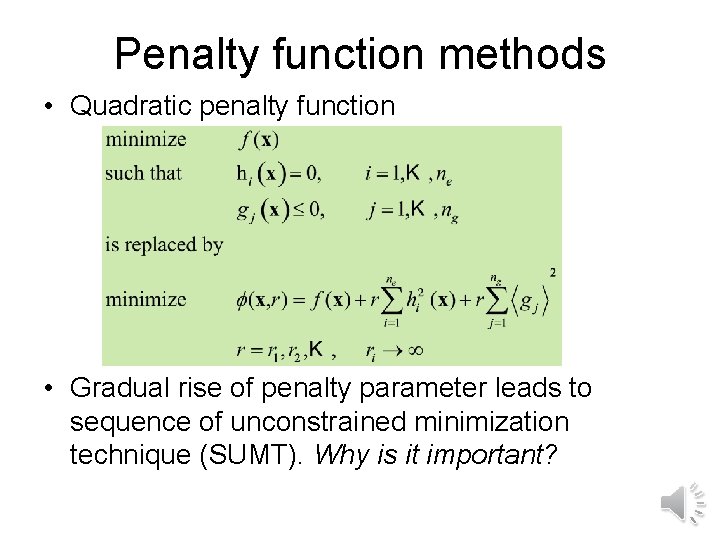

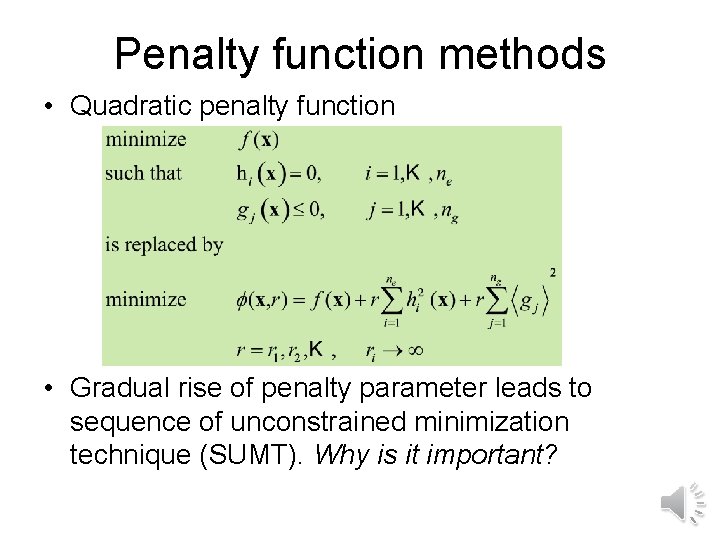

Penalty function methods

- Quadratic penalty function
- Gradually raising the penalty parameter leads to the sequential unconstrained minimization technique (SUMT). Why is it important?
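A minimal SUMT sketch, assuming a hypothetical test problem (minimize x1^2 + 10*x2^2 subject to x1 + x2 = 4, which may or may not be the textbook example) and the quadratic penalty f(x) + r*h(x)^2. Each unconstrained solve is warm-started from the previous one, which is the point of raising r gradually. fminsearch is used only because it needs no toolbox; it is not a derivative-based method.

```matlab
% Sketch: sequential unconstrained minimization with a quadratic penalty.
f = @(x) x(1)^2 + 10*x(2)^2;              % hypothetical objective
h = @(x) x(1) + x(2) - 4;                 % hypothetical equality constraint, h(x) = 0

opts = optimset('TolX', 1e-10, 'TolFun', 1e-12, ...
                'MaxFunEvals', 5000, 'MaxIter', 5000);
x = [0; 0];                               % initial guess
for r = [1 10 100 1000]                   % gradually raise the penalty parameter
    phi = @(x) f(x) + r*h(x)^2;           % penalized objective for this r
    x = fminsearch(phi, x, opts);         % warm start from the previous solution
    fprintf('r = %5g   x = [%.4f %.4f]   h(x) = %.2e\n', r, x(1), x(2), h(x));
end
```

The constraint violation h(x) shrinks as r grows but never reaches zero for finite r, while the contours of phi become increasingly elongated.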

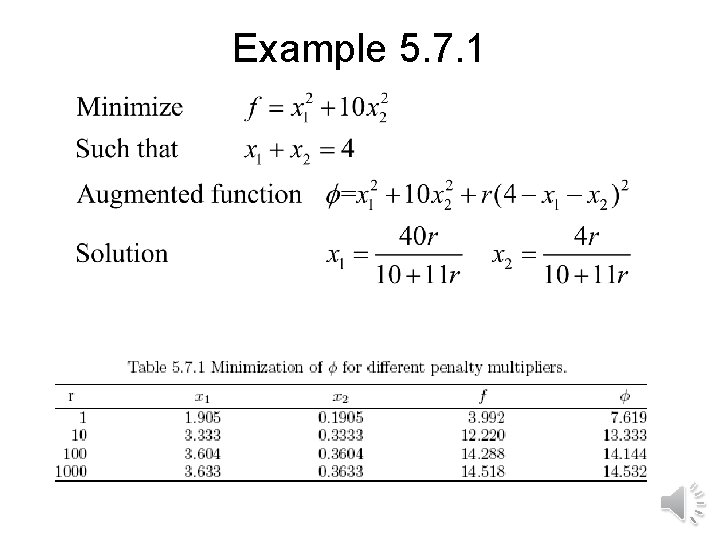

Example 5.7.1

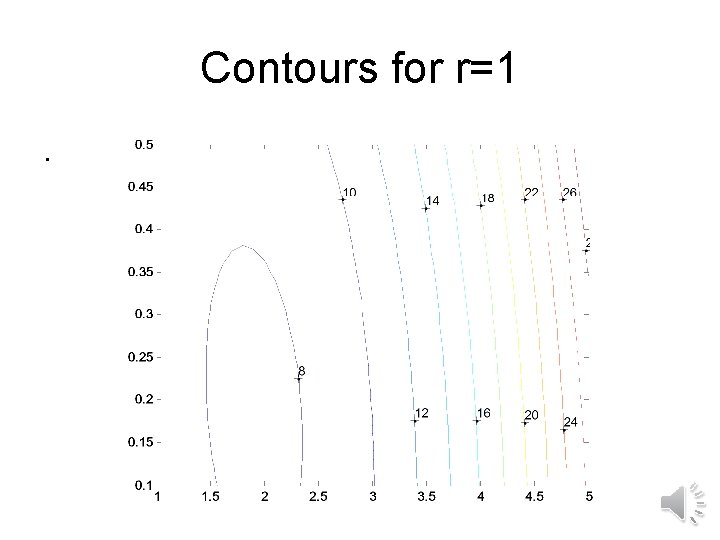

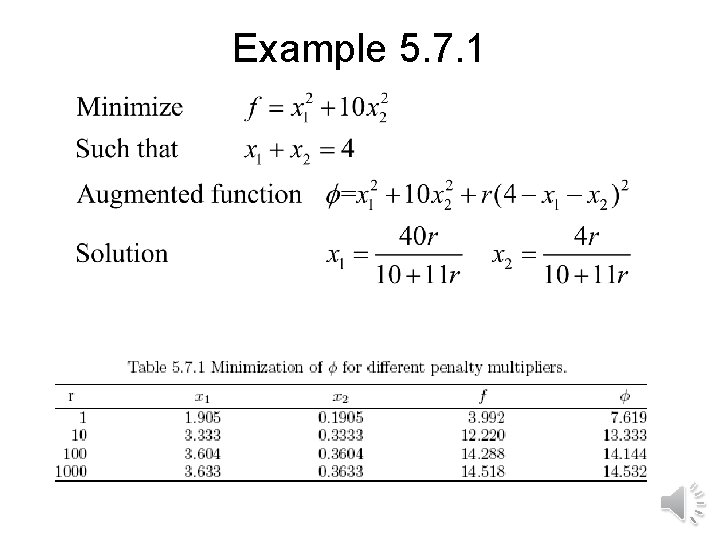

Contours for r=1.

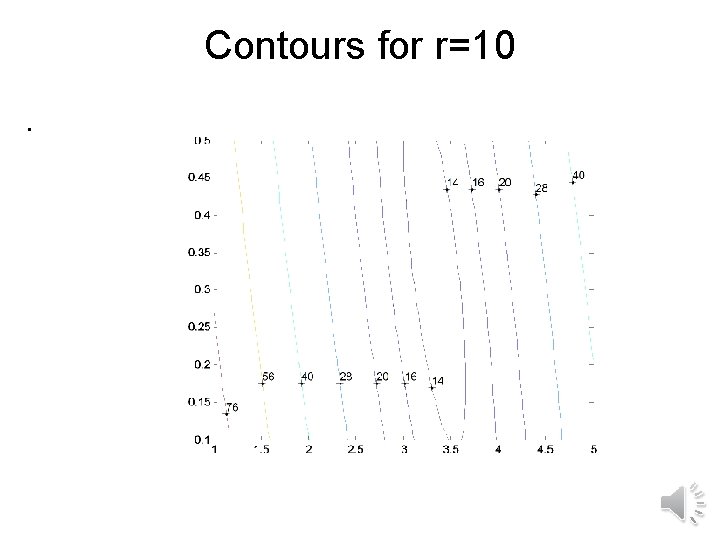

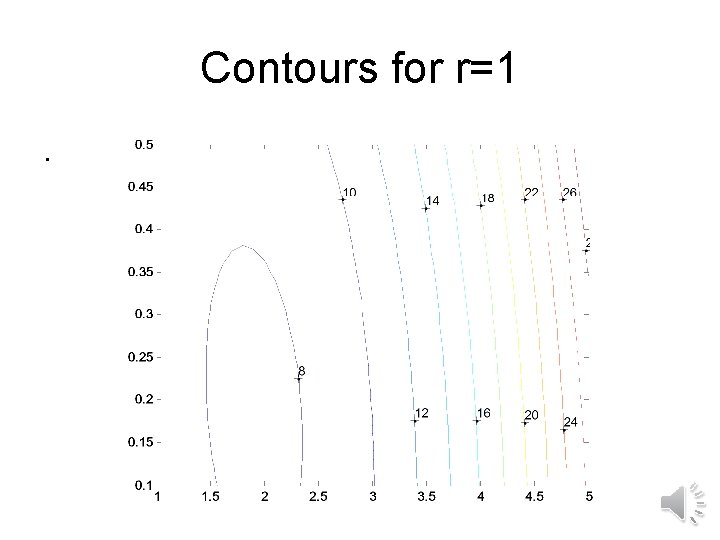

Contours for r=10.

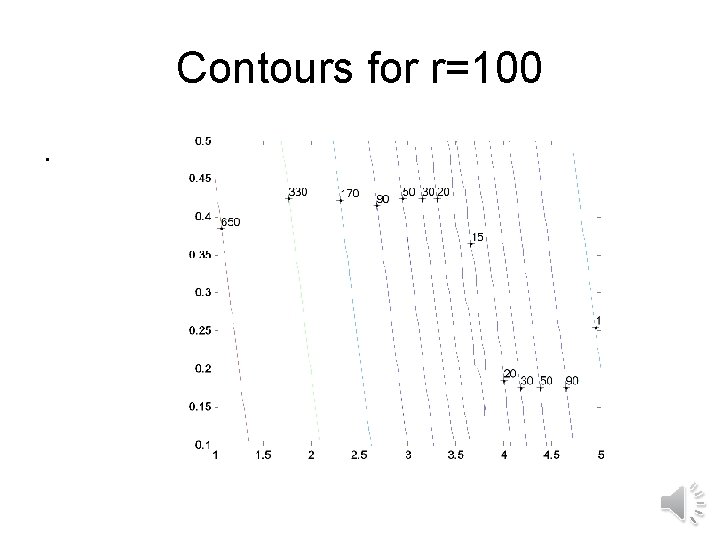

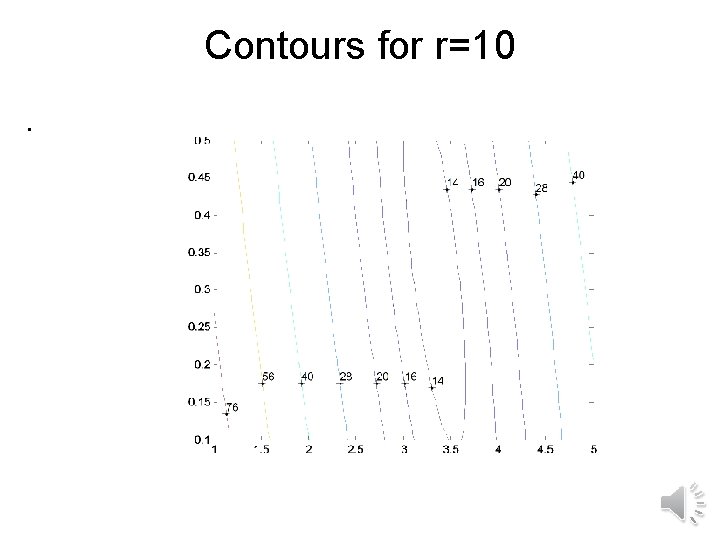

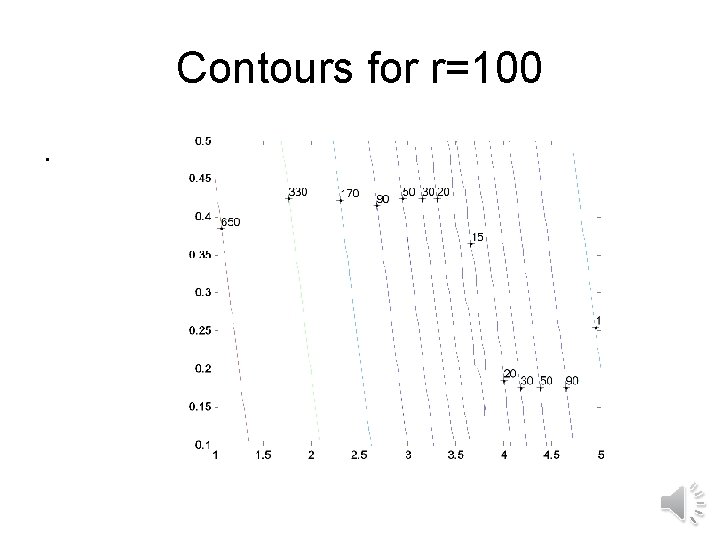

Contours for r=100.

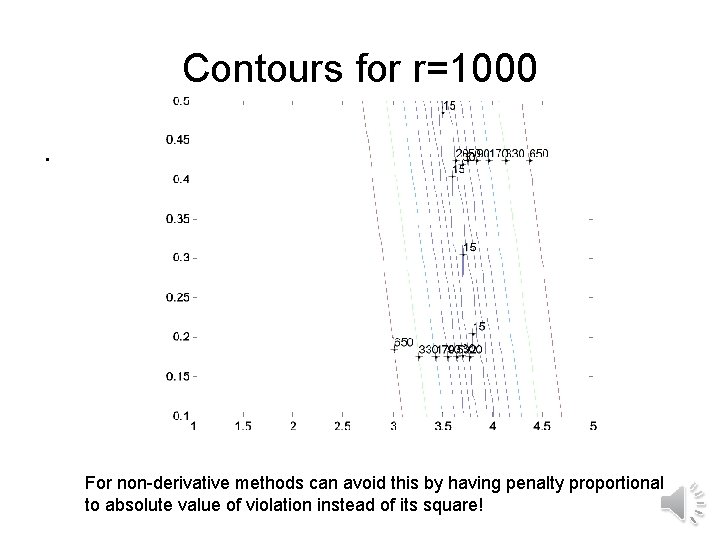

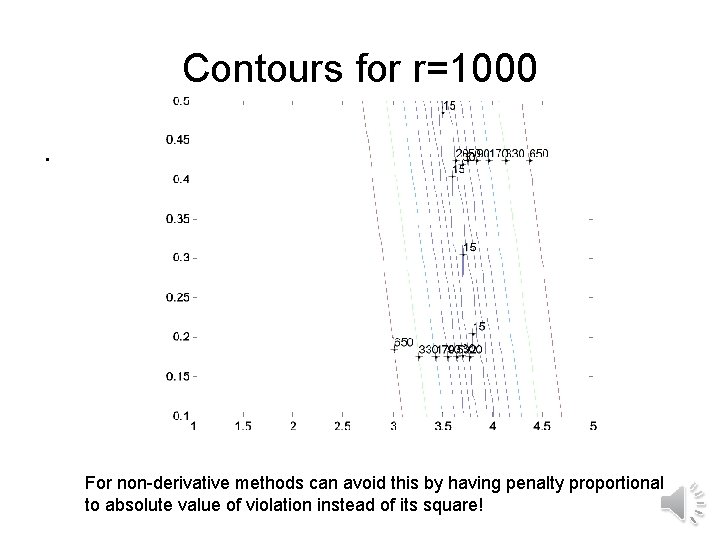

Contours for r=1000.

Non-derivative methods can avoid this by making the penalty proportional to the absolute value of the violation instead of its square!

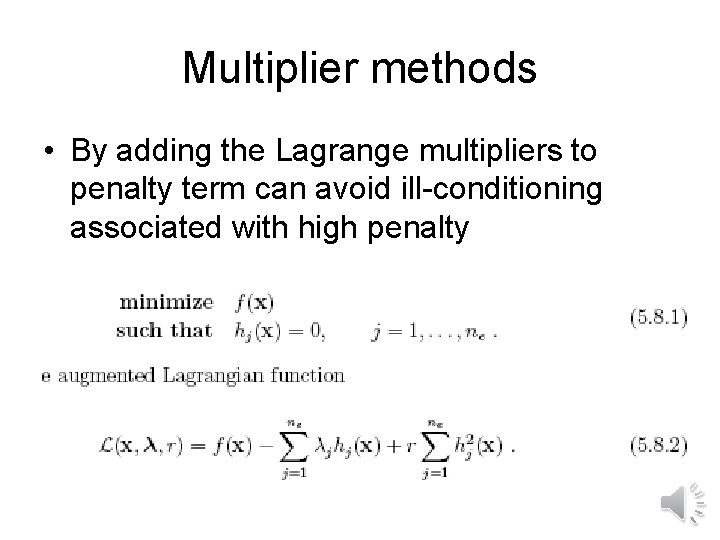

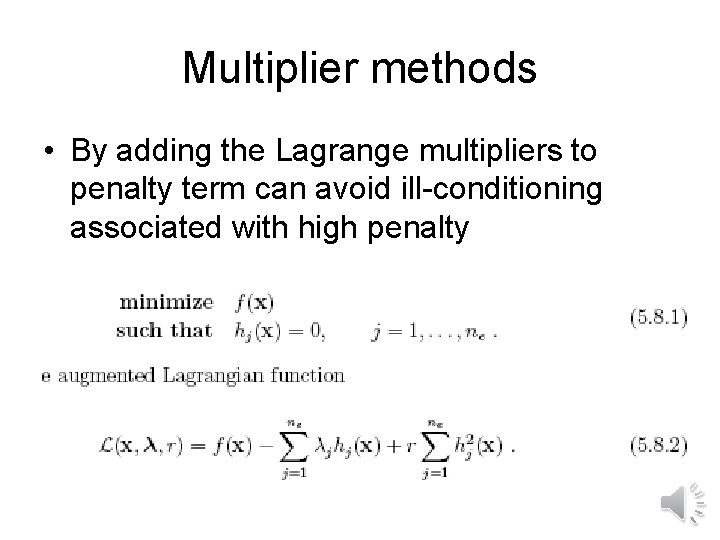

Multiplier methods

- Adding the Lagrange multipliers to the penalty term avoids the ill-conditioning associated with a high penalty parameter.
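The slide's formula is an image. One common way to write the augmented function for equality constraints $h_k(x) = 0$ is shown below; the sign of the multiplier term and the scaling of the penalty term are assumptions, and the textbook may use a different convention.

```latex
\mathcal{L}(x, \lambda, r) \;=\; f(x) \;+\; \sum_{k} \lambda_k\, h_k(x) \;+\; r \sum_{k} h_k(x)^2
```

With good multiplier estimates $\lambda_k$, the minimizer of $\mathcal{L}$ satisfies the constraints at a moderate value of $r$, so $r$ does not have to grow without bound.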

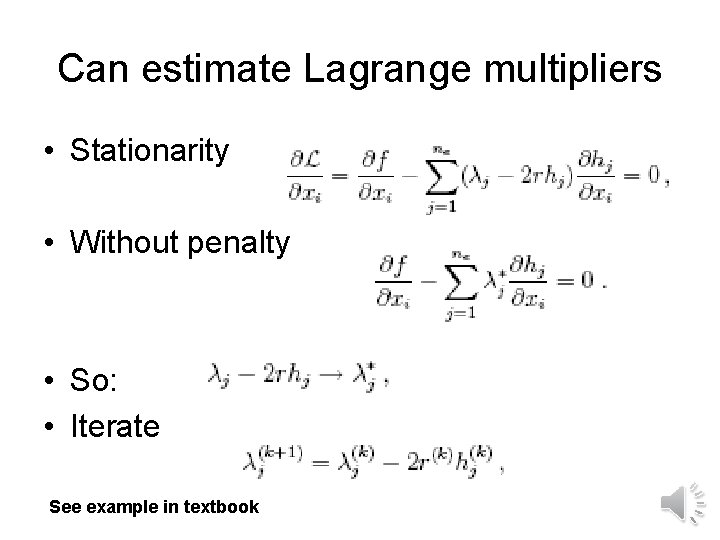

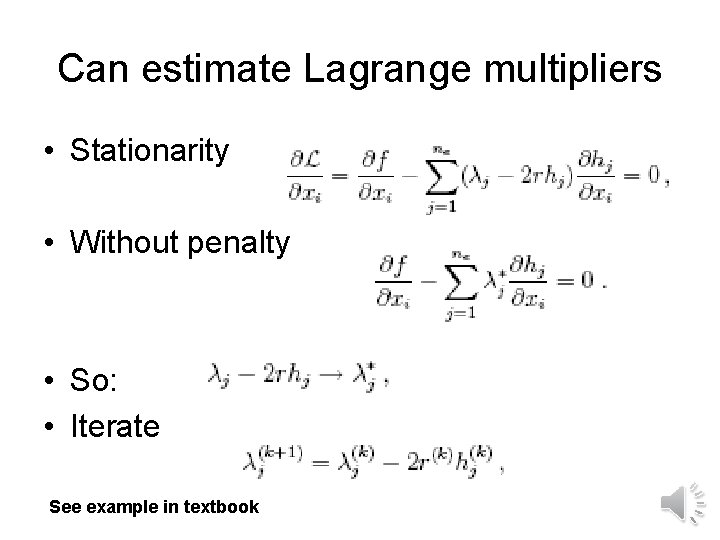

Can estimate Lagrange multipliers

- Stationarity
- Without penalty
- So:
- Iterate

See example in textbook.
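A minimal sketch of the iteration, assuming the augmented function f(x) + lambda*h(x) + r*h(x)^2 and a hypothetical test problem (minimize x1^2 + 10*x2^2 subject to x1 + x2 = 4). Comparing the stationarity condition of this function with the one without penalty suggests the update lambda <- lambda + 2*r*h(x). The sign convention is an assumption and may differ from the textbook's.

```matlab
% Sketch: multiplier (augmented Lagrangian) iteration at a fixed, moderate r.
f = @(x) x(1)^2 + 10*x(2)^2;              % hypothetical objective
h = @(x) x(1) + x(2) - 4;                 % hypothetical equality constraint

opts = optimset('TolX', 1e-10, 'TolFun', 1e-12, ...
                'MaxFunEvals', 5000, 'MaxIter', 5000);
r = 10;  lambda = 0;  x = [0; 0];         % r stays fixed; lambda starts at zero
for k = 1:10
    L = @(x) f(x) + lambda*h(x) + r*h(x)^2;   % augmented function for current lambda
    x = fminsearch(L, x, opts);               % unconstrained minimization
    lambda = lambda + 2*r*h(x);               % multiplier update from stationarity
    fprintf('k = %2d   lambda = %9.5f   h(x) = %9.2e\n', k, lambda, h(x));
end
```

For this problem the exact multiplier under the assumed convention is -2*x1 at the optimum, that is -80/11, and the iteration approaches it without raising r.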

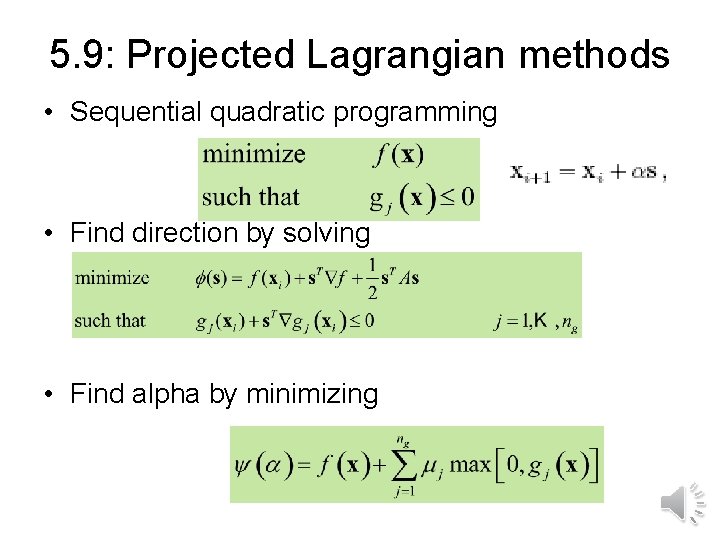

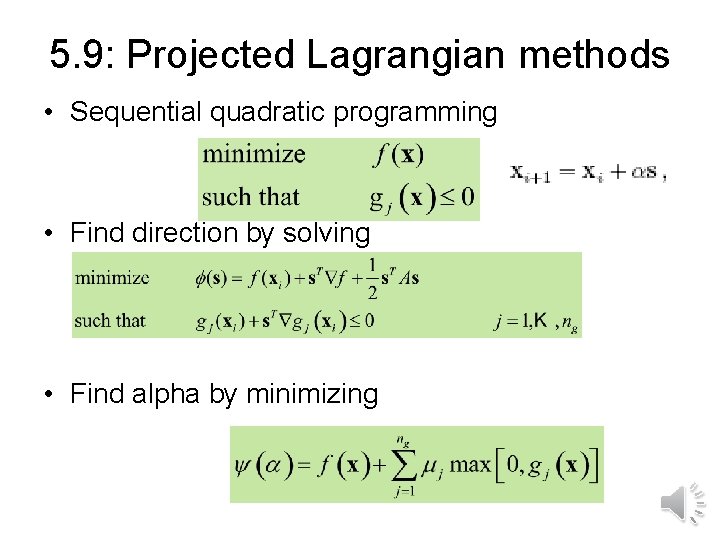

5.9: Projected Lagrangian methods

- Sequential quadratic programming
- Find direction by solving
- Find alpha by minimizing
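The two formulas on this slide are images. A commonly used form of the SQP step is given below as a hedged reminder, under the assumed convention $g_j \le 0$, $h_k = 0$. Here $B$ is an approximation to the Hessian of the Lagrangian, and $\mu_j$, $\mu_k$ are assumed penalty weights in the line-search merit function.

```latex
\begin{aligned}
\text{direction:}\quad
& \min_{d}\;\; \nabla f(x)^{T} d + \tfrac{1}{2}\, d^{T} B\, d \\
& \text{subject to}\quad g_j(x) + \nabla g_j(x)^{T} d \le 0, \qquad
  h_k(x) + \nabla h_k(x)^{T} d = 0 \\[6pt]
\text{step:}\quad
& \min_{\alpha}\;\; \psi(\alpha) = f(x + \alpha d)
  + \sum_j \mu_j \max\bigl(0,\, g_j(x + \alpha d)\bigr)
  + \sum_k \mu_k \bigl|h_k(x + \alpha d)\bigr|
\end{aligned}
```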

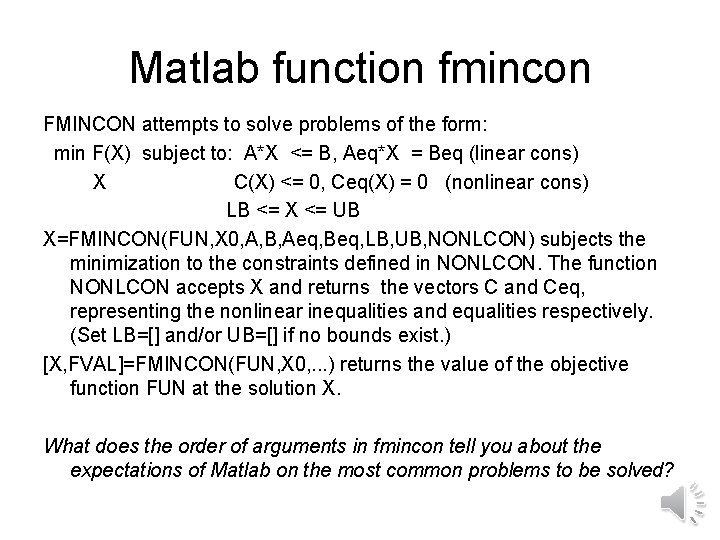

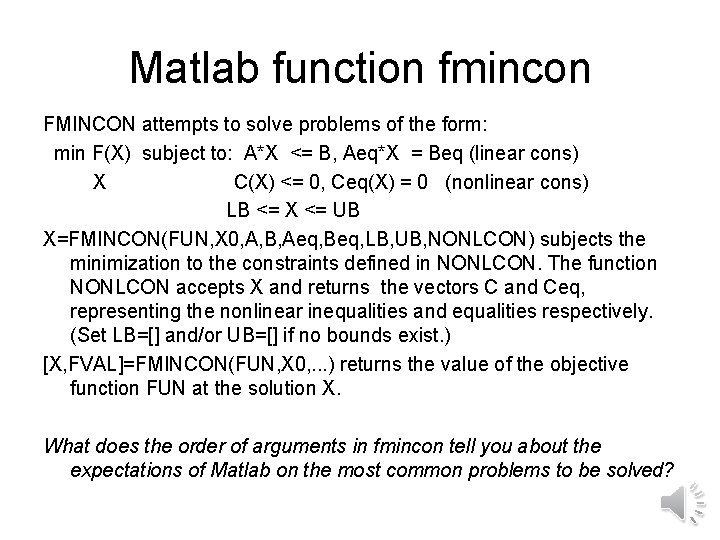

Matlab function fmincon

FMINCON attempts to solve problems of the form:

    min F(X)  subject to:  A*X <= B, Aeq*X = Beq   (linear cons)
     X                     C(X) <= 0, Ceq(X) = 0   (nonlinear cons)
                           LB <= X <= UB

X=FMINCON(FUN,X0,A,B,Aeq,Beq,LB,UB,NONLCON) subjects the minimization to the constraints defined in NONLCON. The function NONLCON accepts X and returns the vectors C and Ceq, representing the nonlinear inequalities and equalities respectively. (Set LB=[] and/or UB=[] if no bounds exist.)

[X,FVAL]=FMINCON(FUN,X0,...) returns the value of the objective function FUN at the solution X.

What does the order of arguments in fmincon tell you about Matlab's expectations of the most common problems to be solved?
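Because the linear-constraint arguments come first, a problem with only linear constraints needs no trailing placeholders. A minimal sketch, assuming the Optimization Toolbox is installed and using a hypothetical test problem (minimize x1^2 + 10*x2^2 subject to x1 + x2 = 4):

```matlab
% Sketch: fmincon with a single linear equality constraint.
f   = @(x) x(1)^2 + 10*x(2)^2;            % hypothetical objective
Aeq = [1 1];                              % Aeq*x = beq encodes x1 + x2 = 4
beq = 4;
x0  = [0; 0];                             % starting point

% A and B are empty: there are no linear inequalities.
[x, fval] = fmincon(f, x0, [], [], Aeq, beq);
fprintf('x = [%.4f %.4f], fval = %.4f\n', x(1), x(2), fval);
```

A nonlinear constraint, by contrast, requires passing empty placeholders for all six preceding arguments (A, B, Aeq, Beq, LB, UB).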

![Useful additional information: [X, FVAL, EXITFLAG] = fmincon(FUN, X0, ...)](https://slidetodoc.com/presentation_image_h/0c07a83536f50a3f63d7a76fdee4c772/image-15.jpg)

Useful additional information

[X,FVAL,EXITFLAG] = fmincon(FUN,X0,...) returns an EXITFLAG that describes the exit condition of fmincon. Possible values of EXITFLAG:

- EXITFLAG = 1: First order optimality conditions satisfied.
- EXITFLAG = 0: Too many function evaluations or iterations.
- EXITFLAG = -1: Stopped by output/plot function.
- EXITFLAG = -2: No feasible point found.

[X,FVAL,EXITFLAG,OUTPUT,LAMBDA] = fmincon(FUN,X0,...) returns Lagrange multipliers at the solution X: LAMBDA.lower for LB, LAMBDA.upper for UB, LAMBDA.ineqlin for the linear inequalities, LAMBDA.eqlin for the linear equalities, LAMBDA.ineqnonlin for the nonlinear inequalities, and LAMBDA.eqnonlin for the nonlinear equalities.
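A short sketch of how these extra outputs might be inspected, assuming the Optimization Toolbox and a hypothetical problem with one linear equality (minimize x1^2 + 10*x2^2 subject to x1 + x2 = 4). The sign of the reported multiplier follows fmincon's own convention, so only its magnitude is checked here.

```matlab
% Sketch: checking EXITFLAG and reading the multipliers.
f   = @(x) x(1)^2 + 10*x(2)^2;            % hypothetical objective
Aeq = [1 1];  beq = 4;                    % hypothetical constraint x1 + x2 = 4
x0  = [0; 0];

[x, fval, exitflag, output, lambda] = fmincon(f, x0, [], [], Aeq, beq);

if exitflag > 0
    fprintf('converged in %d iterations (%d function evaluations)\n', ...
            output.iterations, output.funcCount);
elseif exitflag == 0
    fprintf('stopped on the evaluation/iteration limit; consider a restart\n');
else
    fprintf('failed, exitflag = %d\n', exitflag);
end
fprintf('multiplier for the linear equality: %g\n', lambda.eqlin);
```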

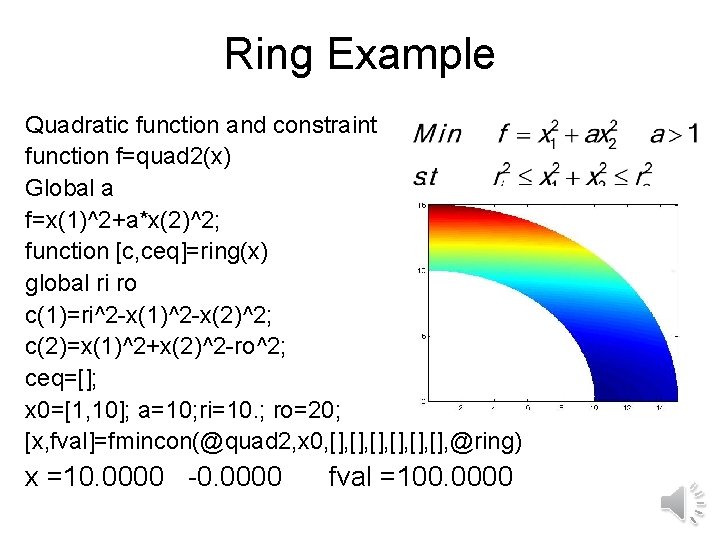

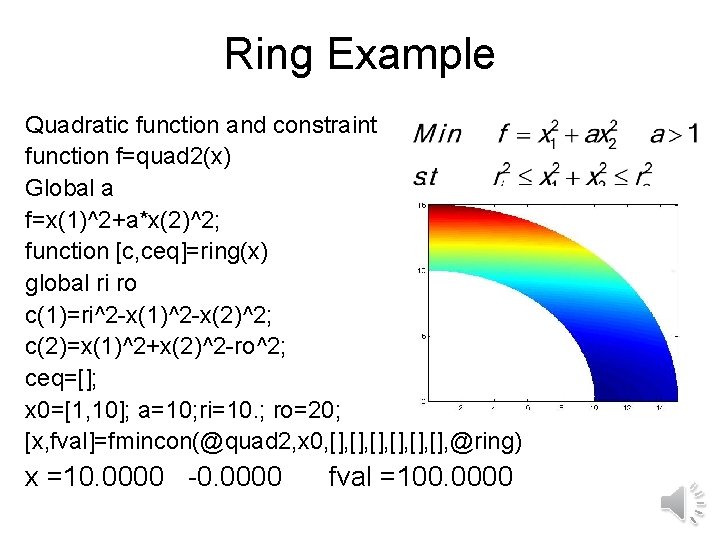

Ring Example

Quadratic function and constraint

    % quad2.m: quadratic objective, weight a is shared through a global
    function f=quad2(x)
    global a
    f=x(1)^2+a*x(2)^2;

    % ring.m: feasible region is the ring ri <= |x| <= ro
    function [c,ceq]=ring(x)
    global ri ro
    c(1)=ri^2-x(1)^2-x(2)^2;
    c(2)=x(1)^2+x(2)^2-ro^2;
    ceq=[];

    >> global a ri ro
    >> x0=[1,10]; a=10; ri=10.; ro=20;
    >> [x,fval]=fmincon(@quad2,x0,[],[],[],[],[],[],@ring)
    x = 10.0000 -0.0000
    fval = 100.0000
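The same example can be written without global variables. The sketch below is one possible variant, not the slide's code: it assumes the Optimization Toolbox and uses anonymous functions that capture a, ri and ro, with deal returning the two outputs (c and ceq) that fmincon requests from the constraint function.

```matlab
% Sketch: ring example with anonymous functions instead of globals.
a = 10;  ri = 10;  ro = 20;

quad2 = @(x) x(1)^2 + a*x(2)^2;           % objective, captures a

% Returns [c, ceq]: c <= 0 keeps x inside the ring, ceq is empty.
ring = @(x) deal([ri^2 - x(1)^2 - x(2)^2; ...
                  x(1)^2 + x(2)^2 - ro^2], []);

x0 = [1, 10];
% Six empty placeholders: A, B, Aeq, Beq, LB, UB.
[x, fval, exitflag] = fmincon(quad2, x0, [], [], [], [], [], [], ring);
fprintf('x = [%.4f %.4f], fval = %.4f, exitflag = %d\n', x(1), x(2), fval, exitflag);
```

Changing a then only requires redefining quad2, since an anonymous function captures the value of a at the time it is created.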

![Making it harder for fmincon: >> a=1.1; [x, fval]=fmincon(@quad2, x](https://slidetodoc.com/presentation_image_h/0c07a83536f50a3f63d7a76fdee4c772/image-17.jpg)

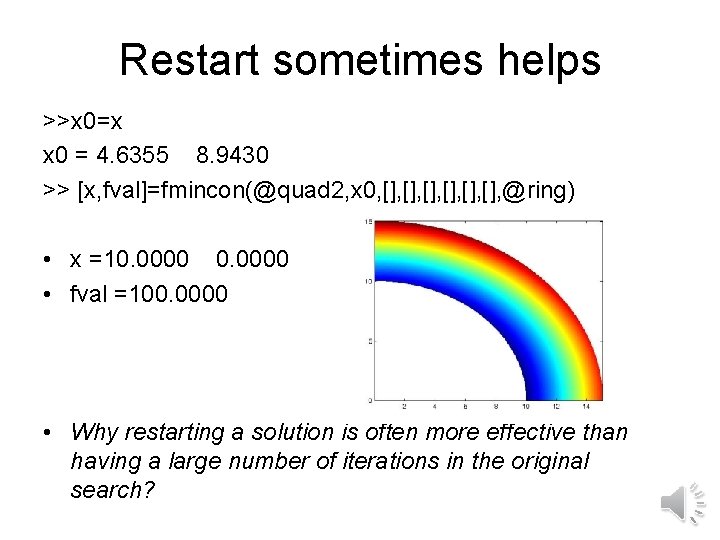

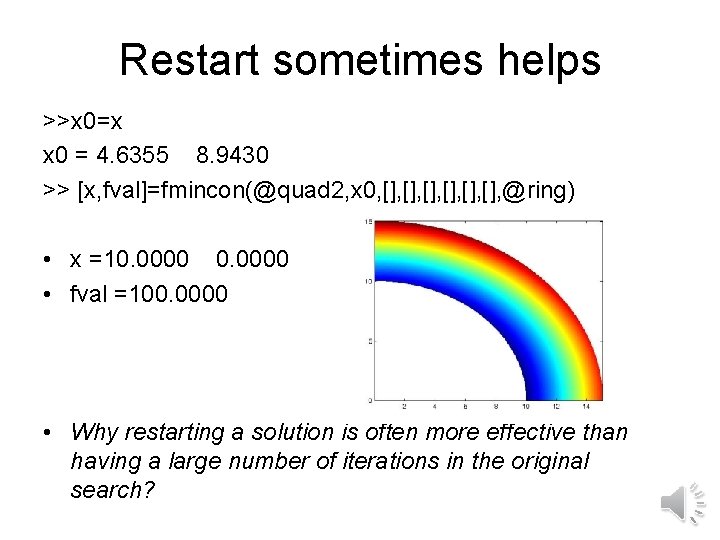

Making it harder for fmincon

    >> a=1.1;
    >> [x,fval]=fmincon(@quad2,x0,[],[],[],[],[],[],@ring)
    Warning: Trust-region-reflective method does not currently solve this type of problem,
    using active-set (line search) instead.
    > In fmincon at 437
    Maximum number of function evaluations exceeded;
    increase OPTIONS.MaxFunEvals.
    x = 4.6355 8.9430
    fval = 109.4628
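The message suggests raising the evaluation limit. A hedged sketch of how that might be done with optimset, assuming the Optimization Toolbox; the limit of 2000 is an arbitrary illustrative value, and the anonymous functions stand in for the quad2 and ring files. Newer releases also offer optimoptions for the same purpose.

```matlab
% Sketch: raising the function-evaluation limit for the harder case a = 1.1.
a = 1.1;  ri = 10;  ro = 20;
quad2 = @(x) x(1)^2 + a*x(2)^2;
ring  = @(x) deal([ri^2 - x(1)^2 - x(2)^2; ...
                   x(1)^2 + x(2)^2 - ro^2], []);

options = optimset('MaxFunEvals', 2000);  % arbitrary larger limit (assumption)
x0 = [1, 10];
[x, fval, exitflag, output] = fmincon(quad2, x0, [], [], [], [], [], [], ...
                                      ring, options);
fprintf('exitflag = %d after %d function evaluations, fval = %.4f\n', ...
        exitflag, output.funcCount, fval);
```

Whether the larger limit is enough depends on the release and the algorithm fmincon selects, so exitflag should still be checked.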

Restart sometimes helps

    >> x0=x
    x0 = 4.6355 8.9430
    >> [x,fval]=fmincon(@quad2,x0,[],[],[],[],[],[],@ring)
    x = 10.0000
    fval = 100.0000

- Why is restarting a solution often more effective than allowing a large number of iterations in the original search?
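The manual restart can be automated with a small loop. This is a sketch under assumptions: it requires the Optimization Toolbox, the cap of five attempts is arbitrary, and the anonymous functions stand in for the quad2 and ring files. Each restart begins from the last point but discards the optimizer's internal state.

```matlab
% Sketch: restart fmincon from its last point until it reports convergence.
a = 1.1;  ri = 10;  ro = 20;
quad2 = @(x) x(1)^2 + a*x(2)^2;
ring  = @(x) deal([ri^2 - x(1)^2 - x(2)^2; ...
                   x(1)^2 + x(2)^2 - ro^2], []);

x = [1, 10];                              % initial starting point
maxRestarts = 5;                          % arbitrary cap (assumption)
for attempt = 1:maxRestarts
    [x, fval, exitflag] = fmincon(quad2, x, [], [], [], [], [], [], ring);
    fprintf('attempt %d: exitflag = %d, fval = %.4f\n', attempt, exitflag, fval);
    if exitflag > 0, break; end           % converged, no further restart needed
end
```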