Optimality Conditions for Unconstrained Optimization

- Slides: 16

Optimality Conditions for Unconstrained Optimization

- One-dimensional optimization
  - Necessary and sufficient conditions
- Multidimensional optimization
  - Classification of stationary points
  - Necessary and sufficient conditions for local optima
- Convexity and global optimality

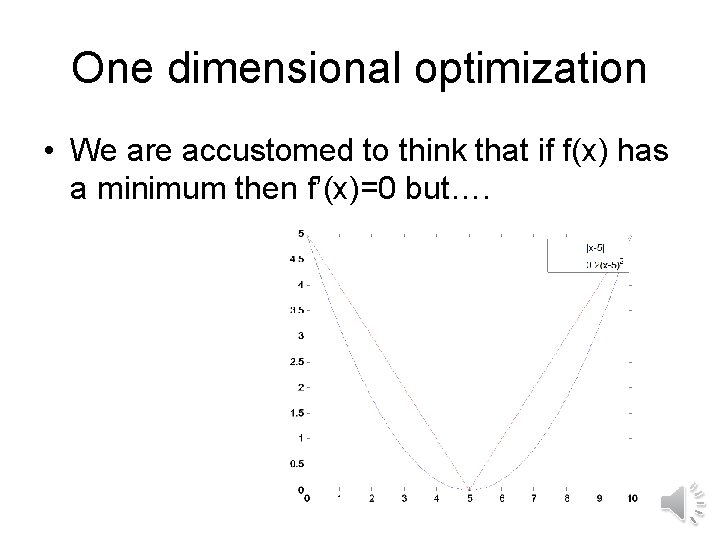

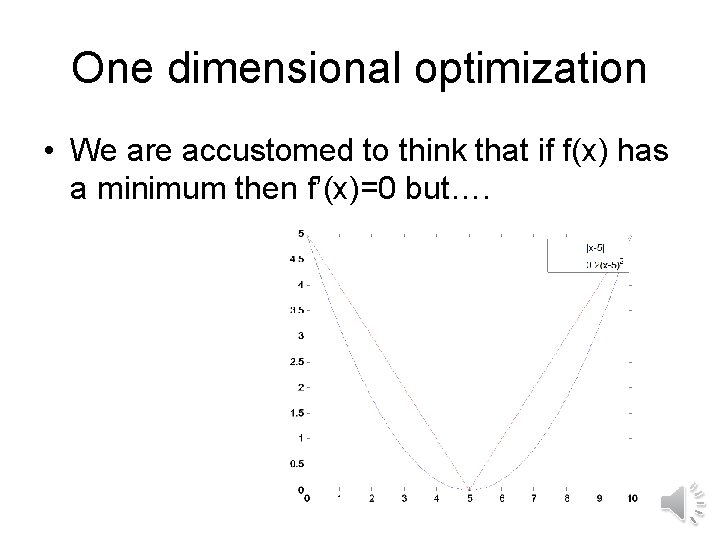

One-dimensional optimization

- We are accustomed to thinking that if f(x) has a minimum, then f'(x) = 0 there, but…

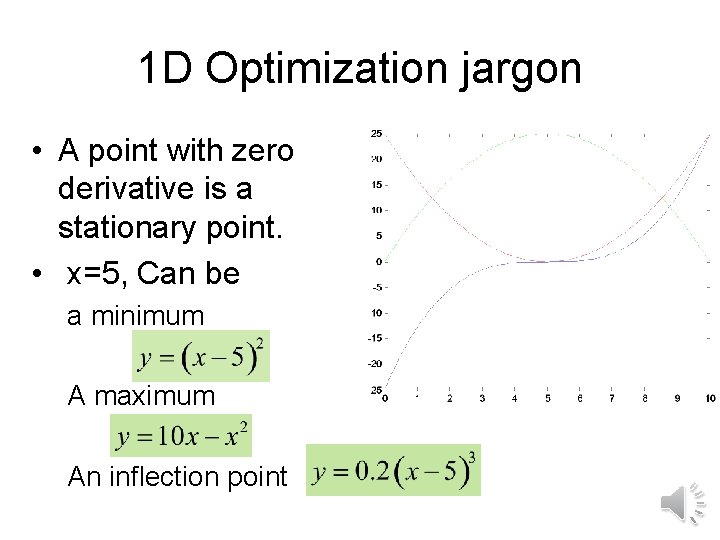

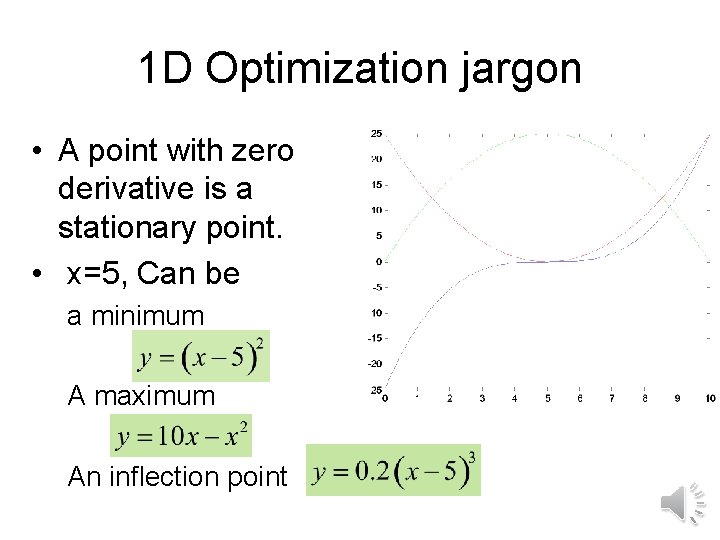

1-D optimization jargon

- A point with zero derivative is a stationary point.
- A stationary point (here x = 5) can be:
  - a minimum
  - a maximum
  - an inflection point

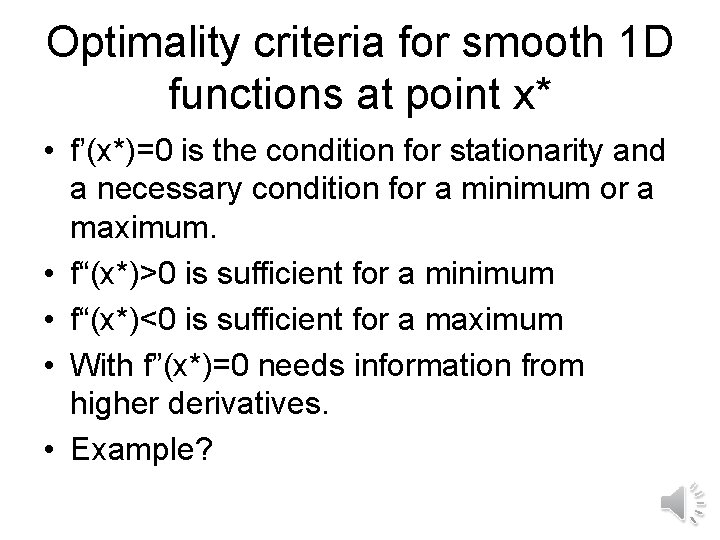

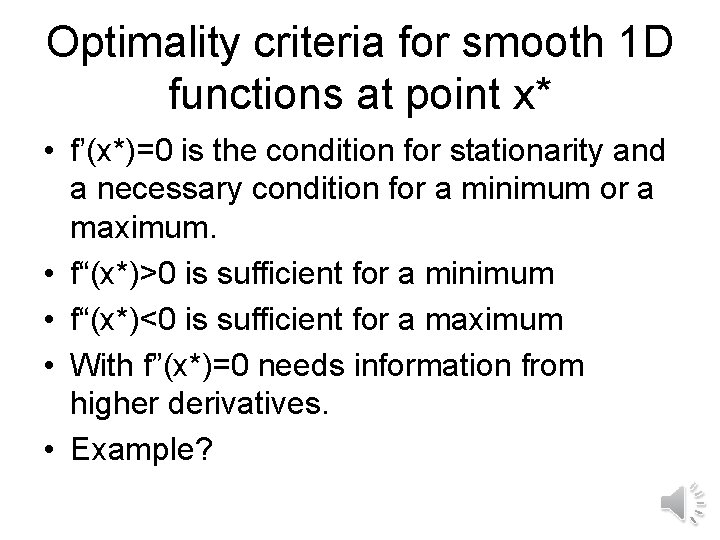

Optimality criteria for smooth 1-D functions at a point x*

- f'(x*) = 0 is the condition for stationarity and a necessary condition for a minimum or a maximum.
- At a stationary point, f''(x*) > 0 is sufficient for a minimum.
- At a stationary point, f''(x*) < 0 is sufficient for a maximum.
- If f''(x*) = 0, the classification needs information from higher derivatives.
- Example?
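One way to explore the case f''(x*) = 0 is the higher-derivative test: at a stationary point, find the first derivative of order 2 or higher that is nonzero; an even order gives a minimum (positive value) or maximum (negative value), and an odd order gives an inflection point. The sketch below applies this to polynomials only. The helper name `classify_stationary_point` and the tolerance are assumptions for illustration, not part of the slides.

```python
from numpy.polynomial import Polynomial


def classify_stationary_point(p, x_star, tol=1e-9):
    """Classify x_star for polynomial p via the first nonzero derivative of order >= 2."""
    if abs(p.deriv(1)(x_star)) > tol:
        return "not stationary"
    for order in range(2, p.degree() + 1):
        value = p.deriv(order)(x_star)
        if abs(value) > tol:
            if order % 2 == 1:
                return "inflection point"  # first nonzero derivative has odd order
            return "minimum" if value > 0 else "maximum"
    return "undetermined"  # all higher derivatives vanish (constant polynomial)


# Coefficients are listed from the constant term upward.
x4 = Polynomial([0, 0, 0, 0, 1])  # x^4: f''(0) = 0, yet x = 0 is a minimum
x3 = Polynomial([0, 0, 0, 1])     # x^3: f''(0) = 0, and x = 0 is an inflection point
cubic = Polynomial([0, 0, 3, 2])  # 2x^3 + 3x^2: stationary at x = -1 and x = 0

print(classify_stationary_point(x4, 0.0))      # minimum
print(classify_stationary_point(x3, 0.0))      # inflection point
print(classify_stationary_point(cubic, -1.0))  # maximum
print(classify_stationary_point(cubic, 0.0))   # minimum
```

The functions x^4 and x^3 both have a zero second derivative at the origin, so they illustrate why the second-derivative test alone is inconclusive there.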

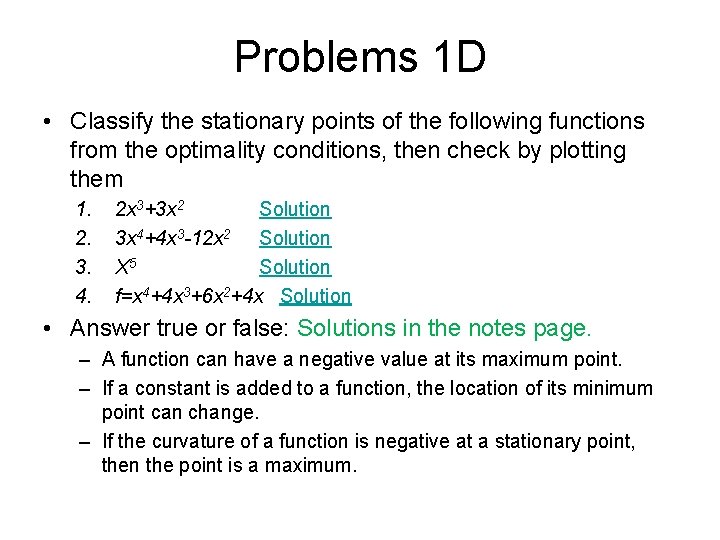

Problems 1-D

- Classify the stationary points of the following functions from the optimality conditions, then check by plotting them:
  1. $2x^3 + 3x^2$
  2. $3x^4 + 4x^3 - 12x^2$
  3. $x^5$
  4. $f = x^4 + 4x^3 + 6x^2 + 4x$
- Answer true or false (solutions in the notes page):
  - A function can have a negative value at its maximum point.
  - If a constant is added to a function, the location of its minimum point can change.
  - If the curvature of a function is negative at a stationary point, then the point is a maximum.

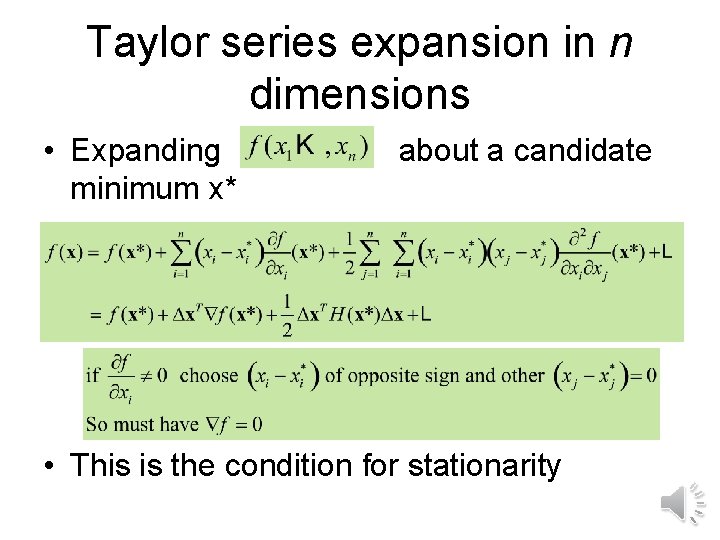

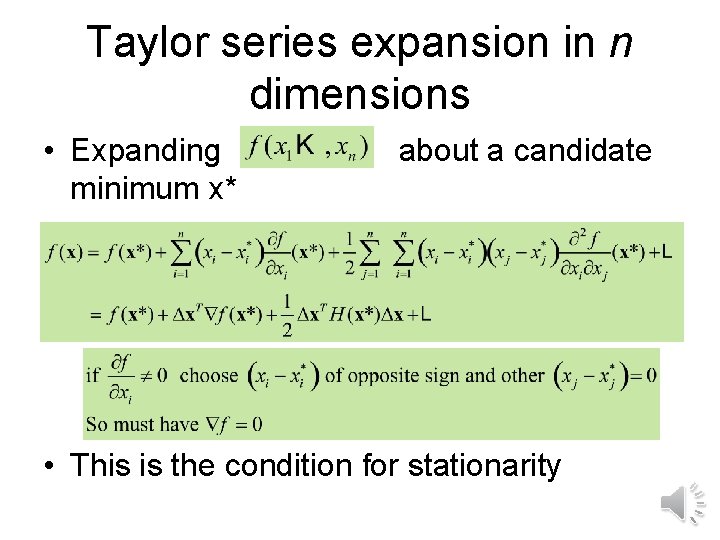

Taylor series expansion in n dimensions

- Expanding f about a candidate minimum x*
- The condition for stationarity is that the gradient of f vanishes at x*.
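The slide's equations did not survive as text. As a sketch, the standard second-order Taylor expansion about x* is written below; the symbols $\nabla f$ for the gradient and $H$ for the Hessian are notation assumed here.

$$
f(x) \approx f(x^*) + \nabla f(x^*)^T (x - x^*) + \tfrac{1}{2} (x - x^*)^T H(x^*) (x - x^*)
$$

For x* to be a minimum, the first-order term cannot be allowed to decrease f for any small step, which requires

$$
\nabla f(x^*) = 0 .
$$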

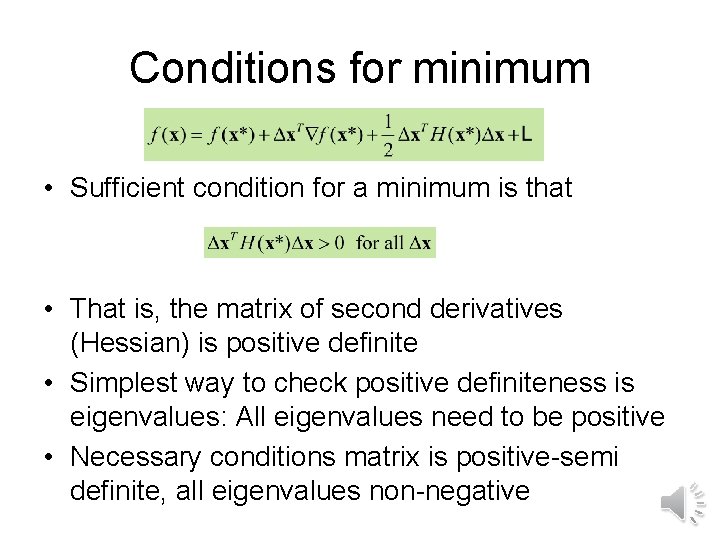

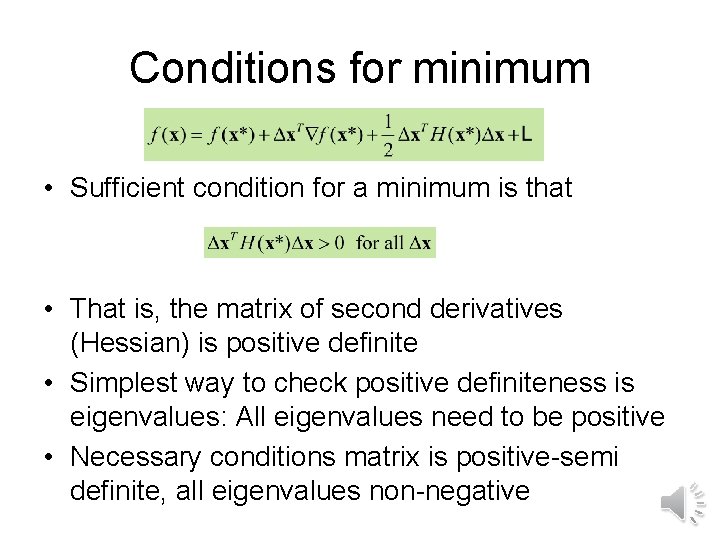

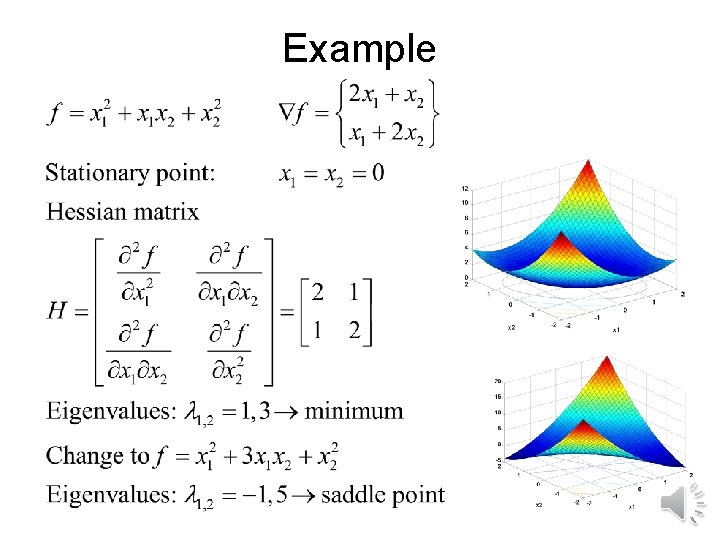

Conditions for a minimum

- A sufficient condition for a minimum at a stationary point is that the second-order term of the expansion is positive for every nonzero step.
- That is, the matrix of second derivatives (the Hessian) is positive definite.
- The simplest way to check positive definiteness is through the eigenvalues: all eigenvalues must be positive.
- The necessary condition is that the Hessian is positive semi-definite, i.e., all eigenvalues are non-negative.

Types of stationary points

- Positive definite: minimum
- Positive semi-definite: possibly minimum
- Indefinite: saddle point
- Negative semi-definite: possibly maximum
- Negative definite: maximum
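The classification above can be sketched with an eigenvalue check on a symmetric Hessian. The function name `classify_hessian`, the tolerance, and the example matrices are assumptions chosen for illustration.

```python
import numpy as np


def classify_hessian(H, tol=1e-10):
    """Classify a stationary point from the eigenvalues of its symmetric Hessian H."""
    eigvals = np.linalg.eigvalsh(np.asarray(H, dtype=float))
    if np.all(eigvals > tol):
        return "minimum"            # positive definite
    if np.all(eigvals < -tol):
        return "maximum"            # negative definite
    if np.any(eigvals > tol) and np.any(eigvals < -tol):
        return "saddle point"       # indefinite
    if np.all(eigvals >= -tol):
        return "possibly minimum"   # positive semi-definite (includes the zero matrix)
    return "possibly maximum"       # negative semi-definite


print(classify_hessian([[2, 0], [0, 2]]))   # Hessian of x^2 + y^2: minimum
print(classify_hessian([[2, 0], [0, -2]]))  # Hessian of x^2 - y^2: saddle point
print(classify_hessian([[2, 0], [0, 0]]))   # Hessian of x^2 + y^4 at the origin: possibly minimum
```

In the semi-definite cases the Hessian alone does not decide the question, which is why the labels say "possibly".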

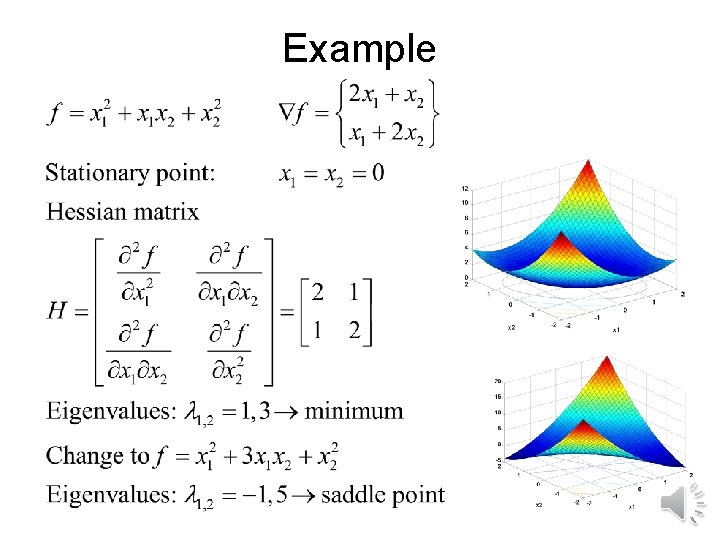

Example

Problems n-dimensional

- Find the stationary points of the following functions and classify them:

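Global optimization

- The function x + sin(2x) has many local minima, so a point that satisfies the local optimality conditions need not be the global minimum.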
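A small numerical sketch makes the multiple local minima of x + sin(2x) visible. The interval [-5, 5] and the grid resolution are assumptions chosen for illustration; the slide does not state a range.

```python
import numpy as np


def f(x):
    return x + np.sin(2 * x)


# Sample the function on a fine grid over an assumed interval.
x = np.linspace(-5.0, 5.0, 20001)
y = f(x)

# An interior grid point is a local minimum if it lies below both neighbors.
is_local_min = (y[1:-1] < y[:-2]) & (y[1:-1] < y[2:])
local_minima = x[1:-1][is_local_min]

for xm in local_minima:
    print(f"local minimum near x = {xm:.3f}, f = {f(xm):.3f}")
```

Analytically, f'(x) = 1 + 2cos(2x) and f''(x) = -4 sin(2x), so the local minima sit at x = 2π/3 + kπ. Each one satisfies the sufficient conditions for a local minimum, yet their function values differ.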

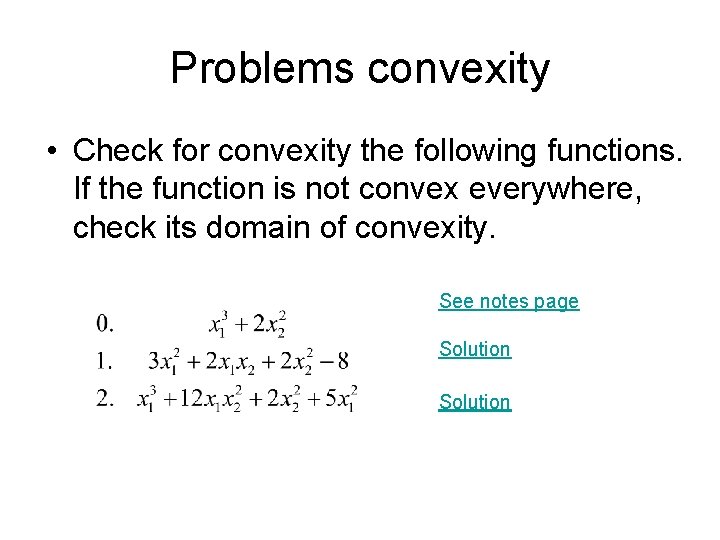

Convex function

- A straight line connecting two points on the graph will not dip below the function graph.
- Any local minimum of a convex function is also a global minimum.
- Sufficient condition: positive semi-definite Hessian everywhere.
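The chord definition can be turned into a quick numerical screen. The sketch below samples random chords of a 1-D function and reports whether any chord dips below the graph. The name `violates_convexity`, the sample count, and the test intervals are assumptions; a sampling test of this kind can disprove convexity but cannot prove it.

```python
import numpy as np


def violates_convexity(f, lo, hi, n_samples=2000, seed=0):
    """Return True if some sampled chord on [lo, hi] lies below the graph of f."""
    rng = np.random.default_rng(seed)
    a = rng.uniform(lo, hi, n_samples)
    b = rng.uniform(lo, hi, n_samples)
    t = rng.uniform(0.0, 1.0, n_samples)
    chord = t * f(a) + (1 - t) * f(b)   # value on the straight line
    curve = f(t * a + (1 - t) * b)      # value of the function at the same point
    return bool(np.any(curve > chord + 1e-9))


print(violates_convexity(lambda x: x**2, -3.0, 3.0))               # False
print(violates_convexity(lambda x: x + np.sin(2 * x), -5.0, 5.0))  # True
```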

Problems convexity

- Check the following functions for convexity. If a function is not convex everywhere, determine the domain where it is convex. See the notes page.

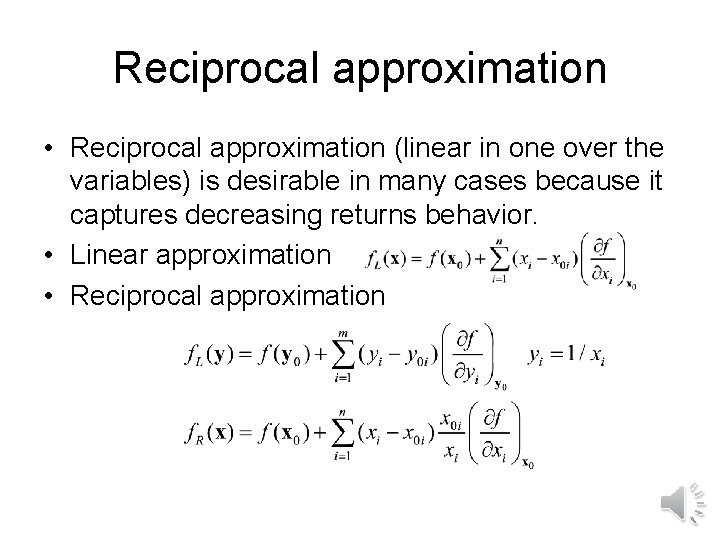

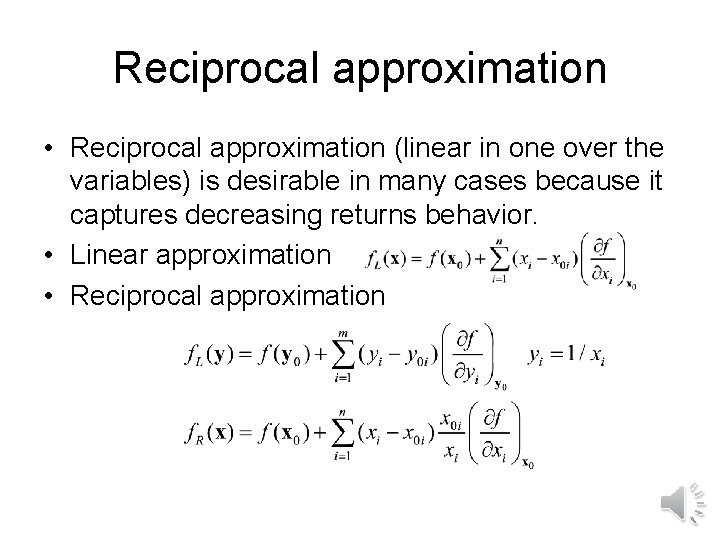

Reciprocal approximation

- The reciprocal approximation (linear in one over the variables) is desirable in many cases because it captures decreasing-returns behavior.
- Linear approximation
- Reciprocal approximation
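The two formulas on this slide were images. As a sketch, the forms commonly used in the structural optimization literature are given below, with the expansion point written as $x_0$ and the subscripts $L$ and $R$ assumed as labels.

$$
f_L(x) = f(x_0) + \sum_{i=1}^{n} \left.\frac{\partial f}{\partial x_i}\right|_{x_0} (x_i - x_{0i})
$$

$$
f_R(x) = f(x_0) + \sum_{i=1}^{n} \left.\frac{\partial f}{\partial x_i}\right|_{x_0} (x_i - x_{0i}) \frac{x_{0i}}{x_i}
$$

The second expression is the first-order Taylor expansion of f in the reciprocal variables $y_i = 1/x_i$, rewritten in terms of $x_i$.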

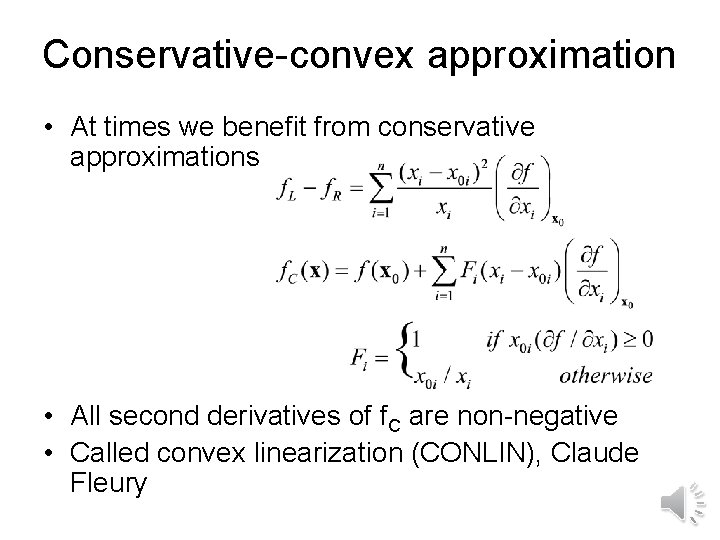

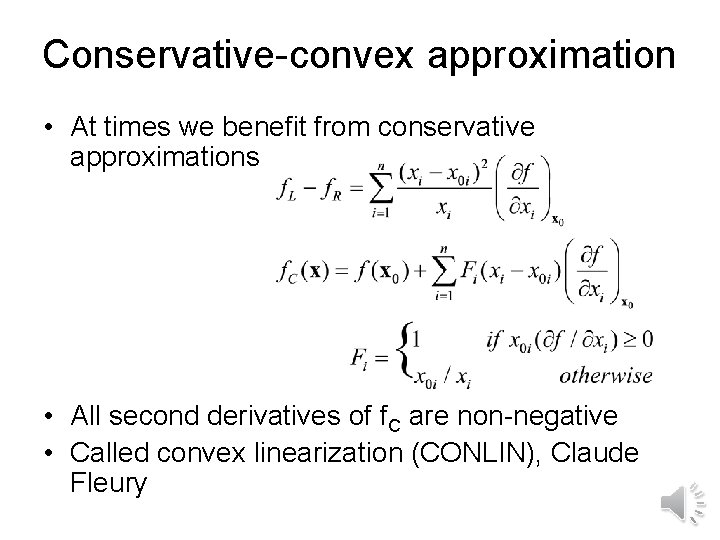

Conservative-convex approximation

- At times we benefit from conservative approximations.
- All second derivatives of f_C are non-negative.
- Called convex linearization (CONLIN), due to Claude Fleury.
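A minimal sketch of the three approximations follows. It assumes positive variables and assumes that the conservative approximation picks, term by term, the linear form where the derivative is non-negative and the reciprocal form where it is negative, which is the choice that keeps every second derivative non-negative. The function name `approximations` and the example function x1^2 + 1/x2 are hypothetical and not taken from the slides.

```python
import numpy as np


def approximations(f0, grad, x0, x):
    """Linear, reciprocal, and convex (CONLIN-style) approximations about x0.

    f0 and grad are the function value and gradient at x0; variables are assumed positive.
    """
    grad, x0, x = (np.asarray(v, dtype=float) for v in (grad, x0, x))
    linear_terms = grad * (x - x0)
    reciprocal_terms = linear_terms * x0 / x
    # Reciprocal term for negative derivatives, linear term otherwise.
    convex_terms = np.where(grad < 0, reciprocal_terms, linear_terms)
    return (f0 + linear_terms.sum(),
            f0 + reciprocal_terms.sum(),
            f0 + convex_terms.sum())


# Hypothetical example: f(x1, x2) = x1^2 + 1/x2 expanded about (1, 1).
x0 = np.array([1.0, 1.0])
f0 = x0[0] ** 2 + 1.0 / x0[1]             # 2.0
grad = np.array([2 * x0[0], -1.0 / x0[1] ** 2])  # (2, -1)

f_lin, f_rec, f_con = approximations(f0, grad, x0, [2.0, 2.0])
print(f_lin, f_rec, f_con)  # 3.0 2.5 3.5
```

At the sample point the convex approximation is the largest of the three, which is the sense in which it is conservative under these assumptions.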

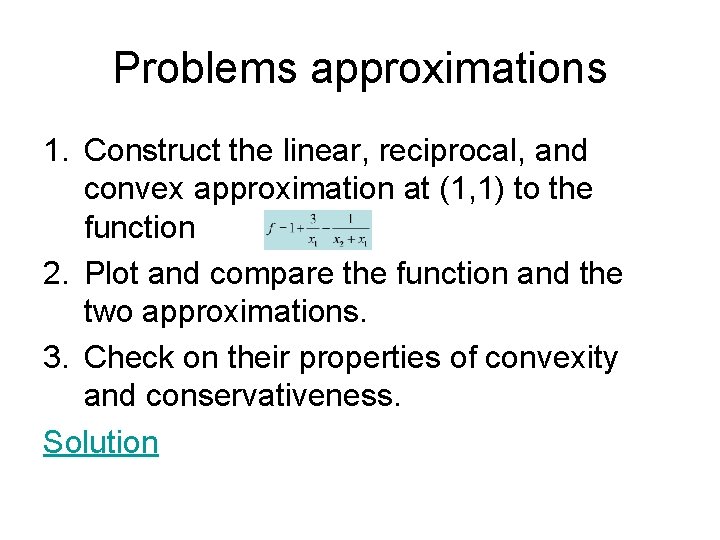

Problems approximations

1. Construct the linear, reciprocal, and convex approximations at (1, 1) to the function
2. Plot and compare the function and the approximations.
3. Check their properties of convexity and conservativeness.