Operating Systems Memory Systems Address Translation Computer Science

![Careful Page Mapping [Kessler 92, Bershad 94] • Select a page frame such that Careful Page Mapping [Kessler 92, Bershad 94] • Select a page frame such that](https://slidetodoc.com/presentation_image/354014329d9870984f3a31241f0461c1/image-43.jpg)

![Dynamic Page Coloring • Cache Miss Lookaside (CML) buffer [Bershad 94] – proposed hardware Dynamic Page Coloring • Cache Miss Lookaside (CML) buffer [Bershad 94] – proposed hardware](https://slidetodoc.com/presentation_image/354014329d9870984f3a31241f0461c1/image-52.jpg)

- Slides: 52

Operating Systems & Memory Systems: Address Translation Computer Science 220 ECE 252 Professor Alvin R. Lebeck Fall 2006

Outline • Finish Main Memory • Address Translation – basics – 64 -bit Address Space • Managing memory • OS Performance Throughout • Review Computer Architecture • Interaction with Architectural Decisions © Alvin R. Lebeck 2001 CPS 220 2

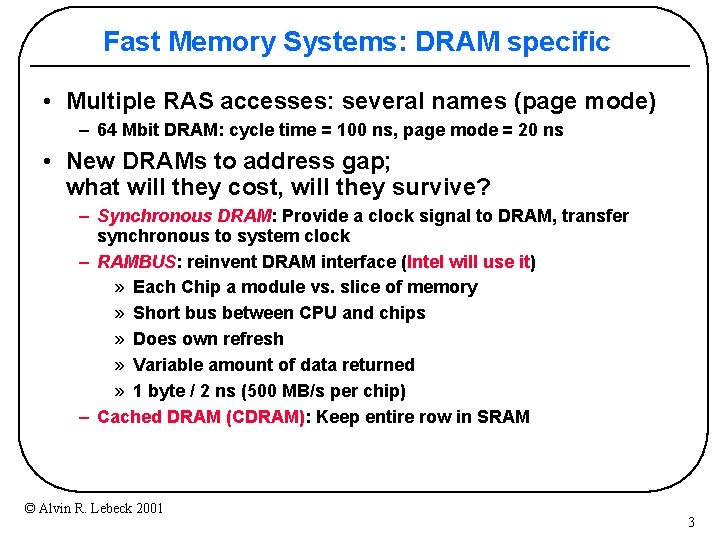

Fast Memory Systems: DRAM specific • Multiple RAS accesses: several names (page mode) – 64 Mbit DRAM: cycle time = 100 ns, page mode = 20 ns • New DRAMs to address gap; what will they cost, will they survive? – Synchronous DRAM: Provide a clock signal to DRAM, transfer synchronous to system clock – RAMBUS: reinvent DRAM interface (Intel will use it) » Each Chip a module vs. slice of memory » Short bus between CPU and chips » Does own refresh » Variable amount of data returned » 1 byte / 2 ns (500 MB/s per chip) – Cached DRAM (CDRAM): Keep entire row in SRAM © Alvin R. Lebeck 2001 3

Main Memory Summary • Big DRAM + Small SRAM = Cost Effective – Cray C-90 uses all SRAM (how many sold? ) • Wider Memory • Interleaved Memory: for sequential or independent accesses • Avoiding bank conflicts: SW & HW • DRAM specific optimizations: page mode & Specialty DRAM, CDRAM – Niche memory or main memory? » e. g. , Video RAM for frame buffers, DRAM + fast serial output • IRAM: Do you know what it is? © Alvin R. Lebeck 2001 4

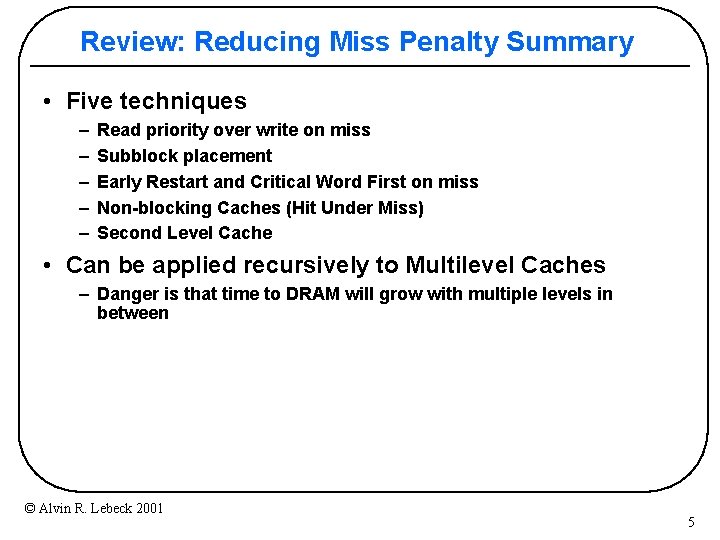

Review: Reducing Miss Penalty Summary • Five techniques – – – Read priority over write on miss Subblock placement Early Restart and Critical Word First on miss Non-blocking Caches (Hit Under Miss) Second Level Cache • Can be applied recursively to Multilevel Caches – Danger is that time to DRAM will grow with multiple levels in between © Alvin R. Lebeck 2001 5

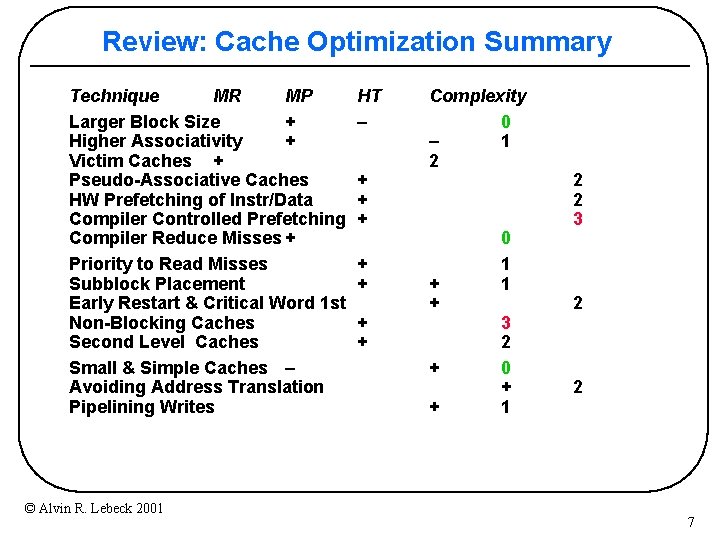

Review: Improving Cache Performance 1. Reduce the miss rate, 2. Reduce the miss penalty, or 3. Reduce the time to hit in the cache © Alvin R. Lebeck 2001 6

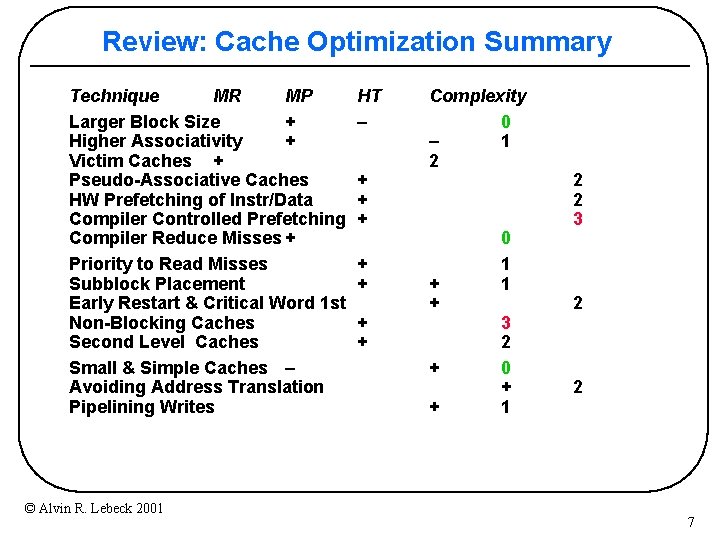

Review: Cache Optimization Summary Technique MR MP Larger Block Size + Higher Associativity + Victim Caches + Pseudo-Associative Caches HW Prefetching of Instr/Data Compiler Controlled Prefetching Compiler Reduce Misses + Priority to Read Misses Subblock Placement Early Restart & Critical Word 1 st Non-Blocking Caches Second Level Caches Small & Simple Caches – Avoiding Address Translation Pipelining Writes © Alvin R. Lebeck 2001 HT – + + + + Complexity 0 – 1 2 + + 0 1 1 3 2 0 + 1 2 2 3 2 2 7

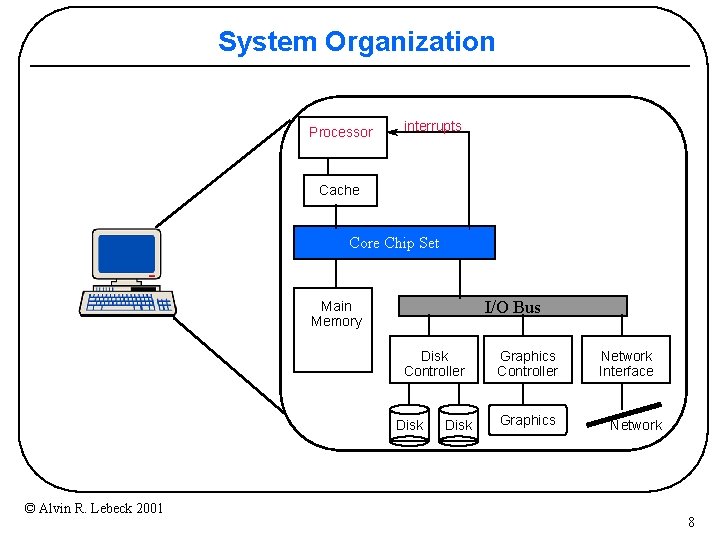

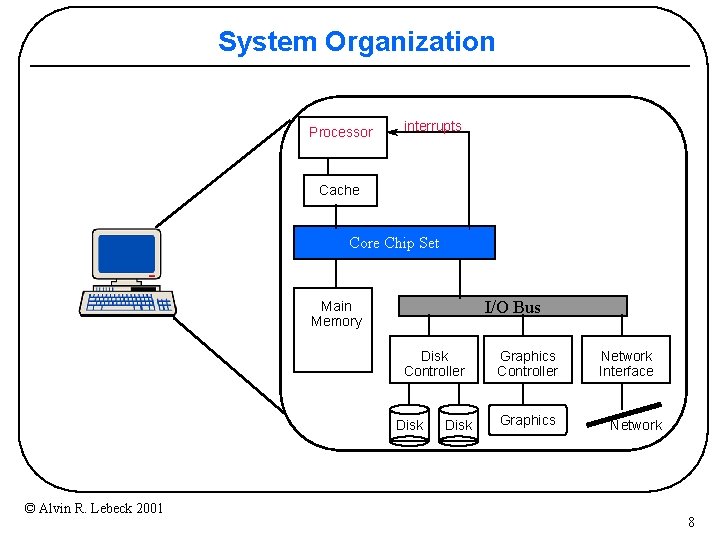

System Organization Processor interrupts Cache Core Chip Set I/O Bus Main Memory Disk Controller Disk © Alvin R. Lebeck 2001 Disk Graphics Controller Graphics Network Interface Network 8

Computer Architecture • Interface Between Hardware and Software Applications Operating System CPU Software Compiler Memory Multiprocessor © Alvin R. Lebeck 2001 This is IT I/O Networks Hardware 9

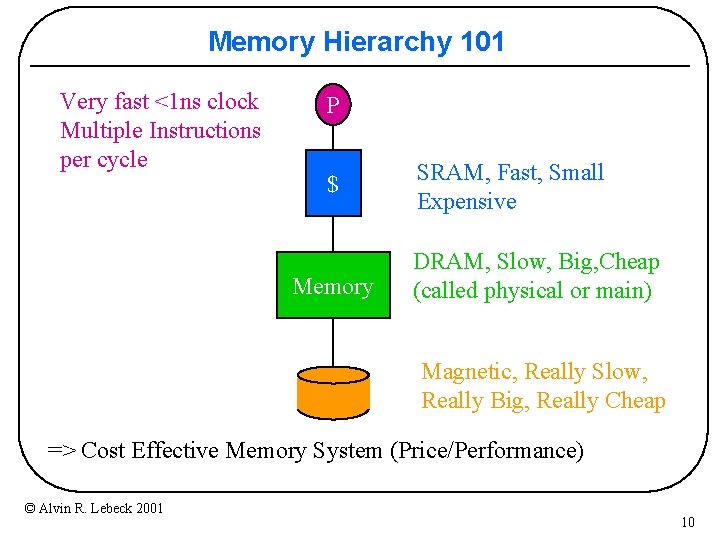

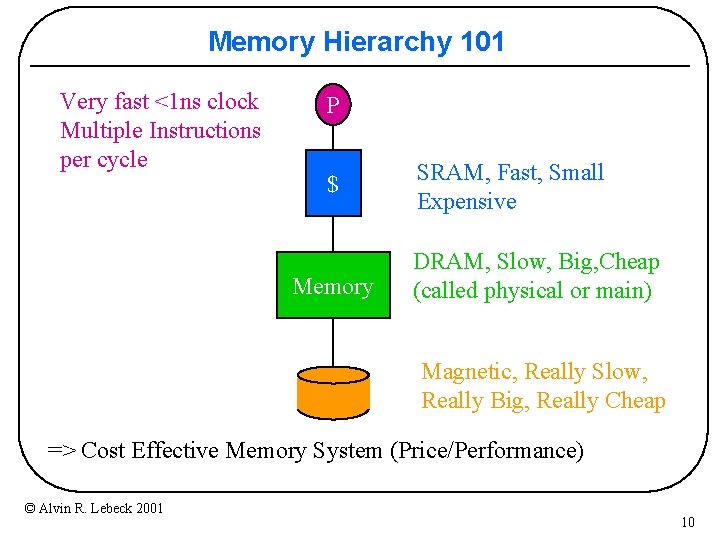

Memory Hierarchy 101 Very fast <1 ns clock Multiple Instructions per cycle P $ Memory SRAM, Fast, Small Expensive DRAM, Slow, Big, Cheap (called physical or main) Magnetic, Really Slow, Really Big, Really Cheap => Cost Effective Memory System (Price/Performance) © Alvin R. Lebeck 2001 10

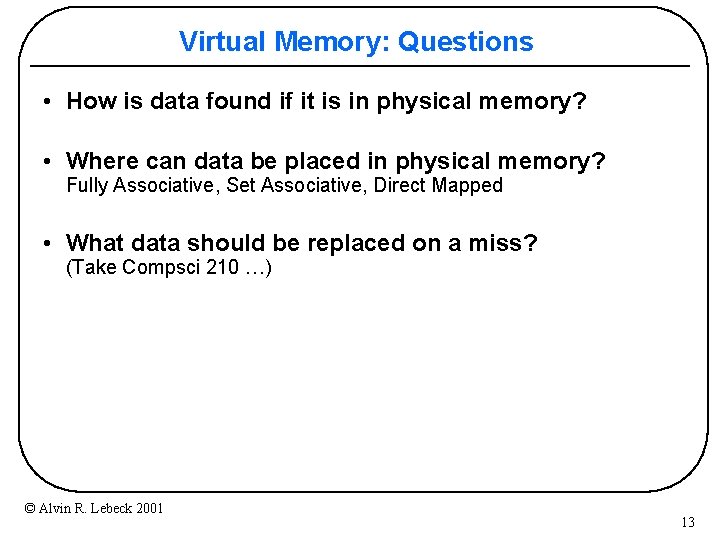

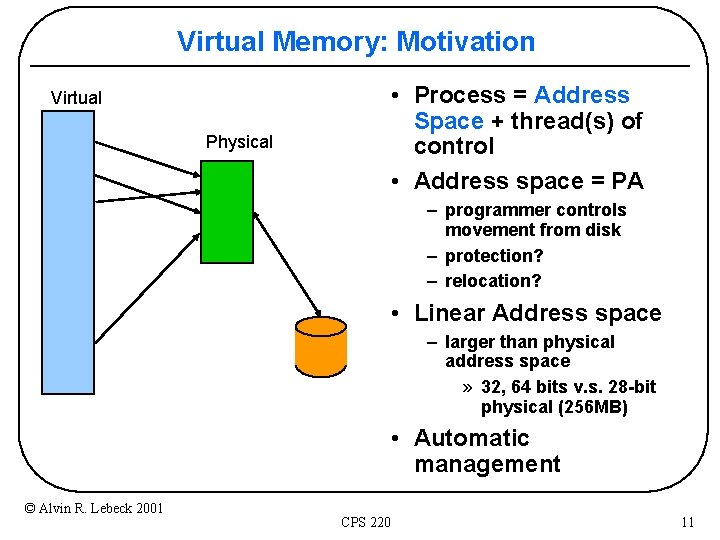

Virtual Memory: Motivation • Process = Address Space + thread(s) of control • Address space = PA Virtual Physical – programmer controls movement from disk – protection? – relocation? • Linear Address space – larger than physical address space » 32, 64 bits v. s. 28 -bit physical (256 MB) • Automatic management © Alvin R. Lebeck 2001 CPS 220 11

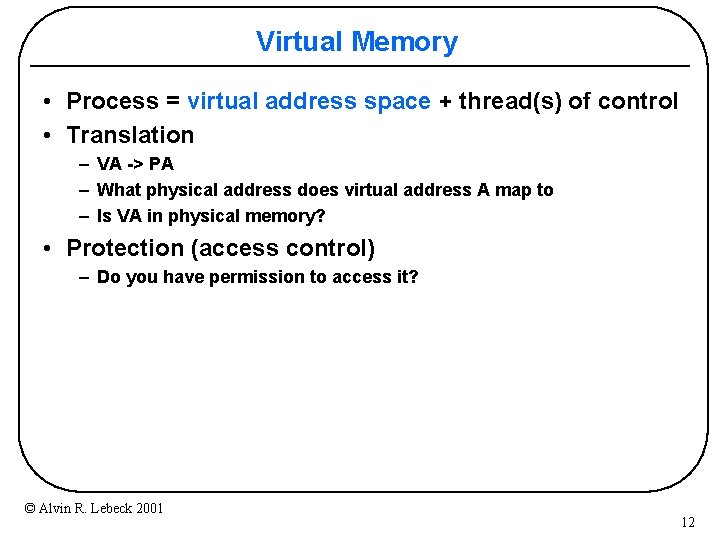

Virtual Memory • Process = virtual address space + thread(s) of control • Translation – VA -> PA – What physical address does virtual address A map to – Is VA in physical memory? • Protection (access control) – Do you have permission to access it? © Alvin R. Lebeck 2001 12

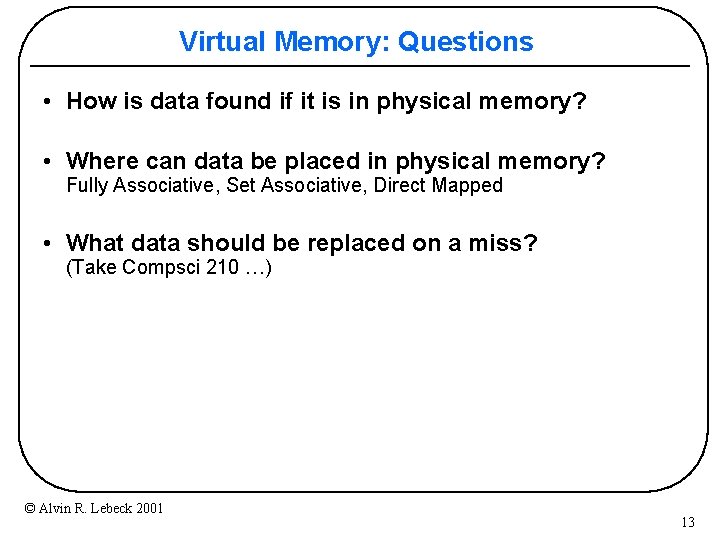

Virtual Memory: Questions • How is data found if it is in physical memory? • Where can data be placed in physical memory? Fully Associative, Set Associative, Direct Mapped • What data should be replaced on a miss? (Take Compsci 210 …) © Alvin R. Lebeck 2001 13

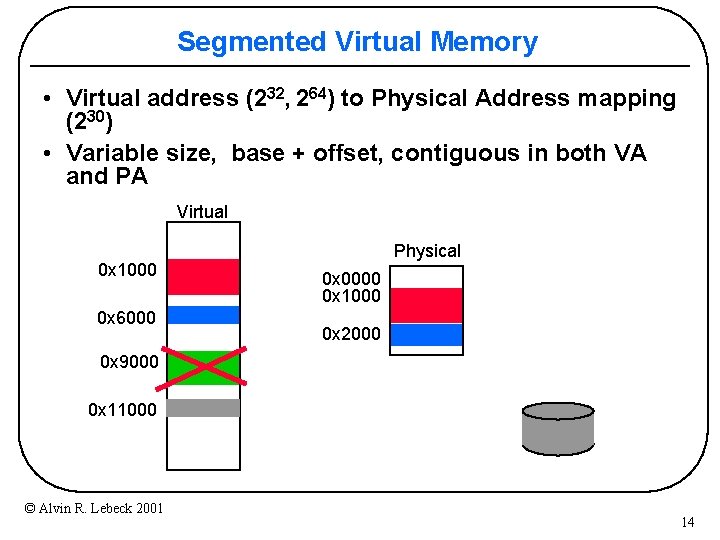

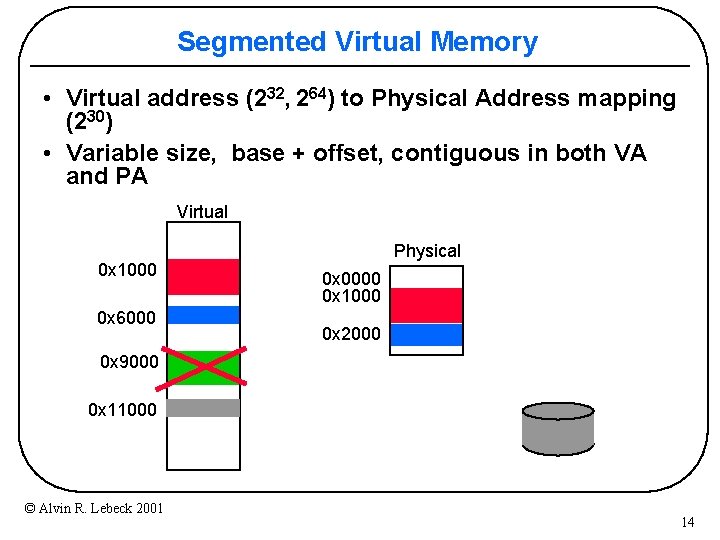

Segmented Virtual Memory • Virtual address (232, 264) to Physical Address mapping (230) • Variable size, base + offset, contiguous in both VA and PA Virtual 0 x 1000 0 x 6000 Physical 0 x 0000 0 x 1000 0 x 2000 0 x 9000 0 x 11000 © Alvin R. Lebeck 2001 14

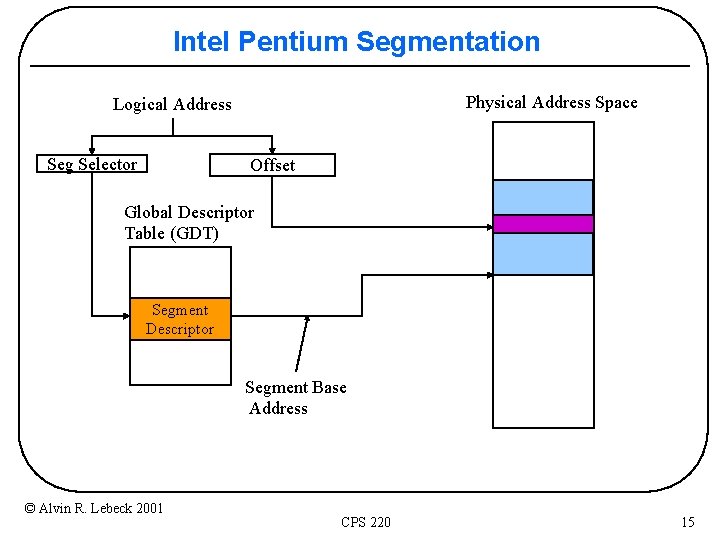

Intel Pentium Segmentation Physical Address Space Logical Address Seg Selector Offset Global Descriptor Table (GDT) Segment Descriptor Segment Base Address © Alvin R. Lebeck 2001 CPS 220 15

Pentium Segmention (Continued) • Segment Descriptors – Local and Global – base, limit, access rights – Can define many • Segment Registers – contain segment descriptors (faster than load from mem) – Only 6 • Must load segment register with a valid entry before segment can be accessed – generally managed by compiler, linker, not programmer © Alvin R. Lebeck 2001 CPS 220 16

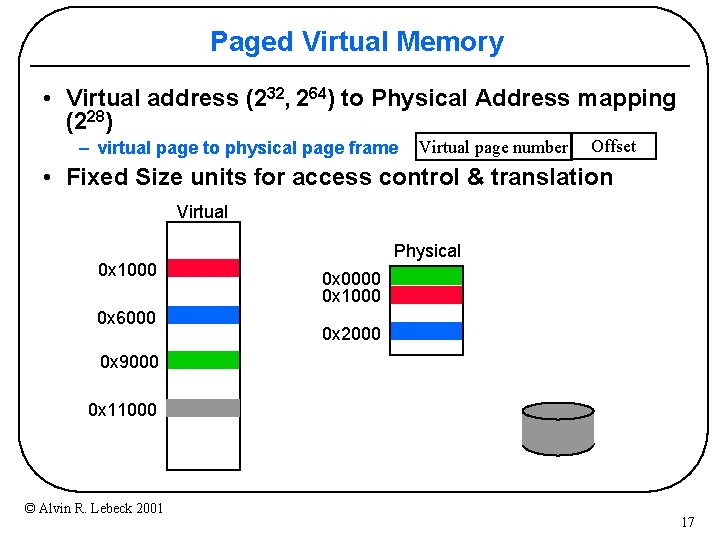

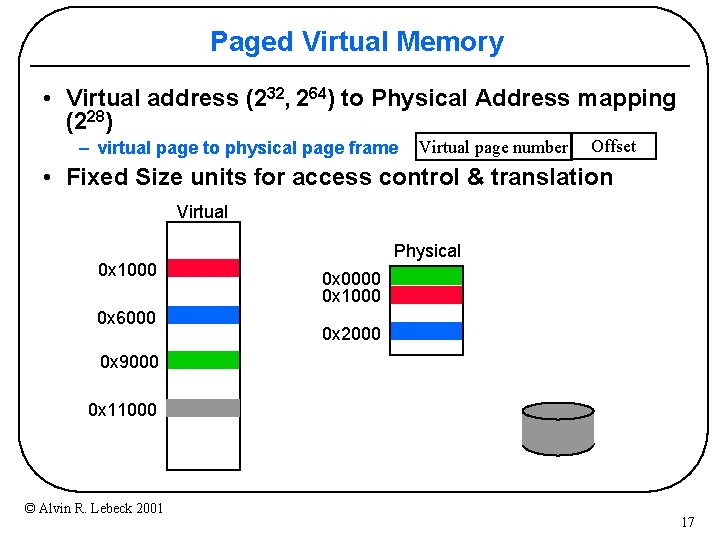

Paged Virtual Memory • Virtual address (232, 264) to Physical Address mapping (228) – virtual page to physical page frame Virtual page number Offset • Fixed Size units for access control & translation Virtual 0 x 1000 0 x 6000 Physical 0 x 0000 0 x 1000 0 x 2000 0 x 9000 0 x 11000 © Alvin R. Lebeck 2001 17

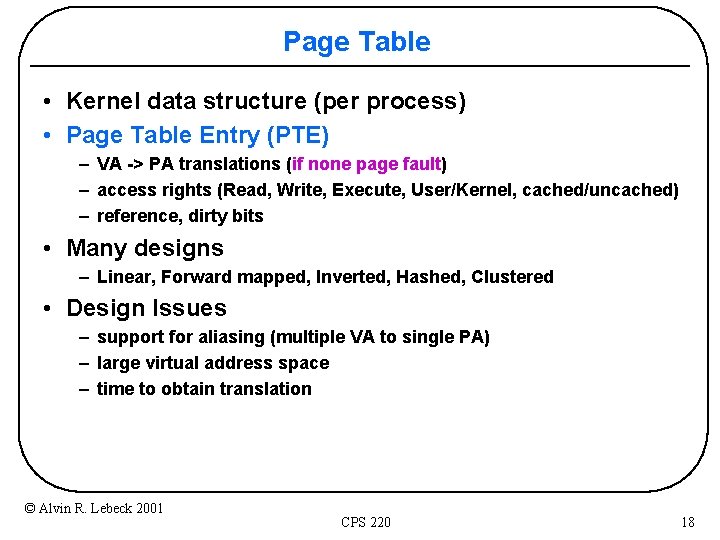

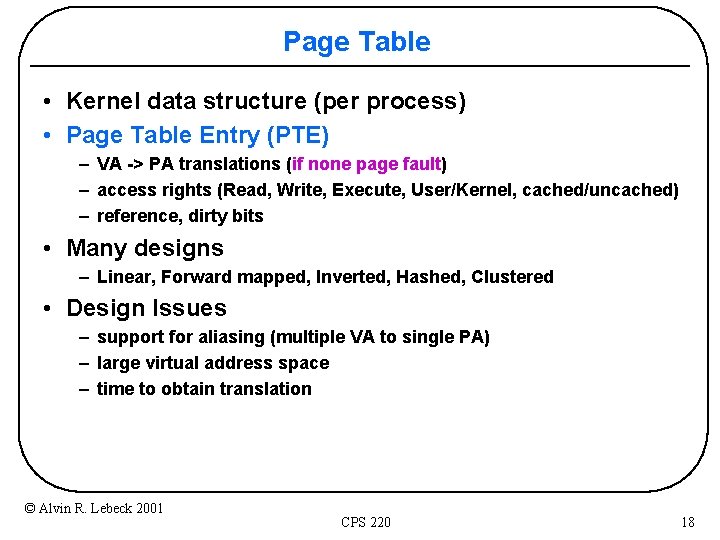

Page Table • Kernel data structure (per process) • Page Table Entry (PTE) – VA -> PA translations (if none page fault) – access rights (Read, Write, Execute, User/Kernel, cached/uncached) – reference, dirty bits • Many designs – Linear, Forward mapped, Inverted, Hashed, Clustered • Design Issues – support for aliasing (multiple VA to single PA) – large virtual address space – time to obtain translation © Alvin R. Lebeck 2001 CPS 220 18

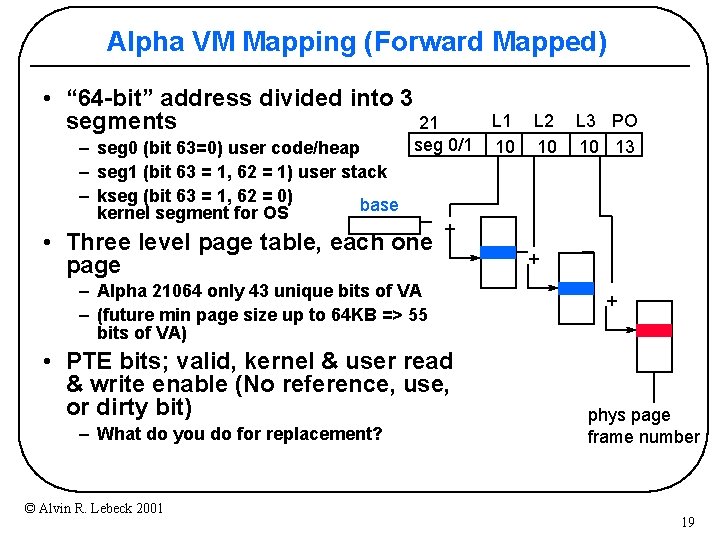

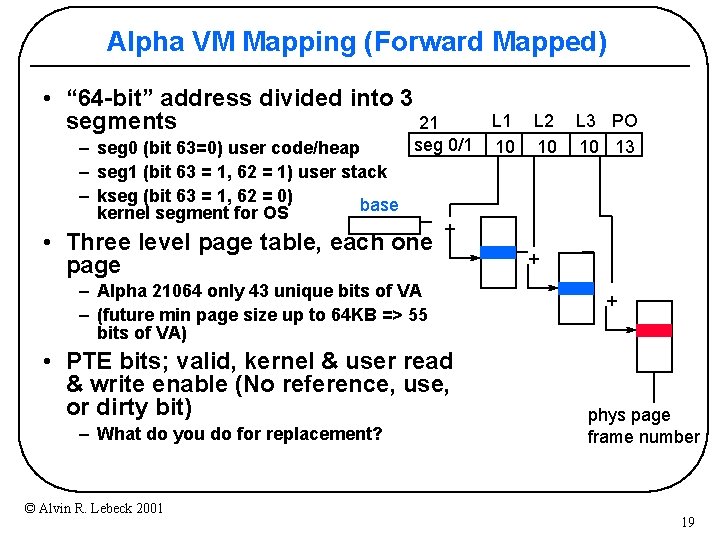

Alpha VM Mapping (Forward Mapped) • “ 64 -bit” address divided into 3 21 segments seg 0/1 – seg 0 (bit 63=0) user code/heap – seg 1 (bit 63 = 1, 62 = 1) user stack – kseg (bit 63 = 1, 62 = 0) base kernel segment for OS + • Three level page table, each one page – Alpha 21064 only 43 unique bits of VA – (future min page size up to 64 KB => 55 bits of VA) • PTE bits; valid, kernel & user read & write enable (No reference, use, or dirty bit) – What do you do for replacement? © Alvin R. Lebeck 2001 L 1 10 L 2 10 L 3 PO 10 13 + + phys page frame number 19

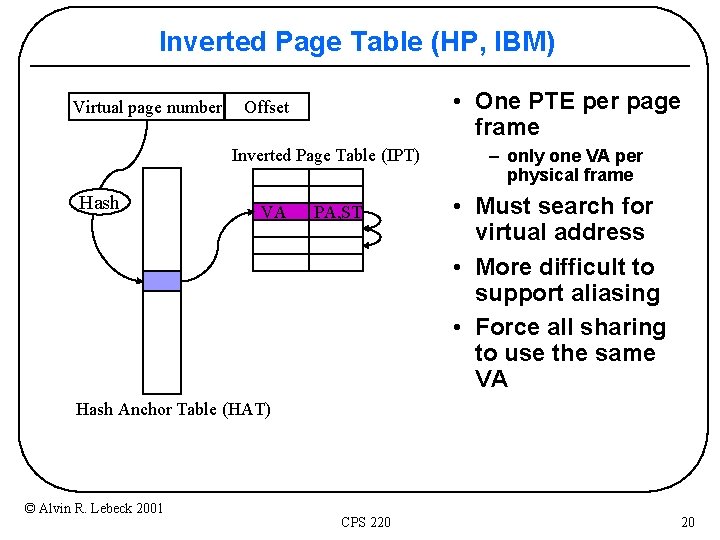

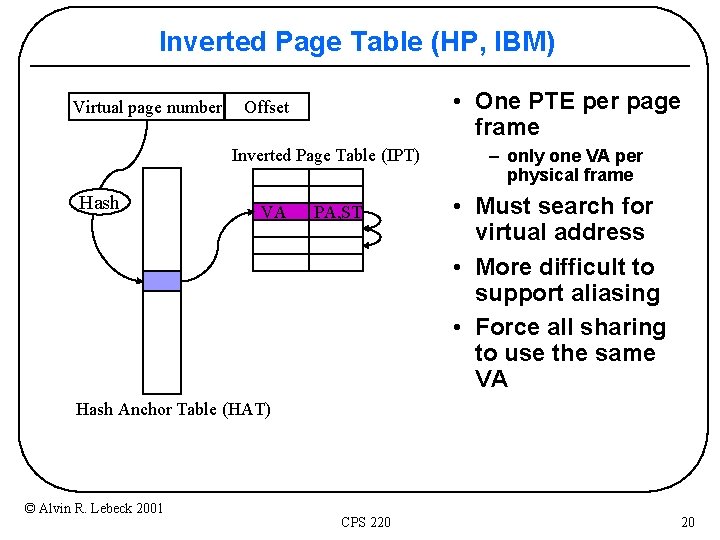

Inverted Page Table (HP, IBM) Virtual page number • One PTE per page frame Offset Inverted Page Table (IPT) Hash VA PA, ST – only one VA per physical frame • Must search for virtual address • More difficult to support aliasing • Force all sharing to use the same VA Hash Anchor Table (HAT) © Alvin R. Lebeck 2001 CPS 220 20

Intel Pentium Segmentation + Paging Logical Address Dir Table Seg Selector Physical Address Space Linear Address Space Offset Page Table Global Descriptor Table (GDT) Page Dir Segment Descriptor Segment Base Address © Alvin R. Lebeck 2001 CPS 220 21

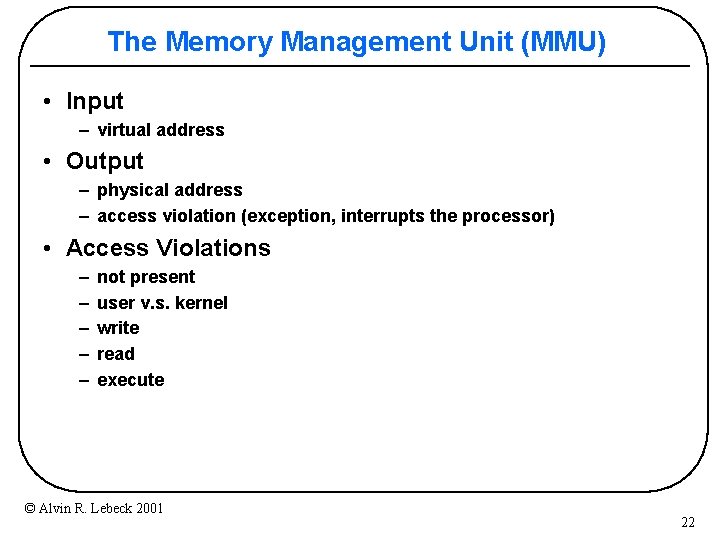

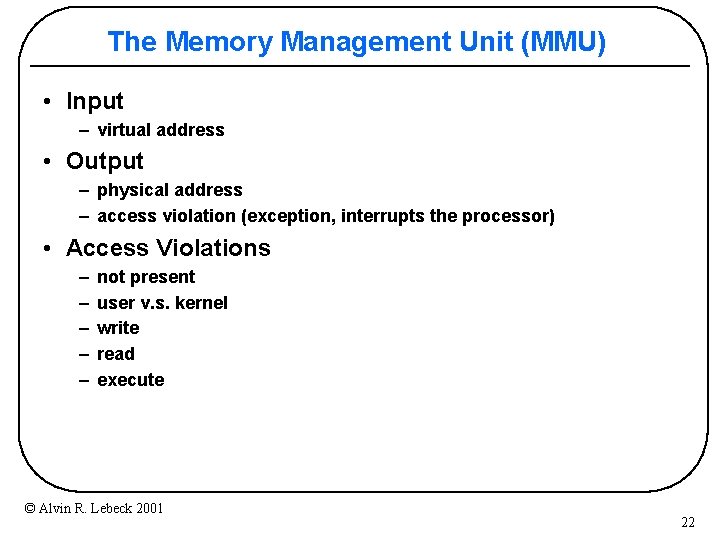

The Memory Management Unit (MMU) • Input – virtual address • Output – physical address – access violation (exception, interrupts the processor) • Access Violations – – – not present user v. s. kernel write read execute © Alvin R. Lebeck 2001 22

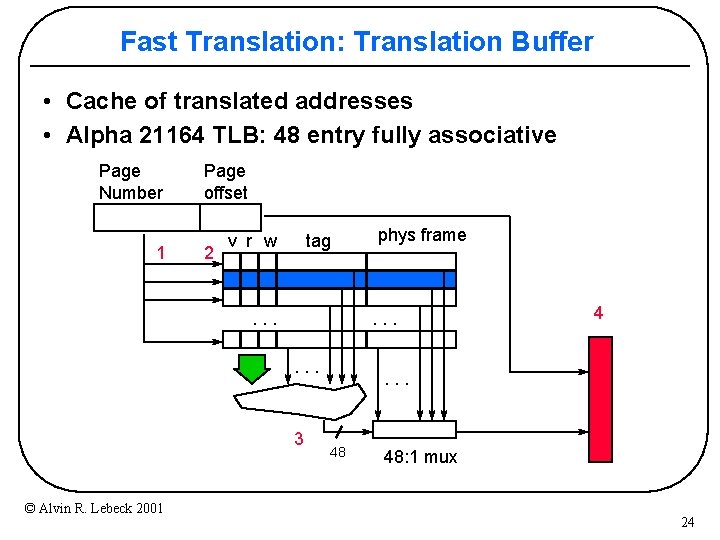

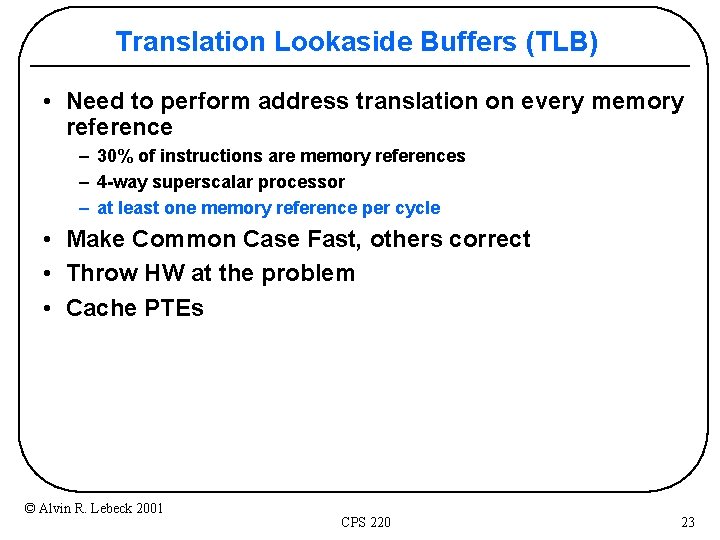

Translation Lookaside Buffers (TLB) • Need to perform address translation on every memory reference – 30% of instructions are memory references – 4 -way superscalar processor – at least one memory reference per cycle • Make Common Case Fast, others correct • Throw HW at the problem • Cache PTEs © Alvin R. Lebeck 2001 CPS 220 23

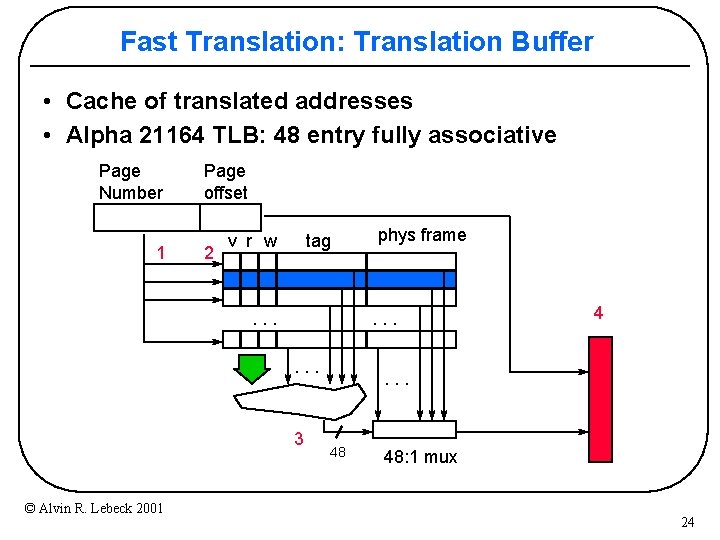

Fast Translation: Translation Buffer • Cache of translated addresses • Alpha 21164 TLB: 48 entry fully associative Page Number 1 Page offset 2 v r w tag . . 3 © Alvin R. Lebeck 2001 phys frame 4 . . . 48 48: 1 mux 24

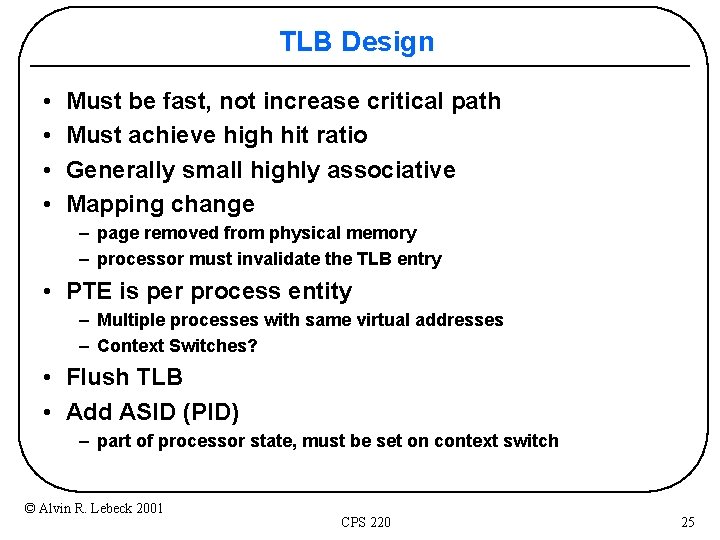

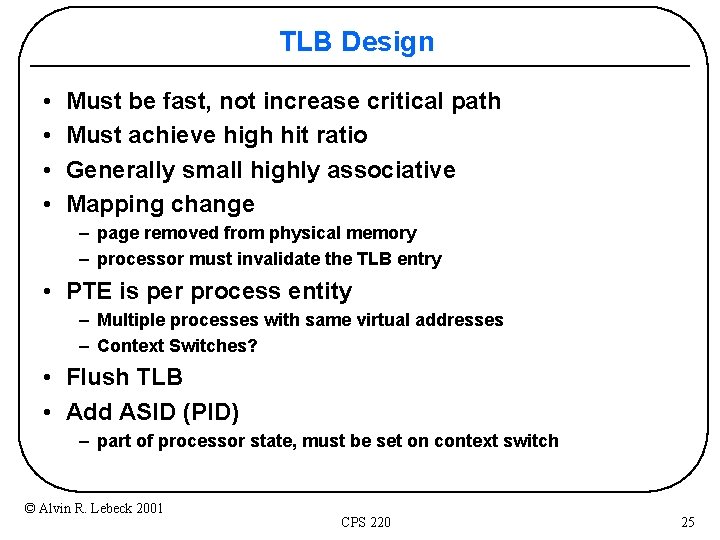

TLB Design • • Must be fast, not increase critical path Must achieve high hit ratio Generally small highly associative Mapping change – page removed from physical memory – processor must invalidate the TLB entry • PTE is per process entity – Multiple processes with same virtual addresses – Context Switches? • Flush TLB • Add ASID (PID) – part of processor state, must be set on context switch © Alvin R. Lebeck 2001 CPS 220 25

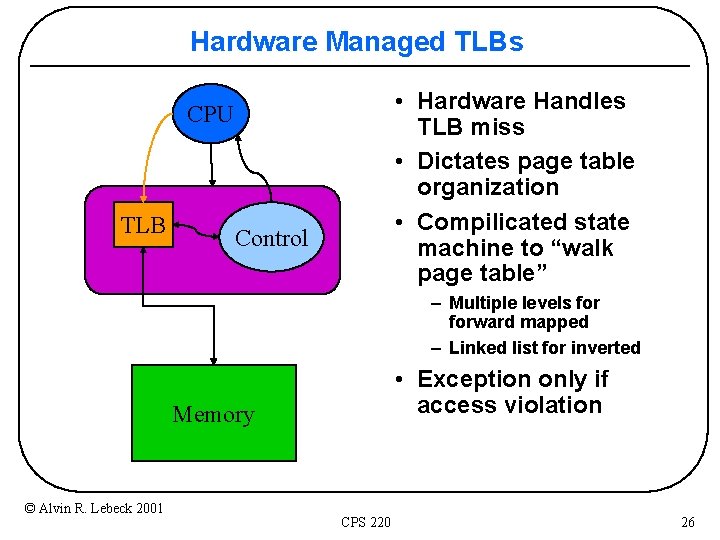

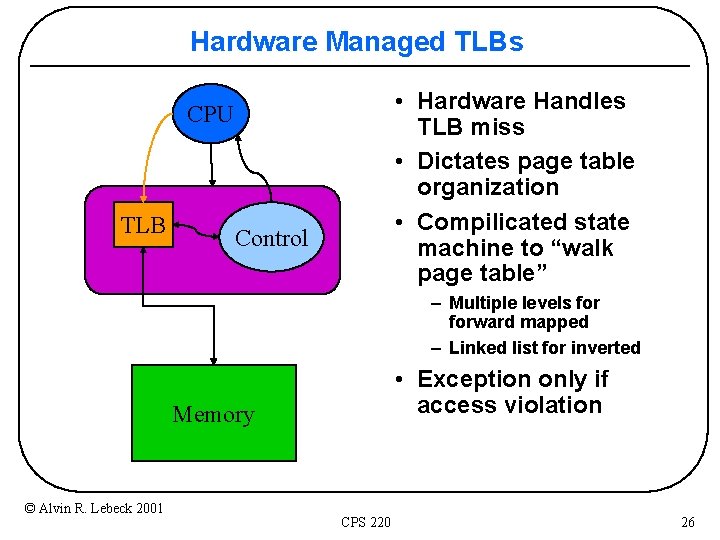

Hardware Managed TLBs • Hardware Handles TLB miss • Dictates page table organization • Compilicated state machine to “walk page table” CPU TLB Control – Multiple levels forward mapped – Linked list for inverted • Exception only if access violation Memory © Alvin R. Lebeck 2001 CPS 220 26

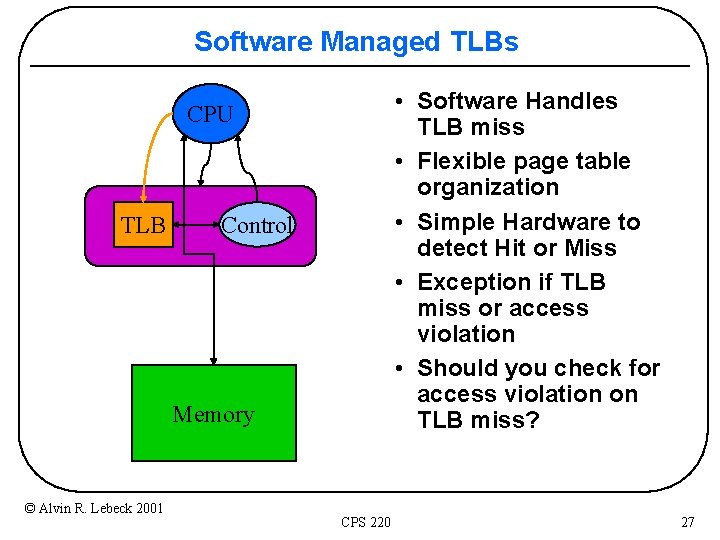

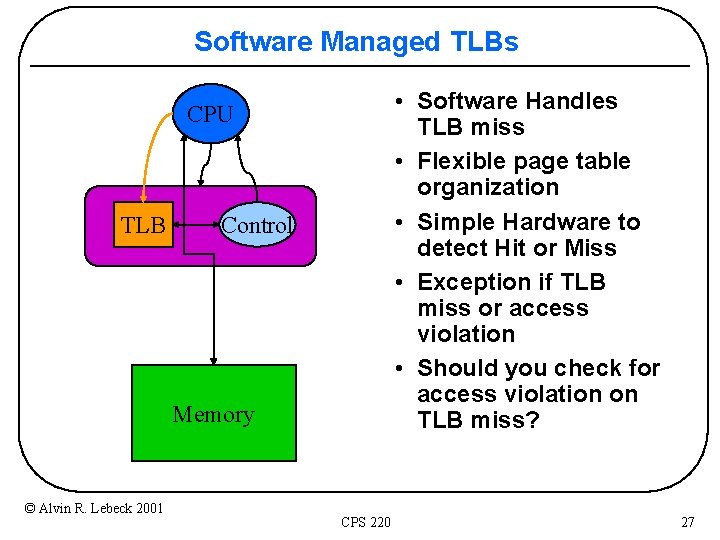

Software Managed TLBs • Software Handles TLB miss • Flexible page table organization • Simple Hardware to detect Hit or Miss • Exception if TLB miss or access violation • Should you check for access violation on TLB miss? CPU TLB Control Memory © Alvin R. Lebeck 2001 CPS 220 27

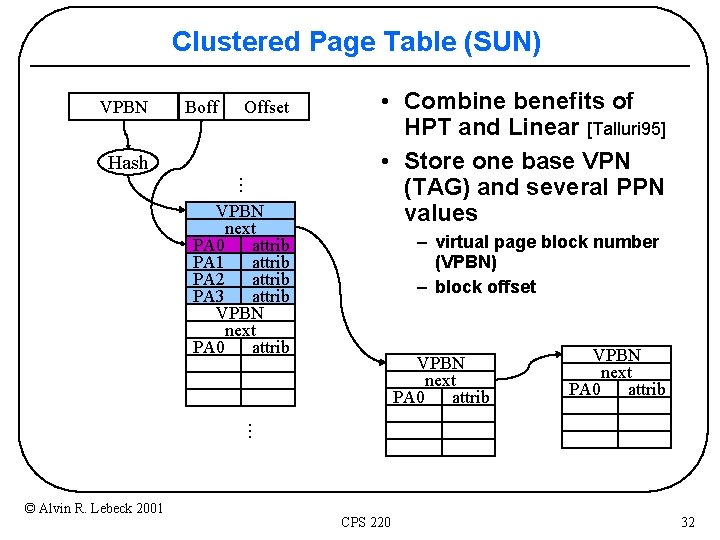

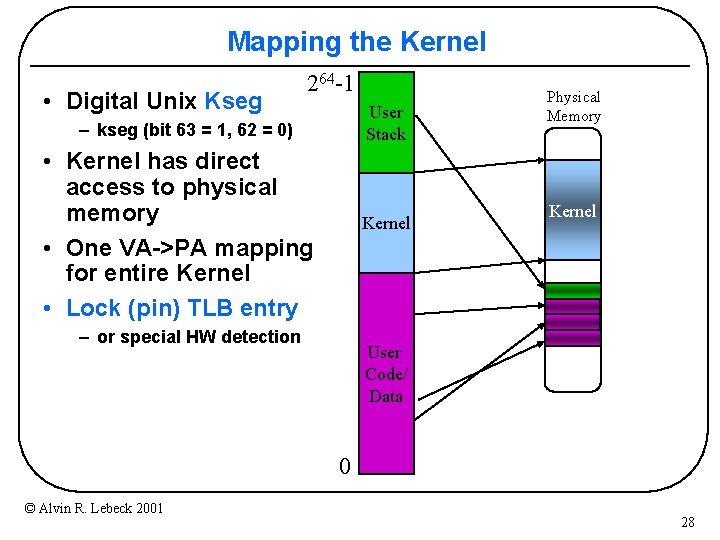

Mapping the Kernel • Digital Unix Kseg 264 -1 User Stack – kseg (bit 63 = 1, 62 = 0) • Kernel has direct access to physical memory • One VA->PA mapping for entire Kernel • Lock (pin) TLB entry Kernel – or special HW detection Physical Memory Kernel User Code/ Data 0 © Alvin R. Lebeck 2001 28

Considerations for Address Translation Large virtual address space • Can map more things – – files frame buffers network interfaces memory from another workstation • Sparse use of address space • Page Table Design – space – less locality => TLB misses OS structure • microkernel => more TLB misses © Alvin R. Lebeck 2001 CPS 220 29

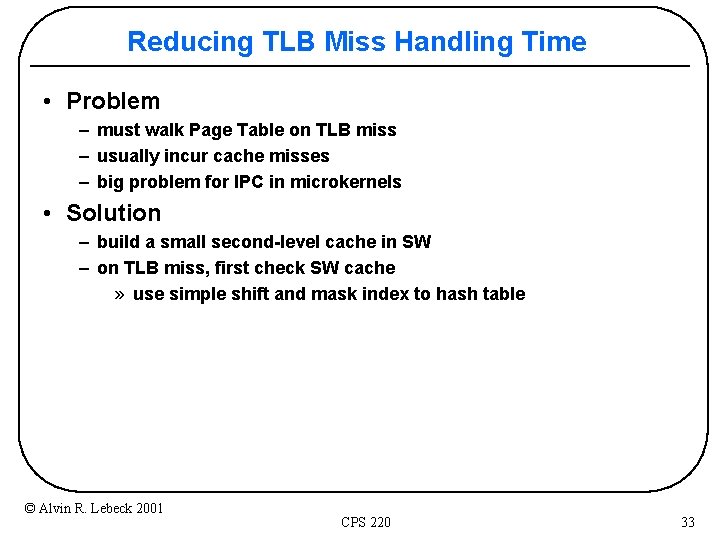

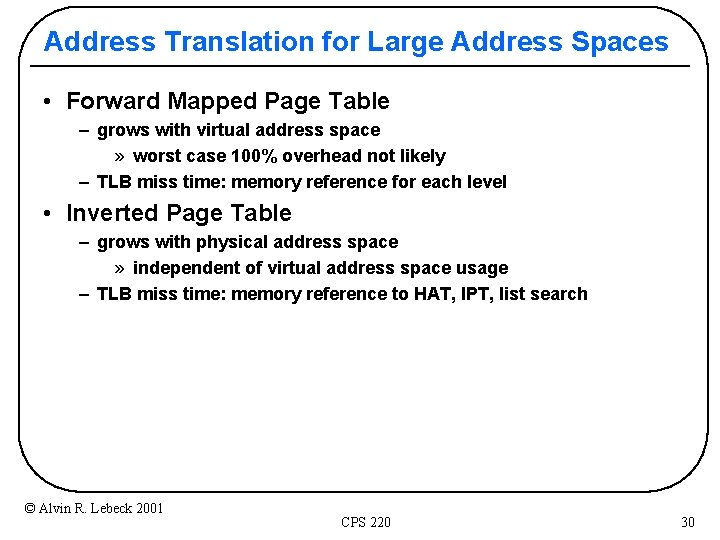

Address Translation for Large Address Spaces • Forward Mapped Page Table – grows with virtual address space » worst case 100% overhead not likely – TLB miss time: memory reference for each level • Inverted Page Table – grows with physical address space » independent of virtual address space usage – TLB miss time: memory reference to HAT, IPT, list search © Alvin R. Lebeck 2001 CPS 220 30

Hashed Page Table (HP) Virtual page number • Combine Hash Table and IPT [Huck 96] Offset – can have more entries than physical page frames Hashed Page Table (HPT) VA PA, ST • Must search for virtual address • Easier to support aliasing than IPT • Space – grows with physical space • TLB miss – one less memory ref than IPT © Alvin R. Lebeck 2001 CPS 220 31

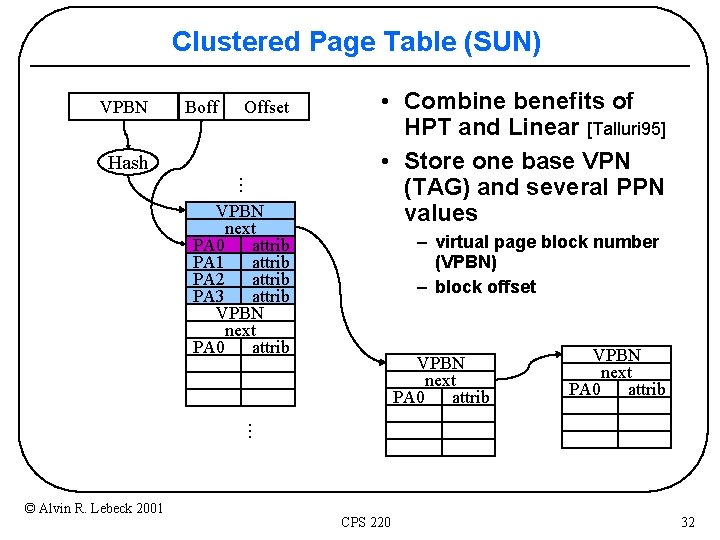

Clustered Page Table (SUN) VPBN Boff Offset . . . Hash – virtual page block number (VPBN) – block offset VPBN next PA 0 attrib . . . VPBN next PA 0 attrib PA 1 attrib PA 2 attrib PA 3 attrib VPBN next PA 0 attrib • Combine benefits of HPT and Linear [Talluri 95] • Store one base VPN (TAG) and several PPN values © Alvin R. Lebeck 2001 CPS 220 32

Reducing TLB Miss Handling Time • Problem – must walk Page Table on TLB miss – usually incur cache misses – big problem for IPC in microkernels • Solution – build a small second-level cache in SW – on TLB miss, first check SW cache » use simple shift and mask index to hash table © Alvin R. Lebeck 2001 CPS 220 33

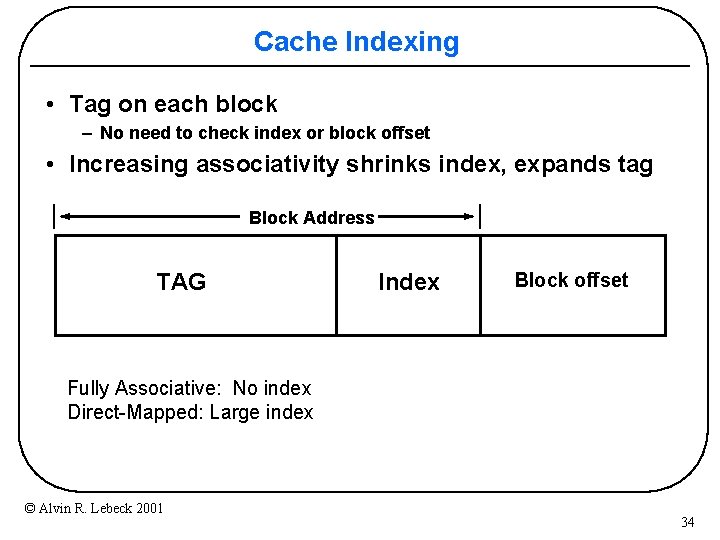

Cache Indexing • Tag on each block – No need to check index or block offset • Increasing associativity shrinks index, expands tag Block Address TAG Index Block offset Fully Associative: No index Direct-Mapped: Large index © Alvin R. Lebeck 2001 34

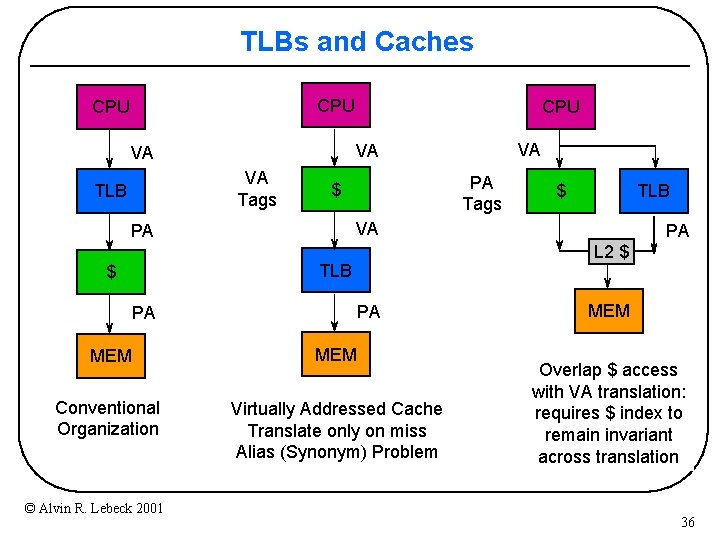

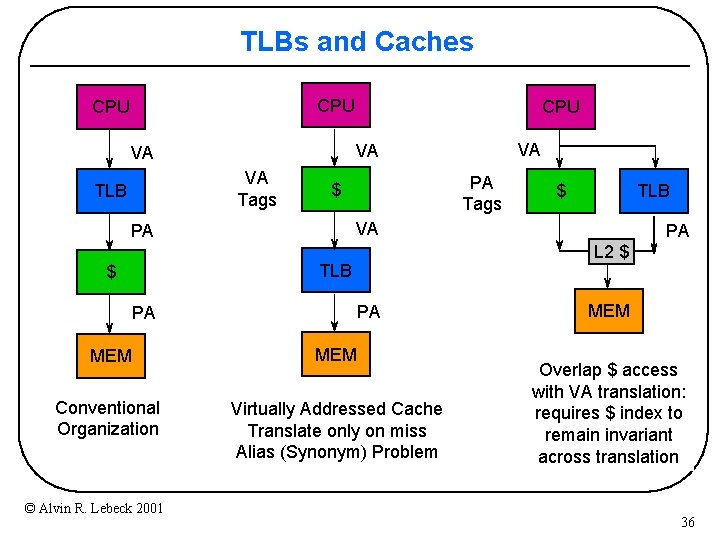

Address Translation and Caches • Where is the TLB wrt the cache? • What are the consequences? • Most of today’s systems have more than 1 cache – Digital 21164 has 3 levels – 2 levels on chip (8 KB-data, 8 KB-inst, 96 KB-unified) – one level off chip (2 -4 MB) • Does the OS need to worry about this? Definition: page coloring = careful selection of va->pa mapping © Alvin R. Lebeck 2001 CPS 220 35

TLBs and Caches CPU VA Tags PA Tags $ TLB PA PA PA L 2 $ TLB MEM Conventional Organization Virtually Addressed Cache Translate only on miss Alias (Synonym) Problem © Alvin R. Lebeck 2001 $ VA PA $ VA VA VA TLB CPU MEM Overlap $ access with VA translation: requires $ index to remain invariant across translation 36

Virtual Caches • Send virtual address to cache. Called Virtually Addressed Cache or just Virtual Cache vs. Physical Cache or Real Cache • Avoid address translation before accessing cache – faster hit time to cache • Context Switches? – Just like the TLB (flush or pid) – Cost is time to flush + “compulsory” misses from empty cache – Add process identifier tag that identifies process as well as address within process: can’t get a hit if wrong process • I/O must interact with cache © Alvin R. Lebeck 2001 CPS 220 37

I/O and Virtual Caches Virtual Cache Physical Addresses I/O is accomplished with physical addresses DMA • flush pages from cache • need pa->va reverse translation • coherent DMA © Alvin R. Lebeck 2001 Processor interrupts Cache Memory Bus I/O Bridge I/O Bus Main Memory Disk Controller Disk Graphics Controller Graphics Network Interface Network 38

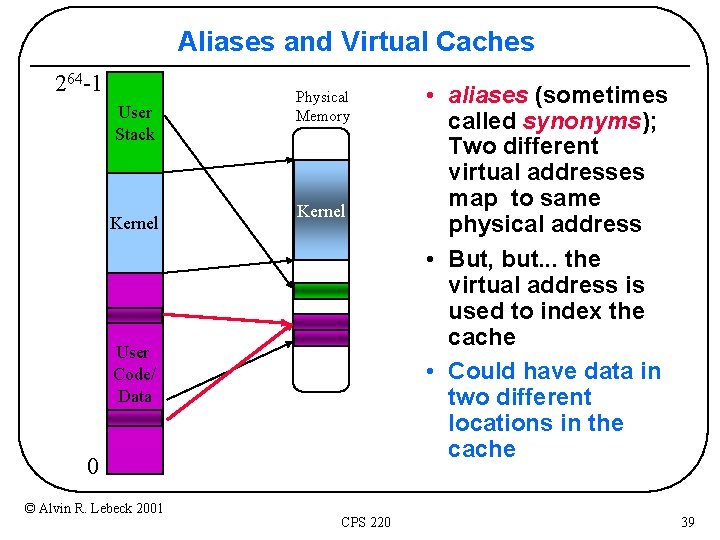

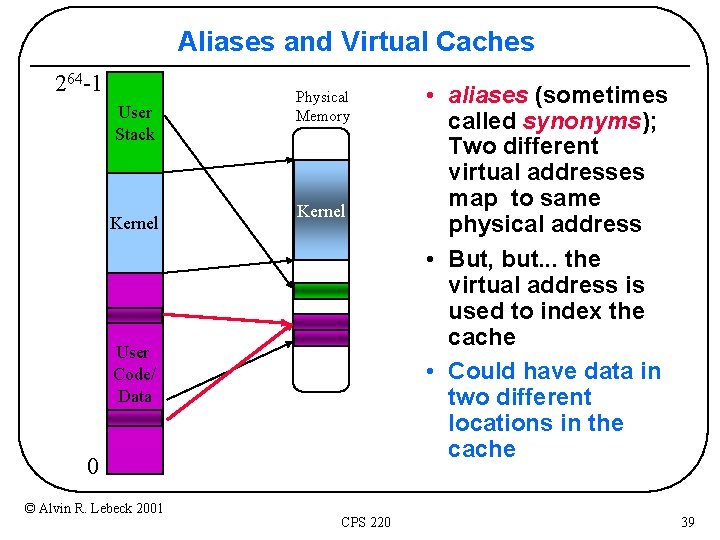

Aliases and Virtual Caches 264 -1 User Stack Kernel Physical Memory Kernel User Code/ Data 0 © Alvin R. Lebeck 2001 CPS 220 • aliases (sometimes called synonyms); Two different virtual addresses map to same physical address • But, but. . . the virtual address is used to index the cache • Could have data in two different locations in the cache 39

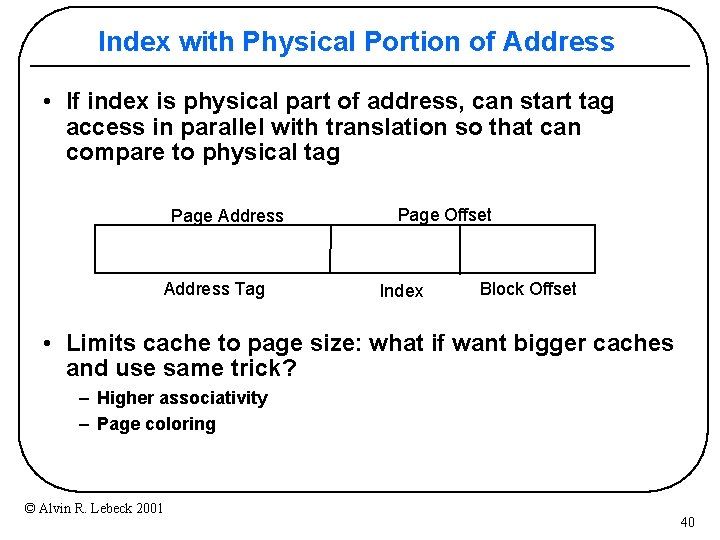

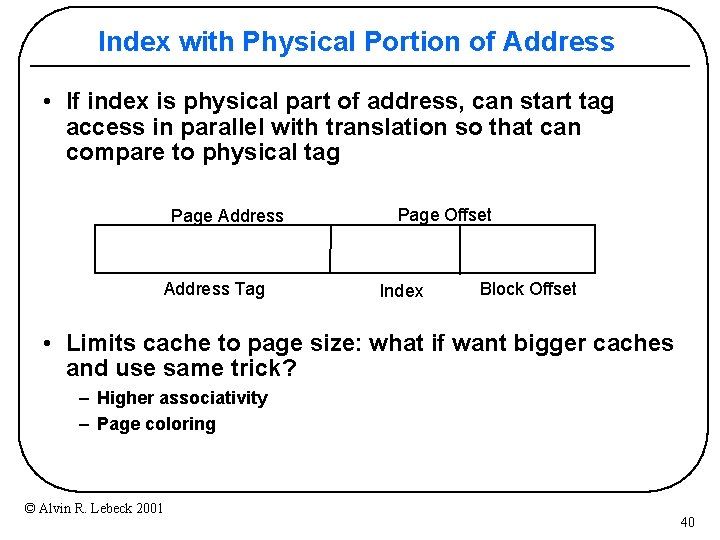

Index with Physical Portion of Address • If index is physical part of address, can start tag access in parallel with translation so that can compare to physical tag Page Address Tag Page Offset Index Block Offset • Limits cache to page size: what if want bigger caches and use same trick? – Higher associativity – Page coloring © Alvin R. Lebeck 2001 40

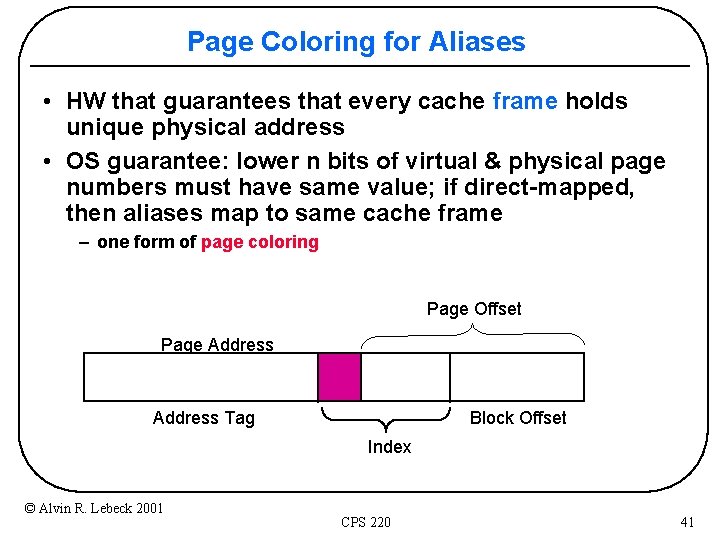

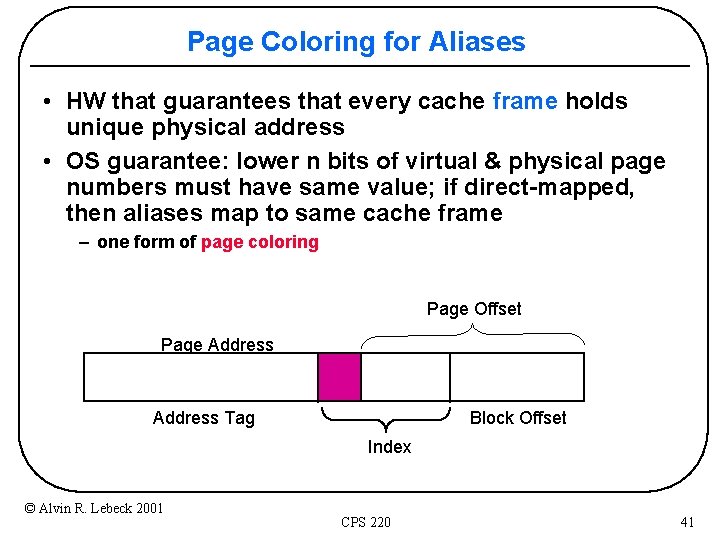

Page Coloring for Aliases • HW that guarantees that every cache frame holds unique physical address • OS guarantee: lower n bits of virtual & physical page numbers must have same value; if direct-mapped, then aliases map to same cache frame – one form of page coloring Page Offset Page Address Tag Block Offset Index © Alvin R. Lebeck 2001 CPS 220 41

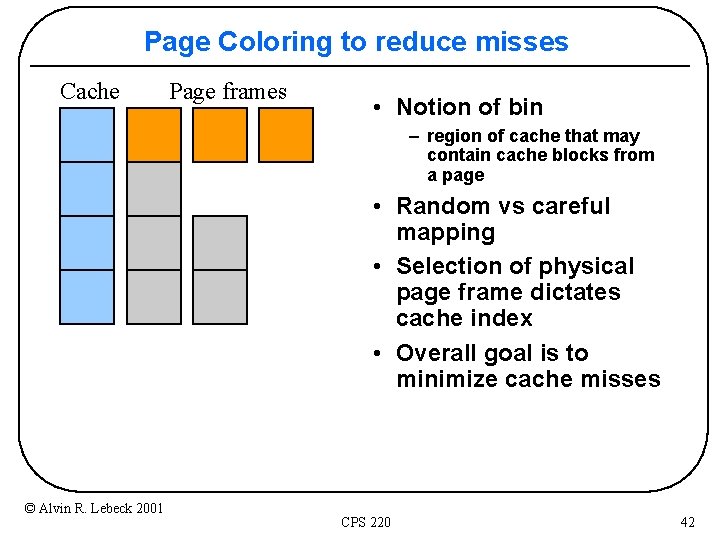

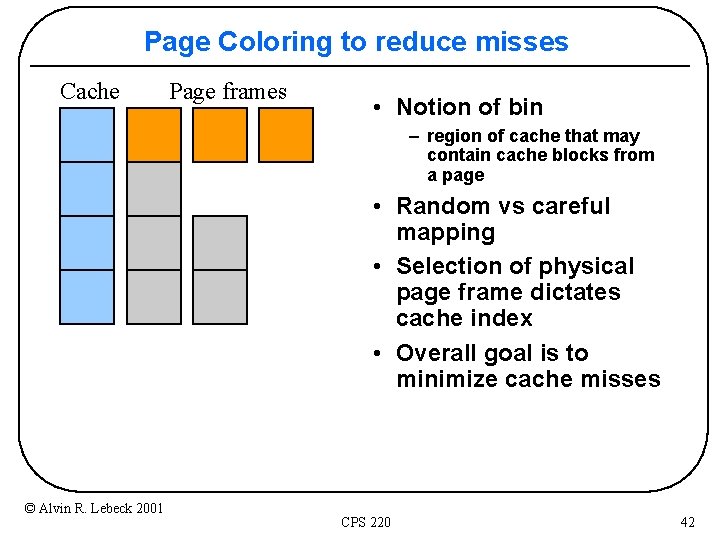

Page Coloring to reduce misses Cache Page frames • Notion of bin – region of cache that may contain cache blocks from a page • Random vs careful mapping • Selection of physical page frame dictates cache index • Overall goal is to minimize cache misses © Alvin R. Lebeck 2001 CPS 220 42

![Careful Page Mapping Kessler 92 Bershad 94 Select a page frame such that Careful Page Mapping [Kessler 92, Bershad 94] • Select a page frame such that](https://slidetodoc.com/presentation_image/354014329d9870984f3a31241f0461c1/image-43.jpg)

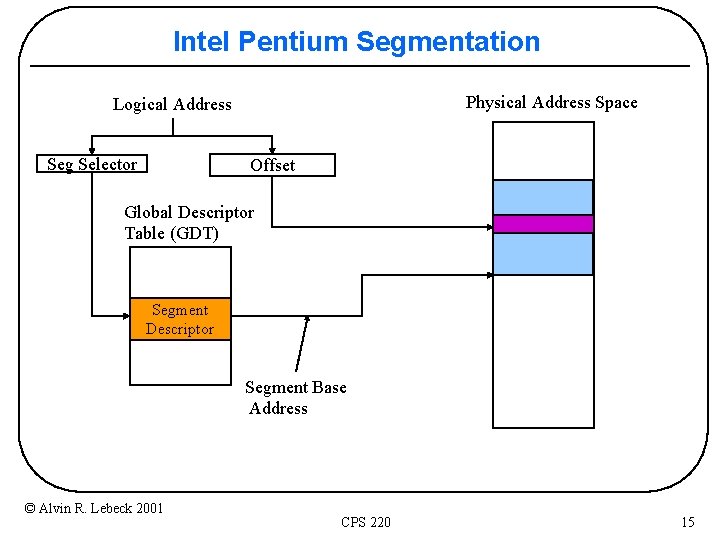

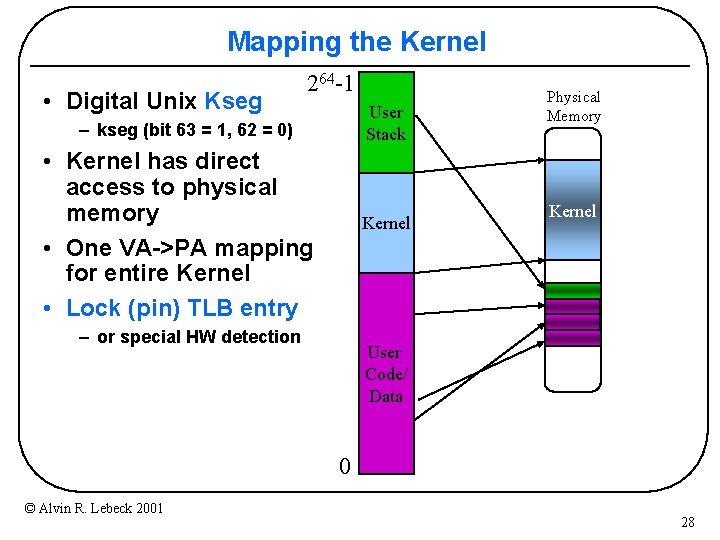

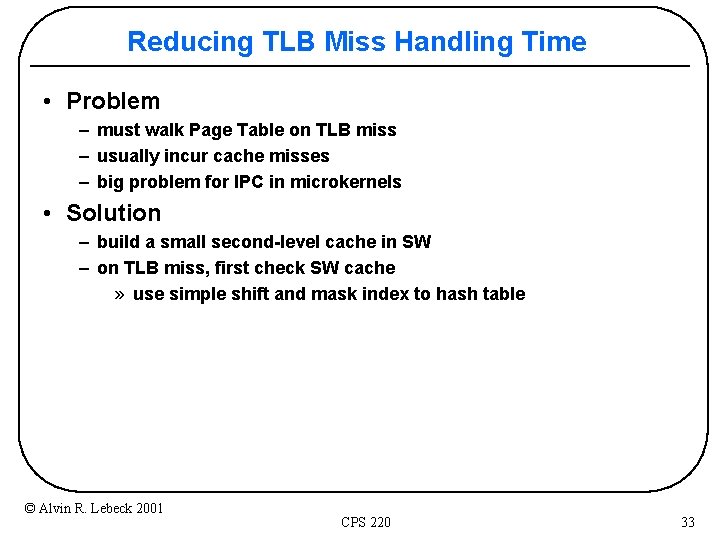

Careful Page Mapping [Kessler 92, Bershad 94] • Select a page frame such that cache conflict misses are reduced – only choose from available pages (no VM replacement induced) • static – “smart” selection of page frame at page fault time • dynamic – move pages around © Alvin R. Lebeck 2001 CPS 220 43

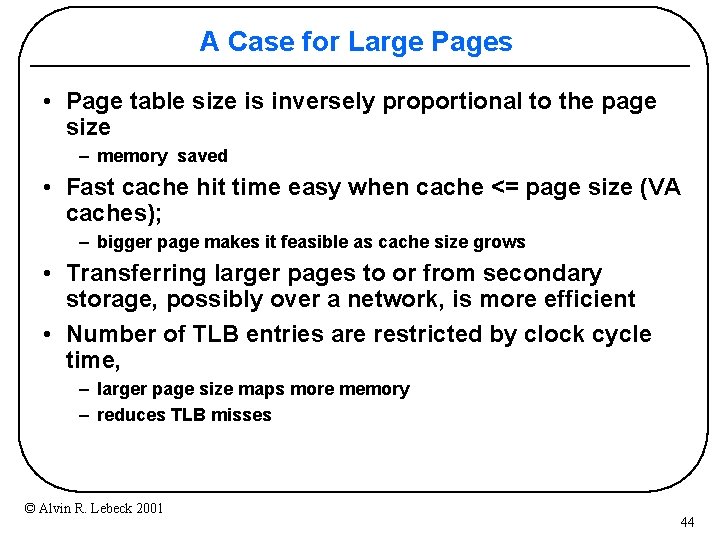

A Case for Large Pages • Page table size is inversely proportional to the page size – memory saved • Fast cache hit time easy when cache <= page size (VA caches); – bigger page makes it feasible as cache size grows • Transferring larger pages to or from secondary storage, possibly over a network, is more efficient • Number of TLB entries are restricted by clock cycle time, – larger page size maps more memory – reduces TLB misses © Alvin R. Lebeck 2001 44

A Case for Small Pages • Fragmentation – large pages can waste storage – data must be contiguous within page • Quicker process start for small processes(? ? ) © Alvin R. Lebeck 2001 CPS 220 45

Superpages • Hybrid solution: multiple page sizes – 8 KB, 16 KB, 32 KB, 64 KB pages – 4 KB, 64 KB, 256 KB, 1 MB, 4 MB, 16 MB pages • Need to identify candidate superpages – Kernel – Frame buffers – Database buffer pools • Application/compiler hints • Detecting superpages – static, at page fault time – dynamically create superpages • Page Table & TLB modifications © Alvin R. Lebeck 2001 CPS 220 46

Page Coloring • Make physical index match virtual index • Behaves like virtual index cache – no conflicts for sequential pages • Possibly many conflicts between processes – address spaces all have same structure (stack, code, heap) – modify to xor PID with address (MIPS used variant of this) • Simplementation • Pick abitrary page if necessary © Alvin R. Lebeck 2001 CPS 220 47

Bin Hopping • Allocate sequentially mapped pages (time) to sequential bins (space) • Can exploit temporal locality – pages mapped close in time will be accessed close in time • Search from last allocated bin until bin with available page frame • Separate search list per process • Simplementation © Alvin R. Lebeck 2001 CPS 220 48

Best Bin • Keep track of two counters per bin – used: # of pages allocated to this bin for this address space – free: # of available pages in the system for this bin • Bin selection is based on low values of used and high values of free • Low used value – reduce conflicts within the address space • High free value – reduce conflicts between address spaces © Alvin R. Lebeck 2001 CPS 220 49

Hierarchical • Best bin could be linear in # of bins • Build a tree – internal nodes contain sum of child <used, free> values • Independent of cache size – simply stop at a particular level in the tree © Alvin R. Lebeck 2001 CPS 220 50

Benefit of Static Page Coloring • Reduces cache misses by 10% to 20% • Multiprogramming – want to distribute mapping to avoid inter-address space conflicts © Alvin R. Lebeck 2001 CPS 220 51

![Dynamic Page Coloring Cache Miss Lookaside CML buffer Bershad 94 proposed hardware Dynamic Page Coloring • Cache Miss Lookaside (CML) buffer [Bershad 94] – proposed hardware](https://slidetodoc.com/presentation_image/354014329d9870984f3a31241f0461c1/image-52.jpg)

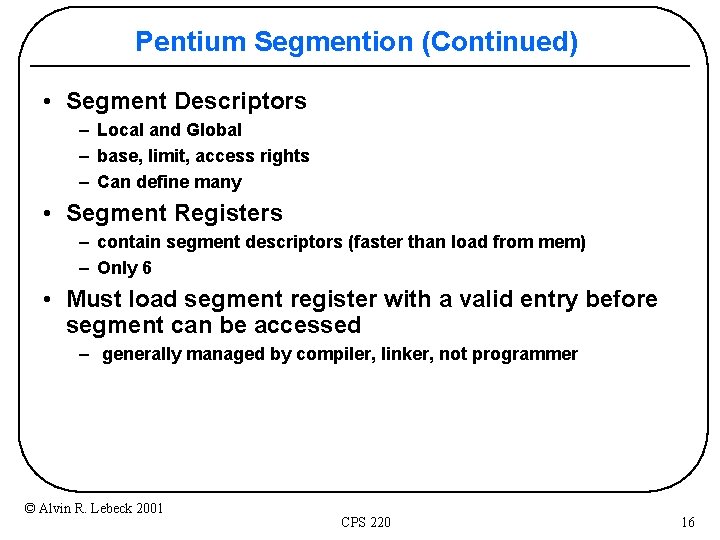

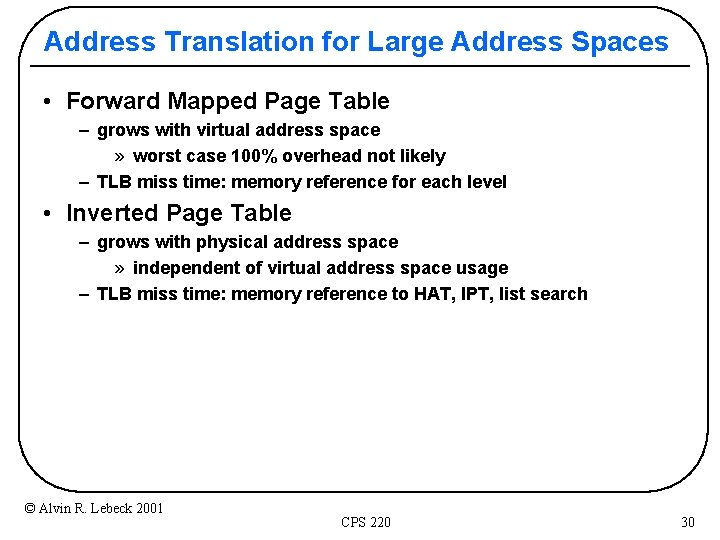

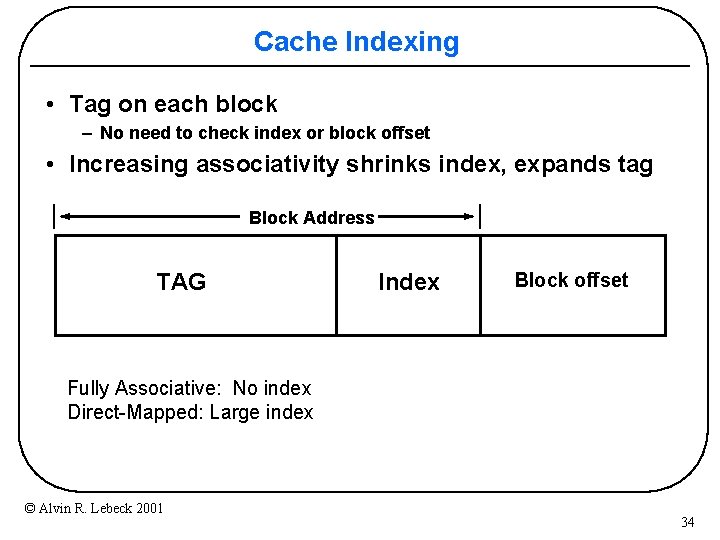

Dynamic Page Coloring • Cache Miss Lookaside (CML) buffer [Bershad 94] – proposed hardware device • Monitor # of misses per page • If # of misses >> # of cache blocks in page – must be conflict misses – interrupt processor – move a page (recolor) • Cost of moving page << benefit © Alvin R. Lebeck 2001 CPS 220 52