OpenStack Resource Scheduler

- Slides: 18

OpenStack Resource Scheduler

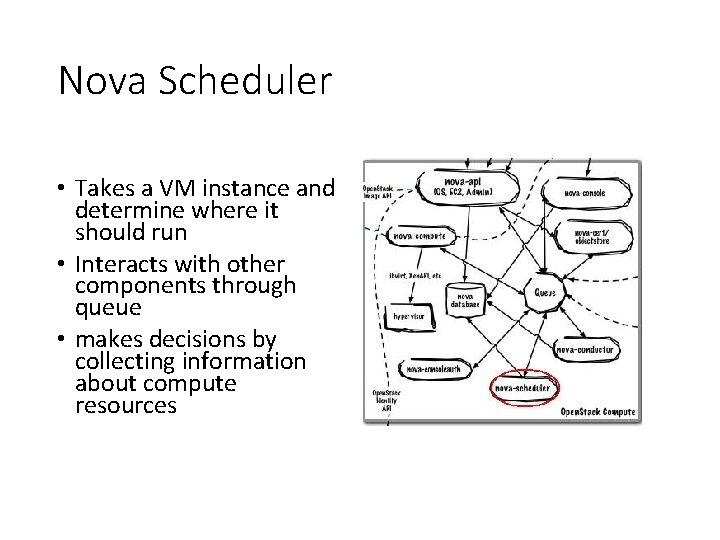

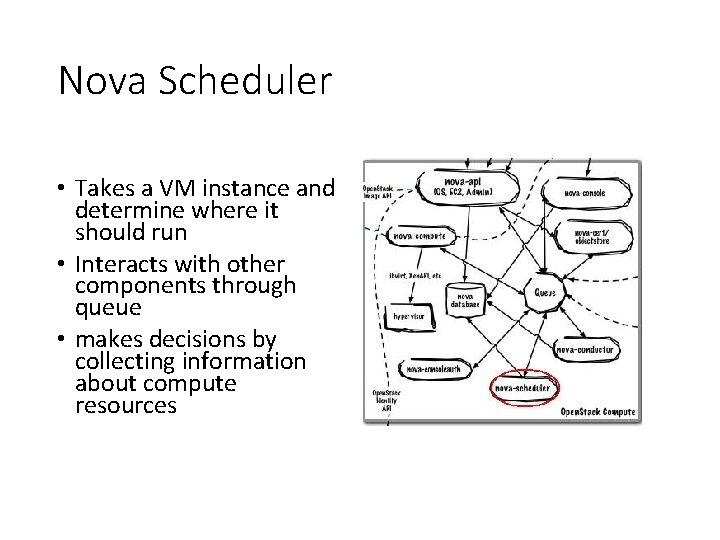

Nova Scheduler

- Takes a VM instance request and determines where it should run
- Interacts with other components through a message queue
- Makes decisions by collecting information about compute resources

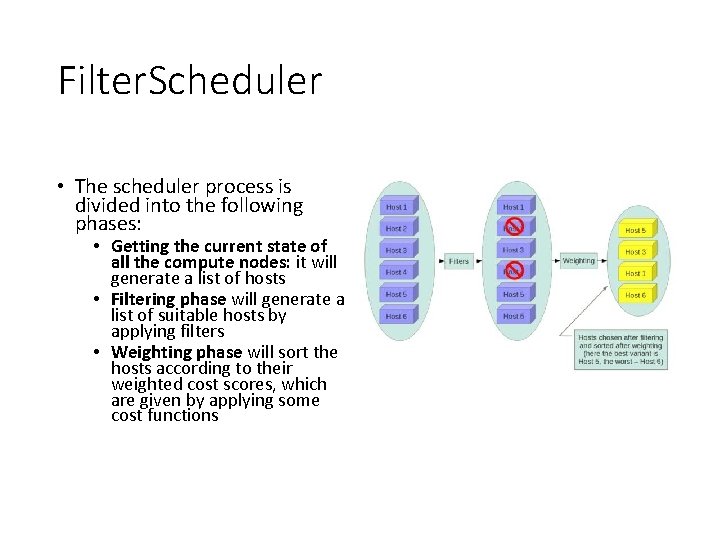

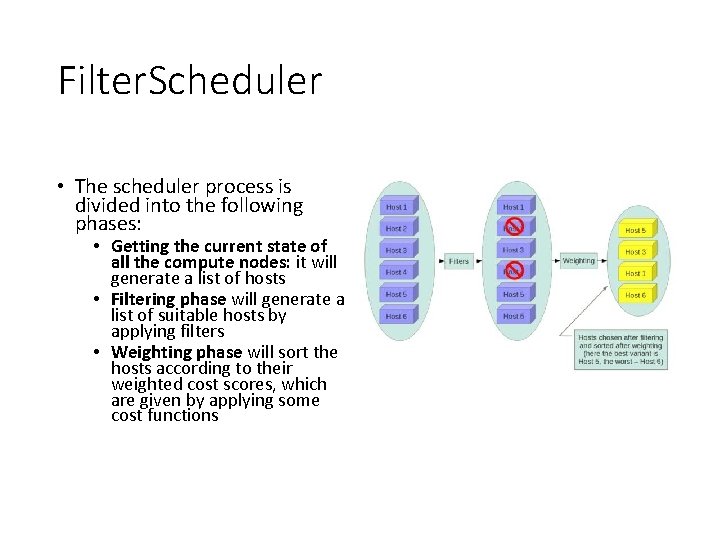

Filter Scheduler

The scheduling process is divided into the following phases:

- Getting the current state of all the compute nodes: this generates a list of hosts
- Filtering phase: generates a list of suitable hosts by applying filters
- Weighting phase: sorts the hosts according to their weighted cost scores, which are given by applying cost functions
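The three phases can be sketched in a few lines of plain Python. This is a minimal illustration, not Nova's code: the `Host` class, the two filter functions, and `schedule` are hypothetical names chosen for the example, and the weigher simply scores a host by its free RAM.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Host:
    # Hypothetical, simplified view of a compute node's state.
    name: str
    free_ram_mb: int
    free_vcpus: int


# A filter returns True when the host can take the request.
Filter = Callable[[Host, Dict[str, int]], bool]
# A weigher returns a score; in this sketch a higher score is better.
Weigher = Callable[[Host], float]


def ram_filter(host: Host, request: Dict[str, int]) -> bool:
    return host.free_ram_mb >= request["ram_mb"]


def core_filter(host: Host, request: Dict[str, int]) -> bool:
    return host.free_vcpus >= request["vcpus"]


def schedule(hosts: List[Host], request: Dict[str, int],
             filters: List[Filter], weighers: List[Weigher]) -> List[Host]:
    # Phase 1 is the incoming host list; phase 2 keeps hosts passing every
    # filter; phase 3 sorts the survivors by their summed weight.
    suitable = [h for h in hosts if all(f(h, request) for f in filters)]
    return sorted(suitable, key=lambda h: sum(w(h) for w in weighers),
                  reverse=True)


hosts = [Host("node1", 2048, 4), Host("node2", 8192, 2), Host("node3", 512, 8)]
request = {"ram_mb": 1024, "vcpus": 2}
ranked = schedule(hosts, request, [ram_filter, core_filter],
                  [lambda h: h.free_ram_mb])
print([h.name for h in ranked])
```

Here `node3` is dropped by the RAM filter, and the remaining hosts are ordered by free RAM.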

Filtering

Filtering keeps hosts that:

- Have not been attempted for scheduling purposes (RetryFilter)
- Are in the requested availability zone (AvailabilityZoneFilter)
- Have sufficient RAM available (RamFilter)
- Are capable of servicing the request (ComputeFilter)
- Satisfy the extra specs associated with the instance type (ComputeCapabilitiesFilter)
- Satisfy any architecture, hypervisor type, or virtual machine mode properties specified on the instance's image properties (ImagePropertiesFilter)

Standard filters

- AllHostsFilter: no operation, passes all the available hosts
- ImagePropertiesFilter: passes hosts that can support the specified image properties contained in the instance
- AvailabilityZoneFilter: passes hosts matching the availability zone specified in the instance properties
- ComputeCapabilitiesFilter: passes hosts that can create the specified instance type
- CoreFilter: passes hosts with a sufficient number of CPU cores
- JsonFilter: allows a simple JSON-based grammar for selecting hosts
- And others…

Weights

- A way to select the most suitable host from a group of valid hosts by giving weights to all the hosts in the list
- Weighers
  - RAMWeigher: hosts are weighted and sorted, with the largest free RAM winning
  - MetricsWeigher: computes the weight based on various metrics of the compute node host
  - IoOpsWeigher: computes the weight based on the compute node host's workload. The default is to prefer lightly loaded compute hosts.
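To see how several weighers can combine into one ranking, here is a small sketch. It assumes each weigher's raw scores are min-max normalized to the range 0 to 1 and then scaled by a per-weigher multiplier before being summed; the function names, the dictionary layout, and the multiplier values are all illustrative assumptions rather than Nova's actual implementation.

```python
def normalize(values):
    # Min-max normalization; all-equal inputs map to 0.0 (an assumption).
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def weigh_hosts(hosts, weighers):
    # hosts: list of dicts; weighers: list of (multiplier, score_fn) pairs.
    totals = [0.0] * len(hosts)
    for multiplier, score_fn in weighers:
        scores = normalize([score_fn(h) for h in hosts])
        totals = [t + multiplier * s for t, s in zip(totals, scores)]
    order = sorted(range(len(hosts)), key=lambda i: totals[i], reverse=True)
    return [hosts[i]["name"] for i in order]


hosts = [
    {"name": "node1", "free_ram_mb": 4096, "io_ops": 10},
    {"name": "node2", "free_ram_mb": 8192, "io_ops": 5},
    {"name": "node3", "free_ram_mb": 2048, "io_ops": 0},
]
weighers = [
    (1.0, lambda h: h["free_ram_mb"]),  # RAM-style: more free RAM wins
    (-1.0, lambda h: h["io_ops"]),      # I/O-style: negative multiplier prefers light load
]
ranking = weigh_hosts(hosts, weighers)
print(ranking)
```

In this example `node2` ranks first: its large free RAM outweighs its moderate I/O load, while `node1` is penalized for carrying the heaviest workload.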

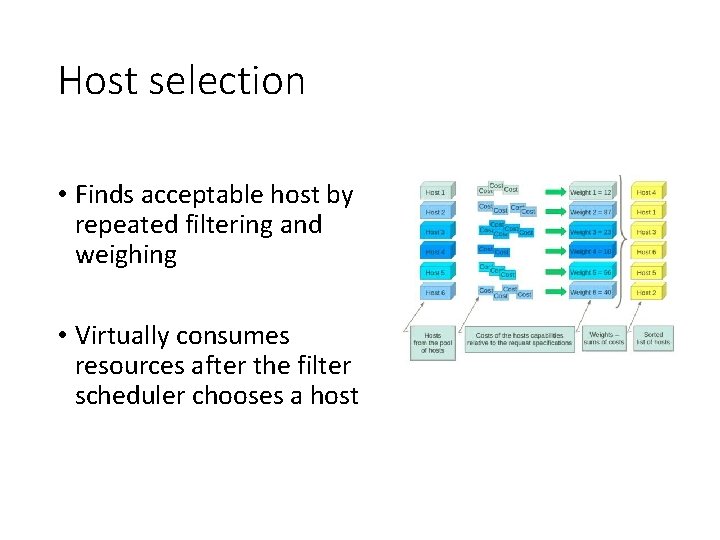

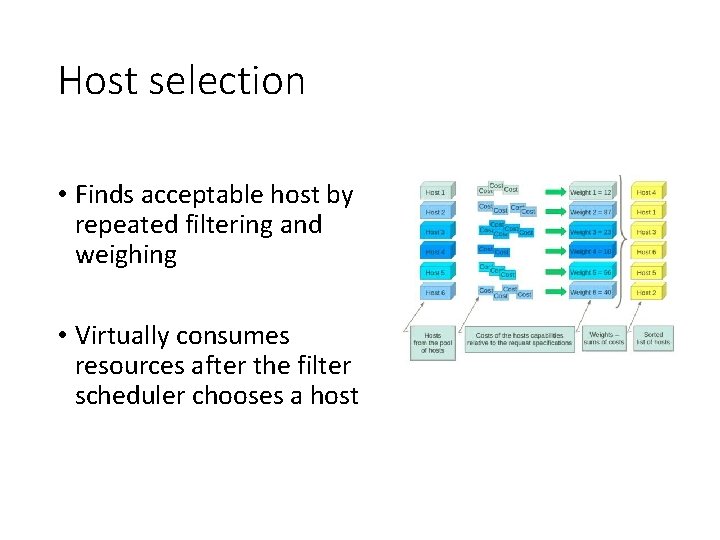

Host selection

- Finds an acceptable host by repeated filtering and weighing
- Virtually consumes resources after the filter scheduler chooses a host
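Virtual consumption matters when several instances are scheduled in one request: each choice must reduce the resources the next choice sees. The sketch below illustrates that idea with RAM only; `pick_hosts` and its data layout are hypothetical and not taken from Nova.

```python
def pick_hosts(hosts, ram_mb, count):
    # hosts: dict of host name -> free RAM in MB.
    # Work on a copy so the real inventory is untouched until VMs are built.
    view = dict(hosts)
    chosen = []
    for _ in range(count):
        # Filter: keep hosts that still fit the instance in the current view.
        candidates = {n: free for n, free in view.items() if free >= ram_mb}
        if not candidates:
            raise RuntimeError("No valid host found")
        # Weigh: prefer the host with the most free RAM.
        best = max(candidates, key=candidates.get)
        # Virtually consume the RAM so the next iteration sees the new state.
        view[best] -= ram_mb
        chosen.append(best)
    return chosen


placements = pick_hosts({"node1": 4096, "node2": 3072}, ram_mb=2048, count=3)
print(placements)
```

Without the virtual consumption step, all three instances would be sent to `node1`, which only has room for two.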

Host aggregates

- A mechanism to further partition an availability zone
- Only visible to administrators
- Allow administrators to assign key-value pairs to groups of machines
- Each node can belong to multiple aggregates
- Usage
  - Advanced scheduling
  - Hypervisor resource pool
  - Logical groups for migration
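As a rough illustration of how aggregate key-value pairs can drive advanced scheduling, the sketch below merges the metadata of every aggregate a host belongs to and keeps hosts whose merged metadata matches the requested pairs. The aggregate names, keys, and helper functions are invented for the example.

```python
# Hypothetical aggregates: each has member hosts and administrator-set metadata.
aggregates = {
    "ssd-pool": {"hosts": {"node1", "node2"}, "metadata": {"ssd": "true"}},
    "kvm-pool": {"hosts": {"node2", "node3"}, "metadata": {"hypervisor": "kvm"}},
}


def host_metadata(host):
    # A host can be in several aggregates, so merge all matching metadata.
    merged = {}
    for aggregate in aggregates.values():
        if host in aggregate["hosts"]:
            merged.update(aggregate["metadata"])
    return merged


def hosts_matching(required, hosts):
    # Keep hosts whose merged aggregate metadata contains every required pair.
    return [h for h in hosts
            if all(host_metadata(h).get(k) == v for k, v in required.items())]


matches = hosts_matching({"ssd": "true", "hypervisor": "kvm"},
                         ["node1", "node2", "node3"])
print(matches)
```

Only `node2` belongs to both aggregates, so it is the only host that satisfies both requirements.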

Schedulers of other services

- Storage
  - Cinder volume scheduler
- Network
  - DHCP agent scheduler
  - L3 agent scheduler
  - LBaaS agent scheduler

Scheduler as a Service (Gantt)

- Still in incubation
- Objective
  - Provide scheduling services through an API
  - Dynamic scheduling

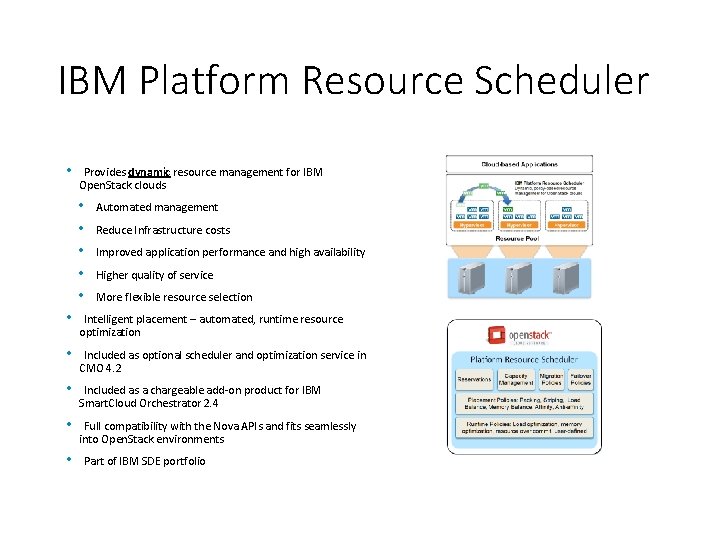

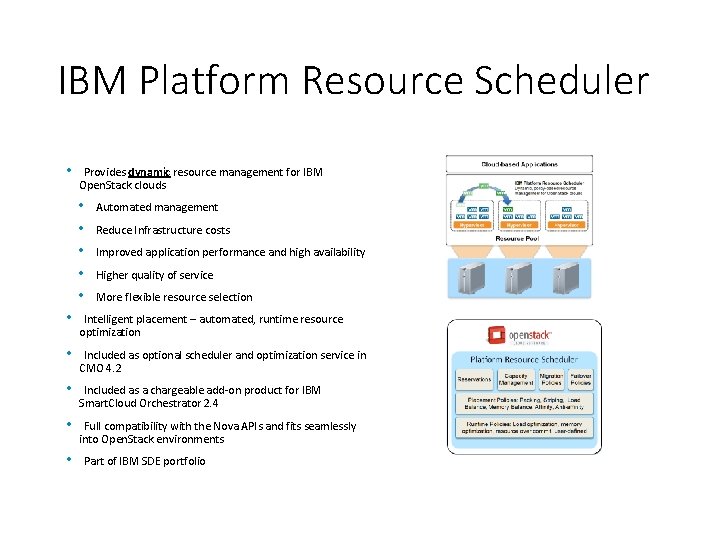

IBM Platform Resource Scheduler

- Provides dynamic resource management for IBM OpenStack clouds
  - Automated management
  - Reduced infrastructure costs
  - Improved application performance and high availability
  - Higher quality of service
  - More flexible resource selection
- Intelligent placement: automated, runtime resource optimization
- Included as an optional scheduler and optimization service in CMO 4.2
- Included as a chargeable add-on product for IBM SmartCloud Orchestrator 2.4
- Full compatibility with the Nova APIs; fits seamlessly into OpenStack environments
- Part of the IBM SDE portfolio

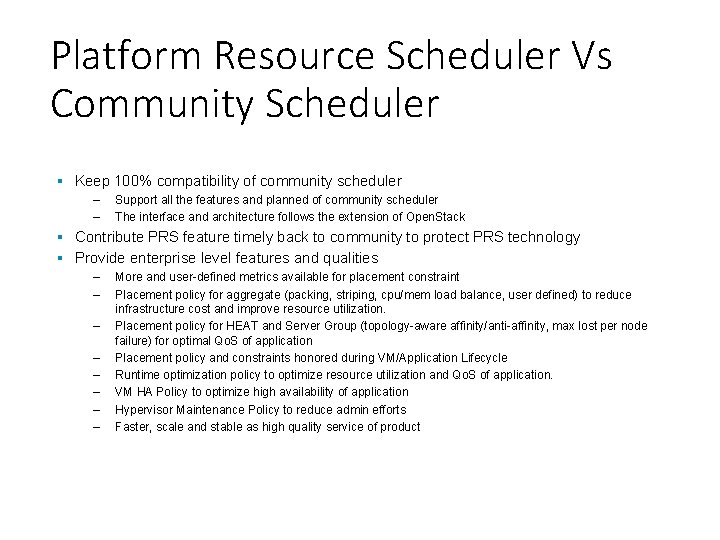

Platform Resource Scheduler vs. Community Scheduler

- Keep 100% compatibility with the community scheduler
  - Support all current and planned features of the community scheduler
  - The interface and architecture follow the OpenStack extension model
- Contribute PRS features back to the community in a timely manner to protect PRS technology
- Provide enterprise-level features and qualities
  - More metrics, including user-defined ones, available as placement constraints
  - Placement policy for aggregates (packing, striping, CPU/memory load balance, user defined) to reduce infrastructure cost and improve resource utilization
  - Placement policy for Heat and server groups (topology-aware affinity/anti-affinity, max loss per node failure) for optimal application QoS
  - Placement policy and constraints honored during the VM/application lifecycle
  - Runtime optimization policy to optimize resource utilization and application QoS
  - VM HA policy to optimize application high availability
  - Hypervisor maintenance policy to reduce admin effort
  - Faster, more scalable, and more stable, as a product-quality service
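The difference between packing and striping can be shown with a toy placement function. This is only one common reading of those terms (packing fills the fullest host that still fits, striping spreads onto the emptiest host) and is not taken from the PRS product; the function name and data layout are assumptions.

```python
def place(hosts, ram_mb, policy):
    # hosts: dict of host name -> free RAM in MB. Returns a host name or None.
    fits = {name: free for name, free in hosts.items() if free >= ram_mb}
    if not fits:
        return None
    if policy == "packing":
        # Consolidate: choose the host with the least free RAM that still fits.
        return min(fits, key=fits.get)
    if policy == "striping":
        # Spread: choose the host with the most free RAM.
        return max(fits, key=fits.get)
    raise ValueError(f"unknown policy: {policy}")


hosts = {"node1": 1024, "node2": 6144, "node3": 3072}
packed = place(hosts, 2048, "packing")
striped = place(hosts, 2048, "striping")
print(packed, striped)
```

Packing tends to leave whole hosts empty, which is what makes powering them down (and the cost savings the slide mentions) possible; striping trades that for lower contention per host.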

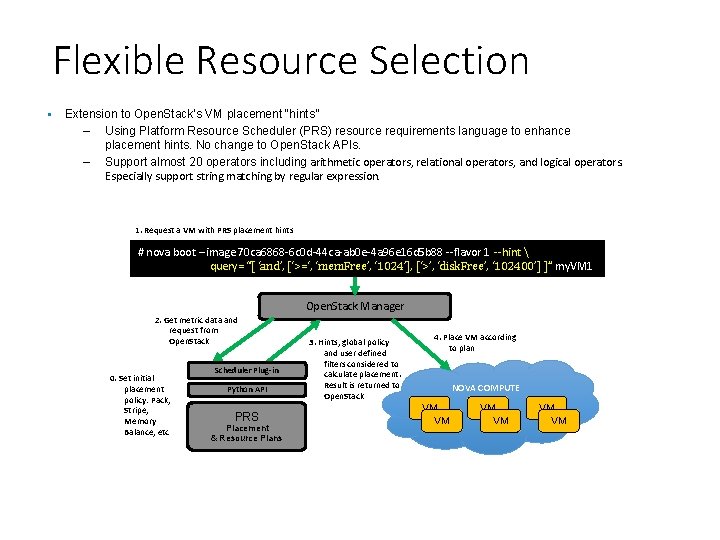

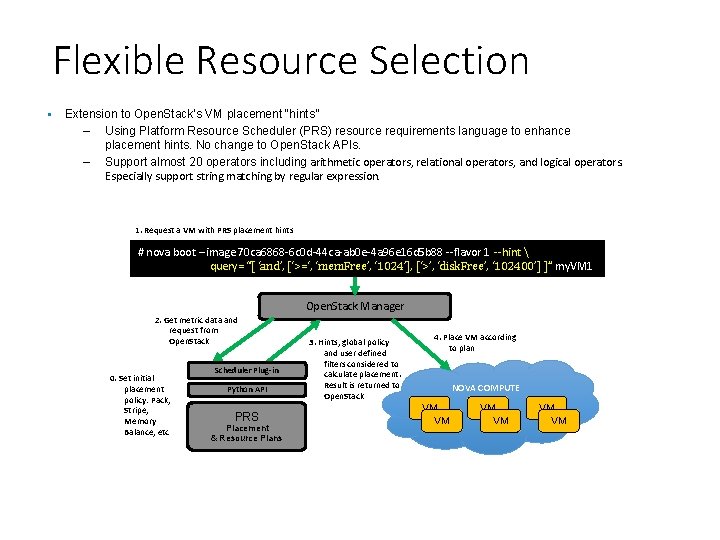

Flexible Resource Selection

- Extension to OpenStack's VM placement "hints"
  - Uses the Platform Resource Scheduler (PRS) resource requirements language to enhance placement hints. No change to OpenStack APIs.
  - Supports almost 20 operators, including arithmetic, relational, and logical operators. In particular, it supports string matching by regular expression.

Workflow (diagram components: OpenStack Manager, Scheduler Plug-in, Python API, PRS Placement & Resource Plans, Nova Compute):

0. Set the initial placement policy: Pack, Stripe, Memory Balance, etc.
1. Request a VM with PRS placement hints:
   `# nova boot --image 70ca6868-6c0d-44ca-ab0e-4a96e16d5b88 --flavor 1 --hint query="['and', ['>=', 'memFree', '1024'], ['>', 'diskFree', '102400']]" myVM1`
2. Get metric data and the request from OpenStack.
3. Hints, global policy, and user-defined filters are considered to calculate placement. The result is returned to OpenStack.
4. Place the VM according to the plan.
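A prefix-notation query like the one in the hint can be evaluated with a short recursive function. The sketch below is an assumption about how such a query might be interpreted, not the PRS implementation: the operator table covers only a handful of operators, the metric names mirror the slide's example, and the `match` operator name for regular expressions is hypothetical.

```python
import operator
import re

# Relational operators supported by this sketch (PRS supports more).
OPS = {
    ">=": operator.ge, ">": operator.gt,
    "<=": operator.le, "<": operator.lt,
    "=": operator.eq, "!=": operator.ne,
}


def evaluate(expr, metrics):
    # expr is a nested list: [operator, operand, operand, ...].
    op, *args = expr
    if op == "and":
        return all(evaluate(a, metrics) for a in args)
    if op == "or":
        return any(evaluate(a, metrics) for a in args)
    if op == "not":
        return not evaluate(args[0], metrics)
    name, value = args
    if op == "match":  # hypothetical name for regular-expression matching
        return re.search(value, str(metrics[name])) is not None
    # Relational comparison: values arrive as strings, so compare as numbers.
    return OPS[op](float(metrics[name]), float(value))


query = ["and", [">=", "memFree", "1024"], [">", "diskFree", "102400"]]
host_a = {"memFree": 2048, "diskFree": 204800, "hostname": "rack1-node3"}
host_b = {"memFree": 512, "diskFree": 204800, "hostname": "rack2-node1"}
print(evaluate(query, host_a), evaluate(query, host_b))
```

A scheduler would apply `evaluate` to every candidate host's metrics and keep only the hosts for which it returns `True`.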

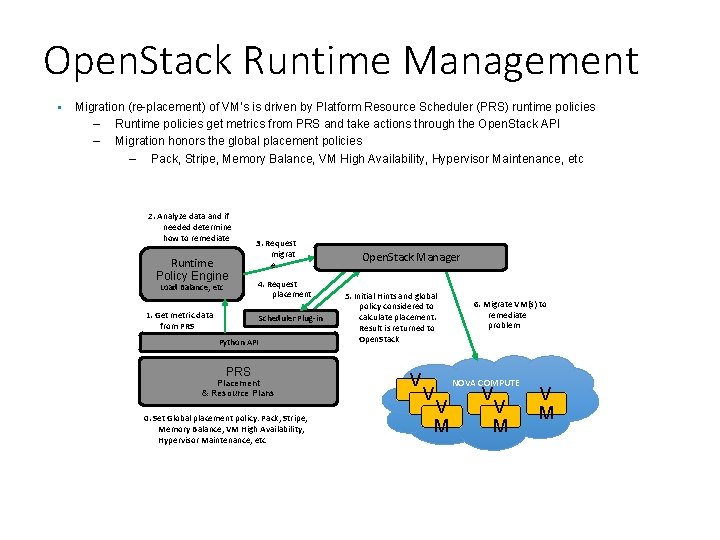

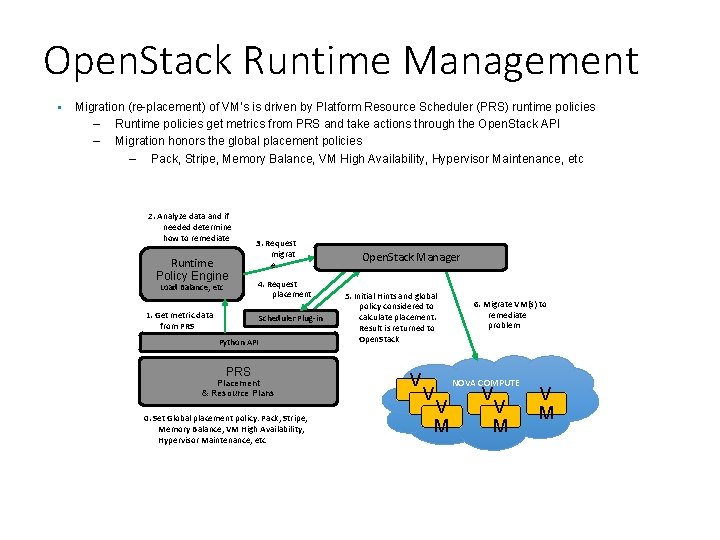

OpenStack Runtime Management

- Migration (re-placement) of VMs is driven by Platform Resource Scheduler (PRS) runtime policies
  - Runtime policies get metrics from PRS and take actions through the OpenStack API
  - Migration honors the global placement policies: Pack, Stripe, Memory Balance, VM High Availability, Hypervisor Maintenance, etc.

Workflow (diagram components: Runtime Policy Engine, OpenStack Manager, Scheduler Plug-in, Python API, PRS Placement & Resource Plans, Nova Compute):

0. Set the global placement policy: Pack, Stripe, Memory Balance, VM High Availability, Hypervisor Maintenance, etc.
1. Get metric data from PRS.
2. Analyze the data and, if needed, determine how to remediate (Runtime Policy Engine: Load Balance, etc.).
3. Request migration.
4. Request placement.
5. Initial hints and global policy are considered to calculate placement. The result is returned to OpenStack.
6. Migrate VM(s) to remediate the problem.
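The analyze-and-remediate step of a runtime policy can be pictured as a function that turns metrics into a list of proposed migrations. The following is a deliberately simple load-balance sketch under assumed rules (move the smallest VM off any host above a CPU threshold, onto the least loaded other host); the function name, threshold, and data layout are hypothetical and do not describe the PRS policy engine.

```python
def plan_migrations(hosts, threshold):
    # hosts: dict of host -> dict of VM name -> CPU load (percent of the host).
    # Returns a list of (vm, source_host, destination_host) proposals.
    # Assumes at least two hosts, so a destination always exists.
    totals = {h: sum(vms.values()) for h, vms in hosts.items()}
    moves = []
    for src in sorted(hosts):
        if totals[src] <= threshold or not hosts[src]:
            continue
        # Remediate with the cheapest move: the least loaded VM on the host.
        vm = min(hosts[src], key=hosts[src].get)
        load = hosts[src][vm]
        # Destination: the currently least loaded other host.
        dst = min((h for h in hosts if h != src), key=totals.get)
        # Only propose the move if it does not overload the destination.
        if totals[dst] + load <= threshold:
            moves.append((vm, src, dst))
            totals[src] -= load
            totals[dst] += load
    return moves


hosts = {
    "node1": {"vm1": 50, "vm2": 40},
    "node2": {"vm3": 20},
    "node3": {"vm4": 60},
}
moves = plan_migrations(hosts, threshold=80)
print(moves)
```

In the workflow described above, each proposed move would then go back through placement so that the initial hints and the global policy are still honored.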

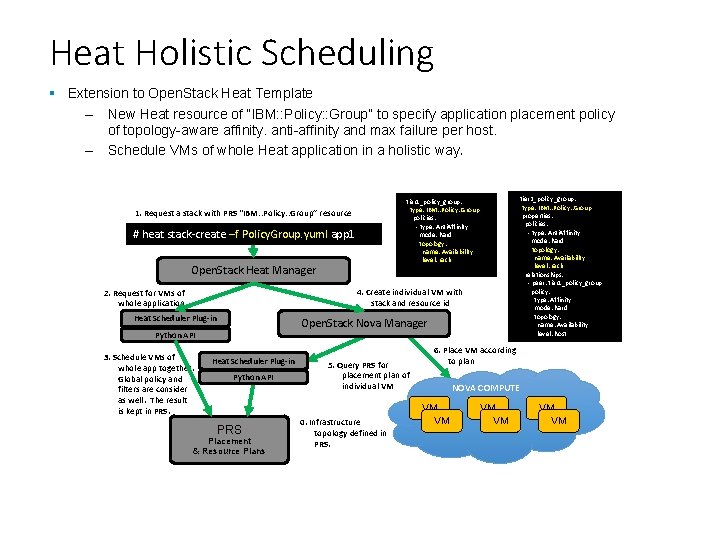

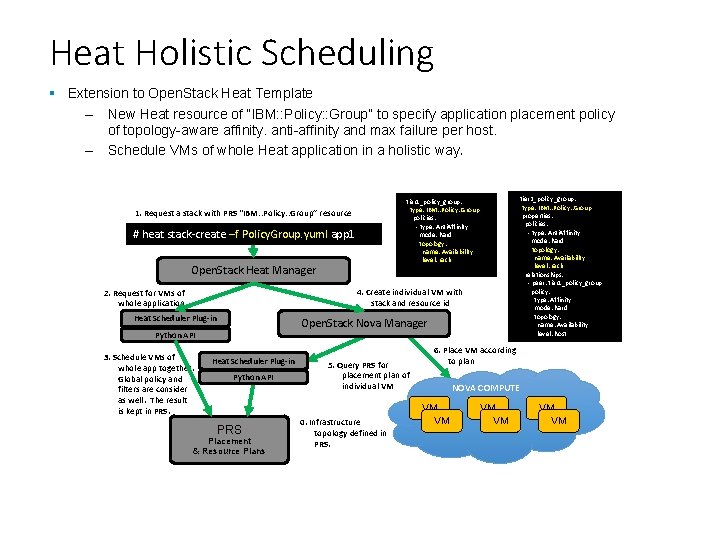

Heat Holistic Scheduling

- Extension to the OpenStack Heat template
  - New Heat resource "IBM::Policy::Group" to specify application placement policies: topology-aware affinity, anti-affinity, and max failure per host
  - Schedules the VMs of a whole Heat application in a holistic way

Example template:

    tier2_policy_group:
      type: IBM::Policy::Group
      properties:
        policies:
          - type: AntiAffinity
            mode: hard
            topology:
              name: Availability
              level: rack
        relationships:
          - peer: tier1_policy_group
            policy:
              type: Affinity
              mode: hard
              topology:
                name: Availability
                level: host
    tier1_policy_group:
      type: IBM::Policy::Group
      properties:
        policies:
          - type: AntiAffinity
            mode: hard
            topology:
              name: Availability
              level: rack

Workflow (diagram components: OpenStack Heat Manager, Heat Scheduler Plug-in, OpenStack Nova Manager, Scheduler Plug-in, Python API, PRS Placement & Resource Plans, Nova Compute):

0. Infrastructure topology is defined in PRS.
1. Request a stack with the PRS "IBM::Policy::Group" resource:
   `# heat stack-create -f PolicyGroup.yuml app1`
2. Request the VMs of the whole application.
3. Schedule the VMs of the whole application together. Global policy and filters are considered as well. The result is kept in PRS.
4. Create each individual VM with its stack and resource ID.
5. Query PRS for the placement plan of the individual VM.
6. Place the VM according to the plan.

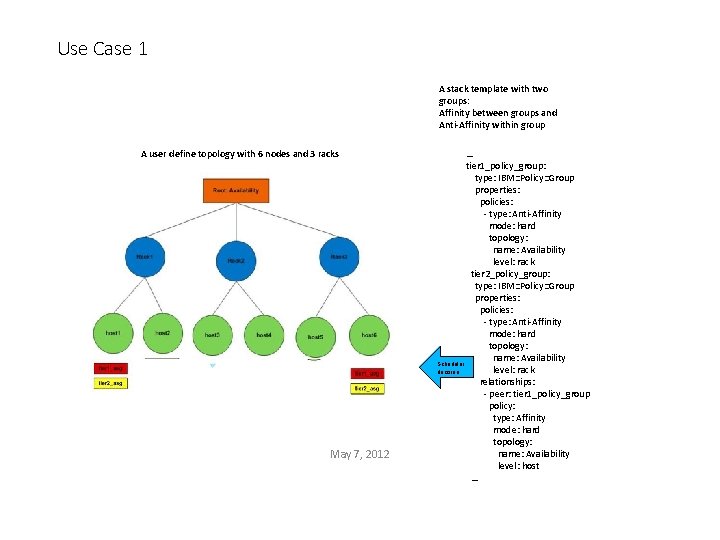

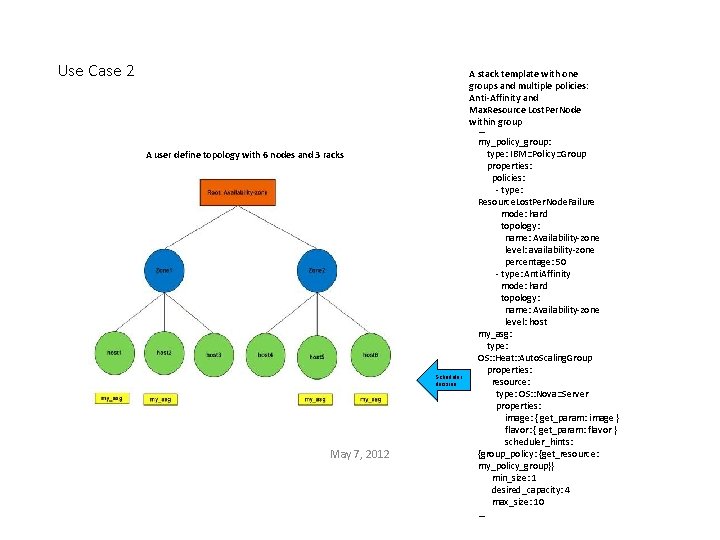

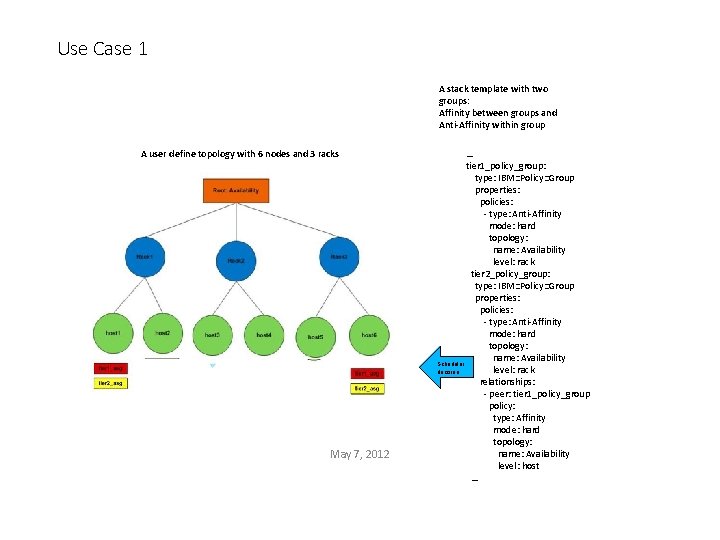

Use Case 1

A stack template with two groups: affinity between groups and anti-affinity within each group.

    …
    tier1_policy_group:
      type: IBM::Policy::Group
      properties:
        policies:
          - type: AntiAffinity
            mode: hard
            topology:
              name: Availability
              level: rack
    tier2_policy_group:
      type: IBM::Policy::Group
      properties:
        policies:
          - type: AntiAffinity
            mode: hard
            topology:
              name: Availability
              level: rack
        relationships:
          - peer: tier1_policy_group
            policy:
              type: Affinity
              mode: hard
              topology:
                name: Availability
                level: host
    …

Figure labels: a user-defined topology with 6 nodes and 3 racks; scheduler decision.

May 7, 2012
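One way to picture a placement that satisfies both constraints is sketched below. It assumes a topology of 3 racks with 2 nodes each, places each VM of the first group in a different rack, and then co-locates each VM of the second group with a distinct first-group VM. The VM names, node names, and functions are invented for illustration, and the actual scheduler decision in the figure may differ.

```python
# Hypothetical topology: 3 racks, 2 nodes per rack.
topology = {
    "rack1": ["node1", "node2"],
    "rack2": ["node3", "node4"],
    "rack3": ["node5", "node6"],
}


def place_anti_affinity(vms, topology):
    # Hard anti-affinity at rack level: every VM lands in a different rack.
    racks = sorted(topology)
    if len(vms) > len(racks):
        raise RuntimeError("hard rack anti-affinity cannot be satisfied")
    return {vm: topology[rack][0] for vm, rack in zip(vms, racks)}


def place_with_affinity(vms, peer_placement):
    # Hard affinity at host level: each VM shares a host with a peer-group VM.
    # Using distinct peer hosts also keeps these VMs in distinct racks,
    # because the peer hosts are themselves in distinct racks.
    peer_hosts = sorted(set(peer_placement.values()))
    if len(vms) > len(peer_hosts):
        raise RuntimeError("not enough peer hosts for hard affinity")
    return dict(zip(vms, peer_hosts))


tier1 = place_anti_affinity(["web1", "web2", "web3"], topology)
tier2 = place_with_affinity(["db1", "db2"], tier1)
print(tier1, tier2)
```

Scheduling both groups together is what makes this work: placing the first group without knowing about the second could choose hosts that leave no room for the co-located peers.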

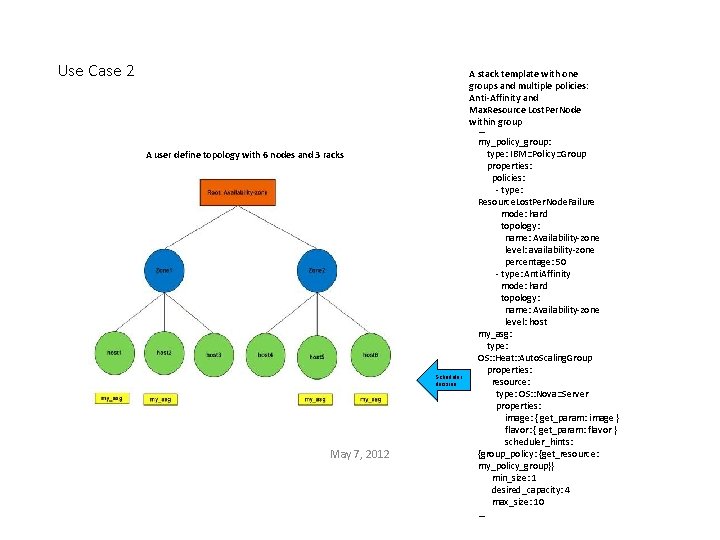

Use Case 2

A stack template with one group and multiple policies: Anti-Affinity and MaxResourceLostPerNode within the group.

    …
    my_policy_group:
      type: IBM::Policy::Group
      properties:
        policies:
          - type: ResourceLostPerNodeFailure
            mode: hard
            topology:
              name: Availability-zone
              level: availability-zone
            percentage: 50
          - type: AntiAffinity
            mode: hard
            topology:
              name: Availability-zone
              level: host
    my_asg:
      type: OS::Heat::AutoScalingGroup
      properties:
        resource:
          type: OS::Nova::Server
          properties:
            image: { get_param: image }
            flavor: { get_param: flavor }
            scheduler_hints: {group_policy: {get_resource: my_policy_group}}
        min_size: 1
        desired_capacity: 4
        max_size: 10
    …

Figure labels: a user-defined topology with 6 nodes and 3 racks; scheduler decision.
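The percentage in the first policy can be read as a cap on how much of the group may sit in one failure domain. The sketch below checks a placement against that reading: with 4 VMs and a percentage of 50, no availability zone may hold more than 2 of them. The rounding rule, the function names, and the zone layout are assumptions made for the example.

```python
import math
from collections import Counter


def max_per_domain(total_vms, percentage):
    # Assumption: round down, but always allow at least one VM per domain.
    return max(1, math.floor(total_vms * percentage / 100))


def satisfies_policy(placement, zone_of, percentage):
    # placement: dict of VM -> host; zone_of: dict of host -> availability zone.
    counts = Counter(zone_of[host] for host in placement.values())
    limit = max_per_domain(len(placement), percentage)
    return all(count <= limit for count in counts.values())


zone_of = {"node1": "az1", "node2": "az1", "node3": "az2", "node4": "az2"}
balanced = {"vm1": "node1", "vm2": "node2", "vm3": "node3", "vm4": "node4"}
skewed = {"vm1": "node1", "vm2": "node2", "vm3": "node1", "vm4": "node3"}
print(satisfies_policy(balanced, zone_of, 50), satisfies_policy(skewed, zone_of, 50))
```

The balanced placement passes because losing either zone takes out half of the group at most, while the skewed one would lose three of four VMs if `az1` failed.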

Thanks!