Online Platforms: MTurk and Prolific
Ethics Committee Social Sciences (ECSS)
July 2019

Content
• Online platforms for crowdsourcing behavioural research
  - Benefits
  - Recruitment vs data collection
• MTurk
  - Scientific considerations
  - Ethical considerations
• Prolific
  - Scientific considerations
  - Ethical considerations
• References and further reading

Online platforms for crowdsourcing behavioural research
• Benefits
  - Faster data collection & larger samples
  - Reductions in costs
  - More diverse populations than a sample of university students
  - Viable option to quickly replicate findings with a different sample

Online platforms for crowdsourcing behavioural research
• Recruitment vs data collection
  - Some platforms only provide recruitment services (you post a link to your study, e.g., one hosted in Qualtrics)
  - Other platforms also provide services for data collection (e.g., software to create & host experiments)
  - And there are platforms that provide both
Clearly indicate which platform you are using and for what purpose

MTurk
• Amazon Mechanical Turk (MTurk)
  - Founded in 2005
  - Platform for crowdsourcing participants (‘workers’ / ‘turkers’)
  - According to Amazon, there are more than 500,000 registered workers from 190 countries*
• Researchers and businesses (‘requestors’) can pay a fee to MTurk workers to complete Human Intelligence Tasks (HITs)
  - HITs may include surveys, experiments, coding tasks, or any other work requiring human intelligence
  - HITs can be posted using the internal MTurk survey platform (e.g., through TurkPrime) or by posting a link to an external survey platform (e.g., Qualtrics)
• After the HITs are completed, the requestor must either accept or reject the work. Once the work is accepted, the worker is compensated through their Amazon account.

MTurk – Scientific considerations
• Sample characteristics
  - The sample tends to be anchored primarily in the US (~90%) or India (~7%) -> not representative of the US and/or general population
  - MTurk workers are less emotionally stable, have higher negative affect, lower levels of wellbeing, and lower social engagement (compared to a community and/or student sample)
  - MTurk workers are more educated, less religious, and more likely to be unemployed (compared to the general population)
  - MTurk samples are less likely to complete a survey, have higher dropout rates, and are prone to social desirability bias, dishonesty and inattention (workers are incentivized to complete HITs quickly to maximize return on investment)
Understand the characteristics of the general MTurk participant pool and assess whether MTurk demographic characteristics are appropriate for the specific study goals at hand*

MTurk – Scientific considerations
• Procedures that may affect the quality of the data*
  - More than one individual may use the same Amazon account to complete HITs on MTurk
  - An estimated 80% of HITs are completed by the 20% most active workers (so-called ‘Super Turkers’)**. Related problems:
    * growing participant non-naivety
    * workers are familiar with common experimental paradigms and adept at getting past attention checks
    * Super Turkers repeatedly participate in similar research (effects of previous exposure to the same research design & reading instructions less carefully)
    -> the problem is exacerbated by several discussion boards that MTurk workers regularly use to discuss, and share information about, tasks
  - A large portion of non-English speakers may affect results
  - Some research found bot-like responses (using a VPN to hide their location)

MTurk – Scientific considerations
• Procedures that may affect the quality of the data – CONTINUED
  - Using the selection criteria ‘high HIT approval rating’ and/or ‘large number of HITs completed’ may increase the representation of Super Turkers in a sample
  - Researchers can accept or reject work (e.g., because participants failed attention checks or the quality of the data is low)
    * participants may try to anticipate the results they believe requestors expect, and answer so as to produce those results and avoid rejection

MTurk – Scientific considerations
• Low payment
  - may lead to unreliable / non-serious answers
  - may impact the quality of the data on MTurk*
• On the other hand, increasing payment
  - may attract Super Turkers or MTurk workers who view MTurk as a substantial source of income
  - for further discussion, see the ethical considerations on the next slides

MTurk – Ethical considerations
• It is insufficiently clear whether participants’ interests are respected in line with our national Code of Ethics
We will explain the following points in more detail:
  * Possible exploitation of participants / low payment
  * Unclear whether participants are adequately protected in the event of deception
  * No say on work regulations (digital sweatshop)
  * Dependency of academic research (digital giant)

MTurk – Ethical considerations
* Possible exploitation of participants / low payment
  - Average hourly pay is about $1.50 (median ~$2; only 4% earn more than $7.25/hour)
    A survey typically takes a couple of minutes per person, so the hourly rate is very low
    Especially problematic given that the majority of MTurk tasks are completed by a small set of workers who spend long hours on the website, many of them very poor, unemployed or underemployed, and often elderly or disabled
    -> for 25% of workers on online platforms, sub-minimum-wage online tasks are the only work available
  - 2/3 of MTurk workers consider themselves somewhat exploited

MTurk – Ethical considerations
* Possible exploitation of participants / low payment - CONTINUED
  - Increasing payment is not necessarily preferable
    It may coerce someone to participate or continue in a study that they do not want to do***
    Tasks that pay the best and take the least time get snapped up quickly, so workers have to monitor the site closely, waiting to grab them. Workers are not paid for this waiting time.
    Deciding on ethical pay is difficult -> minimum wage of the US, where Amazon is based, or minimum wage where the individual lives? Not everyone can complete the same number of HITs in one hour.***
  - Relatedly, many requestors post tasks that take longer to complete than stated
    As workers have already invested time, they will still complete the task, resulting in lower hourly pay
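
The effect of a task taking longer than advertised can be made concrete with a small calculation. The Python sketch below is illustrative only: the function name and the example figures (a $0.50 reward, 5 advertised minutes, 12 actual minutes) are assumptions chosen for the example, not numbers from the slides.

```python
def effective_hourly_rate(reward_usd: float, minutes_taken: float) -> float:
    """Hourly rate implied by a fixed reward and the time actually spent."""
    if minutes_taken <= 0:
        raise ValueError("minutes_taken must be positive")
    return reward_usd * 60.0 / minutes_taken


# Hypothetical HIT: $0.50 reward, advertised as a 5-minute task.
advertised_rate = effective_hourly_rate(0.50, 5)   # rate the worker expects

# The same HIT if it actually takes 12 minutes to complete.
actual_rate = effective_hourly_rate(0.50, 12)      # rate the worker receives

print(f"advertised: ${advertised_rate:.2f}/hour, actual: ${actual_rate:.2f}/hour")
```

Because the reward is fixed per HIT, every extra minute lowers the hourly rate, and unpaid time spent waiting for tasks would lower it further.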

MTurk – Ethical considerations
* Unclear whether participants are adequately protected in the event of deception
  - It is unclear what explanation and care participants receive after deception and manipulation
  - Researchers are able to post requests in a non-transparent way

MTurk – Ethical considerations
* No say on work regulations (digital sweatshop)
  - Amazon puts little effort into policing MTurk or establishing pay standards
    In Amazon’s own guidelines for researchers, $6/hour is suggested but not required
    Researchers can even list payments as low as $0.01
  - Amazon is fairly uninvolved in ensuring that the environment for workers and requestors is a positive one
    Possible exploitation because workers cannot rate researchers
  - Amazon’s own terms of service describe its involvement only as a ‘payment processor’
  - Amazon strives to keep costs down and profits up

MTurk – Ethical considerations
* No say on work regulations (digital sweatshop) - CONTINUED
  - Researchers can accept or reject work (e.g., because participants failed attention checks or the quality of the data is low)
    -> Amazon will not intervene when workers believe their work was rejected unfairly
  - There seems to be a general lack of trust towards requestors
    -> workers try to participate in studies of researchers they already know

MTurk – Ethical considerations
* Dependency of academic research (digital giant)
  - Amazon generates income as a percentage of each HIT payment: 20% fee on what researchers pay to workers [January 2018]
    -> has caused requestors to offer less money to workers
  - Speaking negatively about Amazon has led to account suspensions; accounts can be terminated without notice
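
A rough calculation shows why a percentage fee can push rewards down when the research budget is fixed. This is a minimal sketch, assuming the 20% fee is charged on top of the worker reward, as the slide describes. The function names and the budget figures ($600 for 100 participants) are hypothetical.

```python
def total_cost(reward_per_worker: float, n_workers: int, fee_rate: float = 0.20) -> float:
    """Total cost to the requestor: rewards plus a fee charged on top of the rewards."""
    return reward_per_worker * n_workers * (1.0 + fee_rate)


def max_reward_for_budget(budget: float, n_workers: int, fee_rate: float = 0.20) -> float:
    """Largest per-worker reward that keeps the total cost within a fixed budget."""
    if n_workers <= 0:
        raise ValueError("n_workers must be positive")
    return budget / (n_workers * (1.0 + fee_rate))


# Hypothetical study: a fixed budget of $600 for 100 participants.
reward_without_fee = max_reward_for_budget(600, 100, fee_rate=0.0)
reward_with_fee = max_reward_for_budget(600, 100)  # assumed 20% fee

print(f"without fee: ${reward_without_fee:.2f}, with fee: ${reward_with_fee:.2f}")
```

With the budget held constant, the fee comes out of what would otherwise be paid to workers, which is the mechanism the slide points to.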

Prolific as an alternative?

Prolific
• Prolific Academic
  - Launched in 2014 by graduate students from Oxford and Sheffield Universities
  - Platform for subject recruitment
  - 60,000 accounts (in January 2017); 35,600 active accounts (in December 2017)
• Researchers can integrate any survey or experimental software by using a link. Longitudinal studies can also be implemented.
  It is not possible to create a survey using Prolific -> Prolific is only for recruitment
• Free prescreening based on
  - previous approval rate
  - demographics
  - taking part in previous studies
  - questions used in earlier studies
  - your own question, which you can propose to Prolific

Prolific – Scientific considerations
• Sample characteristics
  - Access to new, more naïve populations than MTurk
    The degree of overlap between platforms seems to be quite small (22% of Prolific users also use MTurk)
  - Similar distribution in ethnicity as MTurk, although a lower % of non-Caucasian participants and more participants outside the US
    ~25% North America, ~31% UK, ~27% Europe, ~8% South America, ~6% India, rest from Africa and East Asia
  - More diverse participants
  - Participants are less dishonest than on MTurk

Prolific – Scientific considerations
• Quality of the data
  - Data quality comparable to MTurk (1 study)
  - Dropout rate similar to MTurk (1 study -> 10%)
  - Lower degree of cheating than on MTurk
  - Completed tasks per (active) worker are less skewed; participants are more naïve
    * 69% of Prolific users spend between 1 and 8 hours per week on the platform (majority 1-2 hours per week) -> MTurk: 79% of MTurk users spend 8 or more hours per week on the platform
    * Median number of tasks completed per participant: Prolific 10-30, MTurk 5900-7100
  - Prolific is actively banning bots

Prolific – Ethical considerations
• Payment
  - Minimum reward / fixed pay per unit of time (£6.50/hour)
  - The time required for an experiment is initially estimated by the experimenter, but is then updated with the actual time taken once participants make submissions
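
As an illustration of how a minimum hourly rate translates into a per-study reward, the Python sketch below computes the smallest reward that meets the £6.50/hour figure quoted on the slide, and the hourly rate that results once the actual completion time is known. The function names, the round-up-to-the-nearest-penny rule, and the example durations are assumptions for illustration; they are not taken from Prolific's documentation, and the rate itself may have changed since 2019.

```python
import math

MIN_HOURLY_RATE_PENCE = 650  # £6.50/hour, as quoted on the slide (2019)


def minimum_reward_pence(estimated_minutes: float,
                         hourly_rate_pence: int = MIN_HOURLY_RATE_PENCE) -> int:
    """Smallest reward (in pence) that meets the hourly rate for the estimated duration.

    Rounding up to a whole penny is an assumption made for this sketch.
    """
    if estimated_minutes <= 0:
        raise ValueError("estimated_minutes must be positive")
    return math.ceil(hourly_rate_pence * estimated_minutes / 60)


def realised_hourly_rate_pence(reward_pence: int, actual_minutes: float) -> float:
    """Hourly rate (in pence) once the actual completion time is known."""
    if actual_minutes <= 0:
        raise ValueError("actual_minutes must be positive")
    return reward_pence * 60 / actual_minutes


# Hypothetical study estimated at 10 minutes by the experimenter.
reward = minimum_reward_pence(10)

# If submissions show the study actually takes 15 minutes, the realised rate drops.
realised = realised_hourly_rate_pence(reward, 15)
below_minimum = realised < MIN_HOURLY_RATE_PENCE

print(f"reward: {reward}p, realised rate: {realised:.0f}p/hour, below minimum: {below_minimum}")
```

Comparing the realised rate with the minimum is one way a researcher could check, after a pilot, whether the time estimate and reward need adjusting.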

Prolific – Ethical considerations
• Protection of participants
  - Participants can be messaged without compromising anonymity
  - Prolific has detailed rules regarding the treatment of subjects on the platform
  - Participants are explicitly informed that they are recruited for participation in research
  - Clear guidelines for researchers for the handling of submissions
  - Clarity about rights, obligations and compensation
  - Rejections of submissions have to be reasonable and can be overruled if a participant objects to a rejection
  - Subjects have several options for terminating a study without negatively affecting their reputation score -> quick and risk-free option for withdrawing consent at any time during the study

Prolific – Ethical considerations
• Prolific as a research platform
  - User-friendly interface
  - Services explicitly targeted at researchers (and startups)
  - Supported by the University of Oxford
  - Providing a subject pool for research is the core of Prolific’s business
    MTurk is not a focus product of Amazon and has not seen much development in recent years
  - GDPR compliant and approved by the FSW privacy officer as a recruitment platform

References and further reading
https://www.brookings.edu/blog/brown-center-chalkboard/2016/02/08/can-crowdsourcing-be-ethical/
https://www.theatlantic.com/business/archive/2018/01/amazon-mechanical-turk/551192/
https://www.psychologicalscience.org/observer/using-amazons-mechanical-turk-benefits-drawbacks-and-suggestions
Hara, K., Adams, A., Milland, K., Savage, S., Callison-Burch, C., & Bigham, J. P. (2018, April). A data-driven analysis of workers' earnings on Amazon Mechanical Turk. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (p. 449). ACM.
Keith, M. G., Tay, L., & Harms, P. D. (2017). Systems perspective of Amazon Mechanical Turk for organizational research: Review and recommendations. Frontiers in Psychology, 8, 1359.
McCredie, M. N., & Morey, L. C. (2018). Who are the Turkers? A characterization of MTurk workers using the Personality Assessment Inventory. Assessment, 1073191118760709.
Milland, K. (2016). A Mechanical Turk worker’s perspective. Journal of Media Ethics, 31(4), 263-264.
Pittman, M., & Sheehan, K. (2016). Amazon’s Mechanical Turk a digital sweatshop? Transparency and accountability in crowdsourced online research. Journal of Media Ethics, 31(4), 260-262.

References and further reading
https://blog.prolific.ac/so-you-want-to-recruit-participants-online/
https://helpcentre.prolific.ac/hc/en-gb/articles/360009223133-Is-online-crowdsourcing-a-legitimate-alternative-to-lab-based-research
Palan, S., & Schitter, C. (2018). Prolific.ac—A subject pool for online experiments. Journal of Behavioral and Experimental Finance, 17, 22-27.
Peer, E., Brandimarte, L., Samat, S., & Acquisti, A. (2017). Beyond the Turk: Alternative platforms for crowdsourcing behavioral research. Journal of Experimental Social Psychology, 70, 153-163.

Prolific crowdsourcing

Prolific crowdsourcing Mturk suite tutorial

Mturk suite tutorial Erdos number calculator

Erdos number calculator Online platforms tools and application

Online platforms tools and application Sharing of diverse information through universal web access

Sharing of diverse information through universal web access Social media platforms

Social media platforms Ethics committee definition

Ethics committee definition Macro and micro ethics

Macro and micro ethics Descriptive ethics

Descriptive ethics Descriptive ethics vs normative ethics

Descriptive ethics vs normative ethics Descriptive ethics vs normative ethics

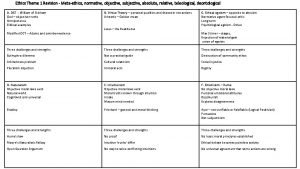

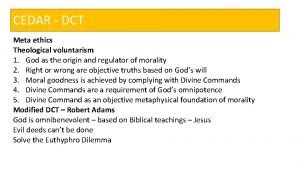

Descriptive ethics vs normative ethics Methaethics

Methaethics Descriptive ethics vs normative ethics

Descriptive ethics vs normative ethics Normative vs descriptive ethics

Normative vs descriptive ethics Meta ethics vs normative ethics

Meta ethics vs normative ethics Metaethics

Metaethics Deontological

Deontological Teleological ethics vs deontological ethics

Teleological ethics vs deontological ethics Apa itu social thinking

Apa itu social thinking Social thinking social influence social relations

Social thinking social influence social relations Security strategies in windows platforms and applications

Security strategies in windows platforms and applications Security strategies in windows platforms and applications

Security strategies in windows platforms and applications Security strategies in linux platforms and applications

Security strategies in linux platforms and applications Security strategies in windows platforms and applications

Security strategies in windows platforms and applications Security strategies in linux platforms and applications

Security strategies in linux platforms and applications Tbpe online ethics exam answers

Tbpe online ethics exam answers