Online Bayesian Models for Personal Analytics in Social Media

Svitlana Volkova, joint work with Benjamin Van Durme (1, 2), Glen Coppersmith (2) and David Yarowsky (1, 2)
(1) Center for Language and Speech Processing, Johns Hopkins University; (2) Human Language Technology Center of Excellence

Social Media
• Personalized, diverse and timely data
• Can reveal user interests, preferences and opinions
Examples: Social Network Prediction App (https://apps.facebook.com/snpredictionapp/); Demographics Pro (http://www.demographicspro.com/); Wolfram|Alpha Analytics (http://www.wolframalpha.com/facebook/)

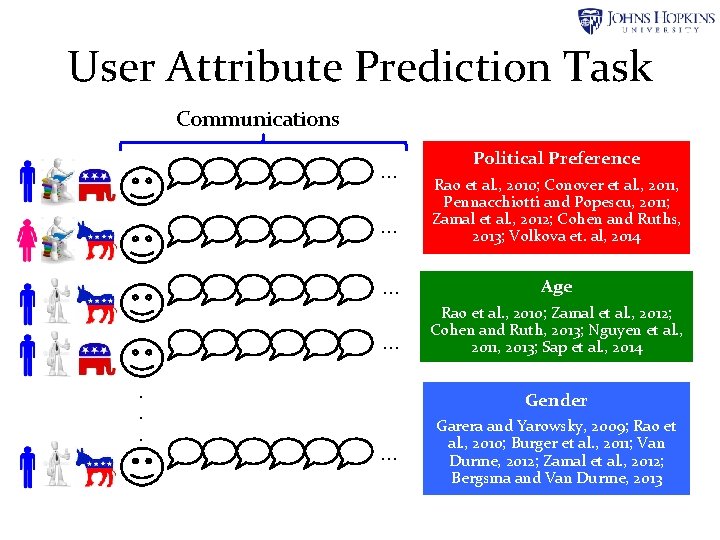

User Attribute Prediction Task: predicting user attributes from their communications
• Political Preference: Rao et al., 2010; Conover et al., 2011; Pennacchiotti and Popescu, 2011; Zamal et al., 2012; Cohen and Ruths, 2013; Volkova et al., 2014
• Age: Rao et al., 2010; Zamal et al., 2012; Cohen and Ruths, 2013; Nguyen et al., 2011, 2013; Sap et al., 2014
• Gender: Garera and Yarowsky, 2009; Rao et al., 2010; Burger et al., 2011; Van Durme, 2012; Zamal et al., 2012; Bergsma and Van Durme, 2013

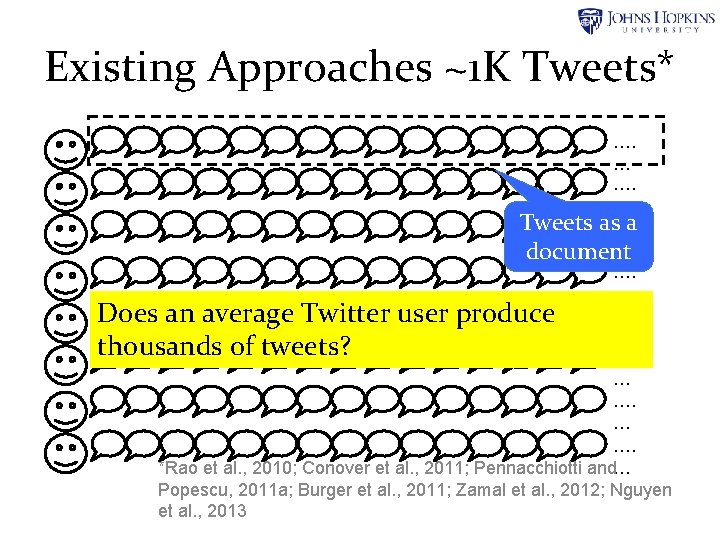

Existing Approaches: ~1K tweets* per user, with a user's tweets treated as a single document. Does an average Twitter user produce thousands of tweets?
*Rao et al., 2010; Conover et al., 2011; Pennacchiotti and Popescu, 2011a; Burger et al., 2011; Zamal et al., 2012; Nguyen et al., 2013

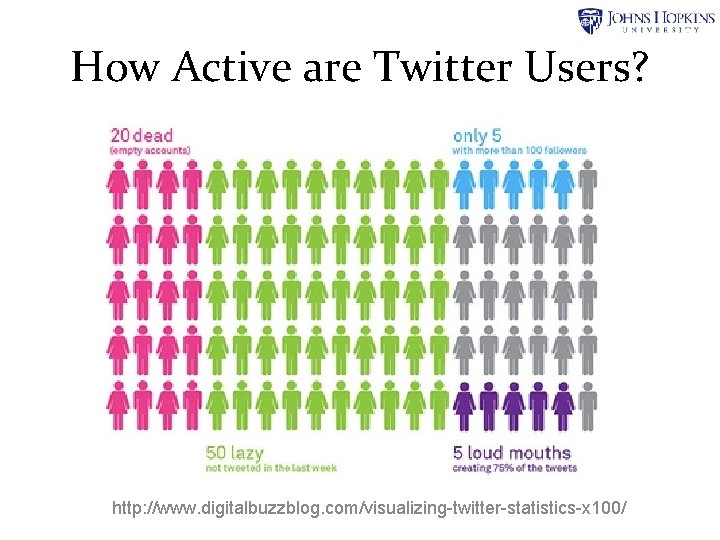

How Active Are Twitter Users? http://www.digitalbuzzblog.com/visualizing-twitter-statistics-x100/

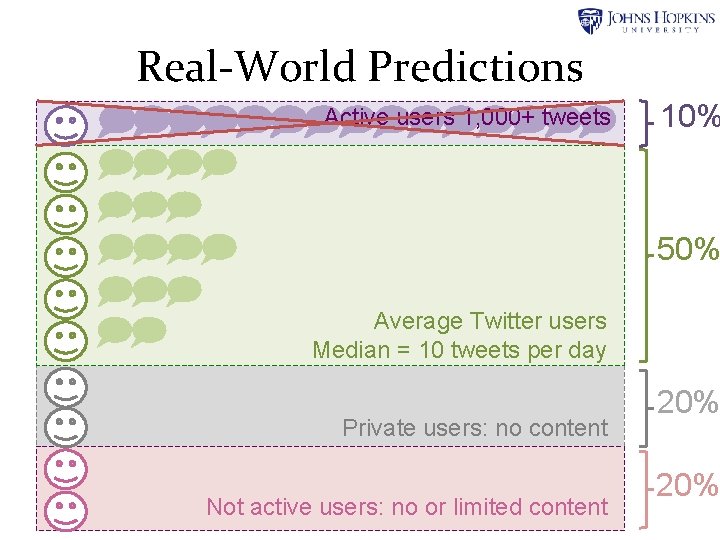

Real-World Predictions
• Active users: 1,000+ tweets (10%)
• Average Twitter users: median = 10 tweets per day (50%)
• Private users: no content
• Not active users: no or limited content (20%)

Outline
I. Static (Batch) Prediction
II. Streaming (Online) Inference
III. Dynamic (Iterative) Learning and Prediction
IV. Inferring User Demographics, Personality, Emotions and Opinions (AAAI Demo)

Part I. Static (Batch) Prediction

Attributed Social Network: User Local Neighborhoods, a.k.a. Social Circles

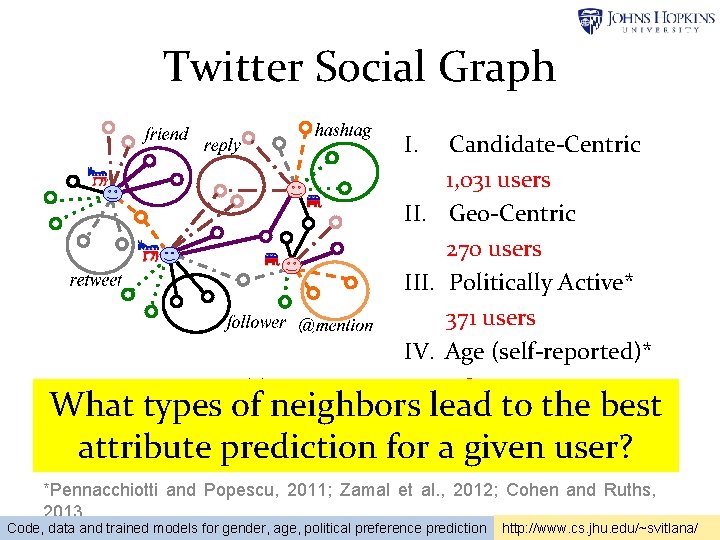

Twitter Social Graph
I. Candidate-Centric: 1,031 users
II. Geo-Centric: 270 users
III. Politically Active*: 371 users
IV. Age (self-reported)*: 387 users
V. Gender (name)*: 384 users
10-20 neighbors of each type per user; thousands of nodes and edges.
What types of neighbors lead to the best attribute prediction for a given user?
*Pennacchiotti and Popescu, 2011; Zamal et al., 2012; Cohen and Ruths, 2013
Code, data and trained models for gender, age and political preference prediction: http://www.cs.jhu.edu/~svitlana/

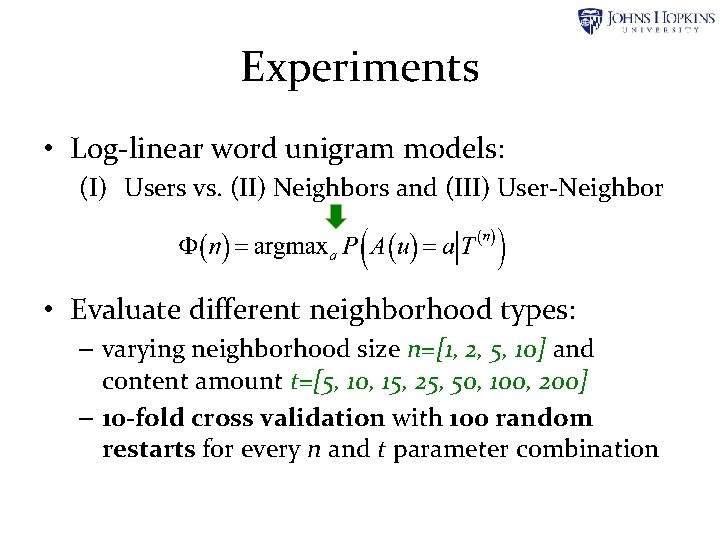

Experiments
• Log-linear word unigram models: (I) User vs. (II) Neighbor vs. (III) User-Neighbor
• Evaluate different neighborhood types:
  – varying neighborhood size n = [1, 2, 5, 10] and content amount t = [5, 10, 15, 25, 50, 100, 200]
  – 10-fold cross-validation with 100 random restarts for every (n, t) parameter combination

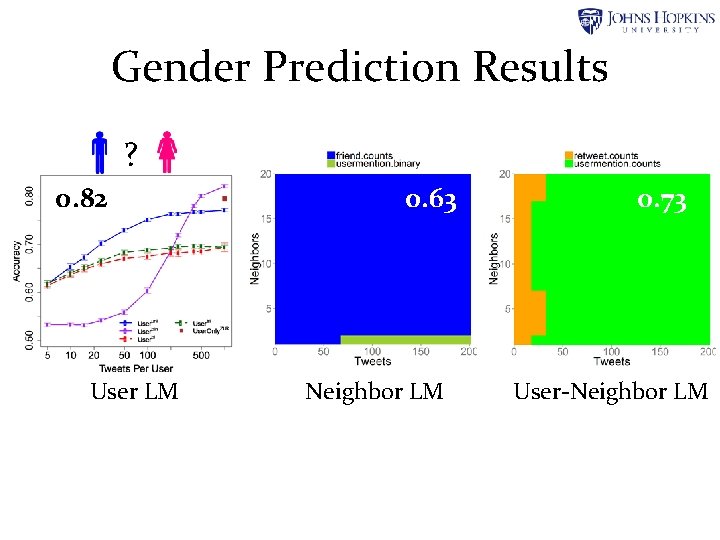

Gender Prediction Results: User LM 0.82; Neighbor LM 0.63; User-Neighbor LM 0.73

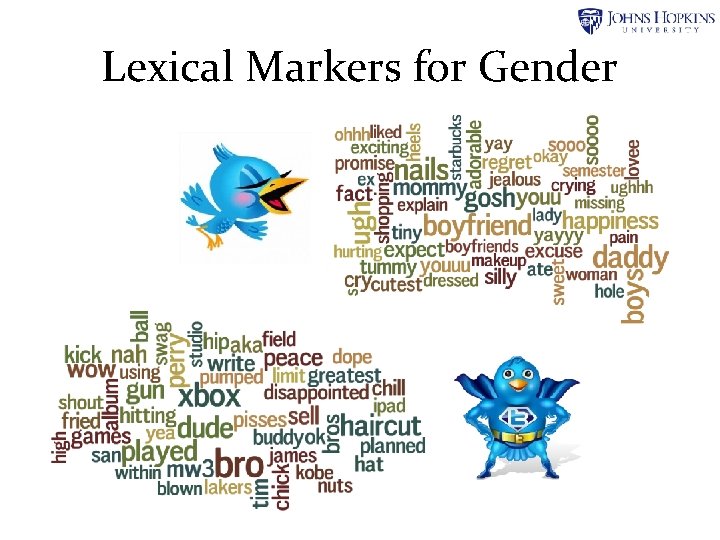

Lexical Markers for Gender

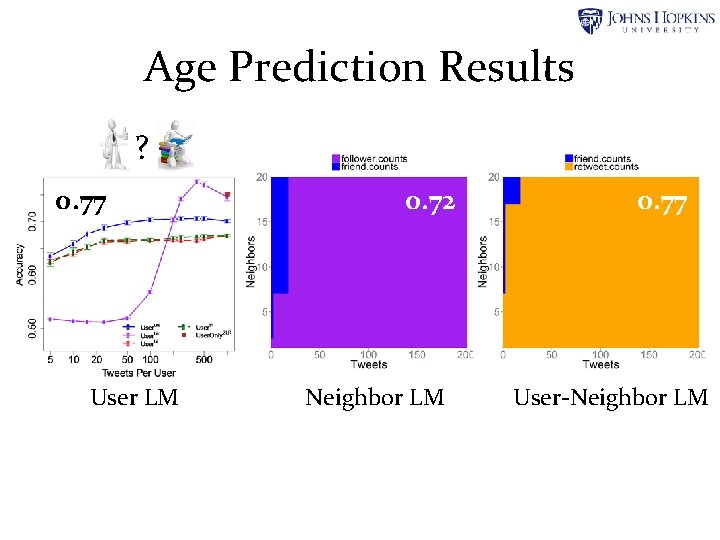

Age Prediction Results: User LM 0.77; Neighbor LM 0.72; User-Neighbor LM 0.77

Lexical Markers for Age

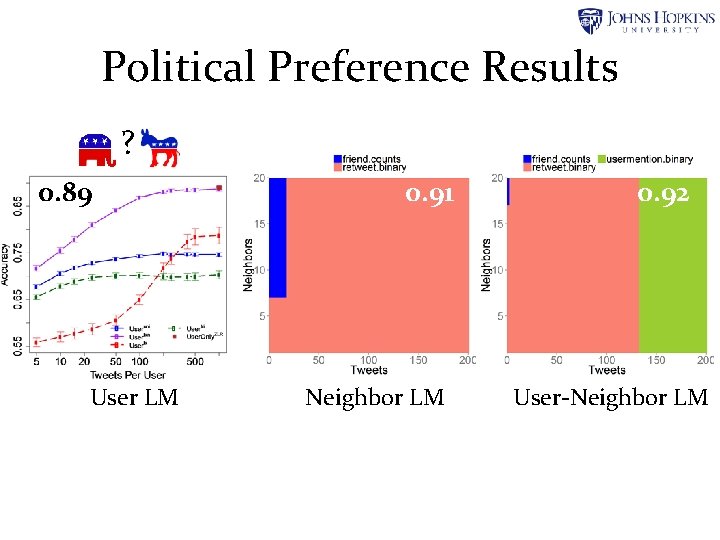

Political Preference Results: User LM 0.89; Neighbor LM 0.91; User-Neighbor LM 0.92

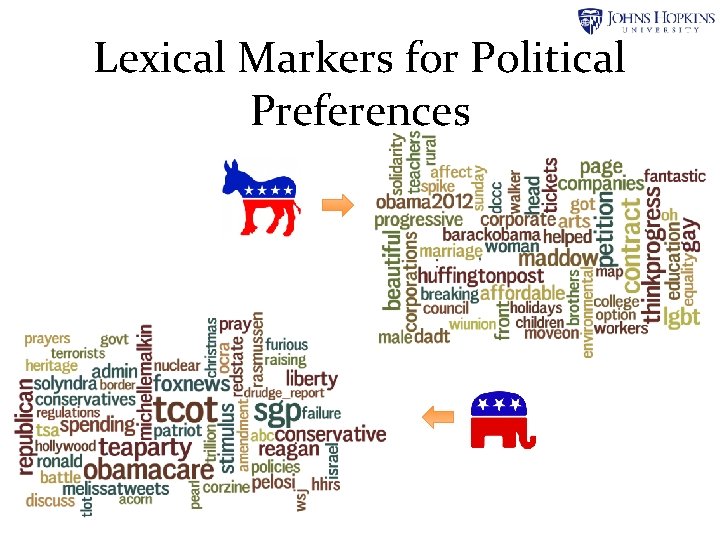

Lexical Markers for Political Preferences

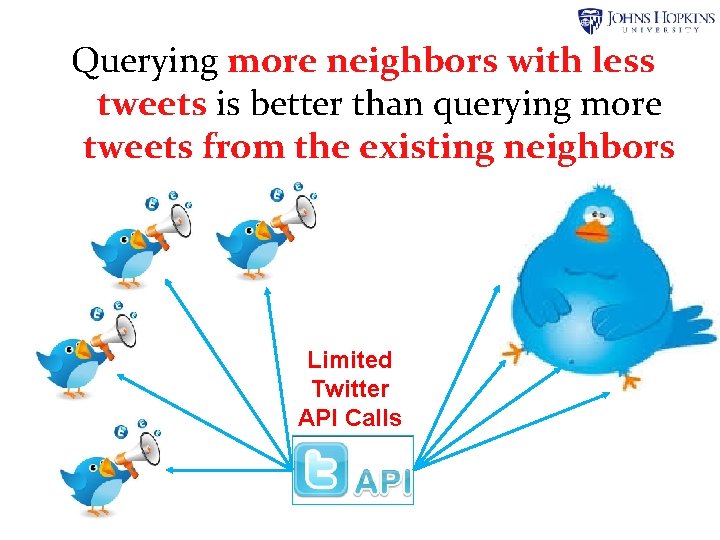

Limited Twitter API Calls: querying more neighbors with fewer tweets each is better than querying more tweets from the existing neighbors.

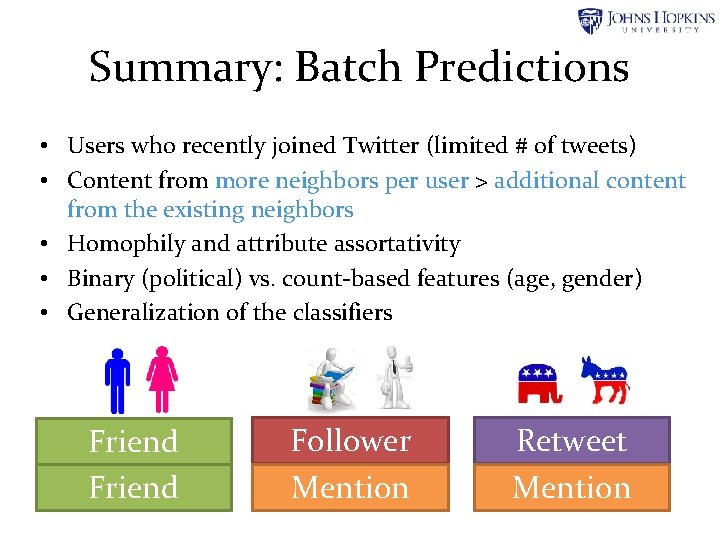

Summary: Batch Predictions
• Users who recently joined Twitter (limited # of tweets)
• Content from more neighbors per user > additional content from the existing neighbors
• Homophily and attribute assortativity
• Binary (political) vs. count-based features (age, gender)
• Generalization of the classifiers
(Neighbor types: friend, follower, mention, retweet)
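The homophily and attribute assortativity point can be made concrete with a simple edge-homophily ratio: the fraction of edges, per neighbor type, whose endpoints share the attribute label. This is a hedged illustration rather than the measure used in the talk; it is simpler than Newman's assortativity coefficient, and the function names and toy labels are hypothetical.

```python
def edge_homophily(edges, labels):
    """Fraction of edges (u, v) whose endpoints have the same attribute label.

    Edges touching an unlabeled node are skipped; returns None if no edge qualifies.
    """
    same = total = 0
    for u, v in edges:
        if u in labels and v in labels:
            total += 1
            if labels[u] == labels[v]:
                same += 1
    return same / total if total else None


def homophily_by_type(typed_edges, labels):
    """Edge homophily computed separately for each neighbor type."""
    return {kind: edge_homophily(edges, labels) for kind, edges in typed_edges.items()}


# Toy graph (hypothetical): political labels and two neighbor types.
labels = {"ann": "D", "bob": "D", "cat": "R", "dan": "R"}
typed_edges = {
    "friend": [("ann", "bob"), ("ann", "cat")],
    "retweet": [("ann", "bob"), ("cat", "dan")],
}
scores = homophily_by_type(typed_edges, labels)
print(scores)  # {'friend': 0.5, 'retweet': 1.0}
```

A higher ratio for a neighbor type suggests that its content is more informative about the user's own attribute, which is the intuition behind using neighbor content at all.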

Part II. Streaming (Online) Inference

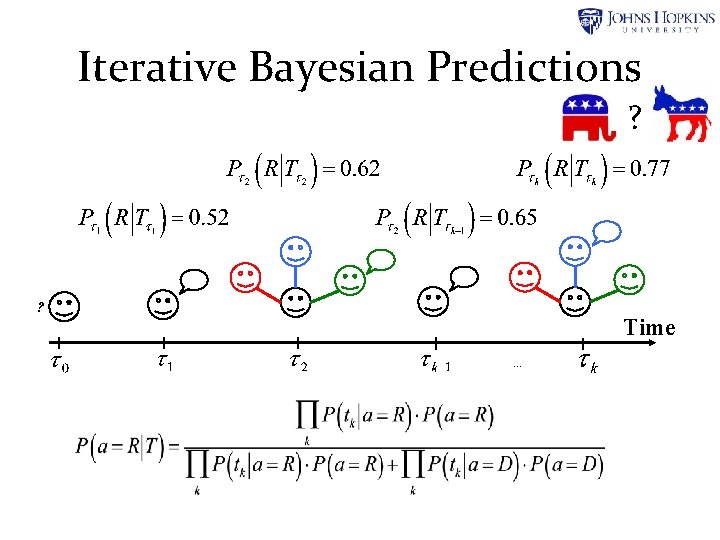

Iterative Bayesian Predictions over Time

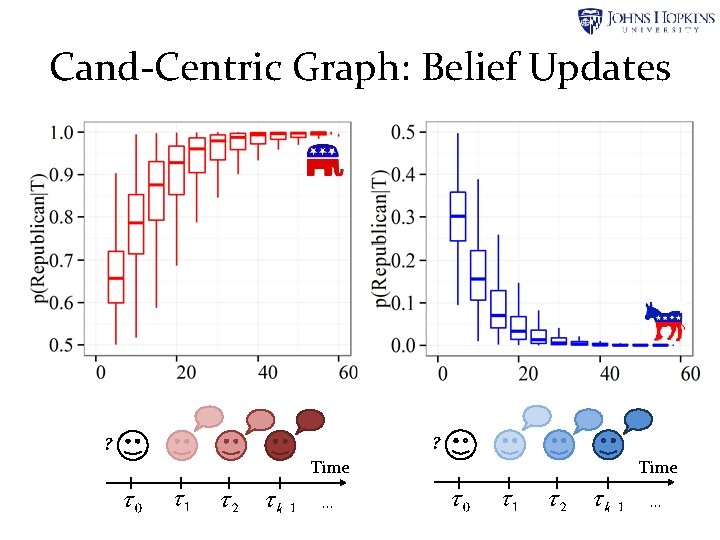
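A minimal sketch of iterative Bayesian belief updates, assuming tweets are conditionally independent given the attribute and that each tweet comes with per-class likelihood scores (for example, a classifier's P(a | tweet) divided by the class prior). Under those assumptions each new tweet multiplies the current belief by its likelihoods and renormalizes. The function names and the (timestamp, likelihoods) stream format are hypothetical; merging neighbor tweets into the same time-ordered stream is one way to model the User-Neighbor stream.

```python
import heapq


def update_belief(belief, likelihoods):
    """Bayes' rule for one tweet: new belief is proportional to old belief * P(tweet | a)."""
    unnormalized = {a: belief[a] * likelihoods[a] for a in belief}
    z = sum(unnormalized.values())
    return {a: p / z for a, p in unnormalized.items()}


def belief_trajectory(prior, *streams):
    """Belief after each tweet, processing one or more streams in timestamp order.

    Each stream is a list of (timestamp, {attribute_value: likelihood}) pairs,
    already sorted by timestamp. Pass the user's stream alone (U) or together
    with neighbor streams (UN).
    """
    belief = dict(prior)
    trajectory = []
    for _, likelihoods in heapq.merge(*streams, key=lambda item: item[0]):
        belief = update_belief(belief, likelihoods)
        trajectory.append(belief)
    return trajectory


# Toy streams (hypothetical): every tweet leans slightly Democratic.
user = [(1, {"D": 0.6, "R": 0.4}), (3, {"D": 0.6, "R": 0.4})]
neighbors = [(2, {"D": 0.6, "R": 0.4})]
u_only = belief_trajectory({"D": 0.5, "R": 0.5}, user)
u_and_n = belief_trajectory({"D": 0.5, "R": 0.5}, user, neighbors)
```

Renormalizing after every tweet keeps the belief a proper distribution and avoids underflow on long streams; with neighbor tweets interleaved, the belief sharpens after fewer of the user's own tweets.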

Candidate-Centric Graph: Belief Updates over Time

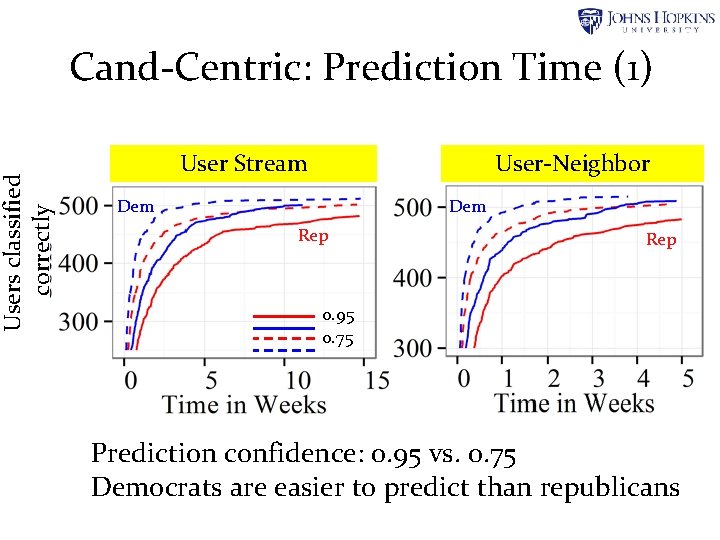

Candidate-Centric: Prediction Time (1)
Users classified correctly over time: User Stream vs. User-Neighbor Stream, Democrats (Dem) vs. Republicans (Rep), at prediction confidence 0.95 vs. 0.75.
Democrats are easier to predict than Republicans.

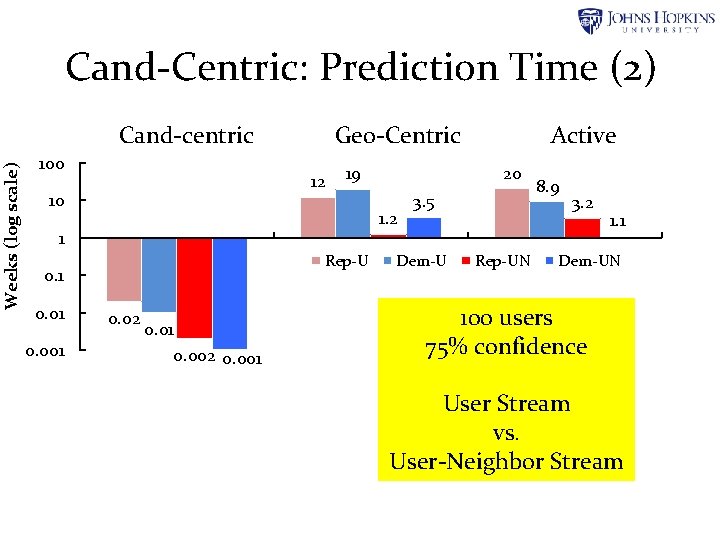
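Prediction time can be approximated as the first step at which a user's belief crosses a confidence threshold such as 0.75 or 0.95. A minimal binary sketch, assuming per-tweet likelihood ratios P(tweet | Dem) / P(tweet | Rep) are available; the function names and toy ratios are hypothetical, and time is counted in tweets here rather than in weeks.

```python
def posterior_dem(prior_dem, ratios):
    """P(Dem | tweets) after each tweet, multiplying the prior odds by each likelihood ratio."""
    odds = prior_dem / (1.0 - prior_dem)
    posteriors = []
    for ratio in ratios:
        odds *= ratio
        posteriors.append(odds / (1.0 + odds))
    return posteriors


def prediction_time(posteriors, threshold):
    """First 1-based step where max(P(Dem), P(Rep)) >= threshold, with the label; None if never."""
    for step, p in enumerate(posteriors, start=1):
        if max(p, 1.0 - p) >= threshold:
            return step, ("Dem" if p >= 0.5 else "Rep")
    return None


beliefs = posterior_dem(0.5, [1.5] * 10)
print(prediction_time(beliefs, 0.75))  # (3, 'Dem')
print(prediction_time(beliefs, 0.95))  # (8, 'Dem')
```

The stricter 0.95 threshold delays the decision (here from 3 to 8 tweets), which is the trade-off behind comparing the two confidence levels.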

Prediction Time (2): weeks (log scale) for the User Stream (U) vs. the User-Neighbor Stream (UN), Democrats (Dem) vs. Republicans (Rep), on the Candidate-Centric, Geo-Centric and Active graphs; 100 users, 75% confidence.

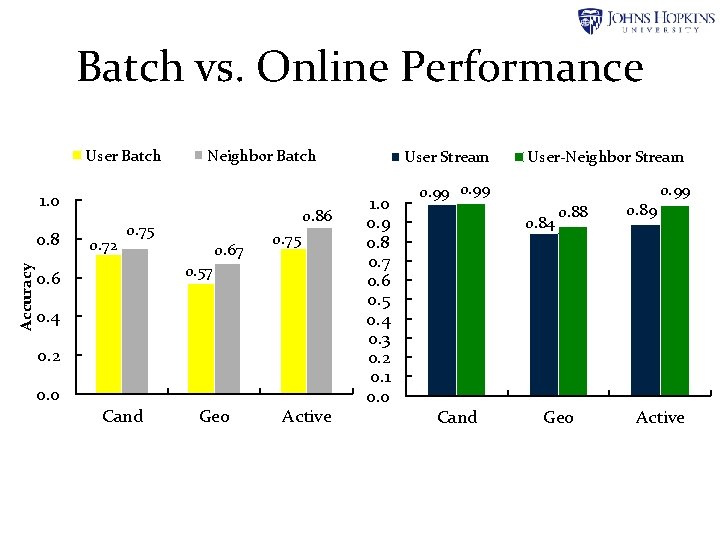

Batch vs. Online Performance (accuracy on the Cand, Geo and Active graphs)
• Batch: User Batch vs. Neighbor Batch, accuracies from 0.57 to 0.86
• Online: User Stream vs. User-Neighbor Stream, accuracies from 0.84 to 0.99

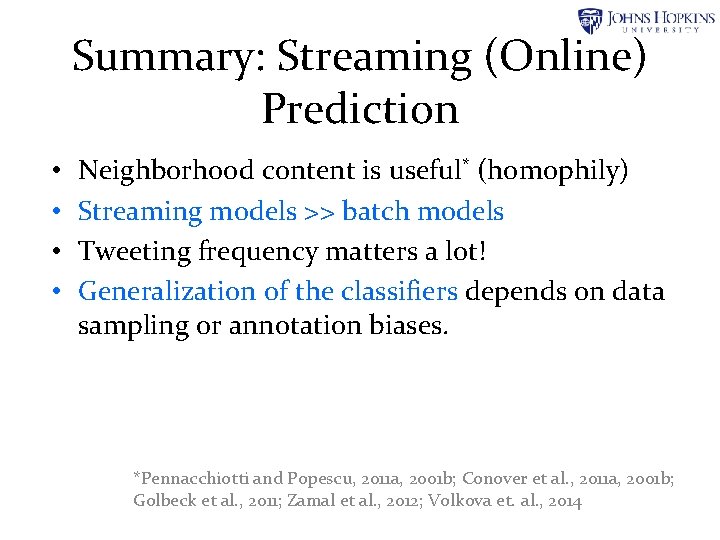

Summary: Streaming (Online) Prediction
• Neighborhood content is useful* (homophily)
• Streaming models >> batch models
• Tweeting frequency matters a lot!
• Generalization of the classifiers depends on data sampling and annotation biases
*Pennacchiotti and Popescu, 2011a, 2011b; Conover et al., 2011a, 2011b; Golbeck et al., 2011; Zamal et al., 2012; Volkova et al., 2014

Part III. Dynamic (Iterative) Learning and Prediction

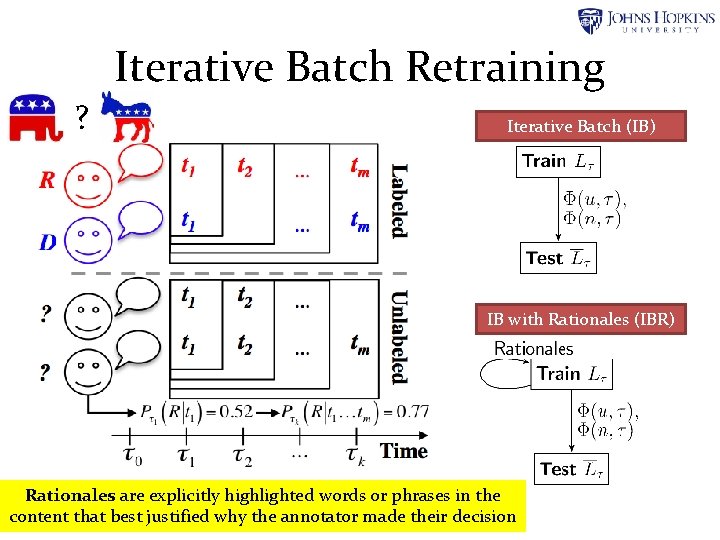

Iterative Batch Retraining: Iterative Batch (IB) vs. IB with Rationales (IBR). Rationales are words or phrases explicitly highlighted in the content that best justify the annotator's decision.

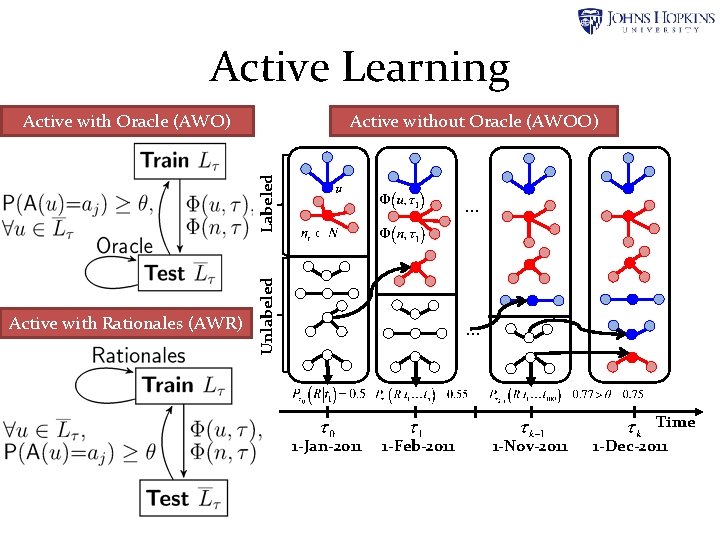
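A minimal sketch of iterative batch retraining, assuming the model is rebuilt from scratch at the end of every time period on all content seen so far from labeled users; passing a set of rationale words gives the IBR variant, which keeps only the highlighted unigrams. `train` is a hypothetical hook for any learner (for example a log-linear unigram model), and the data layout and toy data are illustrative.

```python
from collections import Counter


def filter_to_rationales(counts, rationales):
    """IBR-style filtering: keep only unigrams that annotators highlighted as rationales."""
    return Counter({w: c for w, c in counts.items() if w in rationales})


def iterative_batch(time_slices, labels, train, rationales=None):
    """Retrain once per time slice on the cumulative content of labeled users.

    time_slices: list of {user: [tweet, ...]} dicts, one per period (e.g. month).
    labels: {user: label} for the labeled training users.
    train: callable mapping [(Counter, label), ...] to a model (hypothetical hook).
    rationales: optional set of rationale words; if given, this is IBR rather than IB.
    """
    seen = {}
    models = []
    for period in time_slices:
        # Accumulate each user's unigram counts across all periods so far.
        for user, tweets in period.items():
            counts = seen.setdefault(user, Counter())
            for tweet in tweets:
                counts.update(tweet.lower().split())
        examples = []
        for user, counts in seen.items():
            if user in labels:
                feats = filter_to_rationales(counts, rationales) if rationales is not None else counts
                examples.append((feats, labels[user]))
        models.append(train(examples))
    return models


def sum_counts(examples):
    """Toy stand-in learner: sums feature counts to show what each retraining sees."""
    return sum((feats for feats, _ in examples), Counter())


slices = [{"u1": ["vote obama"]}, {"u1": ["obama rally"], "u2": ["tax cuts"]}]
user_labels = {"u1": "D", "u2": "R"}
ibr_models = iterative_batch(slices, user_labels, sum_counts, rationales={"obama", "tax"})
ib_models = iterative_batch(slices, user_labels, sum_counts)
```

Filtering to rationales shrinks the feature space to the words annotators found decisive, which is one way to read the reported gains of IBR over IB.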

Active Learning: Active without Oracle (AWOO), Active with Oracle (AWO) and Active with Rationales (AWR) (figure: labeled vs. unlabeled users over time, 1-Jan-2011 to 1-Dec-2011)

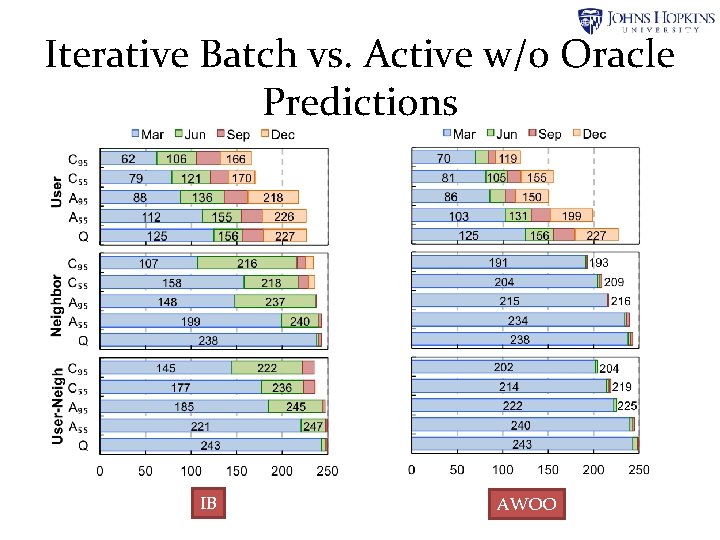
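A hedged sketch of one active retraining step, assuming users are moved from the unlabeled pool into the training set once the current model's confidence about them reaches a threshold. Without an oracle (AWOO) the model's own prediction becomes the label, which amounts to self-training; with an oracle (AWO) the true label is used instead. The selection rule, the threshold, the toy probabilities and the `model_proba`/`oracle` hooks are assumptions for illustration.

```python
def active_step(model_proba, unlabeled, labeled, threshold, oracle=None):
    """Move confidently predicted users from the unlabeled pool into the training set.

    model_proba: callable user -> P(class 1) from the current model (hypothetical hook).
    unlabeled: set of user ids, updated in place.
    labeled: dict user -> 0/1 label, updated in place.
    oracle: optional callable user -> true label (AWO); if None, the model's
            own prediction is used as the label (AWOO).
    Returns the list of users moved in this step.
    """
    moved = []
    for user in sorted(unlabeled):
        p = model_proba(user)
        if max(p, 1.0 - p) >= threshold:
            labeled[user] = oracle(user) if oracle is not None else int(p >= 0.5)
            moved.append(user)
    unlabeled.difference_update(moved)
    return moved


proba = {"a": 0.9, "b": 0.55, "c": 0.1}.get
pool, train_set = {"a", "b", "c"}, {}
moved = active_step(proba, pool, train_set, threshold=0.8)
print(moved, train_set, pool)  # ['a', 'c'] {'a': 1, 'c': 0} {'b'}
```

After each step the model would be retrained on the enlarged training set; an oracle guards against self-training reinforcing its own mistakes.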

Iterative Batch (IB) vs. Active without Oracle (AWOO) Predictions

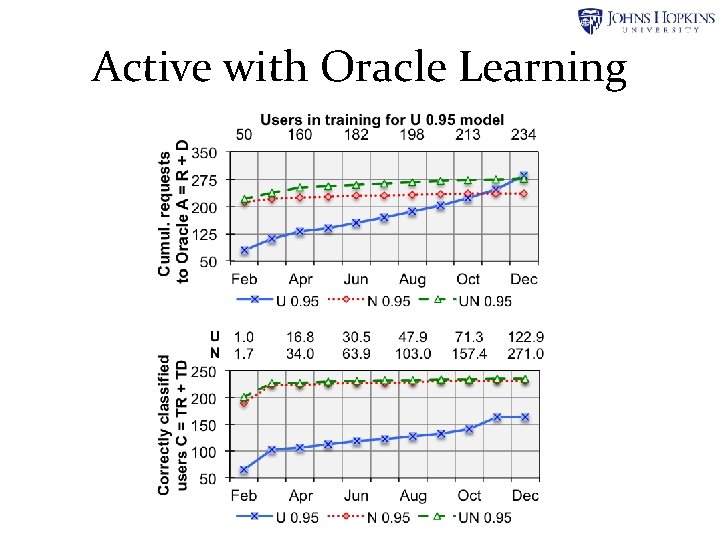

Active with Oracle Learning

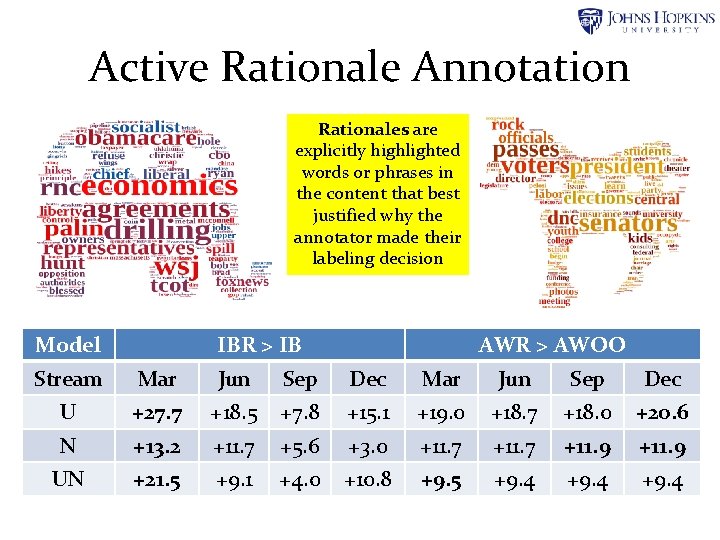

Active Rationale Annotation
Rationales are words or phrases explicitly highlighted in the content that best justify the annotator's labeling decision.
Gains from rationales (IBR over IB, and AWR over AWOO) per stream at Mar, Jun, Sep and Dec:
U: +27.7 +18.5 +7.8 +15.1 +19.0 +18.7 +18.0 +20.6
N: +13.2 +11.7 +5.6 +3.0 +11.7 +11.9
UN: +21.5 +9.1 +4.0 +10.8 +9.5 +9.4

Summary: Iterative Learning and Prediction
• Active retraining outperforms iterative batch retraining
• Predicting from the UN or N streams > the U stream
• Higher-confidence models yield higher precision; lower-confidence models yield higher recall (as expected)
• Active retraining with oracle annotations yields the highest recall (the upper bound)
• Rationale annotation and filtering significantly improve results
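The precision/recall trade-off in the summary can be illustrated by treating a user as classified only when the model's confidence reaches a threshold. The sketch below assumes precision is measured over classified users and recall over all users (so recall counts users classified correctly); these definitions and the toy predictions are assumptions, not results from the talk.

```python
def precision_recall_at(preds, gold, threshold):
    """Precision and recall when only predictions with confidence >= threshold are kept.

    preds: {user: (label, confidence)}; gold: {user: true label}.
    """
    classified = {u: lab for u, (lab, conf) in preds.items() if conf >= threshold}
    correct = sum(1 for u, lab in classified.items() if gold[u] == lab)
    precision = correct / len(classified) if classified else 0.0
    recall = correct / len(gold) if gold else 0.0
    return precision, recall


preds = {"u1": ("D", 0.97), "u2": ("R", 0.96), "u3": ("D", 0.80), "u4": ("R", 0.78)}
gold = {"u1": "D", "u2": "R", "u3": "R", "u4": "R"}
print(precision_recall_at(preds, gold, 0.95))  # (1.0, 0.5)
print(precision_recall_at(preds, gold, 0.75))  # (0.75, 0.75)
```

Raising the threshold drops the less certain (and here partly wrong) predictions, so precision rises while fewer users are covered.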

Part IV. Inferring User Demographics, Personality, Emotions and Opinions (AAAI Demo)

Applications (1)
Online targeted advertising
• Targeting ads based on predicted user features
Personalized marketing
• Detecting opinions users express about products or services within targeted populations
Large-scale real-time healthcare analytics
• Identifying patterns of depression or other mental illnesses among users of certain demographics

Applications (2)
Recruitment and human resource management
• Estimating the emotional stability and personality of potential employees
• Measuring the overall well-being of employees, e.g., life satisfaction and happiness
Personalized recommendation systems and search
• Making recommendations based on users' emotional tone, demographics and personality

Applications (3)
Dating services
• Matching user profiles by comparing users' personalities, interests and emotional tone in social media
Large-scale passive polling and real-time live polling
• Mining political opinions and predicting votes for groups of users with certain demographics

Thank you!
Labeled Twitter network data for gender, age and political preference prediction: http://www.cs.jhu.edu/~svitlana/
Code and pre-trained models for all attributes, emotions and sentiment are available on request: svitlana@jhu.edu
