One-Shot Deep Learning Models of Long-Term Declarative Episodic Memory



One-Shot Deep Learning Models of Long-Term Declarative Episodic Memory

Yousef Alhwaiti

Dissertation Advisor: Dr. Charles C. Tappert

Outline:

- Introduction & Literature Review
- Problem statement
- Methodology
- Dataset
- Method#1
  - Experiment & Results
- Method#2
  - Experiment & Results
  - Experiments on the probability of common active units
  - Experiments demonstrating older memories fading away
  - Estimating recall accuracy for a human lifespan
  - Experiments on the A_Z Handwritten Alphabet Dataset
  - Experiments on the Fashion MNIST Dataset
  - Experiments on the CIFAR-10 Dataset
  - An improvement to the algorithm

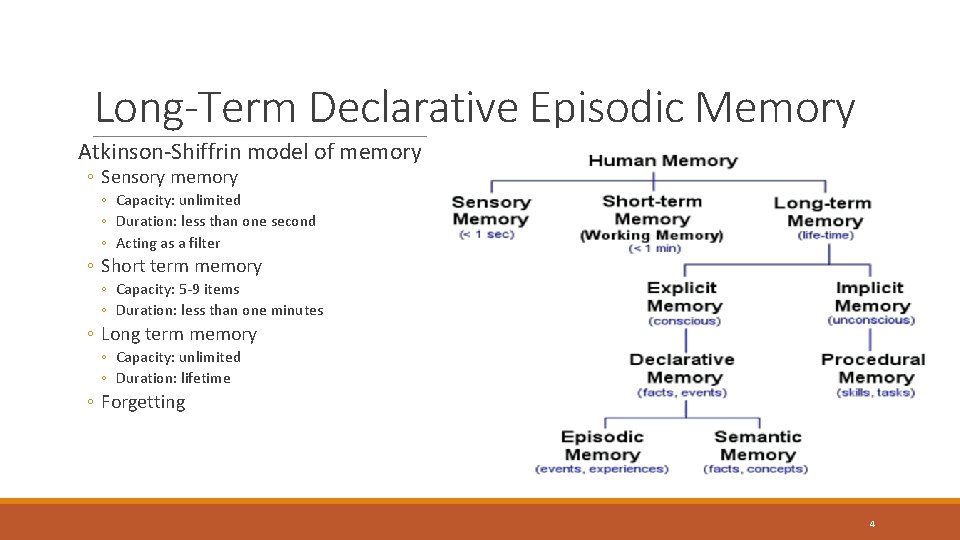

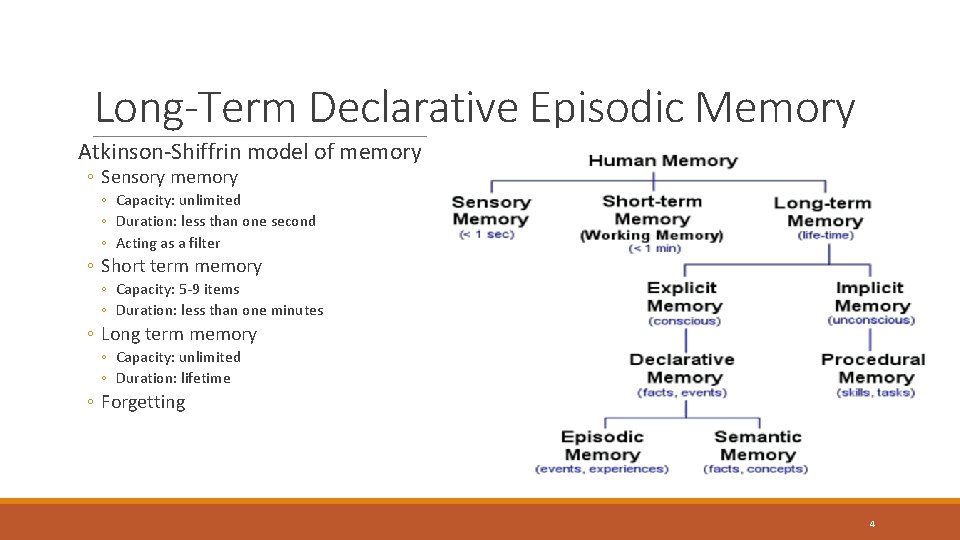

Introduction and literature review

- Long-Term Declarative Episodic Memory
  - Atkinson-Shiffrin model of memory
- Deep learning classification problems
- One-shot learning
- Weights versus features in the CNN

Long-Term Declarative Episodic Memory

Atkinson-Shiffrin model of memory:

- Sensory memory
  - Capacity: unlimited
  - Duration: less than one second
  - Acts as a filter
- Short-term memory
  - Capacity: 5 to 9 items
  - Duration: less than one minute
- Long-term memory
  - Capacity: unlimited
  - Duration: lifetime
  - Forgetting

![Episodic memory is memory of the personally experienced and remembered events of a lifetime](https://slidetodoc.com/presentation_image_h2/0744931bea3f2921f785cc99341350b2/image-5.jpg)

Episodic memory is memory of the personally experienced and remembered events of a lifetime [5]. It includes information about recent or past events and experiences [6]. The recollection of experiences depends on three steps of memory processing [6]:

- Encoding
- Storage
- Retrieval

Deep learning classification problems

- They require a large number of examples for each category.
- The trained network cannot be tested on a new class.

One-shot learning: overview

Humans can learn from one or a few examples. One-shot learning aims to learn information about object categories from one, or only a few, training images.

Why one-shot learning?

- Only a few examples are available for training/testing.
- Human memory records events quickly, much like one-shot learning.
- New categories can be added flexibly.

One-shot learning (cont.)

One-shot learning calculates the distance between the input image and the reference images. If the distance between the input image and every reference image is greater than or equal to a threshold γ, the input image is treated as new and is added to the database as a reference image.

Let d(image 1, image 2) be the degree of difference between two images. Then:

$$
\text{decision} =
\begin{cases}
\text{different}, & d(\text{image 1}, \text{image 2}) \ge \gamma \\
\text{same}, & d(\text{image 1}, \text{image 2}) < \gamma
\end{cases}
$$
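The threshold rule for one-shot learning can be sketched in Python. This is a minimal illustration, not the presenter's implementation: it assumes each image has already been turned into a feature vector (for example by a CNN) and that d is the Euclidean distance. The helper names `one_shot_match` and `update_references` are hypothetical.

```python
import numpy as np


def distance(x1: np.ndarray, x2: np.ndarray) -> float:
    """Assumed metric d: Euclidean distance between two feature vectors."""
    return float(np.linalg.norm(x1 - x2))


def one_shot_match(query, references, gamma):
    """Return (index of closest reference, is_same), where is_same means d < gamma."""
    if not references:
        return None, False
    dists = [distance(query, ref) for ref in references]
    best = int(np.argmin(dists))
    return best, dists[best] < gamma


def update_references(query, references, gamma):
    """Add the query as a new reference image if it differs from every stored one."""
    _, same = one_shot_match(query, references, gamma)
    if not same:
        references.append(query)
    return references


# Usage: two stored references, one near-duplicate query and one new image.
refs = [np.array([0.0, 0.0]), np.array([1.0, 1.0])]
print(one_shot_match(np.array([0.1, 0.0]), refs, gamma=0.5))   # (0, True)
update_references(np.array([5.0, 5.0]), refs, gamma=0.5)       # stored as a new reference
```

A single example per category is enough here because the decision depends only on distances to stored references, not on retraining a classifier.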

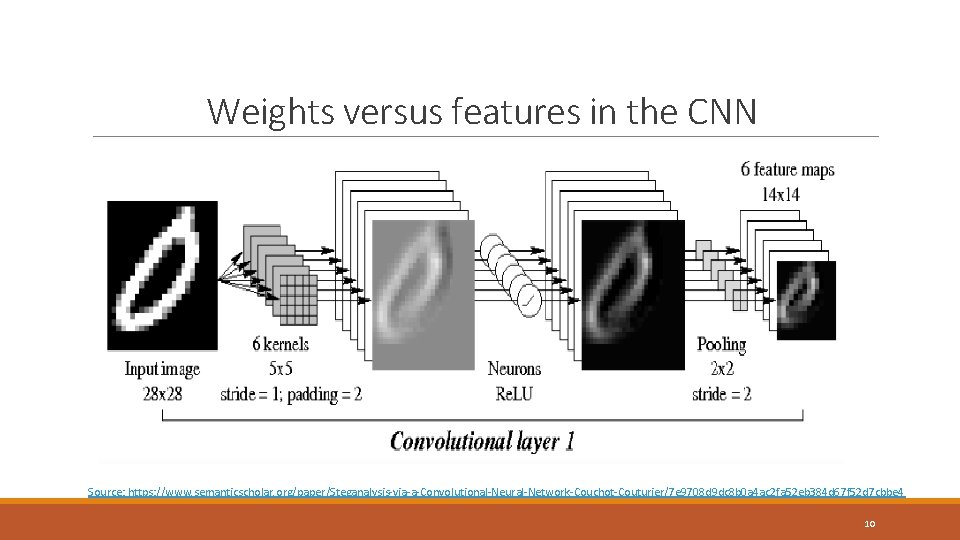

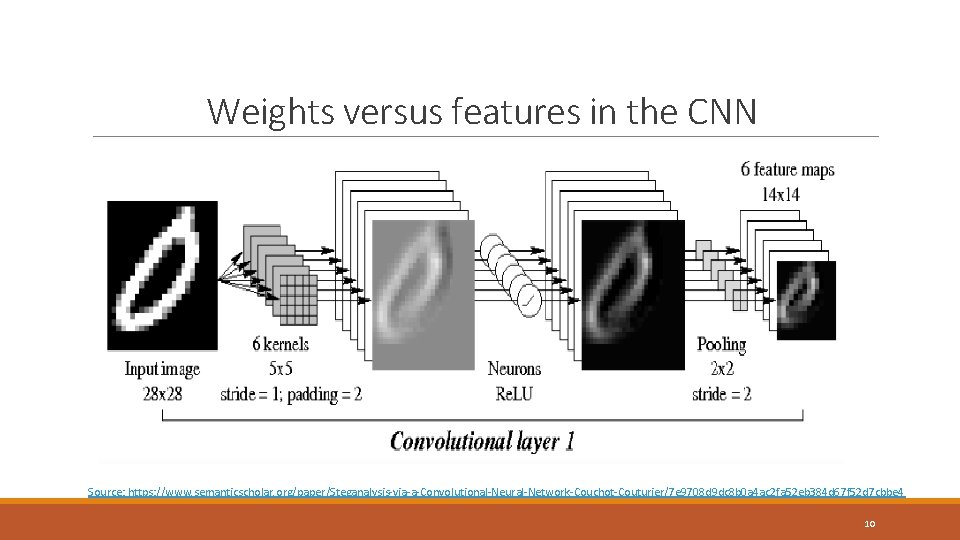

Weights versus features in the CNN

Source: https://towardsdatascience.com/converting-a-simple-deep-learning-model-from-pytorch-to-tensorflow-b6b353351f5d

Weights versus features in the CNN

Source: https://www.semanticscholar.org/paper/Steganalysis-via-a-Convolutional-Neural-Network-Couchot-Couturier/7e9708d9dc8b0a4ac2fa52eb384d67f52d7cbbe4

Problem statement

This research aims to identify mechanisms that replicate human long-term declarative episodic memory.

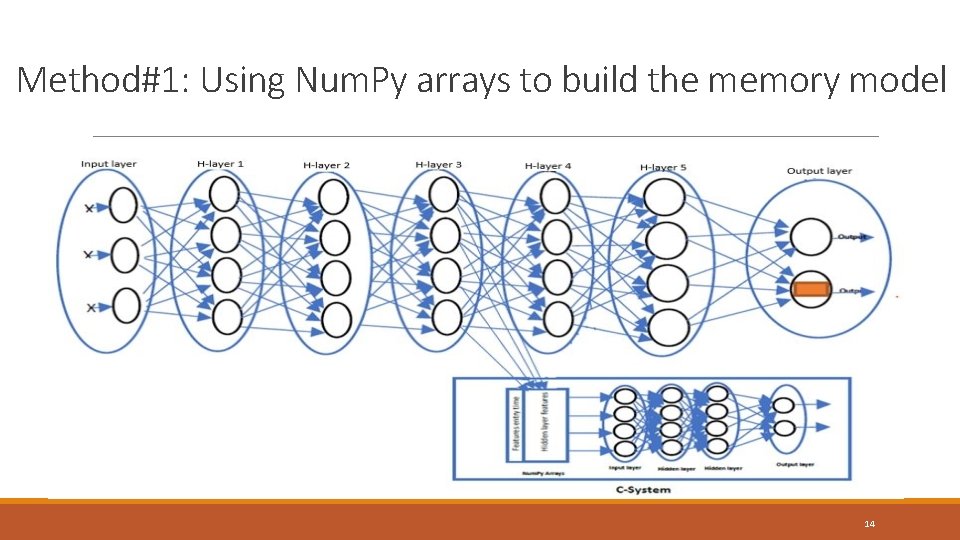

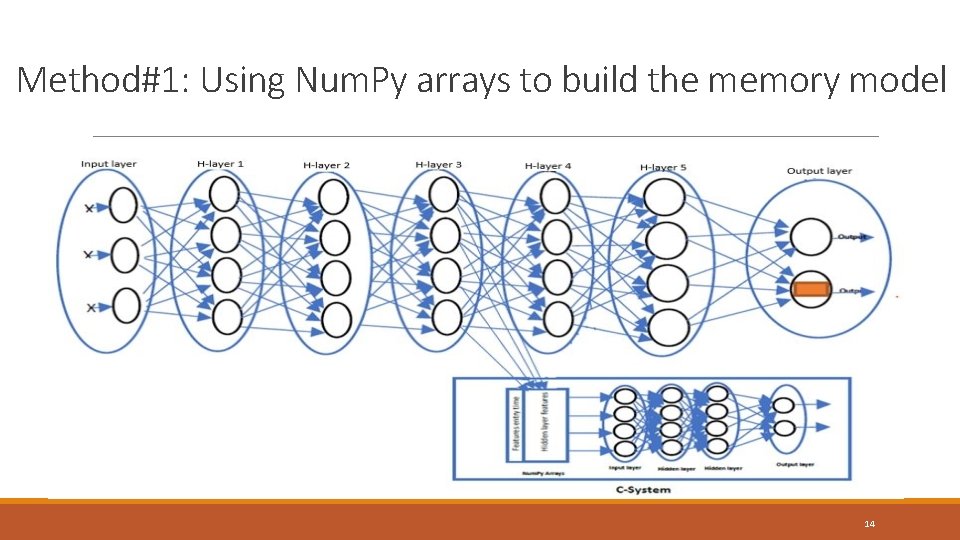

Methodology

A convolutional neural network (CNN) is used to create the recognition system. There are two methods for creating the memory system:

- Method#1: extracting hidden-layer features and storing them in NumPy arrays.
- Method#2: using a clock system.
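A minimal sketch of the Method#1 idea, assuming the hidden-layer features have already been extracted by the CNN: each event's feature map is kept in a NumPy array together with a timestamp, and the whole memory is persisted with `np.savez`. The class `FeatureMemory` and its methods are hypothetical, and the step that feeds recalled features through the remaining CNN layers is not shown.

```python
import io
from datetime import datetime

import numpy as np


class FeatureMemory:
    """Hypothetical Method#1-style memory: one hidden-layer feature map per event."""

    def __init__(self):
        self.features = []     # each entry has the CNN feature shape, e.g. (1, 4, 4, 16)
        self.timestamps = []   # ISO-8601 strings recording when each event was stored

    def store(self, feature_map, when: datetime) -> None:
        self.features.append(np.asarray(feature_map, dtype=np.float32))
        self.timestamps.append(when.isoformat())

    def save(self, file) -> None:
        # Write all features and timestamps into a single .npz archive.
        np.savez(file, features=np.stack(self.features),
                 timestamps=np.array(self.timestamps))

    @staticmethod
    def load(file):
        # Return (features, timestamps) in the order they were stored.
        with np.load(file) as data:
            return data["features"], data["timestamps"]


# Usage: store one random stand-in feature map per day for 4 days, then reload.
memory = FeatureMemory()
rng = np.random.default_rng(0)
for day in range(4):
    memory.store(rng.random((1, 4, 4, 16)), datetime(2019, 1, 1 + day))

buffer = io.BytesIO()
memory.save(buffer)
buffer.seek(0)
features, timestamps = FeatureMemory.load(buffer)
```

Because every feature map is kept verbatim, recall in this sketch is exact for any sequence length; storage grows linearly with the number of events.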

MNIST Dataset

- A set of handwritten digits
- 60,000 samples for training and 10,000 for testing
- The samples are grayscale
- Fixed size of 28 × 28 pixels

We used the MNIST dataset to provide the sequences to be remembered in the experiments.

Source: https://medium.com/syncedreview/mnist-reborn-restored-and-expanded-additional-50k-training-samples-70c6f8a9e9a9

Method#1: Using NumPy arrays to build the memory model

Method#1: Experiment Results

- Images were sent through the system over 4 days; recall accuracy was 100% for all images.
- Output of recalling the image sequence 8, 1, 5, 1, 2, 8, 4, 2, 7, 1, 1, 5 (figure: recalled sequence with date and time).

Method#1: Experiment limitation

- Recall accuracy is 100% for a sequence of any length.
- Read and recall each of the following sequences:
  - 2, 4, 6, 3
  - 4, 5, 7, 6, 9, 2, 6, 3, 1, 8, 4, 7, 4, 5, 2
- The shape of each stored feature is (1, 4, 4, 16).
- A long sequence means the number of events in a lifetime:
  - $3.15 \times 10^{9}$ seconds in a generous 100-year lifespan
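The storage cost behind this limitation can be estimated with a few lines of arithmetic. The sketch assumes one stored feature per second for 100 years of 365 days, and 4 bytes per value (float32); the byte size is an assumption, not a figure from the slides.

```python
import numpy as np

feature_shape = (1, 4, 4, 16)                      # shape of each stored feature (from the slides)
values_per_event = int(np.prod(feature_shape))     # 1 * 4 * 4 * 16 = 256 values
bytes_per_value = 4                                # assumption: float32 storage
events = 100 * 365 * 24 * 60 * 60                  # one event per second for 100 years

total_bytes = events * values_per_event * bytes_per_value
print(values_per_event)                            # 256
print(events)                                      # 3153600000
print(f"{total_bytes / 1e12:.2f} TB")              # 3.23 TB
```

Under these assumptions the raw features alone come to roughly 3.2 TB, before any timestamps or indexing.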

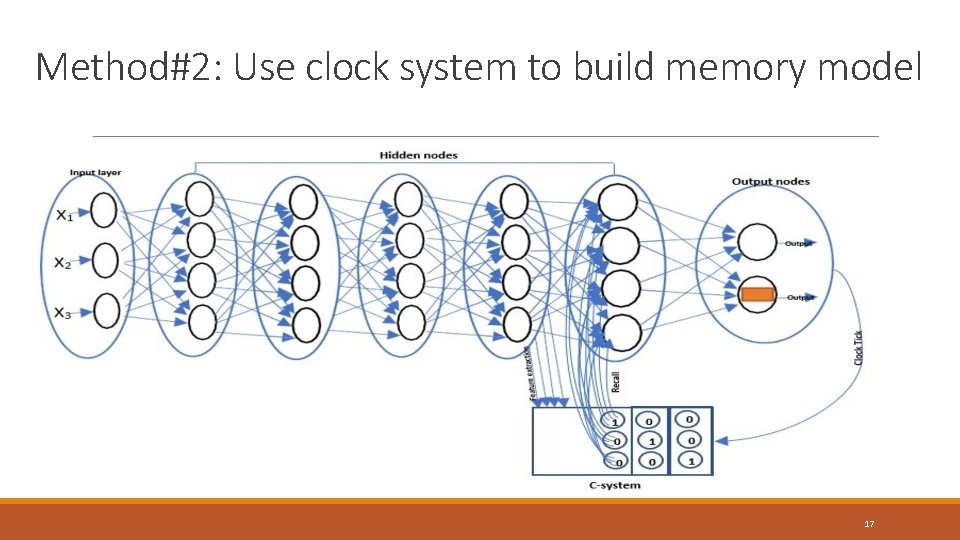

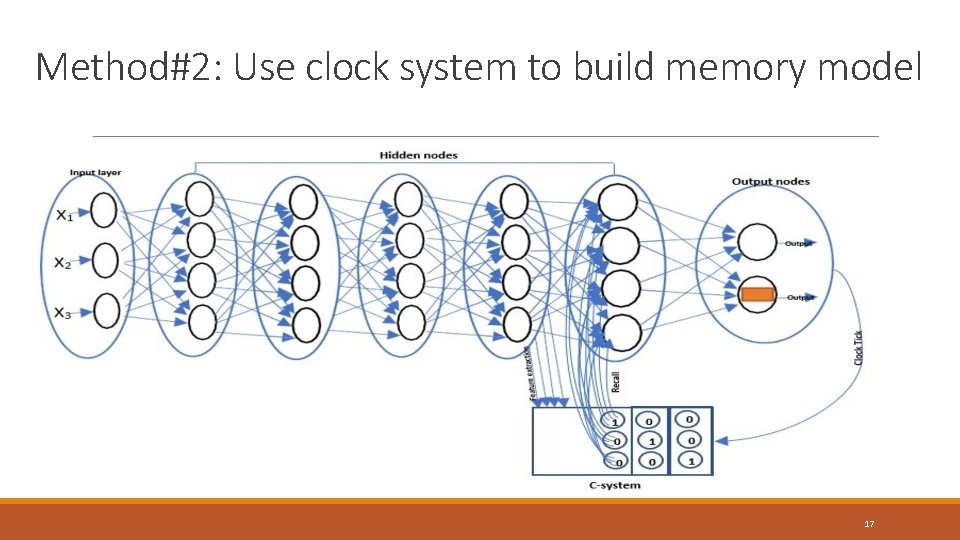

Method#2: Using a clock system to build the memory model

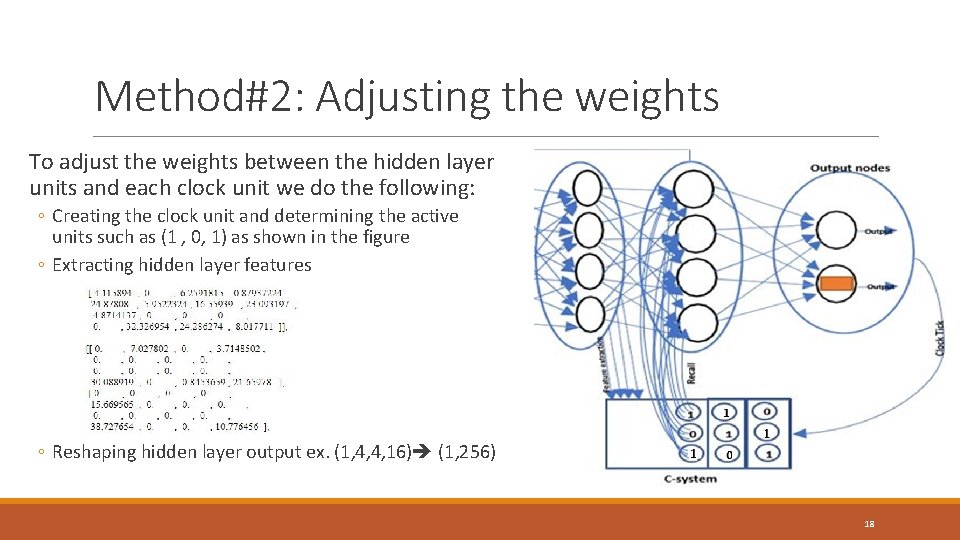

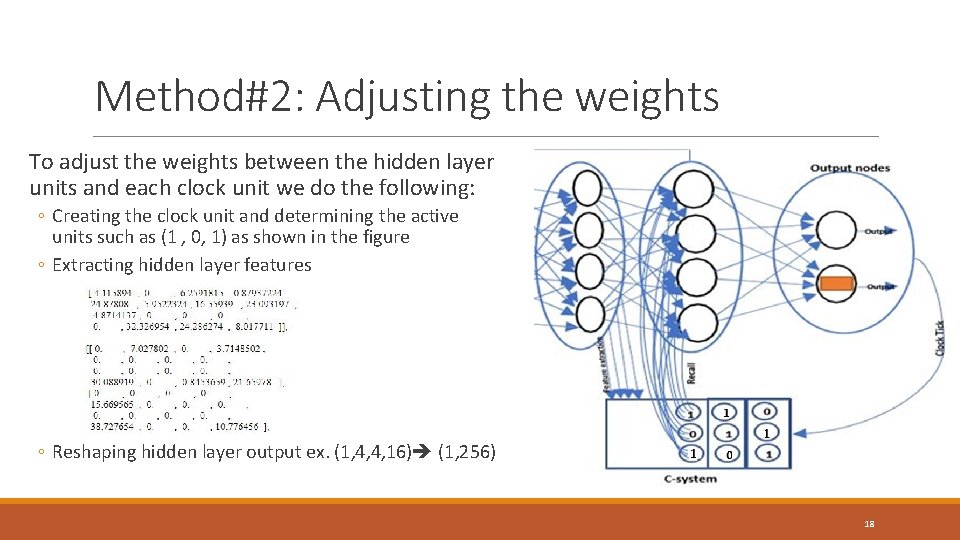

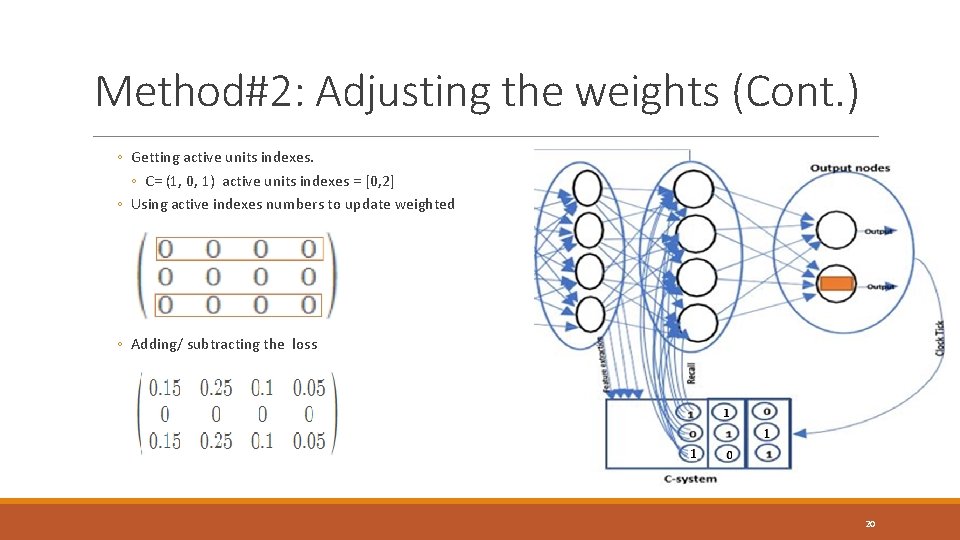

Method#2: Adjusting the weights

To adjust the weights between the hidden-layer units and each clock unit, we do the following:

- Create the clock and determine its active units, e.g. (1, 0, 1), as shown in the figure.
- Extract the hidden-layer features.
- Reshape the hidden-layer output, e.g. (1, 4, 4, 16) → (1, 256).
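The reshaping step and the clock vector can be sketched with NumPy. A random array stands in for the CNN's hidden-layer output, and `make_clock` is a hypothetical helper that builds a binary clock from a list of active-unit indexes.

```python
import numpy as np


def make_clock(n: int, active_indices) -> np.ndarray:
    """Hypothetical helper: a binary clock of n units with the given units set to 1."""
    clock = np.zeros(n, dtype=np.float32)
    clock[list(active_indices)] = 1.0
    return clock


# Random stand-in for the CNN hidden-layer output of one image.
hidden = np.random.default_rng(0).random((1, 4, 4, 16))
h = hidden.reshape(1, -1)          # (1, 4, 4, 16) -> (1, 256)

clock = make_clock(3, [0, 2])      # the (1, 0, 1) clock used on the slides
print(h.shape, clock)
```

The reshape keeps NumPy's default row-major order, so the first 16 values of `h` are the 16 channels at spatial position (0, 0).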

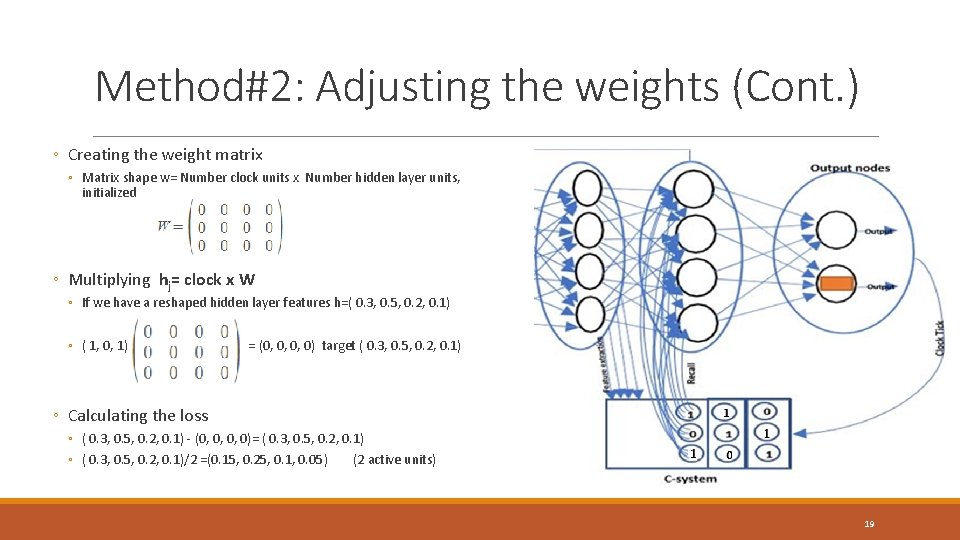

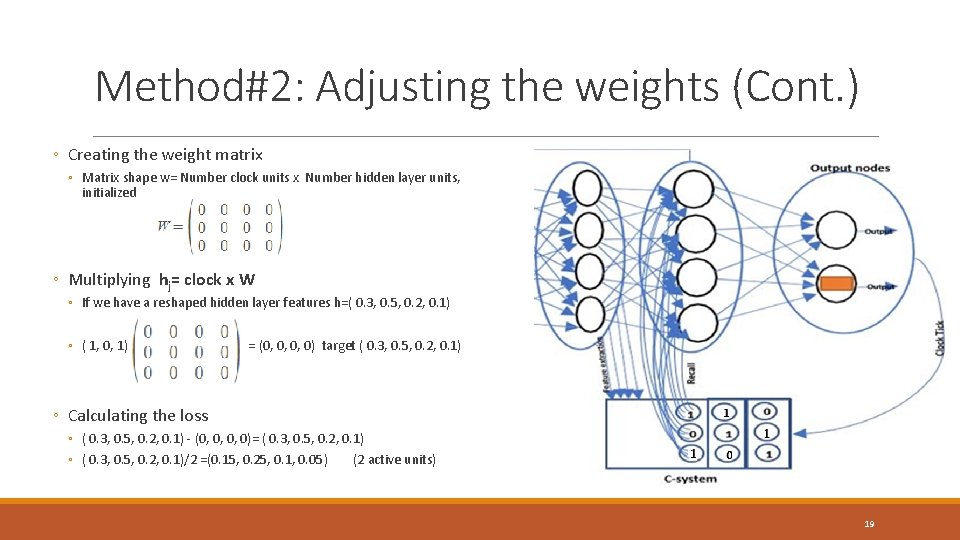

Method#2: Adjusting the weights (cont.)

- Create the weight matrix $W$ with shape (number of clock units) × (number of hidden-layer units), initialized to zeros.
- Multiply: $\mathbf{h} = \mathbf{c}\,W$, where $\mathbf{c}$ is the clock vector.
- Example: suppose the reshaped hidden-layer features (the target) are $\mathbf{t} = (0.3, 0.5, 0.2, 0.1)$ and the clock is $\mathbf{c} = (1, 0, 1)$. Then $\mathbf{c}\,W = (0, 0, 0, 0)$.
- Calculate the loss: $\mathbf{t} - \mathbf{c}\,W = (0.3, 0.5, 0.2, 0.1) - (0, 0, 0, 0) = (0.3, 0.5, 0.2, 0.1)$.
- Divide the loss by the number of active units ($a = 2$): $(0.3, 0.5, 0.2, 0.1)/2 = (0.15, 0.25, 0.1, 0.05)$.
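The worked example on this slide can be reproduced directly with NumPy. The variable names are illustrative, and the zero initialization of `W` is inferred from the slide's all-zero output.

```python
import numpy as np

n_clock, n_hidden = 3, 4
W = np.zeros((n_clock, n_hidden))          # weights, assumed initialized to zeros
c = np.array([1.0, 0.0, 1.0])              # clock with a = 2 active units
target = np.array([0.3, 0.5, 0.2, 0.1])    # reshaped hidden-layer features

output = c @ W                             # (0, 0, 0, 0)
loss = target - output                     # (0.3, 0.5, 0.2, 0.1)
a = int(c.sum())                           # number of active units
per_unit = loss / a                        # (0.15, 0.25, 0.1, 0.05)
print(output, loss, per_unit)
```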

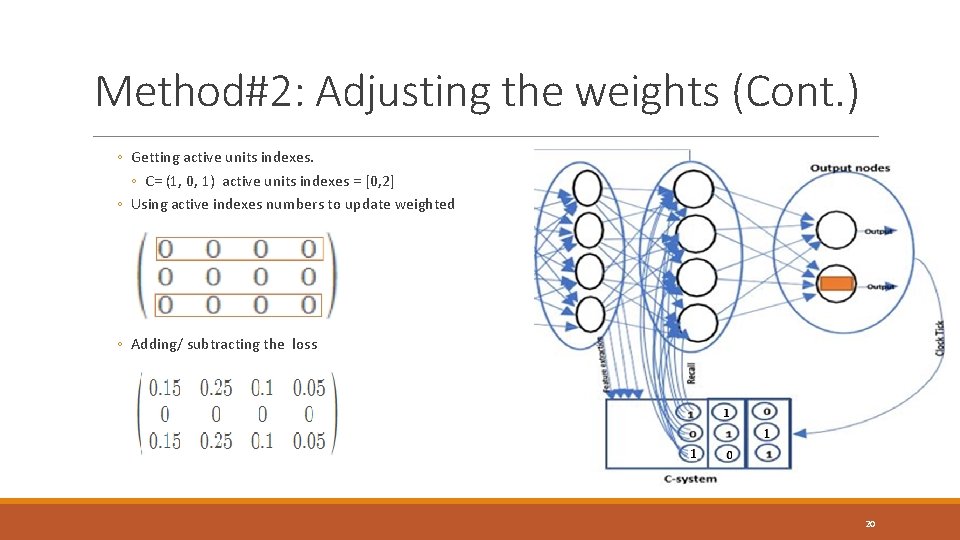

Method#2: Adjusting the weights (cont.)

- Get the indexes of the active units: for $\mathbf{c} = (1, 0, 1)$ the active-unit indexes are [0, 2].
- Use the active-unit indexes to select the weights to update.
- Add (or subtract) the divided loss to the weights of the active units.
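Putting the weight-adjustment steps together, one training step can be sketched as below. This is a hedged reading of the slides, assuming the loss is defined as target minus output (so adding it moves the output toward the target) and that the weights of an active clock unit form one row of `W`. The names `train_one_shot` and `recall` are hypothetical.

```python
import numpy as np


def train_one_shot(W: np.ndarray, clock: np.ndarray, target: np.ndarray) -> np.ndarray:
    """One-shot update: spread the loss equally over the rows of W for active clock units."""
    active = np.flatnonzero(clock)          # e.g. (1, 0, 1) -> [0, 2]
    loss = target - clock @ W               # target minus current output
    W[active] += loss / len(active)         # add the divided loss to each active row
    return W


def recall(W: np.ndarray, clock: np.ndarray) -> np.ndarray:
    """Recall the stored features for a clock: h = c W."""
    return clock @ W


# Usage with the numbers from the slides.
W = np.zeros((3, 4))
c = np.array([1.0, 0.0, 1.0])
t = np.array([0.3, 0.5, 0.2, 0.1])
train_one_shot(W, c, t)
print(recall(W, c))                         # [0.3 0.5 0.2 0.1]
```

In this sketch a single update makes the recall exact, because each of the a active rows receives 1/a of the loss. If a later clock shares an active unit with an earlier one (for instance (0, 1, 1), which shares unit 2 with (1, 0, 1)), its update also shifts what the earlier clock recalls.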

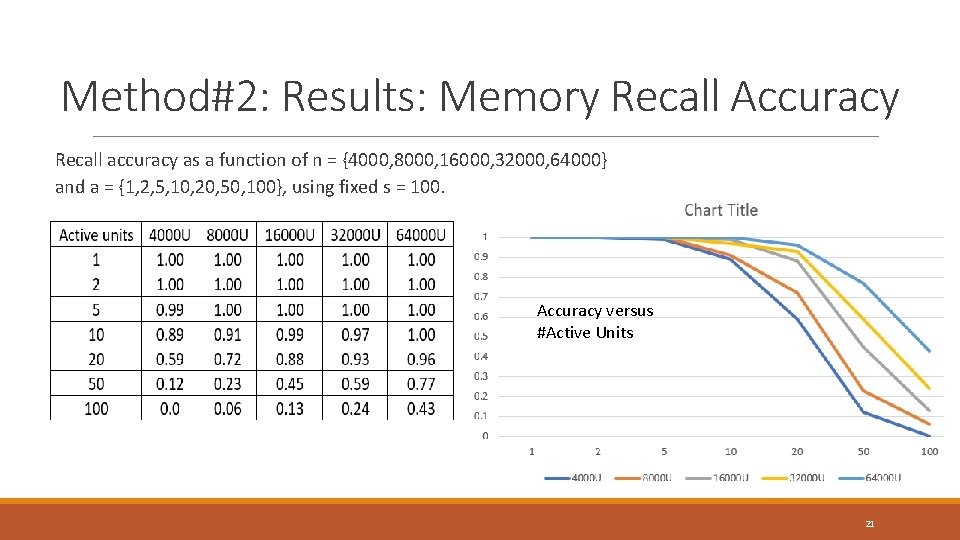

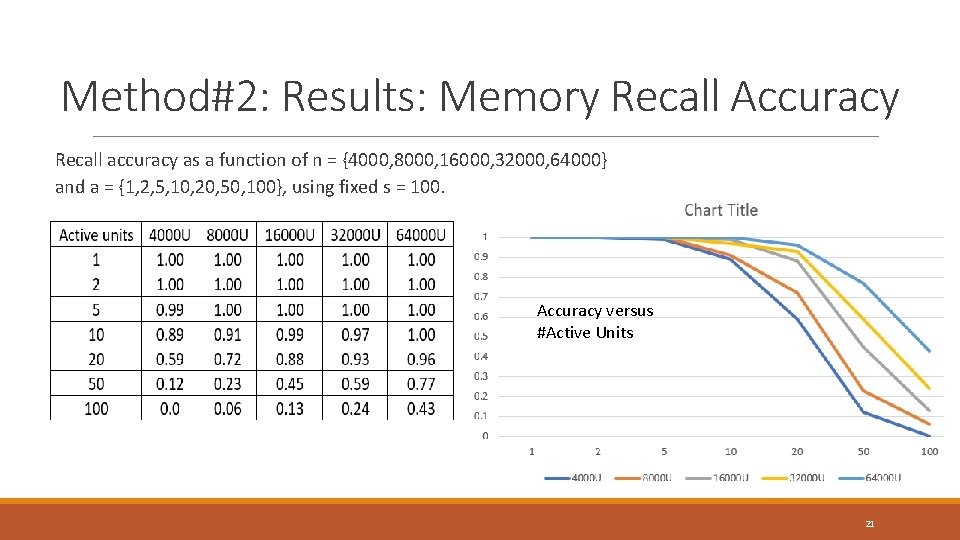

Method#2: Results: Memory Recall Accuracy

Recall accuracy as a function of the number of clock units n = {4000, 8000, 16000, 32000, 64000} and the number of active units a = {1, 2, 5, 10, 20, 50, 100}, using a fixed sequence length s = 100.

(Figure: accuracy versus number of active units.)

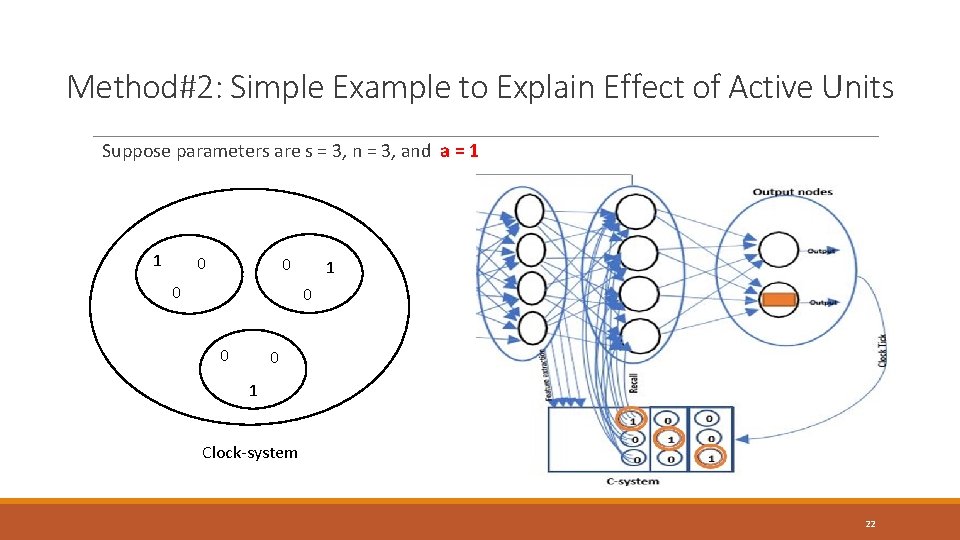

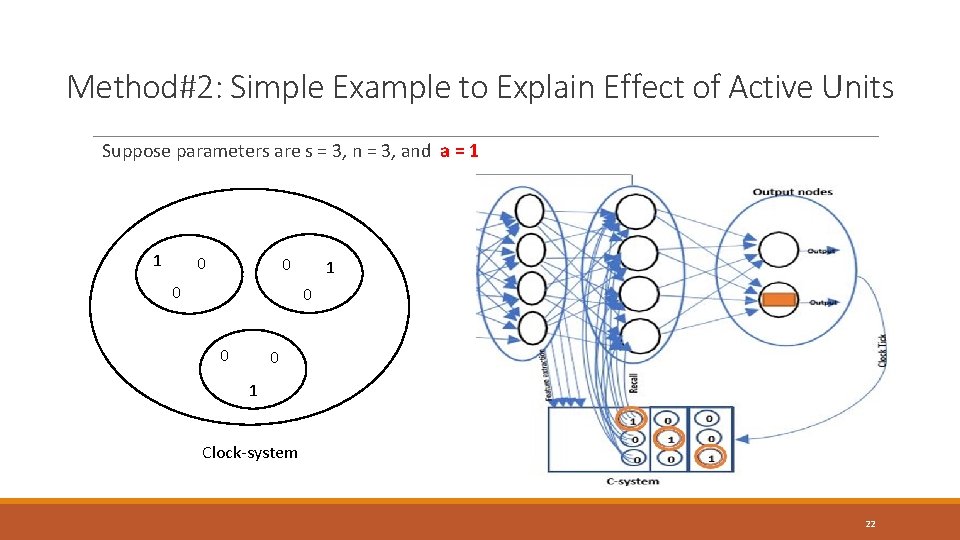

Method#2: Simple example to explain the effect of active units

Suppose the parameters are s = 3, n = 3, and a = 1.

(Figure: clock system.)

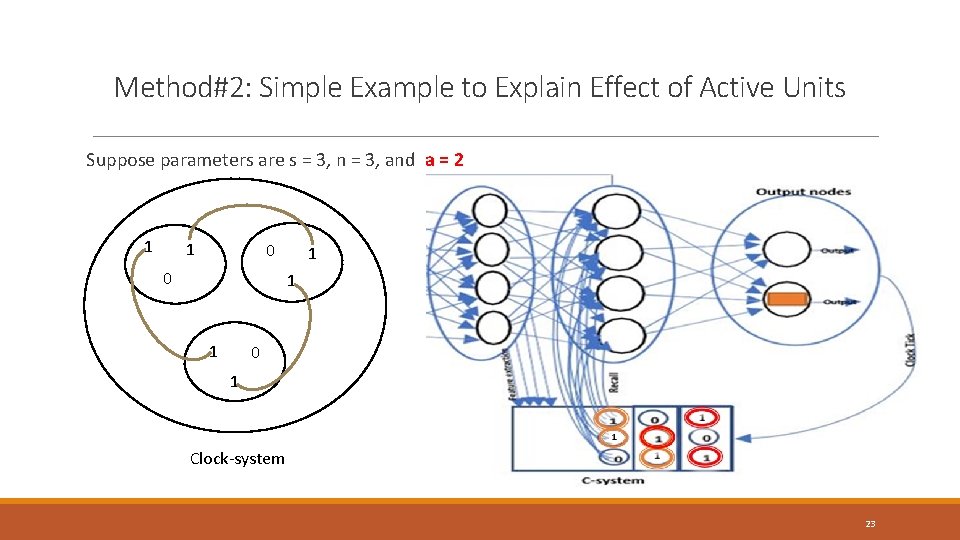

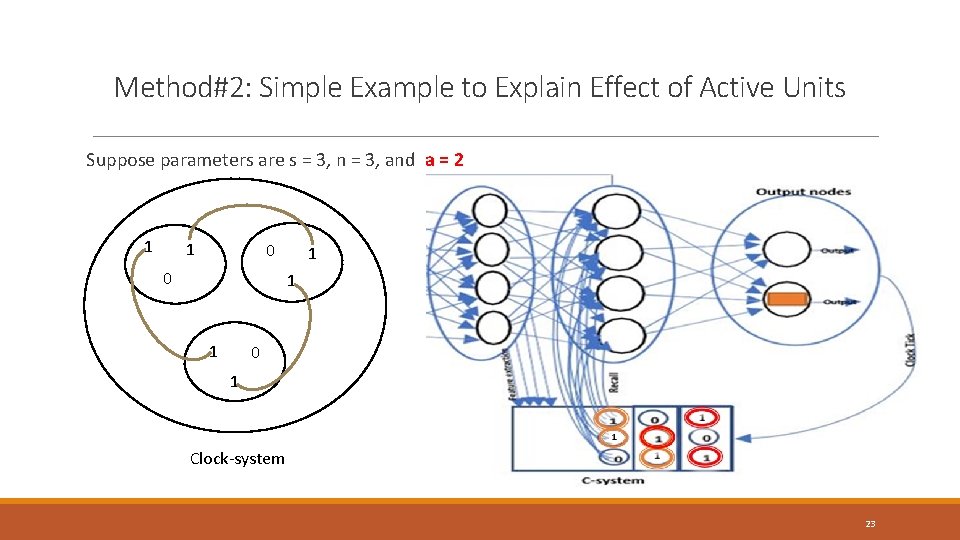

Method#2: Simple example to explain the effect of active units

Suppose the parameters are s = 3, n = 3, and a = 2. The clock system then contains the clocks (1, 1, 0), (0, 1, 1), and (1, 0, 1).
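The effect of active units in this small example can be checked with a short simulation. The a = 2 clocks used here, (1, 1, 0), (0, 1, 1), and (1, 0, 1), are read from the slide's clock-system figure; the a = 1 clocks are assumed to be (1, 0, 0), (0, 1, 0), and (0, 0, 1), since that figure did not survive extraction. Random vectors stand in for the image features, and the update rule is a sketch of the one-shot weight adjustment described in the slides (loss divided equally among the active units).

```python
from itertools import combinations

import numpy as np


def train_sequence(clocks: np.ndarray, targets: np.ndarray) -> np.ndarray:
    """Train each (clock, target) pair once, in order, with the one-shot update."""
    W = np.zeros((clocks.shape[1], targets.shape[1]))
    for c, t in zip(clocks, targets):
        active = np.flatnonzero(c)
        W[active] += (t - c @ W) / len(active)
    return W


def shared_units(clocks: np.ndarray) -> list:
    """Number of active units shared by each pair of clocks."""
    return [int(clocks[i] @ clocks[j]) for i, j in combinations(range(len(clocks)), 2)]


clocks_a1 = np.eye(3)                                                  # assumed a = 1 clocks
clocks_a2 = np.array([[1, 1, 0], [0, 1, 1], [1, 0, 1]], dtype=float)  # a = 2 clocks
targets = np.random.default_rng(1).random((3, 4))                      # stand-in features

for name, clocks in [("a=1", clocks_a1), ("a=2", clocks_a2)]:
    W = train_sequence(clocks, targets)
    errors = np.abs(clocks @ W - targets).max(axis=1)   # worst recall error per image
    print(name, shared_units(clocks), errors.round(3))
```

With a = 1 no clocks overlap, so every image is recalled exactly; with a = 2 every pair shares one unit, so only the most recent image is recalled exactly and the earlier ones are disturbed.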

Method#2: Experiment Results

Recall accuracy as a function of the number of clock units n = {4000, 8000, 16000, 32000, 64000} and the sequence length s = {100, 200, 500, 1000, 2000, 5000, 10000}, using a fixed a = 2.

Method#2: Experiments on the probability of common active units

- Common active units occur when two or more clock vectors in the sequence share the same active units. Suppose n = 5 and a = 2: C1 = [0, 0, 1], C2 = [1, 0, 0, 0, 1].
- (Figure: sample of the simulator results for the inputs s = 200 images, n = 100, a = 20 active units, and 6 iterations.)
- (Formula: the probability of two clocks having c active units in common.)
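The exact formula from this slide did not survive extraction. As an illustration only: if each clock's a active units are chosen uniformly at random without replacement from n units, the number of units two clocks share follows a hypergeometric distribution, computed below. This may differ from the formula used in the thesis, and the function names are hypothetical.

```python
from math import comb


def p_common(n: int, a: int, c: int) -> float:
    """P(two random clocks share exactly c active units), hypergeometric model.

    Assumes each clock's a active units are drawn uniformly at random,
    without replacement, from n units, and that 0 <= c <= a.
    """
    return comb(a, c) * comb(n - a, a - c) / comb(n, a)


def expected_overlapping_pairs(s: int, n: int, a: int) -> float:
    """Expected number of clock pairs (out of s clocks) sharing at least one unit."""
    return comb(s, 2) * (1 - p_common(n, a, 0))


print([p_common(5, 2, c) for c in range(3)])    # [0.3, 0.6, 0.1]
```

For the slide's n = 5, a = 2 case, two random clocks share no units 30% of the time, one unit 60% of the time, and both units 10% of the time under this model.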

Method#2: Experiments on the probability of common active units

We also used the simulator to check the relation between common units and the sequence length. The inputs were s = {100, 500, 1000}, a = 10, and n = 2000.

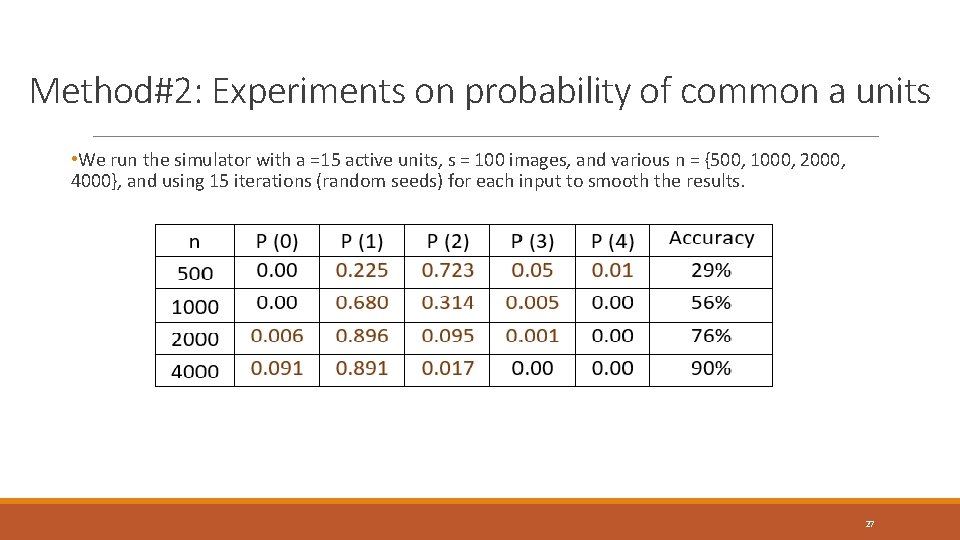

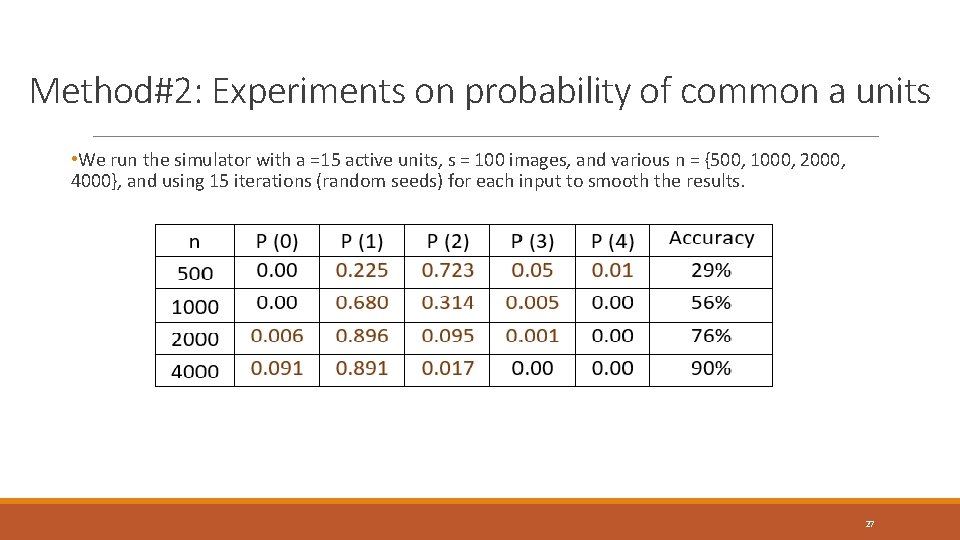

Method#2: Experiments on the probability of common active units

We ran the simulator with a = 15 active units, s = 100 images, and n = {500, 1000, 2000, 4000}, using 15 iterations (random seeds) for each input to smooth the results.
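A hypothetical re-creation of this kind of simulator is sketched below. It draws s random clocks of n units with a active units each, counts the shared active units for every pair of clocks, and averages over 15 random seeds as the slide describes. The statistic reported (mean shared units per pair) is an assumption; the thesis simulator may report something different. For uniformly random clocks this mean should be close to a²/n, so it falls as n grows.

```python
import numpy as np


def random_clocks(s: int, n: int, a: int, rng: np.random.Generator) -> np.ndarray:
    """s binary clocks of n units, each with a distinct active units."""
    clocks = np.zeros((s, n), dtype=np.int64)
    for i in range(s):
        clocks[i, rng.choice(n, size=a, replace=False)] = 1
    return clocks


def mean_common_units(s: int, n: int, a: int, iterations: int = 15) -> float:
    """Mean shared active units per clock pair, averaged over `iterations` seeds."""
    results = []
    for seed in range(iterations):
        clocks = random_clocks(s, n, a, np.random.default_rng(seed))
        overlap = clocks @ clocks.T                   # overlap[i, j] = units shared by i and j
        pairs = overlap[np.triu_indices(s, k=1)]      # each unordered pair counted once
        results.append(pairs.mean())
    return float(np.mean(results))


for n in (500, 1000, 2000, 4000):
    print(n, round(mean_common_units(s=100, n=n, a=15), 4))
```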

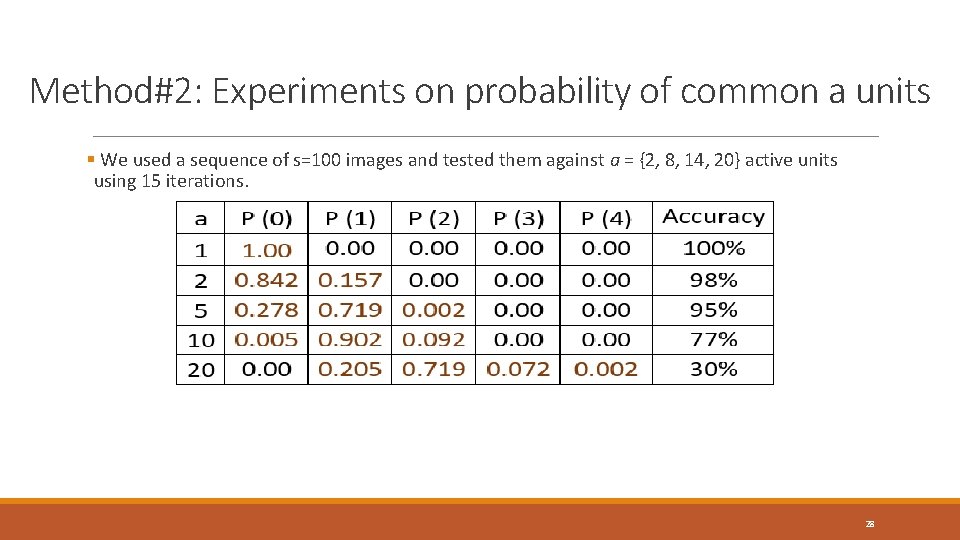

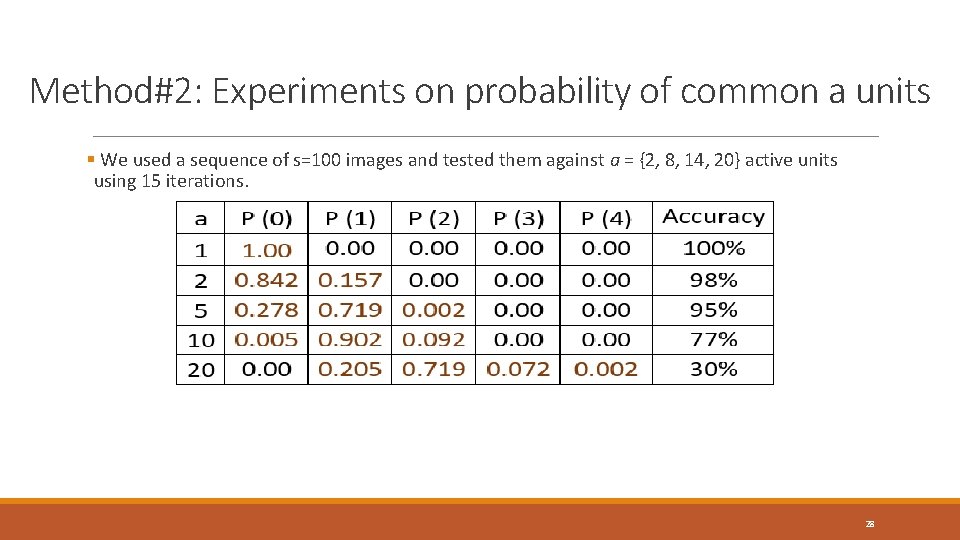

Method#2: Experiments on the probability of common active units

We used a sequence of s = 100 images and tested it against a = {2, 8, 14, 20} active units, using 15 iterations.

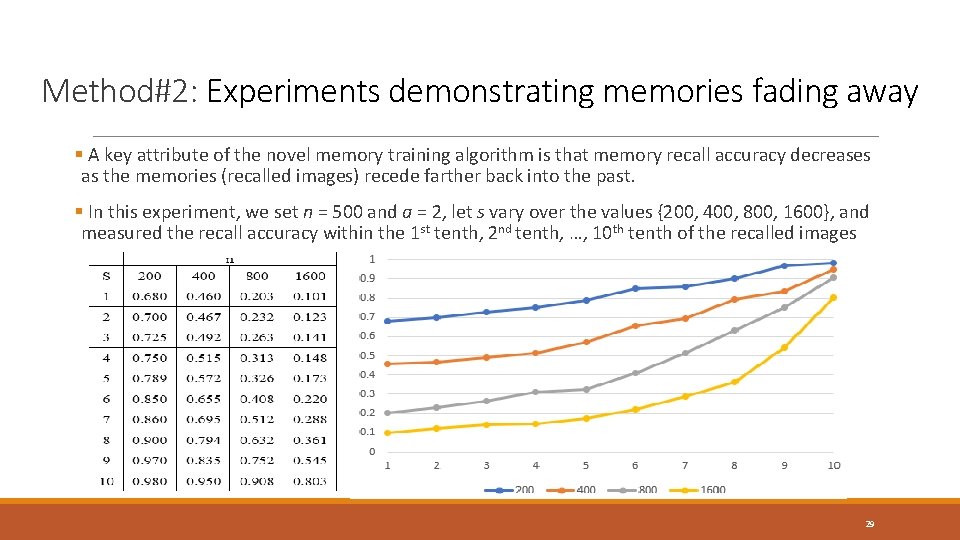

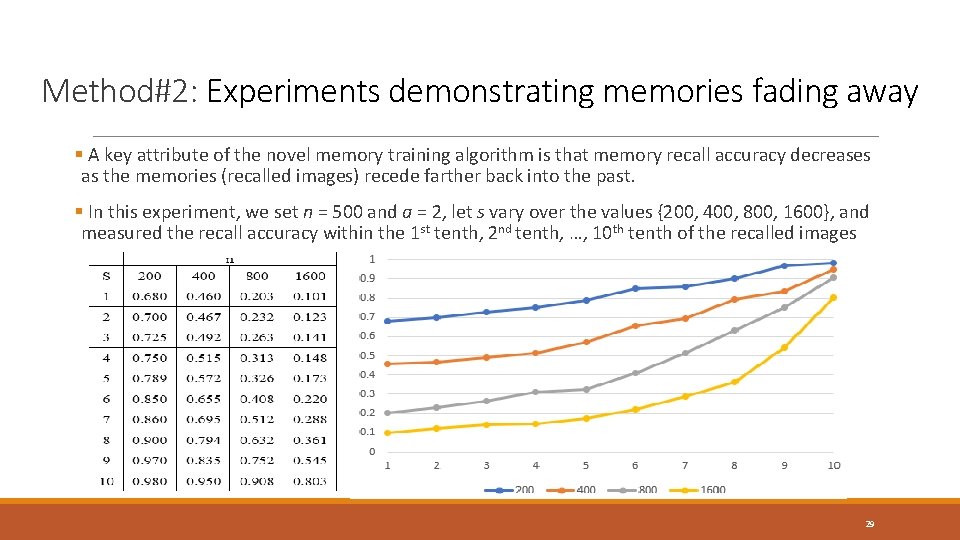

Method#2: Experiments demonstrating memories fading away

- A key attribute of the novel memory training algorithm is that memory recall accuracy decreases as the memories (recalled images) recede further into the past.
- In this experiment, we set n = 500 and a = 2, let s vary over the values {200, 400, 800, 1600}, and measured the recall accuracy within the 1st tenth, 2nd tenth, …, 10th tenth of the recalled images.
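The fading effect can be illustrated with a small, self-contained simulation that trains every memory once, oldest first, and then measures recall per tenth of the sequence. Two assumptions are made that are not from the slides: random vectors stand in for the hidden-layer features, and a recall counts as correct when its relative error is below `tol`. The function name `simulate_fading` is hypothetical.

```python
import numpy as np


def simulate_fading(s: int, n: int, a: int, d: int = 64, tol: float = 0.1, seed: int = 0) -> list:
    """Recall accuracy per tenth of a sequence of s memories (tenth 1 = oldest)."""
    rng = np.random.default_rng(seed)
    targets = rng.random((s, d))                    # stand-ins for hidden-layer features
    clocks = np.zeros((s, n))
    for i in range(s):
        clocks[i, rng.choice(n, size=a, replace=False)] = 1.0

    W = np.zeros((n, d))
    for c, t in zip(clocks, targets):               # oldest memory is trained first
        active = np.flatnonzero(c)
        W[active] += (t - c @ W) / a                # one-shot update on the active rows

    recalled = clocks @ W
    rel_err = np.linalg.norm(recalled - targets, axis=1) / np.linalg.norm(targets, axis=1)
    correct = rel_err < tol
    return [float(chunk.mean()) for chunk in np.array_split(correct, 10)]


for s in (200, 400, 800, 1600):
    print(s, [round(x, 2) for x in simulate_fading(s, n=500, a=2)])
```

In this sketch a stored memory is disturbed only when a later clock shares one of its active units, so the oldest tenths are disturbed most often, which mirrors the trend the experiment describes.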

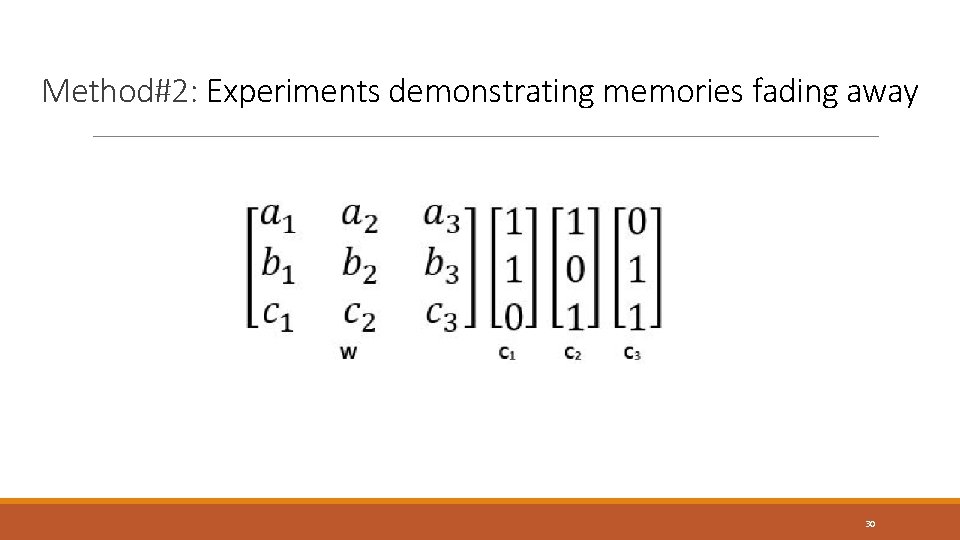

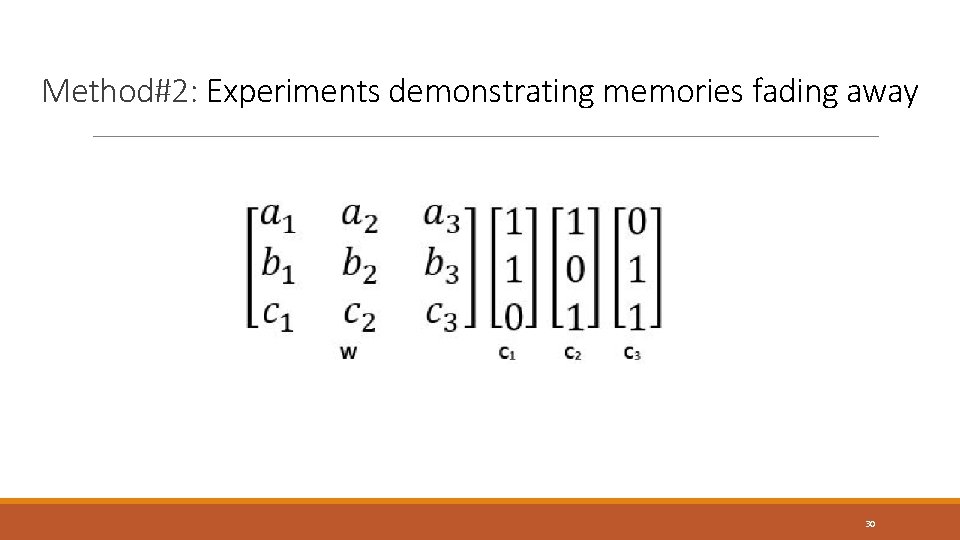

Method#2: Experiments demonstrating memories fading away

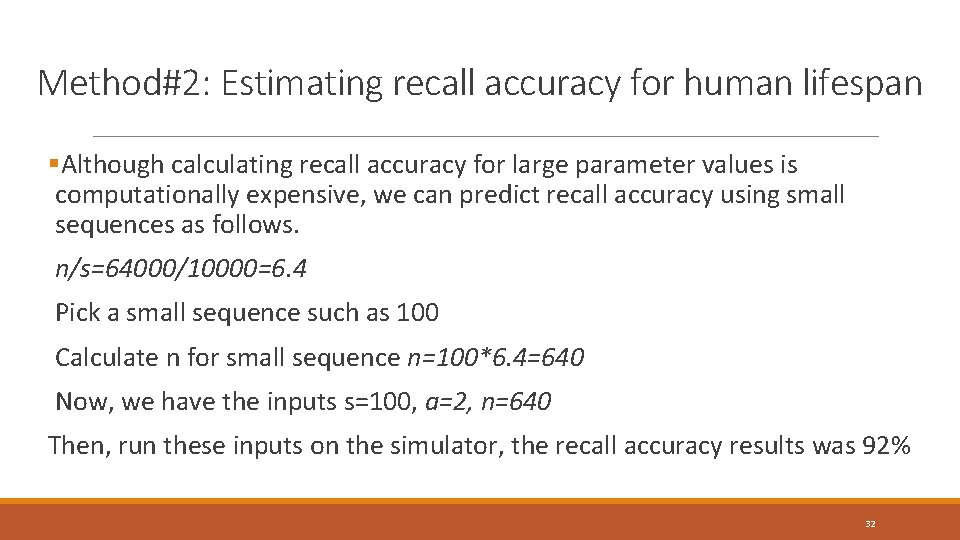

Method#2: Estimating recall accuracy for a human lifespan

- Recall accuracy depends on the relationship between the sequence length s and the total number of units n.
- Assume humans have a memory event every second of their lives.
- There are $3.15 \times 10^{7}$ seconds per year, or $3.15 \times 10^{9}$ seconds in a generous 100-year lifespan.
- We can predict the accuracy for any sequence length using smaller sequences.

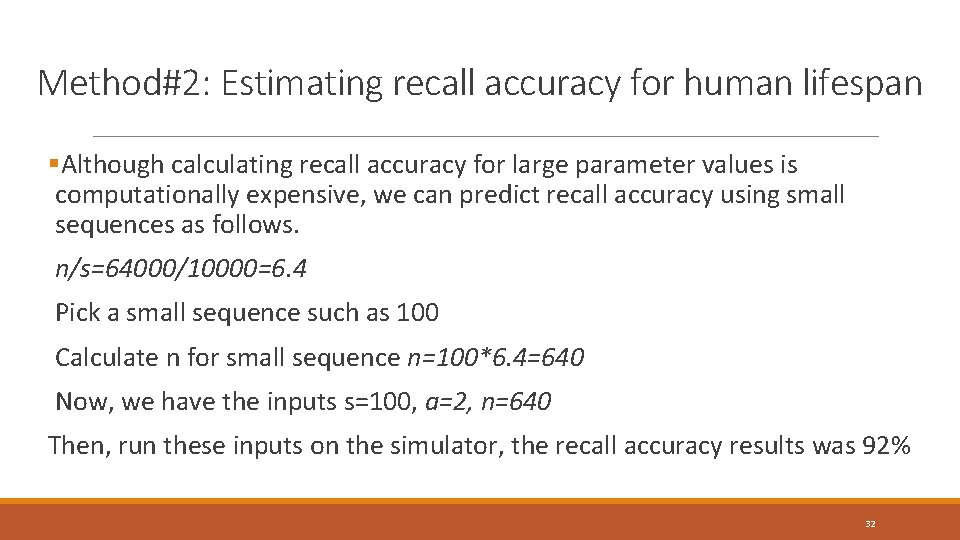

Method#2: Estimating recall accuracy for a human lifespan

Although calculating recall accuracy for large parameter values is computationally expensive, we can predict it using small sequences as follows:

1. Compute the ratio $n/s = 64000/10000 = 6.4$.
2. Pick a small sequence length, such as $s = 100$.
3. Calculate $n$ for the small sequence: $n = 100 \times 6.4 = 640$.
4. This gives the inputs $s = 100$, $a = 2$, $n = 640$.
5. Running these inputs on the simulator gave a recall accuracy of 92%.
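The scaling step is simple arithmetic. The sketch below reproduces it and, under the same assumption that recall accuracy is governed by the ratio n/s at fixed a, also shows how many clock units a 100-year sequence would need at that ratio. The simulator run that produced 92% is not reproduced here, and `scaled_n` is a hypothetical helper.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60          # 31,536,000, about 3.15 × 10^7
LIFETIME_EVENTS = 100 * SECONDS_PER_YEAR        # 3,153,600,000, about 3.15 × 10^9


def scaled_n(n_large: int, s_large: int, s_new: int) -> int:
    """Number of clock units for sequence length s_new, keeping n/s fixed."""
    ratio = n_large / s_large                   # e.g. 64000 / 10000 = 6.4
    return round(s_new * ratio)


print(scaled_n(64000, 10000, 100))              # 640
print(scaled_n(64000, 10000, LIFETIME_EVENTS))  # 20183040000
```

At n/s = 6.4, a lifetime of one event per second would need about 2.02 × 10^10 clock units under this assumption.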

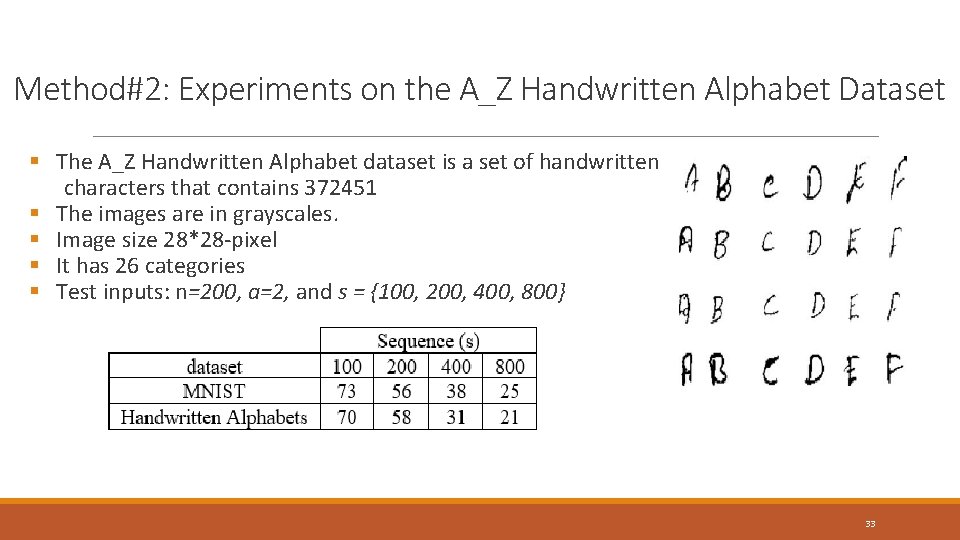

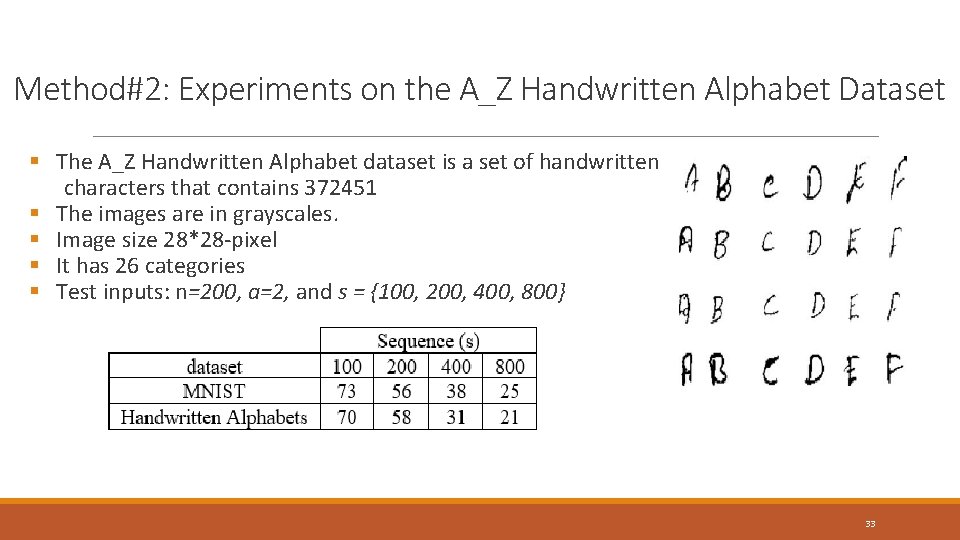

Method#2: Experiments on the A_Z Handwritten Alphabet Dataset

- The A_Z Handwritten Alphabet dataset is a set of handwritten characters that contains 372,451 images.
- The images are grayscale.
- Image size: 28 × 28 pixels
- It has 26 categories.
- Test inputs: n = 200, a = 2, and s = {100, 200, 400, 800}

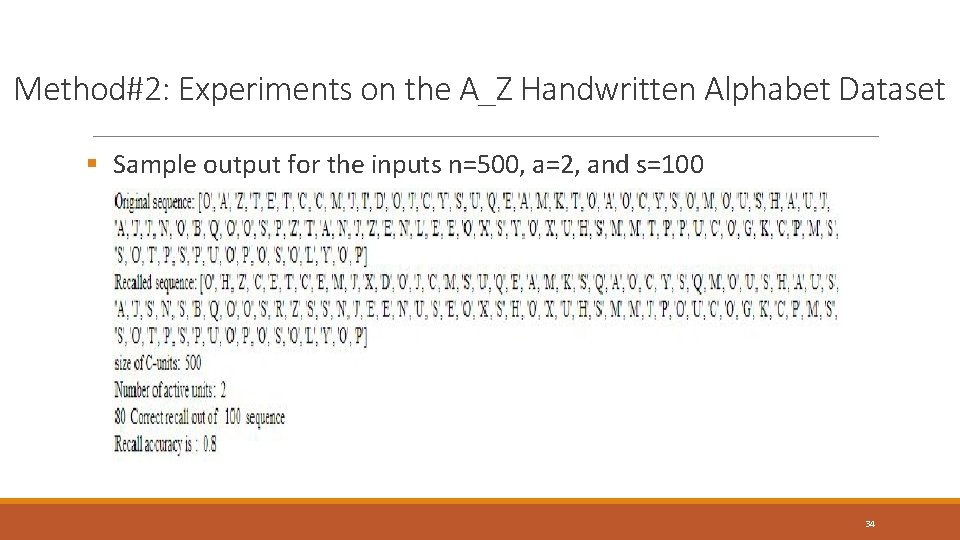

Method#2: Experiments on the A_Z Handwritten Alphabet Dataset

- Sample output for the inputs n = 500, a = 2, and s = 100

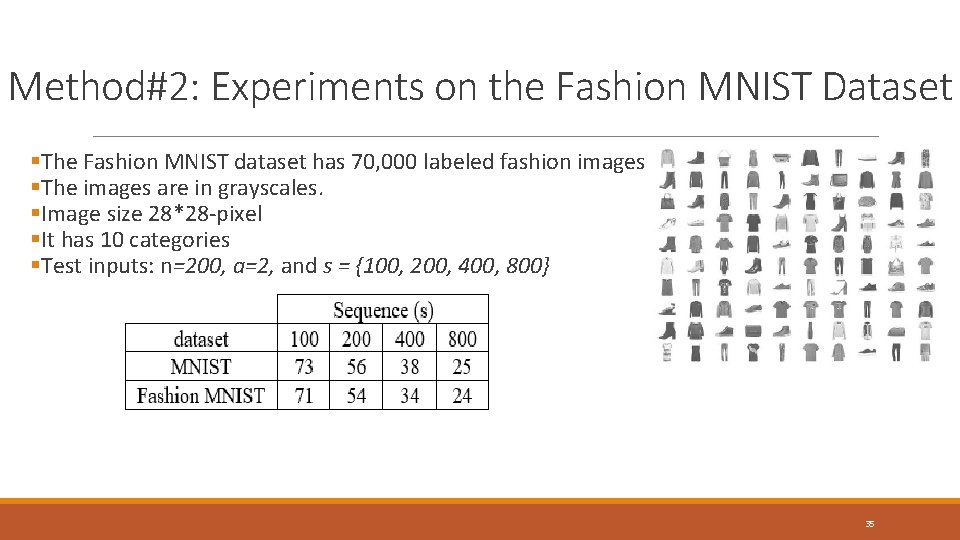

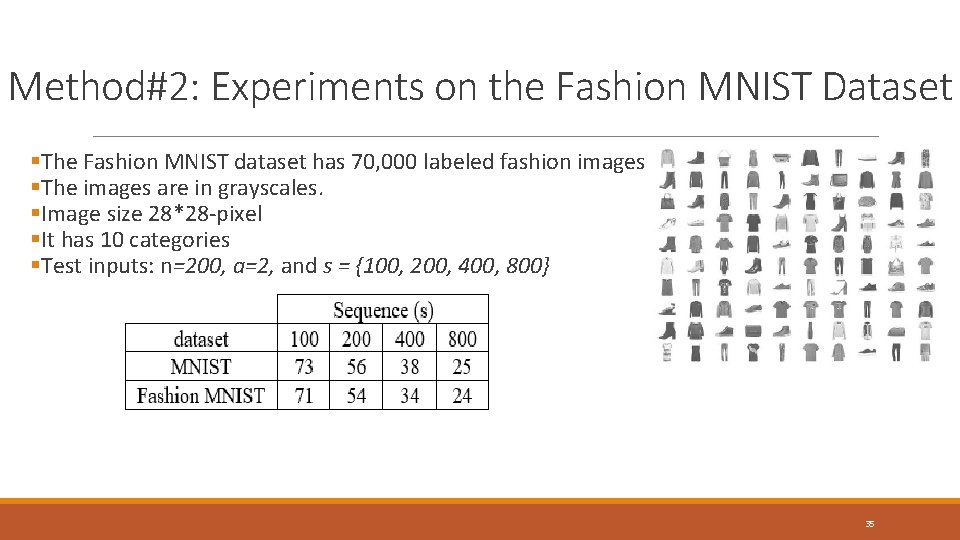

Method#2: Experiments on the Fashion MNIST Dataset

- The Fashion MNIST dataset has 70,000 labeled fashion images.
- The images are grayscale.
- Image size: 28 × 28 pixels
- It has 10 categories.
- Test inputs: n = 200, a = 2, and s = {100, 200, 400, 800}

Method#2: Experiments on the Fashion MNIST Dataset

- Sample output for the inputs n = 500, a = 2, and s = 100

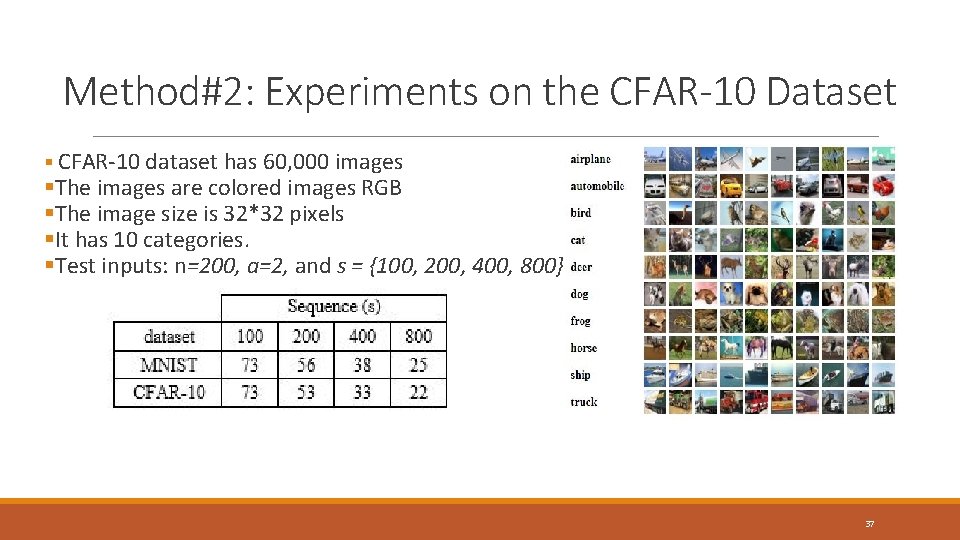

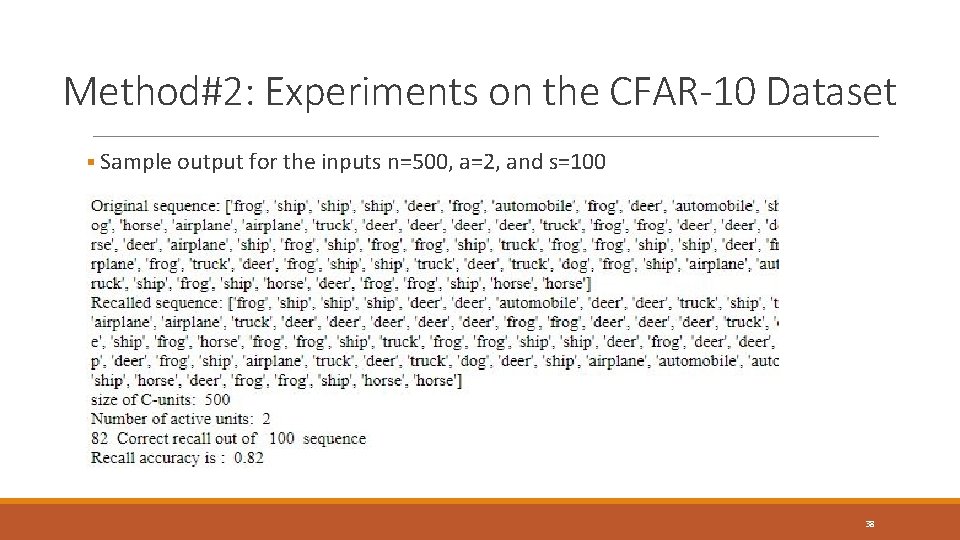

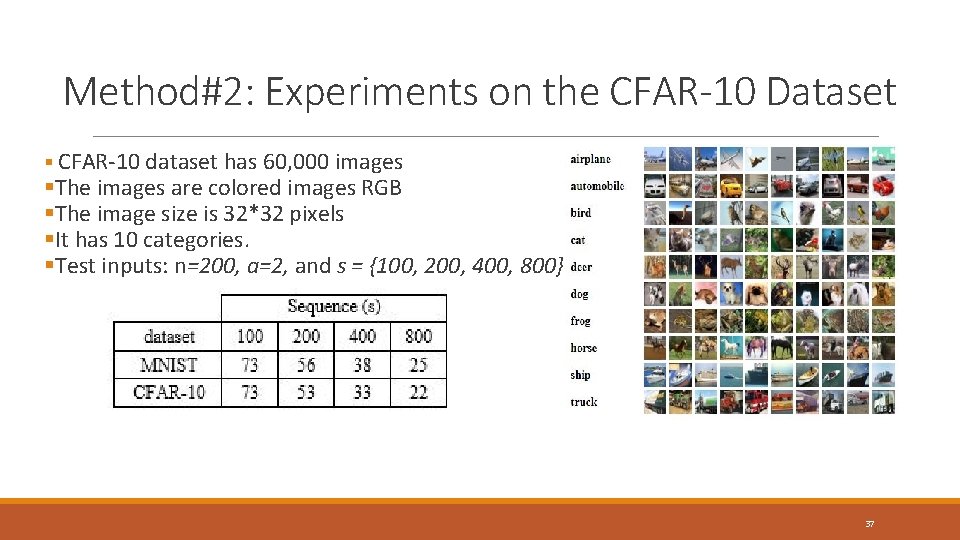

Method#2: Experiments on the CIFAR-10 Dataset

- The CIFAR-10 dataset has 60,000 images.
- The images are color (RGB) images.
- Image size: 32 × 32 pixels
- It has 10 categories.
- Test inputs: n = 200, a = 2, and s = {100, 200, 400, 800}

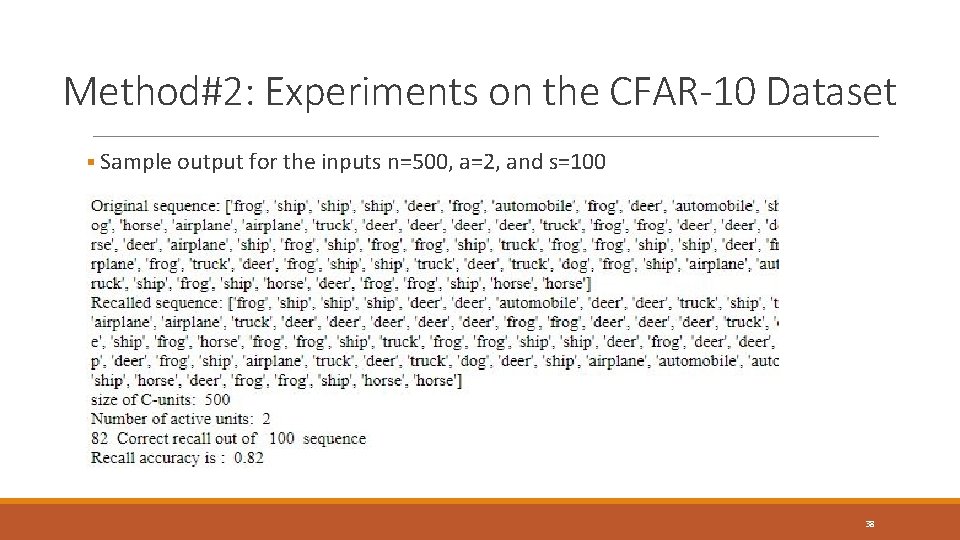

Method#2: Experiments on the CIFAR-10 Dataset

- Sample output for the inputs n = 500, a = 2, and s = 100

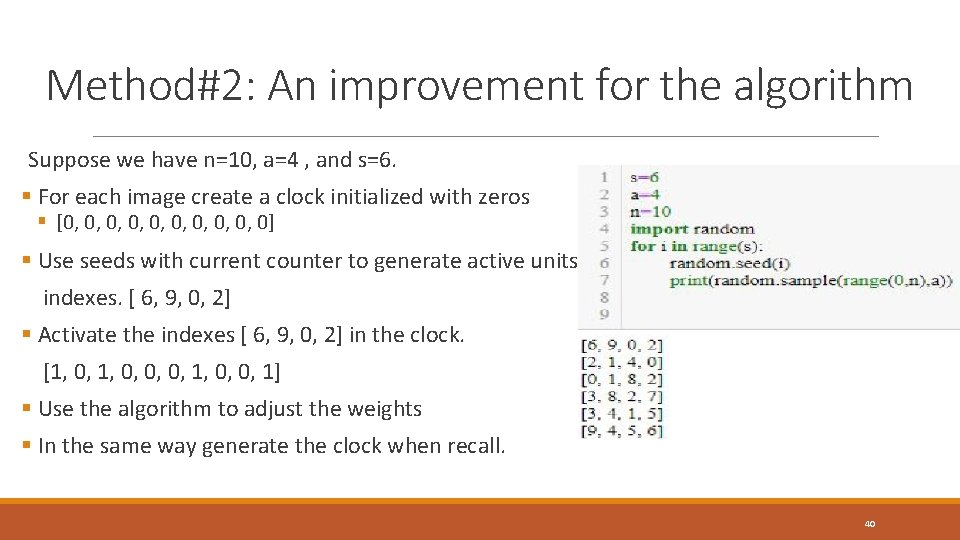

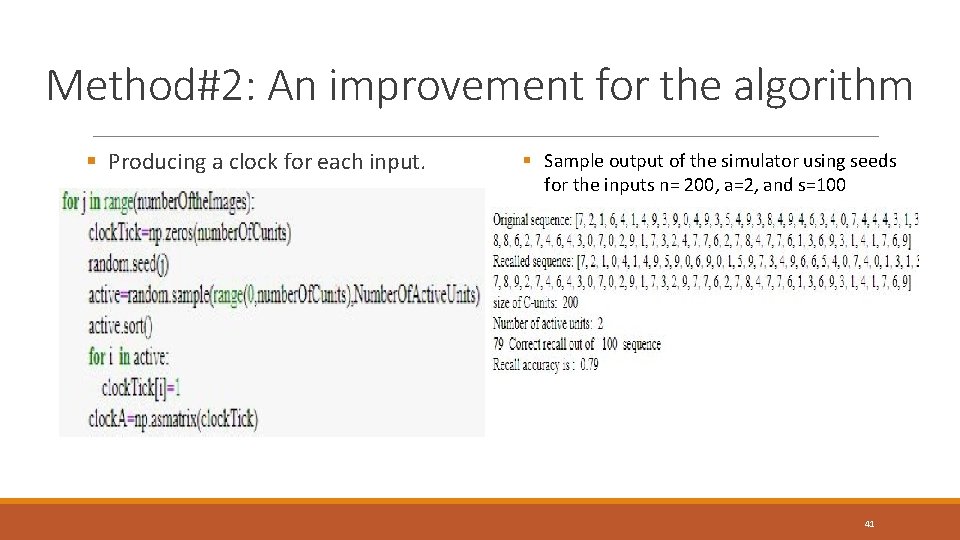

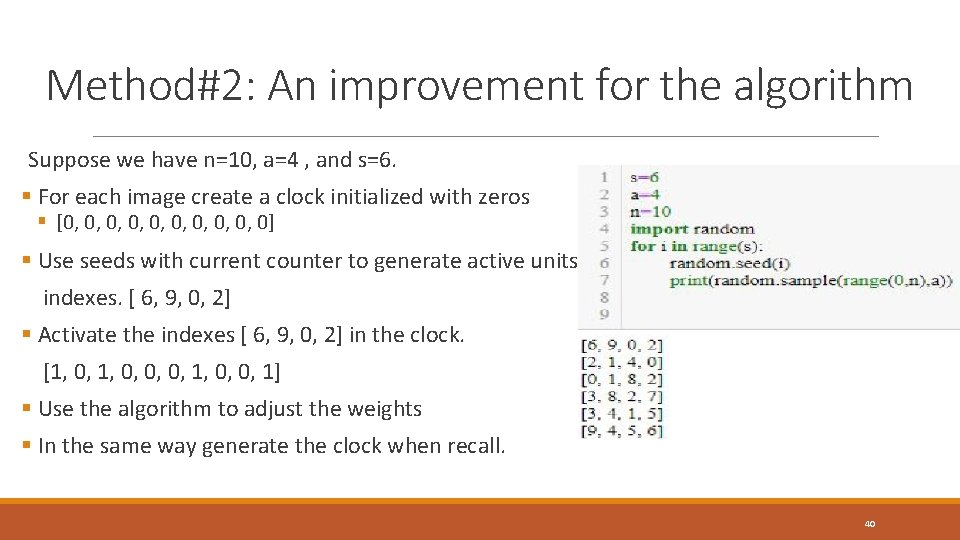

Method#2: An improvement to the algorithm

- The current algorithm stores the clocks so that they can be used again when recalling the sequence.
- Every image has its own clock.
- A 100-year lifespan has 3,153,600,000 seconds, so 3,153,600,000 clocks would need to be stored to recall the sequence.
- Improvement: use seed() to generate the active-unit indexes instead of storing the clocks.

Method#2: An improvement to the algorithm

Suppose we have n = 10, a = 4, and s = 6.

- For each image, create a clock initialized with zeros: [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
- Use the seed with the current counter to generate the active-unit indexes, e.g. [6, 9, 0, 2].
- Activate the indexes [6, 9, 0, 2] in the clock: [1, 0, 1, 0, 0, 0, 1, 0, 0, 1]
- Use the algorithm to adjust the weights.
- At recall time, generate the clock in the same way.
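The seed-based improvement can be sketched as a function that regenerates a clock from the event counter, so nothing but the counter has to be kept. The slides mention seed(); this sketch instead uses NumPy's `np.random.default_rng`, and deriving the seed as `base_seed + counter` is an assumption. The function name `clock_for_event` is hypothetical.

```python
import numpy as np


def clock_for_event(counter: int, n: int, a: int, base_seed: int = 0) -> np.ndarray:
    """Regenerate the clock for event number `counter` without storing it.

    Assumption: the seed is base_seed + counter; any deterministic mapping would work.
    """
    rng = np.random.default_rng(base_seed + counter)
    clock = np.zeros(n, dtype=np.int8)
    clock[rng.choice(n, size=a, replace=False)] = 1   # a distinct active units
    return clock


# Training and recall call the same function, so only the counter is needed.
train_clock = clock_for_event(counter=5, n=10, a=4)
recall_clock = clock_for_event(counter=5, n=10, a=4)
print(train_clock, np.array_equal(train_clock, recall_clock))
```

Because the generator is seeded deterministically, training and recall produce identical clocks for the same counter, trading clock storage for a small amount of recomputation.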

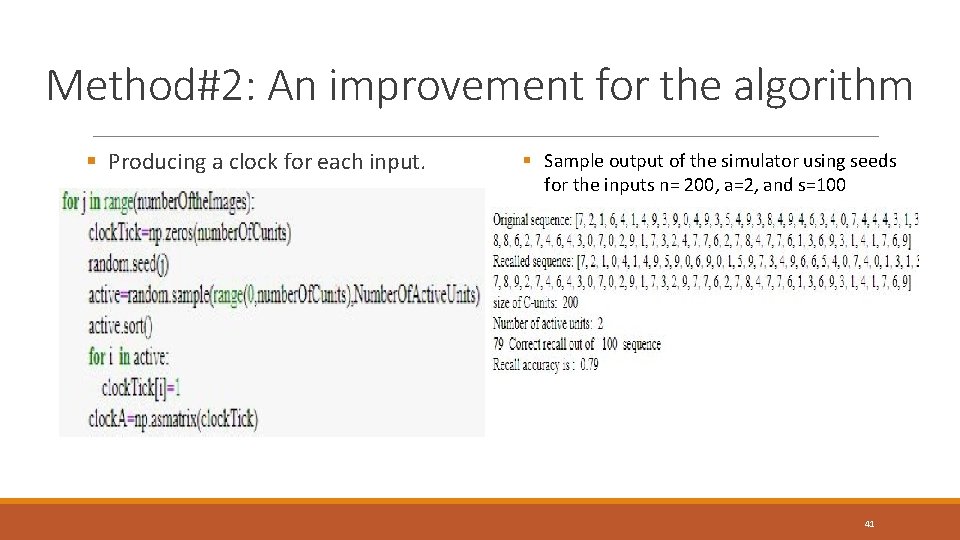

Method#2: An improvement to the algorithm

- A clock is produced for each input.
- Sample output of the simulator using seeds for the inputs n = 200, a = 2, and s = 100

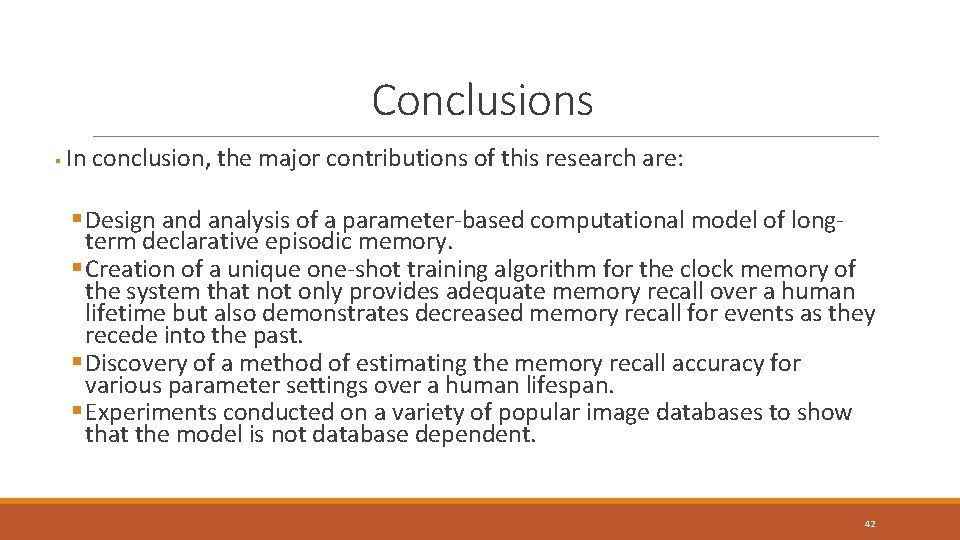

Conclusions

In conclusion, the major contributions of this research are:

- Design and analysis of a parameter-based computational model of long-term declarative episodic memory.
- Creation of a unique one-shot training algorithm for the clock memory of the system that not only provides adequate memory recall over a human lifetime but also demonstrates decreasing memory recall for events as they recede into the past.
- Discovery of a method for estimating the memory recall accuracy for various parameter settings over a human lifespan.
- Experiments conducted on a variety of popular image datasets to show that the model is not dataset-dependent.

Publications

- Yousef Alhwaiti, M. Z. Chowdhury, A. Kamruzzaman, and C. C. Tappert, "Analysis of a Parameter-Based Computational Model of Long-Term Declarative Episodic Memory," Proc. 6th Annual Conf. Comp. Science Comp. Intelligence: Artificial Intelligence (CSCI-ISAI), Las Vegas, Dec 2019
- Abu Kamruzzaman, Yousef Alhwaiti, and Charles C. Tappert, "A Comparative Study of Convolutional Neural Network Models with Rosenblatt's Brain Model," Proc. IEEE Future of Information and Communication Conf. (FICC), San Francisco, Mar 2019
- A. Kamruzzaman, Yousef Alhwaiti, A. Leider, and C. C. Tappert, "Quantum Deep Learning Neural Network," Proc. IEEE Future of Information and Communication Conf. (FICC), San Francisco, Mar 2019
- Sukun Li, Abu Kamruzzaman, Yousef Alhwaiti, Peiyi Shen, and Charles C. Tappert, "Emotion Recognition using Unsupervised and Supervised Learning and Simulate Emotions with Brain Model," Pace CSIS Research, 2018
- Yousef Alhwaiti, Mohammad Chowdhury, Abu Kamruzzaman, and Charles C. Tappert, "One-Shot Deep Learning Models of Long-Term Declarative Episodic Memory," Pace CSIS Research, 2019

References

1. Couchot, J., Couturier, R., Guyeux, C., & Salomon, M. (2016). Steganalysis via a Convolutional Neural Network using Large Convolution Filters. arXiv, abs/1605.07946.
2. Lee, Y. X. (2019, May 23). Converting a Simple Deep Learning Model from PyTorch to TensorFlow. Retrieved from https://towardsdatascience.com/converting-a-simple-deep-learning-model-from-pytorch-to-tensorflow-b6b353351f5d
3. One-shot Learning. (2018). Retrieved from http://ice.dlut.edu.cn/valse2018/ppt/07.one_shot_add_v2.pdf
4. McLeod, Saul. "Atkinson and Shiffrin | Multi Store Model of Memory." Simply Psychology, 1 Jan. 1970, www.simplypsychology.org/multi-store.html. https://www.sciencedirect.com/topics/agricultural-and-biological-sciences/declarative-memory
5. Betournay, Scott. "Long Term Memory." SlidePlayer, 2016, slideplayer.com/slide/5224563/.
6. Mastin, Luke. "Episodic & Semantic Memory." Episodic Memory and Semantic Memory - Types of Memory, The Human Memory, 2018, www.human-memory.net/types_episodic.html.
7. "Memory." Memory and Aging Center, University of California San Francisco, memory.ucsf.edu/memory.
8. Rosebrock, A. Fashion MNIST with Keras and Deep Learning. https://www.pyimagesearch.com/2019/02/11/fashion-mnist-with-keras-and-deep-learning/
9. Krizhevsky, A. The CIFAR-10 dataset, 2009. Available at https://www.cs.toronto.edu/~kriz/cifar.html
10. Lee, Yu Xuan. "Converting a Simple Deep Learning Model from PyTorch to TensorFlow." Medium, Towards Data Science, 23 May 2019, https://towardsdatascience.com/converting-a-simple-deep-learning-model-from-pytorch-to-tensorflow-b6b353351f5d

Thank you