On the Importance of Word and Sentence Representation Learning in Implicit Discourse Relation Classification

On the Importance of Word and Sentence Representation Learning in Implicit Discourse Relation Classification

Xin Liu¹, Jiefu Ou¹, Yangqiu Song¹, Xin Jiang²

¹The Hong Kong University of Science and Technology; ²Huawei Noah’s Ark Lab

Outline

- Implicit Discourse Relation Classification
- Bilateral Matching and Gated Fusion with RoBERTa
- Experiments and Ablation Studies
- Conclusion

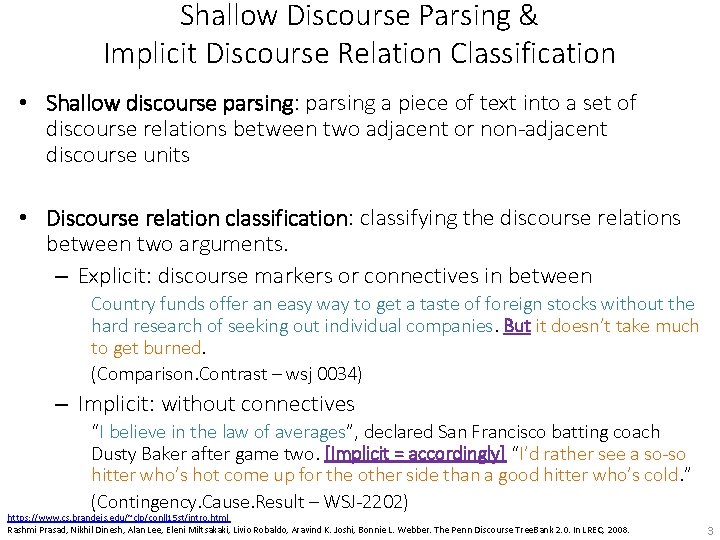

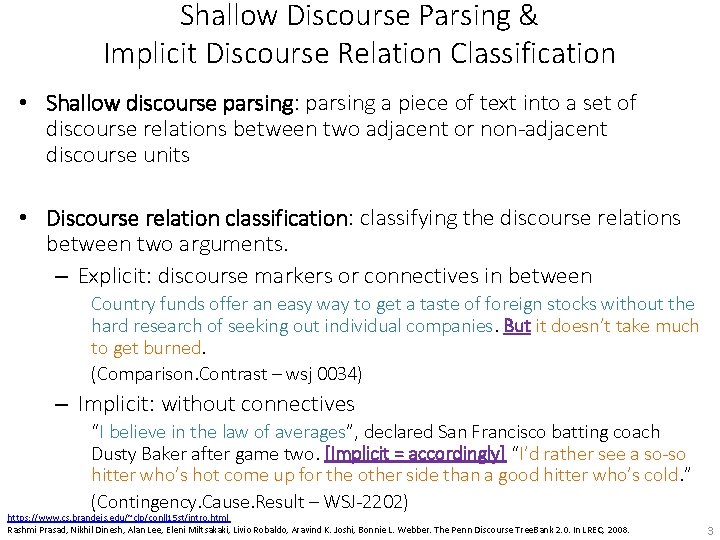

Shallow Discourse Parsing & Implicit Discourse Relation Classification

- Shallow discourse parsing: parsing a piece of text into a set of discourse relations, each holding between two adjacent or non-adjacent discourse units
- Discourse relation classification: classifying the discourse relation between two arguments
  - Explicit: a discourse marker or connective appears between the arguments. Country funds offer an easy way to get a taste of foreign stocks without the hard research of seeking out individual companies. But it doesn’t take much to get burned. (Comparison.Contrast, wsj_0034)
  - Implicit: no connective appears. “I believe in the law of averages”, declared San Francisco batting coach Dusty Baker after game two. [Implicit = accordingly] “I’d rather see a so-so hitter who’s hot come up for the other side than a good hitter who’s cold.” (Contingency.Cause.Result, wsj_2202)

Sources: https://www.cs.brandeis.edu/~clp/conll15st/intro.html; Rashmi Prasad, Nikhil Dinesh, Alan Lee, Eleni Miltsakaki, Livio Robaldo, Aravind K. Joshi, and Bonnie L. Webber. The Penn Discourse TreeBank 2.0. In LREC, 2008.
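To make the explicit/implicit distinction concrete, here is a minimal sketch of how one relation instance might be represented. The class and field names are hypothetical (this is not the PDTB file format). The point is that for an implicit relation the annotated connective exists only as part of the label, so a classifier sees the two arguments with nothing between them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiscourseRelation:
    """Hypothetical container for one PDTB-style relation instance."""
    arg1: str
    arg2: str
    connective: str  # explicit: present in the text; implicit: inserted by annotators
    explicit: bool
    sense: str       # dot-separated sense label, e.g. "Comparison.Contrast"

    def model_input(self) -> str:
        """Text a classifier actually sees: implicit relations hide the connective."""
        if self.explicit:
            return f"{self.arg1} {self.connective} {self.arg2}"
        return f"{self.arg1} {self.arg2}"


explicit_rel = DiscourseRelation(
    arg1="Country funds offer an easy way to get a taste of foreign stocks "
         "without the hard research of seeking out individual companies.",
    arg2="it doesn't take much to get burned.",
    connective="But",
    explicit=True,
    sense="Comparison.Contrast",
)

implicit_rel = DiscourseRelation(
    arg1="\"I believe in the law of averages,\" declared San Francisco batting "
         "coach Dusty Baker after game two.",
    arg2="\"I'd rather see a so-so hitter who's hot come up for the other side "
         "than a good hitter who's cold.\"",
    connective="accordingly",  # known only from annotation, never shown to the model
    explicit=False,
    sense="Contingency.Cause.Result",
)
```

For the explicit example the connective "But" is a strong cue for Comparison.Contrast; for the implicit one the model must infer "accordingly" from the arguments alone.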

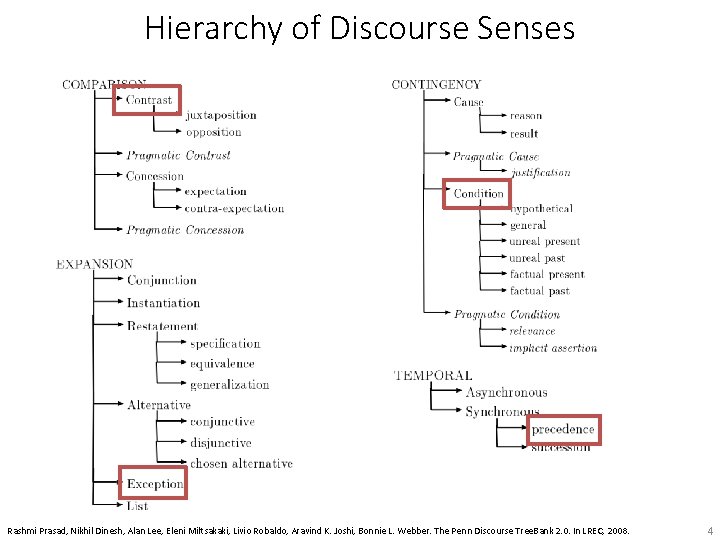

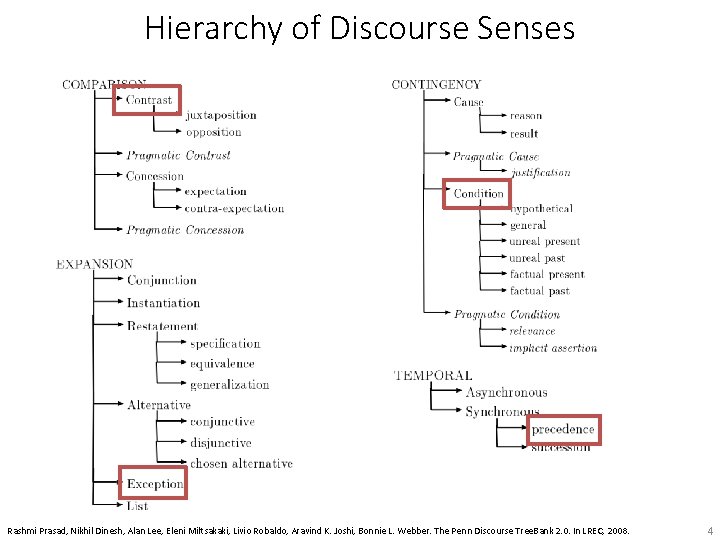

Hierarchy of Discourse Senses

Source: Rashmi Prasad, Nikhil Dinesh, Alan Lee, Eleni Miltsakaki, Livio Robaldo, Aravind K. Joshi, and Bonnie L. Webber. The Penn Discourse TreeBank 2.0. In LREC, 2008.
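Because the senses form a hierarchy, one dot-separated label can be read at several levels; PDTB 2.0 has four level-1 classes (Temporal, Contingency, Comparison, Expansion). The minimal sketch below truncates a label to a given level, which is one way a coarse (level-1) label could be derived from a finer one. The helper name `truncate_sense` is hypothetical, and the code assumes the dot notation used in the examples on these slides.

```python
from typing import Tuple

# Level-1 classes of the PDTB 2.0 sense hierarchy.
LEVEL1_CLASSES: Tuple[str, ...] = ("Temporal", "Contingency", "Comparison", "Expansion")


def truncate_sense(sense: str, level: int) -> str:
    """Keep only the first `level` components of a dot-separated sense label."""
    if level < 1:
        raise ValueError("level must be >= 1")
    return ".".join(sense.split(".")[:level])


for s in ("Contingency.Cause.Result", "Comparison.Contrast"):
    print(s, "->", truncate_sense(s, 1), "|", truncate_sense(s, 2))
```

Asking for a deeper level than the label has simply returns the label unchanged, so "Comparison.Contrast" stays as-is at level 3.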

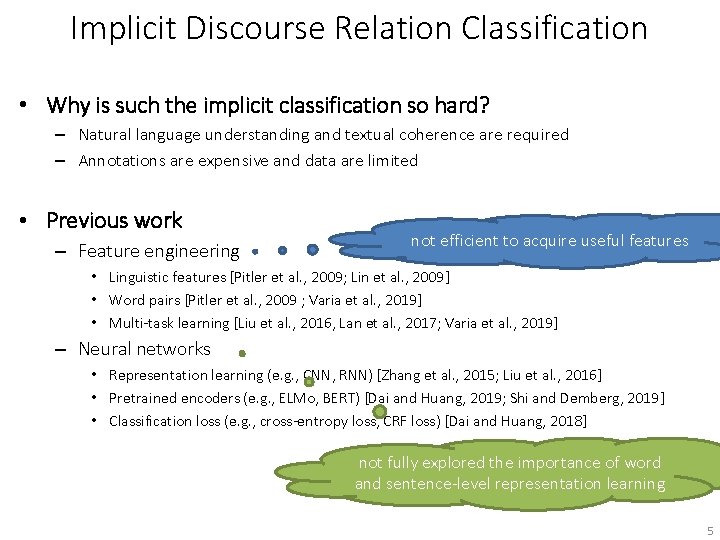

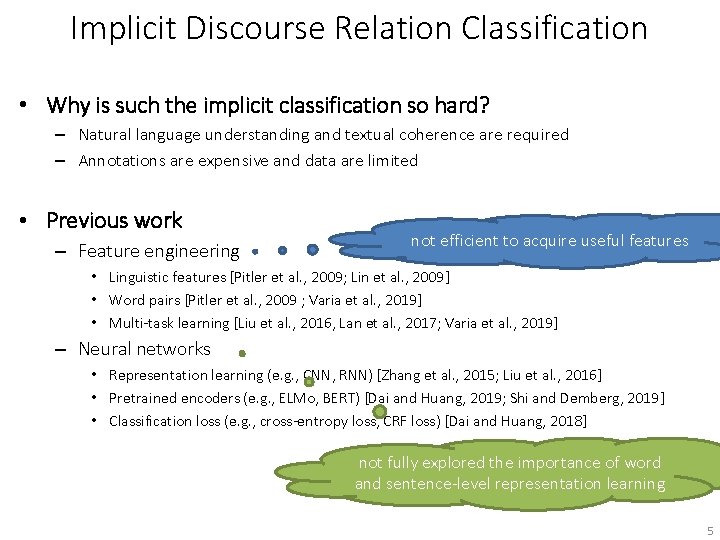

Implicit Discourse Relation Classification

- Why is implicit classification so hard?
  - It requires natural language understanding and reasoning about textual coherence
  - Annotation is expensive, so data are limited
- Previous work
  - Feature engineering: an inefficient way to acquire useful features
    - Linguistic features [Pitler et al., 2009; Lin et al., 2009]
    - Word pairs [Pitler et al., 2009; Varia et al., 2019]
    - Multi-task learning [Liu et al., 2016; Lan et al., 2017; Varia et al., 2019]
  - Neural networks
    - Representation learning (e.g., CNN, RNN) [Zhang et al., 2015; Liu et al., 2016]
    - Pretrained encoders (e.g., ELMo, BERT) [Dai and Huang, 2019; Shi and Demberg, 2019]
    - Classification loss (e.g., cross-entropy loss, CRF loss) [Dai and Huang, 2018]
- Gap: previous work has not fully explored the importance of word- and sentence-level representation learning

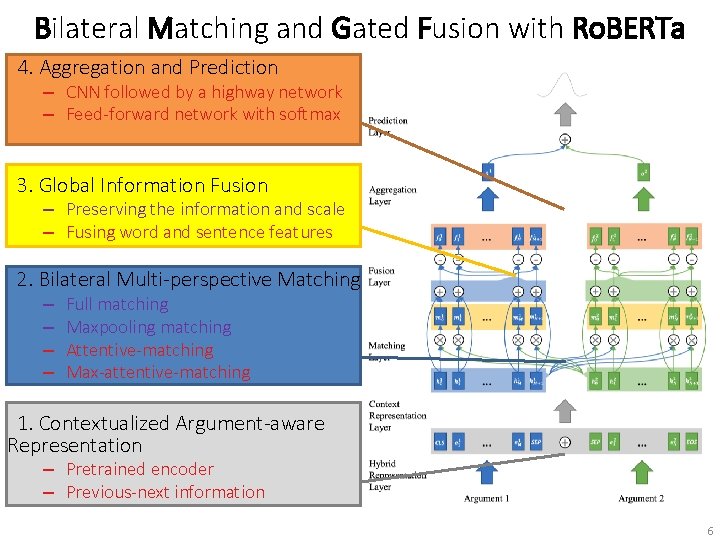

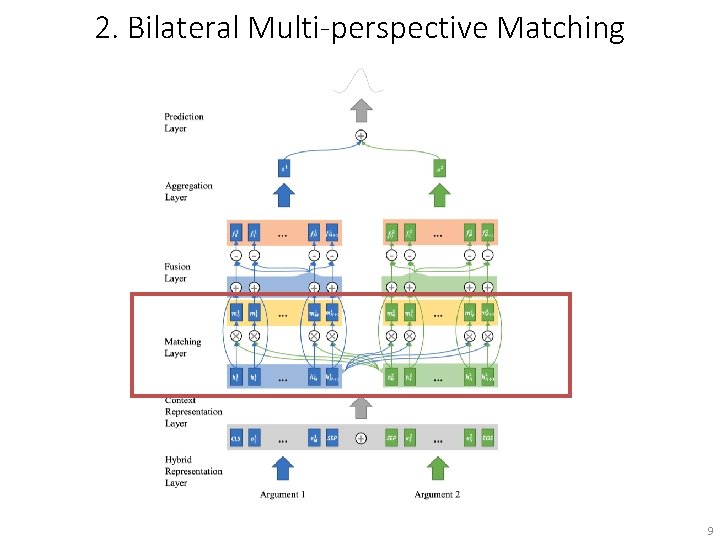

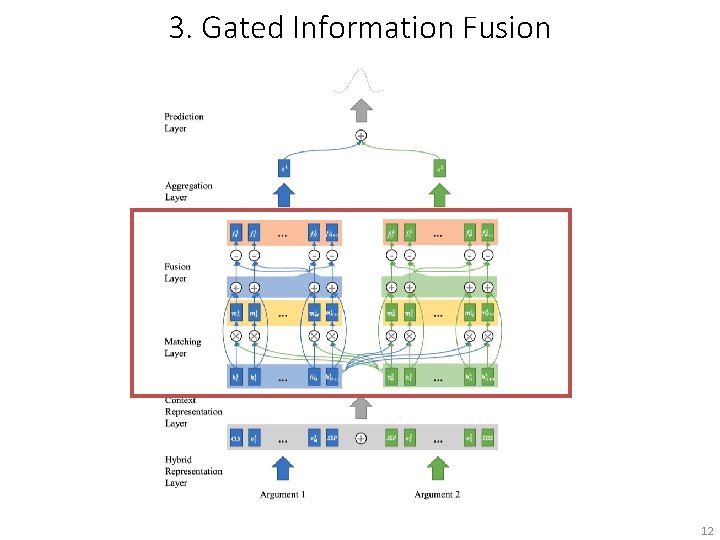

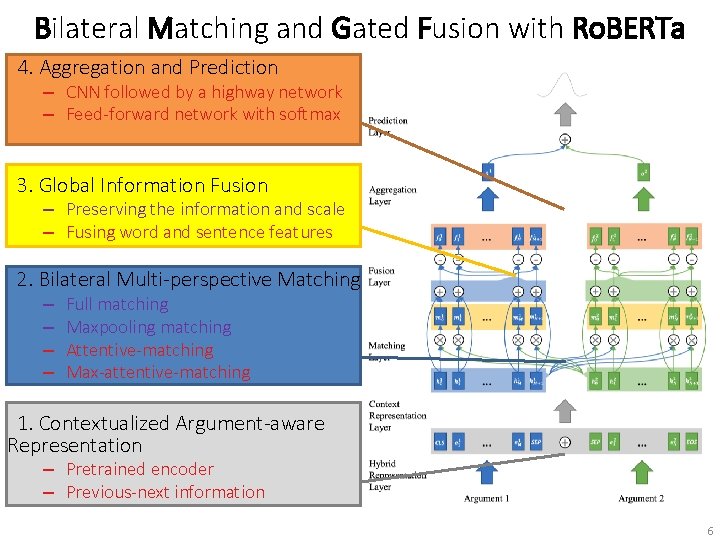

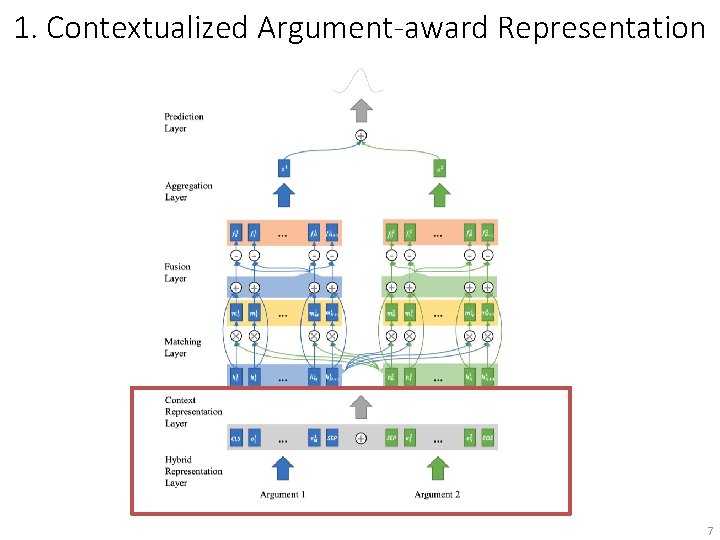

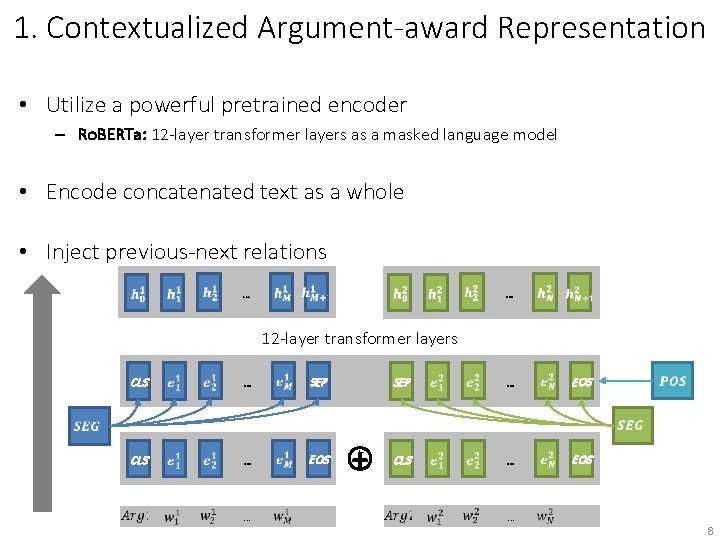

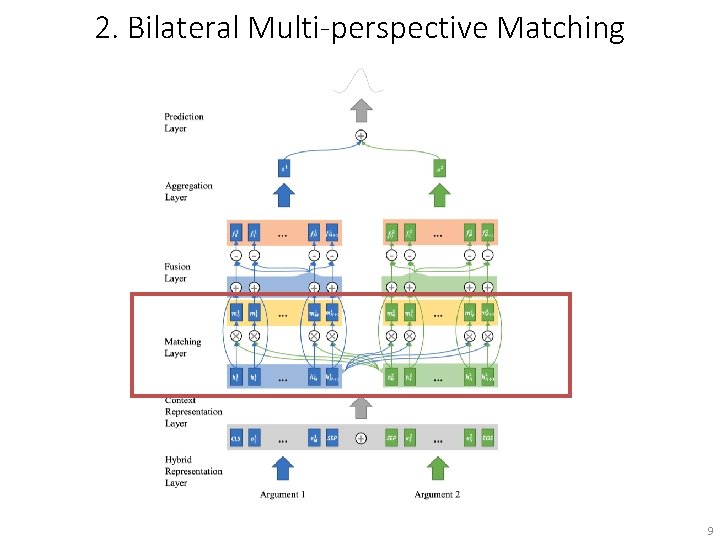

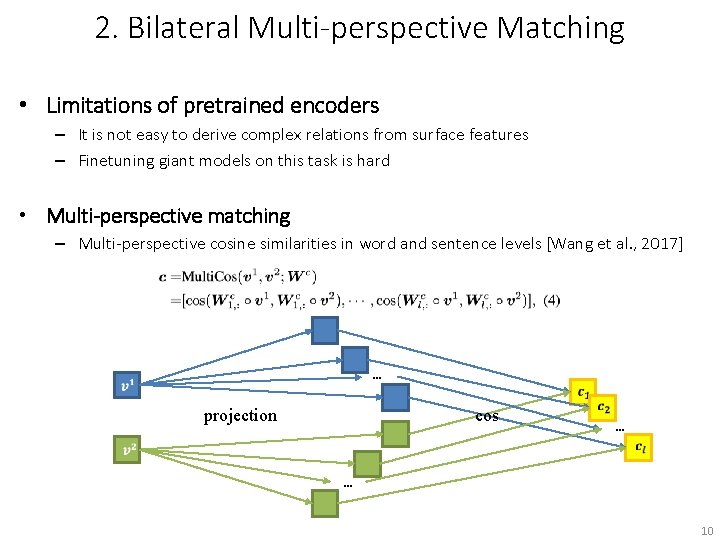

Bilateral Matching and Gated Fusion with RoBERTa

1. Contextualized Argument-aware Representation
   - Pretrained encoder
   - Previous-next information
2. Bilateral Multi-perspective Matching
   - Full matching
   - Maxpooling matching
   - Attentive matching
   - Max-attentive matching
3. Gated Information Fusion
   - Preserving the information and scale
   - Fusing word and sentence features
4. Aggregation and Prediction
   - CNN followed by a highway network
   - Feed-forward network with softmax

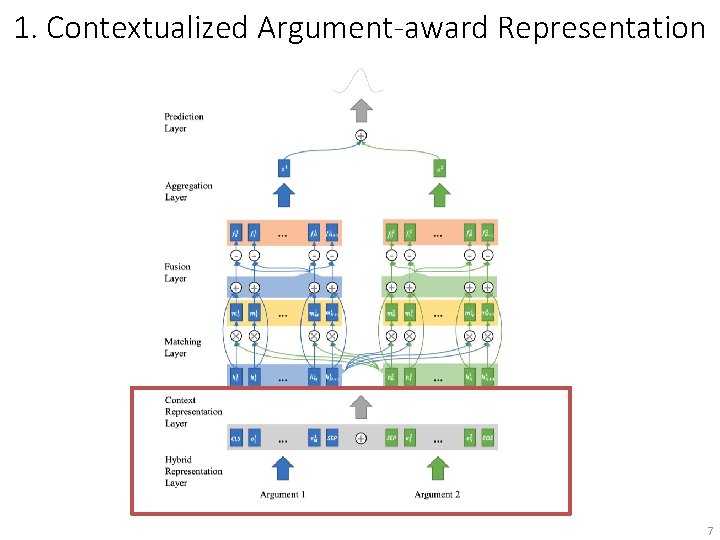

1. Contextualized Argument-aware Representation

1. Contextualized Argument-aware Representation

- Use a powerful pretrained encoder
  - RoBERTa: 12 transformer layers pretrained as a masked language model
- Encode the concatenated text as a whole
- Inject previous-next relations

(Figure: the concatenated arguments, delimited by special tokens such as CLS, SEP, and EOS, are encoded by the 12 transformer layers.)
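A minimal sketch of "encode the concatenated text as a whole" with previous-next information, assuming RoBERTa's sentence-pair layout `<s> arg1 </s></s> arg2 </s>` and assuming segment id 0 for the first argument and 1 for the second. Words stand in for the subword ids a real tokenizer would produce, and `build_pair_input` is a hypothetical helper, not the authors' code.

```python
from typing import List, Tuple

# RoBERTa's start token and separator/end token.
BOS, EOS = "<s>", "</s>"


def build_pair_input(arg1: List[str], arg2: List[str]) -> Tuple[List[str], List[int]]:
    """Concatenate two tokenized arguments into one sequence.

    Layout: <s> arg1 </s> </s> arg2 </s>.
    Segment id 0 marks the first (previous) argument and 1 the second (next);
    this is one hypothetical way to inject previous-next order.
    """
    first = [BOS] + arg1 + [EOS]
    second = [EOS] + arg2 + [EOS]
    tokens = first + second
    segment_ids = [0] * len(first) + [1] * len(second)
    return tokens, segment_ids


tokens, segments = build_pair_input(["I", "am", "too", "tired", "."],
                                    ["I", "want", "a", "rest", "."])
```

Swapping the arguments changes which tokens carry segment id 1, so the encoder can tell the two orders apart; a Siamese encoder that processes each argument separately never receives this signal.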

2. Bilateral Multi-perspective Matching

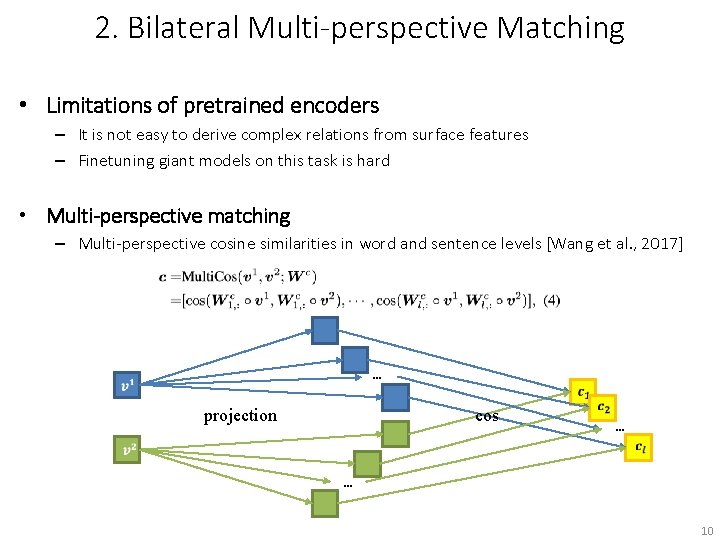

2. Bilateral Multi-perspective Matching

- Limitations of pretrained encoders
  - It is not easy to derive complex relations from surface features
  - Fine-tuning giant models on this task is hard
- Multi-perspective matching
  - Multi-perspective cosine similarities at the word and sentence levels [Wang et al., 2017]

(Figure: vectors are projected per perspective and compared by cosine similarity.)
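The multi-perspective cosine of Wang et al. (2017) compares two vectors once per perspective, re-weighting dimensions with a learned row W_k before each cosine: m_k = cos(W_k ∘ v1, W_k ∘ v2). A dependency-free sketch follows; in a real model W would be a trainable parameter, while here it is fixed by hand.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector, eps: float = 1e-8) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)  # eps avoids division by zero for all-zero vectors


def multi_perspective_cosine(v1: Vector, v2: Vector, W: List[Vector]) -> Vector:
    """m_k = cos(W_k * v1, W_k * v2) with element-wise products, one per row W_k.

    Each row of W re-weights the dimensions before comparing, so different
    perspectives can emphasize different parts of the representation.
    """
    return [cosine([w * a for w, a in zip(wk, v1)],
                   [w * b for w, b in zip(wk, v2)])
            for wk in W]


m = multi_perspective_cosine([1.0, 1.0], [1.0, -1.0], [[1.0, 1.0], [1.0, 0.0]])
```

With these two perspectives, the vectors [1, 1] and [1, -1] score 0 under the uniform first perspective but about 1 under the second, which ignores the dimension where they disagree.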

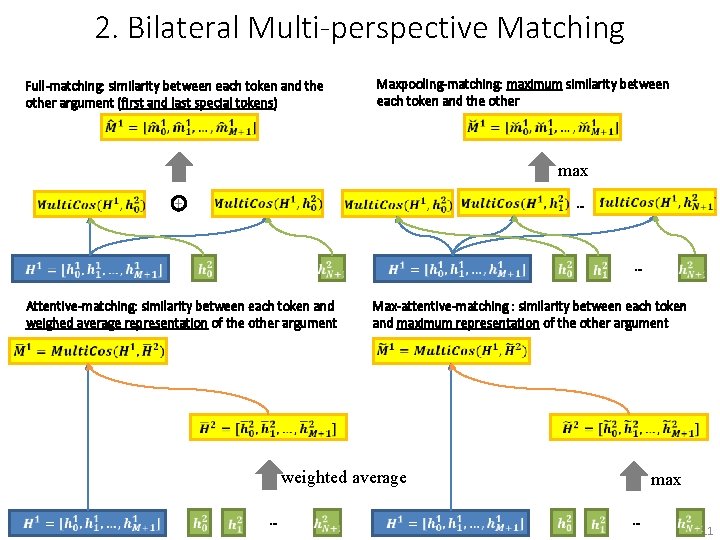

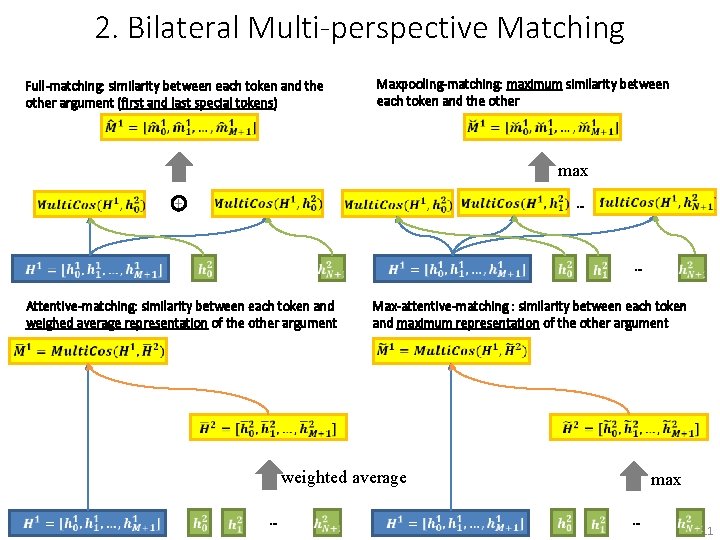

2. Bilateral Multi-perspective Matching

- Full matching: similarity between each token and a representation of the whole other argument (its first and last special tokens)
- Maxpooling matching: maximum similarity between each token and the tokens of the other argument
- Attentive matching: similarity between each token and a weighted-average representation of the other argument
- Max-attentive matching: similarity between each token and the maximum representation of the other argument
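The four strategies can be sketched on top of the multi-perspective cosine. This is a minimal, dependency-free version following Wang et al. (2017) in spirit, with stated assumptions: full matching takes one summary vector for the other argument (for example its special-token representation); attentive matching weights the other argument's tokens with a softmax over cosines (Wang et al. normalize the raw cosine similarities instead); max-attentive matching picks the single most similar token, as in Wang et al. Function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one row per token


def cosine(u: Vector, v: Vector, eps: float = 1e-8) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)


def mp_cos(v1: Vector, v2: Vector, W: Matrix) -> Vector:
    # Multi-perspective cosine: one similarity per perspective row of W.
    return [cosine([w * a for w, a in zip(wk, v1)], [w * b for w, b in zip(wk, v2)]) for wk in W]


def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def full_matching(P: Matrix, q_summary: Vector, W: Matrix) -> Matrix:
    # Each token of P vs. one vector summarizing the other argument.
    return [mp_cos(p, q_summary, W) for p in P]


def maxpool_matching(P: Matrix, Q: Matrix, W: Matrix) -> Matrix:
    # Each token of P vs. every token of Q; keep the max per perspective.
    out = []
    for p in P:
        sims = [mp_cos(p, q, W) for q in Q]
        out.append([max(col) for col in zip(*sims)])
    return out


def attentive_matching(P: Matrix, Q: Matrix, W: Matrix) -> Matrix:
    # Each token of P vs. an attention-weighted average of Q's tokens.
    out = []
    for p in P:
        weights = softmax([cosine(p, q) for q in Q])
        avg = [sum(wj * q[c] for wj, q in zip(weights, Q)) for c in range(len(p))]
        out.append(mp_cos(p, avg, W))
    return out


def max_attentive_matching(P: Matrix, Q: Matrix, W: Matrix) -> Matrix:
    # Each token of P vs. the single token of Q it is most similar to.
    out = []
    for p in P:
        best = max(Q, key=lambda q: cosine(p, q))
        out.append(mp_cos(p, best, W))
    return out
```

"Bilateral" means running each function in both directions, P against Q and Q against P, so that both arguments receive a matching vector per token and per perspective.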

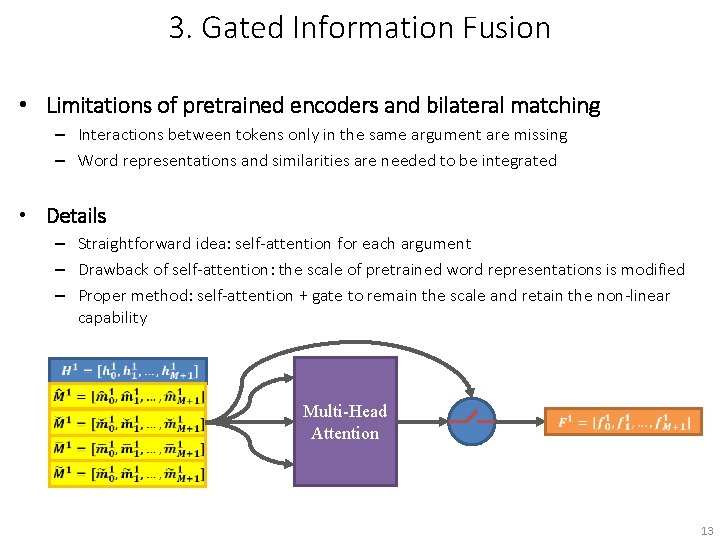

3. Gated Information Fusion

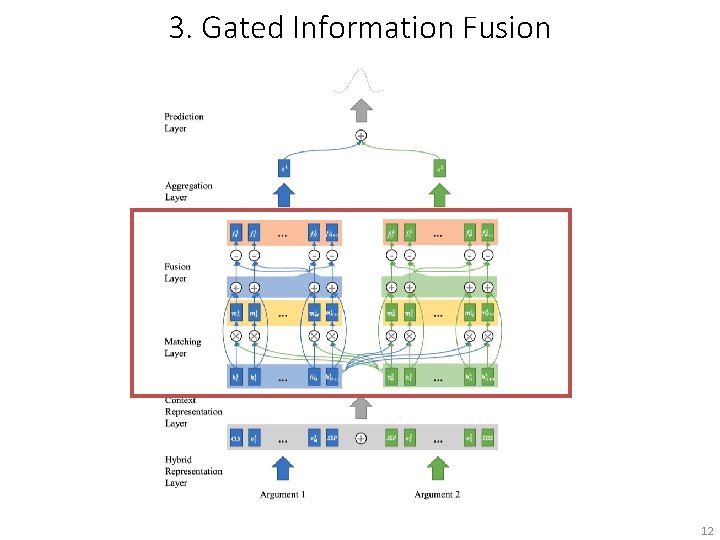

3. Gated Information Fusion

- Limitations of pretrained encoders and bilateral matching
  - Interactions between tokens within the same argument are missing
  - Word representations and similarities need to be integrated
- Details
  - Straightforward idea: self-attention over each argument
  - Drawback of self-attention: it changes the scale of the pretrained word representations
  - Proper method: self-attention plus a gate, to retain the scale and keep the non-linear capability

(Figure: Multi-Head Attention.)
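The slide does not give the exact gate, so the sketch below assumes a common form: a per-dimension sigmoid gate computed from the concatenation of a token vector h_i and its self-attention output a_i, producing g_i ⊙ h_i + (1 − g_i) ⊙ a_i. For brevity it uses single-head attention without query/key/value projections rather than the multi-head attention shown on the slide; `gated_fusion`, `Wg`, and `bg` are hypothetical names. Under these assumptions each output coordinate is a convex mix of values already present in that coordinate of the input, which is one way to read "retain the scale".

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def self_attention(H: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention without projections.

    Each output row is a convex combination of the rows of H.
    """
    d = len(H[0])
    out = []
    for q in H:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in H])
        out.append([sum(w * h[c] for w, h in zip(weights, H)) for c in range(d)])
    return out


def gated_fusion(H: Matrix, Wg: Matrix, bg: Vector) -> Matrix:
    """fused_i = g_i * h_i + (1 - g_i) * a_i, with g_i = sigmoid(Wg [h_i; a_i] + bg).

    Wg has shape d x 2d and bg has length d; the gate is computed per dimension.
    """
    A = self_attention(H)
    fused = []
    for h, a in zip(H, A):
        ha = h + a  # list concatenation, i.e. [h_i; a_i]
        g = [sigmoid(sum(w * x for w, x in zip(row, ha)) + b) for row, b in zip(Wg, bg)]
        fused.append([gi * hi + (1.0 - gi) * ai for gi, hi, ai in zip(g, h, a)])
    return fused
```

With a large positive gate bias the block passes the pretrained vectors through unchanged; with a neutral gate it blends them with their within-argument context.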

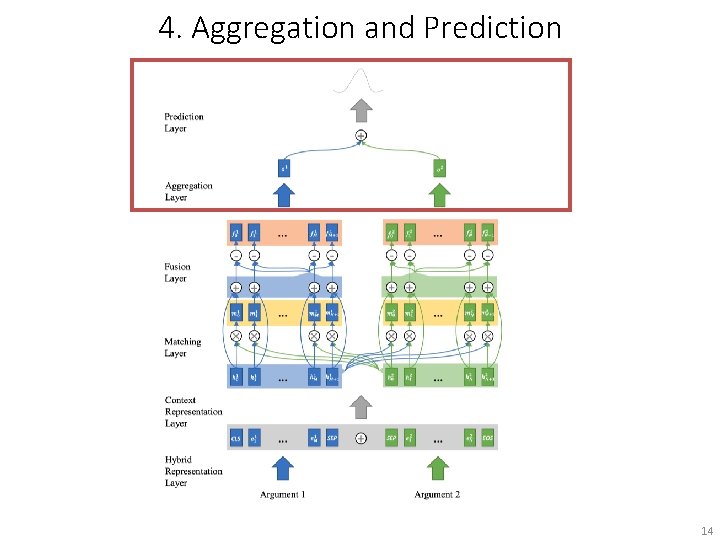

4. Aggregation and Prediction

![4. Aggregation and Prediction: limitation of the CLS token](https://slidetodoc.com/presentation_image_h2/4c975d36409a6505668f190397a4240c/image-15.jpg)

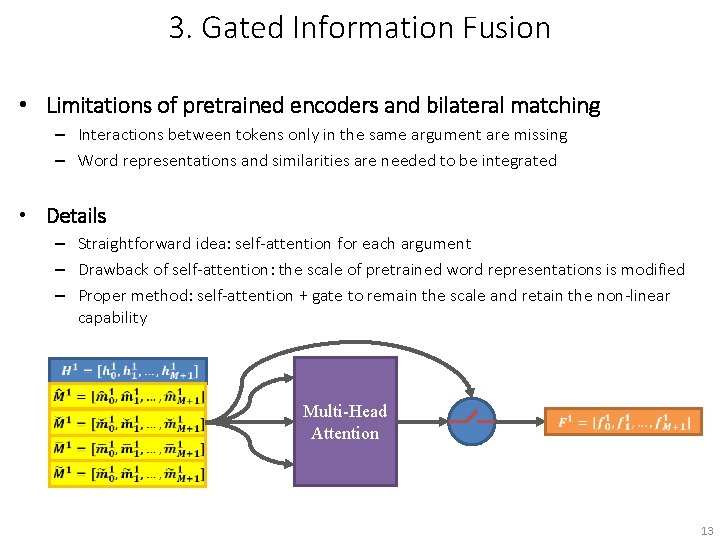

4. Aggregation and Prediction

- Limitation of [CLS]
  - The missing connective is located between the two arguments, but [CLS] sits at the beginning of the sequence
  - After matching and fusion, every token representation has become more informative
- A CNN makes full use of all representations

(Figure: convolution and pooling, highway network, feed-forward network, softmax.)
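A dependency-free sketch of the aggregation stage as listed on the architecture slide: convolution over all token representations, max-pooling over positions, a highway layer (Srivastava et al., 2015: y = t ⊙ H(x) + (1 − t) ⊙ x), then a linear layer with softmax. Filter widths, the ReLU nonlinearity, and the single highway layer are assumptions, since the slide does not specify them; all function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def softmax(z: Vector) -> Vector:
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def conv_maxpool(X: Matrix, filters: List[Matrix], bias: Vector) -> Vector:
    """Slide each (width x d) filter over all token positions, apply ReLU,
    then max-pool over positions: one feature per filter.
    Requires len(X) >= filter width."""
    d = len(X[0])
    feats = []
    for F, b in zip(filters, bias):
        width = len(F)
        acts = [relu(b + sum(F[i][c] * X[t + i][c] for i in range(width) for c in range(d)))
                for t in range(len(X) - width + 1)]
        feats.append(max(acts))
    return feats


def highway(x: Vector, W_h: Matrix, b_h: Vector, W_t: Matrix, b_t: Vector) -> Vector:
    """y = t * H(x) + (1 - t) * x, where H is a ReLU layer and t a sigmoid gate."""
    h = [relu(v + b) for v, b in zip(matvec(W_h, x), b_h)]
    t = [sigmoid(v + b) for v, b in zip(matvec(W_t, x), b_t)]
    return [ti * hi + (1.0 - ti) * xi for ti, hi, xi in zip(t, h, x)]


def predict(X: Matrix, filters: List[Matrix], conv_bias: Vector,
            hw_params: tuple, W_o: Matrix, b_o: Vector) -> Vector:
    """CNN over all token representations -> highway -> linear -> softmax."""
    feats = conv_maxpool(X, filters, conv_bias)
    y = highway(feats, *hw_params)
    return softmax([v + b for v, b in zip(matvec(W_o, y), b_o)])
```

Unlike a [CLS]-only classifier, the convolution sees every position, including the boundary between the two arguments where the missing connective would sit.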

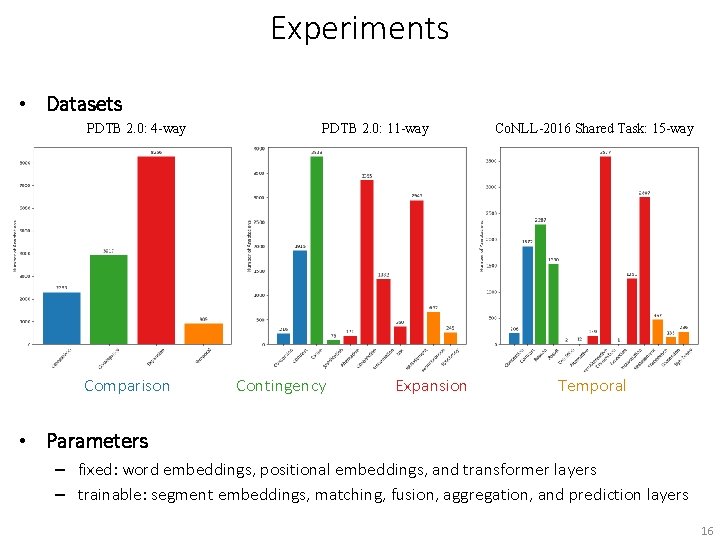

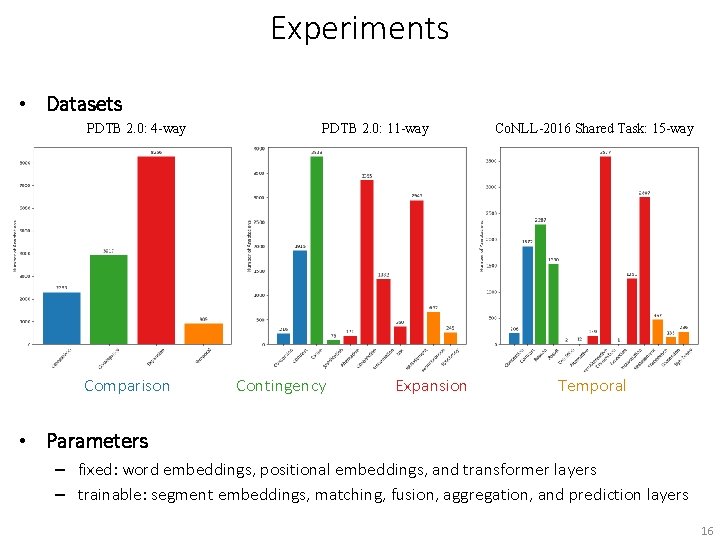

Experiments

- Datasets
  - PDTB 2.0: 4-way classification (Comparison, Contingency, Expansion, Temporal)
  - PDTB 2.0: 11-way classification
  - CoNLL-2016 Shared Task: 15-way classification
- Parameters
  - Fixed: word embeddings, positional embeddings, and transformer layers
  - Trainable: segment embeddings and the matching, fusion, aggregation, and prediction layers
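The parameter split above can be expressed as a name-prefix rule. The sketch assumes Hugging Face `transformers` naming for a bare `RobertaModel` (`embeddings.word_embeddings`, `embeddings.position_embeddings`, `embeddings.token_type_embeddings`, `encoder.layer.N`); `is_trainable` and `trainable_names` are hypothetical helpers. Whether the embedding LayerNorm is frozen is not stated on the slide; here it stays trainable.

```python
from typing import Iterable, List

# Frozen components per the slide: word embeddings, positional embeddings,
# and transformer layers (Hugging Face RobertaModel parameter-name prefixes).
FROZEN_PREFIXES = (
    "embeddings.word_embeddings.",
    "embeddings.position_embeddings.",
    "encoder.layer.",
)


def is_trainable(param_name: str) -> bool:
    """True unless the parameter belongs to a frozen component."""
    return not param_name.startswith(FROZEN_PREFIXES)


def trainable_names(names: Iterable[str]) -> List[str]:
    return [n for n in names if is_trainable(n)]


names = [
    "embeddings.word_embeddings.weight",
    "embeddings.position_embeddings.weight",
    "embeddings.token_type_embeddings.weight",  # segment embeddings
    "embeddings.LayerNorm.weight",
    "encoder.layer.0.attention.self.query.weight",
    "encoder.layer.11.output.dense.weight",
]
```

In PyTorch one would apply the rule with `for name, param in model.named_parameters(): param.requires_grad = is_trainable(name)`. The matching, fusion, aggregation, and prediction layers live outside the encoder and are trainable by default. The public `roberta-base` configuration sets `type_vocab_size` to 1, so using two segments requires enlarging the token-type embedding table, and the added row has to be learned.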

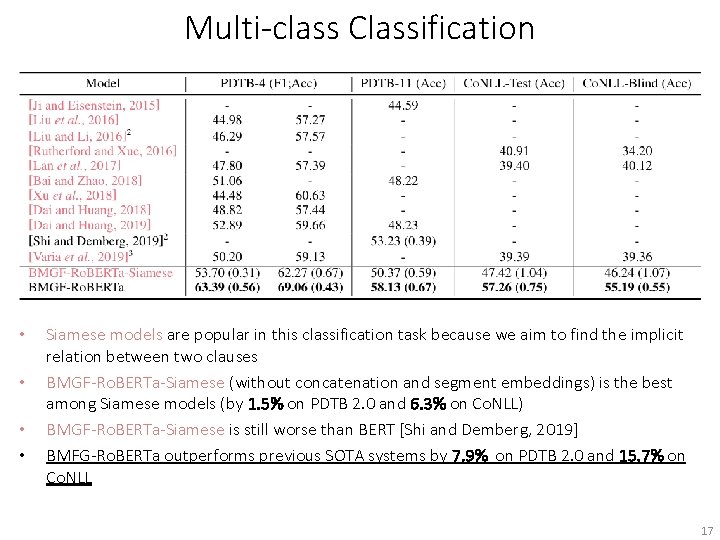

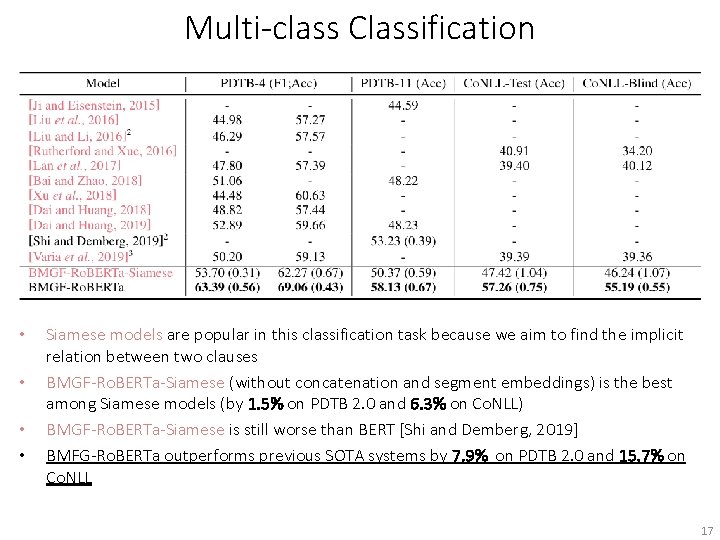

Multi-class Classification

- Siamese models are popular for this task because the goal is to find the implicit relation between two clauses
- BMGF-RoBERTa-Siamese (without concatenation and segment embeddings) is the best among Siamese models (by 1.5% on PDTB 2.0 and 6.3% on CoNLL)
- BMGF-RoBERTa-Siamese is still worse than BERT [Shi and Demberg, 2019]
- BMGF-RoBERTa outperforms previous SOTA systems by 7.9% on PDTB 2.0 and 15.7% on CoNLL

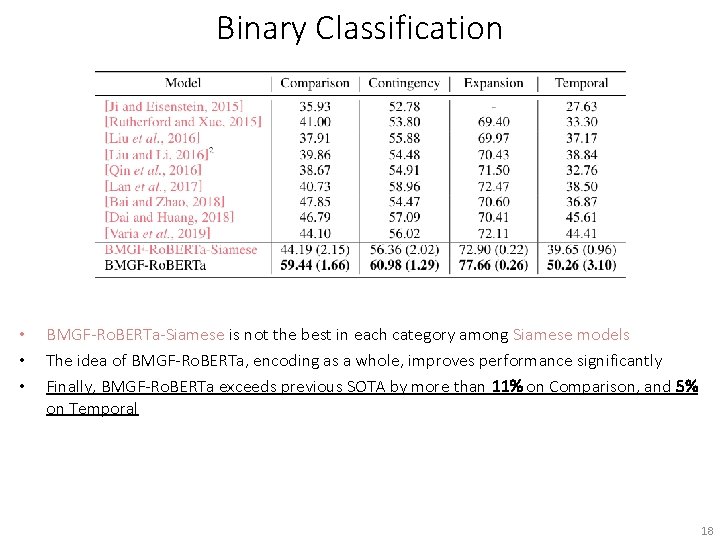

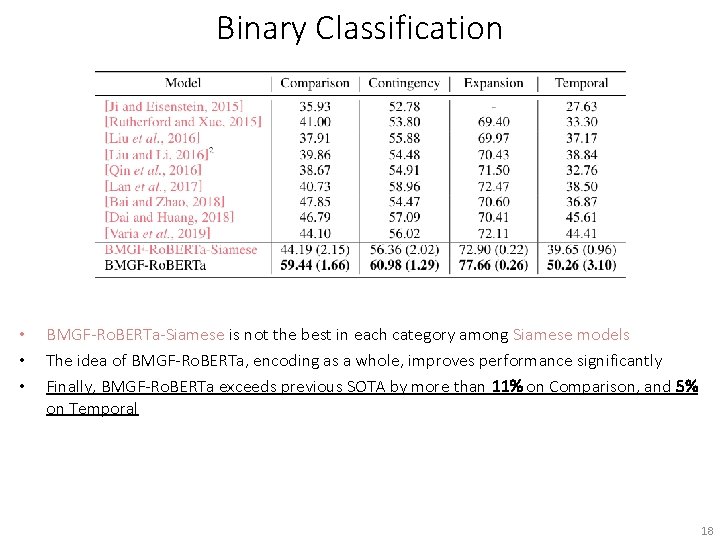

Binary Classification

- BMGF-RoBERTa-Siamese is not the best among Siamese models in every category
- The idea behind BMGF-RoBERTa, encoding the two arguments as a whole, improves performance significantly
- Finally, BMGF-RoBERTa exceeds the previous SOTA by more than 11% on Comparison and 5% on Temporal

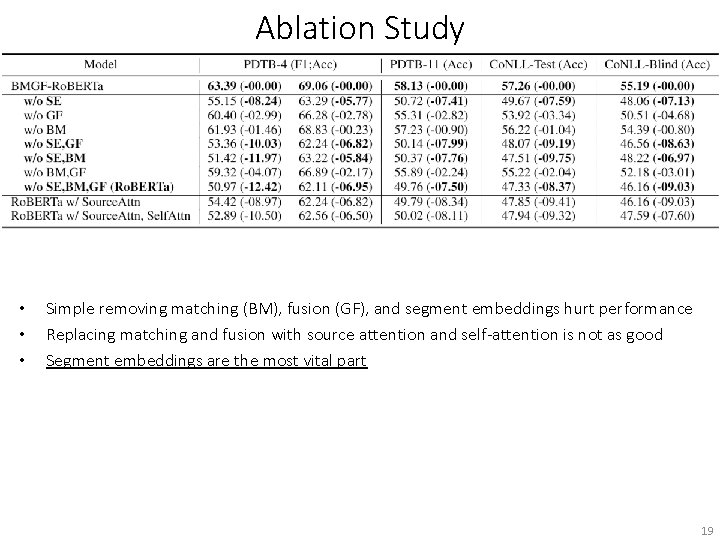

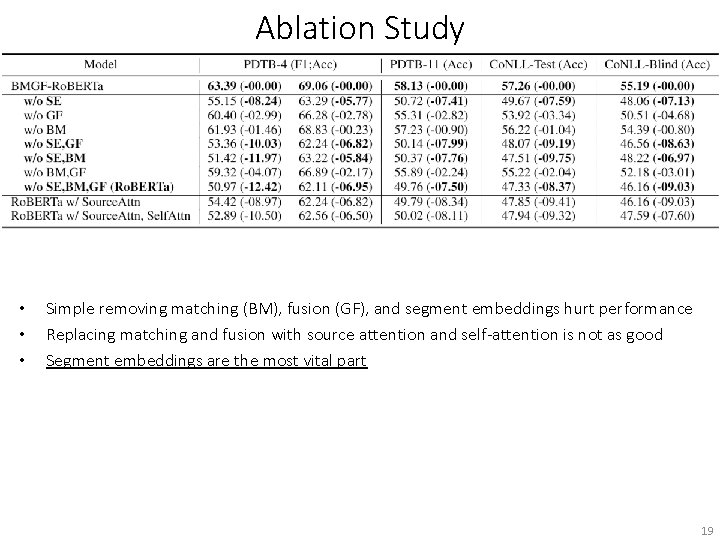

Ablation Study

- Simply removing matching (BM), fusion (GF), or segment embeddings hurts performance
- Replacing matching and fusion with source attention and self-attention does not work as well
- Segment embeddings are the most vital part

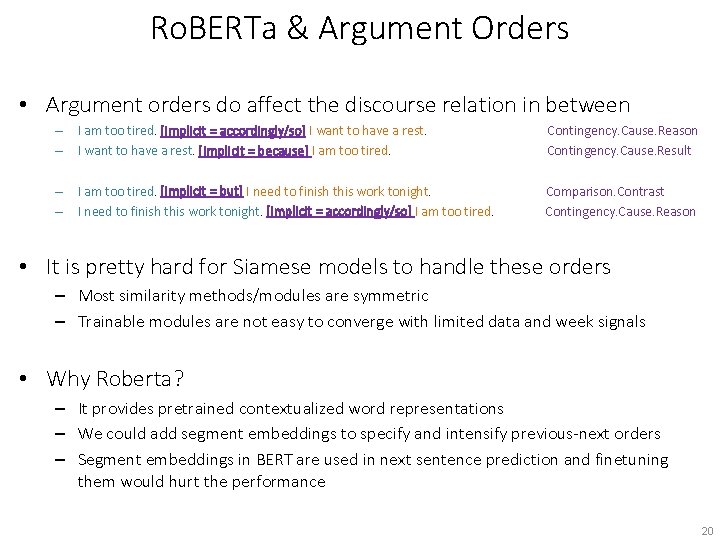

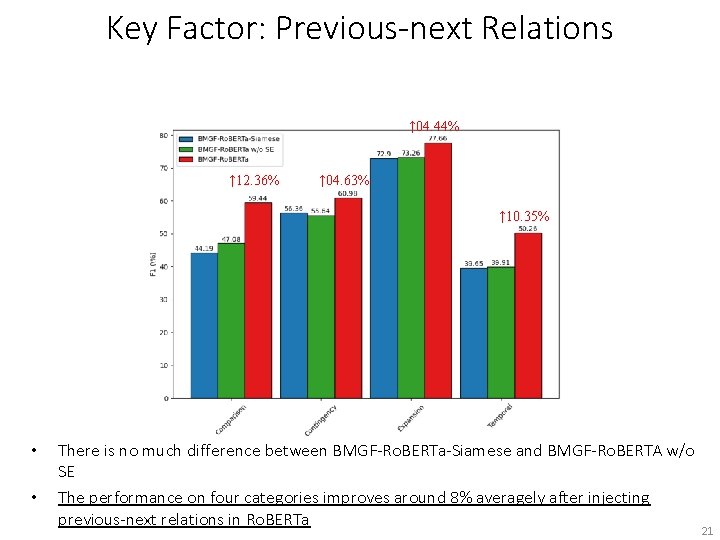

RoBERTa & Argument Orders

- Argument order does affect the discourse relation between the arguments
  - Pair 1: “I am too tired. [Implicit = accordingly/so] I want to have a rest.” vs. “I want to have a rest. [Implicit = because] I am too tired.” (senses: Contingency.Cause.Reason, Contingency.Cause.Result)
  - Pair 2: “I am too tired. [Implicit = but] I need to finish this work tonight.” vs. “I need to finish this work tonight. [Implicit = accordingly/so] I am too tired.” (senses: Comparison.Contrast, Contingency.Cause.Reason)
- It is quite hard for Siamese models to handle these orders
  - Most similarity methods/modules are symmetric
  - Trainable modules do not converge easily with limited data and weak signals
- Why RoBERTa?
  - It provides pretrained contextualized word representations
  - We can add segment embeddings to specify and reinforce the previous-next order
  - In BERT, segment embeddings are used for next sentence prediction, and fine-tuning them would hurt performance
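A toy illustration of why symmetric matching cannot separate the two orders while segment information can. The vectors are made up, and adding one segment vector per argument is a simplification: in a BERT-style encoder the segment (token-type) embedding is added to every token's input embedding before the transformer layers. Function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def add(u: Vector, v: Vector) -> Vector:
    return [a + b for a, b in zip(u, v)]


def symmetric_score(first: Vector, second: Vector) -> float:
    # Cosine similarity: swapping the arguments cannot change the score.
    return cosine(first, second)


def order_aware_score(first: Vector, second: Vector, seg0: Vector, seg1: Vector) -> float:
    # Add a different segment vector depending on position before comparing,
    # so the same pair in the opposite order is represented differently.
    return cosine(add(first, seg0), add(second, seg1))


tired, rest = [1.0, 0.0], [0.5, 0.5]  # toy argument vectors
seg0, seg1 = [0.0, 1.0], [1.0, 0.0]   # toy segment embeddings
```

Here `order_aware_score(tired, rest, seg0, seg1)` is about 0.89 while the swapped call gives about 0.32, whereas `symmetric_score` returns the same value in both orders.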

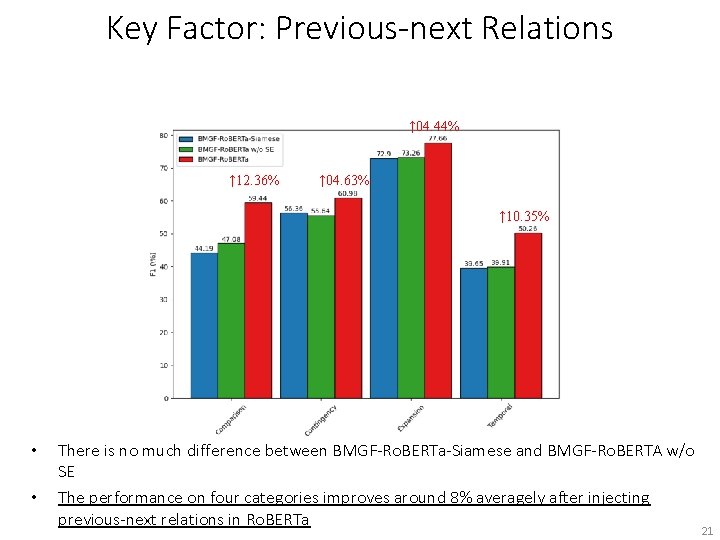

Key Factor: Previous-next Relations

(Figure: improvements of ↑4.44%, ↑12.36%, ↑4.63%, and ↑10.35%.)

- There is not much difference between BMGF-RoBERTa-Siamese and BMGF-RoBERTa w/o SE
- Performance on the four categories improves by around 8% on average after injecting previous-next relations into RoBERTa
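Assuming the four arrows on the slide are the per-category gains, the "around 8%" figure is their arithmetic mean:

$$
\frac{4.44 + 12.36 + 4.63 + 10.35}{4} = \frac{31.78}{4} = 7.945\% \approx 8\%
$$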

Conclusion

- We carefully analyze the difficulties of implicit discourse relation classification and present a novel model, BMGF-RoBERTa, which combines representation, matching, and fusion modules
- BMGF-RoBERTa outperforms BERT and other SOTA systems by around 8% on the PDTB dataset and by around 16% on the CoNLL-2016 datasets
- Ablation studies illustrate the effectiveness of the different components and how different levels of representation learning affect the results

![References](https://slidetodoc.com/presentation_image_h2/4c975d36409a6505668f190397a4240c/image-23.jpg)

References

- [Bahdanau et al., 2015] Neural Machine Translation by Jointly Learning to Align and Translate. In ICLR, 2015.
- [Bai and Zhao, 2018] Hongxiao Bai and Hai Zhao. Deep enhanced representation for implicit discourse relation recognition. In COLING, pages 571–583, 2018.
- [Dai and Huang, 2018] Zeyu Dai and Ruihong Huang. Improving implicit discourse relation classification by modeling interdependencies of discourse units in a paragraph. In NAACL-HLT, pages 141–151, 2018.
- [Dai and Huang, 2019] Zeyu Dai and Ruihong Huang. A regularization approach for incorporating event knowledge and coreference relations into neural discourse parsing. In EMNLP, pages 2974–2985, 2019.
- [Devlin et al., 2019] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In NAACL-HLT, pages 4171–4186, 2019.
- [Kim et al., 2016] Yoon Kim, Yacine Jernite, David A. Sontag, and Alexander M. Rush. Character-aware neural language models. In AAAI, pages 2741–2749, 2016.
- [Lin et al., 2009] Ziheng Lin, Min-Yen Kan, and Hwee Tou Ng. Recognizing implicit discourse relations in the Penn Discourse Treebank. In EMNLP, pages 343–351, 2009.
- [Liu et al., 2016] Yang Liu, Sujian Li, Xiaodong Zhang, and Zhifang Sui. Implicit discourse relation classification via multi-task neural networks. In AAAI, pages 2750–2756, 2016.
- [Liu et al., 2019] Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692, 2019.
- [Peters et al., 2018] Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matt Gardner, Christopher Clark, Kenton Lee, and Luke Zettlemoyer. Deep contextualized word representations. In NAACL-HLT, pages 2227–2237, 2018.
- [Pitler et al., 2009] Emily Pitler, Annie Louis, and Ani Nenkova. Automatic sense prediction for implicit discourse relations in text. In ACL, pages 683–691, 2009.
- [Qin et al., 2017] Lianhui Qin, Zhisong Zhang, Hai Zhao, Zhiting Hu, and Eric P. Xing. Adversarial connective-exploiting networks for implicit discourse relation classification. In ACL, pages 1006–1017, 2017.
- [Rutherford and Xue, 2016] Attapol Rutherford and Nianwen Xue. Robust non-explicit neural discourse parser in English and Chinese. In CoNLL, pages 55–59, 2016.
- [Shi and Demberg, 2019] Wei Shi and Vera Demberg. Next sentence prediction helps implicit discourse relation classification within and across domains. In EMNLP-IJCNLP, pages 5789–5795, 2019.
- [Srivastava et al., 2015] Rupesh Kumar Srivastava, Klaus Greff, and Jürgen Schmidhuber. Highway networks. arXiv preprint arXiv:1505.00387, 2015.
- [Varia et al., 2019] Siddharth Varia, Christopher Hidey, and Tuhin Chakrabarty. Discourse relation prediction: Revisiting word pairs with convolutional networks. In SIGDIAL, pages 442–452, 2019.
- [Wang et al., 2017] Zhiguo Wang, Wael Hamza, and Radu Florian. Bilateral multi-perspective matching for natural language sentences. In IJCAI, pages 4144–4150, 2017.
- [Xu et al., 2018] Yang Xu, Yu Hong, Huibin Ruan, Jianmin Yao, Min Zhang, and Guodong Zhou. Using active learning to expand training data for implicit discourse relation recognition. In EMNLP, pages 725–731, 2018.

Thanks

- Paper: http://arxiv.org/abs/2004.12617
- Code: https://github.com/HKUST-KnowComp/BMGF-RoBERTa
- Email: xliucr@cse.ust.hk