On Bayesian Networks and Anomaly Detection Andre Torres Edinboro University of Pennsylvania

Motivations
- Rise in malicious attacks
- Security in cloud computing
- Artificial intelligence
- Data Comm next year

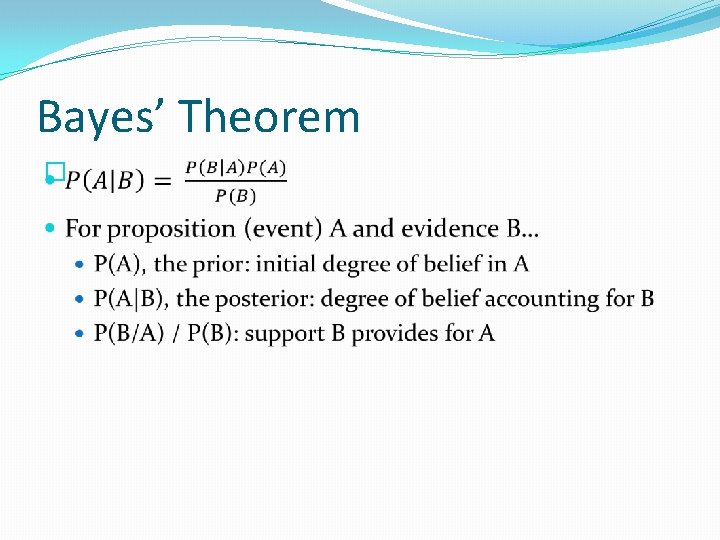

Bayes’ Theorem
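For reference, the theorem in its standard form for two events $A$ and $B$ with $P(B) > 0$. The symbols $A$ and $B$ are chosen here for illustration.

```latex
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}
```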

Example: Spam Filtering

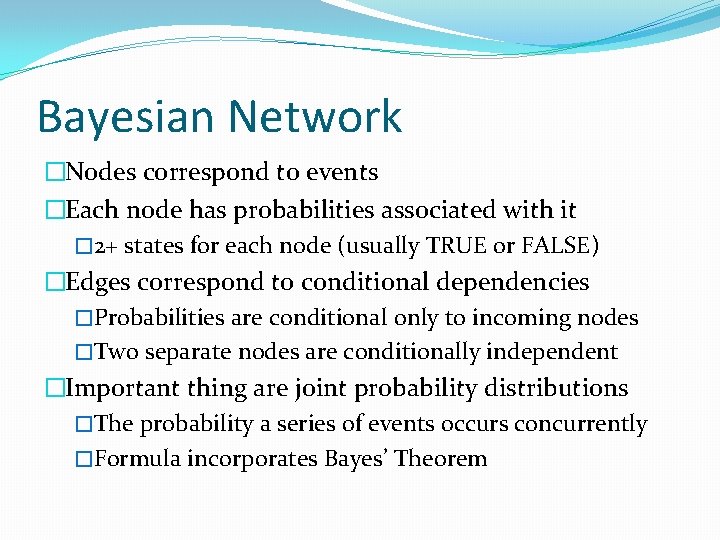
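A minimal sketch of how Bayes’ theorem applies to spam filtering for a single word. The function name `posterior_spam` and all three input probabilities are hypothetical values chosen for illustration, not figures from this talk.

```python
def posterior_spam(p_word_given_spam, p_word_given_ham, p_spam):
    """Return P(spam | word) via Bayes' theorem."""
    p_ham = 1.0 - p_spam
    # Total probability of seeing the word in any message (spam or not).
    p_word = p_word_given_spam * p_spam + p_word_given_ham * p_ham
    return p_word_given_spam * p_spam / p_word


# Hypothetical inputs: the word appears in 40% of spam, 5% of non-spam,
# and 20% of all mail is assumed to be spam.
result = posterior_spam(0.40, 0.05, 0.20)
print(round(result, 4))
```

With these assumed inputs, seeing the word raises the probability of spam from the 20% prior to about 67%.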

Bayesian Network
- Nodes correspond to events
- Each node has probabilities associated with it
  - 2+ states for each node (usually TRUE or FALSE)
- Edges correspond to conditional dependencies
- Each node’s probabilities are conditioned only on its parents (the nodes with edges pointing into it)
- A node is conditionally independent of its non-descendants given its parents
- The key quantity is the joint probability distribution
  - The probability that a series of events occurs concurrently
  - The formula builds on conditional probability, as in Bayes’ Theorem

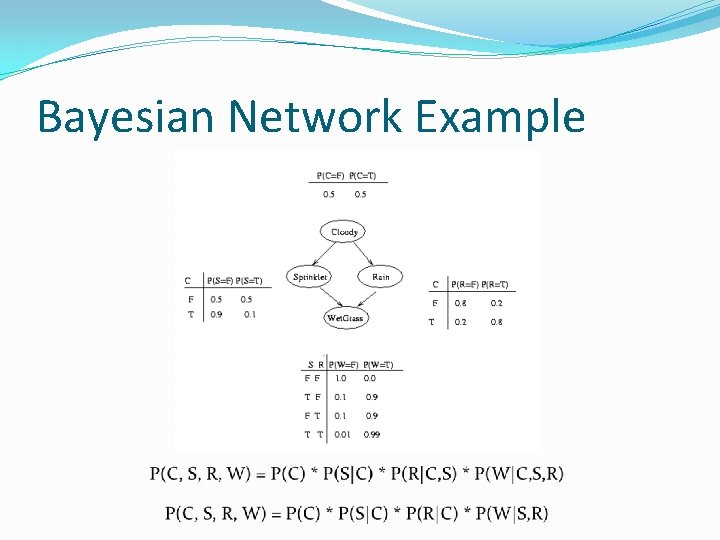
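In standard notation, the joint distribution of a Bayesian network over variables $X_1, \dots, X_n$ factorizes into one conditional term per node. The notation $\mathrm{Parents}(X_i)$ for the set of nodes with edges into $X_i$ is an assumption made here for illustration.

```latex
P(X_1, X_2, \dots, X_n) = \prod_{i=1}^{n} P\bigl(X_i \mid \mathrm{Parents}(X_i)\bigr)
```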

Bayesian Network Example

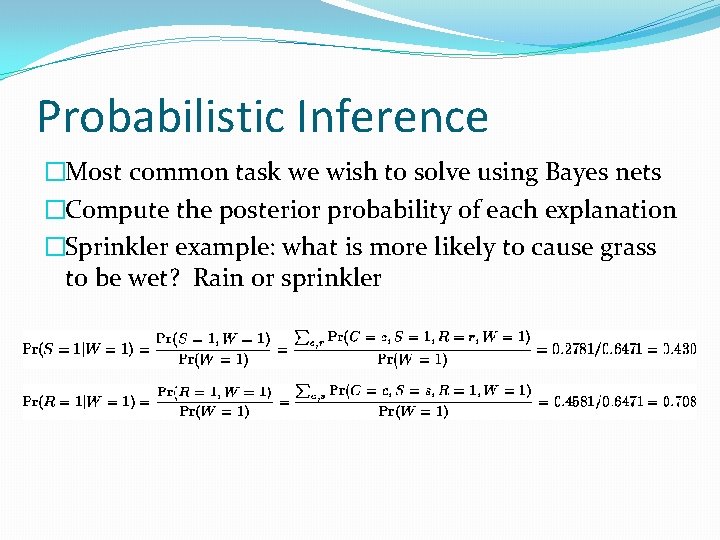

Probabilistic Inference
- The most common task we wish to solve using Bayes nets
- Compute the posterior probability of each explanation
- Sprinkler example: which is more likely to have made the grass wet, rain or the sprinkler?
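A minimal sketch of inference by enumeration on a three-node sprinkler network (Rain → Sprinkler, and both Rain and Sprinkler → Grass Wet). The structure and every probability in the tables are assumptions taken from a commonly used textbook version of this example, not values from the talk.

```python
from itertools import product

# Assumed conditional probability tables (illustrative values only).
P_RAIN = {True: 0.2, False: 0.8}
# P(sprinkler | rain), keyed as P_SPRINKLER[rain][sprinkler]
P_SPRINKLER = {True: {True: 0.01, False: 0.99}, False: {True: 0.4, False: 0.6}}
# P(wet = True | sprinkler, rain), keyed by (sprinkler, rain)
P_WET = {(True, True): 0.99, (True, False): 0.9, (False, True): 0.8, (False, False): 0.0}


def joint(rain, sprinkler, wet):
    """Joint probability as the product of each node's conditional probability."""
    p_wet = P_WET[(sprinkler, rain)]
    return P_RAIN[rain] * P_SPRINKLER[rain][sprinkler] * (p_wet if wet else 1.0 - p_wet)


def posterior_given_wet(variable):
    """P(variable = True | grass is wet), summing the joint over all states."""
    numerator = 0.0
    evidence = 0.0
    for rain, sprinkler in product([True, False], repeat=2):
        p = joint(rain, sprinkler, True)
        evidence += p
        if {"rain": rain, "sprinkler": sprinkler}[variable]:
            numerator += p
    return numerator / evidence


print("P(rain | wet)      =", round(posterior_given_wet("rain"), 4))
print("P(sprinkler | wet) =", round(posterior_given_wet("sprinkler"), 4))
```

Under these assumed tables the sprinkler is the more likely explanation (roughly 0.65 versus 0.36 for rain). Enumeration is exponential in the number of variables, so it is only practical for very small networks.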

Applications of Bayesian Networks
- Growing usage in AI
- Medical diagnostic systems
- Network intrusion detection
- Minesweeper
- IBM Watson
- Sensory stimuli classification and behavioral response decision
- Texas Hold ’Em
- TrueSkill™ (Xbox Live)

Objectives
1. Implement a Bayesian network that can be tested within a program
2. Implement a method of Bayesian structure learning and a method of Bayesian parameter learning
3. Compare and contrast a Bayesian network and a naïve Bayes classifier for accuracy

1&2: Implementing and Generating Bayesian Networks
- Many programs and libraries help with this
  - bnlearn: R package for Bayes nets
  - dlib: C++ library; supports Bayes nets and machine learning algorithms
  - eBay’s Bayes net library and others for Python
  - Weka: machine learning software suite for data mining, written in Java
  - Many commercial programs are also available
- Implementing learning yourself: not so easy

Parameter Learning
- Given
  - A Bayesian network structure
  - A data set
- Estimate the conditional probabilities
  - Necessary to specify each node’s probability distribution
  - Can be discrete or continuous
- Common algorithms
  - Maximum Likelihood Estimation (MLE)
  - Bayesian Estimation (BE)

One Node Network and Likelihood
- Consider a Bayesian network with one node $X$
- $X$ is the result of tossing a rock and $\Omega_X = \{H, T\}$
- Data cases: $D_1 = H$, $D_2 = T$, $D_3 = H$, …, $D_n = H$
- Data set: $D = \{D_1, D_2, D_3, \dots, D_n\}$
- Estimate the parameter $\theta = P(X = H)$
- Which of the following is the most likely?
  - $\theta = 0.00$
  - $\theta = 0.01$
  - $\theta = 0.5$

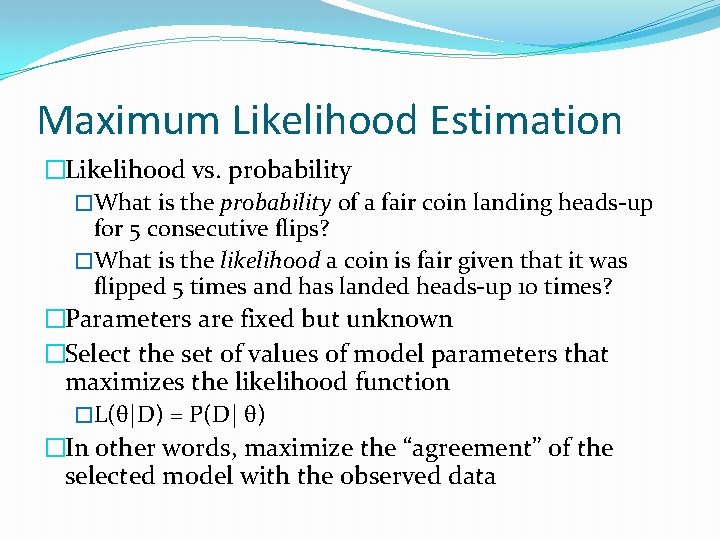

Maximum Likelihood Estimation
- Likelihood vs. probability
  - Probability: what is the probability of a fair coin landing heads-up on 5 consecutive flips?
  - Likelihood: how likely is it that a coin is fair, given that it was flipped 10 times and landed heads-up 5 times?
- Parameters are fixed but unknown
- Select the values of the model parameters that maximize the likelihood function
  - $L(\theta \mid D) = P(D \mid \theta)$
- In other words, maximize the “agreement” of the selected model with the observed data

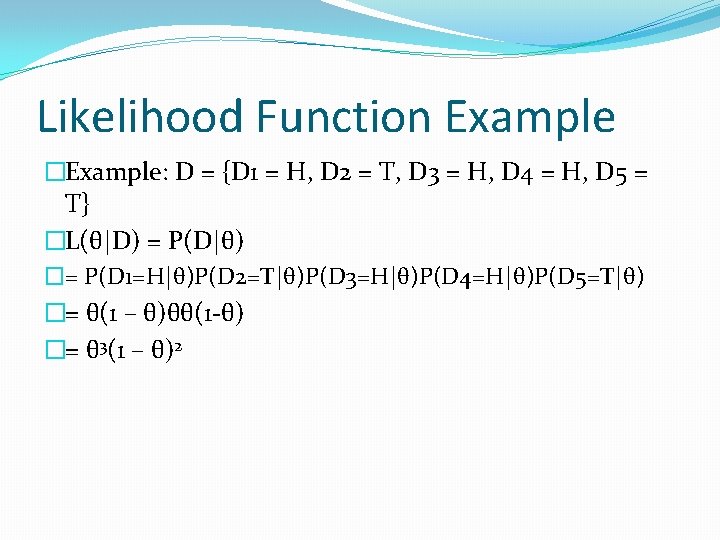

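Likelihood Function Example
- Example: $D = \{D_1 = H, D_2 = T, D_3 = H, D_4 = H, D_5 = T\}$
- $L(\theta \mid D) = P(D \mid \theta)$
  - $= P(D_1 = H \mid \theta)\,P(D_2 = T \mid \theta)\,P(D_3 = H \mid \theta)\,P(D_4 = H \mid \theta)\,P(D_5 = T \mid \theta)$
  - $= \theta(1 - \theta)\theta\theta(1 - \theta)$
  - $= \theta^3(1 - \theta)^2$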

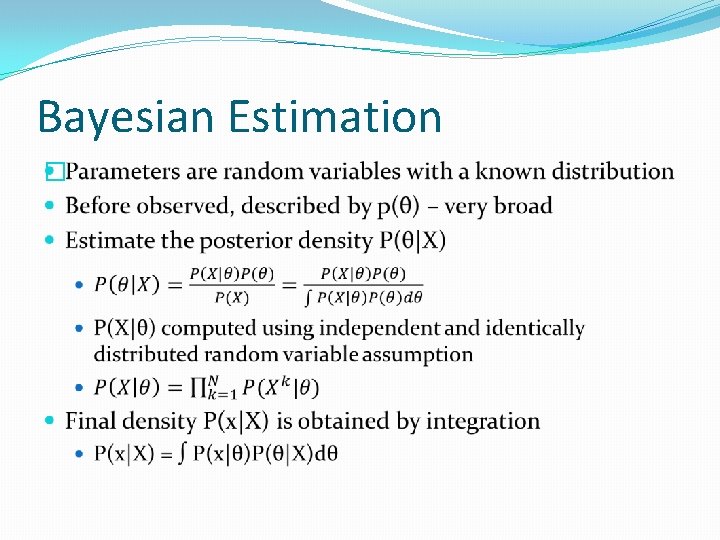
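A minimal sketch of maximizing the likelihood for the five-case data set in the example. Setting the derivative of $\log L = 3\log\theta + 2\log(1-\theta)$ to zero gives $\theta = 3/5$, the fraction of heads. The function name `likelihood` and the grid search are illustrative choices, included only as a numerical check on the closed form.

```python
def likelihood(theta, data):
    """L(theta | D) = P(D | theta) for independent H/T outcomes."""
    result = 1.0
    for outcome in data:
        result *= theta if outcome == "H" else 1.0 - theta
    return result


data = ["H", "T", "H", "H", "T"]

# Closed-form MLE for this one-parameter model: the fraction of heads.
theta_mle = data.count("H") / len(data)

# Numerical check: evaluate the likelihood on a grid over [0, 1] and keep the best.
grid = [i / 1000 for i in range(1001)]
theta_grid = max(grid, key=lambda t: likelihood(t, data))

print(theta_mle, theta_grid)
```

The closed form and the grid search agree on this data set, which is the sense in which the selected parameter maximizes agreement with the observations.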

Bayesian Estimation
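As a rough illustration of Bayesian estimation for a one-node H/T network, the sketch below treats $\theta$ as a random variable with a Beta prior and updates it with observed counts. The Beta(1, 1) prior, the function names, and the counts (3 heads, 2 tails) are assumptions for illustration, not necessarily the formulation used in this talk.

```python
def beta_posterior(heads, tails, a=1.0, b=1.0):
    """Return the Beta posterior parameters after observing the given counts.

    A Beta(a, b) prior is conjugate to the H/T likelihood, so the update
    simply adds the observed counts to the prior parameters.
    """
    return a + heads, b + tails


def posterior_mean(heads, tails, a=1.0, b=1.0):
    """Posterior mean estimate of theta = P(X = H)."""
    post_a, post_b = beta_posterior(heads, tails, a, b)
    return post_a / (post_a + post_b)


# 3 heads and 2 tails with a uniform Beta(1, 1) prior.
print(posterior_mean(3, 2))
```

With these inputs the posterior mean is 4/7, slightly closer to 0.5 than the maximum likelihood estimate of 3/5, because the prior acts like two extra observations (one head and one tail).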

Structure Learning
- Simplest case: the structure is specified by an expert
- Usually too difficult for humans
- Supervised machine learning techniques that predict structured objects
- Trained by means of observed data
- Approximate inference and learning are often used
- Many different ways to go about this

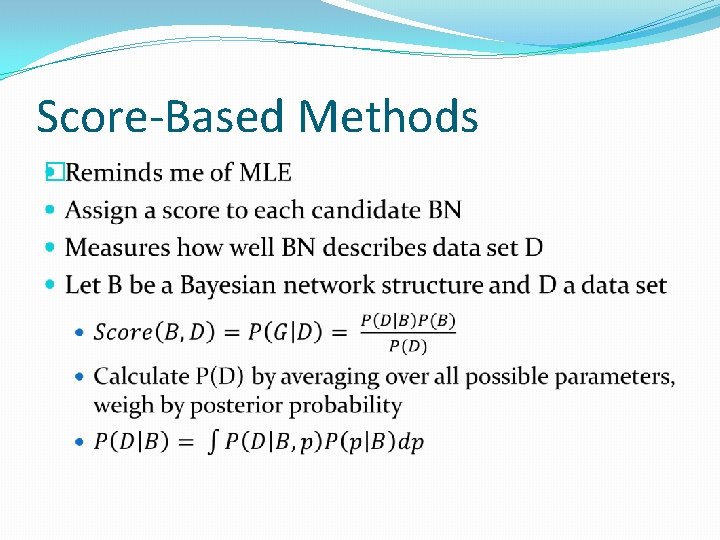

Score-Based Methods
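Score-based methods, in general, search over candidate graphs for one that maximizes a scoring function. One widely used score is the Bayesian Information Criterion, shown here purely as an illustration and not as the score used in this talk. The symbols are assumptions: $G$ is a candidate graph, $\hat{\theta}_G$ its maximum likelihood parameters, $d_G$ its number of free parameters, and $N$ the number of data cases.

```latex
\mathrm{BIC}(G \mid D) = \log P\bigl(D \mid \hat{\theta}_G, G\bigr) - \frac{d_G}{2} \log N
```

The first term rewards fit to the data; the second penalizes graphs with more parameters.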

Constraint-Based Methods
- Typically use conditional independence constraints
- Tests are usually statistical tests on the data set
- Assumptions must be made: Causal Sufficiency, Causal Markov, Faithfulness
- Poor robustness
- Exponential running time makes them impractical for large domains

3: Comparing Bayesian Methods
- Compared a Naïve Bayes classifier and Bayesian networks
- Naïve Bayes (NB) classifier
  - Assumes the value of each feature is unrelated to the other features, given the class variable
    - An apple is red, round, can stand on its own, and ~3” in diameter
    - A banana is yellow and peels
  - Only requires a small amount of training data
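A minimal sketch of a categorical naïve Bayes classifier, to make the independence assumption concrete: each feature contributes its own factor $P(\text{feature} \mid \text{class})$, with no interaction terms. The fruit data, the feature names, and the use of add-one smoothing are all hypothetical choices for illustration; this is not the Weka implementation used in the experiments.

```python
import math
from collections import Counter, defaultdict


def train(rows):
    """rows: list of (features_dict, label). Returns the counts needed to predict."""
    class_counts = Counter(label for _, label in rows)
    # feature_counts[label][feature_name][value] = number of occurrences
    feature_counts = defaultdict(lambda: defaultdict(Counter))
    values = defaultdict(set)  # distinct values seen for each feature
    for features, label in rows:
        for name, value in features.items():
            feature_counts[label][name][value] += 1
            values[name].add(value)
    return class_counts, feature_counts, values


def predict(model, features):
    """Return the class with the highest log P(class) + sum of log P(feature | class)."""
    class_counts, feature_counts, values = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / total)
        for name, value in features.items():
            seen = feature_counts[label][name][value]
            # Add-one smoothing keeps unseen values from zeroing the product.
            score += math.log((seen + 1) / (count + len(values[name])))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical training data.
rows = [
    ({"color": "red", "shape": "round"}, "apple"),
    ({"color": "red", "shape": "round"}, "apple"),
    ({"color": "green", "shape": "round"}, "apple"),
    ({"color": "yellow", "shape": "long"}, "banana"),
    ({"color": "yellow", "shape": "long"}, "banana"),
    ({"color": "green", "shape": "long"}, "banana"),
]
model = train(rows)
print(predict(model, {"color": "red", "shape": "round"}))
print(predict(model, {"color": "yellow", "shape": "long"}))
```

Because each feature is scored separately, six training rows are enough to classify these examples, which illustrates why the method needs relatively little training data.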

3: Comparing Bayesian Methods
- Tested using Weka with 10-fold cross-validation
- Bayesian network: 99.667%
- Naïve Bayes classifier: 92.7794%
- Naïve Bayes had a 65.2% true positive rate for normal data
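A simplified sketch of the two evaluation ideas named above: splitting data into k folds, and computing a per-class true positive rate. Both helper functions are hypothetical and written for illustration. Weka's own cross-validation also shuffles and stratifies the data, which this sketch does not do.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    indices = list(range(n_samples))
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def true_positive_rate(actual, predicted, positive):
    """Fraction of instances whose actual class is `positive` that were predicted as such."""
    hits = sum(1 for a, p in zip(actual, predicted) if a == positive and p == positive)
    total = sum(1 for a in actual if a == positive)
    return hits / total if total else 0.0


# Each sample lands in exactly one test fold across the k rounds.
folds = list(k_fold_indices(25, k=10))
print(len(folds), [len(test) for _, test in folds])

# Hypothetical labels: one of two "normal" instances is classified correctly.
print(true_positive_rate(["normal", "normal", "attack"],
                         ["normal", "attack", "attack"], "normal"))
```

A classifier can have high overall accuracy and still a low true positive rate on one class, which is why the two figures are reported separately.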

Conclusions and Future Research
- Bayesian networks are extremely useful
- Research: not for me
- Plenty of programs to implement BNs already exist
  - Focus on finding new applications
- Compare and contrast algorithms more rigorously and find where they are appropriate
