OCP at SK Telecom and OCP Telco Project

- Slides: 29

Kang-Won Lee, Ph.D.
SVP, SK Telecom; Co-Lead, OCP Telco Project

Agenda
- Part 1: SKT’s Vision on Open Hardware and Software
- Part 2: OCP Telco Project

Part 1: SKT’s Vision on Open Hardware and Software

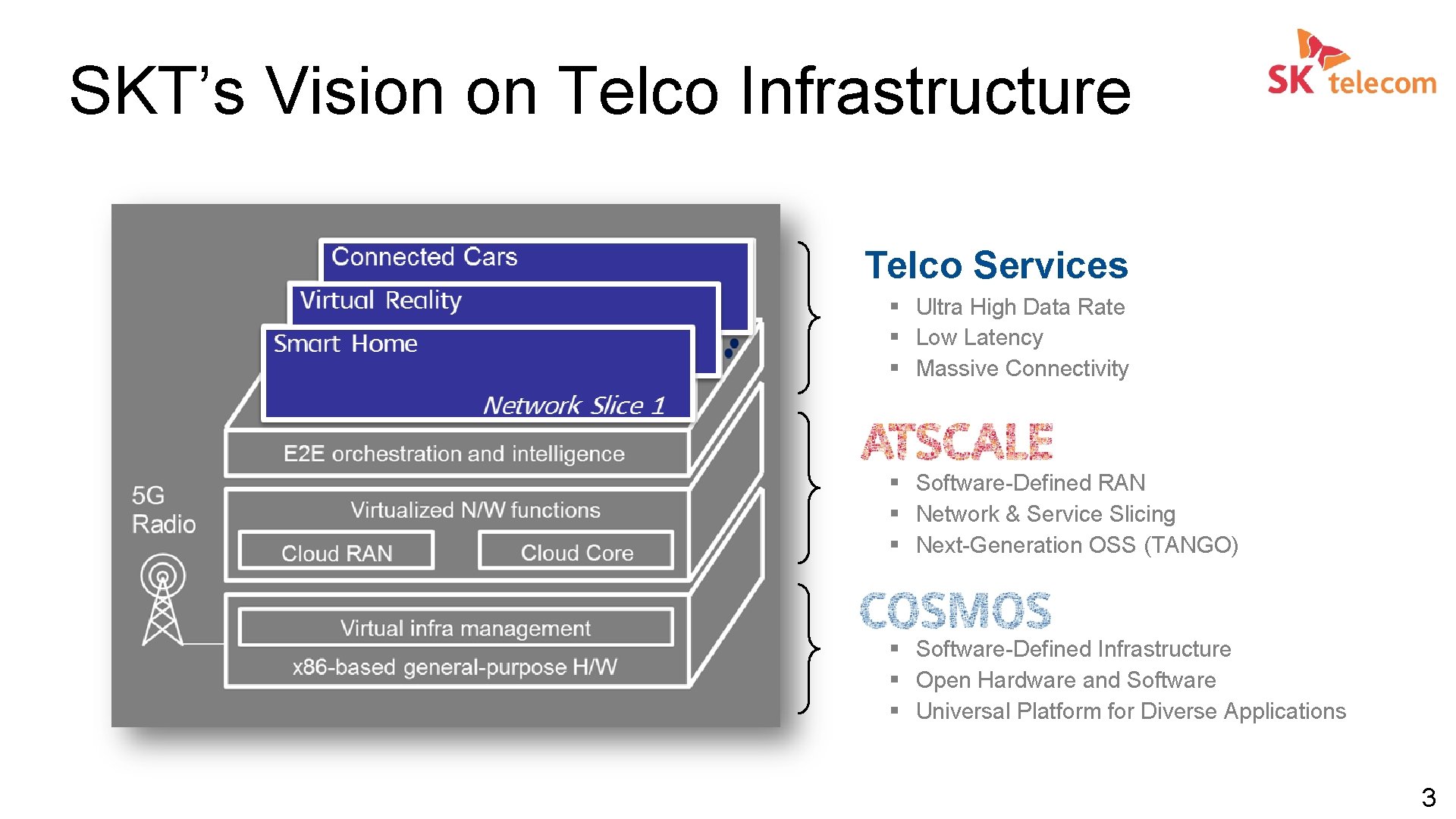

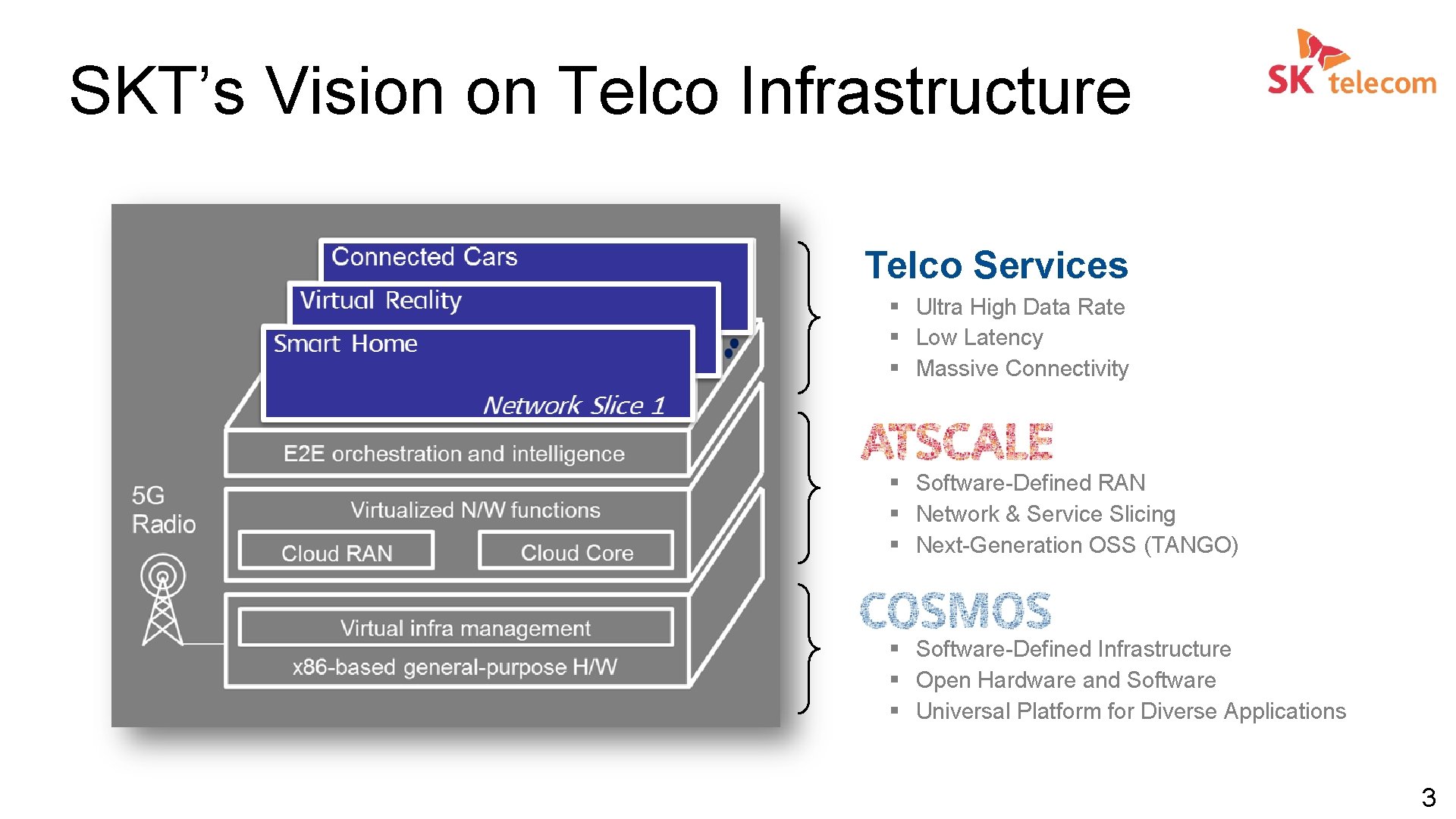

SKT’s Vision on Telco Infrastructure
Telco Services
- Ultra High Data Rate
- Low Latency
- Massive Connectivity
- Software-Defined RAN
- Network & Service Slicing
- Next-Generation OSS (TANGO)
- Software-Defined Infrastructure
- Open Hardware and Software
- Universal Platform for Diverse Applications

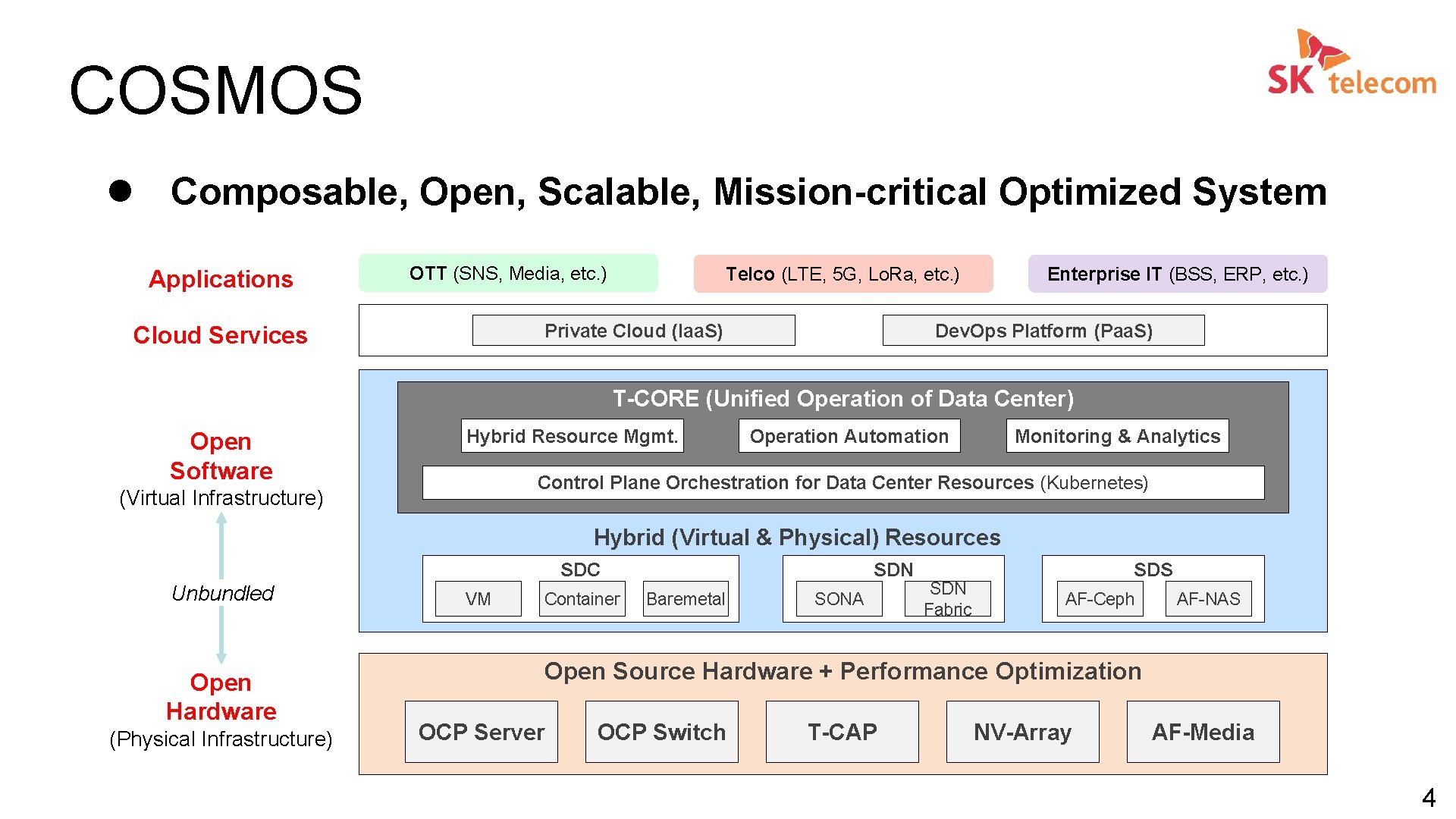

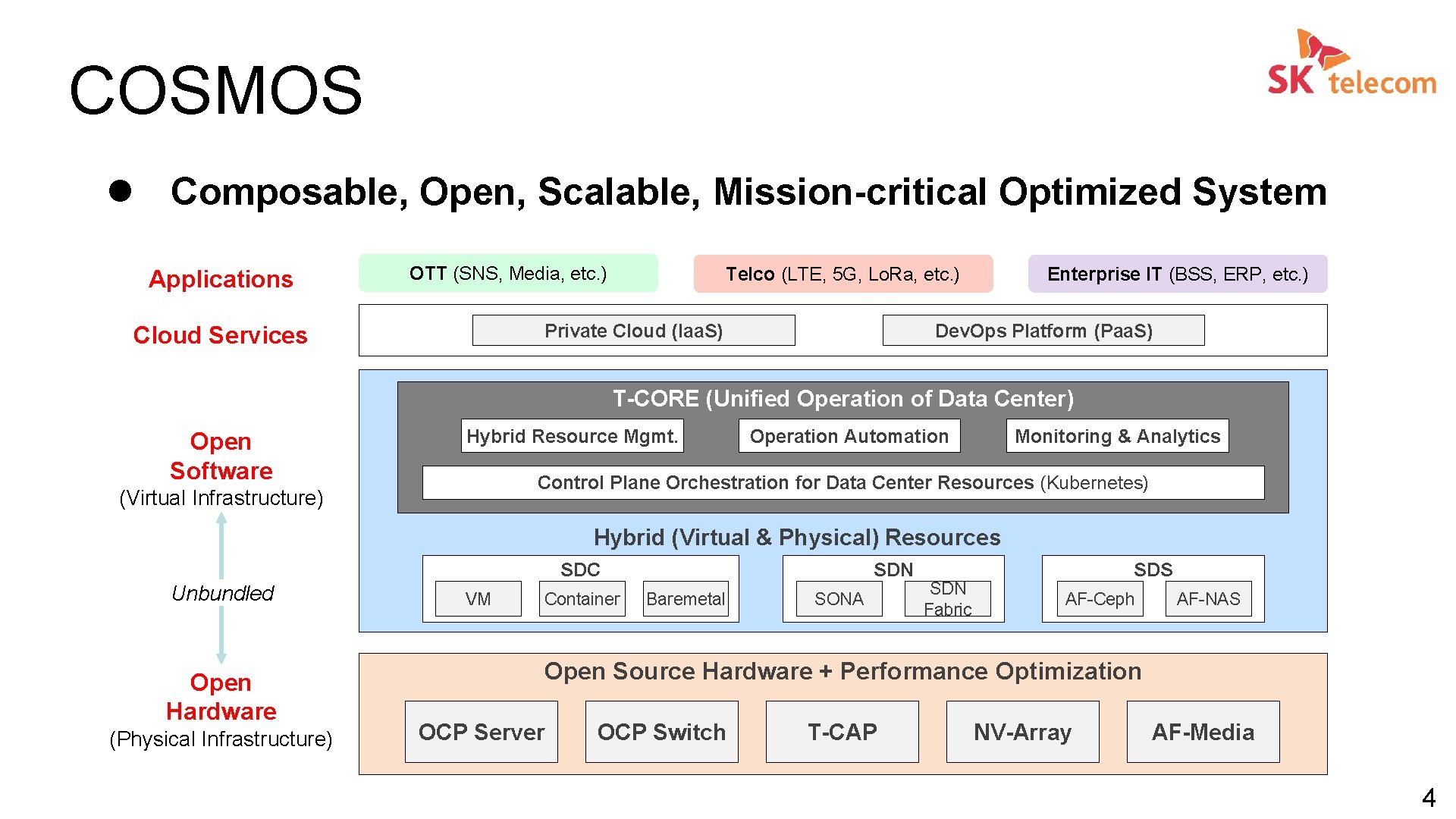

COSMOS
- Composable, Open, Scalable, Mission-critical Optimized System
- Applications: OTT (SNS, Media, etc.); Telco (LTE, 5G, LoRa, etc.); Enterprise IT (BSS, ERP, etc.)
- Cloud Services: Private Cloud (IaaS); DevOps Platform (PaaS)
- T-CORE (Unified Operation of Data Center), Open Software
  - Hybrid Resource Mgmt.
  - Operation Automation
  - Monitoring & Analytics
  - Control Plane: Orchestration for Data Center Resources (Kubernetes)
- SDC (Virtual Infrastructure), Hybrid (Virtual & Physical) Resources
  - VM, Container, Baremetal
  - SDN: SONA, SDN Fabric
  - SDS: AF-Ceph, AF-NAS
- Unbundled Open Hardware (Physical Infrastructure), Open Source Hardware + Performance Optimization
  - OCP Server, OCP Switch, T-CAP, NV-Array, AF-Media

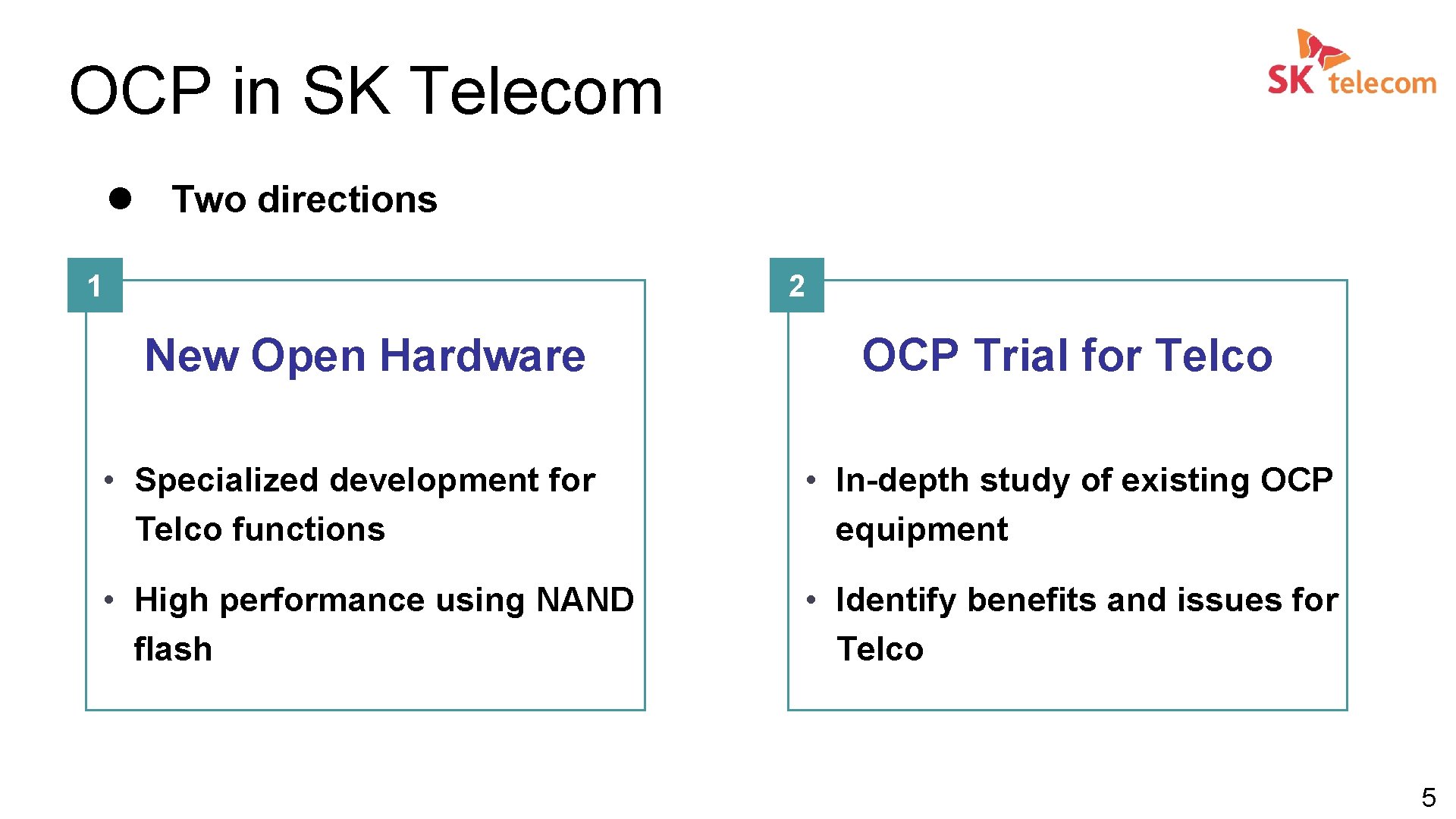

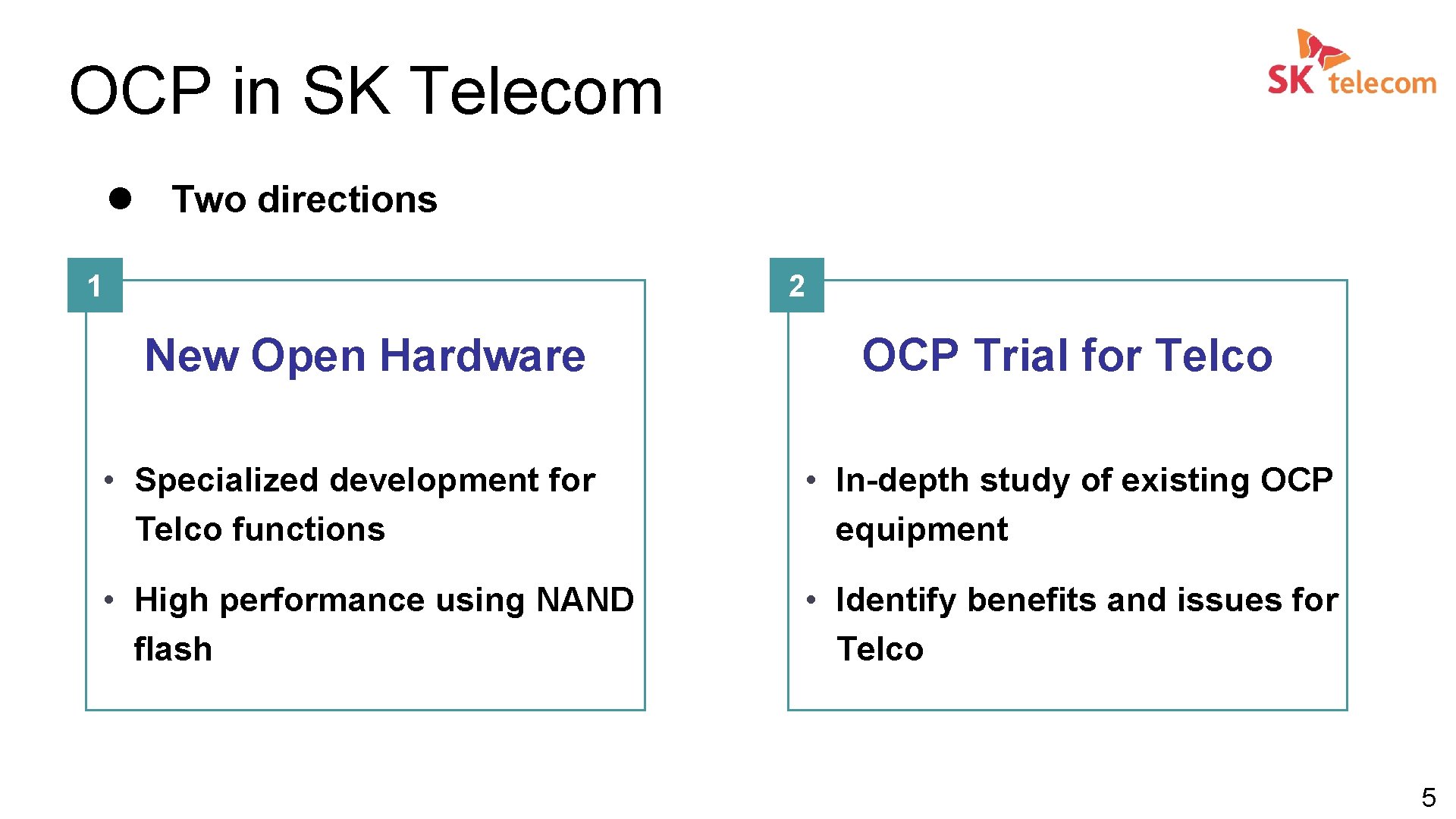

OCP in SK Telecom
- Two directions
  1. New Open Hardware
     - Specialized development for Telco functions
     - High performance using NAND flash
  2. OCP Trial for Telco
     - In-depth study of existing OCP equipment
     - Identify benefits and issues for Telco

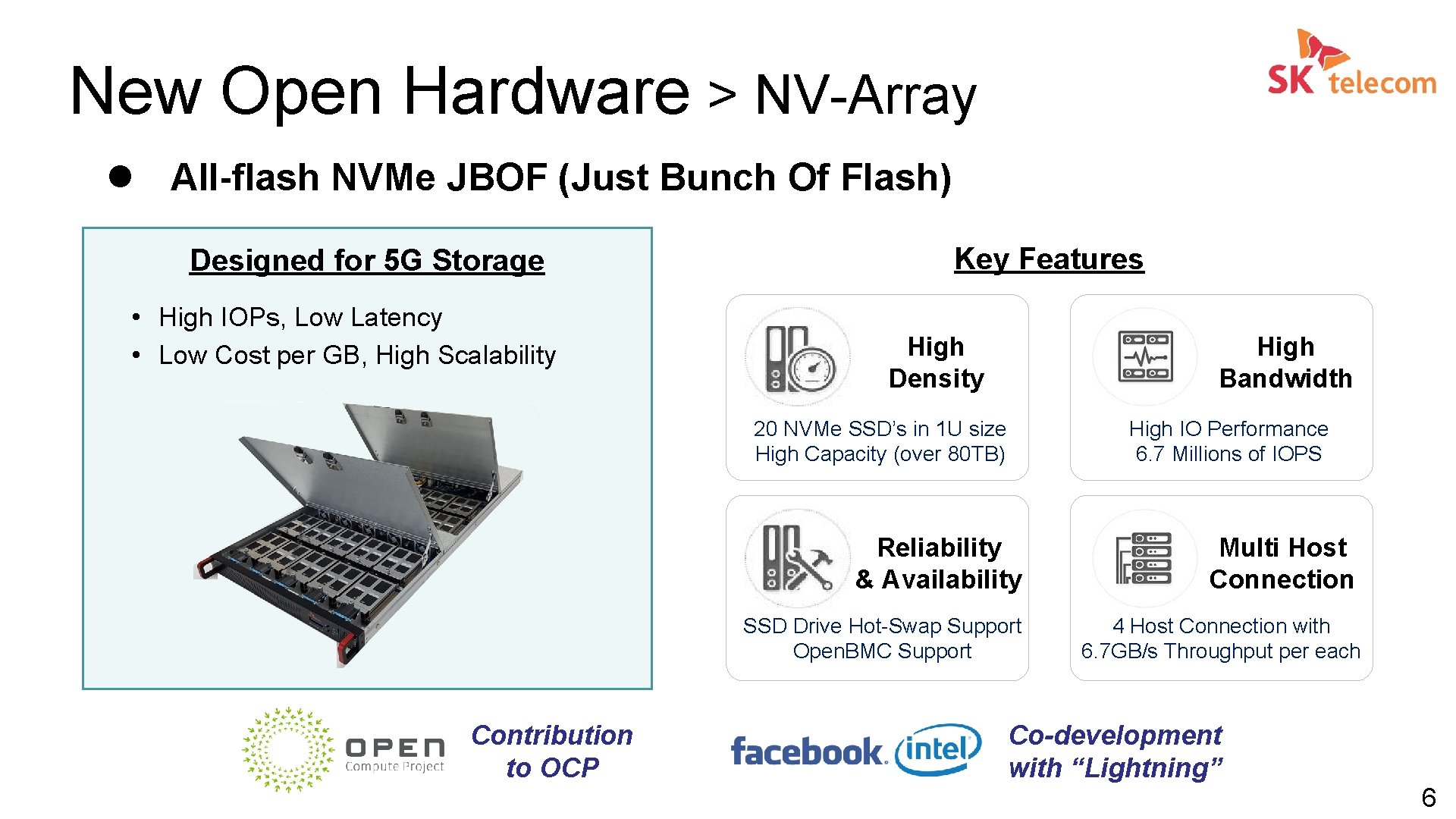

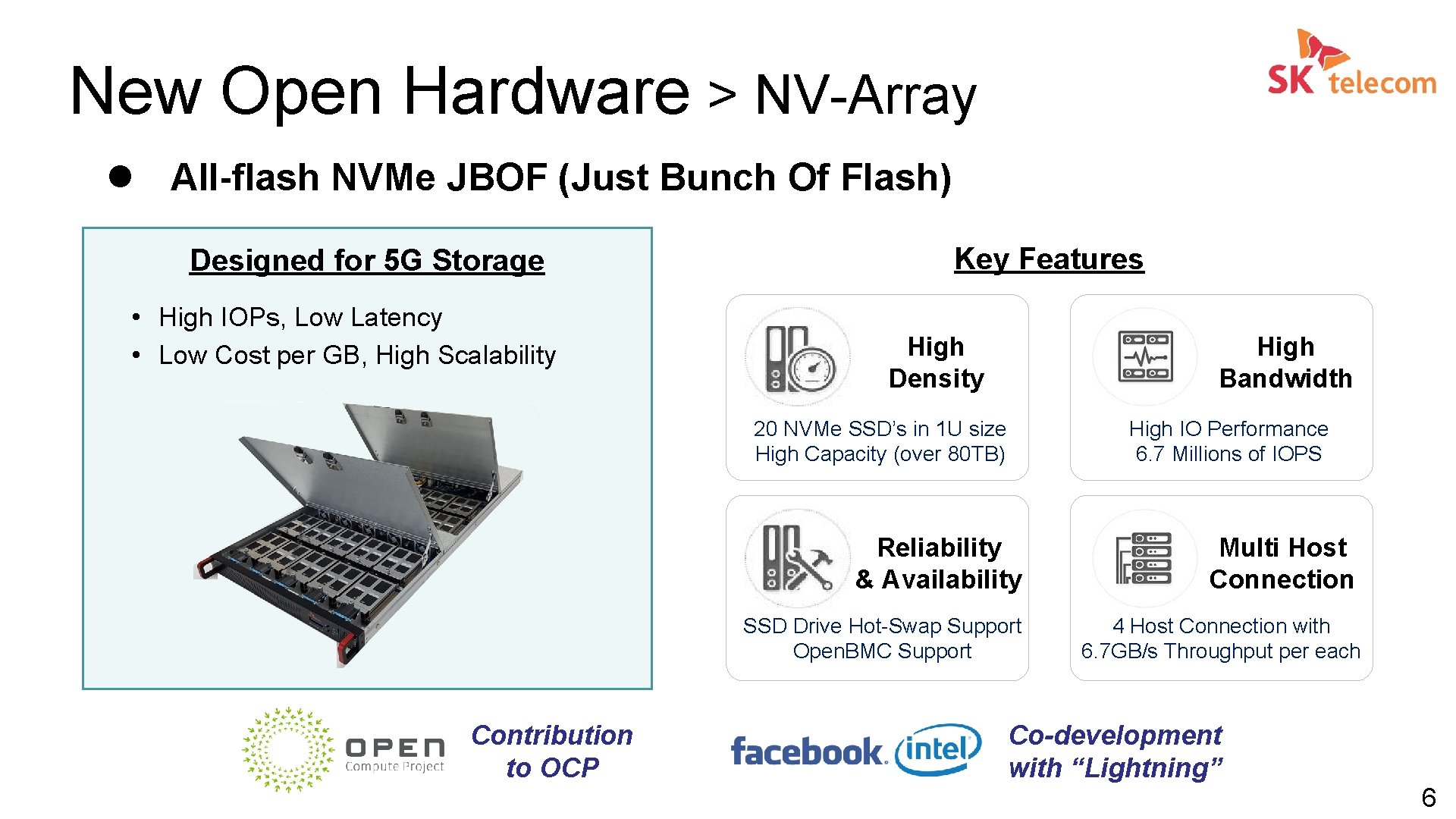

New Open Hardware > NV-Array
- All-flash NVMe JBOF (Just Bunch Of Flash)
- Designed for 5G Storage
  - High IOPS, Low Latency
  - Low Cost per GB, High Scalability
- Key Features
  - High Density: 20 NVMe SSDs in 1U size, High Capacity (over 80 TB)
  - High Bandwidth: 4 Host Connections with 6.7 GB/s Throughput each
  - High IO Performance: 6.7 Million IOPS
  - Reliability & Availability: Multi Host Connection, SSD Drive Hot-Swap Support, OpenBMC Support
- Contribution to OCP: Co-development with “Lightning”
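The headline NV-Array figures can be cross-checked with simple arithmetic. The sketch below derives aggregate host throughput, minimum capacity per SSD, and average IOPS per SSD from the numbers on the slide. The function names are hypothetical, and the even split across drives is an assumption made for illustration, not a measured result.

```python
# Back-of-the-envelope figures derived from the NV-Array slide.
# Function names and the even-split assumption are illustrative only.

def aggregate_throughput_gbps(hosts: int, per_host_gbps: float) -> float:
    """Total throughput in GB/s if every host link runs at its stated rate."""
    return hosts * per_host_gbps

def min_capacity_per_ssd_tb(total_tb: float, ssd_count: int) -> float:
    """Lower bound on per-SSD capacity needed to reach the stated total."""
    return total_tb / ssd_count

def avg_iops_per_ssd(total_iops: float, ssd_count: int) -> float:
    """Average IOPS per SSD, assuming load is spread evenly across drives."""
    return total_iops / ssd_count

if __name__ == "__main__":
    print(aggregate_throughput_gbps(4, 6.7))   # 4 hosts at 6.7 GB/s each
    print(min_capacity_per_ssd_tb(80, 20))     # "over 80 TB" across 20 SSDs
    print(avg_iops_per_ssd(6_700_000, 20))     # 6.7 million IOPS across 20 SSDs
```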

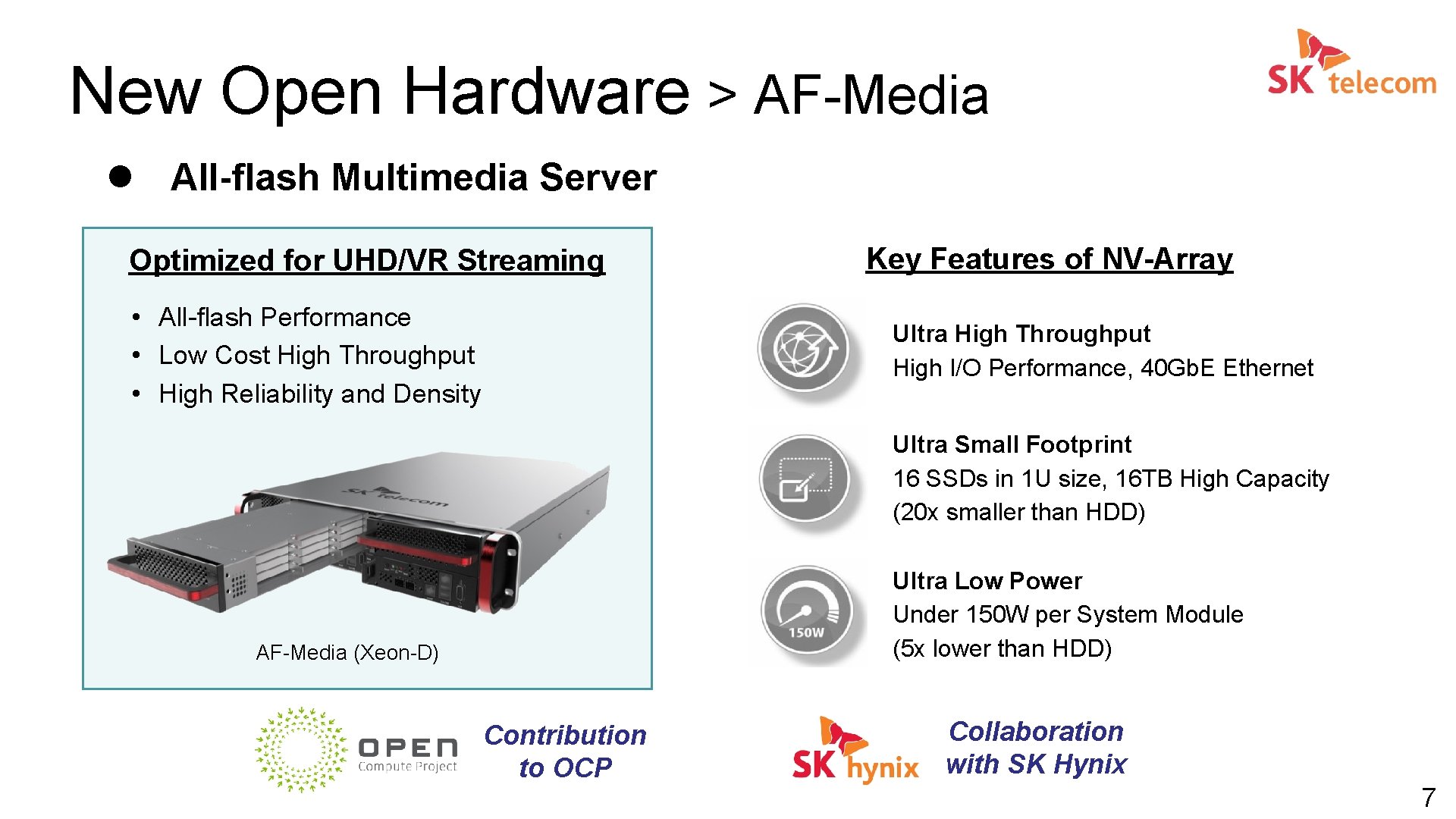

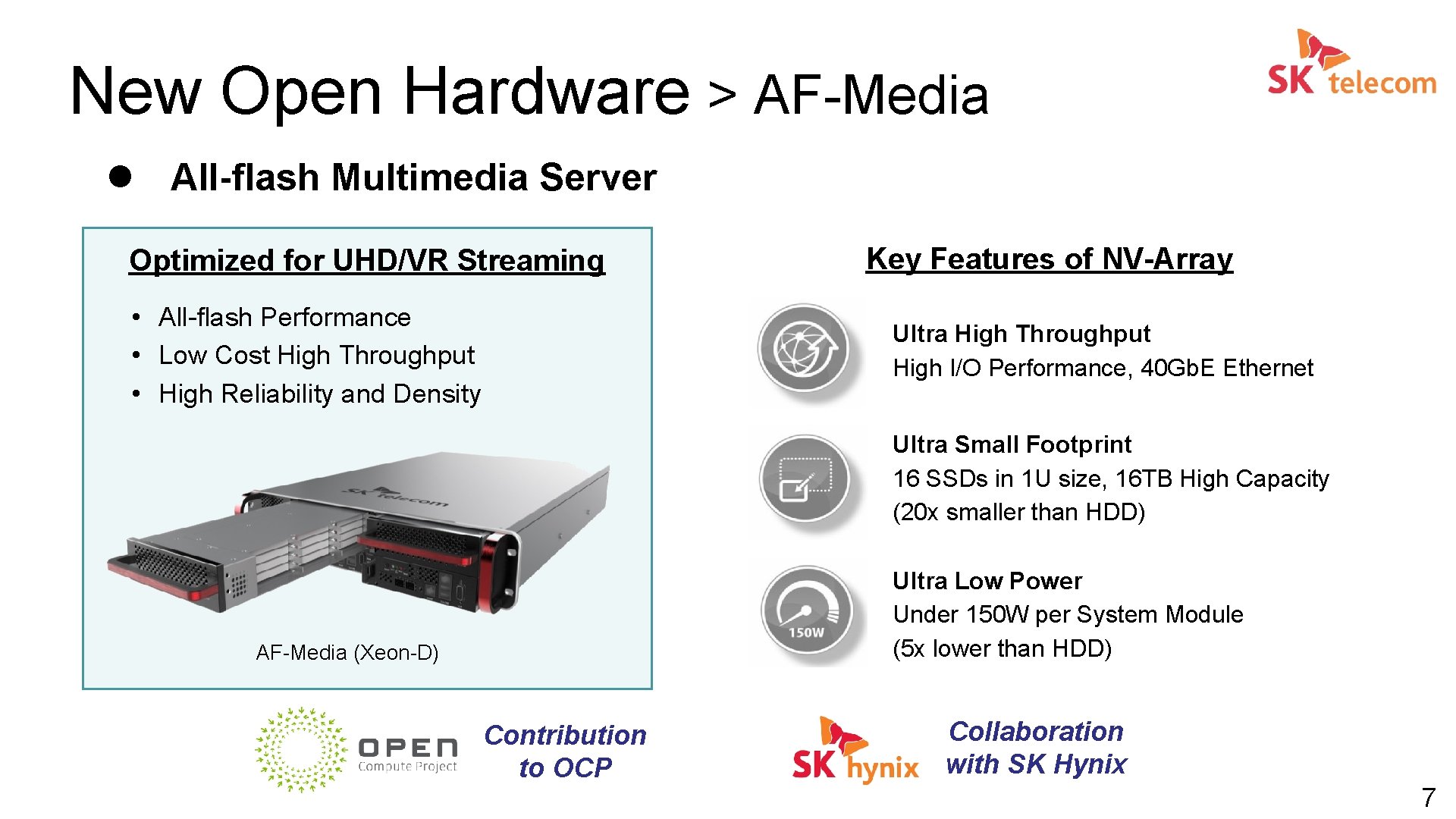

New Open Hardware > AF-Media
- All-flash Multimedia Server
- Optimized for UHD/VR Streaming
  - All-flash Performance
  - Low Cost, High Throughput
  - High Reliability and Density
- Key Features of NV-Array
  - Ultra High Throughput: High I/O Performance, 40 GbE Ethernet
  - Ultra Small Footprint: 16 SSDs in 1U size, 16 TB High Capacity (20x smaller than HDD)
  - Ultra Low Power: Under 150 W per System Module (5x lower than HDD)
- AF-Media (Xeon-D)
- Contribution to OCP
- Collaboration with SK Hynix

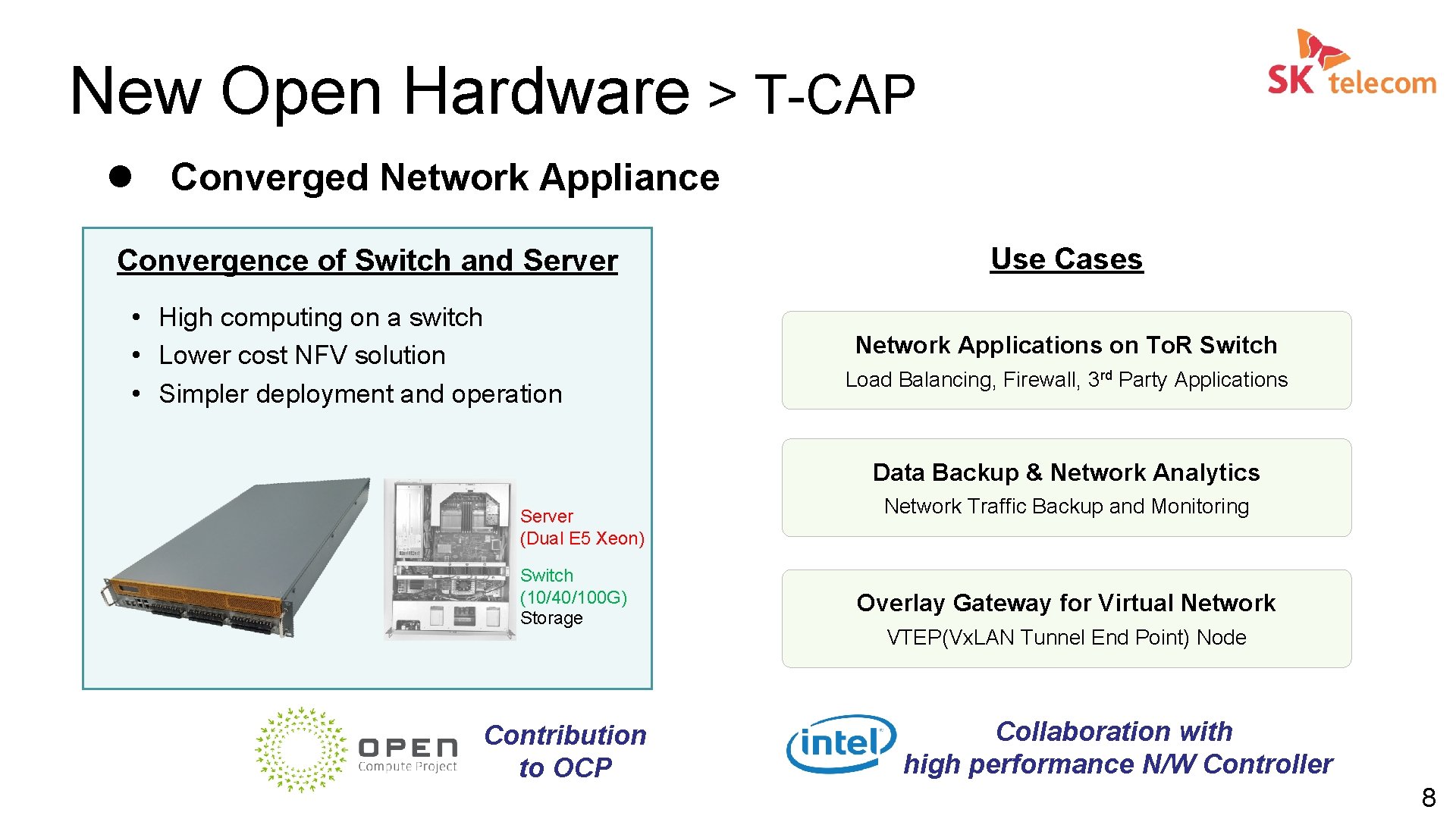

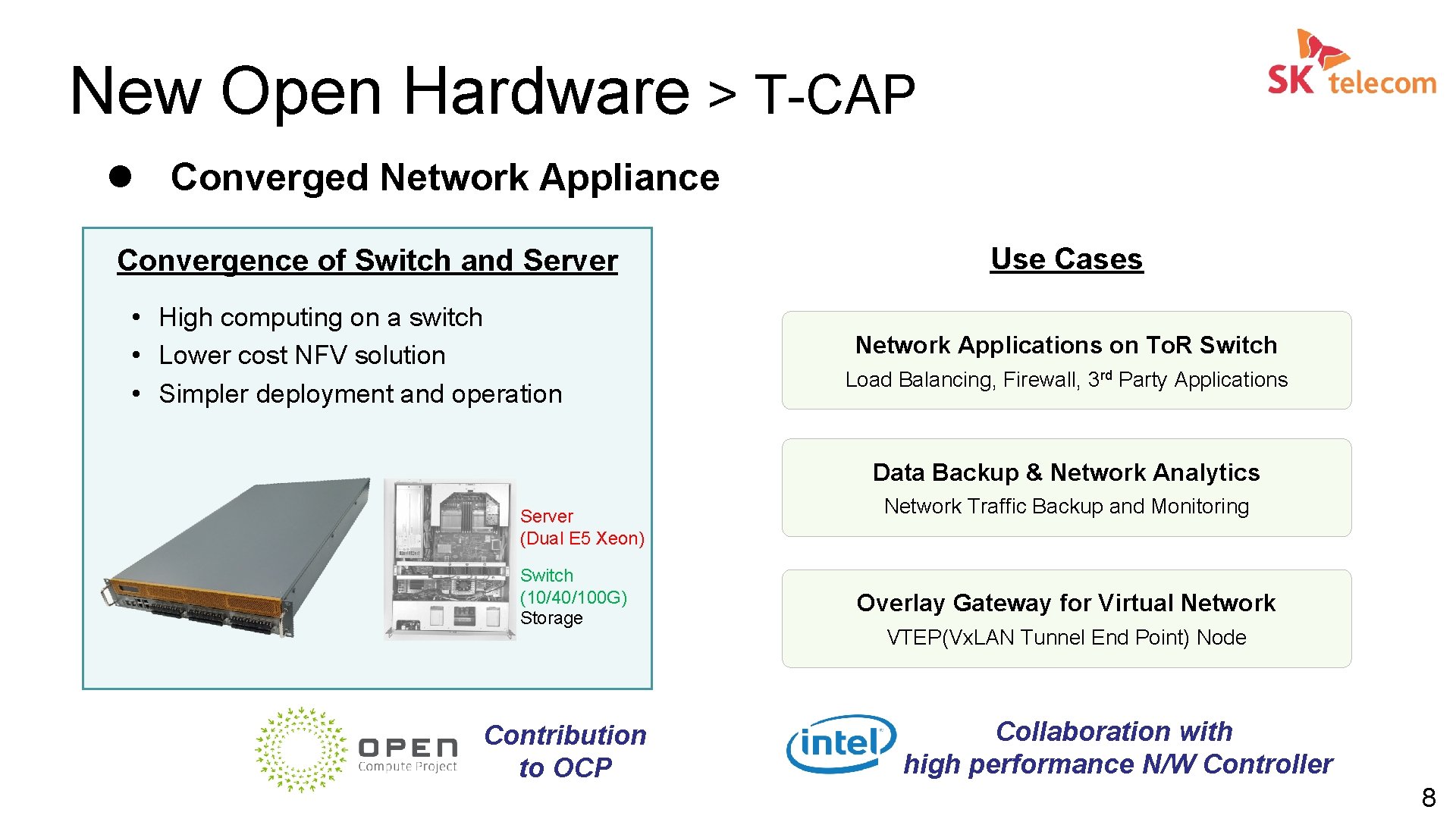

New Open Hardware > T-CAP
- Converged Network Appliance
- Convergence of Switch and Server
  - High computing on a switch
  - Lower cost NFV solution
  - Simpler deployment and operation
- Components: Server (Dual E5 Xeon), Switch (10/40/100G), Storage
- Contribution to OCP
- Use Cases
  - Network Applications on ToR Switch: Load Balancing, Firewall, 3rd Party Applications
  - Data Backup & Network Analytics: Network Traffic Backup and Monitoring
  - Overlay Gateway for Virtual Network: VTEP (VxLAN Tunnel End Point) Node, Collaboration with high performance N/W Controller
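To make the VTEP use case concrete, the sketch below builds the 8-byte VXLAN header that a tunnel end point prepends to an inner Ethernet frame, following the layout in RFC 7348. It is a minimal illustration, not T-CAP code. The function names are hypothetical, and the outer Ethernet/IP/UDP headers (UDP destination port 4789) are omitted.

```python
import struct

# "I" flag from RFC 7348: set when the VNI field is valid.
VXLAN_FLAG_VNI_VALID = 0x08

def vxlan_header(vni: int) -> bytes:
    """Return the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) followed by 24 reserved bits.
    # Word 2: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header to an inner Ethernet frame (outer headers omitted)."""
    return vxlan_header(vni) + inner_frame

if __name__ == "__main__":
    print(vxlan_header(5000).hex())
```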

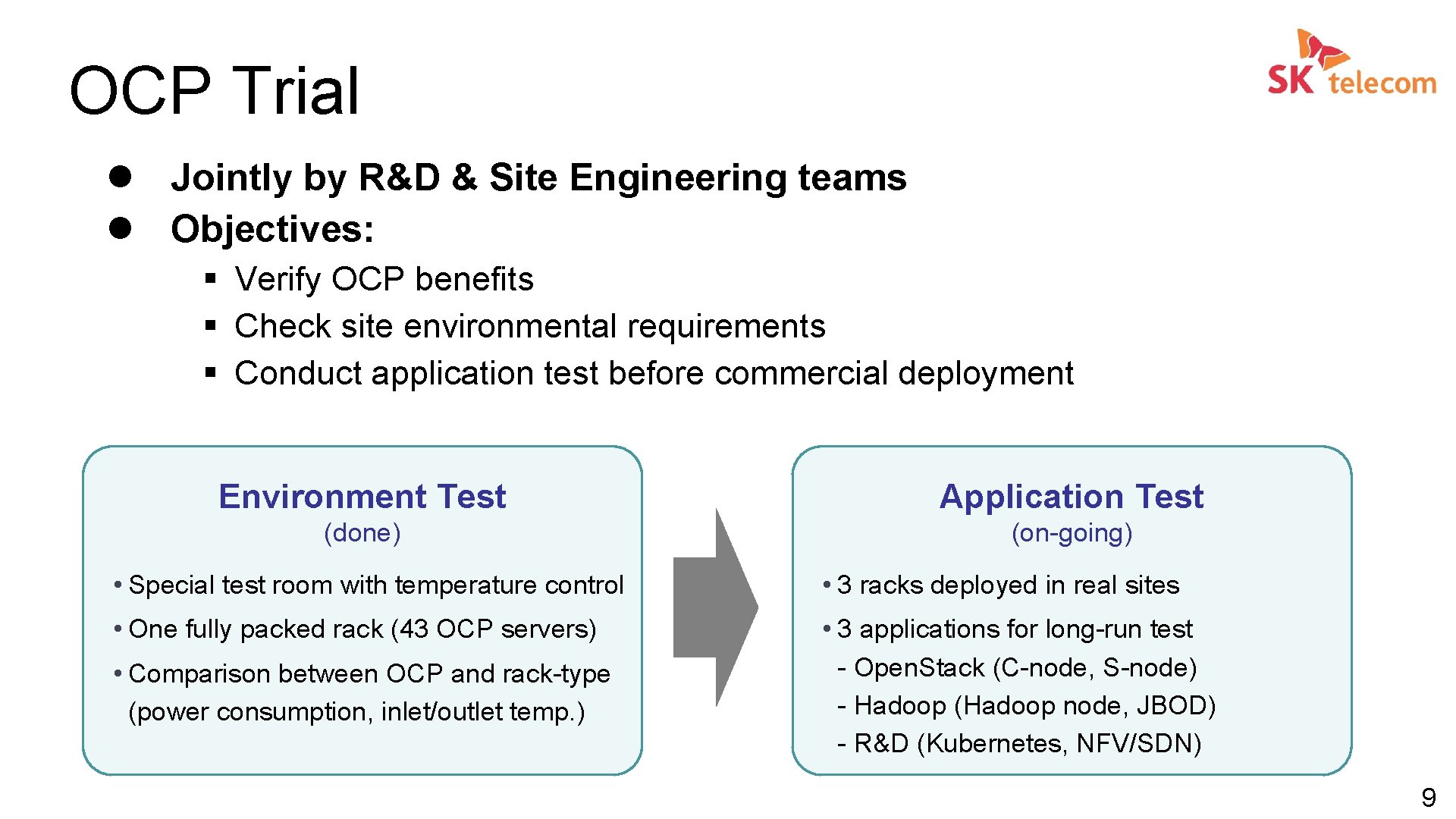

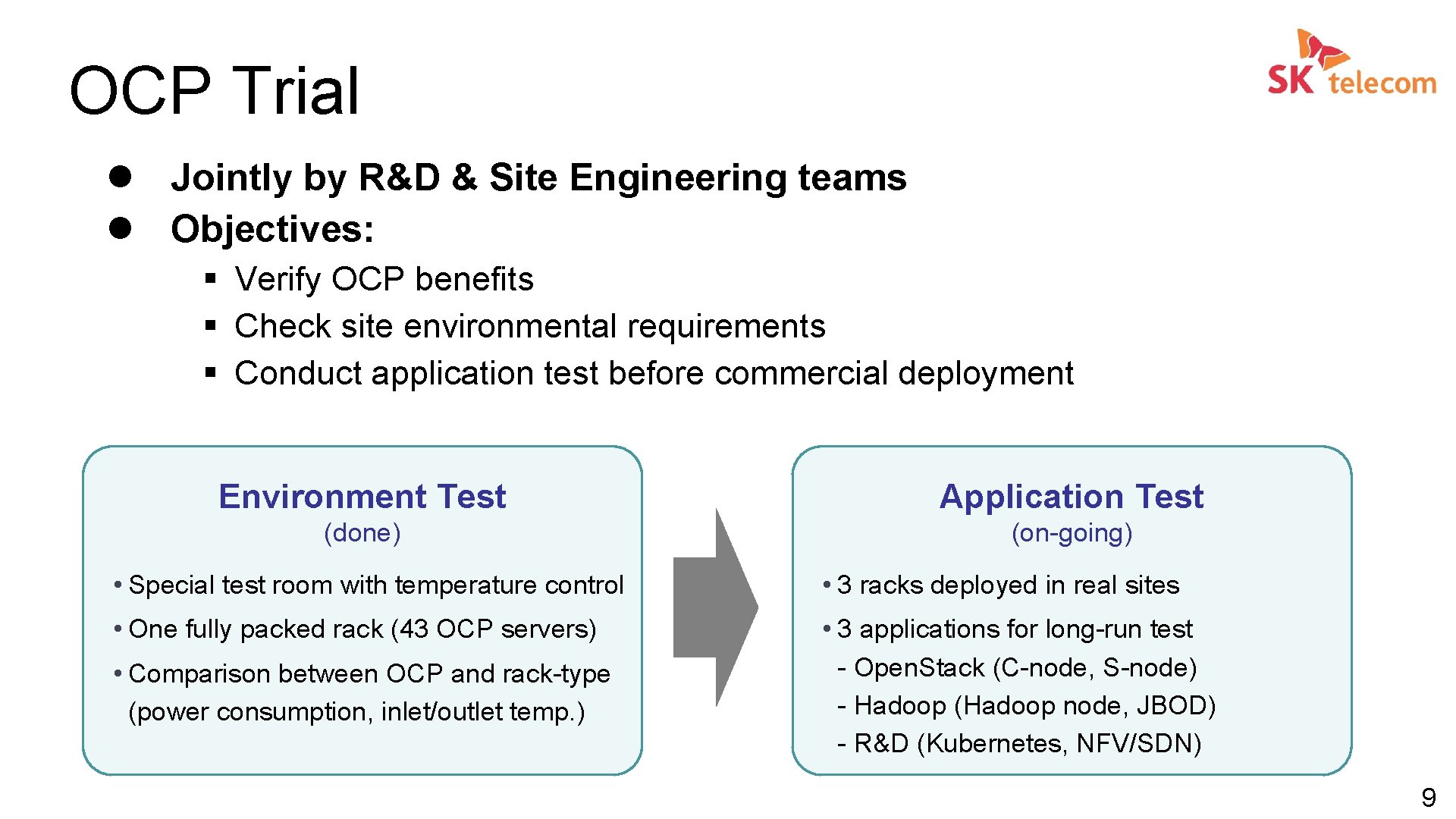

OCP Trial
- Jointly by R&D & Site Engineering teams
- Objectives:
  - Verify OCP benefits
  - Check site environmental requirements
  - Conduct application test before commercial deployment
- Environment Test (done)
  - Special test room with temperature control
  - One fully packed rack (43 OCP servers)
  - Comparison between OCP and rack-type (power consumption, inlet/outlet temp.)
- Application Test (on-going)
  - 3 racks deployed in real sites
  - 3 applications for long-run test
    - OpenStack (C-node, S-node)
    - Hadoop (Hadoop node, JBOD)
    - R&D (Kubernetes, NFV/SDN)

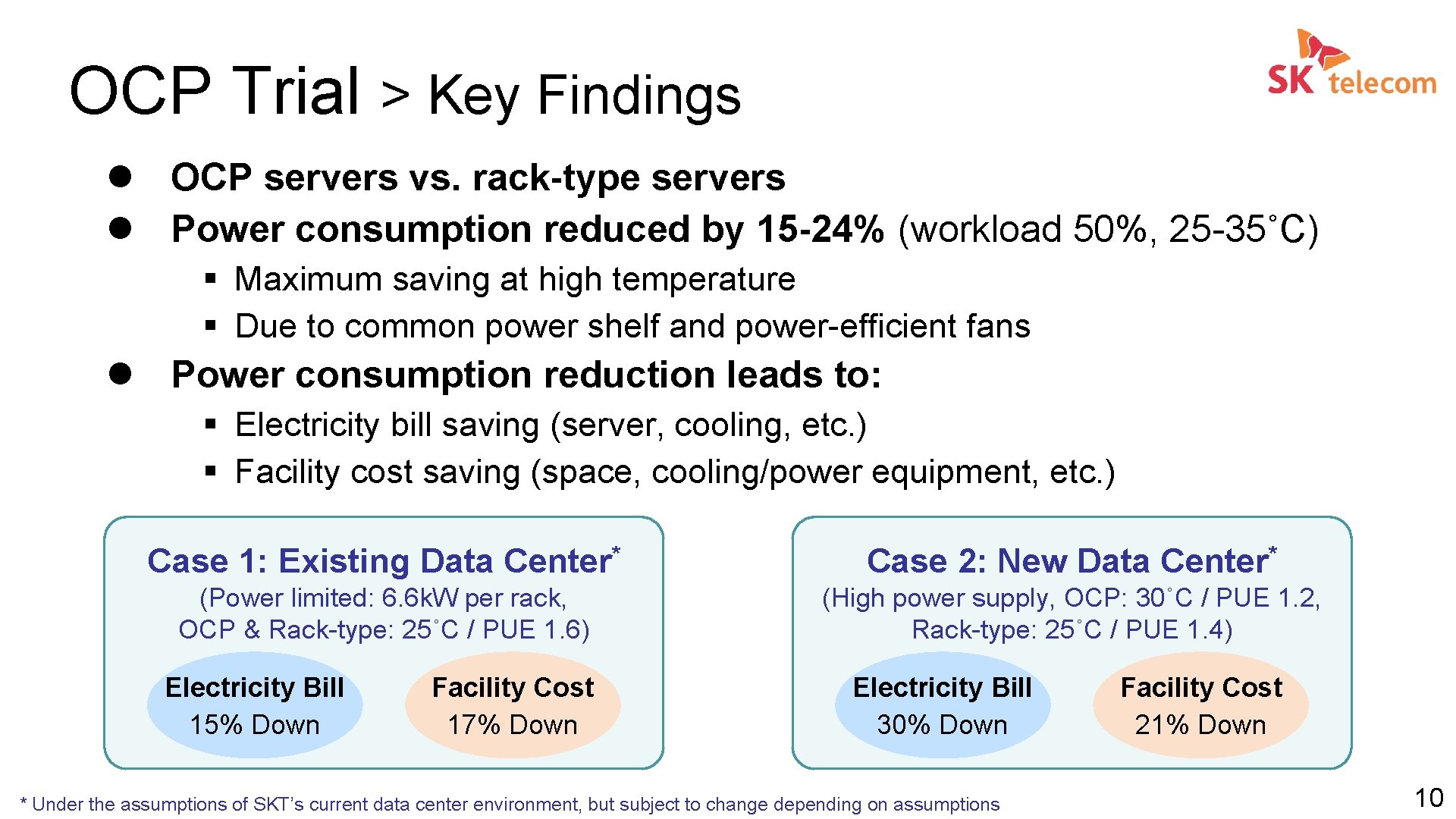

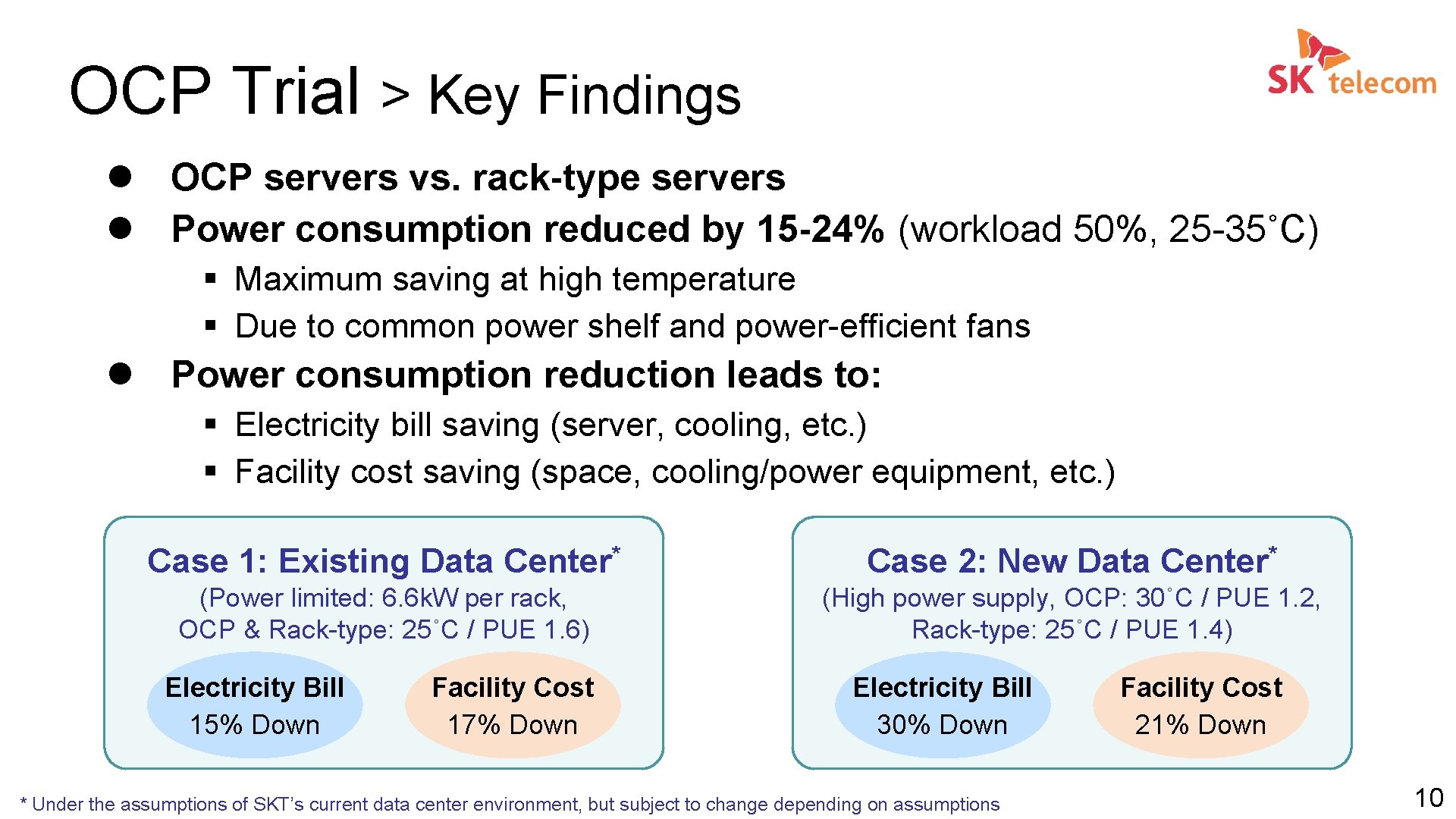

OCP Trial > Key Findings
- OCP servers vs. rack-type servers
- Power consumption reduced by 15-24% (workload 50%, 25-35˚C)
  - Maximum saving at high temperature
  - Due to common power shelf and power-efficient fans
- Power consumption reduction leads to:
  - Electricity bill saving (server, cooling, etc.)
  - Facility cost saving (space, cooling/power equipment, etc.)
- Case 1: Existing Data Center* (Power limited: 6.6 kW per rack, OCP & Rack-type: 25˚C / PUE 1.6)
  - Electricity Bill 15% Down
  - Facility Cost 17% Down
- Case 2: New Data Center* (High power supply, OCP: 30˚C / PUE 1.2, Rack-type: 25˚C / PUE 1.4)
  - Electricity Bill 30% Down
  - Facility Cost 21% Down

\* Under the assumptions of SKT’s current data center environment, but subject to change depending on assumptions
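One way to see how server power reduction and PUE combine into an electricity saving is the simple model below: total facility power is taken as IT power multiplied by PUE. This is an illustrative assumption, not SKT’s actual cost model, and it is not expected to reproduce the slide’s percentages exactly. The function names are hypothetical.

```python
# Illustrative model only: facility power = IT power x PUE.
# SKT's real analysis rests on its own data center assumptions.

def facility_power(it_power: float, pue: float) -> float:
    """Total facility power for a given IT load and PUE."""
    return it_power * pue

def electricity_saving(it_reduction: float, pue_baseline: float, pue_new: float) -> float:
    """Fractional saving in facility power relative to the baseline.

    it_reduction: fractional cut in server power (e.g. 0.15 for 15%).
    pue_baseline: PUE of the rack-type deployment.
    pue_new:      PUE of the OCP deployment.
    """
    baseline = facility_power(1.0, pue_baseline)
    new = facility_power(1.0 - it_reduction, pue_new)
    return 1.0 - new / baseline

if __name__ == "__main__":
    # Same PUE on both sides (existing data center case): saving equals the IT reduction.
    print(round(electricity_saving(0.15, 1.6, 1.6), 4))
    # Lower PUE for OCP (new data center case): saving exceeds the IT reduction.
    print(round(electricity_saving(0.15, 1.4, 1.2), 4))
```

Under this model, a lower PUE compounds with the server-level reduction, which is consistent with the larger electricity saving reported for the new data center case.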

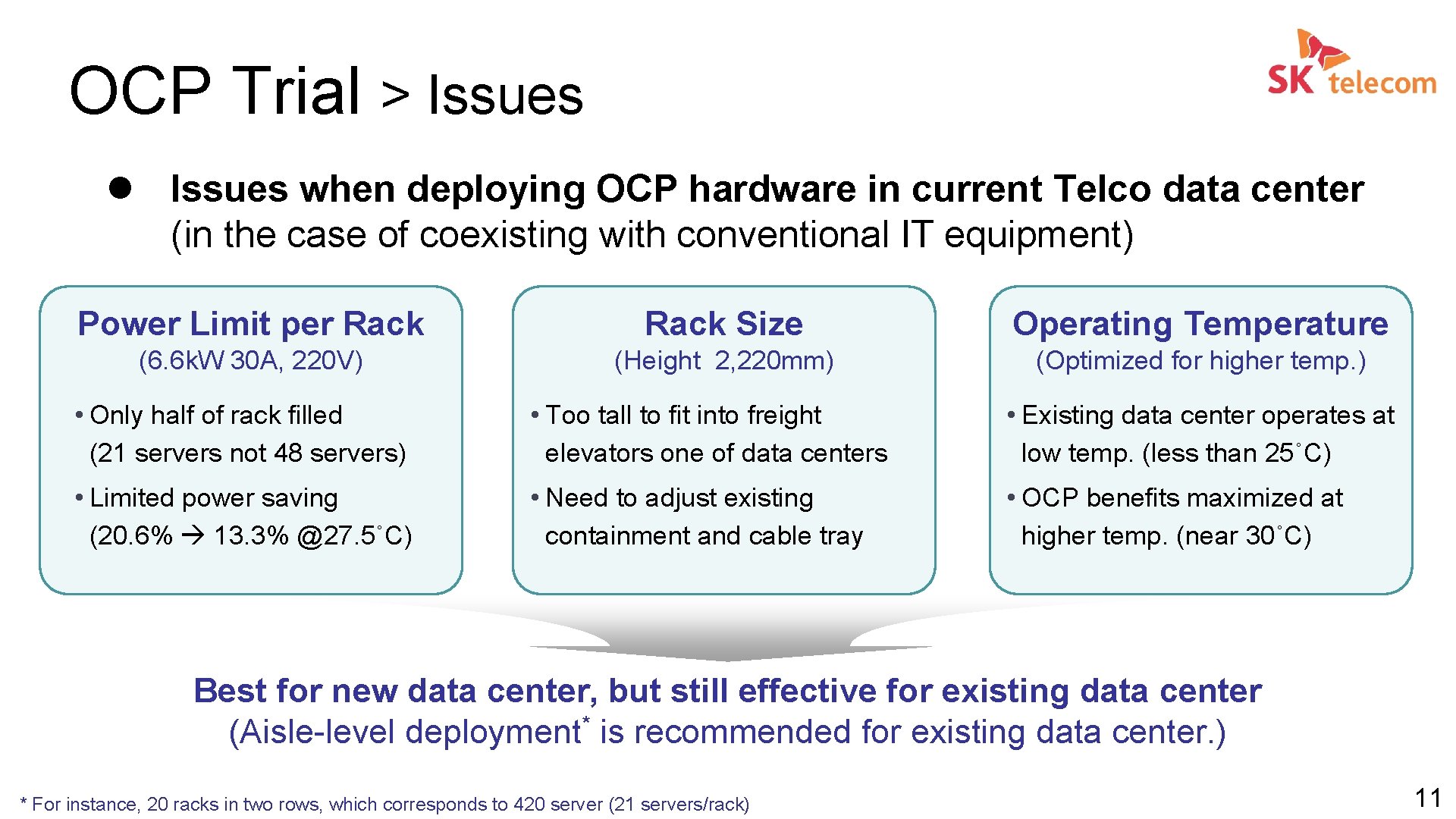

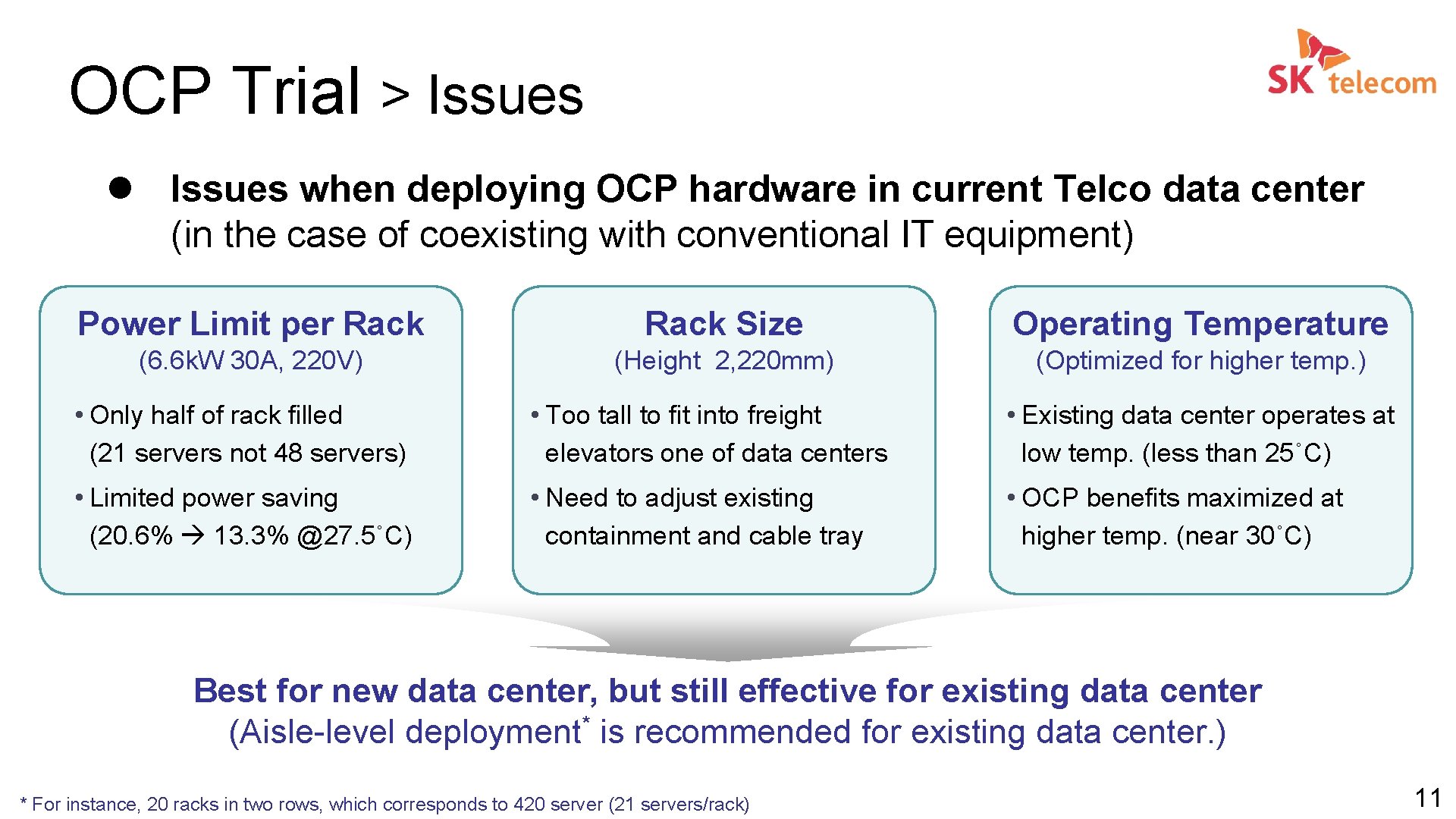

OCP Trial > Issues
- Issues when deploying OCP hardware in a current Telco data center (in the case of coexisting with conventional IT equipment)
- Power Limit per Rack (6.6 kW; 30 A, 220 V)
  - Only half of the rack filled (21 servers, not 48 servers)
  - Limited power saving (20.6% → 13.3% @ 27.5˚C)
- Size (Height 2,220 mm)
  - Too tall to fit into the freight elevators at one of the data centers
  - Need to adjust existing containment and cable tray
- Operating Temperature (Optimized for higher temp.)
  - Existing data center operates at low temp. (less than 25˚C)
  - OCP benefits maximized at higher temp. (near 30˚C)
- Best for new data center, but still effective for existing data center (aisle-level deployment* is recommended for existing data center)

\* For instance, 20 racks in two rows, which corresponds to 420 servers (21 servers/rack)
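The rack power constraint can be checked with a few lines of arithmetic. The sketch below derives the 6.6 kW limit from 30 A at 220 V, the power budget per server implied by fitting 21 servers under that limit, and the server count for the 20-rack aisle example. The function names are hypothetical, and the per-server figure is only an implied upper bound that ignores switches and power shelf overhead.

```python
# Rack power arithmetic based on the figures on the slide.
# The per-server budget ignores switch and power-shelf overhead (assumption).

def rack_power_limit_w(current_a: float, voltage_v: float) -> float:
    """Rack power limit in watts from the feed's current and voltage."""
    return current_a * voltage_v

def implied_budget_per_server_w(limit_w: float, servers: int) -> float:
    """Upper bound on average power per server when `servers` share the limit."""
    return limit_w / servers

def aisle_server_count(racks: int, servers_per_rack: int) -> int:
    """Total servers in an aisle-level deployment."""
    return racks * servers_per_rack

if __name__ == "__main__":
    limit = rack_power_limit_w(30, 220)
    print(limit)                                             # rack limit in W
    print(round(implied_budget_per_server_w(limit, 21), 1))  # W per server
    print(aisle_server_count(20, 21))                        # servers per aisle
```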

OCP Trial > Next Steps
- Will share detailed results internally with Site Engineering Team and externally with OCP Telco Project
- Will run OpenStack’s control plane on top of Kubernetes for SKT’s private cloud
- Will apply to Telco workloads (M-CORD, NFV Container, SDRAN) and identify Telco requirements

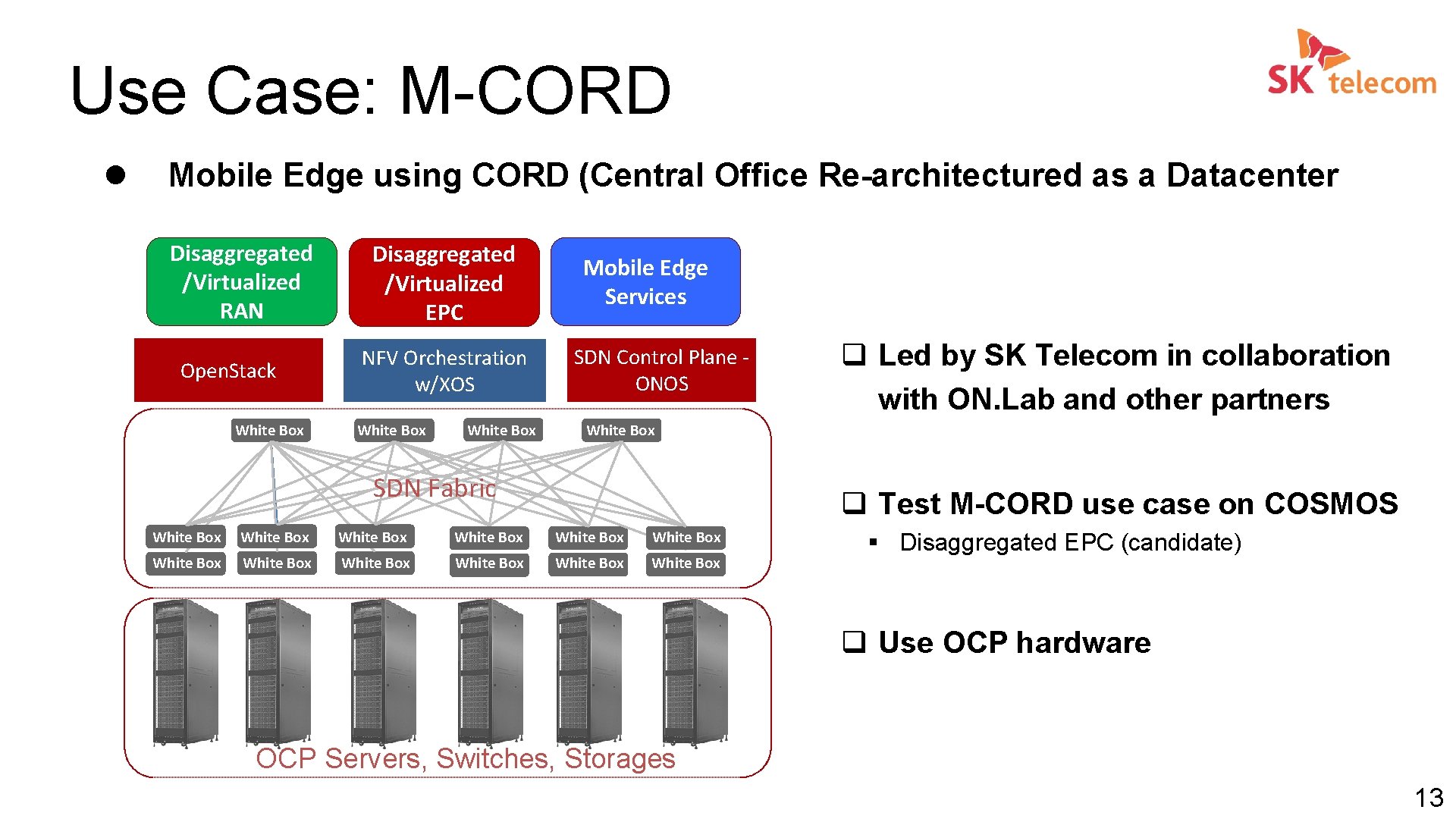

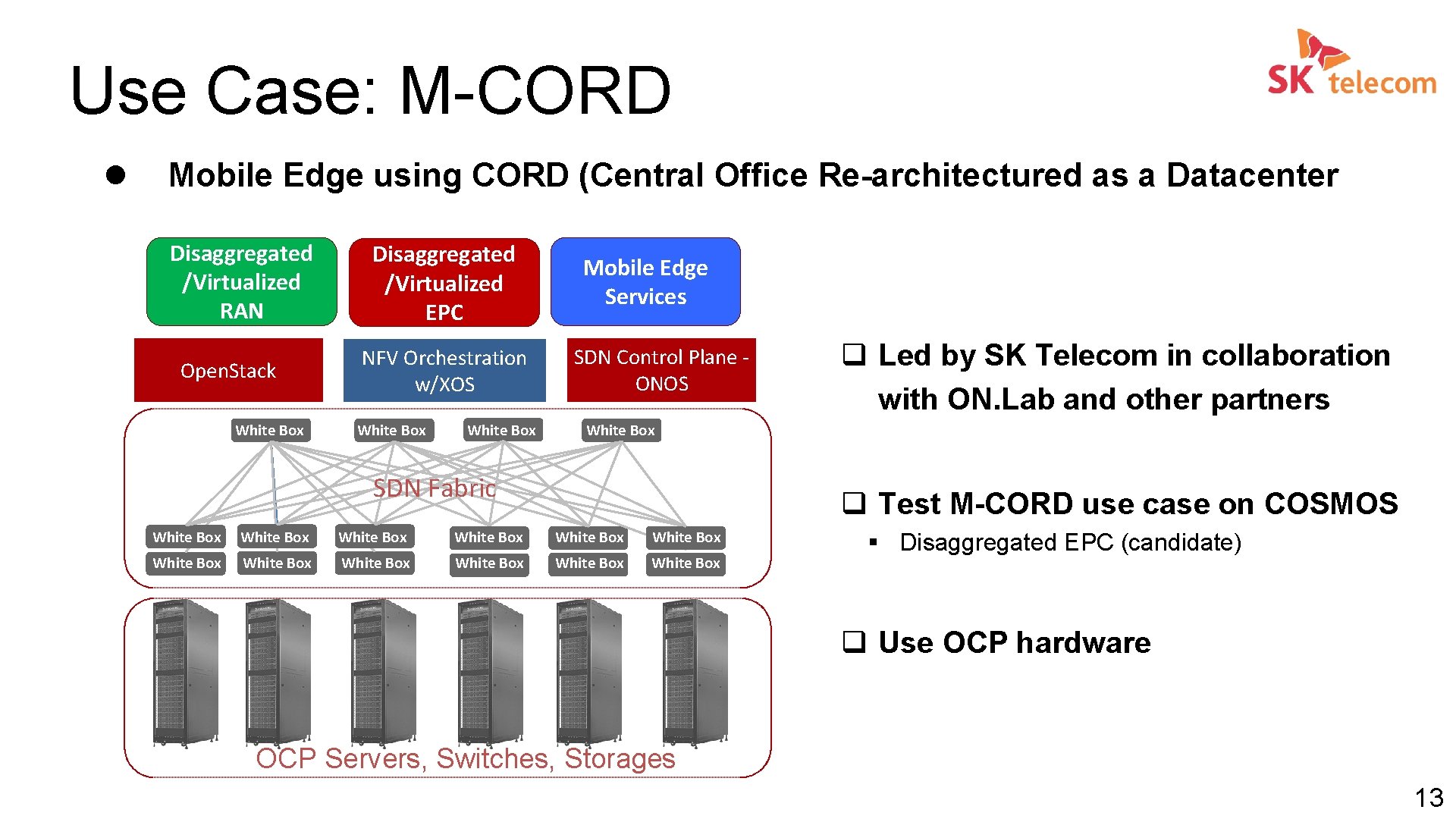

Use Case: M-CORD
- Mobile Edge using CORD (Central Office Re-architected as a Datacenter)
- Led by SK Telecom in collaboration with ON.Lab and other partners
- Test M-CORD use case on COSMOS
  - Disaggregated EPC (candidate)
- Use OCP hardware
  - OCP Servers, Switches, Storages
- Diagram labels: Disaggregated/Virtualized RAN; Disaggregated/Virtualized EPC; Mobile Edge Services; OpenStack; NFV Orchestration w/ XOS; SDN Control Plane (ONOS); White Box SDN Fabric; White Boxes

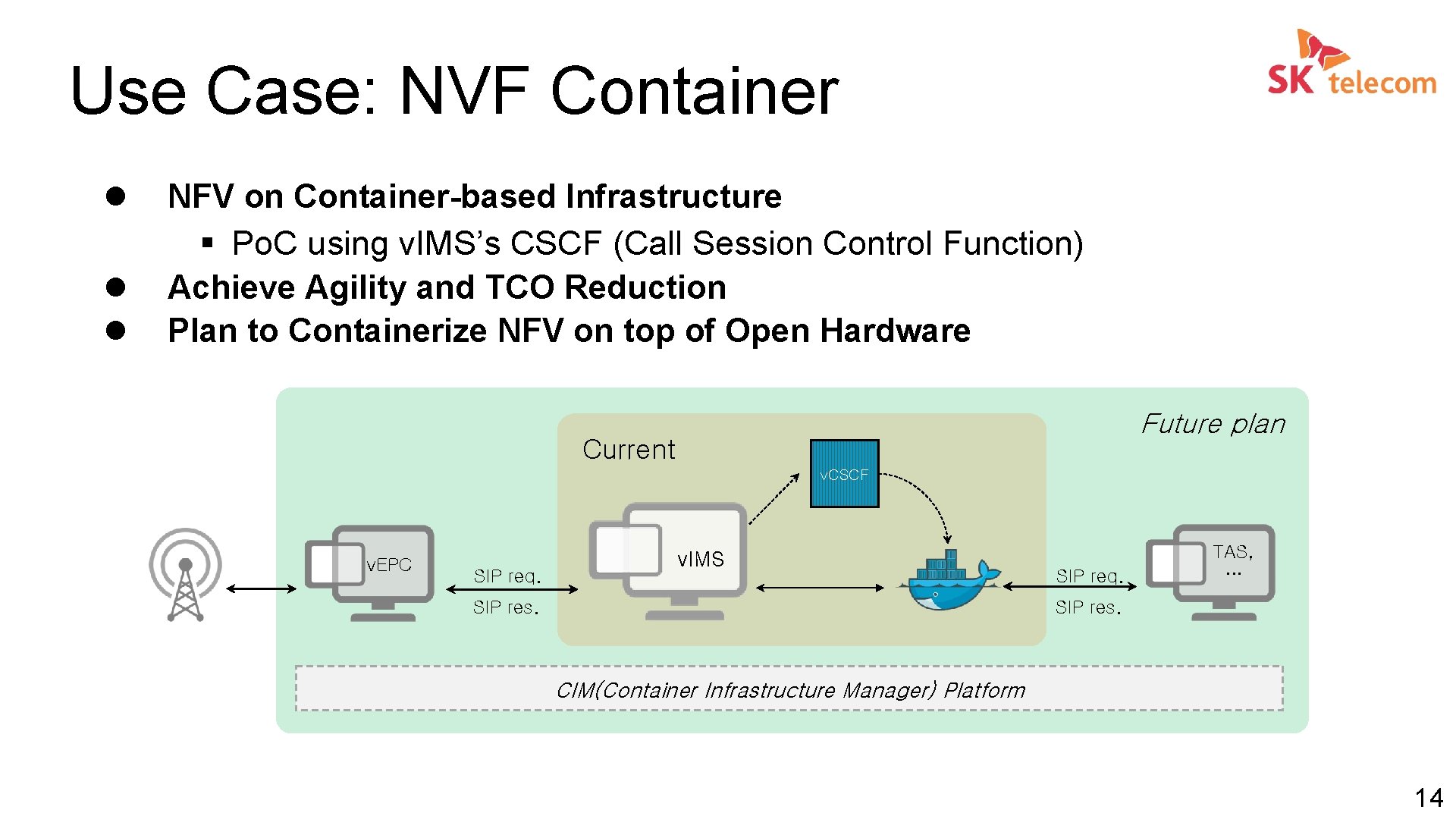

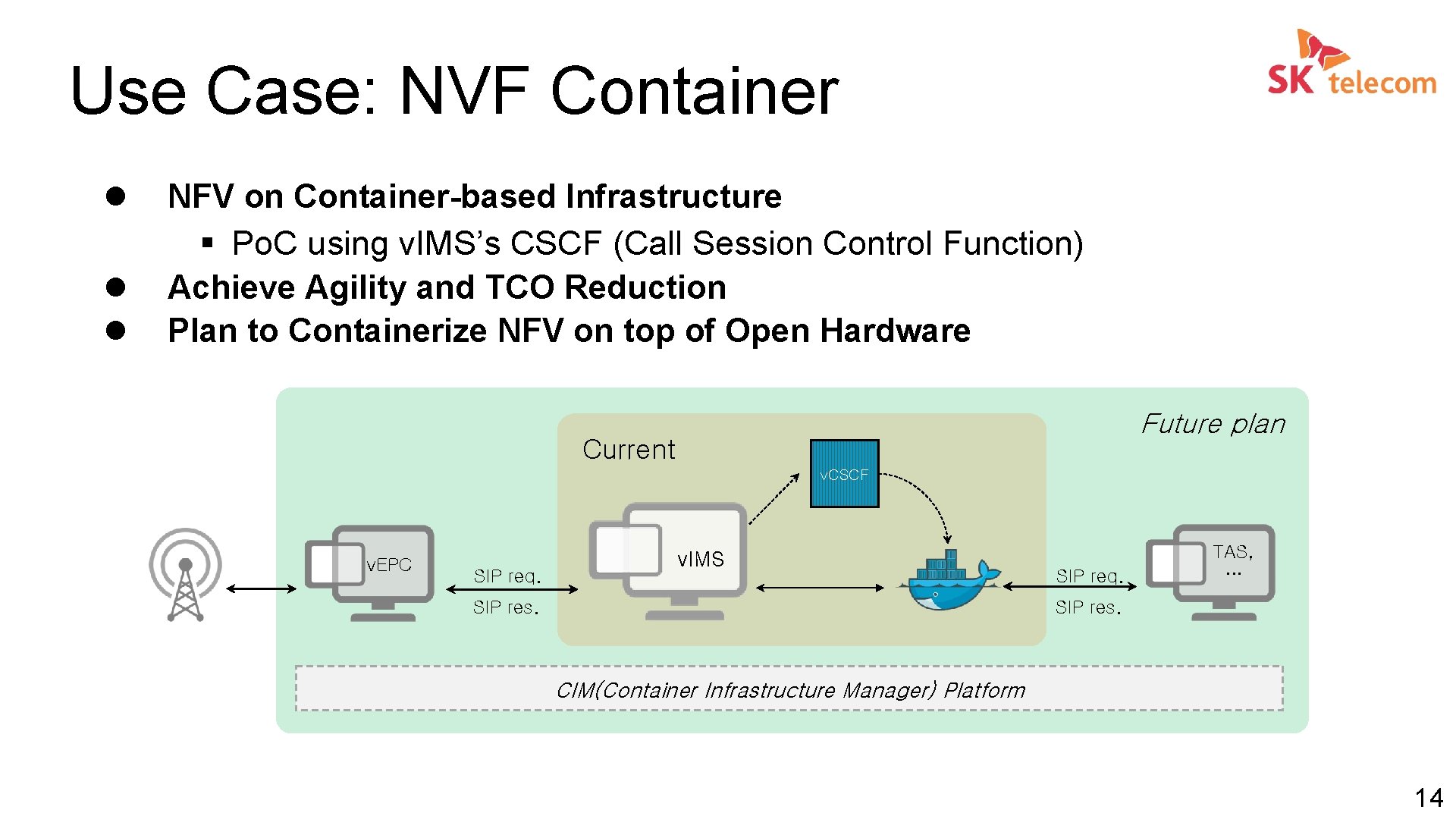

Use Case: NFV Container
- NFV on Container-based Infrastructure
  - PoC using vIMS’s CSCF (Call Session Control Function)
- Achieve Agility and TCO Reduction
- Plan to Containerize NFV on top of Open Hardware
- Diagram labels: Current: vCSCF (SIP req./SIP res.); Future plan: vEPC, vIMS, TAS, …; CIM (Container Infrastructure Manager) Platform

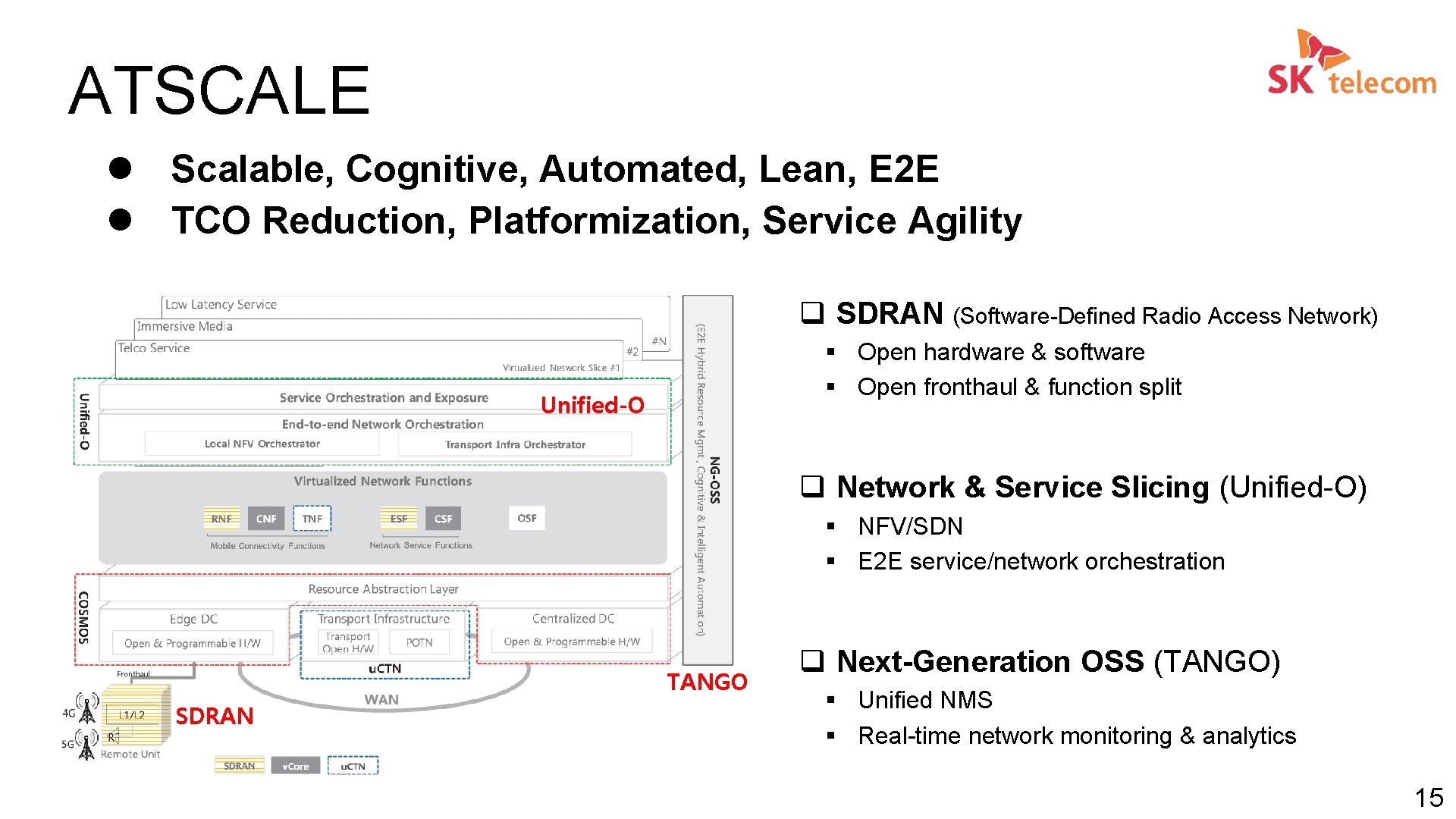

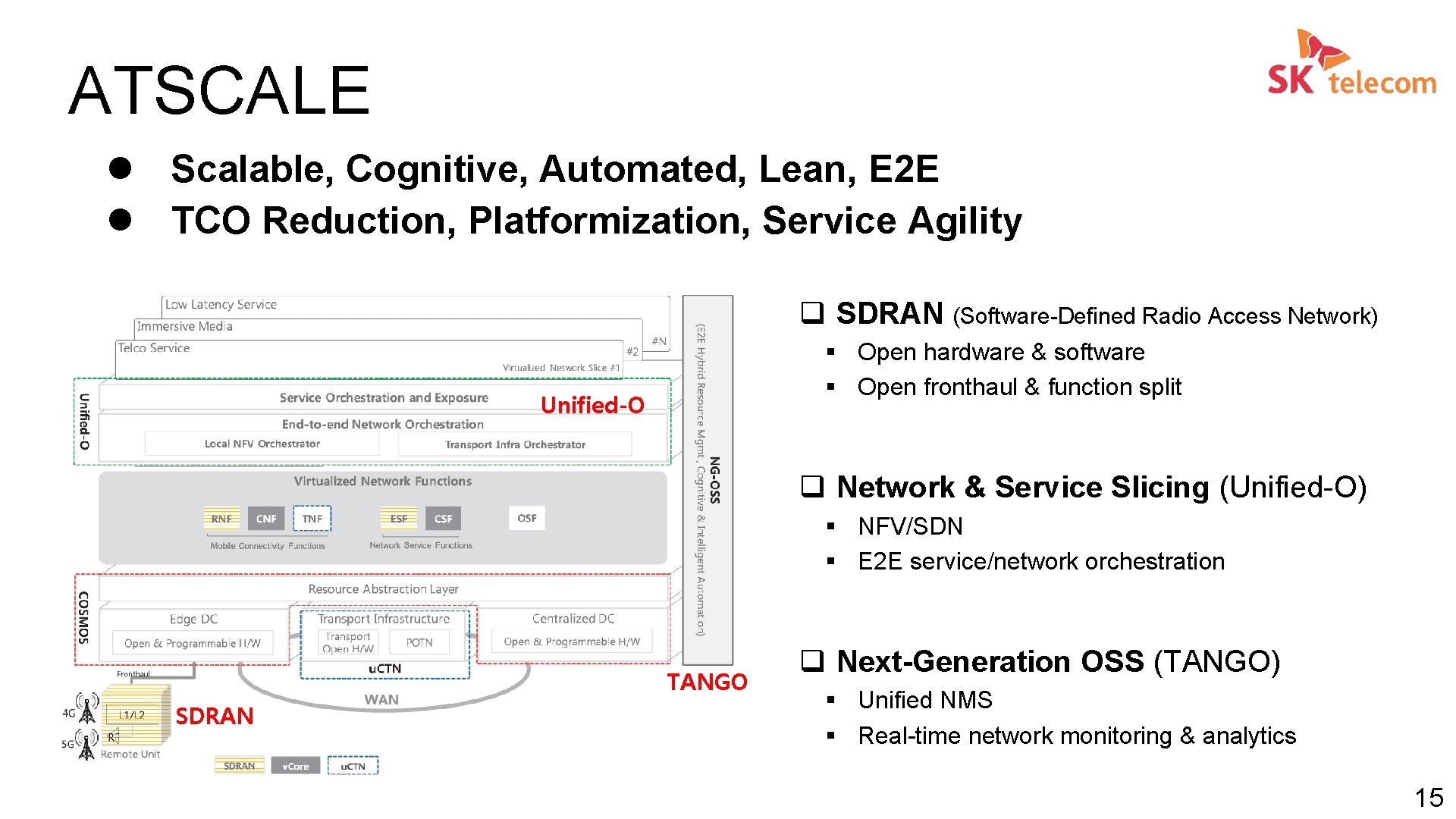

ATSCALE
- Scalable, Cognitive, Automated, Lean, E2E
- TCO Reduction, Platformization, Service Agility
- SDRAN (Software-Defined Radio Access Network)
  - Open hardware & software
  - Open fronthaul & function split
- Network & Service Slicing (Unified-O)
  - NFV/SDN
  - E2E service/network orchestration
- Next-Generation OSS (TANGO)
  - Unified NMS
  - Real-time network monitoring & analytics

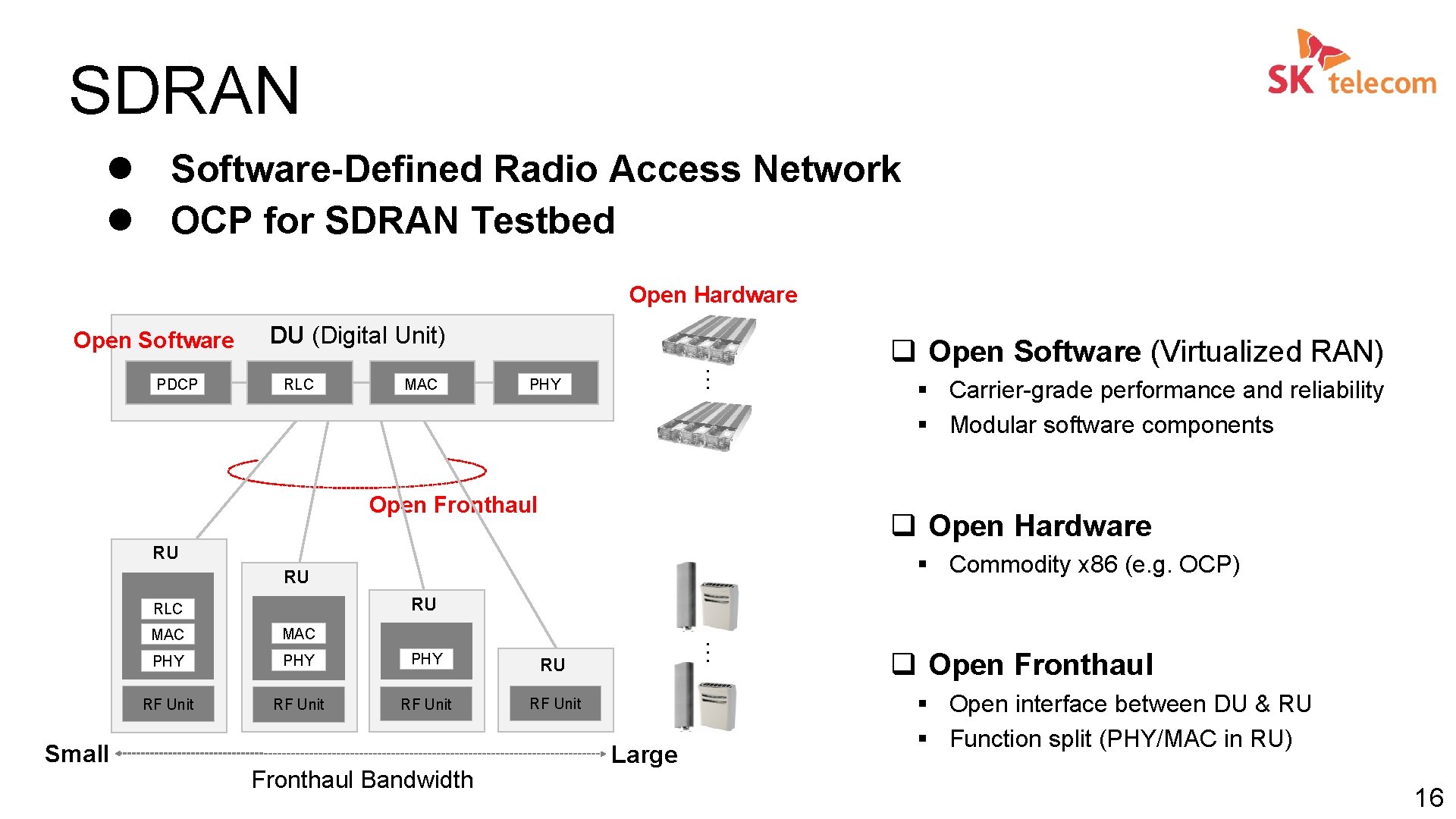

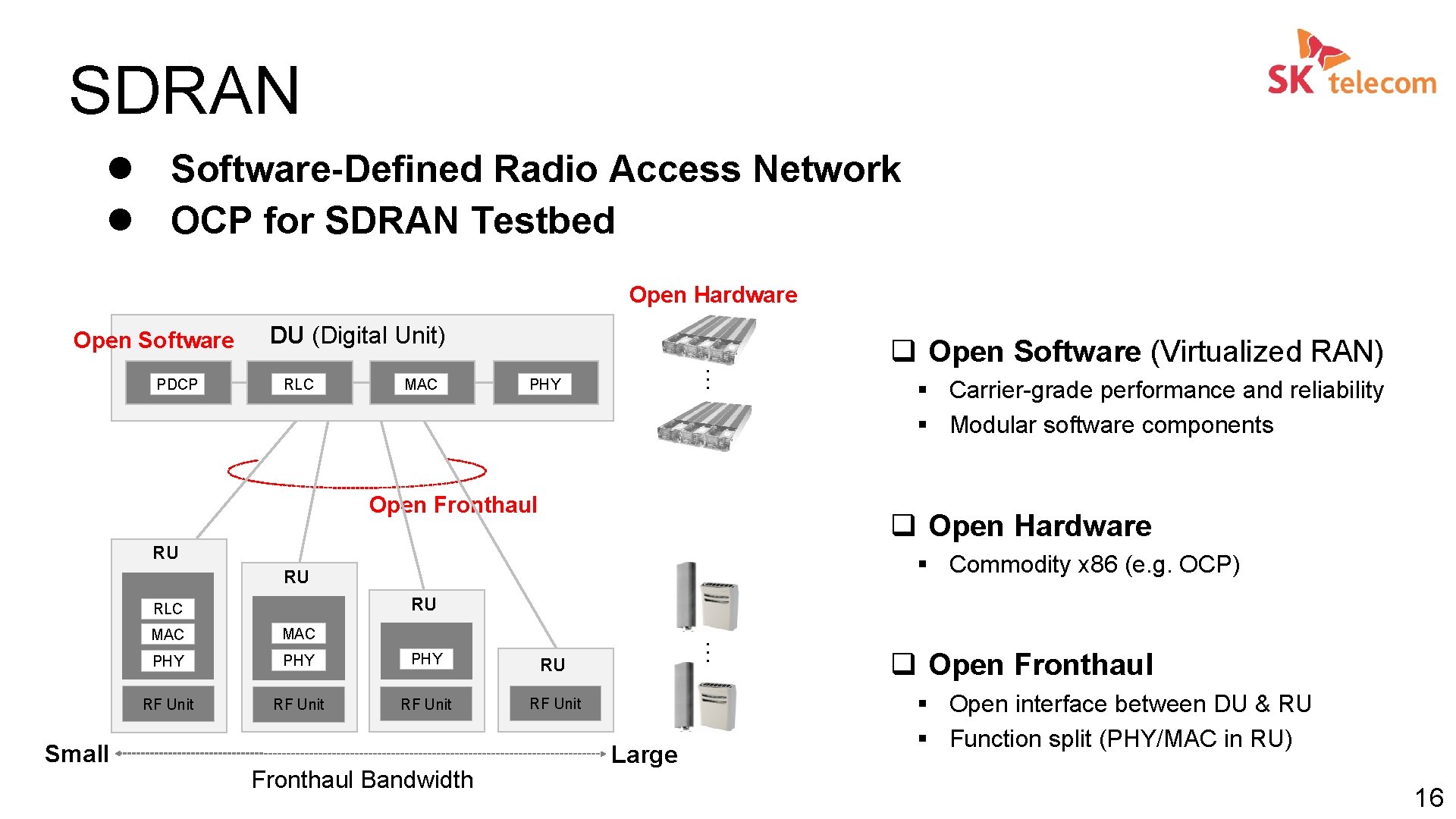

SDRAN
- Software-Defined Radio Access Network
- OCP for SDRAN Testbed
- Open Hardware
  - Commodity x86 (e.g. OCP)
- Open Software (Virtualized RAN)
  - Carrier-grade performance and reliability
  - Modular software components
- Open Fronthaul
  - Open interface between DU & RU
  - Function split (PHY/MAC in RU)
- Diagram labels: DU (Digital Unit): PDCP, RLC, MAC, PHY; RU (RF Unit); Fronthaul Bandwidth: Small … Large

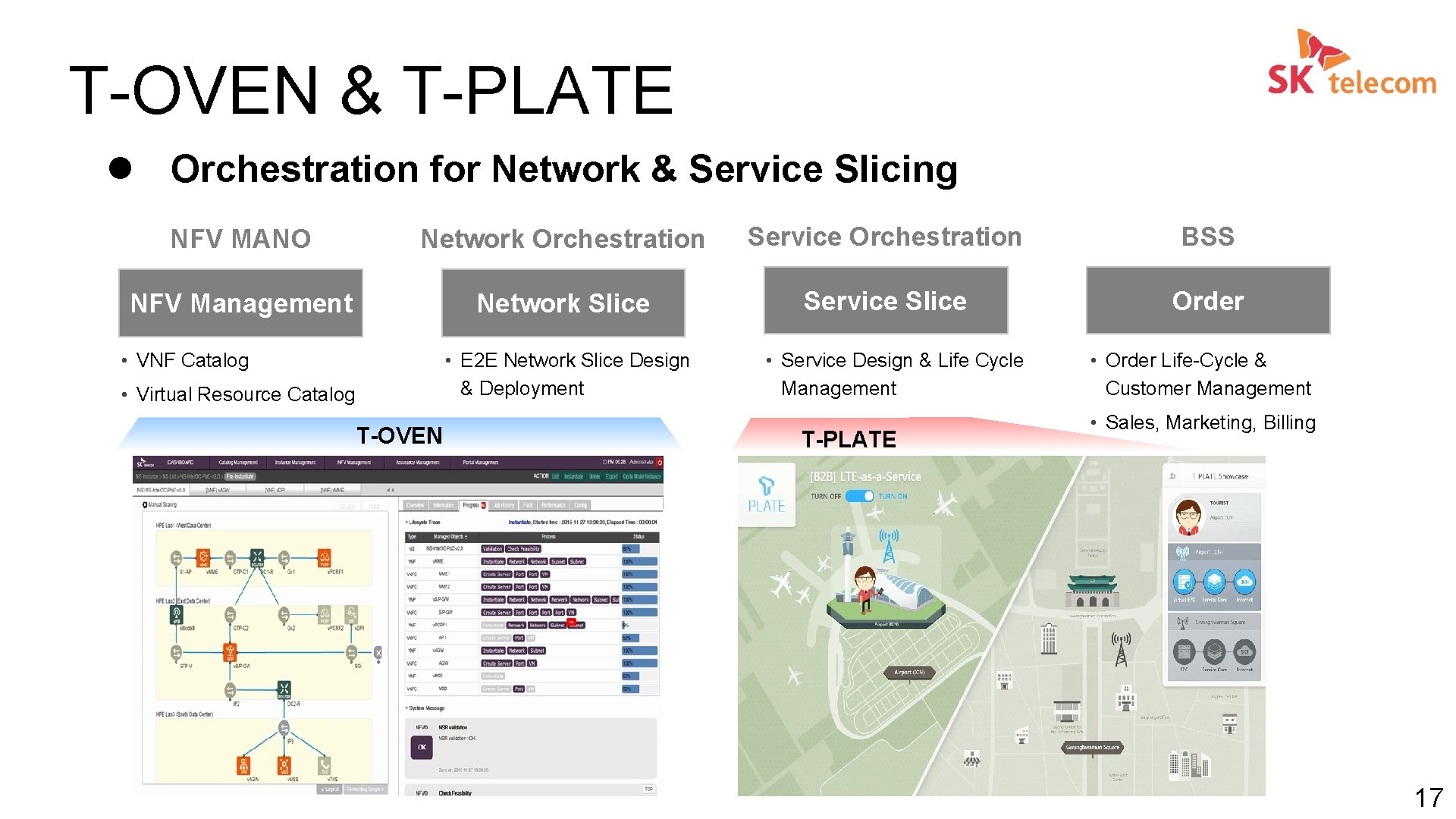

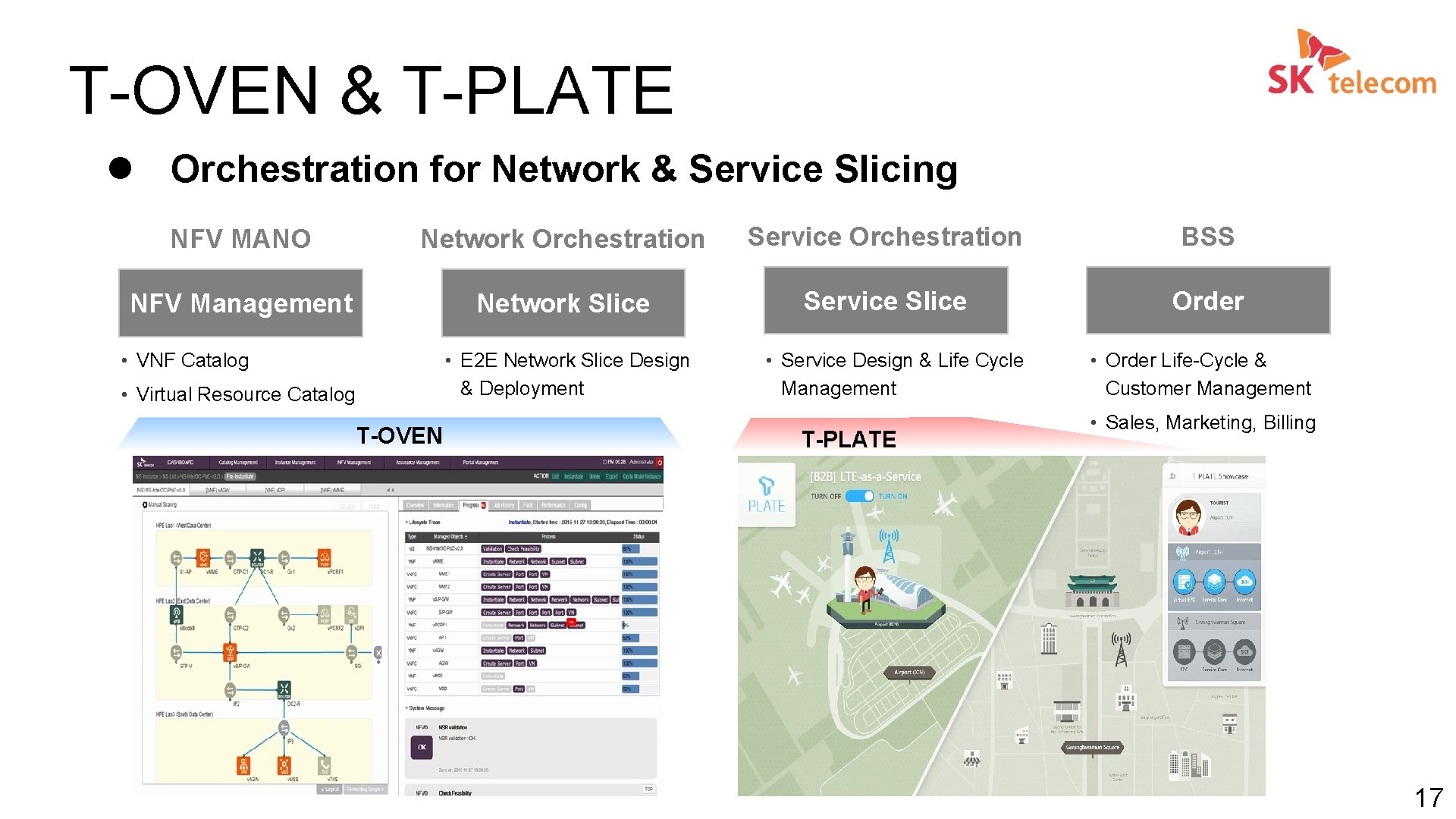

T-OVEN & T-PLATE
- Orchestration for Network & Service Slicing
- NFV MANO: NFV Management
  - VNF Catalog
  - Virtual Resource Catalog
- Network Orchestration: Network Slice
  - E2E Network Slice Design & Deployment
- Service Orchestration: Service Slice
  - Service Design & Life Cycle Management
- BSS: Order
  - Order Life-Cycle & Customer Management
  - Sales, Marketing, Billing
- Platforms: T-OVEN, T-PLATE

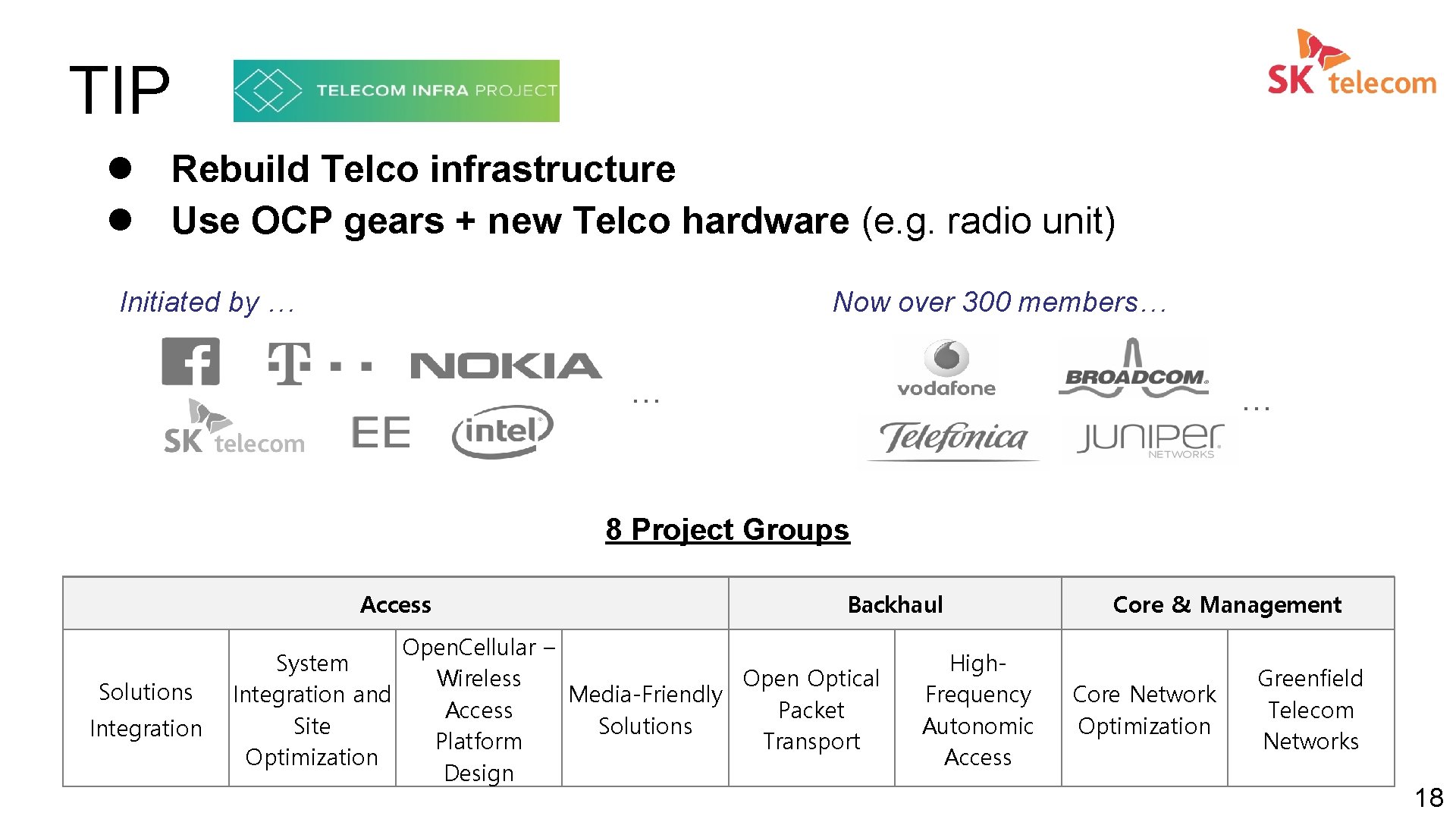

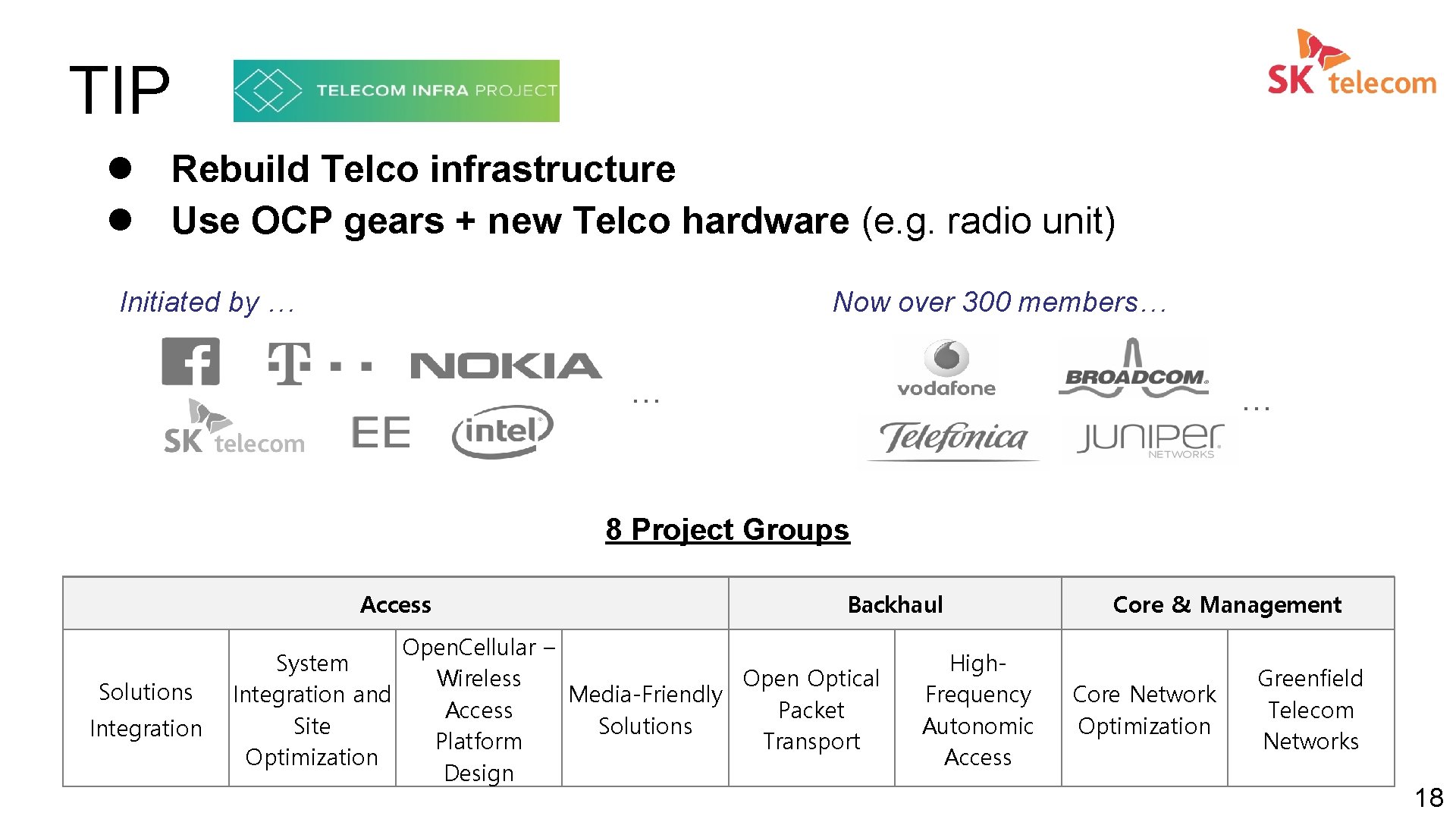

TIP
- Rebuild Telco infrastructure
- Use OCP gears + new Telco hardware (e.g. radio unit)
- Initiated by …
- Now over 300 members …
- 8 Project Groups
  - Access
    - Solutions Integration
    - OpenCellular – Wireless Access Platform Design
    - System Integration and Site Optimization
    - Media-Friendly Solutions
  - Backhaul
    - Open Optical Packet Transport
    - High Frequency Autonomic Access
  - Core & Management
    - Core Network Optimization
    - Greenfield Telecom Networks

Part 2: OCP Telco Project

Project Management
- Future Directions
  - Telco requirements for OCP hardware
  - New open hardware for Telco
  - OCP benefits for Telco
- Logistics
  - Monthly Calls
  - Contributions

Telco Requirements
- Objectives
  - Existing OCP hardware is designed for hyperscale data centers
  - Remove roadblocks to wide deployment of OCP gears in Telco
- New Projects
  - OCP hardware management solution
  - Environmental/physical requirements
- Actions
  - Announce first output at OCP Summit 2017
  - Coordinate with other OCP projects, such as Hardware Management

New Open Hardware
- Objectives
  - New hardware design for Telco workload
- On-going Projects
  - AT&T: OpenGPON, XGS-PON, XGS MicroOLT
  - Radisys: CG-OpenRack-19
  - SK Telecom: AF-Media & T-CAP (under review), NV-Array (under development)
- Actions
  - Identify requirements for new hardware for Telco workload (NFV, Disaggregated RAN, etc.)

OCP Benefits for Telco
- Objectives
  - Verify and realize OCP benefits for Telco
- Status
  - Telcos are testing OCP gears, but still at an initial stage
  - Hard to realize the full benefits (CAPEX & OPEX reduction)
- Actions
  - Share OCP experience
  - Conduct TCO analysis together (with external experts if necessary)
  - Cooperate as buyers for sourcing (ODM, new vendors)

OCP Hardware Management
This page is based on Verizon’s input.
- Background
  - OCP hardware should align with the existing processes and workforce
  - At the same time, processes and the workforce need to be modified to realize all the benefits of OCP
- Goal
  - OCP hardware should provide management software similar to commercial solutions from existing vendors
- Status
  - Identify the needs and requirements from Telco
  - Coordinate with OCP Hardware Management Project

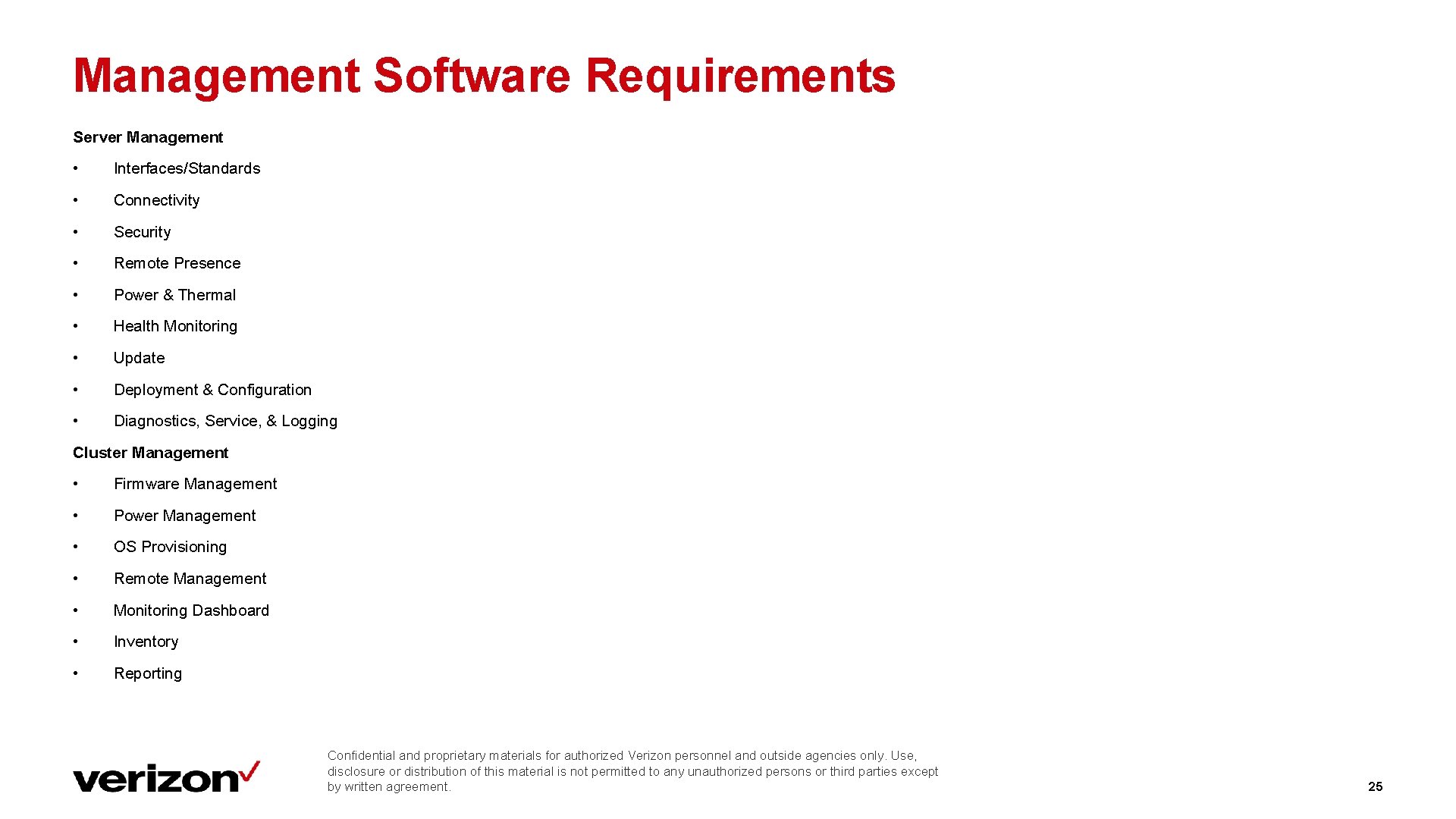

Management Software Requirements
- Server Management
  - Interfaces/Standards
  - Connectivity
  - Security
  - Remote Presence
  - Power & Thermal
  - Health Monitoring
  - Update
  - Deployment & Configuration
  - Diagnostics, Service, & Logging
- Cluster Management
  - Firmware Management
  - Power Management
  - OS Provisioning
  - Remote Management
  - Monitoring Dashboard
  - Inventory
  - Reporting

Environmental/Physical Requirements
This page is based on Verizon’s input.
- Background
  - Some telco facilities have additional environmental/physical requirements for hardware that are mandated by law or internal policy
  - OCP hardware cannot be used unless it satisfies the requirements or we find ways to meet the requirements
- Goal
  - Create a single, international requirement list
- Status
  - Identify the needs and requirements from Telco

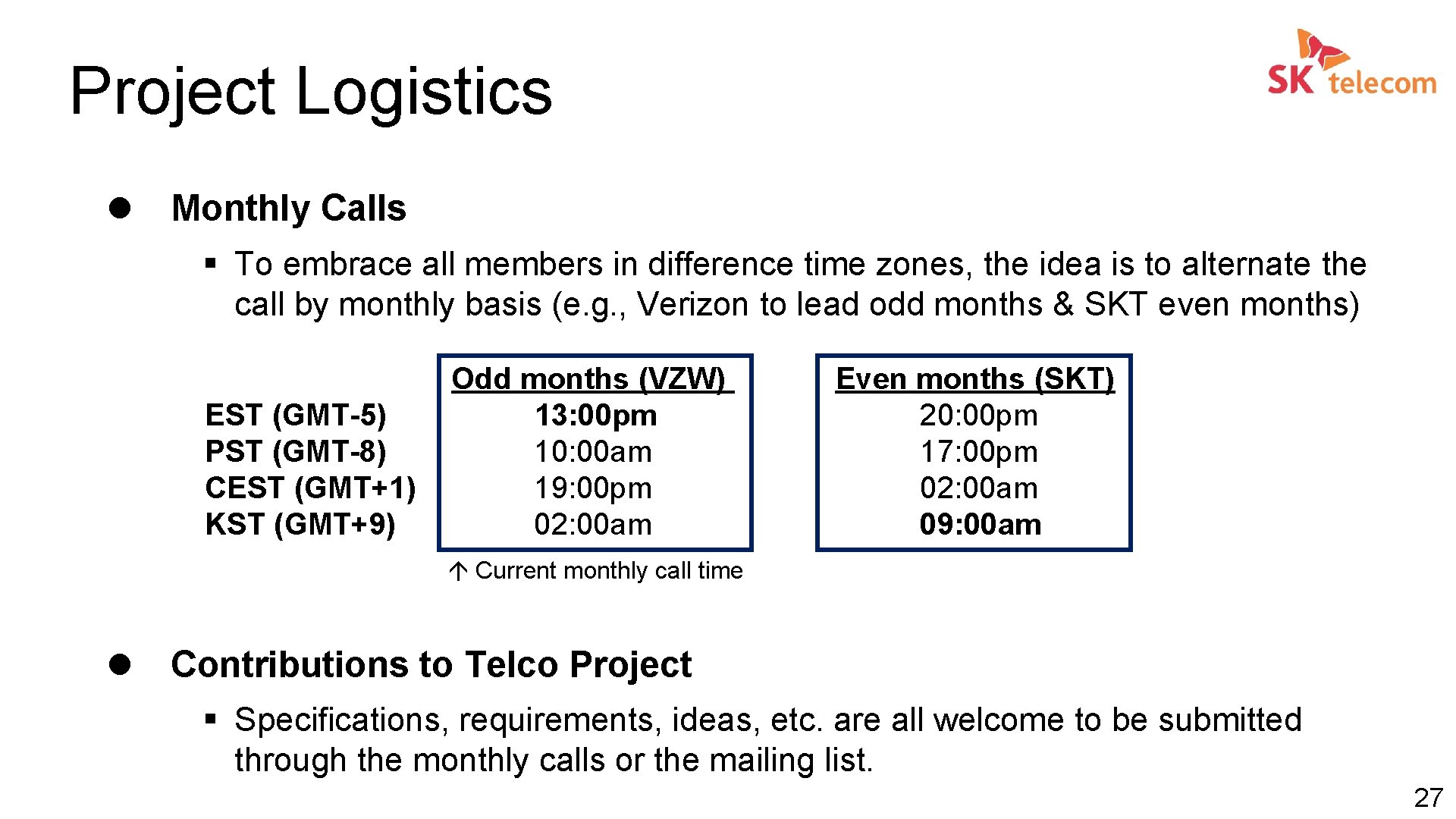

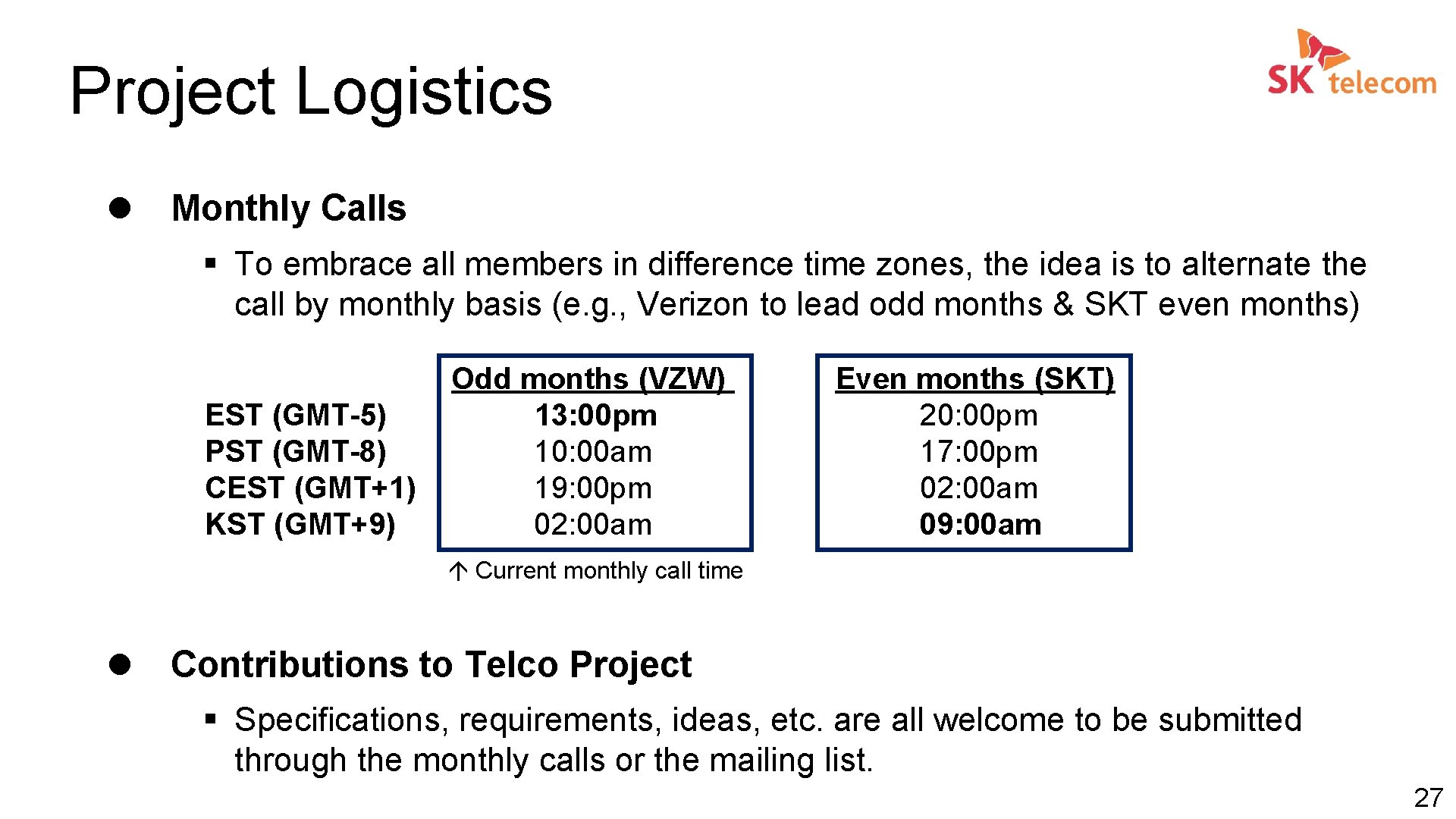

Project Logistics
- Monthly Calls
  - To accommodate members in different time zones, the calls alternate on a monthly basis (e.g., Verizon leads odd months and SKT leads even months)
  - Call times by time zone (odd months: VZW; even months: SKT):
    - EST (GMT-5): 13:00 (odd), 20:00 (even)
    - PST (GMT-8): 10:00 (odd), 17:00 (even)
    - CEST (GMT+1): 19:00 (odd), 02:00 (even)
    - KST (GMT+9): 09:00
  - The slide marks one of these as the current monthly call time
- Contributions to Telco Project
  - Specifications, requirements, ideas, etc. are all welcome and can be submitted through the monthly calls or the mailing list

Thank you