OCP Accelerator Module

Whitney Zhao, Hardware Engineer, Facebook
Siamak Tavallaei, Principal Architect, Microsoft (OCP Server Project co-Chair)

AI’s rapid evolution is producing an explosion of new types of hardware accelerators for ML and Deep Learning: GPU, FPGA, ASIC, NPU, TPU, NNP, xPU…

Different implementations target similar requirements!

Common Requirements
• Power & Cooling
• Robustness & Serviceability
• Configuration, Programming, & Management
• Inter-module Communication to Scale Up
• Input / Output Bandwidth to Scale Out

PCIe CEM Form Factor?

PCIe CEM Form Factor is not it!

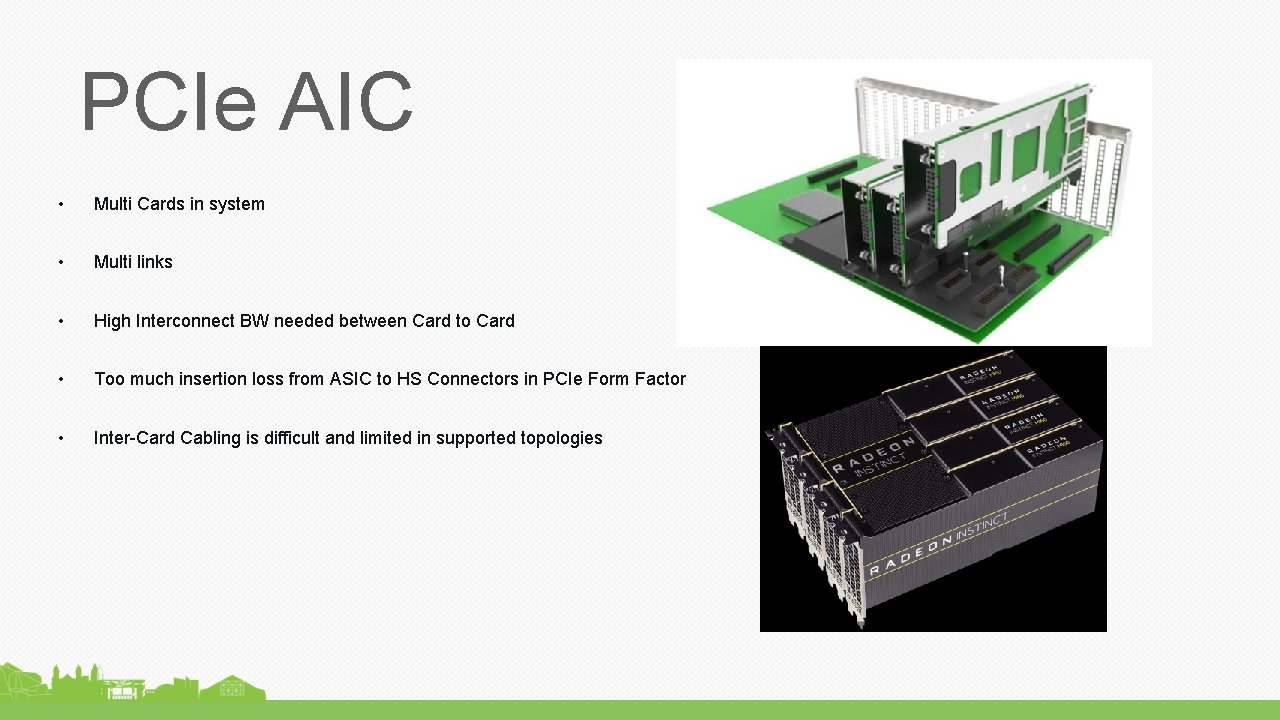

PCIe AIC
• Multiple cards in a system
• Multiple links
• High card-to-card interconnect bandwidth needed
• Too much insertion loss from the ASIC to the high-speed connectors in the PCIe form factor
• Inter-card cabling is difficult and limited in supported topologies

Mezzanine Module
• High-density connectors for input/output links
• Low-insertion-loss, high-speed interconnect
• Enough space for accelerators and associated local logic & power
• Flexible heatsink design for both air cooling & liquid cooling
• Flexible inter-module interconnect topologies
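
To make "flexible inter-module interconnect topologies" concrete, the sketch below checks whether a topology fits a per-module link budget. It assumes the budget from the module proposal (up to 8 x16 links per module, shared by host and interconnect) and, as a hypothetical simplification, reserves exactly one link for the host. The names `ring`, `fully_connected`, `peer_degree`, and `fits` are illustrative only, not part of any OCP specification.

```python
# Hypothetical link budget, based on the module proposal: up to 8 x16 links
# per module, shared between the host and module-to-module interconnect.
LINKS_PER_MODULE = 8
HOST_LINKS = 1  # assumption: one x16 link reserved for the host


def ring(n):
    """Return the undirected edges of an n-module ring (n >= 3)."""
    return {frozenset((i, (i + 1) % n)) for i in range(n)}


def fully_connected(n):
    """Return the undirected edges of an all-to-all topology."""
    return {frozenset((i, j)) for i in range(n) for j in range(i + 1, n)}


def peer_degree(edges, n):
    """Count how many module-to-module links each module needs."""
    degree = [0] * n
    for edge in edges:
        for module in edge:
            degree[module] += 1
    return degree


def fits(edges, n, links=LINKS_PER_MODULE, host=HOST_LINKS):
    """True if every module still has enough links after the host link."""
    return max(peer_degree(edges, n)) <= links - host


if __name__ == "__main__":
    n = 8
    for name, topo in (("ring", ring(n)), ("fully connected", fully_connected(n))):
        print(name, "peer links per module:", max(peer_degree(topo, n)),
              "fits:", fits(topo, n))
```

Under these assumptions, an 8-module all-to-all topology needs 7 peer links per module and just fits, while a 9-module all-to-all topology would need 8 and would not.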

Need Interoperable:
• Mezzanine Module (accelerators)
• Baseboard (interconnect topology)
• Tray (serviceability)
• Chassis (scale-out deployment)

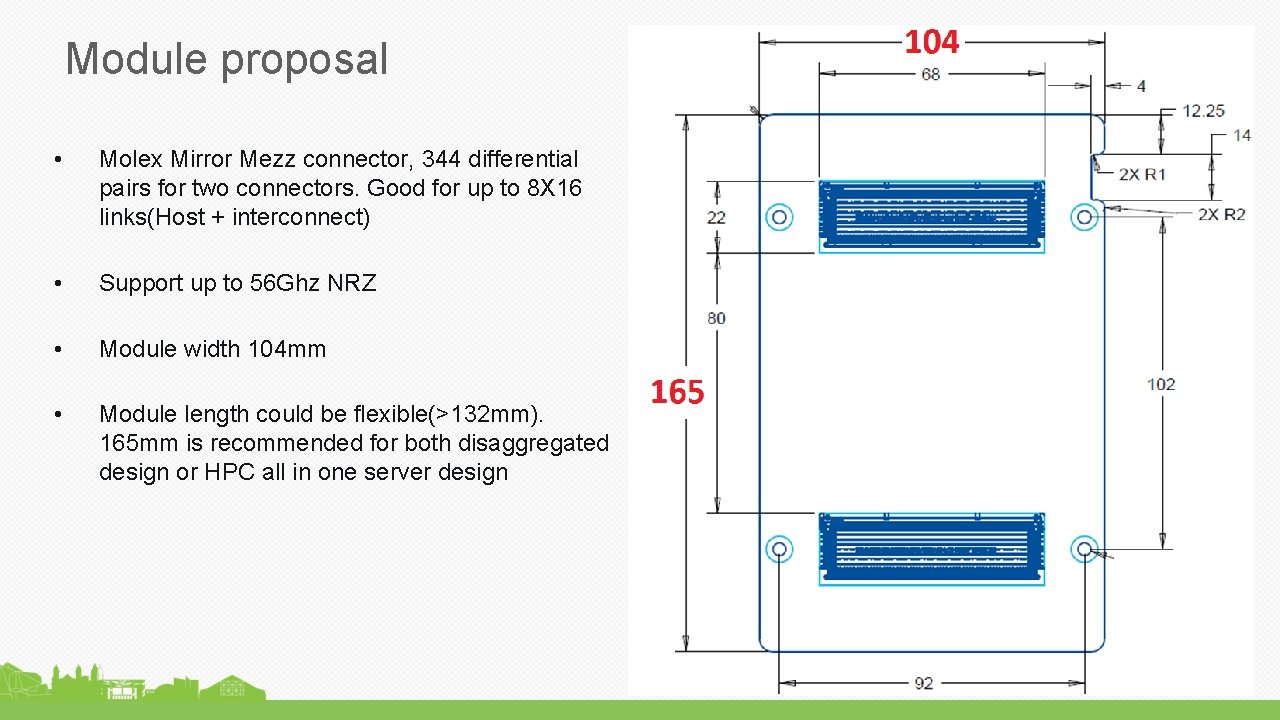

Module proposal
• Molex Mirror Mezz connectors: 344 differential pairs across two connectors, good for up to 8 x16 links (host + interconnect)
• Supports up to 56 GHz NRZ
• Module width: 104 mm
• Module length is flexible (>132 mm); 165 mm is recommended for both disaggregated designs and HPC all-in-one server designs
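
A quick budget check shows how 8 x16 links relate to the 344 available differential pairs. This is a minimal sketch assuming each lane uses one transmit pair and one receive pair, as in PCIe-style links; the slide does not say how the remaining pairs are allocated.

```python
TOTAL_PAIRS = 344     # two Mirror Mezz connectors, per the module proposal
LINKS = 8             # up to 8 links (host + interconnect)
LANES_PER_LINK = 16   # x16 links
PAIRS_PER_LANE = 2    # assumption: one transmit pair + one receive pair per lane


def pairs_needed(links=LINKS, lanes=LANES_PER_LINK, pairs_per_lane=PAIRS_PER_LANE):
    """Differential pairs consumed by the high-speed links alone."""
    return links * lanes * pairs_per_lane


needed = pairs_needed()
print(f"{needed} pairs needed, {TOTAL_PAIRS - needed} of {TOTAL_PAIRS} left")
# prints "256 pairs needed, 88 of 344 left"
```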

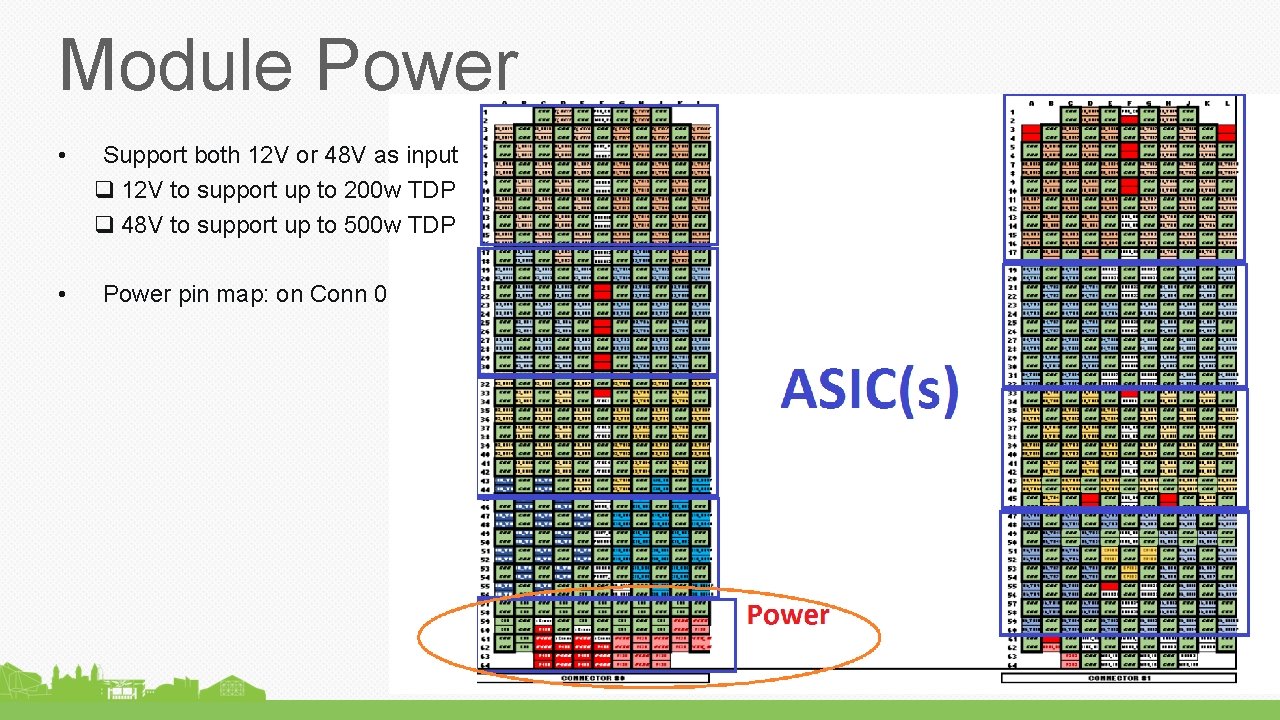

Module Power
• Supports either 12 V or 48 V as input
  - 12 V supports up to 200 W TDP
  - 48 V supports up to 500 W TDP
• Power pin map: on Conn 0
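
The input current at each rail's maximum TDP hints at why the higher-power option uses 48 V. This sketch applies I = P / V and, as a simplifying assumption, treats TDP as the module's input power while ignoring voltage-conversion losses.

```python
def input_current(tdp_watts, rail_volts):
    """Steady-state input current I = P / V (assumes TDP ~ input power, no losses)."""
    return tdp_watts / rail_volts


for volts, tdp in ((12, 200), (48, 500)):
    print(f"{volts} V rail at {tdp} W TDP: {input_current(tdp, volts):.1f} A")
# prints:
# 12 V rail at 200 W TDP: 16.7 A
# 48 V rail at 500 W TDP: 10.4 A
```

Even at 2.5 times the power, the 48 V input draws less current than the 12 V input, which reduces per-pin current and resistive (I²R) losses in the power path.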

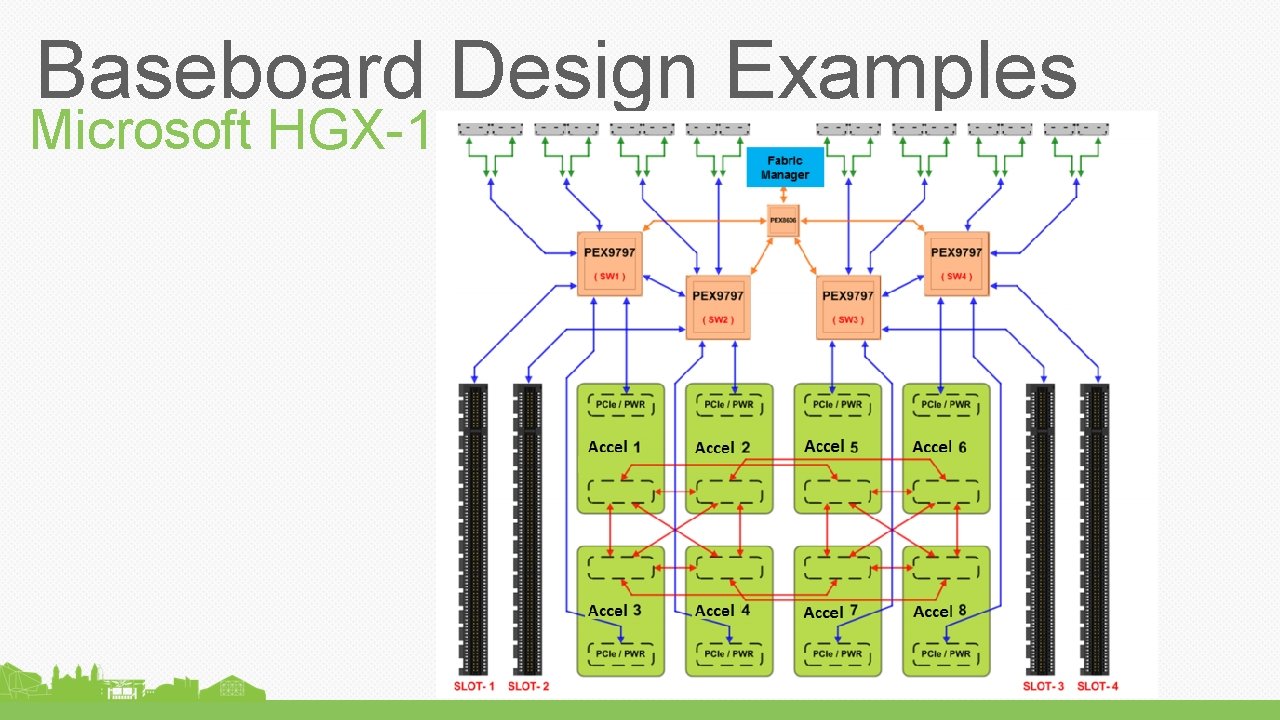

Baseboard Design Examples: Microsoft HGX-1

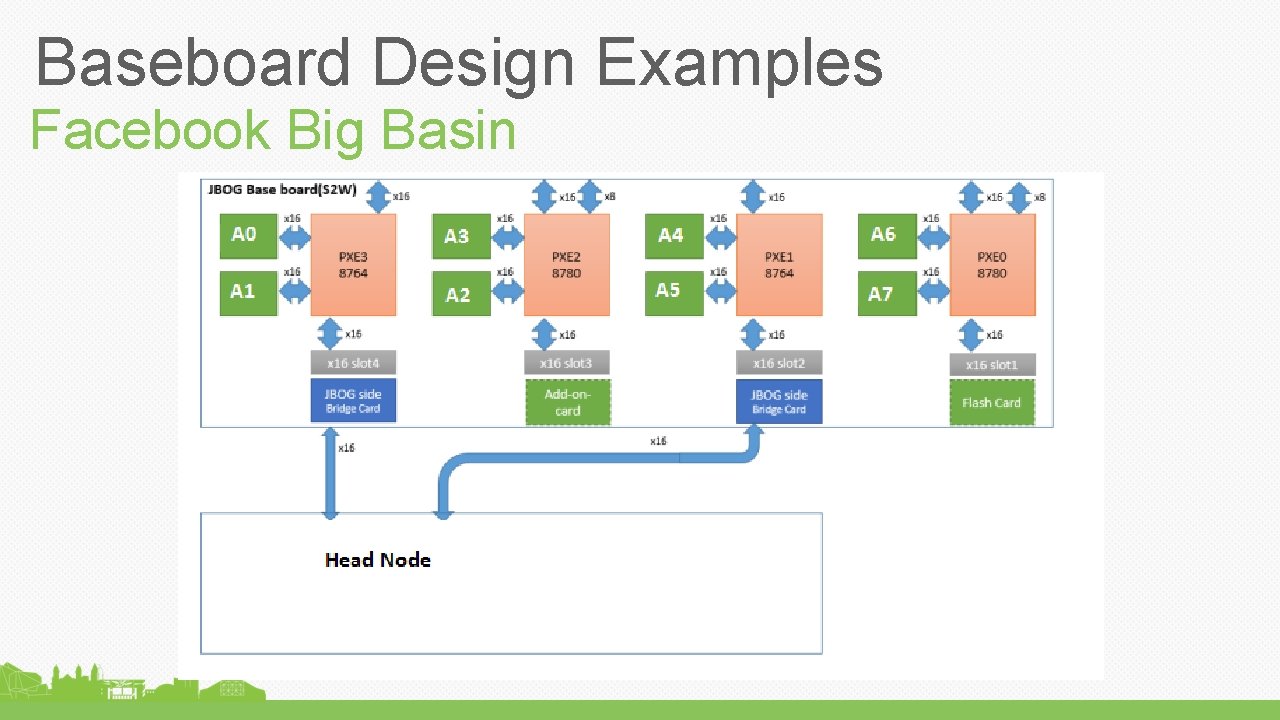

Baseboard Design Examples: Facebook Big Basin

Inviting feedback to build the requirement set

Participate & Collaborate at OCP Server Project

- Slides: 16