
Object detection, tracking and event recognition: the ETISEO experience. Andrea Cavallaro, Multimedia and Vision Lab, Queen Mary, University of London, andrea.cavallaro@elec.qmul.ac.uk

Outline • QMUL’s object tracking and event recognition • Change detection and object tracking • Event recognition • ETISEO • Evaluation: protocol, data, ground truth • Impact • Improvements of future evaluation campaigns • Conclusions • … and an advert


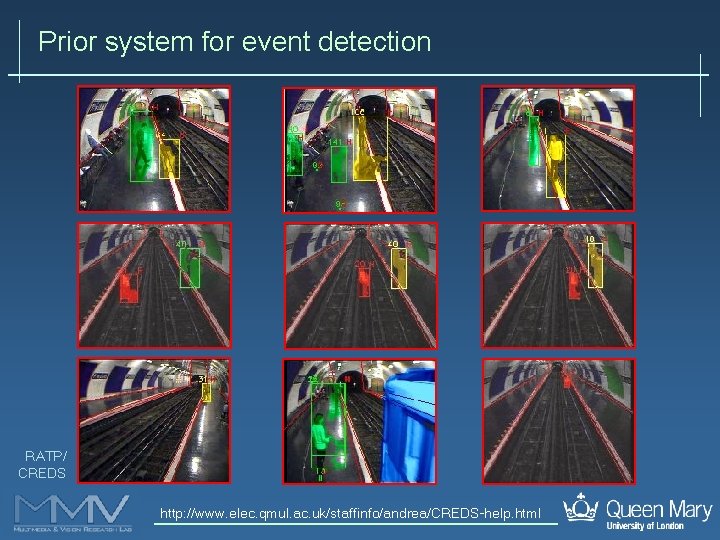

Prior system for event detection: RATP/CREDS, http://www.elec.qmul.ac.uk/staffinfo/andrea/CREDS-help.html

Introduction • QMUL Detection, Tracking, Event Recognition (Q-DTE) • Initially designed for event detection and tracking in metro stations • Modified to respond to ETISEO • Components: • Moving object detection: background subtraction with noise modelling • Object tracking: graph matching with a composite target distance based on multiple object features • Event recognition • M. Taj, E. Maggio, A. Cavallaro, “Multi-feature graph-based object tracking,” Proc. of CLEAR Workshop, LNCS 4122, 2006

Object detection and tracking • Change detection • Statistical change detection • Gaussians on colour components • Noise filtering • Contrast enhancement • Problem: data association after object detection • Appearance/disappearance of objects • False detections due to clutter and noisy observations

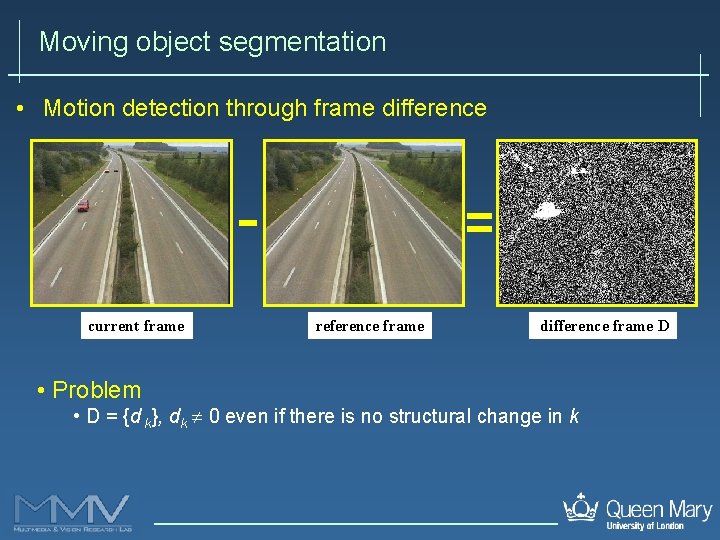

Moving object segmentation • Motion detection through frame difference: the difference frame $D$ is computed between the current frame and a reference frame • Problem: $D = \{d_k\}$ with $d_k \neq 0$ even if there is no structural change at pixel $k$ (e.g., because of camera noise)
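The problem stated above is easy to reproduce: a minimal sketch, assuming grey-level frames stored as lists of rows and additive Gaussian camera noise, shows that differencing two noisy views of a static scene gives non-zero $d_k$ almost everywhere. The helper names, the 4×4 synthetic background and the reuse of the slide's noise variance (1.8) as the per-frame noise level are illustrative assumptions, not part of Q-DTE.

```python
import math
import random

def difference_frame(current, reference):
    """Difference frame D = {d_k}, with d_k = current_k - reference_k for each pixel k."""
    return [[c - r for c, r in zip(row_c, row_r)]
            for row_c, row_r in zip(current, reference)]

def add_camera_noise(frame, sigma, rng):
    """Return a copy of a grey-level frame with i.i.d. N(0, sigma^2) noise added (hypothetical helper)."""
    return [[p + rng.gauss(0.0, sigma) for p in row] for row in frame]

# Hypothetical static scene: a uniform 4x4 background observed twice, nothing moves.
rng = random.Random(0)
sigma = math.sqrt(1.8)  # assumption: reuses the noise variance from the key parameters slide
background = [[100.0] * 4 for _ in range(4)]
D = difference_frame(add_camera_noise(background, sigma, rng),
                     add_camera_noise(background, sigma, rng))
nonzero = sum(1 for row in D for d in row if d != 0.0)
print(f"{nonzero} of 16 pixels have d_k != 0 although the scene is static")
```

This is why a fixed "$d_k \neq 0$ means change" rule cannot work and a noise-aware threshold is needed.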

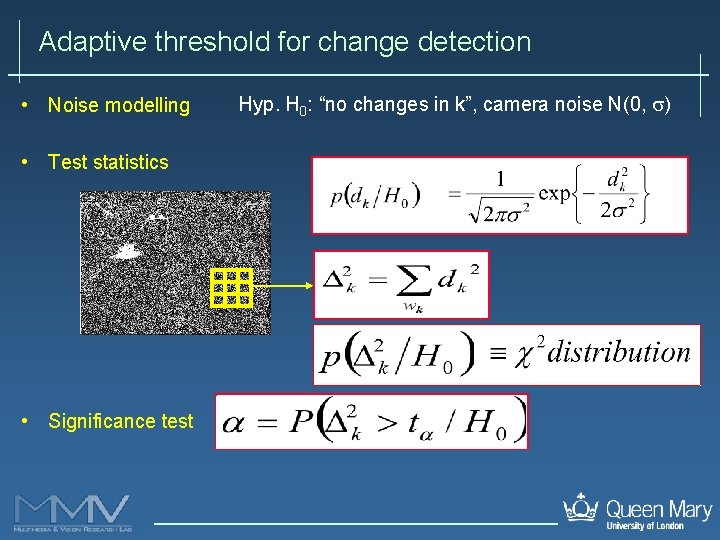

Adaptive threshold for change detection • Noise modelling • Test statistics • Significance test • Hypothesis $H_0$: “no change at pixel $k$”, camera noise $\mathcal{N}(0, \sigma^2)$
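A minimal sketch of such a significance test, assuming the classic formulation in which the statistic for pixel $k$ is the sum of squared differences over a window around $k$, normalised by the noise variance $\sigma^2$. Under $H_0$, with independent $\mathcal{N}(0, \sigma^2)$ values of $d_k$, this statistic follows a $\chi^2$ distribution with one degree of freedom per window pixel, so the threshold is a $\chi^2$ quantile. The 3×3 window and $\sigma^2 = 1.8$ come from the key parameters slide; the 5% significance level (threshold of about 16.92 for 9 degrees of freedom), interior-only border handling, the single grey-level channel and the function name are assumptions.

```python
def change_mask(D, sigma2=1.8, threshold=16.92, half=1):
    """Hypothetical helper: mark pixel k as changed when H0 is rejected.

    The statistic is sum(d_j^2 for j in window around k) / sigma2. Under H0
    (no change, i.i.d. N(0, sigma2) values of d_k) it is chi-square distributed
    with (2*half+1)**2 degrees of freedom; 16.92 is roughly the 95% quantile
    for a 3x3 window (9 degrees of freedom).
    Border pixels whose window would leave the image are left unchanged.
    """
    h, w = len(D), len(D[0])
    mask = [[False] * w for _ in range(h)]
    for y in range(half, h - half):
        for x in range(half, w - half):
            window_energy = sum(D[y + dy][x + dx] ** 2
                                for dy in range(-half, half + 1)
                                for dx in range(-half, half + 1))
            # Dividing by sigma2 makes the decision rule adapt to the noise level.
            mask[y][x] = window_energy / sigma2 > threshold
    return mask
```

Because the raw window energy is compared with $\sigma^2 t_\alpha$, a noisier camera (larger $\sigma^2$) automatically raises the effective threshold.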

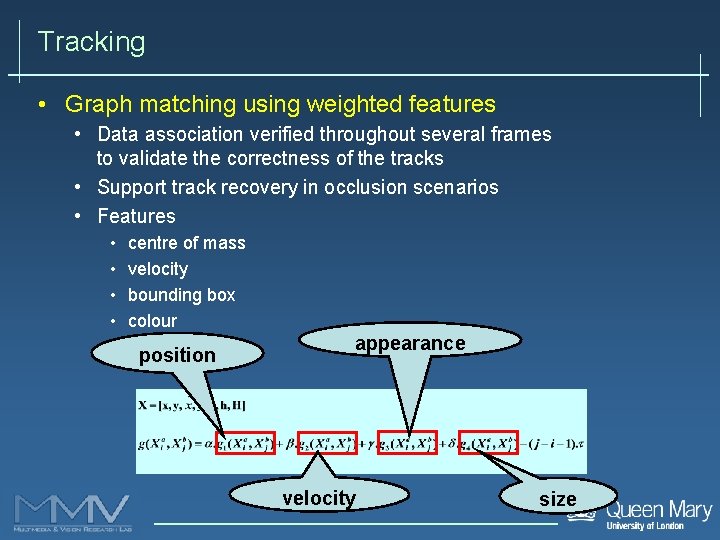

Tracking • Graph matching using weighted features • Data association verified over several frames to validate the correctness of the tracks • Supports track recovery in occlusion scenarios • Features: position (centre of mass), velocity, size (bounding box), appearance (colour)
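One way to combine the four features into a single composite target distance is a weighted sum with the weights listed on the key parameters slide (position α = 0.40, velocity β = 0.30, appearance γ = 0.15, size δ = 0.15). In this sketch the per-feature distances are assumptions, not the Q-DTE definitions: Euclidean distances divided by hypothetical normalising scales and clipped to [0, 1], and one minus histogram intersection for colour. The `Target` type and all parameter names are illustrative.

```python
import math
from dataclasses import dataclass
from typing import Sequence

@dataclass
class Target:
    position: tuple[float, float]   # centre of mass (pixels)
    velocity: tuple[float, float]   # displacement per frame (pixels)
    size: tuple[float, float]       # bounding box width, height (pixels)
    colour_hist: Sequence[float]    # normalised colour histogram (sums to 1)

# Feature weights alpha, beta, gamma, delta from the key parameters slide.
WEIGHTS = {"position": 0.40, "velocity": 0.30, "appearance": 0.15, "size": 0.15}

def _scaled(a, b, scale):
    """Euclidean distance divided by a normalising scale, clipped to [0, 1]."""
    return min(math.dist(a, b) / scale, 1.0)

def composite_distance(p: Target, q: Target,
                       pos_scale=50.0, vel_scale=10.0, size_scale=50.0):
    """Weighted sum of per-feature distances; 0 = identical, 1 = maximally different.

    The normalising scales are hypothetical and would need tuning per dataset.
    """
    d_pos = _scaled(p.position, q.position, pos_scale)
    d_vel = _scaled(p.velocity, q.velocity, vel_scale)
    d_size = _scaled(p.size, q.size, size_scale)
    # 1 - histogram intersection: 0 for identical histograms, 1 for disjoint ones.
    d_app = 1.0 - sum(min(a, b) for a, b in zip(p.colour_hist, q.colour_hist))
    return (WEIGHTS["position"] * d_pos + WEIGHTS["velocity"] * d_vel
            + WEIGHTS["appearance"] * d_app + WEIGHTS["size"] * d_size)
```

Since the weights sum to 1 and each term lies in [0, 1], the composite distance also lies in [0, 1], which makes it easy to turn into a matching gain such as 1 minus the distance.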

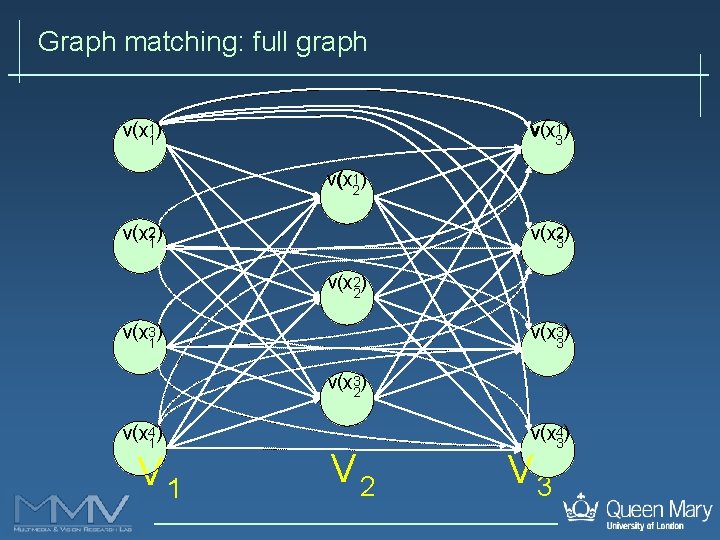

Graph matching: full graph [figure: vertices $v(x_{11}), \dots, v(x_{43})$ grouped into the vertex sets $V_1$, $V_2$, $V_3$]

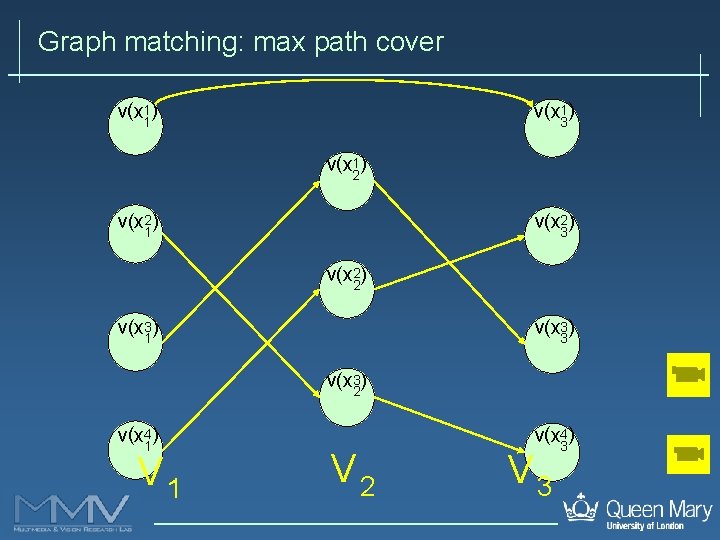

Graph matching: max path cover [figure: maximum path cover over the vertices $v(x_{11}), \dots, v(x_{43})$ in the vertex sets $V_1$, $V_2$, $V_3$]
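The max path cover step can be illustrated with a standard reduction: when every edge points forward in time, a set of edges in which each vertex has at most one outgoing and at most one incoming edge is a set of disjoint paths (tracks). A maximum-weight path cover is therefore a maximum-weight bipartite matching between the "outgoing" and "incoming" copies of the vertices, solved here with the Hungarian algorithm. This is a sketch, not the Q-DTE implementation: the function names, vertex labels and gain values (for instance 1 minus a composite distance) are hypothetical. Edges may skip frames, which is one way to bridge an occlusion.

```python
def hungarian(cost):
    """Minimum-cost assignment for an n x m matrix (n <= m), potentials method.

    Returns assignment[i] = column assigned to row i.
    """
    n, m = len(cost), len(cost[0])
    INF = float("inf")
    u, v = [0.0] * (n + 1), [0.0] * (m + 1)   # row / column potentials
    p, way = [0] * (m + 1), [0] * (m + 1)     # p[j]: row matched to column j (1-based)
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [INF] * (m + 1), [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], INF, 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:  # augment along the alternating path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    return assignment

def max_weight_path_cover(vertices, gains):
    """vertices: detection ids; gains: {(earlier, later): gain > 0}. Returns tracks."""
    index = {x: k for k, x in enumerate(vertices)}
    n = len(vertices)
    # Row = outgoing copy, column = incoming copy; 0 cost means "leave unlinked".
    cost = [[0.0] * n for _ in range(n)]
    for (a, b), g in gains.items():
        cost[index[a]][index[b]] = -g
    successor = {}
    for i, j in enumerate(hungarian(cost)):
        if cost[i][j] < 0:  # keep only real, positive-gain links
            successor[vertices[i]] = vertices[j]
    has_predecessor = set(successor.values())
    tracks = []
    for x in vertices:
        if x not in has_predecessor:  # every track starts at a vertex with no incoming link
            track = [x]
            while track[-1] in successor:
                track.append(successor[track[-1]])
            tracks.append(track)
    return tracks
```

With hypothetical detections A1, B1 (frame 1), A2, B2 (frame 2) and A3 (frame 3), strong A1→A2, B1→B2 and A2→A3 gains yield the tracks [A1, A2, A3] and [B1, B2]; an unmatched detection simply becomes a track of length one.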

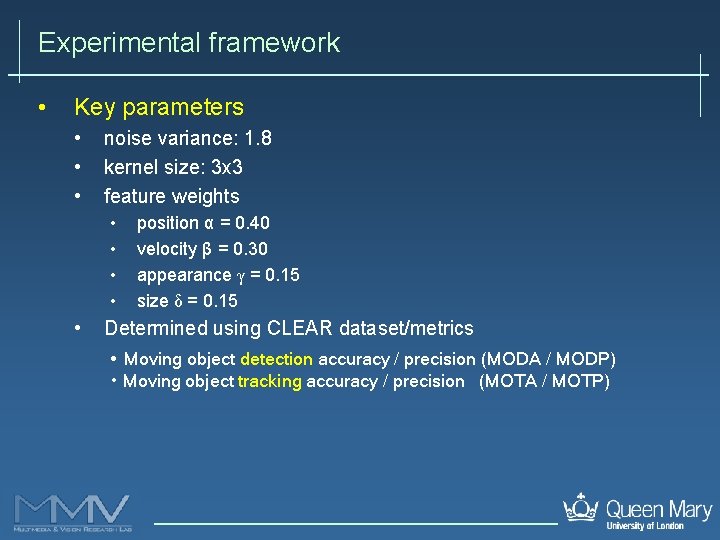

Experimental framework • Key parameters: • noise variance: 1.8 • kernel size: 3×3 • feature weights: position α = 0.40, velocity β = 0.30, appearance γ = 0.15, size δ = 0.15 • Determined using the CLEAR dataset/metrics: • Moving object detection accuracy / precision (MODA / MODP) • Moving object tracking accuracy / precision (MOTA / MOTP)
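For reference, a minimal sketch of the two accuracy scores, assuming the CLEAR MOT formulation: per-frame counts of misses, false positives and identity switches, normalised by the total number of ground-truth objects. The matching step that produces these counts and the precision scores (MODP / MOTP) are left out, and other formulations weight the error terms differently. The `FrameCounts` type and function names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class FrameCounts:
    ground_truth: int      # ground-truth objects in the frame
    misses: int            # ground-truth objects with no matching output
    false_positives: int   # output objects with no matching ground truth
    id_switches: int = 0   # matched objects whose track identity changed

def moda(frames):
    """Detection accuracy: 1 - (misses + false positives) / ground-truth objects."""
    gt = sum(f.ground_truth for f in frames)
    errors = sum(f.misses + f.false_positives for f in frames)
    return 1.0 - errors / gt

def mota(frames):
    """Tracking accuracy: same as MODA, but identity switches also count as errors."""
    gt = sum(f.ground_truth for f in frames)
    errors = sum(f.misses + f.false_positives + f.id_switches for f in frames)
    return 1.0 - errors / gt
```

Both scores can become negative when the number of errors exceeds the number of ground-truth objects, so a raw difference between two systems is easier to interpret next to a naïve baseline.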

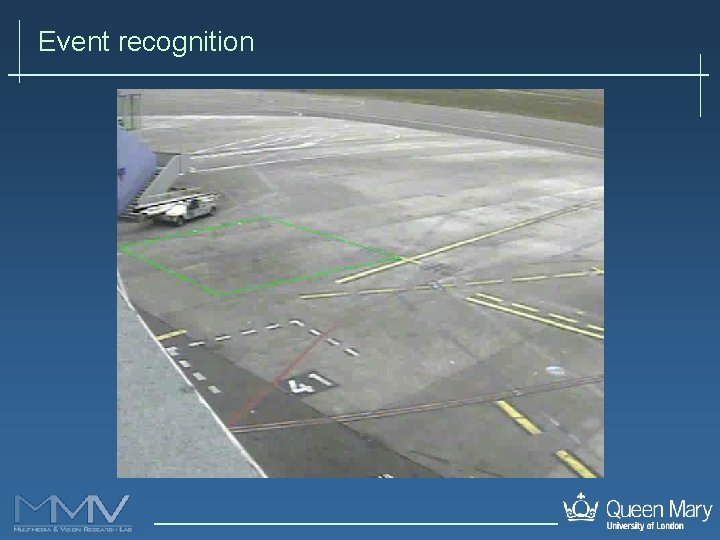

Event recognition



ETISEO • Impact • Promote evaluation • Formal and objective evaluation is (urgently) needed • Data collection and distribution • time-consuming! • common ground for research • Priority sequences • Use of an existing XML schema • Discussion forum • Choice of performance measures and experimental data is not obvious

Improvements • Involve stakeholders at earlier stages • More input from end users • what do they want / need? • costs / weights of errors • Involve (more) researchers from the beginning • Facilitate understanding of the protocol • Fix errors / ambiguities early • Use training/testing datasets • see i-LIDS and CLEAR • Maybe a private dataset too • Give meaning to measures • what is the “value” of these numbers? • e.g., compare with a naïve result • what is the “value” of a difference of (e.g.) 0.1?

Questions • Improvements of future evaluation campaigns • Are we evaluating too many things simultaneously? • Too many variables • Do we need so many measures? • remove redundant measures • Is the ground truth really “truth”? • statistical analysis / more annotators / confidence level • Should we distribute the evaluation tool / ground-truth earlier? • Are we happy with the current demarcation of regions / definition of events? • Do we want to evaluate all the event types together? • should we focus on subsets of events and move on progressively • Is the dataset too heterogeneous? • Can we generalize the results obtained so far?

Conclusions • QMUL submission • Statistical colour change detection • Multi-feature weighted graph matching • Event recognition module: evolution from CREDS 2005; next: extend to 3D • Feedback on ETISEO • Evaluation + discussion • Extend the community / do not duplicate efforts … • Metrics and an advert • More information: http://www.elec.qmul.ac.uk/staffinfo/andrea

IEEE International Conference on Advanced Video and Signal based Surveillance (IEEE AVSS 2007), London (UK), 5-7 September 2007. Paper submission: 28 February 2007

• Acknowledgments • Murtaza Taj • Emilio Maggio

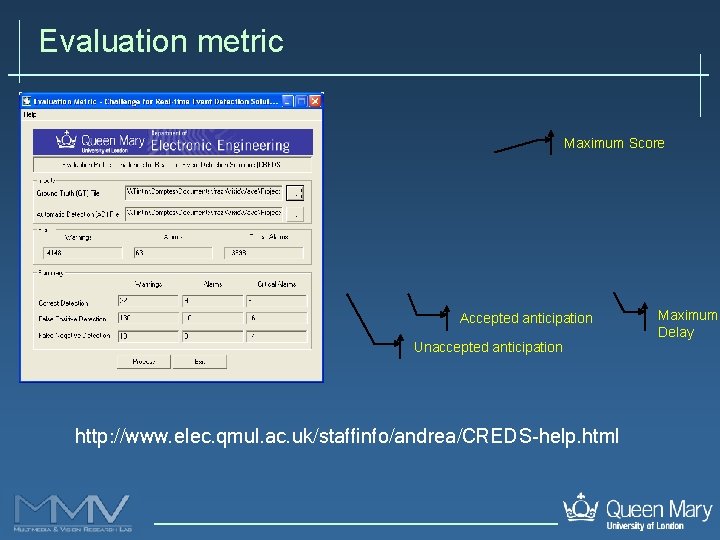

Evaluation metric [figure: score as a function of detection time, with labels Maximum Score, Accepted anticipation, Unaccepted anticipation, Maximum Delay]; details at http://www.elec.qmul.ac.uk/staffinfo/andrea/CREDS-help.html
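The labels of this evaluation metric (maximum score, accepted anticipation, unaccepted anticipation, maximum delay) suggest a score that depends on when an alarm is raised relative to the annotated event. The sketch below is a hypothetical piecewise-linear shape, not the CREDS definition (which is given at the linked page): full score inside the accepted anticipation window, a linear decay up to the maximum delay, and zero for unaccepted anticipation or excessive delay. All parameter values are assumptions.

```python
def event_score(t, max_score=1.0, accepted_anticipation=2.0, max_delay=4.0):
    """Hypothetical score for an alarm raised at time t (seconds), event at t = 0."""
    if -accepted_anticipation <= t <= 0.0:
        return max_score                           # early, but within the accepted window
    if 0.0 < t <= max_delay:
        return max_score * (1.0 - t / max_delay)   # late: score decays linearly to 0
    return 0.0                                     # unaccepted anticipation or too late
```

A linear decay is only one choice; any monotonically decreasing function between the event time and the maximum delay would express the same preference for prompt detection.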
