NXgraph: An Efficient Graph Processing System on a Single Machine

- Slides: 24

NXgraph: An Efficient Graph Processing System on a Single Machine

Yuze Chi¹, Guohao Dai¹, Yu Wang¹, Guangyu Sun², Guoliang Li¹ and Huazhong Yang¹

¹ Tsinghua National Laboratory for Information Science and Technology, Tsinghua University
² Center for Energy Efficient Computing and Applications, Peking University

Motivation
• Big Data Structure
• Big Graph: social media, science, advertising, web
2021/6/15

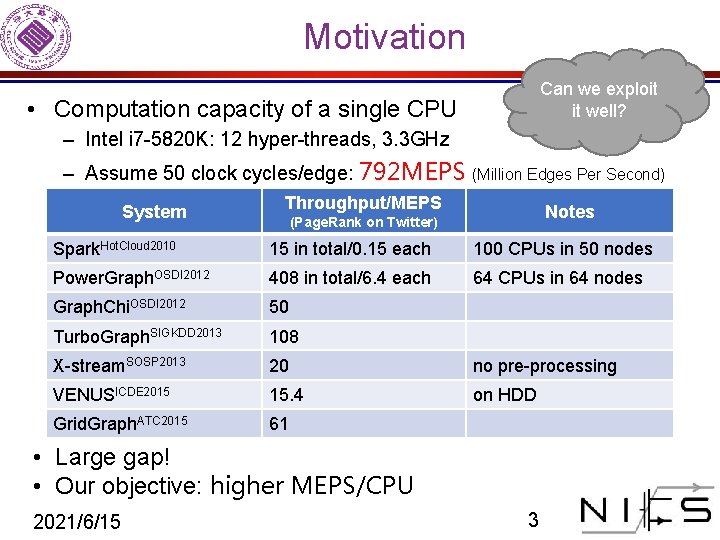

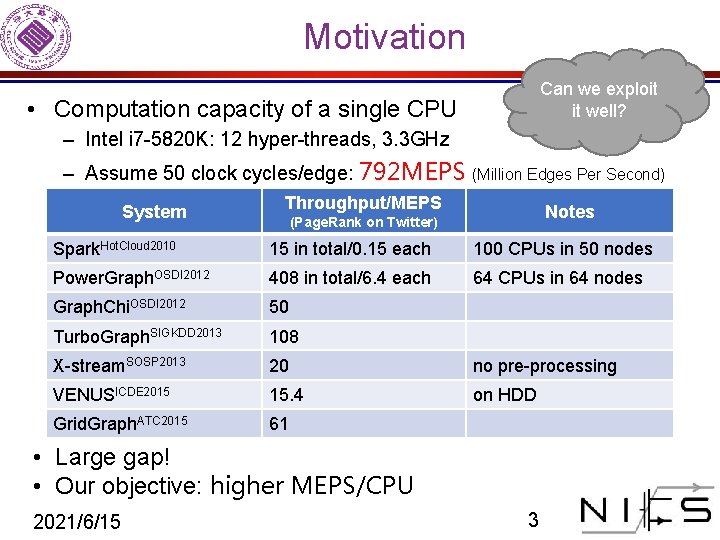

Motivation: Can we exploit it well?
• Computation capacity of a single CPU
– Intel i7-5820K: 12 hyper-threads, 3.3 GHz
– Assume 50 clock cycles/edge: 792 MEPS (Million Edges Per Second)

Throughput of existing systems (PageRank on Twitter):

| System | Venue | Throughput (MEPS) | Notes |
|---|---|---|---|
| Spark | HotCloud 2010 | 15 in total / 0.15 each | 100 CPUs in 50 nodes |
| PowerGraph | OSDI 2012 | 408 in total / 6.4 each | 64 CPUs in 64 nodes |
| GraphChi | OSDI 2012 | 50 | |
| TurboGraph | SIGKDD 2013 | 108 | |
| X-stream | SOSP 2013 | 20 | no pre-processing |
| VENUS | ICDE 2015 | 15.4 | on HDD |
| GridGraph | ATC 2015 | 61 | |

• Large gap!
• Our objective: higher MEPS/CPU
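
The 792 MEPS figure follows from the numbers on this slide: 12 hyper-threads at 3.3 GHz, with an assumed cost of 50 clock cycles per edge. A minimal sketch of that arithmetic (the function and parameter names are illustrative, not part of NXgraph):

```python
def hypothetical_meps(threads: int, clock_hz: float, cycles_per_edge: int) -> float:
    """Upper-bound throughput in Million Edges Per Second (MEPS)."""
    edges_per_second = threads * clock_hz / cycles_per_edge
    return edges_per_second / 1e6


# Intel i7-5820K figures quoted on the slide.
limit = hypothetical_meps(threads=12, clock_hz=3.3e9, cycles_per_edge=50)
print(f"{limit:.0f} MEPS")
```

The bound ignores memory and disk stalls, which is why it is treated as a hypothetical limit.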

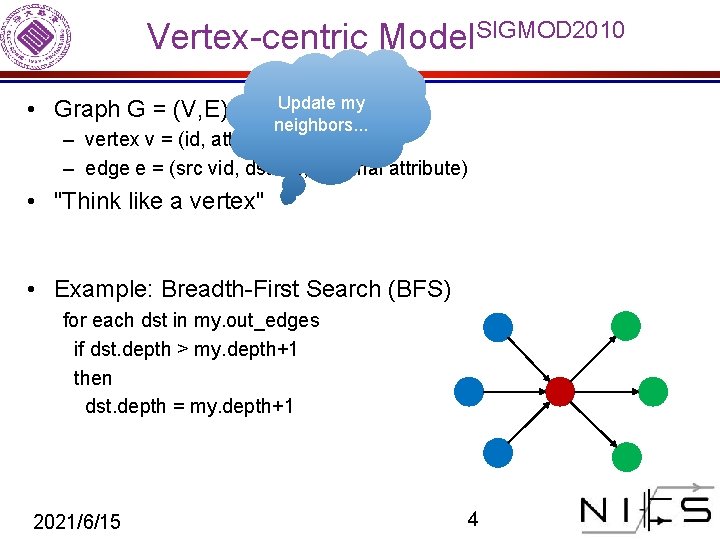

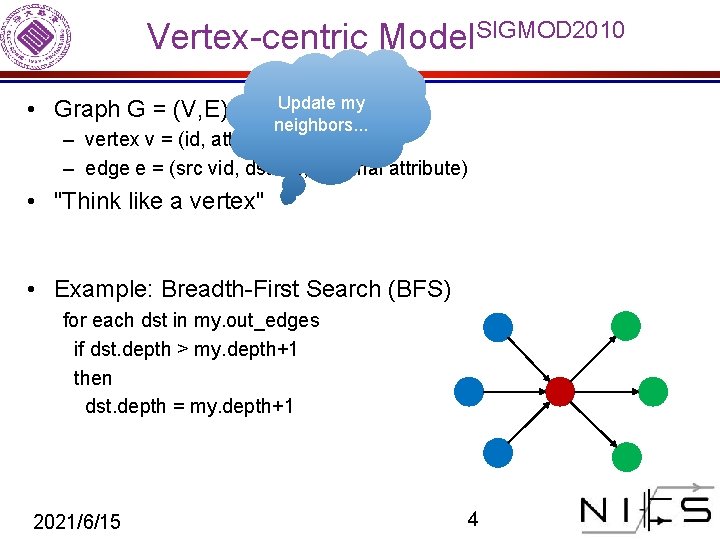

Vertex-centric Model (SIGMOD 2010)
• Graph G = (V, E)
– vertex v = (id, attribute)
– edge e = (src vid, dst vid, optional attribute)
• "Think like a vertex": update my neighbors...
• Example: Breadth-First Search (BFS)
    for each dst in my.out_edges
        if dst.depth > my.depth + 1 then
            dst.depth = my.depth + 1
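
The BFS rule above can be made runnable as a small sequential sketch. The driver loop (repeat until no vertex changes a neighbor), the adjacency-list layout and the function name are assumptions for illustration; the slide only gives the per-vertex update. The sample edges are the seven edges used in the partitioning examples of this deck.

```python
import math


def bfs_depths(num_vertices, edges, root):
    """Vertex-centric BFS: each reached vertex pushes depth + 1 to its out-neighbors."""
    out_edges = [[] for _ in range(num_vertices)]
    for src, dst in edges:
        out_edges[src].append(dst)

    depth = [math.inf] * num_vertices
    depth[root] = 0

    changed = True
    while changed:                       # stop once an iteration updates nothing
        changed = False
        for me in range(num_vertices):   # "think like a vertex"
            if depth[me] == math.inf:
                continue                 # unreached vertices have nothing to propagate
            for dst in out_edges[me]:
                if depth[dst] > depth[me] + 1:
                    depth[dst] = depth[me] + 1
                    changed = True
    return depth


edges = [(3, 0), (2, 1), (3, 1), (1, 2), (3, 2), (0, 3), (1, 3)]
print(bfs_depths(4, edges, root=0))
```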

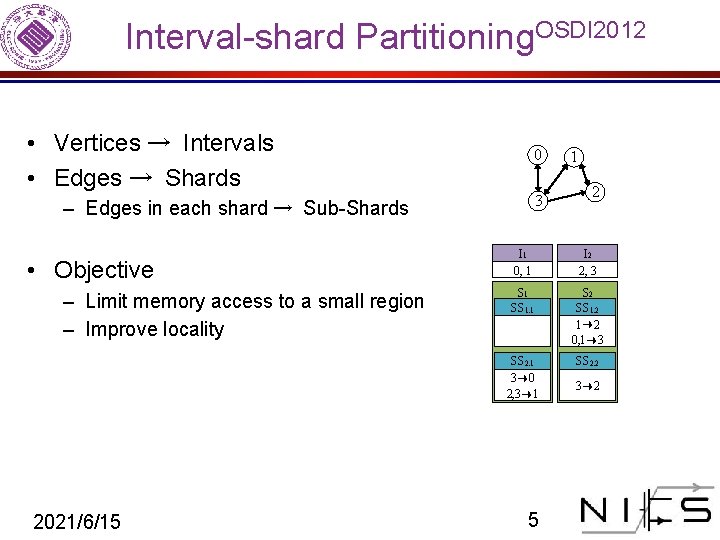

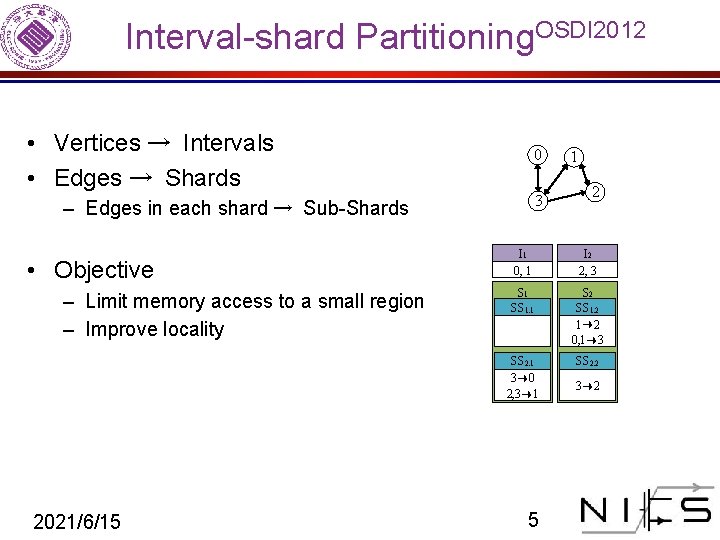

Interval-shard Partitioning (OSDI 2012)
• Vertices → Intervals
• Edges → Shards
– Edges in each shard → Sub-Shards
• Objective
– Limit memory access to a small region
– Improve locality
• Example (vertices 0 to 3): intervals I1 = {0, 1}, I2 = {2, 3}; shards S1, S2
– SS1.1: no edges shown
– SS1.2: 1→2, 0→3, 1→3
– SS2.1: 3→0, 2→1, 3→1
– SS2.2: 3→2
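
A minimal sketch of this partitioning on the slide's four-vertex example. It assumes equal-sized contiguous intervals and reads sub-shard SSi.j as "source in interval i, destination in interval j", which is inferred from the edges listed in the example rather than stated on the slide; the function name is hypothetical.

```python
from collections import defaultdict


def partition(num_vertices, num_intervals, edges):
    """Group edges into sub-shards keyed by (source interval, destination interval).

    Assumption: intervals are contiguous, equal-sized and numbered from 1.
    """
    size = -(-num_vertices // num_intervals)      # ceiling division

    def interval_of(vertex):
        return vertex // size + 1

    sub_shards = defaultdict(list)
    for src, dst in edges:
        sub_shards[(interval_of(src), interval_of(dst))].append((src, dst))
    return dict(sub_shards)


edges = [(1, 2), (0, 3), (1, 3), (3, 0), (2, 1), (3, 1), (3, 2)]
for key, shard_edges in sorted(partition(4, 2, edges).items()):
    print(key, shard_edges)
```

Under this reading, a shard is the set of sub-shards that share a destination interval, so an update to one destination interval only touches a bounded region of memory.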

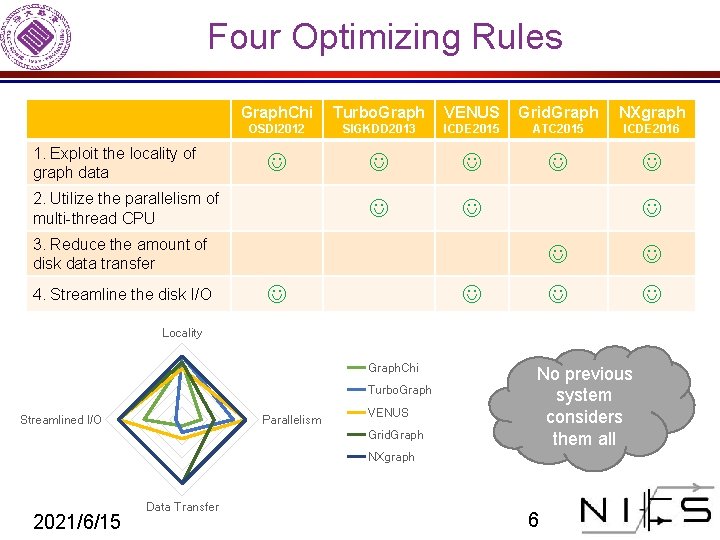

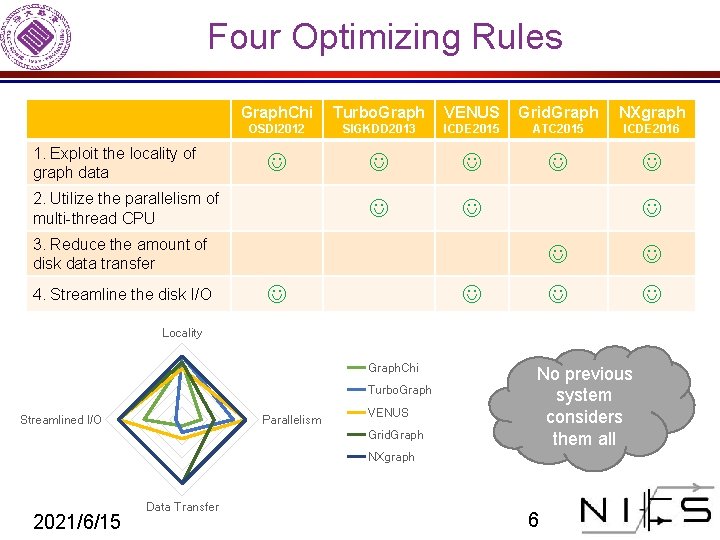

Four Optimizing Rules
1. Exploit the locality of graph data
2. Utilize the parallelism of multi-thread CPU
3. Reduce the amount of disk data transfer
4. Streamline the disk I/O
• Systems: GraphChi (OSDI 2012), TurboGraph (SIGKDD 2013), VENUS (ICDE 2015), GridGraph (ATC 2015), NXgraph (ICDE 2016)
• No previous system considers them all
Figure: coverage of the four rules (locality, parallelism, data transfer, streamlined I/O) by GraphChi, TurboGraph, VENUS, GridGraph and NXgraph

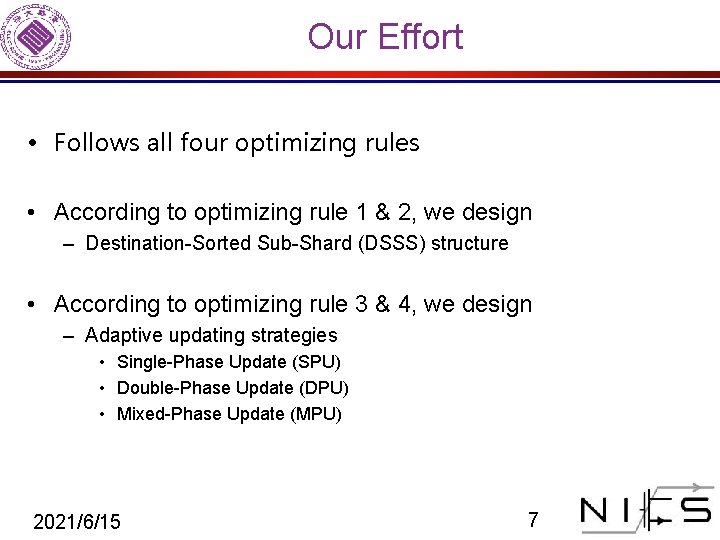

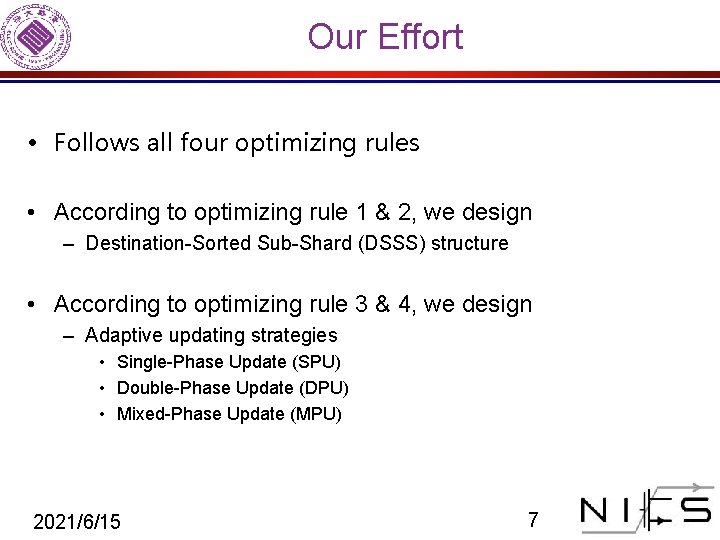

Our Effort
• Follows all four optimizing rules
• Following optimizing rules 1 & 2, we design
– Destination-Sorted Sub-Shard (DSSS) structure
• Following optimizing rules 3 & 4, we design
– Adaptive updating strategies
  • Single-Phase Update (SPU)
  • Double-Phase Update (DPU)
  • Mixed-Phase Update (MPU)

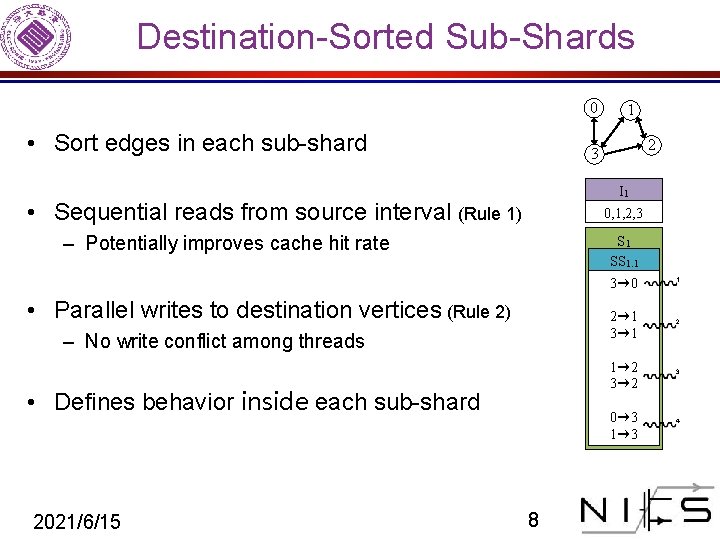

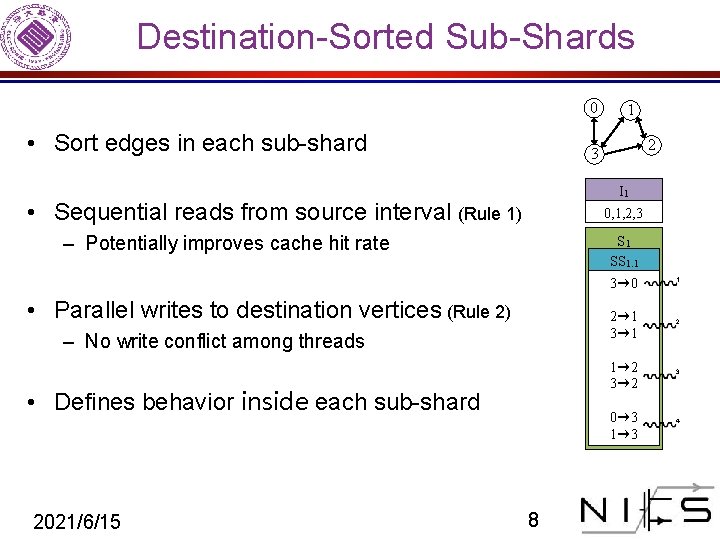

Destination-Sorted Sub-Shards
• Sort edges in each sub-shard
• Sequential reads from source interval (Rule 1)
– Potentially improves cache hit rate
• Parallel writes to destination vertices (Rule 2)
– No write conflict among threads
• Defines behavior inside each sub-shard
• Example (single interval I1 = {0, 1, 2, 3}, shard S1, sub-shard SS1.1), edges in destination order: 3→0; 2→1, 3→1; 1→2, 3→2; 0→3, 1→3
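
A small sketch of why destination sorting removes write conflicts: once edges are ordered by destination, the list can be cut at destination boundaries so that every destination vertex belongs to exactly one worker. The contiguous chunking policy and both function names are assumptions for illustration; the slide does not describe how NXgraph schedules threads.

```python
from itertools import groupby


def destination_sorted(edges):
    """Sort a sub-shard's edges by destination (ties broken by source)."""
    return sorted(edges, key=lambda edge: (edge[1], edge[0]))


def split_by_destination(sorted_edges, num_workers):
    """Give each worker a contiguous run of whole destination groups.

    No destination appears in two chunks, so workers never write the same vertex.
    """
    groups = [list(g) for _, g in groupby(sorted_edges, key=lambda edge: edge[1])]
    chunks = [[] for _ in range(num_workers)]
    for k, group in enumerate(groups):
        chunks[k * num_workers // len(groups)].extend(group)
    return chunks


edges = [(1, 2), (0, 3), (1, 3), (3, 0), (2, 1), (3, 1), (3, 2)]
ordered = destination_sorted(edges)
for worker, chunk in enumerate(split_by_destination(ordered, 4), start=1):
    print(worker, chunk)
```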

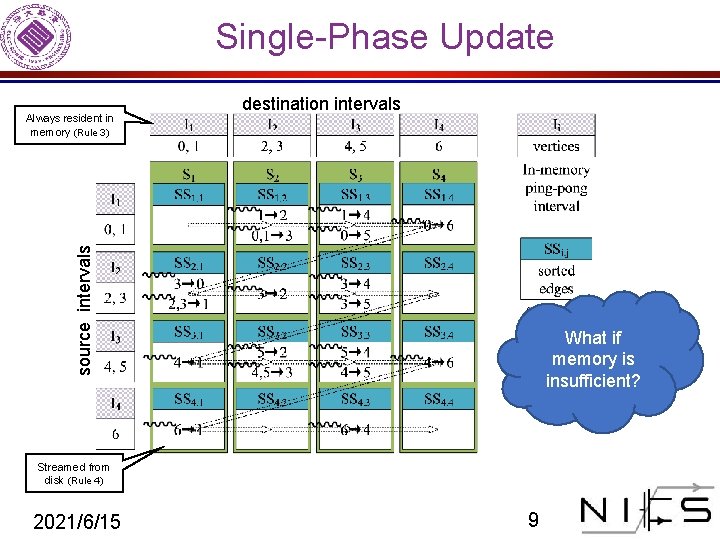

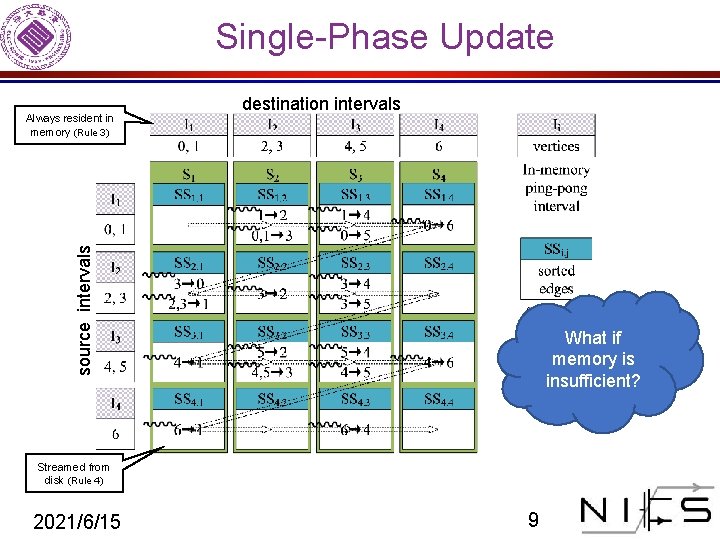

Single-Phase Update
Figure annotations: destination intervals; source intervals; always resident in memory (Rule 3); streamed from disk (Rule 4)
• What if memory is insufficient?

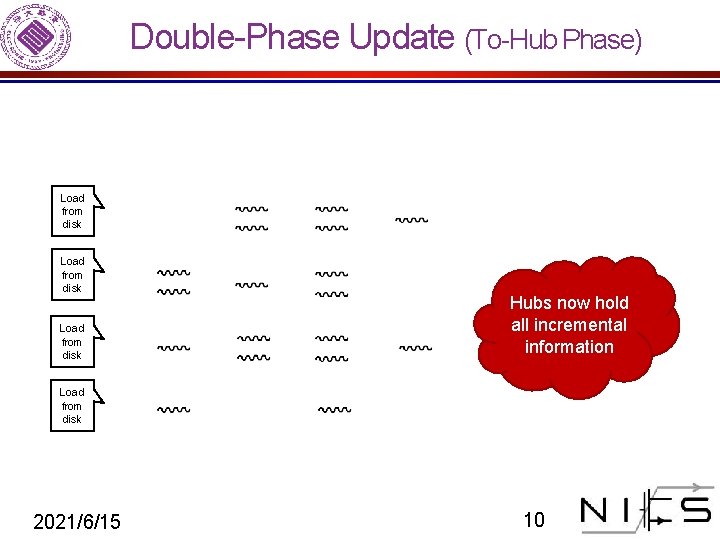

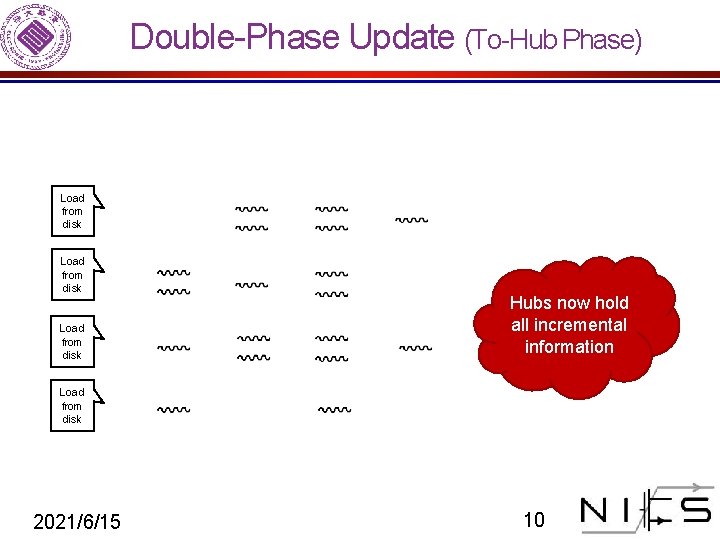

Double-Phase Update (To-Hub Phase)
• Load from disk
• Hubs now hold all incremental information

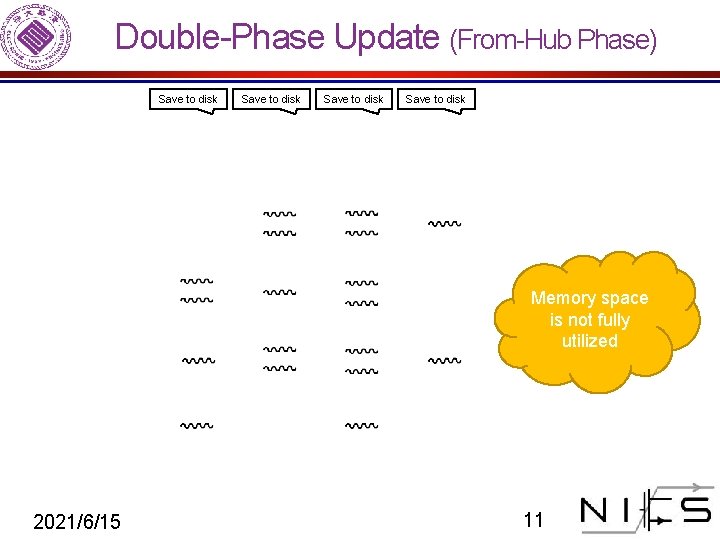

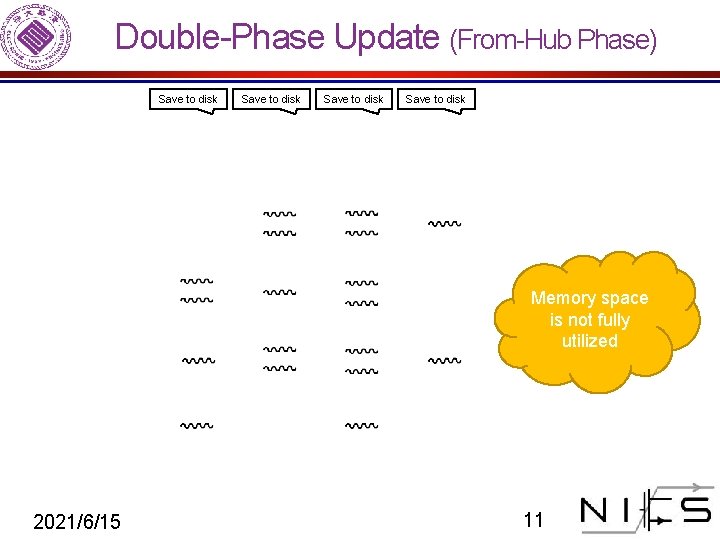

Double-Phase Update (From-Hub Phase)
• Save to disk
• Memory space is not fully utilized
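
The two phases can be illustrated with a toy, in-memory sketch. The slides only name the phases and state that hubs hold the incremental information after the to-hub phase; everything else here is an assumption: one hub per sub-shard, summation as the accumulation operator, plain dictionaries standing in for disk, and the function name itself.

```python
from collections import defaultdict


def double_phase_update(attrs, intervals, sub_shards):
    """One illustrative DPU iteration with sum as the (assumed) accumulator.

    attrs:      {vertex: value}, standing in for interval data on disk
    intervals:  {interval_id: [vertices]}
    sub_shards: {(src_interval, dst_interval): [(src, dst), ...]}
    """
    # To-hub phase: load one source interval at a time and push its
    # contributions into the hub of every sub-shard that reads from it.
    hubs = {}
    for i, vertices in intervals.items():
        loaded = {v: attrs[v] for v in vertices}        # "load from disk"
        for (src_iv, dst_iv), edges in sub_shards.items():
            if src_iv != i:
                continue
            hub = defaultdict(float)
            for src, dst in edges:
                hub[dst] += loaded[src]
            hubs[(src_iv, dst_iv)] = hub

    # From-hub phase: for each destination interval, merge the hubs that
    # target it and write the new values back.
    new_attrs = {}
    for j, vertices in intervals.items():
        merged = {v: 0.0 for v in vertices}
        for (_, dst_iv), hub in hubs.items():
            if dst_iv == j:
                for dst, increment in hub.items():
                    merged[dst] += increment
        new_attrs.update(merged)                        # "save to disk"
    return new_attrs


intervals = {1: [0, 1], 2: [2, 3]}
sub_shards = {
    (1, 2): [(1, 2), (0, 3), (1, 3)],
    (2, 1): [(3, 0), (2, 1), (3, 1)],
    (2, 2): [(3, 2)],
}
print(double_phase_update({0: 1.0, 1: 2.0, 2: 4.0, 3: 8.0}, intervals, sub_shards))
```

In this sketch only one interval's values are held at a time in each phase, which mirrors the slide's remark that memory space is not fully utilized.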

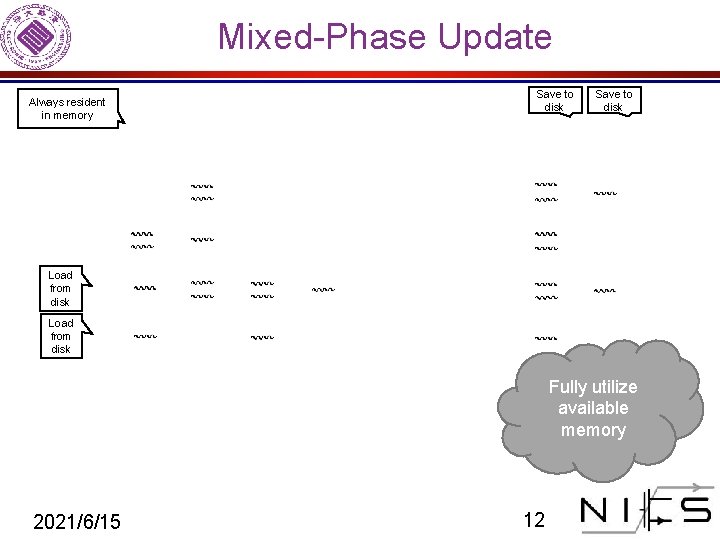

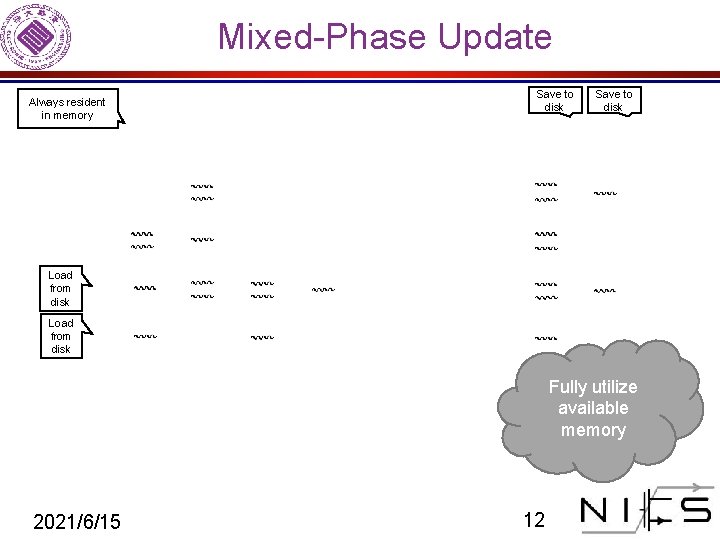

Mixed-Phase Update
• Always resident in memory
• Save to disk
• Load from disk
• Fully utilize available memory

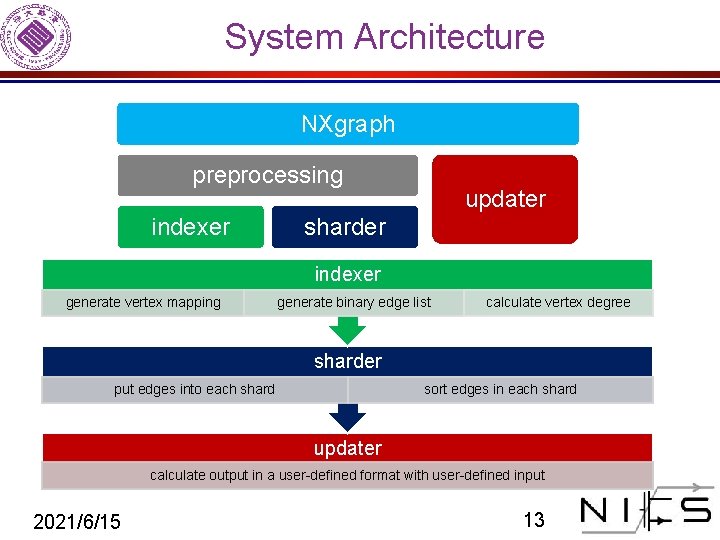

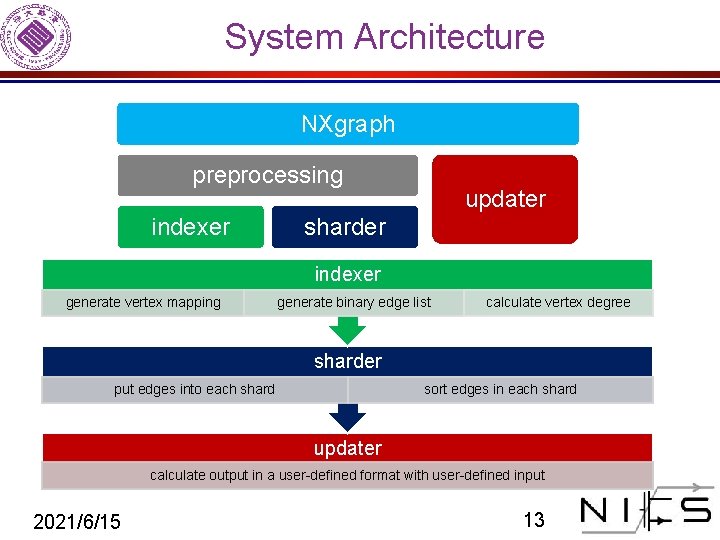

System Architecture
• NXgraph components: preprocessing (indexer, sharder) and updater
• indexer
– generate vertex mapping
– generate binary edge list
– calculate vertex degree
• sharder
– put edges into each shard
– sort edges in each shard
• updater
– calculate output in a user-defined format with user-defined input
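
The three indexer steps can be sketched in a few lines. This is an illustration only: the dense id assignment in order of first appearance, the two little-endian 32-bit integers per edge, and the use of out-degree are all assumptions, since the slide does not specify NXgraph's actual mapping or file format.

```python
import struct


def index_edges(raw_edges):
    """Map arbitrary vertex labels to dense ids, pack edges, count out-degrees."""
    vertex_id = {}          # vertex mapping: original label -> dense id
    out_degree = []         # out_degree[id] = number of outgoing edges
    packed = bytearray()    # binary edge list

    for src_label, dst_label in raw_edges:
        ids = []
        for label in (src_label, dst_label):
            if label not in vertex_id:
                vertex_id[label] = len(vertex_id)
                out_degree.append(0)
            ids.append(vertex_id[label])
        out_degree[ids[0]] += 1
        packed += struct.pack("<II", *ids)   # assumed layout: two uint32 per edge
    return vertex_id, bytes(packed), out_degree


mapping, binary_edges, degrees = index_edges([("a", "b"), ("b", "c"), ("a", "c")])
print(mapping, len(binary_edges), degrees)
```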

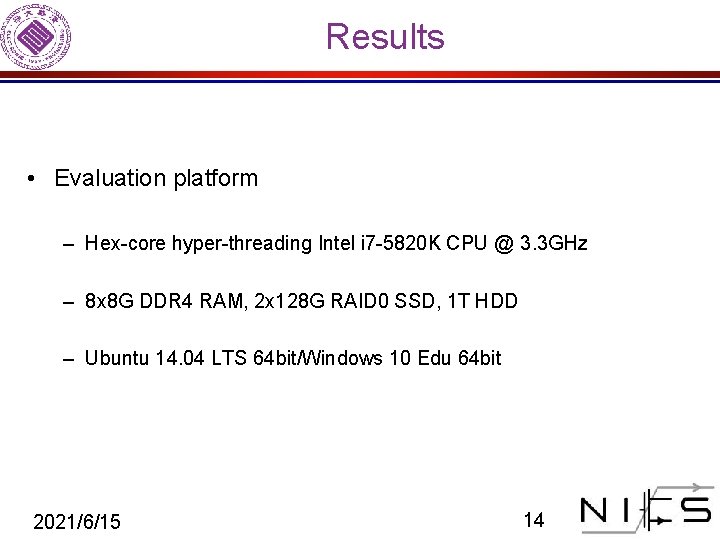

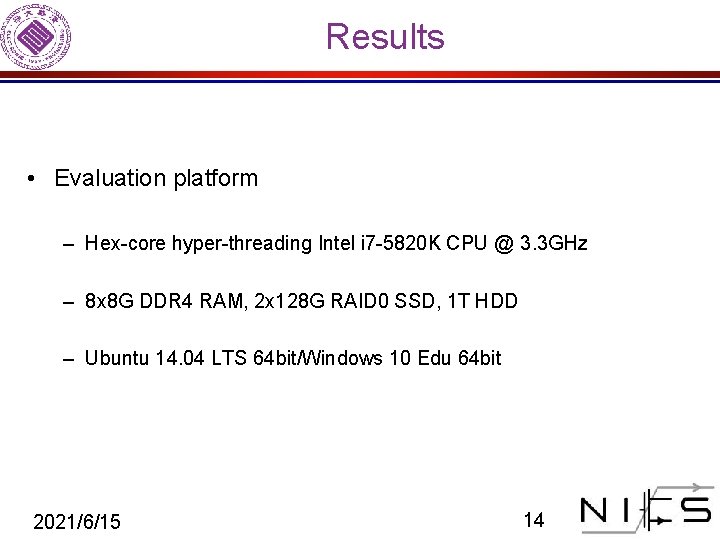

Results
• Evaluation platform
– Hex-core hyper-threading Intel i7-5820K CPU @ 3.3 GHz
– 8 × 8 GB DDR4 RAM, 2 × 128 GB RAID 0 SSD, 1 TB HDD
– Ubuntu 14.04 LTS 64-bit / Windows 10 Edu 64-bit

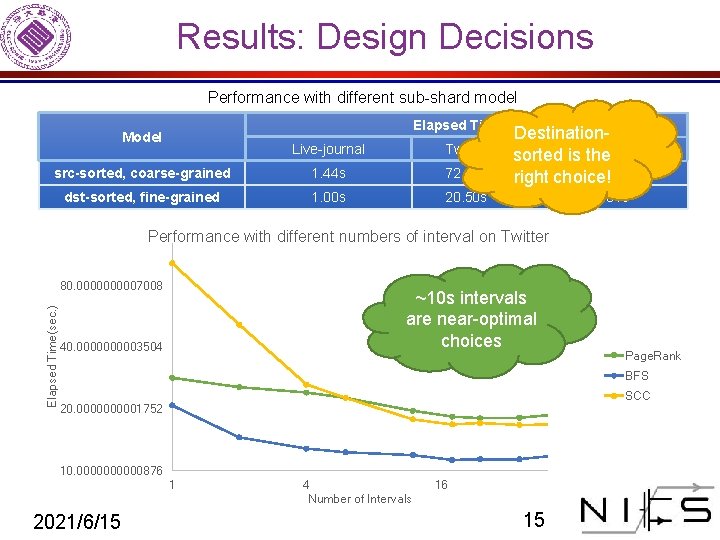

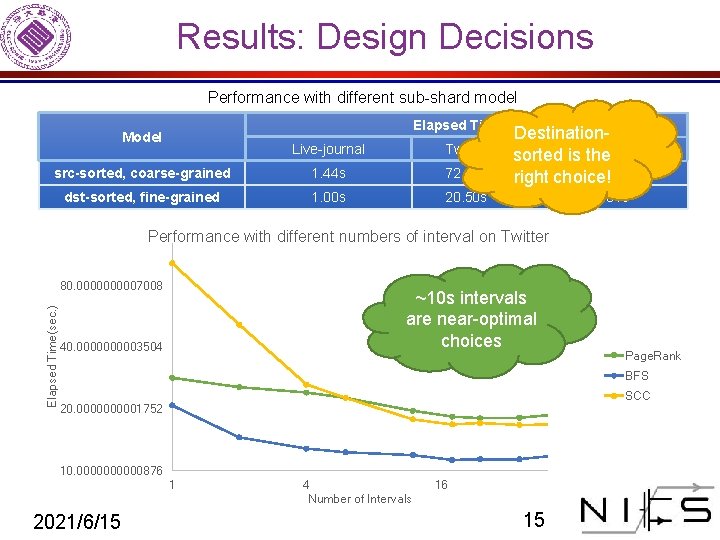

Results: Design Decisions
Performance with different sub-shard models, elapsed time (s):

| Model | Live-journal | Twitter | Yahoo-web |
|---|---|---|---|
| src-sorted, coarse-grained | 1.44 | 72.06 | 696.14 |
| dst-sorted, fine-grained | 1.00 | 20.50 | 519.31 |

• Destination-sorted is the right choice!

Figure: performance with different numbers of intervals on Twitter; elapsed time (sec.) versus number of intervals for PageRank, BFS and SCC
• ~10s of intervals are near-optimal choices

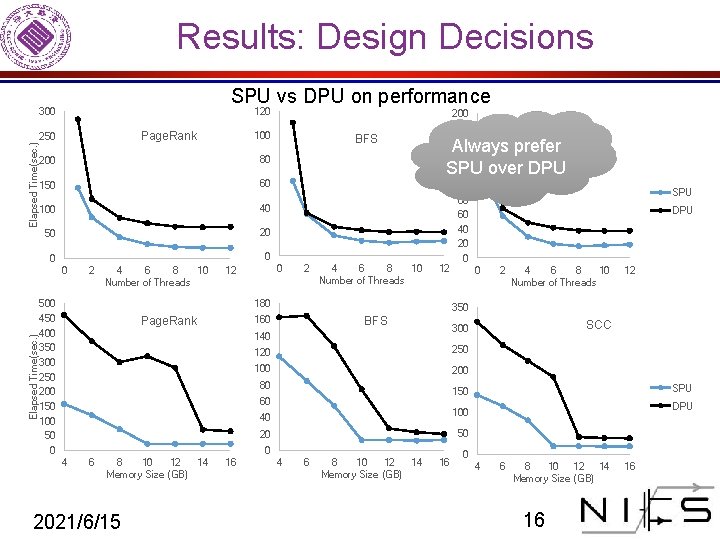

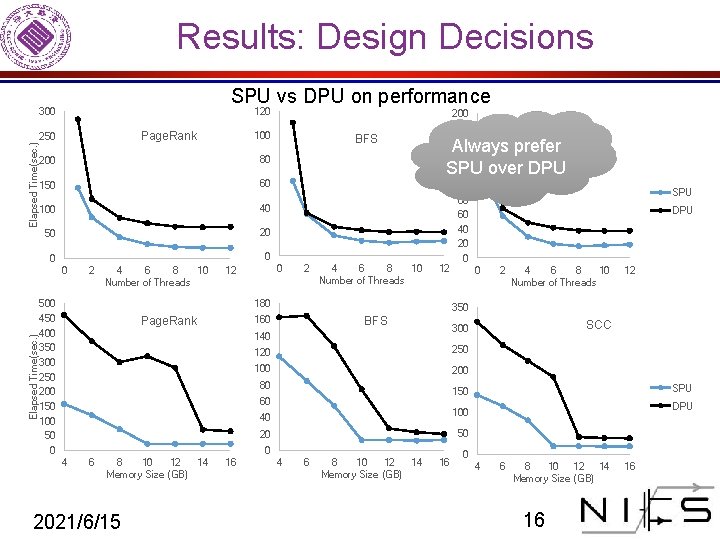

Results: Design Decisions
SPU vs DPU on performance
Figure: elapsed time (sec.) of SPU and DPU for PageRank, BFS and SCC, versus number of threads and versus memory size (GB)
• Always prefer SPU over DPU

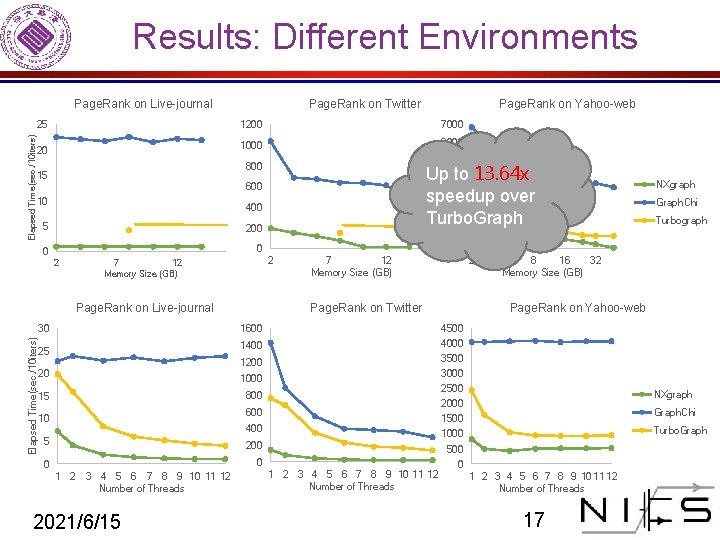

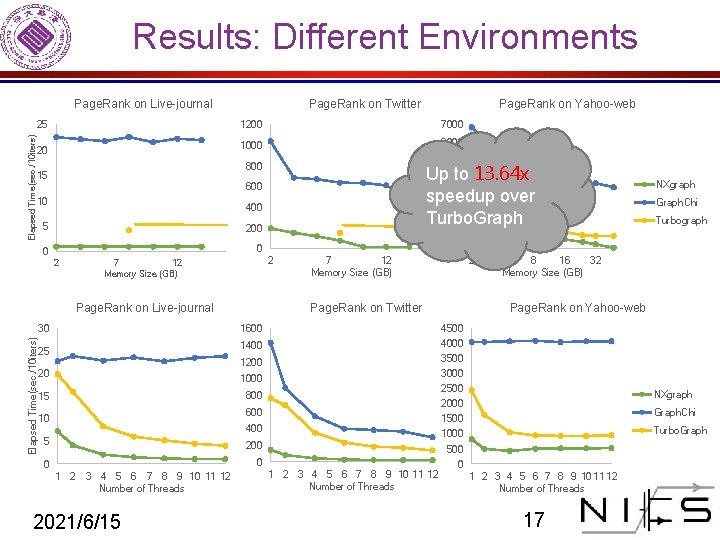

Results: Different Environments
Figure: elapsed time (sec./10 iters) of PageRank on Live-journal, Twitter and Yahoo-web for NXgraph, GraphChi and TurboGraph, versus memory size (GB) and versus number of threads
• Up to 13.64x / 65.32x speedup over GraphChi / TurboGraph

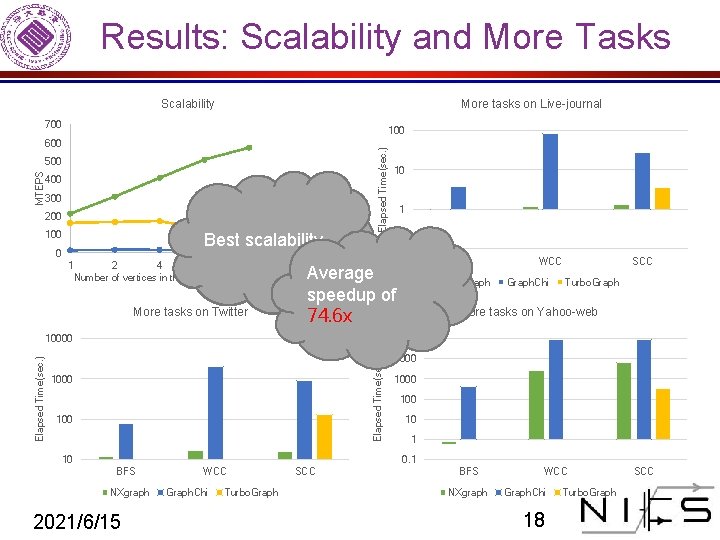

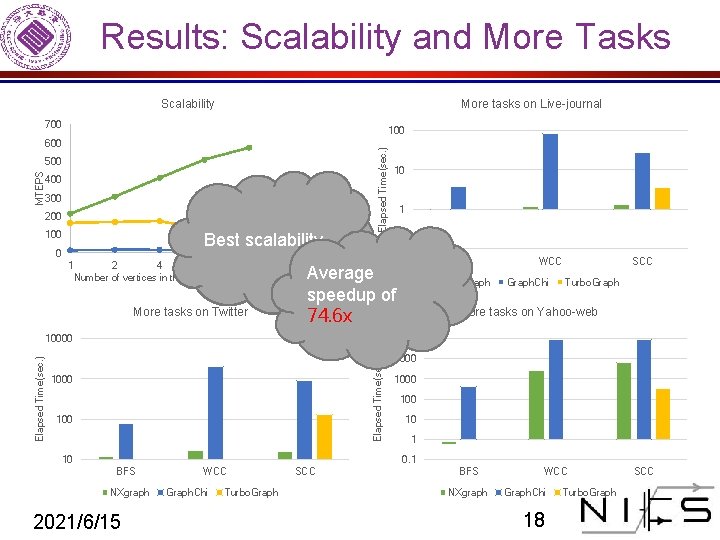

Results: Scalability and More Tasks
Figure: scalability; throughput (MTEPS) of NXgraph, GraphChi and TurboGraph versus number of vertices in the graph (× 2^20)
• Throughput increases as graph grows larger
• Best scalability
Figure: more tasks (BFS, WCC, SCC) on Live-journal, Twitter and Yahoo-web; elapsed time (sec.) for NXgraph, GraphChi and TurboGraph
• Average speedup of 74.6x

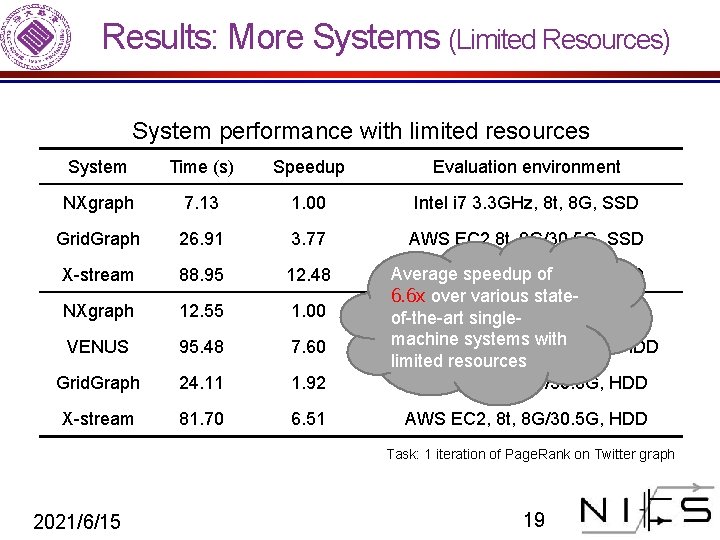

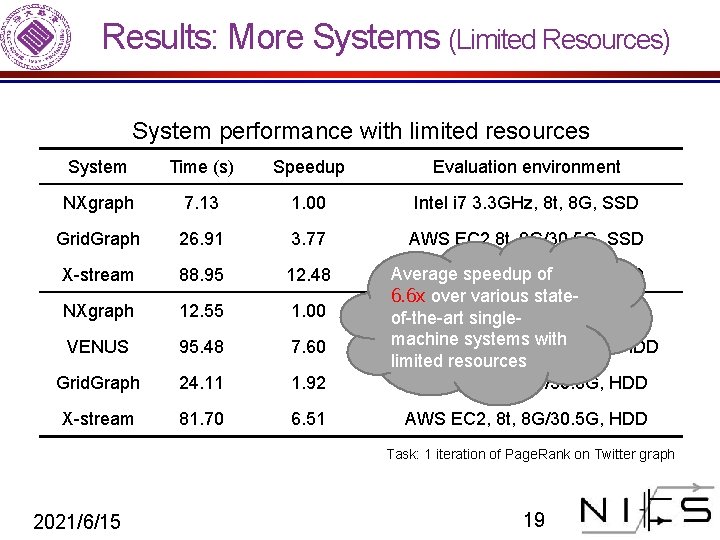

Results: More Systems (Limited Resources)
System performance with limited resources:

| System | Time (s) | Speedup | Evaluation environment |
|---|---|---|---|
| NXgraph | 7.13 | 1.00 | Intel i7 3.3 GHz, 8t, 8G, SSD |
| GridGraph | 26.91 | 3.77 | AWS EC2, 8t, 8G/30.5G, SSD |
| X-stream | 88.95 | 12.48 | AWS EC2, 8t, 8G/30.5G, SSD |
| NXgraph | 12.55 | 1.00 | AWS EC2, 8t, 8G/30.5G, HDD |
| VENUS | 95.48 | 7.60 | Intel i7 3.4 GHz, 8t, 8G/16G, HDD |
| GridGraph | 24.11 | 1.92 | AWS EC2, 8t, 8G/30.5G, HDD |
| X-stream | 81.70 | 6.51 | AWS EC2, 8t, 8G/30.5G, HDD |

• Average speedup of 6.6x over various state-of-the-art single-machine systems with limited resources
• Task: 1 iteration of PageRank on Twitter graph

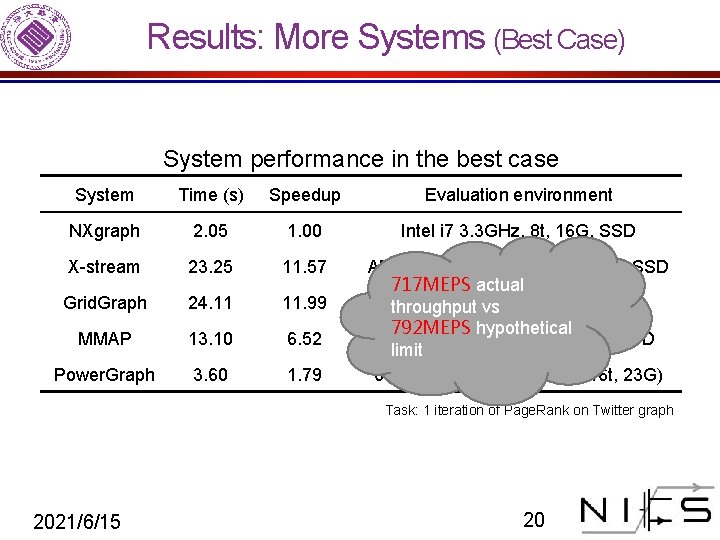

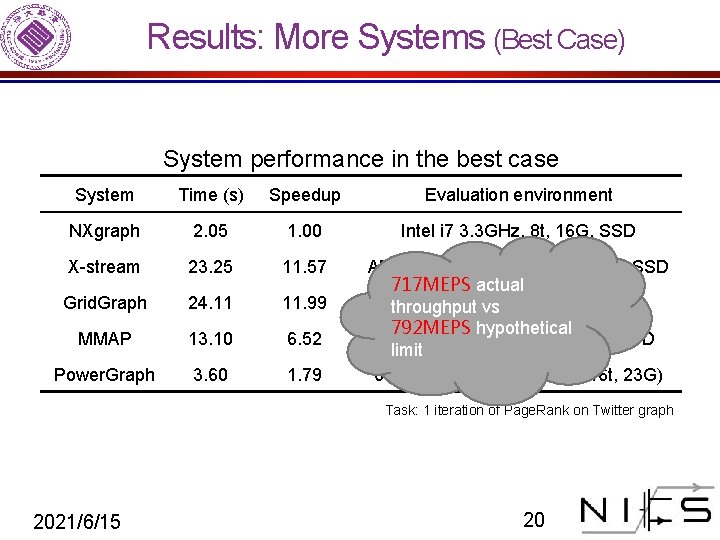

Results: More Systems (Best Case)
System performance in the best case:

| System | Time (s) | Speedup | Evaluation environment |
|---|---|---|---|
| NXgraph | 2.05 | 1.00 | Intel i7 3.3 GHz, 8t, 16G, SSD |
| X-stream | 23.25 | 11.57 | AMD Opteron 2.1 GHz, 32t, 64G, SSD |
| GridGraph | 24.11 | 11.99 | AWS EC2, 8t, 8G/30.5G, HDD |
| MMAP | 13.10 | 6.52 | Intel i7 3.5 GHz, 8t, 16G/32G, SSD |
| PowerGraph | 3.60 | 1.79 | 64×(AWS EC2 Intel Xeon, 16t, 23G) |

• Average speedup of 10.0x over various state-of-the-art single-machine systems in the best case
• 717 MEPS actual throughput vs 792 MEPS hypothetical limit
• Task: 1 iteration of PageRank on Twitter graph

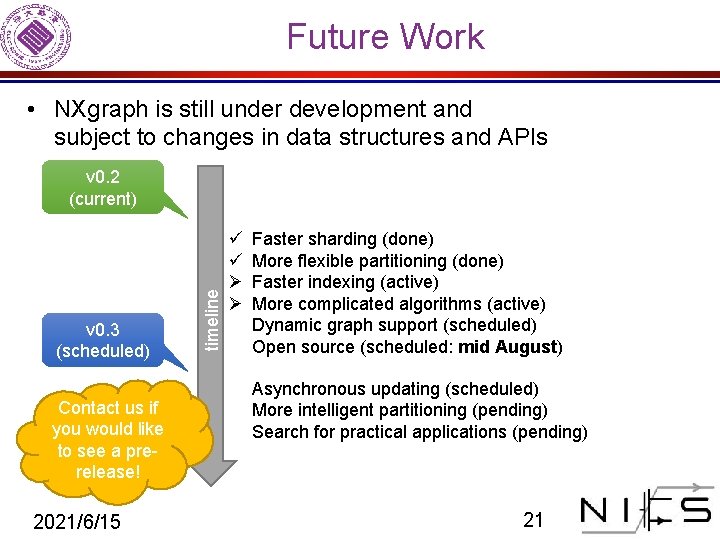

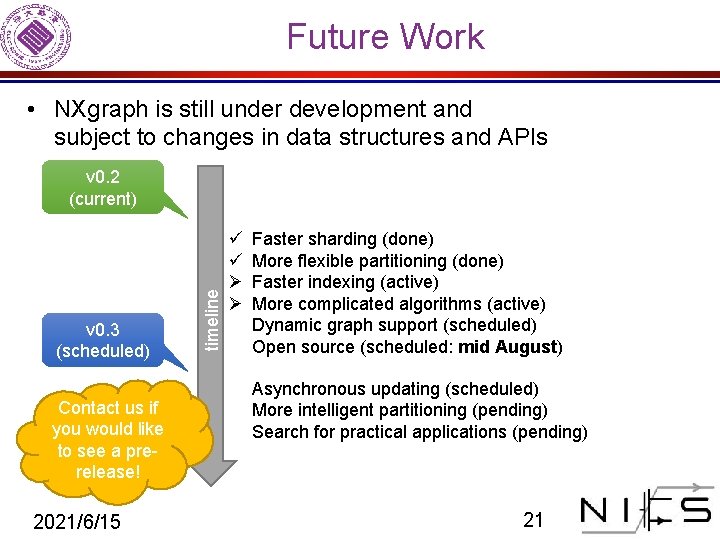

Future Work
• NXgraph is still under development and subject to changes in data structures and APIs
• Timeline: v0.2 (current), v0.3 (scheduled)
– Faster sharding (done)
– More flexible partitioning (done)
– Faster indexing (active)
– More complicated algorithms (active)
– Dynamic graph support (scheduled)
– Open source (scheduled: mid August)
– Asynchronous updating (scheduled)
– More intelligent partitioning (pending)
– Search for practical applications (pending)
• Contact us if you would like to see a prerelease!

Reference
• H. Kwak, C. Lee, H. Park, and S. Moon, “What is Twitter, a social network or a news media?” in WWW. ACM, 2010, pp. 591–600.
• J. Gonzalez, Y. Low, and H. Gu, “PowerGraph: Distributed graph-parallel computation on natural graphs,” in OSDI, 2012, pp. 17–30.
• J. Cheng, Q. Liu, Z. Li, W. Fan, J. C. S. Lui, and C. He, “VENUS: Vertex-Centric Streamlined Graph Computation on a Single PC,” in ICDE, 2015, pp. 1131–1142.
• A. Kyrola, G. Blelloch, and C. Guestrin, “GraphChi: Large-Scale Graph Computation on Just a PC,” in OSDI, 2012, pp. 31–46.
• W.-S. Han, S. Lee, K. Park, J.-H. Lee, M.-S. Kim, J. Kim, and H. Yu, “TurboGraph: A Fast Parallel Graph Engine Handling Billion-Scale Graphs in a Single PC,” in SIGKDD, 2013, pp. 77–85.
• M. Zaharia, M. Chowdhury, M. J. Franklin, S. Shenker, and I. Stoica, “Spark: cluster computing with working sets,” in HotCloud, vol. 10, 2010, p. 10.
• L. Page, S. Brin, R. Motwani, and T. Winograd, “The PageRank citation ranking: bringing order to the web,” 1999.
• X. Zhu, W. Han, and W. Chen, “GridGraph: Large-Scale Graph Processing on a Single Machine Using 2-Level Hierarchical Partitioning,” in ATC, 2015, pp. 375–386.
• A. Roy, I. Mihailovic, and W. Zwaenepoel, “X-Stream: Edge-centric Graph Processing using Streaming Partitions,” in SOSP, 2013, pp. 472–488.

Reference
• “Yahoo! AltaVista web page hyperlink connectivity graph, circa 2002,” http://webscope.sandbox.yahoo.com/.
• “Livejournal social network,” http://snap.stanford.edu/data/soc-LiveJournal1.html.
• Z. Lin, M. Kahng, K. Sabrin, D. Horng, and P. Chau, “MMap: Fast Billion-Scale Graph Computation on a PC via Memory Mapping,” in ICBD. IEEE, 2014.
• G. Malewicz, M. H. Austern, A. J. C. Bik, J. C. Dehnert, I. Horn, N. Leiser, and G. Czajkowski, “Pregel: A System for Large-Scale Graph Processing,” in SIGMOD, 2010, pp. 135–145.

NXgraph
Thank you! Q&A