NVIDIA Fermi Architecture

Joseph Kider, University of Pennsylvania

CIS 565 - Fall 2011

Administrivia

- Project checkpoint on Monday

Sources

- Patrick Cozzi, Spring 2011
- NVIDIA CUDA Programming Guide
- CUDA by Example
- Programming Massively Parallel Processors

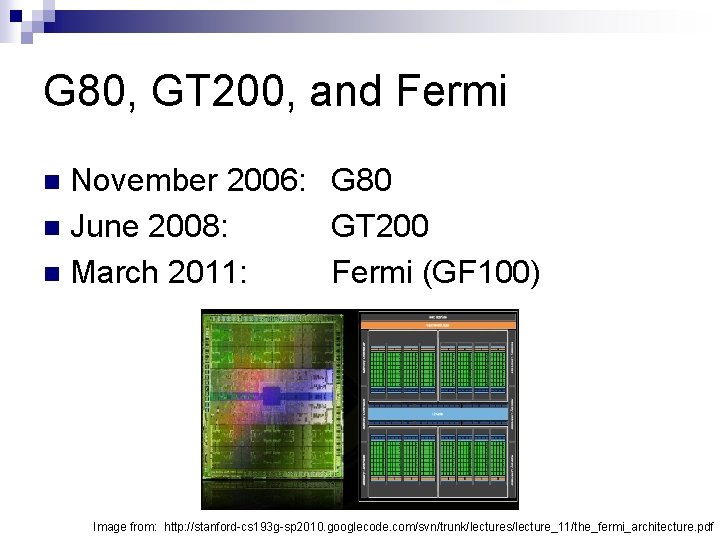

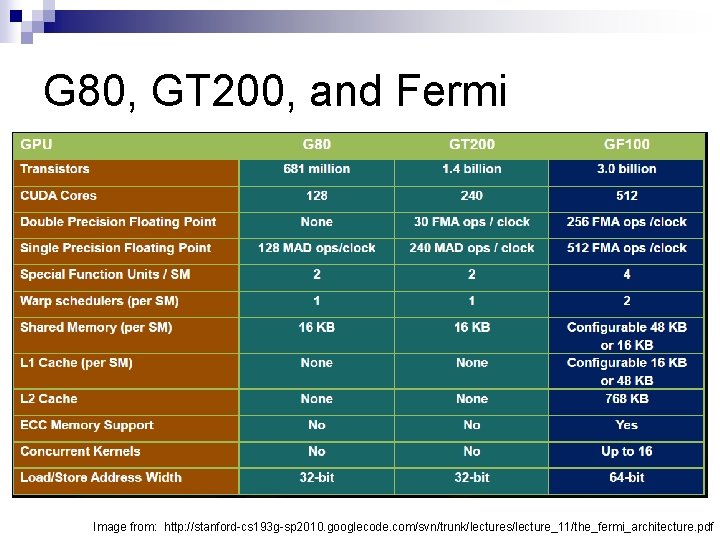

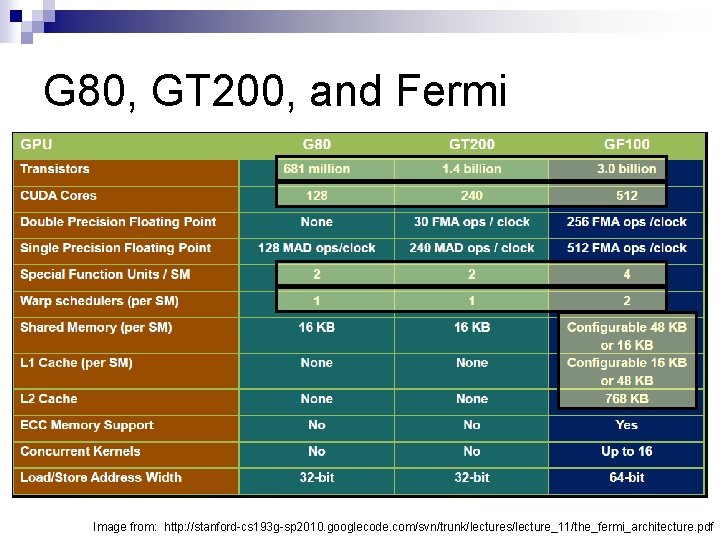

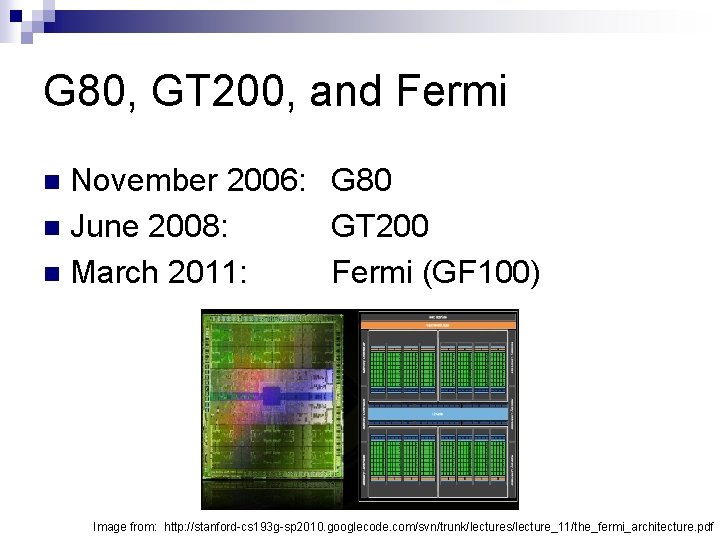

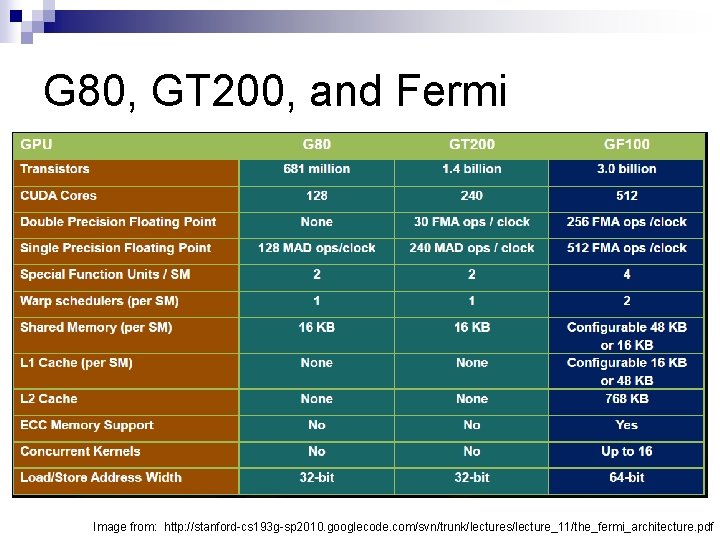

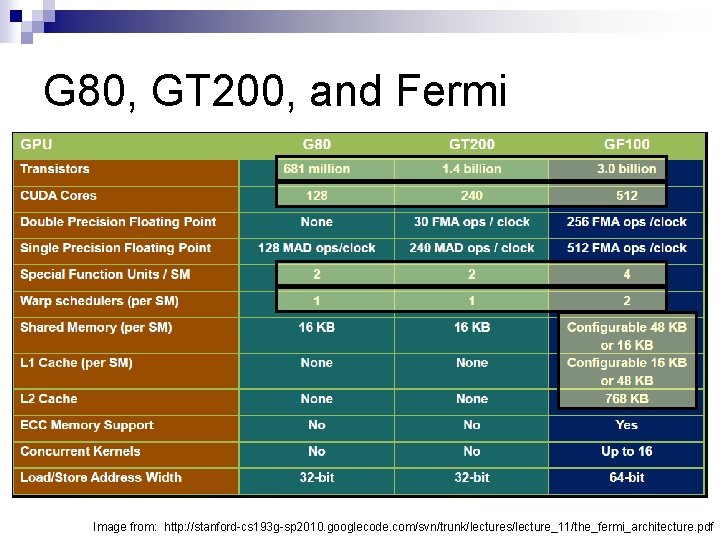

G80, GT200, and Fermi

- November 2006: G80
- June 2008: GT200
- March 2010: Fermi (GF100)

Image from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

New GPU Generation

- What are the technical goals for a new GPU generation?
  - Improve existing application performance. How?
  - Advance programmability. In what ways?

Fermi: What’s More?

- More total cores (SPs), though not more SMs
- More registers: 32K per SM
- More shared memory: up to 48 KB per SM
- More Special Function Units (SFUs)

Fermi: What’s Faster?

- Faster double precision: 8x over GT200
- Faster atomic operations. What for?
  - 5-20x
- Faster context switches
  - Between applications: 10x
  - Between graphics and compute, e.g., OpenGL and CUDA

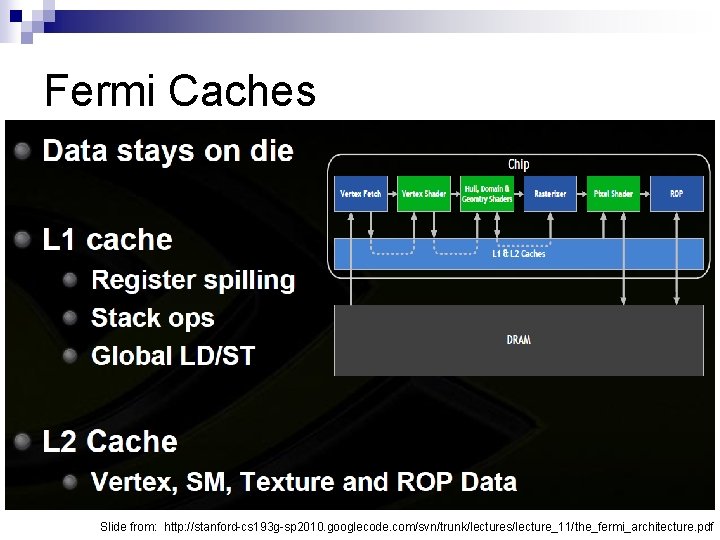

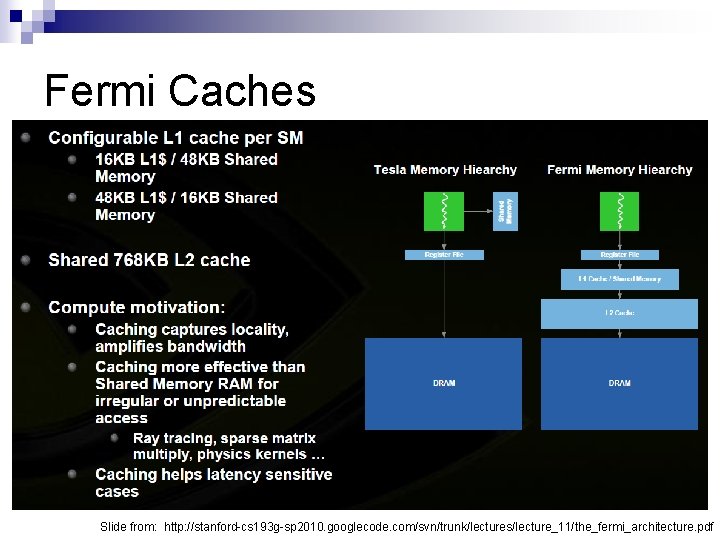

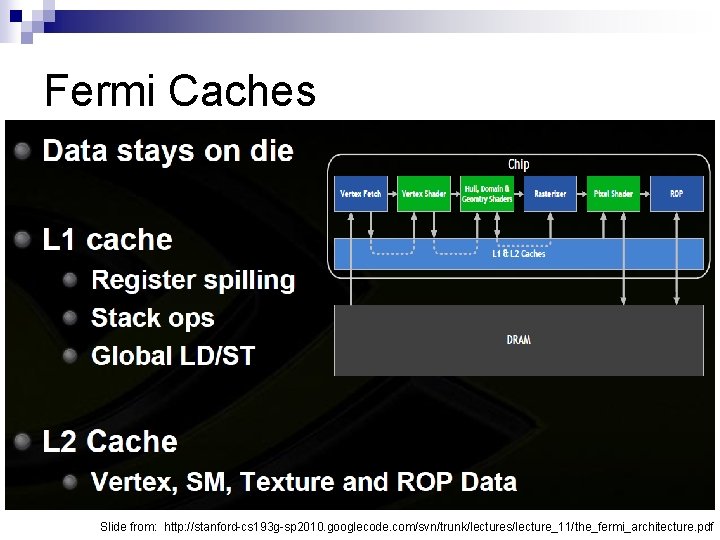

Fermi: What’s New?

- L1 and L2 caches
  - For compute or graphics?
- Dual warp scheduling
- Concurrent kernel execution
- C++ support
- Full IEEE 754-2008 support in hardware
- Unified address space
- Error Correcting Code (ECC) memory support
- Fixed function tessellation for graphics

G80, GT200, and Fermi

Image from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

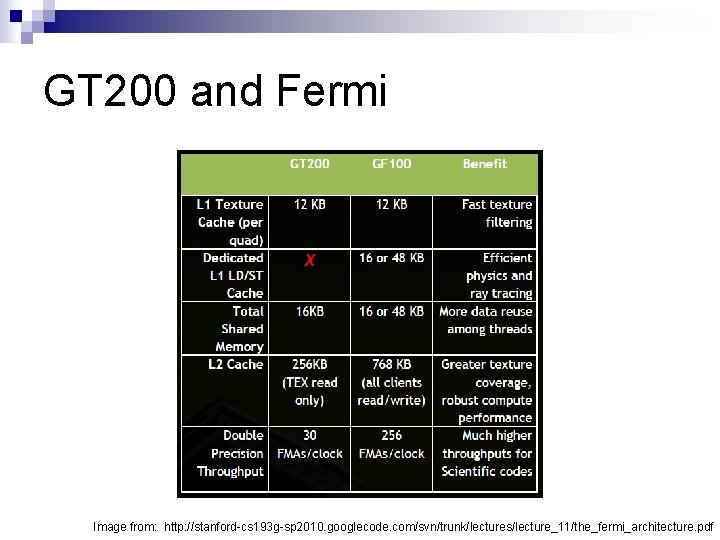

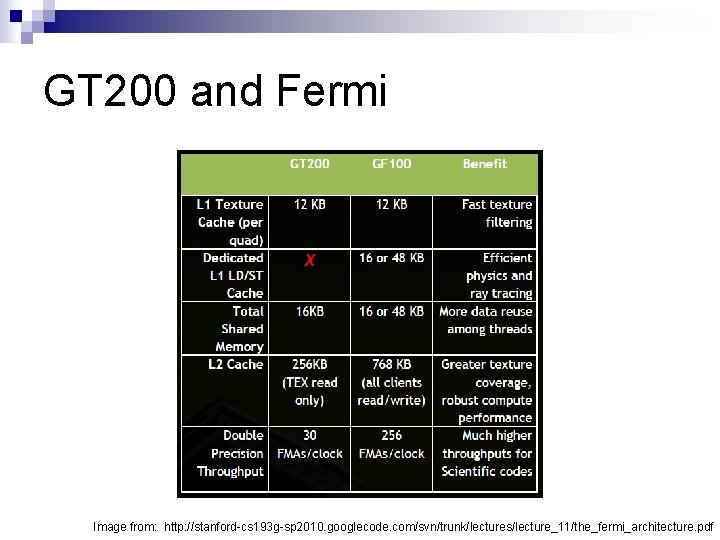

GT200 and Fermi

Image from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

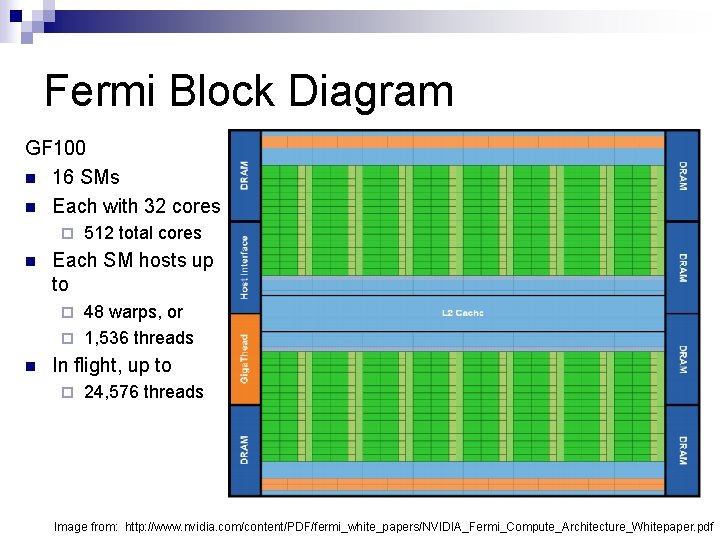

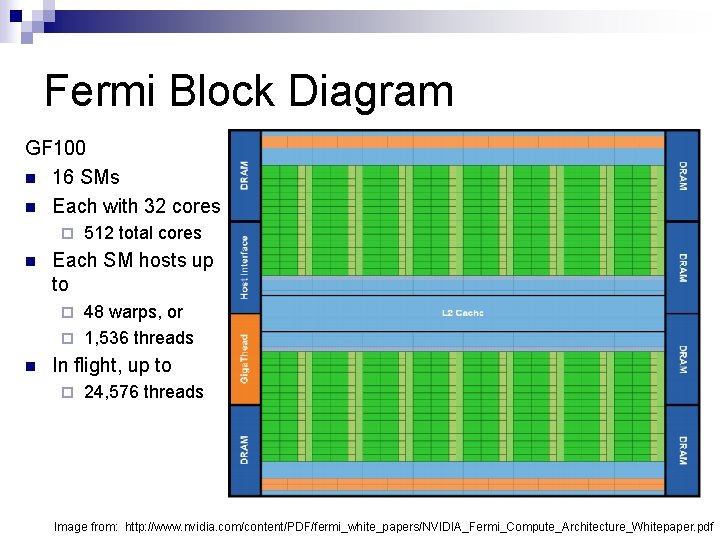

Fermi Block Diagram

- GF100
- 16 SMs
  - Each with 32 cores
  - 512 total cores
- Each SM hosts up to
  - 48 warps, or
  - 1,536 threads
- In flight, up to
  - 24,576 threads

Image from: http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf
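The totals on this slide follow from the per-SM limits. A minimal Python sketch of the arithmetic; the variable names are illustrative, and the warp size of 32 threads is the standard CUDA value rather than a figure stated on the slide:

```python
# Occupancy arithmetic for GF100, using the figures from the slide.
NUM_SMS = 16        # streaming multiprocessors on GF100
CORES_PER_SM = 32   # cores per SM
WARPS_PER_SM = 48   # maximum resident warps per SM
WARP_SIZE = 32      # threads per warp (standard CUDA value, assumed here)

total_cores = NUM_SMS * CORES_PER_SM
threads_per_sm = WARPS_PER_SM * WARP_SIZE
threads_in_flight = NUM_SMS * threads_per_sm

print(total_cores)                       # 512
print(threads_per_sm)                    # 1536
print(threads_in_flight)                 # 24576
print(threads_in_flight // total_cores)  # 48 resident threads per core
```

The last figure shows that there are far more resident threads than cores, which is what gives the hardware other warps to run while some are waiting on memory.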

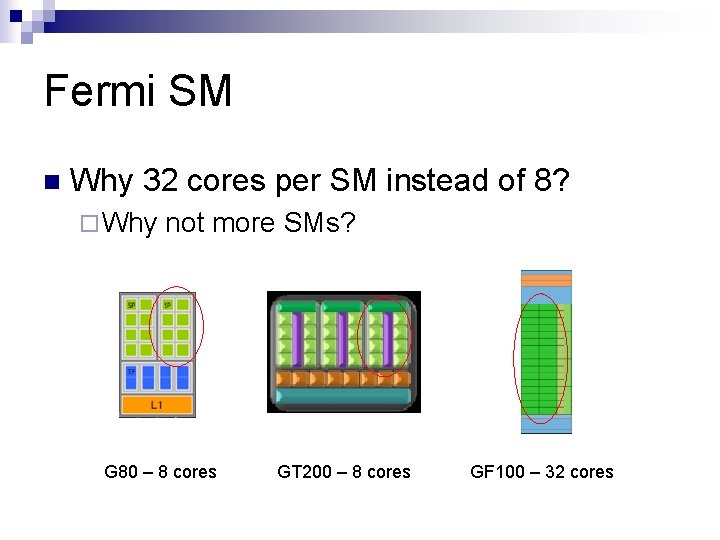

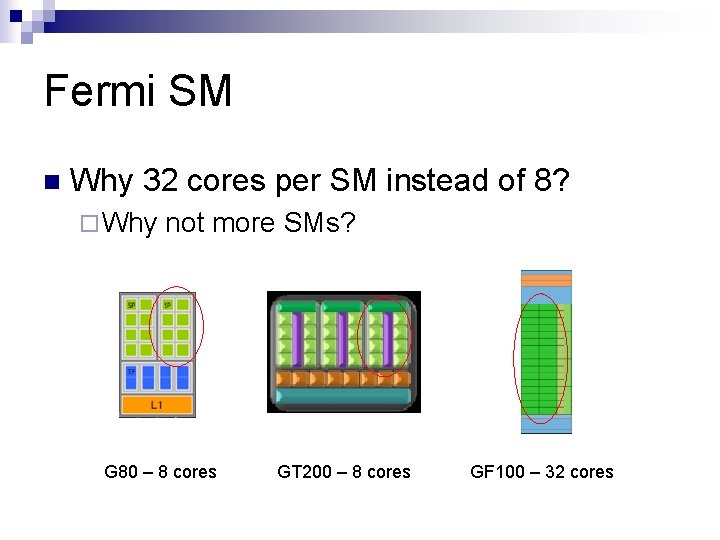

Fermi SM

- Why 32 cores per SM instead of 8?
  - Why not more SMs?

G80: 8 cores. GT200: 8 cores. GF100: 32 cores.

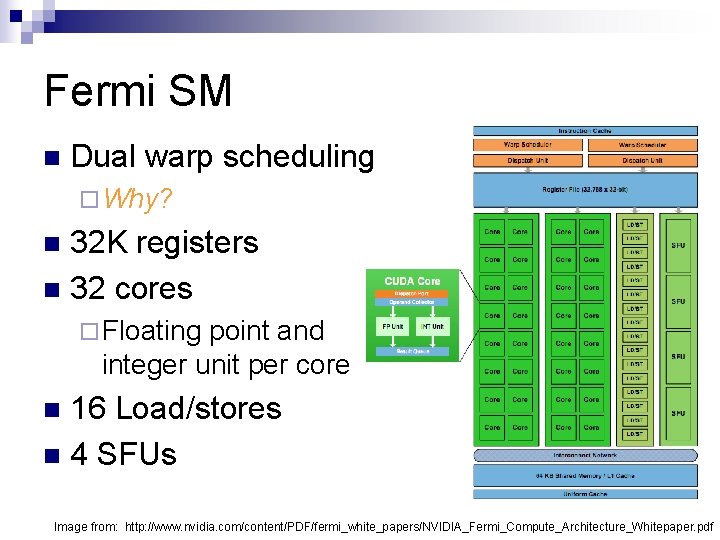

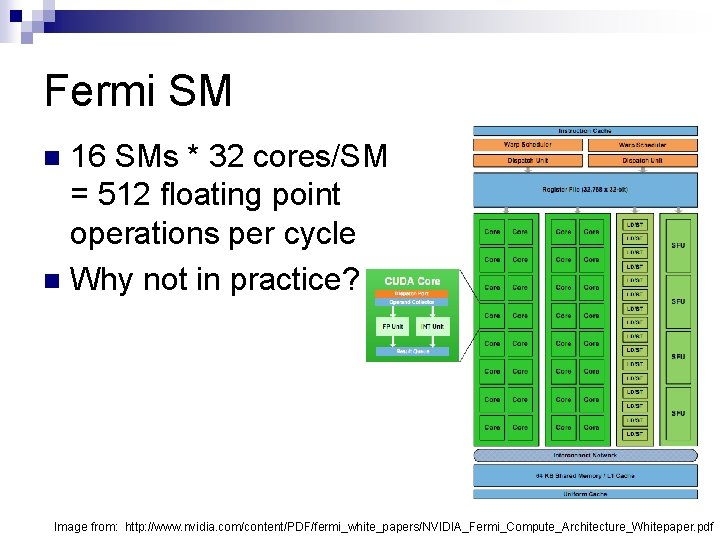

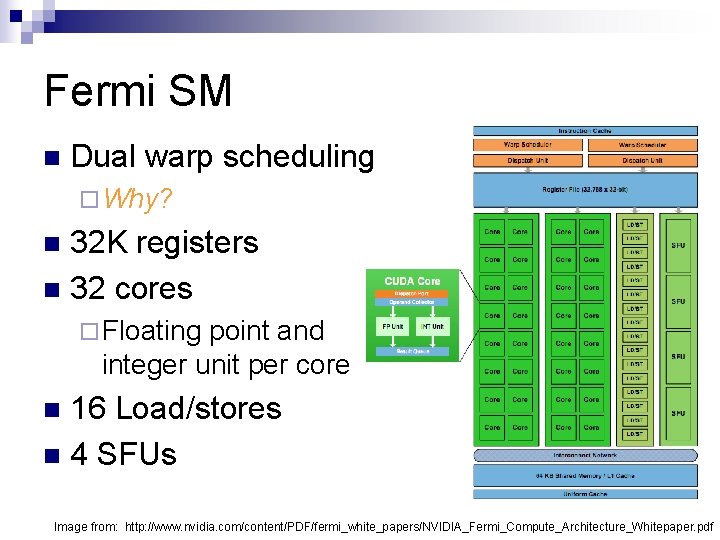

Fermi SM

- Dual warp scheduling
  - Why?
- 32K registers
- 32 cores
  - Floating point and integer unit per core
- 16 load/store units
- 4 SFUs

Image from: http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf
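One way to read these unit counts is to ask how many clocks a 32-thread warp occupies each kind of unit. The sketch below is a simplification of the real pipeline: it assumes each unit serves one thread per clock and that a warp instruction is issued to a group of 16 cores. The function name `clocks_per_warp` is hypothetical.

```python
import math

WARP_SIZE = 32  # threads per warp (standard CUDA value, assumed here)

def clocks_per_warp(units):
    """Clocks for one warp instruction if each unit serves one thread per clock."""
    return math.ceil(WARP_SIZE / units)

# Unit counts per SM from the slide; a warp is assumed to use one 16-core group.
core_group = 16
load_store_units = 16
sfus = 4

print(clocks_per_warp(core_group))        # 2
print(clocks_per_warp(load_store_units))  # 2
print(clocks_per_warp(sfus))              # 8
```

Under this model a single scheduler can feed only one 16-core group at a time, so a second scheduler issuing from a different warp is what keeps all 32 cores busy.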

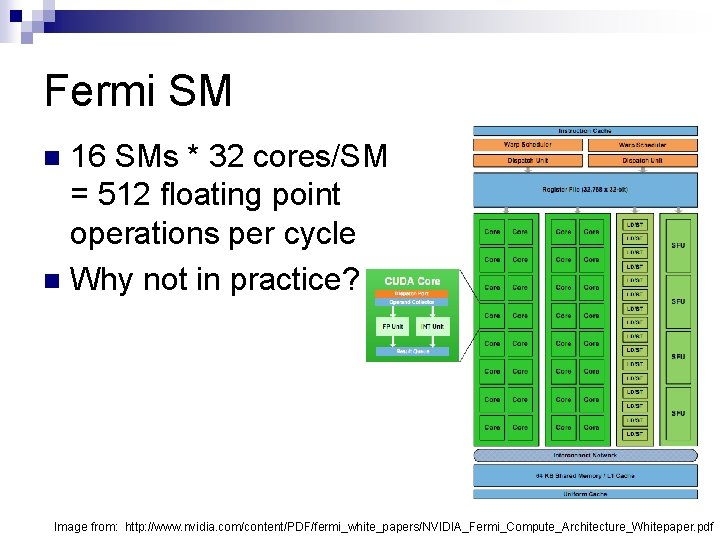

Fermi SM

- 16 SMs * 32 cores/SM = 512 floating point operations per cycle
- Why not in practice?

Image from: http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf
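The 512 figure is an upper bound that assumes every core retires a floating point operation on every cycle. A minimal sketch of how that bound shrinks when cores sit idle, for example because of memory stalls, branch divergence, or instructions that are not floating point. The `utilization` parameter and the value 0.5 are hypothetical, not measurements:

```python
NUM_SMS = 16
CORES_PER_SM = 32

def peak_ops_per_cycle(num_sms=NUM_SMS, cores_per_sm=CORES_PER_SM):
    """Upper bound: every core retires one floating point operation per cycle."""
    return num_sms * cores_per_sm

def achieved_ops_per_cycle(utilization):
    """Scale the peak by the fraction of core-cycles doing useful FP work (0..1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_ops_per_cycle() * utilization

print(peak_ops_per_cycle())         # 512
print(achieved_ops_per_cycle(0.5))  # 256.0 (hypothetical utilization)
```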

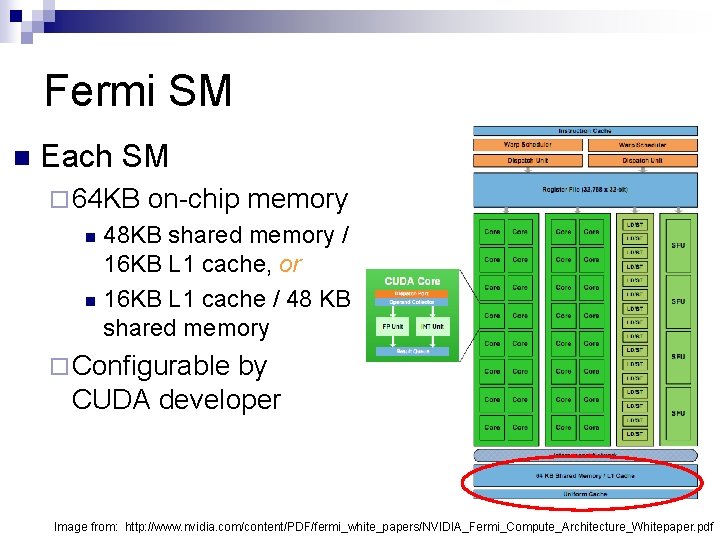

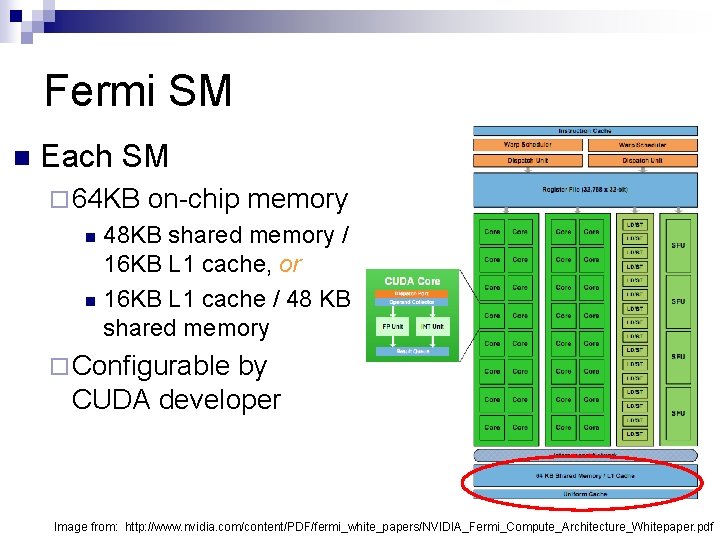

Fermi SM

- Each SM
  - 64 KB on-chip memory
    - 48 KB shared memory / 16 KB L1 cache, or
    - 16 KB shared memory / 48 KB L1 cache
  - Configurable by CUDA developer

Image from: http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf
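The choice of split matters because shared memory can limit how many thread blocks fit on an SM at once. The sketch below models the two splits of the 64 KB (48 KB shared with 16 KB L1, or 16 KB shared with 48 KB L1) and counts resident blocks from the thread and shared memory limits only; it ignores registers and any other per-SM limits. The configuration labels, the function name, and the example kernel are hypothetical.

```python
KB = 1024
ON_CHIP_BYTES = 64 * KB     # per-SM on-chip memory
MAX_THREADS_PER_SM = 1536   # per-SM thread limit from the block diagram slide

# The two splits of the 64 KB, as (shared memory bytes, L1 cache bytes).
CONFIGS = {
    "prefer_shared": (48 * KB, 16 * KB),
    "prefer_l1": (16 * KB, 48 * KB),
}

def resident_blocks(config, threads_per_block, shared_bytes_per_block):
    """Blocks per SM allowed by the thread and shared memory limits only."""
    shared_bytes, _l1_bytes = CONFIGS[config]
    by_threads = MAX_THREADS_PER_SM // threads_per_block
    if shared_bytes_per_block == 0:
        return by_threads
    return min(by_threads, shared_bytes // shared_bytes_per_block)

# Hypothetical kernel: 256 threads and 12 KB of shared memory per block.
for name in CONFIGS:
    print(name, resident_blocks(name, 256, 12 * KB))
```

For this hypothetical kernel the larger shared memory split fits 4 blocks per SM and the larger L1 split fits 1, so a kernel that leans on shared memory would likely want the first setting. In CUDA the preference is requested per kernel with `cudaFuncSetCacheConfig`.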

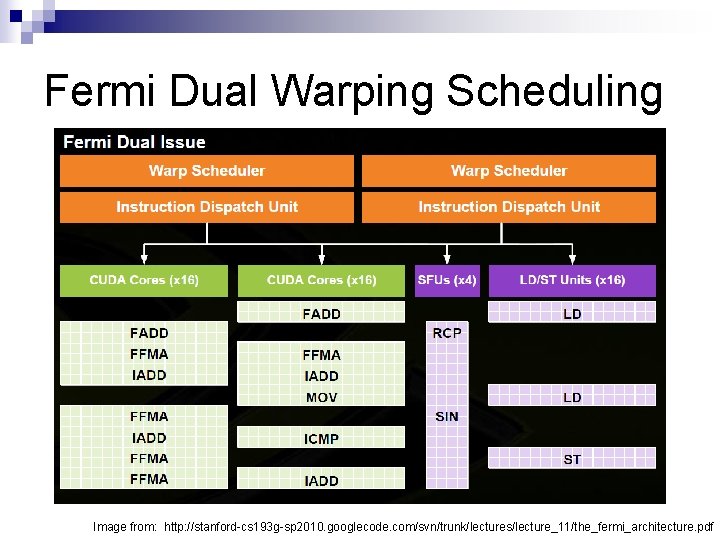

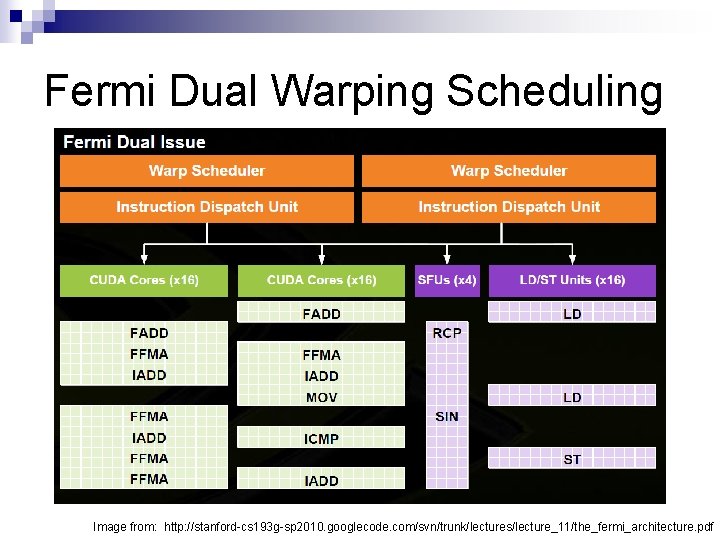

Fermi Dual Warp Scheduling

Image from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

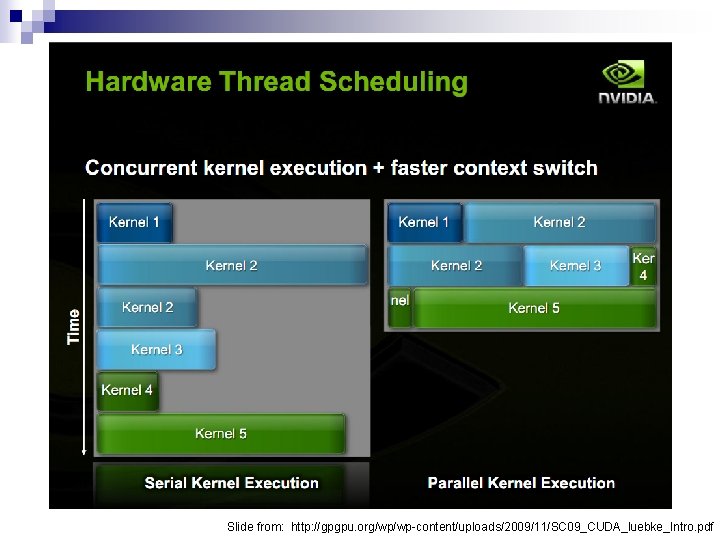

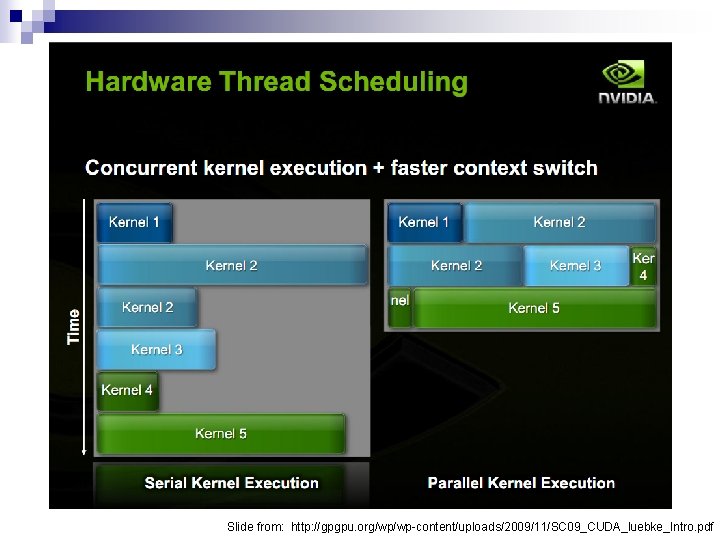

Slide from: http://gpgpu.org/wp/wp-content/uploads/2009/11/SC09_CUDA_luebke_Intro.pdf

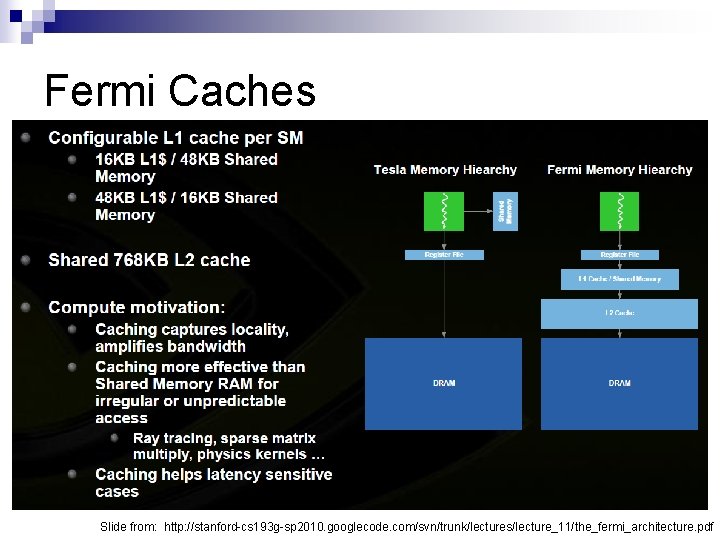

Fermi Caches

Slide from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

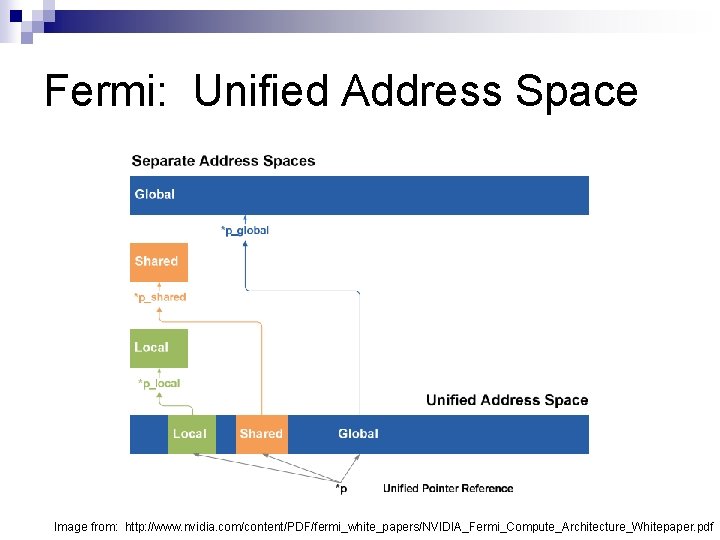

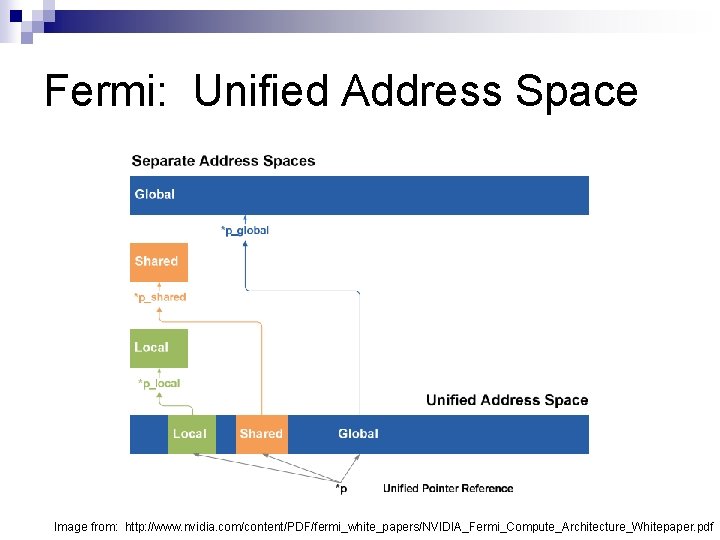

Fermi: Unified Address Space

Image from: http://www.nvidia.com/content/PDF/fermi_white_papers/NVIDIA_Fermi_Compute_Architecture_Whitepaper.pdf

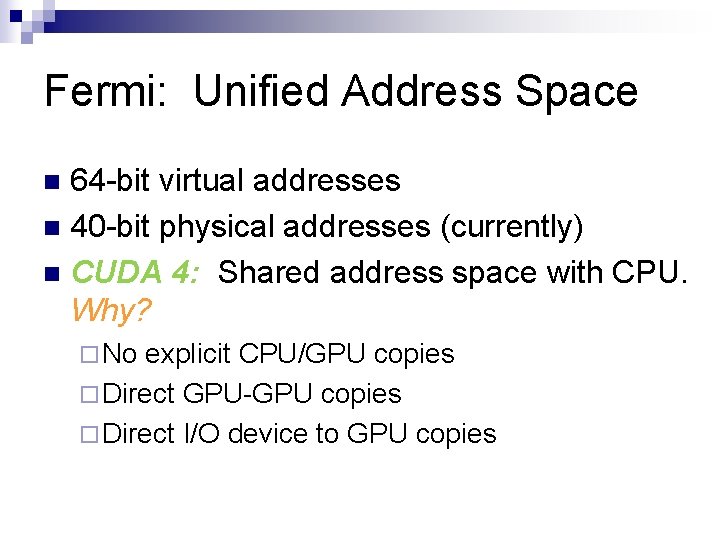

Fermi: Unified Address Space

- 64-bit virtual addresses
- 40-bit physical addresses (currently)
- CUDA 4: Shared address space with CPU. Why?
  - No explicit CPU/GPU copies
  - Direct GPU-GPU copies
  - Direct I/O device to GPU copies
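To put the address widths in perspective, the following sketch converts them to addressable capacity, assuming byte addressing. The helper name `addressable_bytes` is illustrative; the 32-bit case is included only for comparison.

```python
VIRTUAL_BITS = 64
PHYSICAL_BITS = 40

GIB = 1 << 30  # gibibyte
TIB = 1 << 40  # tebibyte
EIB = 1 << 60  # exbibyte

def addressable_bytes(bits):
    """Number of distinct byte addresses for an address of the given width."""
    return 1 << bits

print(addressable_bytes(32) // GIB)             # 4  (GiB, for comparison)
print(addressable_bytes(PHYSICAL_BITS) // TIB)  # 1  (TiB of physical memory)
print(addressable_bytes(VIRTUAL_BITS) // EIB)   # 16 (EiB of virtual space)
```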

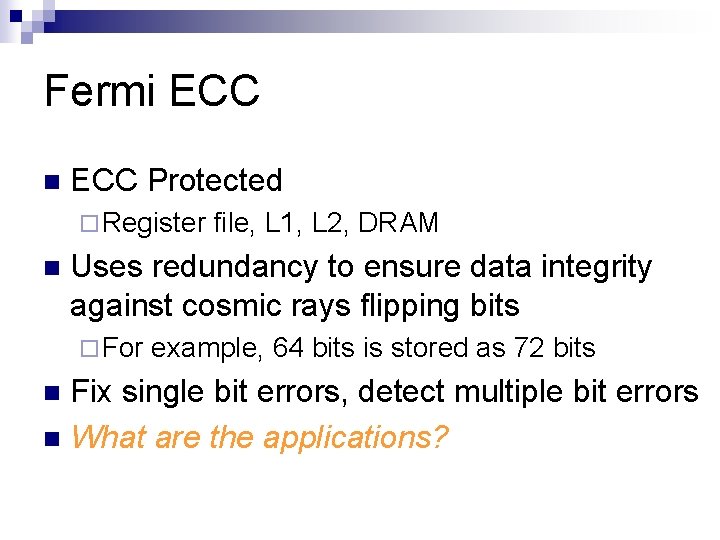

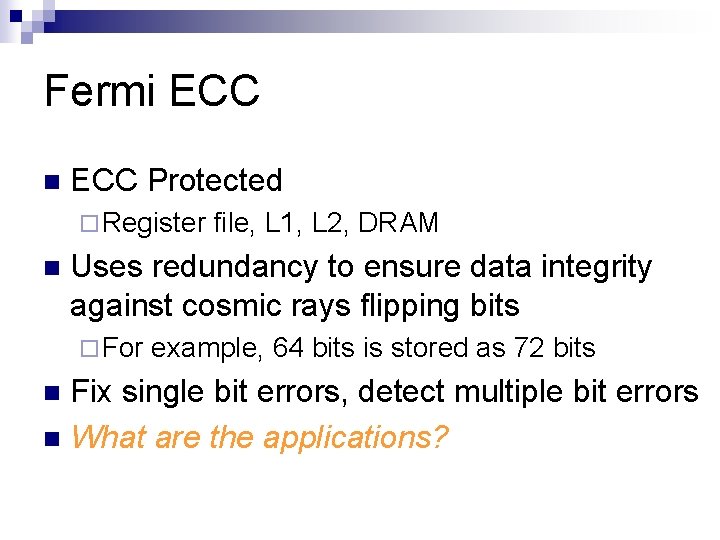

Fermi ECC

- ECC protected
  - Register file, L1, L2, DRAM
- Uses redundancy to ensure data integrity against cosmic rays flipping bits
  - For example, 64 bits are stored as 72 bits
- Fixes single-bit errors, detects double-bit errors
- What are the applications?
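To make the 64-to-72-bit example concrete, here is a sketch of a textbook extended Hamming (72,64) code, which corrects any single-bit error and detects any double-bit error. This is an assumption for illustration: the slides do not say which code Fermi implements, and the bit layout and the names `encode` and `decode` are hypothetical.

```python
CODE_BITS = 72                                  # 64 data + 7 Hamming + 1 overall parity
PARITY_POSITIONS = [1 << i for i in range(7)]   # positions 1, 2, 4, ..., 64
DATA_POSITIONS = [p for p in range(1, CODE_BITS) if p not in PARITY_POSITIONS]

def encode(data):
    """Encode a 64-bit integer as a list of 72 bits (position 0 = overall parity)."""
    bits = [0] * CODE_BITS
    for i, pos in enumerate(DATA_POSITIONS):
        bits[pos] = (data >> i) & 1
    for p in PARITY_POSITIONS:
        # Parity bit p covers every position whose index has bit p set.
        parity = 0
        for pos in range(1, CODE_BITS):
            if pos & p:
                parity ^= bits[pos]
        bits[p] = parity
    bits[0] = sum(bits[1:]) % 2  # makes the parity of all 72 bits even
    return bits

def decode(bits):
    """Return (data, status); status is 'ok', 'corrected', or 'uncorrectable'."""
    bits = list(bits)
    syndrome = 0
    for pos in range(1, CODE_BITS):
        if bits[pos]:
            syndrome ^= pos          # XOR of set positions locates a single flip
    overall = sum(bits) % 2          # 1 means an odd number of bits flipped
    if syndrome == 0 and overall == 0:
        status = "ok"
    elif overall == 1 and syndrome < CODE_BITS:
        bits[syndrome] ^= 1          # syndrome 0 means the overall parity bit flipped
        status = "corrected"
    else:
        return None, "uncorrectable"  # even number of flips: detected, not fixed
    data = 0
    for i, pos in enumerate(DATA_POSITIONS):
        data |= bits[pos] << i
    return data, status

word = 0xDEADBEEFCAFEBABE
codeword = encode(word)

single = list(codeword)
single[17] ^= 1                      # flip one bit
print(decode(single)[1])             # corrected

double = list(codeword)
double[17] ^= 1
double[42] ^= 1                      # flip two bits
print(decode(double)[1])             # uncorrectable
```

The 8 extra bits per 64-bit word are the cost of the protection: 12.5% more storage for every protected word.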

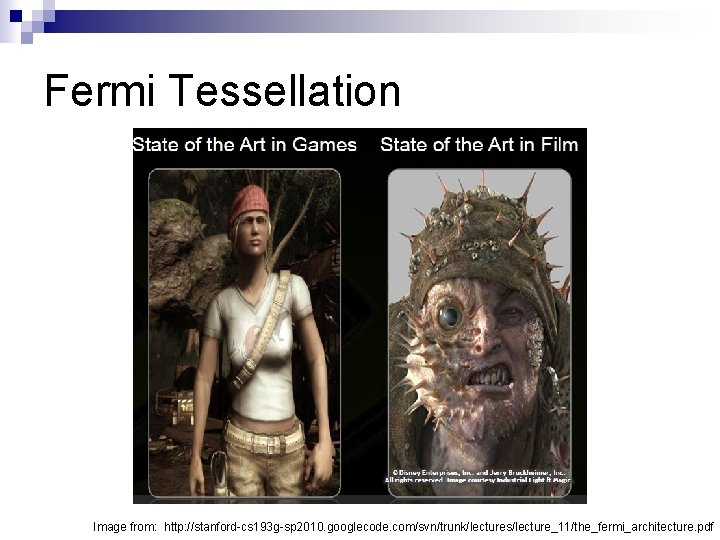

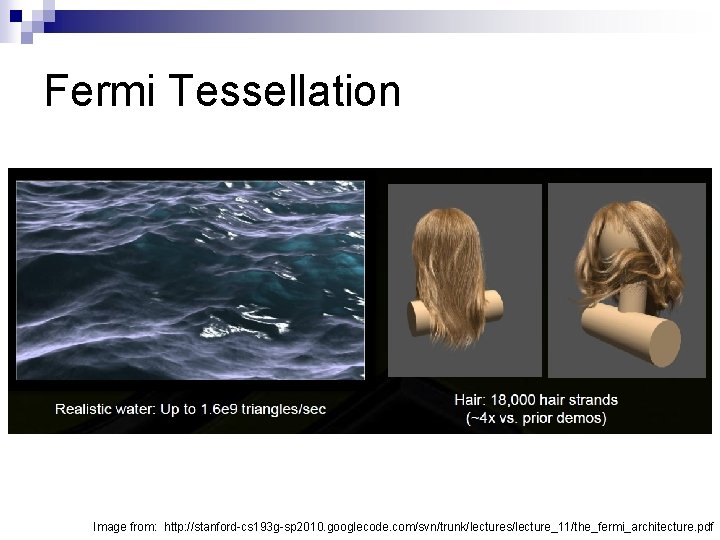

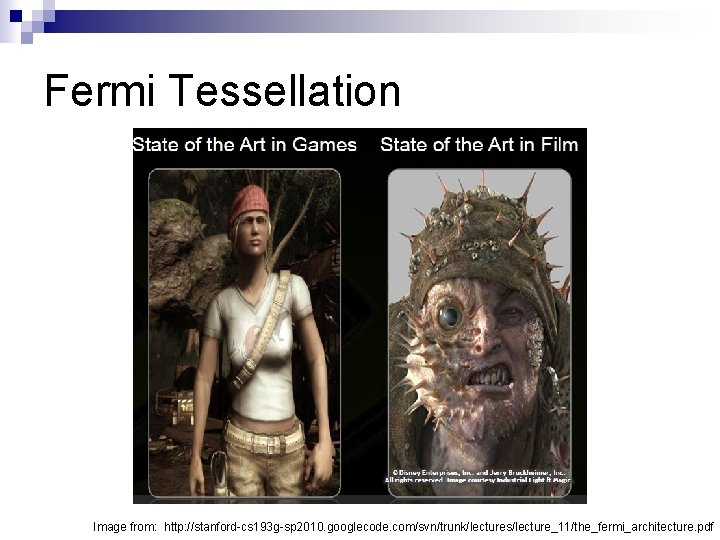

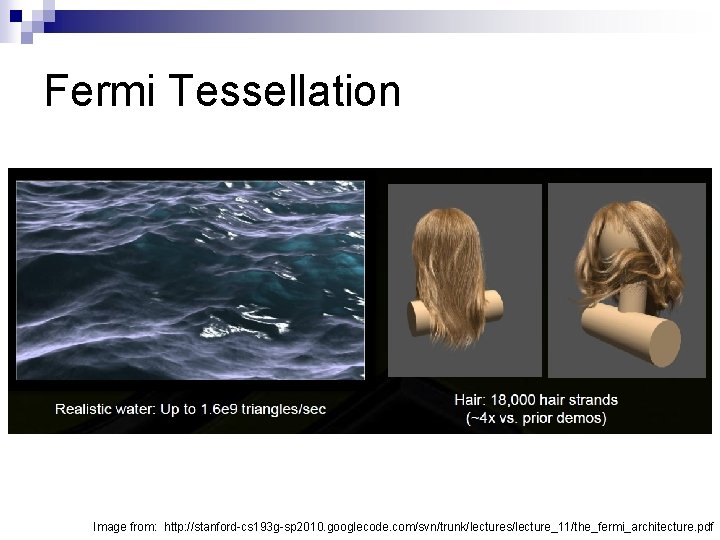

Fermi Tessellation

Image from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

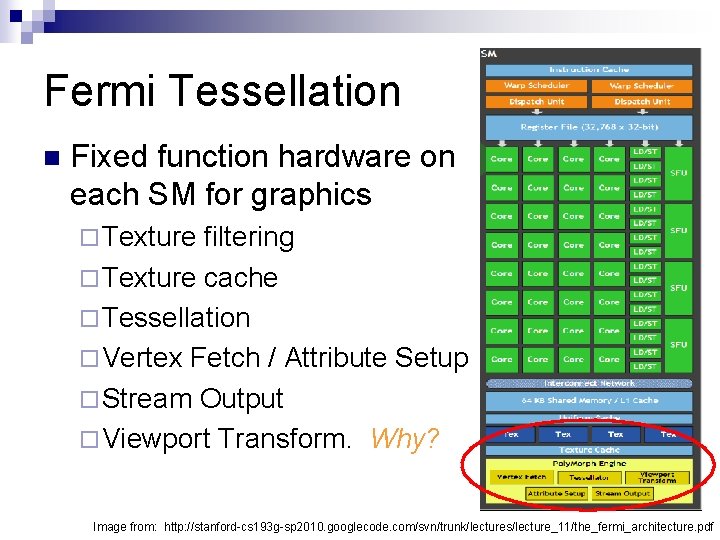

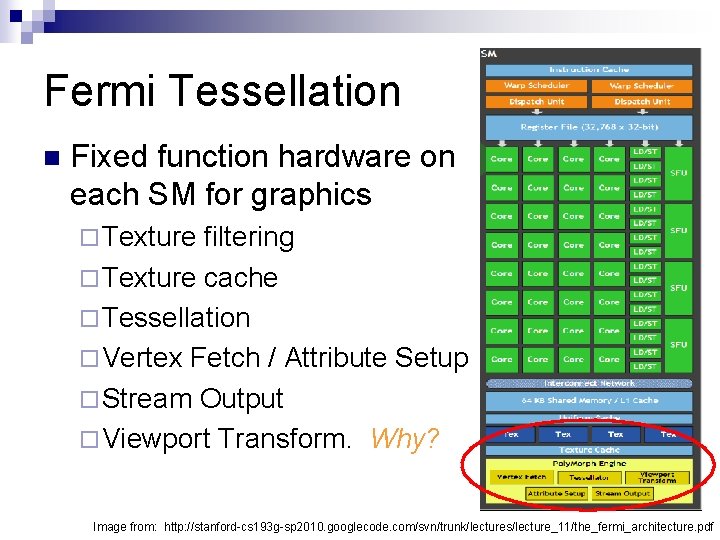

Fermi Tessellation

- Fixed function hardware on each SM for graphics
  - Texture filtering
  - Texture cache
  - Tessellation
  - Vertex Fetch / Attribute Setup
  - Stream Output
  - Viewport Transform
- Why?

Image from: http://stanford-cs193g-sp2010.googlecode.com/svn/trunk/lectures/lecture_11/the_fermi_architecture.pdf

Observations

- Becoming easier to port CPU code to the GPU
  - Recursion, fast atomics, L1/L2 caches, faster global memory
- In fact…
- GPUs are starting to look like CPUs
  - Beefier SMs, L1 and L2 caches, dual warp scheduling, double precision, fast atomics