NSF Middleware Initiative GRIDS Center Overview, John McGee

- Slides: 36

John McGee, USC/ISI. June 26, 2002. Internet2 Base CAMP, Boulder, Colorado.

GRIDS Center: Grid Research Integration Development & Support. http://www.grids-center.org. USC/ISI, Chicago, NCSA, SDSC, Wisconsin

Agenda

- Vision for Grid Technology
- GRIDS Center Operations
  - Software Components
  - Packaging and Testing
  - Documentation and Support
  - Testbed
- Globus Security and Resource Discovery
- Campus Enterprise Integration

GRIDS Center overview for Base CAMP | Enabling Distributed Science | www.grids-center.org

Vision for Grid Technologies

Enabling Seamless Collaboration

- Grids help distributed communities pursue common goals:
  - Scientific research
  - Engineering design
  - Education
  - Artistic creation
- Focus is on the enabling mechanisms required for collaboration
  - Resource sharing as a fundamental concept

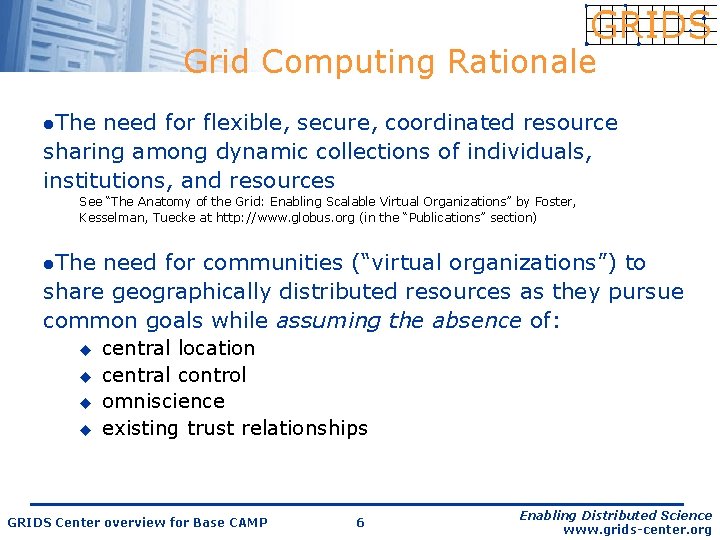

Grid Computing Rationale

- The need for flexible, secure, coordinated resource sharing among dynamic collections of individuals, institutions, and resources
  - See “The Anatomy of the Grid: Enabling Scalable Virtual Organizations” by Foster, Kesselman, Tuecke at http://www.globus.org (in the “Publications” section)
- The need for communities (“virtual organizations”) to share geographically distributed resources as they pursue common goals, while assuming the absence of:
  - central location
  - central control
  - omniscience
  - existing trust relationships

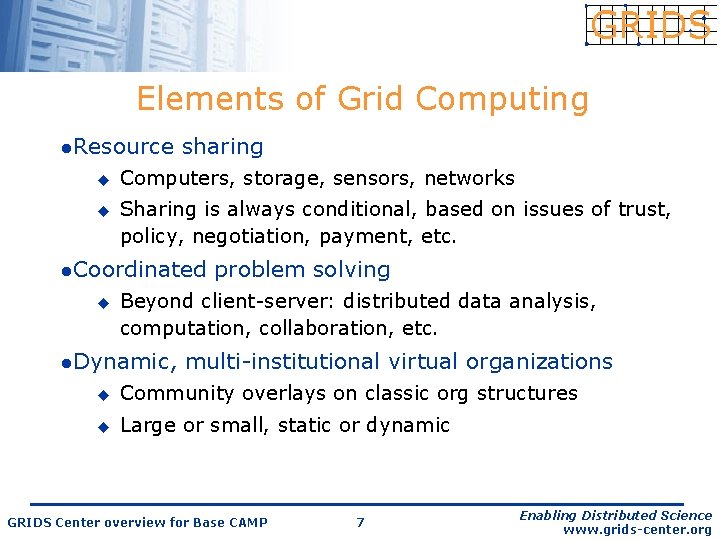

Elements of Grid Computing

- Resource sharing
  - Computers, storage, sensors, networks
  - Sharing is always conditional, based on issues of trust, policy, negotiation, payment, etc.
- Coordinated problem solving
  - Beyond client-server: distributed data analysis, computation, collaboration, etc.
- Dynamic, multi-institutional virtual organizations
  - Community overlays on classic org structures
  - Large or small, static or dynamic

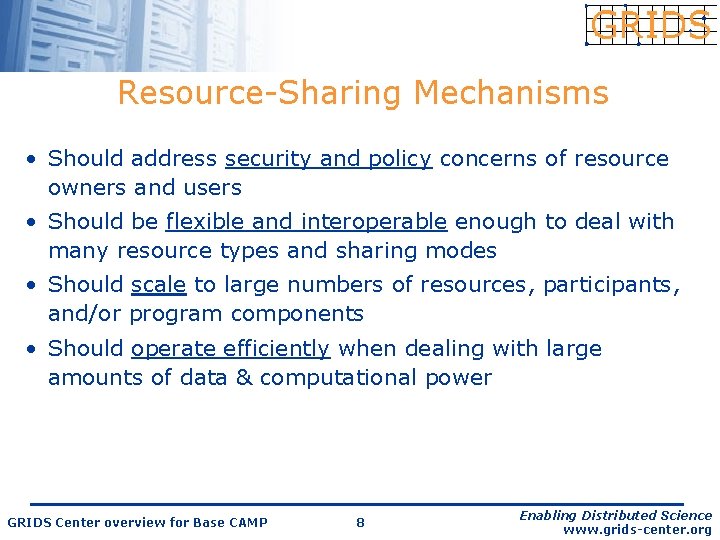

Resource-Sharing Mechanisms

- Should address the security and policy concerns of resource owners and users
- Should be flexible and interoperable enough to deal with many resource types and sharing modes
- Should scale to large numbers of resources, participants, and/or program components
- Should operate efficiently when dealing with large amounts of data and computational power

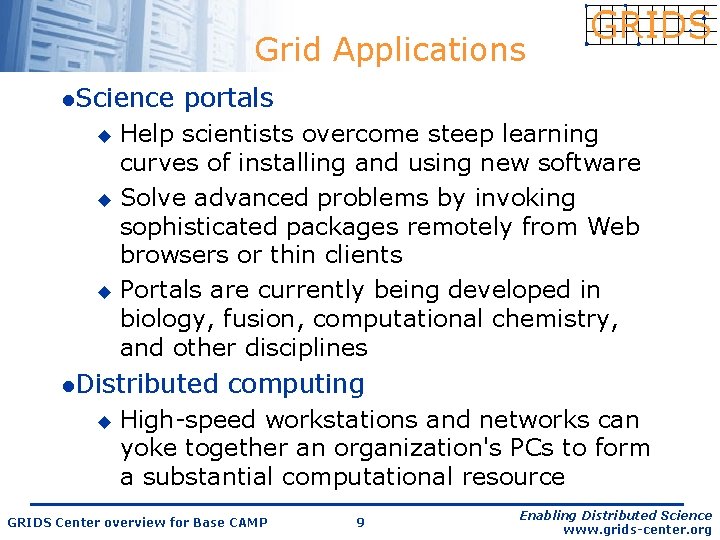

Grid Applications

- Science portals
  - Help scientists overcome the steep learning curves of installing and using new software
  - Solve advanced problems by invoking sophisticated packages remotely from Web browsers or thin clients
  - Portals are currently being developed in biology, fusion, computational chemistry, and other disciplines
- Distributed computing
  - High-speed workstations and networks can yoke together an organization's PCs to form a substantial computational resource

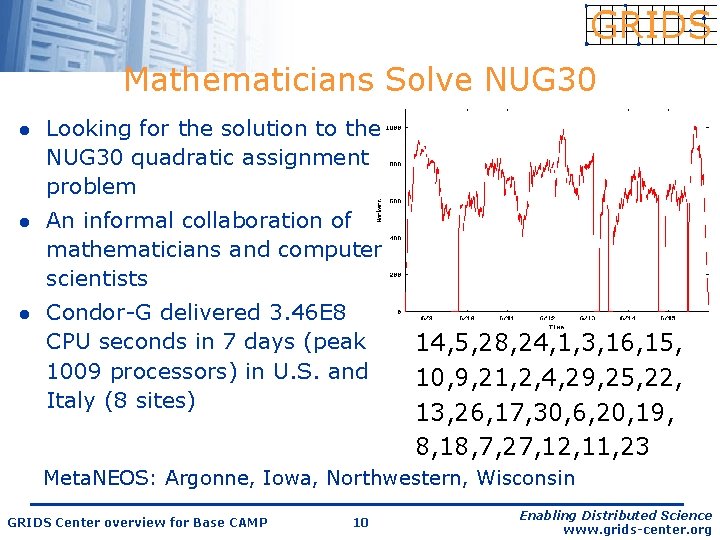

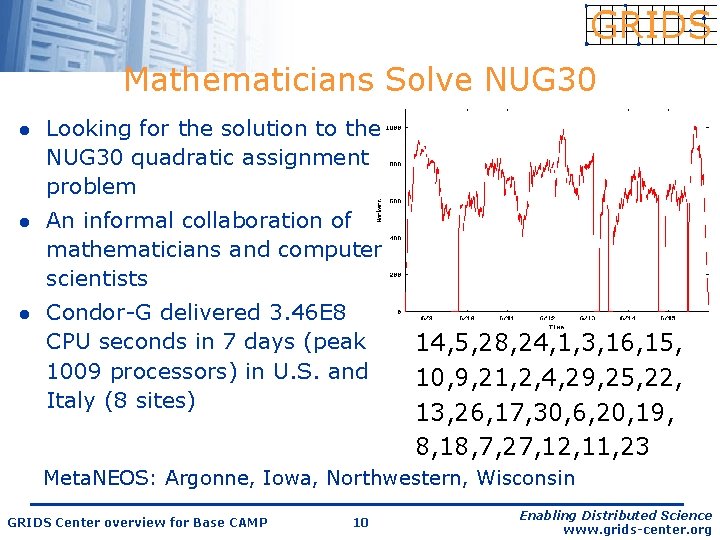

Mathematicians Solve NUG30

- Looking for the solution to the NUG30 quadratic assignment problem
- An informal collaboration of mathematicians and computer scientists
- Condor-G delivered 3.46E8 CPU seconds in 7 days (peak 1009 processors) at 8 sites in the U.S. and Italy
- Solution: 14, 5, 28, 24, 1, 3, 16, 15, 10, 9, 21, 2, 4, 29, 25, 22, 13, 26, 17, 30, 6, 20, 19, 8, 18, 7, 27, 12, 11, 23
- MetaNEOS: Argonne, Iowa, Northwestern, Wisconsin
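
The figures on this slide can be cross-checked with a little arithmetic, and the listed solution can be confirmed to be a permutation of 1..30. The sketch below does both and, to show what "quadratic assignment" means, evaluates the standard Koopmans-Beckmann objective on a tiny instance by brute force. The 3×3 flow and distance matrices are hypothetical toy data, not the NUG30 matrices, and the exhaustive search is only for illustration.

```python
from itertools import permutations

# The NUG30 assignment exactly as listed on the slide (1-based).
NUG30_SOLUTION = [14, 5, 28, 24, 1, 3, 16, 15, 10, 9, 21, 2, 4, 29, 25,
                  22, 13, 26, 17, 30, 6, 20, 19, 8, 18, 7, 27, 12, 11, 23]

def is_permutation(p, n):
    """Return True if p contains each of 1..n exactly once."""
    return sorted(p) == list(range(1, n + 1))

def qap_cost(flow, dist, p):
    """Koopmans-Beckmann QAP objective for a 0-based assignment p:
    the sum over all i, j of flow[i][j] * dist[p[i]][p[j]]."""
    n = len(p)
    return sum(flow[i][j] * dist[p[i]][p[j]] for i in range(n) for j in range(n))

def brute_force_qap(flow, dist):
    """Exhaustive search over all n! assignments; only feasible for tiny n.
    For n = 30 there are 30! candidates, so this approach is hopeless there."""
    n = len(flow)
    best = min(permutations(range(n)), key=lambda p: qap_cost(flow, dist, p))
    return best, qap_cost(flow, dist, best)

# Average concurrency implied by the slide: CPU seconds / wall-clock seconds.
CPU_SECONDS = 3.46e8
WALL_SECONDS = 7 * 24 * 3600          # 7 days = 604,800 s
average_processors = CPU_SECONDS / WALL_SECONDS

# Hypothetical toy instance (NOT the NUG30 data).
FLOW = [[0, 5, 2],
        [5, 0, 3],
        [2, 3, 0]]
DIST = [[0, 1, 4],
        [1, 0, 2],
        [4, 2, 0]]

if __name__ == "__main__":
    print(is_permutation(NUG30_SOLUTION, 30))   # True
    print(round(average_processors))            # 572 (the slide quotes a peak of 1009)
    print(brute_force_qap(FLOW, DIST))
```

In other words, the week-long run kept roughly 572 processors busy on average, a bit over half of the quoted peak.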

More Grid Applications

- Large-scale data analysis
  - Science increasingly relies on large datasets that benefit from distributed computing and storage
- Computer-in-the-loop instrumentation
  - Data from telescopes, synchrotrons, and electron microscopes are traditionally archived for batch processing
  - Grids are permitting quasi-real-time analysis that enhances the instruments’ capabilities
  - E.g., with sophisticated “on-demand” software, astronomers may be able to use automated detection techniques to zoom in on solar flares as they occur

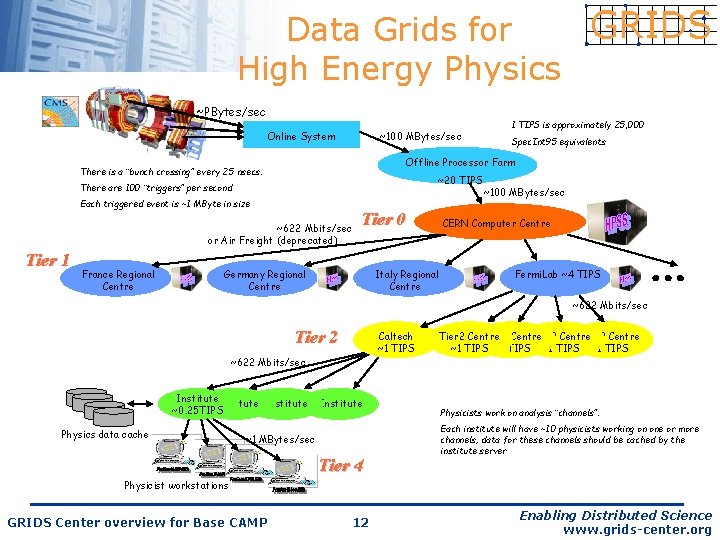

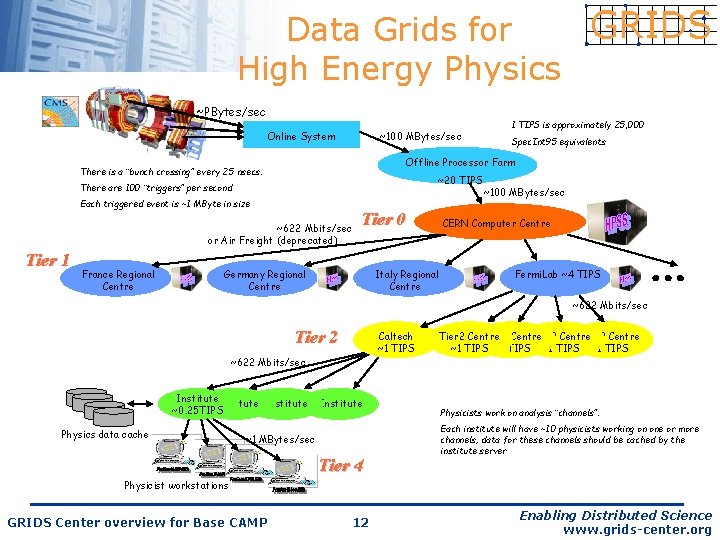

Data Grids for High Energy Physics (tiered data-distribution diagram)

- There is a “bunch crossing” every 25 nsecs, feeding ~PBytes/sec into the online system
- There are 100 “triggers” per second, and each triggered event is ~1 MByte in size, so ~100 MBytes/sec flows to the offline processor farm (~20 TIPS)
- Tier 0: CERN Computer Centre
- Tier 1: regional centres (France, Germany, Italy) and FermiLab (~4 TIPS), linked at ~622 Mbits/sec or by air freight (deprecated)
- Tier 2: Tier 2 centres such as Caltech (~1 TIPS each), linked at ~622 Mbits/sec
- Institutes (~0.25 TIPS), each with a physics data cache
- Tier 4: physicist workstations (~1 MBytes/sec)
- 1 TIPS is approximately 25,000 SpecInt95 equivalents
- Physicists work on analysis “channels”. Each institute will have ~10 physicists working on one or more channels; data for these channels should be cached by the institute server
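
The rates in this diagram are related by simple arithmetic, which the sketch below makes explicit using only the numbers on the slide. The daily-volume figure is an upper bound under an assumption the slide does not make: that the trigger rate is sustained around the clock. Units are decimal (1 TB = 10^6 MB).

```python
# Figures read off the slide's diagram.
BUNCH_CROSSING_NS = 25        # one bunch crossing every 25 ns
TRIGGERS_PER_S = 100          # triggered events kept per second
EVENT_SIZE_MB = 1             # ~1 MByte per triggered event
LINK_MBIT_S = 622             # link speed quoted between tiers
SPECINT95_PER_TIPS = 25_000   # "1 TIPS is approximately 25,000 SpecInt95 equivalents"
TIER0_FARM_TIPS = 20          # offline processor farm

crossings_per_s = 1_000_000_000 // BUNCH_CROSSING_NS     # 40,000,000 (40 MHz)
crossings_per_trigger = crossings_per_s // TRIGGERS_PER_S  # 400,000 crossings per kept event
offline_mb_per_s = TRIGGERS_PER_S * EVENT_SIZE_MB         # 100 MB/s, as in the diagram
link_mb_per_s = LINK_MBIT_S / 8                           # 77.75 MB/s
tier0_specint95 = TIER0_FARM_TIPS * SPECINT95_PER_TIPS    # 500,000 SpecInt95

# Upper bound only: assumes the 100 Hz trigger rate never pauses.
max_tb_per_day = offline_mb_per_s * 86_400 / 1_000_000    # 8.64 TB/day

if __name__ == "__main__":
    print(f"bunch crossings per second: {crossings_per_s:,}")
    print(f"crossings per triggered event: {crossings_per_trigger:,}")
    print(f"offline stream: {offline_mb_per_s} MB/s vs. a 622 Mbit/s link at {link_mb_per_s} MB/s")
    print(f"Tier 0 farm: {tier0_specint95:,} SpecInt95")
    print(f"at most {max_tb_per_day} TB/day")
```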

Still More Grid Applications

- Collaborative work
  - Researchers often want to aggregate not only data and computing power, but also human expertise
  - Grids enable collaborative problem formulation and data analysis
  - E.g., an astrophysicist who has performed a large, multi-terabyte simulation could let colleagues around the world simultaneously visualize the results, permitting real-time group discussion
  - E.g., civil engineers collaborate to design, execute, and analyze shake table experiments

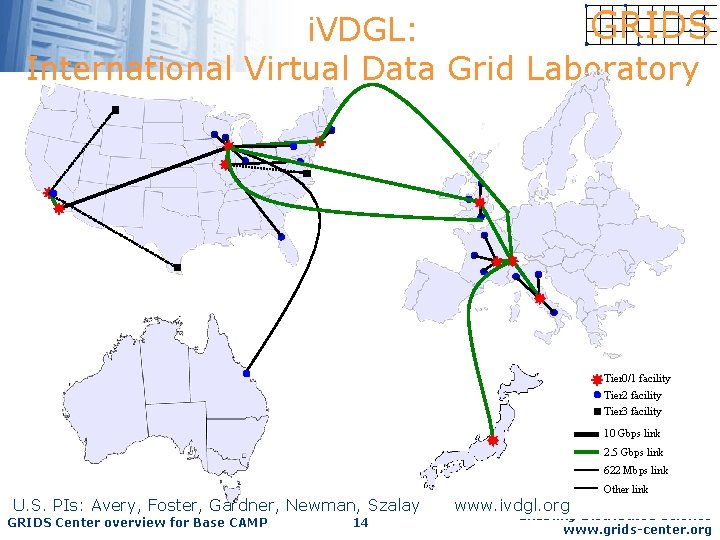

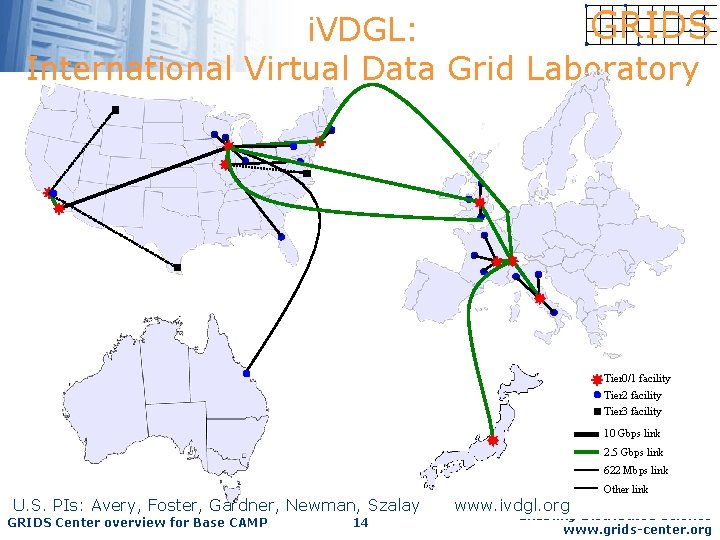

iVDGL: International Virtual Data Grid Laboratory (map of sites)

- Legend: Tier 0/1 facility, Tier 2 facility, Tier 3 facility; 10 Gbps link, 2.5 Gbps link, 622 Mbps link, other link
- U.S. PIs: Avery, Foster, Gardner, Newman, Szalay
- www.ivdgl.org

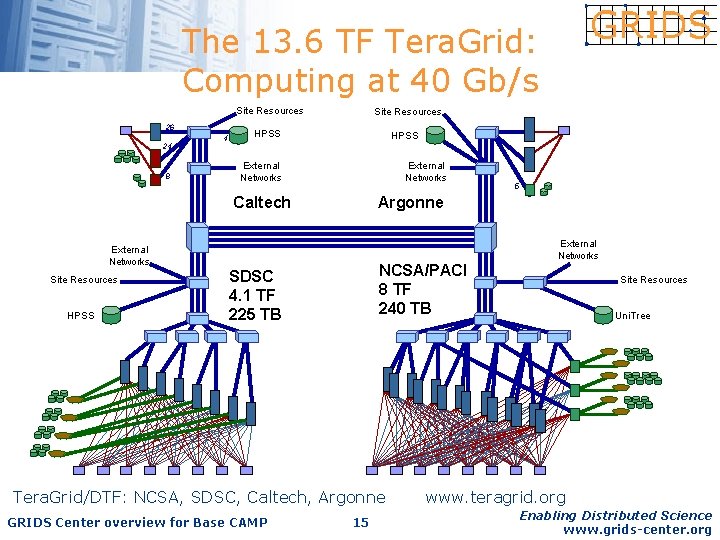

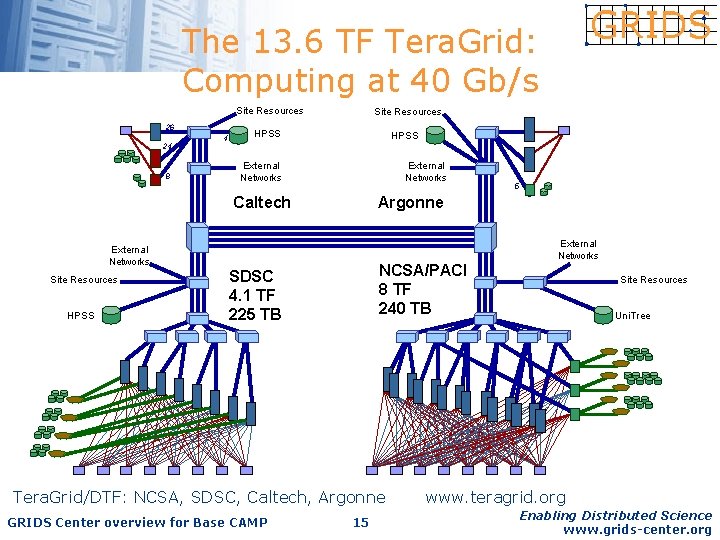

The 13.6 TF TeraGrid: Computing at 40 Gb/s (site diagram)

- TeraGrid/DTF sites: NCSA, SDSC, Caltech, Argonne
  - NCSA/PACI: 8 TF, 240 TB
  - SDSC: 4.1 TF, 225 TB
- Each site contributes its own site resources, archival storage (HPSS or UniTree), and external network connections
- www.teragrid.org

Portal Example

- NPACI HotPage
  - https://hotpage.npaci.edu/

Software Components

General Approach

- Define Grid protocols & APIs
  - Protocol-mediated access to remote resources
  - Integrate and extend existing standards
  - “On the Grid” = speak “Intergrid” protocols
- Develop a reference implementation
  - Open source Globus Toolkit
  - Client and server SDKs, services, tools, etc.
- Grid-enable a wide variety of tools
  - Globus Toolkit, FTP, SSH, Condor, SRB, MPI, …
- Learn through deployment and applications

Software Components

- GRIDS Center software is a collection of packages developed in the academic research community
  - Protocol and architecture approach
  - Reference implementations
- Each package has at least 2 production-level implementations before inclusion in the GRIDS Center Software Suite

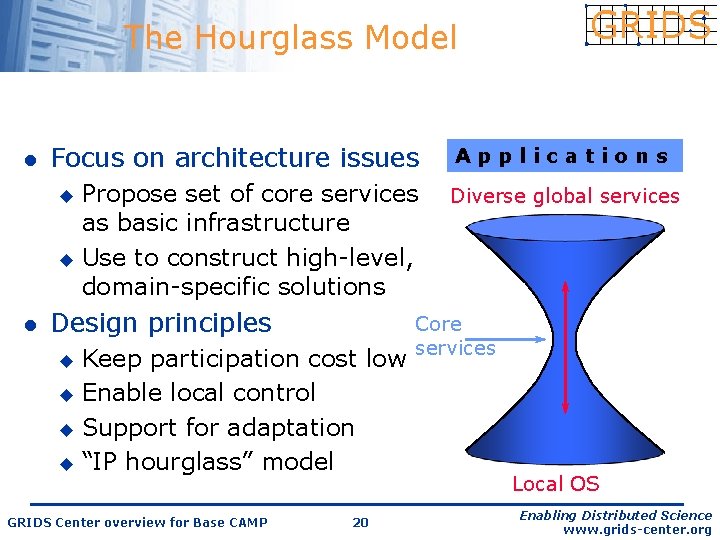

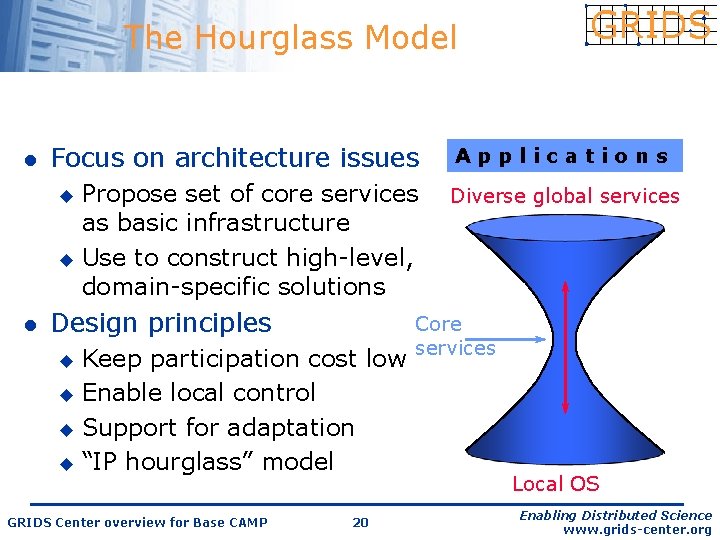

The Hourglass Model

- Focus on architecture issues
  - Propose a set of core services as basic infrastructure
  - Use them to construct high-level, domain-specific solutions
- Design principles
  - Keep participation cost low
  - Enable local control
  - Support for adaptation
  - “IP hourglass” model
- Diagram layers, top to bottom: Applications; Diverse global services; Core services; Local OS

Software Components

- Globus Toolkit
  - Core Grid computing toolkit
- Condor-G
  - Advanced job submission and management infrastructure
- Network Weather Service
  - Network capability prediction
- KX.509 / KCA (NMI-EDIT)
  - Kerberos to PKI

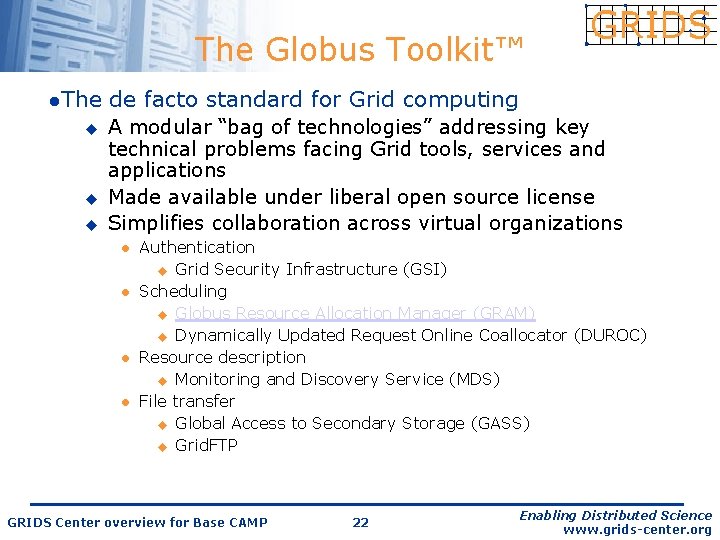

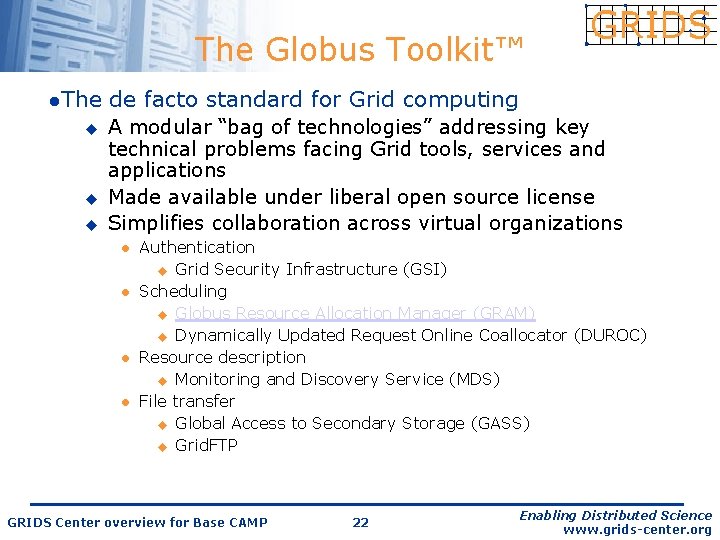

The Globus Toolkit™

- The de facto standard for Grid computing
  - A modular “bag of technologies” addressing key technical problems facing Grid tools, services and applications
  - Made available under a liberal open source license
  - Simplifies collaboration across virtual organizations
- Authentication
  - Grid Security Infrastructure (GSI)
- Scheduling
  - Globus Resource Allocation Manager (GRAM)
  - Dynamically Updated Request Online Coallocator (DUROC)
- Resource description
  - Monitoring and Discovery Service (MDS)
- File transfer
  - Global Access to Secondary Storage (GASS)
  - GridFTP

Condor-G

- NMI-R1 will include Condor-G, an enhanced version of the core Condor software optimized to work with the Globus Toolkit™ for managing Grid jobs

Network Weather Service

- From UC Santa Barbara, NWS monitors and dynamically forecasts the performance of network and computational resources
- Uses a distributed set of performance sensors (network monitors, CPU monitors, etc.) for instantaneous readings
- Numerical models’ ability to predict conditions is analogous to weather forecasting, hence the name
- For use with the Globus Toolkit and Condor, allowing dynamic schedulers to provide statistical Quality-of-Service readings
- NWS forecasts end-to-end TCP/IP performance (bandwidth and latency), available CPU percentage, and available nonpaged memory
- NWS automatically identifies the best forecasting technique for any given resource
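
The last point, picking the best forecasting technique per resource, can be illustrated by running several simple forecasters side by side over a measurement history and trusting whichever has accumulated the smallest error so far. This is a simplified sketch in that spirit, not NWS code: the three forecasters, the squared-error criterion, and the sample readings are all hypothetical choices for this example, and the real service's predictors and error measures may differ.

```python
from statistics import mean, median

# Hypothetical forecaster family; each maps past measurements to a prediction.
def last_value(history):
    return history[-1]

def running_mean(history):
    return mean(history)

def sliding_median(history, window=5):
    return median(history[-window:])

FORECASTERS = {
    "last_value": last_value,
    "running_mean": running_mean,
    "sliding_median": sliding_median,
}

def best_forecast(measurements):
    """Replay the series: at each step t every forecaster predicts
    measurements[t] from measurements[:t], and its squared error is summed.
    Returns (winning forecaster, its prediction of the next value, error table)."""
    if not measurements:
        raise ValueError("need at least one measurement")
    errors = {name: 0.0 for name in FORECASTERS}
    for t in range(1, len(measurements)):
        history = measurements[:t]
        for name, forecast in FORECASTERS.items():
            errors[name] += (forecast(history) - measurements[t]) ** 2
    winner = min(errors, key=errors.get)
    return winner, FORECASTERS[winner](measurements), errors

if __name__ == "__main__":
    # Hypothetical bandwidth readings: a level shift favours last_value,
    # while alternating noise around a stable mean favours running_mean.
    print(best_forecast([1, 1, 1, 1, 10, 10, 10, 10, 10, 10])[:2])
    print(best_forecast([5, 15] * 5)[:2])
```

No single forecaster wins on both series, which is the motivation for selecting per resource rather than fixing one model.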

KX.509 for Converting Kerberos Credentials to PKI

- Stand-alone client program from the University of Michigan
  - For a Kerberos-authenticated user, KX.509 acquires a short-term X.509 certificate that can be used by PKI applications
  - Stores the certificate in the local user's Kerberos ticket file
  - Systems that already have a mechanism for removing unused Kerberos credentials may also automatically remove the X.509 credentials
  - A Web browser may then load a library (PKCS #11) to use these credentials for HTTPS
  - The client reads X.509 credentials from the user’s Kerberos cache and converts them to PEM, the format used by the Globus Toolkit
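
The final conversion step is easy to picture: a PEM certificate is just the DER-encoded bytes in Base64, wrapped at 64 characters between BEGIN/END marker lines. The sketch below illustrates only that encoding. It does not read a Kerberos ticket file, it handles the certificate but not the private key, and it uses placeholder bytes rather than a real DER certificate; the helper names are hypothetical.

```python
import base64
import ssl

PEM_HEADER = "-----BEGIN CERTIFICATE-----"
PEM_FOOTER = "-----END CERTIFICATE-----"

def der_to_pem(der: bytes) -> str:
    """Base64-encode DER bytes and wrap at 64 columns between PEM markers."""
    b64 = base64.b64encode(der).decode("ascii")
    body = [b64[i:i + 64] for i in range(0, len(b64), 64)]
    return "\n".join([PEM_HEADER, *body, PEM_FOOTER]) + "\n"

def pem_to_der(pem: str) -> bytes:
    """Inverse of der_to_pem: drop the marker lines and Base64-decode the rest."""
    lines = [ln.strip() for ln in pem.strip().splitlines()]
    if lines[0] != PEM_HEADER or lines[-1] != PEM_FOOTER:
        raise ValueError("not a PEM certificate block")
    return base64.b64decode("".join(lines[1:-1]))

if __name__ == "__main__":
    fake_der = bytes(range(100))   # placeholder bytes, NOT a real certificate
    pem = der_to_pem(fake_der)
    print(pem)
    # Cross-check against the standard library's converter (format only, no parsing).
    assert pem == ssl.DER_cert_to_PEM_cert(fake_der)
    assert pem_to_der(pem) == fake_der
```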

GRIDS Software Packaging

- GRIDS Center software uses Grid Packaging Technology (GPT) 2.0
  - Perl-based tool eases user installation and setup
  - GPT2: new version enables creation of RPMs
    - Lets users install from binaries with familiar packaging
    - Includes a database of all packages, useful for verifying installations
- Packaging enables:
  - Dependency checking
  - User customization of configuration
  - Easy upgrades, patches
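
Dependency checking of this kind amounts to confirming that every required package is present and then installing in an order where each package follows its prerequisites, i.e. a topological sort. GPT itself is Perl-based; the Python sketch below only illustrates the idea, and the package names in it are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+

def plan_install(bundle):
    """bundle maps each package name to the list of packages it requires.
    Returns (install_order, missing): missing maps a package to prerequisites
    absent from the bundle, and install_order is empty if anything is missing."""
    missing = {
        pkg: sorted(dep for dep in deps if dep not in bundle)
        for pkg, deps in bundle.items()
    }
    missing = {pkg: deps for pkg, deps in missing.items() if deps}
    if missing:
        return [], missing
    try:
        # static_order() yields every package after all of its prerequisites.
        return list(TopologicalSorter(bundle).static_order()), {}
    except CycleError as err:
        raise ValueError(f"circular dependency: {err.args[1]}") from err

if __name__ == "__main__":
    # Hypothetical bundle; names are illustrative, not real GPT package names.
    bundle = {
        "grid_common": [],
        "grid_security": ["grid_common"],
        "job_client": ["grid_security", "grid_common"],
        "job_broker": ["job_client"],
    }
    order, missing = plan_install(bundle)
    print("install order:", order)
    print(plan_install({"job_client": ["grid_security"]}))
```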

Software Testing

- University of Wisconsin is in charge of testing the GRIDS software for NMI releases
  - Platforms to date:
    - Red Hat 7.2 on IA-32
    - Solaris 8.0 on SPARC
  - Release 2 additions:
    - Red Hat 7.2 on IA-64
    - AIX-L
- Testing includes:
  - Builds
  - Quality assurance
  - Interoperability of GRIDS components

Technical Support

- First-level tech support handled at NCSA
  - One-stop-shop address for users:
    - nmi-support@nsf-middleware.org
  - All queries go to NCSA, which responds within 24 hours
- Help requests that NCSA can’t answer get forwarded to the people responsible for each of the components:
  - Globus Toolkit (U. of Chicago/Argonne/ISI)
  - Condor-G (U. of Wisconsin)
  - Network Weather Service (UC Santa Barbara)
  - KX.509 (Michigan)
  - Pubcookie (U. Washington)
  - CPM

Integration Issues

- NMI testbed sites will be early adopters, seeking integration of campus infrastructure and Grid computing
- Via NMI partnerships, GRIDS will help identify points of intersection and divergence between Grid and enterprise computing
  - Authorization, authentication and security
  - Directory services
  - Emphasis is on open standards and architectures as the route to successful collaboration

A few specifics on the Globus Toolkit

Grid Security Infrastructure (GSI)

- The Globus Toolkit implements GSI protocols and APIs to address Grid security needs
- GSI protocols extend standard public key protocols
  - Standards: X.509 & SSL/TLS
  - Extensions: X.509 Proxy Certificates & Delegation
- GSI extends the standard GSS-API

Generic Security Service API

- The GSS-API is the IETF standard API (RFC 2743) for adding authentication, delegation, message integrity, and message protection to apps
  - For secure communication between two parties over a reliable channel (e.g., TCP)
- GSS-API separates security from communication, which allows security to be easily added to existing communication code
  - Effectively placing transformation filters on each end of the communication link
- Globus Toolkit components all use GSS-API
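
The "transformation filter" idea can be illustrated with a toy per-message integrity wrapper, loosely modelled on the GSS-API's wrap/unwrap calls (GSS_Wrap/GSS_Unwrap). It rests on a strong assumption: both ends already hold a shared session key, which in the real GSS-API would come out of security-context establishment (in GSI, the SSL/TLS handshake). The class and its token layout are hypothetical; it provides integrity and replay detection only, not confidentiality. The application still moves opaque bytes over its existing channel, and only the two ends change.

```python
import hashlib
import hmac
import secrets

class ToyIntegrityFilter:
    """Hypothetical integrity-only filter in the spirit of GSS wrap/unwrap.
    Token layout: 8-byte sequence number | 32-byte HMAC-SHA256 tag | payload."""

    SEQ_LEN, TAG_LEN = 8, 32

    def __init__(self, session_key: bytes):
        self._key = session_key   # assumed to come from context establishment
        self._send_seq = 0
        self._recv_seq = 0

    def _tag(self, seq: bytes, payload: bytes) -> bytes:
        return hmac.new(self._key, seq + payload, hashlib.sha256).digest()

    def wrap(self, payload: bytes) -> bytes:
        seq = self._send_seq.to_bytes(self.SEQ_LEN, "big")
        self._send_seq += 1
        return seq + self._tag(seq, payload) + payload

    def unwrap(self, token: bytes) -> bytes:
        seq = token[:self.SEQ_LEN]
        tag = token[self.SEQ_LEN:self.SEQ_LEN + self.TAG_LEN]
        payload = token[self.SEQ_LEN + self.TAG_LEN:]
        if not hmac.compare_digest(tag, self._tag(seq, payload)):
            raise ValueError("integrity check failed")
        if int.from_bytes(seq, "big") != self._recv_seq:
            raise ValueError("replayed or out-of-order token")
        self._recv_seq += 1
        return payload

if __name__ == "__main__":
    key = secrets.token_bytes(32)     # stand-in for the negotiated session key
    client, server = ToyIntegrityFilter(key), ToyIntegrityFilter(key)
    channel = []                      # stand-in for an existing TCP connection
    channel.append(client.wrap(b"STOR results.dat"))
    print(server.unwrap(channel.pop(0)))
```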

Delegation

- Delegation = remote creation of a (second-level) proxy credential
  - New key pair generated remotely on server
  - Proxy cert and public key sent to client
  - Client signs proxy cert and returns it
  - Server (usually) puts proxy in /tmp
- Allows remote process to authenticate on behalf of the user
  - Remote process “impersonates” the user
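
The key property of these steps is that the new proxy's private key is generated on the server and never crosses the network; only the unsigned certificate and the client's signature do. The sketch below traces that message flow and nothing more. It uses NO real public-key cryptography: the "public key" is a hash of the private key and the "signature" is an HMAC, both hypothetical stand-ins chosen so the example runs with the standard library. The "/CN=proxy" subject suffix follows the legacy Globus proxy naming convention, and the user DN is made up.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass, field

@dataclass
class ToyKeyPair:
    """NOT real public-key crypto: the 'public key' is only a hash of the
    private key, enough to trace which party holds which secret."""
    private: bytes = field(default_factory=lambda: secrets.token_bytes(32))

    @property
    def public(self) -> bytes:
        return hashlib.sha256(self.private).digest()

@dataclass
class ToyProxyCert:
    subject: str
    issuer: str
    public_key: bytes
    signature: bytes = b""

    def signed_part(self) -> bytes:
        return self.subject.encode() + b"|" + self.issuer.encode() + b"|" + self.public_key

def toy_sign(key: ToyKeyPair, cert: ToyProxyCert) -> None:
    # Stand-in for the client's real signature over the proxy certificate.
    cert.signature = hmac.new(key.private, cert.signed_part(), hashlib.sha256).digest()

def delegate(client_subject: str, client_key: ToyKeyPair):
    """Simulate the delegation steps; returns (server's credential, wire log)."""
    wire = []
    # 1. Server generates a new key pair; the private half stays on the server.
    proxy_key = ToyKeyPair()
    # 2. Server sends the unsigned proxy cert carrying the new public key.
    cert = ToyProxyCert(subject=client_subject + "/CN=proxy",
                        issuer=client_subject, public_key=proxy_key.public)
    wire.append(("server->client", cert.signed_part()))
    # 3. Client signs the proxy cert with its own key and returns the signature.
    toy_sign(client_key, cert)
    wire.append(("client->server", cert.signature))
    # 4. Server keeps cert + private key together (GSI usually writes a file in /tmp).
    return (cert, proxy_key), wire

if __name__ == "__main__":
    user_key = ToyKeyPair()
    (cert, proxy_key), wire = delegate("/C=US/O=Globus/CN=Jane Doe/CN=proxy", user_key)
    print(cert.subject)
    print("private key crossed the wire:", any(proxy_key.private in msg for _, msg in wire))
```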

Limited Proxy

- During delegation, the client can elect to delegate only a “limited proxy”, rather than a “full” proxy
  - The GRAM (job submission) client does this
- Each service decides whether it will allow authentication with a limited proxy
  - Job manager service requires a full proxy
  - GridFTP server allows either a full or a limited proxy to be used
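
How a service might tell the two kinds of proxy apart and apply its own policy can be sketched as below. This assumes the legacy GT2 naming convention, in which a full proxy's subject ends in "CN=proxy" and a limited proxy's in "CN=limited proxy", and further assumes that any limited proxy in the chain makes the whole credential limited. The policy table encodes only the two cases on the slide; the service keys, the acceptance of end-entity certificates, and the sample DN are hypothetical.

```python
from enum import Enum

class Credential(Enum):
    END_ENTITY = "end-entity certificate"
    FULL_PROXY = "full proxy"
    LIMITED_PROXY = "limited proxy"

def classify(subject_dn: str) -> Credential:
    """Classify a credential by the proxy CN components appended to its subject
    (assumes the legacy 'CN=proxy' / 'CN=limited proxy' convention)."""
    components = [c for c in subject_dn.split("/") if c]
    if "CN=limited proxy" in components:
        return Credential.LIMITED_PROXY
    if "CN=proxy" in components:
        return Credential.FULL_PROXY
    return Credential.END_ENTITY

# Per-service policy from the slide; end-entity certificates assumed acceptable to both.
SERVICE_ACCEPTS = {
    "job-manager": {Credential.END_ENTITY, Credential.FULL_PROXY},
    "gridftp": {Credential.END_ENTITY, Credential.FULL_PROXY, Credential.LIMITED_PROXY},
}

def authorize(service: str, subject_dn: str) -> bool:
    """True if the named service accepts this kind of credential."""
    return classify(subject_dn) in SERVICE_ACCEPTS[service]

if __name__ == "__main__":
    limited = "/C=US/O=Globus/CN=Jane Doe/CN=proxy/CN=limited proxy"
    for service in SERVICE_ACCEPTS:
        print(service, authorize(service, limited))
```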

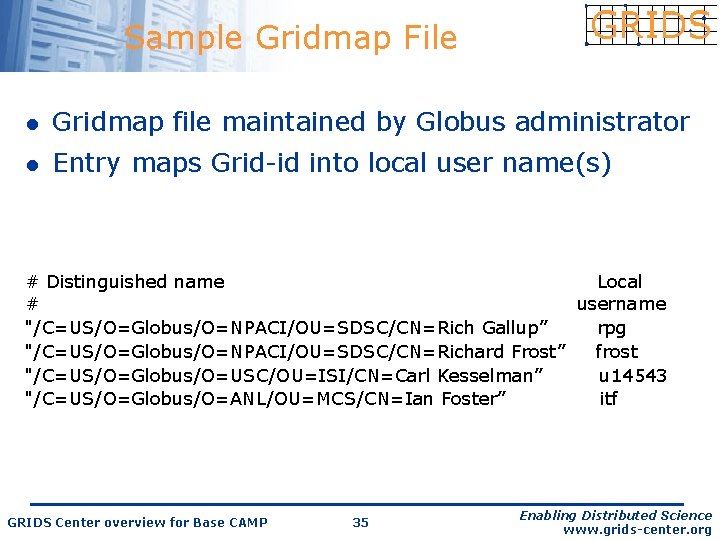

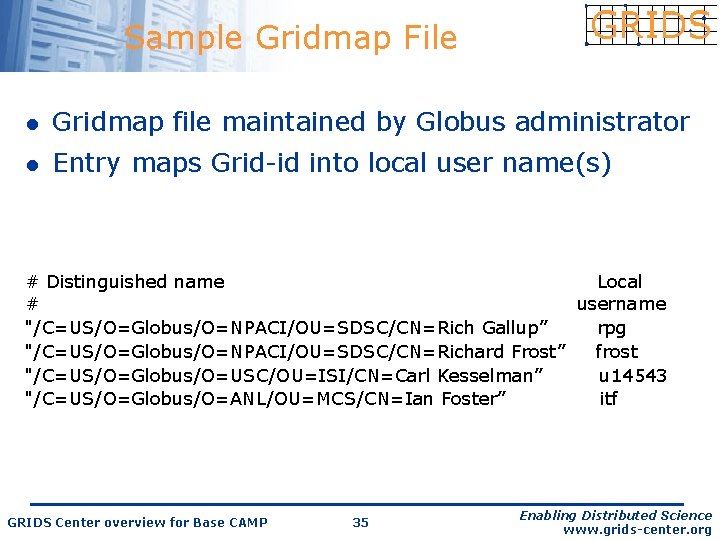

Sample Gridmap File

- Gridmap file maintained by the Globus administrator
- Each entry maps a Grid ID (distinguished name) to local user name(s):
  - `# Distinguished name                              Local username`
  - `"/C=US/O=Globus/O=NPACI/OU=SDSC/CN=Rich Gallup" rpg`
  - `"/C=US/O=Globus/O=NPACI/OU=SDSC/CN=Richard Frost" frost`
  - `"/C=US/O=Globus/O=USC/OU=ISI/CN=Carl Kesselman" u14543`
  - `"/C=US/O=Globus/O=ANL/OU=MCS/CN=Ian Foster" itf`
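
Because each entry is a quoted distinguished name followed by local account name(s), a grid-mapfile like the sample above can be read with a shell-style tokenizer. The sketch below assumes multiple accounts are given as a comma-separated list and treats `#` lines as comments; it is an illustrative parser, not the Globus Toolkit's own, and the `jdoe,guest` entry in the usage check is hypothetical.

```python
import shlex

def parse_gridmap(text: str) -> dict[str, list[str]]:
    """Map each quoted distinguished name to its list of local usernames."""
    mapping = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        # POSIX-mode shlex honours the double quotes around the DN and, with
        # comments=True, drops everything after an unquoted '#'.
        fields = shlex.split(line, comments=True)
        if not fields:
            continue                      # blank line or comment
        if len(fields) != 2:
            raise ValueError(f"line {lineno}: expected a quoted DN and usernames: {line!r}")
        dn, users = fields
        mapping[dn] = users.split(",")
    return mapping

SAMPLE = '''\
# Distinguished name                                Local username
"/C=US/O=Globus/O=NPACI/OU=SDSC/CN=Rich Gallup"     rpg
"/C=US/O=Globus/O=NPACI/OU=SDSC/CN=Richard Frost"   frost
"/C=US/O=Globus/O=USC/OU=ISI/CN=Carl Kesselman"     u14543
"/C=US/O=Globus/O=ANL/OU=MCS/CN=Ian Foster"         itf
'''

if __name__ == "__main__":
    gridmap = parse_gridmap(SAMPLE)
    print(gridmap["/C=US/O=Globus/O=ANL/OU=MCS/CN=Ian Foster"])
    print(parse_gridmap('"/O=Grid/CN=Jane Doe" jdoe,guest'))
```

Note that the lookup is keyed on the exact DN string, one entry per user per resource, which is part of why the next slide says the grid-mapfile doesn't scale well.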

Security Issues

- GSI handles authentication, but authorization is a separate issue.
  - Management of authorization on a multi-organization grid is still an interesting problem.
  - The grid-mapfile doesn’t scale well, and works only at the resource level, not the collective level.
  - Data access exacerbates authorization issues, which has led us to CAS (the Community Authorization Service)…