Novelty Detection and Profile Tracking from Massive Data

- Slides: 35

Novelty Detection and Profile Tracking from Massive Data
Jaime Carbonell, Eugene Fink, Santosh Ananthraman


Motivation
Search for interesting patterns in large data sets
Current applications
• Processing of intelligence data
• Prediction of “natural” threats
Future applications
• Scientific discoveries
• Analysis of business data
• … and more …

Outline
• Main results of the ARGUS project
  - Approximate matching
  - Streaming data
  - Novelty detection
• More about approximate matching
  - Records and queries
  - Search for matches
  - Experimental results

Large data sets
Large: From a million (10^6) to several billion (10^10) records
Data: Structured records with numbers, strings, and nominal values
Sets: Databases and streams of records
Specific sets:
• Hospital admissions (1.7 million records)
• Network flow (5 trillion records)
• Federal wire (simulated data)

Main results
We have developed a system that addresses three problems:
• Retrieval of approximate matches for known patterns
• Processing of streaming data
• Identification of new patterns and gradual changes in old patterns

Approximate matching
Fast identification of approximate matches in large sets of records
Examples
• Misspelled names
• Inexact numbers
• Spatial proximity
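Each of the three examples needs its own notion of "close". The slides do not say which distance functions ARGUS uses, so the sketch below assumes three common choices: Levenshtein edit distance for misspelled names, absolute difference for inexact numbers, and Euclidean distance for spatial proximity. The function names are hypothetical.

```python
import math


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, and substitutions that turn a into b."""
    prev = list(range(len(b) + 1))           # distances from "" to prefixes of b
    for i, ca in enumerate(a, start=1):
        curr = [i]                           # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                 # delete ca
                            curr[j - 1] + 1,             # insert cb
                            prev[j - 1] + (ca != cb)))   # substitute (free if equal)
        prev = curr
    return prev[-1]


def numeric_distance(x: float, y: float) -> float:
    """Gap between two inexact numbers."""
    return abs(x - y)


def spatial_distance(p, q) -> float:
    """Euclidean distance between two (x, y) locations."""
    return math.dist(p, q)


print(edit_distance("Jaime", "Jamie"))   # 2: swapped letters cost two substitutions
```

A swapped pair of letters counts as two edits under plain Levenshtein distance; a system that expects many transpositions might prefer a variant that counts them as one.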

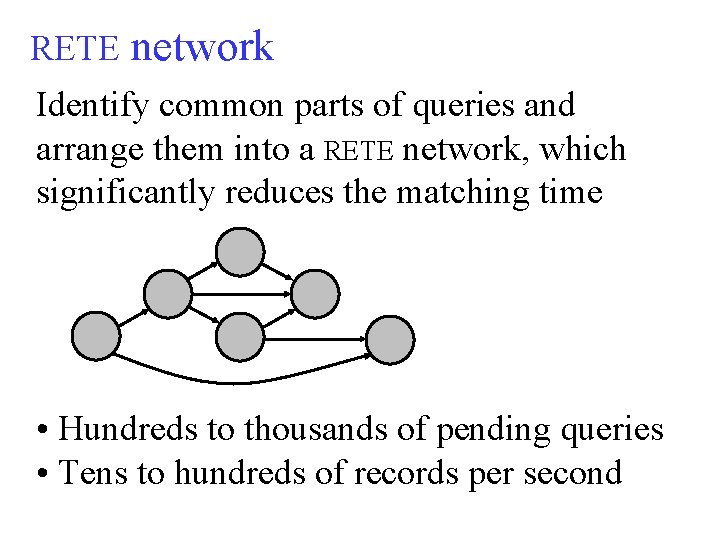

Streaming data
Continuous search for matches in a stream of new records
• Maintain a set of “pending” queries
• Identify matches for these queries among incoming records
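A minimal sketch of the pending-query loop, assuming for illustration that a query is any Boolean predicate on a record (the class and method names are hypothetical). Every incoming record is tested against every pending query:

```python
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, object]
Query = Callable[[Record], bool]   # hypothetical: a query is a predicate on a record


class StreamMatcher:
    """Keeps a set of pending queries and reports matches among new records."""

    def __init__(self) -> None:
        self.pending: Dict[str, Query] = {}

    def add_query(self, name: str, query: Query) -> None:
        self.pending[name] = query

    def remove_query(self, name: str) -> None:
        self.pending.pop(name, None)

    def process(self, records: Iterable[Record]) -> List[Tuple[str, Record]]:
        """Check each incoming record against every pending query."""
        matches = []
        for record in records:
            for name, query in self.pending.items():
                if query(record):
                    matches.append((name, record))
        return matches


matcher = StreamMatcher()
matcher.add_query("asthma", lambda r: r["dx"] == "asthma")
matcher.add_query("over40", lambda r: r["age"] > 40)
print(matcher.process([{"sex": "female", "age": 30, "dx": "asthma"},
                       {"sex": "male", "age": 45, "dx": "flu"}]))
```

This naive loop costs (number of queries) × (number of records) tests, which is what sharing common query parts is meant to reduce.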

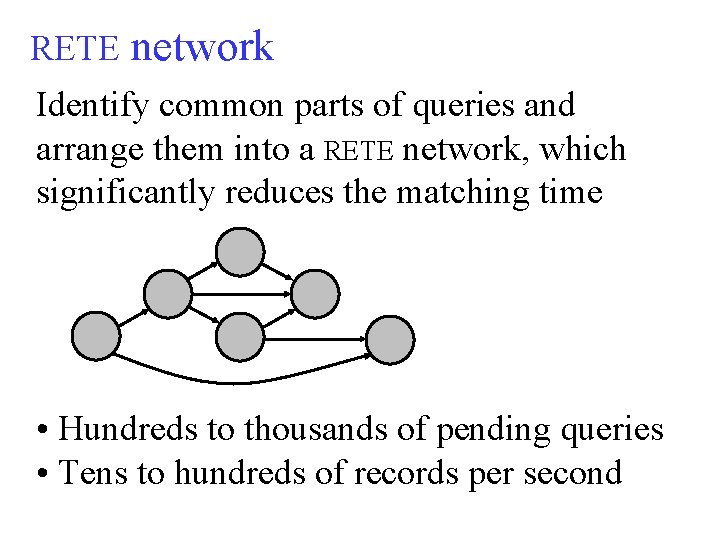

RETE network
Identify common parts of queries and arrange them into a RETE network, which significantly reduces the matching time
• Hundreds to thousands of pending queries
• Tens to hundreds of records per second
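The core saving can be illustrated with a much-simplified sketch of RETE's condition sharing: identical single-attribute conditions that appear in several queries are stored once and evaluated once per record. This is an assumption-laden toy (hypothetical class name; conditions are limited to "attribute value is in a set"), and it omits the join network and partial-match memory of a full RETE implementation.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

# A condition is a hashable test "record[attribute] is one of these values".
Condition = Tuple[str, FrozenSet[object]]


class SharedConditionNetwork:
    def __init__(self) -> None:
        self.queries: Dict[str, List[Condition]] = {}
        # Each distinct condition appears once, with the queries that use it.
        self.users: Dict[Condition, List[str]] = defaultdict(list)

    def add_query(self, name: str, conditions: Dict[str, set]) -> None:
        conds = [(attr, frozenset(vals)) for attr, vals in conditions.items()]
        self.queries[name] = conds
        for c in conds:
            self.users[c].append(name)   # shared with earlier queries if identical

    def match(self, record: Dict[str, object]) -> List[str]:
        """Evaluate each distinct condition once; a query matches when
        all of its conditions are satisfied."""
        satisfied: Dict[str, int] = defaultdict(int)
        for (attr, vals), names in self.users.items():
            if record.get(attr) in vals:
                for n in names:
                    satisfied[n] += 1
        return sorted(n for n, conds in self.queries.items()
                      if satisfied[n] == len(conds))


net = SharedConditionNetwork()
net.add_query("q1", {"sex": {"female"}, "dx": {"asthma", "flu"}})
net.add_query("q2", {"dx": {"asthma", "flu"}})   # reuses q1's Dx condition
print(net.match({"sex": "female", "age": 30, "dx": "asthma"}))   # ['q1', 'q2']
```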

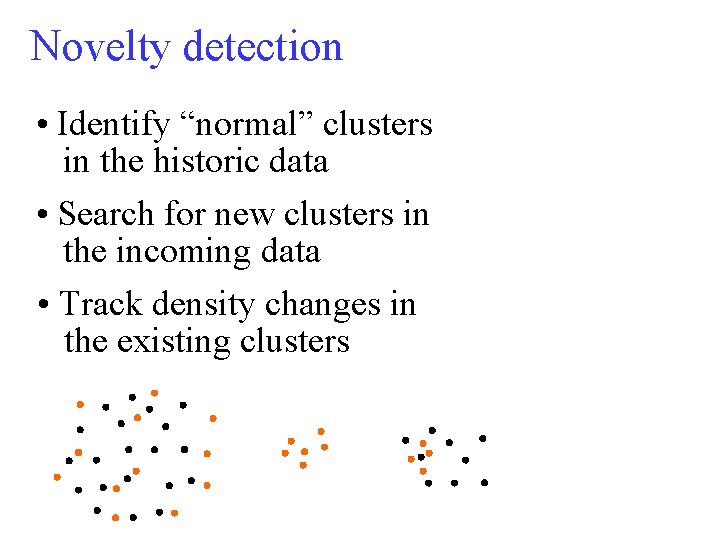

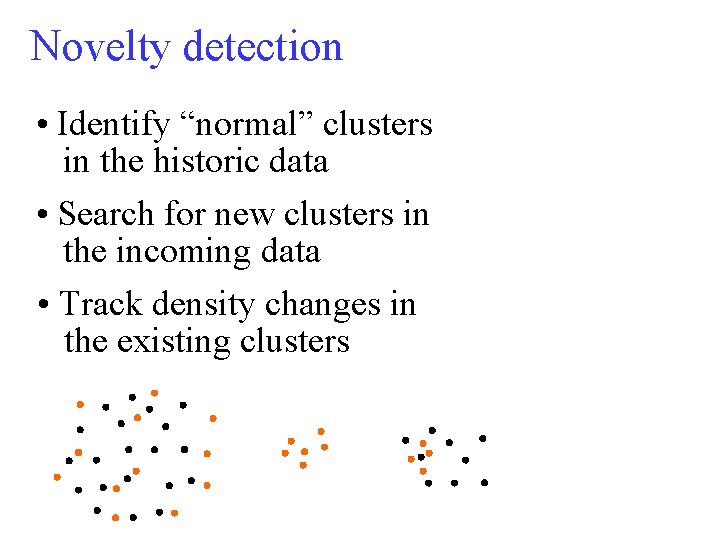

Novelty detection
• Identify “normal” clusters in the historic data
• Search for new clusters in the incoming data
• Track density changes in the existing clusters
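The slides do not specify the clustering method or the change test, so the following is only a sketch under stated assumptions: the "normal" clusters are already given as centers with a common radius, incoming points outside every cluster are reported as candidates for a new cluster, and a cluster's density change is measured as the ratio of its share of incoming points to its share of historic points. All names and the threshold `ratio` are hypothetical.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def nearest_cluster(p: Point, centers: Sequence[Point], radius: float) -> Optional[int]:
    """Index of the closest center within `radius`, or None if p is outside all clusters."""
    best, best_d = None, radius
    for i, c in enumerate(centers):
        d = math.dist(p, c)
        if d <= best_d:
            best, best_d = i, d
    return best


def detect_changes(historic: List[Point], incoming: List[Point],
                   centers: Sequence[Point], radius: float, ratio: float = 2.0):
    """Return (outliers, changed): incoming points outside every cluster, and
    clusters whose share of points grew or shrank by more than `ratio`."""
    def shares(points: List[Point]):
        counts, outside = [0] * len(centers), []
        for p in points:
            i = nearest_cluster(p, centers, radius)
            if i is None:
                outside.append(p)
            else:
                counts[i] += 1
        total = max(len(points), 1)          # avoid dividing by zero on empty input
        return [c / total for c in counts], outside

    old, _ = shares(historic)
    new, outliers = shares(incoming)
    changed: Dict[int, Tuple[float, float]] = {}
    for i, (a, b) in enumerate(zip(old, new)):
        appeared = a == 0 and b > 0
        shifted = a > 0 and (b / a > ratio or b / a < 1 / ratio)
        if appeared or shifted:
            changed[i] = (a, b)
    return outliers, changed
```

In practice, a dense group among the outliers would suggest a new event, and a large ratio for an existing cluster would suggest a growing event.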

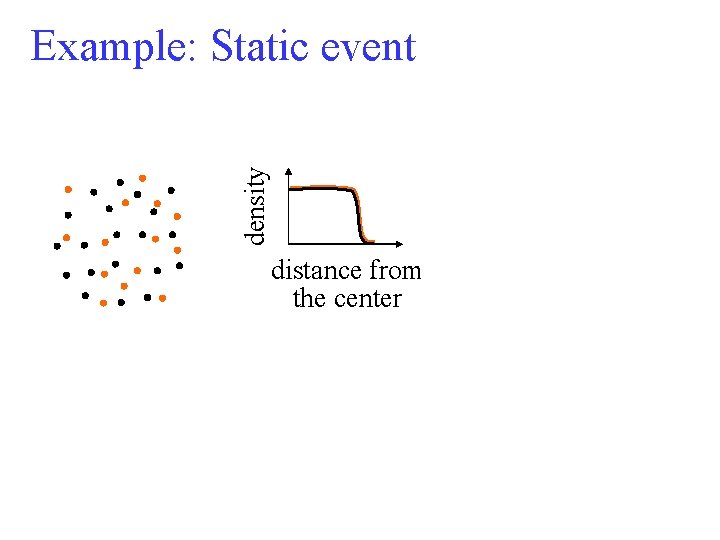

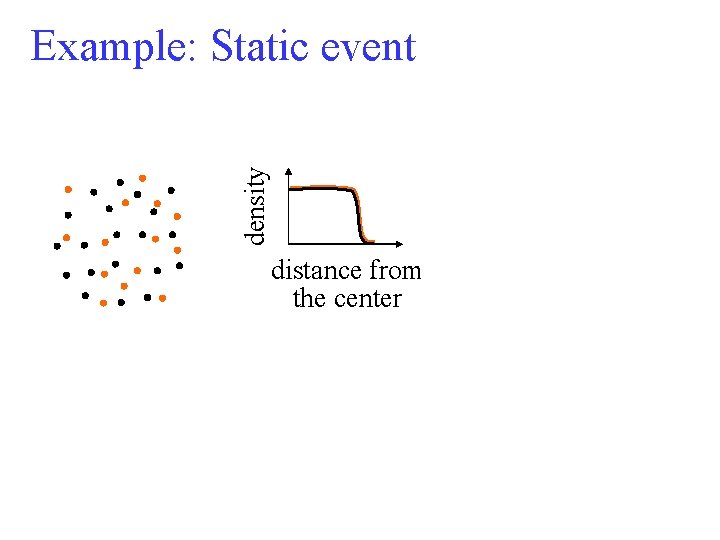

Example: Static event (plot of density vs. distance from the center)

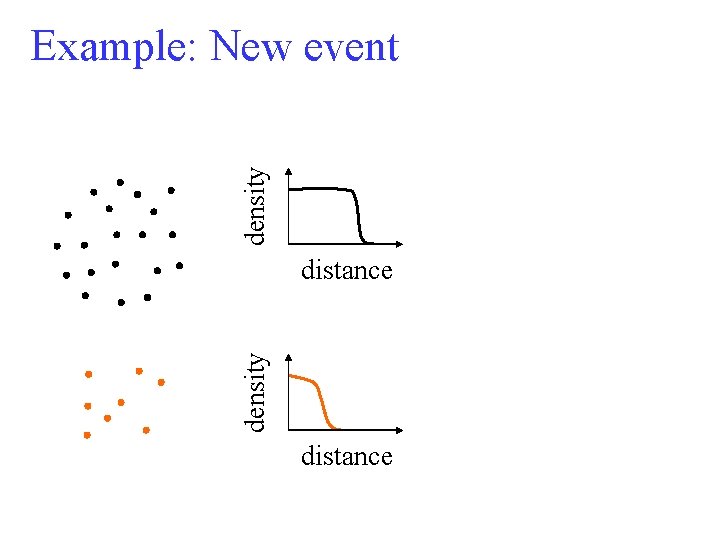

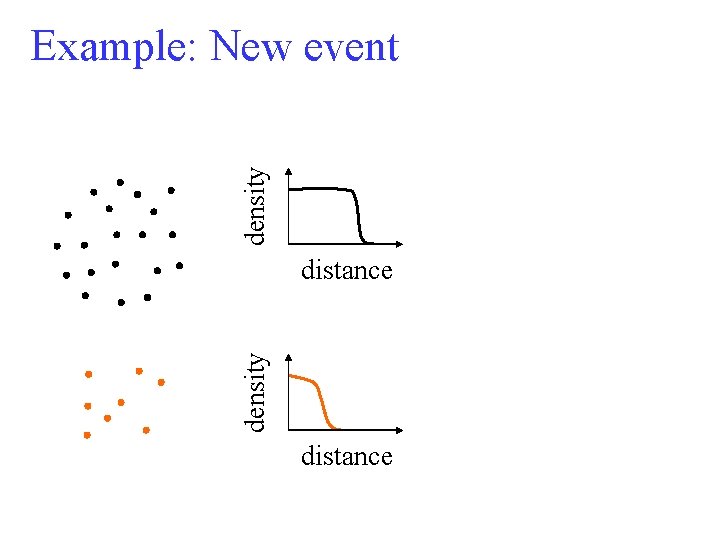

Example: New event (plot of density vs. distance)

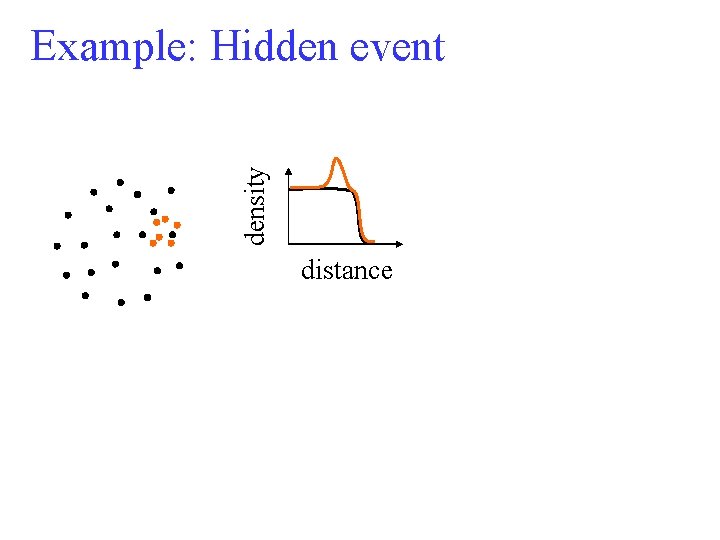

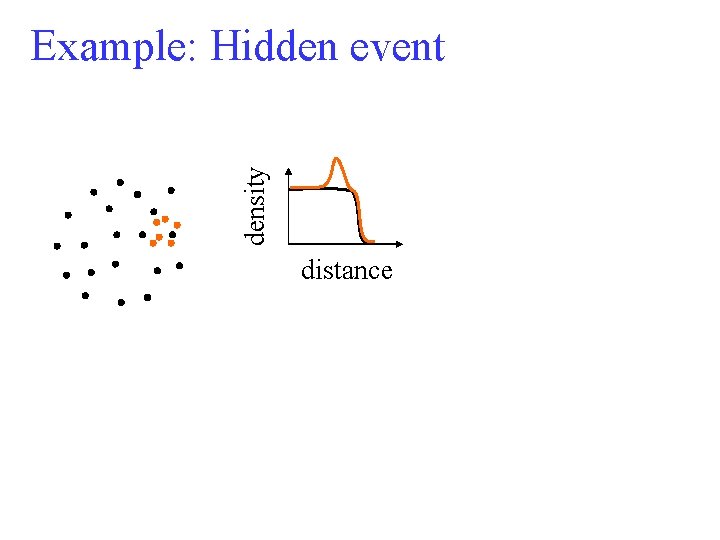

Example: Hidden event (plot of density vs. distance)

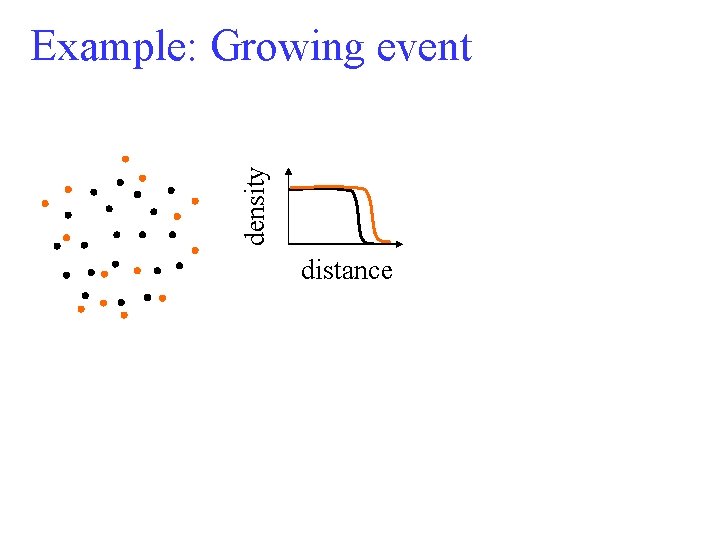

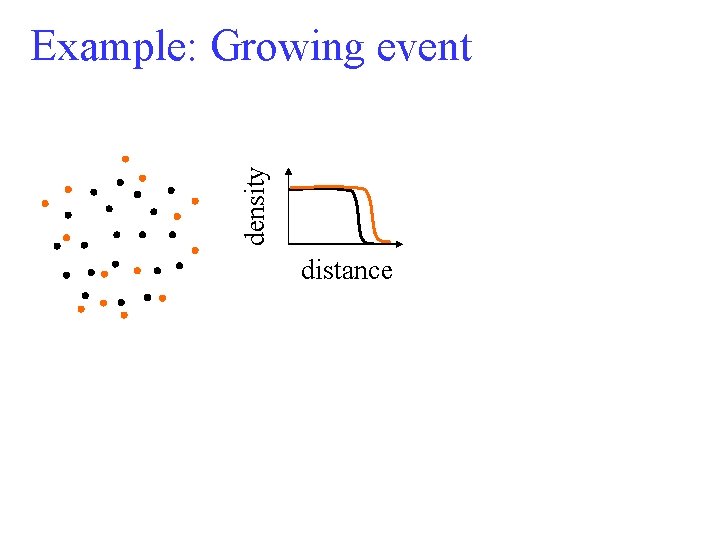

Example: Growing event (plot of density vs. distance)

Visualization
• Display of records, clusters, and queries in two and three dimensions
• Access to data tables and analysis results

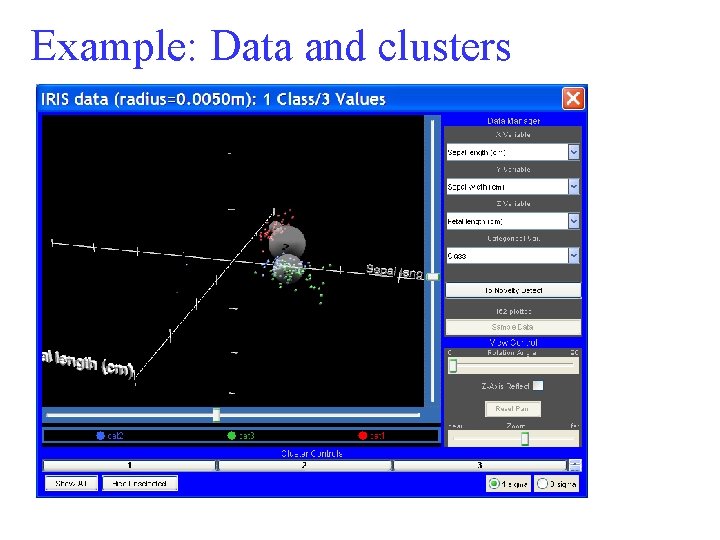

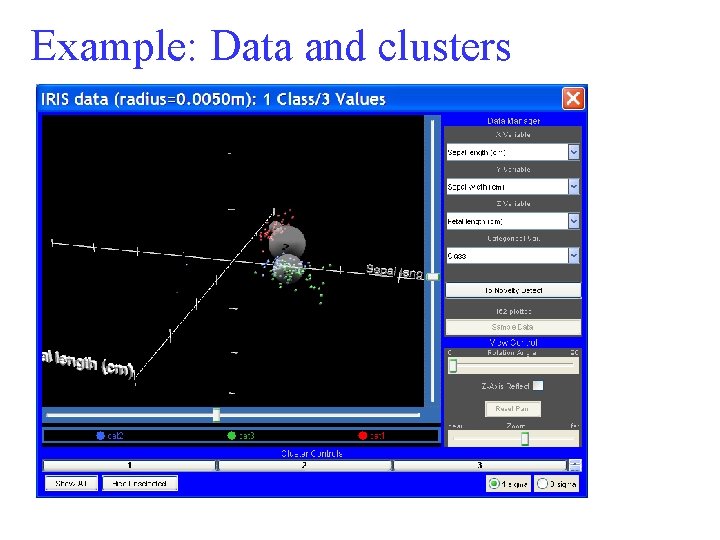

Example: Data and clusters

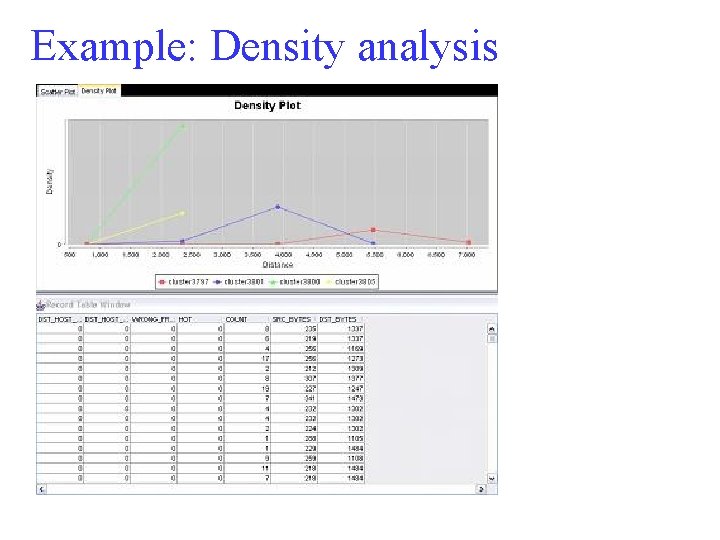

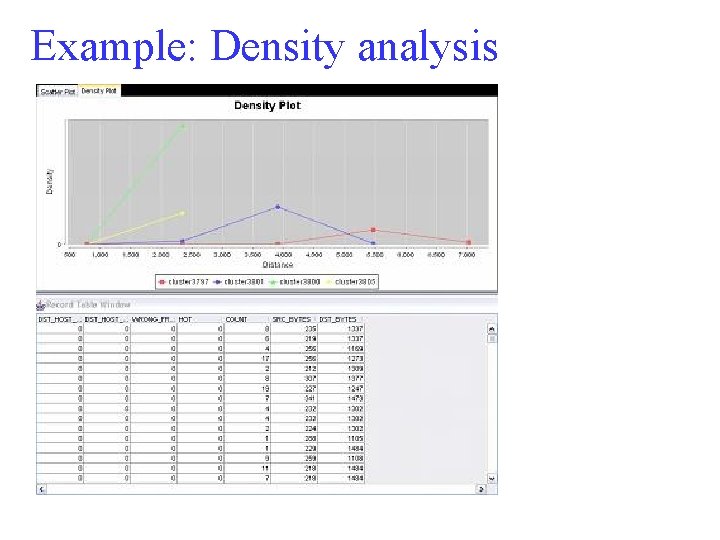

Example: Density analysis

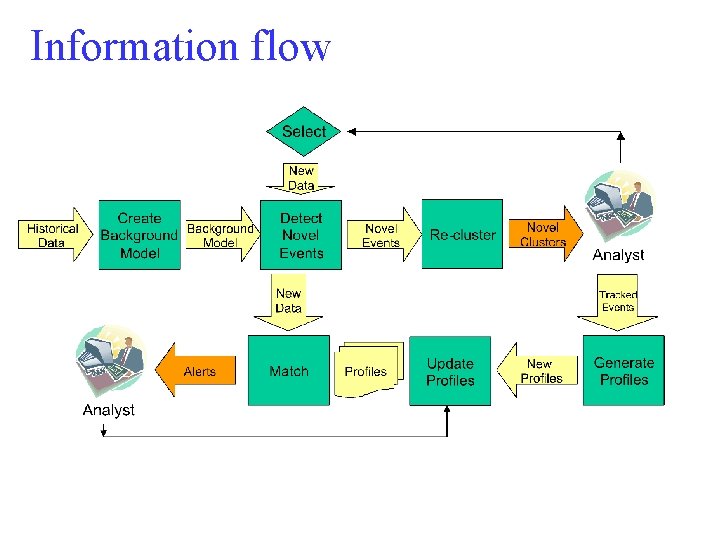

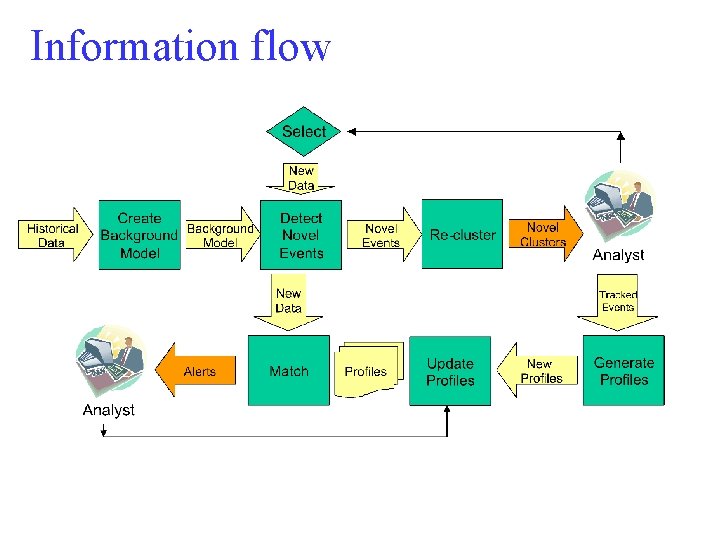

Information flow


Motivation
Retrieval of relevant records based on partially inaccurate information
• Inaccurate records
• Inaccurate queries
• Incomplete knowledge

Table of records
We specify a table of records by a list of attributes
Example
We can describe patients in a hospital by their sex, age, and diagnosis

Records and queries
A record includes a specific value for each attribute
A query may include lists of values and numeric ranges
Example
• Record: Sex: female; Age: 30; Dx: asthma
• Query: Sex: male, female; Age: 20..40; Dx: asthma, flu

Query types
A point query includes a specific value for each attribute
A region query includes lists of values or numeric ranges
Example
• Point query: Sex: female; Age: 30; Dx: asthma
• Region query: Sex: male, female; Age: 20..40; Dx: asthma, flu
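One way to see the relation between the two query types: a point query is a region query whose lists hold a single value and whose ranges have zero width. The sketch below assumes a hypothetical representation in which each attribute maps to either a set of nominal values or an inclusive numeric range `(lo, hi)`.

```python
from typing import Dict, FrozenSet, Tuple, Union

# Hypothetical representation: a set of allowed nominal values,
# or an inclusive numeric range (lo, hi).
Constraint = Union[FrozenSet[str], Tuple[float, float]]

REGION_QUERY: Dict[str, Constraint] = {
    "sex": frozenset({"male", "female"}),
    "age": (20, 40),                        # Age: 20..40
    "dx": frozenset({"asthma", "flu"}),
}


def point_to_region(point: Dict[str, object]) -> Dict[str, Constraint]:
    """Rewrite a point query as a region query: one-element lists for
    nominal values and zero-width ranges for numbers."""
    region: Dict[str, Constraint] = {}
    for attr, value in point.items():
        if isinstance(value, (int, float)):
            region[attr] = (value, value)       # degenerate range v..v
        else:
            region[attr] = frozenset({value})   # one-element list
    return region


print(point_to_region({"sex": "female", "age": 30, "dx": "asthma"}))
```

With this rewrite, one matching routine can serve both query types.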

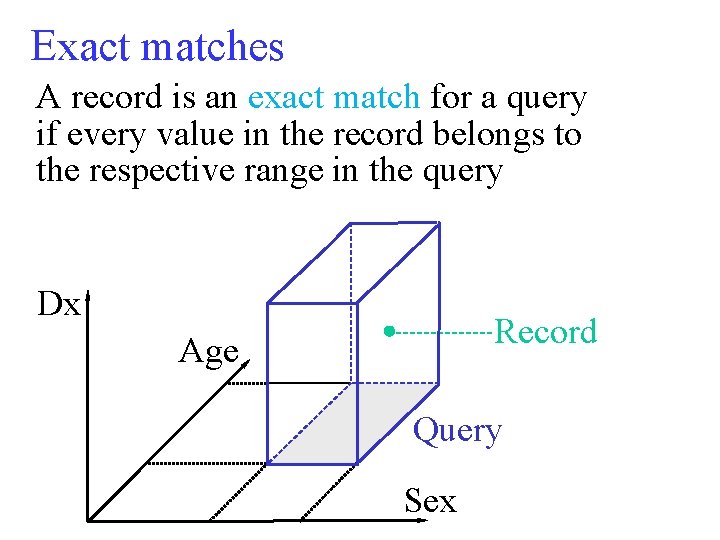

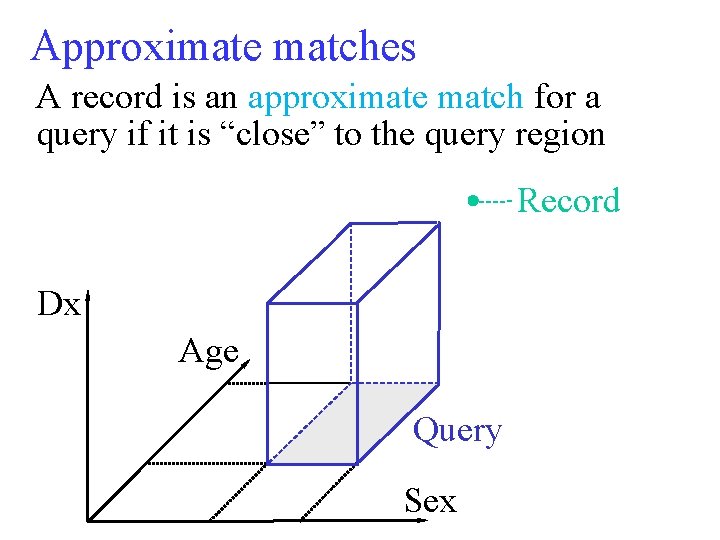

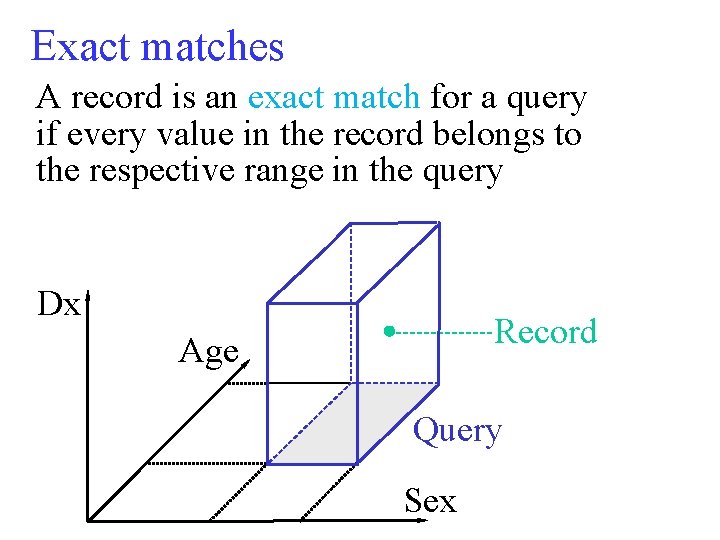

Exact matches
A record is an exact match for a query if every value in the record belongs to the respective range in the query
(Figure: a record and a query region in the space of Sex, Age, and Dx)
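The exact-match test translates directly into code. This sketch assumes a query maps each attribute to a set of allowed values or an inclusive `(lo, hi)` range; the function name is hypothetical.

```python
def is_exact_match(record, query):
    """True if each record value lies in the query's list of values
    (a set) or numeric range (an inclusive (lo, hi) tuple)."""
    for attr, allowed in query.items():
        value = record[attr]
        if isinstance(allowed, tuple):          # numeric range lo..hi
            lo, hi = allowed
            if not lo <= value <= hi:
                return False
        elif value not in allowed:              # list of nominal values
            return False
    return True


query = {"sex": {"male", "female"}, "age": (20, 40), "dx": {"asthma", "flu"}}
print(is_exact_match({"sex": "female", "age": 30, "dx": "asthma"}, query))  # True
print(is_exact_match({"sex": "female", "age": 50, "dx": "flu"}, query))     # False: age outside 20..40
```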

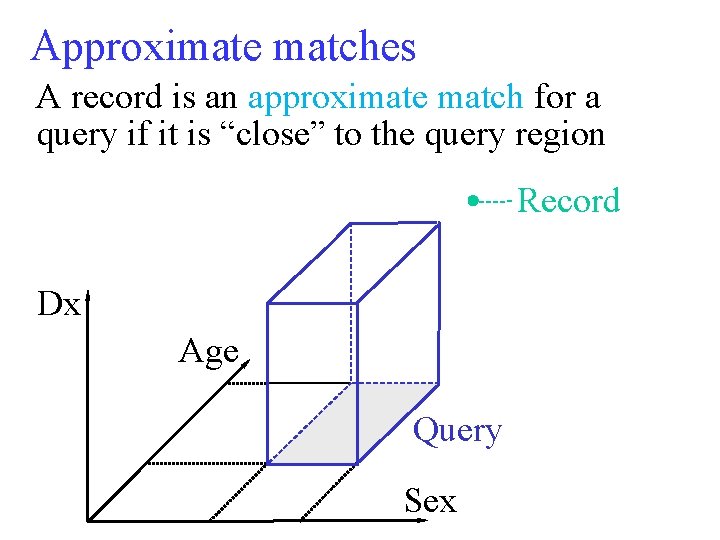

Approximate matches
A record is an approximate match for a query if it is “close” to the query region
(Figure: a record and a query region in the space of Sex, Age, and Dx)
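"Close to the query region" needs a distance that is zero inside the region and grows outside it. The slides leave the distance function open, so this sketch assumes one simple choice: for a numeric range, the gap outside the range divided by a per-attribute scale; for a nominal list, a cost of 1 when the value is not in the list; the total is the sum over attributes. The function name and `scales` parameter are hypothetical.

```python
def distance_to_region(record, query, scales):
    """Assumed distance from a record to a region query: 0 inside the region.
    Numeric gaps outside a range are divided by scales[attr] (default 1);
    a nominal value missing from the list costs 1."""
    total = 0.0
    for attr, allowed in query.items():
        value = record[attr]
        if isinstance(allowed, tuple):          # numeric range lo..hi
            lo, hi = allowed
            gap = max(lo - value, 0, value - hi)
            total += gap / scales.get(attr, 1.0)
        elif value not in allowed:              # nominal value outside the list
            total += 1.0
    return total


query = {"sex": {"male", "female"}, "age": (20, 40), "dx": {"asthma", "flu"}}
scales = {"age": 10}
print(distance_to_region({"sex": "female", "age": 30, "dx": "asthma"}, query, scales))  # 0.0
print(distance_to_region({"sex": "male", "age": 45, "dx": "ulcer"}, query, scales))     # 1.5
```

The scale puts numeric and nominal attributes on comparable footing: here, being 10 years outside the age range costs as much as one wrong diagnosis.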

Approximate queries
An approximate query includes
• Point or region
• Distance function
• Number of matches
• Distance limit
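The four parts of an approximate query map naturally onto a small data type. The sketch below is a hypothetical linear-scan baseline, not the indexed search: it scores every record with the query's distance function, drops records beyond the distance limit, and keeps the requested number of closest matches.

```python
import heapq
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]


@dataclass
class ApproximateQuery:
    region: Dict[str, object]                          # point or region
    distance: Callable[[Record, Dict[str, object]], float]
    max_matches: int                                   # number of matches
    distance_limit: float                              # ignore records farther than this


def answer(query: ApproximateQuery, records: List[Record]) -> List[Tuple[float, Record]]:
    """Linear scan: the closest max_matches records within the distance limit."""
    scored = ((query.distance(r, query.region), r) for r in records)
    within = [(d, r) for d, r in scored if d <= query.distance_limit]
    # key= compares distances only, so ties never compare record dicts
    return heapq.nsmallest(query.max_matches, within, key=lambda pair: pair[0])


q = ApproximateQuery(region={"age": 40},
                     distance=lambda r, reg: abs(r["age"] - reg["age"]),
                     max_matches=2, distance_limit=6)
print(answer(q, [{"age": 30}, {"age": 35}, {"age": 50}, {"age": 41}]))
```

A linear scan touches every record; the indexing tree serves to avoid that.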

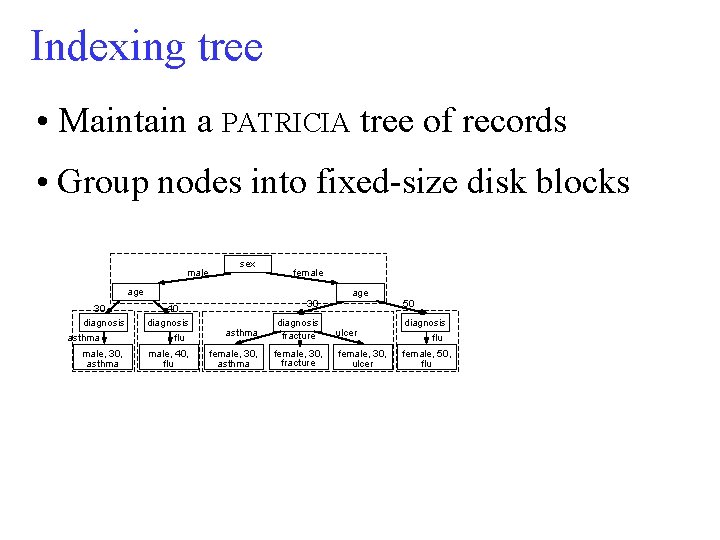

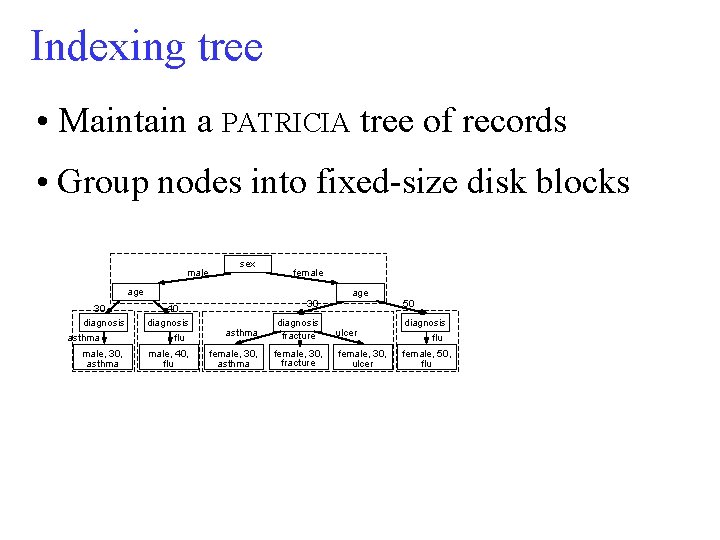

Indexing tree
• Maintain a PATRICIA tree of records
• Group nodes into fixed-size disk blocks
(Figure: example tree that branches on sex, then age, then diagnosis, with six leaf records: male, 30, asthma; male, 40, flu; female, 30, asthma; female, 30, fracture; female, 30, ulcer; female, 50, flu)
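A simplified sketch of the two bullets, using the six records from the example tree. It assumes a plain attribute trie (each level branches on one attribute in a fixed order) rather than a true PATRICIA tree, so it omits path compression. It also assumes one simple packing policy: inner nodes are assigned to fixed-size blocks in breadth-first order. The slides do not say which policy ARGUS uses; all names here are hypothetical.

```python
from collections import deque

ATTRIBUTES = ("sex", "age", "dx")    # hypothetical fixed branching order

RECORDS = [
    {"sex": "male", "age": 30, "dx": "asthma"},
    {"sex": "male", "age": 40, "dx": "flu"},
    {"sex": "female", "age": 30, "dx": "asthma"},
    {"sex": "female", "age": 30, "dx": "fracture"},
    {"sex": "female", "age": 30, "dx": "ulcer"},
    {"sex": "female", "age": 50, "dx": "flu"},
]


def build_tree(records):
    """Nested dicts: level i branches on ATTRIBUTES[i]; the last level
    maps a value to the list of records (duplicates allowed)."""
    root = {}
    for r in records:
        node = root
        for attr in ATTRIBUTES[:-1]:
            node = node.setdefault(r[attr], {})
        node.setdefault(r[ATTRIBUTES[-1]], []).append(r)
    return root


def pack_into_blocks(root, block_size):
    """Assign inner nodes to blocks of block_size nodes in breadth-first
    order, so consecutive nodes in that order share a block."""
    blocks, current = [], []
    queue = deque([root])
    while queue:
        node = queue.popleft()
        current.append(node)
        if len(current) == block_size:
            blocks.append(current)
            current = []
        queue.extend(child for child in node.values() if isinstance(child, dict))
    if current:
        blocks.append(current)
    return blocks


tree = build_tree(RECORDS)
print([len(block) for block in pack_into_blocks(tree, 4)])   # [4, 3]
```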

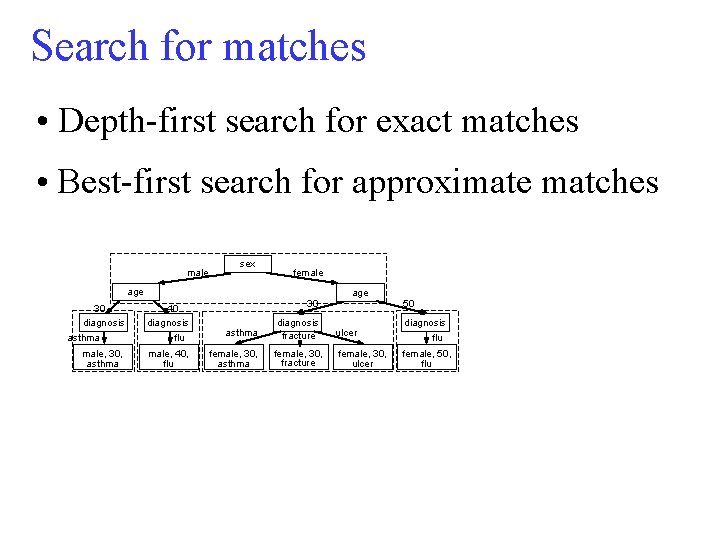

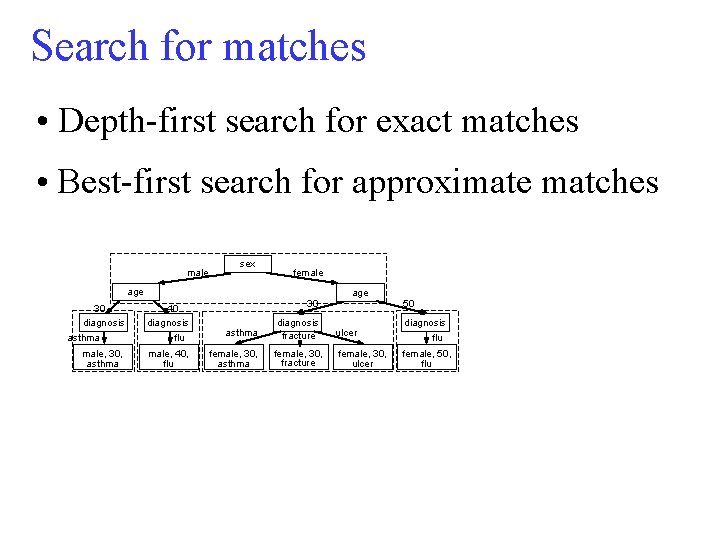

Search for matches
• Depth-first search for exact matches
• Best-first search for approximate matches
(Figure: the same example indexing tree)
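Both searches can be sketched on a simple attribute trie. These are assumptions: the trie has no PATRICIA path compression, ranges are written as Python `range` objects, and the per-attribute distance `attr_distance` is hypothetical. Depth-first search descends only into branches that satisfy the query. Best-first search expands the node with the smallest distance accumulated so far. Because per-attribute distances are non-negative, that partial sum never exceeds a record's final distance, so records leave the heap in order of distance.

```python
import heapq
import itertools

ATTRIBUTES = ("sex", "age", "dx")    # hypothetical branching order


def build_tree(records):
    root = {}
    for r in records:
        node = root
        for attr in ATTRIBUTES[:-1]:
            node = node.setdefault(r[attr], {})
        node.setdefault(r[ATTRIBUTES[-1]], []).append(r)   # leaf: list of records
    return root


def exact_matches(node, query, depth=0):
    """Depth-first search; query[attr] is any container supporting `in`."""
    if depth == len(ATTRIBUTES):
        yield from node                       # leaf: list of records
        return
    allowed = query[ATTRIBUTES[depth]]
    for value, child in node.items():
        if value in allowed:
            yield from exact_matches(child, query, depth + 1)


def approximate_matches(root, point, k, attr_distance):
    """Best-first search returning up to k (distance, record) pairs, closest first."""
    tie = itertools.count()                   # breaks ties without comparing nodes
    heap = [(0.0, next(tie), 0, root)]
    results = []
    while heap and len(results) < k:
        dist, _, depth, node = heapq.heappop(heap)
        if depth == len(ATTRIBUTES):          # leaf: partial sum is the full distance
            results.extend((dist, r) for r in node[: k - len(results)])
            continue
        attr = ATTRIBUTES[depth]
        for value, child in node.items():
            d = dist + attr_distance(attr, value, point[attr])
            heapq.heappush(heap, (d, next(tie), depth + 1, child))
    return results


def attr_distance(attr, a, b):
    """Hypothetical: age differences scaled by 10; other attributes 0 or 1."""
    return abs(a - b) / 10 if attr == "age" else float(a != b)


tree = build_tree([
    {"sex": "male", "age": 30, "dx": "asthma"}, {"sex": "male", "age": 40, "dx": "flu"},
    {"sex": "female", "age": 30, "dx": "asthma"}, {"sex": "female", "age": 30, "dx": "fracture"},
    {"sex": "female", "age": 30, "dx": "ulcer"}, {"sex": "female", "age": 50, "dx": "flu"},
])
print(list(exact_matches(tree, {"sex": {"female"}, "age": range(20, 41), "dx": {"asthma", "flu"}})))
print(approximate_matches(tree, {"sex": "male", "age": 35, "dx": "flu"}, 2, attr_distance))
```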

Performance
Experiments with a database of all patients admitted to Massachusetts hospitals from October 2000 to September 2002
• Twenty-one attributes
• 1.7 million records
Use of a Pentium computer
• 2.4 GHz CPU
• 1 Gbyte memory
• 400 MHz bus

Variables
Control variables
• Number of records
• Memory size
• Query type
Measurements
• Retrieval time

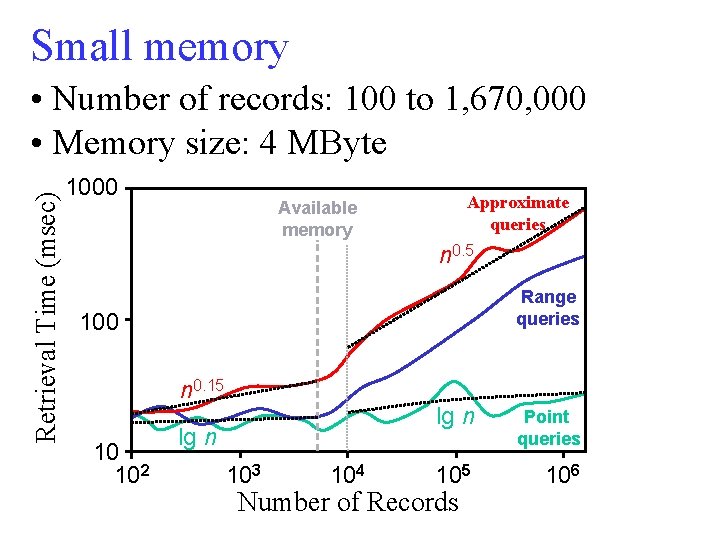

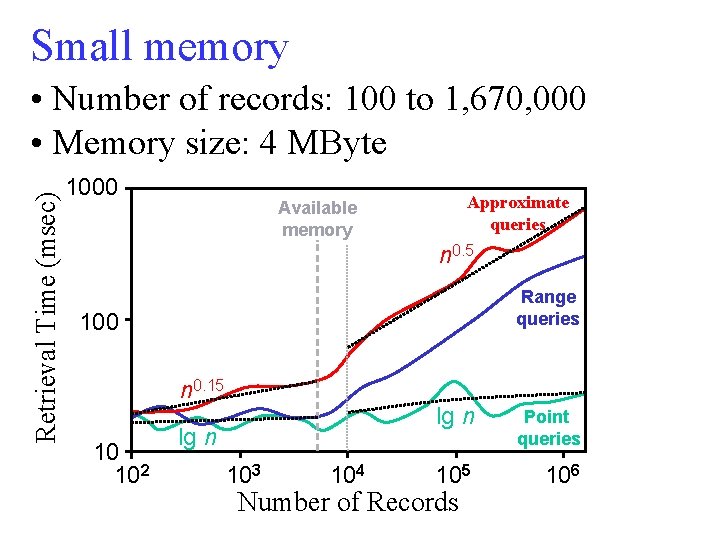

Small memory
• Number of records: 100 to 1,670,000
• Memory size: 4 MByte
(Figure: retrieval time (msec) vs. number of records on logarithmic axes, for approximate, range, and point queries, with reference curves n^0.5, n^0.15, and lg n and an “Available memory” marker)

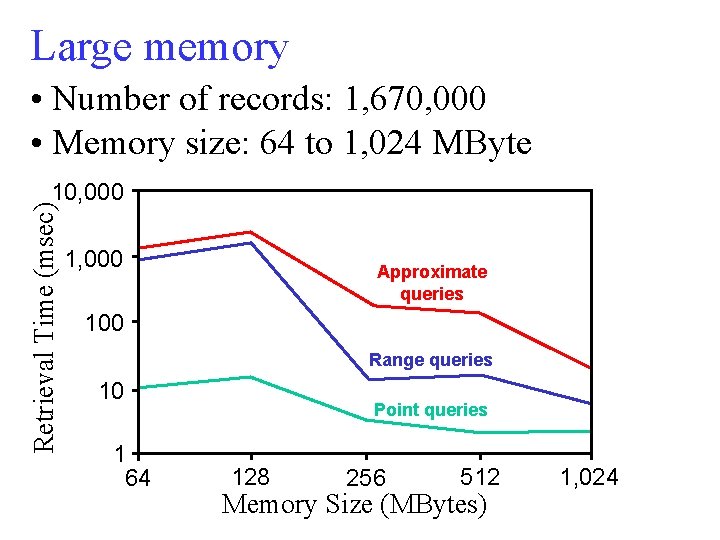

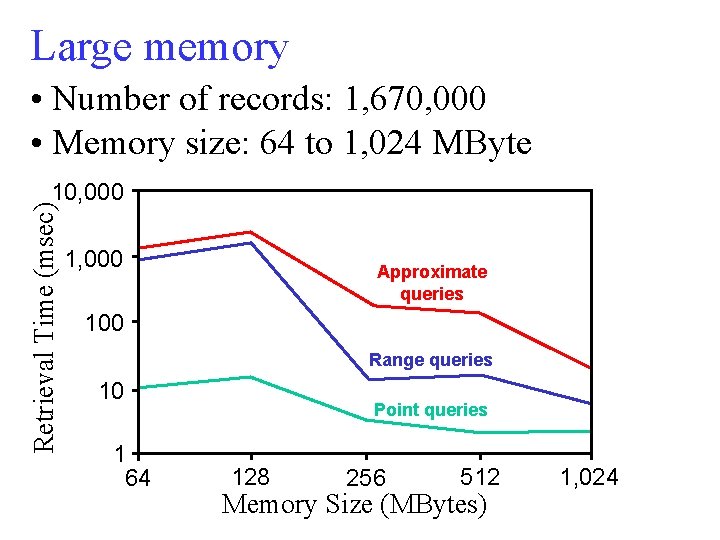

Large memory
• Number of records: 1,670,000
• Memory size: 64 to 1,024 MByte
(Figure: retrieval time (msec, 1 to 10,000) vs. memory size (64 to 1,024 MBytes) for approximate, range, and point queries)

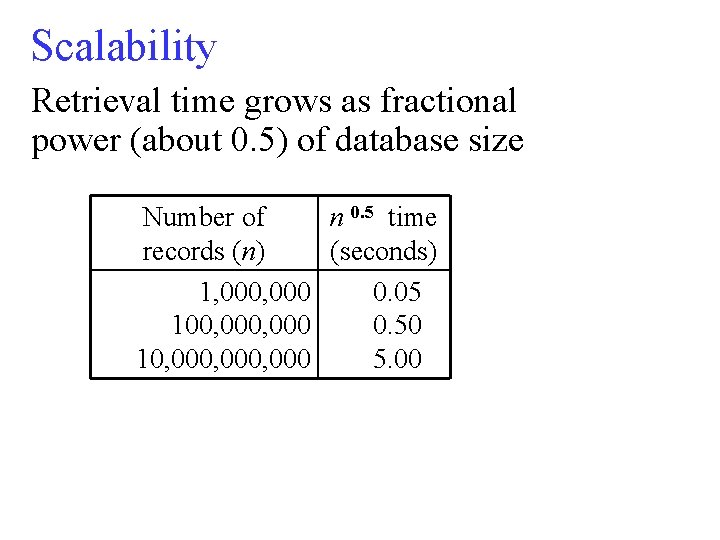

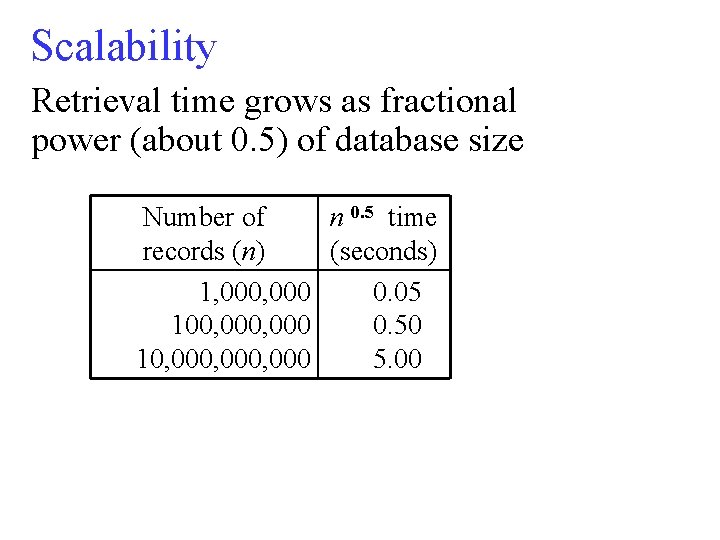

Scalability
Retrieval time grows as a fractional power (about 0.5) of database size

| Number of records (n) | Retrieval time, growing as n^0.5 (seconds) |
|---|---|
| 1,000 | 0.05 |
| 100,000 | 0.50 |
| 10,000,000 | 5.00 |
| ... | ... |
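The table follows from the power law t(n) = t0 · (n / n0)^0.5 anchored at the first row (t0 = 0.05 s at n0 = 1,000). Each 100-fold increase in records multiplies the time by 100^0.5 = 10. The small helper below (a hypothetical name) reproduces the rows and can extrapolate further, assuming the same exponent still holds at larger sizes.

```python
def predicted_time(n, n0=1_000, t0=0.05, exponent=0.5):
    """Extrapolated retrieval time in seconds, assuming t(n) = t0 * (n / n0) ** exponent."""
    return t0 * (n / n0) ** exponent


for n in (1_000, 100_000, 10_000_000):
    print(f"{n:>12,}  {predicted_time(n):.2f} s")
```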

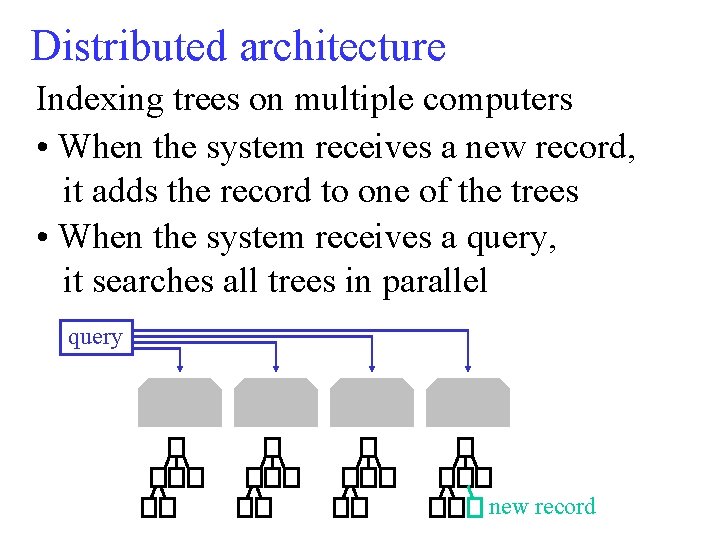

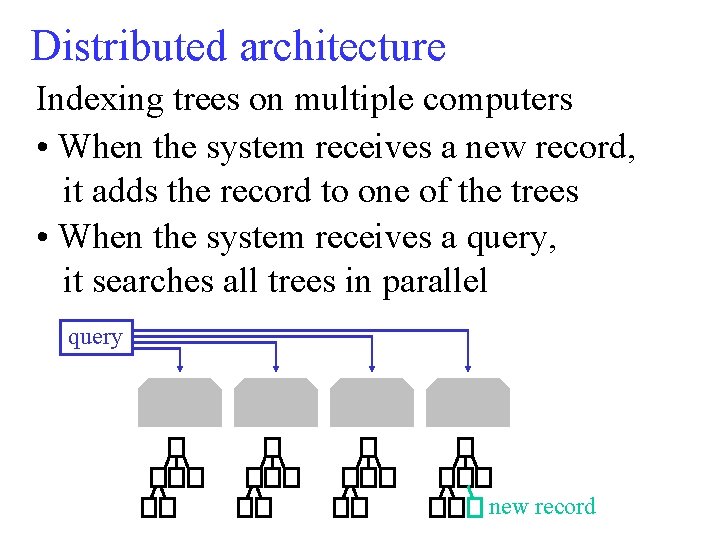

Distributed architecture
Indexing trees on multiple computers
• When the system receives a new record, it adds the record to one of the trees
• When the system receives a query, it searches all trees in parallel
(Figure: a query and a new record routed to the trees)
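A single-process sketch of this architecture, with assumptions labeled: each "computer" is simulated by a list of records (a hypothetical stand-in for one indexing tree), new records are placed round-robin (the slides do not state the placement policy), and the parallel search uses a thread pool. Each shard returns its own k best matches; the global k best must lie in the union of these lists, so merging them gives the correct answer.

```python
import heapq
import itertools
from concurrent.futures import ThreadPoolExecutor


class DistributedIndex:
    """Hypothetical sketch: each shard stands in for one computer's tree."""

    def __init__(self, num_shards):
        self.shards = [[] for _ in range(num_shards)]
        self._next = itertools.cycle(range(num_shards))   # assumed round-robin placement

    def add(self, record):
        # A new record goes to exactly one shard.
        self.shards[next(self._next)].append(record)

    def query(self, distance, k):
        # Every shard is searched in parallel for its local k best.
        def search(shard):
            return heapq.nsmallest(k, ((distance(r), r) for r in shard),
                                   key=lambda pair: pair[0])

        with ThreadPoolExecutor(max_workers=len(self.shards)) as pool:
            partial = list(pool.map(search, self.shards))
        merged = [pair for part in partial for pair in part]
        return heapq.nsmallest(k, merged, key=lambda pair: pair[0])


index = DistributedIndex(3)
for age in (30, 45, 41, 60, 38):
    index.add({"age": age})
print(index.query(lambda r: abs(r["age"] - 40), 2))
```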

Conclusions
• We have developed a set of tools for analysis of massive structured data
• Experiments have shown that these tools improve the productivity of intelligence analysts
• Future work includes development of more tools and application to other domains