Non-rigid Object Tracking by Incremental Gradient Boosting. Jeany Son, 2014. 10. 2.

Outline
§ Tracking-by-detection approach
• Non-rigid object tracking and rough segmentation
• Similar concept to Hough forest
› But we do not use voting
› Robust to transformations (e.g. scale, rotation, non-rigid motion)
§ Background (gradient boosting, online boosting)
§ Incremental gradient boosting
§ Non-rigid object tracking
• Not limited to a bounding box
• Rough segmentation of the object

Gradient Boosting
§ Has been widely used to derive boosting procedures for
• Regression
• Multiple instance learning
• Semi-supervised learning
• etc.

Gradient Boost
§ Gradient boosting = gradient descent + boosting
• Analogous to line search in steepest descent
• Construct each new base learner to be maximally correlated with the negative gradient of the loss function of the whole ensemble
• Arbitrary loss functions can be applied

Gradient Boost
§ Choose the new function h to be most correlated with −g(x)
§ Classic least-squares minimization problem
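The step above can be written out as follows, assuming the standard formulation of gradient boosting with a generic differentiable loss $L$. The symbols $\beta$ (scale of the least-squares fit), $N$ (number of training examples), and the round index $t$ are introduced here for illustration and may not match the notation on the original slide.

$$
\begin{aligned}
g_t(x_i) &= \left[\frac{\partial L\bigl(y_i, f(x_i)\bigr)}{\partial f(x_i)}\right]_{f = f_{t-1}} \\
h_t &= \arg\min_{h,\,\beta} \sum_{i=1}^{N} \bigl(-g_t(x_i) - \beta\, h(x_i)\bigr)^2 \\
\rho_t &= \arg\min_{\rho} \sum_{i=1}^{N} L\bigl(y_i,\; f_{t-1}(x_i) + \rho\, h_t(x_i)\bigr) \\
f_t(x) &= f_{t-1}(x) + \rho_t\, h_t(x)
\end{aligned}
$$

The second line is the least-squares problem mentioned on the slide, and the third line is the line search for the step size ρ.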

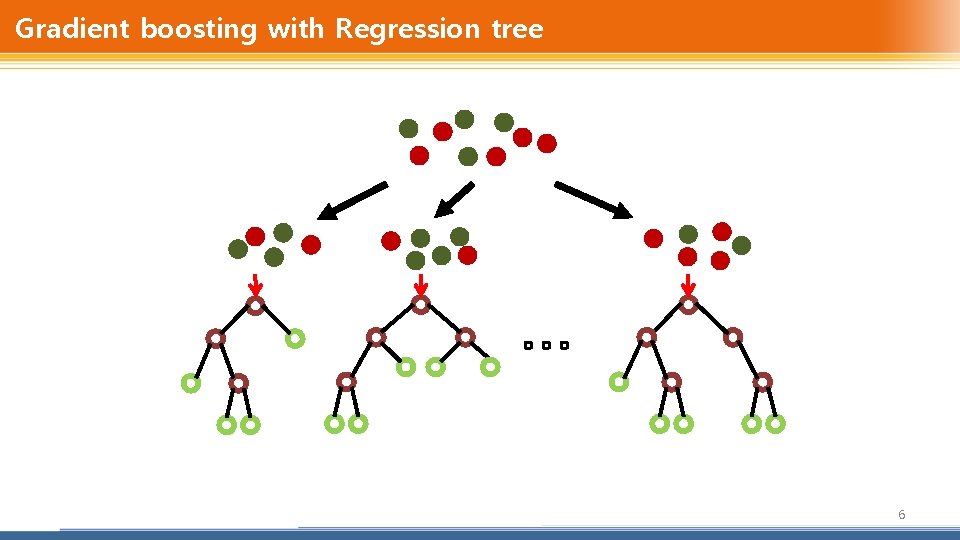

Gradient boosting with regression tree

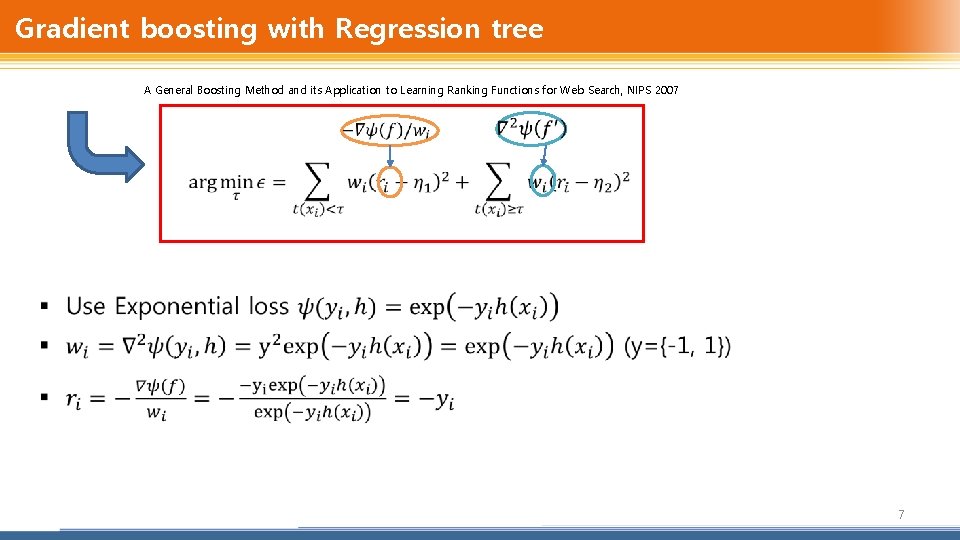

Gradient boosting with regression tree
§ A General Boosting Method and its Application to Learning Ranking Functions for Web Search, NIPS 2007

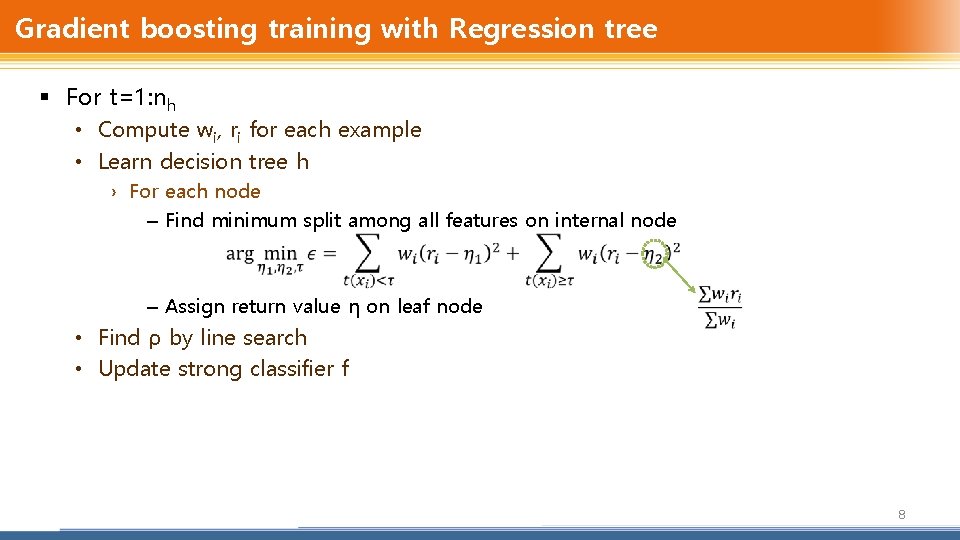

Gradient boosting training with regression tree
§ For t = 1 : Nh
• Compute wi, ri for each example
• Learn decision tree h
› For each node
– Find the minimum-error split among all features at each internal node
– Assign return value η at each leaf node
• Find ρ by line search
• Update strong classifier f
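The training loop above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation from the slides: it assumes squared loss (so the response ri is the residual and all weights wi are 1), one-dimensional inputs, and depth-1 trees (stumps). The function names `fit_stump`, `stump_predict`, `gradient_boost`, and `predict` are hypothetical.

```python
def fit_stump(xs, residuals):
    """Least-squares stump on 1-D inputs: returns (threshold, eta_left, eta_right)."""
    values = sorted(set(xs))
    if len(values) < 2:
        raise ValueError("need at least two distinct inputs to split")
    best = None
    for lo, hi in zip(values, values[1:]):
        thr = 0.5 * (lo + hi)
        left = [r for x, r in zip(xs, residuals) if x <= thr]
        right = [r for x, r in zip(xs, residuals) if x > thr]
        eta_l = sum(left) / len(left)      # leaf return value = mean response
        eta_r = sum(right) / len(right)
        err = (sum((r - eta_l) ** 2 for r in left)
               + sum((r - eta_r) ** 2 for r in right))
        if best is None or err < best[0]:
            best = (err, thr, eta_l, eta_r)
    return best[1:]


def stump_predict(stump, x):
    thr, eta_l, eta_r = stump
    return eta_l if x <= thr else eta_r


def gradient_boost(xs, ys, n_rounds=20):
    """Returns a list of (rho, stump) pairs forming the strong classifier f."""
    ensemble = []
    f = [0.0] * len(xs)
    for _ in range(n_rounds):
        # Assumption: squared loss, so the negative gradient is the residual.
        residuals = [y - fi for y, fi in zip(ys, f)]
        stump = fit_stump(xs, residuals)
        h = [stump_predict(stump, x) for x in xs]
        denom = sum(hi * hi for hi in h)
        if denom == 0.0:                   # nothing left to fit
            break
        # Closed-form line search for rho under squared loss.
        rho = sum(r * hi for r, hi in zip(residuals, h)) / denom
        f = [fi + rho * hi for fi, hi in zip(f, h)]
        ensemble.append((rho, stump))
    return ensemble


def predict(ensemble, x):
    return sum(rho * stump_predict(stump, x) for rho, stump in ensemble)
```

With labels in {−1, +1}, the sign of `predict(ensemble, x)` gives the class. Other losses would change only how the responses and ρ are computed.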

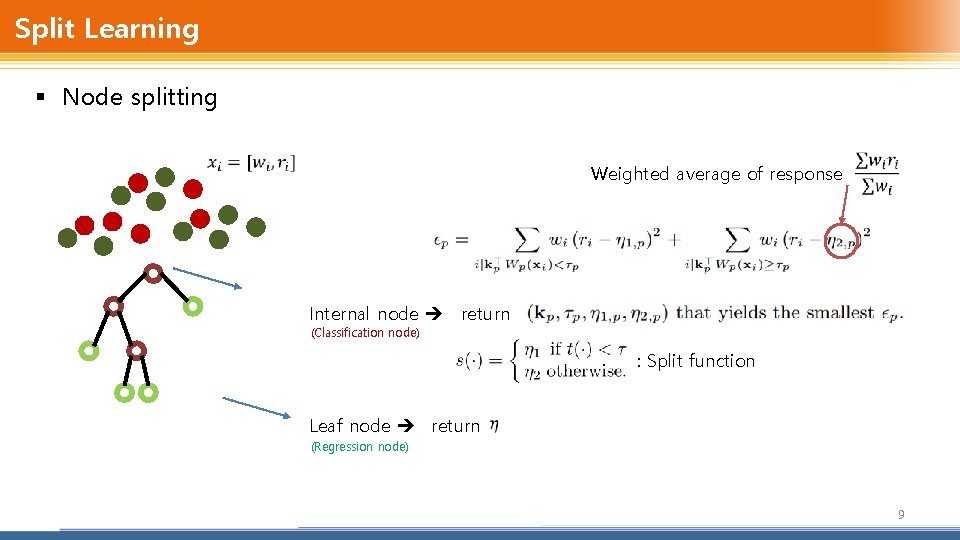

Split Learning
§ Node splitting
• Internal node return (classification node): split function
• Leaf node return (regression node): weighted average of response
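A small sketch of node splitting, assuming a weighted least-squares criterion: every feature and candidate threshold is tried, and each side returns the weighted average of its responses. The criterion and the names `weighted_mean` and `best_split` are assumptions for illustration; the slide's exact split function is in the figure and is not reproduced here.

```python
def weighted_mean(weights, responses):
    """Weighted average of responses; 0.0 for an empty node."""
    total = sum(weights)
    if total <= 0:
        return 0.0
    return sum(w * r for w, r in zip(weights, responses)) / total


def best_split(X, r, w):
    """Search every feature and threshold for the weighted least-squares split.

    X is a list of feature vectors, r the responses, w the example weights.
    Returns (feature_index, threshold, eta_left, eta_right, error),
    or None when no feature has two distinct values.
    """
    best = None
    for j in range(len(X[0])):
        values = sorted(set(x[j] for x in X))
        for lo, hi in zip(values, values[1:]):
            thr = 0.5 * (lo + hi)  # midpoint between neighbouring values
            left = [i for i, x in enumerate(X) if x[j] <= thr]
            right = [i for i, x in enumerate(X) if x[j] > thr]
            eta_l = weighted_mean([w[i] for i in left], [r[i] for i in left])
            eta_r = weighted_mean([w[i] for i in right], [r[i] for i in right])
            err = (sum(w[i] * (r[i] - eta_l) ** 2 for i in left)
                   + sum(w[i] * (r[i] - eta_r) ** 2 for i in right))
            if best is None or err < best[4]:
                best = (j, thr, eta_l, eta_r, err)
    return best
```

The internal node keeps the pair (feature, threshold) as its split function, while `eta_left` and `eta_right` are the values a leaf below that split would return.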

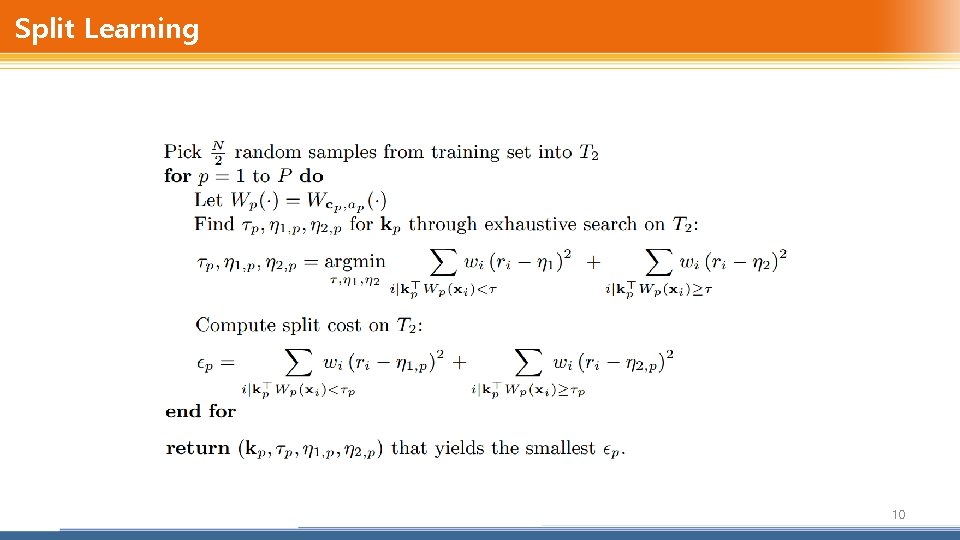

Split Learning

Online Ensembles
§ Online boosting
• Real-time Tracking via On-line Boosting, BMVC 2006
• Gradient Feature Selection for Online Boosting, ICCV 2007
§ Incremental boosting
• Incremental Learning of Boosted Face Detector, ICCV 2007
• Tracking concept change with Incremental Boosting by Minimization of the Evolving Exponential Loss, ECML 2011
§ Online Random Forest
• Online Random Forest, OLCV 2009
• Hough-based Tracking of Non-Rigid Objects, ICCV 2011

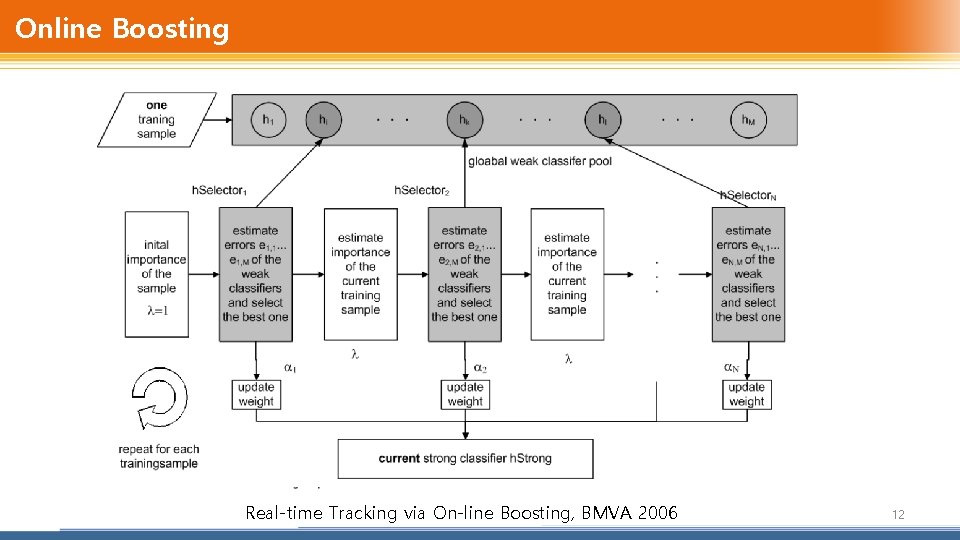

Online Boosting
§ Real-time Tracking via On-line Boosting, BMVC 2006

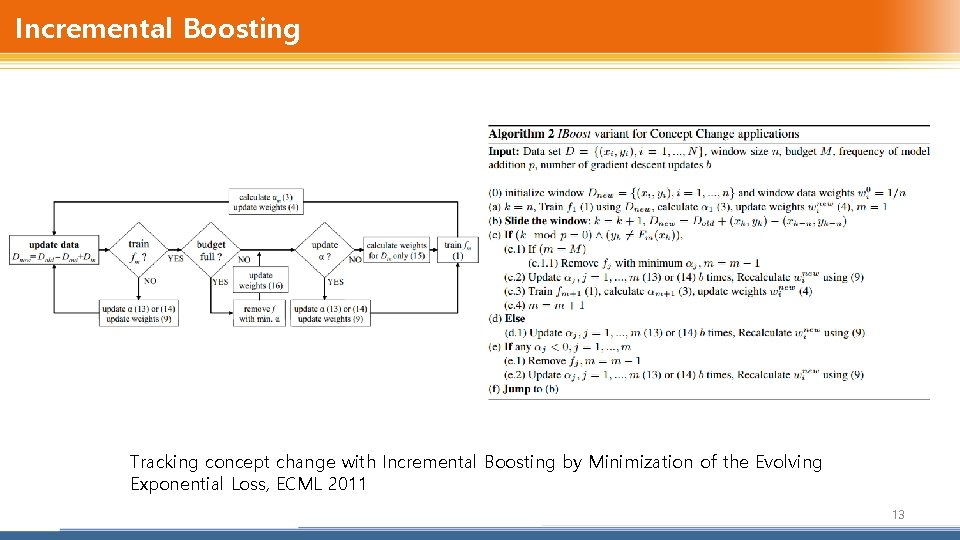

Incremental Boosting
§ Tracking concept change with Incremental Boosting by Minimization of the Evolving Exponential Loss, ECML 2011

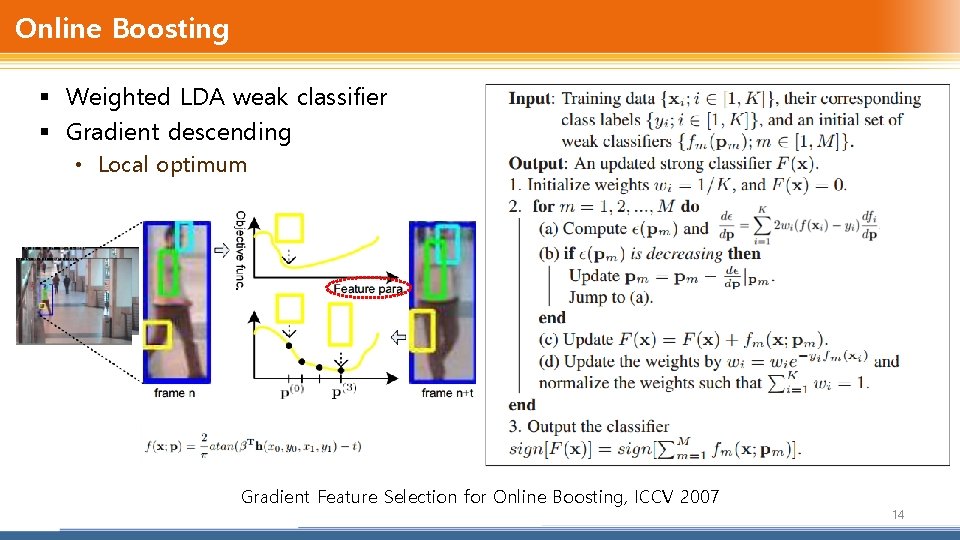

Online Boosting
§ Gradient Feature Selection for Online Boosting, ICCV 2007
§ Weighted LDA weak classifier
§ Gradient descent
• Local optimum

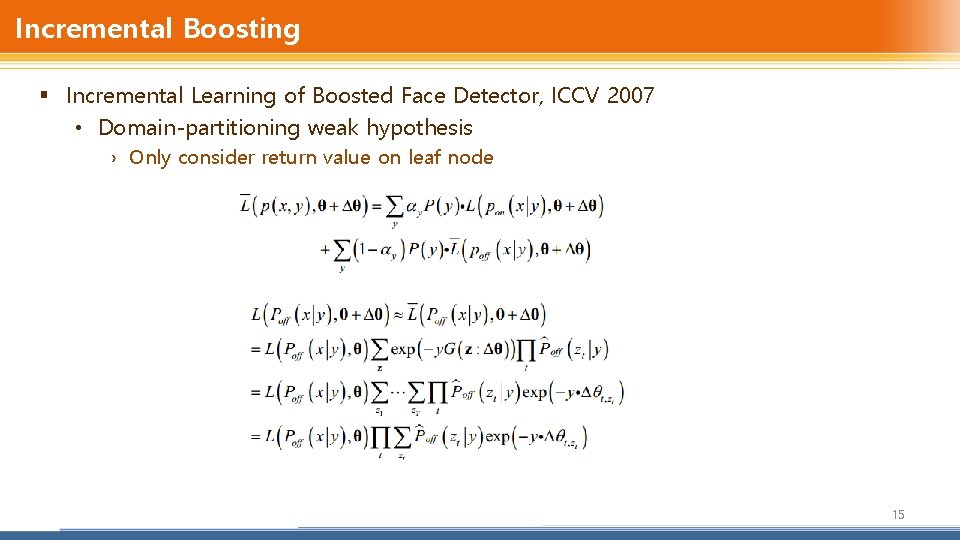

Incremental Boosting
§ Incremental Learning of Boosted Face Detector, ICCV 2007
• Domain-partitioning weak hypothesis
› Only considers the return value at the leaf node

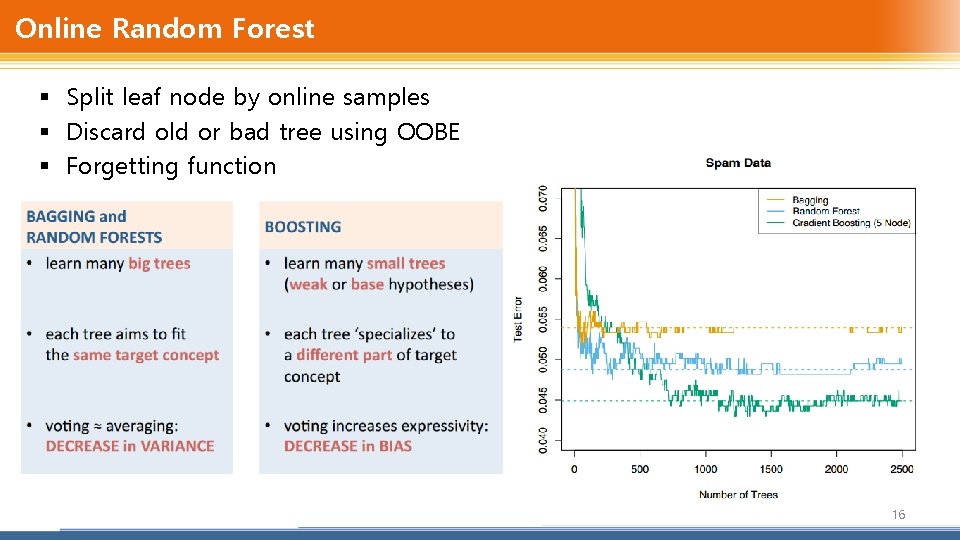

Online Random Forest
§ Split leaf nodes using online samples
§ Discard old or bad trees using the out-of-bag error (OOBE)
§ Forgetting function

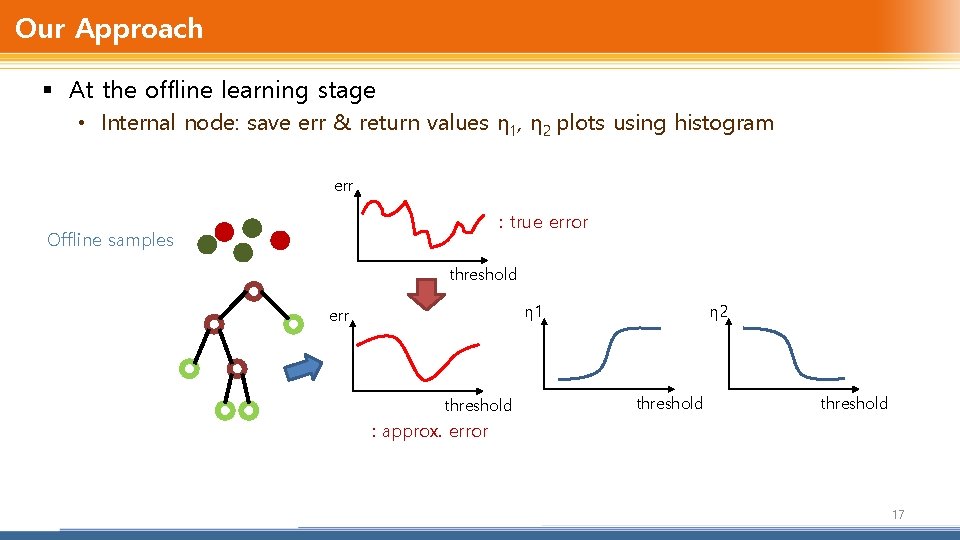

Our Approach
§ At the offline learning stage
• Internal node: save the error (err) and the return values (η1, η2) as plots over the threshold, using a histogram
Figure labels: err, η1, and η2 plotted against threshold for the offline samples; curves for true error and approx. error.

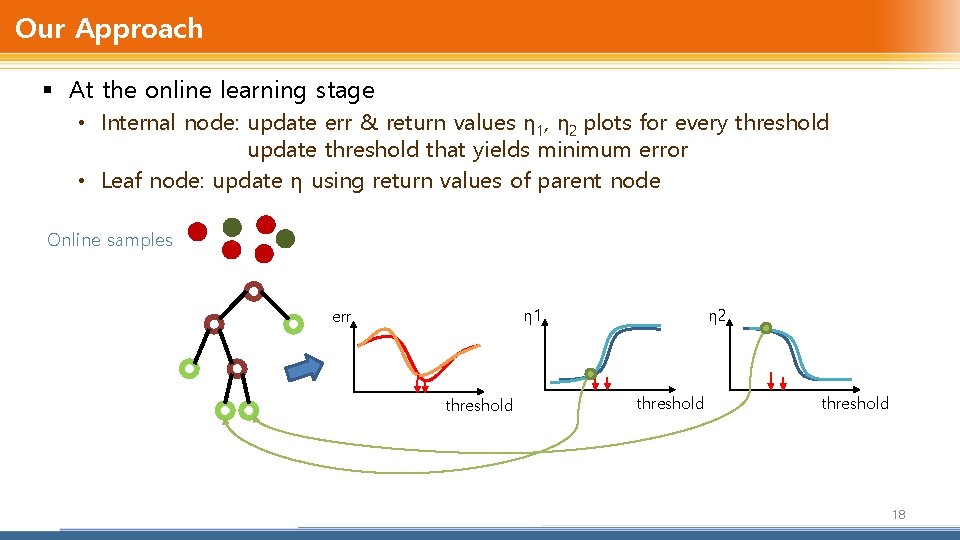

Our Approach
§ At the online learning stage
• Internal node: update the err and return value (η1, η2) plots for every threshold, then move the threshold to the one that yields minimum error
• Leaf node: update η using the return values of the parent node
Figure labels: err, η1, and η2 plotted against threshold for the online samples.
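One way to keep per-threshold error and return values in a histogram is sketched below. It is an assumption-laden illustration, not the method from the slides: it assumes a single feature with a known range, equal-width bins, and a weighted squared-error criterion, and the class name `HistogramSplitNode` is hypothetical. Each bin stores running sums, so the same `update` call serves offline and online samples, and thresholds are restricted to bin edges.

```python
class HistogramSplitNode:
    """Internal node that stores response statistics per histogram bin."""

    def __init__(self, lo, hi, n_bins=8):
        self.lo, self.hi, self.n_bins = lo, hi, n_bins
        self.sw = [0.0] * n_bins    # sum of weights per bin
        self.swr = [0.0] * n_bins   # sum of weight * response per bin
        self.swr2 = [0.0] * n_bins  # sum of weight * response^2 per bin

    def _bin(self, x):
        t = (x - self.lo) / (self.hi - self.lo)
        return min(self.n_bins - 1, max(0, int(t * self.n_bins)))

    def update(self, x, r, w=1.0):
        """Add one (offline or online) sample with feature value x and response r."""
        b = self._bin(x)
        self.sw[b] += w
        self.swr[b] += w * r
        self.swr2[b] += w * r * r

    def best_threshold(self):
        """Scan the bin edges; return (threshold, eta1, eta2, err) or None."""
        tw, twr, twr2 = sum(self.sw), sum(self.swr), sum(self.swr2)
        lw = lwr = lwr2 = 0.0
        best = None
        for b in range(self.n_bins - 1):
            lw += self.sw[b]
            lwr += self.swr[b]
            lwr2 += self.swr2[b]
            rw, rwr, rwr2 = tw - lw, twr - lwr, twr2 - lwr2
            if lw <= 0 or rw <= 0:
                continue
            eta1, eta2 = lwr / lw, rwr / rw          # return values of each side
            err = (lwr2 - lwr * lwr / lw) + (rwr2 - rwr * rwr / rw)
            thr = self.lo + (b + 1) * (self.hi - self.lo) / self.n_bins
            if best is None or err < best[3]:
                best = (thr, eta1, eta2, err)
        return best
```

Because samples are only counted per bin, the stored error is an approximation of the true error, which is one source of the quantization error listed among the cons.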

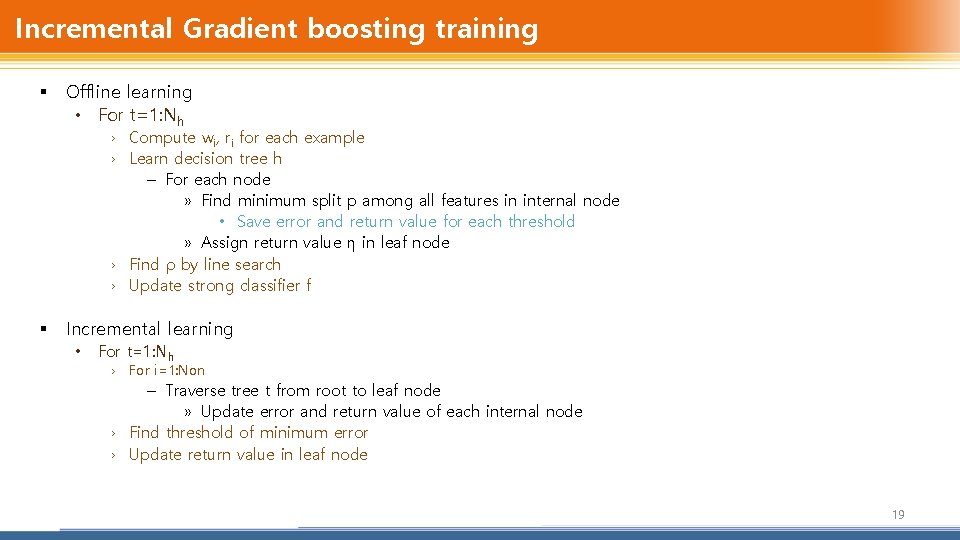

Incremental Gradient boosting training
§ Offline learning
• For t = 1 : Nh
› Compute wi, ri for each example
› Learn decision tree h
– For each node
» Find the minimum-error split p among all features at each internal node, saving the error and return value for each threshold
» Assign return value η at each leaf node
› Find ρ by line search
› Update strong classifier f
§ Incremental learning
• For t = 1 : Nh
› For i = 1 : Non
– Traverse tree t from the root to a leaf node
» Update the error and return value of each internal node
› Find the threshold of minimum error
› Update the return value at the leaf node
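The incremental stage can be sketched as a loop over trees and online samples. The sketch makes several assumptions that the slides do not state: inputs are one-dimensional, each node has a fixed list of candidate thresholds, the response passed to tree t is the residual left by the earlier trees, and a leaf simply returns the value stored for its side of the parent. The names `Node`, `update_tree`, `predict_tree`, `predict_ensemble`, and `incremental_update` are hypothetical.

```python
class Node:
    """Internal node with a list of candidate thresholds on one feature."""

    def __init__(self, thresholds, left=None, right=None):
        self.thresholds = thresholds
        # For every threshold and side: [count, sum of responses, sum of squares].
        self.stats = [[[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]] for _ in thresholds]
        self.best = 0                        # index of the current threshold
        self.left, self.right = left, right  # None marks a leaf child

    def _err(self, k):
        # Squared error of threshold k when each side returns its mean response.
        return sum(s2 - s * s / n for n, s, s2 in self.stats[k] if n > 0)

    def update(self, x, r):
        for k, thr in enumerate(self.thresholds):
            side = self.stats[k][0 if x <= thr else 1]
            side[0] += 1.0
            side[1] += r
            side[2] += r * r
        # Move to the threshold that now yields the minimum error.
        self.best = min(range(len(self.thresholds)), key=self._err)

    def eta(self, side):
        n, s, _ = self.stats[self.best][side]
        return s / n if n > 0 else 0.0


def update_tree(root, x, r):
    """Traverse from root to leaf, updating every internal node on the path."""
    node = root
    while node is not None:
        node.update(x, r)
        node = node.left if x <= node.thresholds[node.best] else node.right


def predict_tree(root, x):
    node = root
    while True:
        side = 0 if x <= node.thresholds[node.best] else 1
        child = node.left if side == 0 else node.right
        if child is None:
            return node.eta(side)  # leaf value taken from the parent's statistics
        node = child


def predict_ensemble(trees, x):
    return sum(predict_tree(root, x) for root in trees)


def incremental_update(trees, samples):
    for t, root in enumerate(trees):          # For t = 1 : Nh
        for x, y in samples:                  # For i = 1 : Non
            partial = sum(predict_tree(prev, x) for prev in trees[:t])
            update_tree(root, x, y - partial) # assumption: residual response
```

In this sketch the same routine can also seed the statistics with offline samples. Changing a parent's threshold reroutes samples to different children, which a fuller implementation would need to account for.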

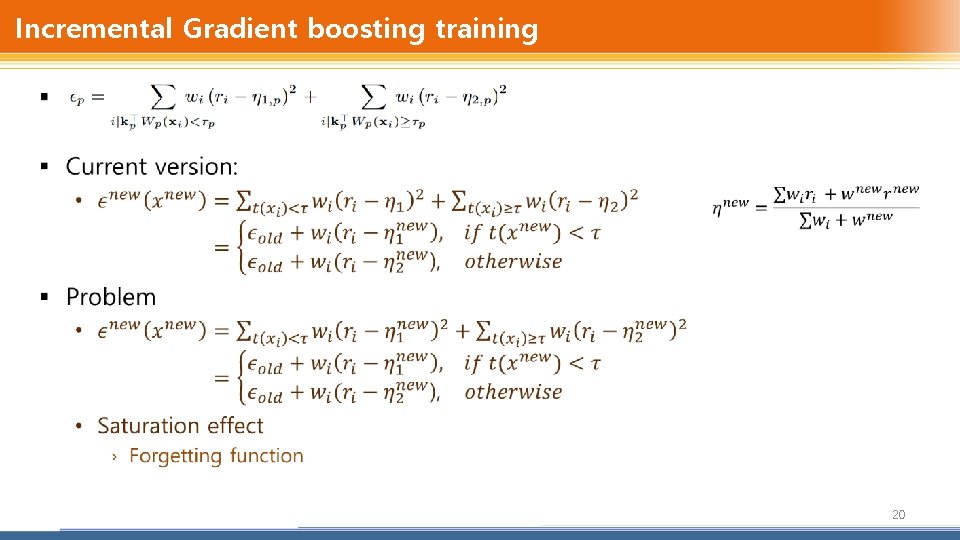

Incremental Gradient boosting training

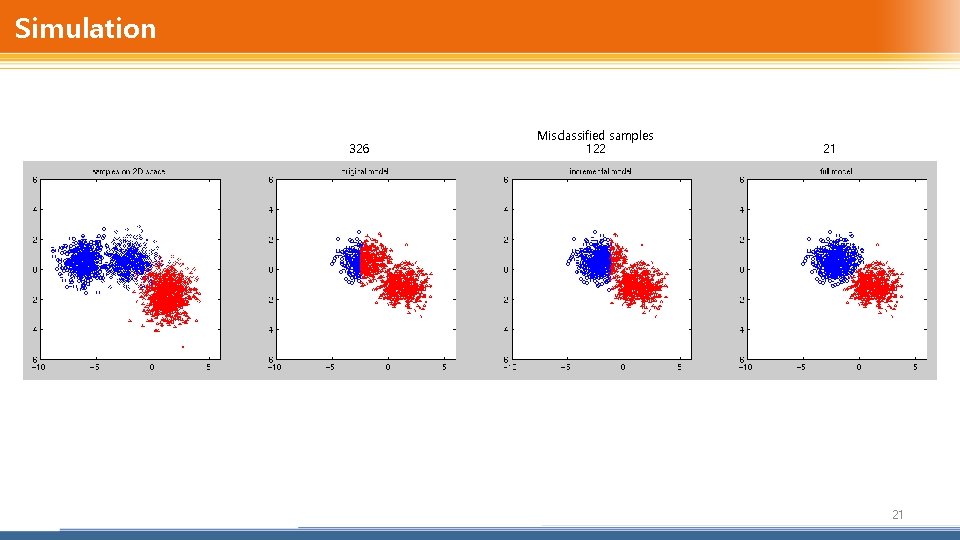

Simulation
Figure labels: misclassified samples, 326 and 122.

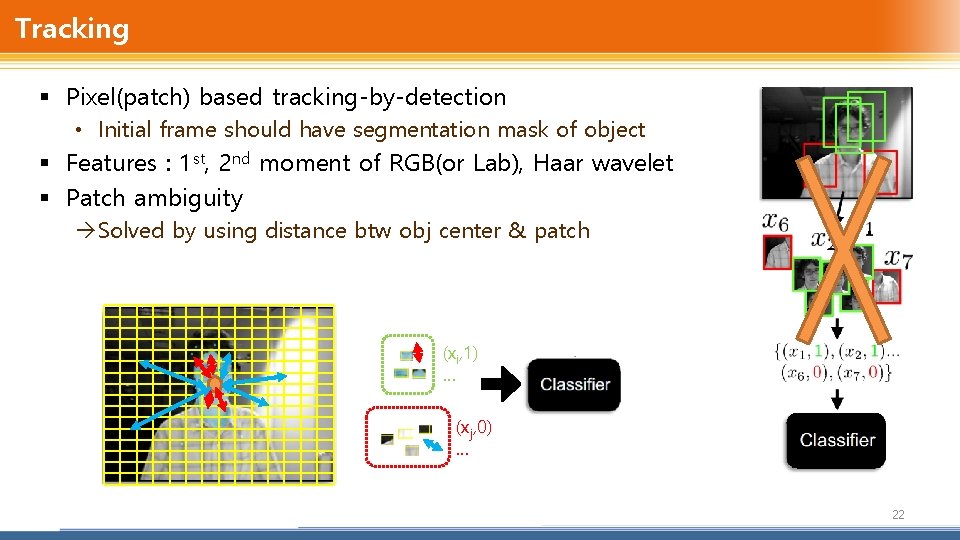

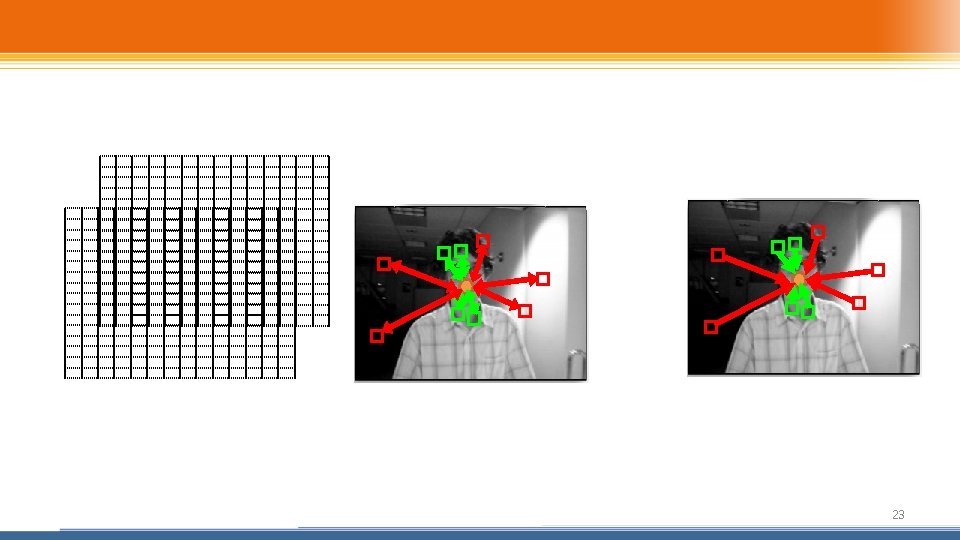

Tracking
§ Pixel (patch) based tracking-by-detection
• The initial frame should have a segmentation mask of the object
§ Features: 1st and 2nd moments of RGB (or Lab), Haar wavelets
§ Patch ambiguity
• Solved by using the distance between the object center and the patch
• (xi, 1) … (xj, 0) …
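A minimal sketch of a per-patch feature vector, assuming that the "2nd moment" is the per-channel variance and that the center-to-patch relation is encoded as a displacement (dx, dy). Haar wavelet responses are omitted, and the function name `patch_features` and its argument layout are hypothetical.

```python
def patch_features(patch, patch_xy, center_xy):
    """Color moments of one patch plus its displacement from the object center.

    patch is a list of (r, g, b) pixels (Lab values would work the same way),
    patch_xy the patch position, center_xy the assumed object center.
    """
    n = len(patch)
    feats = []
    for c in range(3):
        vals = [p[c] for p in patch]
        mean = sum(vals) / n                          # 1st moment
        var = sum((v - mean) ** 2 for v in vals) / n  # 2nd central moment (assumption)
        feats += [mean, var]
    # Displacement between patch and object center, used to resolve patch ambiguity.
    feats += [patch_xy[0] - center_xy[0], patch_xy[1] - center_xy[1]]
    return feats
```

Two patches with identical color statistics then receive different feature vectors when they sit at different offsets from the center.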

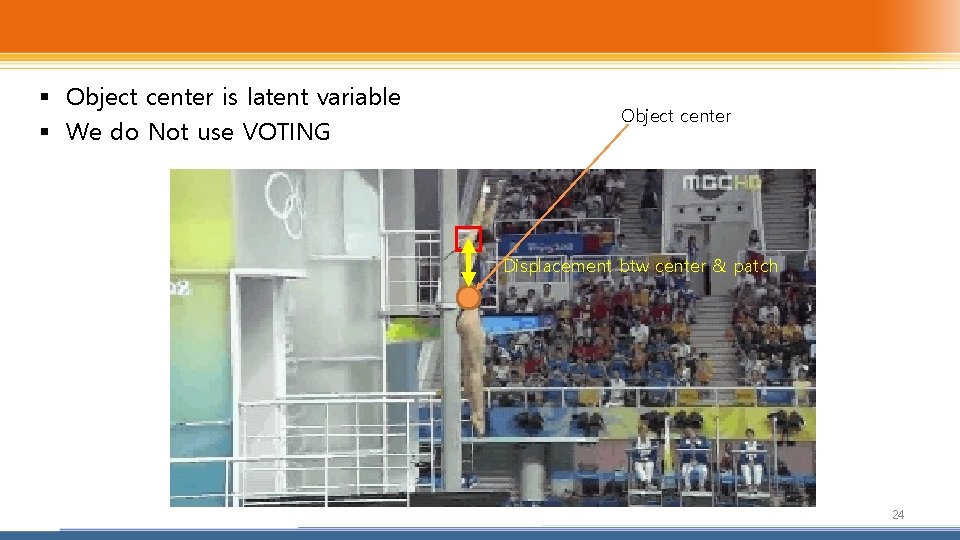

§ The object center is a latent variable
§ We do not use voting
Figure labels: object center; displacement between center and patch.

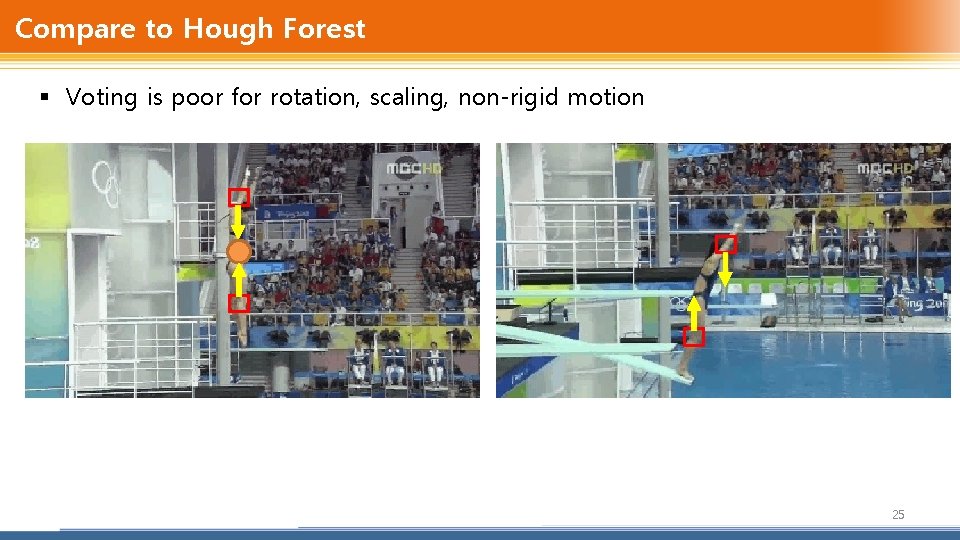

Comparison to Hough Forest
§ Voting handles rotation, scaling, and non-rigid motion poorly

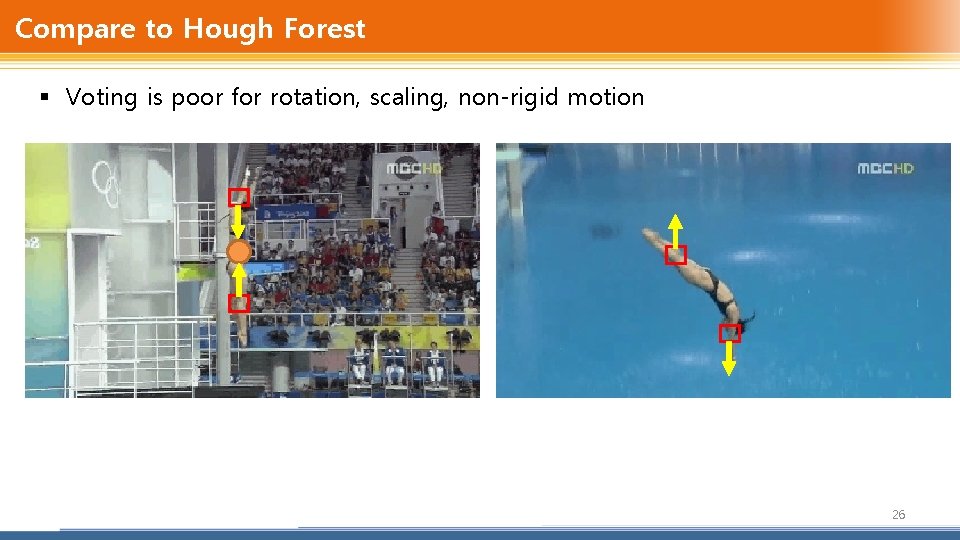

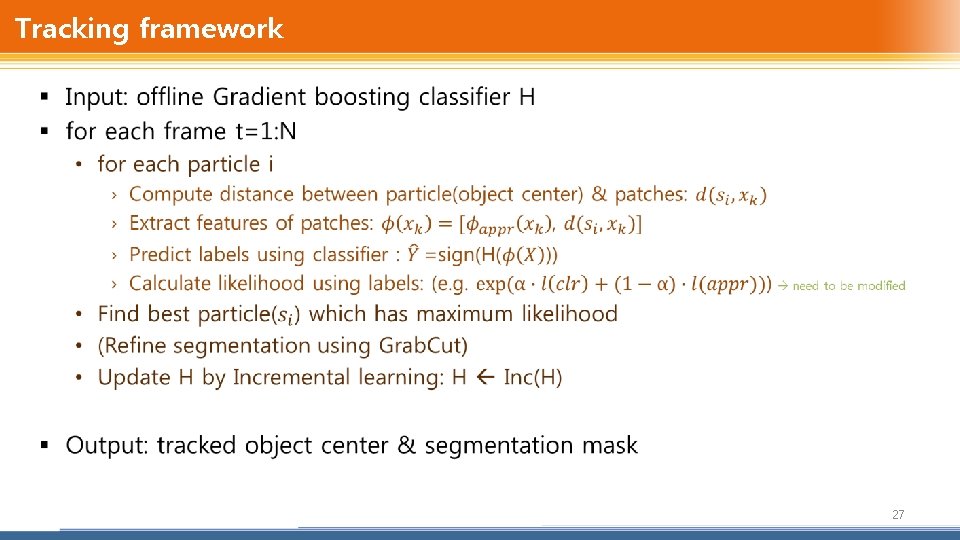

Tracking framework

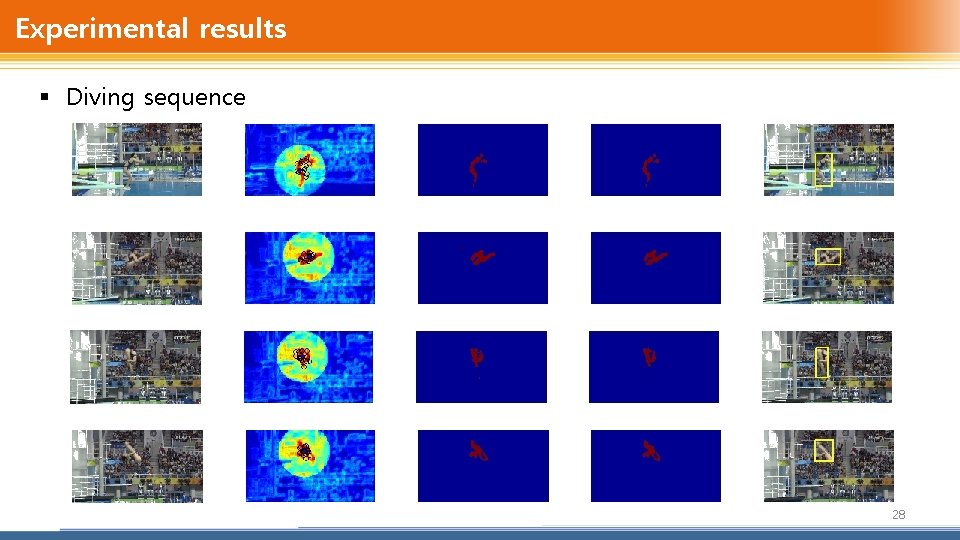

Experimental results
§ Diving sequence

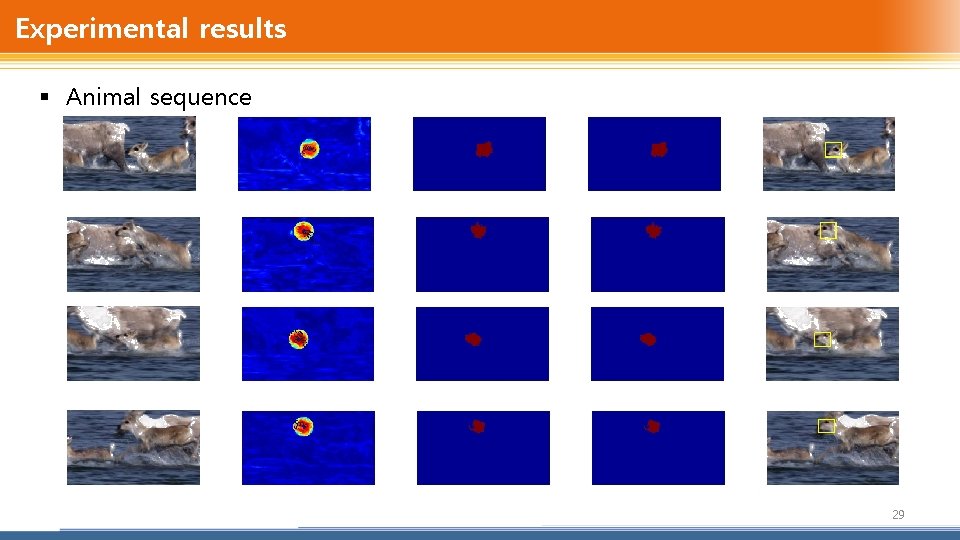

Experimental results
§ Animal sequence

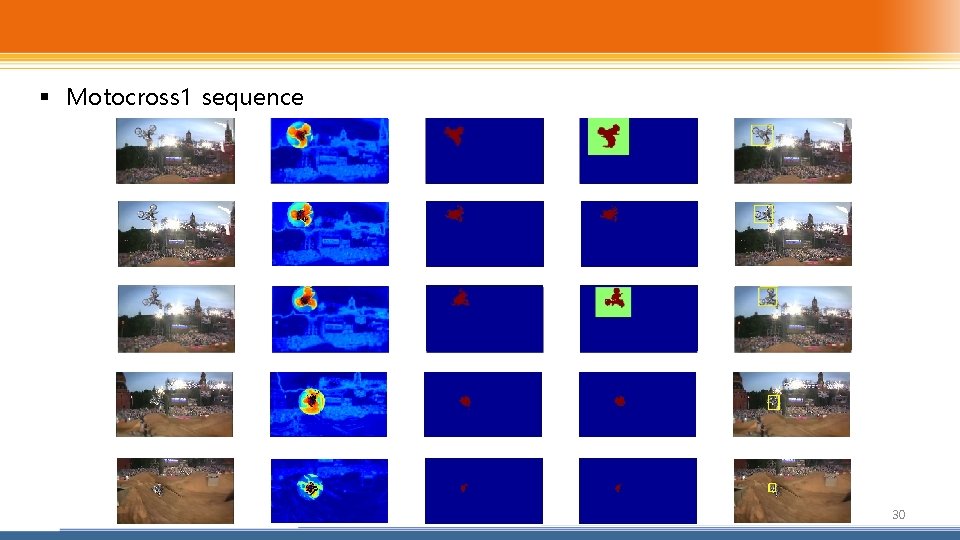

§ Motocross 1 sequence

Pros. & Cons.
§ Pros.
• Finds the global minimum of the error function
• Robust to transformation of the object (rotation, non-rigid motion)
• Can adopt a particle filter tracking framework
› Only a few particles (10~20) are used for tracking
– We only consider translation of the object center
» State space is 2-dimensional (x, y location)
• Obtains not only the object center but also a rough segmentation
§ Cons.
• Slow (0.2 fps): sampling
• Accumulates online samples: forgetting function
• Adjusts only the threshold: feature selection
• Quantization error of the histogram
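The particle filter mentioned above, with a 2-dimensional (x, y) state and a handful of particles, can be sketched as one resample, propagate, reweight step. This is an illustration under assumptions: the motion model is a Gaussian random walk, and `score` is a hypothetical stand-in for whatever the classifier returns for a candidate object center. The function name `particle_filter_step` is also hypothetical.

```python
import math
import random


def particle_filter_step(particles, weights, score, sigma, rng):
    """One bootstrap particle filter step over candidate object centers.

    particles is a list of (x, y) centers, weights their normalized weights,
    score a function mapping a center to a non-negative likelihood.
    Returns (new_particles, new_weights, estimated_center).
    """
    n = len(particles)
    # Resample in proportion to the previous weights.
    resampled = rng.choices(particles, weights=weights, k=n)
    # Propagate: translation only, so the state is just (x, y).
    moved = [(x + rng.gauss(0.0, sigma), y + rng.gauss(0.0, sigma))
             for x, y in resampled]
    # Reweight with the (hypothetical) classifier score.
    raw = [score(p) for p in moved]
    total = sum(raw)
    new_weights = [v / total for v in raw] if total > 0 else [1.0 / n] * n
    # Estimate the object center as the weighted mean of the particles.
    cx = sum(w * p[0] for w, p in zip(new_weights, moved))
    cy = sum(w * p[1] for w, p in zip(new_weights, moved))
    return moved, new_weights, (cx, cy)


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [(0.0, 0.0)] * 15
    weights = [1.0 / 15] * 15
    # Toy score peaked at (3, 1); a real tracker would use classifier output.
    toy = lambda p: math.exp(-((p[0] - 3.0) ** 2 + (p[1] - 1.0) ** 2) / 8.0)
    for _ in range(10):
        particles, weights, center = particle_filter_step(
            particles, weights, toy, 1.0, rng)
    print(center)
```

Restricting the state to translation keeps the number of particles small, which matters given the reported speed.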

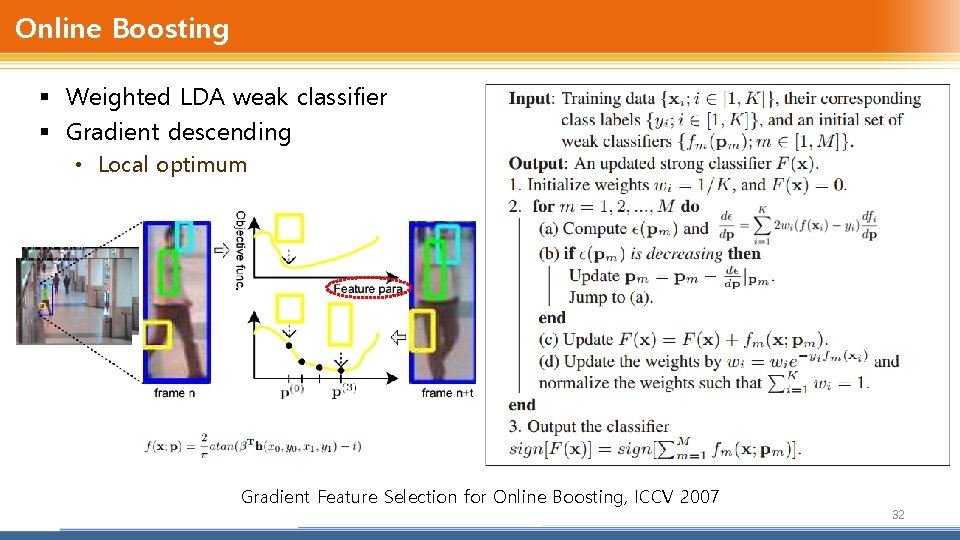
