Non-Data-Communication Overheads in MPI: Analysis on Blue Gene/P

P. Balaji, A. Chan, W. Gropp, R. Thakur, E. Lusk
Argonne National Laboratory; University of Chicago; University of Illinois, Urbana-Champaign

Ultra-scale High-end Computing
• Processor speeds no longer doubling every 18-24 months
  – High-end computing systems growing in parallelism
• Energy usage and heat dissipation are major issues now
  – Energy usage is proportional to V²F (V = supply voltage, F = clock frequency)
  – Lots of slow cores use less energy than one fast core
• Consequence:
  – HEC systems rely less on the performance of a single core
  – Instead, extract parallelism out of a massive number of low-frequency/low-power cores
  – E.g., IBM Blue Gene/L, IBM Blue Gene/P, SiCortex

Pavan Balaji, Argonne National Laboratory, EuroPVM/MPI (09/08/2008)
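As a rough illustration of the V²F relation on this slide, the sketch below compares one fast core against four slow ones. The voltage and frequency values are hypothetical normalized numbers chosen only to show the shape of the trade-off; they are not Blue Gene/P figures.

```python
def relative_power(voltage, frequency, cores=1):
    """Relative dynamic power of `cores` identical cores, using P ~ V^2 * F."""
    return cores * voltage ** 2 * frequency


# Hypothetical normalized operating points (assumptions, not measurements).
fast = relative_power(voltage=1.0, frequency=1.0, cores=1)
slow = relative_power(voltage=0.7, frequency=0.5, cores=4)

# Aggregate clock cycles per unit time, assuming perfect parallelism.
fast_throughput = 1 * 1.0
slow_throughput = 4 * 0.5

print(f"one fast core:   power={fast:.2f}, throughput={fast_throughput:.1f}")
print(f"four slow cores: power={slow:.2f}, throughput={slow_throughput:.1f}")
```

Under these assumed numbers the four slow cores draw slightly less power than the single fast core while offering twice the aggregate cycles, provided the application can actually use the parallelism.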

IBM Blue Gene/P System
• Second generation of the Blue Gene supercomputers
• Extremely energy-efficient design using low-power chips
  – Four 850 MHz cores on each PPC 450 processor
• Connected using five specialized networks
  – Two of them (10G and 1G Ethernet) are used for file I/O and system management
  – Remaining three (3D torus, global collective network, global interrupt network) are used for MPI communication
    • Point-to-point communication goes through the torus network
    • Each node has six outgoing links at 425 MBps (total of 5.1 GBps, counting both directions of each link)

Blue Gene/P Software Stack
• Three software stack layers:
  – System Programming Interface (SPI)
    • Directly above the hardware
    • Most efficient, but very difficult to program and not portable!
  – Deep Computing Messaging Framework (DCMF)
    • Portability layer built on top of SPI
    • Generalized message-passing framework
    • Allows different stacks to be built on top
  – MPI
    • Built on top of DCMF
    • Most portable of the three layers
    • Based off of MPICH2 (integrated into MPICH2 as of 1.1a1)

Issues with Scaling MPI on the BG/P
• Large-scale systems such as BG/P provide the capacity needed for achieving a petaflop or higher performance
• This system capacity has to be translated to capability for end users
• Depends on MPI’s ability to scale to a large number of cores
  – Pre- and post-data-communication processing in MPI
    • Simple computations can be expensive on modestly fast 850 MHz CPUs
• Algorithmic issues
  – Consider an O(N) algorithm with a small proportionality constant
  – “Acceptable” on 100 processors; brutal on 100,000 processors
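The "acceptable on 100, brutal on 100,000" point can be made concrete with a small calculation. The sketch below assumes a hypothetical per-process constant of 0.01 us for an O(N) step inside an MPI call; the constant is illustrative only and is not a measured Blue Gene/P value.

```python
def per_call_cost_us(num_processes, cost_per_process_us=0.01):
    """Cost of an O(N) step that touches one entry per process.

    `cost_per_process_us` is an assumed proportionality constant.
    """
    return num_processes * cost_per_process_us


for n in (100, 100_000):
    print(f"{n:>7} processes: {per_call_cost_us(n):10.1f} us per call")
```

With this assumed constant the step costs about 1 us on 100 processes but about 1000 us on 100,000, which would dwarf the microsecond-scale message latencies reported later in the talk.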

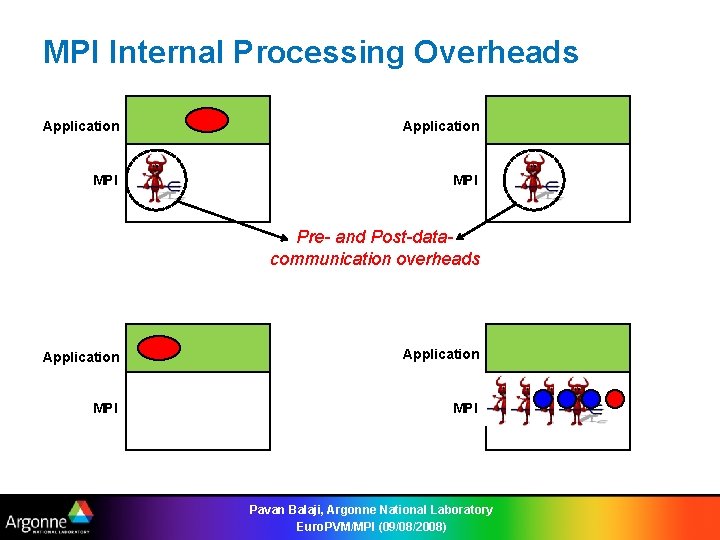

MPI Internal Processing Overheads
[Diagram: two Application-over-MPI stacks, labeled "Pre- and post-data-communication overheads"]

Presentation Outline
• Introduction
• Issues with Scaling MPI on Blue Gene/P
• Experimental Evaluation
  – MPI Stack Computation Overhead
  – Algorithmic Inefficiencies
• Concluding Remarks

Basic MPI Stack Overhead
[Diagram: an Application/MPI/DCMF stack compared with an Application/DCMF stack]

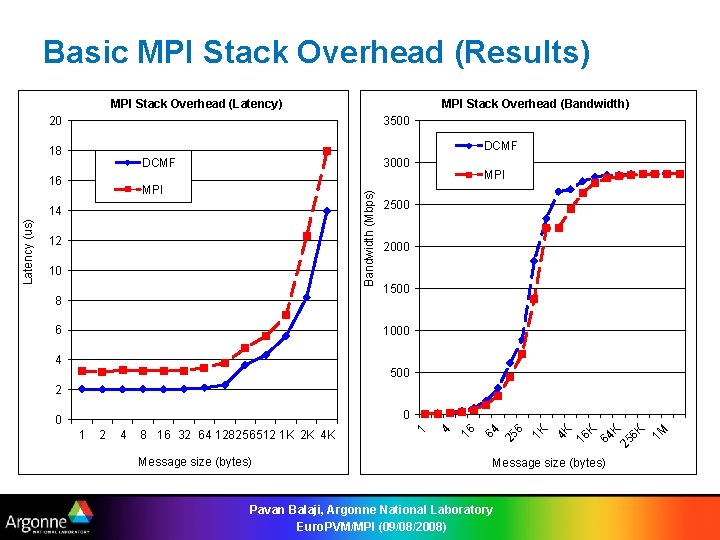

Basic MPI Stack Overhead (Results)
[Figure: two panels comparing DCMF and MPI. "MPI Stack Overhead (Latency)": latency (us) vs. message size (bytes). "MPI Stack Overhead (Bandwidth)": bandwidth (Mbps) vs. message size (bytes).]

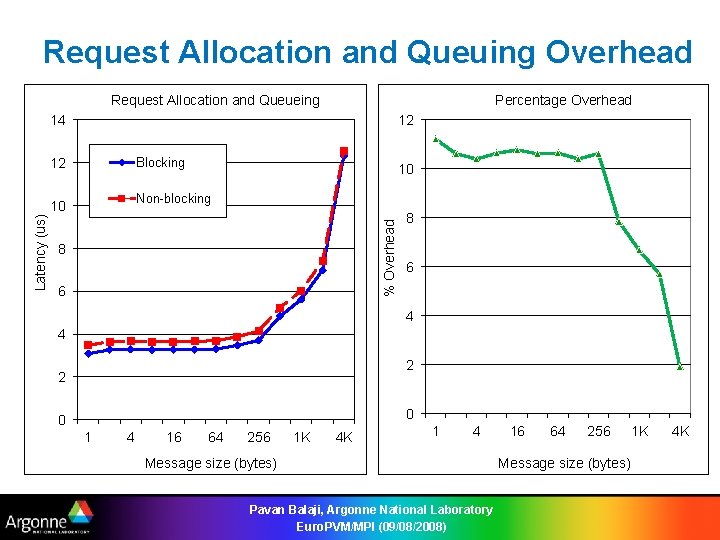

Request Allocation and Queuing
• Blocking vs. non-blocking point-to-point communication
  – Blocking: MPI_Send() and MPI_Recv()
  – Non-blocking: MPI_Isend(), MPI_Irecv() and MPI_Waitall()
• Non-blocking communication potentially allows for better overlap of computation with communication, but…
  – …requires allocation, initialization and queuing/de-queuing of MPI_Request handles
• What are we measuring?
  – Latency test using MPI_Send() and MPI_Recv()
  – Latency test using MPI_Irecv(), MPI_Isend() and MPI_Waitall()

Request Allocation and Queuing Overhead
[Figure: two panels. "Request Allocation and Queueing": latency (us) vs. message size (bytes), blocking vs. non-blocking. "Percentage Overhead": % overhead vs. message size (bytes).]

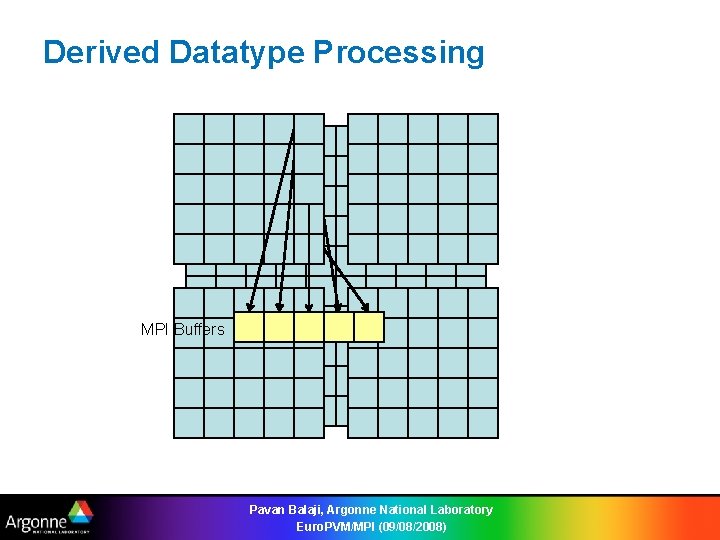

Derived Datatype Processing
[Diagram: MPI buffers]

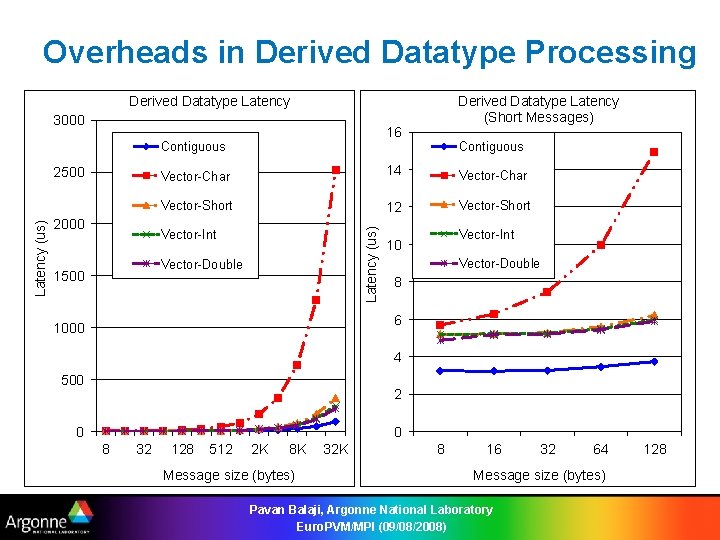

Overheads in Derived Datatype Processing
[Figure: two panels, "Derived Datatype Latency" and "Derived Datatype Latency (Short Messages)": latency (us) vs. message size (bytes) for Contiguous, Vector-Char, Vector-Short, Vector-Int and Vector-Double datatypes.]
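To make the vector cases in the legend more concrete, here is a toy model of what packing a strided vector into a contiguous buffer involves. The `count`, `blocklength` and `stride` parameters mirror the arguments of `MPI_Type_vector`; the function itself (`pack_vector`) is a hypothetical illustration and not how MPICH2 or DCMF actually process datatypes.

```python
def pack_vector(buffer, count, blocklength, stride, elem_size):
    """Gather `count` blocks of `blocklength` elements, spaced `stride`
    elements apart, into one contiguous bytes object.

    Returns (packed_bytes, copy_operations).
    """
    packed = bytearray()
    copies = 0
    for block in range(count):
        start = block * stride * elem_size
        end = start + blocklength * elem_size
        packed += buffer[start:end]  # one copy per block
        copies += 1
    return bytes(packed), copies


MESSAGE_BYTES = 1024  # assumed payload size for the comparison
for name, elem_size in (("char", 1), ("short", 2), ("int", 4), ("double", 8)):
    count = MESSAGE_BYTES // elem_size     # one element per block
    source = bytes(count * 2 * elem_size)  # stride of 2 elements
    packed, copies = pack_vector(source, count, 1, 2, elem_size)
    print(f"Vector-{name}: {len(packed)} bytes packed in {copies} copies")
```

In this model the same payload needs eight times as many block copies for a vector of chars as for a vector of doubles, which is one plausible reason small element types could cost more per byte.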

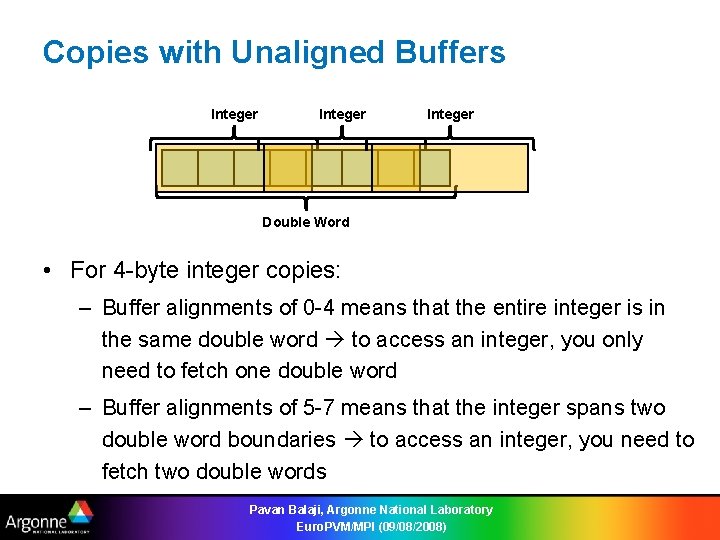

Copies with Unaligned Buffers
[Diagram: an integer placed within a double word]
• For 4-byte integer copies:
  – Buffer alignments of 0-4 mean that the entire integer lies within one double word: to access the integer, you only need to fetch one double word
  – Buffer alignments of 5-7 mean that the integer straddles a double-word boundary: to access the integer, you need to fetch two double words
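The 0-4 versus 5-7 split follows from simple address arithmetic, sketched below. It assumes an 8-byte double word, as the slide implies; the helper name `double_words_touched` is made up for this example.

```python
DOUBLE_WORD_BYTES = 8  # assumed double-word size


def double_words_touched(offset, size=4):
    """Number of double words covered by `size` bytes starting at `offset`."""
    first = offset // DOUBLE_WORD_BYTES
    last = (offset + size - 1) // DOUBLE_WORD_BYTES
    return last - first + 1


for alignment in range(8):
    fetches = double_words_touched(alignment)
    print(f"alignment {alignment}: {fetches} double word(s)")
```

An integer at offset 4 occupies bytes 4 through 7 and still fits in one double word; at offset 5 its last byte falls in the next double word, so two fetches are needed.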

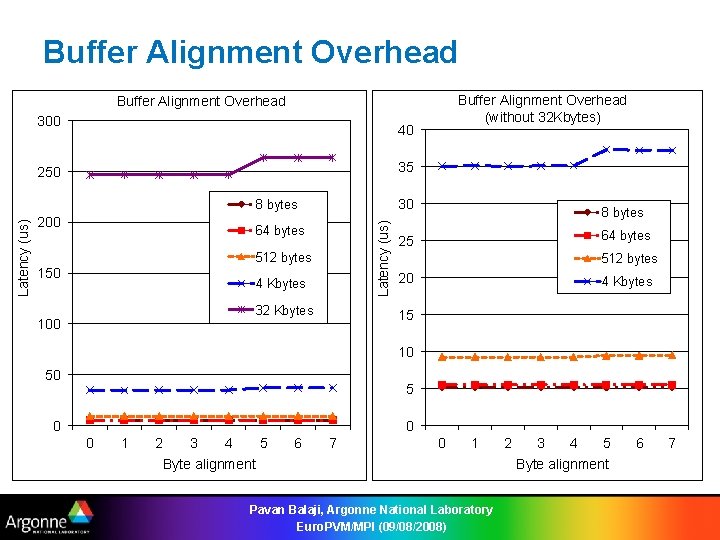

Buffer Alignment Overhead
[Figure: two panels, "Buffer Alignment Overhead" and "Buffer Alignment Overhead (without 32 Kbytes)": latency (us) vs. byte alignment (0-7) for message sizes of 8 bytes, 64 bytes, 512 bytes and 4 Kbytes, plus 32 Kbytes in the first panel only.]

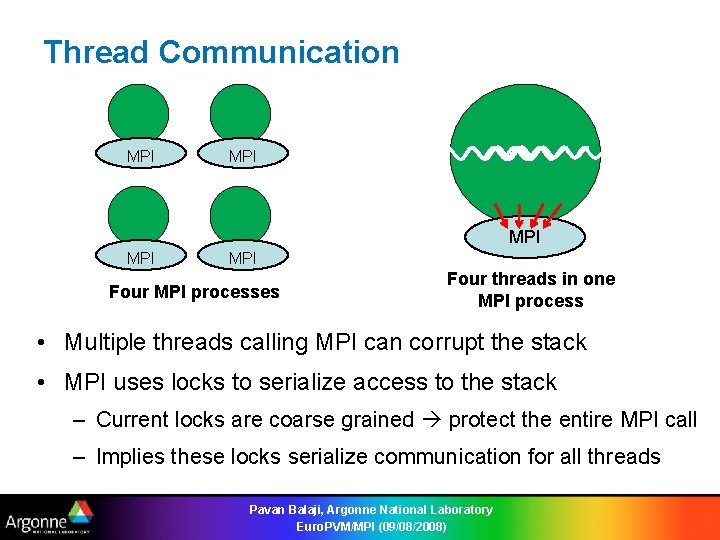

Thread Communication
[Diagram: four MPI processes vs. four threads in one MPI process]
• Multiple threads calling MPI can corrupt the stack
• MPI uses locks to serialize access to the stack
  – Current locks are coarse-grained: they protect the entire MPI call
  – Implies these locks serialize communication for all threads
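The effect of a coarse-grained lock can be shown with a toy model. The class below is a hypothetical stand-in for a communication stack guarded by one global lock; it is not MPICH2 code, and it only demonstrates that no two threads are ever inside a call at the same time.

```python
import threading


class CoarseLockedStack:
    """Toy stand-in for a communication stack guarded by one global lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self._inside = 0          # threads currently inside a call
        self.max_concurrent = 0   # highest value _inside ever reached
        self.calls = 0

    def send(self):
        # The lock covers the whole call, so calls from all threads serialize.
        with self._lock:
            self._inside += 1
            self.max_concurrent = max(self.max_concurrent, self._inside)
            self.calls += 1
            self._inside -= 1


def run(num_threads=4, calls_per_thread=1000):
    stack = CoarseLockedStack()

    def worker():
        for _ in range(calls_per_thread):
            stack.send()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return stack


stack = run()
print(f"calls={stack.calls}, max threads inside a call={stack.max_concurrent}")
```

Because the lock spans the entire call, adding threads adds contention rather than concurrency inside the stack, whereas separate processes each own their own stack state.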

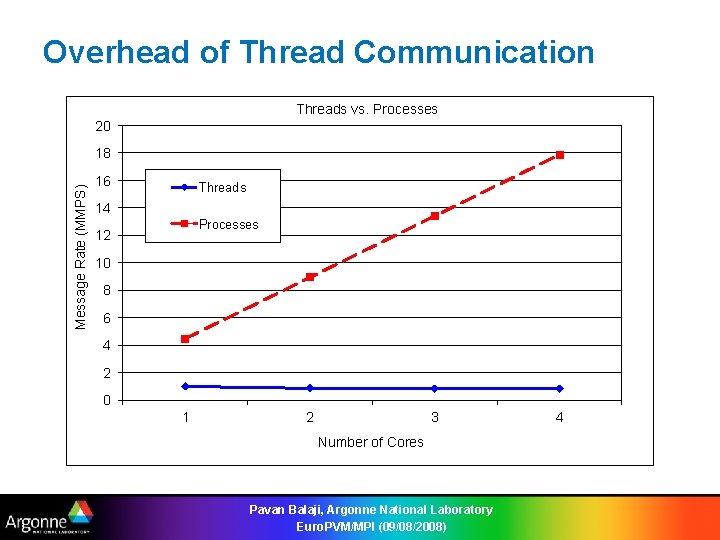

Overhead of Thread Communication
[Figure: "Threads vs. Processes": message rate (MMPS) vs. number of cores (1-4) for threads and processes.]

Tag and Source Matching
[Diagram: a queue of four posted requests, each labeled with a source and a tag]
• Search time in most implementations is linear with respect to the number of requests posted
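A linear search over posted requests can be sketched as follows. This is a simplified model with a hypothetical `ANY` wildcard standing in for `MPI_ANY_SOURCE` and `MPI_ANY_TAG`; real implementations track more state (communicator, context, ordering), so treat it only as an illustration of why the cost grows with queue length.

```python
ANY = -1  # hypothetical wildcard, standing in for MPI_ANY_SOURCE / MPI_ANY_TAG


def match_posted(posted, source, tag):
    """Scan the posted-receive queue in order for the first matching entry.

    `posted` is a list of (source, tag) pairs.
    Returns (index, comparisons); index is None if nothing matches.
    """
    comparisons = 0
    for index, (want_source, want_tag) in enumerate(posted):
        comparisons += 1
        if want_source in (ANY, source) and want_tag in (ANY, tag):
            return index, comparisons
    return None, comparisons


# Worst case: the matching request sits at the tail of the queue.
for n in (2, 32, 512):
    posted = [(0, t) for t in range(n)]
    _, comparisons = match_posted(posted, source=0, tag=n - 1)
    print(f"{n:>4} posted requests: {comparisons} comparisons")
```

In the worst case every posted entry is inspected before a match is found, so the number of comparisons equals the queue length.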

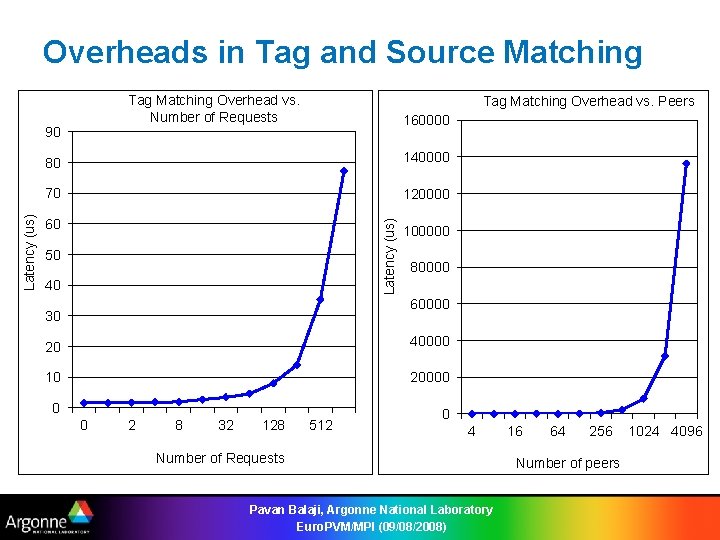

Overheads in Tag and Source Matching
[Figure: two panels, "Tag Matching Overhead vs. Number of Requests" and "Tag Matching Overhead vs. Peers": latency (us) vs. number of requests and vs. number of peers.]

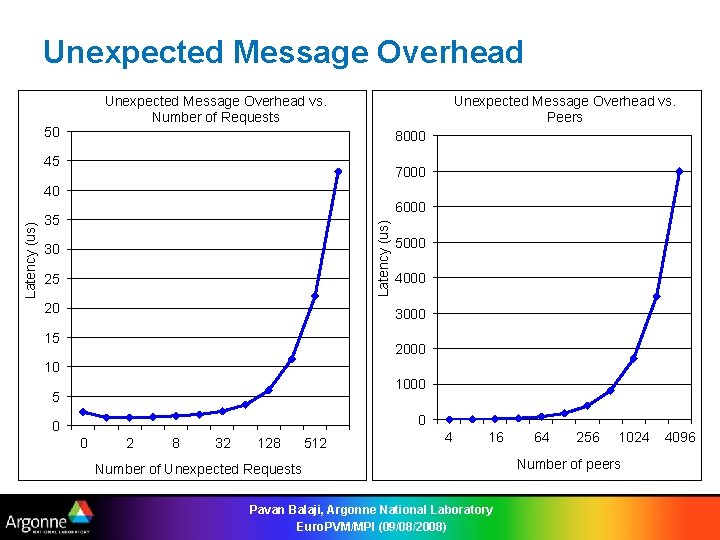

[Figure: two panels, "Unexpected Message Overhead vs. Number of Requests" and "Unexpected Message Overhead vs. Peers": latency (us) vs. number of unexpected requests and vs. number of peers.]

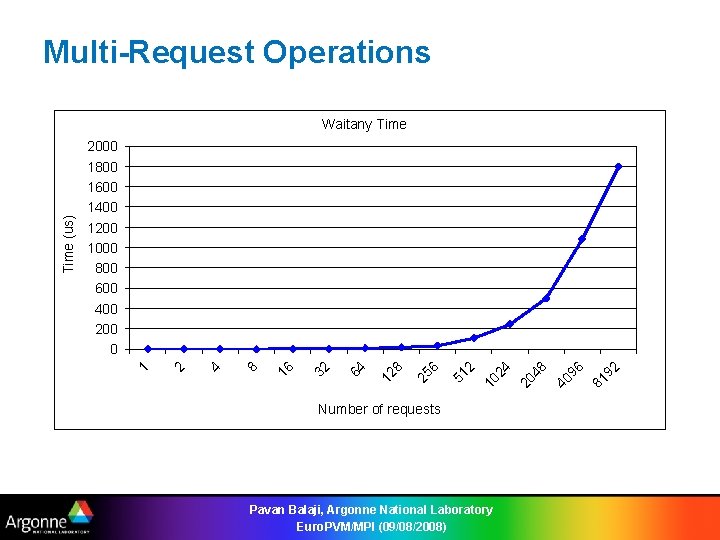

Multi-Request Operations
[Figure: "Waitany Time": time (us) vs. number of requests.]

Concluding Remarks
• Systems such as BG/P provide the capacity needed for achieving a petaflop or higher performance
• System capacity has to be translated to end-user capability
  – Depends on MPI’s ability to scale to a large number of cores
• We studied the non-data-communication overheads in MPI on BG/P
  – Identified several bottleneck possibilities within MPI
  – Stressed these bottlenecks with benchmarks
  – Analyzed the reasons behind such overheads

Thank You!
Contact:
Pavan Balaji: balaji@mcs.anl.gov
Anthony Chan: chan@mcs.anl.gov
William Gropp: wgropp@illinois.edu
Rajeev Thakur: thakur@mcs.anl.gov
Rusty Lusk: lusk@mcs.anl.gov
Project Website: http://www.mcs.anl.gov/research/projects/mpich2
