Nonconvex Optimization for Machine Learning • Prateek Jain, Microsoft


Non-convex Optimization for Machine Learning • Prateek Jain, Microsoft Research India

Outline
- Optimization for Machine Learning
- Non-convex Optimization
- Convergence to Stationary Points
  - First order stationary points
  - Second order stationary points
- Non-convex Optimization in ML
  - Neural Networks
  - Learning with Structure
    - Alternating Minimization
    - Projected Gradient Descent

Relevant Monograph (Shameless Ad)

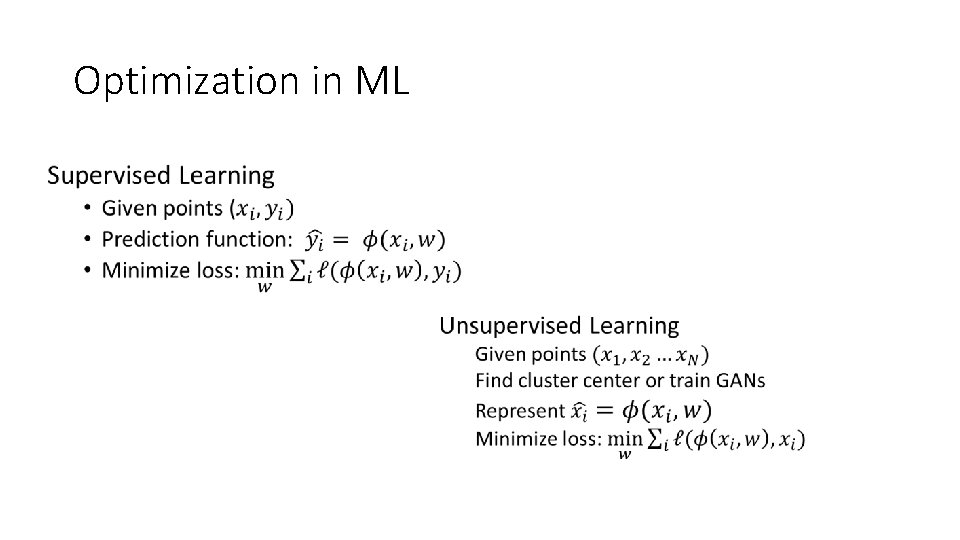

Optimization in ML

Optimization Problems

Convex Optimization • Convex set • Convex function (slide credit: Purushottam Kar)

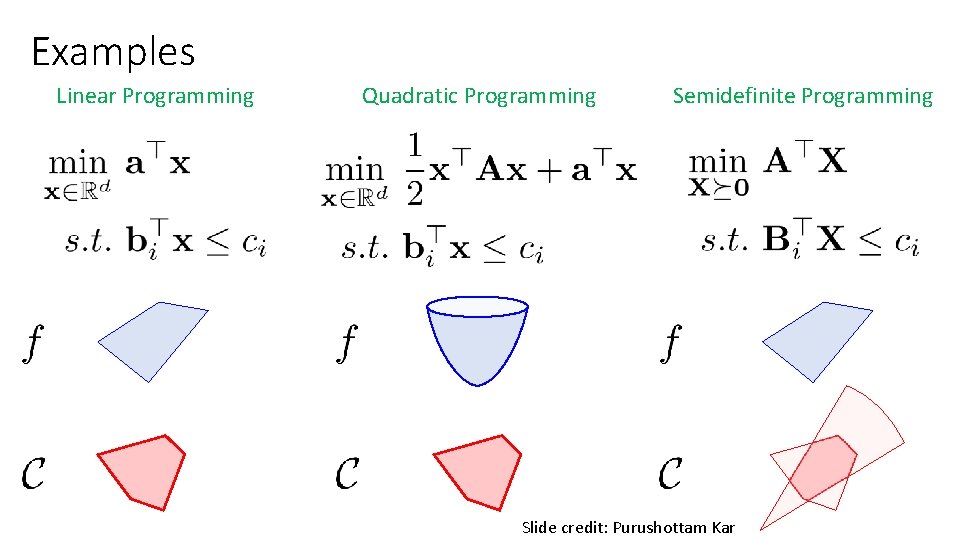

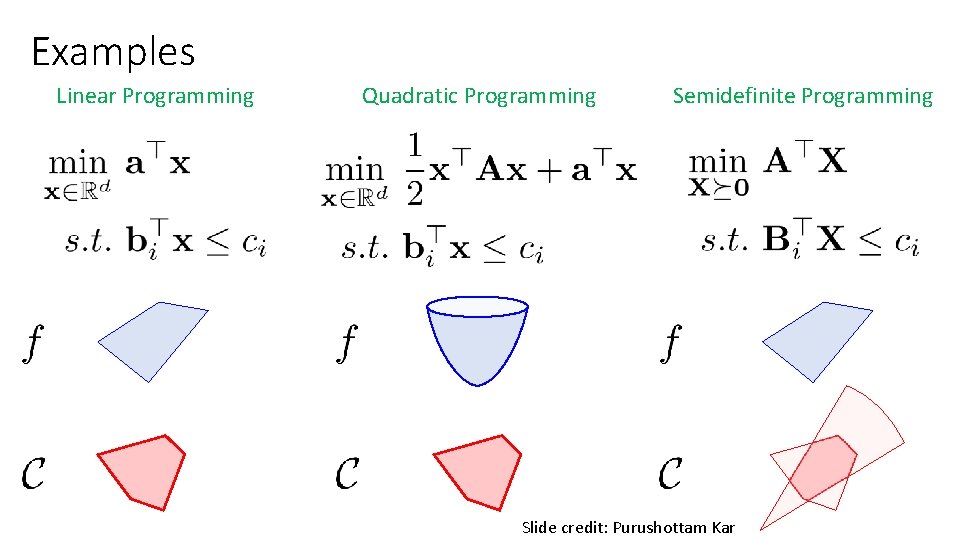
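For reference, the two notions named on this slide have the following standard definitions, written in our own notation since the slide's formulas did not survive extraction:

```latex
\begin{aligned}
&\text{Convex set } \mathcal{C}: && x, y \in \mathcal{C},\ \lambda \in [0,1] \;\Longrightarrow\; \lambda x + (1-\lambda) y \in \mathcal{C},\\
&\text{Convex function } f: && f\big(\lambda x + (1-\lambda) y\big) \le \lambda f(x) + (1-\lambda) f(y) \quad \forall\, x, y \in \operatorname{dom} f,\ \lambda \in [0,1].
\end{aligned}
```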

Examples • Linear Programming • Quadratic Programming • Semidefinite Programming (slide credit: Purushottam Kar)

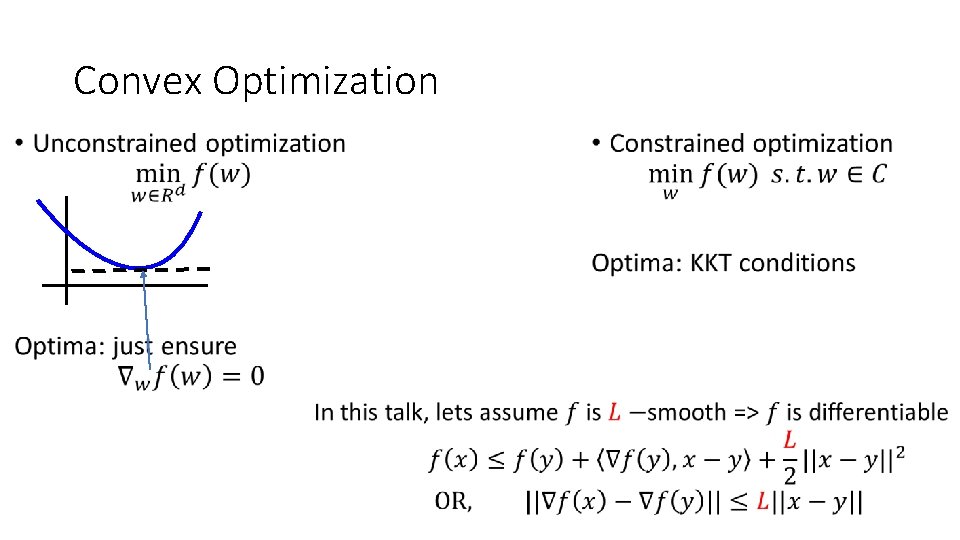

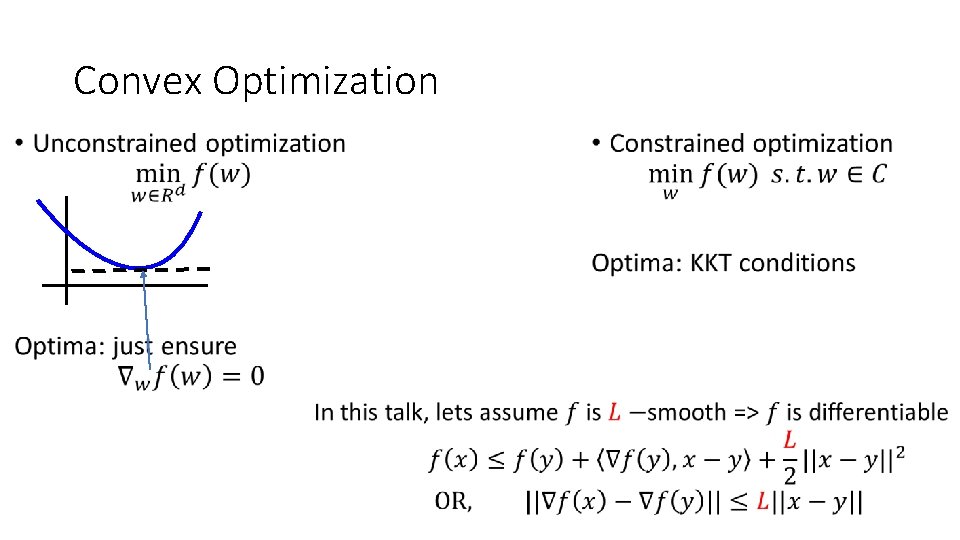

Convex Optimization

Gradient Descent Methods

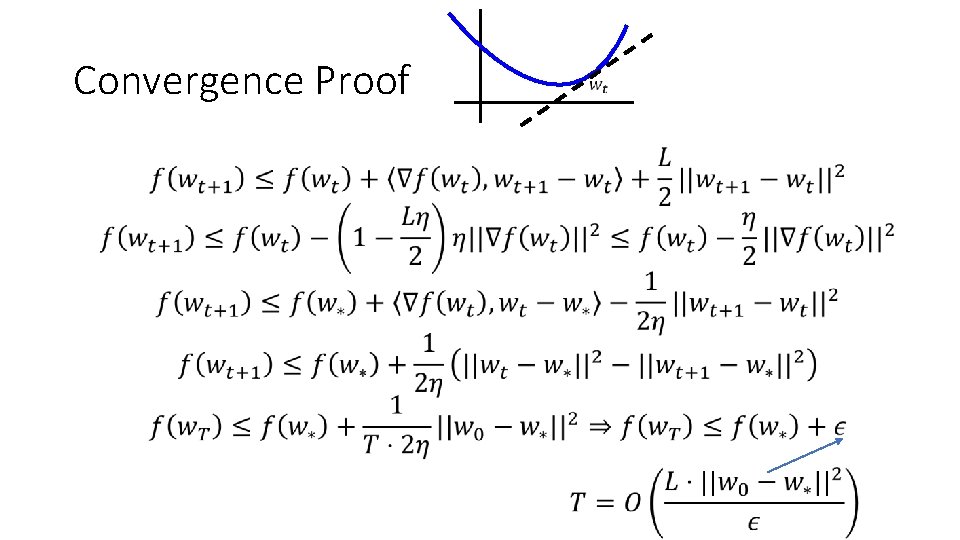

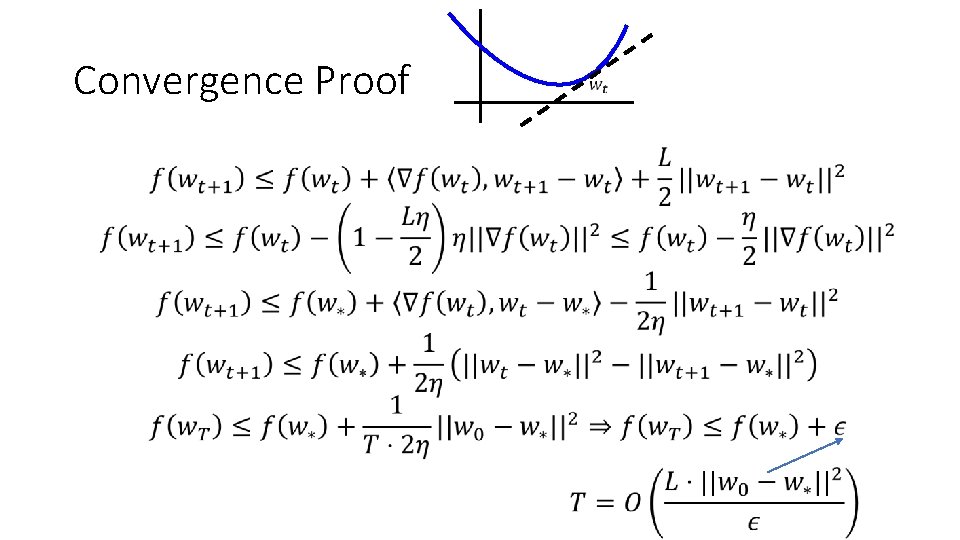
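A minimal sketch of the basic gradient descent update x_{t+1} = x_t − η∇f(x_t), assuming a fixed step η = 1/L where L is the smoothness constant of f. The quadratic test function and its data are hypothetical, chosen only so the answer can be checked in closed form.

```python
import numpy as np

def gradient_descent(grad, x0, step, num_iters):
    """Plain gradient descent: x_{t+1} = x_t - step * grad(x_t)."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(num_iters):
        x = x - step * grad(x)
    return x

# Hypothetical convex quadratic f(x) = 0.5 * x^T A x - b^T x, minimized at A^{-1} b.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
b = np.array([1.0, -1.0])
L = np.linalg.eigvalsh(A).max()          # smoothness constant of f
x_hat = gradient_descent(lambda x: A @ x - b, np.zeros(2), 1.0 / L, 500)
x_star = np.linalg.solve(A, b)
print(np.allclose(x_hat, x_star))        # True
```

With η = 1/L each step is guaranteed not to increase f; larger steps can diverge, smaller ones only slow convergence.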

Convergence Proof

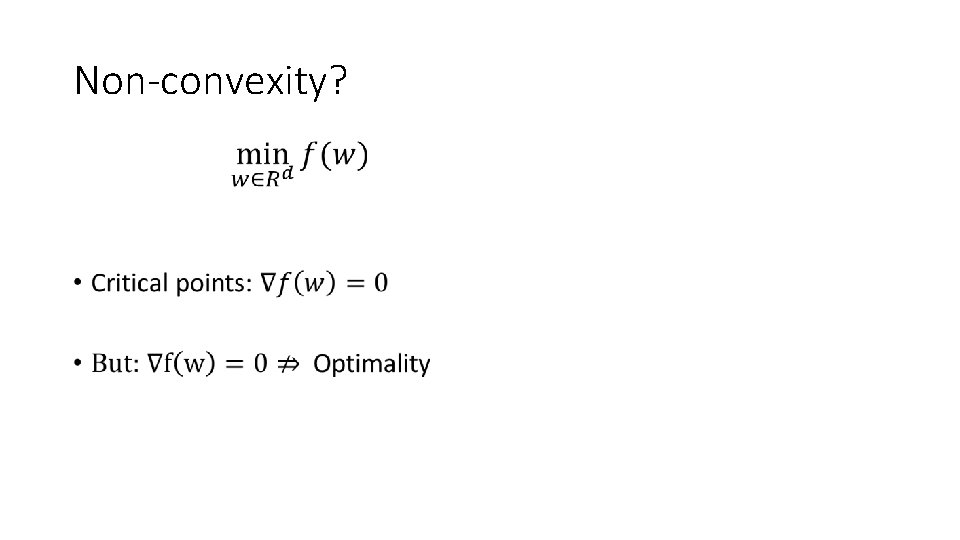

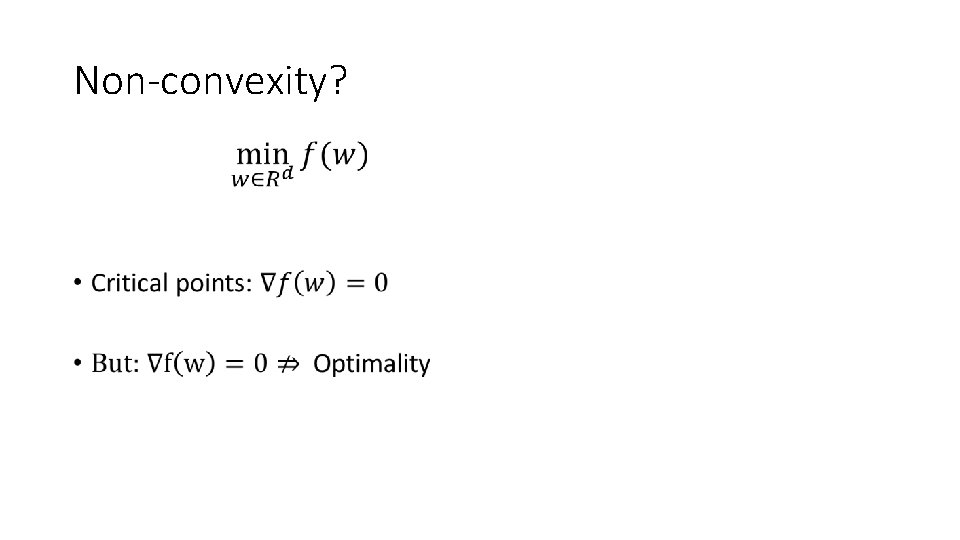
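A standard version of this argument, assuming f is convex and L-smooth and the step size is 1/L (our assumptions; the slide's exact constants are not recoverable), chains three facts and then telescopes:

```latex
\begin{aligned}
&\text{(smoothness, } x_{t+1} = x_t - \tfrac{1}{L}\nabla f(x_t)\text{):} && f(x_{t+1}) \le f(x_t) - \tfrac{1}{2L}\|\nabla f(x_t)\|^2,\\
&\text{(convexity):} && f(x_t) - f(x^\star) \le \langle \nabla f(x_t),\, x_t - x^\star\rangle,\\
&\text{(expand } \|x_{t+1}-x^\star\|^2\text{):} && \langle \nabla f(x_t),\, x_t - x^\star\rangle = \tfrac{L}{2}\big(\|x_t - x^\star\|^2 - \|x_{t+1} - x^\star\|^2\big) + \tfrac{1}{2L}\|\nabla f(x_t)\|^2,\\
&\text{(combine):} && f(x_{t+1}) - f(x^\star) \le \tfrac{L}{2}\big(\|x_t - x^\star\|^2 - \|x_{t+1} - x^\star\|^2\big),\\
&\text{(sum over } t < T\text{, } f(x_t) \text{ non-increasing):} && f(x_T) - f(x^\star) \le \frac{L\,\|x_0 - x^\star\|^2}{2T}.
\end{aligned}
```

So O(L‖x_0 − x*‖²/ε) gradient calls suffice for an ε-suboptimal point in the convex case.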

Non-convexity?

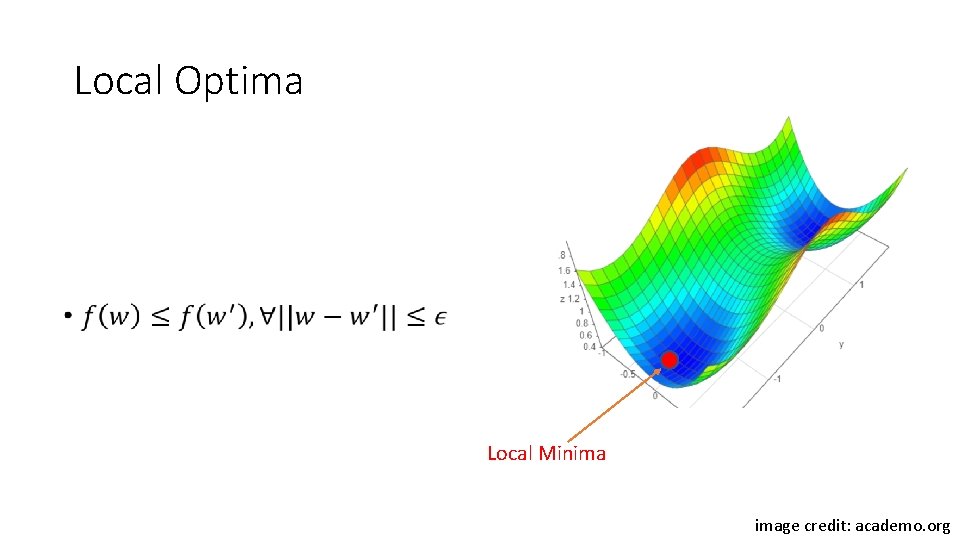

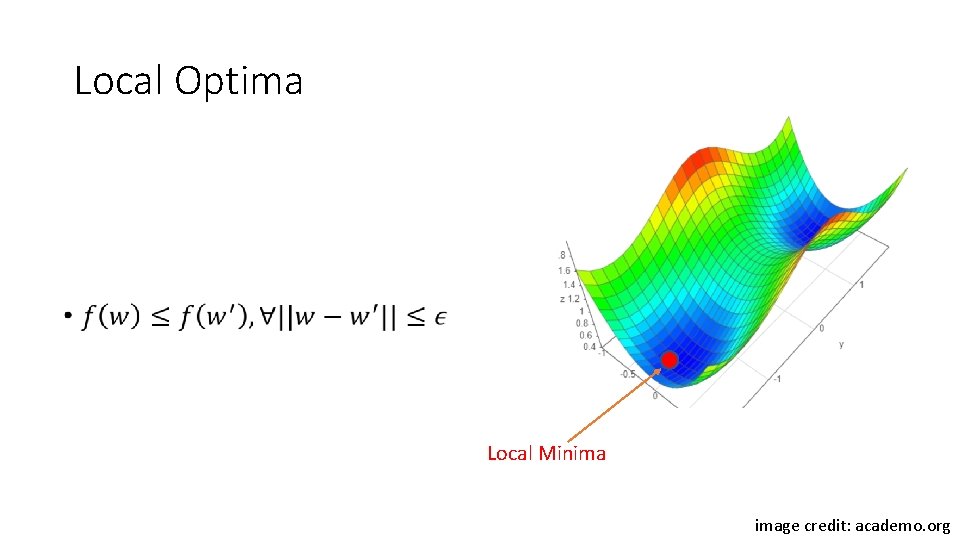

Local Optima • Local Minima (image credit: academo.org)

First Order Stationary Points • First Order Stationary Point (FOSP) (image credit: academo.org)


Second Order Stationary Points • Second Order Stationary Point (SOSP) (image credit: academo.org)


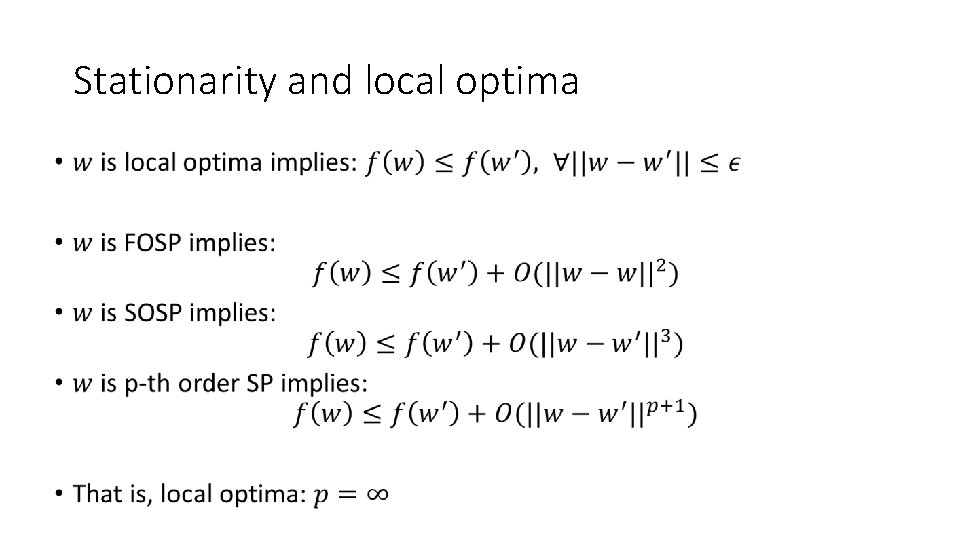

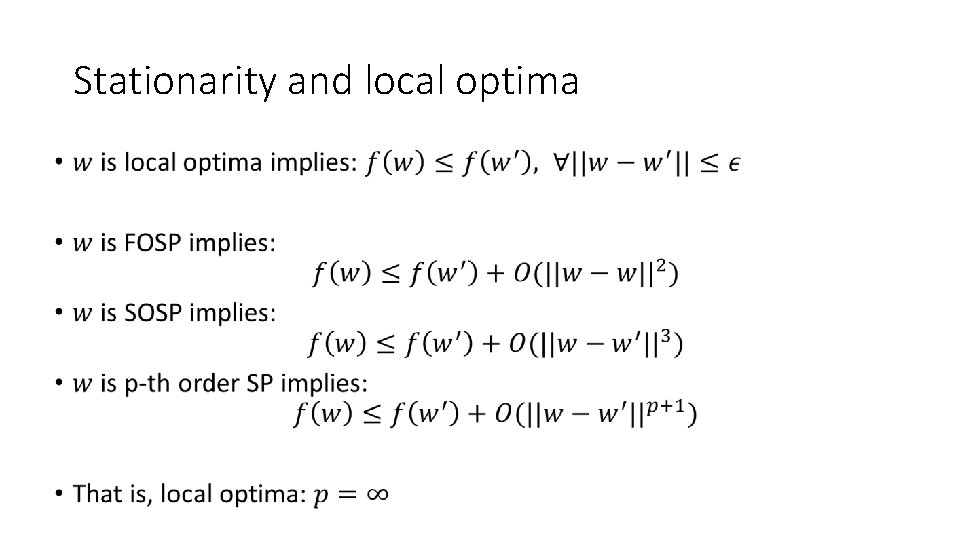
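Written out, x is a first order stationary point if ∇f(x) = 0, and a second order stationary point if additionally ∇²f(x) ⪰ 0. Algorithms reach these only approximately; a common relaxation (used, e.g., by Jin et al. 2017) asks for ‖∇f(x)‖ ≤ ε and λ_min(∇²f(x)) ≥ −√(ρε), where ρ is the Lipschitz constant of the Hessian. The sketch below checks both conditions at the saddle of the hypothetical function f(x, y) = x² − y²; the helper names and tolerance values are illustrative.

```python
import numpy as np

def is_fosp(grad_x, eps):
    """Approximate first-order stationarity: ||grad f(x)|| <= eps."""
    return np.linalg.norm(grad_x) <= eps

def is_sosp(grad_x, hess_x, eps, rho):
    """Approximate second-order stationarity (Jin et al. 2017 style):
    ||grad f(x)|| <= eps and lambda_min(Hessian) >= -sqrt(rho * eps)."""
    lam_min = np.linalg.eigvalsh(hess_x).min()
    return is_fosp(grad_x, eps) and lam_min >= -np.sqrt(rho * eps)

# f(x, y) = x^2 - y^2 has a saddle point at the origin.
grad = lambda z: np.array([2 * z[0], -2 * z[1]])
hess = lambda z: np.array([[2.0, 0.0], [0.0, -2.0]])
origin = np.zeros(2)
print(is_fosp(grad(origin), 1e-6))                     # True: gradient vanishes
print(is_sosp(grad(origin), hess(origin), 1e-6, 1.0))  # False: negative curvature
```

The origin passes the first-order test but fails the second-order one, which is exactly the gap between FOSP and SOSP.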

Stationarity and Local Optima

Computability? • First Order Stationary Point • Second Order Stationary Point • Third Order Stationary Point • Local Optima: NP-hard [Anandkumar and Ge-2016]

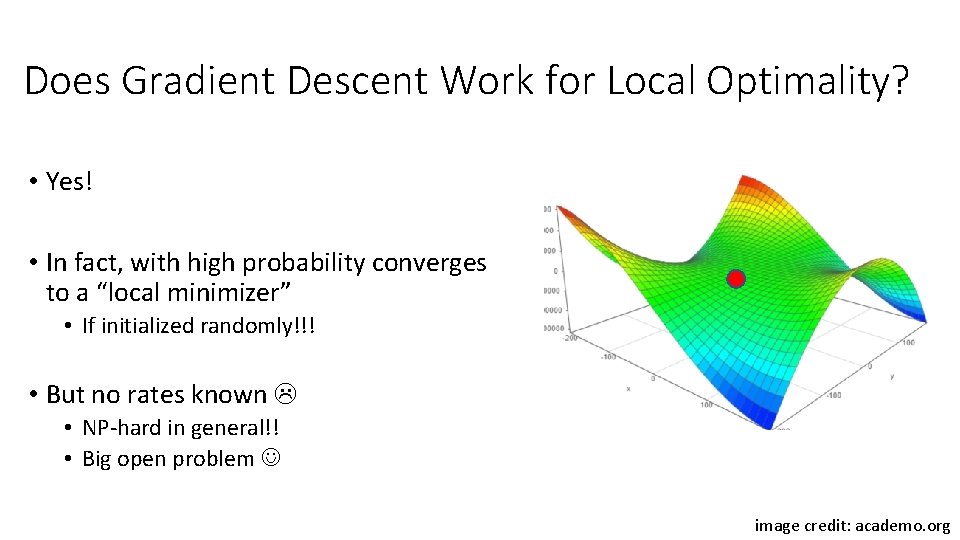

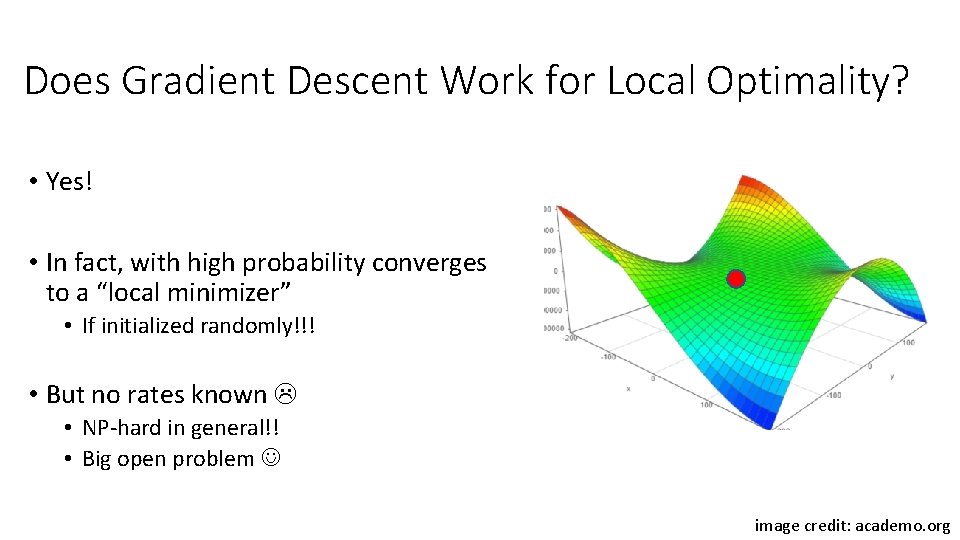

Does Gradient Descent Work for Local Optimality? • Yes! • In fact, if initialized randomly, it converges with high probability to a “local minimizer” • But no rates are known • NP-hard in general • Big open problem (image credit: academo.org)

Finding First Order Stationary Points • First Order Stationary Point (FOSP) (image credit: academo.org)

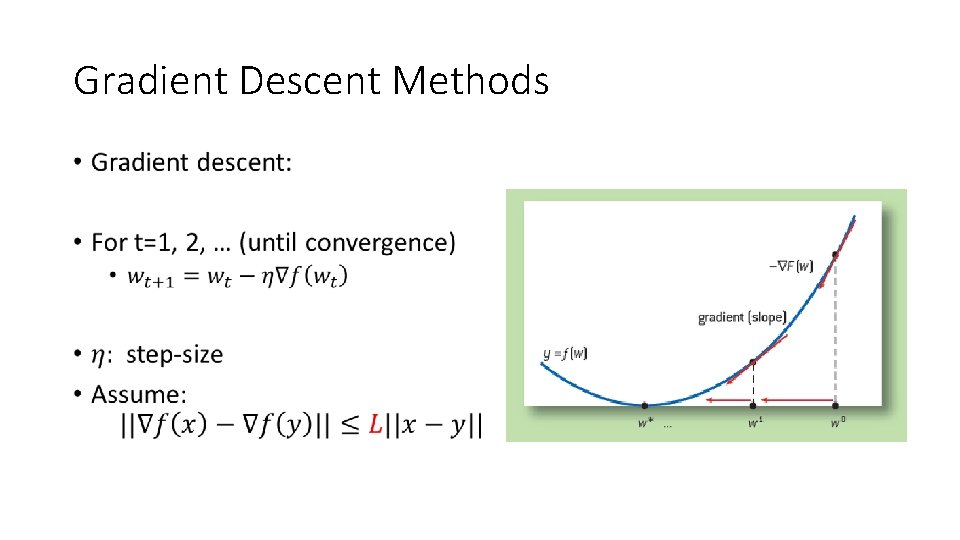

Gradient Descent Methods

Convergence to FOSP
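A standard derivation, assuming f is L-smooth (not necessarily convex), bounded below by f*, and run with step size 1/L (our assumptions), uses only the smoothness upper bound and a telescoping sum:

```latex
\begin{aligned}
f(x_{t+1}) &\le f(x_t) + \langle \nabla f(x_t),\, x_{t+1} - x_t\rangle + \tfrac{L}{2}\|x_{t+1} - x_t\|^2
 = f(x_t) - \tfrac{1}{2L}\|\nabla f(x_t)\|^2,\\
\tfrac{1}{2L}\sum_{t=0}^{T-1}\|\nabla f(x_t)\|^2 &\le f(x_0) - f(x_T) \le f(x_0) - f^\star,\\
\min_{0 \le t < T}\|\nabla f(x_t)\| &\le \sqrt{\frac{2L\,\big(f(x_0) - f^\star\big)}{T}}.
\end{aligned}
```

Hence T ≥ 2L(f(x_0) − f*)/ε² gradient calls guarantee an ε-FOSP, i.e. a point with ‖∇f(x)‖ ≤ ε; convexity is never used.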

Accelerated Gradient Descent for FOSP? • Ghadimi and Lan - 2013

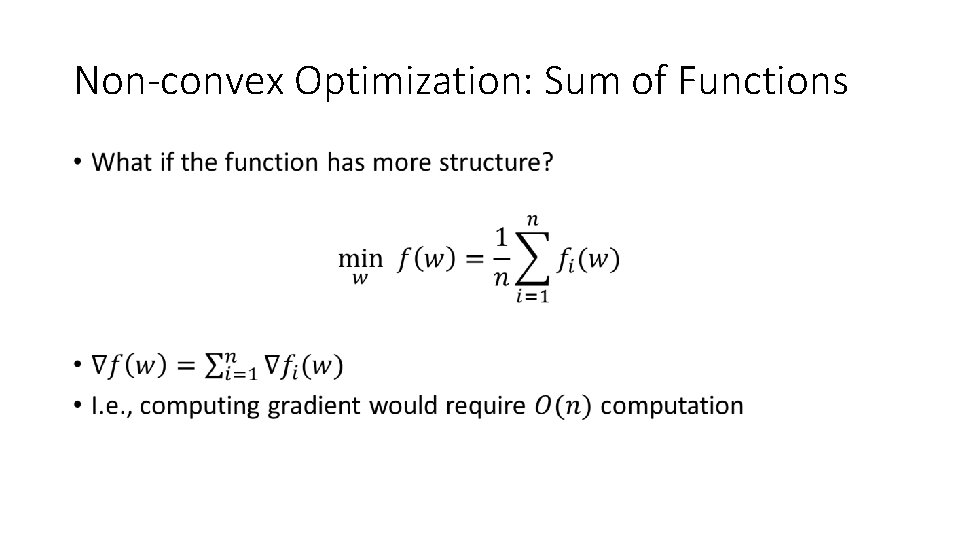

Non-convex Optimization: Sum of Functions

Does Stochastic Gradient Descent Work?

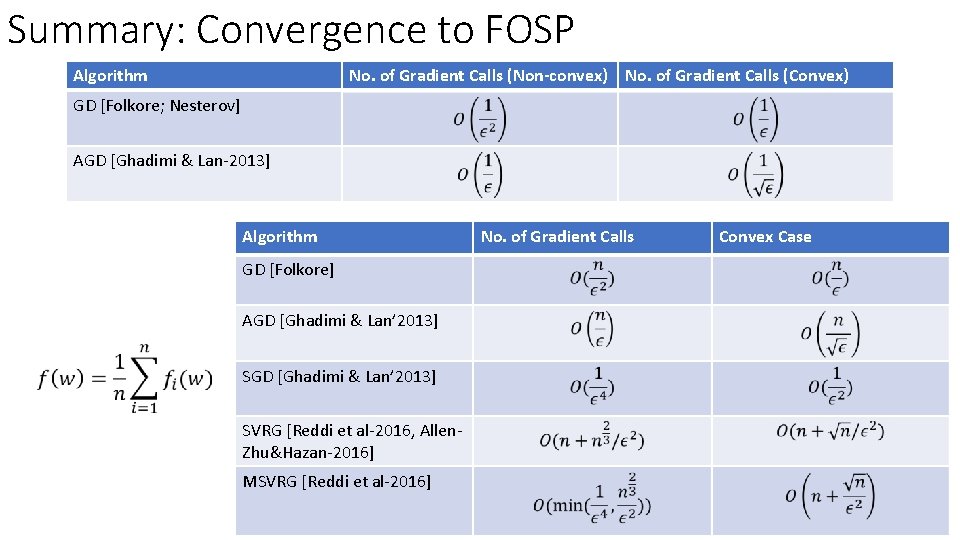

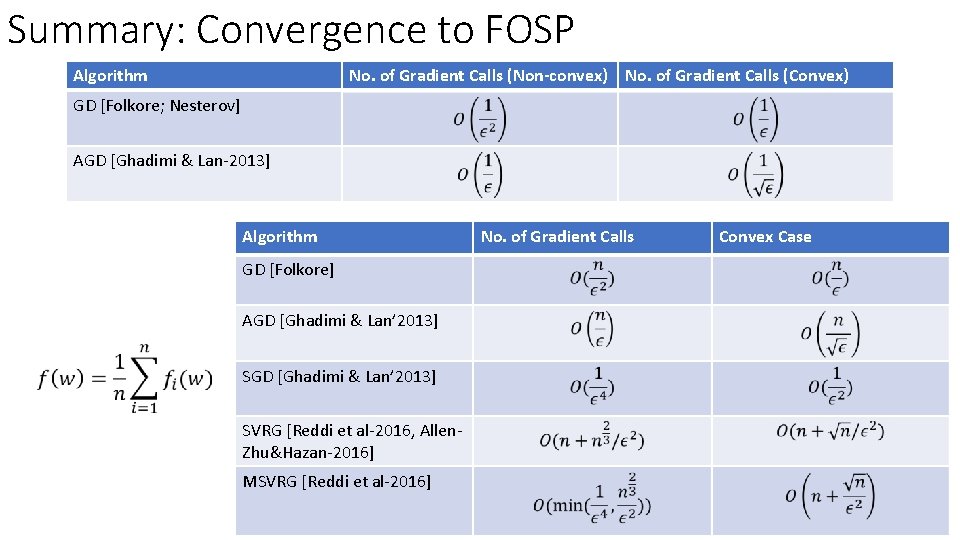
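For a sum of functions f(x) = (1/n) Σ_i f_i(x), SGD replaces ∇f by the gradient of one randomly sampled component, which is an unbiased estimate. A minimal sketch, assuming a hypothetical noise-free least-squares instance f_i(x) = ½(a_iᵀx − b_i)², where every f_i shares the minimizer x*, so a constant step converges; in general a constant step only reaches a neighborhood of a stationary point.

```python
import numpy as np

def sgd(grad_i, n, x0, step, num_steps, seed=0):
    """SGD for f(x) = (1/n) * sum_i f_i(x): each step uses one random component."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(num_steps):
        i = rng.integers(n)             # sample a component uniformly at random
        x = x - step * grad_i(x, i)     # unbiased estimate of grad f(x)
    return x

# Hypothetical noise-free least squares: f_i(x) = 0.5 * (a_i^T x - b_i)^2.
rng = np.random.default_rng(1)
n, d = 200, 5
A = rng.standard_normal((n, d))
x_star = rng.standard_normal(d)
b = A @ x_star
grad_i = lambda x, i: (A[i] @ x - b[i]) * A[i]
x_hat = sgd(grad_i, n, np.zeros(d), step=0.02, num_steps=5000)
print(np.linalg.norm(x_hat - x_star))   # small: every f_i is minimized at x_star
```

Each step costs one component gradient instead of n, which is the reason the gradient-call counts for stochastic and variance-reduced methods differ from those of full GD.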

Summary: Convergence to FOSP

| Algorithm | No. of Gradient Calls (Non-convex) | No. of Gradient Calls (Convex) |
|---|---|---|
| GD [Folklore; Nesterov] | | |
| AGD [Ghadimi & Lan-2013] | | |

| Algorithm | No. of Gradient Calls | Convex Case |
|---|---|---|
| GD [Folklore] | | |
| AGD [Ghadimi & Lan-2013] | | |
| SVRG [Reddi et al-2016, Allen-Zhu & Hazan-2016] | | |
| MSVRG [Reddi et al-2016] | | |

Finding Second Order Stationary Points (SOSP) • Second Order Stationary Point (SOSP) (image credit: academo.org)

Cubic Regularization (Nesterov and Polyak-2006)
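The cubic-regularized Newton step of Nesterov and Polyak is usually written as follows, where M is at least the Lipschitz constant of the Hessian. Because the model uses ∇²f(x_t) directly, the step can exploit negative curvature, which is what lets the method target SOSPs rather than only FOSPs:

```latex
x_{t+1} \in \arg\min_{y}\; \Big\{ \langle \nabla f(x_t),\, y - x_t\rangle
 + \tfrac{1}{2}\big\langle \nabla^2 f(x_t)(y - x_t),\, y - x_t\big\rangle
 + \tfrac{M}{6}\,\|y - x_t\|^3 \Big\}
```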

Noisy Gradient Descent for SOSP • Ge et al-2015, Jin et al-2017

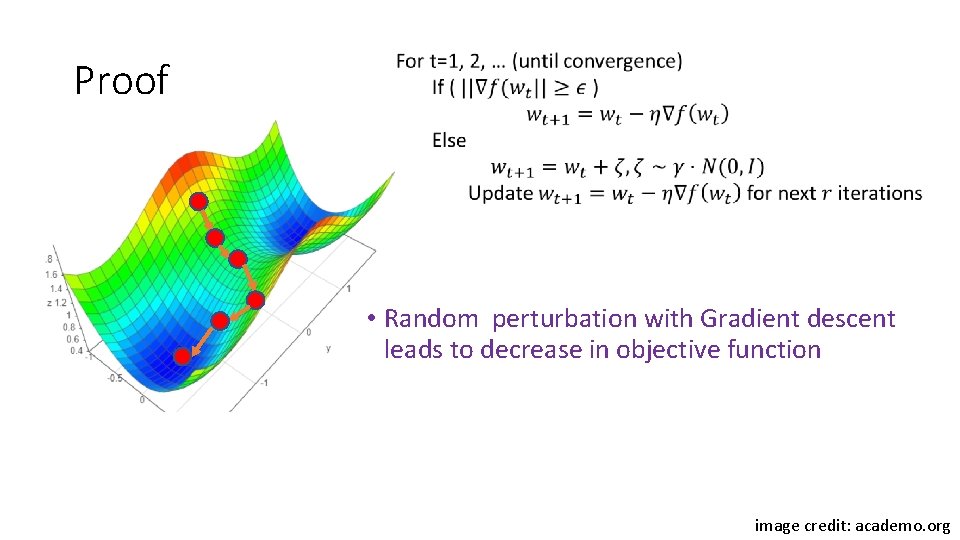

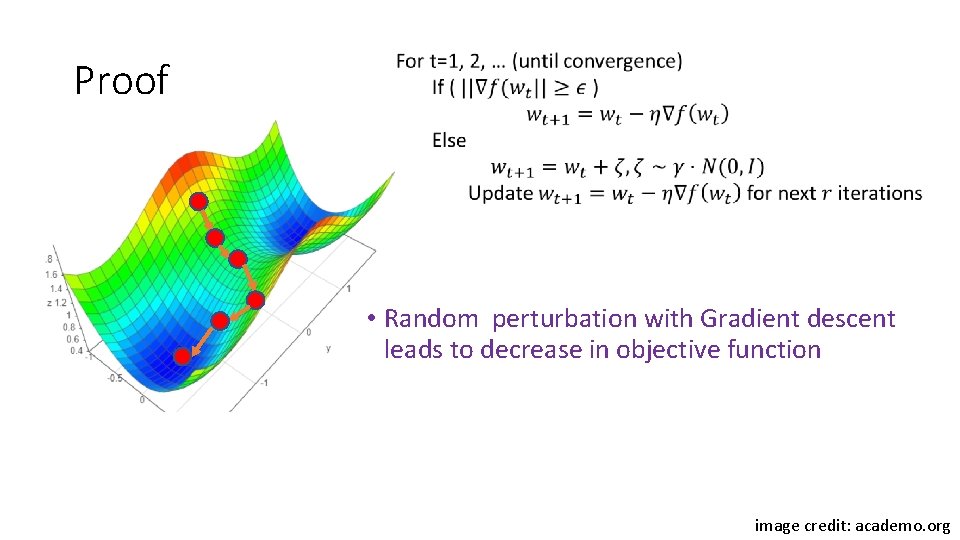
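To see why noise helps, take the toy function f(x, y) = ½x² + ¼y⁴ − ½y² (our example, not from the slides), which has a strict saddle at the origin and minima at (0, ±1). Plain gradient descent started exactly at the saddle never moves, because the gradient is zero there. Adding small isotropic Gaussian noise to every gradient, in the spirit of the noisy gradient method of Ge et al. (2015), pushes the iterate along the negative-curvature direction; Jin et al. (2017) instead perturb only when the gradient is small. The step size, noise level, and iteration count below are illustrative assumptions.

```python
import numpy as np

def grad_f(z):
    # f(x, y) = 0.5*x**2 + 0.25*y**4 - 0.5*y**2: saddle at (0, 0), minima at (0, +-1)
    x, y = z
    return np.array([x, y**3 - y])

def noisy_gd(z0, step=0.1, sigma=0.01, num_iters=1000, seed=0):
    """Gradient descent with isotropic Gaussian noise added to every gradient."""
    rng = np.random.default_rng(seed)
    z = np.asarray(z0, dtype=float).copy()
    for _ in range(num_iters):
        z = z - step * (grad_f(z) + sigma * rng.standard_normal(2))
    return z

z_plain = np.zeros(2)
for _ in range(1000):
    z_plain = z_plain - 0.1 * grad_f(z_plain)   # plain GD never leaves the saddle
z_noisy = noisy_gd(np.zeros(2))
print(z_plain)          # stays at the origin
print(abs(z_noisy[1]))  # close to 1: escaped to one of the minima
```

Near the origin the y-coordinate is multiplied by roughly 1 + step per iteration, so even a tiny perturbation grows until the iterate settles near a minimum.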


Proof • Near a saddle point, a random perturbation followed by gradient descent decreases the objective function (image credit: academo.org)

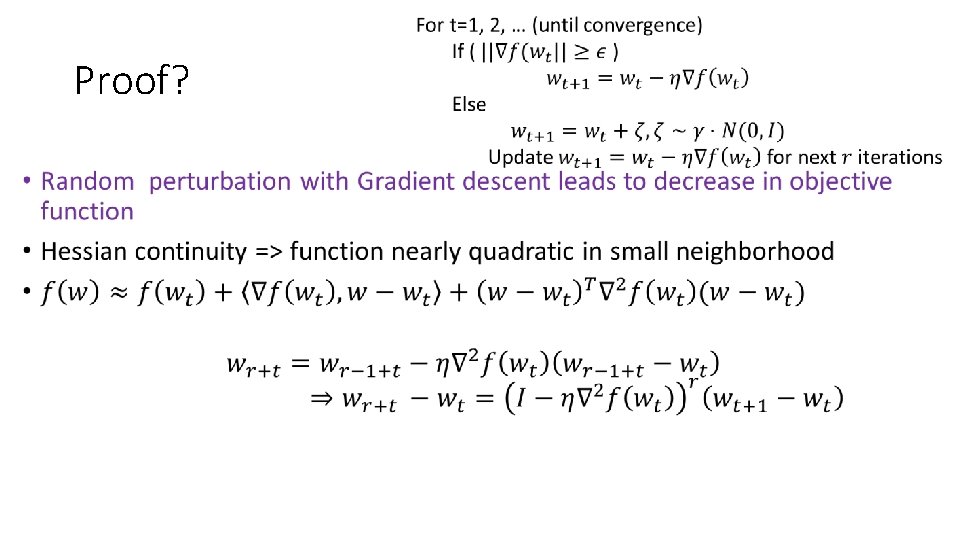

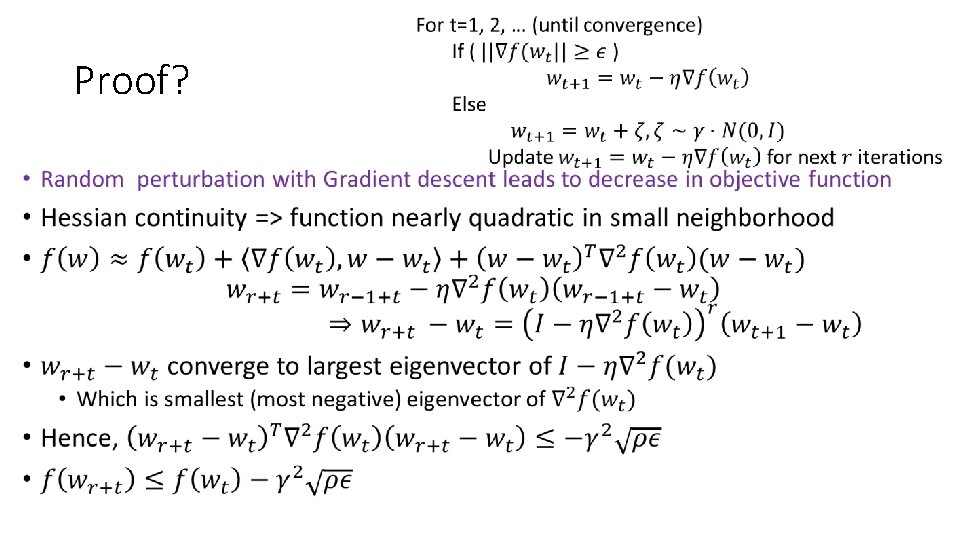

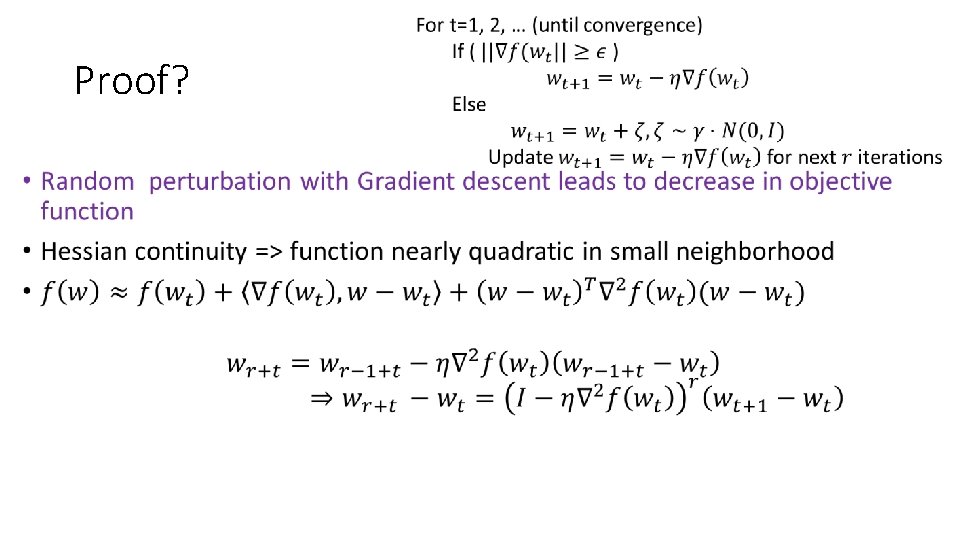

Proof?


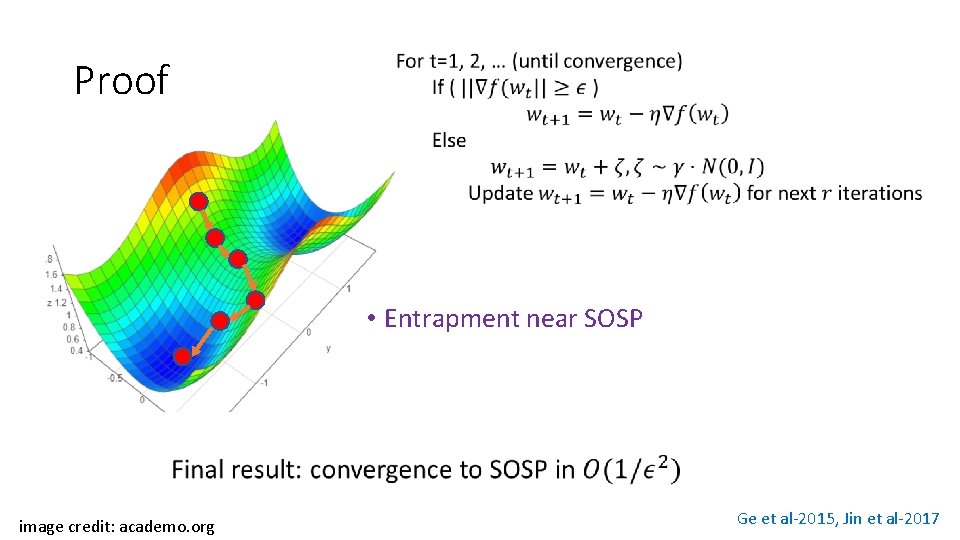

Proof • Entrapment near SOSP [Ge et al-2015, Jin et al-2017] (image credit: academo.org)

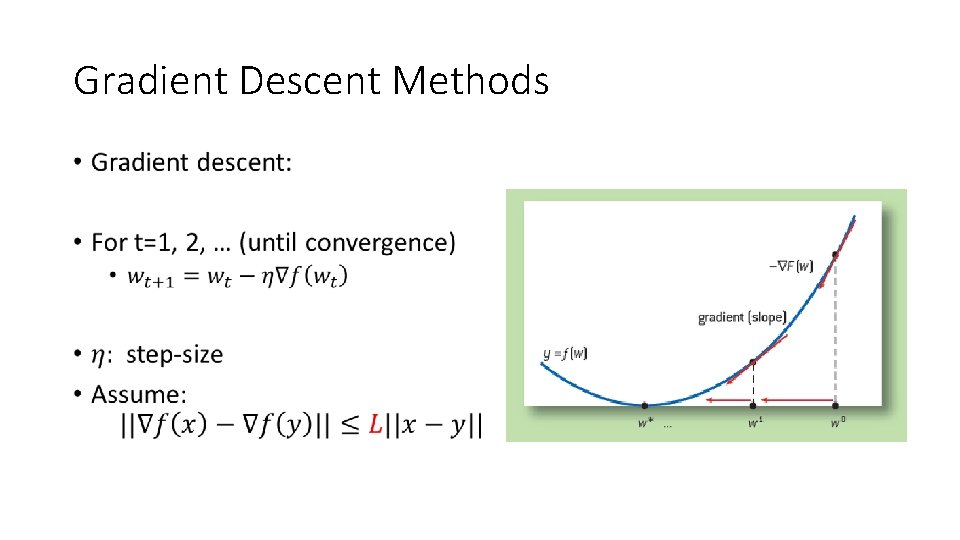

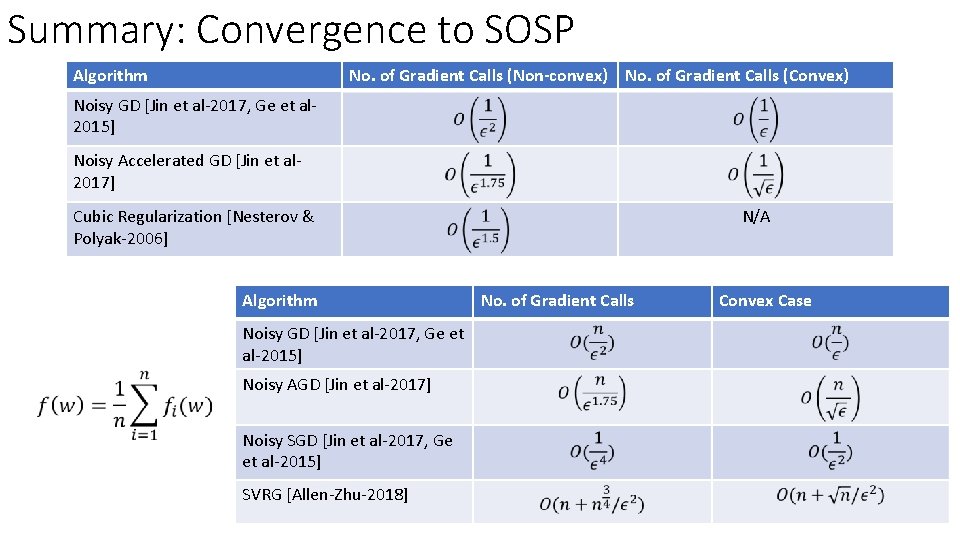

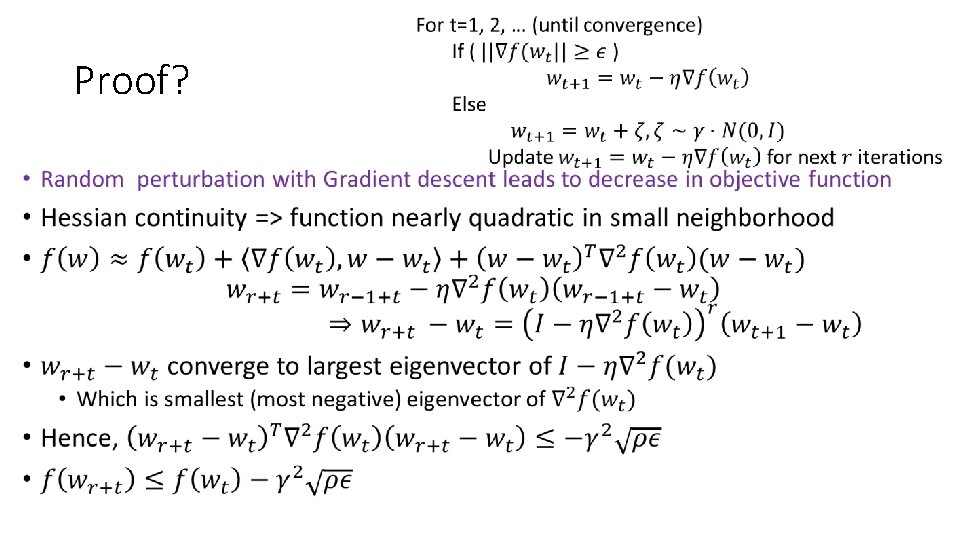

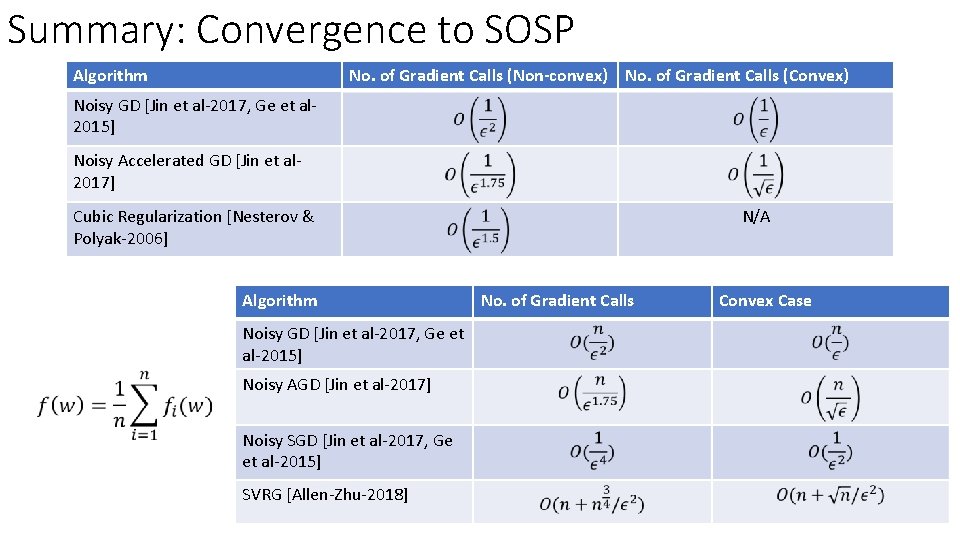

Summary: Convergence to SOSP

| Algorithm | No. of Gradient Calls (Non-convex) | No. of Gradient Calls (Convex) |
|---|---|---|
| Noisy GD [Jin et al-2017, Ge et al-2015] | | |
| Noisy Accelerated GD [Jin et al-2017] | | |
| Cubic Regularization [Nesterov & Polyak-2006] | | |

| Algorithm | No. of Gradient Calls | Convex Case |
|---|---|---|
| Noisy GD [Jin et al-2017, Ge et al-2015] | | |
| Noisy AGD [Jin et al-2017] | | |
| Noisy SGD [Jin et al-2017, Ge et al-2015] | | |
| SVRG [Allen-Zhu-2018] | | N/A |

Convergence to Global Optima? • FOSP/SOSP methods can’t even guarantee convergence to a local optimum • Can we guarantee global optimality for some “nicer” non-convex problems? • Yes! • Use statistics (image credit: academo.org)

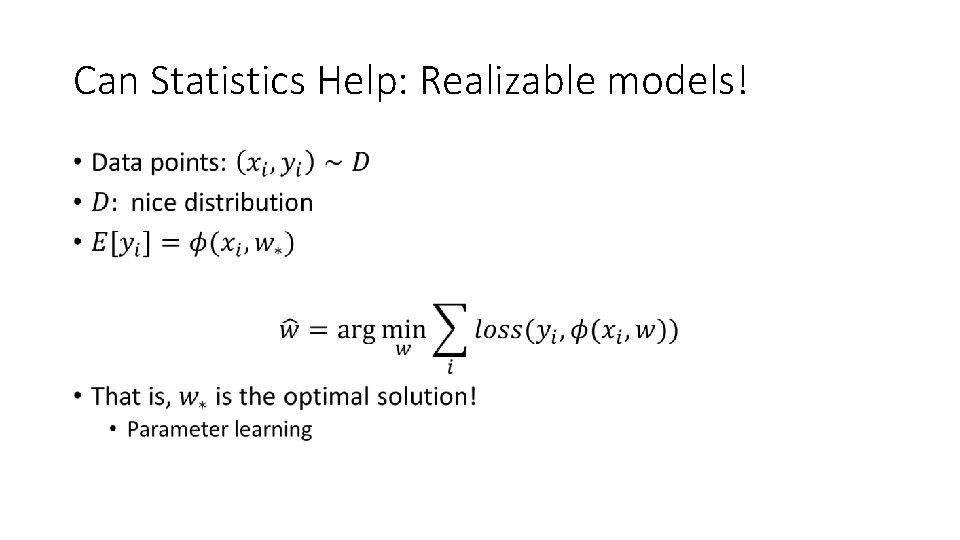

Can Statistics Help: Realizable models!

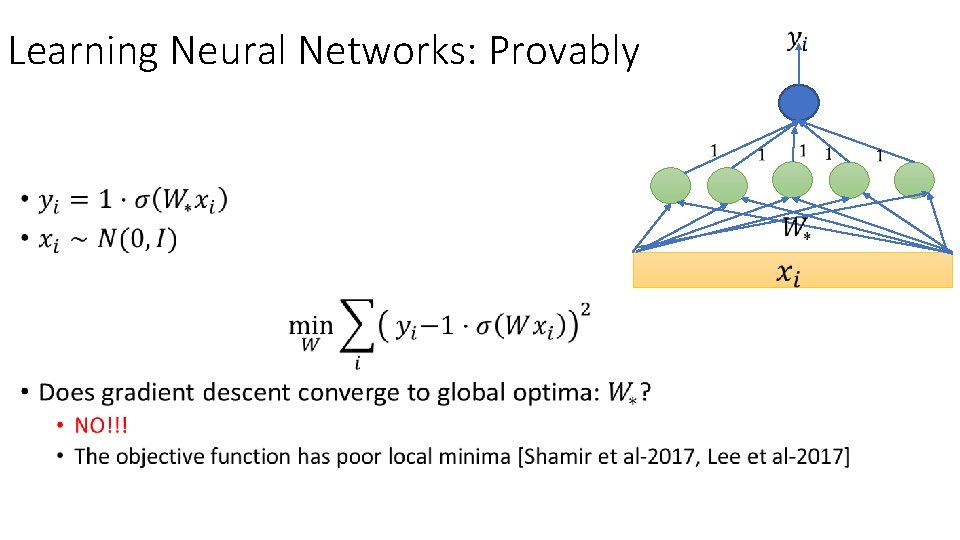

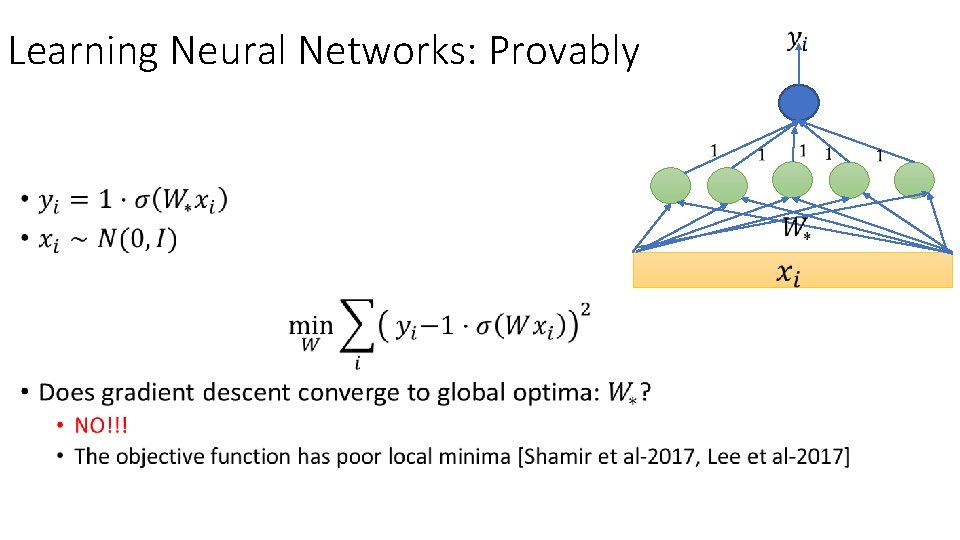

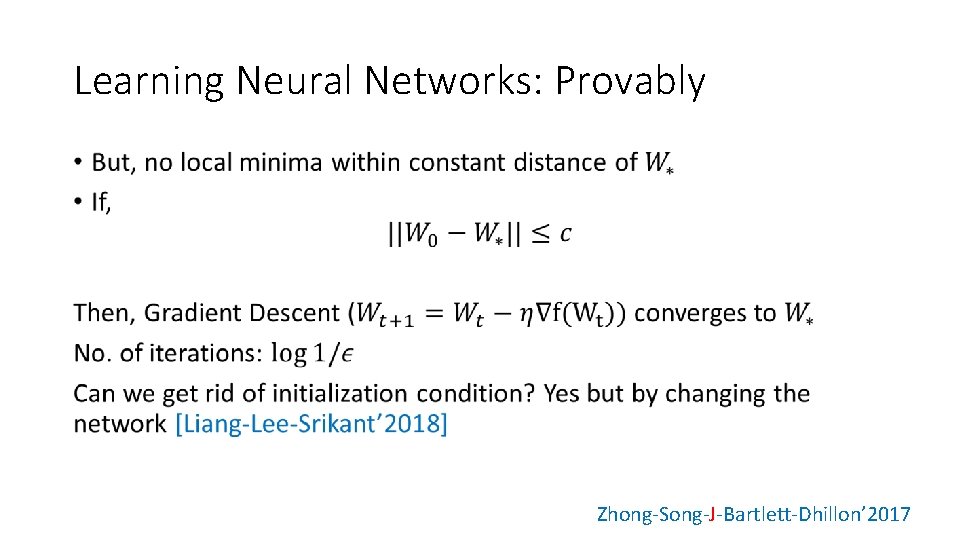


Learning Neural Networks: Provably • [Zhong, Song, Jain, Bartlett, Dhillon’ 2017]

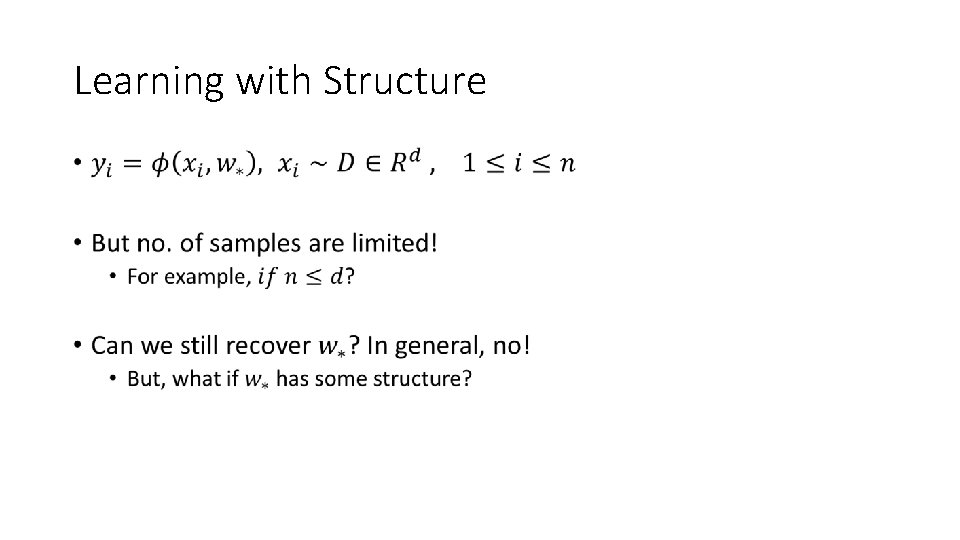

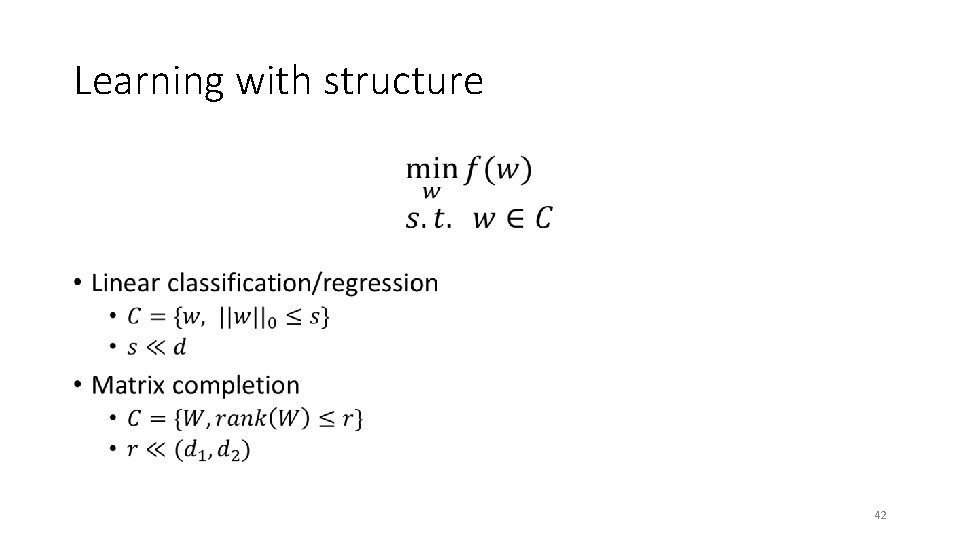

Learning with Structure

Sparse Linear Regression (n = …, d = …)

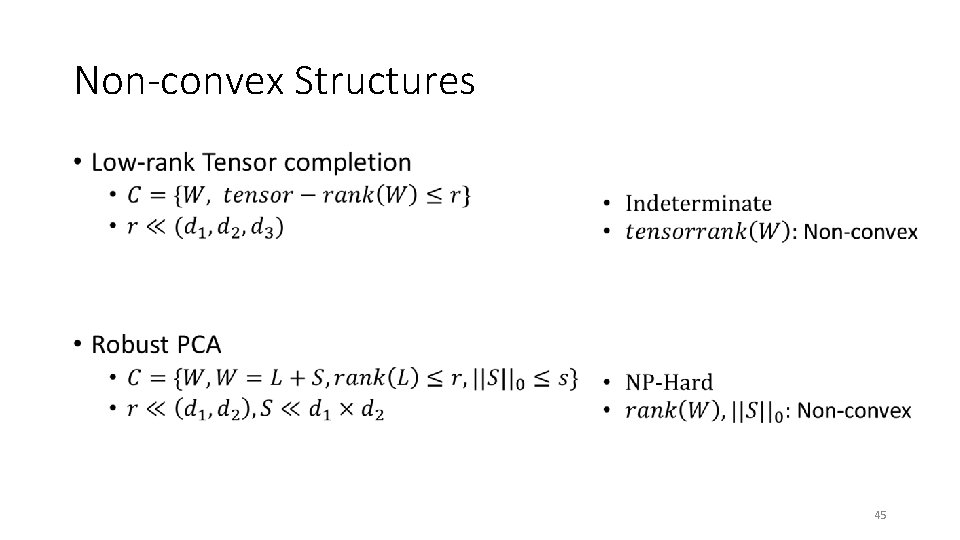

Learning with Structure

Other Examples

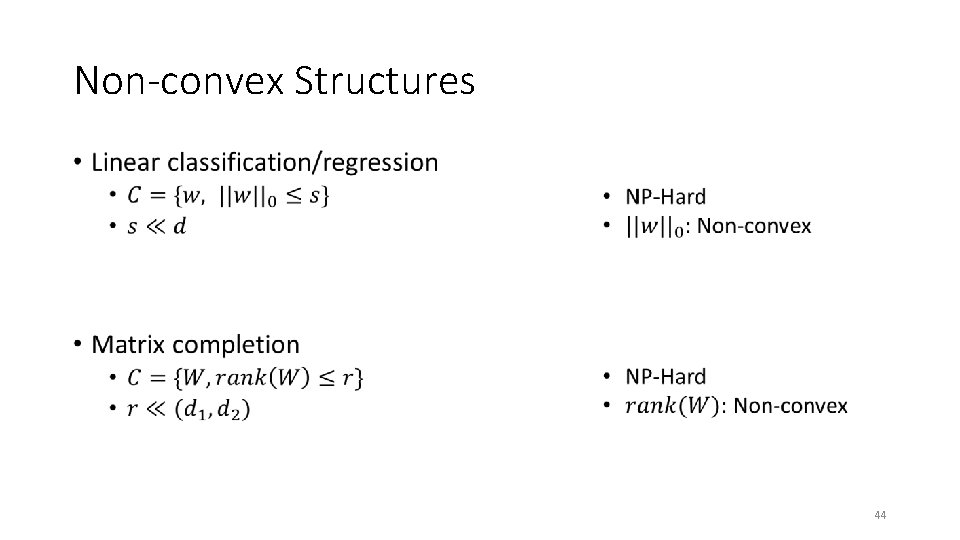

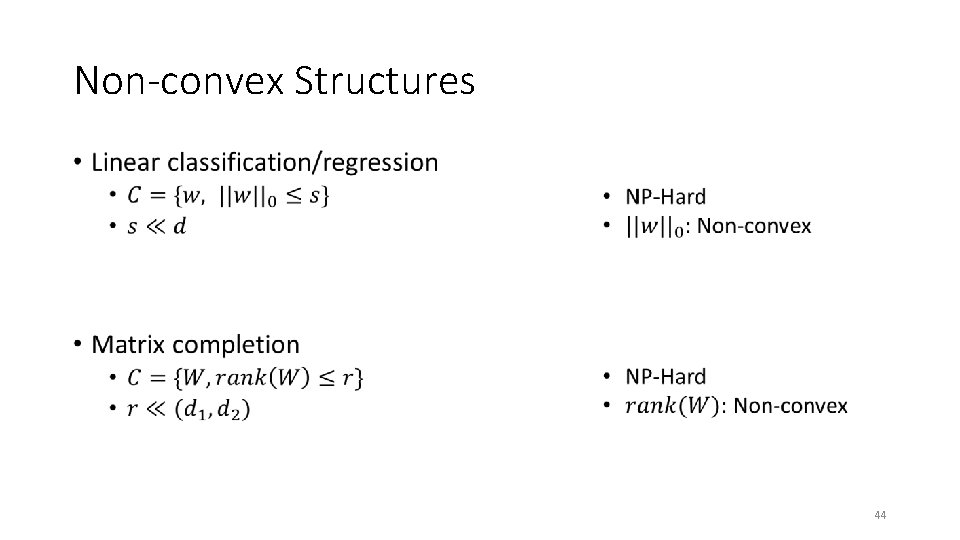

Non-convex Structures

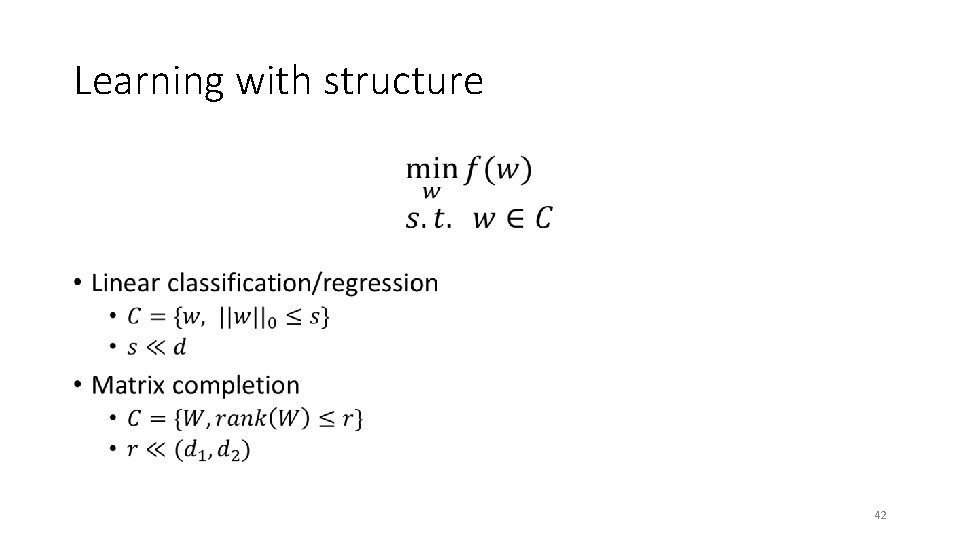

Technique: Projected Gradient Descent

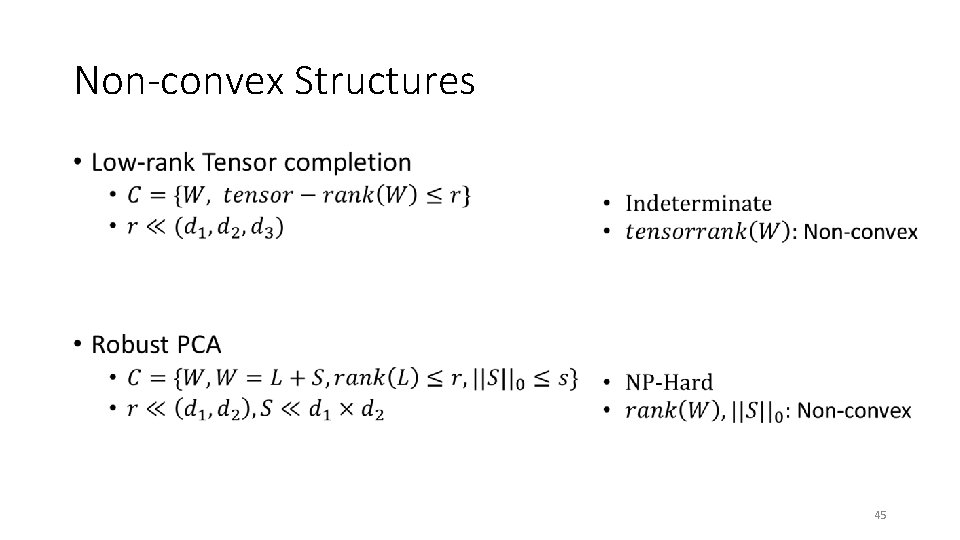

Results for Several Problems
- Sparse regression [Jain et al. ’14, Garg and Khandekar ’09]: sparsity
- Robust regression [Bhatia et al. ’15]: sparsity + output sparsity
- Vector-valued regression [Jain & Tewari ’15]: sparsity + positive definite matrix
- Dictionary learning [Agarwal et al. ’14]: matrix factorization + sparsity
- Phase sensing [Netrapalli et al. ’13]: system of quadratic equations

Results Contd…

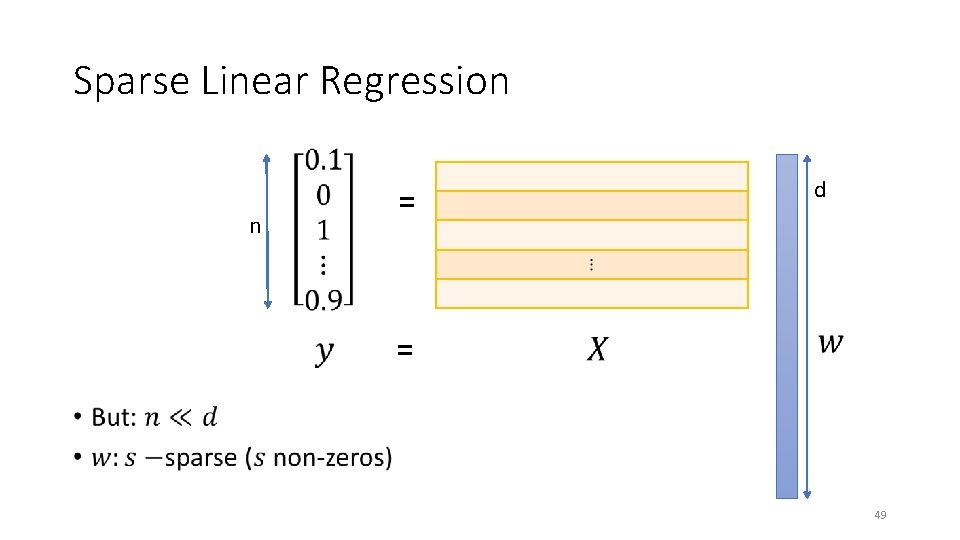

Sparse Linear Regression (n = …, d = …)

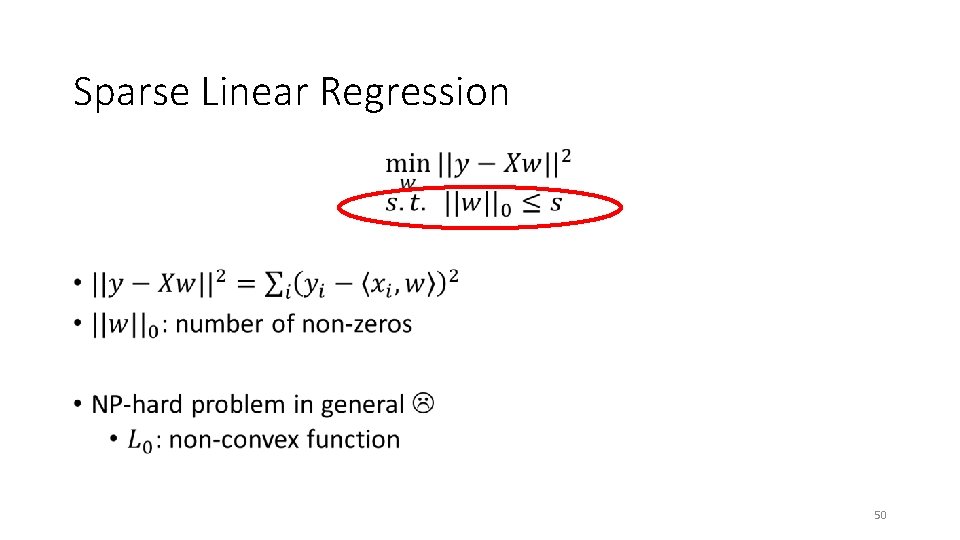

Sparse Linear Regression

![Technique: Projected Gradient Descent (Jain, Tewari, Kar 2014)](https://slidetodoc.com/presentation_image_h2/4dafc48950167a6c230965d3fd87ef35/image-51.jpg)

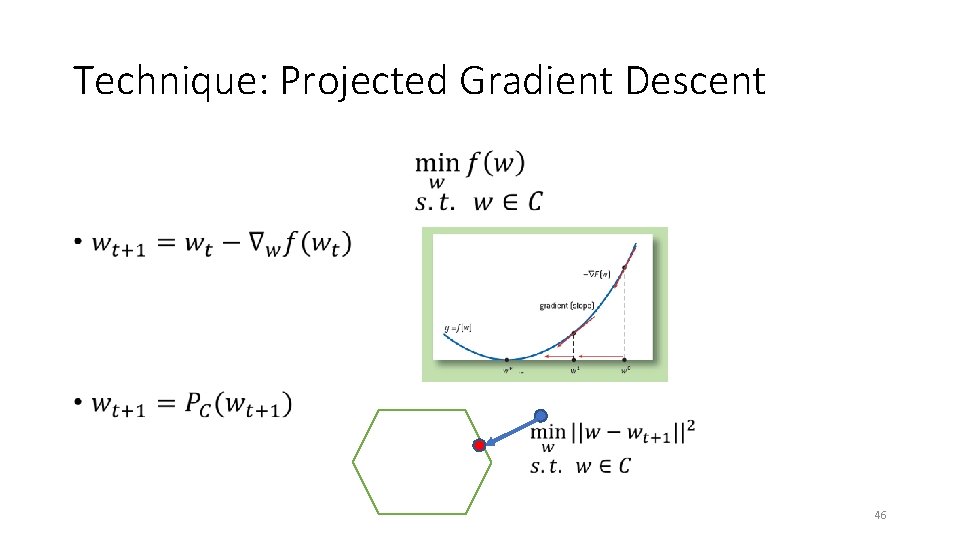

Technique: Projected Gradient Descent • [Jain, Tewari, Kar’ 2014]
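For sparse linear regression, the projection in projected gradient descent is hard thresholding (keep the s largest-magnitude entries), which gives iterative hard thresholding (IHT). The sketch below is a minimal version under our own assumptions: noise-free synthetic data with n < d, a Gaussian design scaled by 1/√n, and a unit step size. It is not the exact algorithm or parameter setting of the cited paper, which, for instance, analyzes projecting onto a sparsity level larger than that of the true vector.

```python
import numpy as np

def hard_threshold(v, s):
    """Projection onto s-sparse vectors: keep the s largest-magnitude entries."""
    out = np.zeros_like(v)
    idx = np.argsort(np.abs(v))[-s:]
    out[idx] = v[idx]
    return out

def iht(X, y, s, step=1.0, num_iters=100):
    """Projected gradient descent for min 0.5*||y - X w||^2 s.t. ||w||_0 <= s."""
    w = np.zeros(X.shape[1])
    for _ in range(num_iters):
        grad = X.T @ (X @ w - y)                 # gradient of the least-squares loss
        w = hard_threshold(w - step * grad, s)   # gradient step, then project
    return w

# Hypothetical noise-free instance with fewer samples than dimensions.
rng = np.random.default_rng(0)
n, d, s = 200, 400, 5
X = rng.standard_normal((n, d)) / np.sqrt(n)
w_star = np.zeros(d)
w_star[rng.choice(d, s, replace=False)] = rng.choice([-1.0, 1.0], s)
y = X @ w_star
w_hat = iht(X, y, s)
print(np.linalg.norm(w_hat - w_star))   # recovery error
```

The projection is non-convex, yet each iteration is as cheap as a gradient step plus a partial sort; the statistical guarantees rely on restricted conditioning of X on sparse vectors rather than on convexity.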

![Statistical Guarantees (Jain, Tewari, Kar 2014)](https://slidetodoc.com/presentation_image_h2/4dafc48950167a6c230965d3fd87ef35/image-52.jpg)

Statistical Guarantees • [Jain, Tewari, Kar’ 2014]

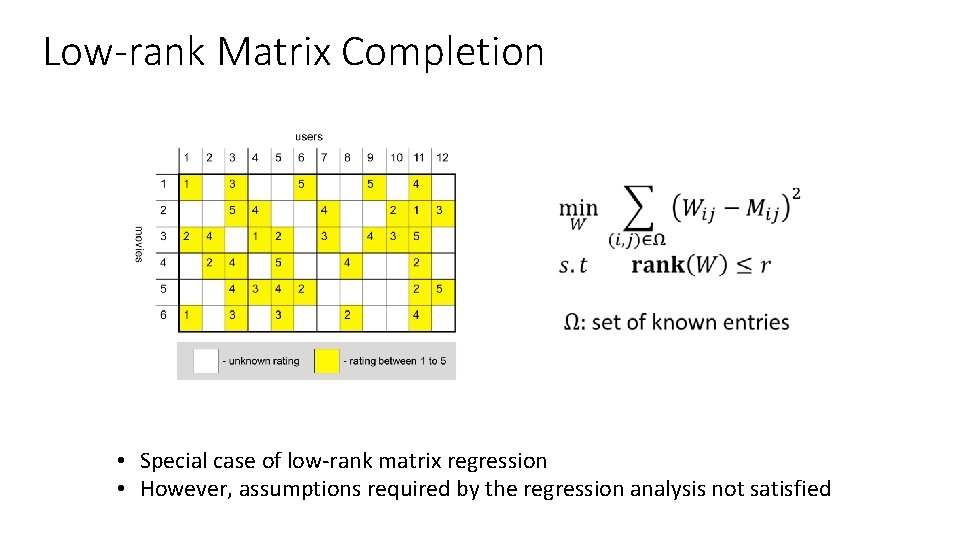

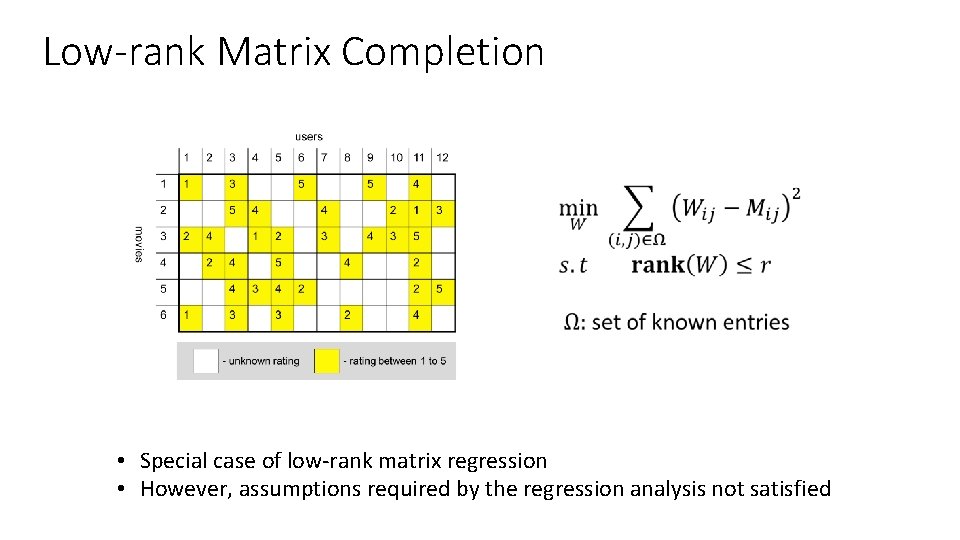

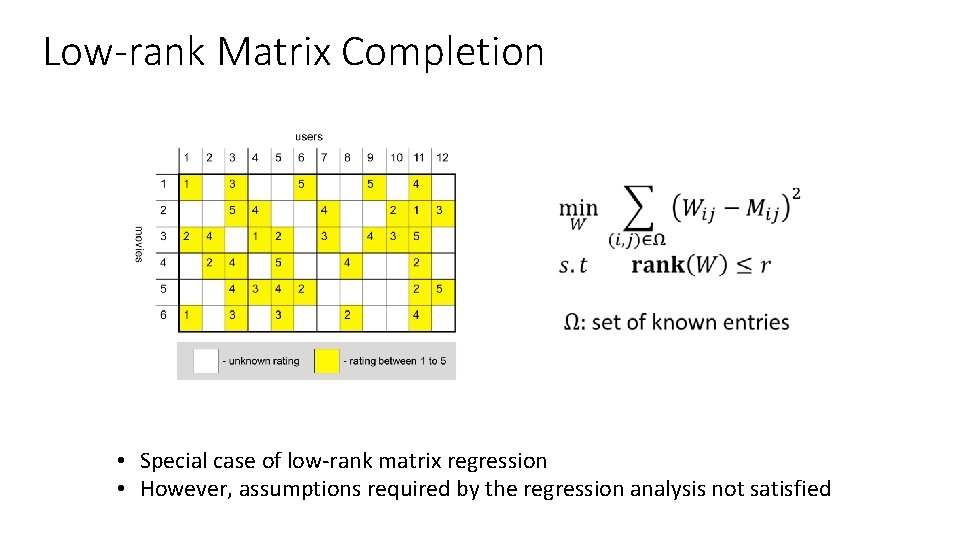

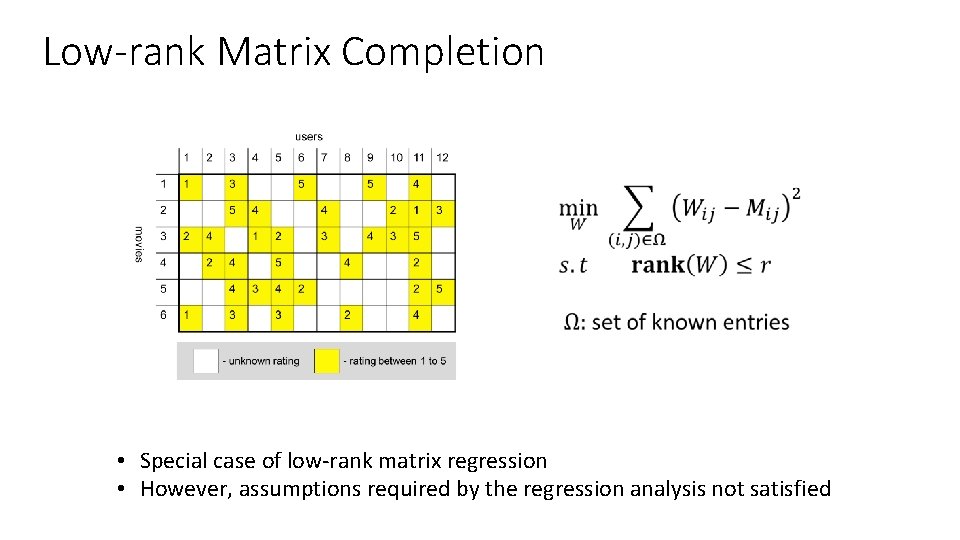

Low-rank Matrix Completion • A special case of low-rank matrix regression • However, the assumptions required by the regression analysis are not satisfied

![Technique: Projected Gradient Descent (Jain, Tewari, Kar 2014; Netrapalli, Jain 2014; Jain, Meka, Dhillon 2009)](https://slidetodoc.com/presentation_image_h2/4dafc48950167a6c230965d3fd87ef35/image-54.jpg)

Technique: Projected Gradient Descent • [Jain, Tewari, Kar’ 2014], [Netrapalli, Jain’ 2014], [Jain, Meka, Dhillon’ 2009]
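Applied to low-rank matrix completion, projected gradient descent takes a gradient step on the observed-entry loss and projects back onto rank-k matrices with a truncated SVD. A minimal sketch, assuming the loss ½‖P_Ω(X − M)‖_F² and a step size of 1, which equals 1/L for this loss; the cited papers may use other step sizes (for example, ones scaled by the sampling rate). The function names and data are hypothetical.

```python
import numpy as np

def rank_k_projection(Z, k):
    """Projection onto rank-<=k matrices via truncated SVD."""
    U, sig, Vt = np.linalg.svd(Z, full_matrices=False)
    return (U[:, :k] * sig[:k]) @ Vt[:k]

def pgd_complete(M_obs, mask, k, step=1.0, num_iters=1000):
    """Projected gradient descent on 0.5*||P_Omega(X - M)||_F^2 over rank-k matrices."""
    X = np.zeros_like(M_obs)
    for _ in range(num_iters):
        grad = mask * (X - M_obs)               # gradient of the observed-entry loss
        X = rank_k_projection(X - step * grad, k)
    return X

# Hypothetical example: 50 x 50 rank-2 matrix, each entry observed w.p. 0.5.
rng = np.random.default_rng(0)
m, n, k, p = 50, 50, 2, 0.5
M = rng.standard_normal((m, k)) @ rng.standard_normal((k, n))
mask = (rng.random((m, n)) < p).astype(float)
X_hat = pgd_complete(mask * M, mask, k)
print(np.linalg.norm(X_hat - M) / np.linalg.norm(M))   # relative error
```

With step 1 the update simply overwrites the observed entries with their known values before re-projecting, so each iteration costs one SVD.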

![Guarantees (Jain, Netrapalli 2015)](https://slidetodoc.com/presentation_image_h2/4dafc48950167a6c230965d3fd87ef35/image-55.jpg)

Guarantees • [Jain, Netrapalli’ 2015]

![General Result for Any Function (Jain, Tewari, Kar 2014)](https://slidetodoc.com/presentation_image_h2/4dafc48950167a6c230965d3fd87ef35/image-56.jpg)

General Result for Any Function • [Jain, Tewari, Kar’ 2014]
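General results of this kind are stated in terms of restricted strong convexity and restricted smoothness (RSC/RSS). In the sparse setting, one common way to write the two conditions, with constants α ≤ β at sparsity level s, is below; the slide's own statement and constants are not recoverable, so treat this as a reference definition rather than a transcription:

```latex
\frac{\alpha}{2}\|x - y\|_2^2 \;\le\; f(x) - f(y) - \langle \nabla f(y),\, x - y\rangle \;\le\; \frac{\beta}{2}\|x - y\|_2^2
\qquad \text{for all } x, y \text{ with } \|x\|_0,\ \|y\|_0 \le s.
```

Unlike ordinary strong convexity and smoothness, these bounds are required only between structured (here, sparse) points, which is what makes them attainable when n < d.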

Learning with Latent Variables

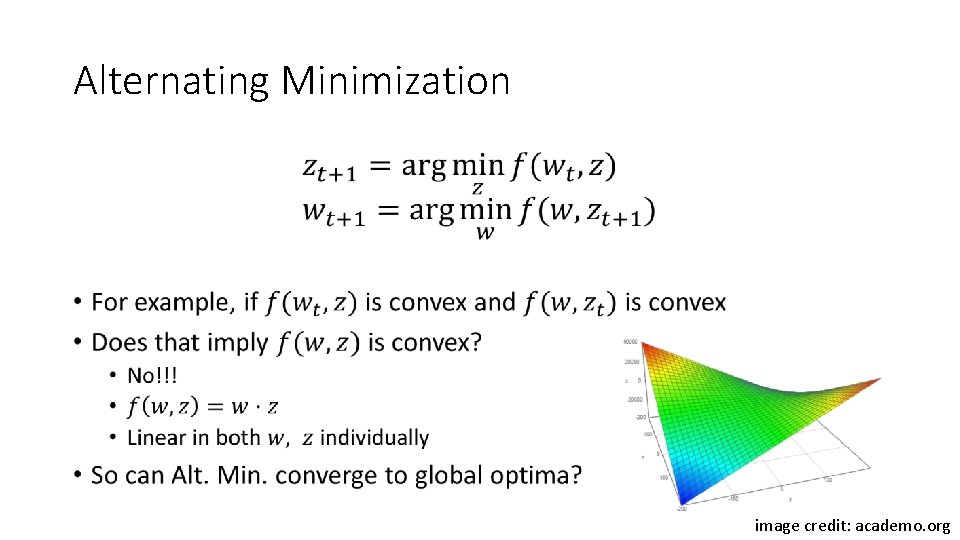

Alternating Minimization (image credit: academo.org)

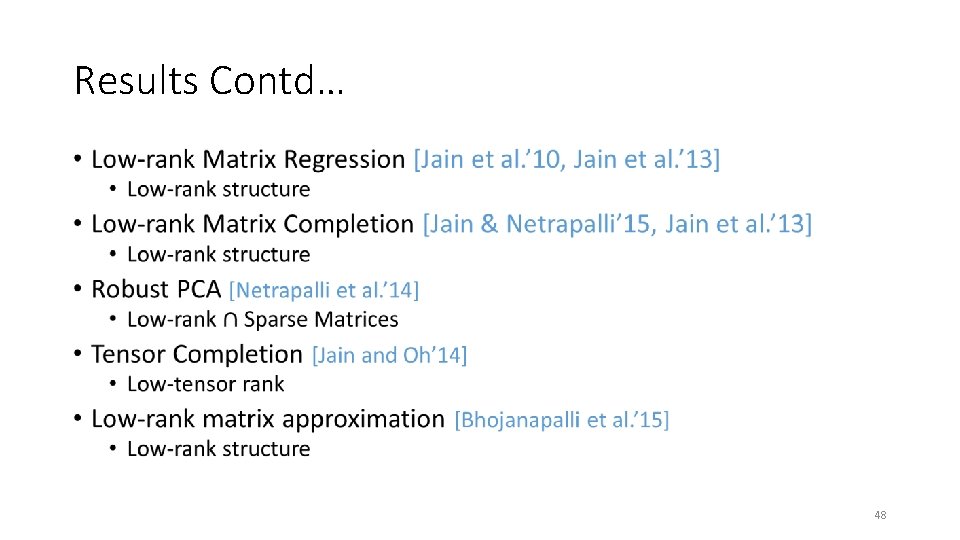
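Generically, alternating minimization splits the variables into two blocks (x, y) and minimizes over one block while the other is held fixed. A minimal statement of the scheme, assuming each block subproblem can be solved exactly:

```latex
\begin{aligned}
x^{t+1} &= \arg\min_{x}\; f\big(x,\, y^{t}\big),\\
y^{t+1} &= \arg\min_{y}\; f\big(x^{t+1},\, y\big).
\end{aligned}
```

The joint problem can be non-convex even when each block subproblem is easy. In matrix completion with f(U, V) = ‖P_Ω(UVᵀ − M)‖_F², for example, fixing either factor leaves a least-squares problem in the other.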


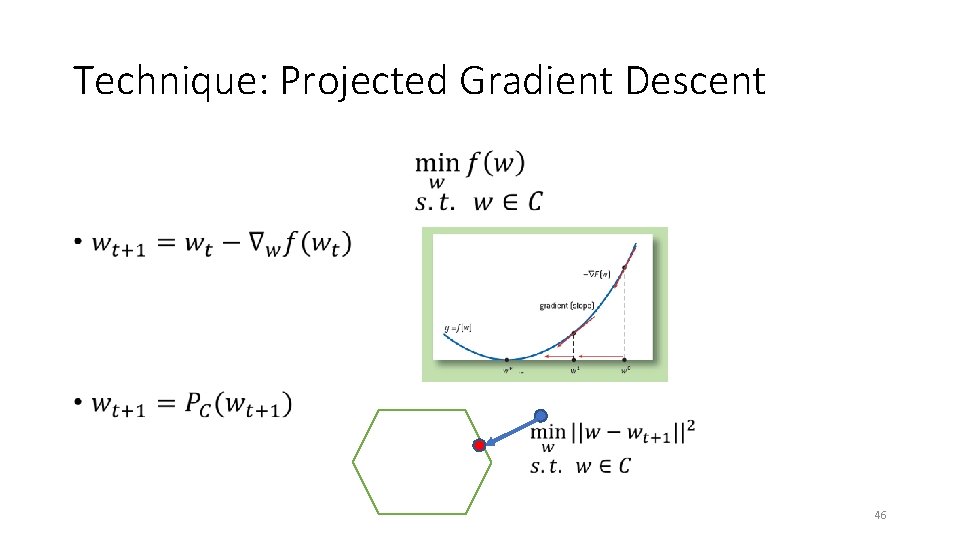

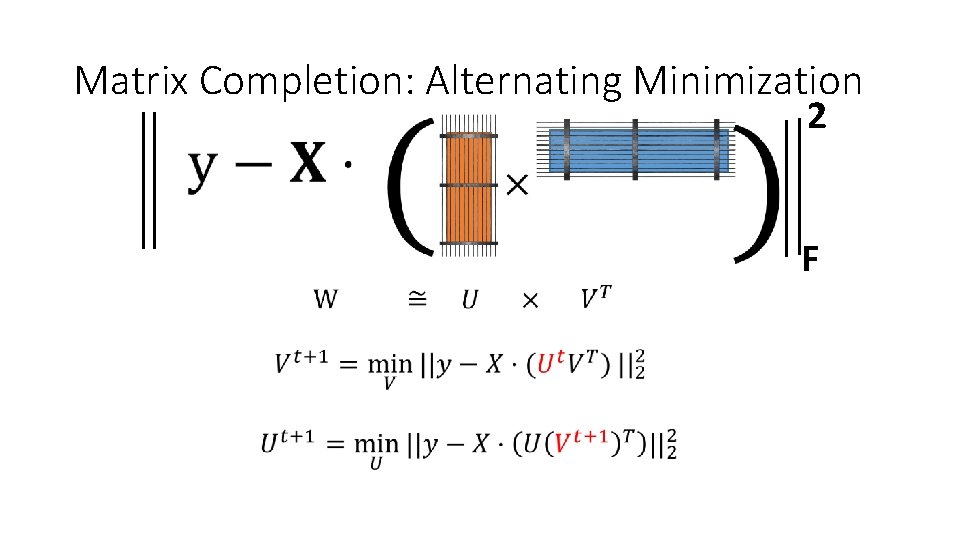

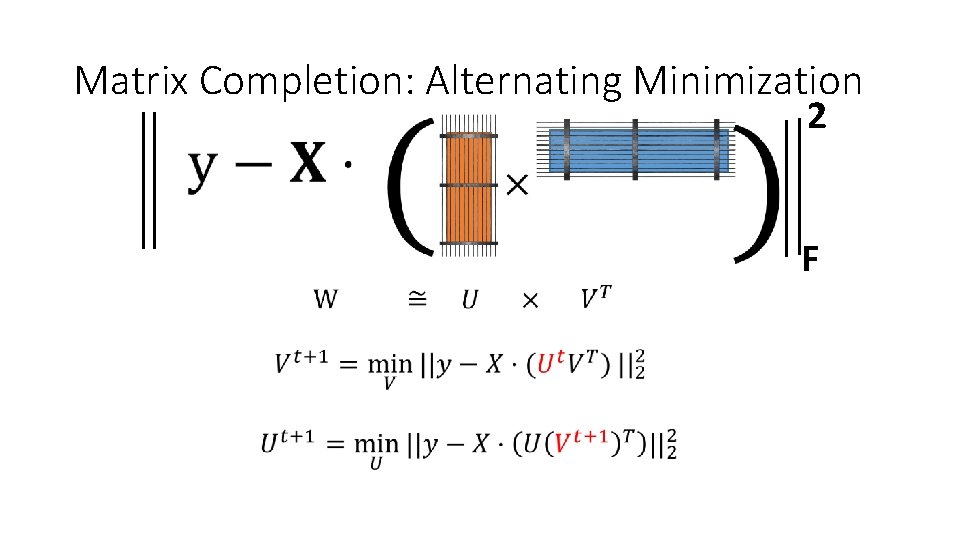

Matrix Completion: Alternating Minimization • $\min_{U,V}\ \|P_\Omega(UV^\top - M)\|_F^2$
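A minimal sketch of alternating minimization for matrix completion, assuming the objective ‖P_Ω(UVᵀ − M)‖_F² with rank-k factors and a spectral initialization from the rescaled observed matrix. Each half-step splits into independent least-squares problems, one per column of M (for V) or per row (for U). The analysis of Jain, Netrapalli and Sanghavi additionally uses fresh samples in each iteration (sample splitting), which this sketch omits; the function name and data are hypothetical.

```python
import numpy as np

def altmin_complete(M_obs, mask, k, num_iters=50):
    """Alternating least squares on ||P_Omega(U V^T - M)||_F^2 with a spectral start."""
    m, n = M_obs.shape
    p_hat = mask.mean()                                   # empirical sampling rate
    U = np.linalg.svd(M_obs / p_hat, full_matrices=False)[0][:, :k].copy()
    V = np.zeros((n, k))
    for _ in range(num_iters):
        for j in range(n):                                # V-step: LS per column of M
            rows = mask[:, j] > 0
            V[j] = np.linalg.lstsq(U[rows], M_obs[rows, j], rcond=None)[0]
        for i in range(m):                                # U-step: LS per row of M
            cols = mask[i] > 0
            U[i] = np.linalg.lstsq(V[cols], M_obs[i, cols], rcond=None)[0]
    return U @ V.T

# Hypothetical example: 50 x 50 rank-2 matrix, each entry observed w.p. 0.5.
rng = np.random.default_rng(0)
m, n, k, p = 50, 50, 2, 0.5
M = rng.standard_normal((m, k)) @ rng.standard_normal((k, n))
mask = (rng.random((m, n)) < p).astype(float)
X_hat = altmin_complete(mask * M, mask, k)
print(np.linalg.norm(X_hat - M) / np.linalg.norm(M))   # relative error
```

Storing only the factors U and V keeps memory at O((m + n)k), which is the main practical appeal over methods that form the full m × n iterate.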

![Results: Alternating Minimization (Jain, Netrapalli, Sanghavi 2013)](https://slidetodoc.com/presentation_image_h2/4dafc48950167a6c230965d3fd87ef35/image-61.jpg)

Results: Alternating Minimization • [Jain, Netrapalli, Sanghavi’ 13]

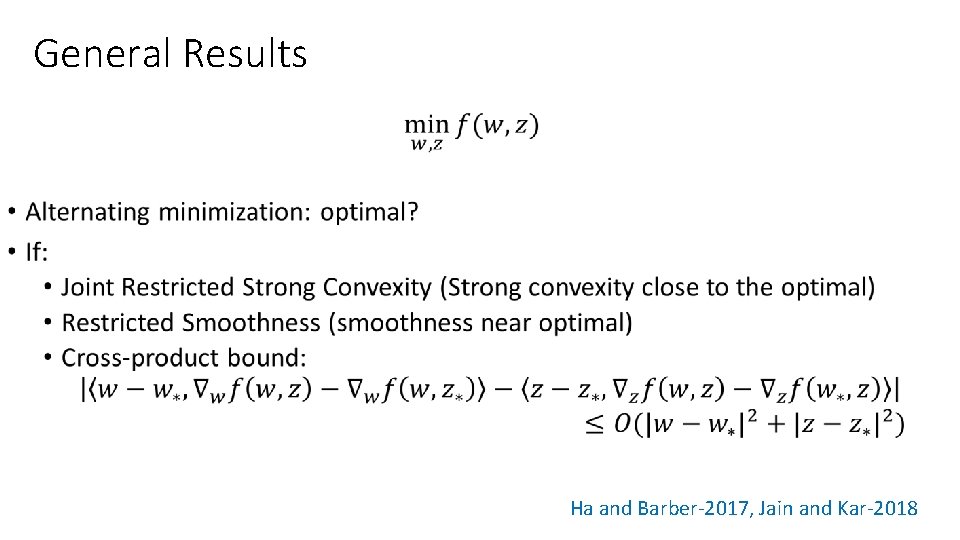

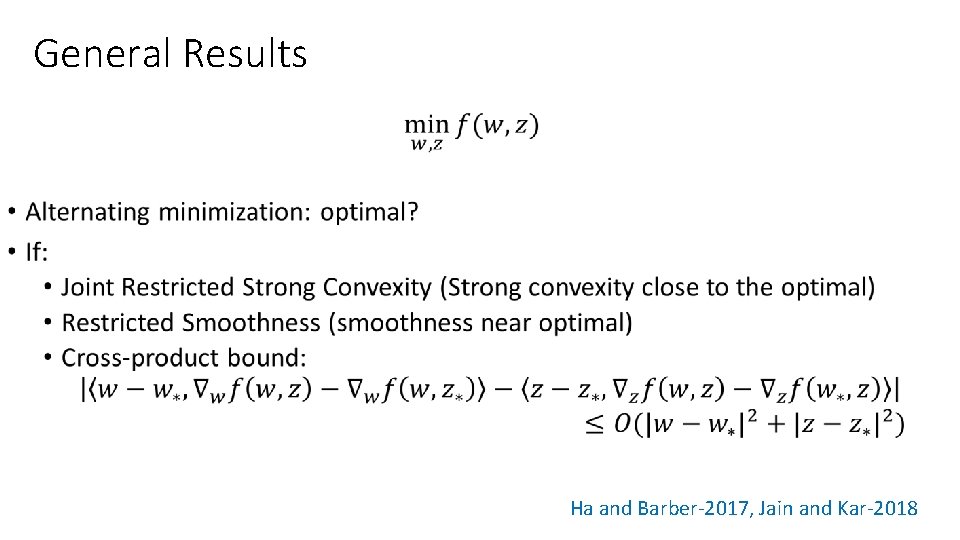

General Results • Ha and Barber-2017, Jain and Kar-2018

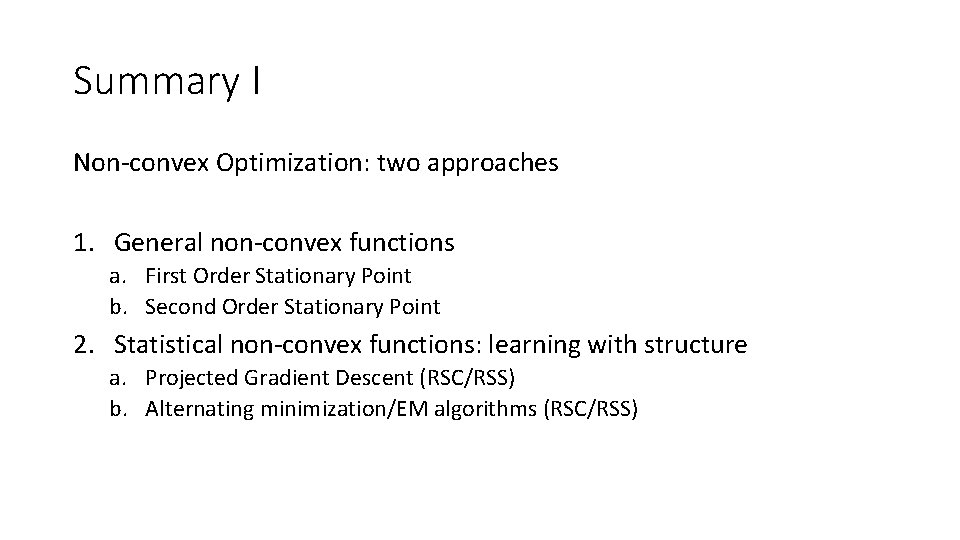

Summary I

Non-convex optimization: two approaches

1. General non-convex functions
   - (a) First Order Stationary Point
   - (b) Second Order Stationary Point
2. Statistical non-convex functions: learning with structure
   - (a) Projected Gradient Descent (RSC/RSS)
   - (b) Alternating minimization/EM algorithms (RSC/RSS)

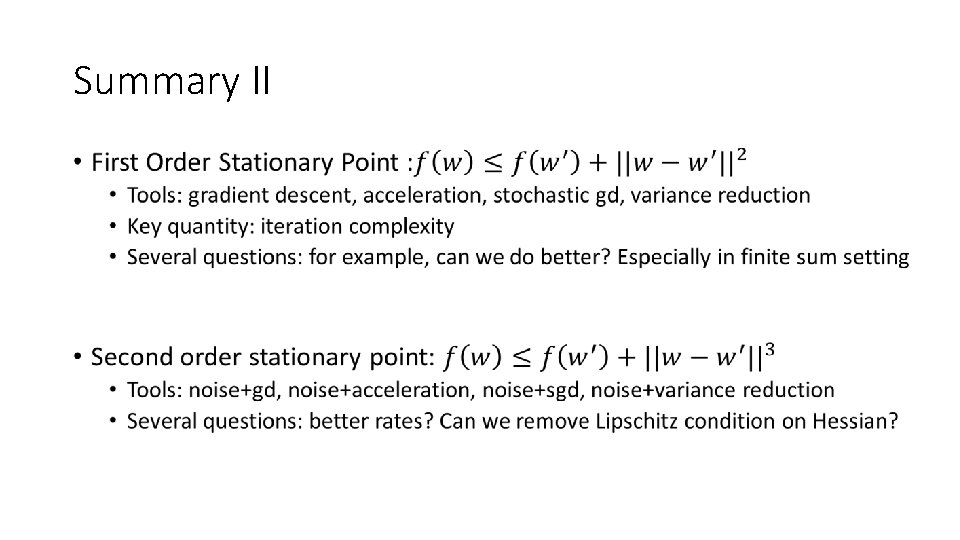

Summary II

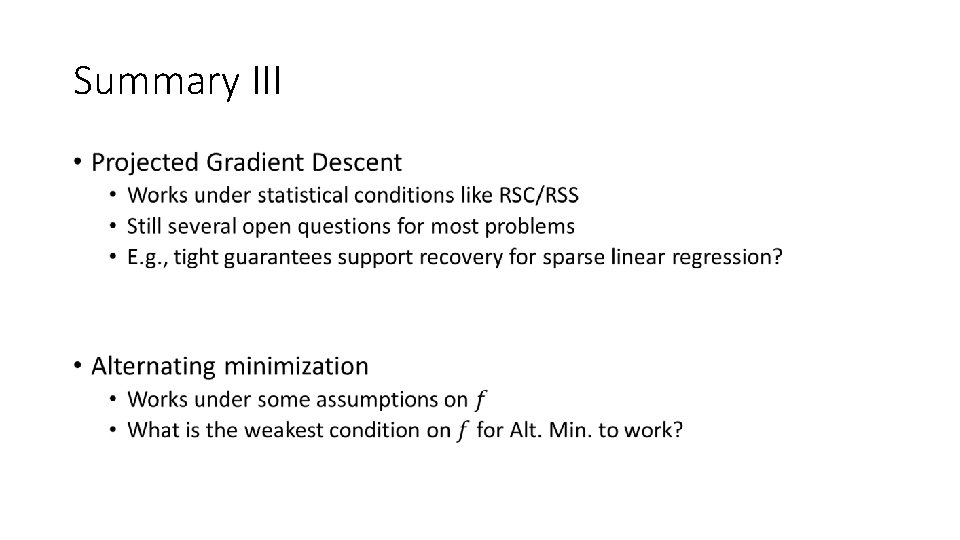

Summary III