NLP Module 2

Outline
• Cohesion and Coherence
• Ambiguity
• Natural Language Generation

Cohesion & Coherence

Cohesion
• Definition 1: Cohesion is the grammatical and lexical relationship within a text or sentence. It can be defined as the links that hold a text together and give it meaning.
  • Ref: http://en.wikipedia.org/wiki/Cohesion_(linguistics)

Cohesion
• Definition 2: Cohesion is a semantic relation between an element in the text and some other element that is crucial to its interpretation.
  • Ref: Halliday and Hasan, 1976
  • Example: Ahmad belongs to Peshawar. He is in the final year of his M.Sc.

Coherence
• Definition 1: A quality of sentences, paragraphs, and essays when all parts are clearly connected.
  • Ref: http://grammar.about.com/od/c/g/coherenceterm.htm (retrieved 30 Oct, 2010)

Coherence
• Example 1: There once was a farmer in a small village. He worked hard day and night in his fields to feed his wife and children.
• Example 2: Ali was a studious student and got 900 marks. His percentage is 92.1%.

Coherence
• Definition 2: Coherence is a semantic property of discourse formed through the interpretation of each individual sentence relative to the interpretation of other sentences, with "interpretation" implying interaction between the text and the reader.
  • Ref: Teun A. van Dijk, p. 93; http://www.criticism.com/da/coherence.php

Coherence
• Definition 3: Coherence in linguistics is what makes a text semantically meaningful. It is especially dealt with in text linguistics.
  • Ref: http://en.wikipedia.org/wiki/Coherence_(linguistics) (retrieved 27 Oct, 2010)
• Definition 4: When sentences, ideas, and details fit together clearly, readers can follow along easily, and the writing is coherent.
  • Ref: http://home.ku.edu.tr/~doregan/Writing/Cohesion.html (retrieved 30 Oct, 2010)

Why coherence?
• The text-based features that provide cohesion in a text do not necessarily help achieve coherence; that is, they do not always contribute to the meaningfulness of a text, be it written or spoken.
• It has been stated that a text coheres only if the world around it is also coherent.

Cohesive devices
• Definition: The links within a text that hold it together are called cohesive devices.

Categories of cohesive devices
• A cohesive text is created in many different ways. In Cohesion in English, M. A. K. Halliday and Ruqaiya Hasan (1976) identify five general categories of cohesive devices that create coherence in texts:
  • Reference
  • Ellipsis
  • Substitution
  • Conjunction
  • Lexical cohesion
• Ref: http://www.criticism.com/da/coherence.php

References or referring expressions
• A natural language expression used to perform reference is called a referring expression, and the entity that is referred to is called the referent.
• Referring expressions are words or phrases whose semantic interpretation is a discourse entity (the referent).
• Example: A pretty woman entered the restaurant. She sat at the table next to mine and only then I recognized her. This was Amy Garcia, my next door neighbor from 10 years ago. The woman has totally changed! Amy was at the time shy…

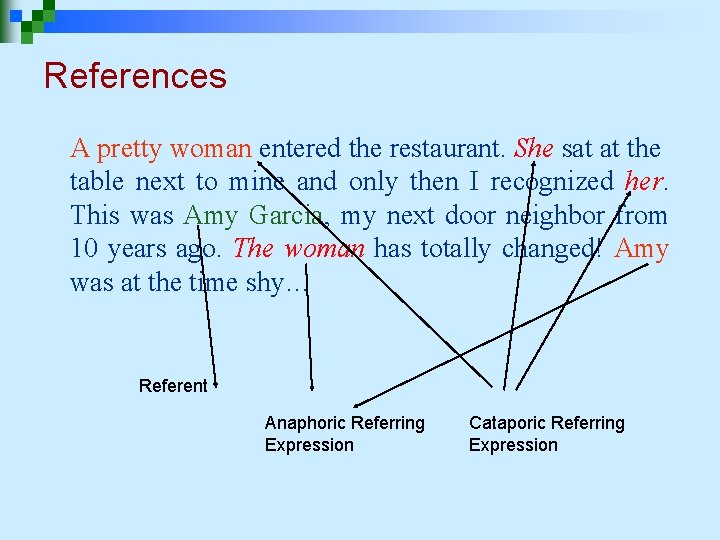

References
A pretty woman entered the restaurant. She sat at the table next to mine and only then I recognized her. This was Amy Garcia, my next door neighbor from 10 years ago. The woman has totally changed! Amy was at the time shy…
Labels in the figure: Referent, Anaphoric Referring Expression, Cataphoric Referring Expression

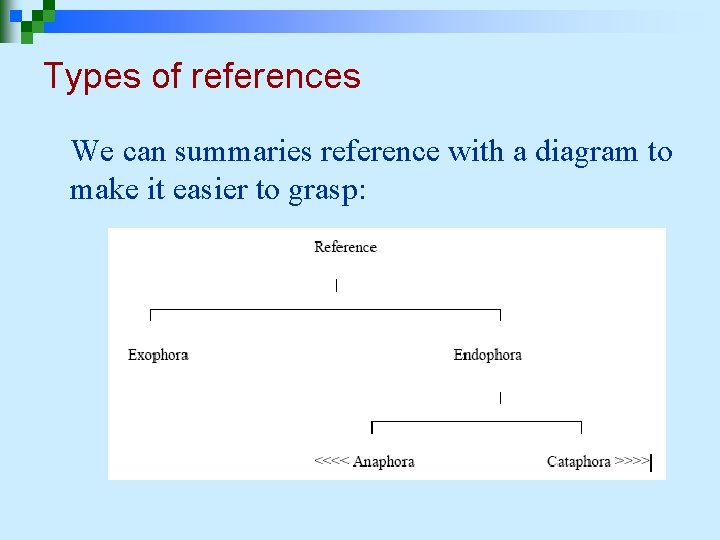

Types of references
We can summarise reference with a diagram to make it easier to grasp:

Exophoric reference
• Exophoric reference depends on the context outside the text for its meaning.
• In linguistics, exophora is reference to something extralinguistic.
• For example: "What is this?"
  • Here "this" is exophoric rather than endophoric, because it refers to something extralinguistic: there is not enough information in the utterance itself to determine what "this" refers to, so we must instead observe the non-linguistic context of the utterance (e.g. the speaker might be holding an unknown object in their hand as they ask the question).

Endophoric reference
• When a pronoun refers to an item within the same text, the reference is endophoric.
• Endophora is a linguistic reference to something intralinguistic.
• For example: "I saw Sally yesterday. She was lying on the beach."
  • Here "she" is intralinguistic, and hence endophoric, because it refers to something (Sally, in this case) already mentioned in the text. (From Wikipedia, the free encyclopedia)

Endophora (Another Definition)
• Words or phrases such as pronouns are endophora when they point backwards or forwards to something in the text.
• For example: As [he]1 was late, [Harry]1 wanted to phone [his]1 [boss]2 and tell [her]2 what had happened. (From Wikipedia, the free encyclopedia)

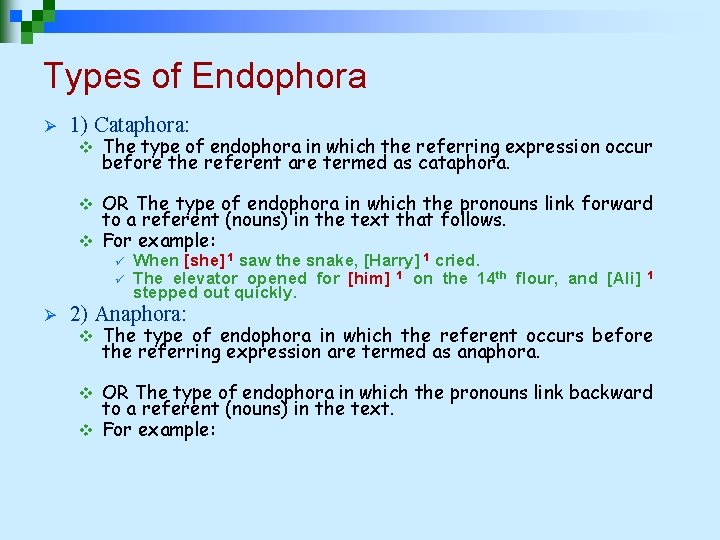

Types of Endophora
• 1) Cataphora: The type of endophora in which the referring expression occurs before the referent. Equivalently, the pronoun links forward to a referent (noun) in the text that follows.
  • For example:
    • When [he]1 saw the snake, [Harry]1 cried.
    • The elevator opened for [him]1 on the 14th floor, and [Ali]1 stepped out quickly.
• 2) Anaphora: The type of endophora in which the referent occurs before the referring expression. Equivalently, the pronoun links backward to a referent (noun) earlier in the text.

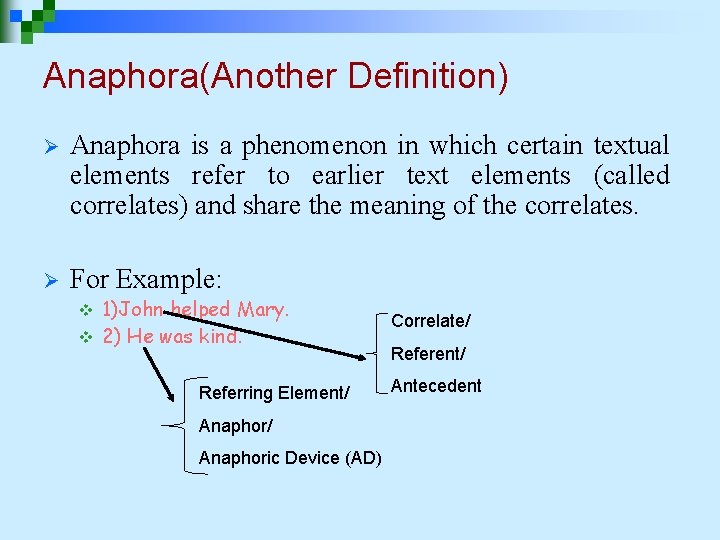

Anaphora (Another Definition)
• Anaphora is a phenomenon in which certain textual elements refer to earlier text elements (called correlates) and share the meaning of the correlates.
• For example:
  1) John helped Mary.
  2) He was kind.
• Here "He" is the referring element, or anaphoric device (AD), and "John" is its correlate (also called referent or antecedent).

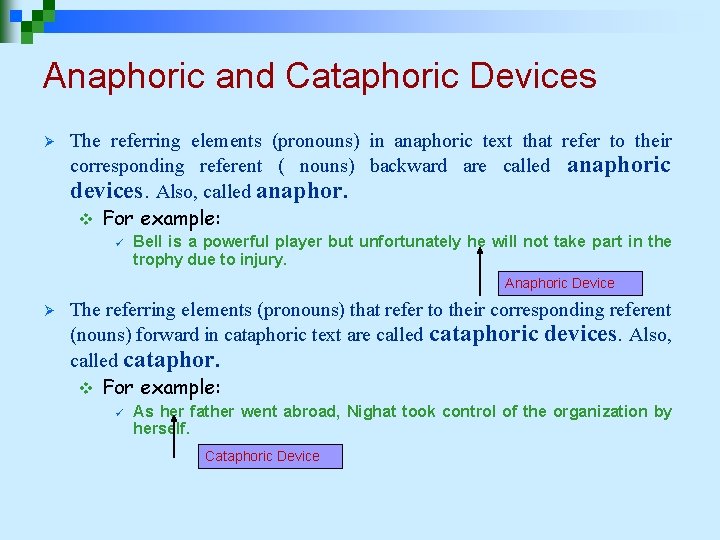

Anaphoric and Cataphoric Devices
• The referring elements (pronouns) in an anaphoric text that refer backward to their corresponding referents (nouns) are called anaphoric devices, or anaphors.
  • For example: Bell is a powerful player but unfortunately he will not take part in the trophy due to injury. (Anaphoric device: "he")
• The referring elements (pronouns) in a cataphoric text that refer forward to their corresponding referents (nouns) are called cataphoric devices, or cataphors.
  • For example: As her father went abroad, Nighat took control of the organization by herself. (Cataphoric device: "her")

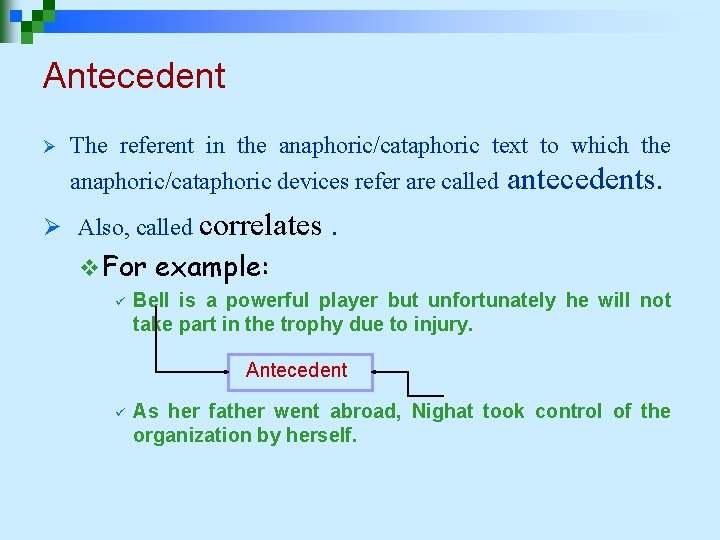

Antecedent
• The referents in an anaphoric or cataphoric text to which the anaphoric or cataphoric devices refer are called antecedents.
• They are also called correlates.
• For example:
  • Bell is a powerful player but unfortunately he will not take part in the trophy due to injury. (Antecedent: "Bell")
  • As her father went abroad, Nighat took control of the organization by herself. (Antecedent: "Nighat")

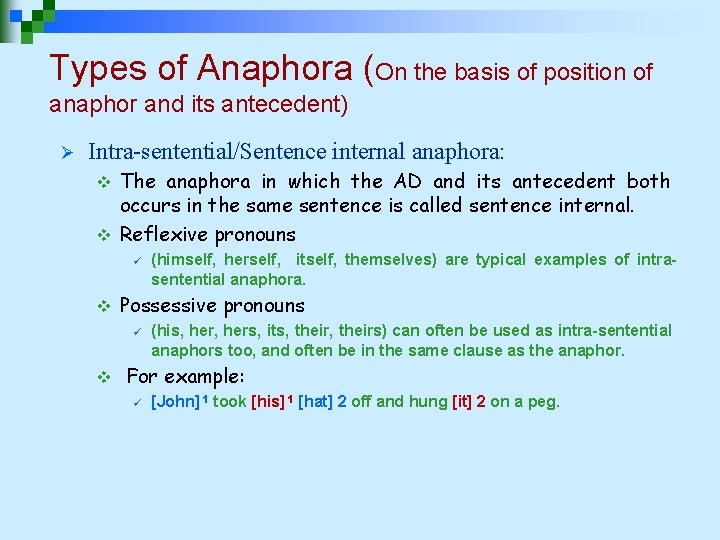

Types of Anaphora (On the basis of position of anaphor and its antecedent)
• Intra-sentential (sentence-internal) anaphora: anaphora in which the AD and its antecedent both occur in the same sentence.
  • Reflexive pronouns (himself, herself, itself, themselves) are typical examples of intra-sentential anaphora.
  • Possessive pronouns (his, hers, its, theirs) can often be used as intra-sentential anaphors too, and are often in the same clause as their antecedent.
  • For example: [John]1 took [his]1 [hat]2 off and hung [it]2 on a peg.

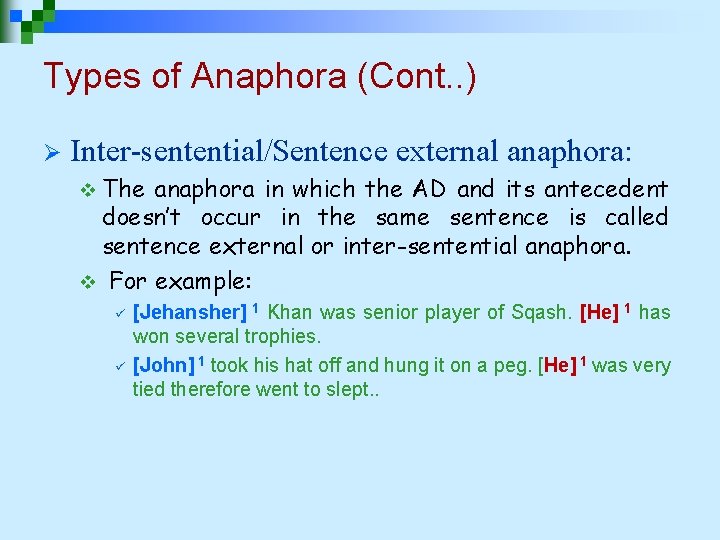

Types of Anaphora (Cont.)
• Inter-sentential (sentence-external) anaphora: anaphora in which the AD and its antecedent do not occur in the same sentence.
  • For example:
    • [Jehansher Khan]1 was a senior squash player. [He]1 has won several trophies.
    • [John]1 took his hat off and hung it on a peg. [He]1 was very tired and therefore went to sleep.

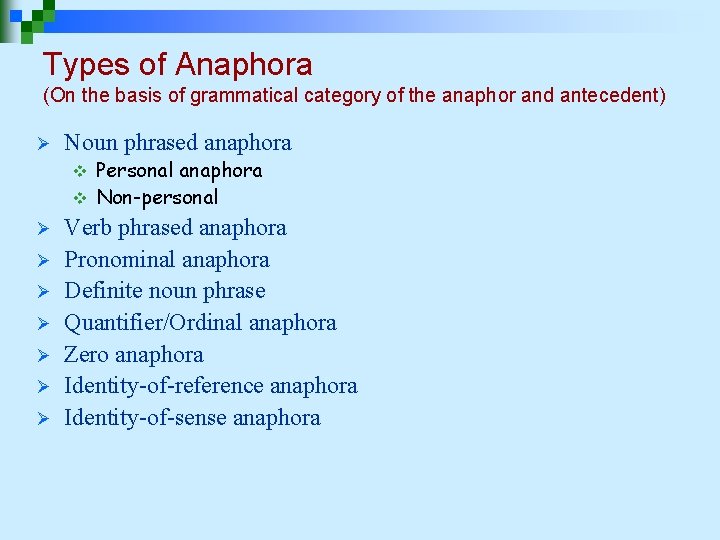

Types of Anaphora (On the basis of grammatical category of the anaphor and antecedent)
• Noun phrase anaphora
  • Personal anaphora
  • Non-personal anaphora
• Verb phrase anaphora
• Pronominal anaphora
• Definite noun phrase anaphora
• Quantifier/Ordinal anaphora
• Zero anaphora
• Identity-of-reference anaphora
• Identity-of-sense anaphora

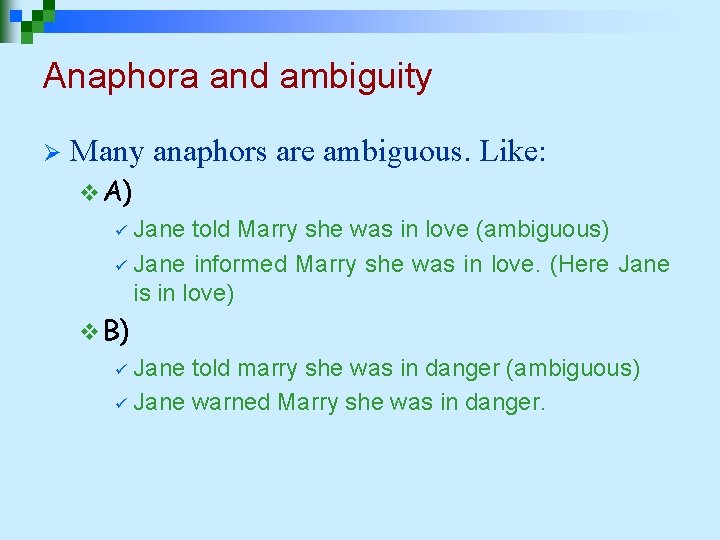

Anaphora and ambiguity
• Many anaphors are ambiguous. For example:
  • A) Jane told Mary she was in love. (ambiguous)
    • Jane informed Mary she was in love. (Here Jane is in love.)
  • B) Jane told Mary she was in danger. (ambiguous)
    • Jane warned Mary she was in danger.

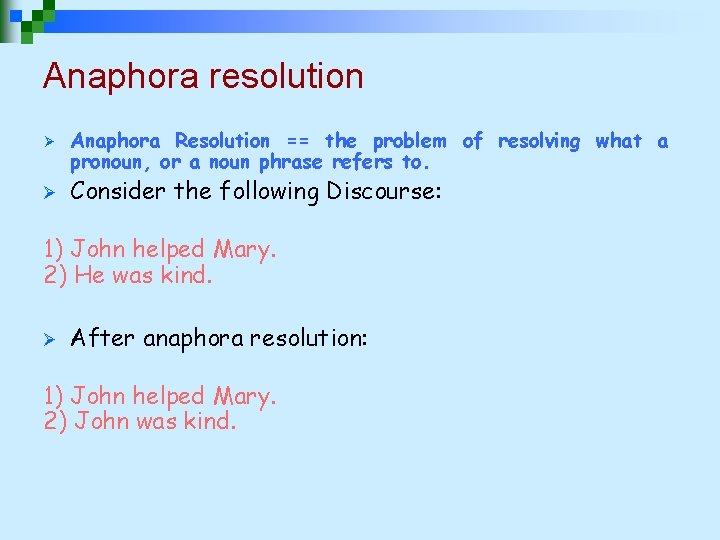

Anaphora resolution
• Anaphora resolution is the problem of determining what a pronoun or a noun phrase refers to.
• Consider the following discourse:
  1) John helped Mary.
  2) He was kind.
• After anaphora resolution:
  1) John helped Mary.
  2) John was kind.
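The John/Mary discourse above can be reproduced with a deliberately naive resolver. This is only a sketch: the two gender tables and the function name `resolve_pronouns` are illustrative assumptions, and the "most recent matching name" heuristic is one simple policy, not the method of any system cited in these slides.

```python
# Toy lexicons: both tables are illustrative assumptions, not a real resource.
NAME_GENDER = {"John": "m", "Mary": "f"}
PRONOUN_GENDER = {"he": "m", "she": "f"}


def resolve_pronouns(sentences):
    """Replace each known pronoun with the most recent name of the same gender."""
    seen = []       # names encountered so far, in order of mention
    resolved = []
    for sentence in sentences:
        out = []
        for token in sentence.rstrip(".").split():
            gender = PRONOUN_GENDER.get(token.lower())
            if gender is not None:
                # Search backwards for the nearest antecedent with matching gender.
                antecedent = next(
                    (name for name in reversed(seen) if NAME_GENDER[name] == gender),
                    None,
                )
                out.append(antecedent if antecedent else token)
            else:
                if token in NAME_GENDER:
                    seen.append(token)
                out.append(token)
        resolved.append(" ".join(out) + ".")
    return resolved


print(resolve_pronouns(["John helped Mary.", "He was kind."]))
```

The same heuristic cannot handle "Jane told Mary she was in love": both candidates match in gender, so recency alone picks one without any justification.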

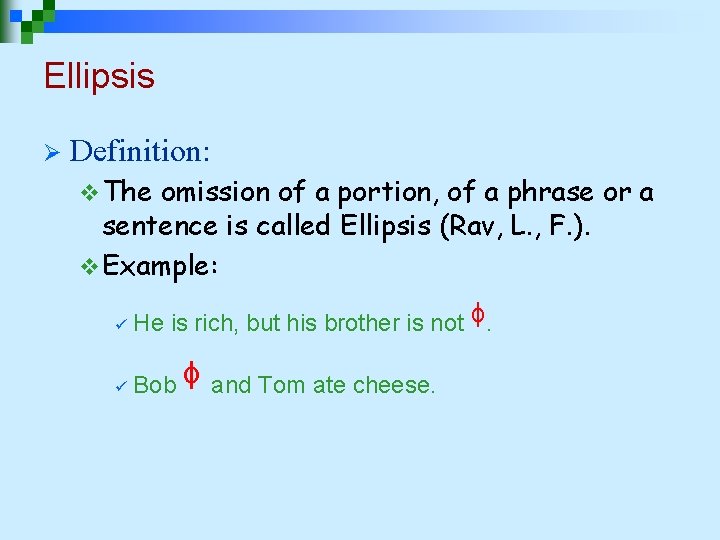

Ellipsis
• Definition: The omission of a portion of a phrase or a sentence is called ellipsis (Rav, L. F.).
• Example (∅ marks the omitted material):
  • He is rich, but his brother is not ∅.
  • Bob ∅ and Tom ate cheese.

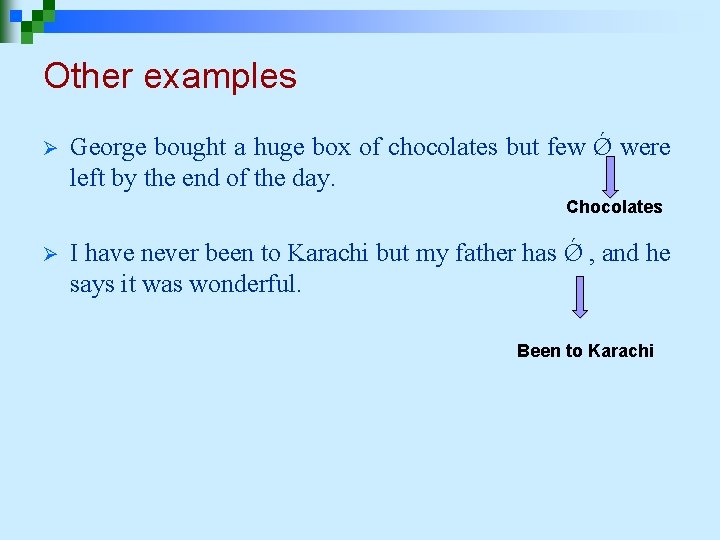

Other examples
• George bought a huge box of chocolates but few ∅ were left by the end of the day. (∅ = chocolates)
• I have never been to Karachi but my father has ∅, and he says it was wonderful. (∅ = been to Karachi)

Origin of the word ellipsis
• Derived from Greek, the word "ellipsis" means "the omission of words that could be understood from the context".
• Ellipsis is the non-expression of one or more sentence elements whose meaning can be reconstructed either from the context or from a person's knowledge of the world.

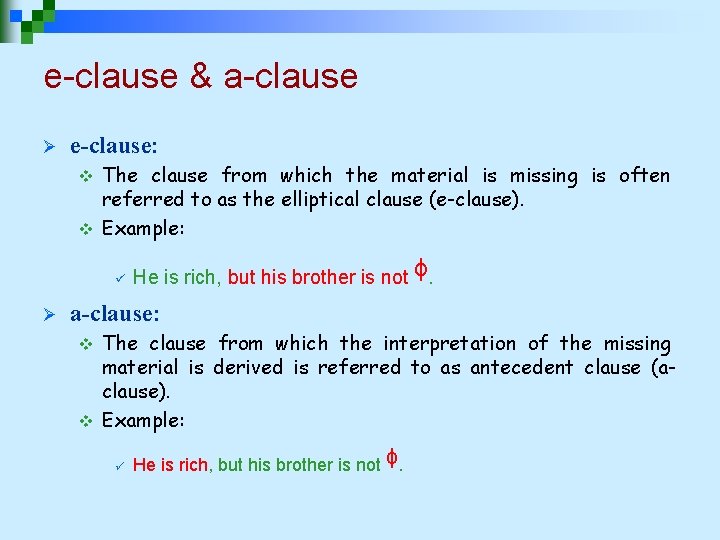

e-clause & a-clause
• e-clause: The clause from which material is missing is often referred to as the elliptical clause (e-clause).
  • Example: He is rich, but his brother is not ∅. (e-clause: "his brother is not ∅")
• a-clause: The clause from which the interpretation of the missing material is derived is referred to as the antecedent clause (a-clause).
  • Example: He is rich, but his brother is not ∅. (a-clause: "He is rich")

Types of ellipsis
• Some of the several types of ellipsis are:
  • Noun phrase ellipsis
  • Verb phrase ellipsis
  • Gapping
  • Stripping
  • Sluicing
  • Ellipsis in WH-constructions
  • Ellipsis in Q-constructions

Ellipsis resolution
• Ellipsis resolution is an important research area.
• Ellipsis occurs in the text and speech of all natural languages, although its form differs from language to language.
• Linguists follow different approaches to its resolution, as described by Shalom Lappin (Lappin and Lease).

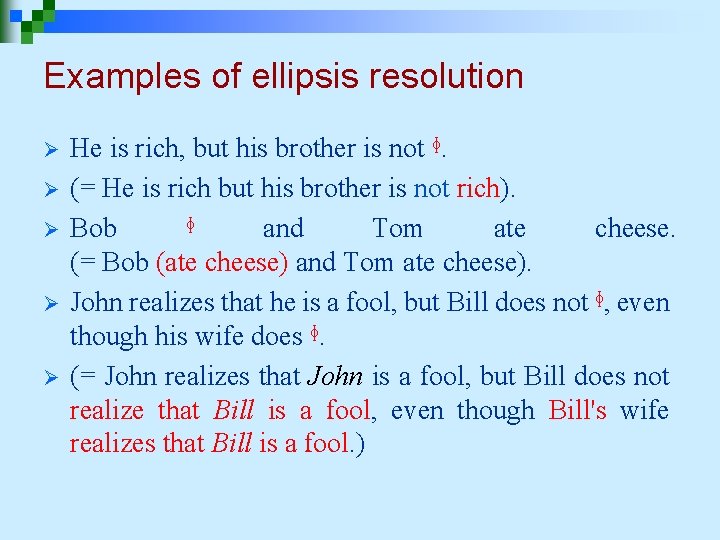

Examples of ellipsis resolution
• He is rich, but his brother is not ∅. (= He is rich, but his brother is not rich.)
• Bob ∅ and Tom ate cheese. (= Bob (ate cheese) and Tom ate cheese.)
• John realizes that he is a fool, but Bill does not ∅, even though his wife does ∅. (= John realizes that John is a fool, but Bill does not realize that Bill is a fool, even though Bill's wife realizes that Bill is a fool.)
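The first example can be resolved mechanically by copying the complement of the a-clause into the gap of the e-clause. The sketch below assumes a fixed sentence shape ("X is COMPLEMENT, but Y is not GAP") and a hypothetical helper name `resolve_copular_ellipsis`; the gap marker is a parameter because it is only a notational choice. It is not a general ellipsis resolver and does not cover the gapping or sloppy-identity examples.

```python
def resolve_copular_ellipsis(sentence, gap="∅"):
    """Fill the gap in 'X is COMPLEMENT, but Y is not GAP' with COMPLEMENT."""
    a_clause, e_clause = sentence.rstrip(".").split(", but ")
    # The complement is everything after the first " is " in the antecedent clause.
    _, complement = a_clause.split(" is ", 1)
    return f"{a_clause}, but {e_clause.replace(gap, complement)}."


print(resolve_copular_ellipsis("He is rich, but his brother is not ∅."))
```

The third example on the slide shows why this is not enough in general: "his wife does ∅" requires deciding whether the copied pronoun refers to John or to Bill.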

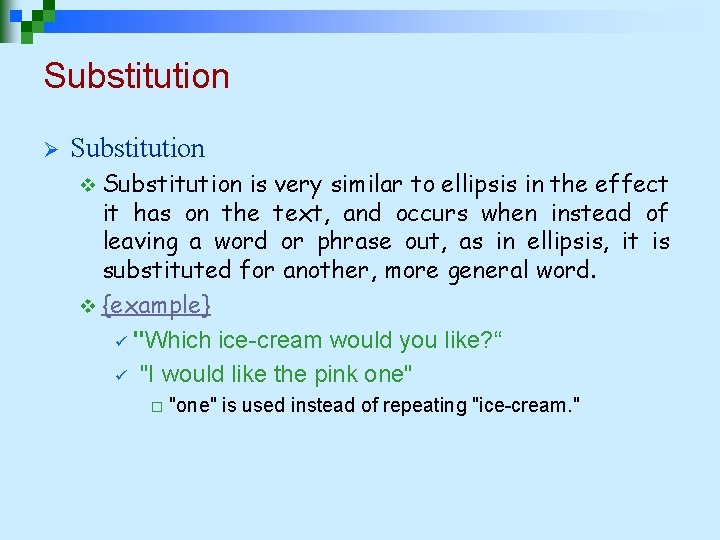

Substitution
• Substitution is very similar to ellipsis in the effect it has on the text. It occurs when, instead of being left out as in ellipsis, a word or phrase is replaced by another, more general word.
• Example:
  • "Which ice-cream would you like?"
  • "I would like the pink one."
    • "one" is used instead of repeating "ice-cream".

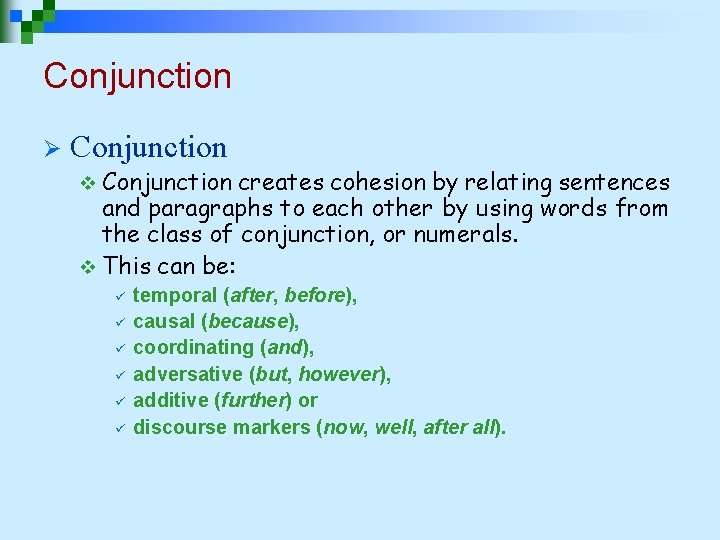

Conjunction
• Conjunction creates cohesion by relating sentences and paragraphs to each other using words from the class of conjunctions, or numerals.
• This can be:
  • temporal (after, before),
  • causal (because),
  • coordinating (and),
  • adversative (but, however),
  • additive (further), or
  • discourse markers (now, well, after all).

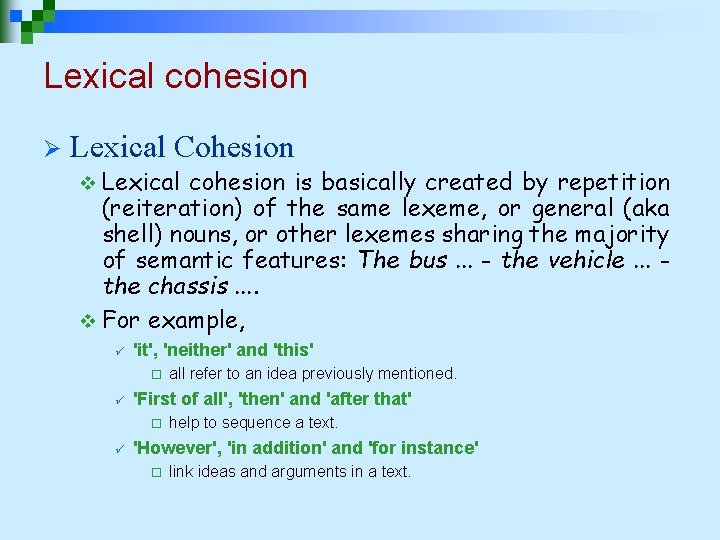

Lexical cohesion
• Lexical cohesion is basically created by repetition (reiteration) of the same lexeme, or by general (aka shell) nouns, or by other lexemes sharing the majority of semantic features: the bus ... the vehicle ... the chassis ...
• For example:
  • "it", "neither" and "this" all refer to an idea previously mentioned.
  • "First of all", "then" and "after that" help to sequence a text.
  • "However", "in addition" and "for instance" link ideas and arguments in a text.

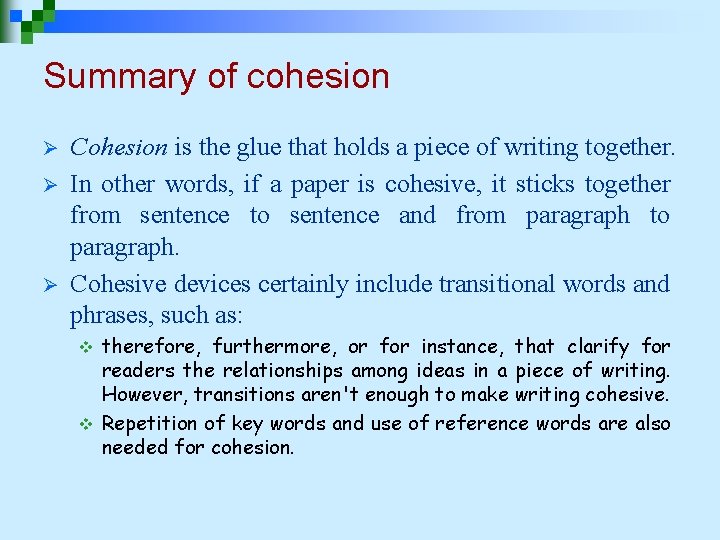

Summary of cohesion
• Cohesion is the glue that holds a piece of writing together. In other words, if a paper is cohesive, it sticks together from sentence to sentence and from paragraph to paragraph.
• Cohesive devices certainly include transitional words and phrases, such as "therefore", "furthermore", or "for instance", that clarify for readers the relationships among ideas in a piece of writing.
• However, transitions aren't enough to make writing cohesive.
  • Repetition of key words and use of reference words are also needed for cohesion.

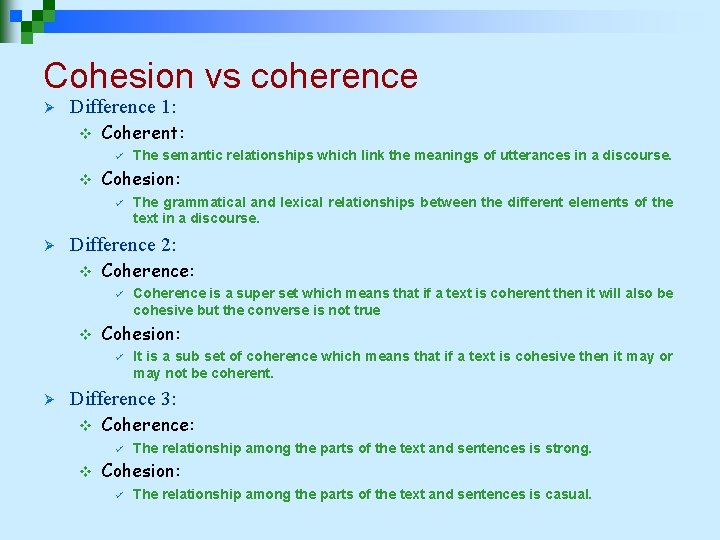

Cohesion vs coherence
• Difference 1:
  • Coherence: The semantic relationships which link the meanings of utterances in a discourse.
  • Cohesion: The grammatical and lexical relationships between the different elements of the text in a discourse.
• Difference 2:
  • Coherence: Coherence is a superset, which means that if a text is coherent then it will also be cohesive, but the converse is not true.
  • Cohesion: It is a subset of coherence, which means that if a text is cohesive then it may or may not be coherent.
• Difference 3:
  • Coherence: The relationship among the parts of the text and sentences is strong.
  • Cohesion: The relationship among the parts of the text and sentences is casual.

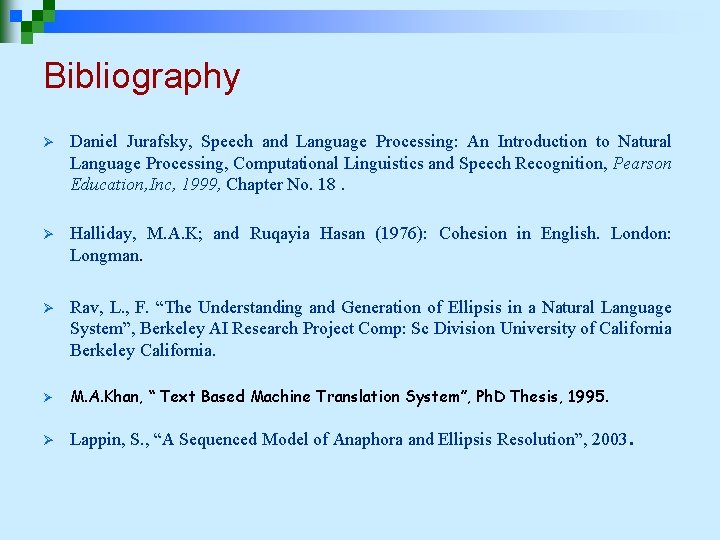

Bibliography
• Daniel Jurafsky and James H. Martin, Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition, Pearson Education, Inc, 1999, Chapter 18.
• M. A. K. Halliday and Ruqaiya Hasan (1976), Cohesion in English. London: Longman.
• Rav, L. F., "The Understanding and Generation of Ellipsis in a Natural Language System", Berkeley AI Research Project, Computer Science Division, University of California, Berkeley, California.
• M. A. Khan, "Text Based Machine Translation System", Ph.D. Thesis, 1995.
• Lappin, S., "A Sequenced Model of Anaphora and Ellipsis Resolution", 2003.

Ambiguity

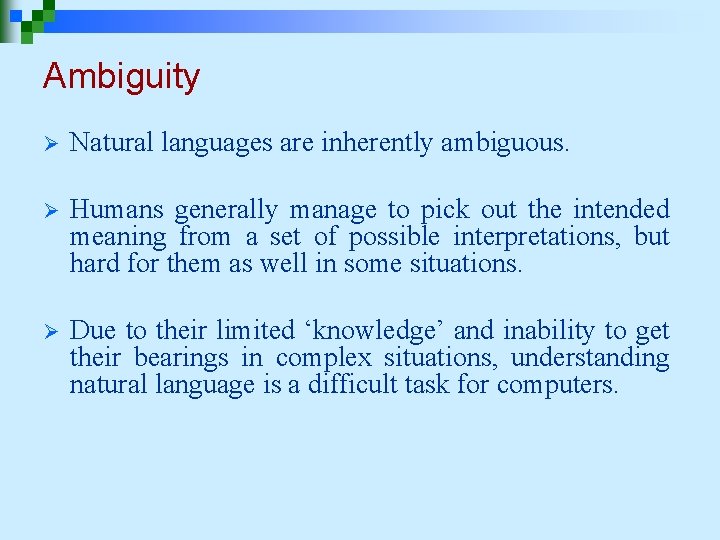

Ambiguity
• Natural languages are inherently ambiguous.
• Humans generally manage to pick out the intended meaning from a set of possible interpretations, although in some situations this is hard for them as well.
• For computers, understanding natural language is a difficult task because of their limited "knowledge" and their inability to get their bearings in complex situations.

Ambiguity: A Definition
• An input is said to be ambiguous if multiple alternative linguistic structures can be built for it.
• For example: "I made her duck".
  • This simple sentence could have five meanings.

Ambiguity Example
1. I cooked a duck for her.
2. I cooked a duck belonging to her.
3. I created the plaster duck she owns.
4. I caused her to quickly lower her head or body.
5. I turned her into a duck (by magic).

Ambiguity (cont.)
These meanings are caused by a number of ambiguities:
1. The words "her" and "duck" are syntactically ambiguous.
2. The word "make" is semantically ambiguous.
3. If the sentence is spoken, "I" can be heard as "eye" and "made" as "maid".

Types of Ambiguities
• The various types of ambiguity are:
  • Lexical or semantic ambiguity
  • Syntactic or structural ambiguity
  • Pragmatic ambiguity
  • Referential ambiguity

Lexical Ambiguity (semantic ambiguity)
• An ambiguity caused when a word has more than one meaning.
• For example: "The wrestlers entered the ring".
  • "Ring" can be a designated area for wrestling.
  • "Ring" can be a golden ring worn on a finger.
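One common way to pick between such senses is to compare the words around the ambiguous word with words typically associated with each sense. The sketch below is in the spirit of overlap-based methods such as Lesk, but it is a simplification: the sense labels and signature word sets in `SENSE_SIGNATURES` are hand-written assumptions for this one word, not taken from a dictionary.

```python
# Hand-written sense signatures (an assumption for illustration only).
SENSE_SIGNATURES = {
    "ring": {
        "wrestling area": {"wrestlers", "boxers", "fight", "match", "entered"},
        "jewellery": {"gold", "golden", "finger", "wedding", "wore"},
    }
}


def disambiguate(word, sentence):
    """Return the sense whose signature shares the most words with the sentence."""
    context = {token.strip(".,!?'\"").lower() for token in sentence.split()}
    scores = {
        sense: len(signature & context)
        for sense, signature in SENSE_SIGNATURES[word].items()
    }
    return max(scores, key=scores.get)


print(disambiguate("ring", "The wrestlers entered the ring"))
print(disambiguate("ring", "She wore a golden ring on her finger"))
```

When no context word matches any signature, every sense scores zero and the choice is arbitrary, which is exactly the situation in which a human reader would also call the sentence ambiguous.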

Lexical Ambiguity (cont.)
The following are some phenomena of lexical ambiguity (already covered):
1. Homonymy
2. Polysemy
3. Synonymy
4. Hyponymy

Syntactic Ambiguity
• Syntactic ambiguity occurs when a phrase or sentence has more than one underlying structure.
• Syntactic ambiguity arises not from the range of meanings of single words, but from the relationship between the words and clauses of a sentence, and the sentence structure implied thereby.

Syntactic Ambiguity (Cont.)
• Consider the following phrases and sentences:
  • "Pakistani history teacher"
  • "short men and women"
  • "The girl hit the boy with a book"
  • "Visiting relatives can be boring"
• These ambiguities are said to be structural because each such phrase can be represented in two or more structurally different ways.
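The claim that "The girl hit the boy with a book" has two structures can be checked by counting parse trees with a CKY-style chart. The grammar and lexicon below are a tiny hand-made assumption in Chomsky normal form, just large enough for this sentence; `count_parses` is a hypothetical helper name.

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (assumed for illustration).
BINARY_RULES = [
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),   # the PP modifies the hitting (instrument reading)
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),   # the PP modifies the boy (the boy who has a book)
    ("PP", "P", "NP"),
]
LEXICON = {
    "the": "Det", "a": "Det",
    "girl": "N", "boy": "N", "book": "N",
    "hit": "V", "with": "P",
}


def count_parses(words, root="S"):
    """Count distinct parse trees for `words` with a CKY chart."""
    n = len(words)
    chart = defaultdict(int)  # (start, end, symbol) -> number of trees
    for i, word in enumerate(words):
        chart[(i, i + 1, LEXICON[word])] += 1
    for span in range(2, n + 1):
        for start in range(0, n - span + 1):
            end = start + span
            for mid in range(start + 1, end):
                for parent, left, right in BINARY_RULES:
                    chart[(start, end, parent)] += (
                        chart[(start, mid, left)] * chart[(mid, end, right)]
                    )
    return chart[(0, n, root)]


print(count_parses("the girl hit the boy with a book".split()))
```

Under this grammar the count is 2, one tree per attachment site of "with a book"; removing either the `VP -> VP PP` or the `NP -> NP PP` rule leaves a single reading.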

Syntactic Ambiguity (Cont.)
• The possible meanings of "Time flies like an arrow" include:
  1. Time goes by as quickly as an arrow.
  2. "Time flies" (N+PL), i.e. flies of a particular kind, like an arrow.
  3. Every "time fly" (N+SG) likes an arrow.
  4. Someone or something named "Time" flies in a way similar to an arrow, e.g. "Mr. Time flies an airplane fast and straight."

Pragmatic Ambiguity
• Occurs when speaker and listener do not agree on the same principles of co-operative communication.
• For example:
  • "I'll meet you next Friday" (which Friday?)
  • "Do you know the time?"
  • "Can you pass the salt?"
  • etc.

Referential Ambiguity
• It may be unclear which objects are being referred to, e.g.:
  1) He drove the car over the lawn mower, but it wasn't hurt.
  2) Bob waved to Jim in the hallway between classes. He smiled.

Reading/References
• Daniel Jurafsky and James H. Martin, Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition, Pearson Education, Inc, 2000.
• M. A. Khan, "Text Based Machine Translation System", Ph.D. Thesis, 1995.

Natural Language Generation

Definition 1: NLG
• Natural Language Generation (NLG) is the process of constructing natural language outputs from non-linguistic inputs.
• Goal: The goal of this process can be viewed as the inverse of that of natural language understanding (NLU).
• NLU vs NLG: NLG maps from meaning to text, while NLU maps from text to meaning. (Jurafsky, Chapter 20)

Definition 2: NLG
• Natural Language Generation (NLG) is the natural language processing task of generating natural language from a machine representation system such as a knowledge base or a logical form. (http://en.wikipedia.org/wiki/Natural_language_generation, retrieved 31 Oct, 2010)

Definition 3: NLG
• Natural language generation is the process of deliberately constructing a natural language text in order to meet specified communicative goals. [McDonald 1992]

What is NLG? Or Ingredients of NLG
• Goal: Computer software which produces understandable and appropriate texts in English or other human languages
• Input: Some underlying non-linguistic representation of information
• Output: Documents, reports, explanations, help messages, and other kinds of texts
• Knowledge sources required: Knowledge of the target language and of the domain

Example System #1: FoG
• Function: Produces textual weather reports in English and French
• Input: Graphical/numerical weather depiction
• User: Environment Canada (Canadian Weather Service)
• Developer: CoGenTex
• Status: Fielded, in operational use since 1992

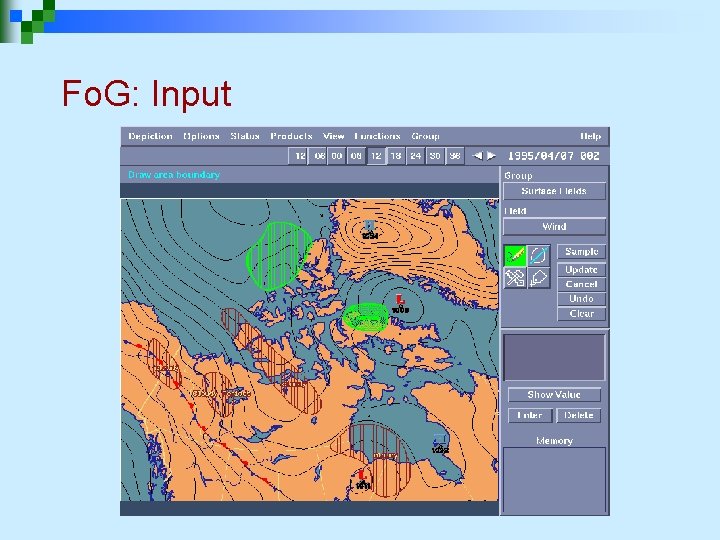

FoG: Input

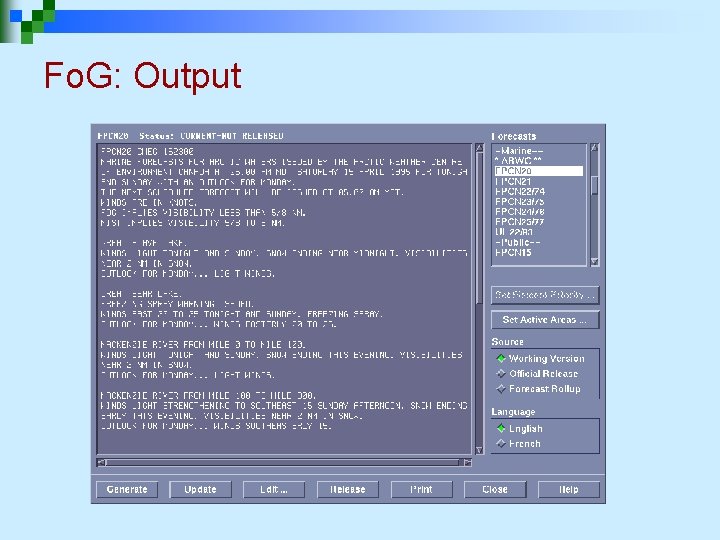

FoG: Output

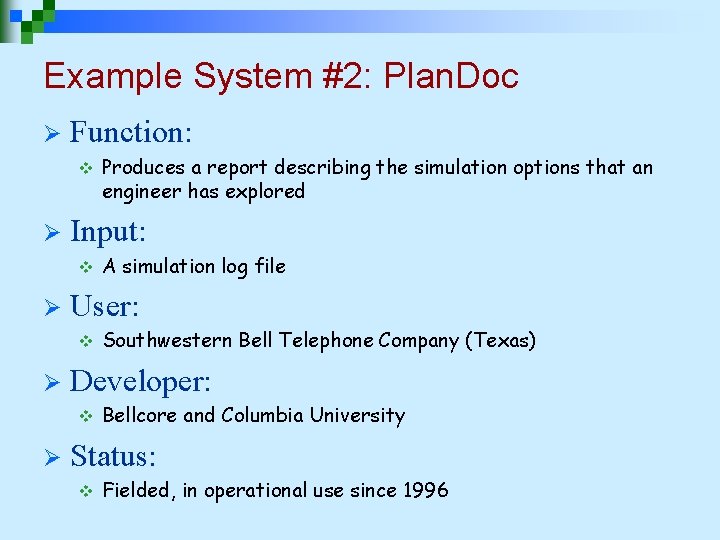

Example System #2: PlanDoc
• Function: Produces a report describing the simulation options that an engineer has explored
• Input: A simulation log file
• User: Southwestern Bell Telephone Company (Texas)
• Developer: Bellcore and Columbia University
• Status: Fielded, in operational use since 1996

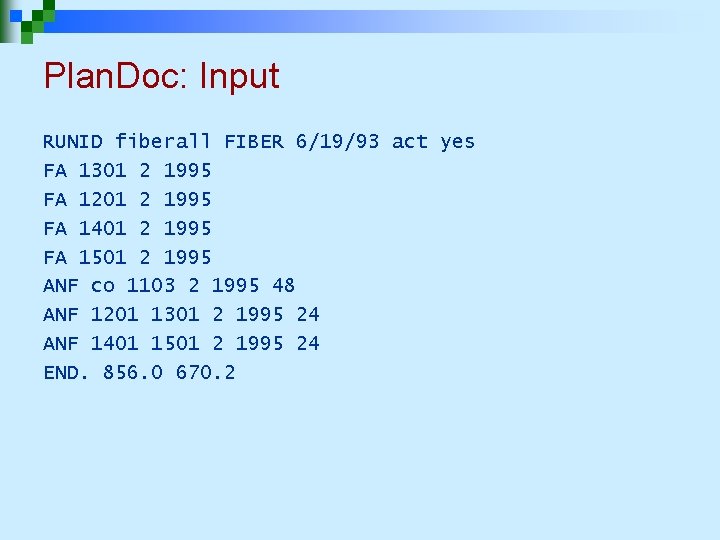

PlanDoc: Input
RUNID fiberall FIBER 6/19/93 act yes
FA 1301 2 1995
FA 1201 2 1995
FA 1401 2 1995
FA 1501 2 1995
ANF co 1103 2 1995 48
ANF 1201 1301 2 1995 24
ANF 1401 1501 2 1995 24
END. 856.0 670.2

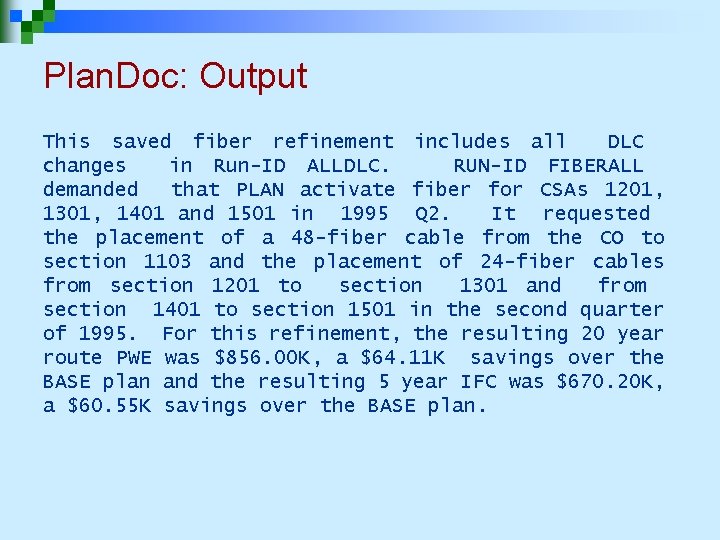

PlanDoc: Output
This saved fiber refinement includes all DLC changes in Run-ID ALLDLC. RUN-ID FIBERALL demanded that PLAN activate fiber for CSAs 1201, 1301, 1401 and 1501 in 1995 Q2. It requested the placement of a 48-fiber cable from the CO to section 1103 and the placement of 24-fiber cables from section 1201 to section 1301 and from section 1401 to section 1501 in the second quarter of 1995. For this refinement, the resulting 20 year route PWE was $856.00K, a $64.11K savings over the BASE plan and the resulting 5 year IFC was $670.20K, a $60.55K savings over the BASE plan.

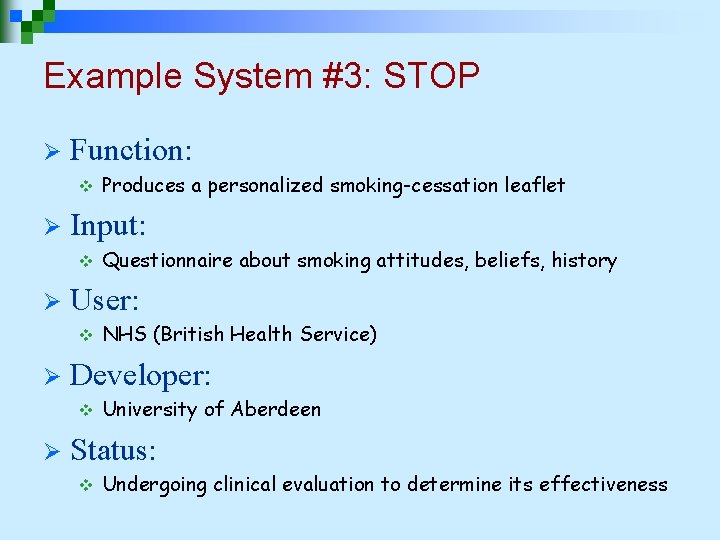

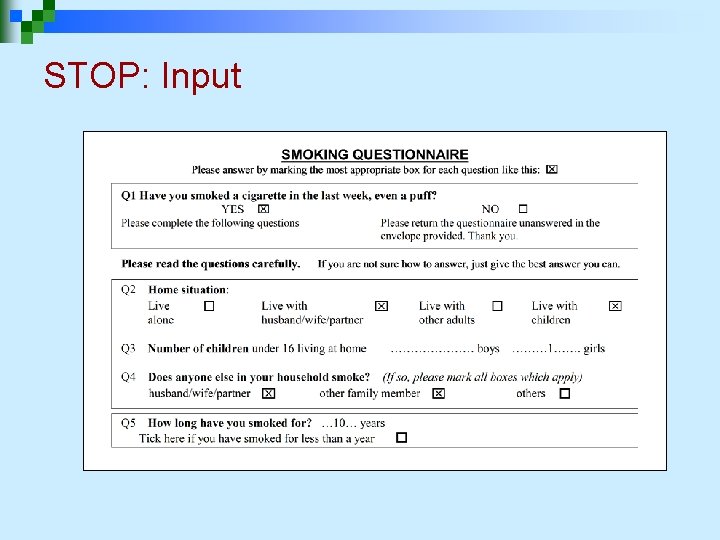

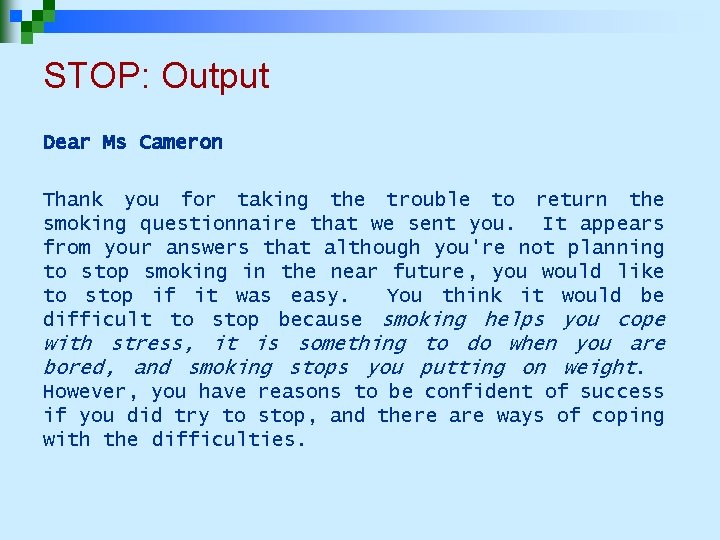

Example System #3: STOP
• Function: Produces a personalized smoking-cessation leaflet
• Input: Questionnaire about smoking attitudes, beliefs, history
• User: NHS (British Health Service)
• Developer: University of Aberdeen
• Status: Undergoing clinical evaluation to determine its effectiveness

STOP: Input

STOP: Output
Dear Ms Cameron
Thank you for taking the trouble to return the smoking questionnaire that we sent you. It appears from your answers that although you're not planning to stop smoking in the near future, you would like to stop if it was easy. You think it would be difficult to stop because smoking helps you cope with stress, it is something to do when you are bored, and smoking stops you putting on weight. However, you have reasons to be confident of success if you did try to stop, and there are ways of coping with the difficulties.

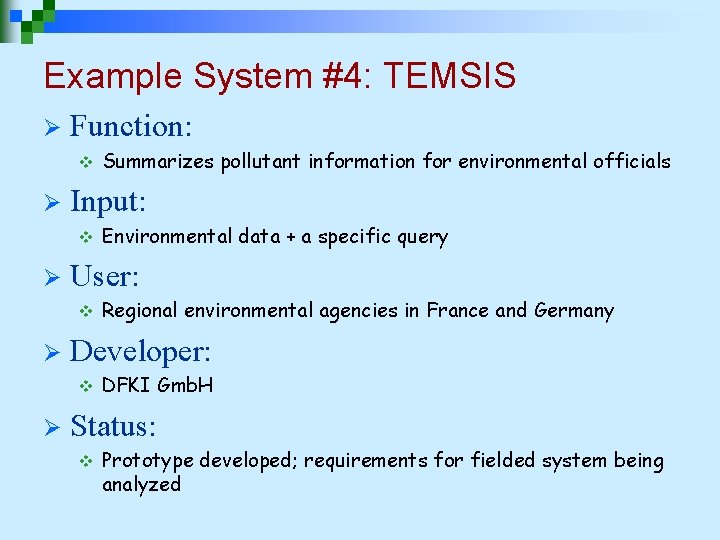

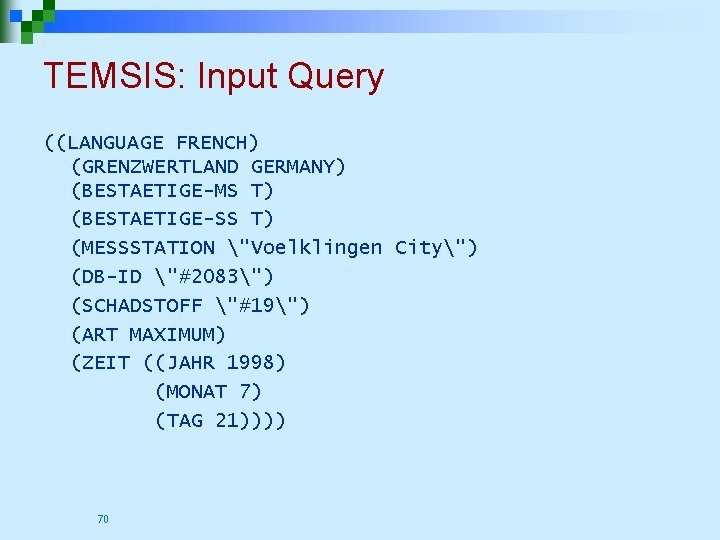

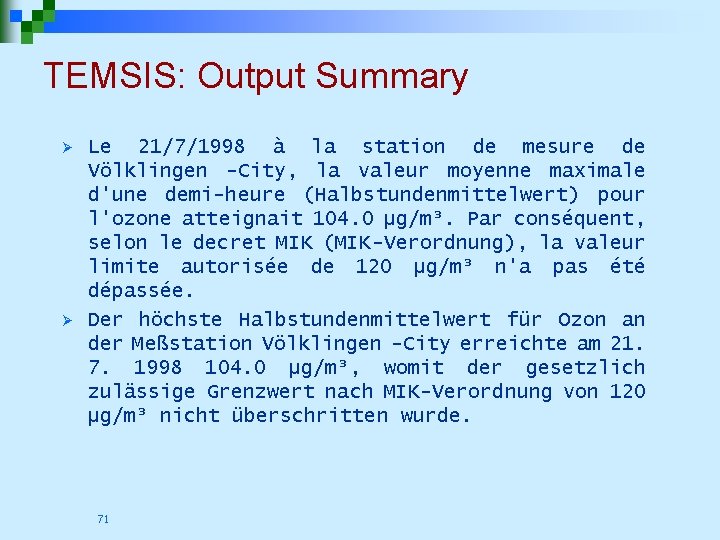

Example System #4: TEMSIS
• Function: Summarizes pollutant information for environmental officials
• Input: Environmental data + a specific query
• User: Regional environmental agencies in France and Germany
• Developer: DFKI GmbH
• Status: Prototype developed; requirements for fielded system being analyzed

TEMSIS: Input Query
((LANGUAGE FRENCH)
 (GRENZWERTLAND GERMANY)
 (BESTAETIGE-MS T)
 (BESTAETIGE-SS T)
 (MESSSTATION "Voelklingen City")
 (DB-ID "#2083")
 (SCHADSTOFF "#19")
 (ART MAXIMUM)
 (ZEIT ((JAHR 1998) (MONAT 7) (TAG 21))))

TEMSIS: Output Summary
• Le 21/7/1998 à la station de mesure de Völklingen-City, la valeur moyenne maximale d'une demi-heure (Halbstundenmittelwert) pour l'ozone atteignait 104.0 µg/m³. Par conséquent, selon le decret MIK (MIK-Verordnung), la valeur limite autorisée de 120 µg/m³ n'a pas été dépassée.
• Der höchste Halbstundenmittelwert für Ozon an der Meßstation Völklingen-City erreichte am 21.7.1998 104.0 µg/m³, womit der gesetzlich zulässige Grenzwert nach MIK-Verordnung von 120 µg/m³ nicht überschritten wurde.

Types of NLG Applications
• Automated document production
  • weather forecasts, simulation reports, letters, ...
• Presentation of information to people in an understandable fashion
  • medical records, expert system reasoning, ...
• Teaching
  • information for students in CAL systems
• Entertainment
  • jokes (?), stories (??), poetry (???)

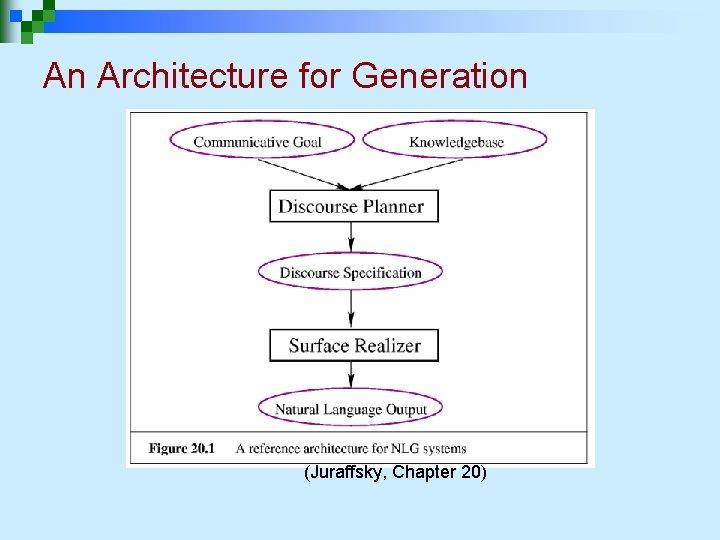

An Architecture for Generation (Jurafsky, Chapter 20)

An Architecture for Generation (Cont.)
• Discourse Planner:
  • This component starts with a communicative goal and makes all the choices.
  • It selects the content from the knowledge base and then structures that content appropriately.
  • The resulting discourse plan will specify all the choices made for the entire communication, potentially spanning multiple sentences and including other annotations (including hypertext, figures, etc.).

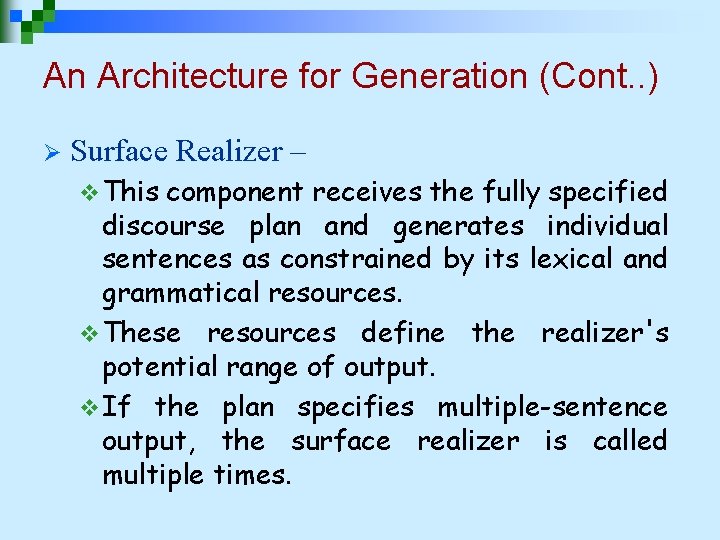

An Architecture for Generation (Cont.)
• Surface Realizer:
  • This component receives the fully specified discourse plan and generates individual sentences as constrained by its lexical and grammatical resources.
  • These resources define the realizer's potential range of output.
  • If the plan specifies multiple-sentence output, the surface realizer is called multiple times.

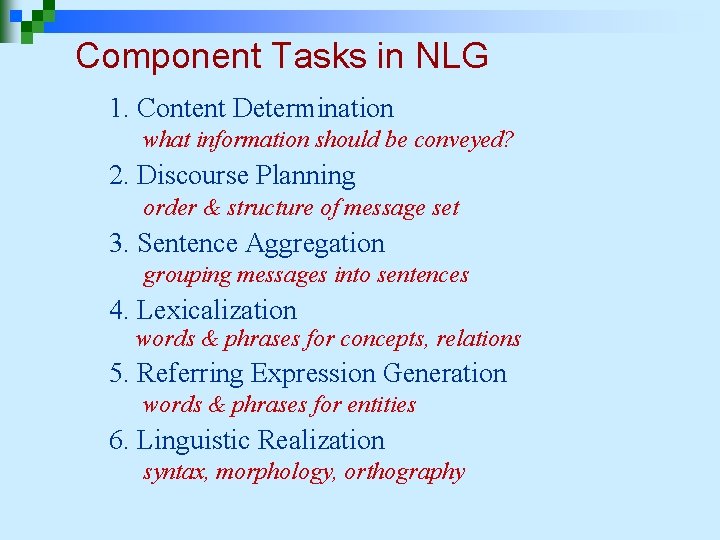

Component Tasks in NLG
1. Content Determination: what information should be conveyed?
2. Discourse Planning: order and structure of the message set
3. Sentence Aggregation: grouping messages into sentences
4. Lexicalization: words and phrases for concepts and relations
5. Referring Expression Generation: words and phrases for entities
6. Linguistic Realization: syntax, morphology, orthography

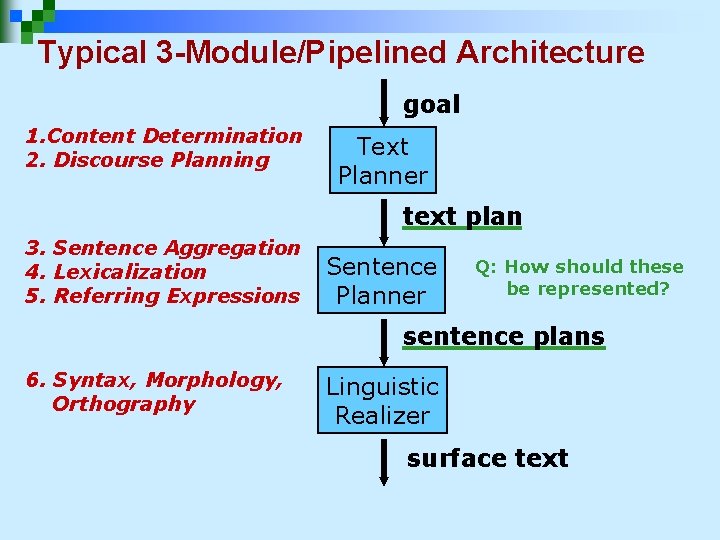

Typical 3-Module/Pipelined Architecture
goal
→ Text Planner (1. Content Determination, 2. Discourse Planning)
→ text plan
→ Sentence Planner (3. Sentence Aggregation, 4. Lexicalization, 5. Referring Expressions)
→ sentence plans
→ Linguistic Realizer (6. Syntax, Morphology, Orthography)
→ surface text
Q: How should the text plan and the sentence plans be represented?
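The three modules can be sketched as three functions chained together. Everything below is an assumption made for illustration: the fact format, the `TEMPLATES` table, and the function names are hypothetical, and each stage is reduced to a stand-in for the tasks it groups (for example, the sentence planner here does no aggregation or referring expression generation).

```python
# Templates keyed by message kind (assumed for this sketch).
TEMPLATES = {
    "count": "there are {n} trains a day from {source} to {destination}",
    "next": "the next train leaves at {time}",
}
# Discourse order: summary first, then details.
ORDER = {"count": 0, "next": 1}


def text_planner(goal, facts):
    """Content determination + discourse planning: select and order messages."""
    relevant = [fact for fact in facts if fact["route"] == goal]
    return sorted(relevant, key=lambda fact: ORDER[fact["kind"]])


def sentence_planner(text_plan):
    """Stand-in for aggregation/lexicalization: one sentence plan per message."""
    return [(message["kind"], message["slots"]) for message in text_plan]


def linguistic_realizer(sentence_plans):
    """Fill templates, then apply orthography (capital letter, full stop)."""
    sentences = []
    for kind, slots in sentence_plans:
        text = TEMPLATES[kind].format(**slots)
        sentences.append(text[0].upper() + text[1:] + ".")
    return " ".join(sentences)


def generate(goal, facts):
    return linguistic_realizer(sentence_planner(text_planner(goal, facts)))


FACTS = [
    {"route": ("Aberdeen", "Glasgow"), "kind": "next", "slots": {"time": "10 am"}},
    {"route": ("Aberdeen", "Glasgow"), "kind": "count",
     "slots": {"n": 20, "source": "Aberdeen", "destination": "Glasgow"}},
    {"route": ("Aberdeen", "Leeds"), "kind": "next", "slots": {"time": "11 am"}},
]

print(generate(("Aberdeen", "Glasgow"), FACTS))
```

Each stage only sees the output of the previous one, which is what makes the architecture a pipeline: the realizer never consults the fact base, and the text planner never chooses words.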

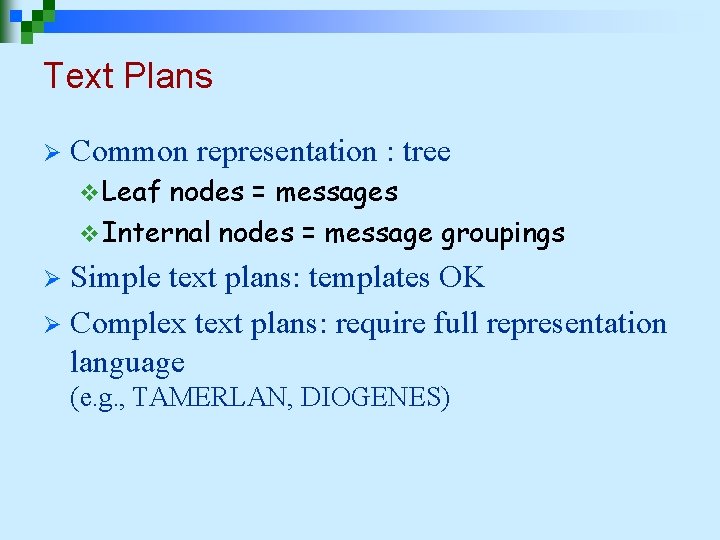

Text Plans
• Common representation: tree
  • Leaf nodes = messages
  • Internal nodes = message groupings
• Simple text plans: templates OK
• Complex text plans: require a full representation language (e.g., TAMERLAN, DIOGENES)
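A tree-shaped text plan can be written down directly as a small data structure. In this sketch the class names `Message` and `Group`, the `relation` labels, and the example messages are all assumptions; it only illustrates the leaf/internal-node split, not the TAMERLAN or DIOGENES formalisms.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Message:
    """Leaf node: one message to be conveyed."""
    text: str


@dataclass
class Group:
    """Internal node: a grouping of messages, labelled with a relation."""
    relation: str
    children: List[Union["Group", Message]] = field(default_factory=list)


def linearize(node):
    """Return the messages in left-to-right (depth-first) order."""
    if isinstance(node, Message):
        return [node.text]
    ordered = []
    for child in node.children:
        ordered.extend(linearize(child))
    return ordered


plan = Group("sequence", [
    Message("there are 20 trains a day from Aberdeen to Glasgow"),
    Group("elaboration", [
        Message("the next train is the express"),
        Message("it leaves at 10 am"),
    ]),
])

print(linearize(plan))
```

The relation labels on internal nodes are what a later stage would use to choose connectives or decide which messages to aggregate into one sentence.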

Sentence Plans
• Simple: templates (select & fill)
• Complex: abstract representation (SPL: Sentence Planning Language)

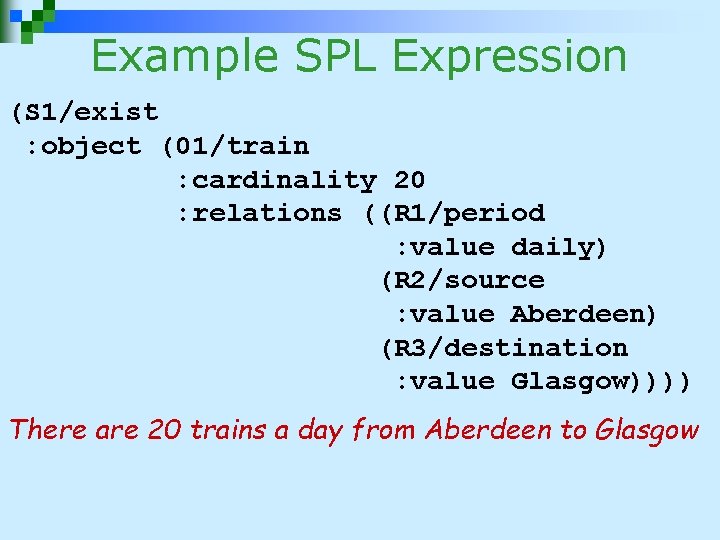

Example SPL Expression
(S1/exist
  :object (O1/train
    :cardinality 20
    :relations ((R1/period :value daily)
                (R2/source :value Aberdeen)
                (R3/destination :value Glasgow))))
There are 20 trains a day from Aberdeen to Glasgow
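To make the mapping from this expression to its sentence concrete, the sketch below encodes the same information as a Python dictionary and realizes it with a single hand-written rule. The dictionary layout, the `PERIOD_PHRASE` table, and `realize_exist` are assumptions for illustration; a real SPL realizer is grammar-driven rather than one f-string.

```python
# The SPL expression re-encoded as nested dictionaries (layout is an assumption).
spl = {
    "id": "S1", "type": "exist",
    "object": {
        "id": "O1", "type": "train", "cardinality": 20,
        "relations": [
            {"id": "R1", "type": "period", "value": "daily"},
            {"id": "R2", "type": "source", "value": "Aberdeen"},
            {"id": "R3", "type": "destination", "value": "Glasgow"},
        ],
    },
}

# How a period value is worded (assumed lookup table).
PERIOD_PHRASE = {"daily": "a day"}


def realize_exist(expression):
    """Realize an 'exist' expression as an existential 'There is/are ...' clause."""
    obj = expression["object"]
    relations = {r["type"]: r["value"] for r in obj["relations"]}
    n = obj["cardinality"]
    noun = obj["type"] + ("" if n == 1 else "s")   # naive pluralization
    verb = "is" if n == 1 else "are"               # number agreement
    return (
        f"There {verb} {n} {noun} {PERIOD_PHRASE[relations['period']]} "
        f"from {relations['source']} to {relations['destination']}"
    )


print(realize_exist(spl))
```

Even this small rule has to handle agreement and pluralization, which is the kind of work the slide assigns to the linguistic realizer.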

Content Determination
• Messages (raw content)
• User model (influences content)
• Is reasoning required?
  • Example: "Find a train from Aberdeen to Leeds" (it requires two trains to get there)
• Deep reasoning systems
  • represent the user's goals as well as any immediate query
  • utilize plan recognition and reasoning

Discourse Planning
• Structure messages into a coherent text
• Example: start with a summary, then give details
• Discourse relations, e.g.:
  • elaboration: More specifically, X
  • exemplification: For example, X
  • contrast / exception: However, X
• Rhetorical Structure Theory (RST)

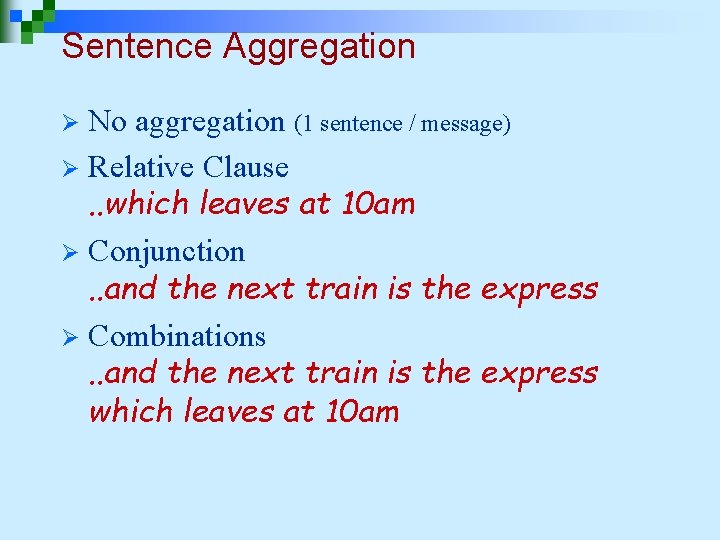

Sentence Aggregation
• No aggregation (1 sentence per message)
• Relative clause: ... which leaves at 10 am
• Conjunction: ... and the next train is the express
• Combinations: ... and the next train is the express which leaves at 10 am
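The aggregation options above can be sketched as small string-combining functions. The clause wording and the function names are assumptions; real aggregation operates on sentence plans rather than on finished strings.

```python
def sentence(text):
    """Apply orthography: capitalize the first letter and add a full stop."""
    return text[0].upper() + text[1:] + "."


def no_aggregation(clauses):
    """One sentence per message."""
    return [sentence(clause) for clause in clauses]


def conjoin(first, second):
    """Aggregate two clauses with a conjunction."""
    return f"{first} and {second}"


def relative_clause(main, entity, predicate):
    """Attach `predicate` to `entity`, which must end the main clause."""
    if not main.endswith(entity):
        raise ValueError("entity must be the last phrase of the main clause")
    return f"{main} which {predicate}"


count = "there are 20 trains a day"
express = "the next train is the express"

print(no_aggregation([count, express]))
print(sentence(conjoin(count, express)))
print(sentence(relative_clause(express, "the express", "leaves at 10 am")))
# Combination: conjunction plus relative clause.
print(sentence(conjoin(count, relative_clause(express, "the express", "leaves at 10 am"))))
```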

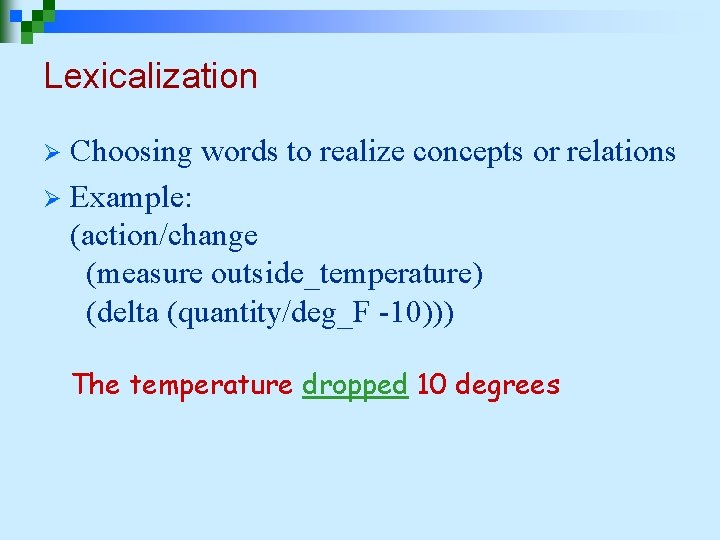

Lexicalization
• Choosing words to realize concepts or relations
• Example:
  (action/change
    (measure outside_temperature)
    (delta (quantity/deg_F -10)))
  The temperature dropped 10 degrees

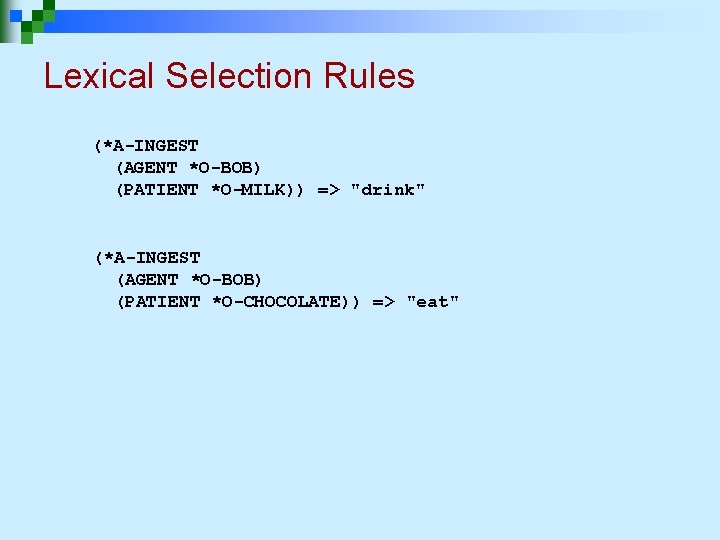

Lexical Selection Rules
(*A-INGEST (AGENT *O-BOB) (PATIENT *O-MILK)) => "drink"
(*A-INGEST (AGENT *O-BOB) (PATIENT *O-CHOCOLATE)) => "eat"
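These two rules can be read as patterns matched against a semantic frame. In the sketch below, frames are flat dictionaries and each rule lists only the slots that matter for the choice of verb; the `HEAD` key, the `RULES` table layout, and `select_lexeme` are assumptions for illustration.

```python
# Each rule: (slots that must match, lexeme to use). Layout is an assumption.
RULES = [
    ({"HEAD": "*A-INGEST", "PATIENT": "*O-MILK"}, "drink"),
    ({"HEAD": "*A-INGEST", "PATIENT": "*O-CHOCOLATE"}, "eat"),
]


def select_lexeme(frame):
    """Return the lexeme of the first rule whose constraints all hold, else None."""
    for pattern, lexeme in RULES:
        if all(frame.get(slot) == value for slot, value in pattern.items()):
            return lexeme
    return None


milk = {"HEAD": "*A-INGEST", "AGENT": "*O-BOB", "PATIENT": "*O-MILK"}
chocolate = {"HEAD": "*A-INGEST", "AGENT": "*O-BOB", "PATIENT": "*O-CHOCOLATE"}

print(select_lexeme(milk))
print(select_lexeme(chocolate))
```

The agent does not appear in the patterns because, in the two rules on the slide, only the patient distinguishes "drink" from "eat".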

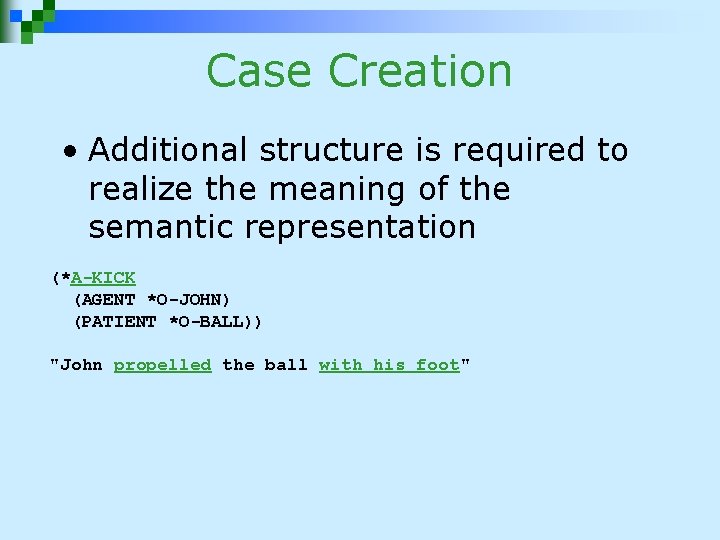

Case Creation
• Additional structure is required to realize the meaning of the semantic representation
  (*A-KICK (AGENT *O-JOHN) (PATIENT *O-BALL))
  "John propelled the ball with his foot"

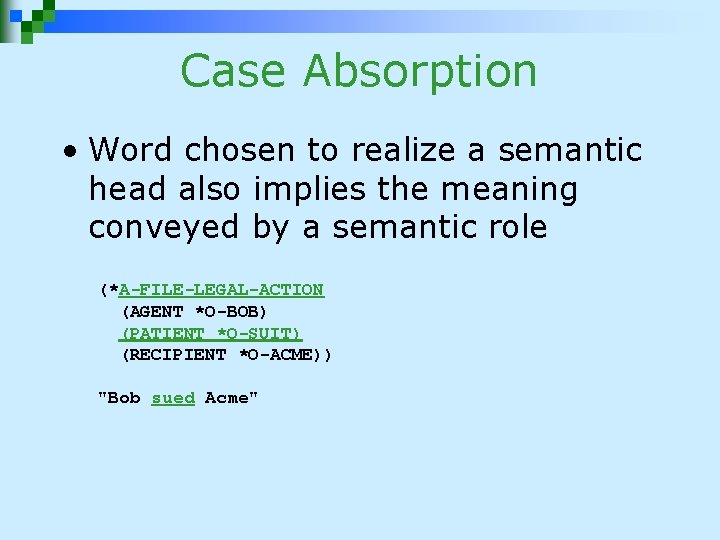

Case Absorption
• The word chosen to realize a semantic head also implies the meaning conveyed by a semantic role
  (*A-FILE-LEGAL-ACTION (AGENT *O-BOB) (PATIENT *O-SUIT) (RECIPIENT *O-ACME))
  "Bob sued Acme"

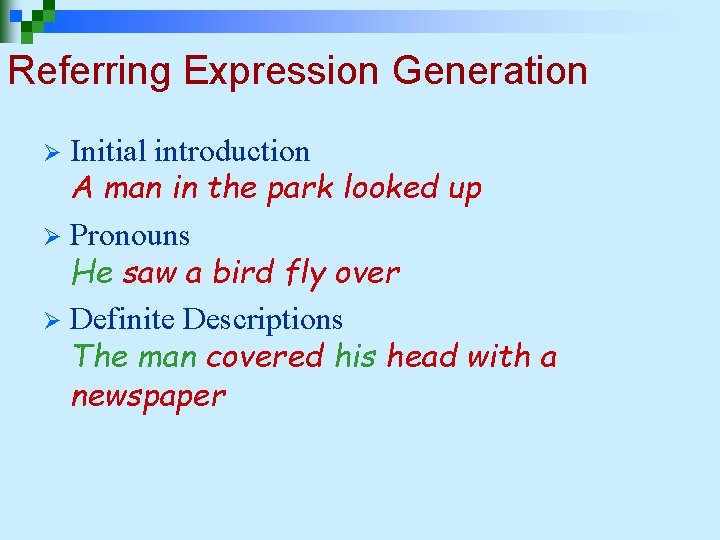

Referring Expression Generation
• Initial introduction: A man in the park looked up
• Pronouns: He saw a bird fly over
• Definite descriptions: The man covered his head with a newspaper

![Fixing Robot Text • Start [the engine]i and run [the engine]i until [the engine]i Fixing Robot Text • Start [the engine]i and run [the engine]i until [the engine]i](http://slidetodoc.com/presentation_image_h2/f025f7dc757445e106075540b2158883/image-89.jpg)

Fixing Robot Text
• Start [the engine]i and run [the engine]i until [the engine]i reaches normal operating temperature
• Start []i and run [the engine]i until [it]i reaches normal operating temperature
• The second example introduces ellipsis and anaphora
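A very simple policy for avoiding "robot text" is to use the full noun phrase for the first mention of an entity and a pronoun for later mentions. The sketch below implements only that policy; the segment format, the `("REF", entity)` marker, and `realize_mentions` are assumptions, and the result differs from the second variant on the slide, which also elides the first mention.

```python
def realize_mentions(segments, full_forms, pronouns):
    """Full noun phrase on first mention of an entity, pronoun afterwards."""
    mentioned = set()
    out = []
    for segment in segments:
        if isinstance(segment, tuple):      # ("REF", entity) marks a mention
            _, entity = segment
            if entity in mentioned:
                out.append(pronouns[entity])
            else:
                out.append(full_forms[entity])
                mentioned.add(entity)
        else:
            out.append(segment)
    return " ".join(out)


segments = [
    "Start", ("REF", "engine"),
    "and run", ("REF", "engine"),
    "until", ("REF", "engine"),
    "reaches normal operating temperature",
]

print(realize_mentions(segments, {"engine": "the engine"}, {"engine": "it"}))
```

A fuller generator would also check that the pronoun is unambiguous in context before using it, which ties back to the referential ambiguity examples earlier in the module.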

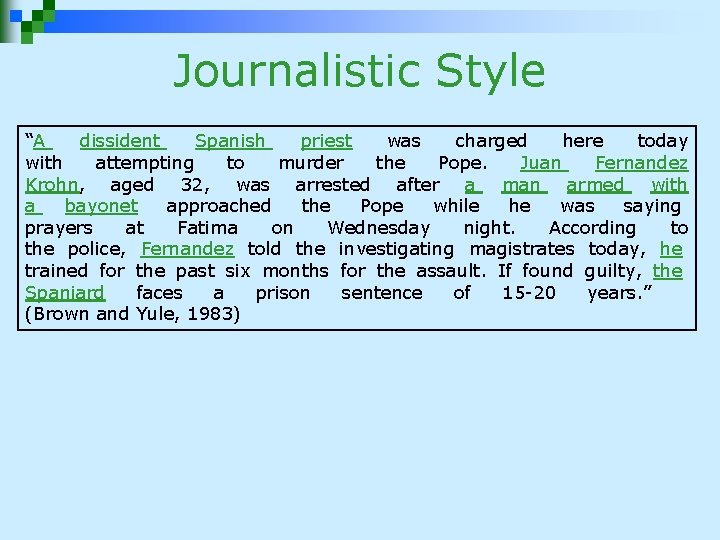

Journalistic Style
"A dissident Spanish priest was charged here today with attempting to murder the Pope. Juan Fernandez Krohn, aged 32, was arrested after a man armed with a bayonet approached the Pope while he was saying prayers at Fatima on Wednesday night. According to the police, Fernandez told the investigating magistrates today, he trained for the past six months for the assault. If found guilty, the Spaniard faces a prison sentence of 15-20 years." (Brown and Yule, 1983)

Reading/References
• Daniel Jurafsky and James H. Martin, Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics and Speech Recognition, Pearson Education, Inc, 2000.
• Kamil Wiśniewski, July 12th, 2007, Discourse Analysis. Retrieved from http://www.tlumaczenia-angielski.info/linguistics/discourse.htm on Oct 16, 2010.
• M. A. Khan, "Text Based Machine Translation System", Ph.D. Thesis, 1995.
• The Daily News, "Jolie was high on cocaine during TV interview: Former drug dealer", 22 Oct, 2010. http://dailymailnews.com/1010/22/ShowBiz/index.php?id=3
• M. A. Khan, "Machine Translation Beyond Sentence Boundaries", in Proceedings of Workshop on Proofing Tools and Language Technologies, July 1-2, Patras University, Greece. www.mabidkhan.com/.../Scientific%20Khyber,%20Vol%201,%202004.pdf
