NLP Lecture 5: Word Normalization and Stemming



Normalization
Normalization is the preprocessing of text data before storing it or performing operations on it. Text normalization transforms text into a single canonical form that it might not have had before: we recognize tokens and reduce them to a common, specific form. Words may appear in many forms, but the differences are often unimportant and we only need the base form of the word, which can be obtained by stemming or lemmatization.

Normalization
• Need to "normalize" terms
  • Information Retrieval: indexed text and query terms must have the same form.
  • We want to match U.S.A. and USA
• We implicitly define equivalence classes of terms
  • e.g., deleting periods in a term
• Alternative: asymmetric expansion:
  • Enter: window → Search: window, windows
  • Enter: windows → Search: Windows, windows, window
  • Enter: Windows → Search: Windows
• Potentially more powerful, but less efficient
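The two strategies above can be sketched in Python. This is a minimal illustration, not an IR system: the expansion table is hand-copied from the bullets, and the names `equivalence_class`, `EXPANSIONS`, and `expand_query` are hypothetical.

```python
# Equivalence classes: every term is mapped to one representative form,
# applied identically to indexed text and to queries.
def equivalence_class(term: str) -> str:
    """Map a term to its class representative by deleting periods."""
    return term.replace(".", "")

# Asymmetric expansion: each entered term lists the index terms to search.
# Hypothetical table mirroring the window/windows/Windows example.
EXPANSIONS = {
    "window": ["window", "windows"],
    "windows": ["Windows", "windows", "window"],
    "Windows": ["Windows"],
}

def expand_query(term: str) -> list[str]:
    """Return the index terms to search for an entered query term."""
    return EXPANSIONS.get(term, [term])

print(equivalence_class("U.S.A."))  # USA
print(expand_query("windows"))      # ['Windows', 'windows', 'window']
```

The expansion table must be stored and consulted at query time for every term, which is why it is more flexible but less efficient than collapsing terms once into a single class.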

Normalization
Countries
• the US -> USA
• U.S.A. -> USA
Organizations
• UN -> United Nations
Values
• Sometimes we want to enforce a specific format on values of certain types, e.g.:
  • phones (+7 (800) 1231, 8-800-1231 => 0078001231231)
  • dates, times (e.g. 25 June 2015, 25.06.15 => 2015.06.25)
  • currency ($400 => 400 dollars)
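Enforcing a canonical format is usually done with pattern matching. The sketch below, under stated assumptions, handles only the exact date and currency shapes shown above plus a lookup table for names; the helper names and the `ALIASES` table are hypothetical, and it assumes two-digit years belong to the 2000s.

```python
import re

MONTHS = {name: i for i, name in enumerate(
    ["January", "February", "March", "April", "May", "June", "July",
     "August", "September", "October", "November", "December"], start=1)}

# Hypothetical alias table for country and organization names.
ALIASES = {"the US": "USA", "U.S.A.": "USA", "UN": "United Nations"}

def normalize_alias(text):
    """Replace a known variant name with its canonical form."""
    return ALIASES.get(text, text)

def normalize_date(text):
    """Rewrite '25 June 2015' or '25.06.15' as 'YYYY.MM.DD'; leave other text unchanged."""
    m = re.fullmatch(r"(\d{1,2}) ([A-Z][a-z]+) (\d{4})", text)
    if m and m.group(2) in MONTHS:
        day, month, year = int(m.group(1)), MONTHS[m.group(2)], int(m.group(3))
        return f"{year:04d}.{month:02d}.{day:02d}"
    m = re.fullmatch(r"(\d{1,2})\.(\d{1,2})\.(\d{2})", text)
    if m:
        # Assumption: a two-digit year means 20YY.
        day, month, year = int(m.group(1)), int(m.group(2)), 2000 + int(m.group(3))
        return f"{year:04d}.{month:02d}.{day:02d}"
    return text

def normalize_currency(text):
    """Rewrite dollar amounts such as '$400' as '400 dollars'."""
    return re.sub(r"\$(\d+(?:\.\d+)?)", r"\1 dollars", text)

print(normalize_date("25 June 2015"))  # 2015.06.25
print(normalize_date("25.06.15"))      # 2015.06.25
print(normalize_currency("$400"))      # 400 dollars
```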

Normalization
Often we don't care about the specific value, only what the value means, so we can normalize values to class labels:
• $400 => MONEY
• email@gmail.com => EMAIL
• 25 June 2015 => DATE
• +7 (800) 1231 => PHONE, etc.
Spelling corrections:
• Natural-language text also contains spelling mistakes, and in many applications it is useful to correct them, e.g. infromation -> information
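Both ideas on this slide can be sketched briefly in Python. The regular expressions are hypothetical and cover only the example formats above; the spelling corrector is a swapped-in, deliberately simple technique (pick the known word with the smallest Levenshtein edit distance), not a method taken from the lecture.

```python
import re

# Hypothetical patterns for illustration only; real systems need far more robust ones.
CLASS_PATTERNS = [
    ("EMAIL", r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    ("MONEY", r"\$\d+(?:\.\d+)?"),
    ("DATE", r"\d{1,2} (?:January|February|March|April|May|June|July"
             r"|August|September|October|November|December) \d{4}"),
    ("PHONE", r"\+\d+ \(\d+\) \d+"),
]

def replace_with_classes(text):
    """Replace each matched value with its class label."""
    for label, pattern in CLASS_PATTERNS:
        text = re.sub(pattern, label, text)
    return text

def edit_distance(a, b):
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,               # delete ca
                           cur[j - 1] + 1,            # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]

def correct(word, vocabulary):
    """Return word if known, else the closest vocabulary entry by edit distance."""
    if word in vocabulary:
        return word
    return min(sorted(vocabulary), key=lambda v: edit_distance(word, v))

print(replace_with_classes("Pay $400 by 25 June 2015, call +7 (800) 1231"))
# Pay MONEY by DATE, call PHONE
print(correct("infromation", ["inflation", "information", "formation"]))
# information
```

Plain Levenshtein distance counts the swapped "ro" in "infromation" as two substitutions; a corrector that also allows transpositions would score it as one edit.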

Case folding
• Applications like IR: reduce all letters to lower case
  • Since users tend to use lower case
  • Possible exception: upper case in mid-sentence?
    • e.g., General Motors
    • Fed vs. fed
    • SAIL vs. sail
• For sentiment analysis, MT, and information extraction
  • Case is helpful (US versus us is important)
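The mid-sentence exception can be sketched as a simple heuristic in Python. It assumes the input is one already-tokenized sentence, and `case_fold` is a hypothetical name; note that it still lowercases a proper noun that happens to start the sentence.

```python
def case_fold(tokens):
    """Lowercase tokens, but keep capitalized words that appear mid-sentence.

    Assumption: tokens is a single sentence, so index 0 is sentence-initial.
    """
    folded = []
    for i, tok in enumerate(tokens):
        if i > 0 and tok[:1].isupper():
            folded.append(tok)          # mid-sentence capital: possibly a name (Fed, General Motors)
        else:
            folded.append(tok.lower())  # sentence-initial or already lowercase
    return folded

print(case_fold(["The", "Fed", "raised", "rates"]))
# ['the', 'Fed', 'raised', 'rates']
```

Plain IR-style folding is simply `[t.lower() for t in tokens]`, which merges Fed and fed.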

Lemmatization
• Reduce inflections or variant forms to the base form
  • am, are, is → be
  • car, cars, car's, cars' → car
• Lemmatization: keeping only the lemma
  • produce, produces, produced, producing → produce (derived words such as production keep their own lemma)
  • the boy's cars are different colors → the boy car be different color
• Lemmatization: have to find the correct dictionary headword form
• Machine translation
  • Spanish quiero ('I want') and quieres ('you want') have the same lemma as querer ('want')
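Because lemmatization must return a real dictionary headword, the simplest sketch is a lookup table. The tiny `LEMMAS` dictionary below is hypothetical and covers only the examples above; real lemmatizers use a full lexicon and usually part-of-speech tags to disambiguate.

```python
# Hypothetical miniature lemma dictionary built from the slide's examples.
LEMMAS = {
    "am": "be", "are": "be", "is": "be",
    "cars": "car", "car's": "car", "cars'": "car",
    "boy's": "boy", "colors": "color",
    "quiero": "querer", "quieres": "querer",  # Spanish: 'I want', 'you want'
}

def lemmatize(tokens):
    """Map each (lowercased) token to its headword; unknown tokens map to themselves."""
    return [LEMMAS.get(tok.lower(), tok.lower()) for tok in tokens]

print(" ".join(lemmatize("the boy's cars are different colors".split())))
# the boy car be different color
```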

Morphology
• Morphemes: the small meaningful units that make up words
• Stems: the core meaning-bearing units
• Affixes: bits and pieces that adhere to stems
  • Often with grammatical functions

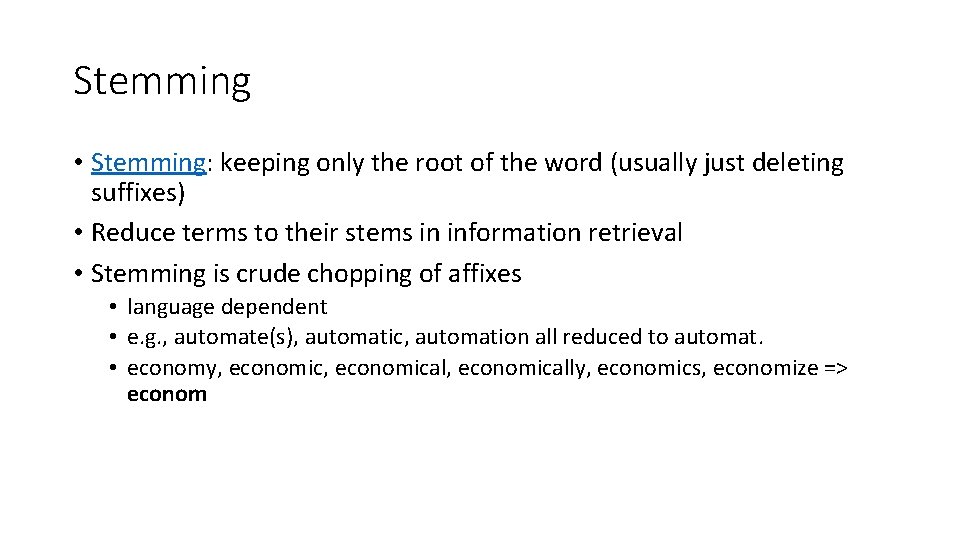

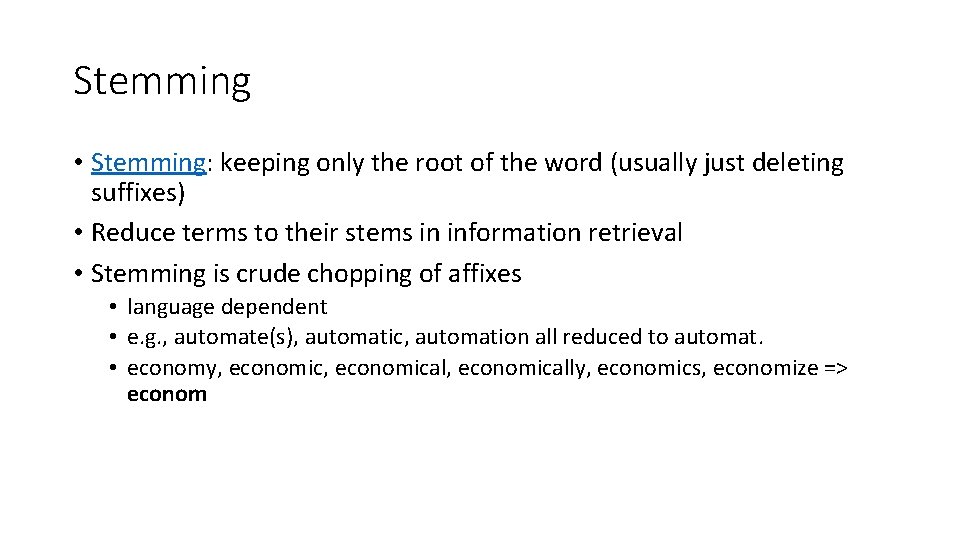

Stemming
• Stemming: keeping only the root of the word (usually just deleting suffixes)
• Reduce terms to their stems in information retrieval
• Stemming is crude chopping of affixes
  • language dependent
  • e.g., automate(s), automatic, automation all reduced to automat
  • economy, economical, economically, economics, economize => econom
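"Crude chopping" can be sketched as trying a fixed list of suffixes and deleting the first one that matches. The suffix list and the minimum stem length of 3 are hypothetical choices made so the slide's examples come out as shown; this is not a published stemmer.

```python
# Hypothetical suffix list, tried longest first so "ically" wins over "ic".
SUFFIXES = ["ically", "ical", "ics", "ion", "ize", "ic", "es", "s", "e", "y"]

def crude_stem(word, min_stem=3):
    """Delete the first matching suffix, as long as at least min_stem letters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

print([crude_stem(w) for w in ["automate", "automates", "automatic", "automation"]])
# ['automat', 'automat', 'automat', 'automat']
print([crude_stem(w) for w in ["economy", "economical", "economically", "economics", "economize"]])
# ['econom', 'econom', 'econom', 'econom', 'econom']
```

The stems are not words, which is fine for IR matching: only the index and the query have to agree.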

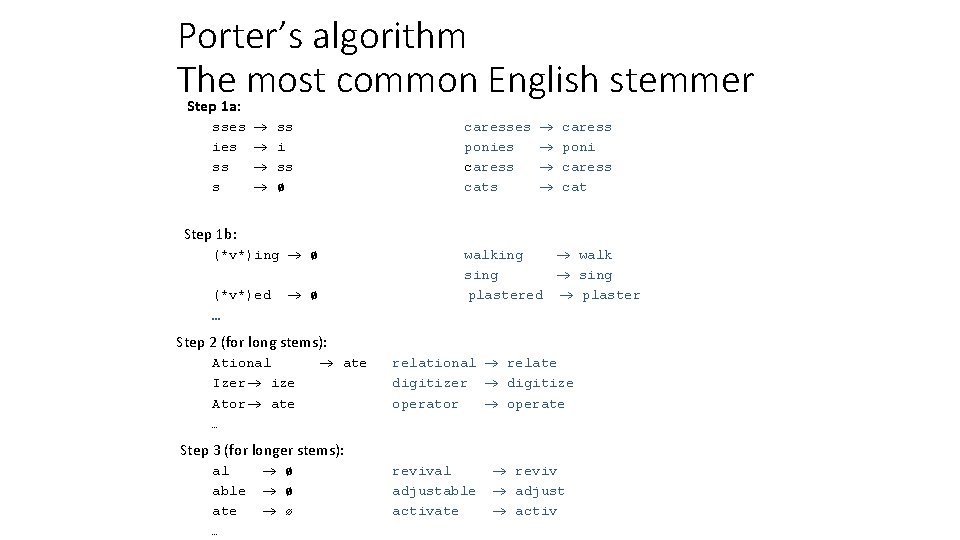

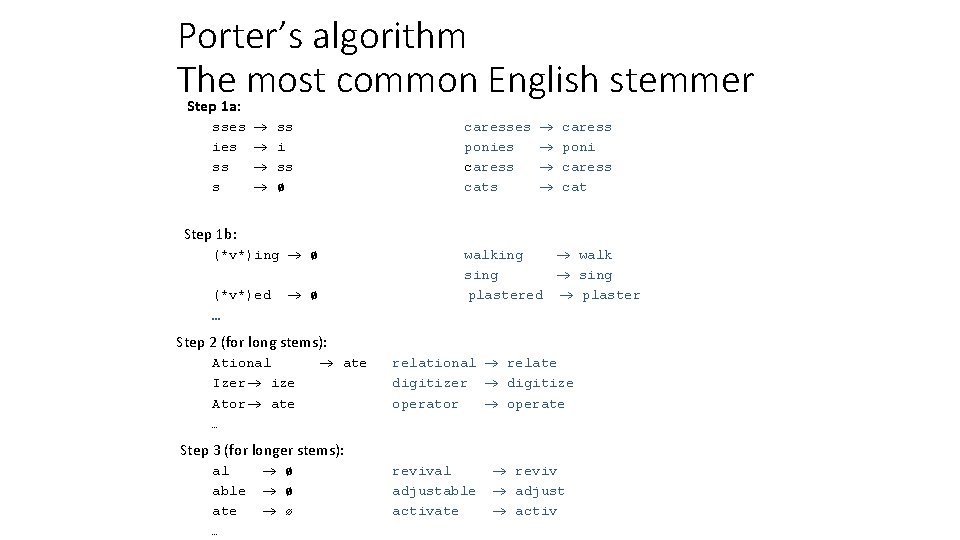

Porter's algorithm: the most common English stemmer
Step 1a:
• sses → ss (caresses → caress)
• ies → i (ponies → poni)
• ss → ss (caress → caress)
• s → ø (cats → cat)
Step 1b:
• (*v*)ing → ø (walking → walk, sing → sing)
• (*v*)ed → ø (plastered → plaster)
• …
Step 2 (for long stems):
• ational → ate (relational → relate)
• izer → ize (digitizer → digitize)
• ator → ate (operator → operate)
• …
Step 3 (for longer stems):
• al → ø (revival → reviv)
• able → ø (adjustable → adjust)
• ate → ø (activate → activ)
• …
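The rules listed above can be sketched in Python. This covers only the rules printed on the slide, not the full Porter stemmer, which has more steps and many more suffixes. "(*v*)" is read as "the remaining stem contains a vowel". "Long" and "longer" stems are read through Porter's measure m (the number of vowel-consonant sequences in the stem) as m > 0 and m > 1, the conditions Porter uses for these rules. All function names are hypothetical; for real use, NLTK ships a complete implementation as `nltk.stem.PorterStemmer`.

```python
import re

def is_vowel(word, i):
    """Porter's definition: a, e, i, o, u, and y when preceded by a consonant."""
    ch = word[i]
    if ch in "aeiou":
        return True
    if ch == "y":
        return i > 0 and not is_vowel(word, i - 1)
    return False

def contains_vowel(stem):
    return any(is_vowel(stem, i) for i in range(len(stem)))

def measure(stem):
    """Porter's m: the number of vowel-run + consonant-run (VC) sequences."""
    pattern = "".join("v" if is_vowel(stem, i) else "c" for i in range(len(stem)))
    return len(re.findall(r"v+c+", pattern))

def step1a(word):
    for suffix, repl in [("sses", "ss"), ("ies", "i"), ("ss", "ss"), ("s", "")]:
        if word.endswith(suffix):
            return word[: -len(suffix)] + repl
    return word

def step1b(word):
    # (*v*)ing -> ø and (*v*)ed -> ø: strip only if the stem contains a vowel.
    for suffix in ("ing", "ed"):
        if word.endswith(suffix):
            stem = word[: -len(suffix)]
            return stem if contains_vowel(stem) else word
    return word

STEP2 = [("ational", "ate"), ("izer", "ize"), ("ator", "ate")]  # subset from the slide
STEP3 = [("able", ""), ("ate", ""), ("al", "")]                  # subset from the slide

def apply_rules(word, rules, min_m):
    """Apply the first rule whose suffix matches, if the stem's measure exceeds min_m."""
    for suffix, repl in rules:
        if word.endswith(suffix):
            stem = word[: -len(suffix)]
            return stem + repl if measure(stem) > min_m else word
    return word

def step2(word):
    return apply_rules(word, STEP2, 0)   # long stems: m > 0

def step3(word):
    return apply_rules(word, STEP3, 1)   # longer stems: m > 1

def porter_sketch(word):
    return step3(step2(step1b(step1a(word))))

print([step1a(w) for w in ["caresses", "ponies", "caress", "cats"]])
# ['caress', 'poni', 'caress', 'cat']
print([step1b(w) for w in ["walking", "sing", "plastered"]])
# ['walk', 'sing', 'plaster']
```

Chaining the steps can strip more than any single step: in this sketch `porter_sketch("operator")` first becomes "operate" in Step 2 and then "oper" in Step 3, because "oper" has m = 2.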

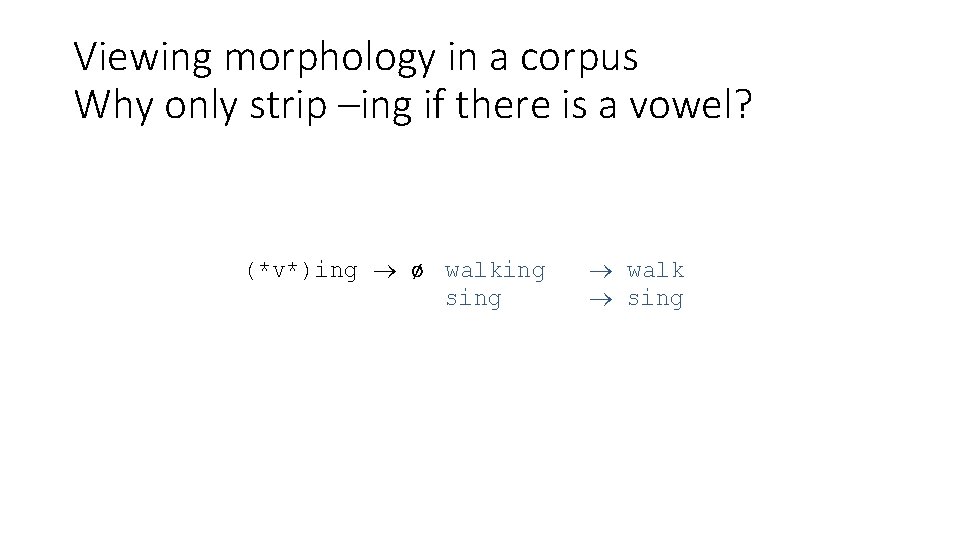


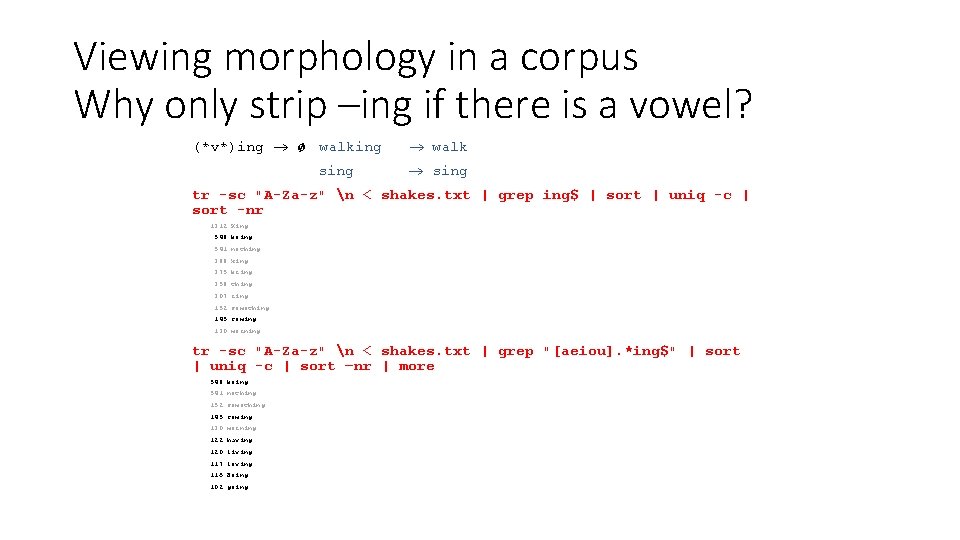

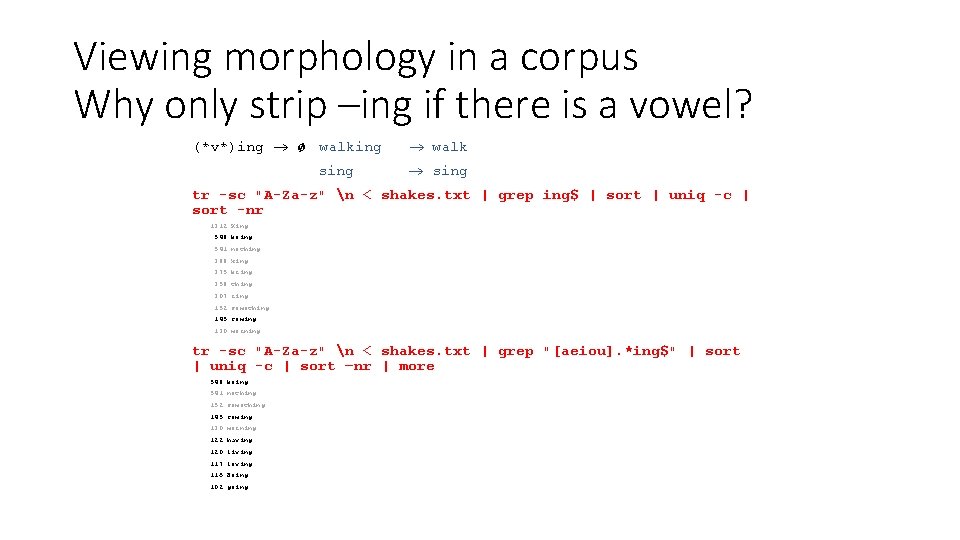

Viewing morphology in a corpus
Why only strip -ing if there is a vowel?
• (*v*)ing → ø: walking → walk, but sing → sing
All words ending in -ing in Shakespeare:
tr -sc 'A-Za-z' '\n' < shakes.txt | grep 'ing$' | sort | uniq -c | sort -nr
Output: 1312 King, 548 being, 541 nothing, 388 king, 375 bring, 358 thing, 307 ring, 152 something, 145 coming, 130 morning
Only words with a vowel before -ing:
tr -sc 'A-Za-z' '\n' < shakes.txt | grep '[aeiou].*ing$' | sort | uniq -c | sort -nr | more
Output: 548 being, 541 nothing, 152 something, 145 coming, 130 morning, 122 having, 120 living, 117 loving, 116 Being, 102 going
Requiring a vowel filters out words such as King, king, bring, thing, and ring, where -ing is not a suffix.
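The same experiment can be mirrored in Python on any text. A minimal sketch, assuming a short made-up sample in place of shakes.txt; `ing_counts` is a hypothetical helper, `re.findall(r"[A-Za-z]+", ...)` plays the role of `tr -sc 'A-Za-z' '\n'`, and `Counter.most_common()` plays the role of `sort | uniq -c | sort -nr`.

```python
import re
from collections import Counter

def ing_counts(text, require_vowel):
    """Count words ending in -ing, optionally only those with a vowel before the -ing."""
    words = re.findall(r"[A-Za-z]+", text)               # split on non-letters
    pattern = r"[aeiou].*ing$" if require_vowel else r"ing$"
    return Counter(w for w in words if re.search(pattern, w))

sample = "The King was singing and bringing nothing to the ring, being king of nothing."
print(ing_counts(sample, require_vowel=False).most_common())
print(ing_counts(sample, require_vowel=True).most_common())
```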

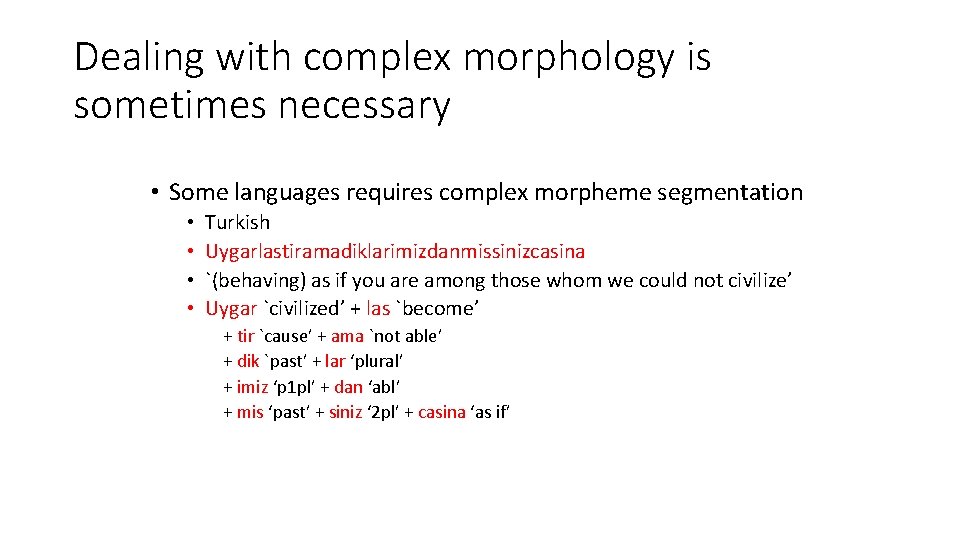

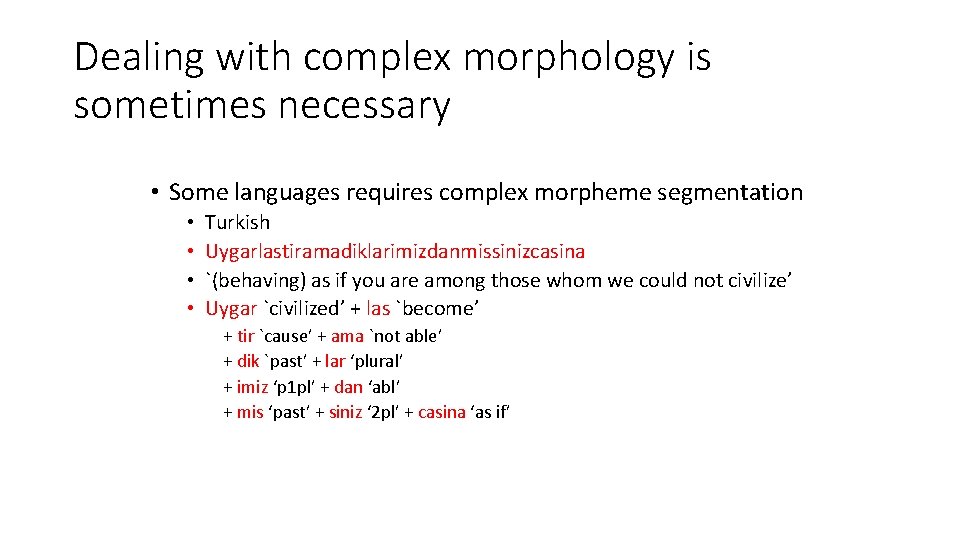

Dealing with complex morphology is sometimes necessary
• Some languages require complex morpheme segmentation
  • Turkish: Uygarlastiramadiklarimizdanmissinizcasina
    '(behaving) as if you are among those whom we could not civilize'
    Uygar 'civilized' + las 'become' + tir 'cause' + ama 'not able' + dik 'past' + lar 'plural' + imiz 'p1pl' + dan 'abl' + mis 'past' + siniz '2pl' + casina 'as if'
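Given a morpheme lexicon, segmentation can be sketched as a depth-first search over prefixes that backtracks when the rest of the word cannot be segmented. The lexicon below is hypothetical: it contains only the morphemes from the gloss above. A real Turkish analyzer also needs vowel harmony and other morphophonological rules, which this ignores.

```python
# Hypothetical lexicon: only the morphemes from the gloss, with their labels.
LEXICON = {
    "uygar": "civilized", "las": "become", "tir": "cause", "ama": "not able",
    "dik": "past", "lar": "plural", "imiz": "p1pl", "dan": "abl",
    "mis": "past", "siniz": "2pl", "casina": "as if",
}

def segment(word, lexicon):
    """Return a list of lexicon morphemes that concatenate to word, or None."""
    if word == "":
        return []
    for end in range(len(word), 0, -1):          # try longer prefixes first
        prefix = word[:end]
        if prefix in lexicon:
            rest = segment(word[end:], lexicon)  # backtrack if the rest fails
            if rest is not None:
                return [prefix] + rest
    return None

morphs = segment("Uygarlastiramadiklarimizdanmissinizcasina".lower(), LEXICON)
print(" + ".join(f"{m} '{LEXICON[m]}'" for m in morphs))
```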

Sentence Segmentation and Decision Trees

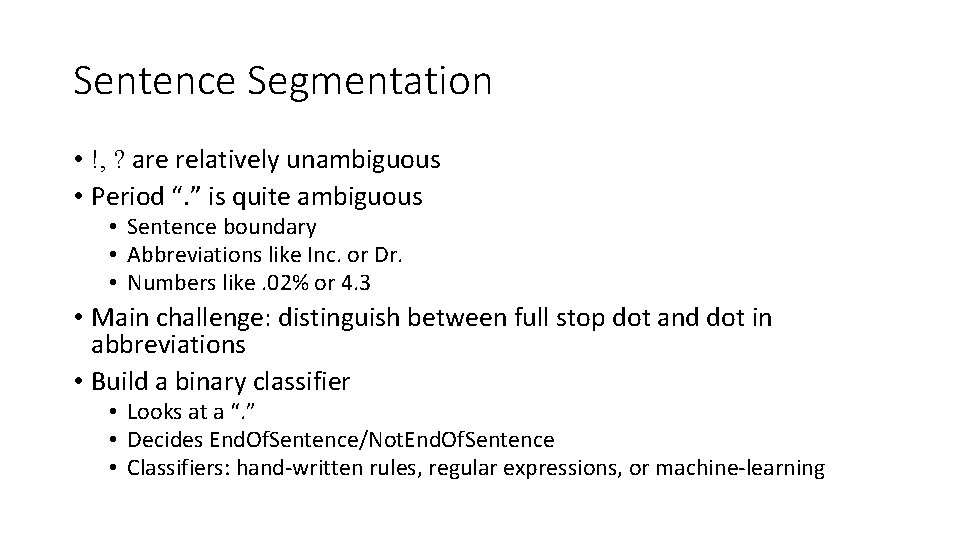

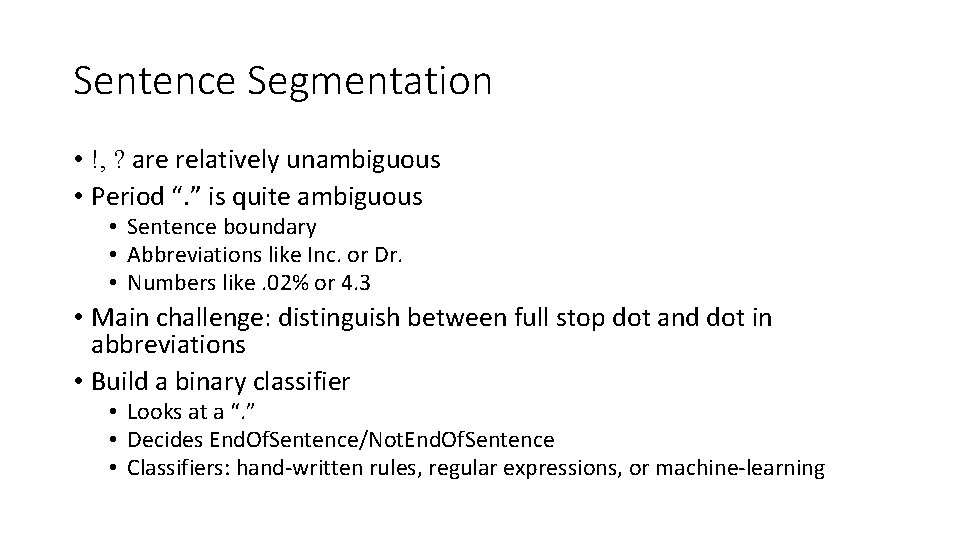

Sentence Segmentation
• !, ? are relatively unambiguous
• Period "." is quite ambiguous
  • Sentence boundary
  • Abbreviations like Inc. or Dr.
  • Numbers like .02% or 4.3
• Main challenge: distinguish a period that ends a sentence from a period in an abbreviation
• Build a binary classifier
  • Looks at a "."
  • Decides EndOfSentence/NotEndOfSentence
  • Classifiers: hand-written rules, regular expressions, or machine learning
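A hand-written-rules classifier of this kind can be sketched in a few lines. Everything here is an assumption for illustration: whitespace tokenization, the small `ABBREVIATIONS` set, and the rule that a following lowercase word means the period is not a sentence end.

```python
# Hypothetical abbreviation list; a real system would use a much larger one.
ABBREVIATIONS = {"inc.", "dr.", "mr.", "mrs.", "e.g.", "i.e.", "etc."}

def is_end_of_sentence(tokens, i):
    """Binary decision for tokens[i]: True = EndOfSentence, False = NotEndOfSentence."""
    tok = tokens[i]
    if tok.endswith(("!", "?")):
        return True                  # relatively unambiguous
    if not tok.endswith("."):
        return False                 # no final period (also covers 4.3 and .02%)
    if tok.lower() in ABBREVIATIONS:
        return False                 # known abbreviation such as Dr. or Inc.
    if i + 1 < len(tokens) and tokens[i + 1][:1].islower():
        return False                 # next word is lowercase: sentence probably continues
    return True

def split_sentences(text):
    tokens = text.split()
    sentences, current = [], []
    for i, tok in enumerate(tokens):
        current.append(tok)
        if is_end_of_sentence(tokens, i):
            sentences.append(" ".join(current))
            current = []
    if current:
        sentences.append(" ".join(current))
    return sentences

print(split_sentences("Dr. Smith joined Acme Inc. in 2015. Revenue grew 4.3 percent! Did it?"))
```

Such rules fail exactly where the slide says the period is ambiguous, e.g. an abbreviation like Inc. that also ends a sentence.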

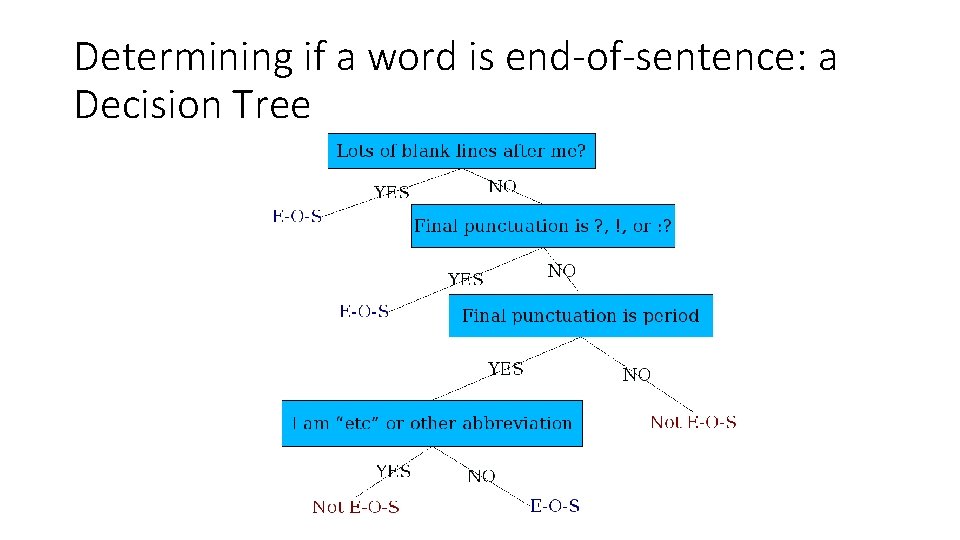

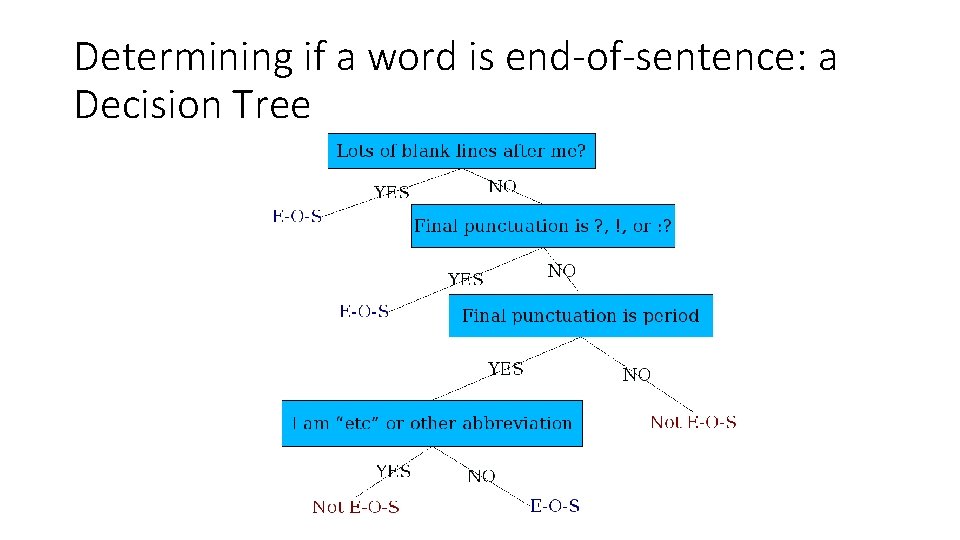

Determining if a word is end-of-sentence: a Decision Tree

More sophisticated decision tree features
• Case of word with ".": Upper, Lower, Cap, Number
• Case of word after ".": Upper, Lower, Cap, Number
• Numeric features
  • Length of word with "."
  • Probability(word with "." occurs at end of sentence)
  • Probability(word after "." occurs at beginning of sentence)
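Extracting these features for one period can be sketched as below. The probability tables `eos_prob` and `bos_prob` are hypothetical inputs that would be estimated from a training corpus; the "Other" case label and measuring length without the final period are added assumptions.

```python
def word_case(word):
    """Classify a word as Upper, Lower, Cap, or Number ("Other" if none applies)."""
    core = word.strip(".,!?;:\"'")
    if core.replace(".", "").replace(",", "").isdigit():
        return "Number"
    if core.isupper():
        return "Upper"
    if core.islower():
        return "Lower"
    if core[:1].isupper():
        return "Cap"
    return "Other"

def period_features(tokens, i, eos_prob, bos_prob):
    """Feature dict for the '.'-bearing token tokens[i].

    eos_prob / bos_prob: hypothetical tables of P(word ends a sentence) and
    P(word starts a sentence), keyed by lowercased word.
    """
    word = tokens[i]
    nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
    return {
        "case_word": word_case(word),
        "case_next": word_case(nxt) if nxt else "None",
        "length_word": len(word.rstrip(".")),
        "p_word_eos": eos_prob.get(word.lower(), 0.0),
        "p_next_bos": bos_prob.get(nxt.lower(), 0.0),
    }

print(period_features(["Dr.", "Smith", "arrived."], 0, {"dr.": 0.01}, {"smith": 0.05}))
```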

Implementing Decision Trees
• A decision tree is just an if-then-else statement
• The interesting research is choosing the features
• Setting up the structure is often too hard to do by hand
  • Hand-building is only possible for very simple features and domains
  • For numeric features, it's too hard to pick each threshold
• Instead, the structure is usually learned by machine learning from a training corpus
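To make "just an if-then-else statement" concrete, here is a hypothetical hand-built tree over a feature dictionary with the keys `case_word`, `case_next`, `length_word`, and `p_word_eos`. The questions and the thresholds (3 letters, 0.1) are made up, which is exactly the difficulty the slide describes: a learner such as scikit-learn's `DecisionTreeClassifier` would choose them from labeled data instead.

```python
def classify_period(features):
    """Hand-written decision tree; every threshold here is a guess."""
    if features["case_next"] == "Lower":
        return "NotEndOfSentence"        # sentence seems to continue
    if features["case_word"] == "Number":
        return "EndOfSentence"           # e.g. "... in 2015."
    if features["length_word"] <= 3 and features["case_word"] in ("Cap", "Upper"):
        # Short capitalized words (Dr, Inc) are often abbreviations.
        if features["p_word_eos"] < 0.1:
            return "NotEndOfSentence"
    return "EndOfSentence"

print(classify_period({"case_word": "Cap", "case_next": "Cap",
                       "length_word": 2, "p_word_eos": 0.01}))
# NotEndOfSentence
```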

Decision Trees and other classifiers
• We can think of the questions in a decision tree as features that could be exploited by any kind of classifier:
  • Logistic regression
  • SVM (Support Vector Machine)
  • Neural nets
  • etc.
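As an illustration of reusing the tree's questions in another classifier, here is a logistic-regression scorer over the same kind of feature dictionary. The weights are hypothetical placeholders; in practice they are learned from a labeled corpus. The model outputs P(EndOfSentence) = sigmoid(bias + sum of weight times feature).

```python
import math

# Hypothetical weights; a trained model would learn these.
WEIGHTS = {
    "bias": 1.0,
    "case_next=Lower": -3.0,
    "case_word=Cap": -0.5,
    "short_word": -1.0,
    "p_word_eos": 4.0,
}

def p_end_of_sentence(features):
    """Sigmoid of a weighted sum of the same questions a decision tree would ask."""
    z = WEIGHTS["bias"]
    z += WEIGHTS["case_next=Lower"] * (features["case_next"] == "Lower")
    z += WEIGHTS["case_word=Cap"] * (features["case_word"] == "Cap")
    z += WEIGHTS["short_word"] * (features["length_word"] <= 3)
    z += WEIGHTS["p_word_eos"] * features["p_word_eos"]
    return 1.0 / (1.0 + math.exp(-z))

dr = {"case_word": "Cap", "case_next": "Cap", "length_word": 2, "p_word_eos": 0.01}
print(round(p_end_of_sentence(dr), 3))  # below 0.5: NotEndOfSentence
```

Unlike the tree, every feature contributes to every decision, and no threshold has to be picked by hand.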

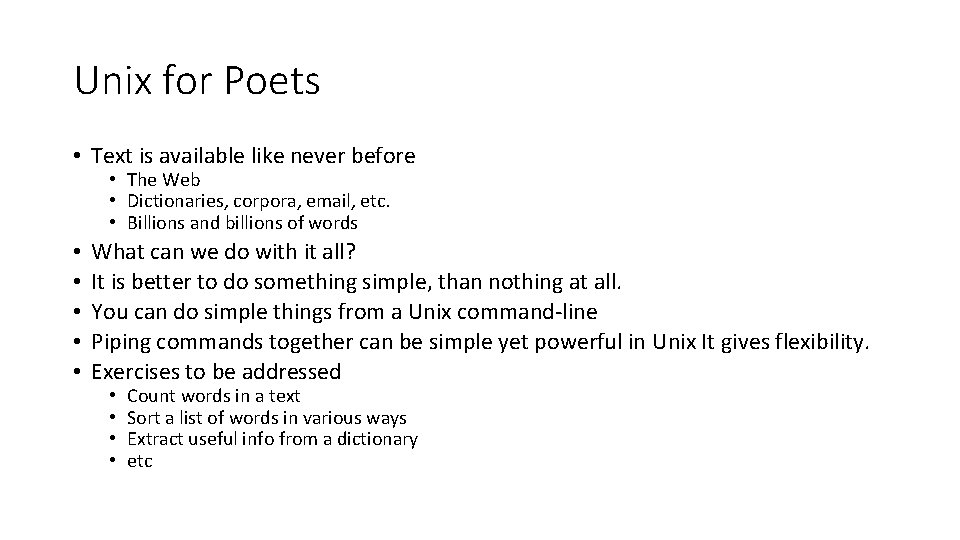

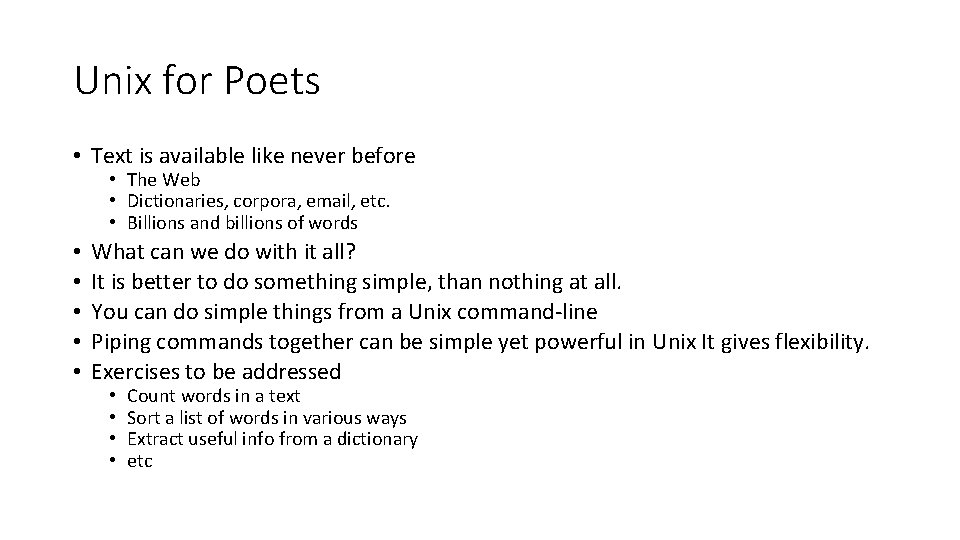

Unix for Poets

Unix for Poets
• Text is available like never before
  • The Web
  • Dictionaries, corpora, email, etc.
  • Billions and billions of words
• What can we do with it all?
  • It is better to do something simple than nothing at all.
  • You can do simple things from a Unix command line.
  • Piping commands together can be simple yet powerful, and it gives flexibility.
• Exercises to be addressed
  • Count words in a text
  • Sort a list of words in various ways
  • Extract useful info from a dictionary, etc.

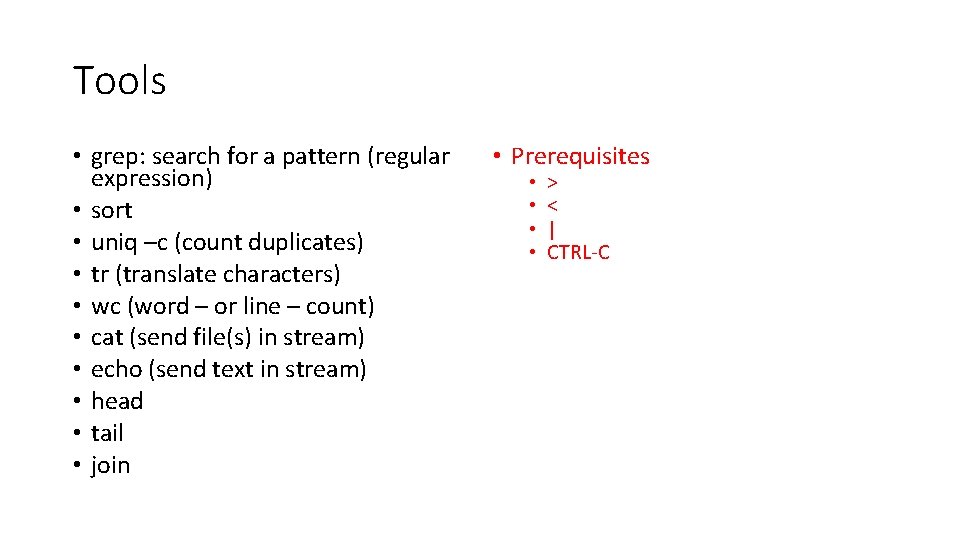

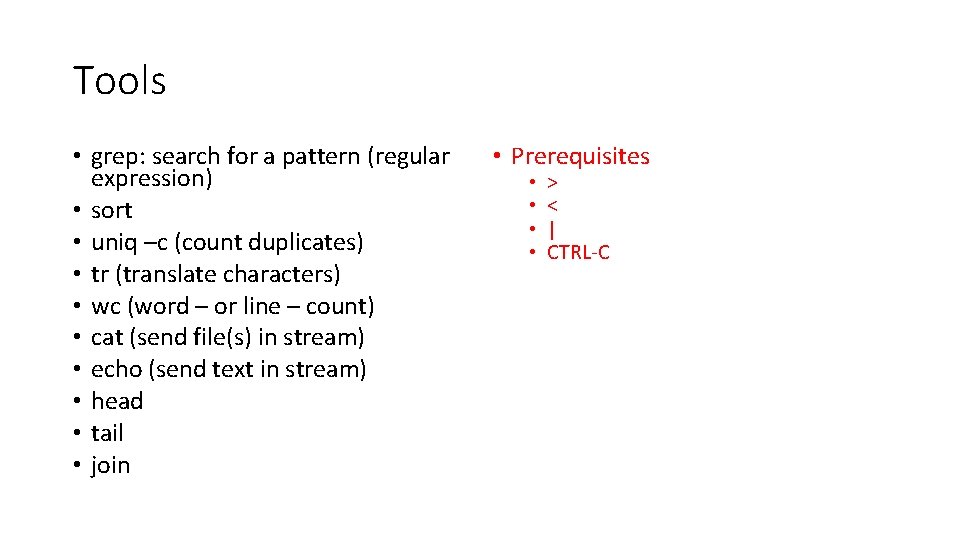

Tools
• grep: search for a pattern (regular expression)
• sort
• uniq -c (count duplicates)
• tr (translate characters)
• wc (word or line count)
• cat (send file(s) in stream)
• echo (send text in stream)
• head
• tail
• join
• Prerequisites
  • > < |
  • CTRL-C

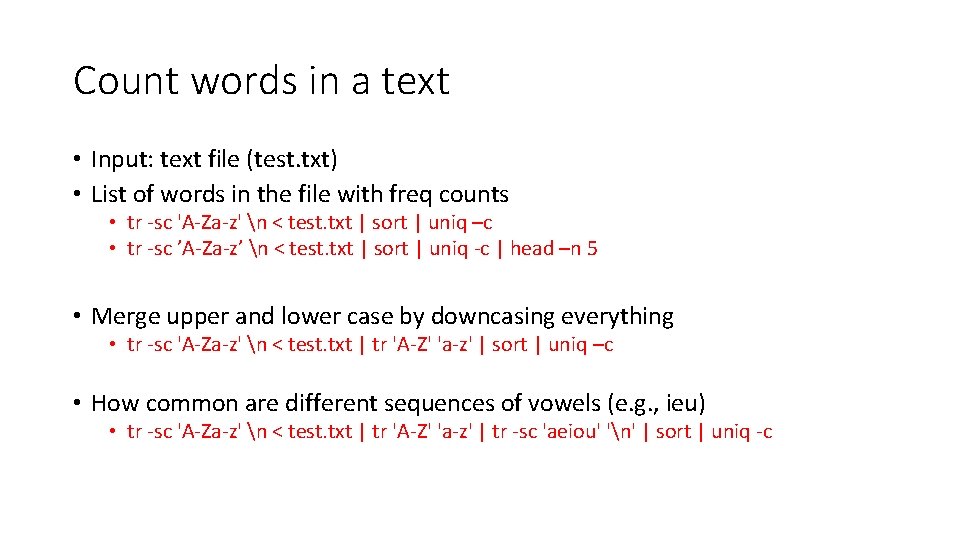

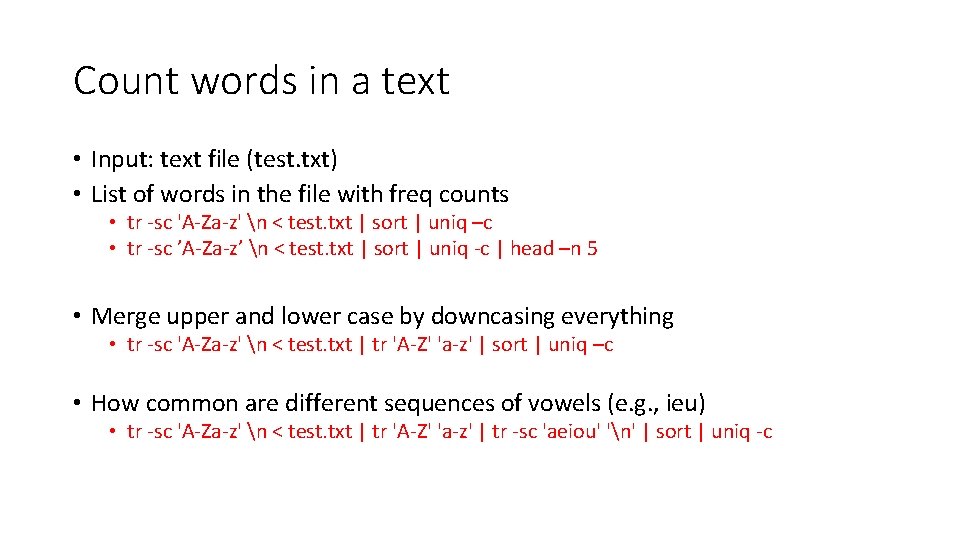

Count words in a text
• Input: text file (test.txt)
• Output: list of words in the file with frequency counts
  • tr -sc 'A-Za-z' '\n' < test.txt | sort | uniq -c
    (-c complements the letter set, so every non-letter becomes a newline; -s squeezes repeated newlines into one)
  • tr -sc 'A-Za-z' '\n' < test.txt | sort | uniq -c | head -n 5
• Merge upper and lower case by downcasing everything
  • tr -sc 'A-Za-z' '\n' < test.txt | tr 'A-Z' 'a-z' | sort | uniq -c
• How common are different sequences of vowels (e.g., ieu)?
  • tr -sc 'A-Za-z' '\n' < test.txt | tr 'A-Z' 'a-z' | tr -sc 'aeiou' '\n' | sort | uniq -c
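For comparison, the same counts can be sketched in Python. This is an approximate equivalent, not a byte-for-byte one; `word_counts` and `vowel_sequence_counts` are hypothetical names, and a regular expression over letters stands in for the `tr -sc` steps.

```python
import re
from collections import Counter

def word_counts(text, lowercase=False):
    # Like: tr -sc 'A-Za-z' '\n' < test.txt [| tr 'A-Z' 'a-z'] | sort | uniq -c
    words = re.findall(r"[A-Za-z]+", text)
    if lowercase:
        words = [w.lower() for w in words]
    return Counter(words)

def vowel_sequence_counts(text):
    # Like: ... | tr 'A-Z' 'a-z' | tr -sc 'aeiou' '\n' | sort | uniq -c
    return Counter(re.findall(r"[aeiou]+", text.lower()))

text = "The cat saw the Cat. The end."
counts = word_counts(text, lowercase=True)
print(sorted(counts.items())[:5])   # alphabetical, like sort | uniq -c | head -n 5
print(counts.most_common(1))        # most frequent first, like ... | sort -nr
```

Note that `sort | uniq -c | head -n 5` shows the first five words in sorted order, not the five most frequent; adding `sort -nr` before `head` gives the latter.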

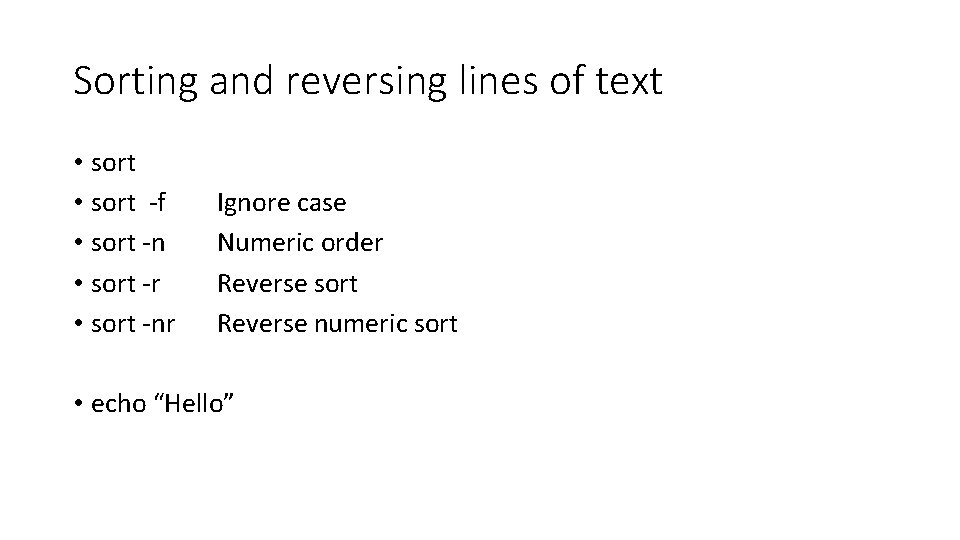

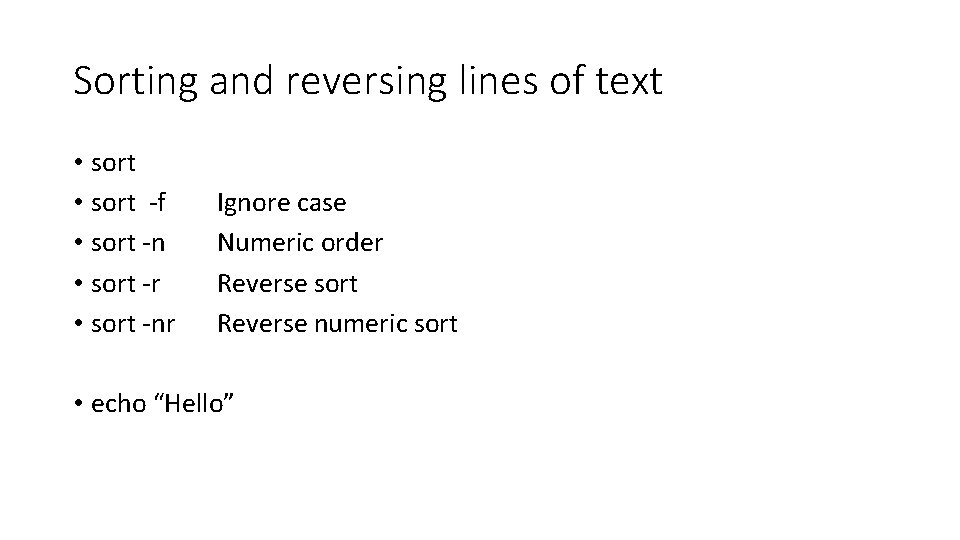

Sorting and reversing lines of text
• sort -f: ignore case
• sort -n: numeric order
• sort -r: reverse sort
• sort -nr: reverse numeric sort
• echo "Hello"
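The four `sort` options can be approximated with Python's `sorted`. This sketch assumes C-locale (byte-order) comparison, which is what Python's default string ordering matches; under other locales GNU sort may order lines differently. The numeric key reads the leading number of each line, roughly as `sort -n` does, and treats lines without one as 0. `sort_lines` is a hypothetical helper.

```python
import re

def sort_lines(lines, ignore_case=False, numeric=False, reverse=False):
    """Approximate sort, sort -f, sort -n, sort -r, and sort -nr."""
    if numeric:
        def key(line):
            m = re.match(r"\s*(-?\d+(?:\.\d+)?)", line)
            return float(m.group(1)) if m else 0.0
    elif ignore_case:
        key = str.lower
    else:
        key = None
    return sorted(lines, key=key, reverse=reverse)

print(sort_lines(["banana", "Cherry", "apple"]))                    # sort
print(sort_lines(["banana", "Cherry", "apple"], ignore_case=True))  # sort -f
print(sort_lines(["  548 being", " 1312 King", "  541 nothing"],
                 numeric=True, reverse=True))                       # sort -nr
```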