NLI: Identifying the CHARACTERistics of a Native Language

- Slides: 14


Introduction

We show that it is possible to learn to identify the mother tongue of TOEFL test takers from the content of the essays they wrote. Our method uses a character-level model based on convolutional neural networks to predict the most probable native language from a set of 11 possibilities.

Background

Initial Thoughts
• How would a human approach this task?
• Misspellings: are they specific to each native language?
• Words or characters?

Character Embedding Motivation
• NLI Shared Task: Groningen placed 3rd using character n-grams of length 1 to 9
• Google Brain (2016): evaluated on the 1B Word Benchmark; outperformed state-of-the-art word embedding techniques
• Character embeddings capture complex aspects of language
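The Groningen system mentioned above used character n-grams of length 1 to 9 as features. A minimal Python sketch of what such features look like: it counts every contiguous character n-gram in a string. It illustrates the n-gram idea only, not the CNN model in this talk; `char_ngrams` is a hypothetical helper, and it assumes raw text with no lowercasing or tokenization.

```python
from collections import Counter


def char_ngrams(text: str, min_n: int = 1, max_n: int = 9) -> Counter:
    """Count every contiguous character n-gram with min_n <= n <= max_n."""
    counts: Counter = Counter()
    for n in range(min_n, max_n + 1):
        # Slide a window of width n across the string.
        for i in range(len(text) - n + 1):
            counts[text[i:i + n]] += 1
    return counts


if __name__ == "__main__":
    feats = char_ngrams("recieve", 1, 3)
    # n-grams such as "ie" and "cie" are the kind of signal a misspelling leaves behind.
    print(feats["e"], feats["ie"], feats["cie"])  # 3 1 1
```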

Model

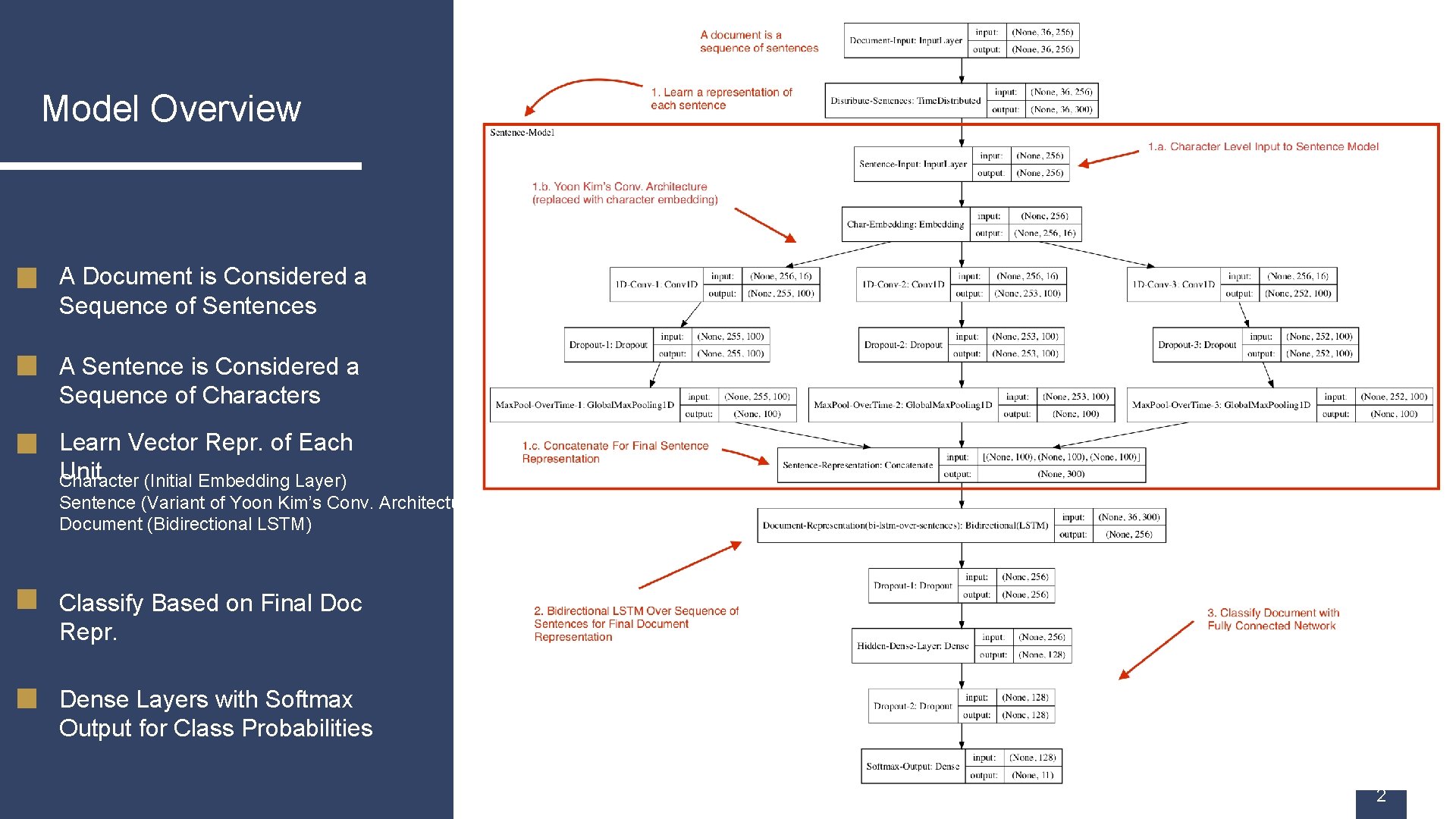

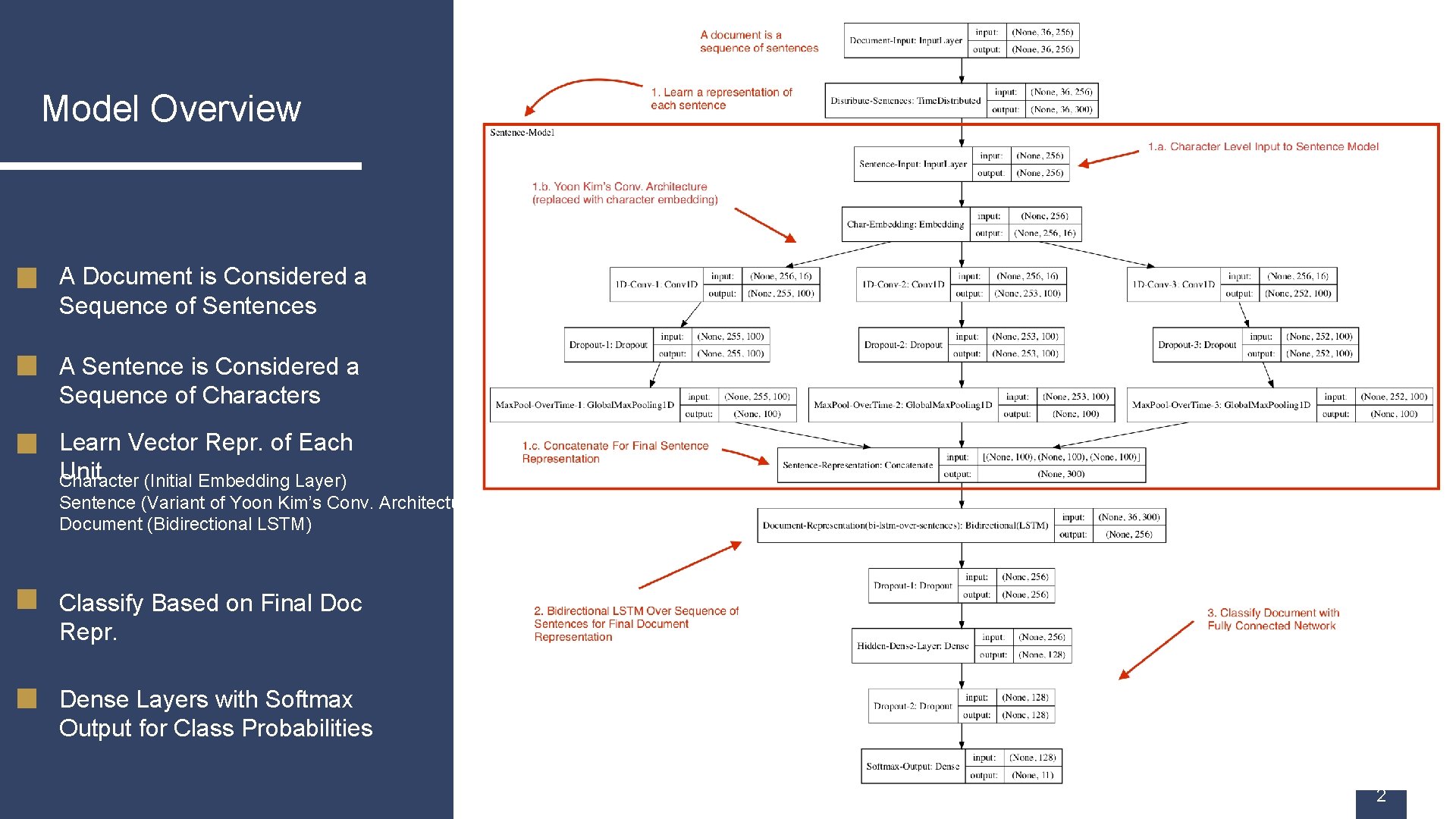

Model Overview
• A document is treated as a sequence of sentences
• A sentence is treated as a sequence of characters
• Learn a vector representation of each unit:
  • Character (initial embedding layer)
  • Sentence (variant of Yoon Kim's convolutional architecture)
  • Document (bidirectional LSTM)
• Classify based on the final document representation: dense layers with a softmax output for class probabilities
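Because the model reads a document as a sequence of sentences and each sentence as a sequence of characters, the input has to be arranged as a nested grid of character indices. A minimal sketch of that preprocessing, assuming a naive regex sentence splitter, a character vocabulary built from the data (index 0 reserved for padding, 1 for unseen characters), and fixed maximum lengths. `split_sentences`, `build_vocab`, `encode_document`, `MAX_SENTS`, and `MAX_CHARS` are hypothetical names and values, not the authors' preprocessing.

```python
import re
from typing import Dict, List

MAX_SENTS = 4    # hypothetical cap on sentences per document
MAX_CHARS = 12   # hypothetical cap on characters per sentence
PAD, UNK = 0, 1  # reserved indices: padding and unseen characters


def split_sentences(text: str) -> List[str]:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def build_vocab(texts: List[str]) -> Dict[str, int]:
    # Map each character seen in the data to an index starting at 2.
    chars = sorted({c for t in texts for c in t})
    return {c: i + 2 for i, c in enumerate(chars)}


def encode_document(text: str, vocab: Dict[str, int]) -> List[List[int]]:
    """Return a MAX_SENTS x MAX_CHARS grid of character indices (truncated and padded)."""
    doc = []
    for sent in split_sentences(text)[:MAX_SENTS]:
        ids = [vocab.get(c, UNK) for c in sent[:MAX_CHARS]]
        doc.append(ids + [PAD] * (MAX_CHARS - len(ids)))
    # Add all-padding sentences so every document has the same shape.
    while len(doc) < MAX_SENTS:
        doc.append([PAD] * MAX_CHARS)
    return doc


if __name__ == "__main__":
    essay = "I recieve the letter. It was good!"
    vocab = build_vocab([essay])
    for row in encode_document(essay, vocab):
        print(row)
```

Fixed shapes let a batch of essays be fed to the character embedding layer as one tensor; real caps would be chosen from the length distribution of the essays.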

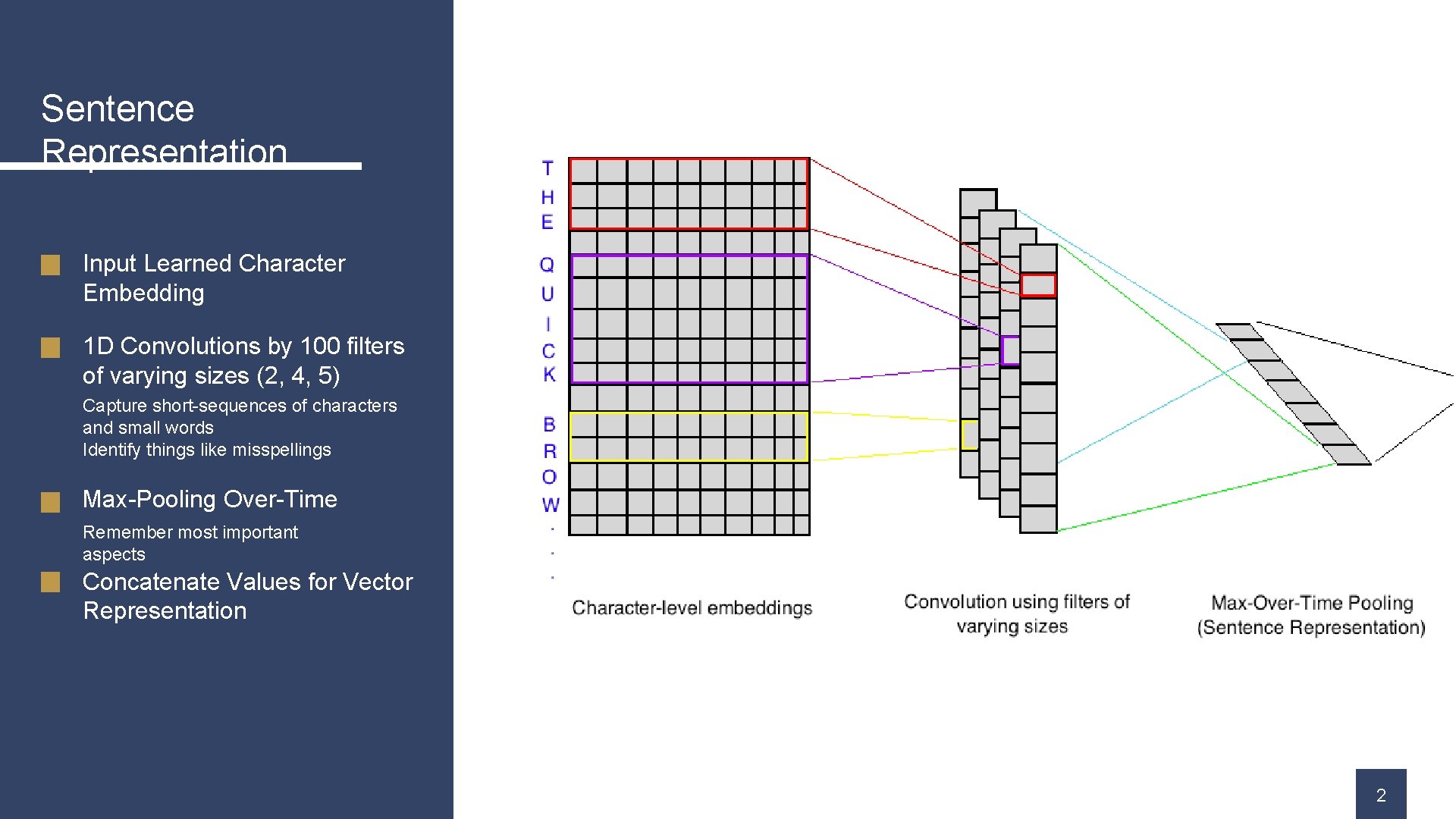

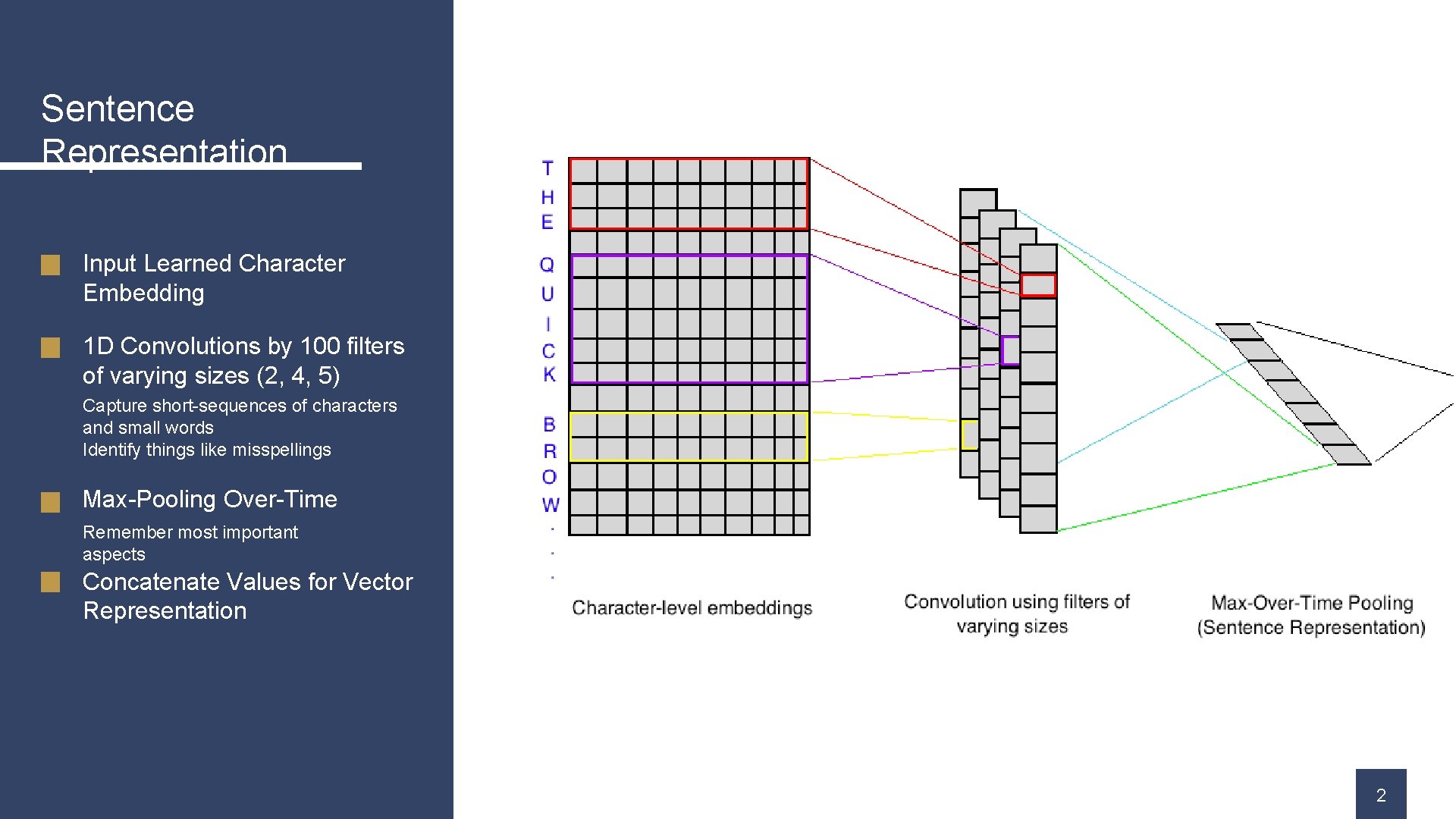

Sentence Representation
• Input: learned character embeddings
• 1D convolutions with 100 filters of varying sizes (2, 4, 5)
  • Capture short sequences of characters and small words
  • Identify things like misspellings
• Max-pooling over time
  • Remember the most important aspects
• Concatenate the pooled values into a vector representation
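A minimal pure-Python sketch of the sentence encoder described above: each filter slides over the character embeddings, max-pooling over time keeps its strongest response, and the pooled values of all filters are concatenated. It assumes 100 filters per size (as in Kim's architecture), a ReLU activation, no bias terms, and random weights in place of learned ones; `conv_max_pool`, `make_filters`, and `sentence_vector` are hypothetical names. A real model would use a deep learning framework's 1D convolution layer instead of nested loops.

```python
import random
from typing import Dict, List

Vector = List[float]
Filter = List[Vector]  # `width` rows, each the size of one character embedding


def conv_max_pool(embs: List[Vector], filters: List[Filter]) -> Vector:
    """Convolve each filter over the character sequence, then max-pool over time."""
    dim = len(embs[0]) if embs else 0
    pooled = []
    for w in filters:
        width = len(w)
        scores = []
        for t in range(len(embs) - width + 1):
            # Dot product between the filter and the window embs[t : t + width].
            s = sum(w[k][d] * embs[t + k][d] for k in range(width) for d in range(dim))
            scores.append(max(0.0, s))  # ReLU (assumed activation)
        # Keep the strongest response; 0.0 if the sentence is shorter than the filter.
        pooled.append(max(scores) if scores else 0.0)
    return pooled


def make_filters(width: int, dim: int, n: int, rng: random.Random) -> List[Filter]:
    # Random weights stand in for learned ones.
    return [[[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(width)]
            for _ in range(n)]


def sentence_vector(embs: List[Vector], filters_by_width: Dict[int, List[Filter]]) -> Vector:
    # Concatenate the pooled features of every filter size into one vector.
    out: Vector = []
    for width in sorted(filters_by_width):
        out.extend(conv_max_pool(embs, filters_by_width[width]))
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    dim = 8  # hypothetical character-embedding size
    embs = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(20)]  # 20 characters
    filters = {w: make_filters(w, dim, 100, rng) for w in (2, 4, 5)}
    print(len(sentence_vector(embs, filters)))  # 300 = 3 sizes x 100 filters
```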

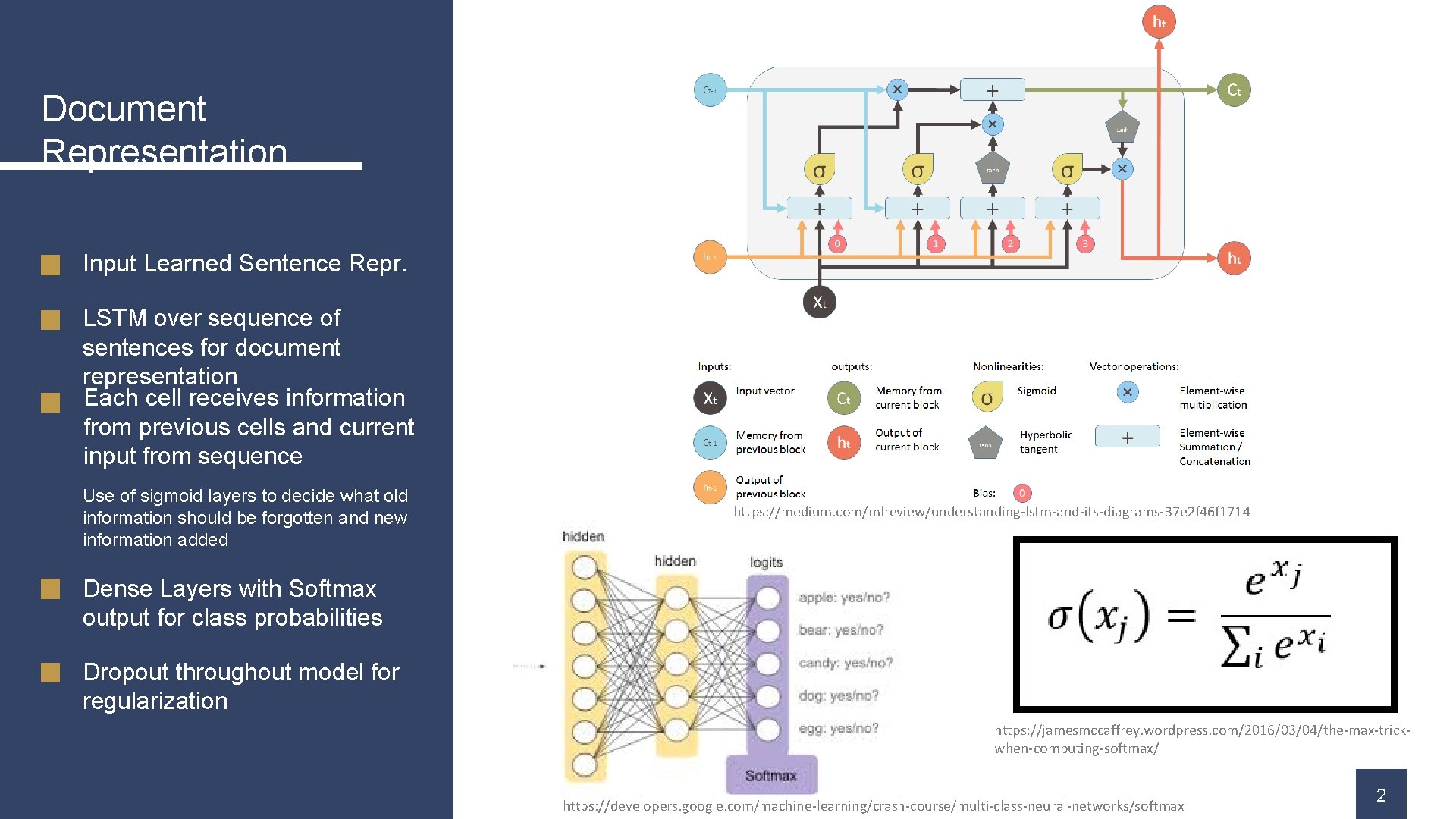

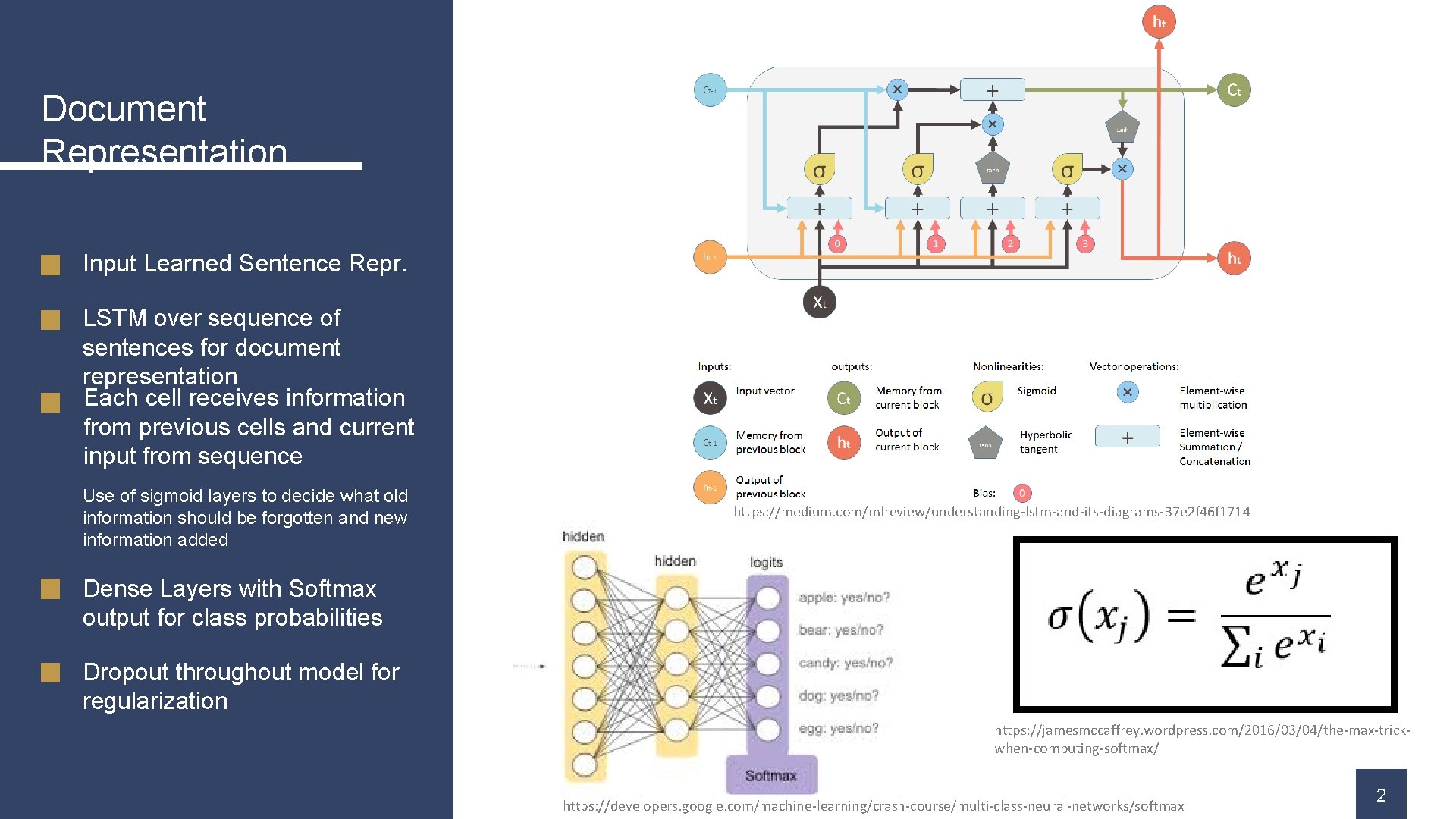

Document Representation
• Input: learned sentence representations
• LSTM over the sequence of sentences for the document representation
• Each cell receives information from previous cells and the current input from the sequence
• Sigmoid layers decide what old information should be forgotten and what new information should be added
• Dense layers with softmax output for class probabilities
• Dropout throughout the model for regularization

https://medium.com/mlreview/understanding-lstm-and-its-diagrams-37e2f46f1714
https://jamesmccaffrey.wordpress.com/2016/03/04/the-max-trick-when-computing-softmax/
https://developers.google.com/machine-learning/crash-course/multi-class-neural-networks/softmax
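A minimal pure-Python sketch of the document encoder and output layer: a standard LSTM cell whose sigmoid gates decide what to forget and what to add, run over the sentence vectors in both directions, followed by a linear layer and a softmax that uses the max trick from the linked post. Assumptions: random weights stand in for learned ones, a single linear layer stands in for the dense layers, dropout is omitted because it only applies during training, and all function names are hypothetical.

```python
import math
import random
from typing import Dict, List, Tuple

Vec = List[float]
Params = Dict[str, list]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: List[Vec], v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lstm_step(x: Vec, h: Vec, c: Vec, p: Params) -> Tuple[Vec, Vec]:
    """One LSTM step; p holds W (input), U (recurrent) and b (bias) for each gate."""
    def gate(name: str, act) -> Vec:
        pre = [wx + uh + b for wx, uh, b in
               zip(matvec(p["W" + name], x), matvec(p["U" + name], h), p["b" + name])]
        return [act(z) for z in pre]
    f = gate("f", sigmoid)    # forget gate: which old information to drop
    i = gate("i", sigmoid)    # input gate: which new information to add
    o = gate("o", sigmoid)    # output gate: what to expose as the hidden state
    g = gate("g", math.tanh)  # candidate cell values
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def init_params(in_dim: int, hidden: int, rng: random.Random) -> Params:
    p: Params = {}
    for g in "fiog":
        p["W" + g] = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(hidden)]
        p["U" + g] = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(hidden)]
        p["b" + g] = [0.0] * hidden
    return p


def run_lstm(seq: List[Vec], p: Params, hidden: int) -> Vec:
    h, c = [0.0] * hidden, [0.0] * hidden
    for x in seq:
        h, c = lstm_step(x, h, c, p)
    return h  # the final hidden state summarizes the whole sequence


def bilstm_document(sents: List[Vec], p_fwd: Params, p_bwd: Params, hidden: int) -> Vec:
    # Read sentences left-to-right and right-to-left, then concatenate both final states.
    return run_lstm(sents, p_fwd, hidden) + run_lstm(sents[::-1], p_bwd, hidden)


def stable_softmax(logits: Vec) -> Vec:
    m = max(logits)  # the "max trick": subtracting the max keeps exp() from overflowing
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    rng = random.Random(0)
    in_dim, hidden, n_classes = 6, 4, 11
    sents = [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(5)]  # 5 sentence vectors
    doc = bilstm_document(sents, init_params(in_dim, hidden, rng),
                          init_params(in_dim, hidden, rng), hidden)
    w_out = [[rng.uniform(-0.1, 0.1) for _ in range(2 * hidden)] for _ in range(n_classes)]
    probs = stable_softmax(matvec(w_out, doc))  # one probability per native language
    print(len(doc), len(probs), round(sum(probs), 6))  # 8 11 1.0
```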

Results

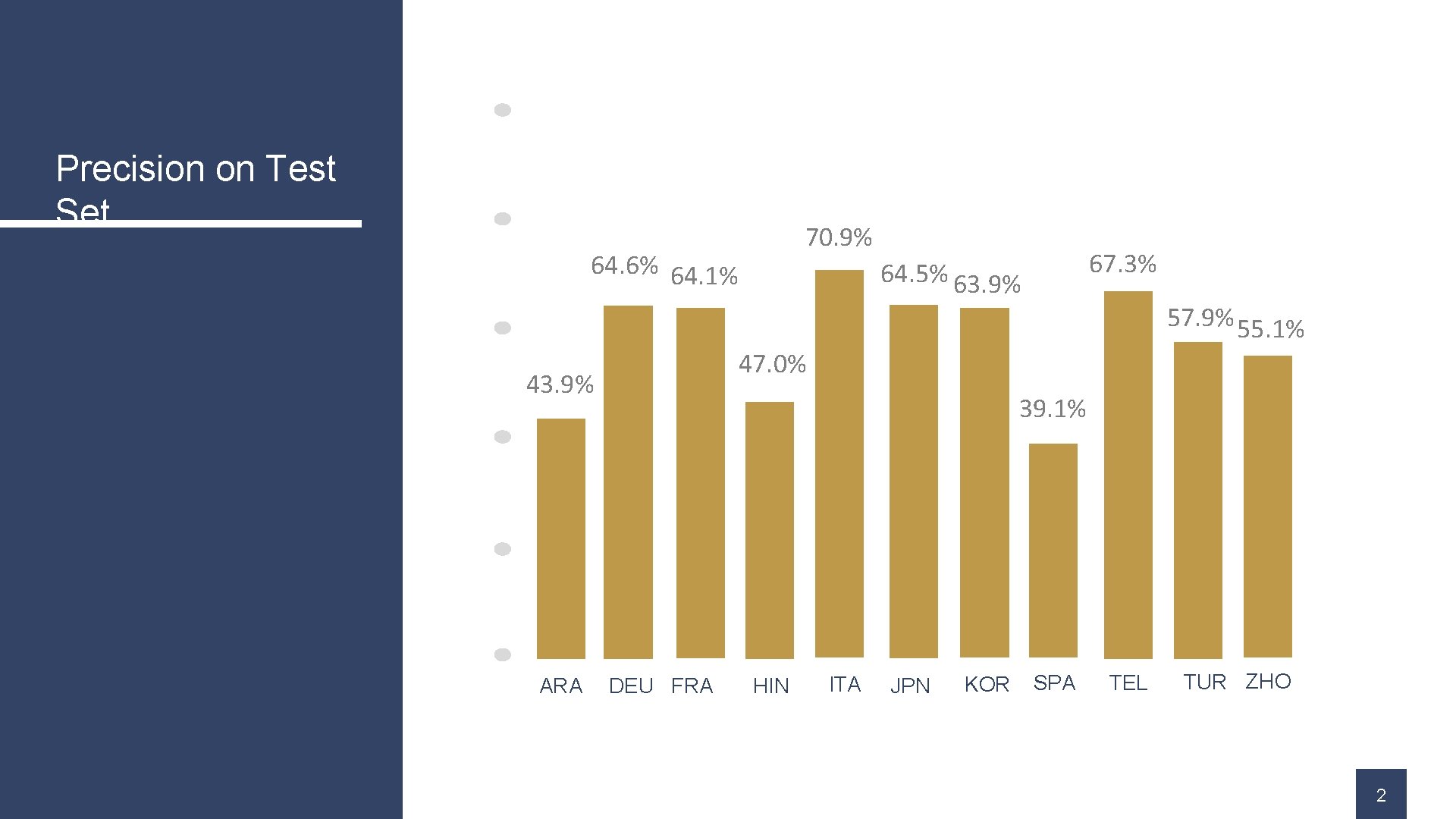

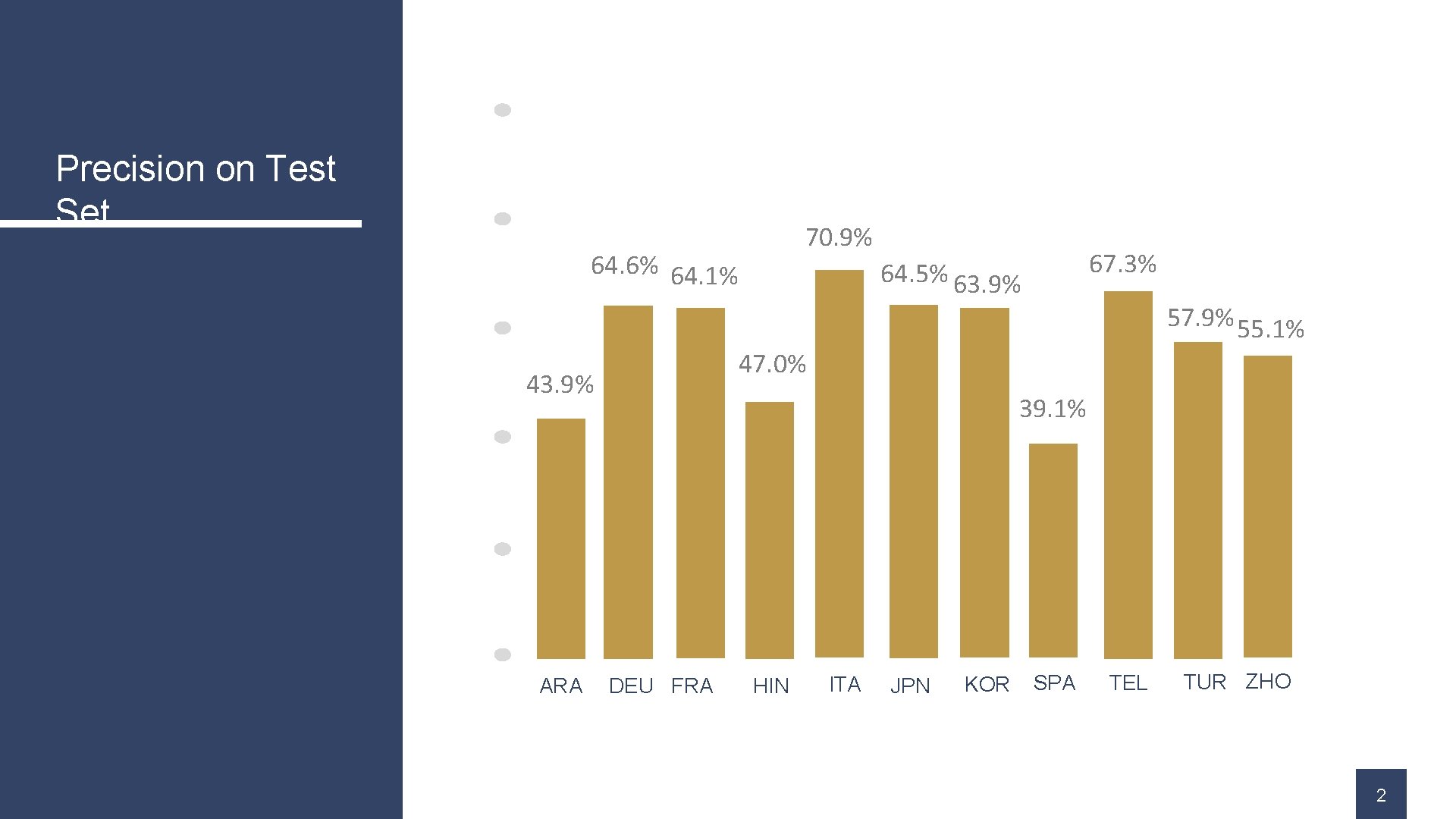

Precision on Test Set, by native language (ARA, DEU, FRA, HIN, ITA, JPN, KOR, SPA, TEL, TUR, ZHO): values range from 39.1% to 70.9%

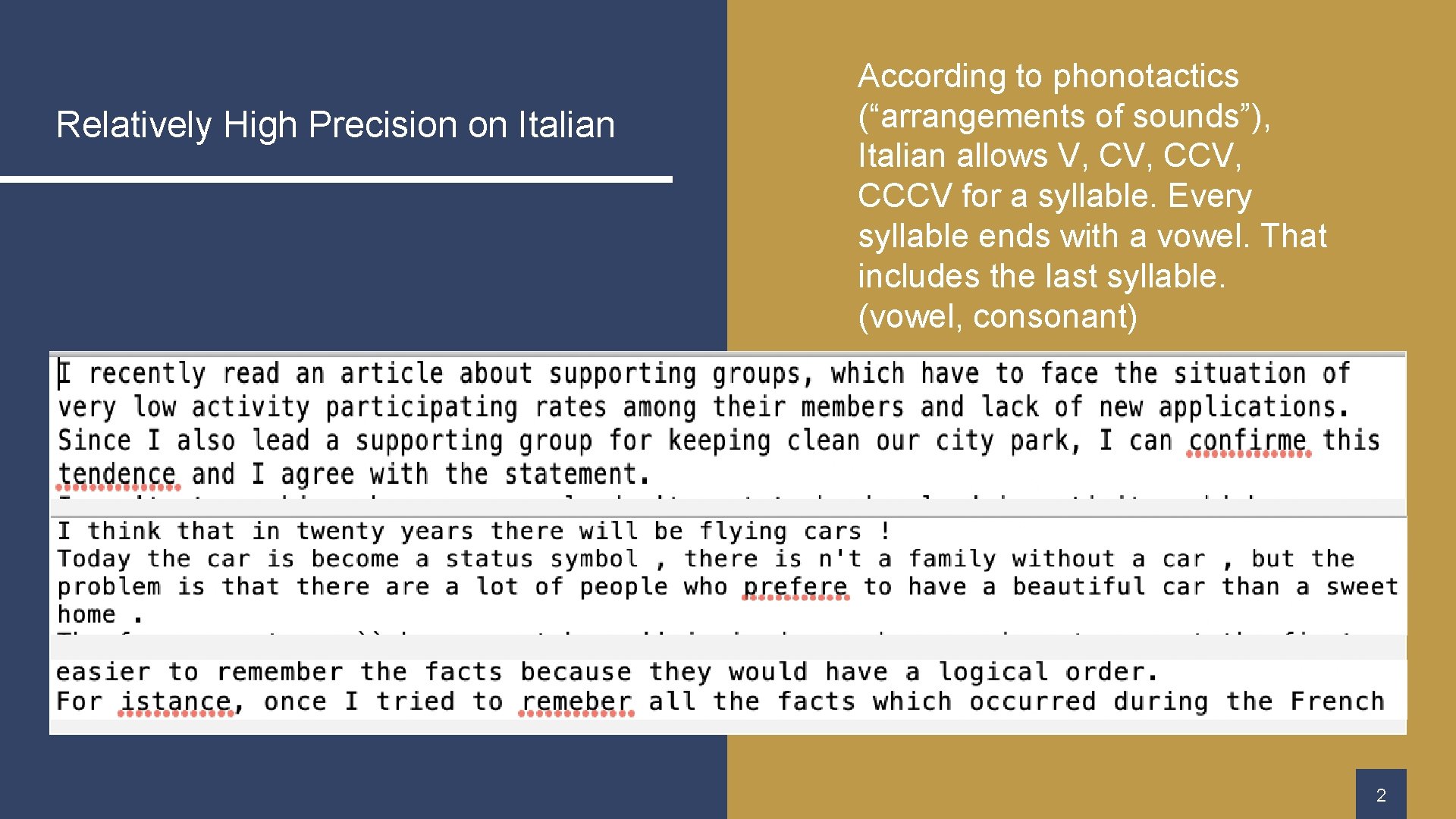

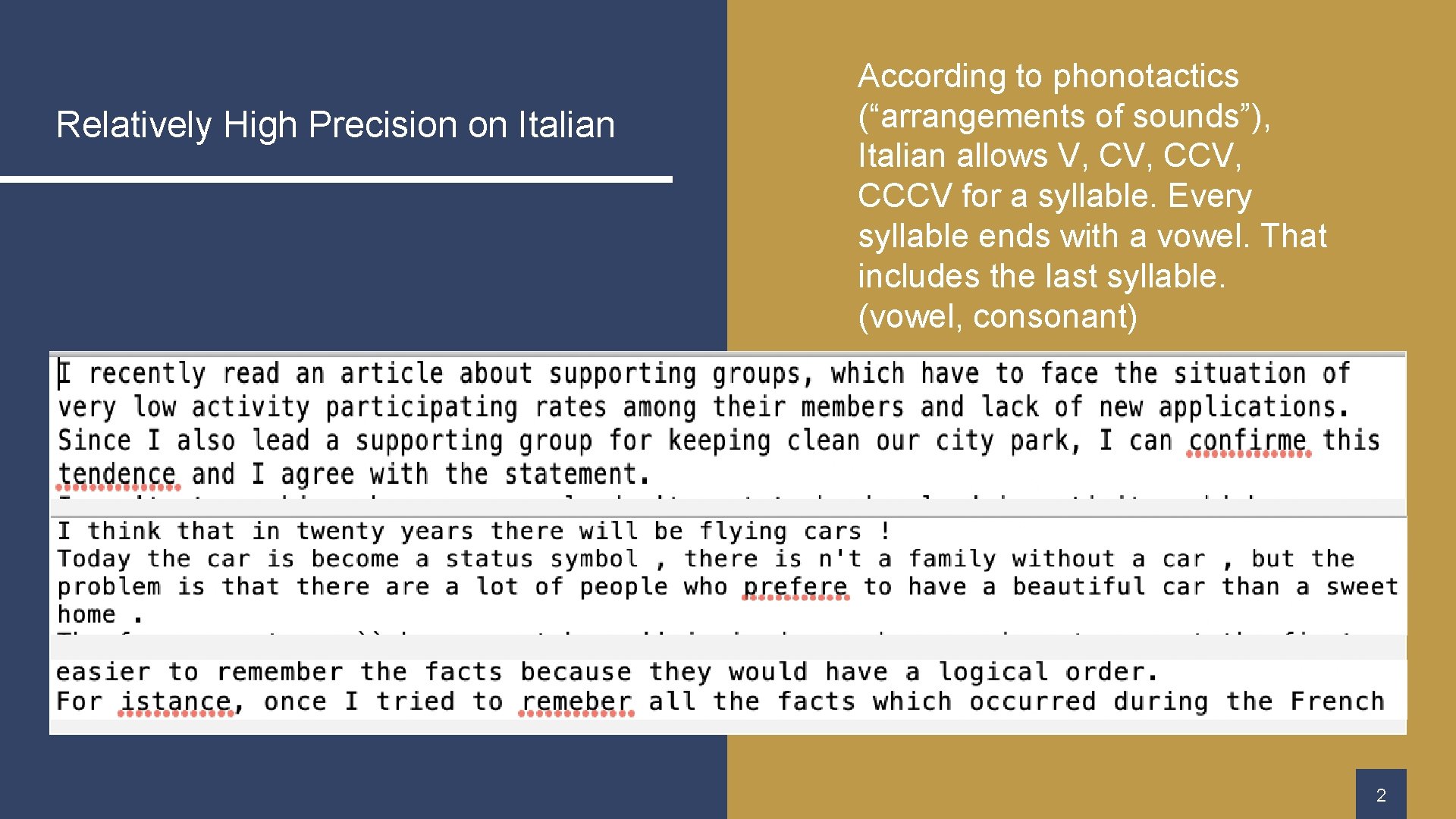

Relatively High Precision on Italian
• According to phonotactics (“arrangements of sounds”), Italian syllables take forms such as V, CV, CCV, and CCCV (V = vowel, C = consonant)
• Syllables typically end with a vowel, and that includes the last syllable, so most Italian words end in a vowel
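One way to probe the phonotactic explanation above is to measure how often words in a text end in a vowel. A minimal, hypothetical sketch: `vowel_final_ratio` is not part of the model, it only considers unaccented ASCII letters, and whether this statistic actually separates Italian writers in the TOEFL essays is an untested assumption.

```python
import re

VOWELS = set("aeiou")


def vowel_final_ratio(text: str) -> float:
    """Fraction of alphabetic words whose last letter is a vowel (a, e, i, o, u)."""
    words = re.findall(r"[a-z]+", text.lower())
    if not words:
        return 0.0
    return sum(w[-1] in VOWELS for w in words) / len(words)


if __name__ == "__main__":
    print(vowel_final_ratio("la casa bella"))           # 1.0
    print(round(vowel_final_ratio("the cat sat"), 3))   # 0.333
```

A character-level model needs no such hand-built feature; word-final characters simply appear in the windows its convolution filters see.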

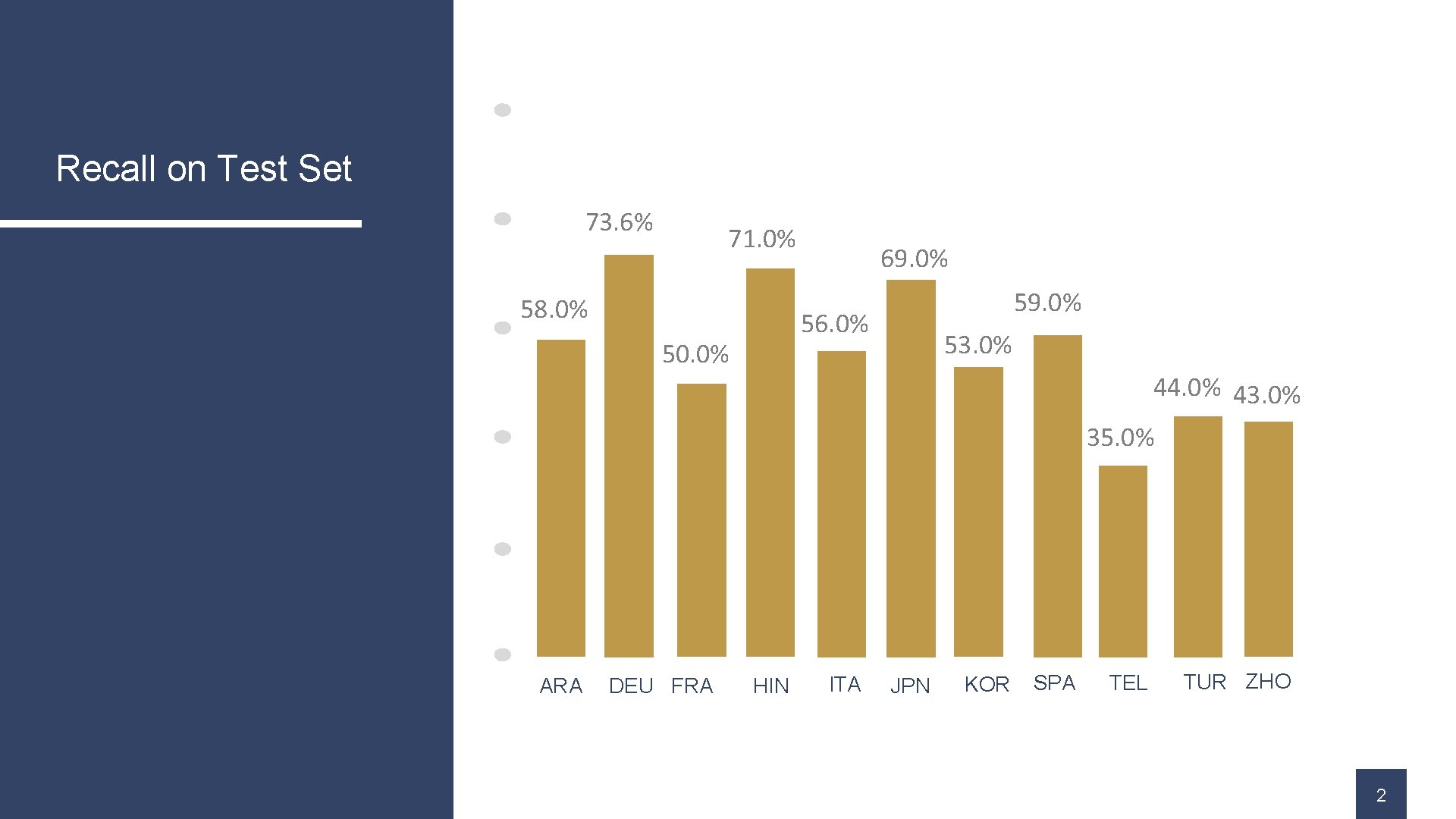

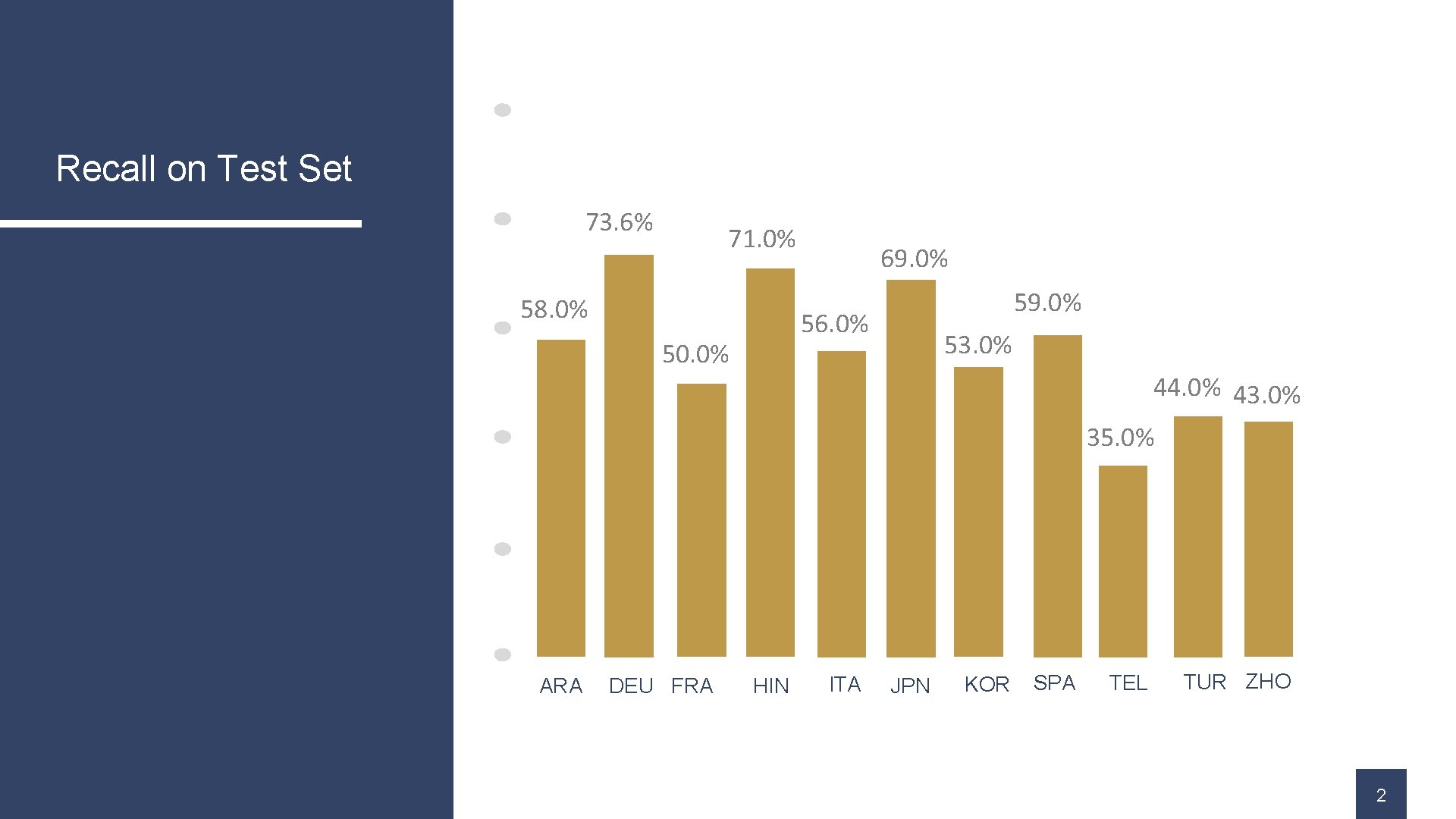

Recall on Test Set, by native language (ARA, DEU, FRA, HIN, ITA, JPN, KOR, SPA, TEL, TUR, ZHO): values range from 35.0% to 73.6%
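Precision for a language is the fraction of essays predicted as that language that actually belong to it; recall is the fraction of that language's essays the model labels correctly. A minimal sketch computing both per class from paired gold and predicted labels. The function name and the toy labels in the example are hypothetical, and a class with no predictions is assigned a precision of 0.0 by convention.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_class_precision_recall(gold: List[str], pred: List[str]) -> Dict[str, Tuple[float, float]]:
    """Return {label: (precision, recall)} from paired gold and predicted labels."""
    tp: Counter = Counter()
    pred_count: Counter = Counter()
    gold_count: Counter = Counter()
    for g, p in zip(gold, pred):
        gold_count[g] += 1
        pred_count[p] += 1
        if g == p:
            tp[g] += 1  # correct prediction for this class
    scores = {}
    for label in sorted(set(gold) | set(pred)):
        precision = tp[label] / pred_count[label] if pred_count[label] else 0.0
        recall = tp[label] / gold_count[label] if gold_count[label] else 0.0
        scores[label] = (precision, recall)
    return scores


if __name__ == "__main__":
    gold = ["ITA", "ITA", "DEU", "JPN"]  # toy labels, not the talk's data
    pred = ["ITA", "DEU", "DEU", "ITA"]
    print(per_class_precision_recall(gold, pred))
    # {'DEU': (0.5, 1.0), 'ITA': (0.5, 0.5), 'JPN': (0.0, 0.0)}
```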

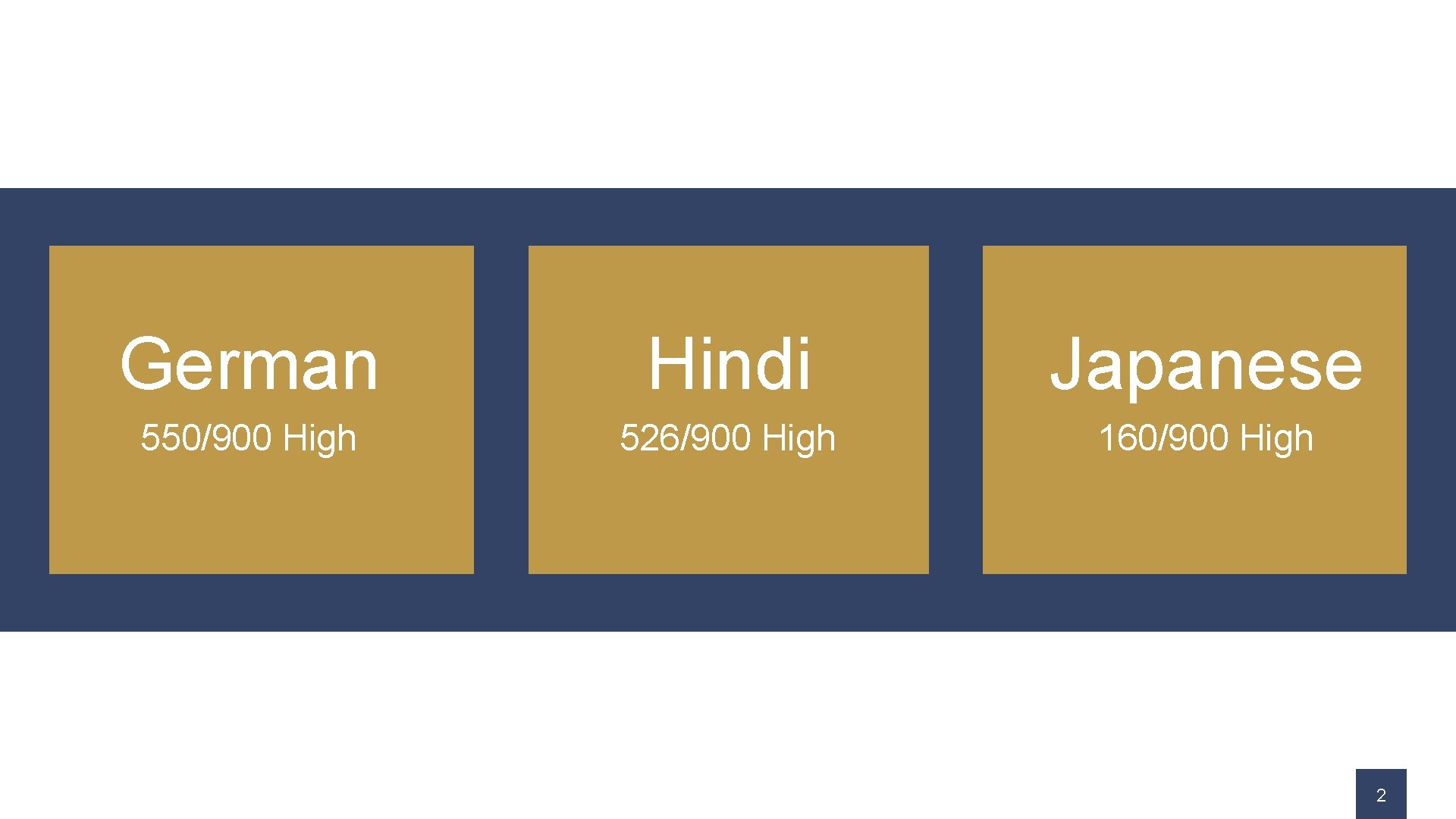

Essays rated High: German 550/900, Hindi 526/900, Japanese 160/900

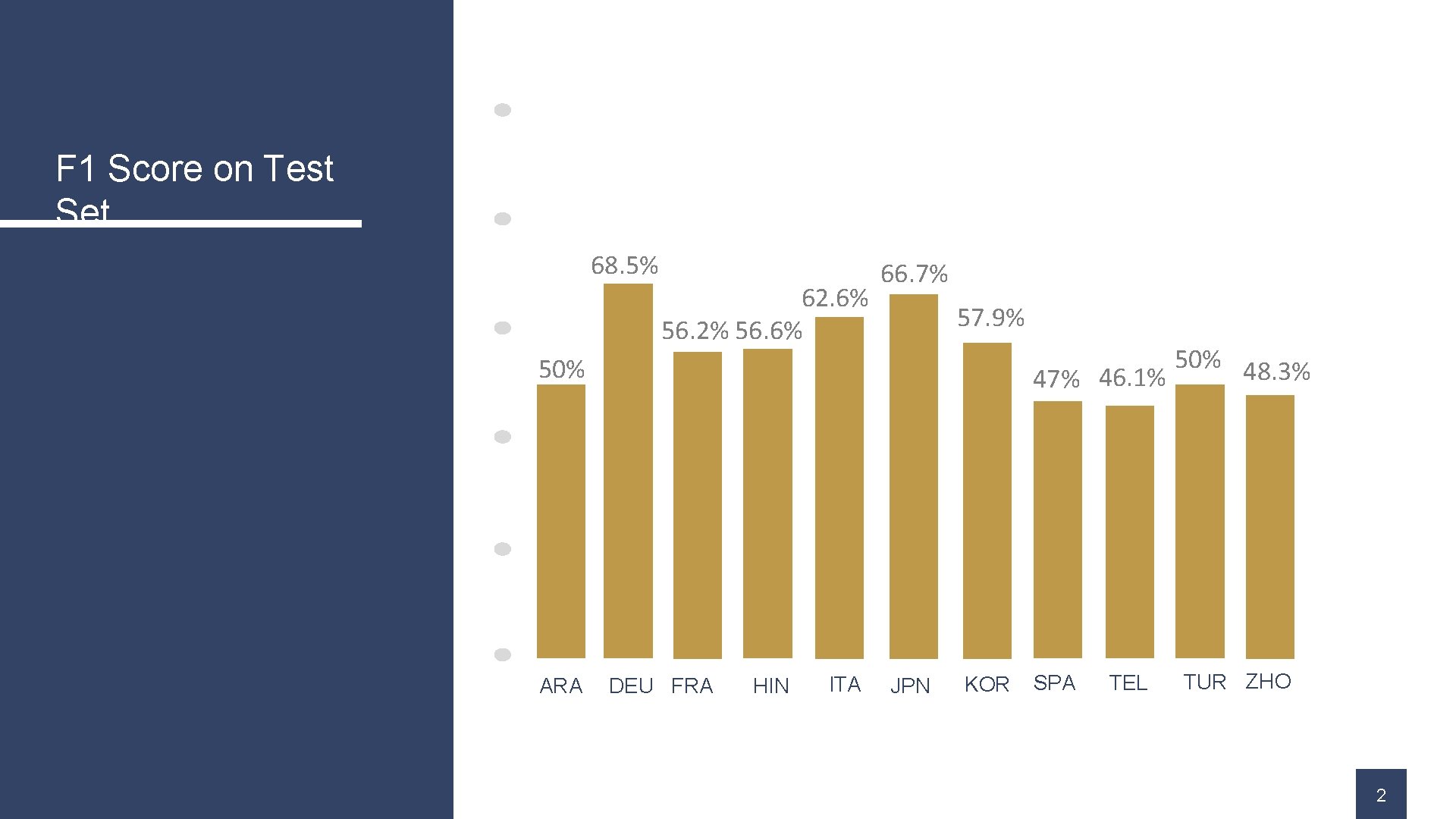

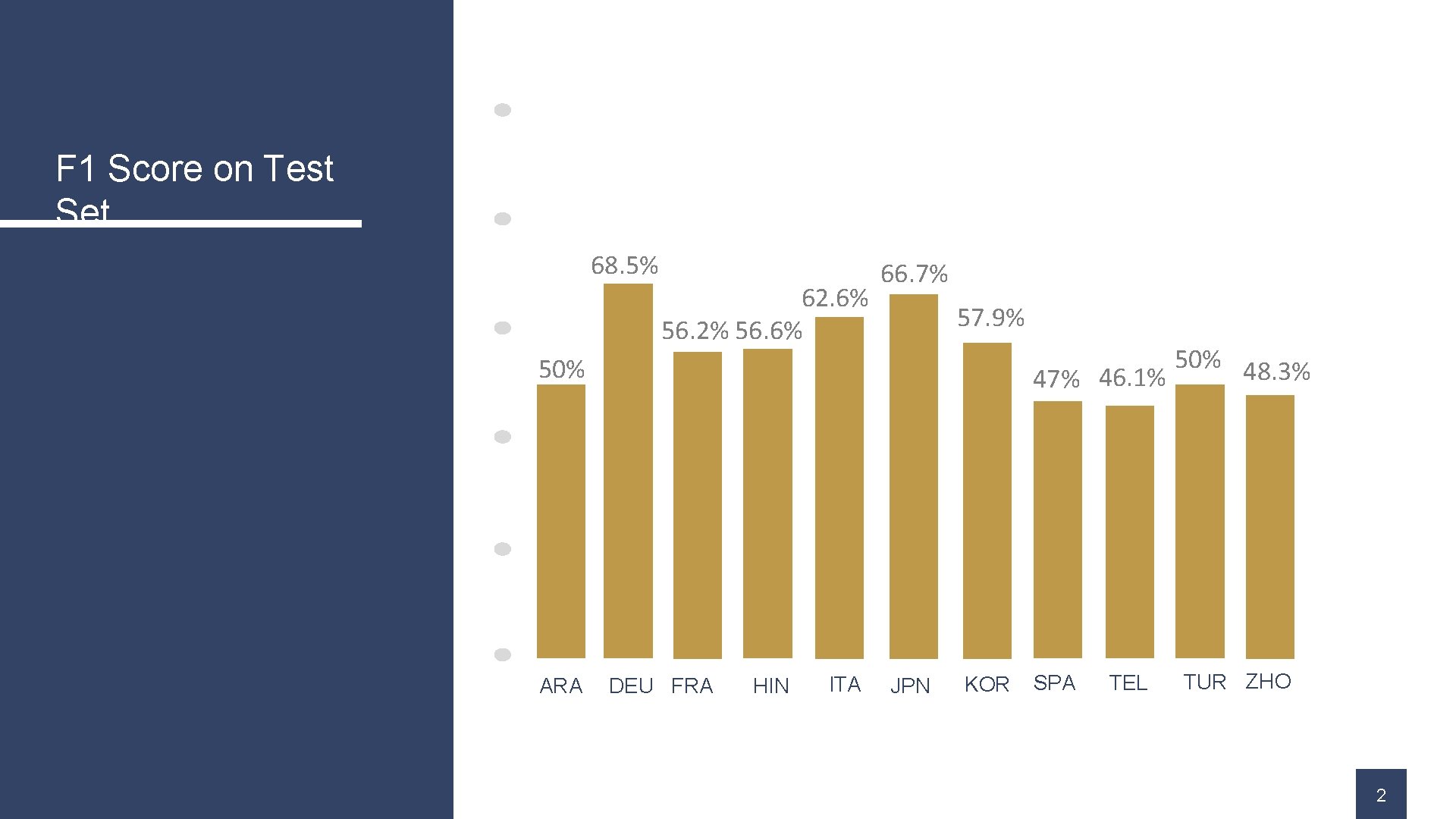

F1 Score on Test Set, by native language (ARA, DEU, FRA, HIN, ITA, JPN, KOR, SPA, TEL, TUR, ZHO): values range from 46.1% to 68.5%
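The F1 score is the harmonic mean of a language's precision P and recall R, so it is high only when both are high:

```latex
F_1 = \frac{2PR}{P + R}
```

For example, a hypothetical class with P = 0.6 and R = 0.5 would have F1 = 0.6 / 1.1 ≈ 0.545, closer to the lower of the two values than their arithmetic mean of 0.55 would suggest only slightly here, but much more so when P and R are far apart.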

Thanks! Any questions?

Team Members: Anthony Sicilia, Brian Falkenstein, Yunkai Tang