
Niagara: A 32-Way Multithreaded Sparc Processor
Kongetira, Aingaran, Olukotun
Presentation by: Mohamed Abuobaida Mohamed
For COE 502: Parallel Processing Architectures

Outline
• Introduction
• Motivation for Niagara
• Overview
• Sparc Pipeline
• Thread Selection Policy
• Integer Register File
• Memory
• Conclusion

Introduction
• A multithreaded processor keeps the state of more than one thread on the CPU at the same time
• Types of multithreading:
  • Coarse-grained: a thread switch happens on an event, such as a cache miss
  • Fine-grained: a thread switch happens every cycle, or on an event
  • Simultaneous multithreading: instructions from several threads can issue in the same cycle on a superscalar pipeline
• Chip multiprocessing: more than one CPU on the same chip

Motivation for Niagara
• ILP does not provide enough parallelism, for two reasons:
  • Memory latency
  • Inherently low application ILP
• Designed to improve throughput
  • Total work done across multiple threads
• Another target is power consumption
  • Reduced clock frequency
  • Sharing of pipelines

Motivation for Niagara
• Designed for high-performance server applications
  • These have client request-level parallelism (TLP)
  • On such workloads, shared-memory machines built from single-issue CPUs perform better than machines built from complex multiple-issue CPUs
• Combines CMP and fine-grained multithreading

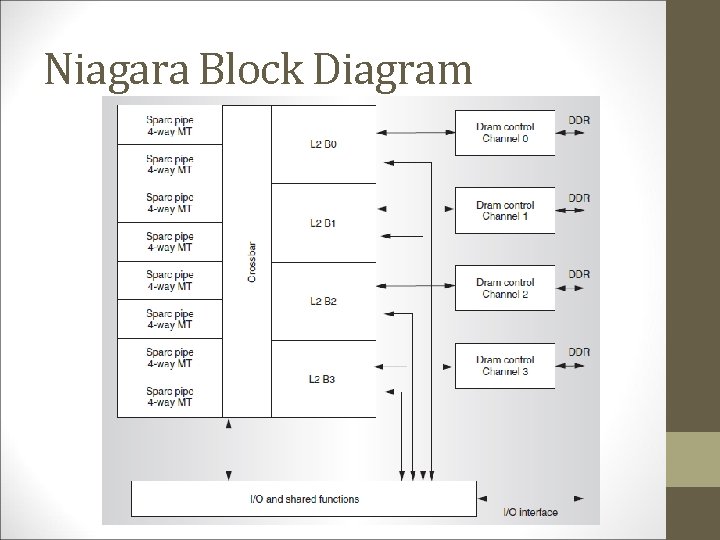

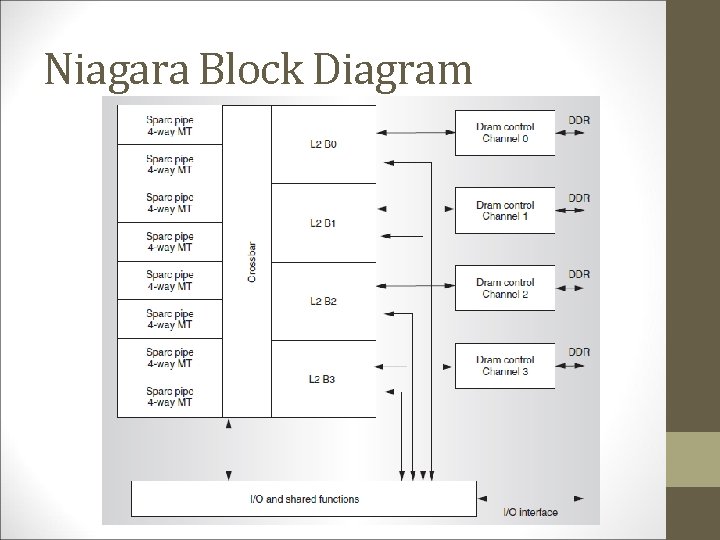

Niagara Overview
• Supports 32 hardware threads
• Threads are grouped in fours; each group shares one pipeline, called a Sparc pipe (8 Sparc pipes in total)
• Each Sparc pipe has separate L1 I- and D-caches
• Memory latency is hidden by thread switching, at a cost of zero cycles
• Shared 3 MB L2 cache: 12-way set associative
• Crossbar interconnect between the pipes, the L2 cache and other shared CPU resources, with a bandwidth of 200 GB/s
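The latency-hiding claim on this slide can be illustrated with a rough back-of-the-envelope model. This is only a sketch: the function `pipe_utilization` and the 10-cycle/30-cycle figures are assumptions chosen for illustration, not numbers from the paper. It assumes every thread alternates between a fixed run of issue cycles and a fixed memory stall, and that switching threads is free.

```python
def pipe_utilization(threads: int, busy_cycles: int, stall_cycles: int) -> float:
    """Fraction of cycles in which one pipeline issues an instruction.

    Assumed model (not from the slides): each thread issues for
    `busy_cycles`, then waits `stall_cycles` on memory, and a thread
    switch costs zero cycles.
    """
    per_thread = busy_cycles / (busy_cycles + stall_cycles)
    # The pipeline is single-issue, so utilization saturates at 1.0.
    return min(1.0, threads * per_thread)


# 8 Sparc pipes x 4 threads per pipe, as stated on the slide.
HARDWARE_THREADS = 8 * 4

one_thread = pipe_utilization(1, busy_cycles=10, stall_cycles=30)
four_threads = pipe_utilization(4, busy_cycles=10, stall_cycles=30)
print(HARDWARE_THREADS, one_thread, four_threads)
```

Under these assumed numbers a single thread keeps the pipe busy a quarter of the time, while four threads are enough to fill it.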

Niagara Block Diagram

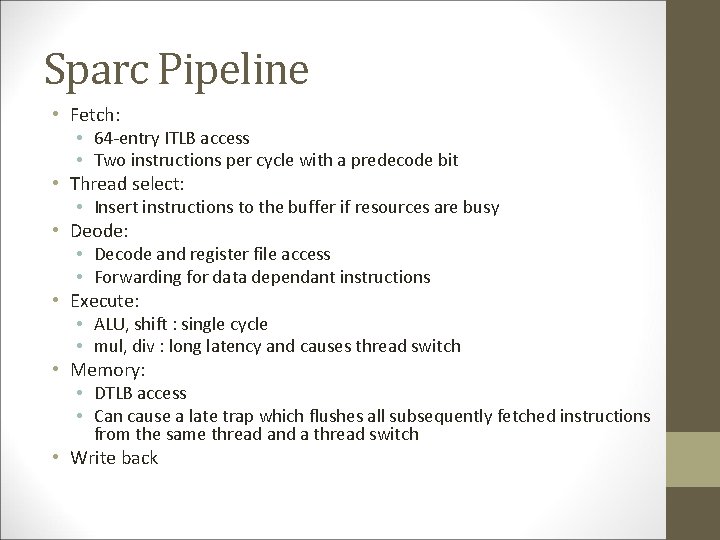

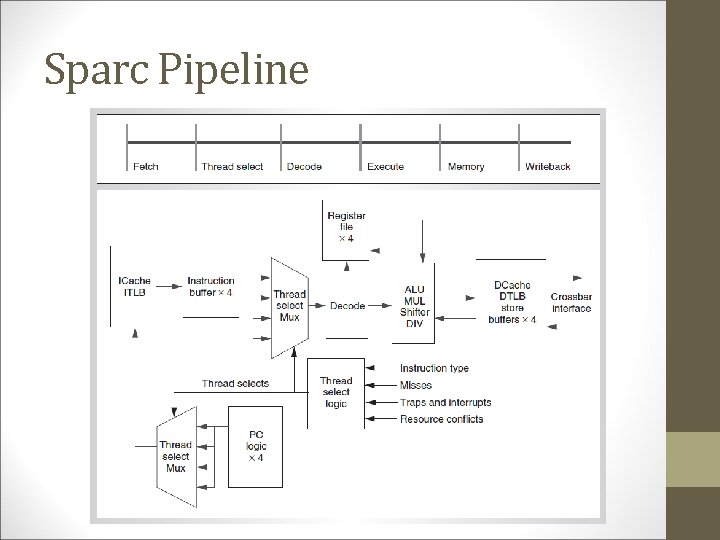

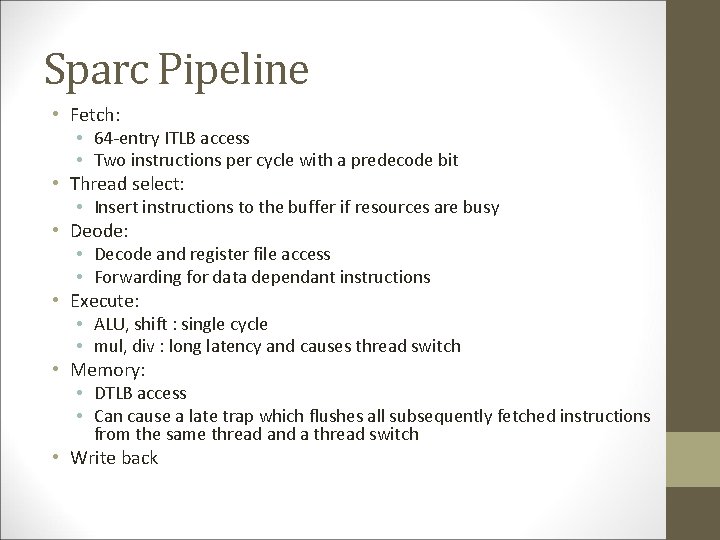

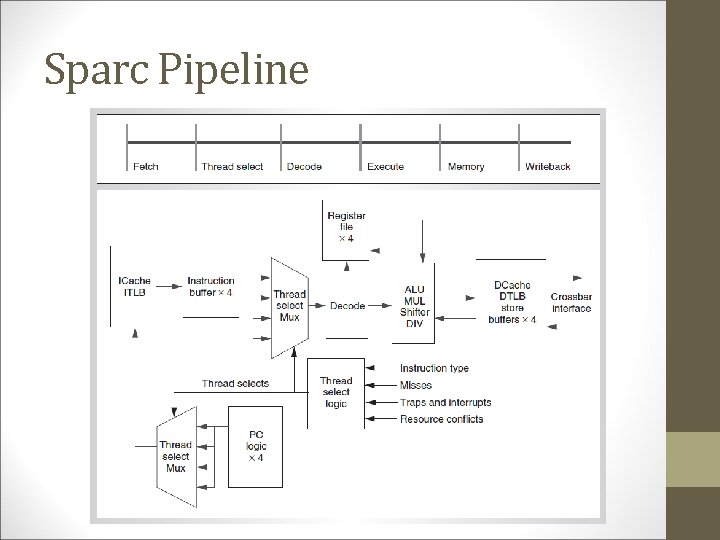

Sparc Pipeline
• Single-issue, six-stage pipeline
  • Fetch, thread select, decode, execute, memory, write back
• Each pipeline supports 4 threads
• Each thread has its own instruction buffer, store buffer and register set
• L1 caches, TLBs and functional units are shared
• Pipeline registers are shared, except in the fetch and thread select stages

Sparc Pipeline
• Fetch:
  • 64-entry ITLB access
  • Two instructions per cycle, with a predecode bit
• Thread select:
  • Fetched instructions are inserted into the thread's instruction buffer if resources are busy
• Decode:
  • Decode and register file access
  • Forwarding for data-dependent instructions
• Execute:
  • ALU, shift: single cycle
  • mul, div: long latency, and cause a thread switch
• Memory:
  • DTLB access
  • Can cause a late trap, which flushes all subsequently fetched instructions from the same thread and triggers a thread switch
• Write back

Sparc Pipeline

Thread Selection Policy
• Switch every cycle
• The least recently used thread has high priority
• Speculative instructions (fetched after a load) have low priority
• A thread can be deselected because of long-latency instructions such as mul and div, or because of traps discovered late in the pipeline, such as a cache miss
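The policy on this slide can be sketched as a small selection function. This is a simplified model, not the hardware logic: the `ThreadState` fields and the strict ordering (non-speculative before speculative, then least recently selected) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ThreadState:
    tid: int
    ready: bool = True         # False while deselected (mul/div, cache miss, trap)
    speculative: bool = False  # next instruction was fetched after a load
    last_selected: int = -1    # cycle in which this thread was last selected


def select_thread(threads, cycle):
    """Pick one ready thread for this cycle, or None if all are deselected."""
    candidates = [t for t in threads if t.ready]
    if not candidates:
        return None
    # Assumed ordering: non-speculative threads first (False sorts before
    # True), then the thread that was selected longest ago.
    chosen = min(candidates, key=lambda t: (t.speculative, t.last_selected))
    chosen.last_selected = cycle
    return chosen.tid


threads = [ThreadState(tid) for tid in range(4)]
threads[2].ready = False       # e.g. waiting on a cache miss
threads[1].speculative = True  # e.g. instruction issued behind a load
order = [select_thread(threads, cycle) for cycle in range(6)]
print(order)
```

With thread 2 deselected and thread 1 speculative, the sketch alternates between threads 0 and 3. A strict priority like this can starve a speculative thread; the slides only say that its priority is low, so the real arbitration may differ.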

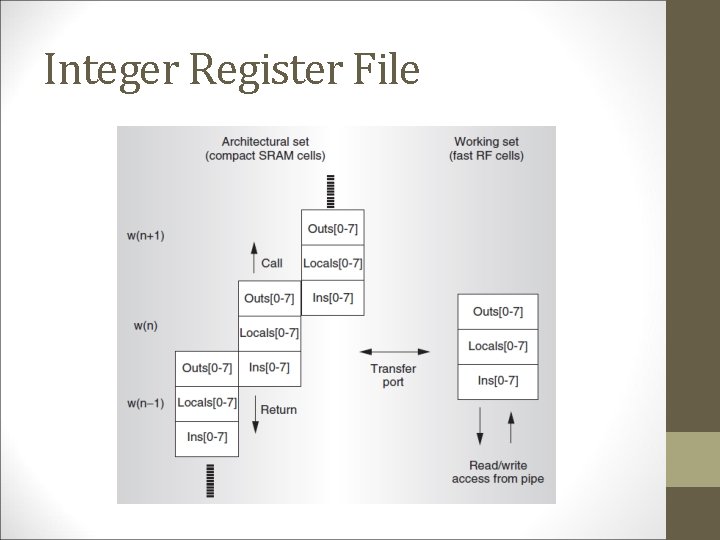

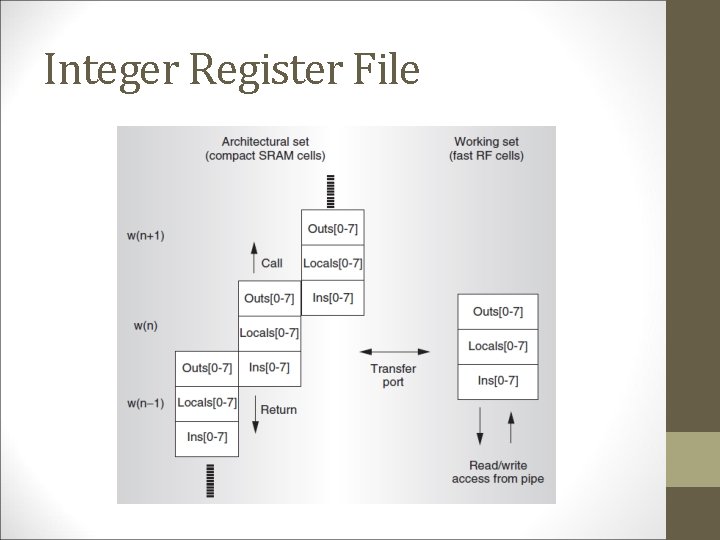

Integer Register File
• 3 read ports
  • Cover single issue, stores and the few 3-source instructions
• 2 write ports
  • Cover single issue and long-latency operations
• Register window implementation
  • A window has ins, outs and locals
  • Each thread has 8 windows
  • A procedure call adds a new window (slide up)
  • A procedure return removes it (slide down)
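The window mechanism can be sketched in a few lines. Two details below come from the SPARC architecture in general rather than from the slides, and are labeled as assumptions: each group holds 8 registers, and a caller's outs are the same physical registers as the callee's ins. The class `WindowedRegisters` is hypothetical, the direction of the pointer follows the slide's "slide up" wording, and window overflow (spilling to memory) is left out.

```python
NUM_WINDOWS = 8     # windows per thread, from the slide
REGS_PER_GROUP = 8  # assumption: 8 ins, 8 locals, 8 outs as in SPARC


class WindowedRegisters:
    """Toy register-window file for one thread (no overflow handling)."""

    def __init__(self):
        # Each window owns its locals and outs; its ins alias the
        # previous window's outs, so no separate storage is needed.
        self.locals_ = [[0] * REGS_PER_GROUP for _ in range(NUM_WINDOWS)]
        self.outs = [[0] * REGS_PER_GROUP for _ in range(NUM_WINDOWS)]
        self.current = 0

    def call(self):
        """Procedure call: slide up to a new window."""
        self.current = (self.current + 1) % NUM_WINDOWS

    def ret(self):
        """Procedure return: slide back down."""
        self.current = (self.current - 1) % NUM_WINDOWS

    def write_out(self, index, value):
        self.outs[self.current][index] = value

    def read_in(self, index):
        # The callee's ins are the caller's outs.
        return self.outs[(self.current - 1) % NUM_WINDOWS][index]


regs = WindowedRegisters()
regs.write_out(0, 42)      # caller places an argument in an out register
regs.call()
argument = regs.read_in(0)  # callee sees it as an in register
regs.ret()
print(argument, regs.current)
```

The overlap is what makes argument passing free of copies: the call only moves the window pointer.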

Integer Register File
• Divided into a working set and an architectural set
• The working set is implemented with fast register file cells
• The architectural set is built from SRAM cells
• The two sets are linked by a transfer port
• A window change triggers a thread switch
• Threads share the read circuitry but not the registers

Integer Register File

Memory
• L1 I-cache is 16 KB, 4-way set associative, with a block size of 32 B
  • Random replacement policy
• L1 D-cache is 8 KB, 4-way set associative, with a block size of 16 B
  • Write-through policy
• Simple coherence protocol
• L2 implements a write-back policy
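The L1 parameters above fix the number of sets and the way an address splits into tag, index and offset. The sketch below works that out; the helper names `cache_geometry` and `split_address` are made up for illustration, and the arithmetic assumes power-of-two sizes (true for both L1 caches here).

```python
def cache_geometry(size_bytes, ways, block_bytes):
    """Return (sets, offset_bits, index_bits) for a set-associative cache."""
    sets = size_bytes // (ways * block_bytes)
    offset_bits = block_bytes.bit_length() - 1  # log2 for powers of two
    index_bits = sets.bit_length() - 1
    return sets, offset_bits, index_bits


def split_address(addr, offset_bits, index_bits):
    """Split an address into (tag, set index, block offset)."""
    offset = addr & ((1 << offset_bits) - 1)
    index = (addr >> offset_bits) & ((1 << index_bits) - 1)
    tag = addr >> (offset_bits + index_bits)
    return tag, index, offset


icache = cache_geometry(16 * 1024, ways=4, block_bytes=32)
dcache = cache_geometry(8 * 1024, ways=4, block_bytes=16)
print(icache, dcache)

# Example lookup in the I-cache for an arbitrary address.
tag, index, offset = split_address(0x1234, icache[1], icache[2])
print(tag, index, offset)
```

Both L1 caches come out at 128 sets: the D-cache is half the size of the I-cache, but its blocks are also half as large.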

Conclusion
• Designed for power
• Requires no special compiler
• Exploits TLP
• Useful for client-server applications
• Implements fine-grained multithreading and CMP