New Parallel Programming Languages for HPC Iris Christadler


New Parallel Programming Languages for HPC

Iris Christadler, Leibniz Supercomputing Centre, Germany

August 2011, PDC/KTH Summer School

Outline

1. The free lunch is over: Multicore CPUs are ubiquitous
2. Hardware accelerators: New languages enter the HPC world
3. The quest for a parallel language: Examples of emerging languages
4. Reality check: 2D stencil computation

New Parallel Programming Languages, Iris Christadler, LRZ, August 2011

The free lunch is over

“But if you want your application to benefit from the continued exponential throughput advances in new processors, it will need to be a well-written concurrent application. And that’s easier said than done, because not all problems are inherently parallelizable and because concurrent programming is hard.”

Herb Sutter, “The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software” [http://www.gotw.ca/publications/concurrency-ddj.htm]

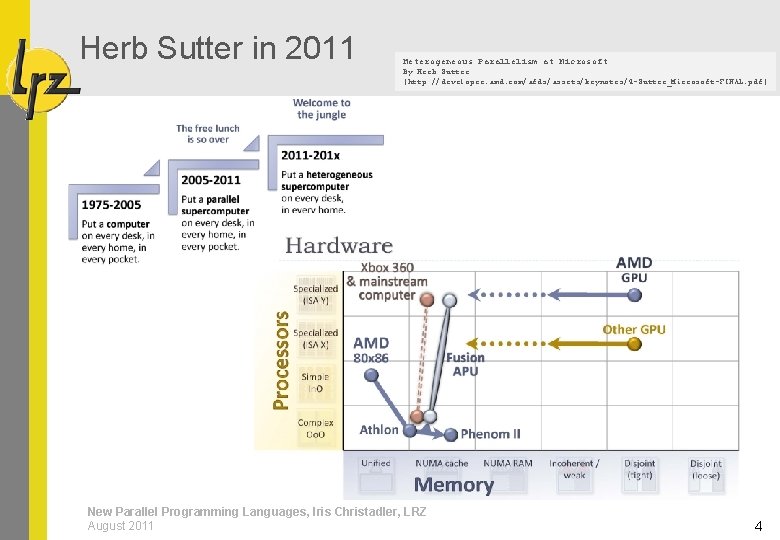

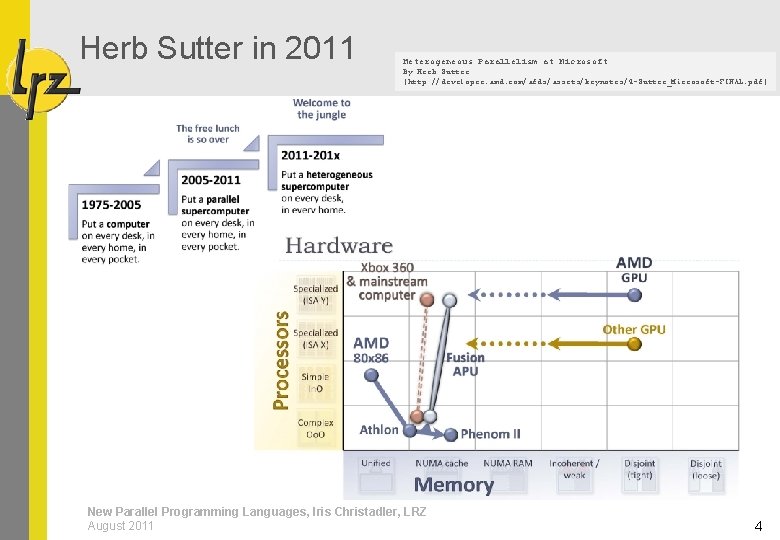

Herb Sutter in 2011

Herb Sutter, “Heterogeneous Parallelism at Microsoft” [http://developer.amd.com/afds/assets/keynotes/4-Sutter_Microsoft-FINAL.pdf]

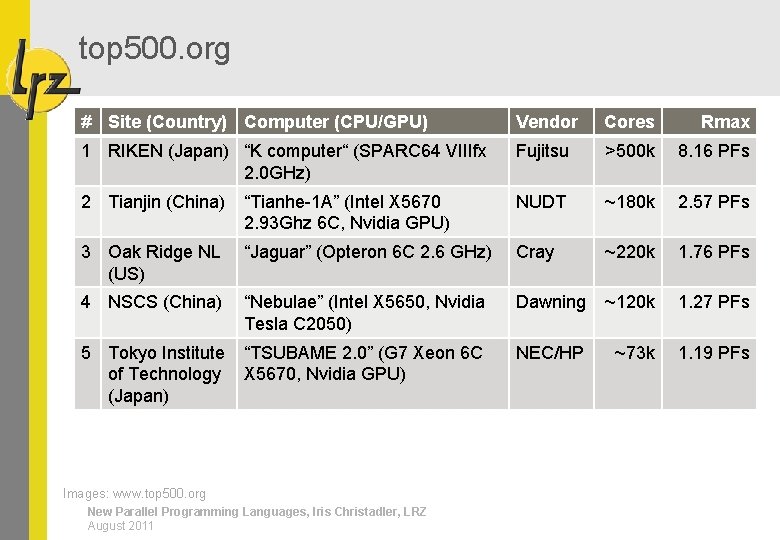

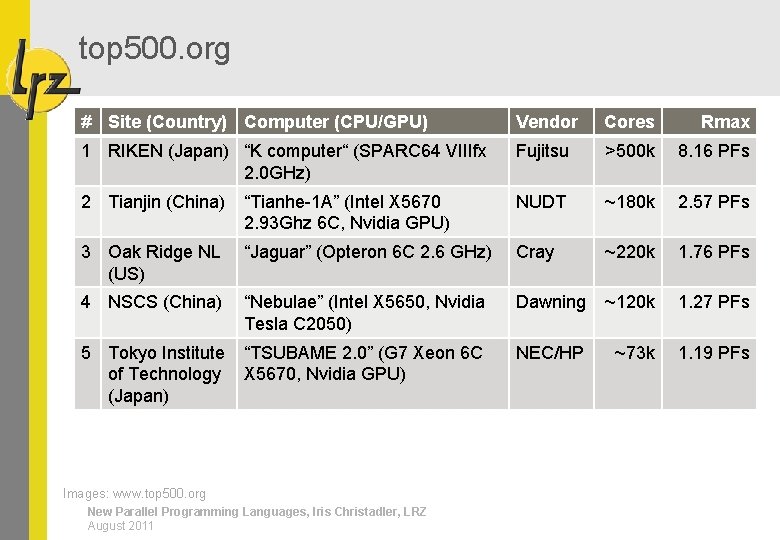

top500.org

| # | Site (Country) | Computer (CPU/GPU) | Vendor | Cores | Rmax (PFlop/s) |
|---|----------------|--------------------|--------|-------|----------------|
| 1 | RIKEN (Japan) | “K computer” (SPARC64 VIIIfx 2.0 GHz) | Fujitsu | >500k | 8.16 |
| 2 | Tianjin (China) | “Tianhe-1A” (Intel X5670 2.93 GHz 6C, Nvidia GPU) | NUDT | ~180k | 2.57 |
| 3 | Oak Ridge NL (US) | “Jaguar” (Opteron 6C 2.6 GHz) | Cray | ~220k | 1.76 |
| 4 | NSCS (China) | “Nebulae” (Intel X5650, Nvidia Tesla C2050) | Dawning | ~120k | 1.27 |
| 5 | Tokyo Institute of Technology (Japan) | “TSUBAME 2.0” (G7 Xeon 6C X5670, Nvidia GPU) | NEC/HP | ~73k | 1.19 |

Images: www.top500.org

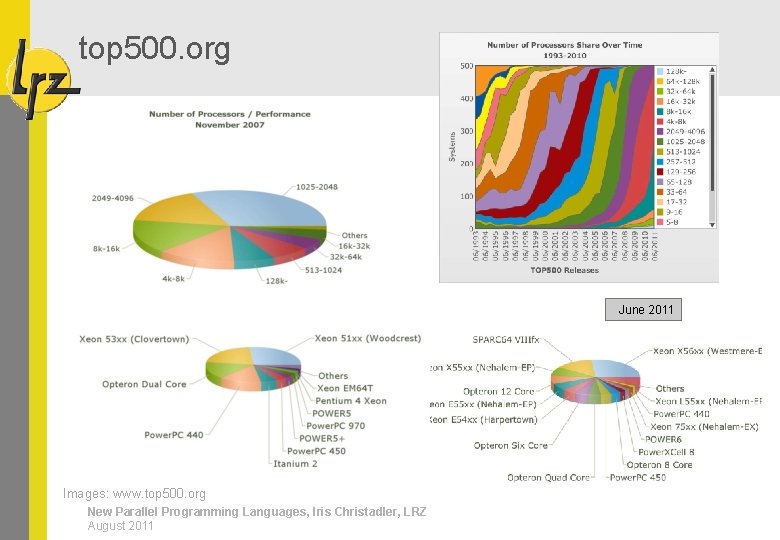

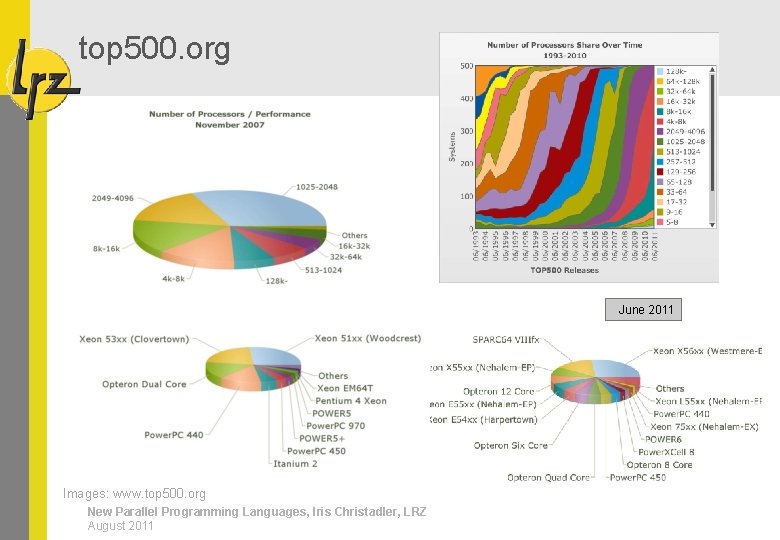

top500.org, June 2011

Images: www.top500.org

![My favorite “Dongarra” slides](https://slidetodoc.com/presentation_image_h2/7aba8a321f5a0e5e98cfc6954d3392c4/image-7.jpg)

My favorite “Dongarra” slides

[http://www.netlib.org/utk/people/JackDongarra/SLIDES/dongarra-isc2004.pdf]
[http://www.netlib.org/utk/people/JackDongarra/SLIDES/sc09-exascale-panel.pdf]

HARDWARE ACCELERATORS: New languages enter the HPC world

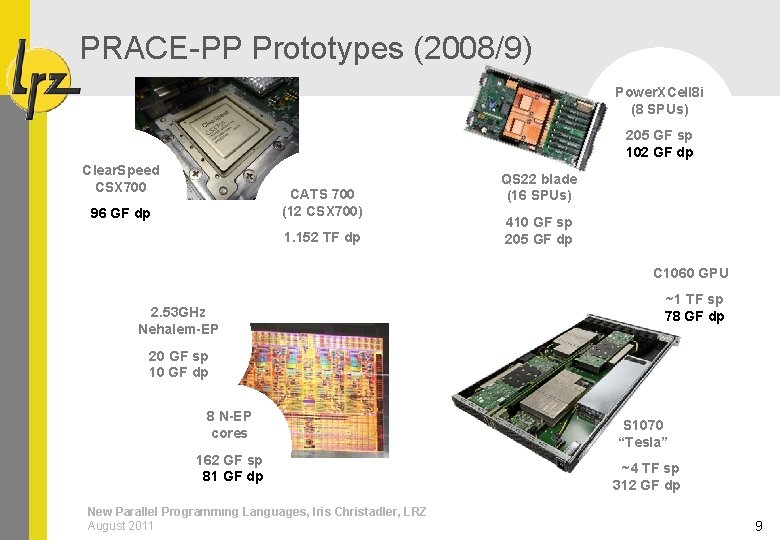

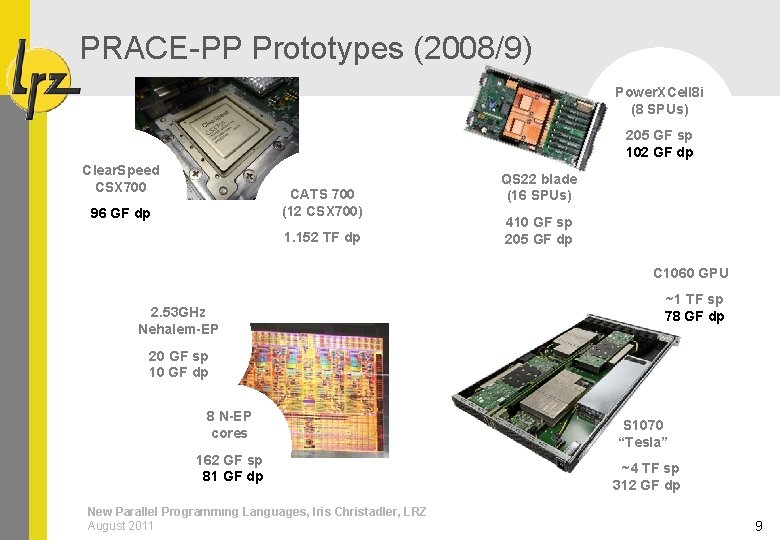

PRACE-PP Prototypes (2008/9)

Peak performance, single precision (sp) and double precision (dp):

| Device | sp | dp |
|--------|----|----|
| PowerXCell 8i (8 SPUs) | 205 GF | 102 GF |
| QS22 blade (16 SPUs) | 410 GF | 205 GF |
| ClearSpeed CSX700 | | 96 GF |
| CATS700 (12 CSX700) | | 1.152 TF |
| C1060 GPU | ~1 TF | 78 GF |
| S1070 “Tesla” | ~4 TF | 312 GF |
| 2.53 GHz Nehalem-EP (one core) | 20 GF | 10 GF |
| 8 N-EP cores | 162 GF | 81 GF |
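As a rough cross-check, several of the peak figures on this slide follow from simple multiplication. The per-core factor of 4 double-precision flops per cycle for Nehalem-EP (one 2-wide SSE add plus one 2-wide SSE multiply per cycle), the two PowerXCell 8i processors per QS22 blade, and the four GPUs per S1070 are assumptions that are not stated on the slide:

$$
\begin{aligned}
\text{Nehalem-EP core (dp)} &\approx 2.53\,\text{GHz} \times 4\,\tfrac{\text{flop}}{\text{cycle}} \approx 10\,\text{GF} \\
\text{8 N-EP cores (dp)} &\approx 8 \times 10.12\,\text{GF} \approx 81\,\text{GF} \\
\text{QS22 blade (sp)} &= 2 \times 205\,\text{GF} = 410\,\text{GF} \\
\text{CATS700 (dp)} &= 12 \times 96\,\text{GF} = 1.152\,\text{TF} \\
\text{S1070 (dp)} &= 4 \times 78\,\text{GF} = 312\,\text{GF}
\end{aligned}
$$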

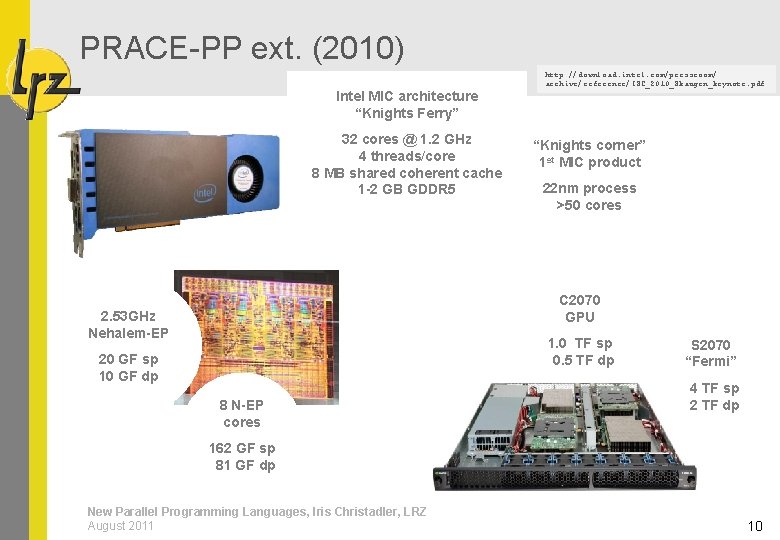

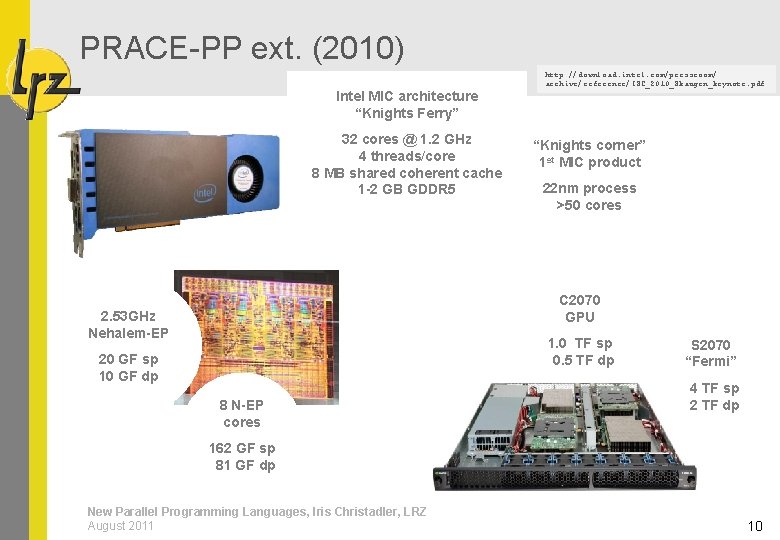

PRACE-PP ext. (2010)

Intel MIC architecture:

- “Knights Ferry”: 32 cores @ 1.2 GHz, 4 threads/core, 8 MB shared coherent cache, 1-2 GB GDDR5
- “Knights Corner”: 1st MIC product, 22 nm process, >50 cores
- Source: http://download.intel.com/pressroom/archive/reference/ISC_2010_Skaugen_keynote.pdf

Peak performance, single precision (sp) and double precision (dp):

| Device | sp | dp |
|--------|----|----|
| C2070 GPU | 1.0 TF | 0.5 TF |
| S2070 “Fermi” | 4 TF | 2 TF |
| 2.53 GHz Nehalem-EP (one core) | 20 GF | 10 GF |
| 8 N-EP cores | 162 GF | 81 GF |

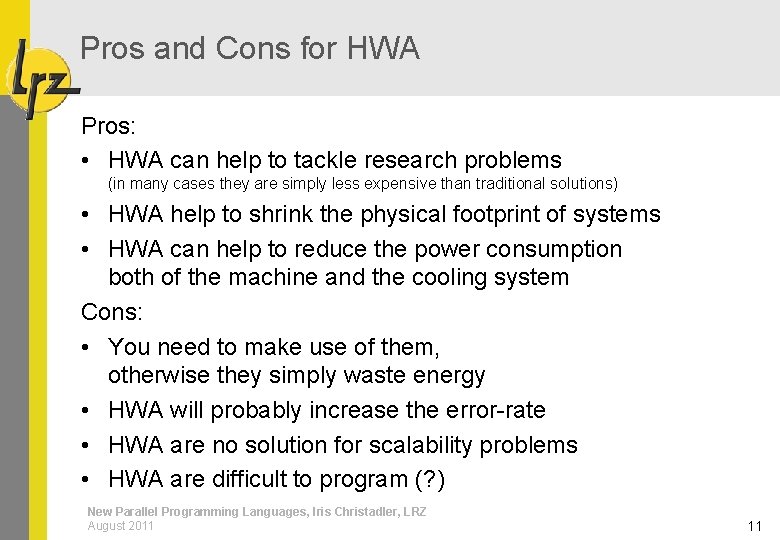

Pros and Cons for HWA

Pros:

- HWA can help to tackle research problems (in many cases they are simply less expensive than traditional solutions)
- HWA help to shrink the physical footprint of systems
- HWA can help to reduce the power consumption of both the machine and the cooling system

Cons:

- You need to make use of them, otherwise they simply waste energy
- HWA will probably increase the error rate
- HWA are no solution for scalability problems
- HWA are difficult to program (?)

THE QUEST FOR A PARALLEL LANGUAGE: Examples of emerging languages

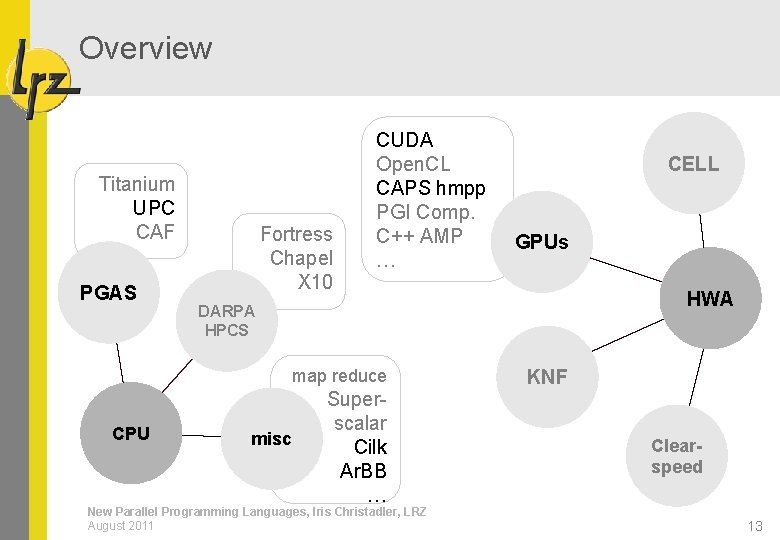

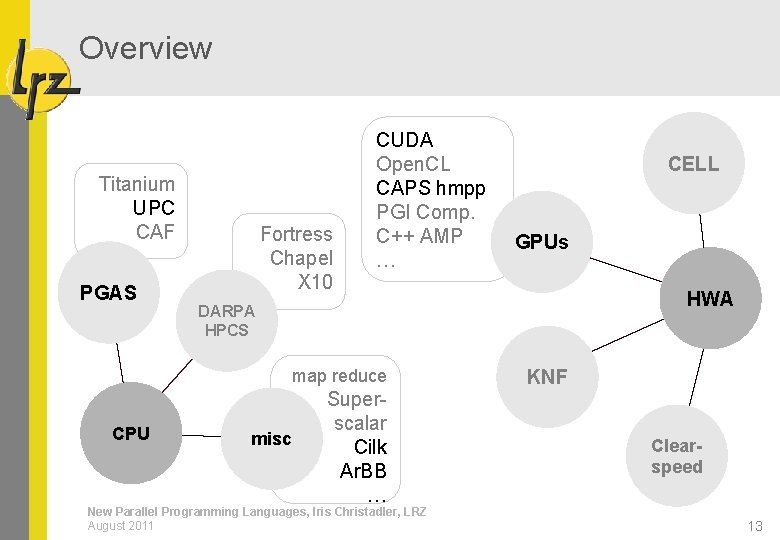

Overview

(Diagram of languages grouped by family and target hardware.)

- PGAS: Titanium, UPC, CAF
- DARPA HPCS: Fortress, Chapel, X10
- Further languages shown: CUDA, OpenCL, CAPS hmpp, PGI Comp., C++ AMP, …, map reduce, Superscalar, Cilk, ArBB, …
- Further group and target labels: GPUs, HWA, CPU, CELL, KNF, Clearspeed, misc

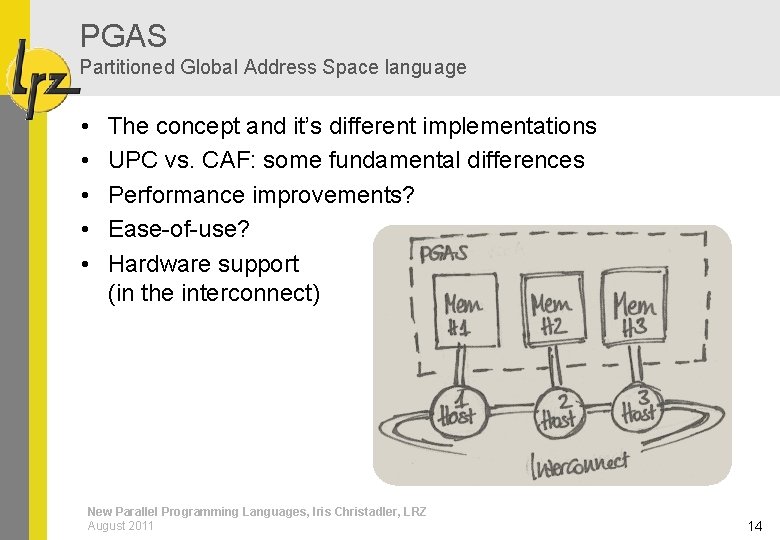

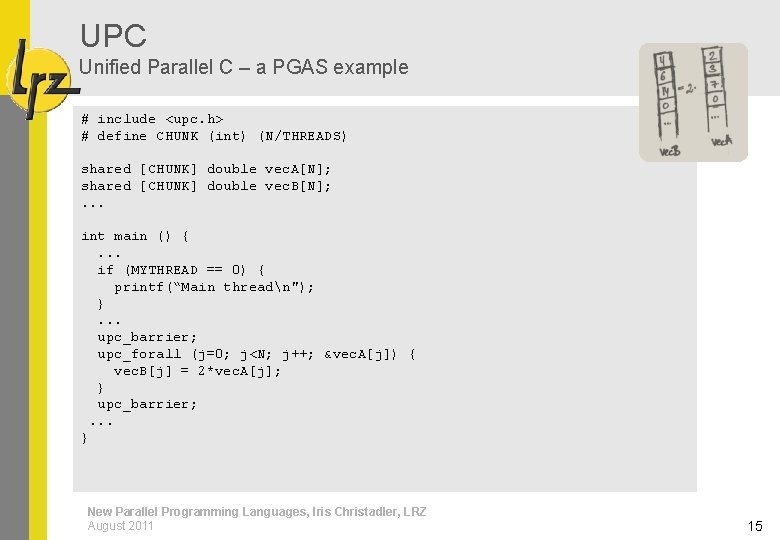

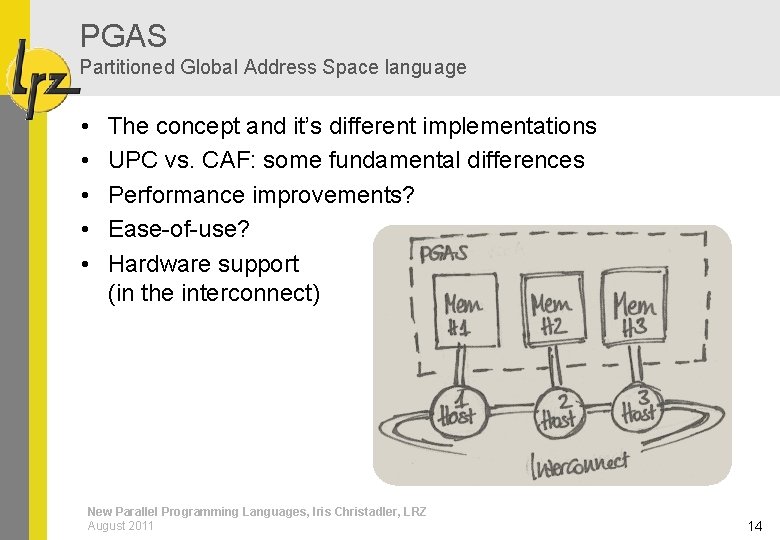

PGAS: Partitioned Global Address Space languages

- The concept and its different implementations
- UPC vs. CAF: some fundamental differences
- Performance improvements? Ease-of-use?
- Hardware support (in the interconnect)

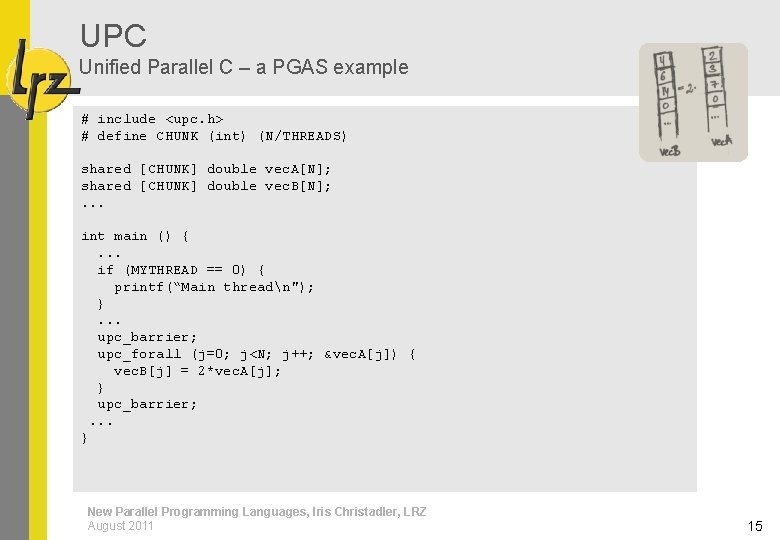

UPC: Unified Parallel C, a PGAS example

    #include <stdio.h>
    #include <upc.h>
    #define CHUNK (int)(N/THREADS)

    /* shared arrays, distributed in blocks of CHUNK elements per thread */
    shared [CHUNK] double vecA[N];
    shared [CHUNK] double vecB[N];
    ...
    int main() {
      ...
      if (MYTHREAD == 0) {
        printf("Main thread\n");
      }
      ...
      upc_barrier;
      /* each iteration runs on the thread that owns vecA[j] */
      upc_forall (j = 0; j < N; j++; &vecA[j]) {
        vecB[j] = 2*vecA[j];
      }
      upc_barrier;
      ...
    }
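The owner-computes rule behind `upc_forall` can be sketched in plain C++. This is a sequential emulation under stated assumptions, not UPC: the helper names `owner_of` and `scale_owner_computes` are hypothetical, the "threads" are simulated one after another, and the block-cyclic layout (element `j` belongs to thread `(j / CHUNK) % THREADS`) is modelled by index arithmetic only.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: thread with affinity to element j when the array is
// laid out in blocks of `chunk` elements dealt round-robin to `threads`.
std::size_t owner_of(std::size_t j, std::size_t chunk, std::size_t threads) {
    return (j / chunk) % threads;
}

// Emulates: upc_forall (j = 0; j < N; j++; &vecA[j]) vecB[j] = 2*vecA[j];
// Every simulated thread walks the full index range but only touches
// the elements it owns.
std::vector<double> scale_owner_computes(const std::vector<double>& vecA,
                                         std::size_t threads) {
    assert(threads > 0);
    const std::size_t n = vecA.size();
    // CHUNK = N/THREADS as on the slide; clamp to 1 so tiny arrays still work.
    const std::size_t chunk = (n / threads > 0) ? n / threads : 1;
    std::vector<double> vecB(n, 0.0);
    for (std::size_t me = 0; me < threads; ++me) {      // plays MYTHREAD
        for (std::size_t j = 0; j < n; ++j) {
            if (owner_of(j, chunk, threads) == me) {     // affinity test
                vecB[j] = 2 * vecA[j];
            }
        }
    }
    return vecB;
}
```

In real UPC all threads run the loop at the same time and the affinity test is evaluated by the runtime; the sketch only shows which thread would execute which iteration.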

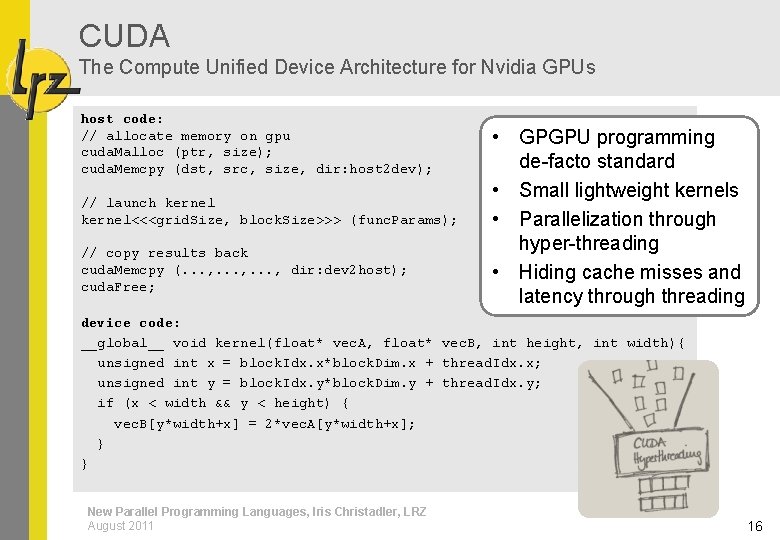

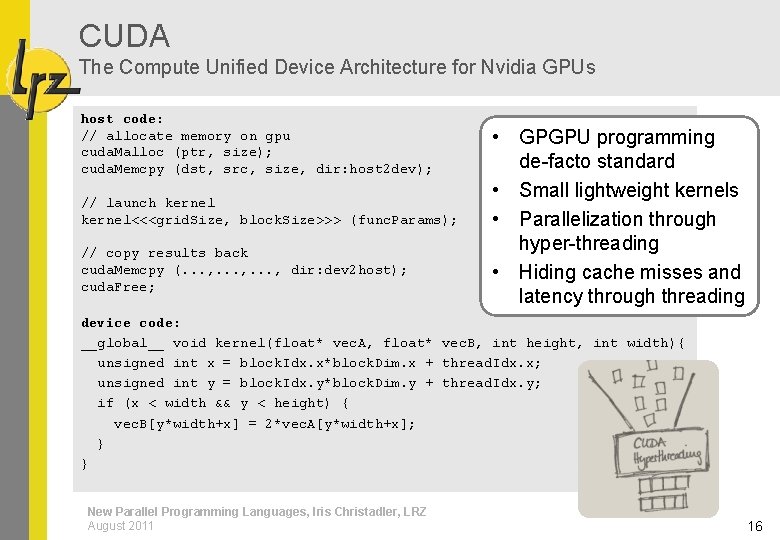

CUDA: The Compute Unified Device Architecture for Nvidia GPUs

- GPGPU programming de-facto standard
- Small lightweight kernels
- Parallelization through hyper-threading
- Hiding cache misses and latency through threading

host code:

    // allocate memory on gpu
    cudaMalloc(&ptr, size);
    cudaMemcpy(dst, src, size, cudaMemcpyHostToDevice);
    // launch kernel
    kernel<<<gridSize, blockSize>>>(funcParams);
    // copy results back
    cudaMemcpy(..., cudaMemcpyDeviceToHost);
    cudaFree(ptr);

device code:

    __global__ void kernel(float* vecA, float* vecB, int height, int width) {
      // global 2D coordinates of this thread
      unsigned int x = blockIdx.x*blockDim.x + threadIdx.x;
      unsigned int y = blockIdx.y*blockDim.y + threadIdx.y;
      // guard: the grid may be larger than the data
      if (x < width && y < height) {
        vecB[y*width+x] = 2*vecA[y*width+x];
      }
    }
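To see how the index arithmetic in the device code covers a 2D array, the launch can be emulated on the CPU in plain C++. This is a sketch under assumptions, not CUDA: `Dim2`, `kernel_body` and `launch_on_cpu` are hypothetical names, the nested loops stand in for the hardware scheduler, and the grid size is computed by rounding up so that every element is covered.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Dim2 { unsigned x, y; };  // hypothetical stand-in for CUDA's dim3

// Work done by one (block, thread) pair, mirroring the slide's kernel.
void kernel_body(Dim2 blockIdx, Dim2 blockDim, Dim2 threadIdx,
                 const std::vector<float>& vecA, std::vector<float>& vecB,
                 unsigned height, unsigned width) {
    unsigned x = blockIdx.x * blockDim.x + threadIdx.x;
    unsigned y = blockIdx.y * blockDim.y + threadIdx.y;
    if (x < width && y < height) {  // threads past the edge do nothing
        vecB[y * width + x] = 2 * vecA[y * width + x];
    }
}

// Sequentially visits every thread of every block of the emulated grid.
std::vector<float> launch_on_cpu(const std::vector<float>& vecA,
                                 unsigned height, unsigned width,
                                 Dim2 blockDim) {
    assert(vecA.size() == static_cast<std::size_t>(height) * width);
    assert(blockDim.x > 0 && blockDim.y > 0);
    // Round up so partial blocks at the right and bottom edges exist.
    Dim2 gridDim{(width + blockDim.x - 1) / blockDim.x,
                 (height + blockDim.y - 1) / blockDim.y};
    std::vector<float> vecB(vecA.size(), 0.0f);
    for (unsigned by = 0; by < gridDim.y; ++by)
        for (unsigned bx = 0; bx < gridDim.x; ++bx)
            for (unsigned ty = 0; ty < blockDim.y; ++ty)
                for (unsigned tx = 0; tx < blockDim.x; ++tx)
                    kernel_body(Dim2{bx, by}, blockDim, Dim2{tx, ty},
                                vecA, vecB, height, width);
    return vecB;
}
```

With a 5 x 3 array and 4 x 2 blocks, for example, the emulated grid has 2 x 2 blocks and the bounds check discards the threads that fall outside the array.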

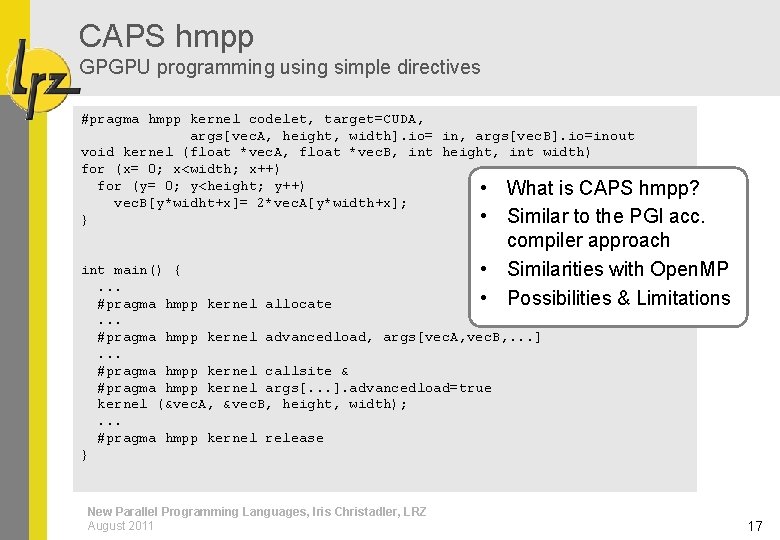

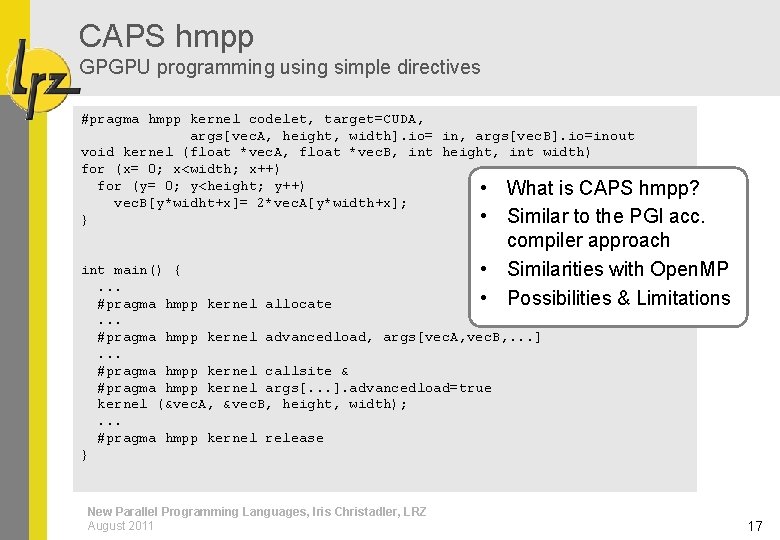

CAPS hmpp: GPGPU programming using simple directives

- What is CAPS hmpp?
- Similar to the PGI acc. compiler approach
- Similarities with OpenMP
- Possibilities & Limitations

    #pragma hmpp kernel codelet, target=CUDA, args[vecA, height, width].io=in, args[vecB].io=inout
    void kernel(float *vecA, float *vecB, int height, int width)
    {
      int x, y;
      for (x = 0; x < width; x++)
        for (y = 0; y < height; y++)
          vecB[y*width+x] = 2*vecA[y*width+x];
    }

    int main() {
      ...
    #pragma hmpp kernel allocate
      ...
    #pragma hmpp kernel advancedload, args[vecA, vecB, ...]
      ...
    #pragma hmpp kernel callsite &
    #pragma hmpp kernel args[...].advancedload=true
      kernel(&vecA, &vecB, height, width);
      ...
    #pragma hmpp kernel release
    }

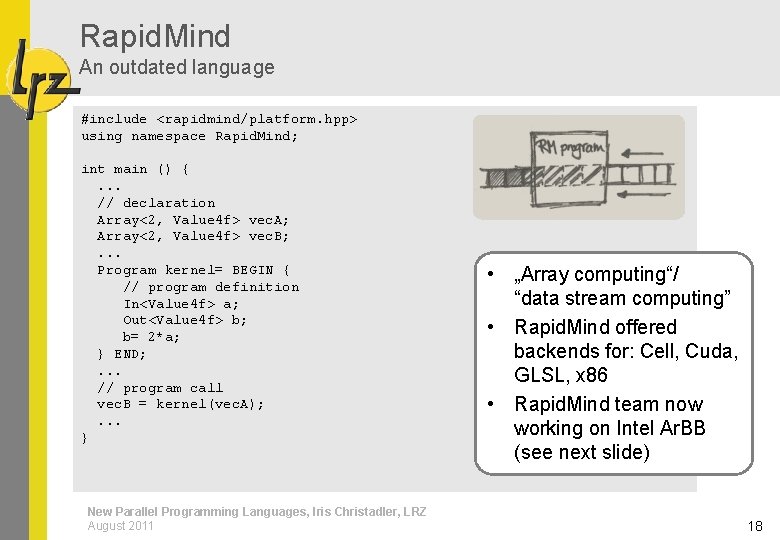

RapidMind: An outdated language

- “Array computing” / “data stream computing”
- RapidMind offered backends for: Cell, CUDA, GLSL, x86
- RapidMind team now working on Intel ArBB (see next slide)

    #include <rapidmind/platform.hpp>
    using namespace RapidMind;

    int main() {
      ...
      // declaration
      Array<2, Value4f> vecA;
      Array<2, Value4f> vecB;
      ...
      Program kernel = BEGIN {
        // program definition
        In<Value4f> a;
        Out<Value4f> b;
        b = 2*a;
      } END;
      ...
      // program call
      vecB = kernel(vecA);
      ...
    }

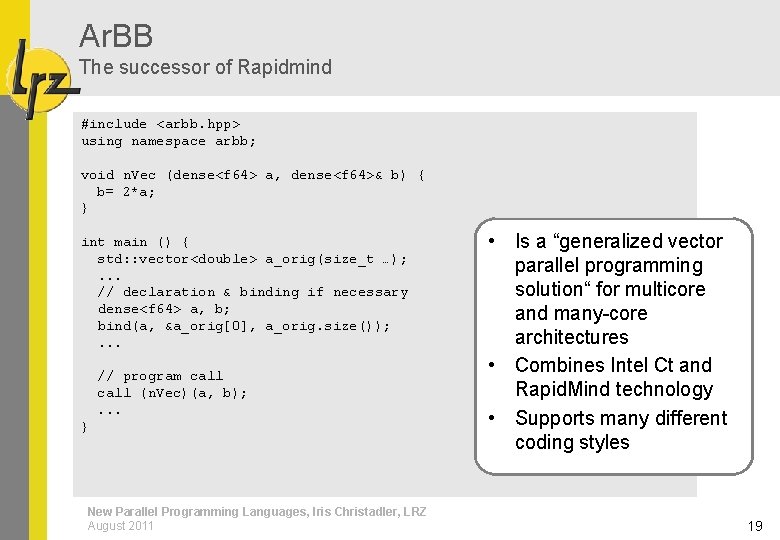

ArBB: The successor of RapidMind

- Is a “generalized vector parallel programming solution” for multicore and many-core architectures
- Combines Intel Ct and RapidMind technology
- Supports many different coding styles

    #include <arbb.hpp>
    using namespace arbb;

    void nVec(dense<f64> a, dense<f64>& b) {
      b = 2*a;
    }

    int main() {
      std::vector<double> a_orig(size_t …);
      ...
      // declaration & binding if necessary
      dense<f64> a, b;
      bind(a, &a_orig[0], a_orig.size());
      ...
      // program call
      call(nVec)(a, b);
      ...
    }

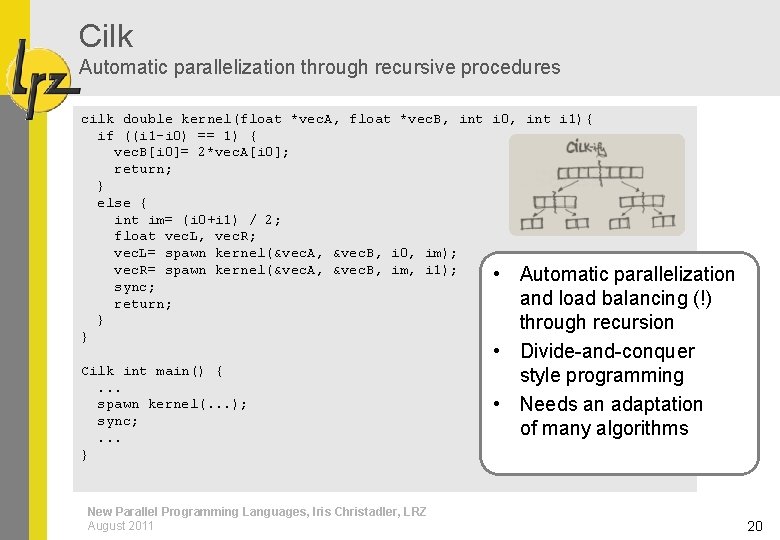

Cilk: Automatic parallelization through recursive procedures

- Automatic parallelization and load balancing (!) through recursion
- Divide-and-conquer style programming
- Needs an adaptation of many algorithms

    /* Doubles vecA[i0..i1) into vecB; assumes i1 > i0. */
    cilk void kernel(float *vecA, float *vecB, int i0, int i1) {
      if ((i1-i0) == 1) {
        vecB[i0] = 2*vecA[i0];
        return;
      } else {
        int im = (i0+i1) / 2;
        /* the two halves may run in parallel */
        spawn kernel(vecA, vecB, i0, im);
        spawn kernel(vecA, vecB, im, i1);
        sync;
        return;
      }
    }

    cilk int main() {
      ...
      spawn kernel(...);
      sync;
      ...
    }
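The same divide-and-conquer pattern can be approximated in standard C++ with `std::async`, where launching a task plays the role of `spawn` and waiting on the future plays the role of `sync`. This is a sketch under assumptions, not Cilk: the names `scale_range` and `kSerialCutoff` are hypothetical, and `std::async` starts a thread per task instead of using a work-stealing scheduler, so a serial cutoff is added to keep the number of threads small.

```cpp
#include <cassert>
#include <cstddef>
#include <future>
#include <vector>

// Hypothetical tuning constant: ranges at or below this size run serially.
constexpr std::size_t kSerialCutoff = 1024;

// Doubles vecA[i0..i1) into vecB by recursively splitting the range.
void scale_range(const float* vecA, float* vecB,
                 std::size_t i0, std::size_t i1) {
    assert(i0 <= i1);
    if (i1 - i0 <= kSerialCutoff) {
        for (std::size_t i = i0; i < i1; ++i) vecB[i] = 2 * vecA[i];
        return;
    }
    const std::size_t im = i0 + (i1 - i0) / 2;
    // "spawn": the left half runs as an asynchronous task.
    std::future<void> left =
        std::async(std::launch::async, scale_range, vecA, vecB, i0, im);
    // The current thread handles the right half itself.
    scale_range(vecA, vecB, im, i1);
    // "sync": wait until the left half has finished.
    left.get();
}
```

A call such as `scale_range(a.data(), b.data(), 0, a.size())` processes a whole `std::vector<float>`. Load balancing here depends on the operating system's thread scheduler, whereas Cilk balances spawned work itself.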

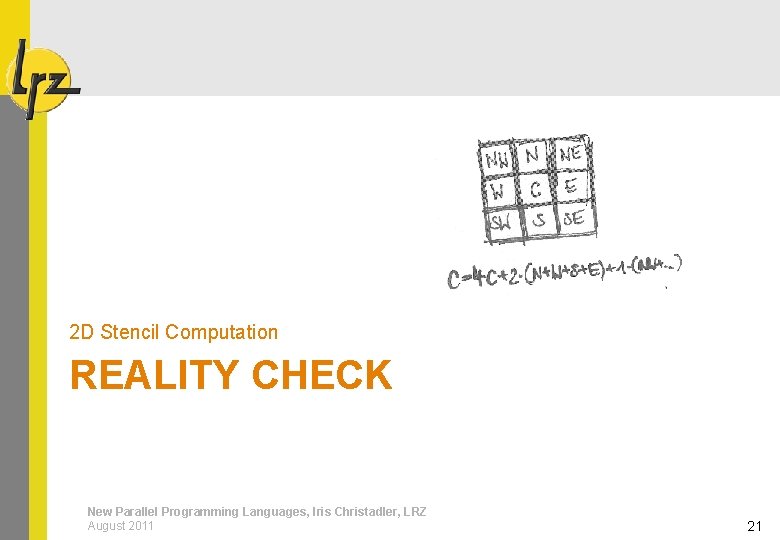

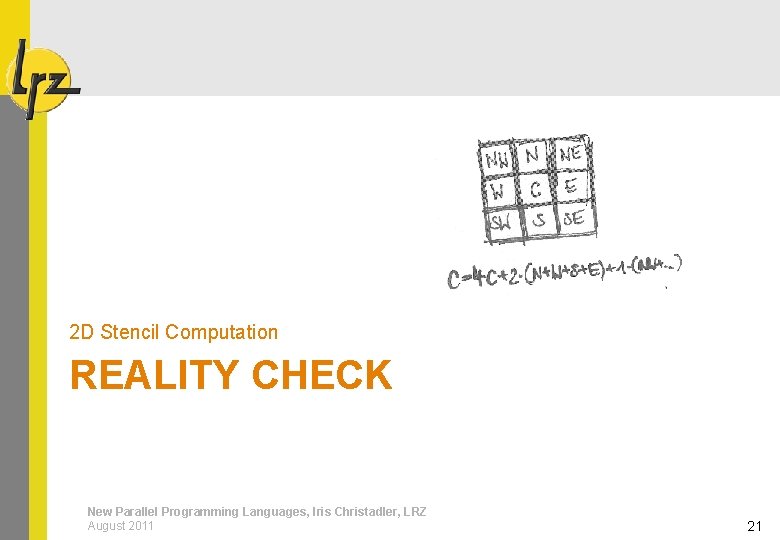

REALITY CHECK: 2D Stencil Computation

Wrap-Up

- There is a need for a new parallel paradigm (in HPC & the rest of the IT-world)
- Hardware accelerators have shown that HPC-folks are willing to use new languages if the performance gain is large enough
- Languages & paradigms:
  - UPC
  - CUDA
  - CAPS hmpp
  - ArBB
  - Cilk

For more information please have a look at the PRACE Deliverables D6.6 and D8.3.2 (www.prace-project.eu).

Contact details: Iris Christadler (christadler@lrz.de), LRZ, Germany

THANK YOU FOR YOUR ATTENTION!