- Slides: 14

New Algorithms for Efficient High-Dimensional Nonparametric Classification Ting Liu, Andrew W. Moore, and Alexander Gray

Overview

- Introduction
  - k Nearest Neighbors (k-NN)
  - KNS1: conventional k-NN search
- New algorithms for k-NN classification
  - KNS2: for skewed-class data
  - KNS3: "are at least t of the k-NN positive?"
- Results
- Comments

Introduction: k-NN

- Nonparametric classification method.
- Given a data set of n data points, it finds the k points closest to a query point q and assigns the label held by the majority of them.
- The computational cost is too high in many settings, especially in the high-dimensional case.

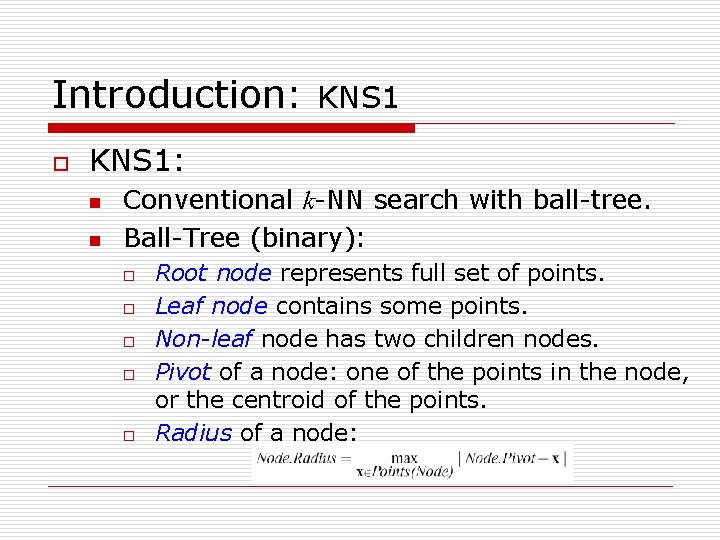

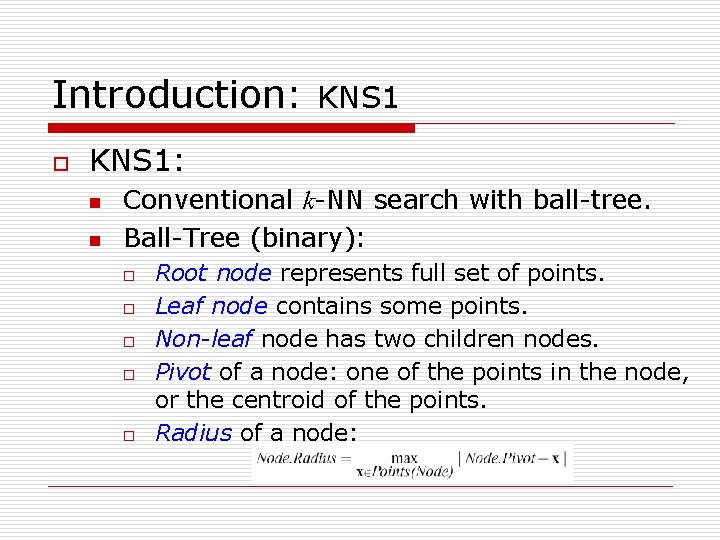
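As a point of reference for the faster algorithms that follow, the k-NN rule described above can be sketched as a brute-force scan. This is a minimal illustration, not code from the talk; the function name `knn_classify` and the toy data are assumptions made for the example, and Euclidean distance is assumed.

```python
import math
from collections import Counter

def knn_classify(points, labels, q, k):
    """Return the majority label among the k points closest to q (brute force)."""
    # Rank every training point by Euclidean distance to the query: O(n) distance
    # computations per query, which is the cost the ball-tree methods try to avoid.
    order = sorted(range(len(points)), key=lambda i: math.dist(points[i], q))
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): two negatives near the origin, three positives near (1, 1).
points = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 1.1), (1.2, 0.8)]
labels = ["neg", "neg", "pos", "pos", "pos"]
print(knn_classify(points, labels, (1.0, 0.9), k=3))
```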

Introduction: KNS1

- Conventional k-NN search with a ball-tree.
- Ball-tree (binary):
  - The root node represents the full set of points.
  - A leaf node contains some points.
  - A non-leaf node has two child nodes.
  - Pivot of a node: one of the points in the node, or the centroid of the points.
  - Radius of a node: the maximum distance from the pivot to any point in the node.

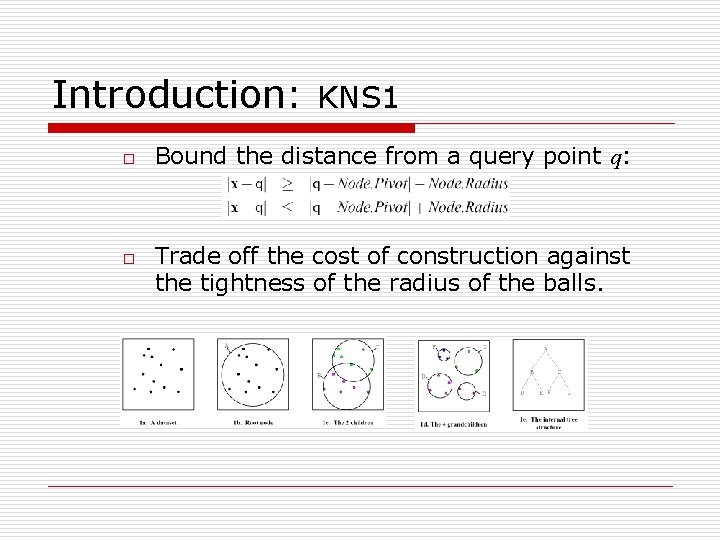

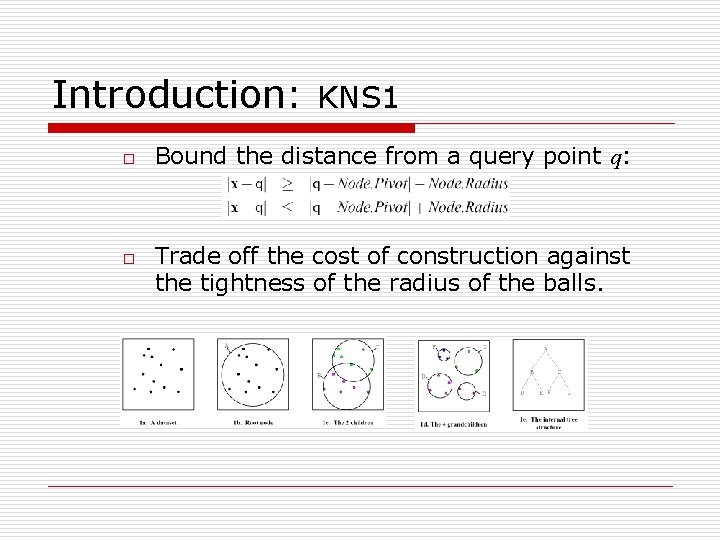
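The ball-tree structure described above can be sketched as follows. This is an illustrative construction under stated assumptions: the pivot is taken to be the centroid, and the split rule (pick two far-apart points and assign every point to the nearer one) is an assumed heuristic, since the slides do not specify one. The names `BallNode`, `build_ball_tree`, and `leaf_size` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, ...]

@dataclass
class BallNode:
    pivot: Point                        # centroid of the node's points (assumed choice)
    radius: float                       # max distance from pivot to any owned point
    points: List[Point]                 # all points owned by this node
    left: Optional["BallNode"] = None
    right: Optional["BallNode"] = None

def centroid(pts: List[Point]) -> Point:
    dim = len(pts[0])
    return tuple(sum(p[i] for p in pts) / len(pts) for i in range(dim))

def build_ball_tree(pts: List[Point], leaf_size: int = 2) -> BallNode:
    pivot = centroid(pts)
    radius = max(math.dist(pivot, p) for p in pts)
    node = BallNode(pivot, radius, list(pts))
    if len(pts) <= leaf_size:
        return node                     # leaf: keeps its points, no children
    # Assumed split heuristic: a is far from an arbitrary point, b is far from a.
    a = max(pts, key=lambda p: math.dist(pts[0], p))
    b = max(pts, key=lambda p: math.dist(a, p))
    left = [p for p in pts if math.dist(p, a) <= math.dist(p, b)]
    right = [p for p in pts if math.dist(p, a) > math.dist(p, b)]
    if not left or not right:           # all points coincide: keep as a leaf
        return node
    node.left = build_ball_tree(left, leaf_size)
    node.right = build_ball_tree(right, leaf_size)
    return node

pts = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 1.1), (1.2, 0.8), (0.2, 0.1)]
tree = build_ball_tree(pts)
```

Spending more effort on the split yields tighter balls and better pruning at query time, at the price of a slower build.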

Introduction: KNS1

- Bound the distance from a query point q to the points in a node.
- Trade off the cost of construction against the tightness of the radius of the balls.

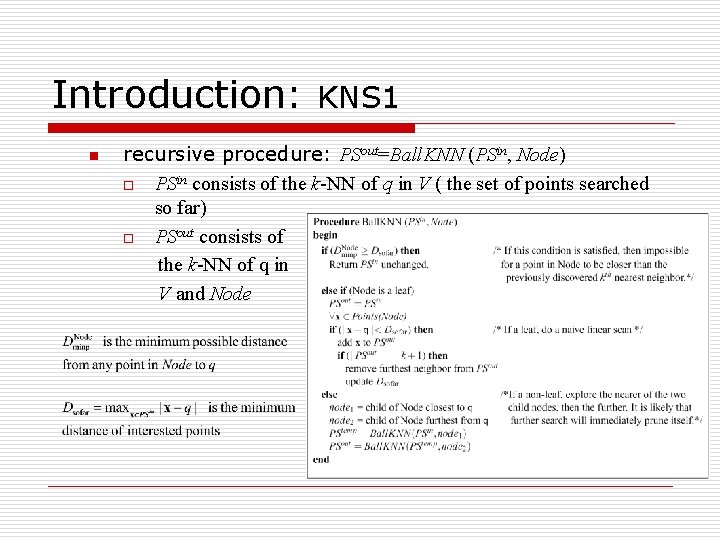

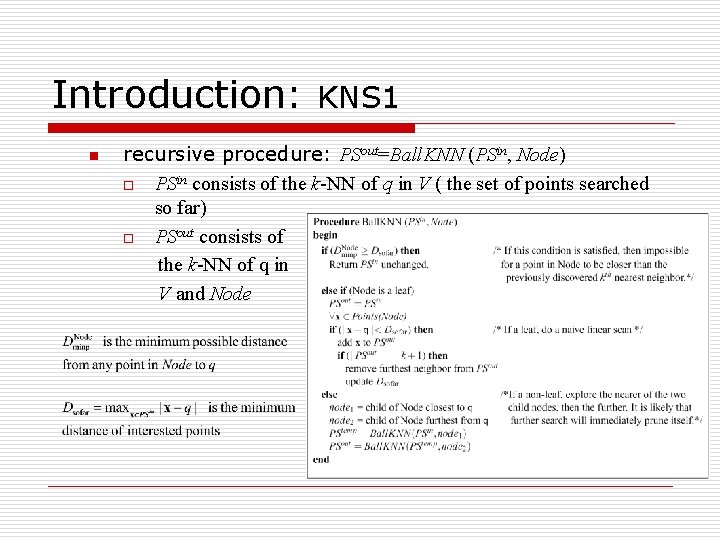
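The bound itself appears only as an image in the slides. A standard form, which follows from the triangle inequality and the definitions of pivot and radius, is given here as an assumed reconstruction rather than a transcription of the slide:

```latex
\max\bigl(0,\ \lVert q - \mathrm{Node.Pivot} \rVert - \mathrm{Node.Radius}\bigr)
\;\le\; \lVert x - q \rVert \;\le\;
\lVert q - \mathrm{Node.Pivot} \rVert + \mathrm{Node.Radius}
\qquad \text{for all } x \in \mathrm{Node}.
```

The lower bound is what permits pruning: if it already exceeds the distance to the current k-th nearest neighbor, no point in the node can improve the result.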

Introduction: KNS1

KNS1 recursive procedure: PSout = BallKNN(PSin, Node)

- PSin consists of the k-NN of q in V (the set of points searched so far).
- PSout consists of the k-NN of q in V together with the points in Node.

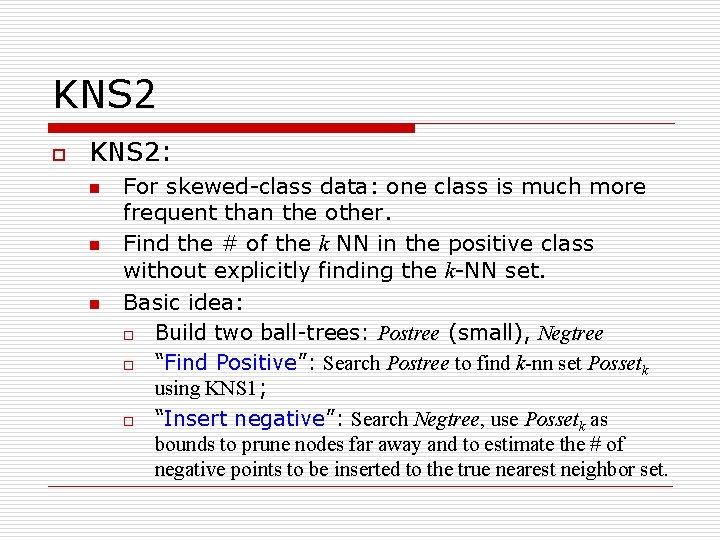

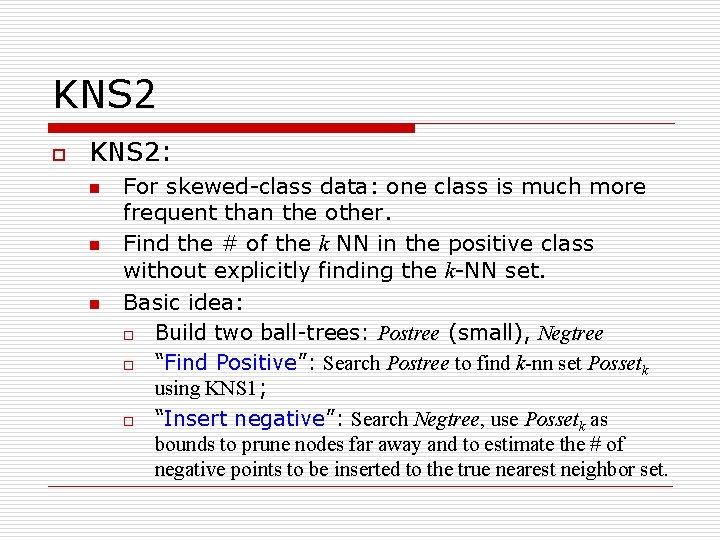
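The recursive procedure above can be sketched as below. This is a minimal illustration assuming a dict-based ball-tree with centroid pivots and a simple split heuristic; the helper `build`, the function name `ball_knn`, and the heap representation of PSin/PSout are choices made for this example, not details taken from the slides.

```python
import heapq
import math

def build(pts, leaf_size=2):
    """Tiny ball-tree as nested dicts (centroid pivot, assumed split heuristic)."""
    dim = len(pts[0])
    pivot = tuple(sum(p[i] for p in pts) / len(pts) for i in range(dim))
    node = {"pivot": pivot, "radius": max(math.dist(pivot, p) for p in pts),
            "points": pts, "children": []}
    if len(pts) > leaf_size:
        a = max(pts, key=lambda p: math.dist(pts[0], p))
        b = max(pts, key=lambda p: math.dist(a, p))
        left = [p for p in pts if math.dist(p, a) <= math.dist(p, b)]
        right = [p for p in pts if math.dist(p, a) > math.dist(p, b)]
        if left and right:
            node["children"] = [build(left, leaf_size), build(right, leaf_size)]
    return node

def ball_knn(ps_in, node, q, k):
    """ps_in is a max-heap of (-distance, point): the k-NN of q among points seen
    so far. Returns the same heap updated with the points under `node` (PSout)."""
    # Lower bound on the distance from q to any point inside this ball.
    lower = max(0.0, math.dist(q, node["pivot"]) - node["radius"])
    if len(ps_in) == k and lower >= -ps_in[0][0]:
        return ps_in                               # prune: nothing here can be closer
    if not node["children"]:                       # leaf: scan its points
        for p in node["points"]:
            dist = math.dist(q, p)
            if len(ps_in) < k:
                heapq.heappush(ps_in, (-dist, p))
            elif dist < -ps_in[0][0]:
                heapq.heapreplace(ps_in, (-dist, p))
        return ps_in
    # Visit the child whose pivot is nearer first, so bounds tighten early.
    for child in sorted(node["children"], key=lambda c: math.dist(q, c["pivot"])):
        ps_in = ball_knn(ps_in, child, q, k)
    return ps_in

pts = [(0.0, 0.0), (0.1, 0.2), (1.0, 1.0), (0.9, 1.1), (1.2, 0.8), (0.2, 0.1)]
neighbours = sorted((-d, p) for d, p in ball_knn([], build(pts), (1.0, 0.9), k=3))
print(neighbours)
```

The initial call passes an empty PSin, which corresponds to V being empty.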

KNS2

- For skewed-class data: one class is much more frequent than the other.
- Find the number of the k-NN that are in the positive class without explicitly finding the k-NN set.
- Basic idea:
  - Build two ball-trees: Postree (small) and Negtree.
  - "Find positive": search Postree to find the k-NN set Possetk using KNS1.
  - "Insert negative": search Negtree, using Possetk as bounds to prune nodes far away and to estimate the number of negative points to be inserted into the true nearest-neighbor set.

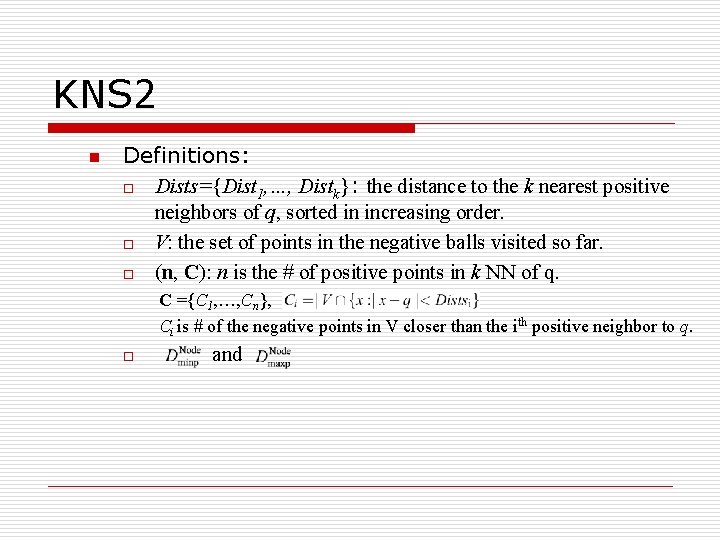

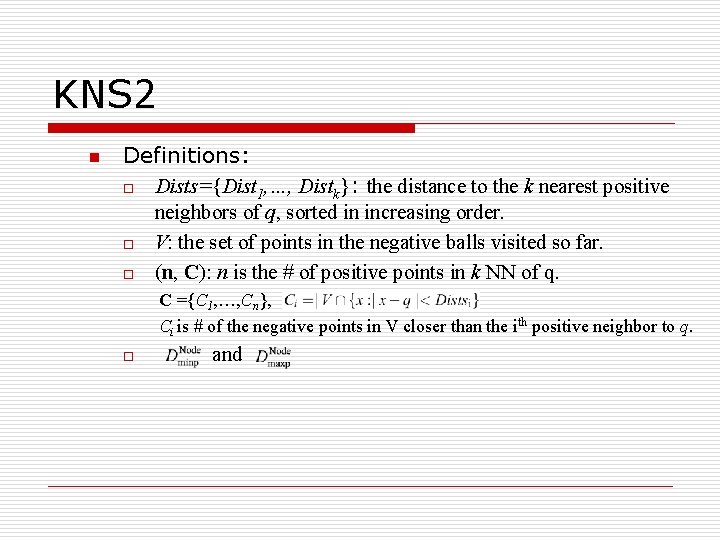

KNS2

Definitions:

- Dists = {Dist_1, …, Dist_k}: the distances to the k nearest positive neighbors of q, sorted in increasing order.
- V: the set of points in the negative balls visited so far.
- (n, C): n is the number of positive points in the k-NN of q; C = {C_1, …, C_n}, where C_i is the number of negative points in V closer to q than the i-th positive neighbor.

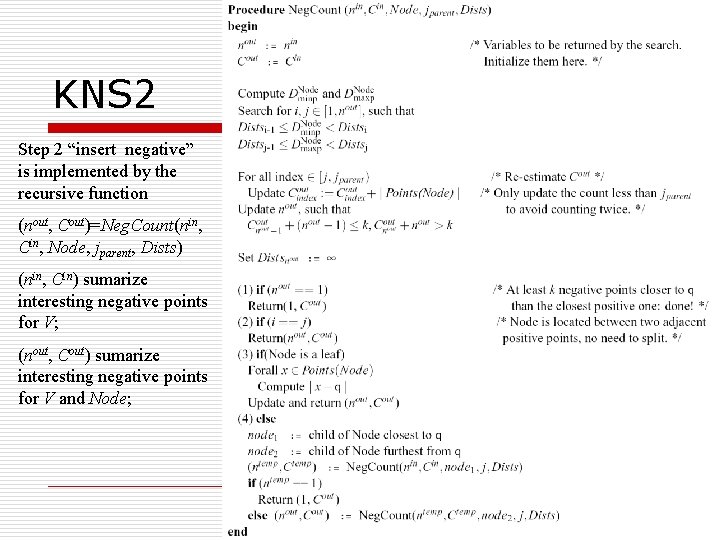

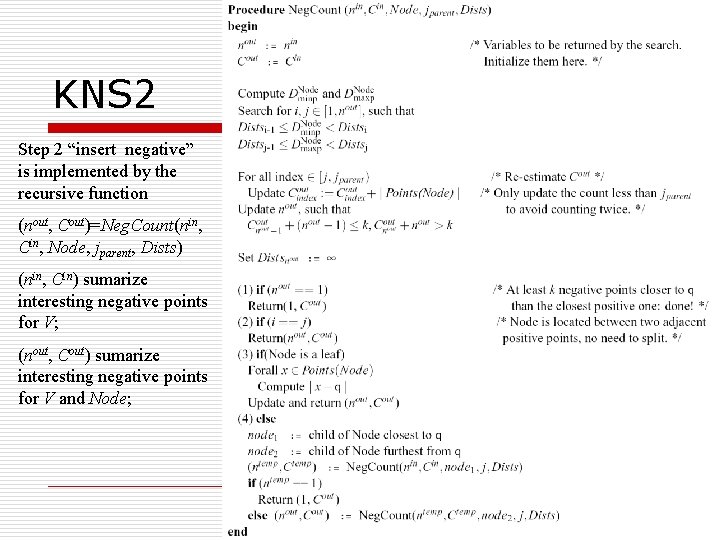
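To make the (n, C) summary concrete, the sketch below computes it by brute force from Dists and the distances of the negative points in V. It is an illustration of the definitions only, not the ball-tree recursion; the function name `summarize_negatives` is hypothetical, and distances are assumed to be distinct. It relies on the observation that the i-th positive neighbor has overall rank i + C_i, so it belongs to the k-NN exactly when i + C_i ≤ k.

```python
from bisect import bisect_left

def summarize_negatives(dists, neg_dists, k):
    """dists: sorted distances from q to its k nearest positive points.
    neg_dists: distances from q to the negative points in V.
    Returns (n, C) as defined above."""
    neg_sorted = sorted(neg_dists)
    # counts[i-1] = number of negatives strictly closer to q than the i-th positive.
    counts = [bisect_left(neg_sorted, d) for d in dists]
    n = 0
    for i, c in enumerate(counts, start=1):
        if i + c <= k:      # the i-th positive still ranks within the k-NN
            n = i
        else:
            break           # i + C_i only grows with i, so later positives are out too
    return n, counts[:n]

# Hypothetical example: three positives, four negatives, k = 3.
print(summarize_negatives([0.2, 0.5, 0.9], [0.1, 0.3, 0.4, 1.5], k=3))
```

In this example the three nearest points are at distances 0.1 (negative), 0.2 (positive), and 0.3 (negative), so only one positive point survives.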

KNS2

Step 2, "insert negative", is implemented by the recursive function

(nout, Cout) = NegCount(nin, Cin, Node, jparent, Dists)

- (nin, Cin) summarize the interesting negative points for V.
- (nout, Cout) summarize the interesting negative points for V and Node.

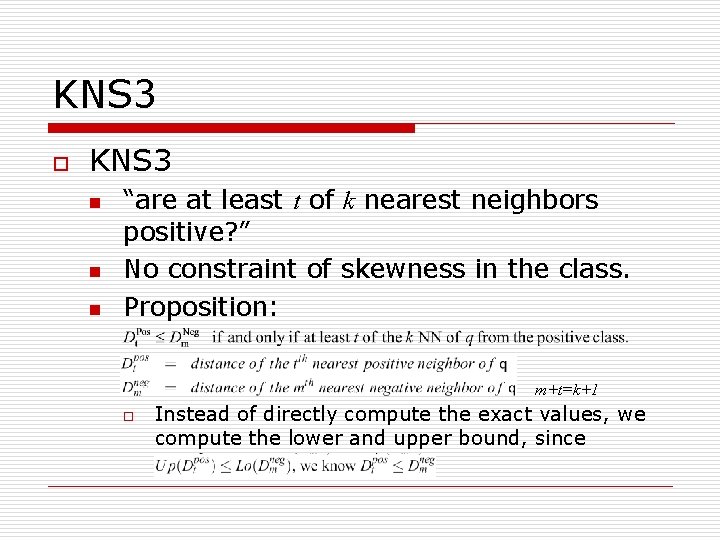

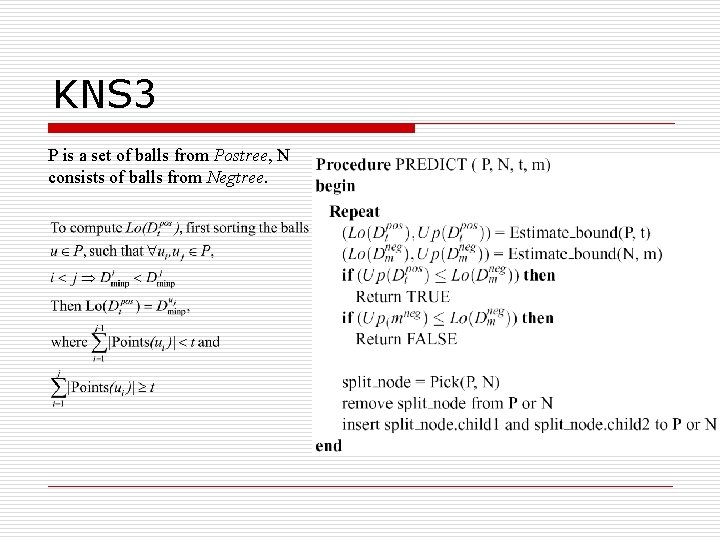

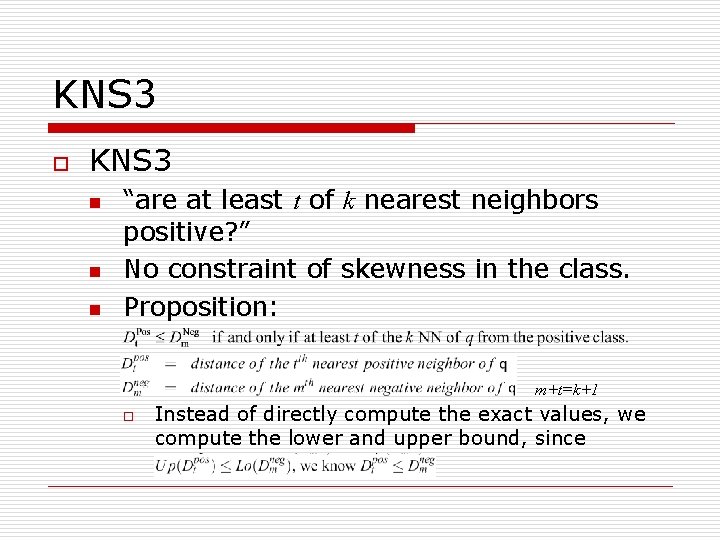

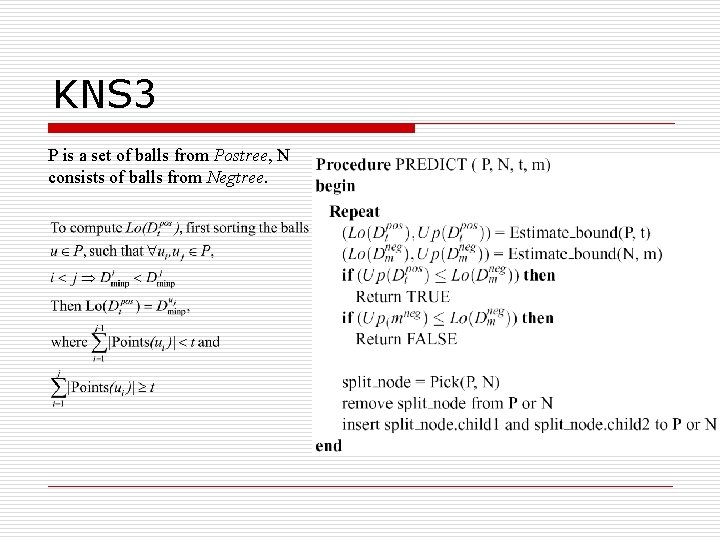

KNS3

- "Are at least t of the k nearest neighbors positive?"
- No constraint on the skewness of the classes.
- Proposition: m + t = k + 1
  - Instead of computing the exact values directly, we compute lower and upper bounds on them.
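The body of the proposition did not survive in the text. The reading assumed here is that at least t of the k nearest neighbors are positive exactly when the distance to the t-th nearest positive point is no larger than the distance to the m-th nearest negative point, with m + t = k + 1. The sketch below checks that reading against a brute-force count; the function names are hypothetical and distinct distances are assumed.

```python
import math

def at_least_t_positive(pos_dists, neg_dists, k, t):
    """Decide the question by comparing two order statistics (assumed reading)."""
    m = k + 1 - t
    pos_sorted, neg_sorted = sorted(pos_dists), sorted(neg_dists)
    if len(pos_sorted) < t:
        return False                    # fewer than t positives exist at all
    dist_t_pos = pos_sorted[t - 1]      # distance to the t-th nearest positive
    # If fewer than m negatives exist, the m-th negative is infinitely far away.
    dist_m_neg = neg_sorted[m - 1] if len(neg_sorted) >= m else math.inf
    return dist_t_pos <= dist_m_neg

def brute_force(pos_dists, neg_dists, k, t):
    """Reference answer: label the k closest points and count the positives."""
    labelled = sorted([(d, 1) for d in pos_dists] + [(d, 0) for d in neg_dists])
    return sum(label for _, label in labelled[:k]) >= t

pos = [0.2, 0.5, 0.9]
neg = [0.1, 0.3, 0.4, 1.5]
print(at_least_t_positive(pos, neg, k=3, t=1), brute_force(pos, neg, k=3, t=1))
```

Because only the comparison matters, it is enough to bound the two distances: once an upper bound on the positive distance falls below a lower bound on the negative distance (or the reverse holds strictly), the answer is known without computing either value exactly.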

KNS3

P is a set of balls from Postree; N is a set of balls from Negtree.

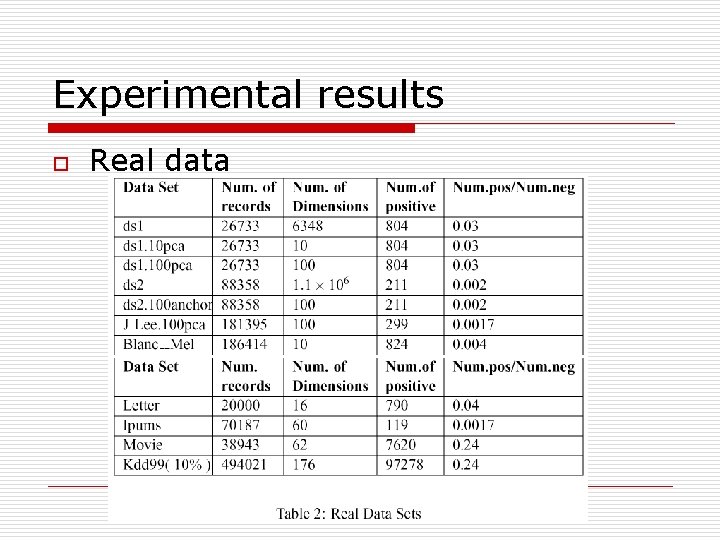

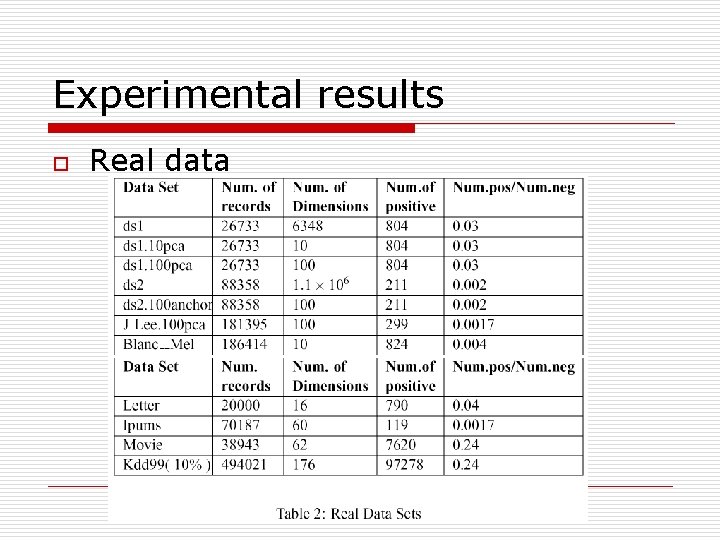

Experimental results

- Real data

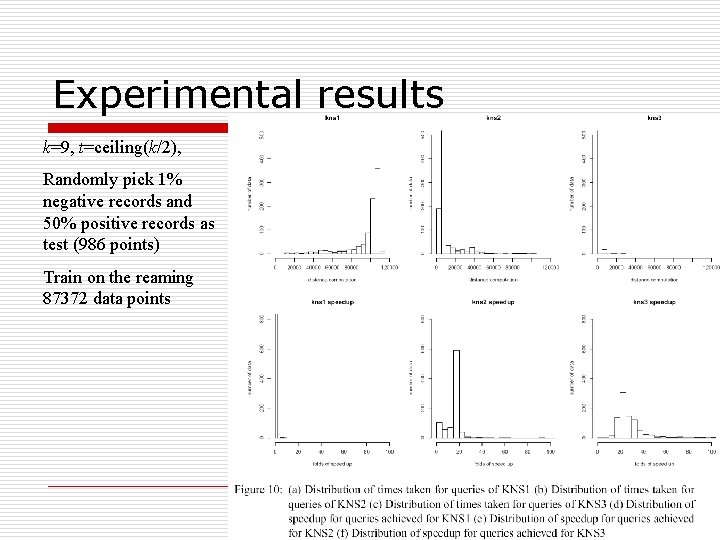

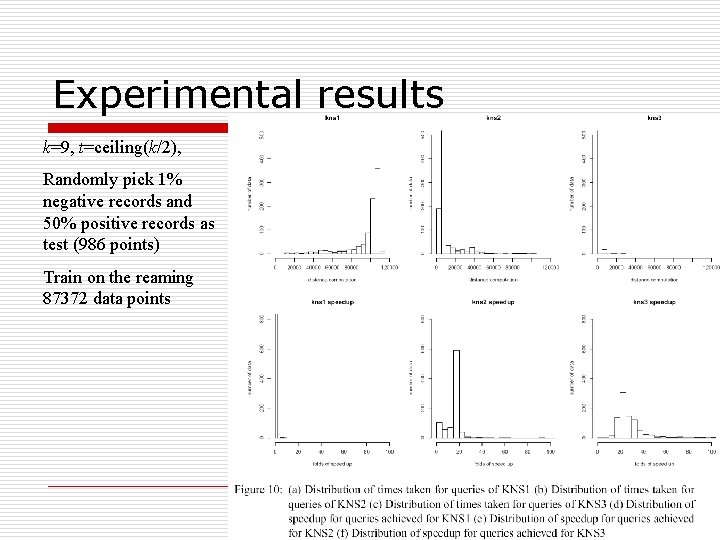

Experimental results

- k = 9, t = ceiling(k/2).
- Randomly pick 1% of the negative records and 50% of the positive records as the test set (986 points).
- Train on the remaining 87372 data points.
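The setup above can be sketched as follows: the threshold t for k = 9, the matching m from m + t = k + 1, and a per-class random split. The function name `split_indices`, the 0/1 label encoding, and the toy label vector are assumptions for illustration; the actual data set and per-class counts are not given in the slides.

```python
import math
import random

k = 9
t = math.ceil(k / 2)    # majority threshold for k = 9
m = k + 1 - t           # companion value from m + t = k + 1

def split_indices(labels, pos_frac=0.5, neg_frac=0.01, seed=0):
    """Hold out pos_frac of the positive and neg_frac of the negative records."""
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    test = set(rng.sample(pos, round(pos_frac * len(pos)))
               + rng.sample(neg, round(neg_frac * len(neg))))
    train = [i for i in range(len(labels)) if i not in test]
    return train, sorted(test)

# Hypothetical skewed label vector: 100 positives, 1000 negatives.
labels = [1] * 100 + [0] * 1000
train, test = split_indices(labels)
print(t, m, len(train), len(test))
```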

Comments

- Why k-NN? As a baseline.
- No free lunch:
  - For uniform high-dimensional data, there are no benefits.
  - The results mean that the intrinsic dimensionality is much lower.