
Neural Networks Part 3 – Training with Backpropagation

CSE 4309 – Machine Learning
Vassilis Athitsos
Computer Science and Engineering Department
University of Texas at Arlington

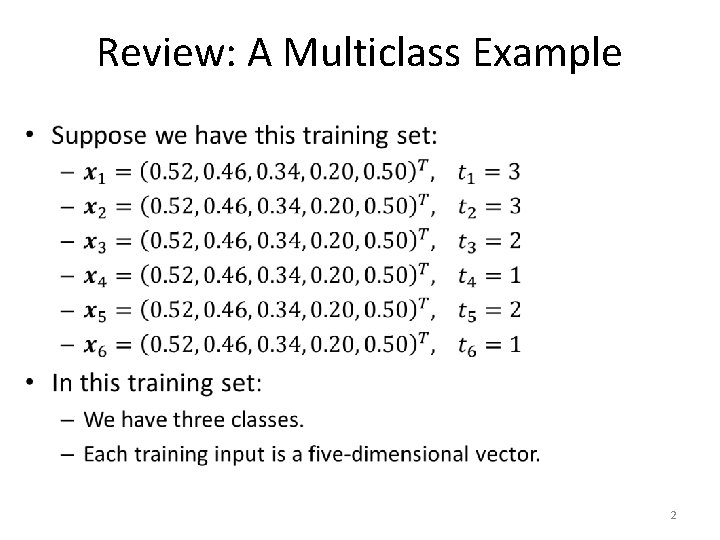

Review: A Multiclass Example

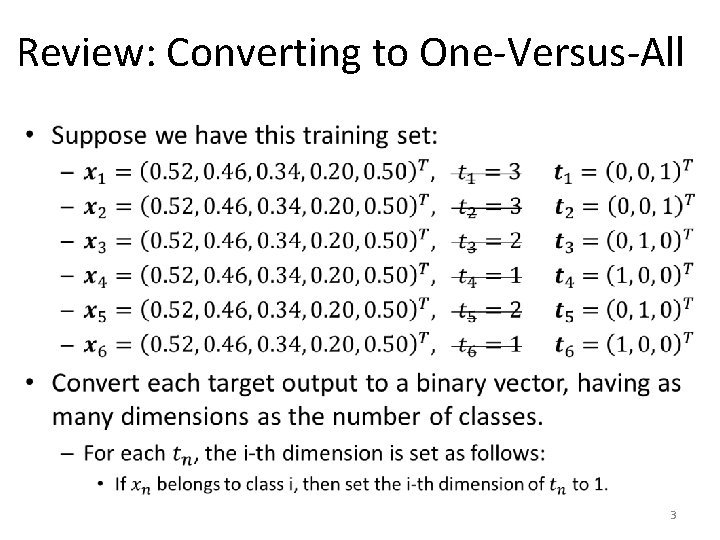

Review: Converting to One-Versus-All

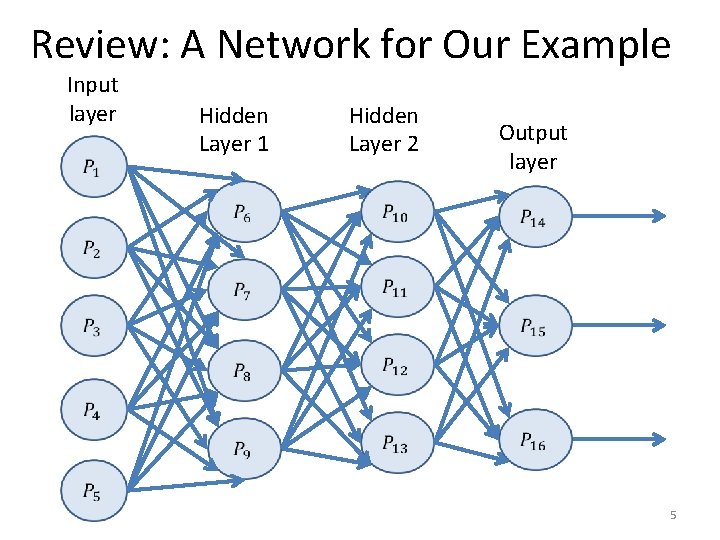

Review: Multiclass Neural Networks

- For perceptrons, we saw that we can perform multiclass classification (i.e., classification with more than two classes) by training one perceptron for each class.
- For neural networks, we will train a SINGLE neural network, with MULTIPLE output units.
  - The number of output units will be equal to the number of classes.
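To make "one output unit per class" concrete, here is a minimal sketch of how class labels could be turned into target vectors for such a network. It assumes a one-hot (one-versus-all style) encoding, where the target for output unit k is 1 if the object belongs to class k and 0 otherwise. The function name `one_hot_targets` and the class names are hypothetical, chosen only for illustration.

```python
def one_hot_targets(labels, classes):
    """Return one target vector per label, with one entry per class.

    Assumption: entry k is 1.0 for the object's own class and 0.0 elsewhere,
    so the vector length equals the number of output units.
    """
    index_of = {c: k for k, c in enumerate(classes)}
    targets = []
    for label in labels:
        t = [0.0] * len(classes)
        t[index_of[label]] = 1.0
        targets.append(t)
    return targets


classes = ["red", "green", "blue"]  # hypothetical class names
targets = one_hot_targets(["green", "blue", "red"], classes)
# Each target vector has len(classes) entries, one per output unit.
```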

Review: A Network for Our Example

Layers shown in the diagram: input layer, hidden layer 1, hidden layer 2, output layer.

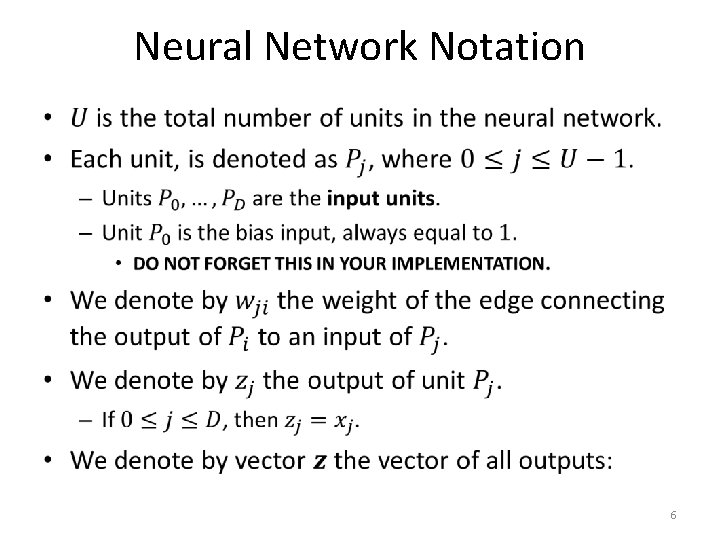

Neural Network Notation

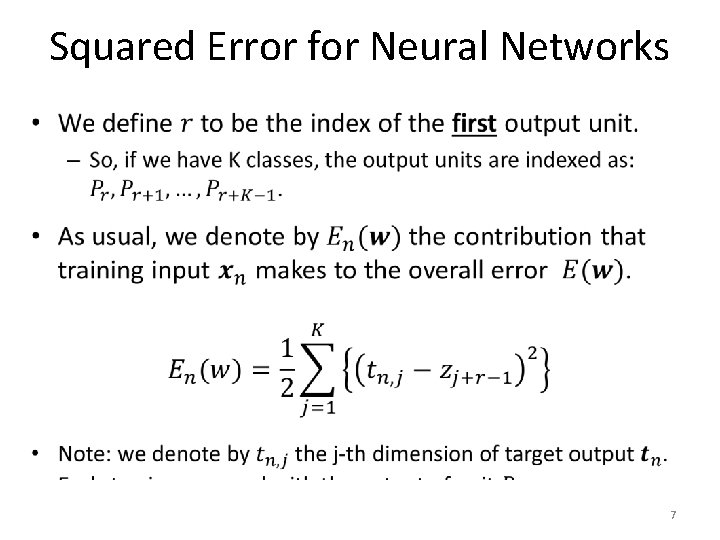

Squared Error for Neural Networks

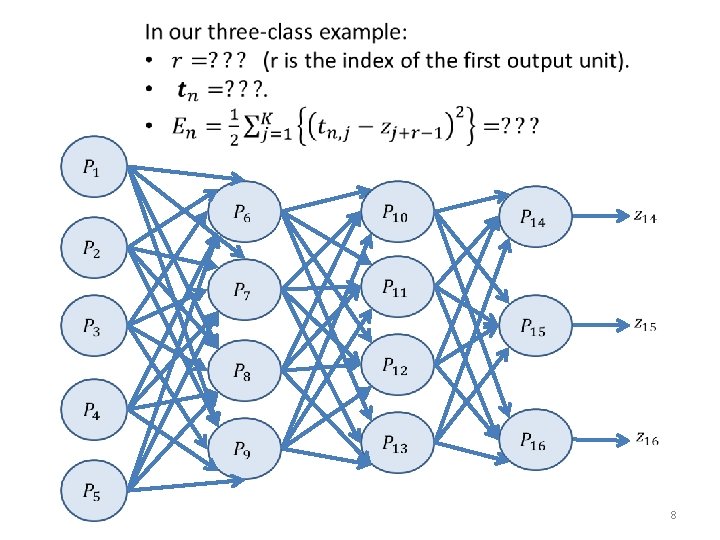

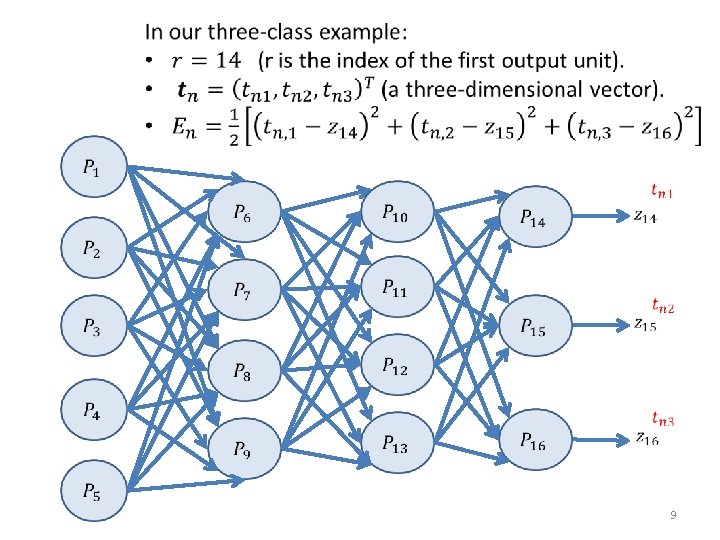


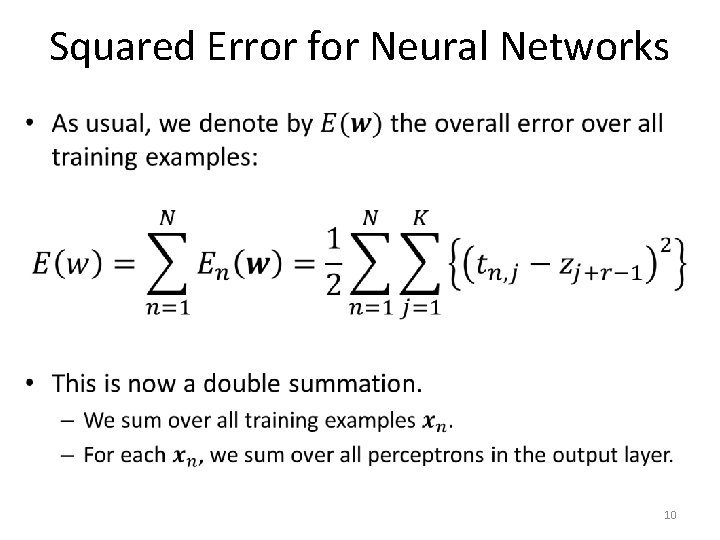

Squared Error for Neural Networks

Training Neural Networks

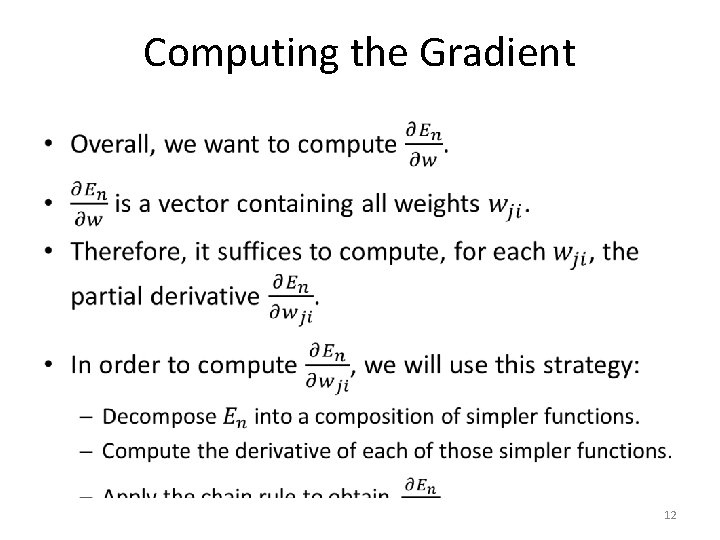

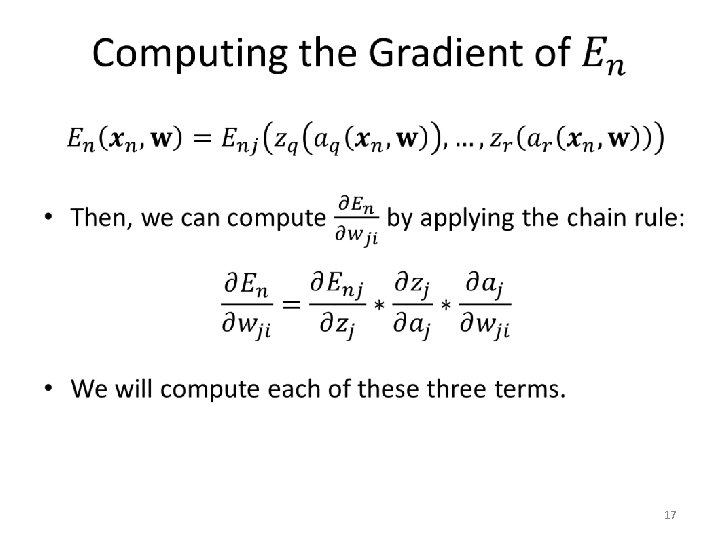

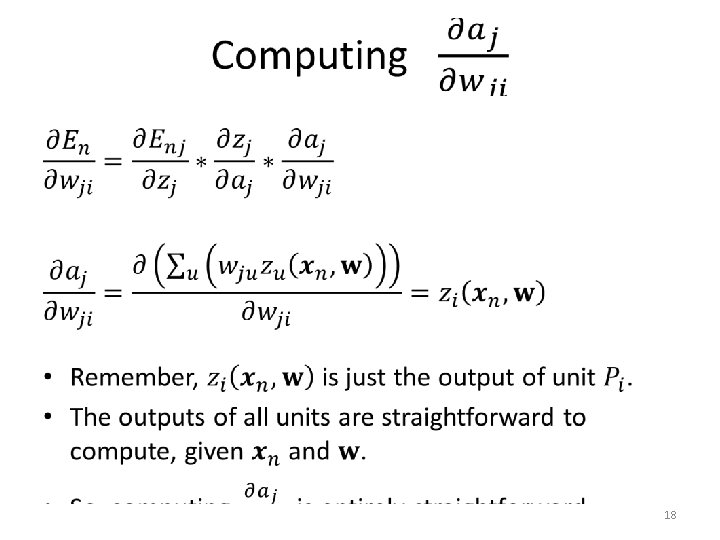

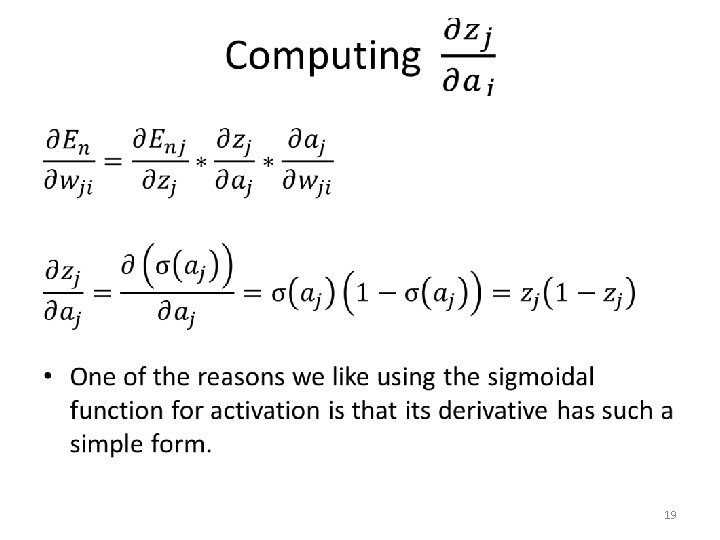

Computing the Gradient

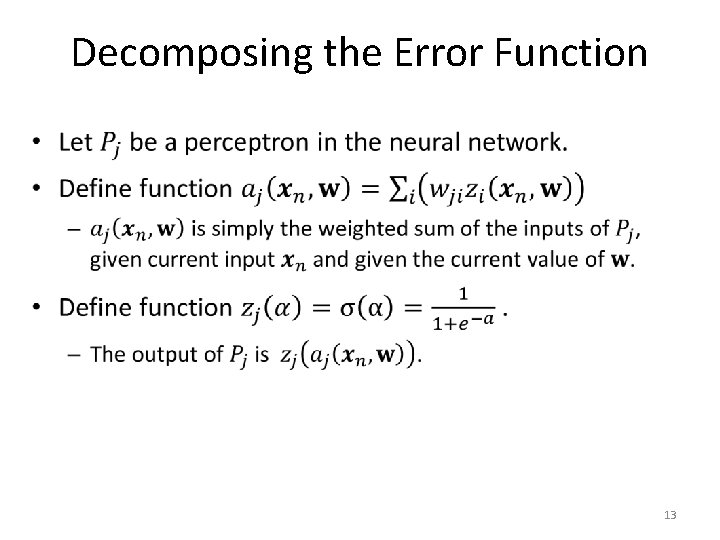

Decomposing the Error Function

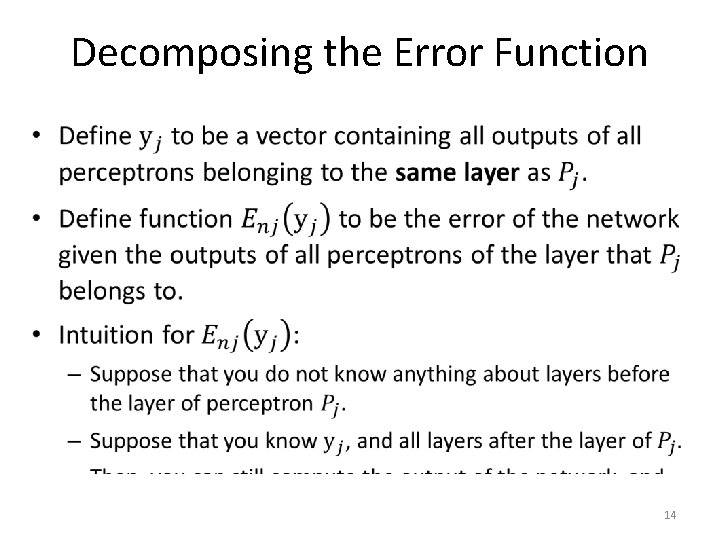

Decomposing the Error Function

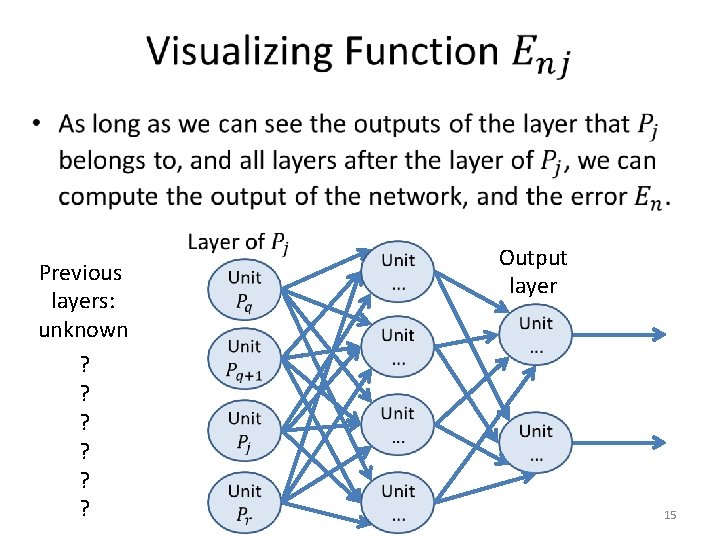

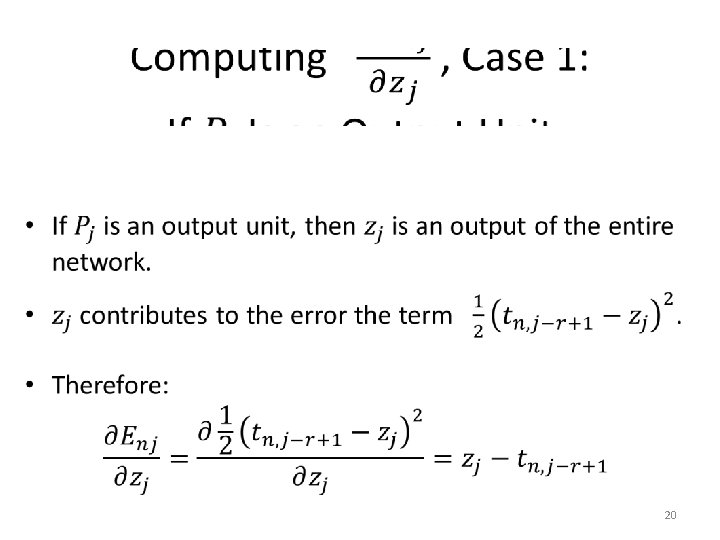

- Previous layers: unknown.

Diagram labels: "? ? ?", output layer.

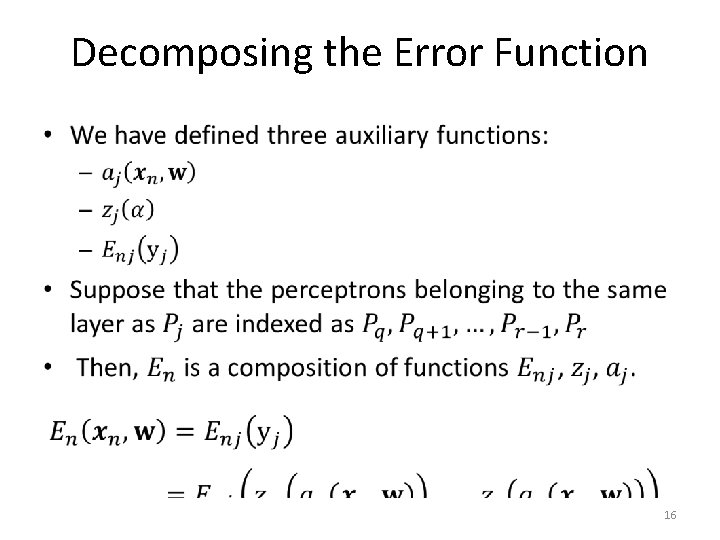

Decomposing the Error Function

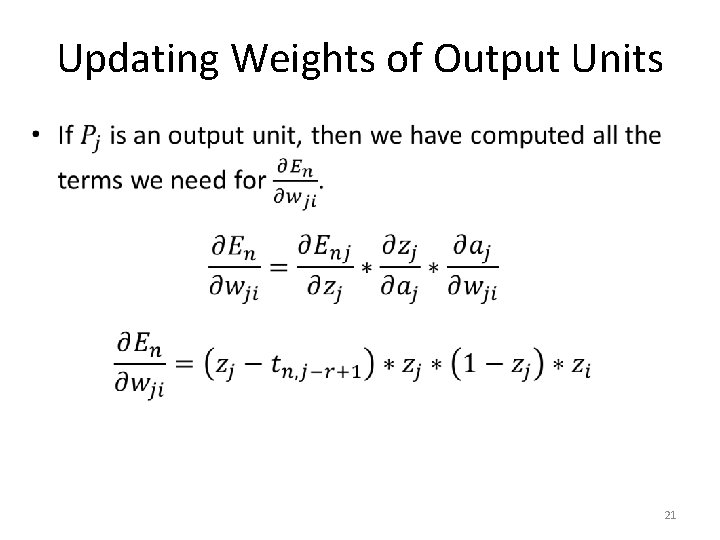

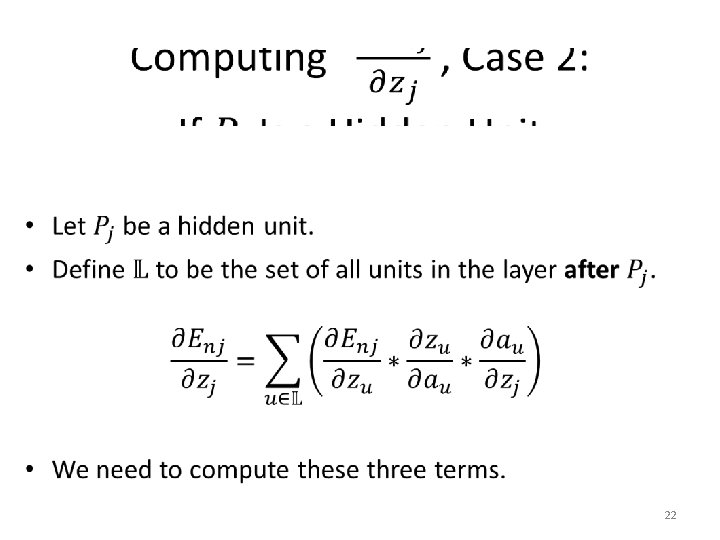

Updating Weights of Output Units

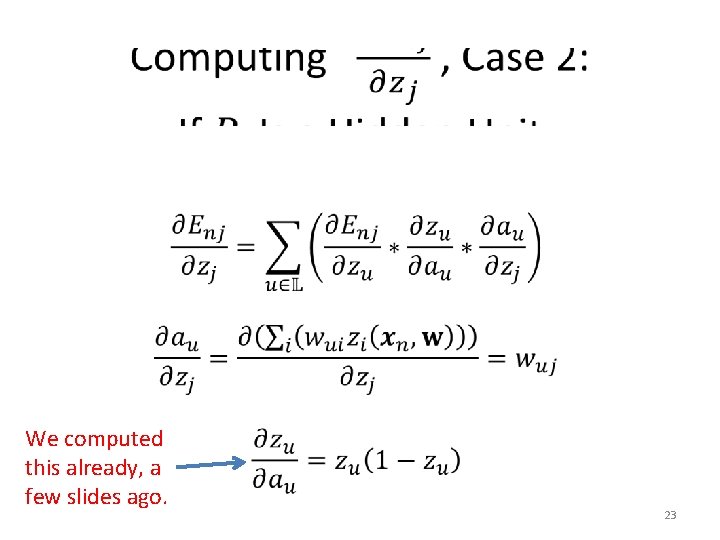

- We computed this already, a few slides ago.

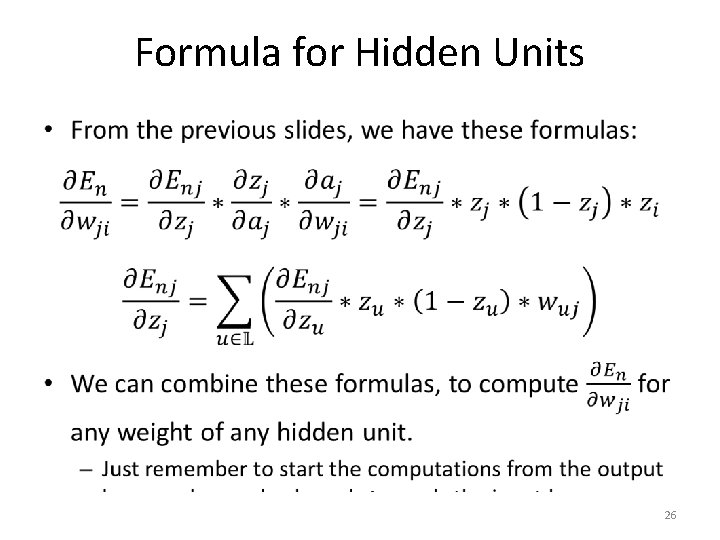

Formula for Hidden Units

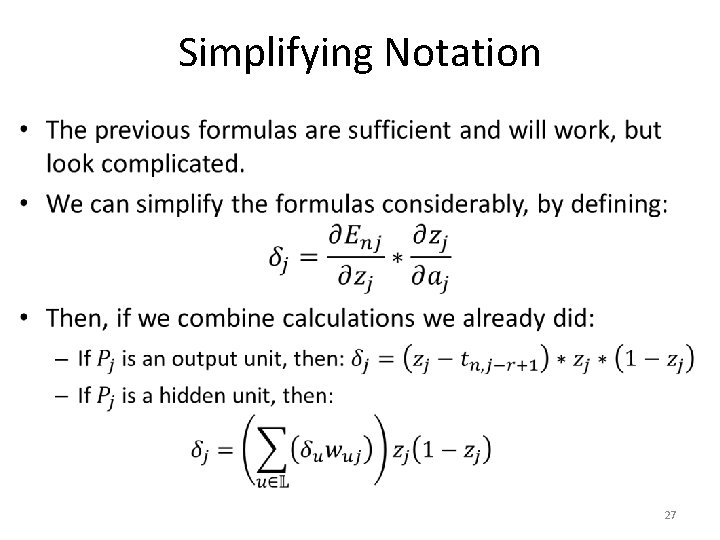

Simplifying Notation

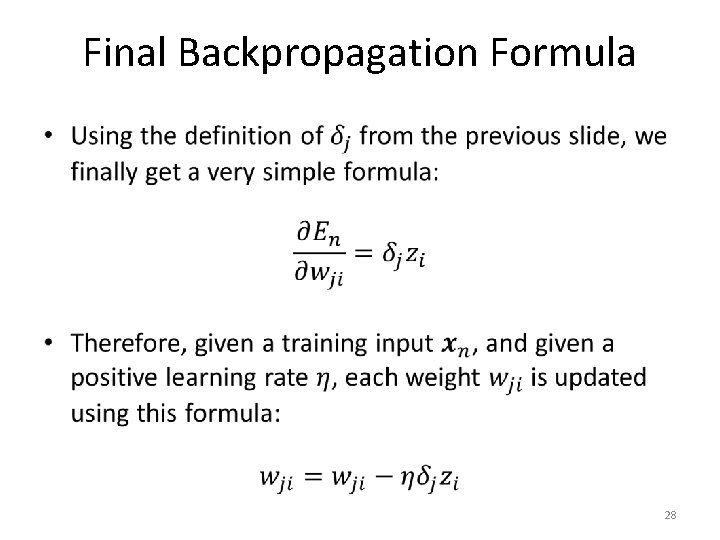

Final Backpropagation Formula

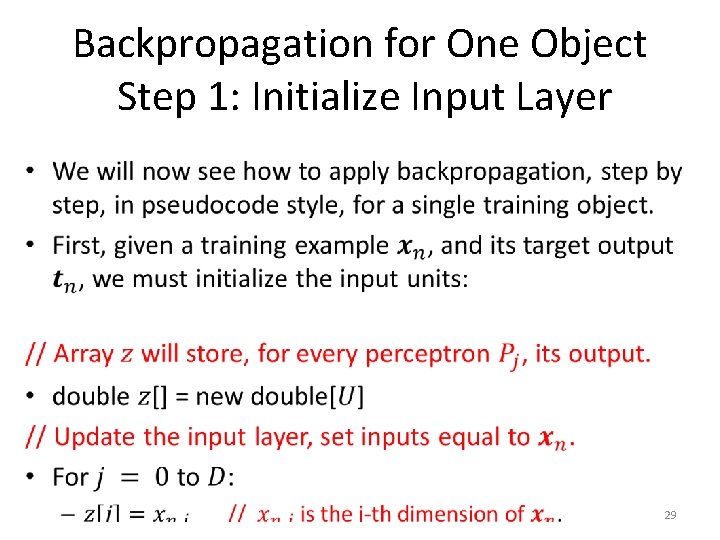

Backpropagation for One Object, Step 1: Initialize Input Layer

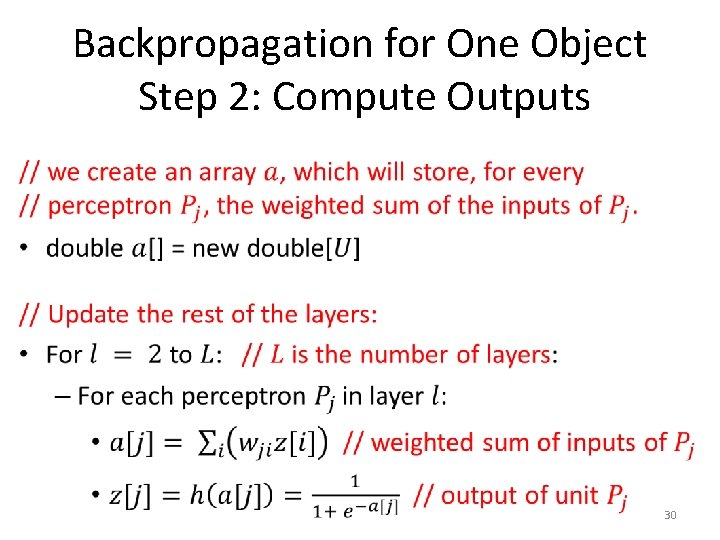

Backpropagation for One Object, Step 2: Compute Outputs

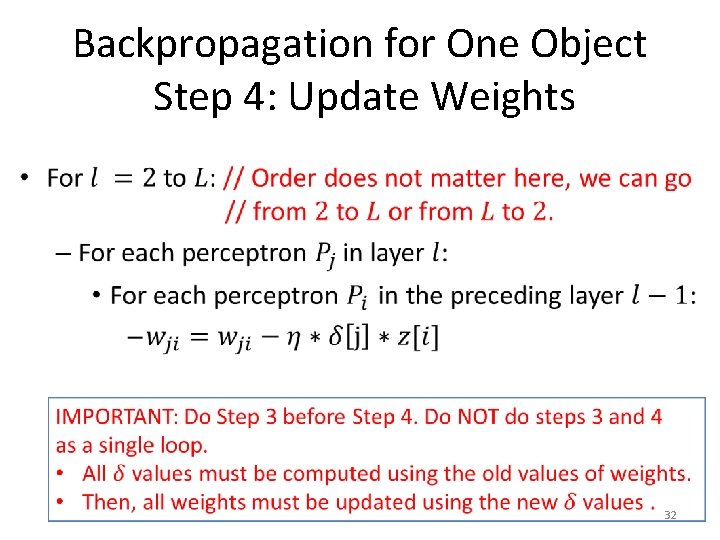

Backpropagation for One Object, Step 4: Update Weights

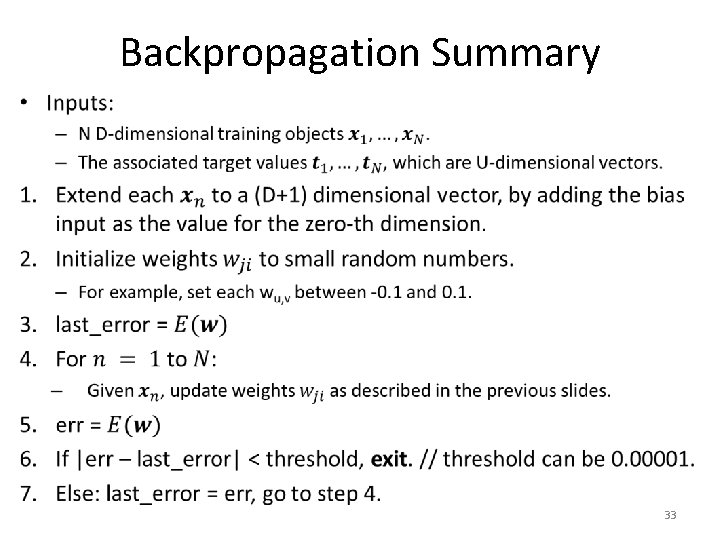

Backpropagation Summary
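The per-object steps named in the slide titles (initialize the input layer, compute outputs, update weights) can be sketched in code as follows. This is an illustrative sketch under stated assumptions, not the formulas from the slides themselves: it assumes sigmoid activations, squared error E = 1/2 Σ (z_k − t_k)², a fixed learning rate `eta`, and that the step between "compute outputs" and "update weights" computes a delta value for every unit, as in standard backpropagation. All function names and the weight layout are hypothetical.

```python
import math
import random


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def init_weights(layer_sizes, rng):
    """weights[l][j] holds the weights of unit j in layer l+1.

    Index 0 of each unit's weight list is the bias weight (assumed layout).
    """
    return [[[rng.uniform(-0.05, 0.05) for _ in range(n_in + 1)]
             for _ in range(n_out)]
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]


def forward(weights, x):
    """Steps 1-2: set the input layer to x, then compute each layer's outputs."""
    z = [list(x)]
    for layer in weights:
        prev = [1.0] + z[-1]  # bias input, fixed at 1
        z.append([sigmoid(sum(w * v for w, v in zip(unit, prev)))
                  for unit in layer])
    return z


def squared_error(outputs, t):
    return 0.5 * sum((zk - tk) ** 2 for zk, tk in zip(outputs, t))


def backprop_one_object(weights, x, t, eta):
    """Run one backpropagation update for input x with target vector t."""
    z = forward(weights, x)
    # Assumed step 3: deltas for output units, then for hidden units,
    # moving backwards through the layers.
    deltas = [[(zk - tk) * zk * (1.0 - zk) for zk, tk in zip(z[-1], t)]]
    for l in range(len(weights) - 1, 0, -1):
        next_w, next_delta, out = weights[l], deltas[0], z[l]
        deltas.insert(0, [
            sum(next_w[k][j + 1] * next_delta[k] for k in range(len(next_w)))
            * out[j] * (1.0 - out[j])
            for j in range(len(out))])
    # Step 4: gradient-descent update of every weight, including bias weights.
    for l, layer in enumerate(weights):
        prev = [1.0] + z[l]
        for j, unit in enumerate(layer):
            for i in range(len(unit)):
                unit[i] -= eta * deltas[l][j] * prev[i]
    return z[-1]


rng = random.Random(0)
weights = init_weights([2, 3, 2], rng)  # 2 inputs, 3 hidden units, 2 outputs
x, t = [0.5, -1.0], [1.0, 0.0]          # made-up training object and target
error_before = squared_error(forward(weights, x)[-1], t)
for _ in range(200):
    backprop_one_object(weights, x, t, eta=0.5)
error_after = squared_error(forward(weights, x)[-1], t)
```

Note that all deltas are computed before any weight changes, so the hidden-layer deltas use the weights from before the update. The `[1.0] + ...` term is the bias input; leaving it out is the bug named on the next slide.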

Common Bug: Omitting the Bias Input

Classification with Neural Networks
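With one output unit per class, a natural decision rule is to pick the class whose output unit responds most strongly. The sketch below assumes that rule; the function name `classify` and the example values are hypothetical.

```python
def classify(outputs, classes):
    """Return the class whose output unit has the largest value.

    Assumption: outputs[k] is the output of the unit associated with
    classes[k]. Ties go to the first such unit.
    """
    best = max(range(len(outputs)), key=lambda k: outputs[k])
    return classes[best]


predicted = classify([0.1, 0.7, 0.2], ["red", "green", "blue"])
```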

Structure of Neural Networks

- Backpropagation describes how to learn weights.
- However, it does not describe how to learn the structure:
  - How many layers?
  - How many units at each layer?
- These are parameters that we have to choose somehow.
- A good way to choose such parameters is by using a validation set, containing examples and their class labels.
  - The validation set should be separate (disjoint) from the training set.


Structure of Neural Networks

- To choose the best structure for a neural network using a validation set, we try many different parameters (number of layers, number of units per layer).
- For each choice of parameters:
  - We train several neural networks using backpropagation.
  - We measure how well each neural network classifies the validation examples.
  - Why not train just one neural network?
  - Each network is randomly initialized, so after backpropagation it can end up being different from the other networks.
- At the end, we select the neural network that did best on the validation set.
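The selection procedure above can be sketched as a simple search loop. This is a minimal sketch, assuming the caller supplies two functions: `train_fn(structure, seed)`, which trains one randomly initialized network with backpropagation, and `accuracy_fn(network)`, which returns its accuracy on the validation set. Both names, and the toy stand-ins used to make the sketch runnable, are hypothetical; the toy scores are not real results.

```python
import random


def select_structure(candidates, train_fn, accuracy_fn, restarts, rng):
    """Return (accuracy, structure, network) for the best validation accuracy.

    candidates: structures to try, e.g. tuples of hidden-layer sizes.
    restarts:   how many randomly initialized networks to train per structure.
    """
    best = None
    for structure in candidates:
        for _ in range(restarts):
            seed = rng.randrange(2 ** 32)  # a different initialization each time
            network = train_fn(structure, seed)
            accuracy = accuracy_fn(network)
            if best is None or accuracy > best[0]:
                best = (accuracy, structure, network)
    return best


# Toy stand-ins so the sketch runs; replace with real training and validation.
def toy_train(structure, seed):
    return {"structure": structure, "seed": seed}


def toy_validation_accuracy(network):
    # Fake score that depends only on the seed, mimicking run-to-run variation.
    return random.Random(network["seed"]).random()


candidates = [(4,), (8,), (4, 4)]  # hypothetical hidden-layer sizes
best_accuracy, best_structure, best_network = select_structure(
    candidates, toy_train, toy_validation_accuracy,
    restarts=3, rng=random.Random(1))
```

Only the validation set is used to compare networks, in line with the requirement that it be disjoint from the training set.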
