Neural Networks: Improving Performance in X-ray Lithography Applications

- Slides: 14

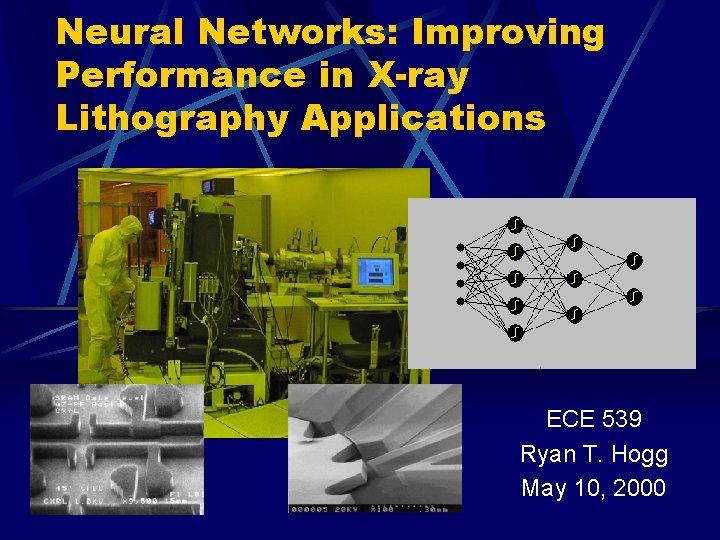

Neural Networks: Improving Performance in X-ray Lithography Applications ECE 539 Ryan T. Hogg May 10, 2000

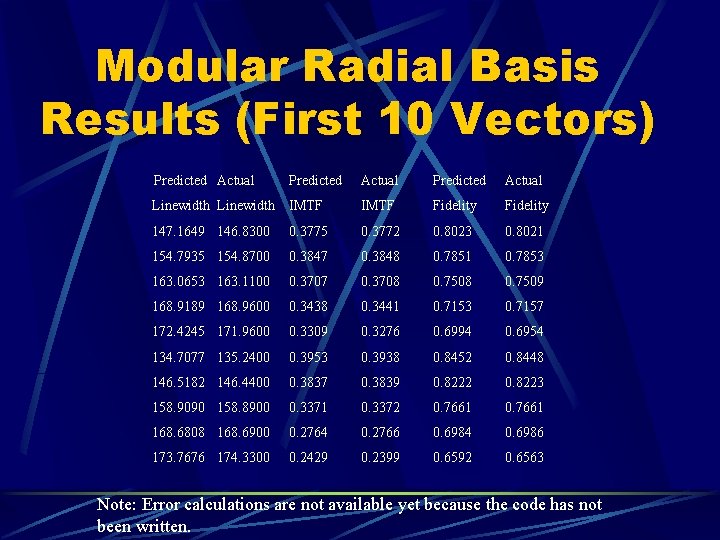

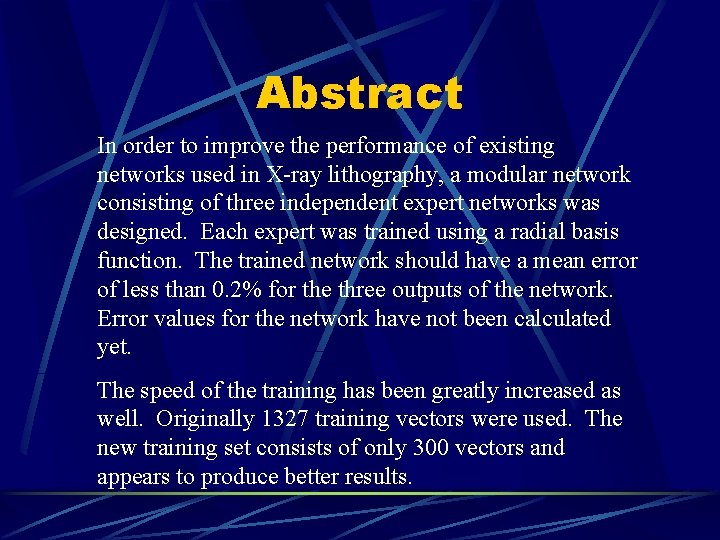

Abstract

In order to improve the performance of existing networks used in X-ray lithography, a modular network consisting of three independent expert networks was designed. Each expert was trained using a radial basis function. The trained network should have a mean error of less than 0.2% for the three outputs of the network. Error values for the network have not been calculated yet. The speed of the training has been greatly increased as well. Originally 1327 training vectors were used. The new training set consists of only 300 vectors and appears to produce better results.

Outline

I. The problem
II. Existing solution
III. Proposed solution
   - Modular network layout
   - Network options
   - Network results
IV. Future Improvements
V. Conclusion

Problem: X-ray Lithography Parameters

The manufacturing of semiconductor devices using X-ray lithography requires the evaluation of the following multivariate function:

$$[\text{linewidth},\ \text{IMTF},\ \text{fidelity}] = F(\text{absorber thickness},\ \text{gap},\ \text{bias})$$

Having a neural network to calculate these values with a minimal amount of error would be an invaluable tool to any lithographer.

IMTF = Integrated Modulation Transfer Function

The Radial Basis Solution

Currently there is a neural network that uses a radial basis function to compute these three variables. The error performance on the testing set is:

- Mean Error: 0.2% to 0.4%
- Maximum Error: 4%

The goal of this project is to improve these percentages, ideally obtaining a maximum error of less than 0.1%.

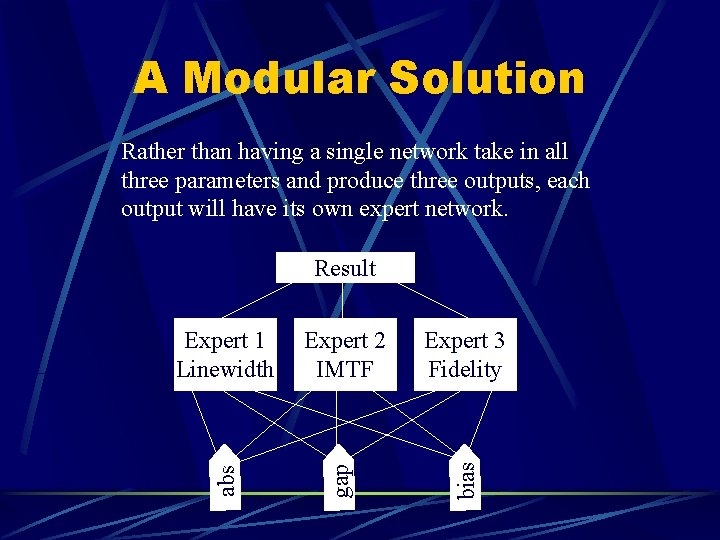

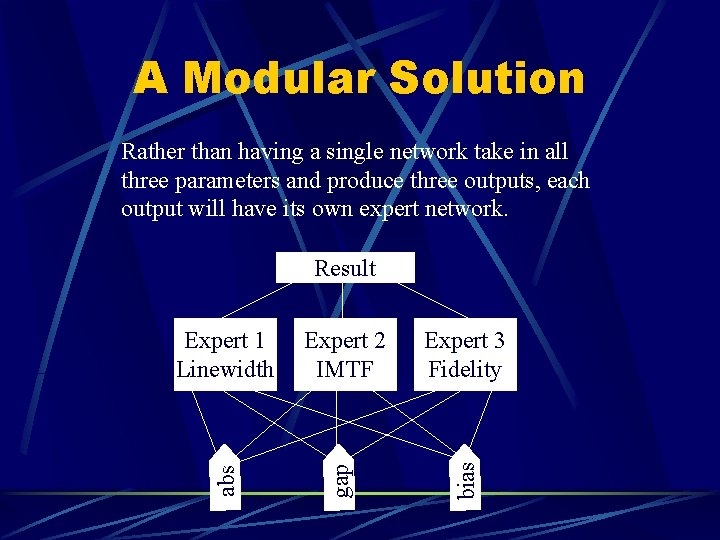

A Modular Solution

Rather than having a single network take in all three parameters and produce three outputs, each output will have its own expert network.

Diagram: the inputs (abs, gap, bias) feed Expert 1 (Linewidth), Expert 2 (IMTF), and Expert 3 (Fidelity), whose outputs together form the result.
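The layout on this slide can be sketched in a few lines of Python. This is only an illustration of the wiring, not the project's code: the names `ModularNetwork` and `LithographyOutputs` are hypothetical, and the three lambda experts are toy placeholders standing in for trained networks.

```python
from typing import Callable, NamedTuple

# An expert maps (absorber thickness, gap, bias) to one scalar output.
Expert = Callable[[float, float, float], float]


class LithographyOutputs(NamedTuple):
    linewidth: float
    imtf: float
    fidelity: float


class ModularNetwork:
    """Three independent experts that share the same three inputs."""

    def __init__(self, linewidth_expert: Expert, imtf_expert: Expert,
                 fidelity_expert: Expert) -> None:
        self.linewidth_expert = linewidth_expert
        self.imtf_expert = imtf_expert
        self.fidelity_expert = fidelity_expert

    def predict(self, absorber_thickness: float, gap: float,
                bias: float) -> LithographyOutputs:
        # Every expert sees all three inputs but predicts only its own output.
        inputs = (absorber_thickness, gap, bias)
        return LithographyOutputs(
            linewidth=self.linewidth_expert(*inputs),
            imtf=self.imtf_expert(*inputs),
            fidelity=self.fidelity_expert(*inputs),
        )


# Toy placeholder experts (assumptions, not trained models).
network = ModularNetwork(
    linewidth_expert=lambda a, g, b: a + g + b,
    imtf_expert=lambda a, g, b: g,
    fidelity_expert=lambda a, g, b: b,
)
result = network.predict(1.0, 2.0, 3.0)
```

Because the experts do not share weights, each one can be trained, tuned, or replaced without touching the other two.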

Battle of the Networks

Initially two different types of networks were trained to see which one was better:

- Multilayer Perceptron (One Hidden Layer)
  - Fast training, but low learning rate
  - Large mean square error
- Radial Basis Function Neural Network
  - Slower training
  - Much lower error

MLP Approach

- The three inputs and three outputs were converted to 32-bit binary numbers for more effective training. This way, each expert network had 96 inputs and 32 outputs.
- The format of the binary representation was as follows: $s\ b_7\ b_6\ b_5\ b_4\ b_3\ b_2\ b_1\ b_0\ b_{-1}\ b_{-2} \dots b_{-23}$
- There were two sets of weights, wh and wo. Each hidden neuron had three weighted inputs and a bias. The output of each hidden neuron was weighted and summed together in each output node along with a bias.
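The 32-bit format above reads as one sign bit, eight integer bits (b7 to b0), and 23 fractional bits (b-1 to b-23). A minimal sketch of such an encoding follows. The slides do not say how negative values or rounding were handled, so sign-magnitude layout and round-to-nearest are assumptions here, and the function names and sample input values are hypothetical.

```python
INTEGER_BITS = 8
FRACTION_BITS = 23
MAGNITUDE_BITS = INTEGER_BITS + FRACTION_BITS  # 31 bits after the sign bit


def encode_fixed_point(value):
    """Return 32 bits ordered [s, b7..b0, b-1..b-23] (sign-magnitude assumed)."""
    sign = 1 if value < 0 else 0
    scaled = round(abs(value) * 2 ** FRACTION_BITS)
    if scaled >= 2 ** MAGNITUDE_BITS:
        raise ValueError("magnitude does not fit in 8 integer bits")
    # Most significant magnitude bit (b7) first, least significant (b-23) last.
    bits = [(scaled >> shift) & 1 for shift in range(MAGNITUDE_BITS - 1, -1, -1)]
    return [sign] + bits


def decode_fixed_point(bits):
    """Inverse of encode_fixed_point."""
    scaled = 0
    for bit in bits[1:]:
        scaled = (scaled << 1) | bit
    value = scaled / 2 ** FRACTION_BITS
    return -value if bits[0] else value


def encode_inputs(absorber_thickness, gap, bias):
    """Concatenate three 32-bit codes into the 96-element input vector."""
    return (encode_fixed_point(absorber_thickness)
            + encode_fixed_point(gap)
            + encode_fixed_point(bias))


# Hypothetical input values, chosen only to exercise the functions.
input_vector = encode_inputs(0.35, 15.0, -10.0)
round_trip = decode_fixed_point(encode_fixed_point(146.83))
```

With this layout the representable range is roughly ±256 with a resolution of 2^-23, which covers the linewidth, IMTF, and fidelity magnitudes reported in the results.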

MLP Results

After training with several different learning rates and momentum constants, it became clear that the MLP was not learning at an adequate rate to achieve the low level of error that was desired. In some cases the level of error was greater than 100%!

Setting up the RBN

- Select smoothing factor (h): Numerous values of h were tested, mainly powers of ten. Most of the values generated inaccurate predictions, but one value performed remarkably well: h = 0.0001
- Determine size of training set to use: To speed up training of the RBN, the size of the training set was reduced. Initially only the first two hundred vectors were used. This gave good results for the larger-valued test sets.
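To illustrate how a smoothing factor affects a radial basis predictor, here is a minimal sketch. The slides do not state which RBF formulation was used or exactly how h enters it, so this assumes a normalized Gaussian kernel (a kernel-weighted average of the training targets) with h as the kernel width. The function name `rbf_predict` and the toy data are hypothetical.

```python
import math


def rbf_predict(train_inputs, train_targets, query, h):
    """Kernel-weighted average of training targets (normalized Gaussian RBF)."""
    # Exponent of each Gaussian kernel: -||x - query||^2 / (2 h^2).
    exponents = [
        -sum((a - b) ** 2 for a, b in zip(x, query)) / (2.0 * h ** 2)
        for x in train_inputs
    ]
    # Shift by the largest exponent so at least one weight equals 1.0.
    # This avoids a 0/0 when h is tiny and every kernel would underflow.
    shift = max(exponents)
    weights = [math.exp(e - shift) for e in exponents]
    return sum(w * t for w, t in zip(weights, train_targets)) / sum(weights)


# Toy data (assumed): three (absorber, gap, bias) vectors and one output each.
toy_inputs = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
toy_targets = [1.0, 2.0, 6.0]

sharp = rbf_predict(toy_inputs, toy_targets, (1.0, 0.0, 0.0), h=0.0001)
smooth = rbf_predict(toy_inputs, toy_targets, (1.0, 0.0, 0.0), h=1e6)
```

In this formulation a very small h makes the prediction follow the nearest training vector almost exactly, while a very large h flattens it toward the mean of all targets. That trade-off is one possible reason a sweep over powers of ten would find only a narrow range of useful values.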

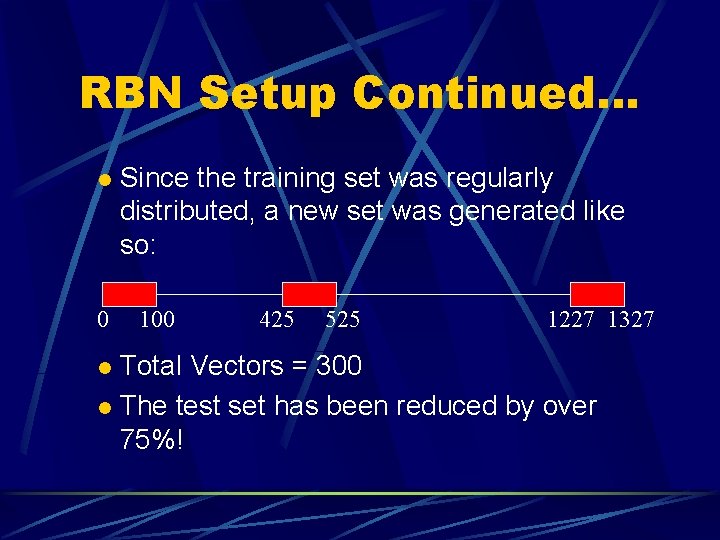

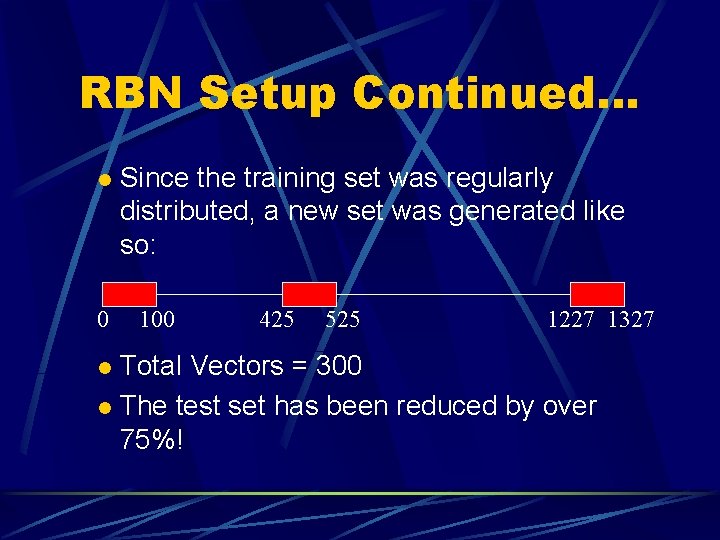

RBN Setup Continued…

- Since the training set was regularly distributed, a new set was generated from three blocks of the original 1327 vectors: 0 to 100, 425 to 525, and 1227 to 1327. Total Vectors = 300
- The training set has been reduced by over 75%!
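The boundaries 0, 100, 425, 525, 1227, and 1327 on this slide suggest three blocks of 100 vectors taken from the start, middle, and end of the original set. The sketch below builds that index list and checks the size of the reduction. Zero-based, half-open ranges are an assumption, and the names `BLOCKS` and `select_training_indices` are hypothetical.

```python
ORIGINAL_SIZE = 1327

# Assumed half-open, zero-based blocks: [0, 100), [425, 525), [1227, 1327).
BLOCKS = [(0, 100), (425, 525), (1227, 1327)]


def select_training_indices(blocks):
    """Return the indices of every vector that falls inside one of the blocks."""
    indices = []
    for start, stop in blocks:
        indices.extend(range(start, stop))
    return indices


training_indices = select_training_indices(BLOCKS)

# Fraction of the original vectors that were dropped.
reduction = 1.0 - len(training_indices) / ORIGINAL_SIZE
print(f"{len(training_indices)} vectors kept, {reduction:.1%} reduction")
```

Sampling from both ends and the middle keeps the extremes of a regularly distributed set represented, which taking only the first few hundred vectors would not.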

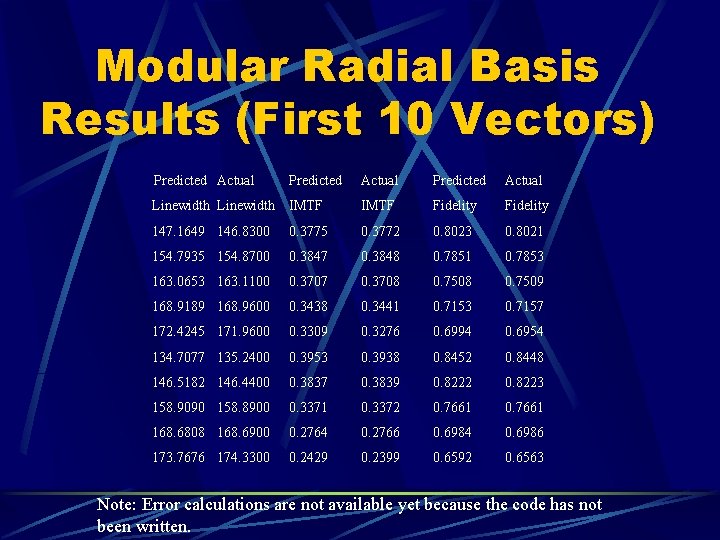

Modular Radial Basis Results (First 10 Vectors)

| Linewidth (Predicted) | Linewidth (Actual) | IMTF (Predicted) | IMTF (Actual) | Fidelity (Predicted) | Fidelity (Actual) |
|---|---|---|---|---|---|
| 147.1649 | 146.8300 | 0.3775 | 0.3772 | 0.8023 | 0.8021 |
| 154.7935 | 154.8700 | 0.3847 | 0.3848 | 0.7851 | 0.7853 |
| 163.0653 | 163.1100 | 0.3707 | 0.3708 | 0.7509 † | † |
| 168.9189 | 168.9600 | 0.3438 | 0.3441 | 0.7153 | 0.7157 |
| 172.4245 | 171.9600 | 0.3309 | 0.3276 | 0.6994 | 0.6954 |
| 134.7077 | 135.2400 | 0.3953 | 0.3938 | 0.8452 | 0.8448 |
| 146.5182 | 146.4400 | 0.3837 | 0.3839 | 0.8222 | 0.8223 |
| 158.9090 | 158.8900 | 0.3371 | 0.3372 | 0.7661 † | † |
| 168.6808 | 168.6900 | 0.2764 | 0.2766 | 0.6984 | 0.6986 |
| 173.7676 | 174.3300 | 0.2429 | 0.2399 | 0.6592 | 0.6563 |

† Only one fidelity value appears for this row; it is not clear whether it is the predicted or the actual value.

Note: Error calculations are not available yet because the code has not been written.
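Since the error calculation had not been written at the time of the presentation, the following is a sketch of how mean and maximum error could be computed. It assumes the error is the absolute difference relative to the actual value, expressed as a percentage; the slides do not define the metric. The linewidth values are transcribed from the ten rows on this slide, and the function names are hypothetical.

```python
def percent_errors(predicted, actual):
    """Absolute percentage error of each prediction relative to the actual value."""
    return [abs(p - a) / abs(a) * 100.0 for p, a in zip(predicted, actual)]


def summarize(predicted, actual):
    """Return (mean percent error, maximum percent error)."""
    errors = percent_errors(predicted, actual)
    return sum(errors) / len(errors), max(errors)


# Linewidth columns for the first ten test vectors, copied from the slide.
linewidth_predicted = [147.1649, 154.7935, 163.0653, 168.9189, 172.4245,
                       134.7077, 146.5182, 158.9090, 168.6808, 173.7676]
linewidth_actual = [146.83, 154.87, 163.11, 168.96, 171.96,
                    135.24, 146.44, 158.89, 168.69, 174.33]

mean_error, max_error = summarize(linewidth_predicted, linewidth_actual)
print(f"linewidth: mean error {mean_error:.3f}%, max error {max_error:.3f}%")
```

The same two functions apply unchanged to the IMTF and fidelity columns. Ten vectors are too few to stand in for the full test set, so any figures obtained this way are only indicative.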

Future Improvements

- Adjust the smoothing factor for better results.
- Write code to calculate mean error and maximum error for the testing set.
- Possibly reduce training set even further.

Conclusions

The MLP is not well suited to this type of function. Radial basis functions work well here, which is why one was used in the first place. However, creating an expert network for each output appears to produce even better results.