Neural Networks as Universal Approximators

Yotam Amar and Heli Ben Hamu

Advanced Reading in Deep-Learning and Vision, Spring 2017

Motivation

Practical uses:
• Replicating black box functions: decryption functions
• More effective implementation
• Visualization tools of neural networks
• Optimization of functions using backpropagation of the approximation

Theoretical uses:
• Understanding NN as a hypothesis class
• What can NN be used for (besides vision)?

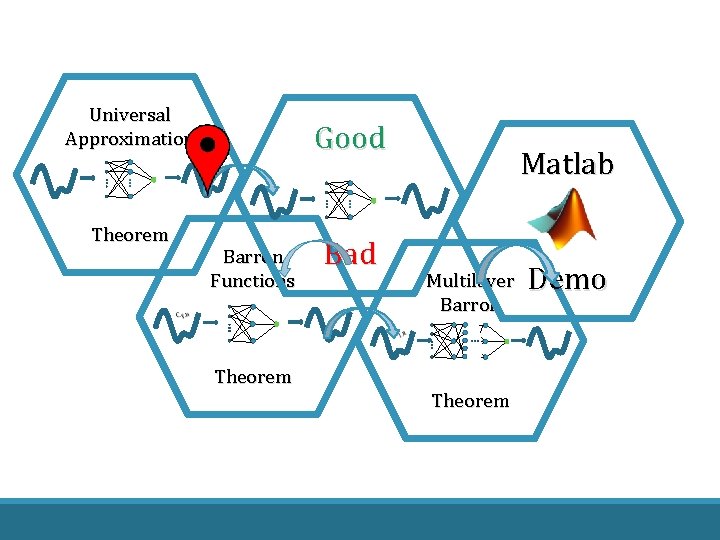

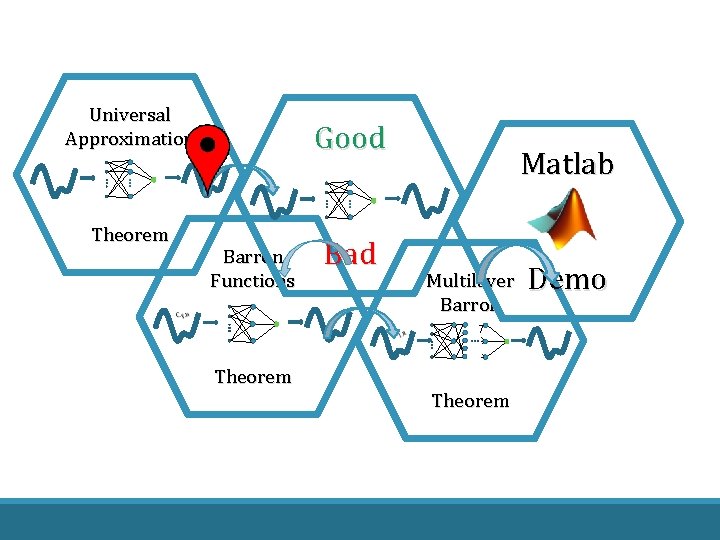

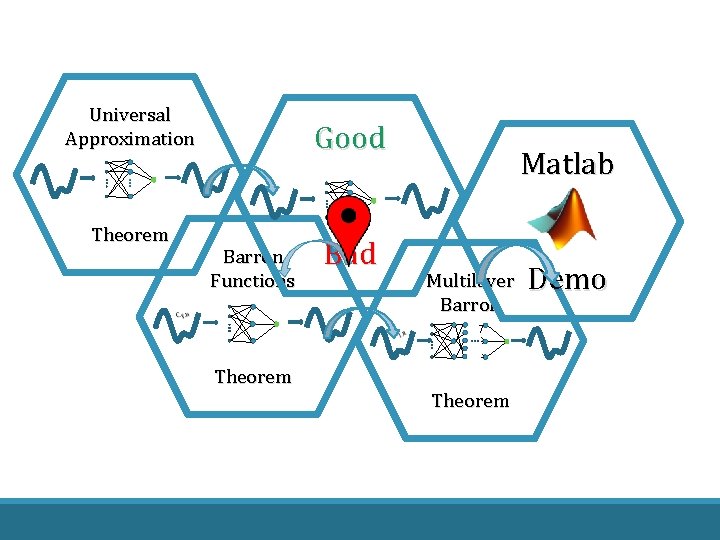

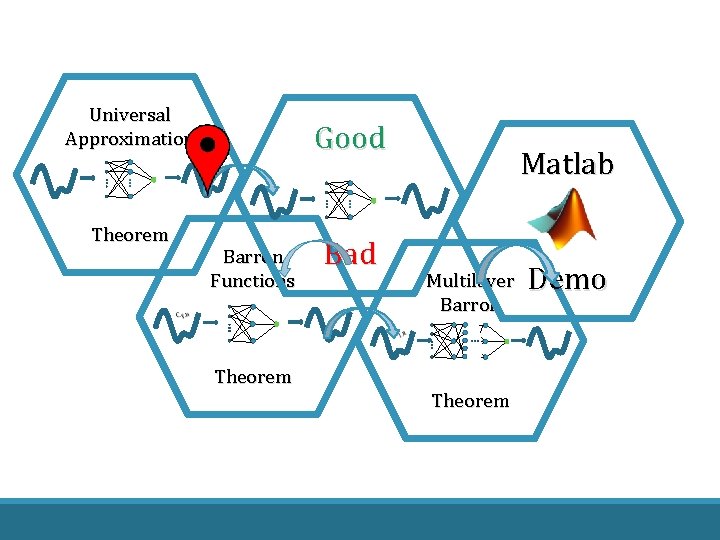

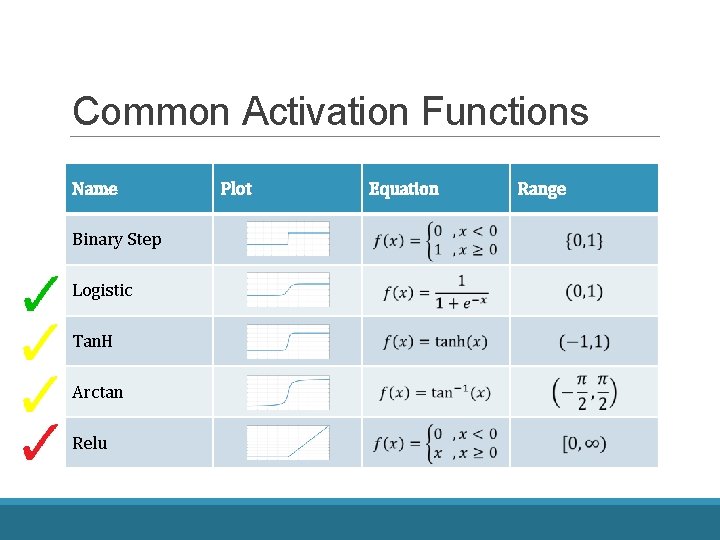

Outline
• Universal Approximation Theorem
• Good / Bad
• Barron Functions
• Multilayer Barron Theorem
• Matlab Demo

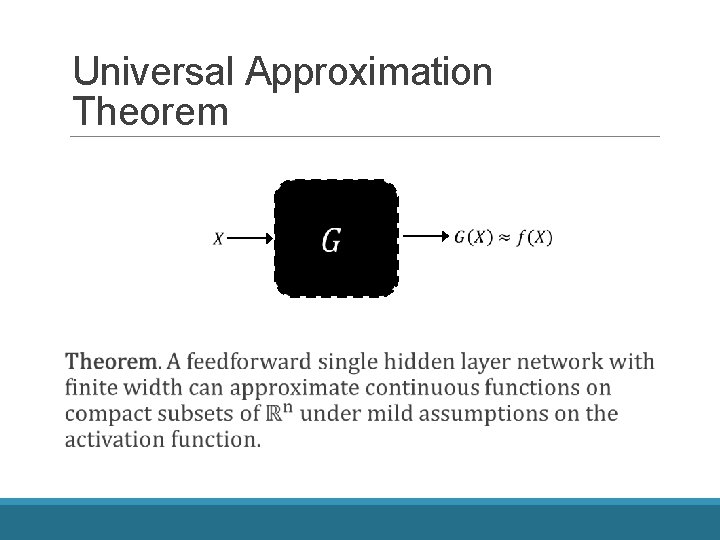

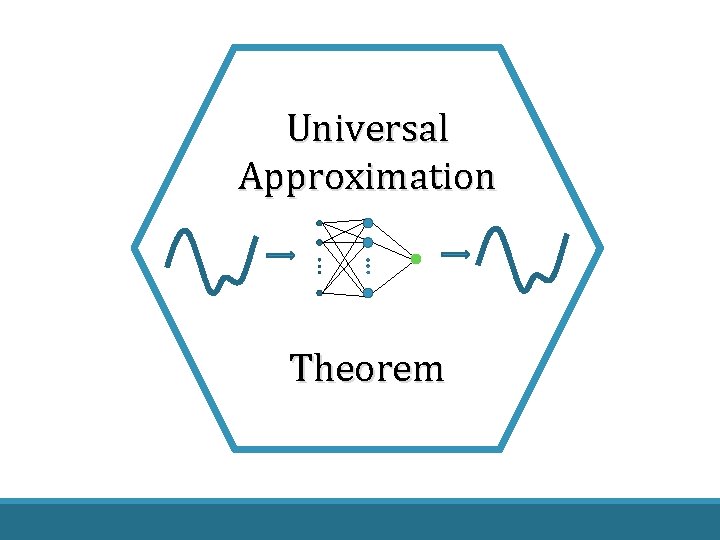

Universal Approximation Theorem

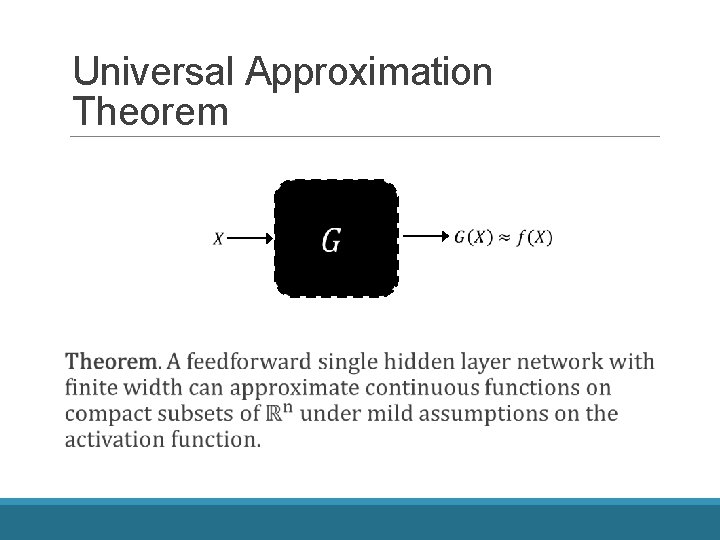

Universal Approximation Theorem

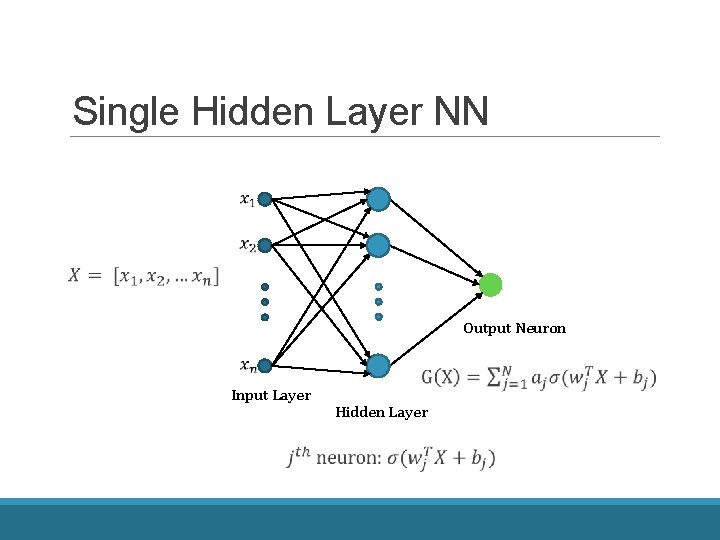

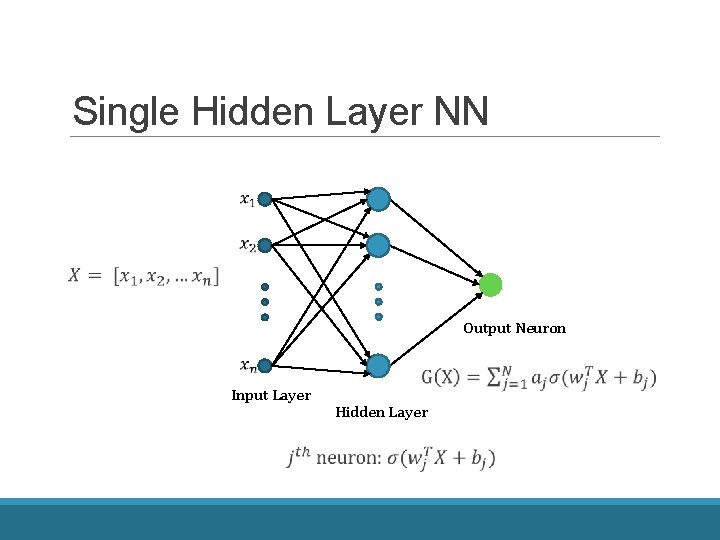

Single Hidden Layer NN

(Figure labels: Input Layer, Hidden Layer, Output Neuron)
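The single-hidden-layer architecture named on this slide can be sketched in a few lines. This is a minimal illustration, assuming the usual form G(x) = Σ_j α_j σ(w_j·x + θ_j) with a logistic activation; the function name `single_hidden_layer` and all parameter values are hypothetical and not taken from the slides.

```python
import math

def sigmoid(t):
    """Logistic sigmoid, one common choice of sigmoidal activation."""
    return 1.0 / (1.0 + math.exp(-t))

def single_hidden_layer(x, weights, biases, alphas, activation=sigmoid):
    """Compute G(x) = sum_j alphas[j] * activation(<weights[j], x> + biases[j]).

    weights[j] and biases[j] belong to hidden neuron j; alphas[j] is the
    weight the single output neuron gives to that hidden neuron.
    """
    total = 0.0
    for w, theta, alpha in zip(weights, biases, alphas):
        pre_activation = sum(wi * xi for wi, xi in zip(w, x)) + theta
        total += alpha * activation(pre_activation)
    return total

# Hypothetical parameters: 2 inputs, 3 hidden neurons, 1 output neuron.
weights = [[1.0, -1.0], [0.5, 0.5], [-2.0, 1.0]]
biases = [0.0, -0.5, 1.0]
alphas = [1.0, -2.0, 0.5]

output = single_hidden_layer([0.0, 0.0], weights, biases, alphas)
print(output)
```

The output neuron here is linear (a weighted sum), which is the form the universal approximation results are stated for.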

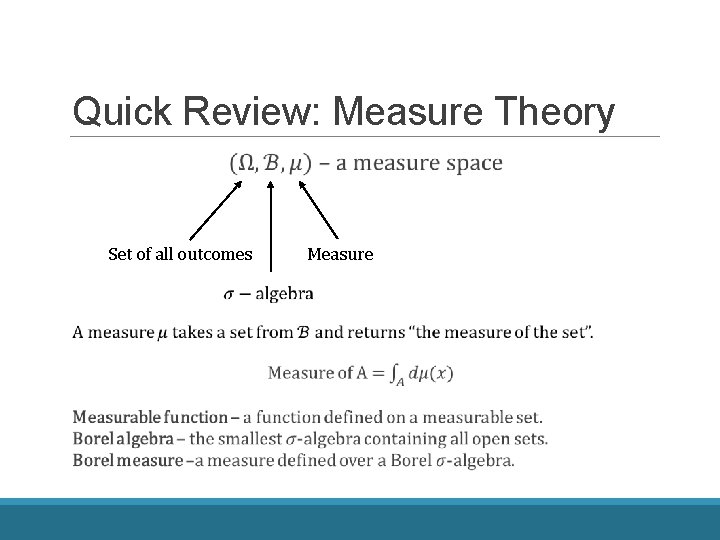

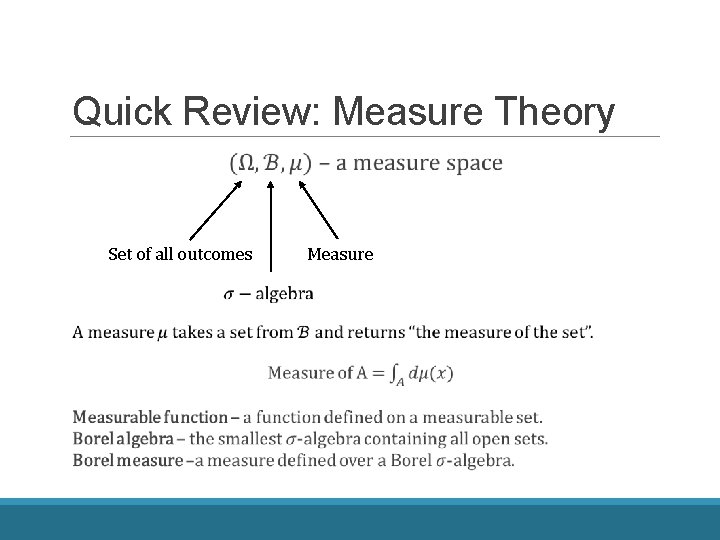

Quick Review: Measure Theory

(Figure labels: Set of all outcomes, Measure)

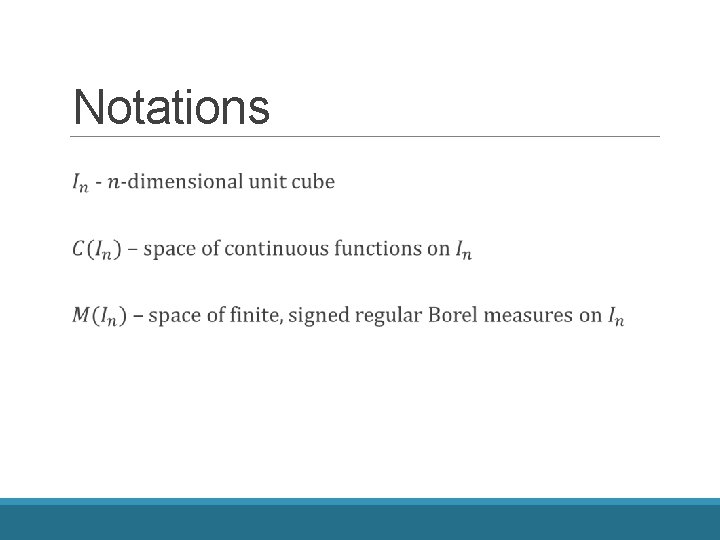

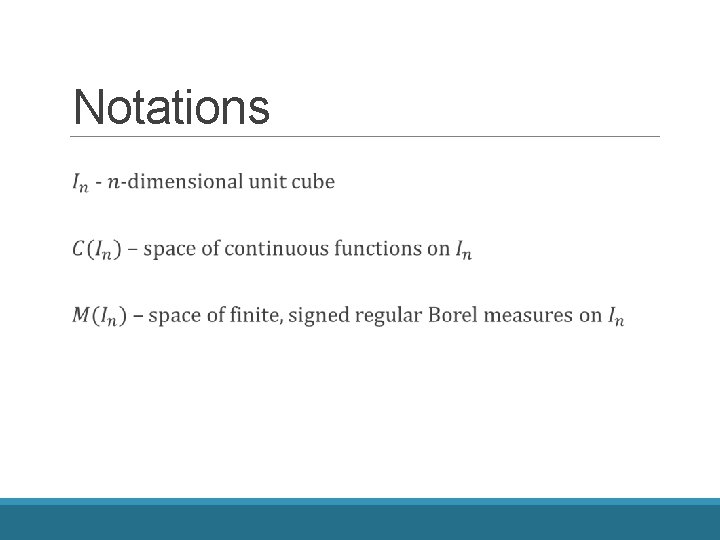

Notations

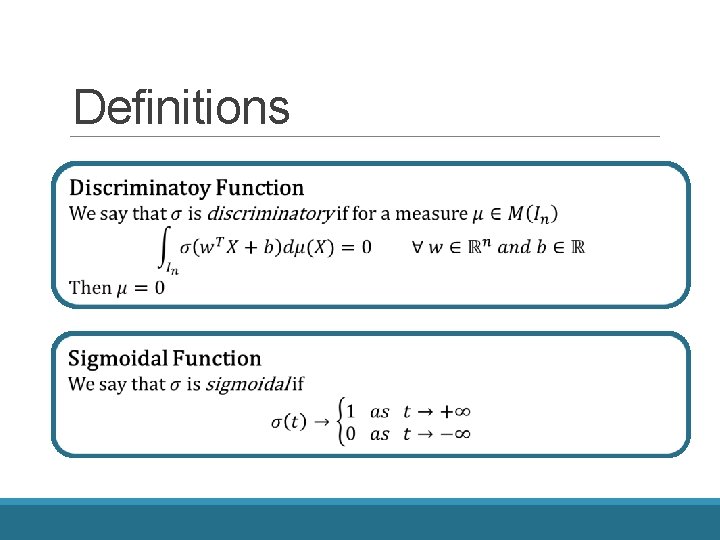

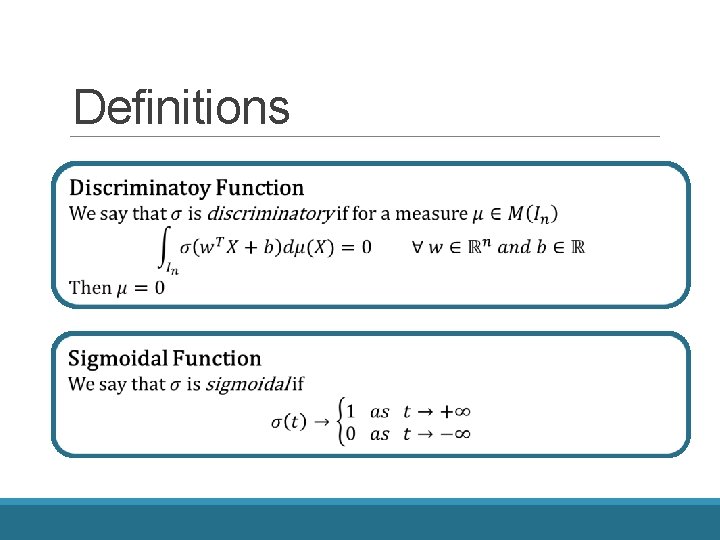

Definitions

![Theorem 1 Cybenko 89 Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-10.jpg)

Theorem 1 [Cybenko 89’]

![Theorem 1 Cybenko 89 Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-11.jpg)

Theorem 1 [Cybenko 89’]
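For reference, Theorem 1 as it is commonly quoted from Cybenko's 1989 paper can be written as below. The slide image may phrase it differently; the symbols $I_n$, $\alpha_j$, $y_j$, $\theta_j$ follow the paper's notation and are assumptions as far as this transcript is concerned.

```latex
Let $\sigma$ be any continuous discriminatory function. Then finite sums of the form
\[
  G(x) = \sum_{j=1}^{N} \alpha_j \, \sigma\!\left(y_j^{T} x + \theta_j\right)
\]
are dense in $C(I_n)$, where $I_n = [0,1]^n$. In other words, for any
$f \in C(I_n)$ and any $\varepsilon > 0$ there is a sum $G(x)$ of the above form with
\[
  |G(x) - f(x)| < \varepsilon \quad \text{for all } x \in I_n .
\]
```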

![Proof of Theorem 1 Cybenko 89 Proof of Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-12.jpg)

Proof of Theorem 1 [Cybenko 89’]

![Proof of Theorem 1 Cybenko 89 Proof of Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-13.jpg)

Proof of Theorem 1 [Cybenko 89’]

![Proof of Theorem 1 Cybenko 89 Proof of Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-14.jpg)

Proof of Theorem 1 [Cybenko 89’]

![Proof of Theorem 1 Cybenko 89 Proof of Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-15.jpg)

Proof of Theorem 1 [Cybenko 89’]

![Proof of Theorem 1 Cybenko 89 Proof of Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-16.jpg)

Proof of Theorem 1 [Cybenko 89’]

![Theorem 1 Cybenko 89 Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-17.jpg)

Theorem 1 [Cybenko 89’]

![Lemma 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-18.jpg)

Lemma 1 [Cybenko 89’]

![Theorem 2 Cybenko 89 Theorem 2 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-19.jpg)

Theorem 2 [Cybenko 89’]

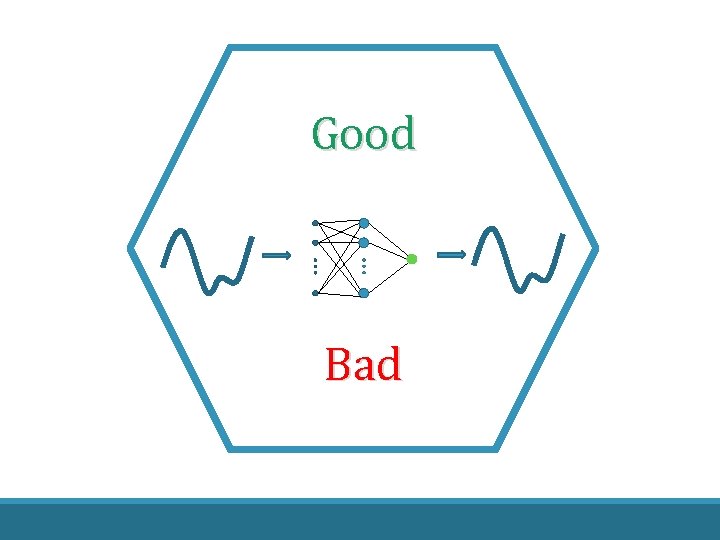

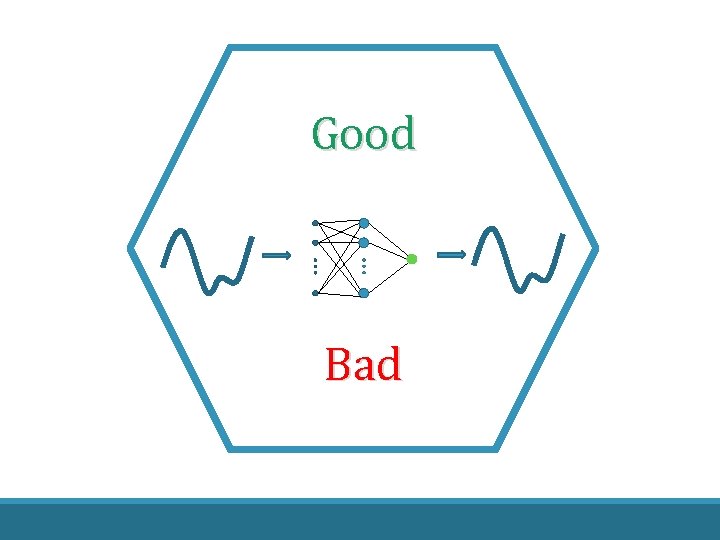

Good Bad

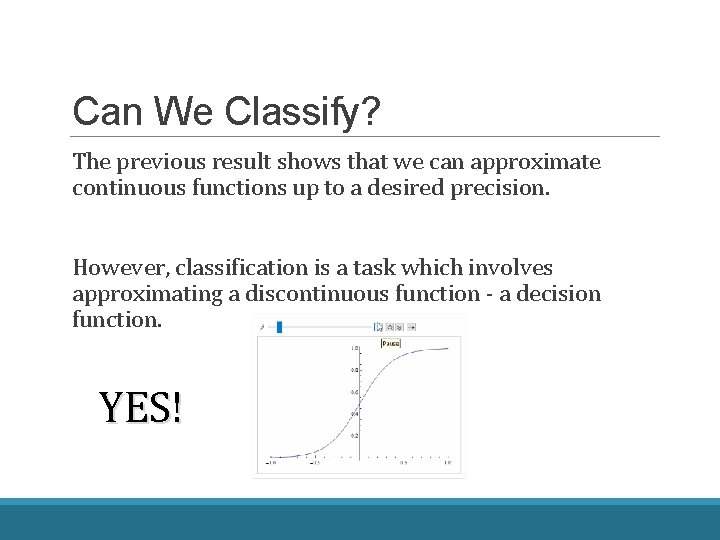

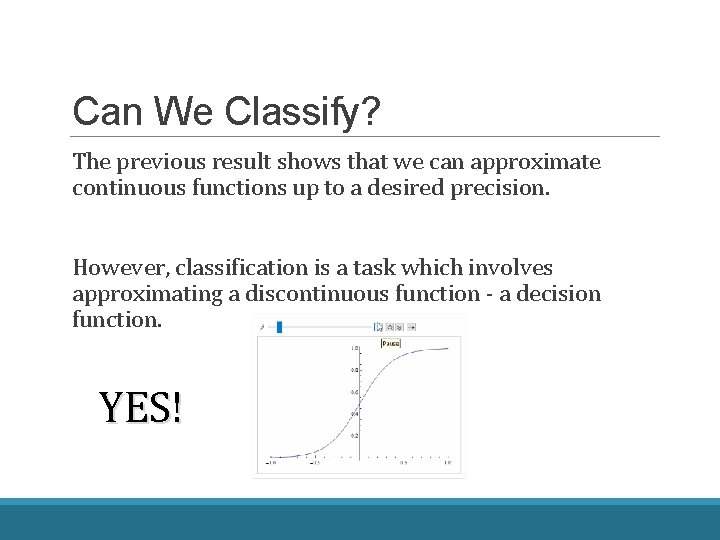

Can We Classify?

The previous result shows that we can approximate continuous functions up to any desired precision. However, classification is a task that involves approximating a discontinuous function: a decision function.

YES!
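A small numerical sketch of why the answer can be yes: a single steep sigmoid neuron matches a one-dimensional threshold decision function everywhere except on a small region around the boundary, and that region shrinks as the neuron gets steeper. The threshold 0.5, the tolerance 0.1, and the function names are illustrative assumptions, not values from the slides.

```python
import math

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def decision(x, threshold=0.5):
    """Discontinuous target: class 1 if x > threshold, else class 0."""
    return 1.0 if x > threshold else 0.0

def bad_fraction(steepness, tolerance=0.1, samples=10001):
    """Fraction of grid points in [0, 1] where the sigmoid neuron differs
    from the decision function by more than `tolerance`."""
    bad = 0
    for i in range(samples):
        x = i / (samples - 1)
        approx = sigmoid(steepness * (x - 0.5))
        if abs(approx - decision(x)) > tolerance:
            bad += 1
    return bad / samples

# The mismatched region shrinks as the neuron gets steeper.
fractions = [bad_fraction(k) for k in (10, 100, 1000)]
print(fractions)
```

The approximation is never uniformly good (at the boundary itself the error stays near 0.5), so the sensible guarantee is agreement outside a set of small measure.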

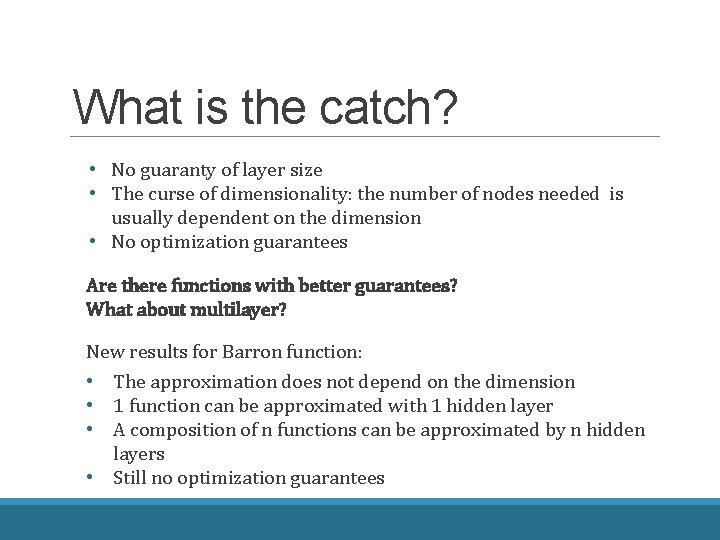

What is the catch?
• No guarantee on the layer size
• The curse of dimensionality: the number of nodes needed usually depends on the dimension
• No optimization guarantees

Are there functions with better guarantees? What about multilayer networks?

New results for Barron functions:
• The approximation does not depend on the dimension
• 1 function can be approximated with 1 hidden layer
• A composition of n functions can be approximated by n hidden layers
• Still no optimization guarantees

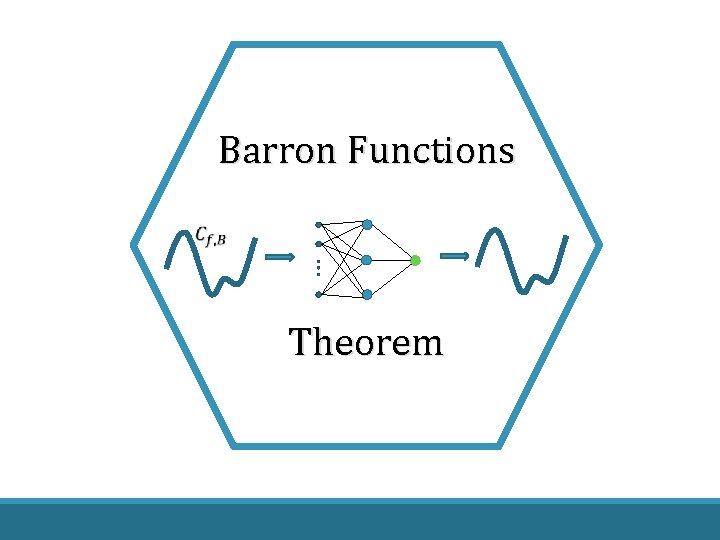

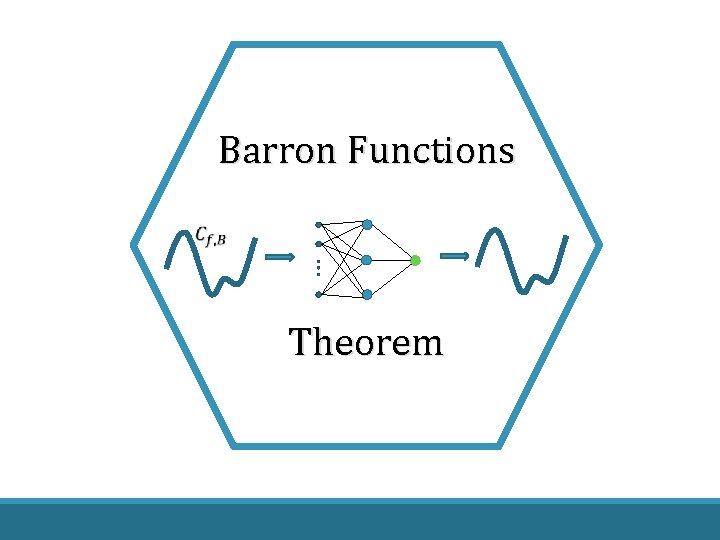

Barron Functions Theorem

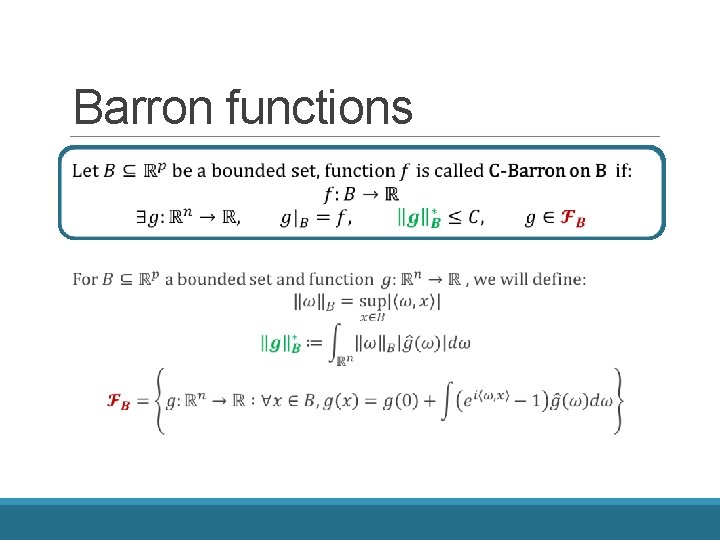

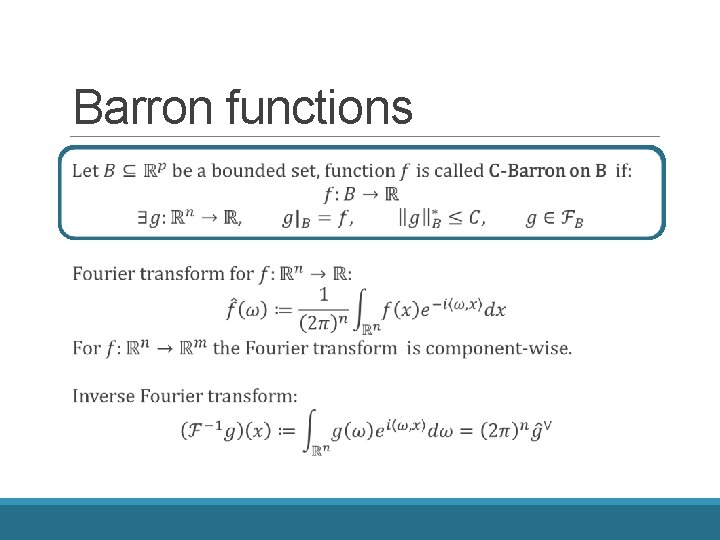

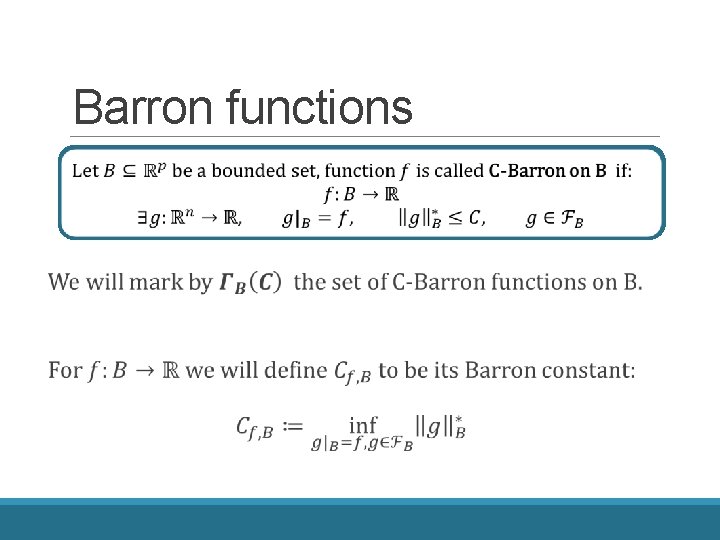

Barron functions

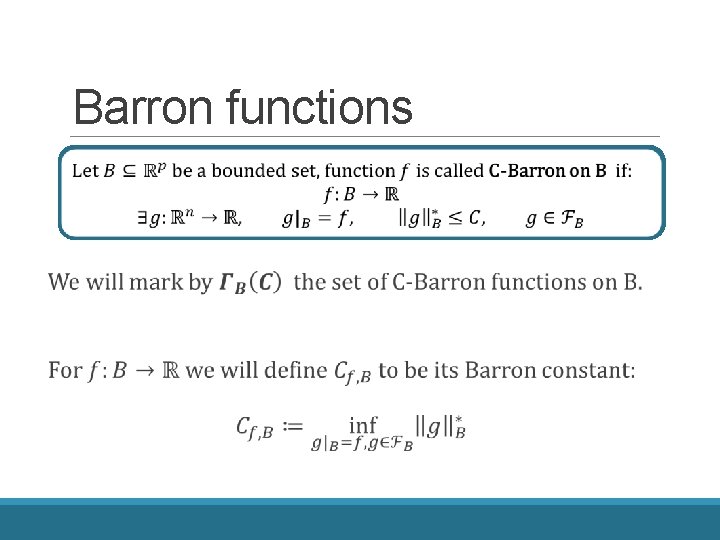

Barron functions

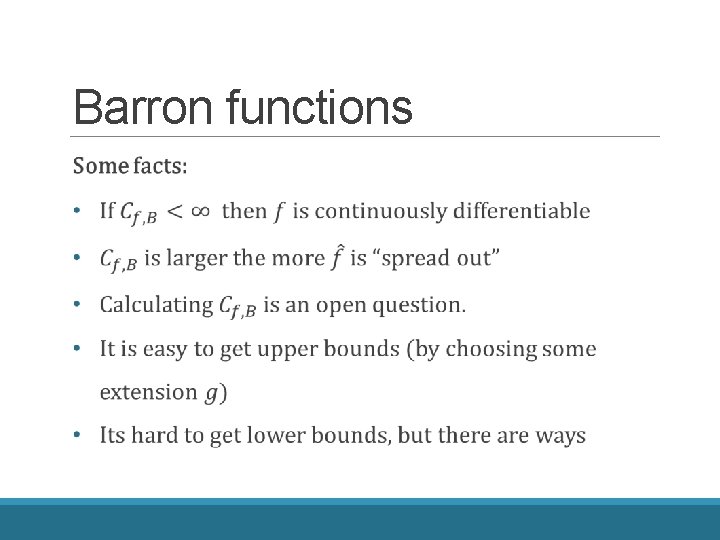

Barron functions

![Barron Theorem Bar 93 Barron Theorem [Bar 93]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-30.jpg)

Barron Theorem [Bar 93]
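For reference, the bound usually quoted from Barron's 1993 paper can be written as below. The slide image may use different notation; the symbols $C_f$, $B_r$, $\mu$ and $f_n$ follow the paper and are assumptions as far as this transcript is concerned.

```latex
Let $f$ have a Fourier representation with finite first moment
\[
  C_f = \int_{\mathbb{R}^d} |\omega| \, |\hat{f}(\omega)| \, d\omega < \infty .
\]
Then for every $n \ge 1$ there is a single-hidden-layer network with $n$ sigmoidal units,
\[
  f_n(x) = \sum_{k=1}^{n} c_k \, \sigma(a_k \cdot x + b_k) + c_0 ,
\]
such that for the ball $B_r = \{x : |x| \le r\}$ and any probability measure $\mu$ on $B_r$,
\[
  \int_{B_r} \bigl(f(x) - f_n(x)\bigr)^2 \, \mu(dx) \;\le\; \frac{(2 r C_f)^2}{n} .
\]
```

The right-hand side decays like $1/n$ with no explicit dependence on the input dimension $d$, although $C_f$ itself may grow with $d$.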

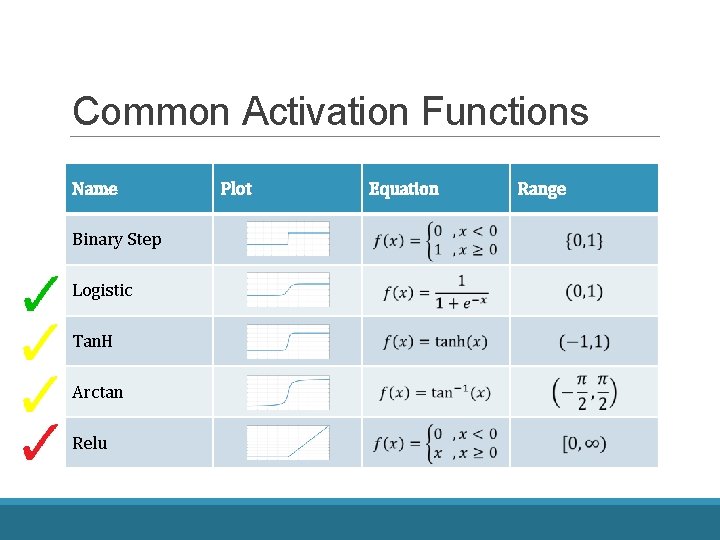

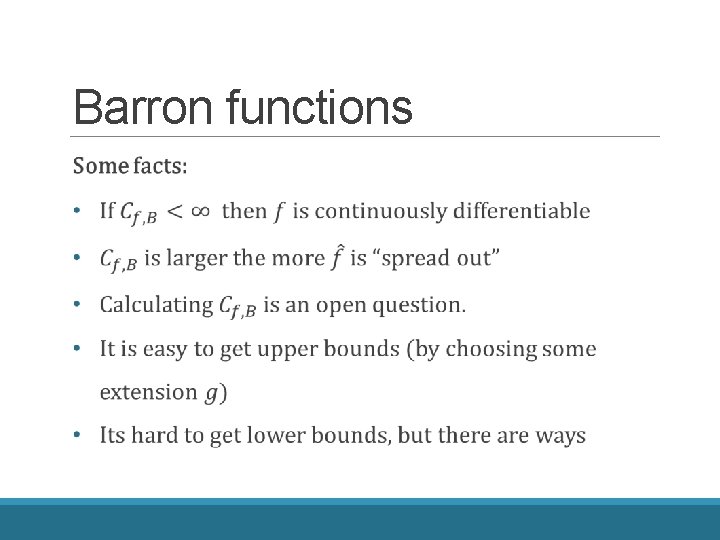

Common Activation Functions

(Table columns: Name, Plot, Equation, Range. Rows: Binary Step, Logistic, TanH, Arctan, ReLU.)
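The equations and ranges in the table were images and did not survive extraction. As a stand-in, here is a sketch of the five named activations using their standard textbook definitions. The value of the binary step at exactly 0 is a convention that varies between sources; taking it to be 1 is an assumption here.

```python
import math

def binary_step(x):
    """0 for x < 0, 1 for x >= 0 (value at 0 is a convention). Range: {0, 1}."""
    return 1.0 if x >= 0 else 0.0

def logistic(x):
    """1 / (1 + exp(-x)), written in a numerically stable way. Range: (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def tanh(x):
    """Hyperbolic tangent. Range: (-1, 1)."""
    return math.tanh(x)

def arctan(x):
    """Inverse tangent. Range: (-pi/2, pi/2)."""
    return math.atan(x)

def relu(x):
    """max(0, x). Range: [0, infinity)."""
    return max(0.0, x)

ACTIVATIONS = {
    "Binary Step": binary_step,
    "Logistic": logistic,
    "TanH": tanh,
    "Arctan": arctan,
    "ReLU": relu,
}

for name, func in ACTIVATIONS.items():
    print(name, [round(func(x), 4) for x in (-2.0, 0.0, 2.0)])
```

Of these, the logistic, TanH (after rescaling) and Arctan (after rescaling) are continuous sigmoidal functions in the sense used by the theorems above; ReLU is unbounded and the binary step is discontinuous.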

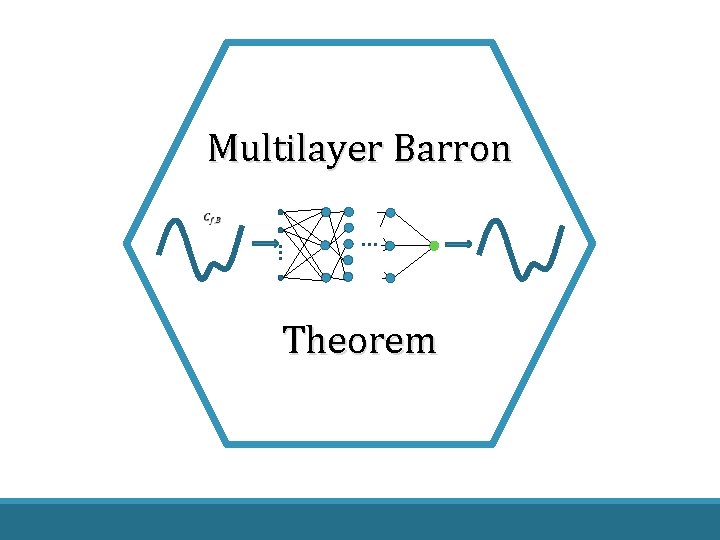

Multilayer Barron Theorem

![Multilayer Barron Theorem [2017]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-34.jpg)

Multilayer Barron Theorem [2017]

![Multilayer Barron Theorem 2017 Multilayer Barron Theorem [2017]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-35.jpg)

Multilayer Barron Theorem [2017]

![Multilayer Barron Theorem 2017 Why is this important Because there are complex functions that Multilayer Barron Theorem [2017] Why is this important? Because there are complex functions that](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-36.jpg)

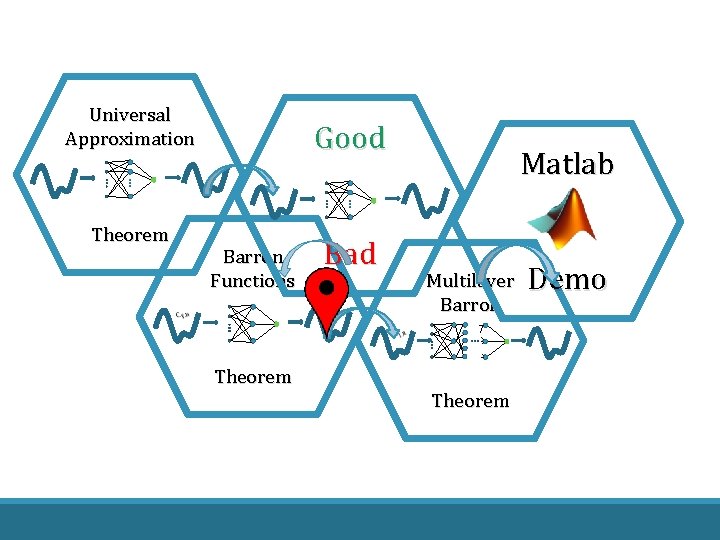

Multilayer Barron Theorem [2017]

Why is this important? Because there are complex functions that are combinations of Barron functions.

![Multilayer Barron Theorem 2017 Whats next Multilayer Barron Theorem [2017] What’s next?](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-37.jpg)

Multilayer Barron Theorem [2017] What’s next?

Matlab Demo

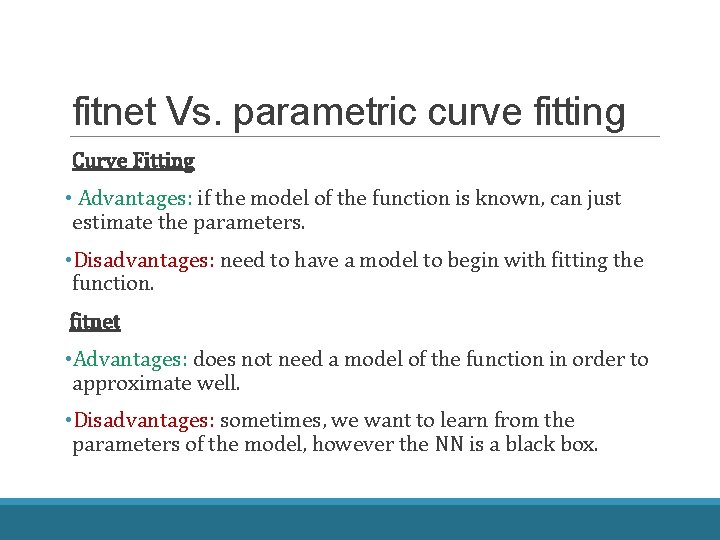

fitnet vs. parametric curve fitting

Curve fitting
• Advantages: if the model of the function is known, we only need to estimate its parameters.
• Disadvantages: a model is needed before the function can be fitted.

fitnet
• Advantages: does not need a model of the function in order to approximate it well.
• Disadvantages: sometimes we want to learn from the parameters of the model, but the NN is a black box.
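The trade-off can be illustrated with a small sketch in Python rather than Matlab. It does not use or reproduce `fitnet`; the hand-written network, the synthetic data (noisy samples of y = 2x + 1), the hidden-layer size and the learning rate are all assumptions made for illustration. The parametric fit returns interpretable parameters (slope and intercept), while the network returns only predictions.

```python
import math
import random

random.seed(0)

# Hypothetical data: noisy samples of y = 2x + 1 on [-1, 1].
xs = [i / 10.0 - 1.0 for i in range(21)]
ys = [2.0 * x + 1.0 + random.gauss(0.0, 0.05) for x in xs]
n = len(xs)

# Parametric curve fitting: the model y = slope * x + intercept is known,
# so closed-form least squares recovers its parameters directly.
mean_x = sum(xs) / n
mean_y = sum(ys) / n
slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
intercept = mean_y - slope * mean_x

# Model-free fit: one hidden layer of tanh units, full-batch gradient descent.
hidden = 5
w = [random.uniform(-1, 1) for _ in range(hidden)]
b = [random.uniform(-1, 1) for _ in range(hidden)]
a = [random.uniform(-1, 1) for _ in range(hidden)]
c = 0.0

def predict(x):
    return c + sum(a[j] * math.tanh(w[j] * x + b[j]) for j in range(hidden))

def mse():
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / n

initial_loss = mse()
learning_rate = 0.05
for _ in range(2000):
    grad_w = [0.0] * hidden
    grad_b = [0.0] * hidden
    grad_a = [0.0] * hidden
    grad_c = 0.0
    for x, y in zip(xs, ys):
        h = [math.tanh(w[j] * x + b[j]) for j in range(hidden)]
        err = c + sum(a[j] * h[j] for j in range(hidden)) - y
        grad_c += 2.0 * err / n
        for j in range(hidden):
            grad_a[j] += 2.0 * err * h[j] / n
            d_pre = 2.0 * err * a[j] * (1.0 - h[j] ** 2) / n
            grad_w[j] += d_pre * x
            grad_b[j] += d_pre
    for j in range(hidden):
        w[j] -= learning_rate * grad_w[j]
        b[j] -= learning_rate * grad_b[j]
        a[j] -= learning_rate * grad_a[j]
    c -= learning_rate * grad_c
final_loss = mse()

print("parametric fit: slope=%.3f intercept=%.3f" % (slope, intercept))
print("network MSE before/after training: %.4f / %.4f" % (initial_loss, final_loss))
```

The fitted `slope` and `intercept` can be read and interpreted directly, whereas the trained `w`, `b`, `a`, `c` have no obvious relation to the underlying line.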

Questions

References
• George Cybenko (1989). Approximation by superpositions of a sigmoidal function.
• Walter Rudin (1991). Functional Analysis. McGraw-Hill Science/Engineering/Math. (Hahn-Banach Theorem)
• Riesz Representation Theorem, Wolfram
• Holden Lee, Rong Ge, Andrej Risteski, Tengyu Ma, Sanjeev Arora. On the ability of neural nets to express distributions.

![Theorem 2 Cybenko 89 Theorem 2 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-43.jpg)

Theorem 2 [Cybenko 89’]

![Proof of Theorem 1 Cybenko 89 Proof of Theorem 1 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-44.jpg)

Proof of Theorem 1 [Cybenko 89’]

![Theorem 3 Cybenko 89 Theorem 3 [Cybenko 89’]](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-45.jpg)

Theorem 3 [Cybenko 89’]

![Multilayer Barron Theorem 2017 Why is this important A network with multiple hidden layers Multilayer Barron Theorem [2017] Why is this important? A network with multiple hidden layers](https://slidetodoc.com/presentation_image_h/a4bdf0433e587b76b0f3e6af6b2d881d/image-46.jpg)

Multilayer Barron Theorem [2017]

Why is this important? A network with multiple hidden layers of poly(n) width can approximate functions that need an exponential number of nodes with 1 hidden layer.