Neural Networks And their Applications To Speech Recognition

- Slides: 20

Neural Networks And their Applications To Speech Recognition

- A variety of knowledge sources are required in the AI approach to speech recognition.
- Two key concepts of AI are:
  - automatic knowledge learning
  - adaptation
- These concepts can be implemented by the neural network approach.

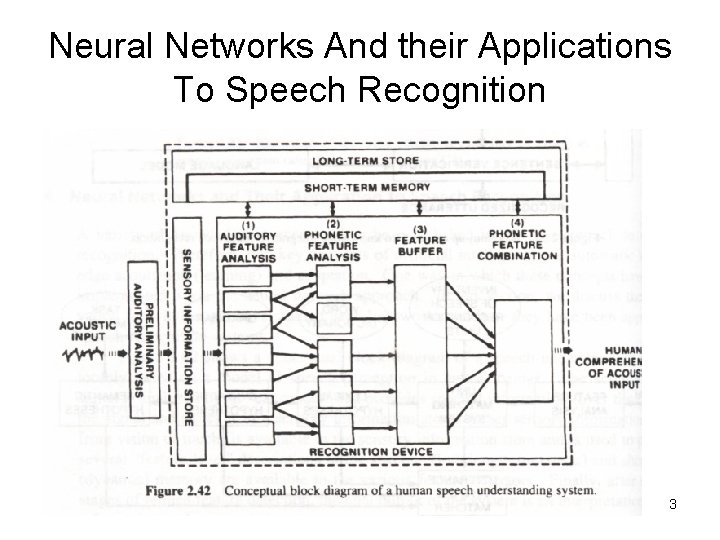

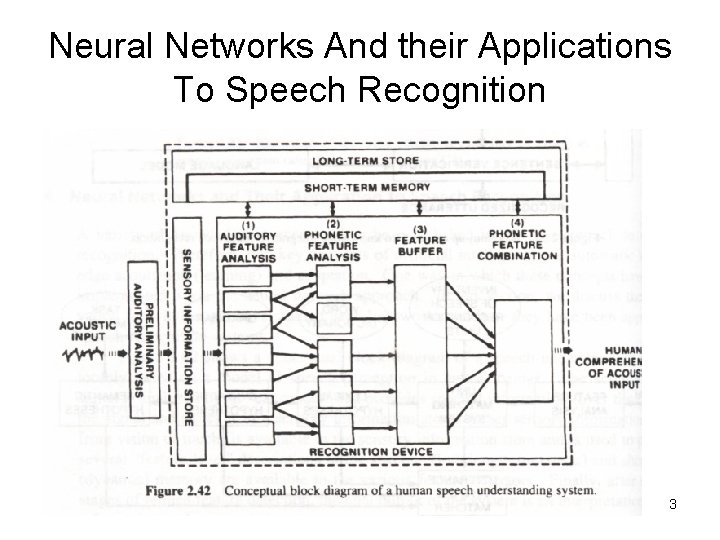

Neural Networks And their Applications To Speech Recognition

- The acoustic signal is analyzed by an “ear model” that provides spectral information about the signal and stores it in a sensory information store.
- Other sensory information (e.g. from vision) is also available in the sensory information store and is used to provide several “feature-level” descriptions of the speech.
- Both long-term (static) and short-term (dynamic) memory are available to the various feature detectors.
- Finally, after several stages of feature detection, the output of the system is an interpretation of the information in the acoustic input.


Neural Networks And their Applications To Speech Recognition

- The human speech understanding model is a neural net.
- The various feature analyses represent processing at various levels in the neural pathways to the brain.

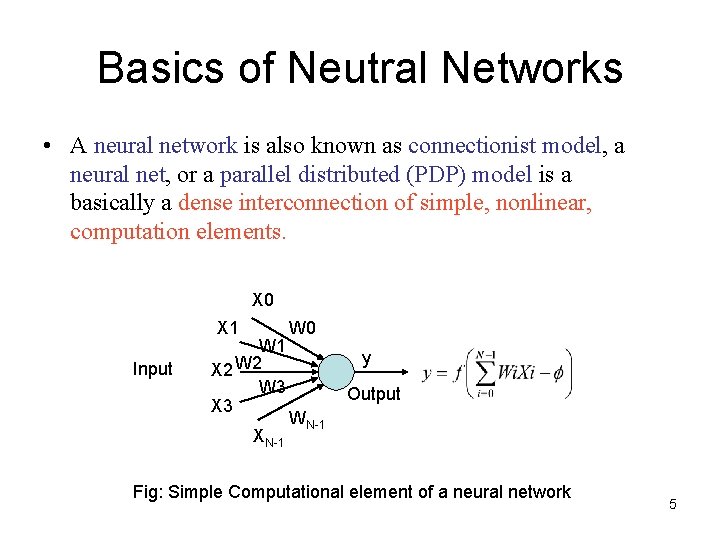

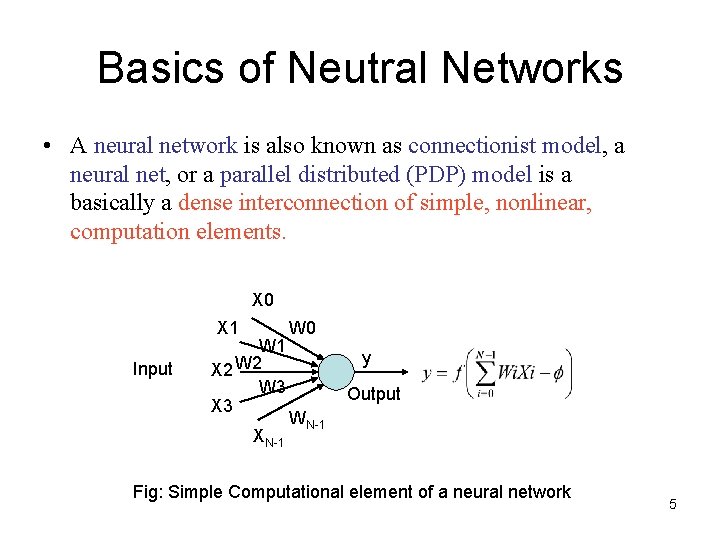

Basics of Neural Networks

- A neural network, also known as a connectionist model, a neural net, or a parallel distributed processing (PDP) model, is basically a dense interconnection of simple, nonlinear computational elements.

Fig: Simple computational element of a neural network, with inputs $x_0, \dots, x_{N-1}$, weights $w_0, \dots, w_{N-1}$, and output $y$.

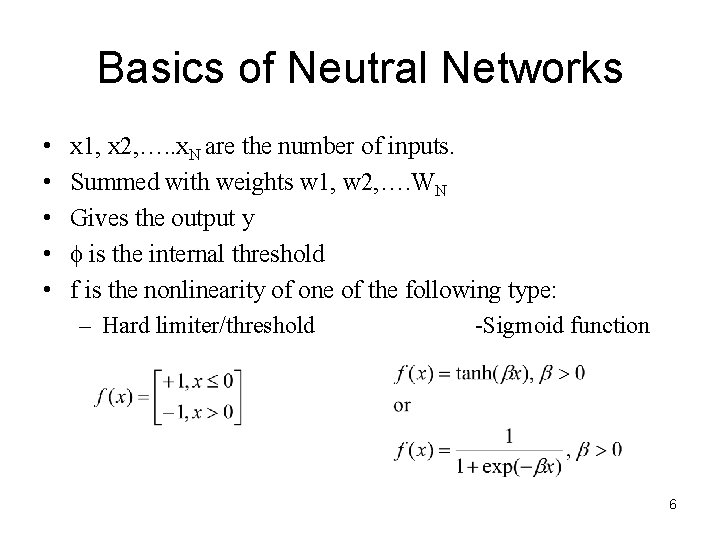

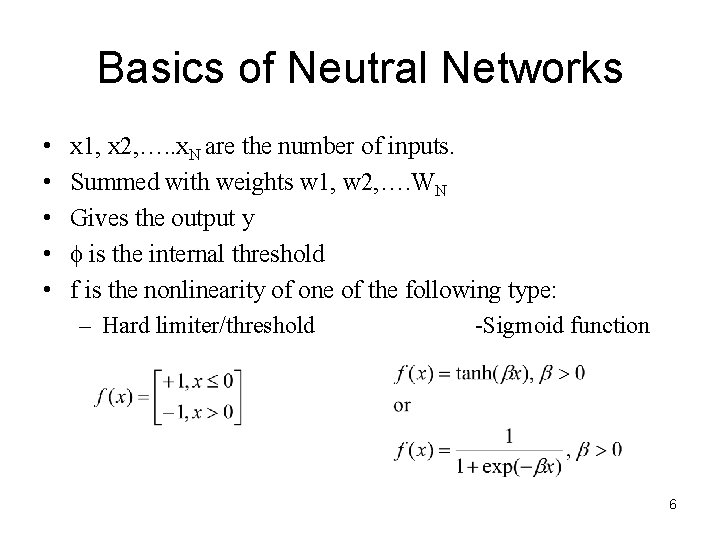

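Basics of Neural Networks

- $x_1, x_2, \dots, x_N$ are the $N$ inputs.
- The inputs are summed with weights $w_1, w_2, \dots, w_N$, offset by the threshold, and passed through a nonlinearity to give the output $y = f\left(\sum_{i=1}^{N} w_i x_i - \phi\right)$.
- $\phi$ is the internal threshold (offset).
- $f$ is a nonlinearity of one of the following types:
  - hard limiter (threshold)
  - sigmoid function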
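The single computational element described above can be sketched in a few lines of Python. This is a minimal illustration, not code from the slides: the names `hard_limiter`, `sigmoid`, `element_output`, the slope parameter `beta`, the `+1/-1` convention for the hard limiter, and all numeric values are assumptions chosen for the example.

```python
import math

def hard_limiter(a):
    """Hard limiter: +1 for a non-negative activation, -1 otherwise (assumed convention)."""
    return 1.0 if a >= 0 else -1.0

def sigmoid(a, beta=1.0):
    """Logistic sigmoid; beta is an illustrative slope parameter."""
    return 1.0 / (1.0 + math.exp(-beta * a))

def element_output(x, w, phi, f):
    """Weighted sum of the inputs, minus the threshold phi, passed through f."""
    activation = sum(wi * xi for wi, xi in zip(w, x)) - phi
    return f(activation)

# Illustrative inputs, weights, and threshold (not taken from the slides).
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, 0.1]
phi = 0.2

y_hard = element_output(x, w, phi, hard_limiter)  # activation is about -0.1
y_soft = element_output(x, w, phi, sigmoid)
```

The same weighted sum feeds both nonlinearities; only the final squashing step differs.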

Neural Network Topologies

- Network topology (the configuration of the network): how the simple computational elements are interconnected.
- Three standard topologies:
  - single-layer or multilayer perceptrons
  - Hopfield or recurrent networks
  - Kohonen or self-organizing networks

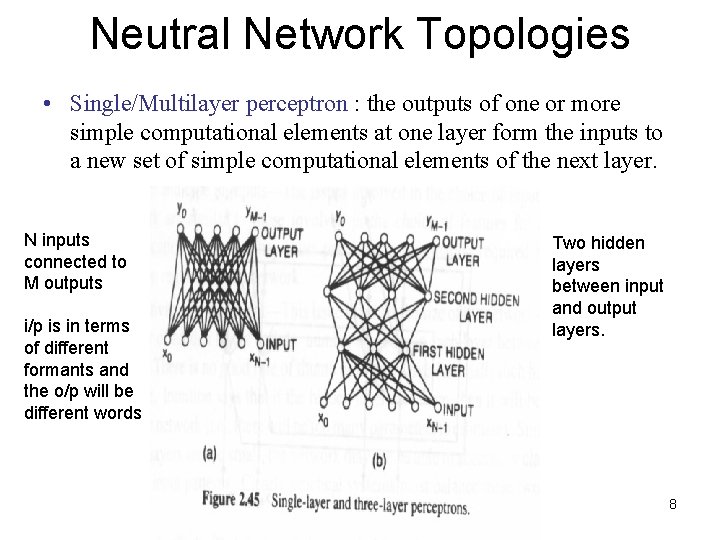

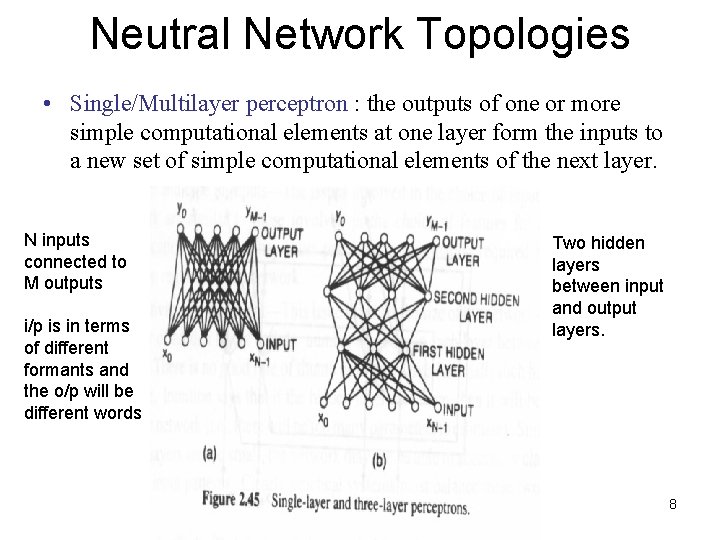

Neural Network Topologies

- Single/multilayer perceptron: the outputs of one or more simple computational elements at one layer form the inputs to a new set of simple computational elements at the next layer.
- N inputs are connected to M outputs.
- The inputs are given in terms of different formants, and the outputs are different words.
- In the multilayer example, two hidden layers sit between the input and output layers.
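The layer-to-layer structure can be sketched as a forward pass in which each layer's outputs become the next layer's inputs. The sketch below assumes sigmoid elements, random weights, and illustrative layer sizes (3 inputs, two hidden layers, 2 outputs); none of these values come from the slides, and the helper names are hypothetical.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def layer(inputs, weights, offsets):
    """One layer: every element computes sigmoid(weighted sum of inputs - offset)."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) - phi)
        for row, phi in zip(weights, offsets)
    ]

def random_layer(n_in, n_out, rng):
    """Random weights and offsets for a layer with n_in inputs and n_out elements."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    offsets = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return weights, offsets

def forward(x, layers):
    """Feed x through the layers; each layer's output is the next layer's input."""
    for weights, offsets in layers:
        x = layer(x, weights, offsets)
    return x

rng = random.Random(0)
sizes = [3, 5, 4, 2]  # N = 3 inputs, two hidden layers, M = 2 outputs (illustrative)
layers = [random_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
outputs = forward([0.7, 0.2, 0.1], layers)
```

With untrained random weights the outputs carry no meaning; the point is only the wiring between layers.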

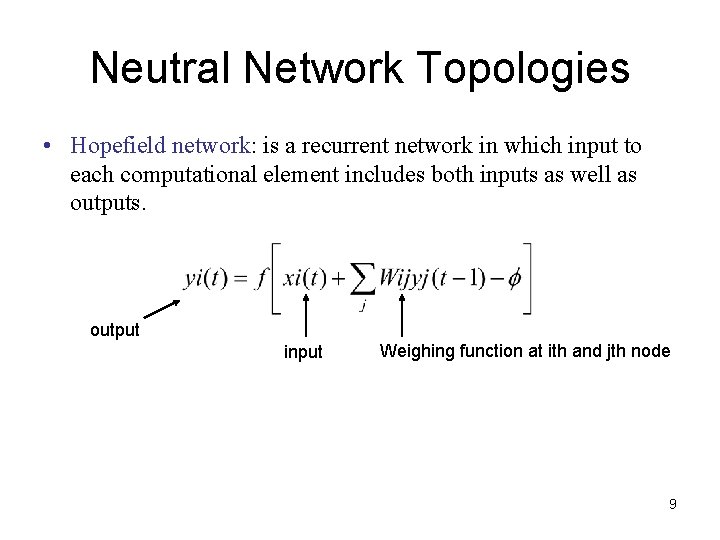

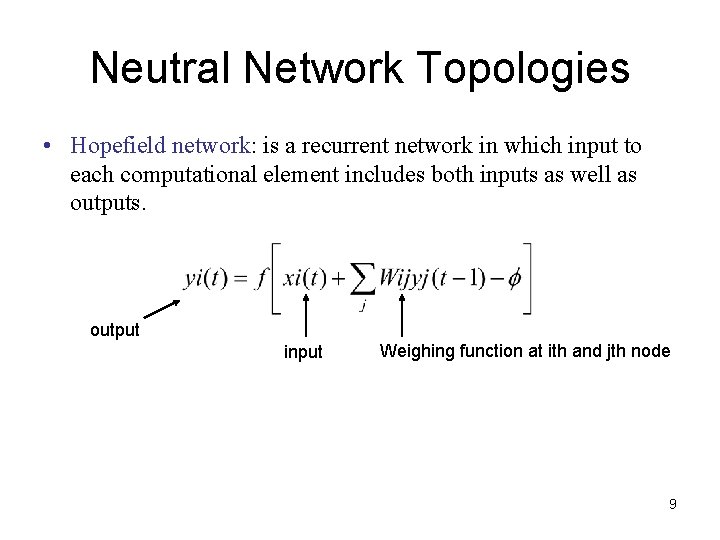

Neural Network Topologies

- Hopfield network: a recurrent network in which the input to each computational element includes both the external inputs and the outputs of the elements.

(Equation labels: output, input, and the weight between the $i$th and $j$th nodes.)

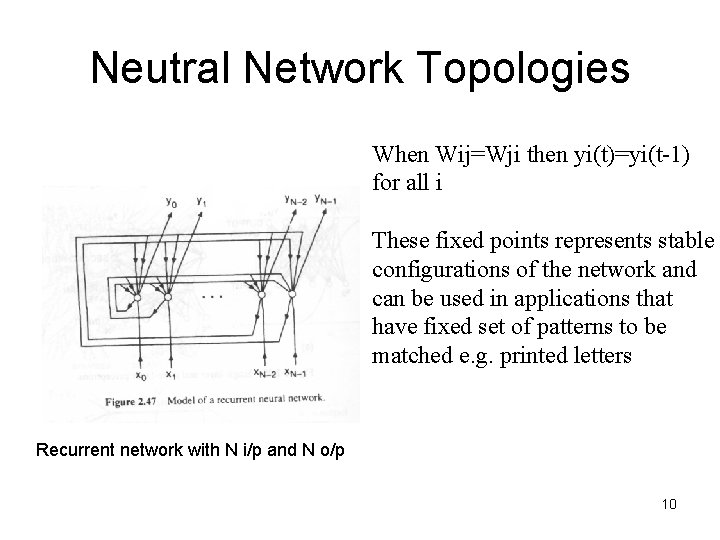

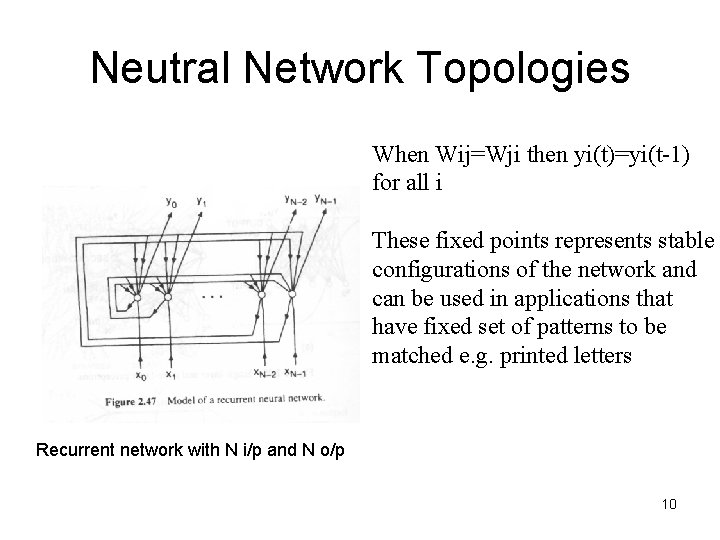

Neural Network Topologies

- When $w_{ij} = w_{ji}$, the network settles to a fixed point where $y_i(t) = y_i(t-1)$ for all $i$.
- These fixed points represent stable configurations of the network and can be used in applications that have a fixed set of patterns to be matched, e.g. printed letters.

Fig: Recurrent network with N inputs and N outputs.
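A small sketch can make the fixed-point behaviour concrete. It rests on several assumptions that are not in the slides: patterns are vectors of +1/-1, the symmetric weights are built with a Hebbian outer-product rule, units are updated one at a time in random order, and the external input is used only as the initial state. The function names and the two stored patterns are hypothetical.

```python
import random

def train_hebbian(patterns):
    """Build symmetric weights (w[i][j] == w[j][i]) with a zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def recall(w, state, rng, max_sweeps=20):
    """Update units asynchronously until no unit changes, i.e. y(t) == y(t-1)."""
    y = list(state)
    n = len(y)
    for _ in range(max_sweeps):
        changed = False
        for i in rng.sample(range(n), n):  # visit every unit once, in random order
            s = sum(w[i][j] * y[j] for j in range(n))
            new = 1 if s >= 0 else -1
            if new != y[i]:
                y[i] = new
                changed = True
        if not changed:
            break
    return y

# Two illustrative stored patterns (mutually orthogonal).
stored = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]
w = train_hebbian(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # corrupt one element of the first pattern
restored = recall(w, noisy, random.Random(0))
```

Starting from the corrupted pattern, the network settles on the stored pattern, which is the sense in which fixed points act as matchable templates.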

Neural Network Topologies

- Kohonen network topology: a self-organizing feature map.
- It is a clustering procedure for providing a codebook of stable patterns in the input space.
- An example is the “vector quantization” method, which will be discussed in detail in the next chapter.
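The codebook idea can be illustrated with a one-dimensional self-organizing map. This is a rough sketch under stated assumptions, not the procedure from the slides or the next chapter: the Gaussian neighbourhood, the linearly decaying learning rate and radius, the function names, and the synthetic two-cluster data are all chosen for illustration.

```python
import math
import random

def nearest(codebook, x):
    """Index of the codebook vector closest to x (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda k: sum((c - xi) ** 2 for c, xi in zip(codebook[k], x)),
    )

def train_som(data, n_units, epochs, rng, lr0=0.5, radius0=1.0):
    """Move the winning unit and its neighbours on a 1-D grid toward each input."""
    dim = len(data[0])
    codebook = [[rng.random() for _ in range(dim)] for _ in range(n_units)]
    for epoch in range(epochs):
        frac = 1.0 - epoch / epochs          # decays from 1 toward 0
        lr = lr0 * frac
        radius = max(radius0 * frac, 1e-3)
        for x in data:
            winner = nearest(codebook, x)
            for k in range(n_units):
                # Neighbourhood strength falls off with grid distance from the winner.
                h = math.exp(-((k - winner) ** 2) / (2 * radius ** 2))
                codebook[k] = [c + lr * h * (xi - c) for c, xi in zip(codebook[k], x)]
    return codebook

# Synthetic 2-D data around two illustrative cluster centres.
rng = random.Random(0)
centers = [(0.2, 0.2), (0.8, 0.8)]
data = [
    [cx + rng.uniform(-0.1, 0.1), cy + rng.uniform(-0.1, 0.1)]
    for cx, cy in centers
    for _ in range(20)
]
codebook = train_som(data, n_units=4, epochs=20, rng=rng)
```

After training, `nearest(codebook, x)` maps any input vector to a codebook index, which is the quantization step.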

Network Characteristics

Four characteristics:

1. Number and type of inputs: the issues involved in the choice of inputs to a neural network are similar to those involved in the choice of features for any pattern classification system. The inputs must provide the information required to make the decision.
2. Connectivity of the network: this involves the size of the network, i.e. the number of hidden layers and the number of nodes in each layer between the input and the output.
   - If the hidden layers are too large, the network will be difficult to train (many parameters to consider).
   - If they are too small, the network may not be able to accurately classify all the desired input patterns.
   - A practical system must therefore balance these two competing effects.

Network Characteristics

3. Choice of offset: the values of the weights and the offset (threshold) must be chosen as part of the training procedure.
4. Choice of nonlinearity: $f$ (the nonlinearity) must be continuous and differentiable for the training algorithm to be applicable.
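The differentiability requirement can be made concrete with the logistic sigmoid. Assuming gradient-based training (the slides do not name the algorithm), the weight updates need the derivative of $f$, and for the sigmoid it has a simple closed form:

```latex
f(a) = \frac{1}{1 + e^{-a}}, \qquad
f'(a) = f(a)\,\bigl(1 - f(a)\bigr)
```

A hard limiter, by contrast, has zero derivative everywhere except at the threshold, where it is not differentiable, so it gives such a procedure no gradient to follow.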

Training of Neural Network Parameters

For multilayer perceptrons, a simple iterative, convergent procedure exists for choosing a set of parameters whose values asymptotically approach a stationary point with a certain optimality property.

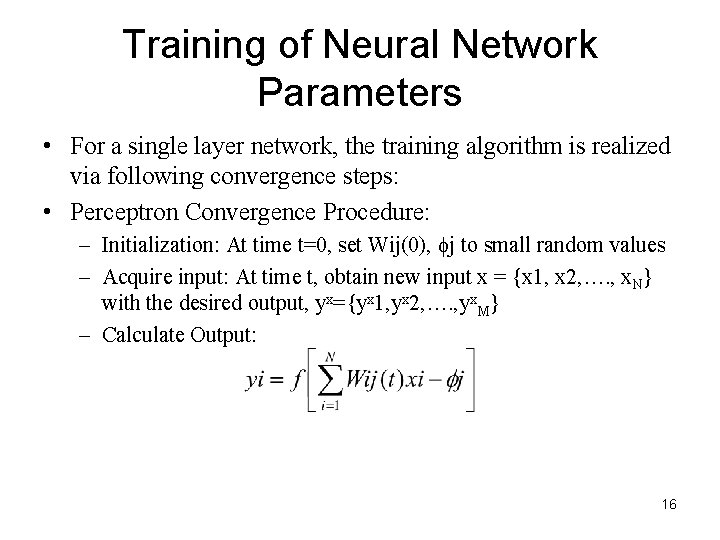

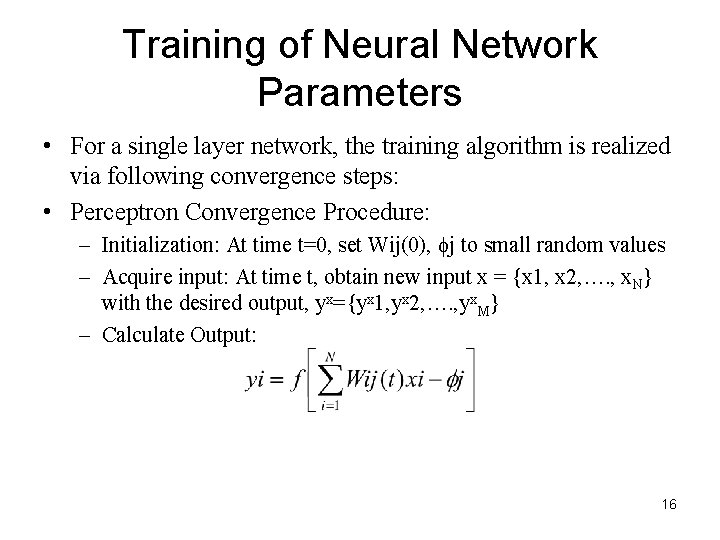

Training of Neural Network Parameters

- For a single-layer network, the training algorithm is realized via the following convergence steps.
- Perceptron convergence procedure:
  - Initialization: at time $t = 0$, set the weights $w_{ij}(0)$ and the thresholds $\phi_j$ to small random values.
  - Acquire input: at time $t$, obtain a new input $x = \{x_1, x_2, \dots, x_N\}$ with the desired output $y^x = \{y_1^x, y_2^x, \dots, y_M^x\}$.
  - Calculate output:

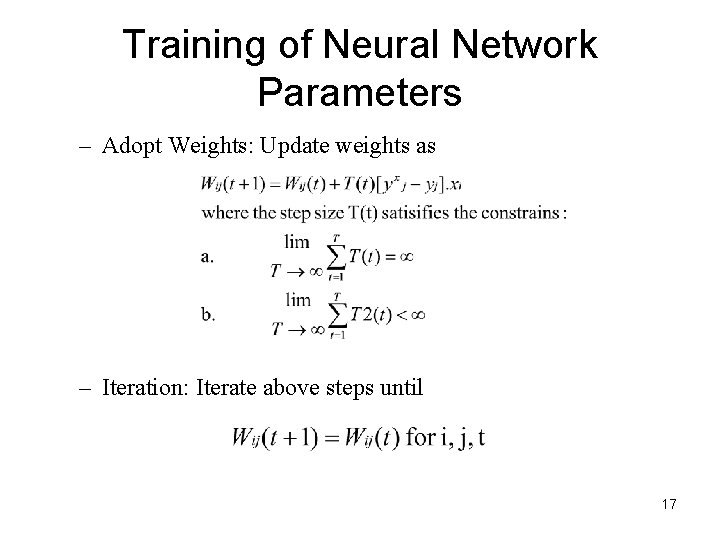

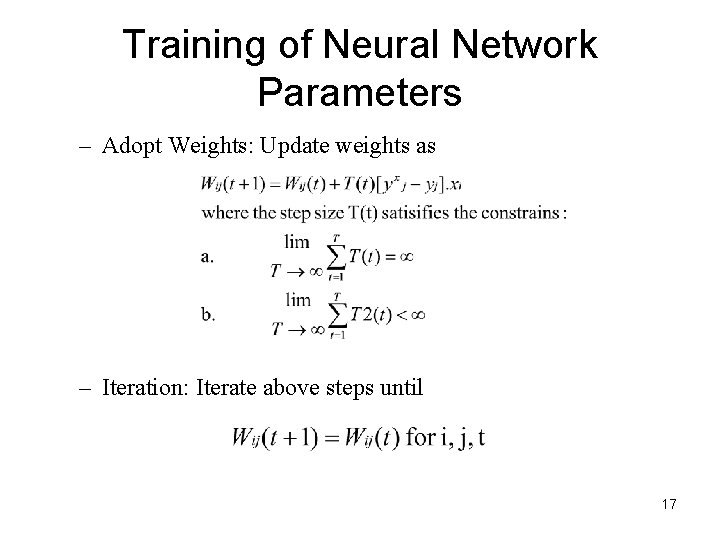

Training of Neural Network Parameters

- Adapt weights: update the weights.
- Iteration: iterate the above steps until the termination condition is met.
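The steps of the procedure can be sketched as a short training loop. The update and stopping equations did not survive in the slide text, so this sketch substitutes the classic perceptron learning rule (change each weight in proportion to the output error) and stops after an error-free pass over the data; both are assumptions. The step size `eta`, the hard-limiter convention, the function names, and the AND/OR training data are also illustrative.

```python
import random

def hard_limiter(a):
    return 1 if a >= 0 else -1

def predict(x, w, phi):
    """Output j is the hard-limited weighted sum of the inputs minus threshold phi[j]."""
    return [
        hard_limiter(sum(w[i][j] * x[i] for i in range(len(x))) - phi[j])
        for j in range(len(phi))
    ]

def train(samples, n_in, n_out, eta=0.1, max_epochs=1000, seed=0):
    rng = random.Random(seed)
    # Initialization: small random weights and thresholds.
    w = [[rng.uniform(-0.05, 0.05) for _ in range(n_out)] for _ in range(n_in)]
    phi = [rng.uniform(-0.05, 0.05) for _ in range(n_out)]
    for _ in range(max_epochs):
        errors = 0
        for x, desired in samples:            # acquire input and desired output
            y = predict(x, w, phi)            # calculate output
            for j in range(n_out):
                delta = desired[j] - y[j]
                if delta != 0:                # adapt weights only on a mistake
                    errors += 1
                    for i in range(n_in):
                        w[i][j] += eta * delta * x[i]
                    phi[j] -= eta * delta
        if errors == 0:                       # assumed stopping rule: error-free pass
            break
    return w, phi

# Two outputs learned at once: logical AND and logical OR, with +1/-1 targets.
samples = [
    ([0, 0], [-1, -1]),
    ([0, 1], [-1, 1]),
    ([1, 0], [-1, 1]),
    ([1, 1], [1, 1]),
]
w, phi = train(samples, n_in=2, n_out=2)
```

Both target functions are linearly separable, which is the condition under which this rule is guaranteed to converge.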

Advantages of Neural Networks

- They can readily implement a massive degree of parallel computation.
- They are less sensitive to noise or defects within the structure.
- They are robust.
- The connection weights of the network need not be fixed; they can be adapted in real time to improve performance.
- Because of the nonlinearity in each computational element, a sufficiently large neural network can approximate any nonlinearity.

Neural Network Structures For Speech Recognition

- Conventional artificial neural networks are structured to deal with static patterns.
- Speech is dynamic in nature.
- A time delay neural network (TDNN) is therefore used.
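The slide does not spell out the TDNN structure. A common reading, assumed here, is that each unit sees the current frame together with a fixed number of delayed frames, and that the same weights are applied at every time position. The sketch below shows a single such unit; the function name, weights, threshold, and feature frames are hypothetical.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def time_delay_unit(frames, weights, phi):
    """Apply one unit to every window of len(weights) consecutive frames.

    weights[d] holds the weights for the frame delayed by d steps.
    """
    n_delays = len(weights)
    outputs = []
    for t in range(n_delays - 1, len(frames)):
        a = 0.0
        for d in range(n_delays):
            a += sum(w * x for w, x in zip(weights[d], frames[t - d]))
        outputs.append(sigmoid(a - phi))
    return outputs

# Six frames of two features each (illustrative values).
frames = [[0.1, 0.9], [0.2, 0.8], [0.7, 0.3], [0.9, 0.1], [0.4, 0.6], [0.0, 1.0]]
weights = [[0.5, -0.5], [0.3, -0.3], [0.1, -0.1]]  # current frame plus two delays
phi = 0.0

out = time_delay_unit(frames, weights, phi)
shifted = time_delay_unit(frames[1:], weights, phi)
```

Because the weights are shared across time, shifting the input by one frame shifts the output by one frame, which is what makes this arrangement suited to patterns that can occur at any time in the utterance.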

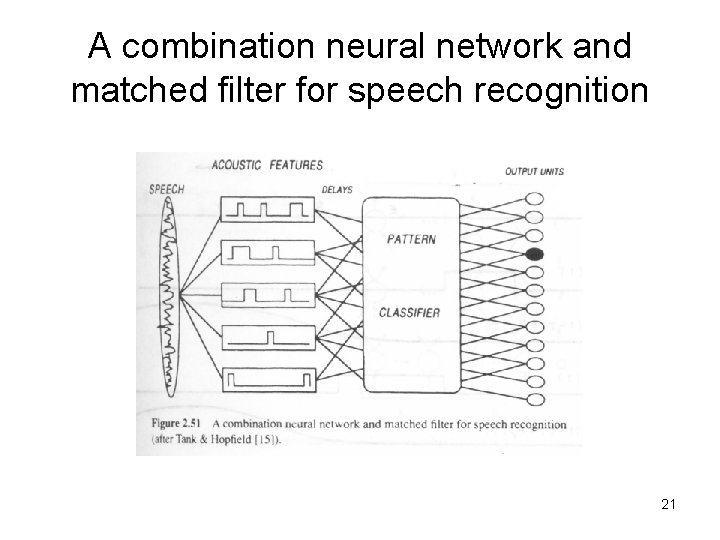

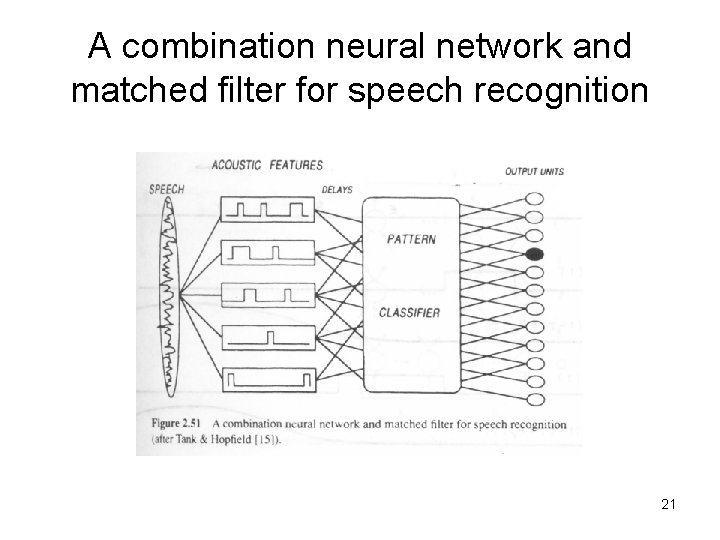

A combination neural network and matched filter for speech recognition

- The acoustic features of the speech are estimated via conventional neural network architectures.
- The pattern classifier takes the detected acoustic feature vectors (delayed appropriately), convolves them with filters “matched” to the acoustic features, and sums the result over time.
- At the appropriate time (at the end of the speech), the output units indicate the presence of the speech.
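The matched-filter stage can be sketched in simplified form. The sketch assumes that the filter output is read only at the final frame, in which case convolving with a matched filter reduces to correlating each feature track with a stored template and summing over time. The word labels, templates, and detector outputs below are hypothetical, as is the function name.

```python
def matched_filter_score(features, template):
    """Correlate each feature track with its template and sum over features and time."""
    return sum(
        f * h
        for feat_track, filt in zip(features, template)
        for f, h in zip(feat_track, filt)
    )

# One template per candidate word: two feature tracks over four frames (illustrative).
templates = {
    "yes": [[1, 1, 0, 0], [0, 0, 1, 1]],
    "no": [[0, 0, 1, 1], [1, 1, 0, 0]],
}

# Feature-detector outputs for an utterance, e.g. from a neural network front end.
observed = [[0.9, 0.8, 0.1, 0.2], [0.1, 0.0, 0.7, 0.9]]

scores = {word: matched_filter_score(observed, t) for word, t in templates.items()}
best = max(scores, key=scores.get)
```

The output unit with the largest accumulated score at the end of the utterance indicates the recognized item.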