
Neural network and deep learning

Chunyan Xu, Nanjing University of Science and Technology, 2019-06-17

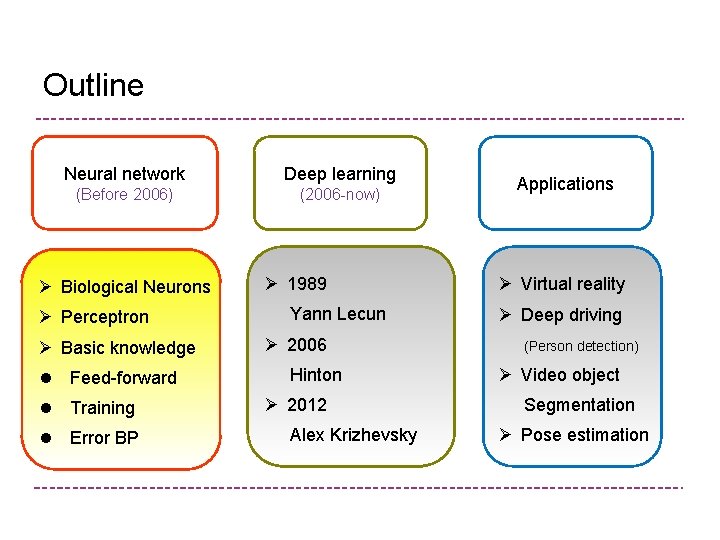

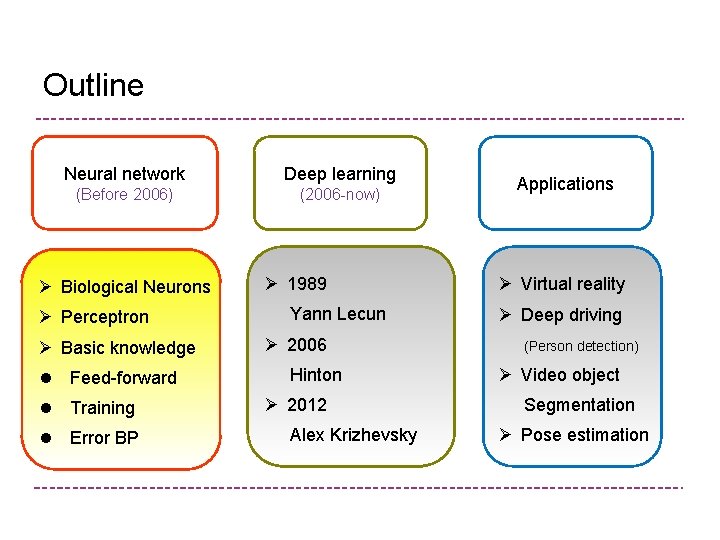

Outline

- Neural network (before 2006)
  - Biological neurons
  - Perceptron
  - Basic knowledge: feed-forward networks, training, error back-propagation
- Deep learning (2006-now)
  - 1989: Yann LeCun
  - 2006: Hinton
  - 2012: Alex Krizhevsky
- Applications
  - Virtual reality
  - Deep driving
  - Person detection
  - Video object segmentation
  - Pose estimation

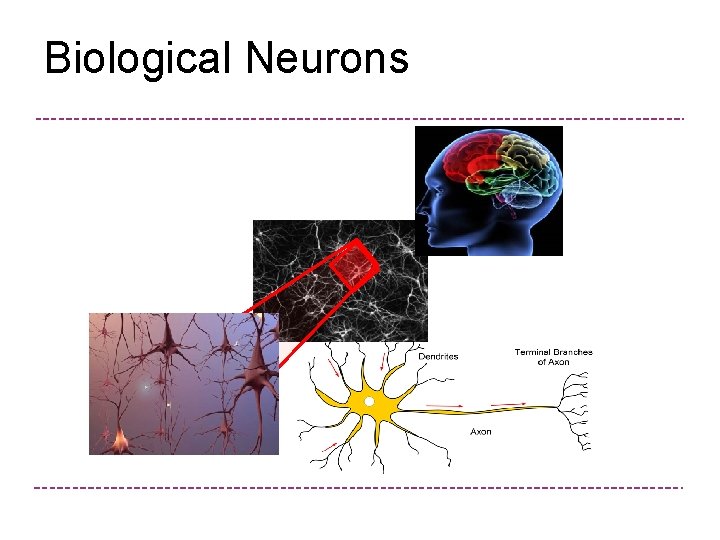

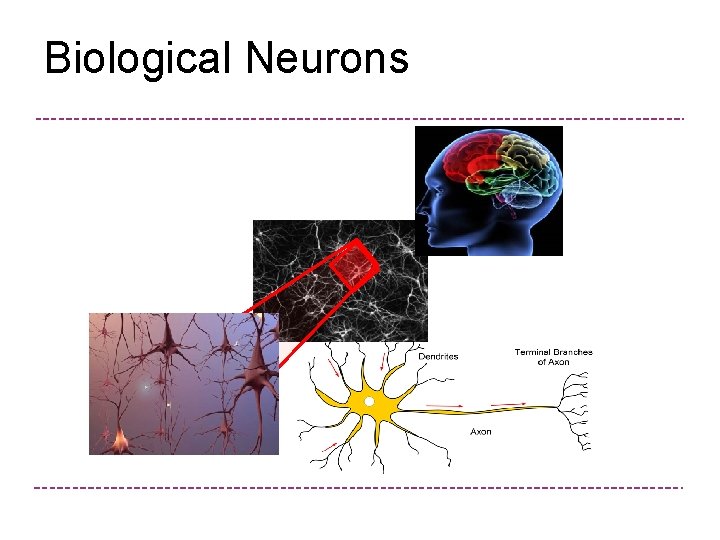

Biological Neurons

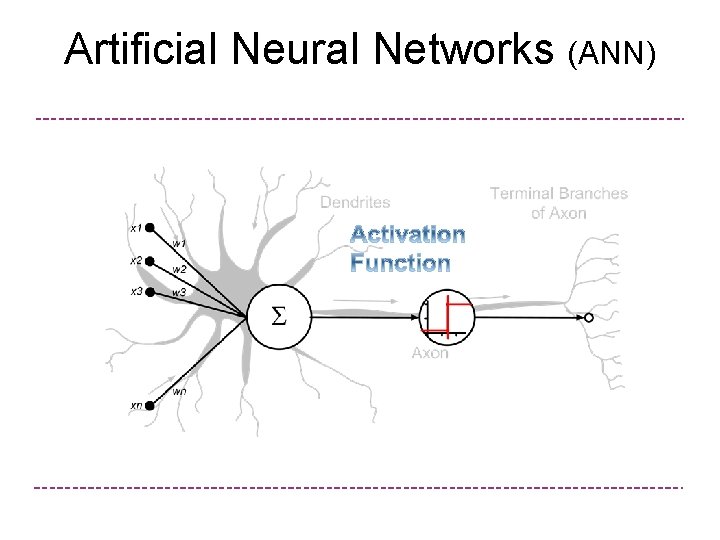

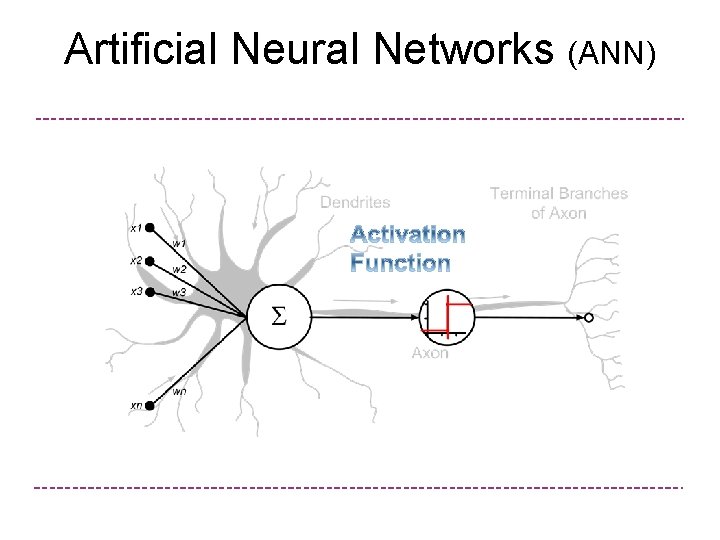

Artificial Neural Networks (ANN)

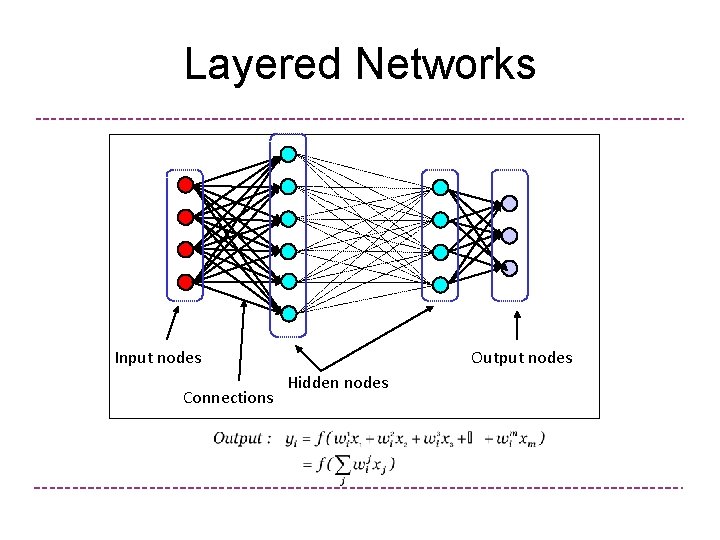

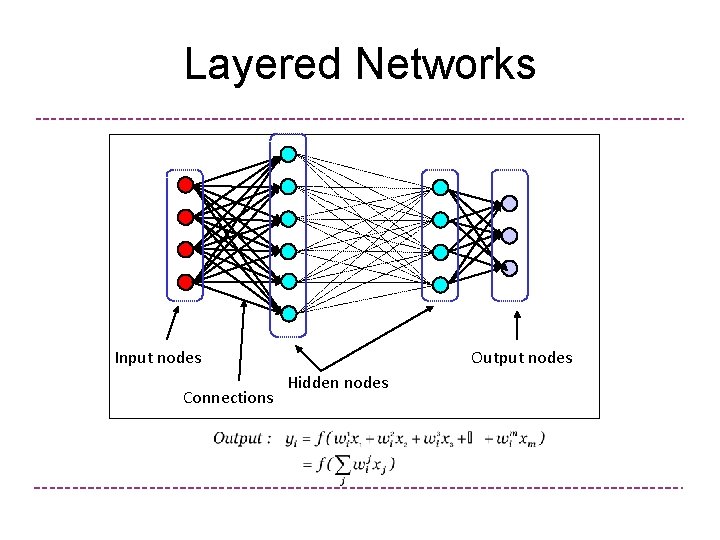

Layered Networks

(Figure: input nodes, hidden nodes, and output nodes joined by connections.)

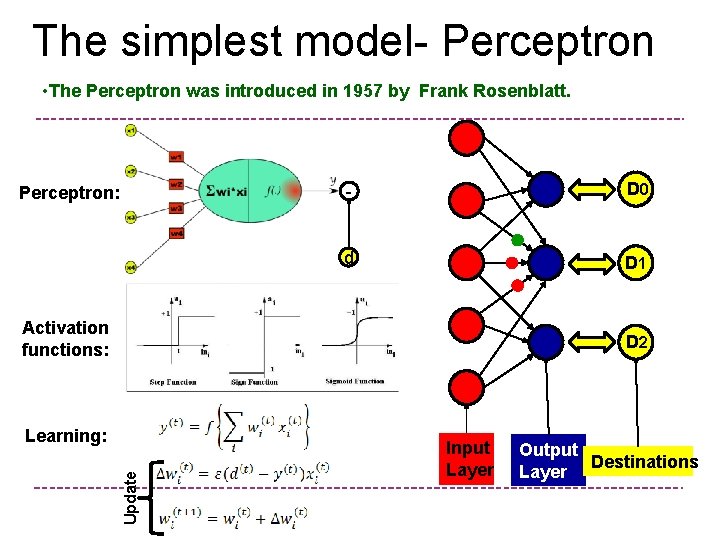

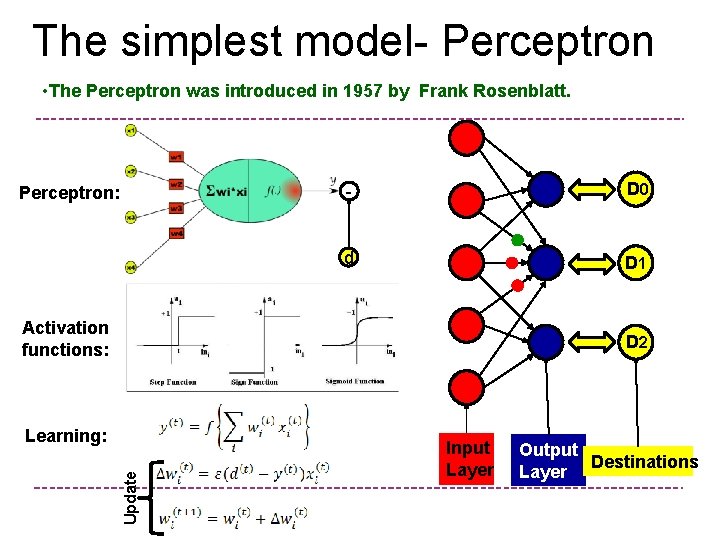

The simplest model: Perceptron

- The Perceptron was introduced in 1957 by Frank Rosenblatt.

(Figure: a perceptron with an input layer feeding an output layer, together with its activation function and the update rule used for learning.)

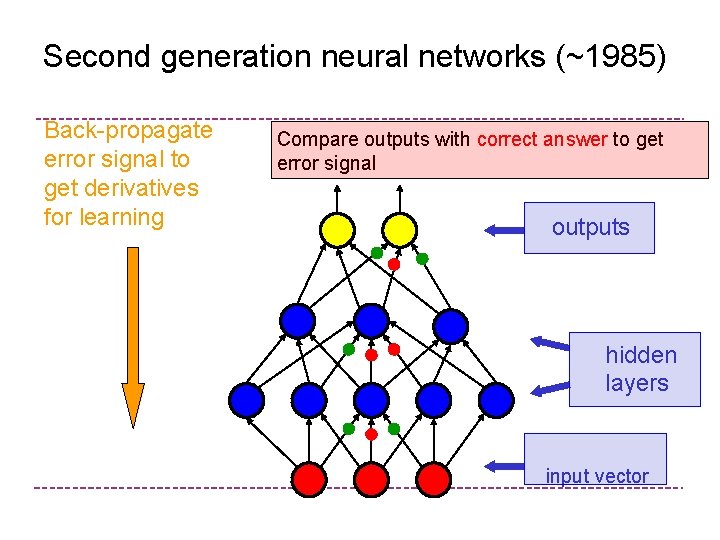

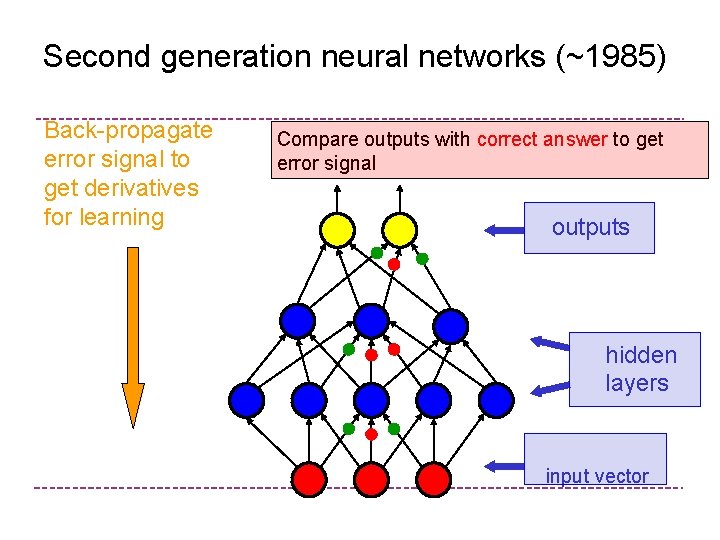
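The slide's own formulas are only in the images, so here is a minimal sketch of perceptron learning under an assumed setup: a step activation and the classic error-driven update w ← w + η(t − y)x, with a bias b updated the same way. The names `step`, `predict`, and `train_perceptron` are illustrative, not from the slides. The example learns logical AND, which is linearly separable.

```python
def step(a):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    return 1 if a > 0 else 0

def predict(w, b, x):
    """Weighted sum of the inputs plus a bias, passed through the step activation."""
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_perceptron(samples, n_inputs, eta=1.0, epochs=20):
    """Perceptron rule (assumed form): w <- w + eta * (t - y) * x, one sample at a time."""
    w = [0.0] * n_inputs
    b = 0.0
    for _ in range(epochs):
        for x, t in samples:
            err = t - predict(w, b, x)   # 0 when correct, +1 or -1 when wrong
            w = [wi + eta * err * xi for wi, xi in zip(w, x)]
            b += eta * err
    return w, b

# Logical AND is linearly separable, so the perceptron can learn it.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data, n_inputs=2)
print([predict(w, b, x) for x, _ in and_data])  # [0, 0, 0, 1]
```

With η = 1 and 0/1 inputs every update is exact integer arithmetic. On data that is not linearly separable (XOR, for example) the loop never settles, which is one motivation for the hidden layers of later networks.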

Second-generation neural networks (~1985)

- Compare the outputs with the correct answer to get an error signal.
- Back-propagate the error signal to get derivatives for learning.

(Figure: input vector → hidden layers → outputs.)

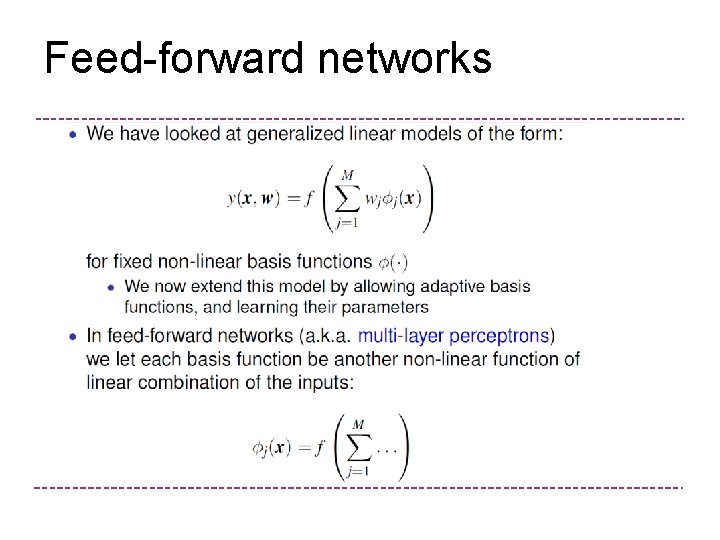

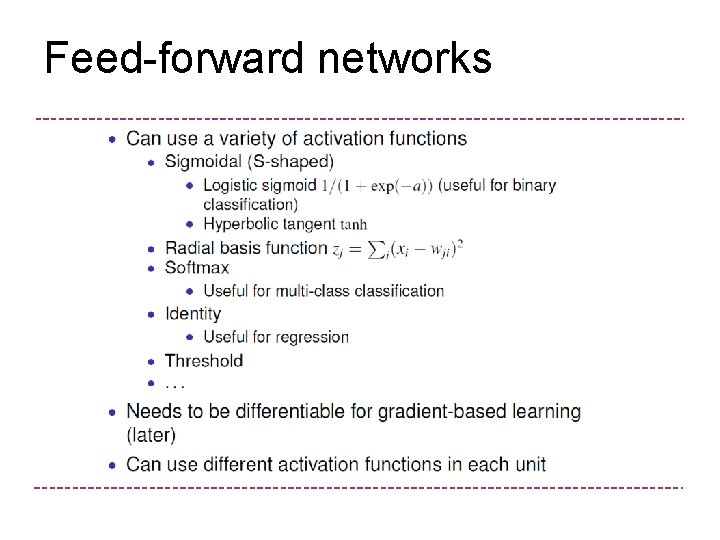

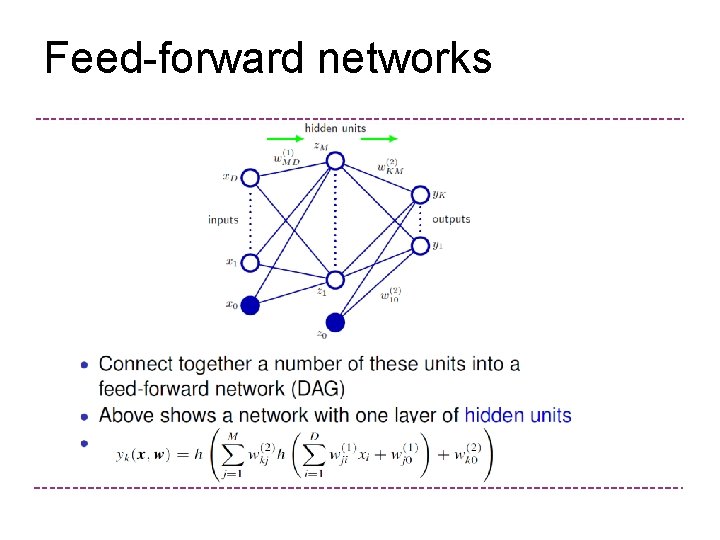

Some basic knowledge

- Feed-forward networks
- Network training
- Error back-propagation

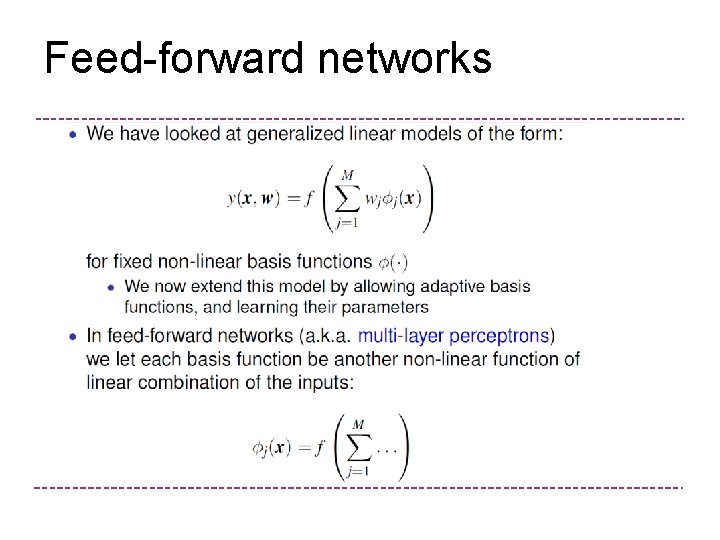

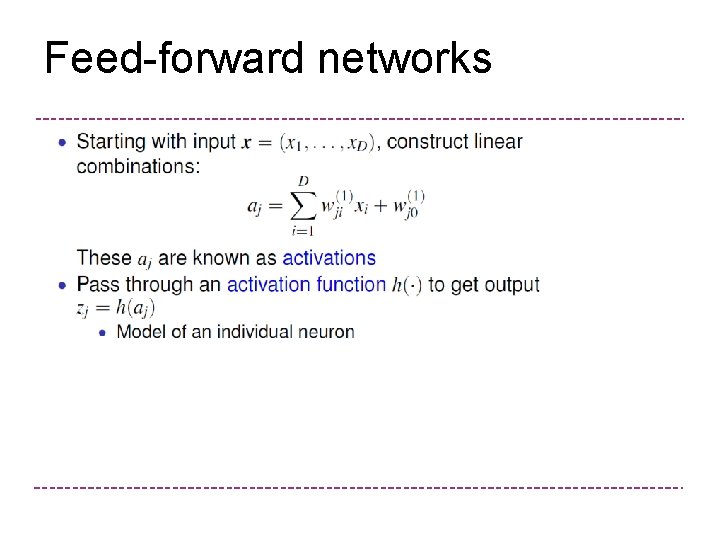

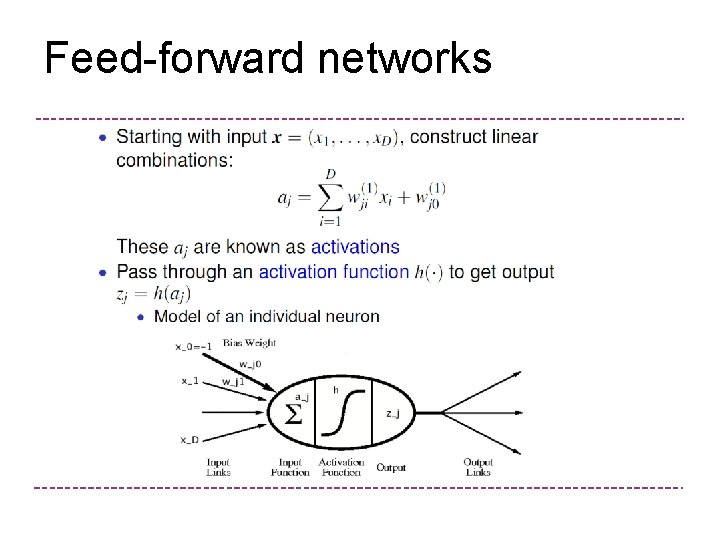

Feed-forward networks

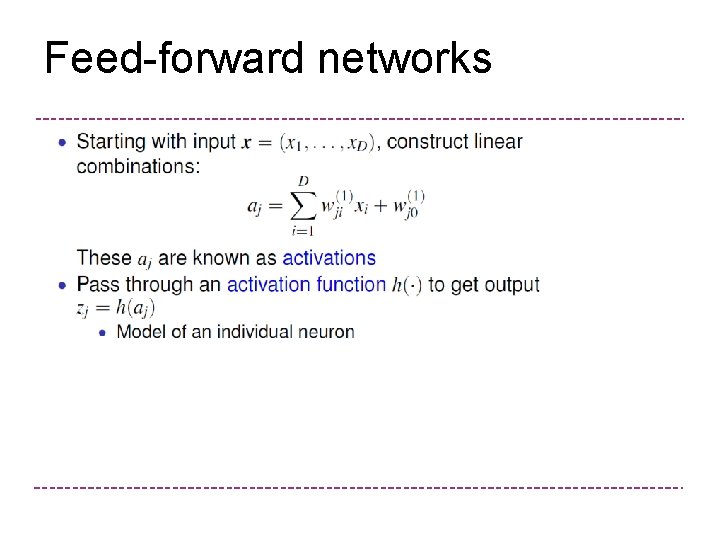

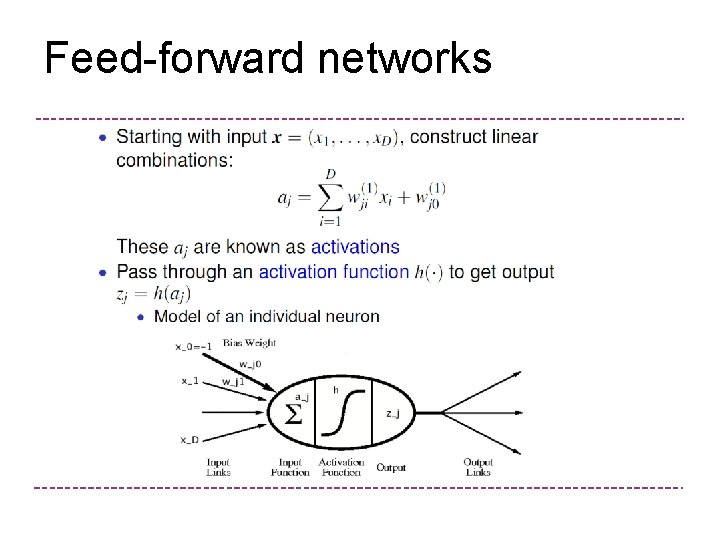

Feed-forward networks

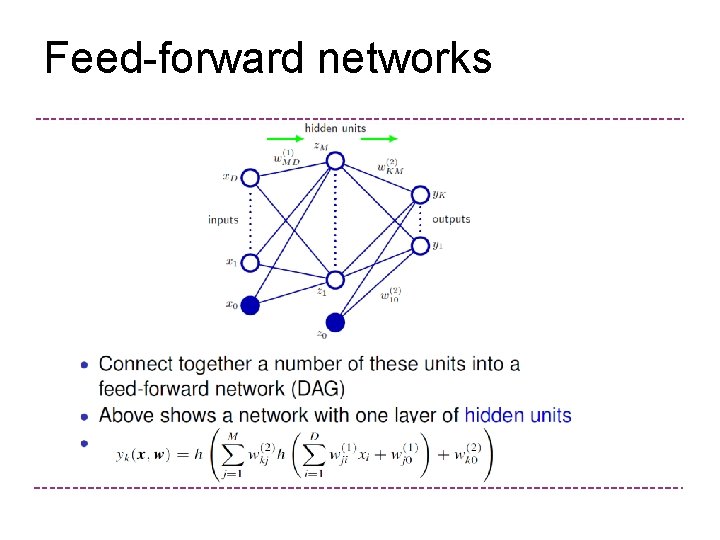

Feed-forward networks

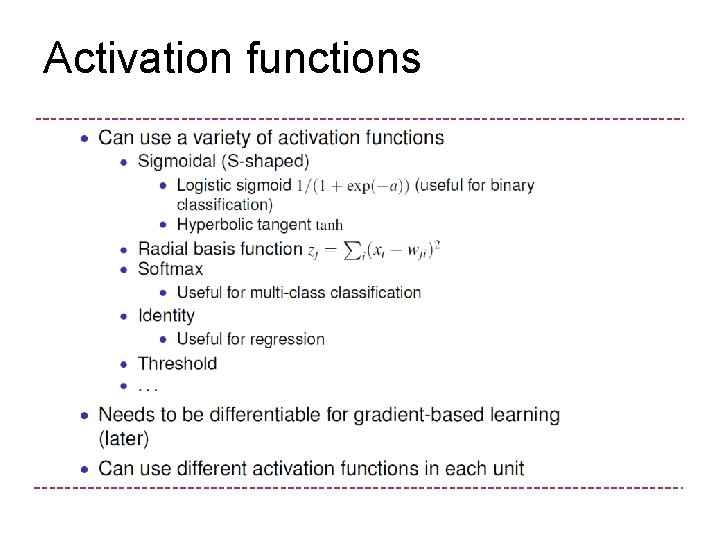

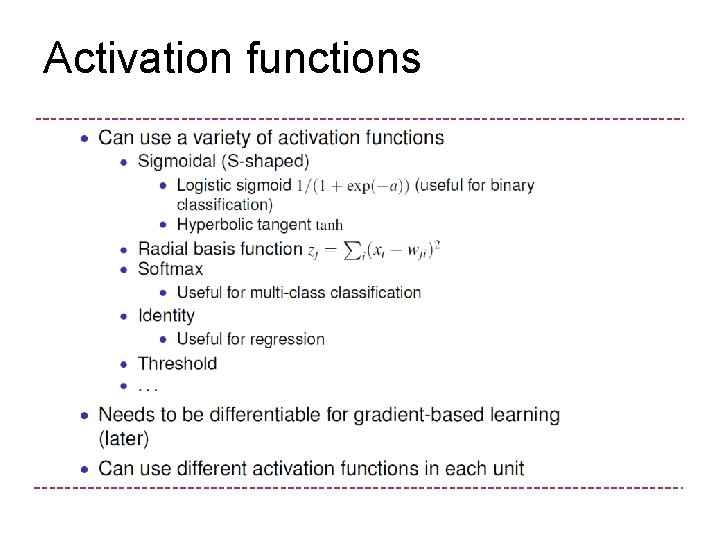
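The forward computation of a feed-forward network can be sketched in plain Python. This assumes a two-layer network with tanh hidden units and linear outputs, a common textbook setup; the slides' own figures are not recoverable. `layer` and `forward` are hypothetical helper names and the weights are made-up values.

```python
import math

def layer(weights, biases, inputs, activation):
    """One fully connected layer: unit j outputs activation(sum_i w_ji * x_i + b_j)."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def identity(a):
    return a

def forward(x, W1, b1, W2, b2):
    """Two-layer feed-forward pass: tanh hidden units, then linear output units."""
    z = layer(W1, b1, x, math.tanh)    # hidden activations z_j = tanh(a_j)
    return layer(W2, b2, z, identity)  # outputs y_k = sum_j w_kj z_j + b_k

# Hypothetical weights for a 2-input, 3-hidden, 1-output network.
W1 = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
b1 = [0.0, 0.1, -0.1]
W2 = [[1.0, -1.0, 0.5]]
b2 = [0.2]
print(forward([1.0, 2.0], W1, b1, W2, b2))
```

Information flows strictly from inputs to outputs, with no cycles; each layer only needs the previous layer's outputs.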

Activation functions

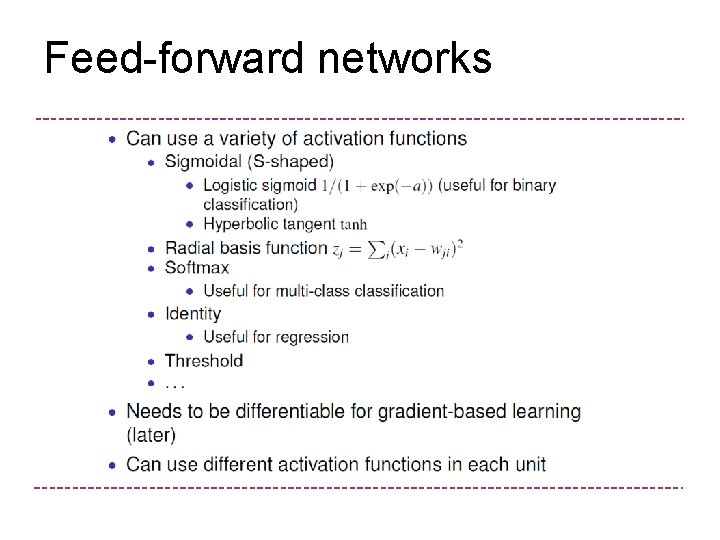
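The specific functions on this slide are in the image; as an assumption, here are two common choices, the logistic sigmoid and tanh, with the derivatives that back-propagation needs. The sigmoid is written in a numerically stable form so that very negative inputs do not overflow `math.exp`.

```python
import math

def logistic_sigmoid(a):
    """sigma(a) = 1 / (1 + exp(-a)), squashing any real a into (0, 1)."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)  # avoids overflow of exp(-a) when a is very negative
    return e / (1.0 + e)

def sigmoid_derivative(a):
    """d sigma / da = sigma(a) * (1 - sigma(a))."""
    s = logistic_sigmoid(a)
    return s * (1.0 - s)

def tanh_derivative(a):
    """d tanh / da = 1 - tanh(a)^2."""
    return 1.0 - math.tanh(a) ** 2

# tanh is a rescaled, shifted sigmoid: tanh(a) = 2 * sigma(2a) - 1.
for a in (-2.0, 0.0, 2.0):
    print(a, logistic_sigmoid(a), math.tanh(a), 2 * logistic_sigmoid(2 * a) - 1)
```

Both functions are smooth and monotonic, so gradients exist everywhere; the sigmoid suits outputs read as probabilities, while tanh is zero-centred.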

Feed-forward networks

Feed-forward networks

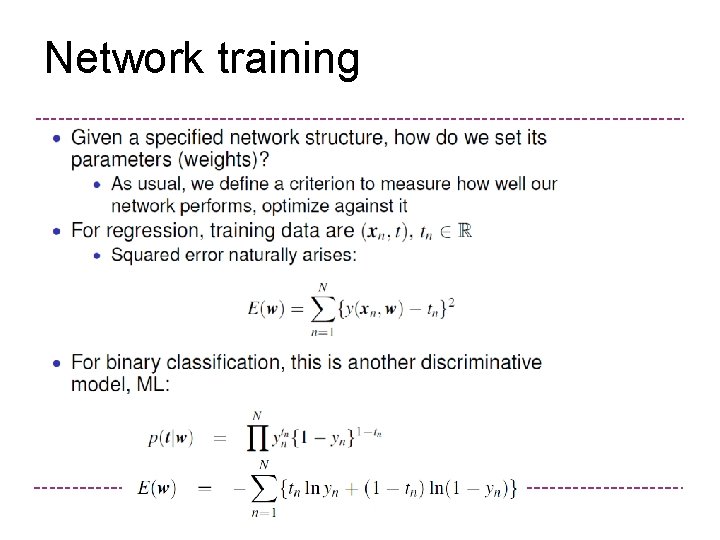

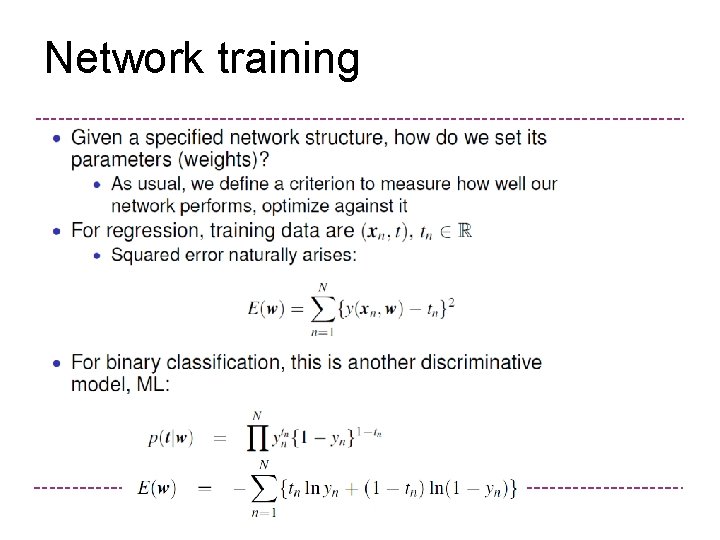

Network training

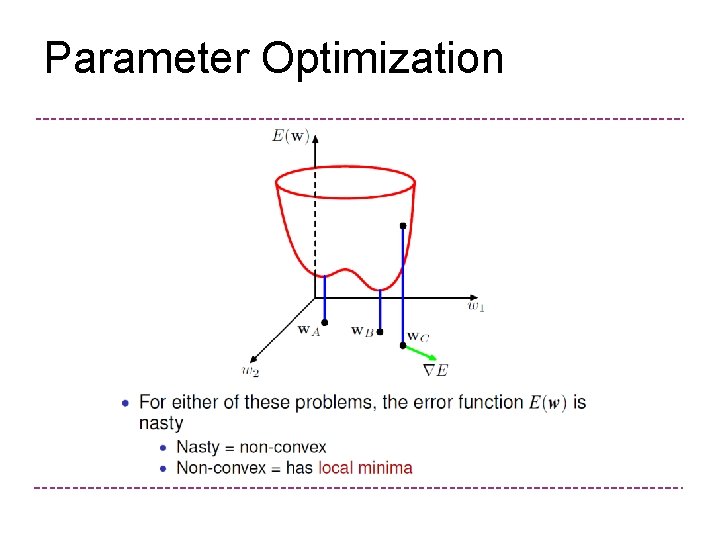

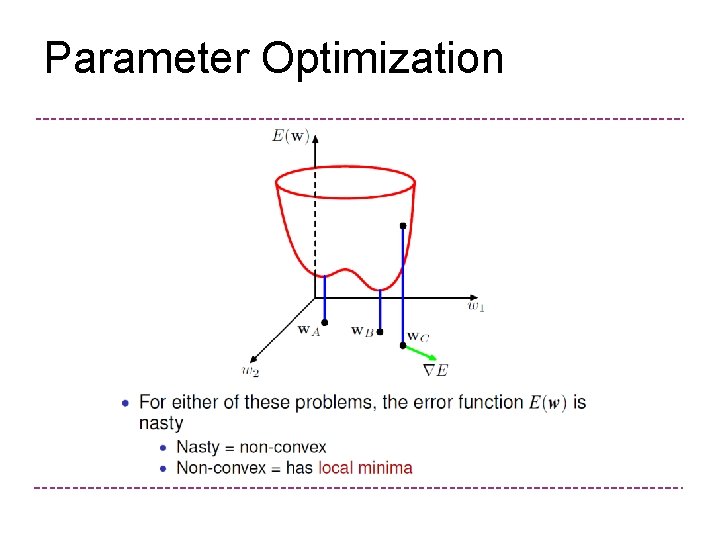
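Training means choosing weights that minimize an error function E(w) over the training set. The slide's exact choice is not recoverable; the sketch below assumes two standard ones: the sum-of-squares error for regression and the cross-entropy error for binary classification with targets t ∈ {0, 1}. The function names are illustrative.

```python
import math

def sum_of_squares_error(predictions, targets):
    """E(w) = 1/2 * sum_n sum_k (y_nk - t_nk)^2 over all training examples n and outputs k."""
    return 0.5 * sum((y - t) ** 2
                     for y_n, t_n in zip(predictions, targets)
                     for y, t in zip(y_n, t_n))

def cross_entropy_error(probs, targets):
    """E(w) = -sum_n [t_n ln y_n + (1 - t_n) ln(1 - y_n)].
    Each predicted probability y_n must lie strictly inside (0, 1)."""
    return -sum(t * math.log(y) + (1 - t) * math.log(1 - y)
                for y, t in zip(probs, targets))

print(sum_of_squares_error([[1.0], [2.0]], [[0.0], [2.0]]))  # 0.5
print(cross_entropy_error([0.9, 0.2], [1, 0]))
```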

Parameter Optimization

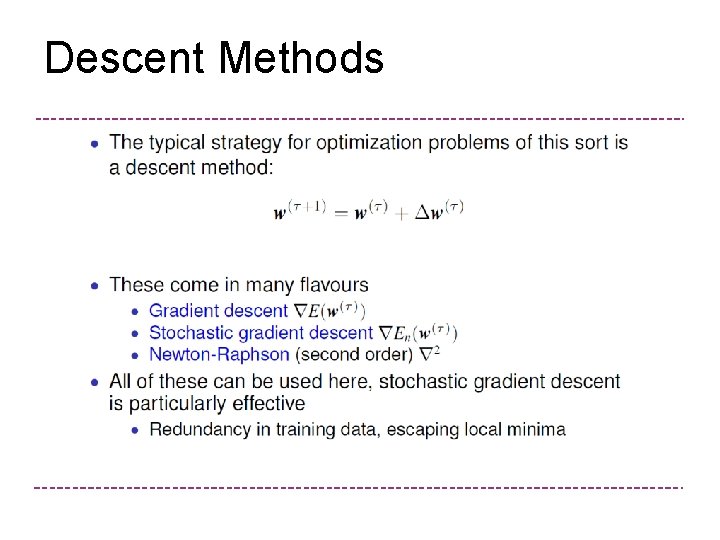

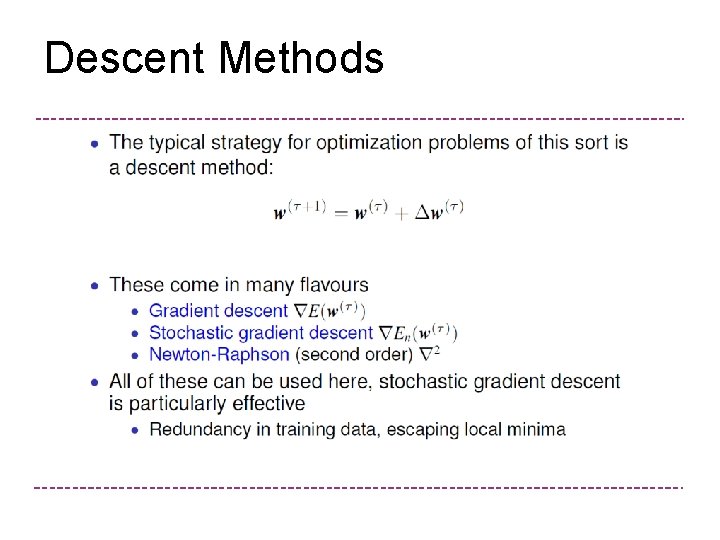

Descent Methods

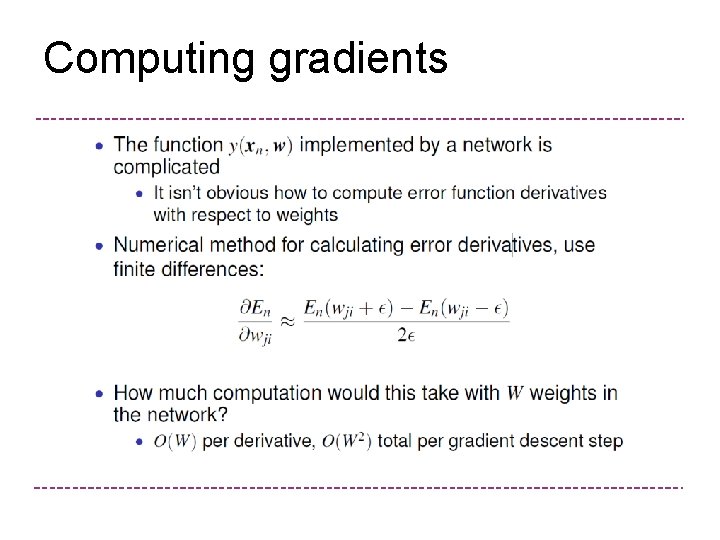

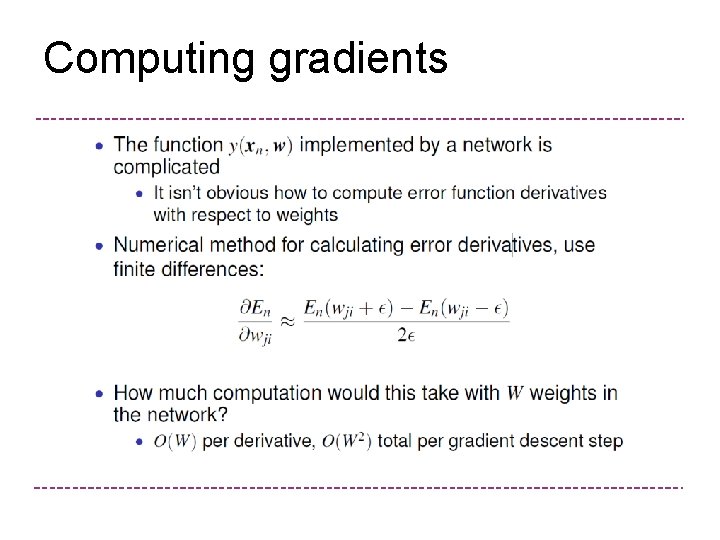
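The simplest descent method moves the weights a small step against the gradient: w ← w − η ∇E(w). The sketch below assumes a fixed learning rate η and a fixed number of batch steps, and uses a hypothetical quadratic error E(w) = (w₀ − 3)² + 2(w₁ + 1)² whose minimum is known to be at (3, −1).

```python
def gradient_descent(grad, w0, eta=0.1, steps=100):
    """Batch gradient descent: w <- w - eta * grad E(w), repeated `steps` times."""
    w = list(w0)
    for _ in range(steps):
        g = grad(w)
        w = [wi - eta * gi for wi, gi in zip(w, g)]
    return w

# Gradient of the hypothetical error E(w) = (w0 - 3)^2 + 2 * (w1 + 1)^2.
def grad_E(w):
    return [2 * (w[0] - 3), 4 * (w[1] + 1)]

w_star = gradient_descent(grad_E, [0.0, 0.0])
print(w_star)  # very close to [3, -1]
```

Here each step shrinks the distance to the minimum by a constant factor (0.8 and 0.6 per coordinate); a learning rate that is too large (η > 0.5 for this error) would make the second coordinate diverge instead.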

Computing gradients

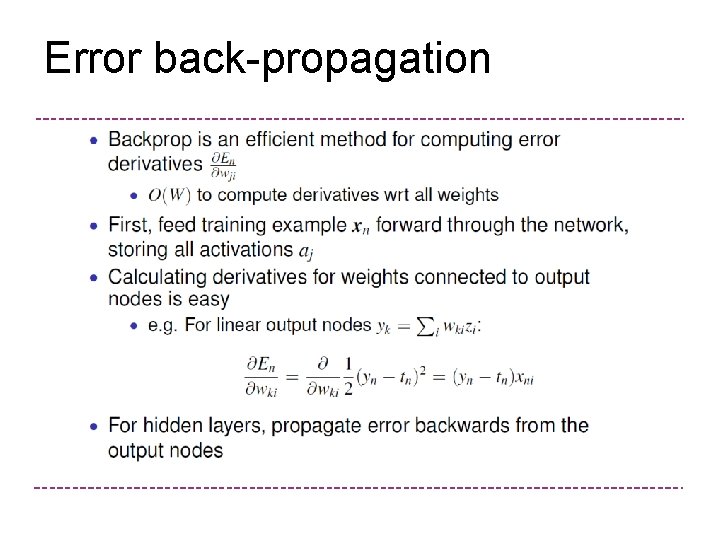

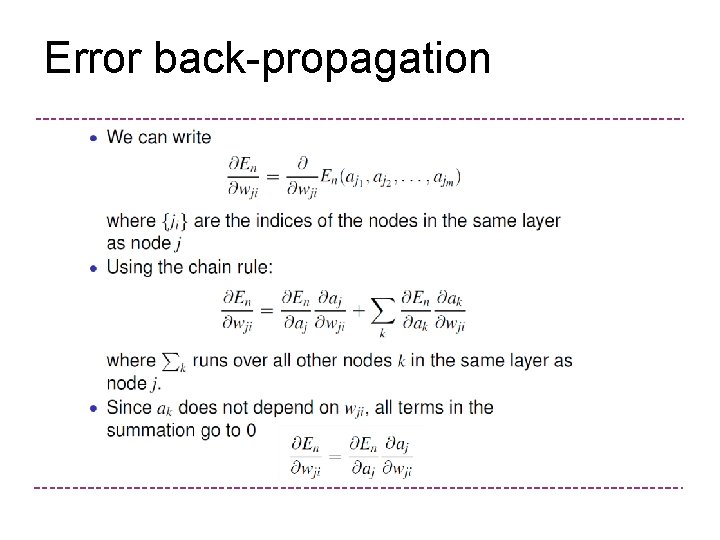

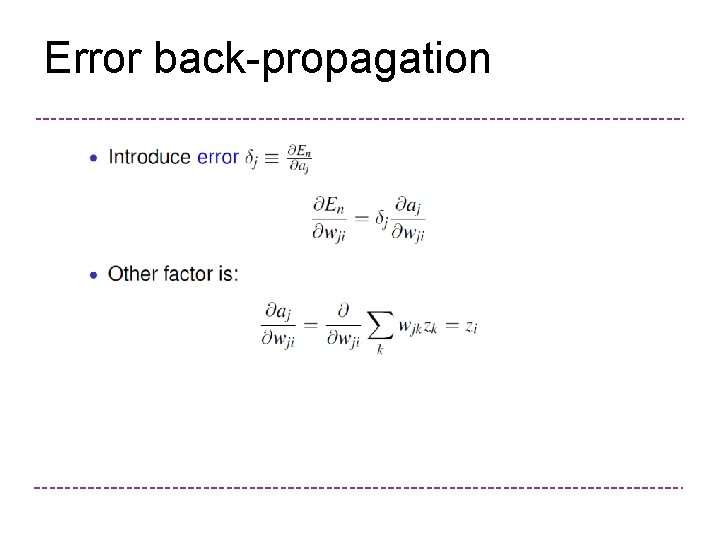

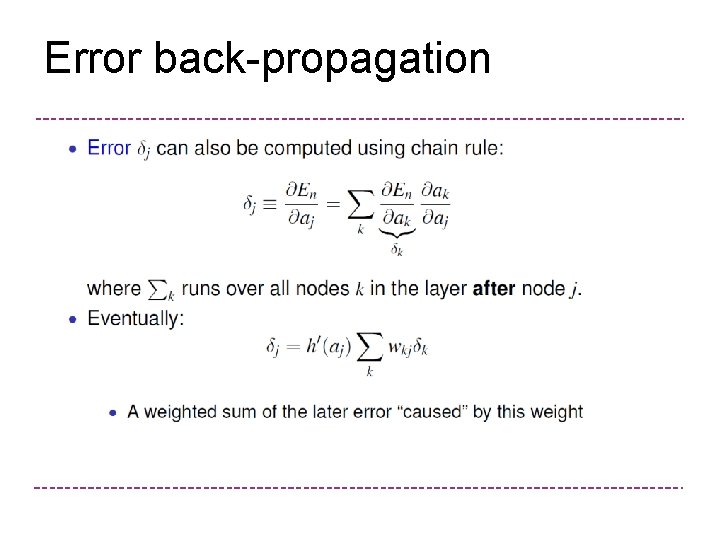

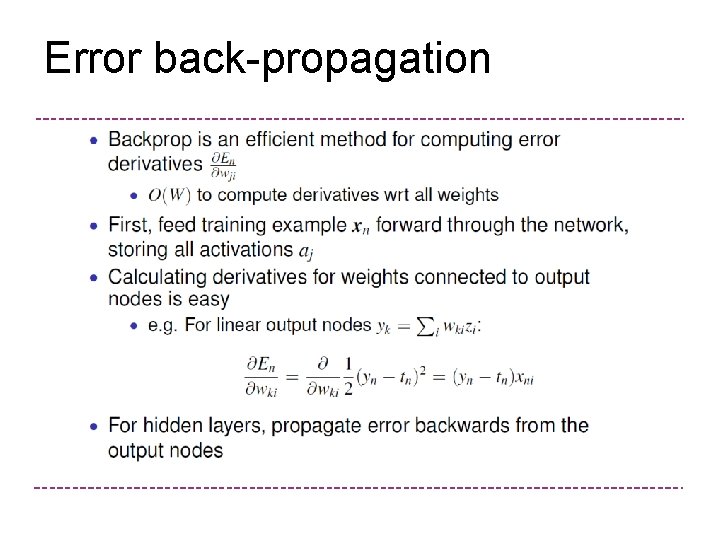
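One simple, if slow, way to compute gradients is by finite differences. The sketch below assumes central differences, ∂E/∂wᵢ ≈ (E(w + εeᵢ) − E(w − εeᵢ)) / 2ε; it costs two evaluations of E per weight, so it is mainly useful for checking an analytic or back-propagated gradient. `numerical_gradient` is an illustrative name, and E is the same hypothetical quadratic used for gradient descent.

```python
def numerical_gradient(E, w, eps=1e-6):
    """Central-difference estimate of dE/dw_i for every weight w_i."""
    grad = []
    for i in range(len(w)):
        w_plus = list(w)
        w_plus[i] += eps
        w_minus = list(w)
        w_minus[i] -= eps
        grad.append((E(w_plus) - E(w_minus)) / (2 * eps))
    return grad

def E(w):
    """Hypothetical error with analytic gradient [2 (w0 - 3), 4 (w1 + 1)]."""
    return (w[0] - 3) ** 2 + 2 * (w[1] + 1) ** 2

print(numerical_gradient(E, [0.0, 0.0]))  # approximately [-6, 4]
```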

Error back-propagation

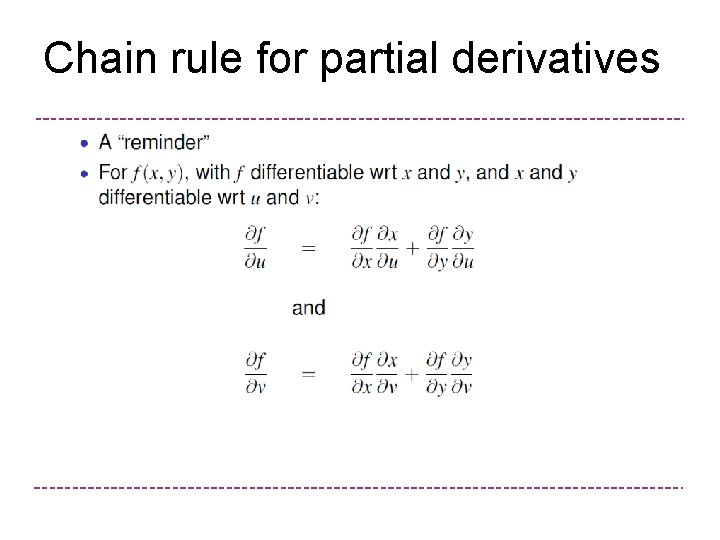

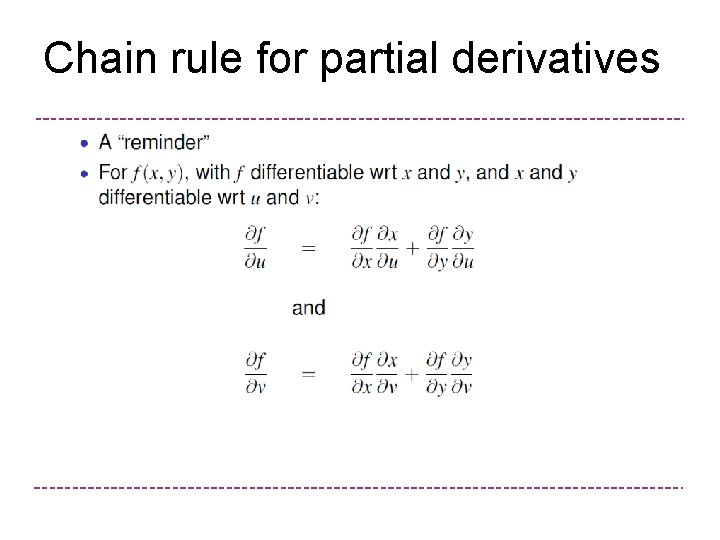

Chain rule for partial derivatives

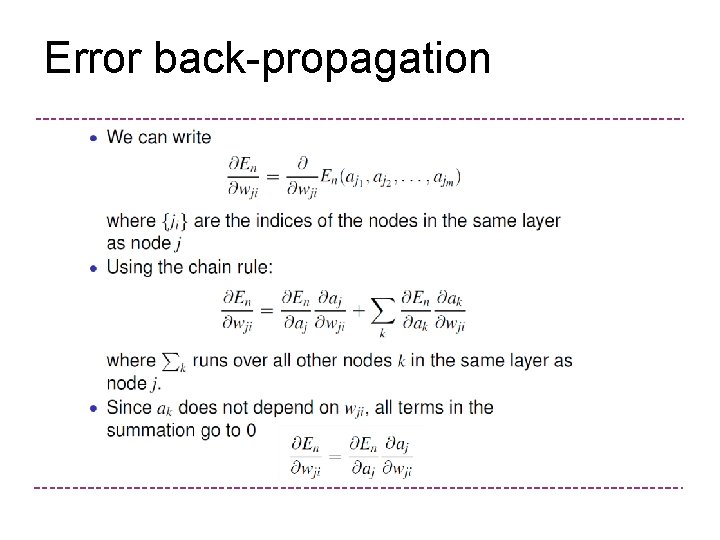
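A worked form of the chain rule for a network, in a commonly used notation that is assumed here because the slide's own symbols are in the image: unit j computes aⱼ = Σᵢ wⱼᵢ zᵢ and zⱼ = h(aⱼ), and Eₙ is the error on training example n. Applying the chain rule once gives the gradient for a single weight; applying it again through the units k that unit j feeds gives the back-propagation formula for δⱼ.

$$
\frac{\partial E_n}{\partial w_{ji}}
= \frac{\partial E_n}{\partial a_j}\,\frac{\partial a_j}{\partial w_{ji}}
= \delta_j\, z_i,
\qquad
\delta_j \equiv \frac{\partial E_n}{\partial a_j}
= \sum_k \frac{\partial E_n}{\partial a_k}\,\frac{\partial a_k}{\partial a_j}
= h'(a_j) \sum_k w_{kj}\, \delta_k .
$$

For linear output units with the sum-of-squares error, the output errors are simply δₖ = yₖ − tₖ, and the δ values are then passed backwards layer by layer.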

Error back-propagation

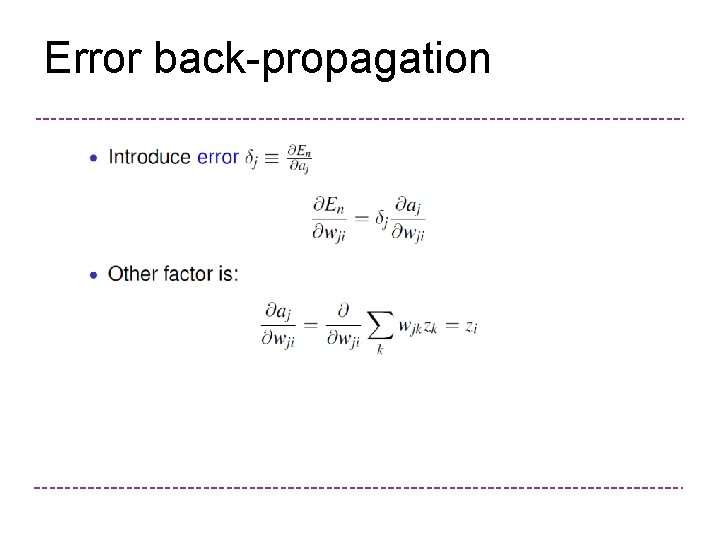

Error back-propagation

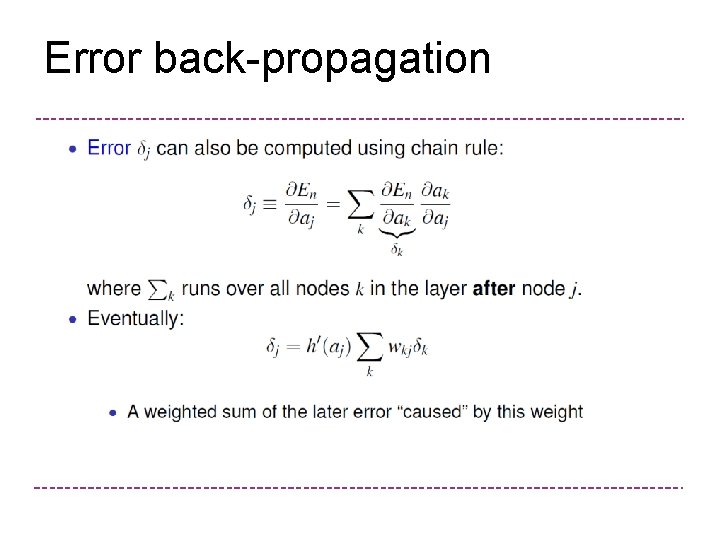

Error back-propagation

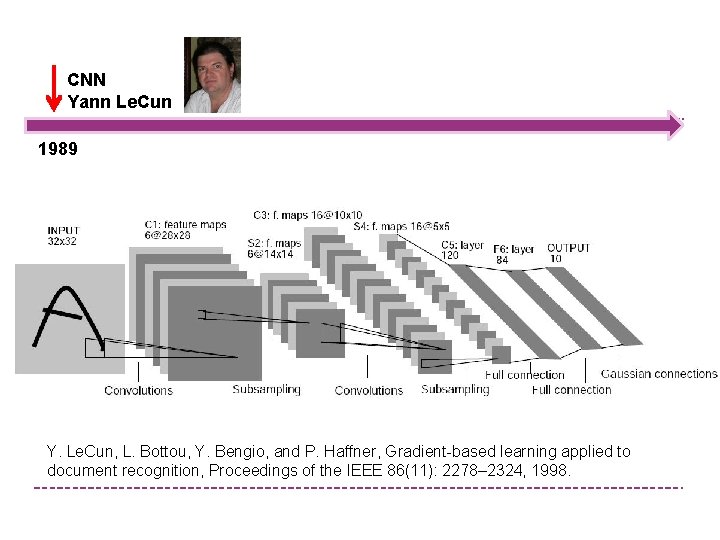

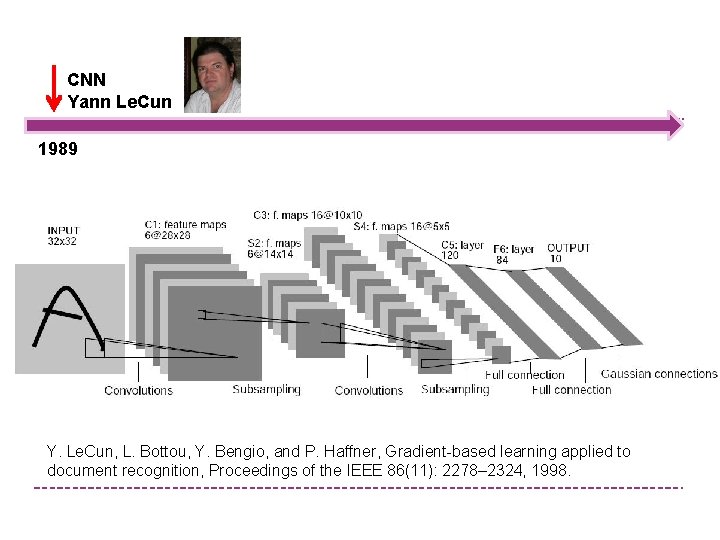
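A minimal sketch of error back-propagation for one training example, assuming a two-layer network with tanh hidden units, linear outputs, the sum-of-squares error, and no biases (to keep it short). It applies δₖ = yₖ − tₖ at the outputs, δⱼ = h′(aⱼ) Σₖ wₖⱼ δₖ at the hidden units, and ∂E/∂wⱼᵢ = δⱼ zᵢ. The function name and weights are hypothetical. The final loop checks the result against central differences.

```python
import math

def forward_backward(x, t, W1, W2):
    """Return the error E and gradients dE/dW1, dE/dW2 for one example (x, t)."""
    # Forward pass: a_j = sum_i w_ji x_i, z_j = tanh(a_j), y_k = sum_j w_kj z_j
    a = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    z = [math.tanh(aj) for aj in a]
    y = [sum(w * zj for w, zj in zip(row, z)) for row in W2]
    E = 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, t))
    # Output errors: delta_k = y_k - t_k
    delta_out = [yk - tk for yk, tk in zip(y, t)]
    # Hidden errors: delta_j = (1 - tanh(a_j)^2) * sum_k w_kj delta_k
    delta_hid = [(1 - z[j] ** 2) * sum(W2[k][j] * delta_out[k] for k in range(len(W2)))
                 for j in range(len(z))]
    # dE/dw = delta of the receiving unit * activation of the sending unit
    dW2 = [[dk * zj for zj in z] for dk in delta_out]
    dW1 = [[dj * xi for xi in x] for dj in delta_hid]
    return E, dW1, dW2

x, t = [0.5, -1.0], [0.25]
W1 = [[0.1, -0.3], [0.7, 0.2], [-0.4, 0.5]]  # hypothetical weights, 3 hidden units
W2 = [[0.6, -0.1, 0.9]]
E, dW1, dW2 = forward_backward(x, t, W1, W2)

# Gradient check: compare each dE/dW1 entry with a central-difference estimate.
eps = 1e-6
max_diff = 0.0
for j in range(len(W1)):
    for i in range(len(x)):
        Wp = [row[:] for row in W1]
        Wp[j][i] += eps
        Wm = [row[:] for row in W1]
        Wm[j][i] -= eps
        numeric = (forward_backward(x, t, Wp, W2)[0] - forward_backward(x, t, Wm, W2)[0]) / (2 * eps)
        max_diff = max(max_diff, abs(numeric - dW1[j][i]))
print(max_diff)  # should be tiny
```

One forward and one backward pass give all the gradients, whereas the finite-difference check needs two extra forward passes per weight.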

Timeline: 1989 CNN (Yann LeCun)

Y. LeCun, L. Bottou, Y. Bengio, and P. Haffner, Gradient-based learning applied to document recognition, Proceedings of the IEEE 86(11): 2278-2324, 1998.

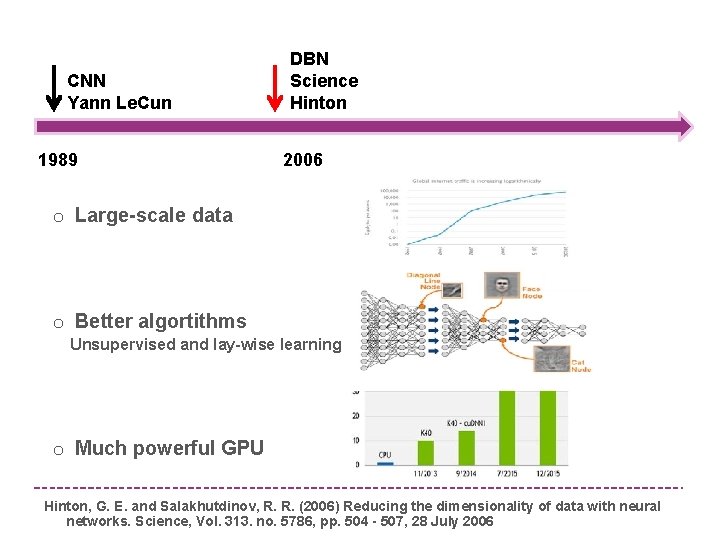

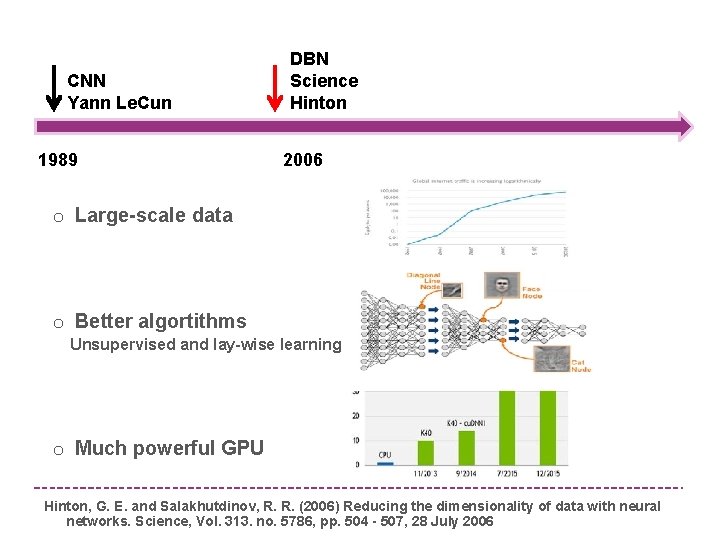

Timeline: 1989 CNN (Yann LeCun); 2006 DBN (Hinton, Science)

- Large-scale data
- Better algorithms: unsupervised and layer-wise learning
- Much more powerful GPUs

Hinton, G. E. and Salakhutdinov, R. R. (2006) Reducing the dimensionality of data with neural networks. Science, Vol. 313, no. 5786, pp. 504-507, 28 July 2006

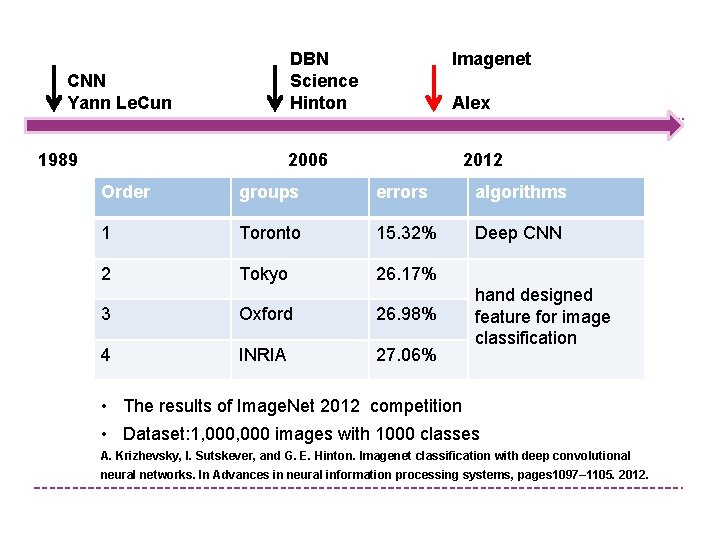

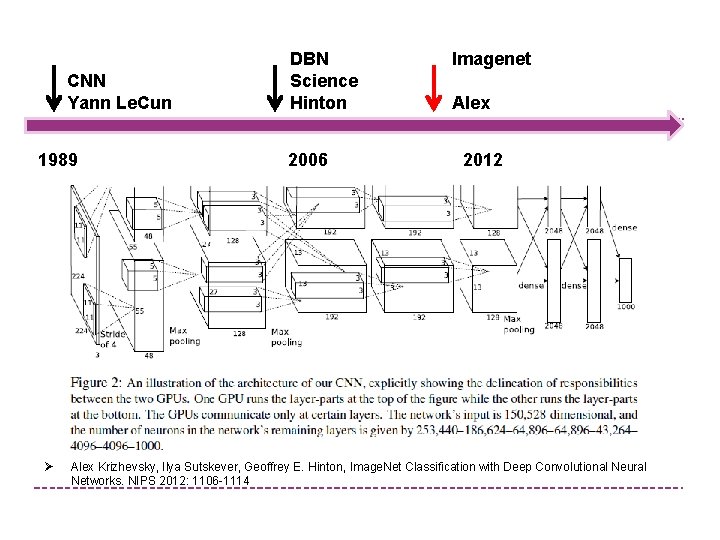

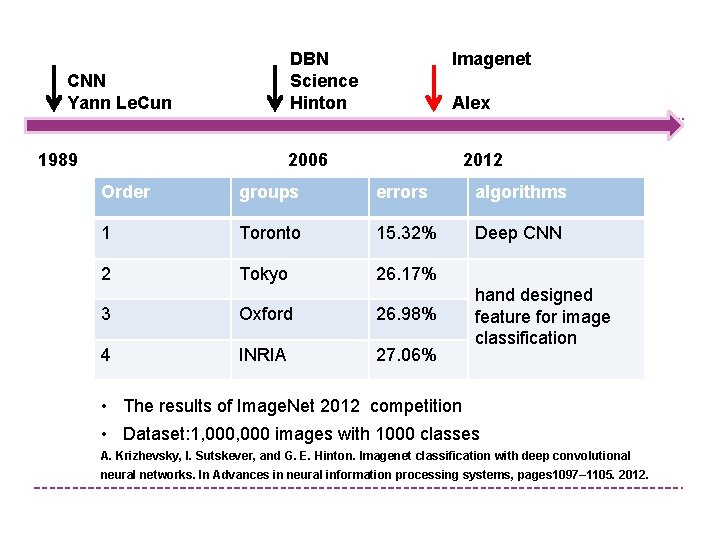

Timeline: 1989 CNN (Yann LeCun); 2006 DBN (Hinton, Science); 2012 ImageNet (Alex Krizhevsky)

- The results of the ImageNet 2012 competition (top-5 error):

| Rank | Group | Error | Algorithm |
|---|---|---|---|
| 1 | Toronto | 15.32% | Deep CNN |
| 2 | Tokyo | 26.17% | Hand-designed features |
| 3 | Oxford | 26.98% | Hand-designed features |
| 4 | INRIA | 27.06% | Hand-designed features |

- Dataset: about 1.2 million training images in 1,000 classes.

A. Krizhevsky, I. Sutskever, and G. E. Hinton. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, pages 1097-1105, 2012.

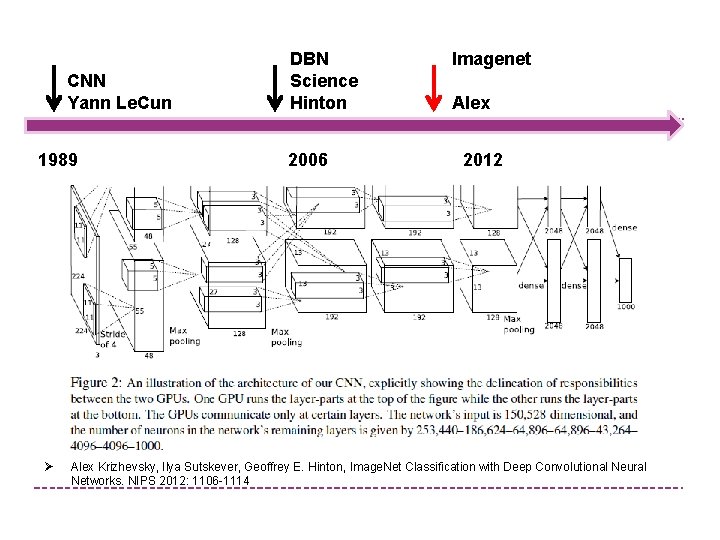

- Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton, ImageNet Classification with Deep Convolutional Neural Networks. NIPS 2012: 1106-1114

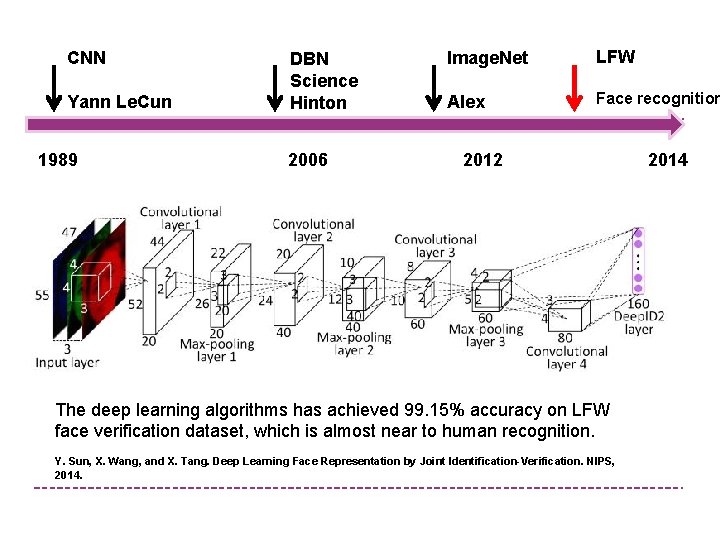

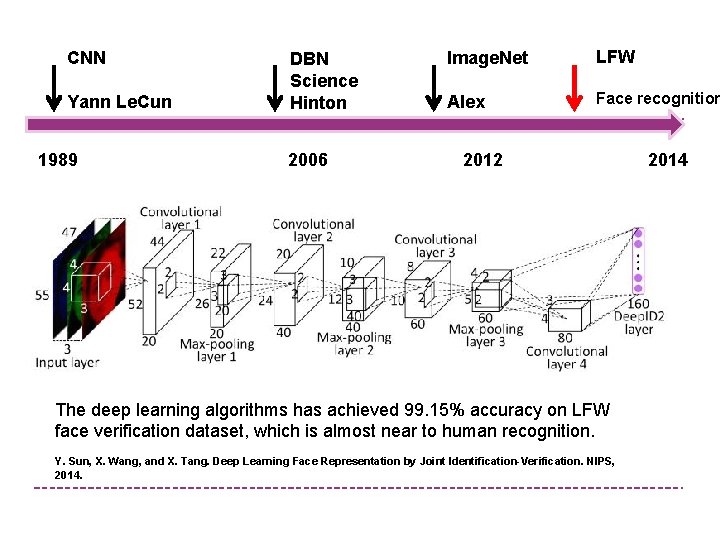

Timeline: 1989 CNN (Yann LeCun); 2006 DBN (Hinton, Science); 2012 ImageNet (Alex Krizhevsky); 2014 LFW face recognition

Deep learning algorithms have achieved 99.15% accuracy on the LFW face verification dataset, close to human-level performance.

Y. Sun, X. Wang, and X. Tang. Deep Learning Face Representation by Joint Identification-Verification. NIPS, 2014.

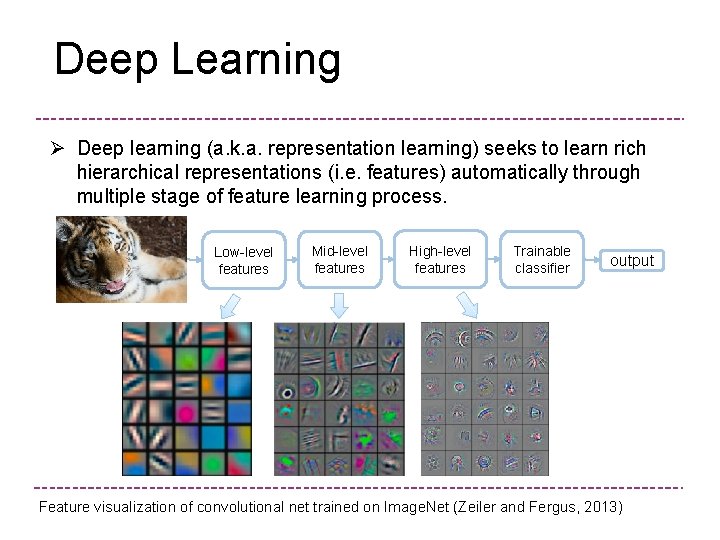

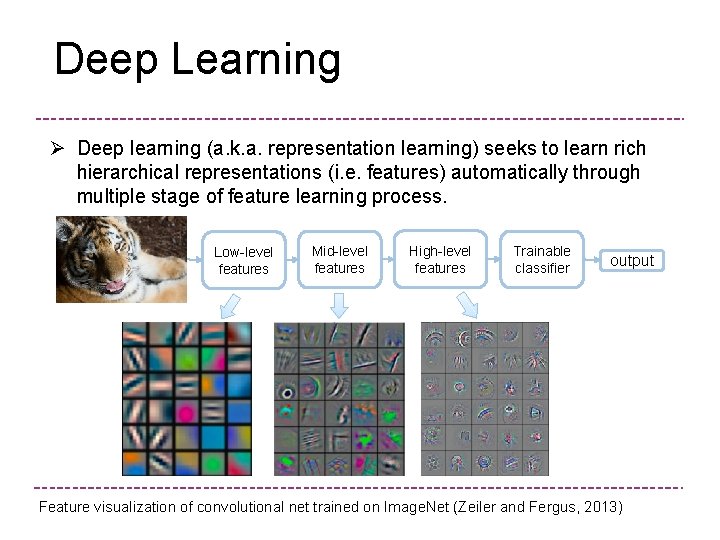

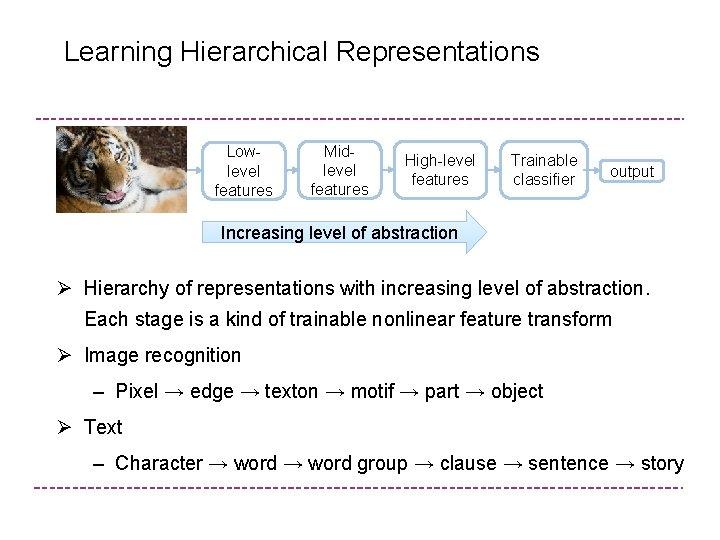

Deep Learning

- Deep learning (a.k.a. representation learning) seeks to learn rich hierarchical representations (i.e., features) automatically through multiple stages of a feature learning process.

(Figure: low-level features → mid-level features → high-level features → trainable classifier → output. Feature visualization of a convolutional net trained on ImageNet (Zeiler and Fergus, 2013).)

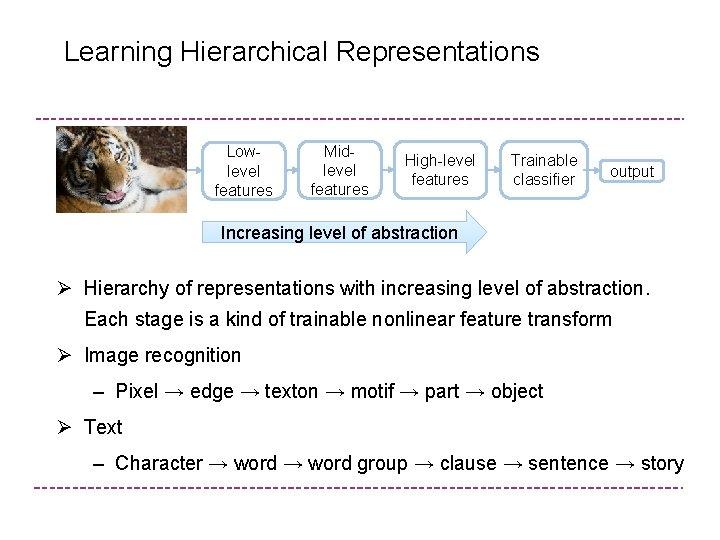

Learning Hierarchical Representations

(Figure: low-level features → mid-level features → high-level features → trainable classifier → output, with an increasing level of abstraction.)

- A hierarchy of representations with an increasing level of abstraction. Each stage is a kind of trainable nonlinear feature transform.
- Image recognition: pixel → edge → texton → motif → part → object
- Text: character → word group → clause → sentence → story

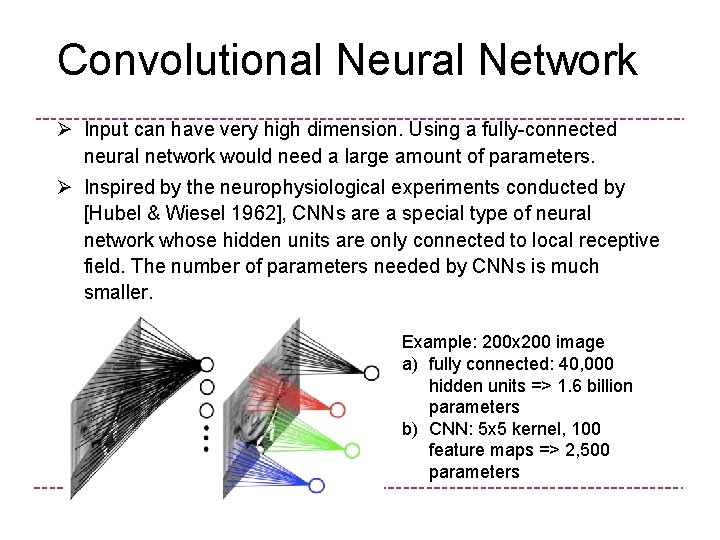

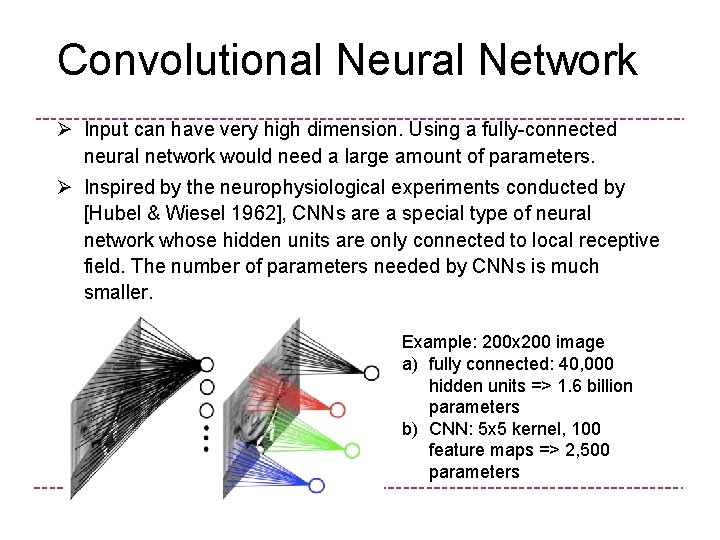

Convolutional Neural Network

- Input can have a very high dimension, so a fully connected neural network would need a large number of parameters.
- Inspired by the neurophysiological experiments of [Hubel & Wiesel 1962], CNNs are a special type of neural network whose hidden units are connected only to a local receptive field, and each feature map reuses one kernel at every position (weight sharing). As a result, CNNs need far fewer parameters.
- Example: a 200 x 200 image
  - Fully connected, 40,000 hidden units => 1.6 billion parameters
  - CNN, 5 x 5 kernel, 100 feature maps => 2,500 parameters

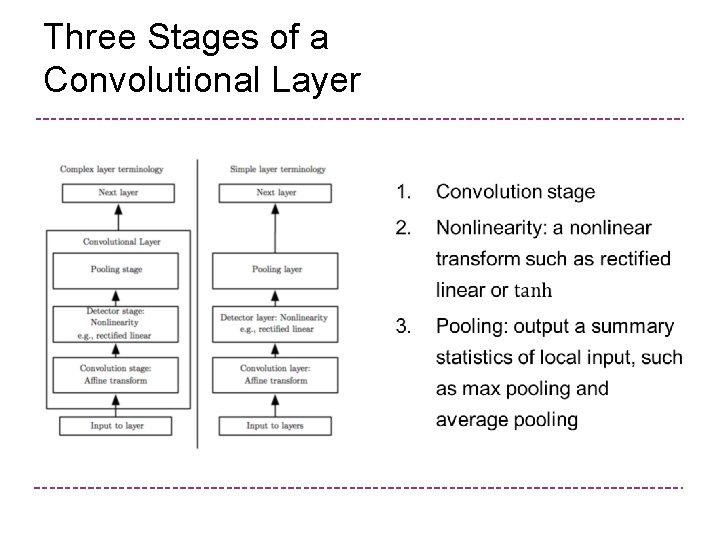

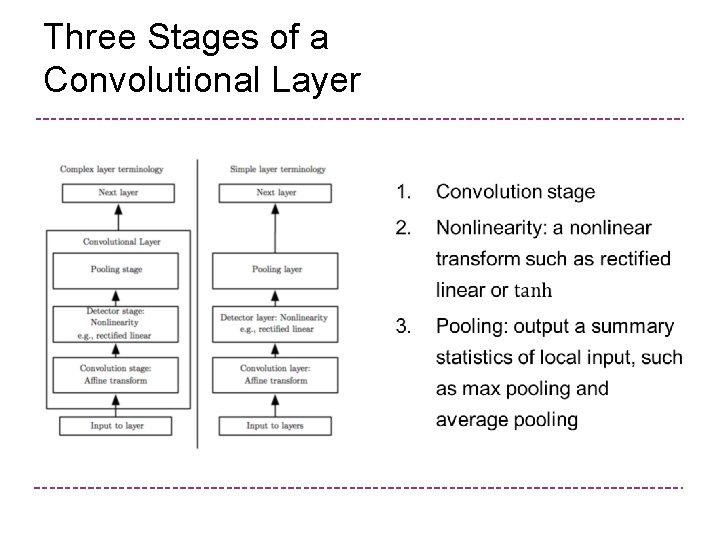
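The parameter counts in the example above follow from simple arithmetic; this sketch makes the assumptions explicit: weights only (biases ignored), a single-channel input, and one shared 5 x 5 kernel per feature map. The function names are illustrative.

```python
def fully_connected_params(height, width, hidden_units):
    """Every hidden unit connects to every input pixel (biases ignored)."""
    return height * width * hidden_units

def conv_params(kernel_h, kernel_w, feature_maps):
    """One shared kernel per feature map, reused at every position
    (single input channel, biases ignored)."""
    return kernel_h * kernel_w * feature_maps

print(fully_connected_params(200, 200, 40_000))  # 1600000000 (1.6 billion)
print(conv_params(5, 5, 100))                    # 2500
```

The CNN count does not depend on the image size at all, because the same kernel weights are shared across all positions.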

Three Stages of a Convolutional Layer

- Convolution, non-linearity (detector), and pooling

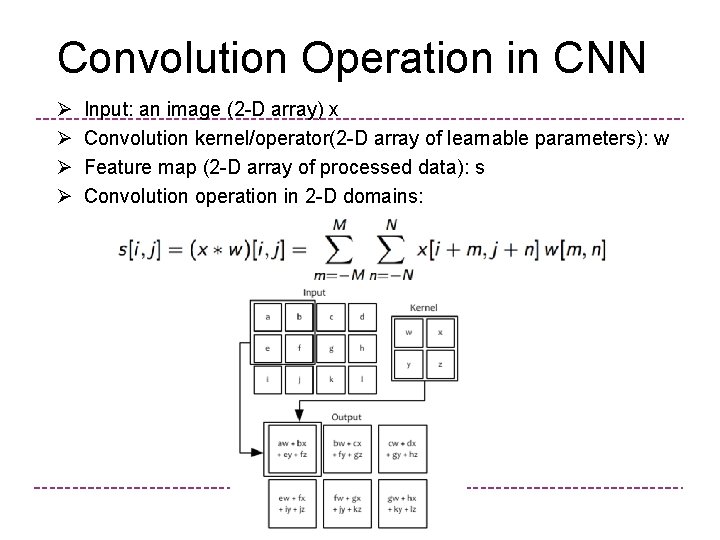

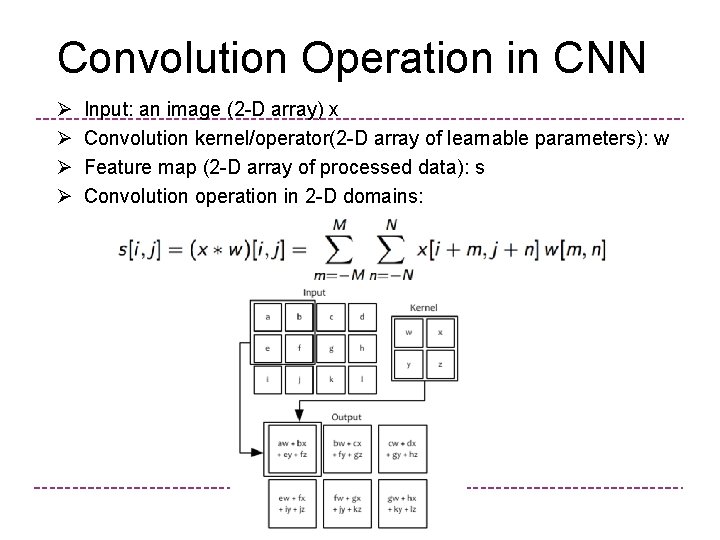

Convolution Operation in CNN

- Input: an image (2-D array), x
- Convolution kernel/operator (2-D array of learnable parameters), w
- Feature map (2-D array of processed data), s
- Convolution operation in 2-D domains:

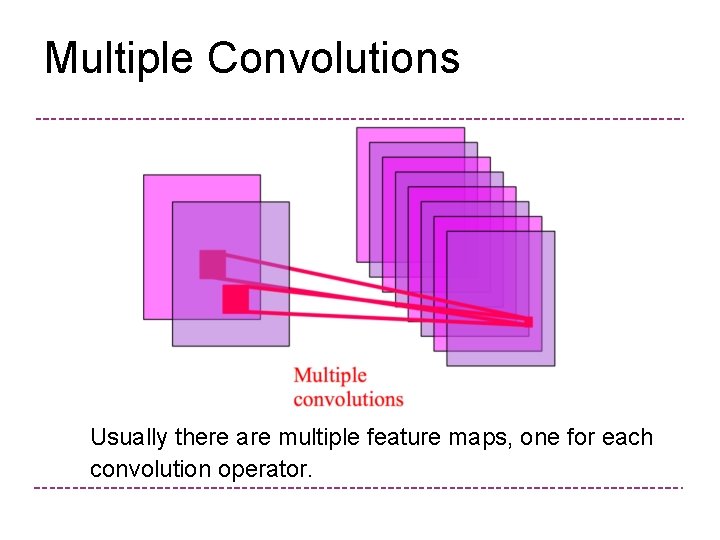

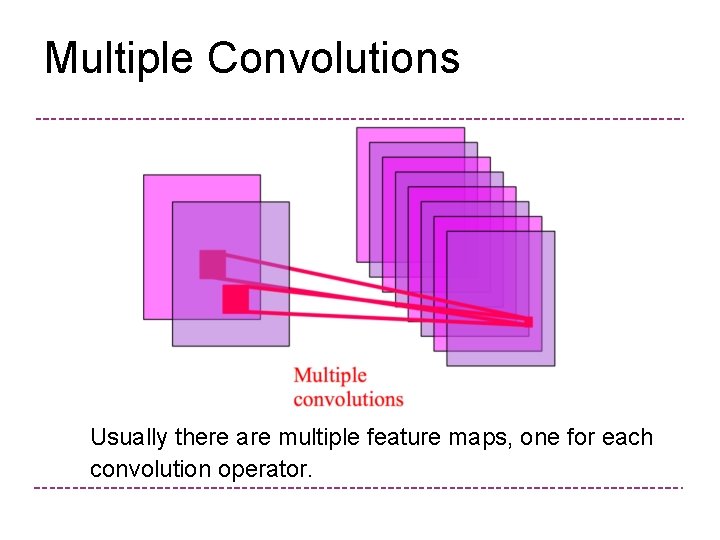
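Since the convolution formula itself is in the image, here is a sketch under stated assumptions: "valid" mode (the kernel stays fully inside the image), a single input channel, no padding or stride, and the textbook definition in which the kernel is flipped. Many deep-learning libraries actually compute the unflipped version (cross-correlation) and still call it convolution, so both are shown. Function names are illustrative.

```python
def conv2d(x, w):
    """'Valid' 2-D convolution s = x * w (kernel flipped):
    s(i, j) = sum_m sum_n x(i + m, j + n) * w(kh - 1 - m, kw - 1 - n)."""
    kh, kw = len(w), len(w[0])
    return [[sum(x[i + m][j + n] * w[kh - 1 - m][kw - 1 - n]
                 for m in range(kh) for n in range(kw))
             for j in range(len(x[0]) - kw + 1)]
            for i in range(len(x) - kh + 1)]

def cross_correlate2d(x, w):
    """Same sliding window without the flip:
    s(i, j) = sum_m sum_n x(i + m, j + n) * w(m, n)."""
    kh, kw = len(w), len(w[0])
    return [[sum(x[i + m][j + n] * w[m][n]
                 for m in range(kh) for n in range(kw))
             for j in range(len(x[0]) - kw + 1)]
            for i in range(len(x) - kh + 1)]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 2],
          [3, 4]]
print(conv2d(image, kernel))             # [[23, 33], [53, 63]]
print(cross_correlate2d(image, kernel))  # [[37, 47], [67, 77]]
```

Because w is learned, the flip makes no practical difference to what a CNN can represent; it only changes which learned kernel corresponds to a given feature detector.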

Multiple Convolutions

Usually there are multiple feature maps, one for each convolution operator.

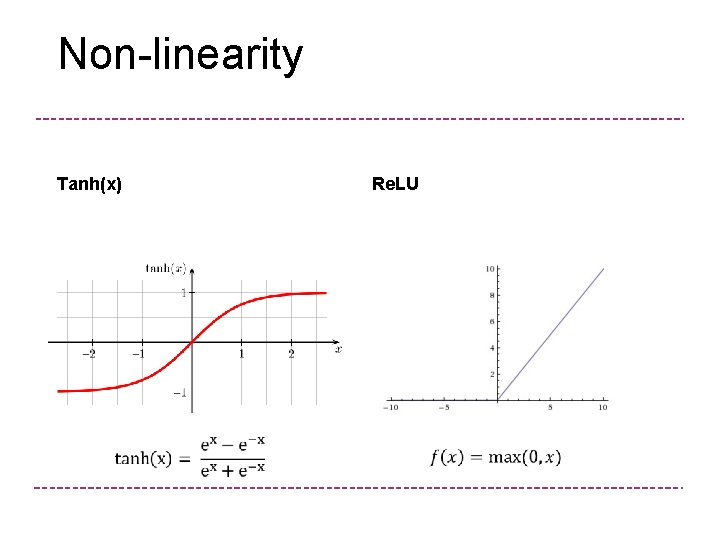

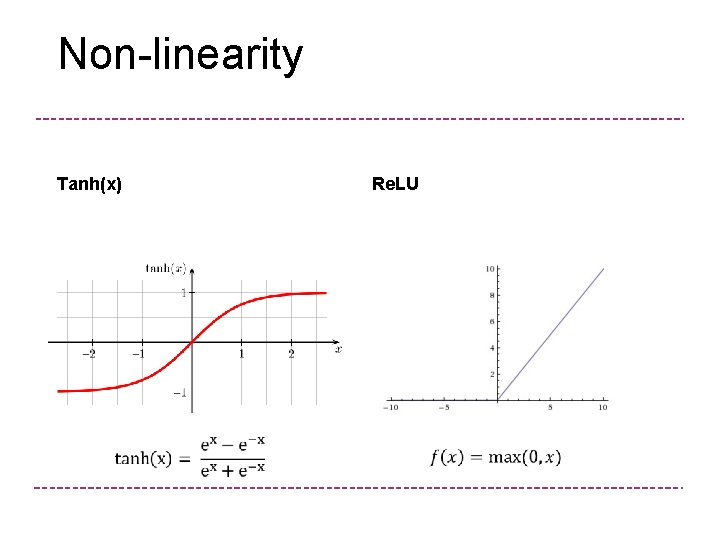

Non-linearity: tanh(x) and ReLU

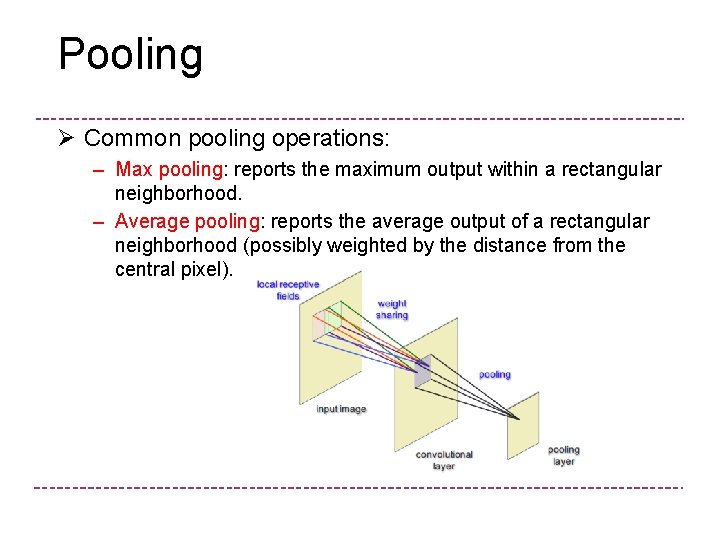

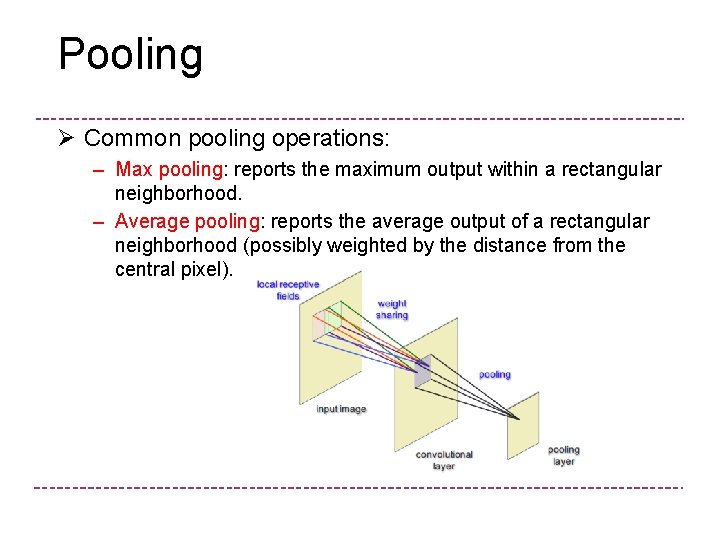
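A small comparison of the two non-linearities, assuming the usual definitions ReLU(a) = max(0, a) and tanh(a). It prints each function and its derivative at a few points: the tanh derivative shrinks toward zero for large |a| (saturation), while the ReLU derivative stays at 1 for every positive input, a commonly cited reason ReLU networks train faster. Helper names are illustrative.

```python
import math

def relu(a):
    """ReLU(a) = max(0, a)."""
    return max(0.0, a)

def relu_grad(a):
    """1 for a > 0, else 0 (the value at exactly a = 0 is a convention)."""
    return 1.0 if a > 0 else 0.0

def tanh_grad(a):
    """1 - tanh(a)^2, which approaches 0 as |a| grows (saturation)."""
    return 1.0 - math.tanh(a) ** 2

for a in (-3.0, 0.5, 3.0):
    print(f"a={a:+.1f}  tanh={math.tanh(a):+.4f}  relu={relu(a):.1f}  "
          f"tanh'={tanh_grad(a):.4f}  relu'={relu_grad(a):.1f}")
```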

Pooling

- Common pooling operations:
  - Max pooling: reports the maximum output within a rectangular neighborhood.
  - Average pooling: reports the average output of a rectangular neighborhood (possibly weighted by the distance from the central pixel).

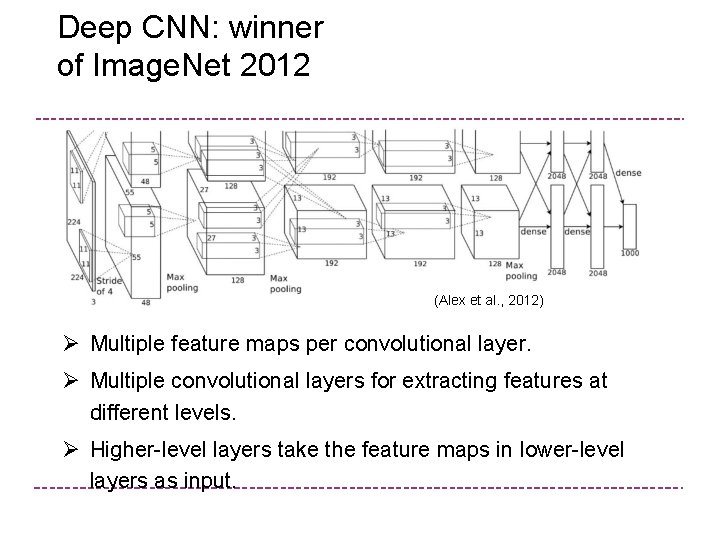

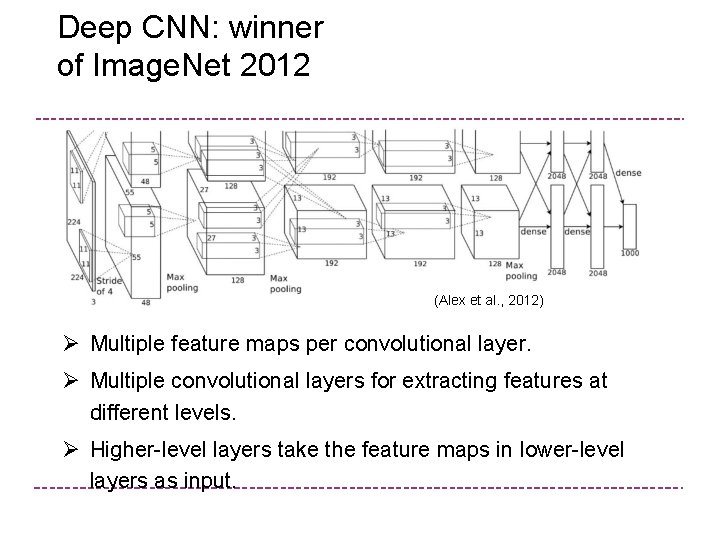
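Max and average pooling can be sketched as one sliding-window routine with a different summary function. This assumes square, non-overlapping 2 x 2 windows (stride 2) and plain unweighted averaging; the distance-weighted variant is not shown. Function names are illustrative.

```python
def pool2d(x, size, stride, reduce):
    """Slide a size x size window with the given stride; summarize each window with `reduce`."""
    out = []
    for i in range(0, len(x) - size + 1, stride):
        row = []
        for j in range(0, len(x[0]) - size + 1, stride):
            window = [x[i + m][j + n] for m in range(size) for n in range(size)]
            row.append(reduce(window))
        out.append(row)
    return out

def max_pool(x, size=2, stride=2):
    return pool2d(x, size, stride, max)

def average_pool(x, size=2, stride=2):
    return pool2d(x, size, stride, lambda w: sum(w) / len(w))

fmap = [[1, 3, 2, 4],
        [5, 6, 1, 0],
        [7, 2, 9, 8],
        [3, 4, 6, 5]]
print(max_pool(fmap))      # [[6, 4], [7, 9]]
print(average_pool(fmap))  # [[3.75, 1.75], [4.0, 7.0]]
```

Pooling has no learnable parameters; it halves each spatial dimension here and makes the output less sensitive to small shifts of the input.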

Deep CNN: winner of ImageNet 2012 (Krizhevsky et al., 2012)

- Multiple feature maps per convolutional layer.
- Multiple convolutional layers for extracting features at different levels.
- Higher-level layers take the feature maps of lower-level layers as input.

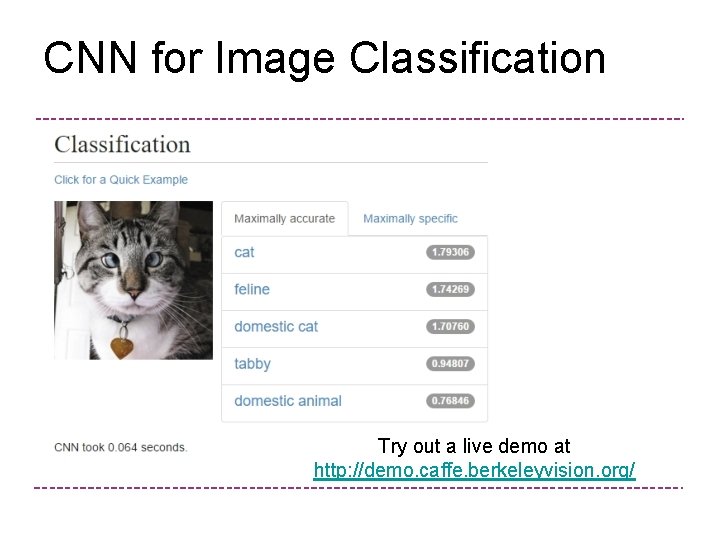

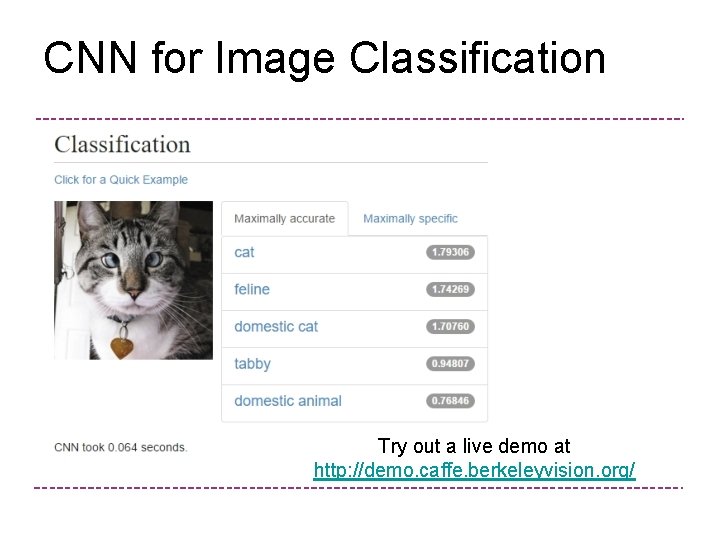

CNN for Image Classification

Try out a live demo at http://demo.caffe.berkeleyvision.org/

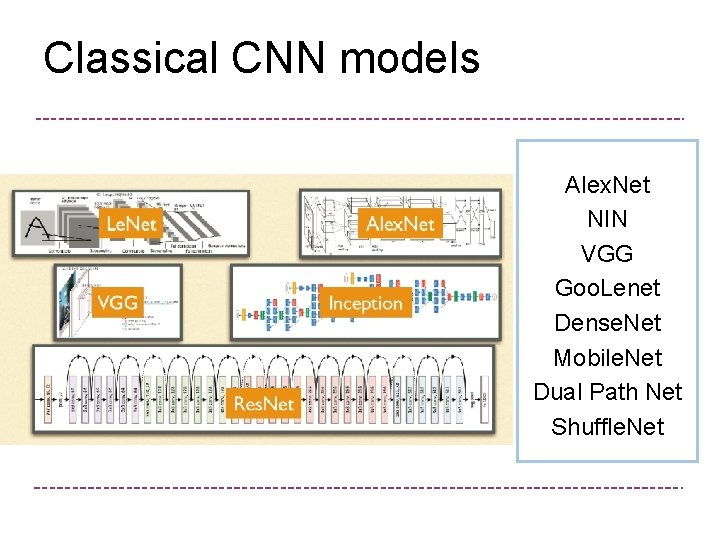

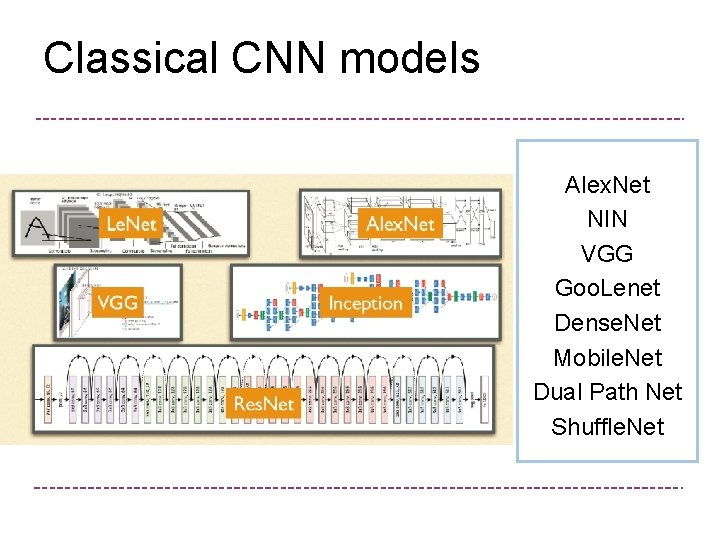

Classical CNN models: AlexNet, NIN, VGG, GoogLeNet, DenseNet, MobileNet, Dual Path Net, ShuffleNet

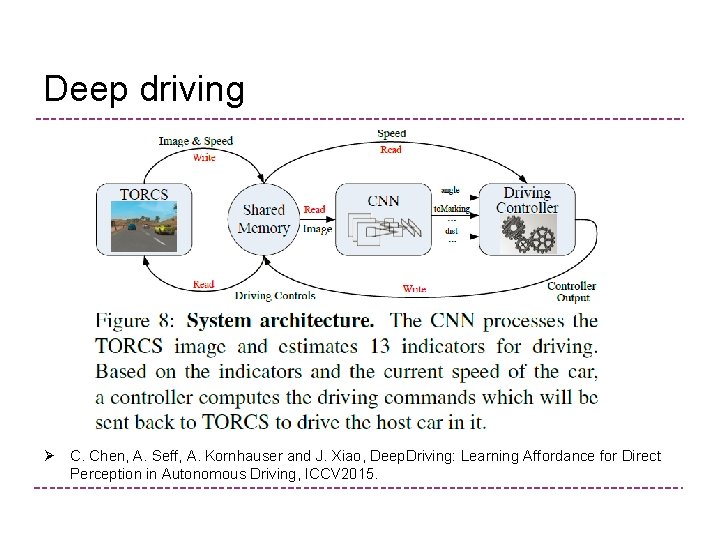

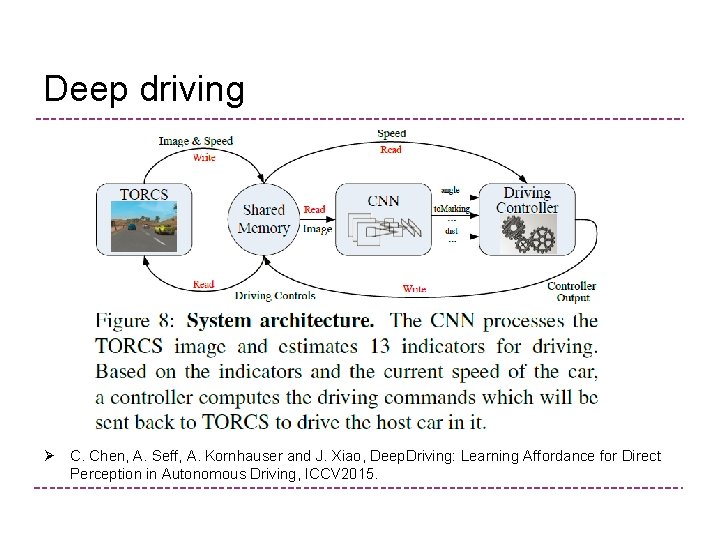

Deep driving

- C. Chen, A. Seff, A. Kornhauser and J. Xiao, DeepDriving: Learning Affordance for Direct Perception in Autonomous Driving, ICCV 2015.

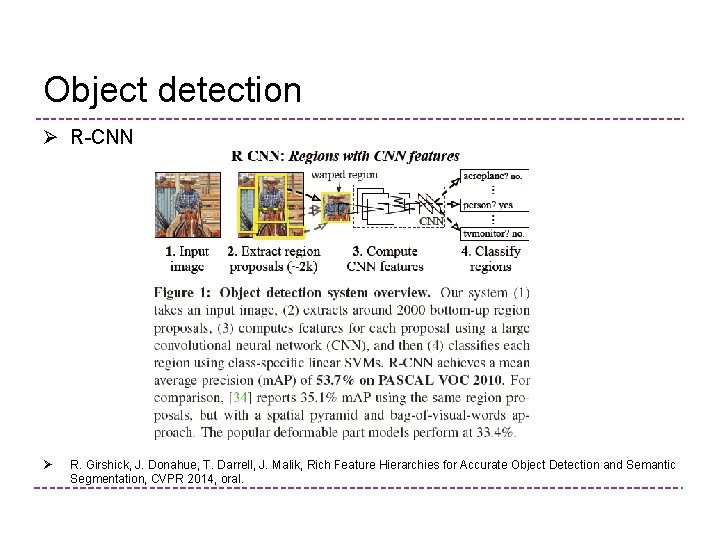

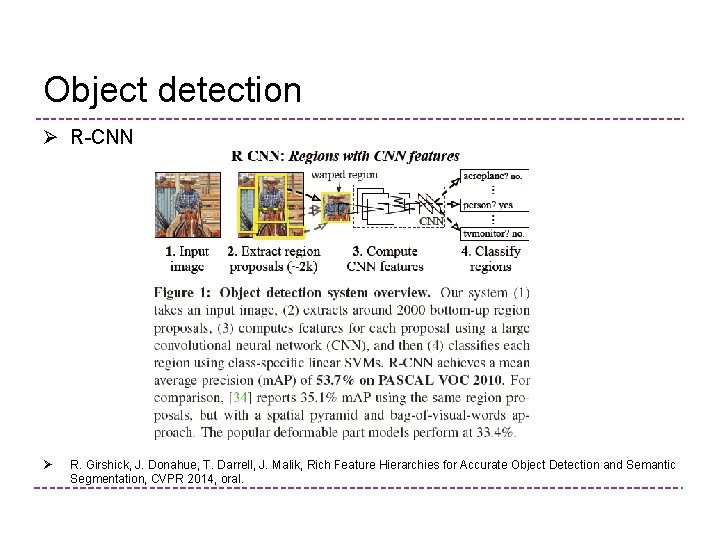

Object detection

- R-CNN: R. Girshick, J. Donahue, T. Darrell, J. Malik, Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation, CVPR 2014, oral.

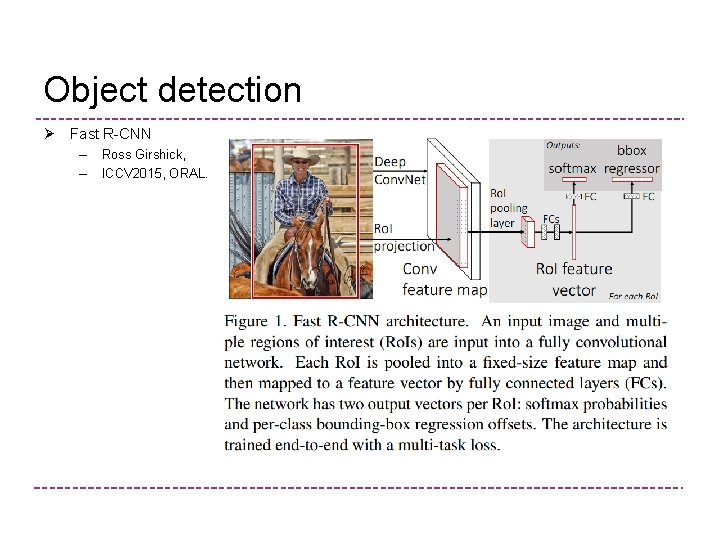

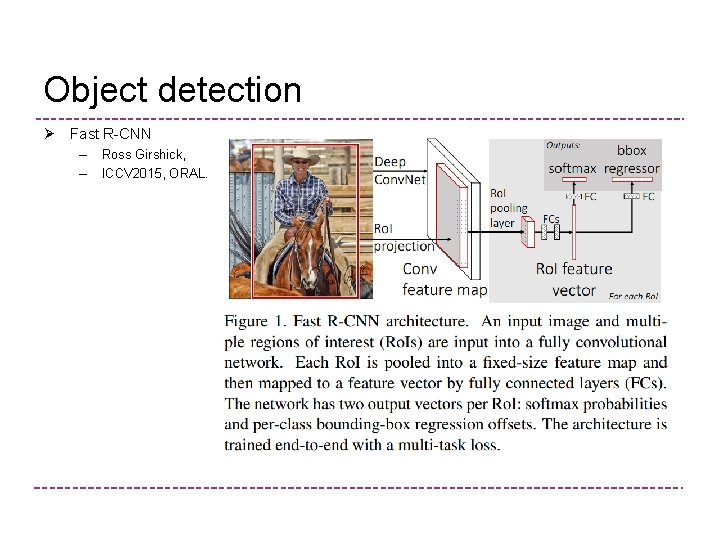

Object detection

- Fast R-CNN: Ross Girshick, ICCV 2015, oral.

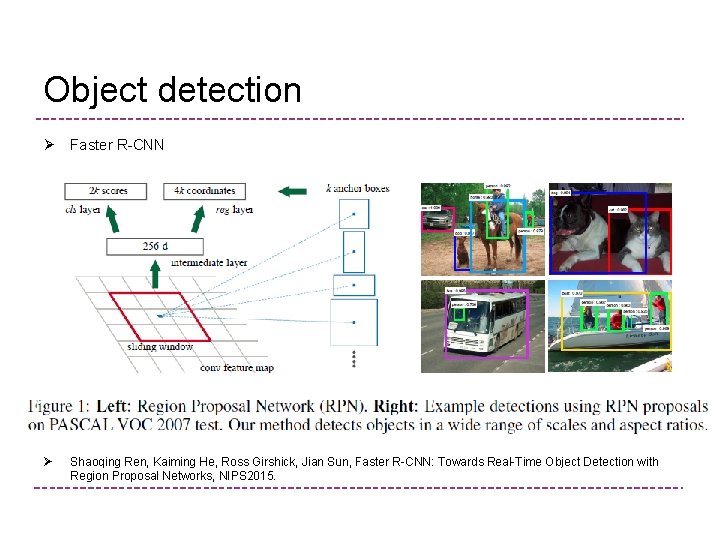

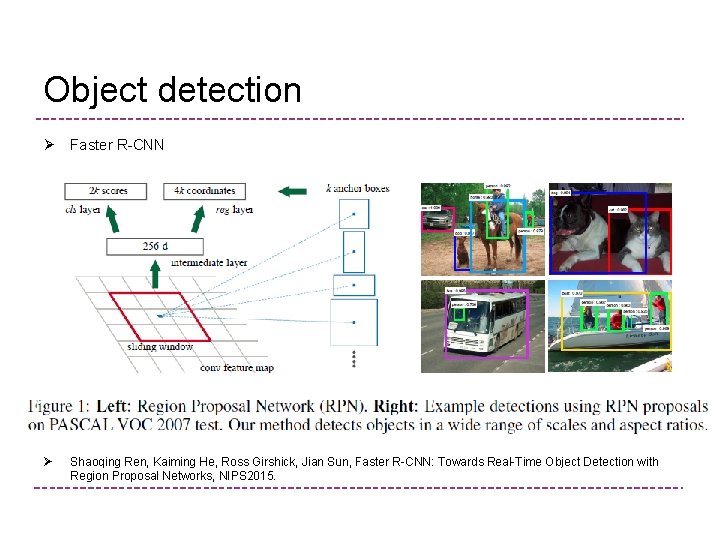

Object detection

- Faster R-CNN: Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun, Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks, NIPS 2015.

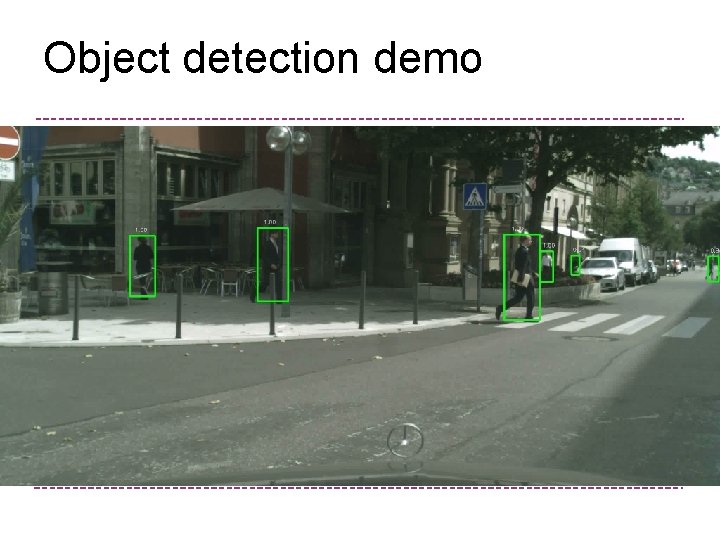

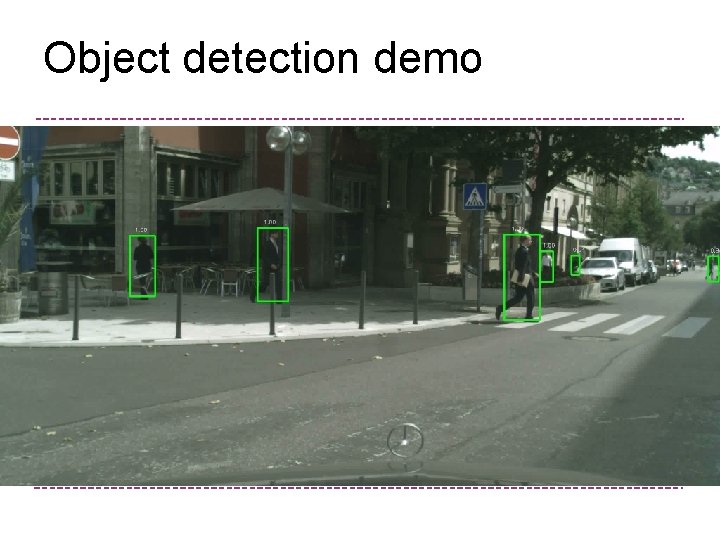

Object detection demo

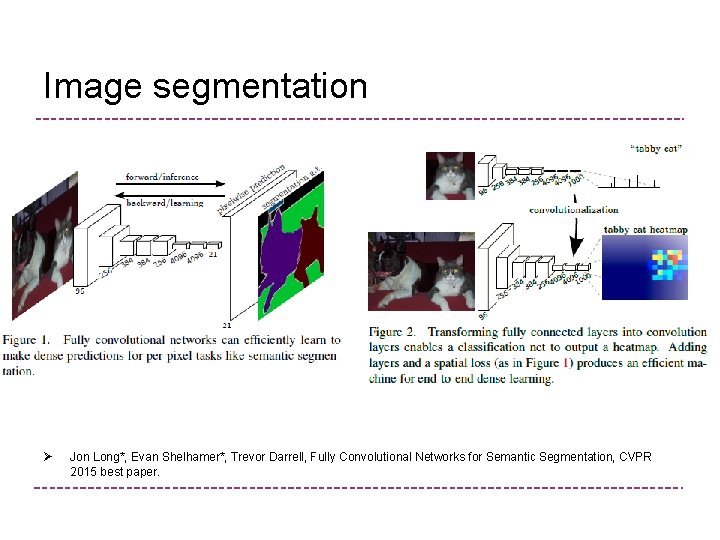

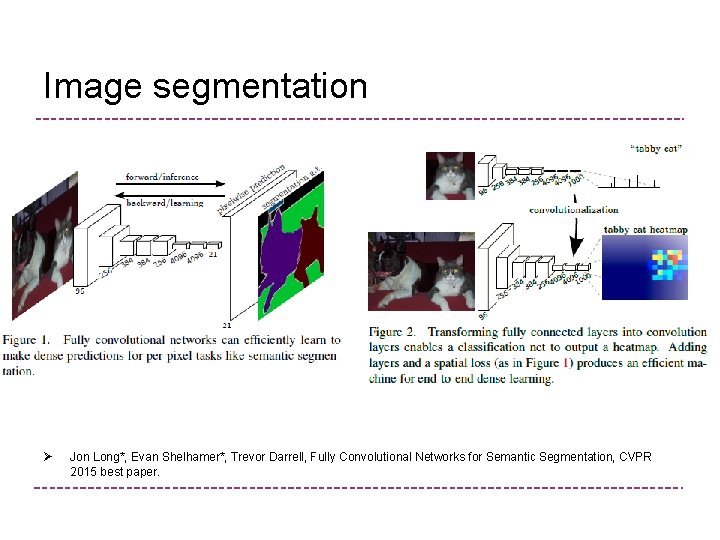

Image segmentation

- Jon Long\*, Evan Shelhamer\*, Trevor Darrell, Fully Convolutional Networks for Semantic Segmentation, CVPR 2015 best paper honorable mention.

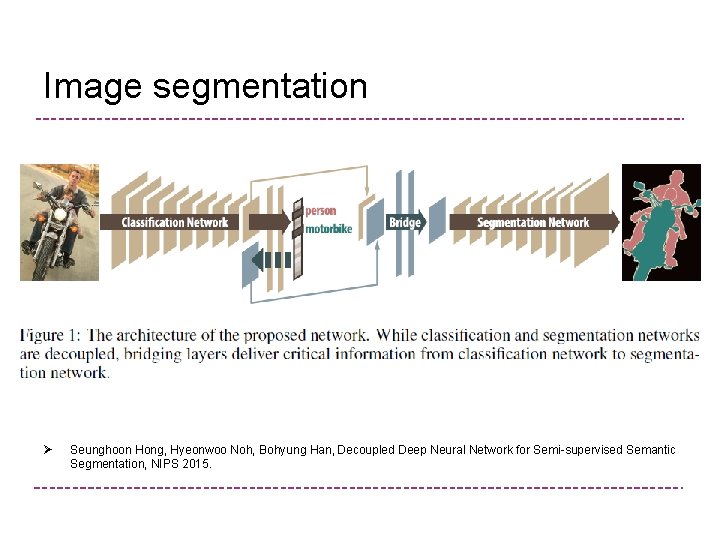

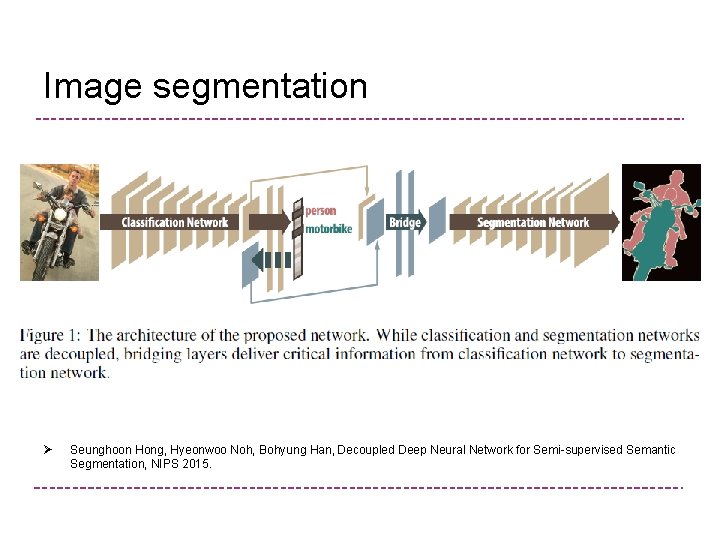

Image segmentation

- Seunghoon Hong, Hyeonwoo Noh, Bohyung Han, Decoupled Deep Neural Network for Semi-supervised Semantic Segmentation, NIPS 2015.

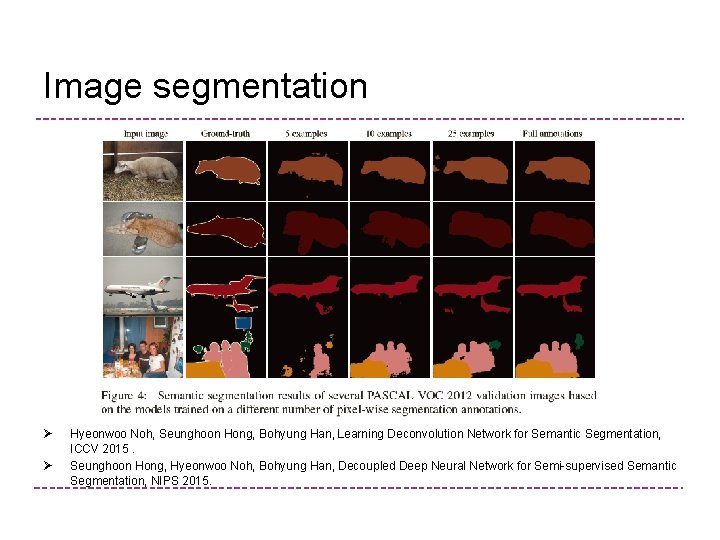

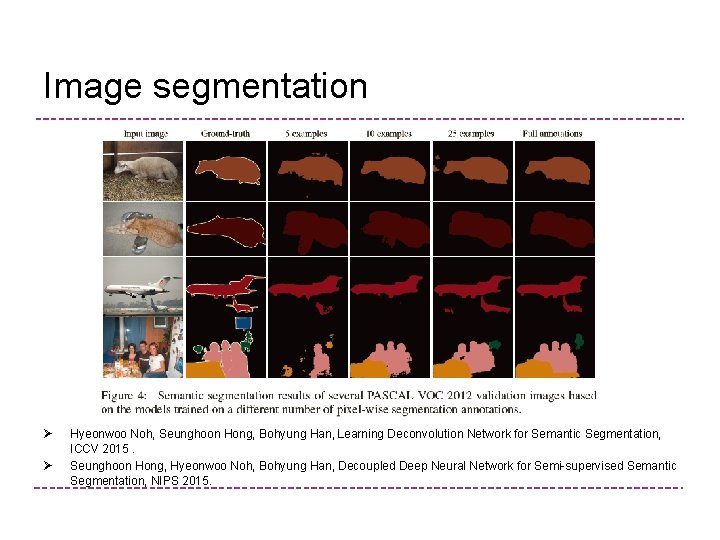

Image segmentation

- Hyeonwoo Noh, Seunghoon Hong, Bohyung Han, Learning Deconvolution Network for Semantic Segmentation, ICCV 2015.
- Seunghoon Hong, Hyeonwoo Noh, Bohyung Han, Decoupled Deep Neural Network for Semi-supervised Semantic Segmentation, NIPS 2015.

Segmentation demo

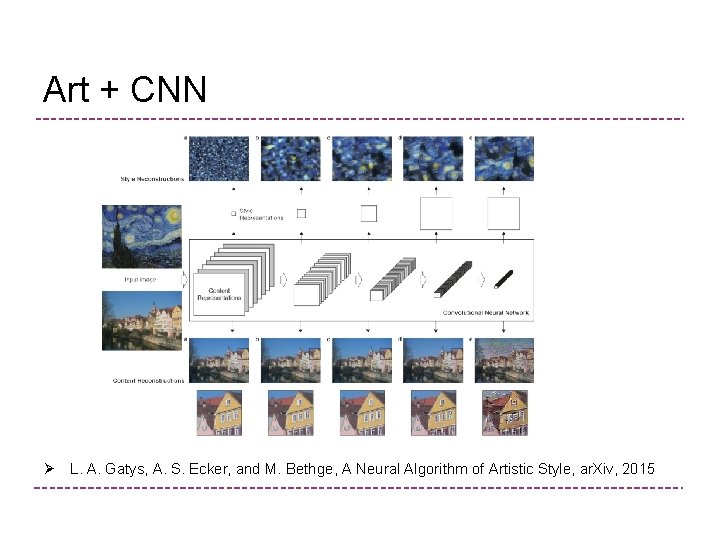

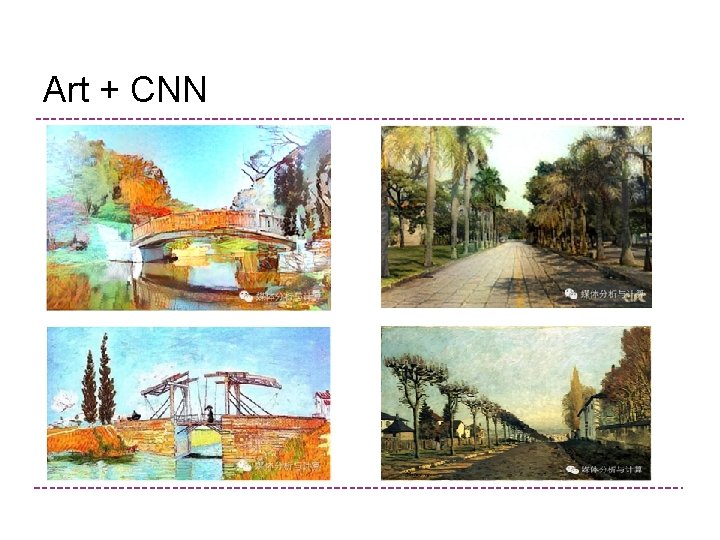

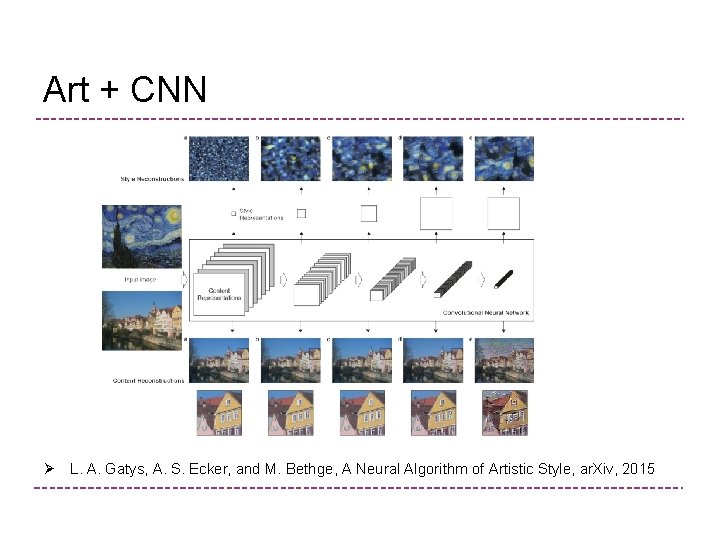

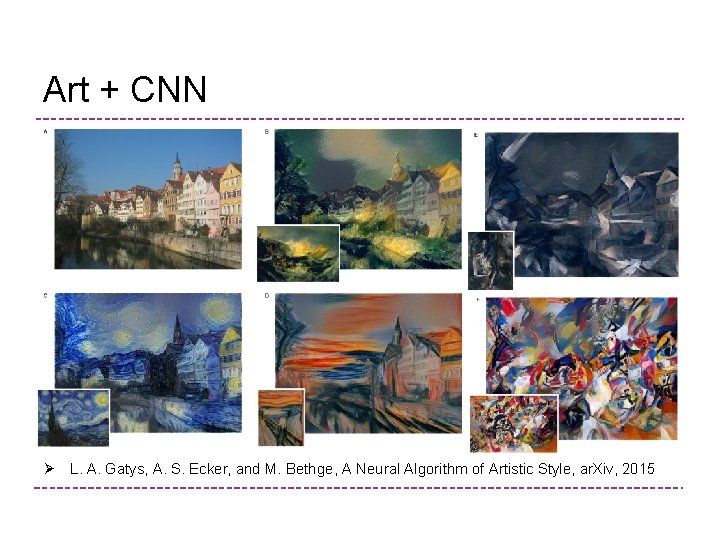

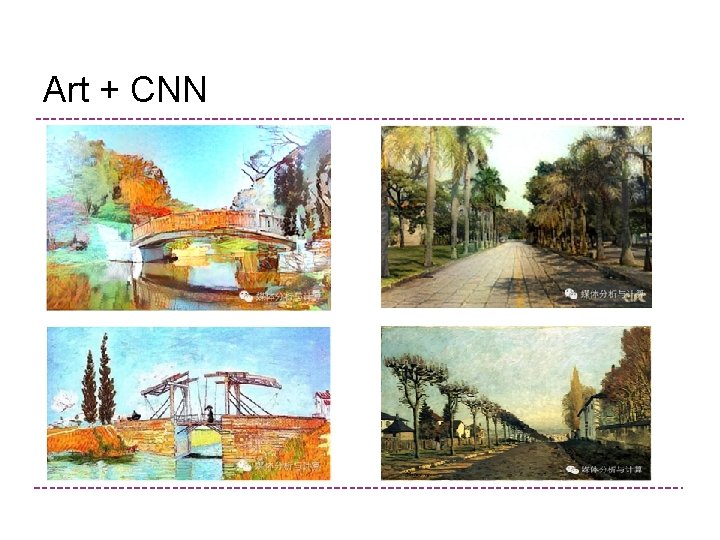

Art + CNN

- L. A. Gatys, A. S. Ecker, and M. Bethge, A Neural Algorithm of Artistic Style, arXiv, 2015

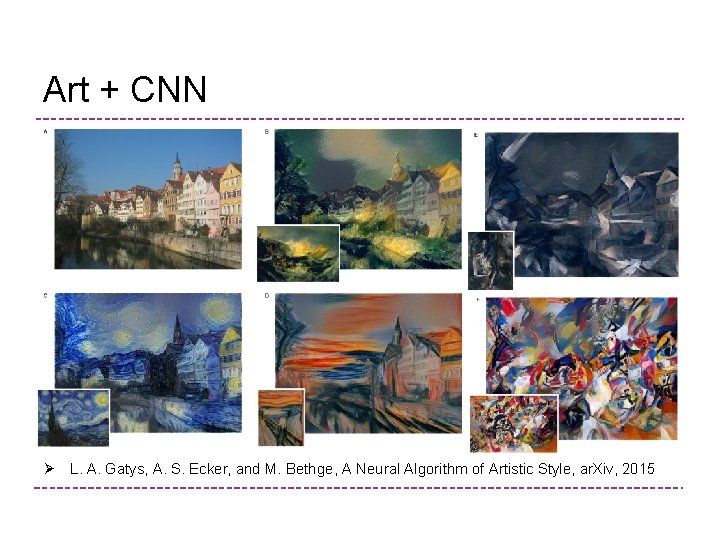

Art + CNN

Email: cyx@njust.edu.cn