Neural Machine Translation by Jointly Learning to Align and Translate

Presented by: Minhao Cheng, Pan Xu, Md Rizwan Parvez

Outline
● Problem setting
● Seq2seq model
  ○ RNN/LSTM/GRU
  ○ Autoencoder
● Attention mechanism
● Pipeline and model architecture
● Experiment results
● Extended method
  ○ Self-attentive (Transformer)

Problem Setting
● Input: a sentence (word sequence) in the source language
● Output: a sentence (word sequence) in the target language
● Model: seq2seq

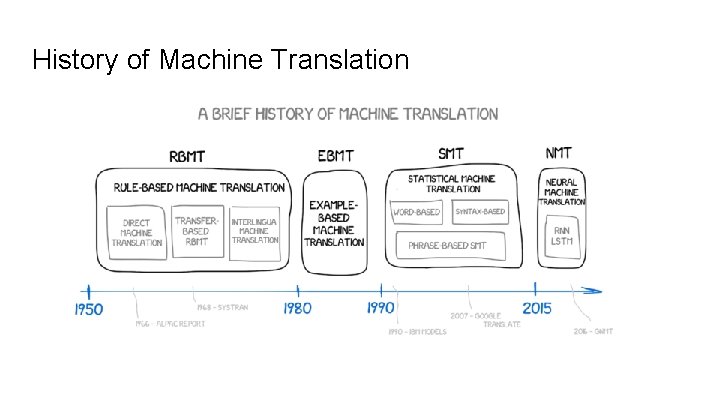

History of Machine Translation
● Rule-based
  ○ Used mostly in the creation of dictionaries and grammar programs
● Example-based
  ○ Based on the idea of analogy
● Statistical
  ○ Uses statistical methods based on bilingual text corpora
● Neural
  ○ Deep learning

Neural machine translation (NMT)
● RNN/LSTM/GRU
  ○ Why?
    ■ Input and output lengths vary
    ■ Order matters: the output depends on the order of the input words
● Seq2seq model

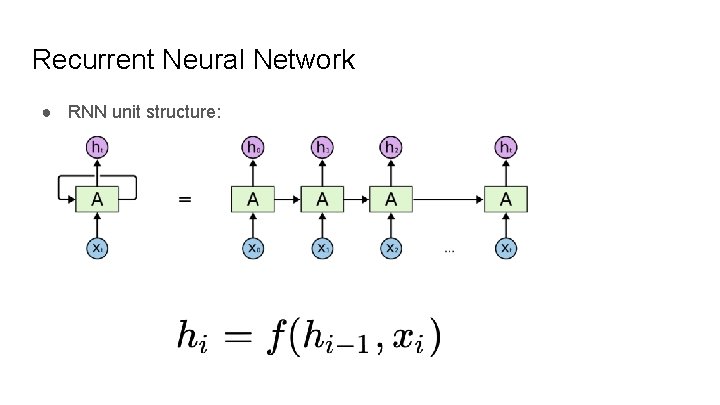

Recurrent Neural Network ● RNN unit structure:

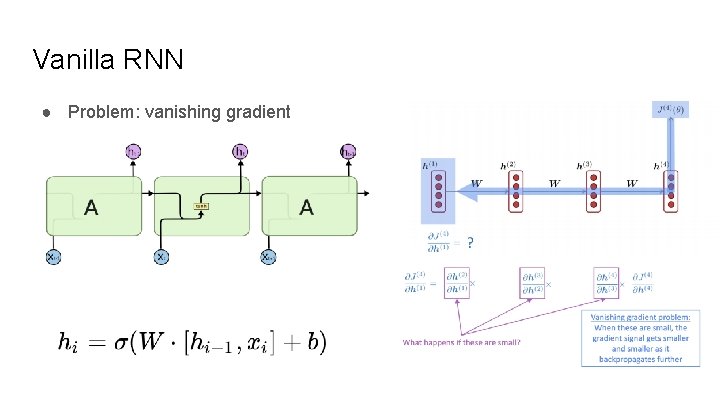
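The unit structure can be sketched in a few lines. This is a minimal illustration, assuming the standard tanh update $h_t = \tanh(W_{xh} x_t + W_{hh} h_{t-1} + b_h)$; the function name `rnn_step`, the toy sizes and the random weights are hypothetical, and plain Python lists stand in for a tensor library.

```python
import math
import random

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        pre = b_h[i]
        pre += sum(W_xh[i][k] * x_t[k] for k in range(len(x_t)))
        pre += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(pre))
    return h_t

# Toy sizes and random weights (illustrative assumptions only).
random.seed(0)
input_dim, hidden_dim = 3, 2
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(input_dim)] for _ in range(hidden_dim)]
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(hidden_dim)] for _ in range(hidden_dim)]
b_h = [0.0] * hidden_dim

# The same weights are reused at every time step; h carries the history forward.
sequence = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
h = [0.0] * hidden_dim
for x_t in sequence:
    h = rnn_step(x_t, h, W_xh, W_hh, b_h)
print(h)
```

Sharing one set of weights across all time steps is what lets the same unit process sequences of any length.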

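Vanilla RNN
● Problem: vanishing gradient. Backpropagation through time multiplies the gradient by one factor per time step, so over long sequences it can shrink exponentially and early inputs barely influence learning.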

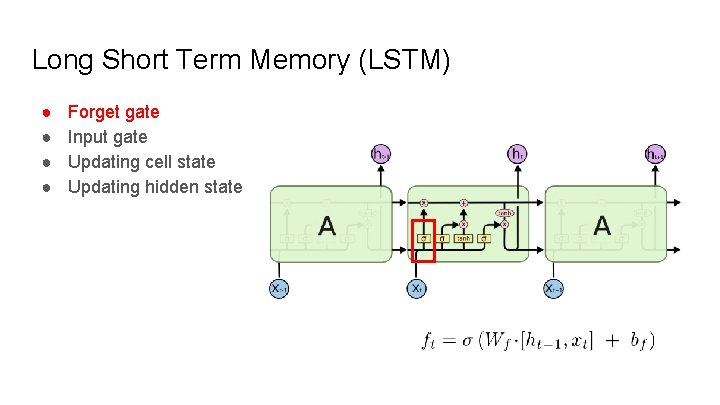

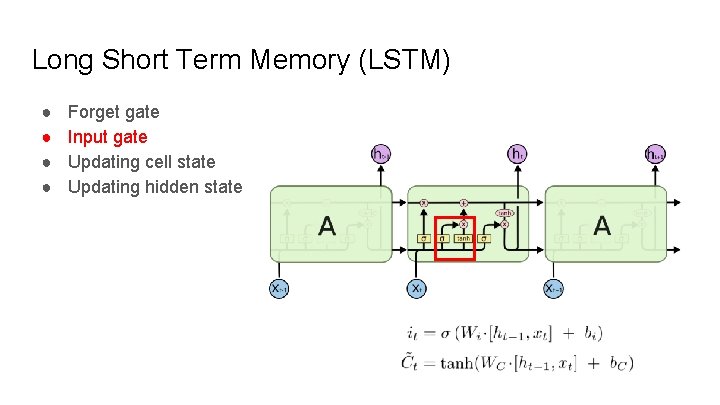
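A small numerical sketch of the vanishing-gradient problem, assuming a scalar RNN $h_t = \tanh(w\,h_{t-1} + x_t)$ with a hypothetical weight $w = 0.5$ and a constant input of 0.1. By the chain rule, $\partial h_T / \partial h_0 = \prod_{t=1}^{T} w\,(1 - h_t^2)$, and here every factor lies in $(0, 0.5]$, so the product shrinks exponentially with $T$. The helper name `grad_through_time` is illustrative.

```python
import math

def grad_through_time(w, inputs, h0=0.0):
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x_t in inputs:
        h = math.tanh(w * h + x_t)
        grad *= w * (1.0 - h * h)  # chain rule: dh_t/dh_{t-1} = w * (1 - h_t^2)
    return grad

for T in (5, 20, 50):
    print(T, grad_through_time(0.5, [0.1] * T))
```

With a larger recurrent weight the same product can instead grow, which is the related exploding-gradient problem.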

Long Short-Term Memory (LSTM)
● Forget gate
● Input gate
● Updating the cell state
● Updating the hidden state

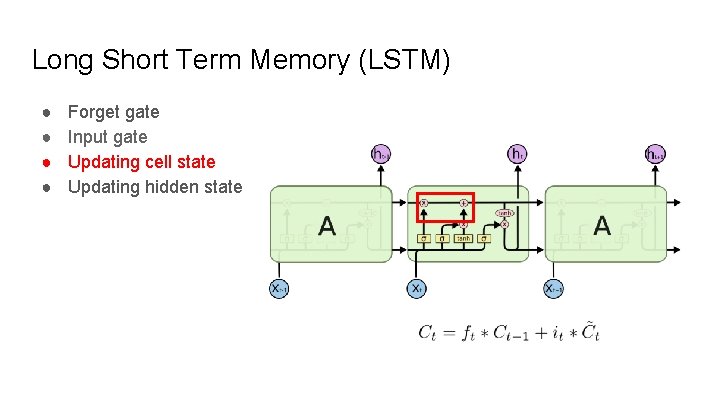

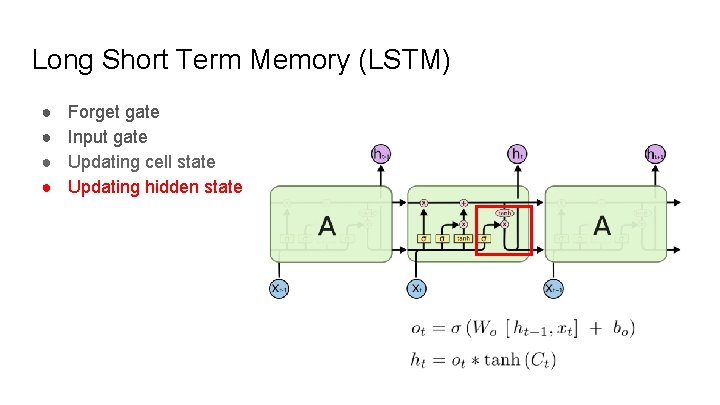

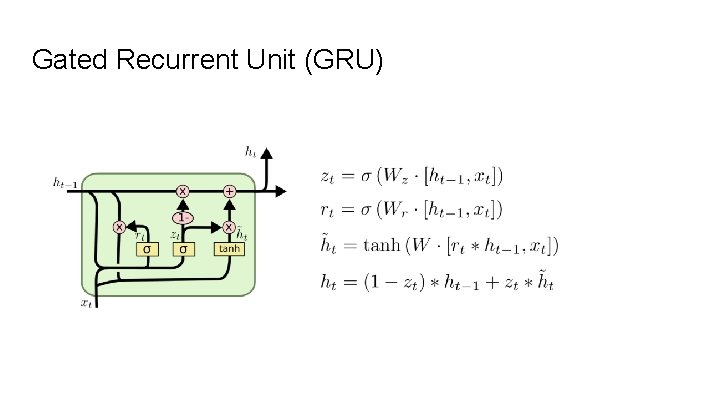
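The four LSTM steps (forget gate, input gate, cell-state update, hidden-state update) can be sketched as a single function. This assumes the common formulation from the colah post in the references: $f_t = \sigma(W_f[h_{t-1}; x_t] + b_f)$, $i_t = \sigma(W_i[h_{t-1}; x_t] + b_i)$, $\tilde C_t = \tanh(W_C[h_{t-1}; x_t] + b_C)$, $C_t = f_t \odot C_{t-1} + i_t \odot \tilde C_t$, $o_t = \sigma(W_o[h_{t-1}; x_t] + b_o)$, $h_t = o_t \odot \tanh(C_t)$. Function names, sizes and random weights are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(v, W, b):
    """W v + b, with W given as a list of rows."""
    return [sum(w_k * v_k for w_k, v_k in zip(row, v)) + b_i for row, b_i in zip(W, b)]

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; params maps each gate name to its (W, b) pair."""
    hx = h_prev + x_t  # concatenation [h_{t-1}; x_t]
    f = [sigmoid(z) for z in affine(hx, *params["f"])]        # forget gate
    i = [sigmoid(z) for z in affine(hx, *params["i"])]        # input gate
    c_hat = [math.tanh(z) for z in affine(hx, *params["c"])]  # candidate values
    c_t = [f_k * c_k + i_k * ch_k
           for f_k, c_k, i_k, ch_k in zip(f, c_prev, i, c_hat)]  # update the cell state
    o = [sigmoid(z) for z in affine(hx, *params["o"])]        # output gate
    h_t = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c_t)]  # update the hidden state
    return h_t, c_t

def init_gate(rows, cols):
    W = [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    return W, [0.0] * rows

random.seed(0)
input_dim, hidden_dim = 3, 2
params = {gate: init_gate(hidden_dim, hidden_dim + input_dim) for gate in ("f", "i", "c", "o")}
h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
for x_t in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h, c = lstm_step(x_t, h, c, params)
print(h, c)
```

The additive cell update $f_t \odot C_{t-1} + \dots$ is what lets gradients flow across many steps more easily than in the vanilla RNN.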

Gated Recurrent Unit (GRU)

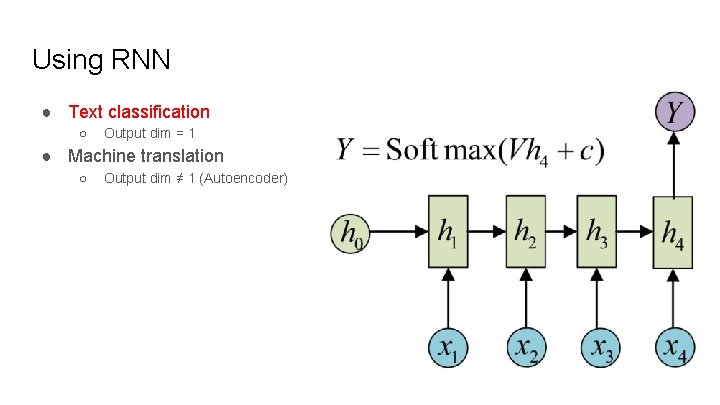
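A matching sketch of one GRU step, assuming the common formulation (as in the colah post in the references) with biases omitted: update gate $z_t = \sigma(W_z[h_{t-1}; x_t])$, reset gate $r_t = \sigma(W_r[h_{t-1}; x_t])$, candidate $\tilde h_t = \tanh(W[r_t \odot h_{t-1}; x_t])$, and $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde h_t$. Names, sizes and weights are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def gru_step(x_t, h_prev, W_z, W_r, W_h):
    """One GRU step (no biases)."""
    hx = h_prev + x_t                               # [h_{t-1}; x_t]
    z = [sigmoid(a) for a in matvec(W_z, hx)]       # update gate
    r = [sigmoid(a) for a in matvec(W_r, hx)]       # reset gate
    reset_hx = [r_k * h_k for r_k, h_k in zip(r, h_prev)] + x_t  # [r_t * h_{t-1}; x_t]
    h_hat = [math.tanh(a) for a in matvec(W_h, reset_hx)]       # candidate state
    # Interpolate between the old state and the candidate.
    return [(1.0 - z_k) * h_k + z_k * hh_k for z_k, h_k, hh_k in zip(z, h_prev, h_hat)]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
input_dim, hidden_dim = 3, 2
W_z, W_r, W_h = (rand_matrix(hidden_dim, hidden_dim + input_dim) for _ in range(3))
h = [0.0] * hidden_dim
for x_t in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]):
    h = gru_step(x_t, h, W_z, W_r, W_h)
print(h)
```

Compared with the LSTM, the GRU merges the cell and hidden state and uses two gates instead of three.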

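Using RNNs
● Text classification
  ○ Output length = 1 (a single label for the whole sequence)
● Machine translation
  ○ Output length ≠ 1 (an output sequence, produced by an encoder-decoder, here called an autoencoder)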

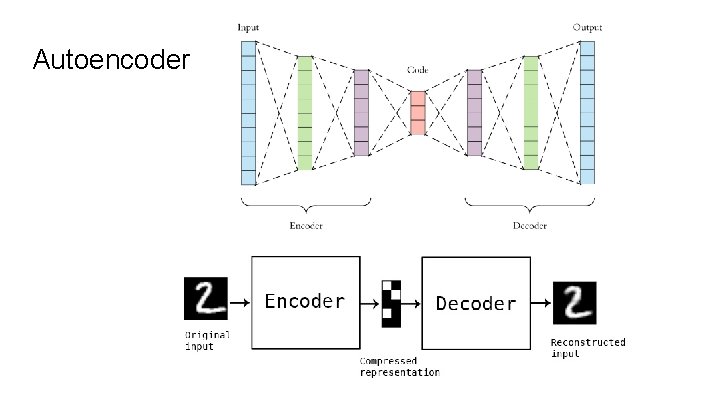

Autoencoder

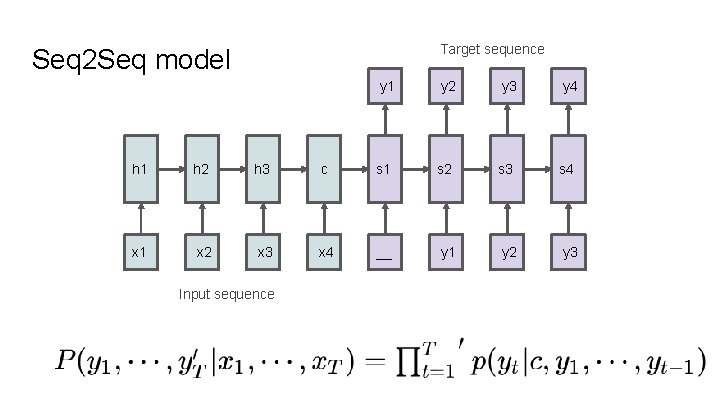

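Figure: Seq2seq model. The encoder reads the input sequence $x_1, \dots, x_4$ into hidden states $h_j$ and a context vector $c$; the decoder states $s_1, \dots, s_4$ generate the target sequence $y_1, \dots, y_4$, taking a start symbol (__) followed by $y_1, y_2, y_3$ as inputs.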

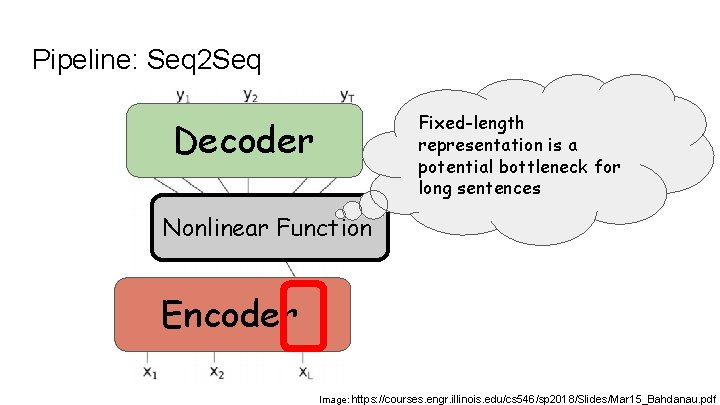

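Pipeline: Seq2seq
The encoder compresses the whole source sentence into a single fixed-length vector, from which the decoder (a nonlinear function) generates the translation. This fixed-length representation is a potential bottleneck for long sentences.
Image: https://courses.engr.illinois.edu/cs546/sp2018/Slides/Mar15_Bahdanau.pdf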

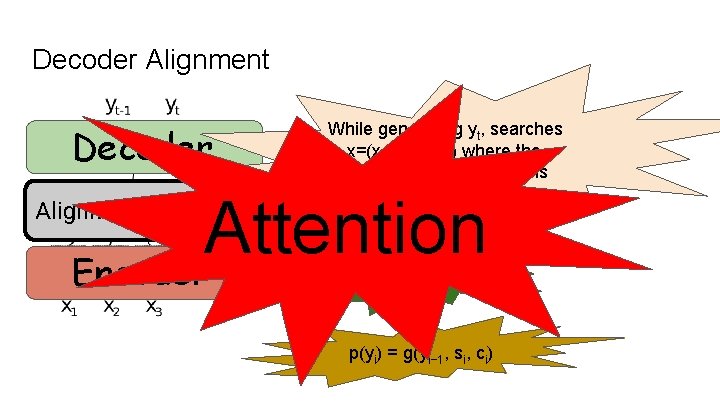
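A toy sketch of the encoder-decoder pipeline and its fixed-length bottleneck. It assumes plain tanh RNNs for both sides, takes the encoder's last hidden state as the context vector $c$, feeds $c$ together with the previous output token to the decoder at every step, and decodes greedily. All names, sizes, the vocabulary and the random weights are hypothetical; an untrained model like this produces meaningless tokens, and the point is only the data flow.

```python
import math
import random

def rnn_step(x, h, W_x, W_h):
    """One tanh RNN step: tanh(W_x x + W_h h), biases omitted for brevity."""
    return [math.tanh(sum(a * b for a, b in zip(rx, x)) + sum(a * b for a, b in zip(rh, h)))
            for rx, rh in zip(W_x, W_h)]

def encode(inputs, W_x, W_h, hidden_dim):
    """Run the encoder over the whole input; its last hidden state is the context vector c."""
    h = [0.0] * hidden_dim
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h)
    return h  # fixed size, however long the input is

def greedy_decode(c, W_y, W_s, W_out, vocab, max_len=5, eos="</s>"):
    """Generate tokens one at a time, feeding the previous token and c at every step."""
    s = [0.0] * len(c)
    prev = [0.0] * len(vocab)  # an all-zero vector stands in for the start symbol
    output = []
    for _ in range(max_len):
        s = rnn_step(prev + c, s, W_y, W_s)
        scores = [sum(w * v for w, v in zip(row, s)) for row in W_out]
        k = max(range(len(vocab)), key=lambda j: scores[j])  # greedy: highest score
        if vocab[k] == eos:
            break
        output.append(vocab[k])
        prev = [1.0 if j == k else 0.0 for j in range(len(vocab))]  # one-hot of y_{i-1}
    return output

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
input_dim, hidden_dim = 3, 4
vocab = ["</s>", "tok_a", "tok_b", "tok_c"]  # hypothetical toy target vocabulary
enc_W_x, enc_W_h = rand_matrix(hidden_dim, input_dim), rand_matrix(hidden_dim, hidden_dim)
dec_W_y = rand_matrix(hidden_dim, len(vocab) + hidden_dim)
dec_W_s = rand_matrix(hidden_dim, hidden_dim)
W_out = rand_matrix(len(vocab), hidden_dim)

c_short = encode([[1.0, 0.0, 0.0]] * 2, enc_W_x, enc_W_h, hidden_dim)
c_long = encode([[0.0, 1.0, 0.0]] * 20, enc_W_x, enc_W_h, hidden_dim)
print(len(c_short), len(c_long))  # both 4: the bottleneck
out = greedy_decode(c_short, dec_W_y, dec_W_s, W_out, vocab)
print(out)
```

A 2-word and a 20-word input end up in vectors of the same size, which is exactly the bottleneck the attention mechanism is designed to remove.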

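Decoder Alignment
While generating $y_i$, the decoder searches the source sentence $\mathbf{x} = (x_1, \dots, x_T)$ for the positions where the most relevant information is concentrated. An alignment model (attention) weighs the encoder annotations $h_j$ to form a separate context vector for each target word:
$$c_i = \sum_{j=1}^{T} \alpha_{ij} h_j, \qquad p(y_i \mid y_1, \dots, y_{i-1}, \mathbf{x}) = g(y_{i-1}, s_i, c_i)$$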

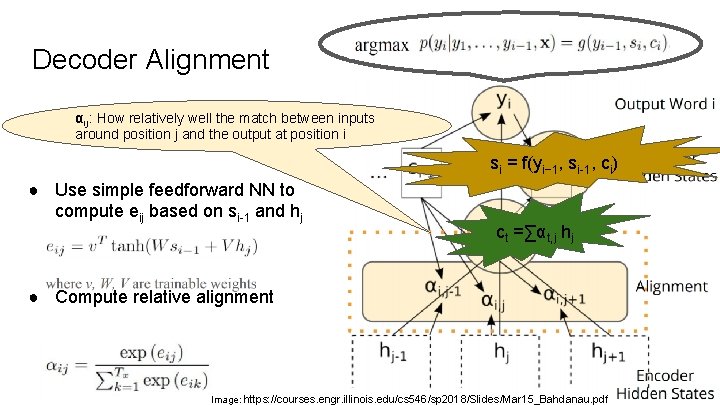

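Decoder Alignment
● $\alpha_{ij}$: how well the inputs around position $j$ match the output at position $i$, relative to all other source positions
● Decoder state: $s_i = f(y_{i-1}, s_{i-1}, c_i)$
● A simple feedforward NN (the alignment model $a$) computes a score $e_{ij} = a(s_{i-1}, h_j)$ from $s_{i-1}$ and $h_j$
● The relative alignment is obtained with a softmax over source positions: $\alpha_{ij} = \exp(e_{ij}) / \sum_{k=1}^{T} \exp(e_{ik})$
● Context vector: $c_i = \sum_{j=1}^{T} \alpha_{ij} h_j$
Image: https://courses.engr.illinois.edu/cs546/sp2018/Slides/Mar15_Bahdanau.pdf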

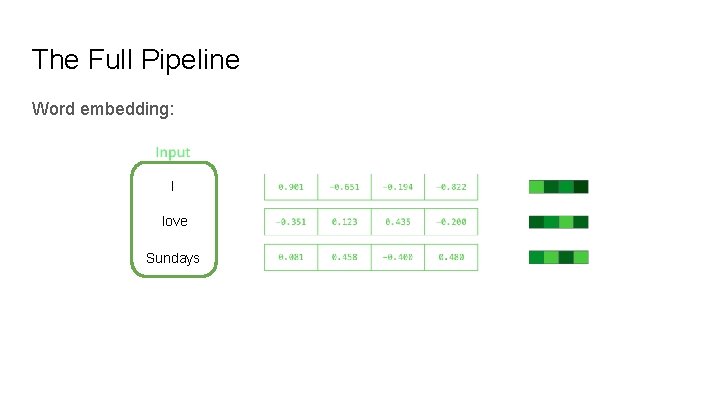
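The alignment step can be sketched as follows, assuming the additive scoring function used by Bahdanau et al., $e_{ij} = v_a^\top \tanh(W_a s_{i-1} + U_a h_j)$, followed by a softmax over source positions and the weighted sum $c_i = \sum_j \alpha_{ij} h_j$. The function names, dimensions and random parameters are hypothetical; in the real model $W_a$, $U_a$ and $v_a$ are learned jointly with the rest of the network.

```python
import math
import random

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def additive_attention(s_prev, annotations, W_a, U_a, v_a):
    """Return (alpha_i, c_i) for one decoder step."""
    Ws = matvec(W_a, s_prev)  # computed once, shared by all source positions j
    scores = []
    for h_j in annotations:
        hidden = [math.tanh(a + b) for a, b in zip(Ws, matvec(U_a, h_j))]
        scores.append(sum(v * z for v, z in zip(v_a, hidden)))  # e_ij
    alpha = softmax(scores)  # alpha_ij, sums to 1 over j
    dim = len(annotations[0])
    context = [sum(alpha[j] * annotations[j][d] for j in range(len(annotations)))
               for d in range(dim)]  # c_i = sum_j alpha_ij h_j
    return alpha, context

def rand_matrix(rows, cols):
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

random.seed(1)
dec_dim, ann_dim, attn_dim, T = 3, 4, 5, 4
s_prev = [random.uniform(-1.0, 1.0) for _ in range(dec_dim)]
annotations = rand_matrix(T, ann_dim)
W_a, U_a = rand_matrix(attn_dim, dec_dim), rand_matrix(attn_dim, ann_dim)
v_a = [random.uniform(-1.0, 1.0) for _ in range(attn_dim)]
alpha, context = additive_attention(s_prev, annotations, W_a, U_a, v_a)
print([round(a, 3) for a in alpha])
print(context)
```

Because $\alpha_i$ is recomputed for every target position $i$, each output word gets its own context vector instead of sharing one fixed vector.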

The Full Pipeline
● Word embedding of the input: "I love Sundays"

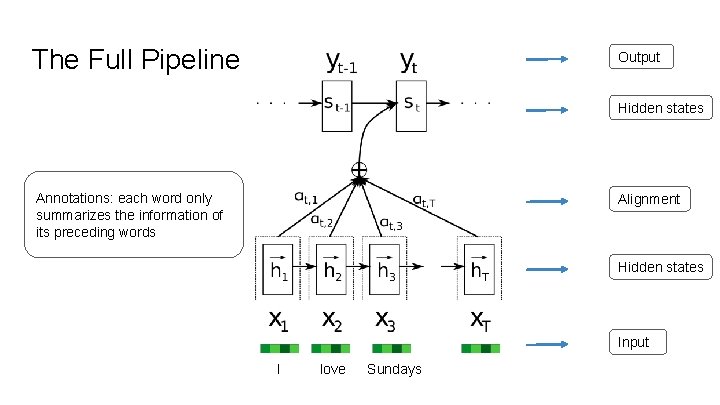
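A minimal sketch of the word-embedding step: each token is mapped to an integer id and then to a row of an embedding matrix. The toy vocabulary, the `<unk>` fallback for out-of-vocabulary words, the embedding size and the random values are all assumptions for illustration; in a trained model the rows of `E` are learned.

```python
import random

random.seed(0)
vocab = {"<unk>": 0, "I": 1, "love": 2, "Sundays": 3}  # hypothetical toy vocabulary
embedding_dim = 4
# Embedding matrix E: one row per vocabulary entry (random here, learned in practice).
E = [[random.gauss(0.0, 0.1) for _ in range(embedding_dim)] for _ in vocab]

def embed(sentence):
    """Whitespace-tokenize, map tokens to ids (unknown words -> <unk>), look up rows of E."""
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in sentence.split()]
    return ids, [E[i] for i in ids]

ids, vectors = embed("I love Sundays")
print(ids)  # [1, 2, 3]
```

Words outside the kept vocabulary all share the `<unk>` vector, which is why results are sometimes reported separately for sentences without unknown tokens.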

The Full Pipeline
(Figure: input "I love Sundays" → encoder hidden states → alignment → decoder hidden states → output.)
Annotations: with a unidirectional RNN, each word's annotation only summarizes the information of the words preceding it.

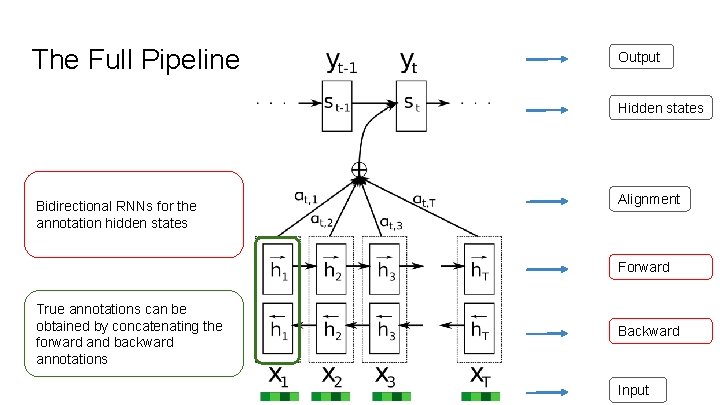

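The Full Pipeline
(Figure: input → forward and backward encoder hidden states → alignment → decoder hidden states → output.)
Use a bidirectional RNN for the annotation hidden states: the true annotation of each word is obtained by concatenating its forward and backward annotations, $h_j = [\overrightarrow{h}_j ; \overleftarrow{h}_j]$, so it summarizes both the preceding and the following words.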

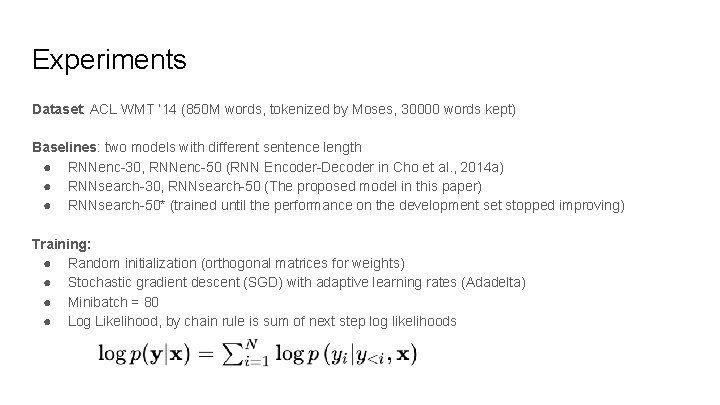
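A sketch of bidirectional annotations, assuming plain tanh RNNs in both directions: one RNN reads $x_1 \dots x_T$, another reads $x_T \dots x_1$, the backward states are re-aligned to source positions, and each annotation is the concatenation $h_j = [\overrightarrow{h}_j ; \overleftarrow{h}_j]$. Names, sizes, the stand-in input vectors and the random weights are hypothetical.

```python
import math
import random

def rnn_step(x, h, W_x, W_h):
    return [math.tanh(sum(a * b for a, b in zip(rx, x)) + sum(a * b for a, b in zip(rh, h)))
            for rx, rh in zip(W_x, W_h)]

def run_rnn(inputs, W_x, W_h, hidden_dim):
    """Return the hidden state after each input, in reading order."""
    h, states = [0.0] * hidden_dim, []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h)
        states.append(h)
    return states

def bidirectional_annotations(inputs, fwd_weights, bwd_weights, hidden_dim):
    fwd_W_x, fwd_W_h = fwd_weights
    bwd_W_x, bwd_W_h = bwd_weights
    forward = run_rnn(inputs, fwd_W_x, fwd_W_h, hidden_dim)                # reads x_1 ... x_T
    backward = run_rnn(inputs[::-1], bwd_W_x, bwd_W_h, hidden_dim)[::-1]  # reads x_T ... x_1, re-aligned
    return [f + b for f, b in zip(forward, backward)]                     # h_j = [forward_j ; backward_j]

def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
emb_dim, hidden_dim = 4, 3
# Stand-in embeddings for the three words of "I love Sundays".
sentence = [[random.gauss(0.0, 1.0) for _ in range(emb_dim)] for _ in range(3)]
fwd = (rand_matrix(hidden_dim, emb_dim), rand_matrix(hidden_dim, hidden_dim))
bwd = (rand_matrix(hidden_dim, emb_dim), rand_matrix(hidden_dim, hidden_dim))
annotations = bidirectional_annotations(sentence, fwd, bwd, hidden_dim)
print(len(annotations), len(annotations[0]))  # 3 6
```

The backward half of the first annotation has already read the whole sentence, so even the first word's annotation carries information about the words that follow it.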

Experiments
● Dataset: ACL WMT '14 (850M words, tokenized with Moses, the 30,000 most frequent words kept)
● Models (the suffix is the maximum length, in words, of the training sentences):
  ○ RNNenc-30, RNNenc-50: the RNN Encoder-Decoder baseline (Cho et al., 2014a)
  ○ RNNsearch-30, RNNsearch-50: the model proposed in this paper
  ○ RNNsearch-50*: trained until the performance on the development set stopped improving
● Training:
  ○ Random initialization (orthogonal matrices for the weights)
  ○ Stochastic gradient descent (SGD) with adaptive learning rates (Adadelta)
  ○ Minibatch size: 80 sentences
  ○ Objective: the log-likelihood of the target sentence, which by the chain rule is the sum of the next-word log-likelihoods

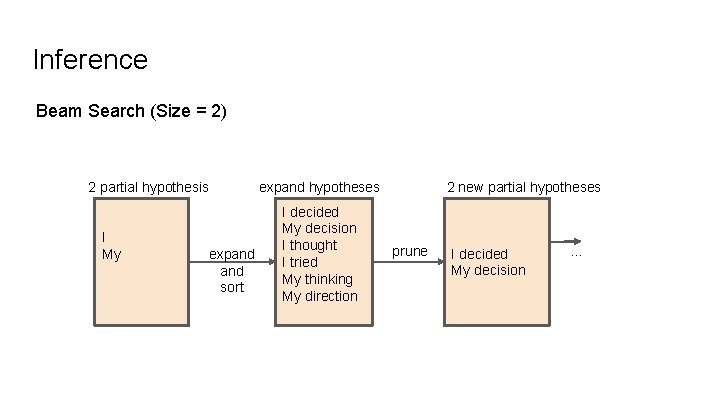
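The training objective can be illustrated with the chain-rule decomposition $\log p(\mathbf{y} \mid \mathbf{x}) = \sum_{t} \log p(y_t \mid y_{<t}, \mathbf{x})$. This sketch assumes the decoder has already produced one probability distribution per target position; the distributions, the 3-word vocabulary and the function names are hypothetical, and the loss is the negative log-likelihood averaged over a minibatch (of 80 sentence pairs in the slides' setup).

```python
import math

def sequence_log_likelihood(step_distributions, target_ids):
    """log p(y | x) = sum_t log p(y_t | y_<t, x), one term per target position."""
    return sum(math.log(dist[y_t]) for dist, y_t in zip(step_distributions, target_ids))

def minibatch_loss(batch):
    """Negative log-likelihood averaged over (step_distributions, target_ids) pairs."""
    return -sum(sequence_log_likelihood(d, y) for d, y in batch) / len(batch)

# Hypothetical decoder outputs: p(. | y_<t, x) over a 3-word vocabulary at each position.
step_distributions = [
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
]
target_ids = [0, 1, 2]
ll = sequence_log_likelihood(step_distributions, target_ids)
print(ll, math.log(0.7 * 0.8 * 0.4))  # the two values agree up to rounding
loss = minibatch_loss([(step_distributions, target_ids)])
```

Summing log-probabilities rather than multiplying probabilities avoids numerical underflow on long sentences.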

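Inference: Beam Search (beam size = 2)
● Start from 2 partial hypotheses: "I", "My"
● Expand each one: "I decided", "I thought", "I tried", "My decision", "My thinking", "My direction"
● Sort all expanded hypotheses by score and prune back to the 2 best new partial hypotheses: "I decided", "My decision"
● Repeat …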

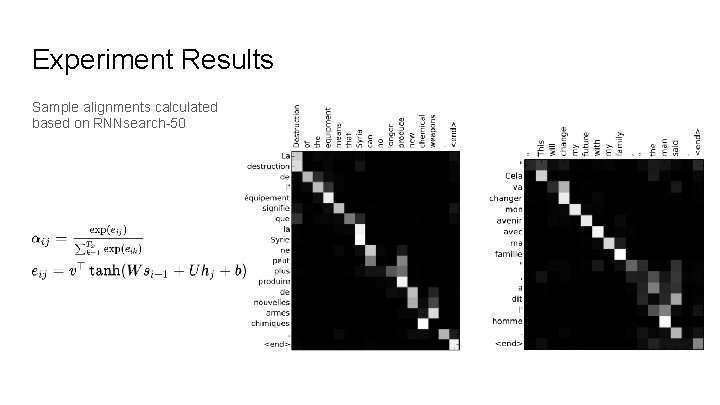
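A minimal beam-search sketch of the expand, sort and prune loop. The next-word table `NEXT` is invented purely to reproduce the slide's example and stands in for the decoder's softmax output; scores are summed log-probabilities without length normalization, and any prefix missing from the table is assumed to end with `</s>`.

```python
import math

# Hypothetical next-word probabilities p(next | prefix); "</s>" ends a hypothesis.
NEXT = {
    (): {"I": 0.55, "My": 0.45},
    ("I",): {"decided": 0.5, "thought": 0.3, "tried": 0.2},
    ("My",): {"decision": 0.6, "thinking": 0.25, "direction": 0.15},
}

def next_word_probs(prefix):
    return NEXT.get(tuple(prefix), {"</s>": 1.0})

def beam_search(beam_size=2, max_len=5):
    beams = [([], 0.0)]  # (partial hypothesis, log-probability)
    for _ in range(max_len):
        candidates = []
        for words, score in beams:
            if words and words[-1] == "</s>":
                candidates.append((words, score))  # finished hypothesis carried over
                continue
            for w, p in next_word_probs(words).items():  # expand
                candidates.append((words + [w], score + math.log(p)))
        candidates.sort(key=lambda c: c[1], reverse=True)  # sort
        beams = candidates[:beam_size]                      # prune
        if all(words[-1] == "</s>" for words, _ in beams):
            break
    return beams

for words, score in beam_search():
    print(" ".join(words), round(math.exp(score), 4))
```

After the second step the beam holds "I decided" (0.275) and "My decision" (0.27), matching the pruning shown on the slide; greedy decoding (beam size 1) would keep only the single best prefix at each step.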

Experiment Results
Sample alignments computed by RNNsearch-50

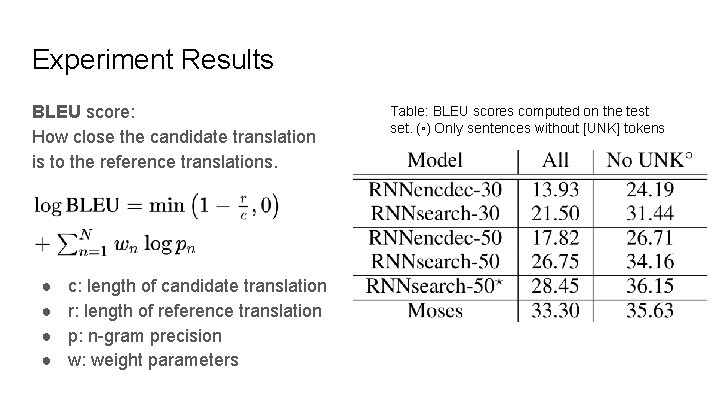

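Experiment Results
BLEU score: measures how close the candidate translation is to the reference translations.
$$\text{BLEU} = \text{BP} \cdot \exp\Big(\sum_{n=1}^{N} w_n \log p_n\Big), \qquad \text{BP} = \begin{cases} 1 & \text{if } c > r \\ e^{1 - r/c} & \text{if } c \le r \end{cases}$$
● $c$: length of the candidate translation
● $r$: length of the reference translation
● $p_n$: modified n-gram precision
● $w_n$: weight parameters (typically uniform, $w_n = 1/N$)
Table: BLEU scores computed on the test set. (◦) Only sentences without [UNK] tokens.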
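A minimal sentence-level BLEU sketch, assuming the standard definition $\text{BLEU} = \text{BP}\cdot\exp(\sum_{n=1}^{N} w_n \log p_n)$ with brevity penalty $\text{BP} = 1$ if $c > r$ and $e^{1 - r/c}$ otherwise, uniform weights over $N = 4$, a single reference and no smoothing. BLEU is normally accumulated over a whole test corpus, so this per-sentence version is a simplification; the function names are illustrative.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def modified_precision(candidate, reference, n):
    """n-gram precision with each candidate n-gram count clipped by its reference count."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    clipped = sum(min(count, ref[g]) for g, count in cand.items())
    return clipped / max(1, sum(cand.values()))

def bleu(candidate, reference, max_n=4):
    weights = [1.0 / max_n] * max_n  # uniform w_n
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # log(0) is undefined; unsmoothed BLEU is 0 here
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1.0 - r / c)  # brevity penalty
    return bp * math.exp(sum(w * math.log(p) for w, p in zip(weights, precisions)))

reference = "the cat is on the mat".split()
print(bleu(reference, reference))                    # 1.0
print(bleu("the cat is on the".split(), reference))  # exp(-0.2): precise but too short
```

The second example shows why the brevity penalty is needed: a candidate that copies only part of the reference has perfect n-gram precision but is still penalized.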

References
● https://jalammar.github.io/visualizing-neural-machine-translation-mechanics-of-seq2seq-models-with-attention/
● http://colah.github.io/posts/2015-08-Understanding-LSTMs/
● https://arxiv.org/pdf/1409.0473.pdf
● https://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf
● https://docs.google.com/presentation/d/1quIMxEEPEf5EkRHc2USQaoJRC4QNX6_KomdZTBMBWjk/edit#slide=id.g1f9e4ca2dd_0_23
● https://pdfs.semanticscholar.org/8873/7a86b0ddcc54389bf3aa1aaf62030deec9e6.pdf
● https://www.freecodecamp.org/news/a-history-of-machine-translation-from-the-cold-war-to-deep-learningf1d335ce8b5/
● https://courses.engr.illinois.edu/cs546/sp2018/Slides/Mar15_Bahdanau.pdf

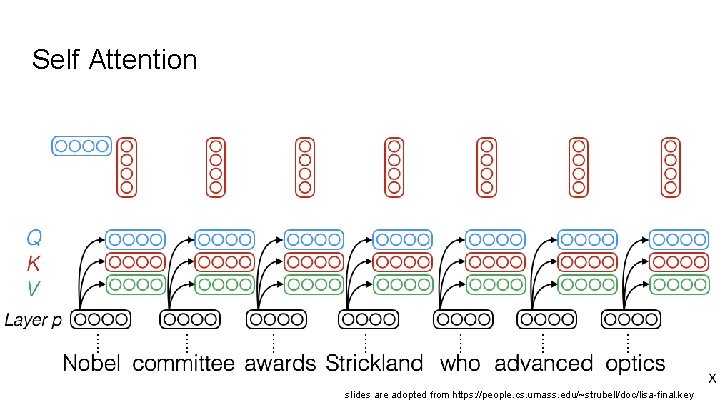

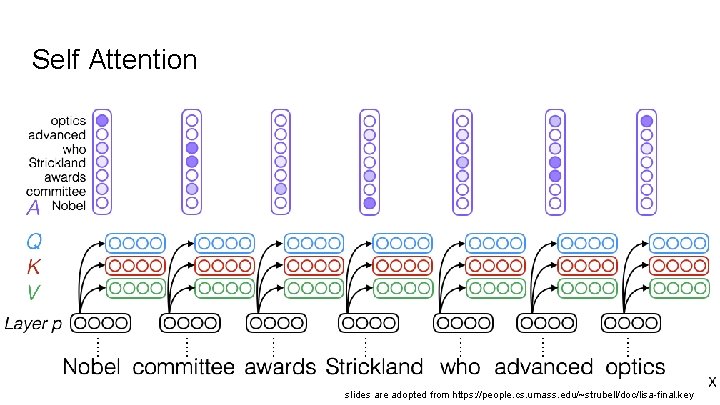

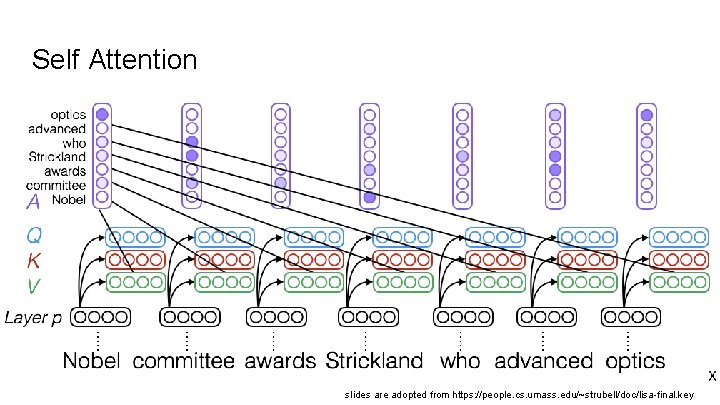

![Self Attention (Vaswani et al. 2017)](http://slidetodoc.com/presentation_image_h/19f584fdead35cf7613e3310ae2e652e/image-28.jpg)

Self Attention [Vaswani et al. 2017]
Slides adapted from https://people.cs.umass.edu/~strubell/doc/lisa-final.key

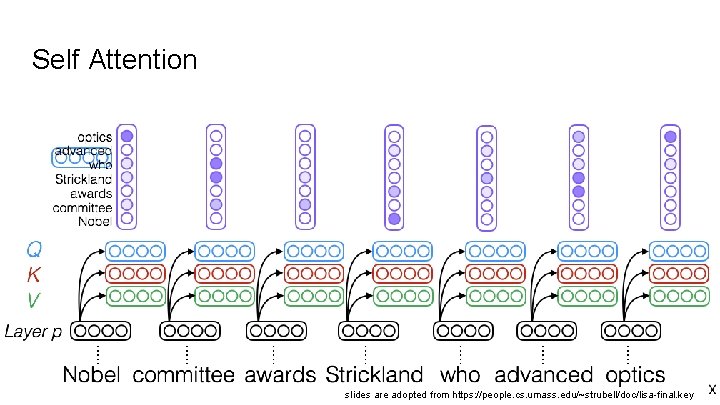
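A sketch of single-head scaled dot-product self-attention, $\text{softmax}(QK^\top / \sqrt{d_k})\,V$, where queries, keys and values are all linear projections of the same input sequence $X$ (Vaswani et al. 2017). This is a simplification: one head, no masking, no positional encodings, no output projection, and the projection matrices and inputs are random placeholders. Function names are hypothetical.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def self_attention(X, W_q, W_k, W_v):
    """Every position attends to every position of the same sequence X."""
    Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
    d_k = len(K[0])
    weights = [softmax([sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K])
               for q_row in Q]  # one attention distribution per query position
    return weights, matmul(weights, V)

def rand_matrix(rows, cols):
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

random.seed(2)
n_tokens, d_model, d_k = 3, 4, 2
X = rand_matrix(n_tokens, d_model)  # stand-in token representations
W_q, W_k, W_v = rand_matrix(d_model, d_k), rand_matrix(d_model, d_k), rand_matrix(d_model, d_k)
weights, out = self_attention(X, W_q, W_k, W_v)
print(out)
```

Unlike the RNN encoder, all positions are processed at once with no recurrence, and the $\sqrt{d_k}$ scaling keeps the dot products from pushing the softmax into regions with very small gradients.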

Thank You!!!
